Can you imagine an AI capable of writing songs, novels, press releases, interviews, essays, technical manuals and programming code, prescribing medication, and much more that we cannot yet foresee? Watching GPT-3 in action, it seems we are not very far away.

In our latest report on natural language processing (NLP) we mentioned the GPT-2 algorithm developed by OpenAI (a company co-founded by well-known names such as Elon Musk) as an example of how far synthetic text generation has come, with output that can be hard to distinguish from human-written text. The surprising results of GPT-2 led the company to hold back the full release at first because of its potential misuse for generating deepfakes or false news.

Recently (May 2020) a new version of the algorithm was released, now called GPT-3, which brings functional innovations and improvements in performance and in its capacity to analyse and generate natural language text.

In this post we try to summarize the main new features of GPT-3 in a simple and accessible way. Do you dare to discover them?

Let's get straight to the point. What does GPT-3 bring with it? (Adapted from the original post by Mayor Mundada.)

- It is much bigger (more complex) than anything we had before. Deep learning models based on neural networks are usually compared by their number of parameters. The more parameters a network has, the greater its capacity and therefore its complexity. The full version of GPT-2 has 1.5 billion parameters; GPT-3 has 175 billion. GPT-3 was trained on 570 GB of text, compared to 40 GB for GPT-2.

- For the first time, it can be used as a product or service. OpenAI has announced an API that lets users experiment with the algorithm. At the time of writing, access to the API is restricted (what is known as a private preview) and must be requested.

- The most important thing: the results. Although access to the API is by invitation only, many users who do have access have published articles on its results in different fields.
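To put the scale figures from the first point in perspective, here is a minimal arithmetic sketch. The parameter counts and corpus sizes are the ones quoted in this post. The storage estimate assumes 2 bytes per parameter (16-bit floats), which is an assumption made for illustration and not something stated in the post.

```python
# Figures quoted in the post.
GPT2_PARAMS = 1.5e9
GPT3_PARAMS = 175e9
GPT2_TEXT_GB = 40
GPT3_TEXT_GB = 570

# Assumption (not from the post): each parameter is stored as a 16-bit float.
BYTES_PER_PARAM = 2


def ratio(new, old):
    """How many times larger `new` is than `old`."""
    return new / old


def weights_size_gb(n_params, bytes_per_param=BYTES_PER_PARAM):
    """Rough size of the raw weights in gigabytes (1 GB = 1e9 bytes)."""
    return n_params * bytes_per_param / 1e9


param_ratio = ratio(GPT3_PARAMS, GPT2_PARAMS)
data_ratio = ratio(GPT3_TEXT_GB, GPT2_TEXT_GB)
gpt3_weights_gb = weights_size_gb(GPT3_PARAMS)

print(f"Parameters: about {param_ratio:.0f} times more")
print(f"Training text: about {data_ratio:.1f} times more")
print(f"GPT-3 raw weights under the 2-byte assumption: about {gpt3_weights_gb:.0f} GB")
```

Under these figures the model grew by roughly two orders of magnitude in parameters, while the training text grew by a much smaller factor.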

What role do open data play?

Few projects show the power and benefits of open data as clearly as this one. As mentioned above, GPT-3 was trained on 570 GB of text. It turns out that 60% of the algorithm's training data comes from https://commoncrawl.org. Common Crawl is an open, collaborative project that provides a corpus of web data for research, analysis, education, etc. As specified on the Common Crawl website, the data are open and hosted under the AWS open data initiative. Much of the rest of the training data is also open, including sources such as Wikipedia.

Use cases

Below are some of the examples and use cases that have had an impact.

Generation of synthetic text

This entry (no spoilers ;) ) from Manuel Araoz's blog shows the power of the algorithm to generate a 100% synthetic article on Artificial Intelligence. Manuel performs the following experiment: he gives GPT-3 the short biography from his blog and a small fragment of his latest entry, 117 words in total. After 10 runs of GPT-3 to generate related text, Manuel is able to copy and paste the generated text, add a cover image and have a new post ready for his blog. Honestly, the text of the synthetic post is hard to tell apart from an original post, except for possible errors in names, dates, etc.

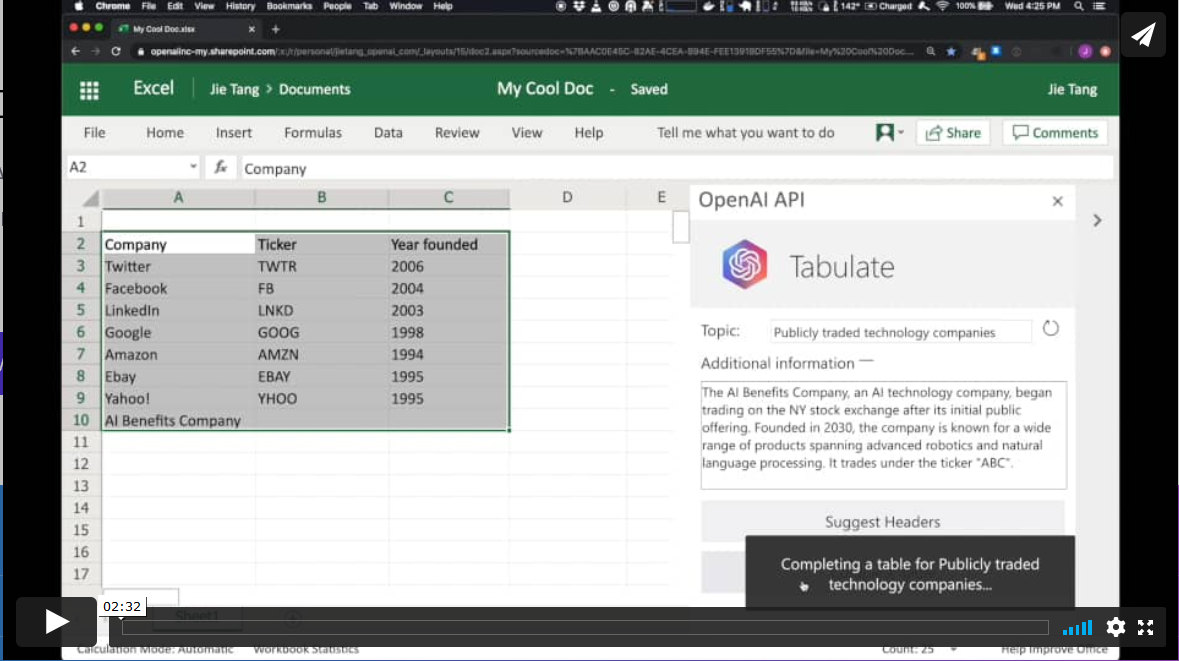
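The experiment described above can be sketched in a few lines: join the bio and the post fragment into a seed text, then ask a generator for several continuations and pick from them. This is only an illustration. `build_prompt`, `sample_drafts` and `fake_generate` are hypothetical names, and no real GPT-3 API call is shown because the post does not describe that interface.

```python
from typing import Callable, List


def build_prompt(bio: str, last_post_fragment: str) -> str:
    """Join the author bio and the opening of the latest post into one seed text."""
    return f"{bio.strip()}\n\n{last_post_fragment.strip()}"


def sample_drafts(prompt: str, generate: Callable[[str], str], runs: int = 10) -> List[str]:
    """Call the text generator `runs` times and collect every continuation."""
    return [generate(prompt) for _ in range(runs)]


def fake_generate(prompt: str) -> str:
    """Stand-in generator (hypothetical) so the sketch runs without API access."""
    return prompt + " [model continuation would appear here]"


prompt = build_prompt("Short author bio goes here.", "Opening lines of the latest post.")
drafts = sample_drafts(prompt, fake_generate, runs=10)
print(f"{len(drafts)} drafts generated from a {len(prompt.split())}-word prompt")
```

In a real setting `fake_generate` would be replaced by a call to whatever text-generation service is available, and a person would still review the drafts for wrong names or dates.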

Productivity. Automatic generation of data tables.

GPT-3 also has applications in the field of productivity. In this example GPT-3 is able to create an MS Excel table on a given topic. For example, if we want a table listing the most representative technology companies and the year each was founded, we simply provide GPT-3 with the desired pattern and ask it to complete it. The starting pattern can be something similar to the table below (in a real example, the input data will be in English). GPT-3 will complete the shaded area with actual data. However, if in addition to the input pattern we give the algorithm a plausible description of a fictitious technology company and ask it again to complete the table, the algorithm will include the data from this fictitious company.
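The pattern-completion idea described above can be sketched as a plain-text prompt: a header, a few filled rows, and one row left open for the model to finish. The function name `build_table_prompt`, the `|` separator and the company "Acme Widgets" are assumptions made for illustration. The post does not specify the exact input format.

```python
from typing import List, Optional, Tuple

Row = Tuple[str, Optional[int]]

HEADER = "Company | Year founded"


def build_table_prompt(rows: List[Row], context: str = "") -> str:
    """Render rows as a text table; rows whose year is None are left open."""
    lines = []
    if context:
        # Optional free-text description placed before the table.
        lines.extend([context.strip(), ""])
    lines.append(HEADER)
    for company, year in rows:
        cell = "" if year is None else f" {year}"
        lines.append(f"{company} |{cell}")
    return "\n".join(lines)


known_rows: List[Row] = [("Microsoft", 1975), ("Apple", 1976)]

# Pattern only: the model is expected to fill in the open cell.
prompt = build_table_prompt(known_rows + [("Google", None)])

# Pattern plus a description of an invented company (hypothetical data).
fictitious = "Acme Widgets is a technology company founded in 2015."
prompt_with_context = build_table_prompt(
    known_rows + [("Acme Widgets", None)], context=fictitious
)

print(prompt)
```

The second prompt mirrors the fictitious-company case: the only place the model could find the missing year is the description placed before the table.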

These examples are just a sample of what GPT-3 is capable of doing. Among its functionalities or applications are:

- semantic search (different from keyword search)

- chatbots

- a revolution in customer services (call centres)

- the generation of multi-purpose text (poems, novels, music, false news, opinion articles, etc.)

- productivity tools. We have seen an example of how to create data tables, but there is also a great deal of talk about creating simple software, such as web pages and small applications, without the need for coding, just by asking GPT-3 and the successors that are on their way.

- online translation tools

- understanding and summarizing texts.

and so many other things we haven't discovered yet... We will keep you informed about further developments in NLP, and in particular about GPT-3, a game-changer that promises to revolutionize everything we know at the moment.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and points of view expressed in this publication are the sole responsibility of its author.

We created a post on Medium using GPT3 to see whether users could detect it or not: https://medium.com/@info_87831/la-importancia-del-seo-en-el-mundo-digit… and apparently not only did they fail to detect it, it has also received 19 claps.

In time, AI will be undetectable to humans, although it is still in a beta phase, "so to speak".

Let's see whether this algorithm's automatic spell-checker is able to put in parentheses the accents that tend to be added to the detriment of reading comprehension.

Surely natural language transcription can recognize from pronunciation, better than the options in a dictionary, when a comparative "como" needs to be distinguished from a relative "cómo" (see line 6) of the article "Descubre los casos de uso del algoritmo GPT-3". Writing is also processing.

Thank you very much, Ana, for reporting the typo. It has now been corrected. Kind regards.