Open data from public sources has evolved over the years, from being simple repositories of information to constituting dynamic ecosystems that can transform public governance. In this context, artificial intelligence (AI) emerges as a catalytic technology that benefits from the value of open data and exponentially enhances its usefulness. In this post we will see what the mutually beneficial symbiotic relationship between AI and open data looks like.

Traditionally, the debate on open data has focused on portals: the platforms on which governments publish information so that citizens, companies and organizations can access it. But the so-called "Third Wave of Open Data," a term by New York University's GovLab, emphasizes that it is no longer enough to publish datasets on demand or by default. The important thing is to think about the entire ecosystem: the life cycle of data, its exploitation, maintenance and, above all, the value it generates in society.

What role can open data play in AI?

In this context, AI appears as a catalyst capable of automating tasks, enriching open government data (DMOs), facilitating its understanding and stimulating collaboration between actors.

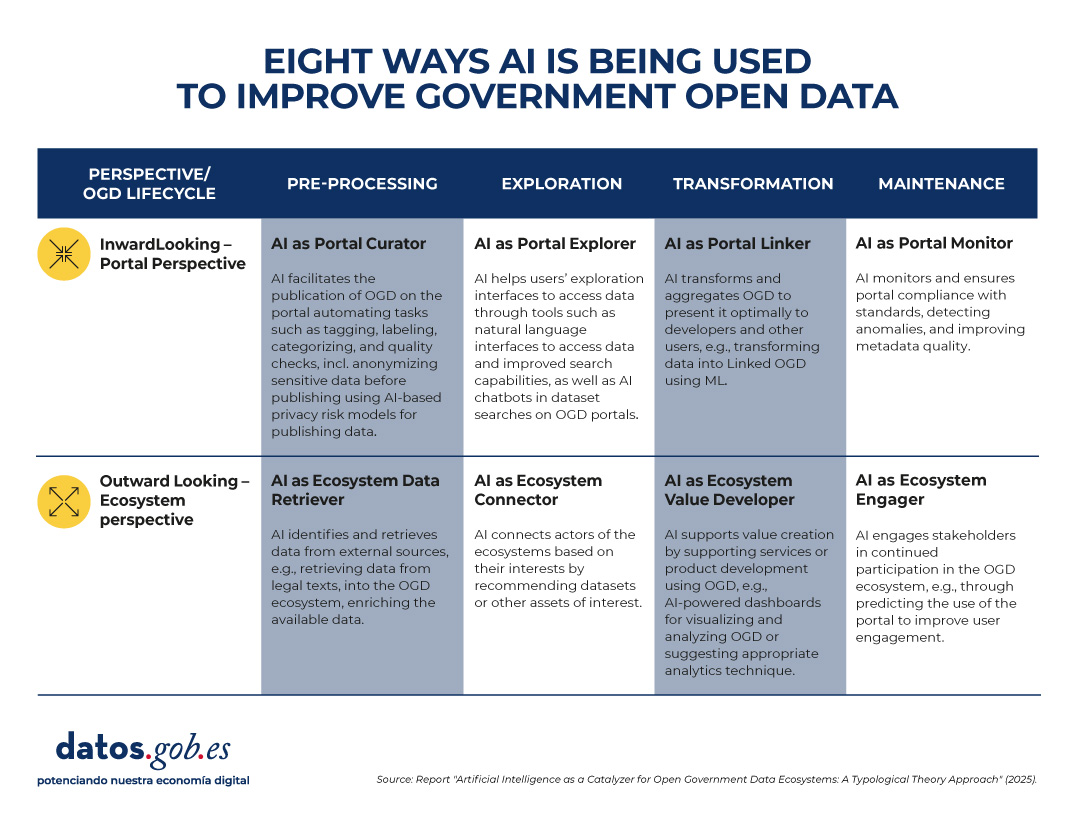

Recent research, developed by European universities, maps how this silent revolution is happening. The study proposes a classification of uses according to two dimensions:

-

Perspective, which in turn is divided into two possible paths:

-

Inward-looking (portal): The focus is on the internal functions of data portals.

-

Outward-looking (ecosystem): the focus is extended to interactions with external actors (citizens, companies, organizations).

-

-

Phases of the data life cycle, which can be divided into pre-processing, exploration, transformation and maintenance.

In summary, the report identifies these eight types of AI use in government open data, based on perspective and phase in the data lifecycle.

Figure 1. Eight uses of AI to improve government open data. Source: presentation “Data for AI or AI for data: artificial intelligence as a catalyst for open government ecosystems”, based on the report of the same name, from EU Open Data Days 2025.

A continuación, se detalla cada uno de estos usos:

1. Portal curator

This application focuses on pre-processing data within the portal. AI helps organize, clean, anonymize, and tag datasets before publication. Some examples of tasks are:

-

Automation and improvement of data publication tasks.

-

Performing auto-tagging and categorization functions.

-

Data anonymization to protect privacy.

-

Automatic cleaning and filtering of datasets.

-

Feature extraction and missing data handling.

2. Ecosystem data retriever

Also in the pre-processing phase, but with an external focus, AI expands the coverage of portals by identifying and collecting information from diverse sources. Some tasks are:

-

Retrieve structured data from legal or regulatory texts.

-

News mining to enrich datasets with contextual information.

-

Integration of urban data from sensors or digital records.

-

Discovery and linking of heterogeneous sources.

- Conversion of complex documents into structured information.

3. Portal explorer

In the exploration phase, AI systems can also make it easier to find and interact with published data, with a more internal approach. Some use cases:

-

Develop semantic search engines to locate datasets.

- Implement chatbots that guide users in data exploration.

-

Provide natural language interfaces for direct queries.

-

Optimize the portal's internal search engines.

-

Use language models to improve information retrieval.

4. Ecosystem connector

Operating also in the exploration phase, AI acts as a bridge between actors and ecosystem resources. Some examples are:

-

Recommend relevant datasets to researchers or companies.

-

Identify potential partners based on common interests.

-

Extract emerging themes to support policymaking.

-

Visualize data from multiple sources in interactive dashboards.

-

Personalize data suggestions based on social media activity.

5. Portal linker

This functionality focuses on the transformation of data within the portal. Its function is to facilitate the combination and presentation of information for different audiences. Some tasks are:

-

Convert data into knowledge graphs (structures that connect related information, known as Linked Open Data).

-

Summarize and simplify data with NLP (Natural Language Processing) techniques.

-

Apply automatic reasoning to generate derived information.

-

Enhance multivariate visualization of complex datasets.

-

Integrate diverse data into accessible information products.

6. Ecosystem value developer

In the transformation phase and with an external perspective, AI generates products and services based on open data that provide added value. Some tasks are:

-

Suggest appropriate analytical techniques based on the type of dataset.

-

Assist in the coding and processing of information.

-

Create dashboards based on predictive analytics.

-

Ensure the correctness and consistency of the transformed data.

-

Support the development of innovative digital services.

7. Portal monitor

It focuses on portal maintenance, with an internal focus. Their role is to ensure quality, consistency, and compliance with standards. Some tasks are:

-

Detect anomalies and outliers in published datasets.

-

Evaluate the consistency of metadata and schemas.

-

Automate data updating and purification processes.

-

Identify incidents in real time for correction.

-

Reduce maintenance costs through intelligent monitoring.

8. Ecosystem engager

And finally, this function operates in the maintenance phase, but outwardly. It seeks to promote citizen participation and continuous interaction. Some tasks are:

-

Predict usage patterns and anticipate user needs.

-

Provide personalized feedback on datasets.

-

Facilitate citizen auditing of data quality.

-

Encourage participation in open data communities.

-

Identify user profiles to design more inclusive experiences.

What does the evidence tell us?

The study is based on a review of more than 70 academic papers examining the intersection between AI and OGD (open government data). From these cases, the authors observe that:

-

Some of the defined profiles, such as portal curator, portal explorer and portal monitor, are relatively mature and have multiple examples in the literature.

-

Others, such as ecosystem value developer and ecosystem engager, are less explored, although they have the most potential to generate social and economic impact.

-

Most applications today focus on automating specific tasks, but there is a lot of scope to design more comprehensive architectures, combining several types of AI in the same portal or across the entire data lifecycle.

From an academic point of view, this typology provides a common language and conceptual structure to study the relationship between AI and open data. It allows identifying gaps in research and guiding future work towards a more systemic approach.

In practice, the framework is useful for:

-

Data portal managers: helps them identify what types of AI they can implement according to their needs, from improving the quality of datasets to facilitating interaction with users.

-

Policymakers: guides them on how to design AI adoption strategies in open data initiatives, balancing efficiency, transparency, and participation.

-

Researchers and developers: it offers them a map of opportunities to create innovative tools that address specific ecosystem needs.

Limitations and next steps of the synergy between AI and open data

In addition to the advantages, the study recognizes some pending issues that, in a way, serve as a roadmap for the future. To begin with, several of the applications that have been identified are still in early stages or are conceptual. And, perhaps most relevantly, the debate on the risks and ethical dilemmas of the use of AI in open data has not yet been addressed in depth: bias, privacy, technological sustainability.

In short, the combination of AI and open data is still a field under construction, but with enormous potential. The key will be to move from isolated experiments to comprehensive strategies, capable of generating social, economic and democratic value. AI, in this sense, does not work independently of open data: it multiplies it and makes it more relevant for governments, citizens and society in general.