Open data portals are an invaluable source of public information. However, extracting meaningful insights from this data can be challenging for users without advanced technical knowledge.

In this practical exercise, we will explore the development of a web application that democratizes access to this data through the use of artificial intelligence, allowing users to make queries in natural language.

The application, developed using the datos.gob.es portal as a data source, integrates modern technologies such as Streamlit for the user interface and Google's Gemini language model for natural language processing. Its modular design allows any other artificial intelligence model to be used with minimal changes. The complete project is available in the GitHub repository.

Access the data laboratory repository on GitHub.

Run the data preprocessing code on Google Colab.

In this video, the author explains what you will find both on GitHub and Google Colab.

Application Architecture

The core of the application is based on four main interconnected sections that work together to process user queries:

- Context Generation

  - Analyzes the characteristics of the chosen dataset.

  - Generates a detailed description including dimensions, data types, and statistics.

  - Creates a structured template with specific guidelines for code generation.

- Context and Query Combination

  - Combines the generated context with the user's question, creating the prompt that the artificial intelligence model will receive.

- Response Generation

  - Sends the prompt to the model and obtains the Python code that answers the user's question.

- Code Execution

  - Safely executes the generated code with a retry and automatic correction system.

  - Captures and displays the results in the application frontend.
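The last step, executing the code with retries and automatic correction, could be sketched roughly as follows. This is a minimal illustration and not the project's actual implementation: the names `run_generated_code`, `execute_with_retries`, and `fix_code` are hypothetical, and `fix_code` stands in for a call that sends the failing code and its error back to the model. Note also that Python's `exec` is not a sandbox, so a real deployment would need stronger isolation than this sketch provides.

```python
import contextlib
import io
import traceback
from typing import Callable


def run_generated_code(code: str) -> str:
    """Execute code in a fresh namespace and return whatever it printed."""
    buffer = io.StringIO()
    namespace = {"__name__": "__generated__"}
    with contextlib.redirect_stdout(buffer):
        # exec gives no isolation; it is used here only to keep the sketch short.
        exec(code, namespace)
    return buffer.getvalue()


def execute_with_retries(
    code: str,
    fix_code: Callable[[str, str], str],
    max_attempts: int = 3,
) -> str:
    """Run the code; on failure, ask fix_code for a corrected version and retry."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return run_generated_code(code)
        except Exception:
            error = traceback.format_exc()
            if attempt == max_attempts:
                raise RuntimeError(
                    f"Generated code still fails after {max_attempts} attempts:\n{error}"
                )
            # Hypothetical correction step: in the application this would be
            # a new request to the language model that includes the error text.
            code = fix_code(code, error)
    raise AssertionError("unreachable")


if __name__ == "__main__":
    def fake_fixer(code: str, error: str) -> str:
        # Stand-in for the model: repairs the one typo used in this demo.
        return code.replace("totl", "total")

    broken = "total = sum([1, 2, 3])\nprint(totl)"
    print(execute_with_retries(broken, fake_fixer))
```

Passing the corrector as a function keeps the retry loop independent of any particular model, which matches the modular design described above.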

Figure 1. Request processing flow

Development Process

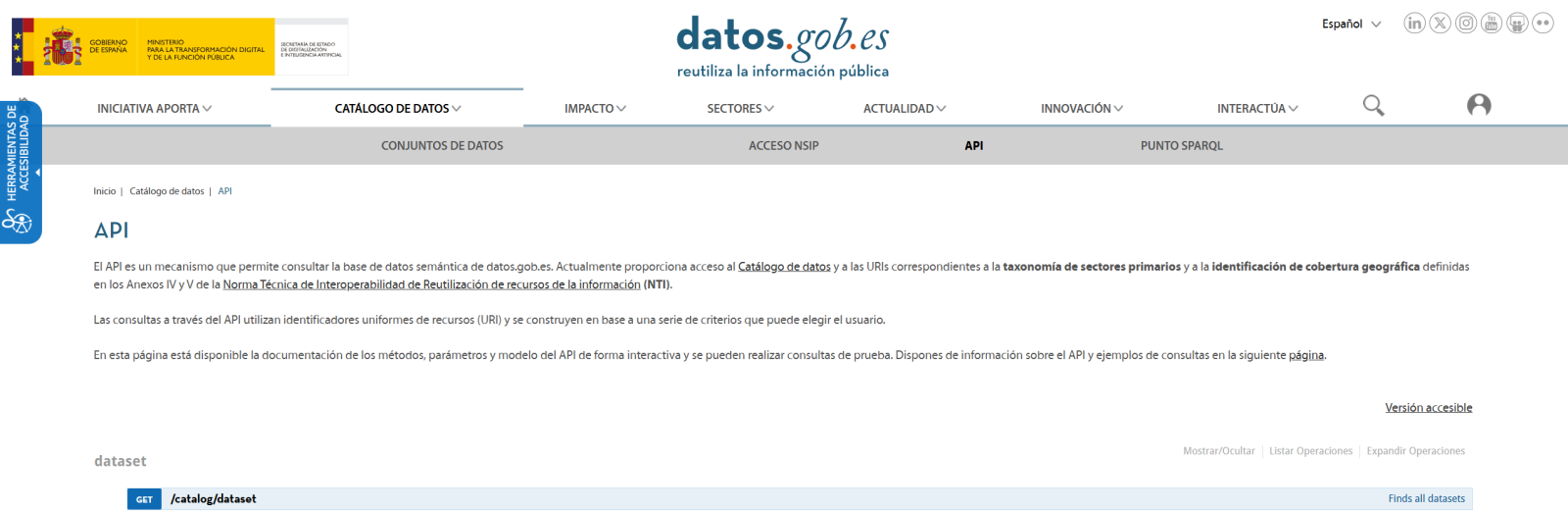

The first step is to establish a way to access public data. The datos.gob.es portal exposes its datasets through an API, and functions have been developed to navigate the catalog and download the files efficiently.
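As an illustration of catalog navigation, the sketch below builds a paginated request and extracts the dataset entries from the response. It rests on several assumptions that should be checked against the portal's API documentation: the endpoint path in `API_BASE`, the `_pageSize` and `_page` query parameters, and the `result.items` response layout. The function names are hypothetical and are not the ones used in the repository.

```python
import json
import urllib.parse
import urllib.request

# Assumed endpoint for the catalog listing; verify against the API documentation.
API_BASE = "https://datos.gob.es/apidata/catalog/dataset"


def build_catalog_url(page: int = 0, page_size: int = 10) -> str:
    """Build the URL for one page of the dataset catalog (assumed parameters)."""
    query = urllib.parse.urlencode({"_pageSize": page_size, "_page": page})
    return f"{API_BASE}.json?{query}"


def extract_items(payload: dict) -> list:
    """Return the dataset entries, assuming they sit under result.items."""
    return payload.get("result", {}).get("items", [])


def fetch_catalog_page(page: int = 0, page_size: int = 10, timeout: float = 30.0) -> list:
    """Download one catalog page and return its dataset entries."""
    url = build_catalog_url(page, page_size)
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return extract_items(json.load(response))
```

Separating URL construction and response parsing from the network call makes both easy to check without contacting the portal.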

Figure 2. datos.gob API

The second step addresses the question: how can natural language questions be converted into useful data analysis? This is where Gemini, Google's language model, comes in. However, it's not enough to simply connect the model; it's necessary to teach it to understand the specific context of each dataset.

A three-layer system has been developed:

- A function that analyzes the dataset and generates a detailed "technical sheet".

- Another that combines this sheet with the user's question.

- And a third that translates all this into executable Python code.
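The first two layers can be sketched as below. This is a simplified illustration under stated assumptions: the project works with DataFrames, while this sketch uses a plain list of dictionaries to stay dependency-free. The function names, the template wording, and the sample rows are all hypothetical. The third layer, the model call that returns Python code, is left out because it depends on the model's client library.

```python
import statistics

# Hypothetical template; the real application's guidelines may differ.
PROMPT_TEMPLATE = """You are a data analyst. The dataset is available in a variable named `rows`.

Dataset description:
{context}

Guidelines:
- Answer with Python code only.
- Print the final result.

Question: {question}
"""


def describe_dataset(rows: list) -> str:
    """Build a plain-text technical sheet: dimensions, column types, statistics."""
    columns = list(rows[0].keys()) if rows else []
    lines = [f"Rows: {len(rows)}, columns: {len(columns)}"]
    for name in columns:
        values = [v for v in (row.get(name) for row in rows) if v is not None]
        types = sorted({type(v).__name__ for v in values})
        line = f"- {name} ({', '.join(types) or 'empty'})"
        numeric = [
            v for v in values
            if isinstance(v, (int, float)) and not isinstance(v, bool)
        ]
        if numeric and len(numeric) == len(values):
            # Fully numeric column: report range and mean.
            line += (
                f": min={min(numeric)}, max={max(numeric)}, "
                f"mean={statistics.mean(numeric):.2f}"
            )
        else:
            # Anything else: report how many distinct values appear.
            line += f": {len({str(v) for v in values})} distinct values"
        lines.append(line)
    return "\n".join(lines)


def build_prompt(context: str, question: str) -> str:
    """Combine the technical sheet with the user's question."""
    return PROMPT_TEMPLATE.format(context=context, question=question.strip())


# Hypothetical sample rows, used only to exercise the functions.
sample_rows = [
    {"plate": "A1", "registration_year": 2005},
    {"plate": "B2", "registration_year": 2012},
    {"plate": "C3", "registration_year": 2016},
]

if __name__ == "__main__":
    sheet = describe_dataset(sample_rows)
    print(build_prompt(sheet, "What is the average age of the vehicle fleet?"))
```

Giving the model a compact description instead of the full dataset keeps the prompt short while still telling it which columns and types it can rely on.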

The image below shows how this process unfolds, followed by the results of the generated code once it has been executed.

Figure 3. Visualization of the application's response processing

Finally, with Streamlit, a web interface has been built that shows the process and its results to the user. The interface is as simple as choosing a dataset and asking a question, but also powerful enough to display complex visualizations and allow data exploration.

The final result is an application that allows anyone, regardless of their technical knowledge, to perform data analysis and learn about the code executed by the model. For example, a municipal official can ask "What is the average age of the vehicle fleet?" and get a clear visualization of the age distribution.

Figure 4. Complete use case. Visualizing the distribution of registration years of the municipal vehicle fleet of Almendralejo in 2018
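To give a sense of the kind of code the model might return for a question like this one, here is a small sketch. The registration years are invented for illustration and are not taken from the Almendralejo dataset. The reference year follows the figure caption. The text histogram is only a stand-in for the chart the application would display.

```python
from collections import Counter
from statistics import mean

# Hypothetical registration years; not real data from the portal.
registration_years = [2001, 2005, 2005, 2010, 2014, 2014, 2014, 2017]
REFERENCE_YEAR = 2018  # year of the dataset, per the figure caption


def average_age(years, reference_year):
    """Average vehicle age: reference year minus registration year, averaged."""
    return mean(reference_year - year for year in years)


def year_distribution(years):
    """Count vehicles per registration year, ordered by year."""
    return dict(sorted(Counter(years).items()))


def text_histogram(distribution):
    """Render the distribution as one line of '#' marks per year."""
    return "\n".join(f"{year}: {'#' * count}" for year, count in distribution.items())


if __name__ == "__main__":
    print("Average age:", average_age(registration_years, REFERENCE_YEAR))
    print(text_histogram(year_distribution(registration_years)))
```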

What Can You Learn?

This practical exercise allows you to learn:

- AI Integration in Web Applications:

  - How to communicate effectively with language models like Gemini.

  - Techniques for structuring prompts that generate precise code.

  - Strategies for safely handling and executing AI-generated code.

- Web Development with Streamlit:

  - Creating interactive interfaces in Python.

  - Managing state and sessions in web applications.

  - Implementing visual components for data.

- Working with Open Data:

  - Connecting to and consuming public data APIs.

  - Processing Excel files and DataFrames.

  - Data visualization techniques.

- Development Best Practices:

  - Modular structuring of Python code.

  - Error handling and retries.

  - Implementation of visual feedback systems.

  - Web application deployment using ngrok.

Conclusions and Future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between public data and end users. Through the practical case developed, we have been able to observe how the combination of advanced language models with intuitive interfaces allows us to democratize access to data analysis, transforming natural language queries into meaningful analysis and informative visualizations.

For those interested in expanding the system's capabilities, there are multiple promising directions for its evolution:

- Incorporation of more advanced language models that allow for more sophisticated analysis.

- Implementation of learning systems that improve responses based on user feedback.

- Integration with more open data sources and diverse formats.

- Development of predictive and prescriptive analysis capabilities.

In summary, this exercise not only demonstrates the feasibility of democratizing data analysis through artificial intelligence, but also points to a promising path toward a future where access to and analysis of public data is truly universal. The combination of modern technologies such as Streamlit, language models, and visualization techniques opens up a range of possibilities for organizations and citizens to make the most of the value of open data.

Hello. Congratulations on the application. I understand that, since this is open data, there is no risk regarding the GDPR, and that it therefore efficiently fulfills its main objective of facilitating free access to information.

Good morning,

Thank you very much. Indeed, as you point out, this is covered because we are working with data from the catalog.

Best regards.