In the public sector ecosystem, subsidies represent one of the most important mechanisms for promoting projects, companies and activities of general interest. However, understanding how these funds are distributed, which agencies call for the largest grants or how the budget varies according to the region or beneficiaries is not trivial when working with hundreds of thousands of records.

Along these lines, we present a new practical exercise in the series "Step-by-step data exercises", in which we will learn how to explore and model open data using Apache Spark, one of the most widely used platforms for distributed processing and large-scale machine learning.

In this laboratory we will work with real data from the National System for the Publicity of Subsidies and Public Aid (BDNS) and build a model capable of predicting the budget range of new calls based on their main characteristics.

All the code used is available in the corresponding GitHub repository so that you can run it, understand it, and adapt it to your own projects.

Access the datalab repository on GitHub

Run the data pre-processing code on Google Colab

Context: why analyze public subsidies?

The BDNS collects detailed information on hundreds of thousands of calls published by different Spanish administrations: from ministries and regional ministries to provincial councils and city councils. This dataset is an extraordinarily valuable source for:

- analysing the evolution of public spending,

- understanding which bodies are most active in certain areas,

- identifying patterns in the types of beneficiaries,

- and studying the budget distribution by sector or territory.

In our case, we will use the dataset to address a very specific question, but of great practical interest:

Can we predict the budget range of a call based on its administrative characteristics?

This capability would facilitate initial classification, decision-making support or comparative analysis within a public administration.

Objective of the exercise

The objective of the laboratory is twofold:

- Learn how to use Spark in a practical way:

  - Load a real high-volume dataset

  - Perform transformations and cleaning

  - Manipulate categorical and numeric columns

  - Structure a machine learning pipeline

- Build a predictive model

We will train a classifier capable of estimating whether a call falls into the low (up to €20k), medium (between €20k and €150k) or high (more than €150k) budget range, based on variables such as:

- Granting body

- Autonomous community

- Type of beneficiary

- Year of publication

- Administrative descriptions
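
As a minimal sketch of how the three target classes could be derived from a call's budget, the function below applies the thresholds mentioned above. The function name and the treatment of the exact boundary values (€20k counted as low, €150k counted as medium) are assumptions made for illustration, not necessarily what the notebook does.

```python
def budget_range(amount_eur: float) -> str:
    """Map a budget in euros to one of the three ranges described in the text."""
    if amount_eur <= 20_000:      # assumption: the boundary value counts as low
        return "low"
    if amount_eur <= 150_000:     # assumption: the boundary value counts as medium
        return "medium"
    return "high"


# Hypothetical budgets, only to exercise each branch.
budgets = [5_000, 20_000, 75_000, 150_000, 2_000_000]
labels = [budget_range(b) for b in budgets]
print(labels)
```

In Spark the same rule would normally be expressed as a column expression rather than a Python function, so that it runs in a distributed way.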

Resources used

To complete this exercise we use:

Analytical tools

- Python, the main language of the project

- Google Colab, to run Spark and create Notebooks in a simple way

- PySpark, for data processing in the cleaning and modeling stages

- Pandas, for small auxiliary operations

- Plotly, for some interactive visualizations

Data

Official dataset of the National System for the Publicity of Subsidies and Public Aid (BDNS), downloaded from the subsidy portal of the Ministry of Finance.

The data used in this exercise were downloaded on August 28, 2025. The reuse of data from the National System for the Publicity of Subsidies and Public Aid is subject to the legal conditions set out in https://www.infosubvenciones.es/bdnstrans/GE/es/avisolegal.

Development of the exercise

The project is divided into several phases, following the natural flow of a real Data Science case.

5.1. Data Dump and Transformation

In this first section we automatically download the subsidy dataset from the API of the portal of the National System for the Publicity of Subsidies and Public Aid (BDNS). We then convert the data to Parquet, a columnar format optimized for exploration and analysis.

In this process we will use some more advanced concepts, such as:

- Asynchronous functions: they allow two or more independent operations to run concurrently, which makes the process more efficient.

- Rotating writer: when a size limit is exceeded, the file being written is closed and a new one is opened with the next auto-incremented index. This avoids producing files that are too large and improves efficiency.
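
The two ideas above can be sketched together with the standard library alone. This is an illustrative example under stated assumptions: `fetch_page` is a stand-in that fabricates records instead of calling the real API, the class name `RotatingJsonlWriter` is hypothetical, and the output is JSON Lines rather than Parquet to keep the sketch dependency-free.

```python
import asyncio
import json
import tempfile
from pathlib import Path


class RotatingJsonlWriter:
    """Write records as JSON Lines, starting a new file every `max_records`."""

    def __init__(self, out_dir: Path, max_records: int) -> None:
        self.out_dir = out_dir
        self.max_records = max_records
        self.index = 0       # auto-incremented suffix of the current file
        self.count = 0       # records written to the current file
        self.handle = None

    def write(self, record: dict) -> None:
        # Once the limit is reached, close the file and move to the next index.
        if self.handle is not None and self.count >= self.max_records:
            self.close()
            self.index += 1
        if self.handle is None:
            path = self.out_dir / f"part-{self.index:05d}.jsonl"
            self.handle = path.open("w", encoding="utf-8")
            self.count = 0
        self.handle.write(json.dumps(record) + "\n")
        self.count += 1

    def close(self) -> None:
        if self.handle is not None:
            self.handle.close()
            self.handle = None


async def fetch_page(page: int) -> list[dict]:
    """Stand-in for an HTTP request: returns two fake records per page."""
    await asyncio.sleep(0.01)  # simulates network latency
    return [{"page": page, "row": i} for i in range(2)]


async def download(pages: int, out_dir: Path) -> int:
    # Launch all page requests concurrently and wait for every result.
    results = await asyncio.gather(*(fetch_page(p) for p in range(pages)))
    writer = RotatingJsonlWriter(out_dir, max_records=3)
    for records in results:
        for record in records:
            writer.write(record)
    writer.close()
    return len(list(out_dir.glob("part-*.jsonl")))


out_dir = Path(tempfile.mkdtemp())
n_files = asyncio.run(download(pages=5, out_dir=out_dir))
print(n_files)  # 10 records at 3 per file are spread over 4 files
```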

Figure 1. Screenshot of the API of the National System for the Publicity of Subsidies and Public Aid

5.2. Exploratory analysis

The aim of this phase is to get a first idea of the characteristics of the data and its quality.

We will analyze, among others, aspects such as:

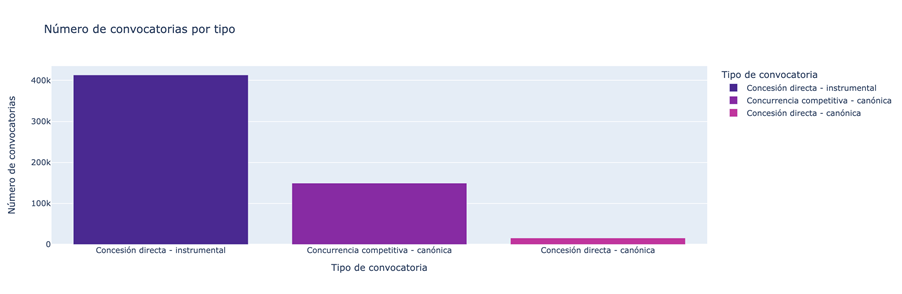

- Which types of subsidies have the highest number of calls.

Figure 2. Types of grants with the highest number of calls for applications.

- What is the distribution of subsidies according to their purpose (e.g. culture, education, employment promotion).

Figure 3. Distribution of grants according to their purpose.

- Which purposes account for the largest budget volume.

Figure 4. Purposes with the largest budgetary volume.

5.3. Modelling: construction of the budget classifier

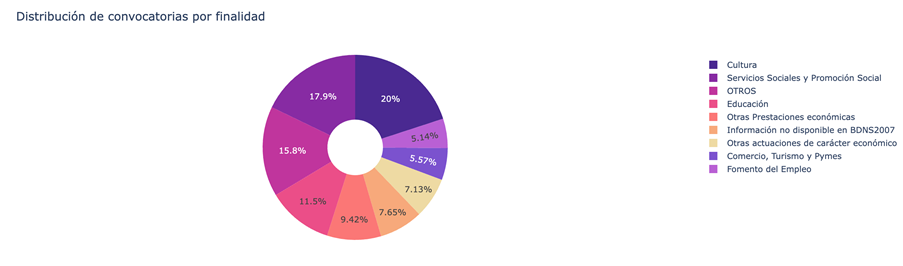

At this point, we enter the most analytical part of the exercise: teaching a machine to predict whether a new call will have a low, medium or high budget based on its administrative characteristics. To achieve this, we designed a complete machine learning pipeline in Spark that allows us to transform the data, train the model, and evaluate it in a uniform and reproducible way.

First, we prepare all the variables – many of them categorical, such as the convening body – so that the model can interpret them. We then combine all that information into a single vector that serves as the starting point for the learning phase.
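
To make the preparation step more concrete, here is a simplified, plain-Python sketch of the idea: each categorical value is mapped to an index, the index is expanded into a one-hot vector, and all pieces are concatenated into a single feature vector. In Spark ML this is typically done with stages such as `StringIndexer`, `OneHotEncoder` and `VectorAssembler`; whether the notebook uses exactly those stages is an assumption, and the rows and column names below are hypothetical.

```python
def fit_index(values: list[str]) -> dict[str, int]:
    """Assign an integer index to each category, most frequent first."""
    counts: dict[str, int] = {}
    for value in values:
        counts[value] = counts.get(value, 0) + 1
    # Ties are broken alphabetically so the result is deterministic.
    ordered = sorted(counts, key=lambda c: (-counts[c], c))
    return {category: i for i, category in enumerate(ordered)}


def one_hot(index: int, size: int) -> list[float]:
    """Return a vector of zeros with a single 1.0 at position `index`."""
    vector = [0.0] * size
    vector[index] = 1.0
    return vector


# Hypothetical rows standing in for calls.
rows = [
    {"body": "Ministry A", "region": "Madrid", "year": 2024},
    {"body": "City Council B", "region": "Galicia", "year": 2023},
    {"body": "Ministry A", "region": "Galicia", "year": 2025},
]

body_index = fit_index([r["body"] for r in rows])
region_index = fit_index([r["region"] for r in rows])

# Concatenate the encoded columns into one feature vector per row.
features = [
    one_hot(body_index[r["body"]], len(body_index))
    + one_hot(region_index[r["region"]], len(region_index))
    + [float(r["year"])]
    for r in rows
]
print(features[0])
```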

With that foundation built, we train a classification model that learns to distinguish subtle patterns in the data: which agencies tend to publish larger calls or how specific administrative elements influence the size of a grant.

Once the model is trained, we analyze its performance from different angles. We evaluate its ability to correctly classify the three budget ranges and examine its behavior using metrics such as accuracy and the confusion matrix.
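
For readers unfamiliar with these two metrics, the following sketch computes them by hand for the three budget classes. The labels and predictions are invented for illustration and are not results from the exercise.

```python
from collections import Counter

LABELS = ["low", "medium", "high"]


def confusion_matrix(actual: list[str], predicted: list[str]) -> list[list[int]]:
    """Rows are actual classes, columns are predicted classes, in LABELS order."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in LABELS] for a in LABELS]


def accuracy(actual: list[str], predicted: list[str]) -> float:
    """Share of predictions that match the actual class."""
    hits = sum(a == p for a, p in zip(actual, predicted))
    return hits / len(actual)


# Invented example data.
actual = ["low", "low", "medium", "high", "high", "medium"]
predicted = ["low", "medium", "medium", "high", "medium", "medium"]

matrix = confusion_matrix(actual, predicted)
acc = accuracy(actual, predicted)
for label, row in zip(LABELS, matrix):
    print(label, row)
print(round(acc, 3))
```

The diagonal of the matrix holds the correctly classified calls; off-diagonal cells show which ranges the model confuses with each other.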

Figure 5. Accuracy metrics.

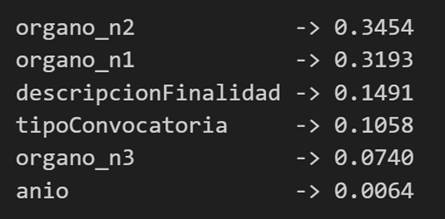

But we do not stop there: we also study which variables have had the greatest weight in the decisions of the model, which allows us to understand which factors seem most decisive when it comes to anticipating the budget of a call.

Figure 6. Variables that have had the greatest weight in the model's decisions.

Conclusions of the exercise

This laboratory will allow us to see how Spark simplifies the processing and modelling of high-volume data, especially useful in environments where administrations generate thousands of records per year, and to better understand the subsidy system after analysing some key aspects of the organisation of these calls.

Do you want to do the exercise?

If you're interested in learning more about using Spark and advanced public data analysis, you can access the repository and run the full Notebook step-by-step.

Content created by Juan Benavente, senior industrial engineer and expert in technologies related to the data economy. The content and views expressed in this publication are the sole responsibility of the author.

In the field of data science, the ability to build robust predictive models is fundamental. However, a model is not just a set of algorithms; it is a tool that must be understood, validated, and ultimately useful for decision-making.

Thanks to the transparency and accessibility of open data, we have the unique opportunity to work in this exercise with real, updated, and institutional-quality information that reflects environmental issues. This democratization of access not only allows for the development of rigorous analyses with official data but also contributes to informed public debate on environmental policies, creating a direct bridge between scientific research and societal needs.

In this practical exercise, we will dive into the complete lifecycle of a modeling project, using a real case study: the analysis of air quality in Castile and León. Unlike approaches that focus solely on the implementation of algorithms, our methodology focuses on:

- Loading and initial data exploration: identifying patterns, anomalies, and underlying relationships that will guide our modeling.

- Exploratory analysis for modeling: building visualizations and performing feature engineering to optimize the model.

- Development and evaluation of regression models: building and comparing multiple iterative models to understand how complexity affects performance.

- Model application and conclusions: using the final model to simulate scenarios and quantify the impact of potential environmental policies.

Access the data laboratory repository on GitHub.

Run the data pre-processing code on Google Colab.

Analysis Architecture

The core of this exercise follows a structured flow in four key phases, as illustrated in Figure 1. Each phase builds on the previous one, from initial data exploration to the final application of the model.

Figure 1. Phases of the predictive modeling project.

Development Process

1. Loading and Initial Data Exploration

The first step is to understand the raw material of our analysis: the data. Using an air quality dataset from Castile and León, spanning 24 years of measurements, we face common real-world challenges:

- Missing Values: variables such as CO and PM2.5 have limited data coverage.

- Anomalous Data: negative and extreme values are detected, likely due to sensor errors.

Through a process of cleaning and transformation, we convert the raw data into a clean and structured dataset, ready for modeling.
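
A minimal sketch of this kind of cleaning rule is shown below: readings that are missing, negative or implausibly large are turned into missing values. The function name and the upper limit are hypothetical and would need to be chosen per pollutant.

```python
from typing import Optional


def clean_reading(value: Optional[float], upper_limit: float) -> Optional[float]:
    """Return None for missing, negative or implausibly large readings."""
    if value is None or value < 0 or value > upper_limit:
        return None
    return value


# Invented readings: a valid value, a negative one, a gap and an extreme value.
raw_no2 = [12.5, -3.0, None, 48.0, 9999.0]
clean_no2 = [clean_reading(v, upper_limit=500.0) for v in raw_no2]

# Share of readings that survive cleaning (data coverage).
coverage = sum(v is not None for v in clean_no2) / len(clean_no2)
print(clean_no2, coverage)
```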

2. Exploratory Analysis for Modeling

Once the data is clean, we look for patterns. Visual analysis reveals a strong seasonality in NO₂ levels, with peaks in winter and troughs in summer. This observation is crucial and leads us to create new variables (feature engineering), such as cyclical components for the months, which allow the model to "understand" the circular nature of the seasons.

Figure 2. Seasonal variation of NO₂ levels in Castile and León.
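
One common way to build such cyclical components is to project the month onto a circle with sine and cosine, so that December ends up as close to January as January is to February. The sketch below illustrates that encoding; the function names are assumptions and the notebook may implement it differently.

```python
import math


def month_to_cyclical(month: int) -> tuple[float, float]:
    """Map a month (1-12) to a point on the unit circle."""
    angle = 2 * math.pi * (month - 1) / 12
    return math.sin(angle), math.cos(angle)


def month_distance(a: int, b: int) -> float:
    """Euclidean distance between two months in the encoded space."""
    return math.dist(month_to_cyclical(a), month_to_cyclical(b))


dec_jan = month_distance(12, 1)
jan_feb = month_distance(1, 2)
jan_jul = month_distance(1, 7)
print(round(dec_jan, 4), round(jan_feb, 4), round(jan_jul, 4))
```

With a plain 1 to 12 encoding, December and January would be 11 units apart; with the circular encoding, adjacent months are always equally close and opposite months are the farthest apart.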

3. Development and Evaluation of Regression Models

With a solid understanding of the data, we proceed to build three linear regression models of increasing complexity:

- Base Model: uses only pollutants as predictors.

- Seasonal Model: adds time variables.

- Complete Model: includes interactions and geographical effects.

Comparing these models allows us to quantify the improvement in predictive capability. The Seasonal Model emerges as the optimal choice, explaining almost 63% of the variability in NO₂, a remarkable result for environmental data.
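
The "share of variability explained" quoted above is the coefficient of determination, R². As a reminder of what it measures, this sketch computes it from actual and predicted values; the numbers are invented and unrelated to the exercise.

```python
def r_squared(actual: list[float], predicted: list[float]) -> float:
    """R² = 1 - (residual sum of squares / total sum of squares)."""
    mean = sum(actual) / len(actual)
    ss_res = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    ss_tot = sum((a - mean) ** 2 for a in actual)
    return 1 - ss_res / ss_tot


actual = [10.0, 20.0, 30.0, 40.0]

# A model that predicts every value exactly explains all the variability.
perfect = r_squared(actual, actual)

# A model that always predicts the mean explains none of it.
baseline = r_squared(actual, [25.0] * len(actual))

print(perfect, baseline)
```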

4. Model Application and Conclusions

Finally, we subject the model to a rigorous diagnosis and use it to simulate the impact of environmental policies. For example, our analysis estimates that a 20% reduction in NO emissions could translate into a 4.8% decrease in NO₂ levels.

Figure 3. Performance of the seasonal model. The predicted values align well with the actual values.
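
To illustrate how a fitted linear model can be used for this kind of "what if" scenario, the sketch below compares the prediction at a baseline NO level with the prediction after reducing NO by 20%. The intercept, coefficient and baseline value are hypothetical, chosen only for readability, and do not reproduce the figures from the exercise.

```python
def predict_no2(no_level: float, intercept: float, no_coef: float) -> float:
    """Prediction of a one-variable linear model: NO2 = intercept + coef * NO."""
    return intercept + no_coef * no_level


# Hypothetical model parameters and baseline NO level.
INTERCEPT = 10.0
NO_COEF = 0.5
baseline_no = 20.0

baseline_pred = predict_no2(baseline_no, INTERCEPT, NO_COEF)
scenario_pred = predict_no2(baseline_no * 0.8, INTERCEPT, NO_COEF)  # 20% less NO

# Relative change in predicted NO2 between the scenario and the baseline.
change_pct = (scenario_pred - baseline_pred) / baseline_pred * 100
print(baseline_pred, scenario_pred, change_pct)
```

Because of the intercept, a 20% cut in the predictor does not produce a 20% cut in the prediction, which is why the simulated effect on NO₂ is smaller than the reduction applied to NO.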

What can you learn?

This practical exercise allows you to learn:

- Data project lifecycle: from cleaning to application.

- Linear regression techniques: construction, interpretation, and diagnosis.

- Handling time-series data: capturing seasonality and trends.

- Model validation: techniques like cross-validation and temporal validation.

- Communicating results: how to translate findings into actionable insights.

Conclusions and Future

This exercise demonstrates the power of a structured and rigorous approach in data science. We have transformed a complex dataset into a predictive model that is not only accurate but also interpretable and useful.

For those interested in taking this analysis to the next level, the possibilities are numerous:

- Incorporation of meteorological data: variables such as temperature and wind could significantly improve accuracy.

- More advanced models: exploring techniques such as Generalized Additive Models (GAM) or other machine learning algorithms.

- Spatial analysis: investigating how pollution patterns vary between different locations.

In summary, this exercise not only illustrates the application of regression techniques but also underscores the importance of an integrated approach that combines statistical rigor with practical relevance.

Data sharing has become a critical pillar for the advancement of analytics and knowledge exchange, both in the private and public sectors. Organizations of all sizes and industries—companies, public administrations, research institutions, developer communities, and individuals—find strong value in the ability to share information securely, reliably, and efficiently.

This exchange goes beyond raw data or structured datasets. It also includes more advanced data products such as trained machine learning models, analytical dashboards, scientific experiment results, and other complex artifacts that have significant impact through reuse. In this context, the governance of these resources becomes essential. It is not enough to simply move files from one location to another; it is necessary to guarantee key aspects such as access control (who can read or modify a given resource), traceability and auditing (who accessed it, when, and for what purpose), and compliance with regulations or standards, especially in enterprise and governmental environments.

To address these requirements, Unity Catalog emerges as a next-generation metastore, designed to centralize and simplify the governance of data and data-related resources. Originally part of the services offered by the Databricks platform, the project has now transitioned into the open source community, becoming a reference standard. This means that it can now be freely used, modified, and extended, enabling collaborative development. As a result, more organizations are expected to adopt its cataloging and sharing model, promoting data reuse and the creation of analytical workflows and technological innovation.

Figure 1. Image. Source: https://docs.unitycatalog.io/

Access the data lab repository on GitHub.

Run the data preprocessing code on Google Colab

Objectives

In this exercise, we will learn how to configure Unity Catalog, a tool that helps us organize and share data securely in the cloud. Although we will use some code, each step will be explained clearly so that even those with limited programming experience can follow along through a hands-on lab.

We will work with a realistic scenario in which we manage public transportation data from different cities. We’ll create data catalogs, configure a database, and learn how to interact with the information using tools like Docker, Apache Spark, and MLflow.

Difficulty level: Intermediate.

Figure 2. Unity Catalog schematic

Required Resources

In this section, we’ll explain the prerequisites and resources needed to complete this lab. The lab is designed to be run on a standard personal computer (Windows, macOS, or Linux).

We will be using the following tools and environments:

- Docker Desktop: Docker allows us to run applications in isolated environments called containers. A container is like a "box" that includes everything needed for the application to run properly, regardless of the operating system.

- Visual Studio Code: Our main working environment will be a Python Notebook, which we will run and edit using the widely adopted code editor Visual Studio Code (VS Code).

- Unity Catalog: Unity Catalog is a data governance tool that allows us to organize and control access to resources such as tables, data volumes, functions, and machine learning models. In this lab, we will use its open source version, which can be deployed locally, to learn how to manage data catalogs with permission control, traceability, and hierarchical structure. Unity Catalog acts as a centralized metastore, making data collaboration and reuse more secure and efficient.

- Amazon Web Services (AWS): AWS will serve as our cloud provider to host some of the lab’s data, specifically raw data files (such as JSON) that we will manage using data volumes. We’ll use the Amazon S3 service to store these files and configure the necessary credentials and permissions so that Unity Catalog can interact with them in a controlled manner.

Key Learnings from the Lab

Throughout this hands-on exercise, participants will deploy the application, understand its architecture, and progressively build a data catalog while applying best practices in organization, access control, and data traceability.

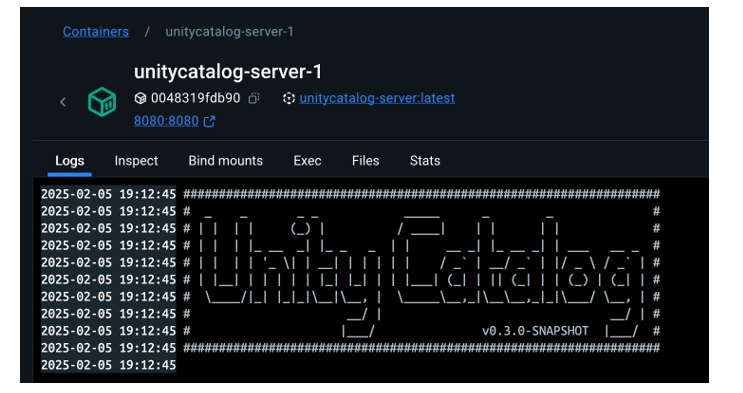

Deployment and First Steps

- We clone the Unity Catalog repository and launch it using Docker.

- We explore its architecture: a backend accessible via API and CLI, and an intuitive graphical user interface.

- We navigate the core resources managed by Unity Catalog: catalogs, schemas, tables, volumes, functions, and models.

Figure 2. Screenshot

What Will We Learn Here?

How to launch the application, understand its core components, and start interacting with it through different interfaces: the web UI, API, and CLI.

Resource Organization

- We configure an external MySQL database as the metadata repository.

- We create catalogs to represent different cities and schemas for various public services.

Figure 3. Screenshot

What Will We Learn Here?

How to structure data governance at different levels (city, service, dataset) and manage metadata in a centralized and persistent way.
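
Unity Catalog addresses resources through a three-level namespace, `catalog.schema.table`. The sketch below models that hierarchy in plain Python to show how the city, service and dataset levels map onto it. The class and the example names are hypothetical and are not taken from the lab.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TableRef:
    catalog: str  # here: a city
    schema: str   # here: a public service
    table: str    # here: a dataset

    @property
    def full_name(self) -> str:
        """Fully qualified name in catalog.schema.table form."""
        return f"{self.catalog}.{self.schema}.{self.table}"


# Hypothetical resources for two cities.
refs = [
    TableRef("madrid", "buses", "routes"),
    TableRef("madrid", "buses", "stops"),
    TableRef("bilbao", "buses", "routes"),
]

# Group the fully qualified names by catalog (city).
by_catalog: dict[str, list[str]] = {}
for ref in refs:
    by_catalog.setdefault(ref.catalog, []).append(ref.full_name)

print(by_catalog)
```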

Data Construction and Real-World Usage

- We create structured tables to represent routes, buses, and bus stops.

- We load real data into these tables using PySpark.

- We set up an AWS S3 bucket as raw data storage (volumes).

- We upload JSON telemetry event files and govern them from Unity Catalog.

Figure 4. Diagram

What Will We Learn Here?

How to work with different types of data (structured and unstructured), and how to integrate them with external sources like AWS S3.

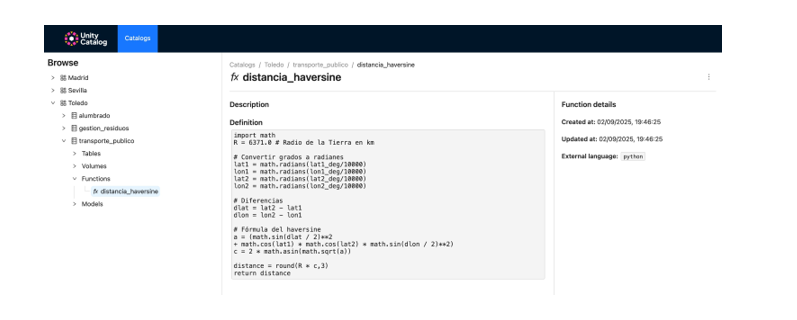

Reusable Functions and AI Models

- We register custom functions (e.g., distance calculation) directly in the catalog.

- We create and register machine learning models using MLflow.

- We run predictions from Unity Catalog just like any other governed resource.

Figure 5. Screenshot

What Will We Learn Here?

How to extend data governance to functions and models, and how to enable their reuse and traceability in collaborative environments.
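
As an example of the kind of logic a registered distance function might contain, here is a great-circle distance based on the haversine formula. Choosing haversine is an assumption, since the text only mentions "distance calculation"; the function name and the Earth radius constant are illustrative.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, an approximation


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


# One degree of longitude along the equator.
one_degree = haversine_km(0.0, 0.0, 0.0, 1.0)
print(round(one_degree, 2))
```

Once a function like this is registered in the catalog, it can be governed and reused like any other resource, for instance to compute distances between consecutive bus stops.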

Results and Conclusions

As a result of this hands-on lab, we gained practical experience with Unity Catalog as an open platform for the governance of data and data-related resources, including machine learning models. We explored its capabilities, deployment model, and usage through a realistic use case and a tool ecosystem similar to what you might find in an actual organization.

Through this exercise, we configured and used Unity Catalog to organize public transportation data. Specifically, after completing it you will be able to:

- Install tools like Docker and Spark.

- Create catalogs, schemas, and tables in Unity Catalog.

- Load data and store it in an Amazon S3 bucket.

- Implement a machine learning model using MLflow.

In the coming years, we will see whether tools like Unity Catalog achieve the level of standardization needed to transform how data resources are managed and shared across industries.

We encourage you to keep exploring data science! Access the full repository here

Content prepared by Juan Benavente, senior industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

In the current landscape of data analysis and artificial intelligence, the automatic generation of comprehensive and coherent reports represents a significant challenge. While traditional tools allow for data visualization or generating isolated statistics, there is a need for systems that can investigate a topic in depth, gather information from diverse sources, and synthesize findings into a structured and coherent report.

In this practical exercise, we will explore the development of a report generation agent based on LangGraph and artificial intelligence. Unlike traditional approaches based on templates or predefined statistical analysis, our solution leverages the latest advances in language models to:

- Create virtual teams of analysts specialized in different aspects of a topic.

- Conduct simulated interviews to gather detailed information.

- Synthesize the findings into a coherent and well-structured report.

Access the data laboratory repository on GitHub.

Run the data preprocessing code on Google Colab.

As shown in Figure 1, the complete agent flow follows a logical sequence that goes from the initial generation of questions to the final drafting of the report.

Figure 1. Agent flow diagram.

Application Architecture

The core of the application is based on a modular design implemented as an interconnected state graph, where each module represents a specific functionality in the report generation process. This structure allows for a flexible workflow, recursive when necessary, and with capacity for human intervention at strategic points.

Main Components

The system consists of three fundamental modules that work together:

1. Virtual Analysts Generator

This component creates a diverse team of virtual analysts specialized in different aspects of the topic to be investigated. The flow includes:

- Initial creation of profiles based on the research topic.

- Human feedback point that allows reviewing and refining the generated profiles.

- Optional regeneration of analysts incorporating suggestions.

This approach ensures that the final report includes diverse and complementary perspectives, enriching the analysis.

2. Interview System

Once the analysts are generated, each one participates in a simulated interview process that includes:

- Generation of relevant questions based on the analyst's profile.

- Information search in sources via Tavily Search and Wikipedia.

- Generation of informative responses combining the obtained information.

- Automatic decision on whether to continue or end the interview based on the information gathered.

- Storage of the transcript for subsequent processing.
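
The interview loop above, with its automatic decision to continue or stop, is a good example of a conditional edge in a state graph. The sketch below reproduces the idea in plain Python without any library: it is not the LangGraph API, the node functions return canned text instead of calling a language model or a search tool, and all names are hypothetical.

```python
from typing import Callable

State = dict
Node = Callable[[State], State]


def ask_question(state: State) -> State:
    """Stand-in for the analyst generating the next question."""
    turn = len(state["transcript"]) + 1
    return {**state, "question": f"Question {turn}"}


def answer_question(state: State) -> State:
    """Stand-in for searching sources and writing an answer."""
    entry = (state["question"], f"Answer to {state['question']}")
    return {**state, "transcript": state["transcript"] + [entry]}


def route_after_answer(state: State) -> str:
    # Conditional edge: keep interviewing until the turn limit is reached.
    return "end" if len(state["transcript"]) >= state["max_turns"] else "ask"


NODES: dict[str, Node] = {"ask": ask_question, "answer": answer_question}
EDGES: dict[str, Callable[[State], str]] = {
    "ask": lambda state: "answer",   # fixed edge
    "answer": route_after_answer,    # conditional edge
}


def run(state: State, start: str = "ask") -> State:
    """Walk the graph from `start` until a router returns "end"."""
    current = start
    while current != "end":
        state = NODES[current](state)
        current = EDGES[current](state)
    return state


final = run({"transcript": [], "max_turns": 2})
print(final["transcript"])
```

Keeping the routing decision in its own function is what makes the flow recursive when necessary: the same two nodes are revisited until the router decides the interview is complete.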

The interview system represents the heart of the agent, where the information that will nourish the final report is obtained. As shown in Figure 2, this process can be monitored in real time through LangSmith, an observability tool that allows tracking each step of the flow.

Figure 2. System monitoring via LangSmith. Concrete example of an analyst-interviewer interaction.

3. Report Generator

Finally, the system processes the interviews to create a coherent report through:

- Writing individual sections based on each interview.

- Creating an introduction that presents the topic and structure of the report.

- Organizing the main content that integrates all sections.

- Generating a conclusion that synthesizes the main findings.

- Consolidating all sources used.

Figure 3 shows an example of the report resulting from the complete process, demonstrating the quality and structure of the final document generated automatically.

Figure 3. View of the report resulting from the automatic generation process to the prompt "Open data in Spain".

What can you learn?

This practical exercise allows you to learn:

Integration of advanced AI in information processing systems:

- How to communicate effectively with language models.

- Techniques to structure prompts that generate coherent and useful responses.

- Strategies to simulate virtual teams of experts.

Development with LangGraph:

- Creation of state graphs to model complex flows.

- Implementation of conditional decision points.

- Design of systems with human intervention at strategic points.

Parallel processing with LLMs:

- Parallelization techniques for tasks with language models.

- Coordination of multiple independent subprocesses.

- Methods for consolidating scattered information.

Good design practices:

- Modular structuring of complex systems.

- Error handling and retries.

- Tracking and debugging workflows through LangSmith.

Conclusions and future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between data and end users. Through the practical case developed, we can observe how the combination of advanced language models with flexible architectures based on graphs opens new possibilities for automatic report generation.

The ability to simulate virtual expert teams, perform parallel research and synthesize findings into coherent documents represents a significant step towards the democratization of the analysis of complex information.

For those interested in expanding the capabilities of the system, there are multiple promising directions for its evolution:

- Incorporation of automatic data verification mechanisms to ensure accuracy.

- Implementation of multimodal capabilities that allow incorporating images and visualizations.

- Integration with more sources of information and knowledge bases.

- Development of more intuitive user interfaces for human intervention.

- Expansion to specialized domains such as medicine, law or sciences.

In summary, this exercise not only demonstrates the feasibility of automating the generation of complex reports through artificial intelligence, but also points to a promising path towards a future where deep analysis of any topic is within everyone's reach, regardless of their level of technical experience. The combination of advanced language models, graph architectures and parallelization techniques opens a range of possibilities to transform the way we generate and consume information.

Introduction

In previous content, we have explored in depth the exciting world of Large Language Models (LLMs) and, in particular, the Retrieval Augmented Generation (RAG) techniques that are revolutionising the way we interact with conversational agents. This exercise marks a milestone in our series, as we will not only explain the concepts, but also guide you step-by-step in building your own RAG-powered conversational agent. For this, we will use a Google Colab notebook.

Access the data lab repository on GitHub.

Execute the data pre-processing code on Google Colab.

Through this notebook, we will build a chat that uses RAG to improve its responses, starting from scratch. The notebook will guide the user through the whole process:

- Installation of dependencies.

- Setting up the environment.

- Integration of a source of information in the form of a post.

- Incorporation of this source into the chat knowledge base using RAG techniques.

- Finally, we can see how the model's response changes before and after providing the post and asking a specific question about its content.
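
Before going into the notebook, the core RAG idea can be sketched in a few lines: retrieve the passages most related to the question and place them in the prompt sent to the model. The example below is a toy under stated assumptions: retrieval is a simple word-overlap ranking instead of vector embeddings, no language model is called, and it does not use the LangChain API.

```python
import re


def tokenize(text: str) -> set[str]:
    """Lowercase the text and return its set of words."""
    return set(re.findall(r"\w+", text.lower()))


def retrieve(question: str, chunks: list[str], k: int = 1) -> list[str]:
    """Rank chunks by the number of words shared with the question."""
    q_words = tokenize(question)
    ranked = sorted(chunks, key=lambda c: len(q_words & tokenize(c)), reverse=True)
    return ranked[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine the retrieved passages and the question into a single prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"


# Invented knowledge base standing in for the chunks of a post.
chunks = [
    "Open data portals publish datasets for reuse.",
    "RAG retrieves relevant passages before the model generates an answer.",
    "Parquet is a columnar file format.",
]
question = "What does RAG do before generating an answer?"

context = retrieve(question, chunks)
prompt = build_prompt(question, context)
print(prompt)
```

In a real application the resulting prompt would be sent to the language model, which is what makes the answer change once the post has been added to the knowledge base.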

Tools used

Before starting, it is necessary to introduce the tools we have used and explain why we have chosen them. To build this RAG application we have used three tools: Google Colab, OpenAI and LangChain. Both Google Colab and OpenAI are old acquaintances that we have used several times in previous content. Therefore, in this section, we pay special attention to explaining what LangChain is, as it is a new tool that we have not used in previous posts.

- Google Colab. As usual in our exercises, when computing resources and a user-friendly programming environment are needed, we use Google Colab wherever possible. Google Colab ensures that any user who wants to reproduce the exercise can do so without complications arising from each programmer's particular environment configuration. It should be noted that adapting this exercise, which is inspired by previous resources available in LangChain, to the Google Colab environment has been a challenge.

- OpenAI. As the provider of ChatGPT, OpenAI offers a variety of powerful large language models (LLMs), such as GPT-4, GPT-4o and GPT-4o mini, which are used to process and generate natural language text. In this case, the OpenAI language model is used in the answer generation stage, where the user's question and the retrieved documents are combined to produce an accurate answer.

- LangChain. It is an open source framework (a set of libraries) designed to facilitate the development of applications based on large language models (LLMs). This framework is especially useful for integrating and managing complex flows that combine multiple components, such as language models, vector databases, and information retrieval tools, among others.

LangChain is widely used in the development of applications such as:

- Question and answer systems (QA systems).

- Virtual assistants with specific knowledge.

- Customised text generation systems.

- Data analysis tools based on natural language.

Key features of LangChain

- Modularity and flexibility. LangChain is designed with a modular architecture that allows developers to connect different tools and services. This includes language models (such as OpenAI, Hugging Face, or local LLMs) and vector databases (such as Pinecone, ChromaDB or Weaviate). The list of chat models that can be used through LangChain is extensive.

- Support for RAG (Retrieval Augmented Generation) techniques. LangChain facilitates the implementation of RAG techniques by enabling the direct integration of information retrieval and text generation models. This improves the accuracy of responses by enabling LLMs to work with up-to-date and specific knowledge.

- Optimising the handling of prompts. LangChain helps to design and manage complex prompts efficiently. It makes it possible to dynamically build a relevant context for the model, optimising the use of tokens and ensuring that responses are accurate and useful.

- Tokens represent the basic units that an AI model uses to process text. A token can be a whole word, a part of a word or a punctuation mark. In the sentence "¡Hola mundo!" there are, for example, four different tokens: "¡", "Hola", "mundo", "!". Text processing requires more computational resources as the number of tokens increases. Free versions of AI models, including the one we use in this exercise, set limits on the number of tokens that can be processed.

- Integration of multiple data sources. The framework can connect to various data sources, such as databases, APIs or user-uploaded documents. This makes it ideal for building applications that need access to large volumes of structured or unstructured information.

- Interoperability with multiple LLMs. LangChain is agnostic with respect to the language model provider, which means that you can use OpenAI, Cohere, Anthropic or even locally hosted language models.
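
To make the notion of tokens mentioned in the list above more tangible, the sketch below splits a text into words and punctuation marks. This is a deliberately naive splitter used only for illustration: real language models rely on subword tokenizers, so their token counts will generally differ.

```python
import re


def naive_tokens(text: str) -> list[str]:
    """Each word is one token; every other non-space character is its own token."""
    return re.findall(r"\w+|[^\w\s]", text)


# Spanish greeting with opening and closing exclamation marks.
tokens = naive_tokens("¡Hola mundo!")
print(tokens, len(tokens))
```

Counting tokens before sending a prompt is a simple way to check that a request stays within the limits imposed by the model being used.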

To conclude this section, it is worth noting the open source nature of LangChain, which facilitates collaboration and innovation in the development of applications based on language models. In addition, LangChain gives us great flexibility because it allows developers to easily integrate different LLMs, vectorisers and even final web interfaces into their applications.

Step-by-step exploration of the exercise: introduction to the Repository

The GitHub repository that we will use contains all the resources needed to build our RAG application. Inside, you will find:

- README: this file provides an overview of the project, instructions for use and additional resources.

- Jupyter Notebook: the example has been developed in the Jupyter Notebook format, which we have already used in the past to code practical exercises that combine a text document with code snippets executable in Google Colab. It contains the detailed implementation of the application, including data loading and processing, integration with language models such as GPT-4, configuration of information retrieval systems and generation of responses based on the retrieved data.

Notebook: preparing the Environment

Before starting, make sure you meet the following requirements.

- Basic knowledge of Python and Natural Language Processing (NLP): although the notebook is self-explanatory, a basic understanding of these concepts will facilitate learning.

- Access to Google Colab: the notebook runs in this environment, which provides the necessary infrastructure.

- Active accounts with OpenAI and LangChain, with valid API keys. These services are free and essential for running the notebook. Once you have registered, generate an API key for each service and keep it at hand so that you can paste it when the corresponding code snippet asks for it. If you need help obtaining the keys, a conversational assistant such as ChatGPT or Google Gemini can guide you step by step, and there are many video tutorials on YouTube.

- OpenAI API: https://openai.com/api/

- LangChain API: https://www.langchain.com/

Exploring the Notebook: block by block

The notebook is divided into several blocks, each dedicated to a specific stage of the development of our RAG application. We describe each block in detail, including the code used and its explanation.

Note to user. We reproduce here the code blocks from the Colab notebook. For clarity, we have divided the code into self-contained units and formatted it to highlight Python syntax. The outputs produced by the notebook have also been formatted as JSON to make them more readable. Note that the notebook invokes language model APIs, so the model's response changes with each run. The outputs (the answers) presented in this post may therefore not be exactly the same as what you receive when running the notebook in Colab.

Block 1: Installation and initial configuration

    import os

It is very important that you run these two lines at the beginning of the exercise and do not run them again until you close and exit Google Colab.

    %%capture

    !pip install langchain --quiet

    import getpass
    os.environ["LANGCHAIN_TRACING_V2"] = "true"

When you run this snippet, a small dialogue box will appear below it. There you must paste your LangChain API key.

    !pip install -qU langchain-openai

    import getpass

When you run this snippet, a small dialogue box will appear below it. There you must paste your OpenAI API key.

In this first block, we have installed the necessary libraries for our project. Some of the most relevant are:

- openai: To interact with the OpenAI API and access models such as GPT-4.

- langchain: A framework that simplifies LLM application development.

- langchain-text-splitters: To break up long texts into smaller fragments that can be processed by language models.

- langchain-community: A collection of additional tools and components for LangChain.

- langchain-openai: To integrate LangChain with the OpenAI API.

- langgraph: To define and visualise the workflow of our RAG application.

In addition to installing the libraries, we also set up the API keys for OpenAI and LangChain, using the getpass.getpass() function to enter them securely.
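As a minimal sketch of this key handling, the helper below asks for a key only when the corresponding environment variable is not yet set, so re-running the cell does not prompt again. The helper name `ensure_api_key` is hypothetical, and the variable names `LANGCHAIN_API_KEY` and `OPENAI_API_KEY` in the comments are assumptions about what the notebook sets; only `LANGCHAIN_TRACING_V2` appears in the code above.

```python
import getpass
import os


def ensure_api_key(env_var, prompt_fn=getpass.getpass):
    """Store a key in the environment, prompting only if it is not already set."""
    if not os.environ.get(env_var):
        # getpass hides the typed value, so the key is not echoed in the notebook output
        os.environ[env_var] = prompt_fn(f"Paste your {env_var}: ")


# In the notebook this could be called once per service, for example:
# ensure_api_key("LANGCHAIN_API_KEY")   # assumed variable name
# ensure_api_key("OPENAI_API_KEY")      # assumed variable name
```

Keeping the keys in environment variables means they never appear in the notebook's code, which matters if you later share the notebook.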

Block 2: Initialising the interaction with the LLM

Next, we start the first programmatic interaction with the language model by passing it our first prompt. To check that everything works, we ask it to translate a simple sentence.

    import getpass
    import os

If everything went well we will get an output like this:

|

{ |

This block is a basic introduction to using an LLM for a simple task: translation. The OpenAI API key is configured and a gpt-4o-mini language model is instantiated using ChatOpenAI.

Two messages are defined:

- SystemMessage: Instruction to the model for translating from English into Italian.

- HumanMessage: The text to be translated ("hi!").

Finally, the model is invoked with llm.invoke(messages) to get the translation.
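The exchange described above can be sketched as follows. This is an illustration under stated assumptions, not necessarily the notebook's exact code: it uses (role, content) tuples, which LangChain chat models accept in place of SystemMessage and HumanMessage objects, and the function names are hypothetical. The call to the model is wrapped in a function so that nothing is sent until you invoke it with a valid OpenAI key in the environment.

```python
def build_translation_messages(text, target_language="Italian"):
    """Return the system instruction and the human text as (role, content) pairs."""
    return [
        ("system", f"Translate the following from English into {target_language}"),
        ("human", text),
    ]


def translate(text):
    """Send the messages to the model; needs langchain-openai and an OpenAI API key."""
    # Imported here so that the helper above can be used without the package installed
    from langchain_openai import ChatOpenAI

    llm = ChatOpenAI(model="gpt-4o-mini")
    return llm.invoke(build_translation_messages(text)).content


print(build_translation_messages("hi!"))
# translate("hi!")  # uncomment in Colab once the key has been set
```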

Block 3: Creating Embeddings

To understand the concept of Embeddings applied to the context of natural language processing, we recommend reading this post.

    import getpass
    pip install -qU langchain-core
    from langchain_core.vectorstores import InMemoryVectorStore

When you run this snippet, a small dialogue box will appear below the snippet. There you must paste your OpenAI API Key.

This block focuses on the creation of embeddings: vector representations of text that capture its semantic meaning. We use the OpenAIEmbeddings class to access OpenAI's text-embedding-3-large model, which generates high-quality embeddings.

The embeddings will be stored in an InMemoryVectorStore, an in-memory data structure that allows efficient searches based on semantic similarity.
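To illustrate what a search based on semantic similarity means, here is a toy sketch in plain Python. The class `ToyVectorStore` and the three-dimensional vectors are invented for the example; real embeddings have far more dimensions and come from the embedding model, and this is not how InMemoryVectorStore is implemented internally. The sketch ranks stored texts by cosine similarity to a query vector.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: close to 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class ToyVectorStore:
    """Keeps (text, vector) pairs in memory and ranks them against a query vector."""

    def __init__(self):
        self._items = []

    def add(self, text, vector):
        self._items.append((text, vector))

    def similarity_search(self, query_vector, k=2):
        ranked = sorted(
            self._items,
            key=lambda item: cosine_similarity(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = ToyVectorStore()
store.add("RAG combines retrieval and generation", [0.9, 0.1, 0.0])
store.add("Fine-tuning adapts a model to a task", [0.1, 0.9, 0.0])
store.add("Embeddings are vector representations of text", [0.0, 0.1, 0.9])

# A query vector pointing in roughly the same direction as the first text
print(store.similarity_search([0.8, 0.2, 0.0], k=1))
```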

Block 4: Implementing RAG

    # RAG
    import bs4
    from langchain_community.document_loaders import WebBaseLoader

    # Keep only the post title, the headers and the content of the HTML
    # (note: as written, the strainer is not passed to the loader below)
    bs4_strainer = bs4.SoupStrainer(class_=("post-title", "post-header", "post-content"))
    loader = WebBaseLoader(
        web_paths=("https://datos.gob.es/es/blog/slm-llm-rag-y-fine-tuning-pilares-de-la-ia…",)
    )
    docs = loader.load()

    assert len(docs) == 1
    print(f"Total characters: {len(docs[0].page_content)}")

    from langchain_text_splitters import RecursiveCharacterTextSplitter

    text_splitter = RecursiveCharacterTextSplitter(
        chunk_size=1000,
        chunk_overlap=200,
        add_start_index=True,  # record where each fragment starts in the original text
    )
    all_splits = text_splitter.split_documents(docs)
    print(f"Split blog post into {len(all_splits)} sub-documents.")

    # Embed the fragments and add them to the vector store created in Block 3
    document_ids = vector_store.add_documents(documents=all_splits)
    print(document_ids[:3])

This block is the heart of the RAG implementation. It starts by loading the content of a post, using WebBaseLoader and the URL of the post on SLM, LLM, RAG and Fine-tuning.

To prepare our Retrieval Augmented Generation (RAG) system, we start by processing the text of the post using segmentation techniques. This initial step is crucial, as we break down the content into smaller fragments that are complete in meaning. We use LangChain tools to perform this segmentation, assigning each fragment a unique identifier (id). This prior preparation allows us to subsequently perform efficient and accurate searches when the system needs to retrieve relevant information to answer queries.

A bs4.SoupStrainer is defined to keep only the relevant sections of the HTML. The text of the post is split into smaller fragments with RecursiveCharacterTextSplitter, ensuring overlap between fragments to maintain context. These fragments are added to the vector_store created in the previous block, generating embeddings for each one.
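The effect of chunk size and overlap can be seen with a simplified sketch. Unlike RecursiveCharacterTextSplitter, which prefers to cut at natural separators such as paragraph breaks, the hypothetical function `split_with_overlap` below cuts at fixed positions; it is only meant to show how consecutive fragments share their boundary text and how a start index is recorded.

```python
def split_with_overlap(text, chunk_size=1000, chunk_overlap=200):
    """Cut text into fixed-size chunks where each chunk repeats the tail of the previous one."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap
    chunks = []
    for start in range(0, len(text), step):
        chunks.append({"start_index": start, "text": text[start:start + chunk_size]})
        if start + chunk_size >= len(text):
            break  # the last chunk already reaches the end of the text
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"
for chunk in split_with_overlap(sample, chunk_size=10, chunk_overlap=4):
    print(chunk)
```

The overlap means that a sentence falling on a boundary still appears whole in at least one fragment, at the cost of storing some text twice.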

The output tells us that the post has been split into 21 sub-documents.

    Split blog post into 21 sub-documents.

Each document has its own identifier. For example, the first 3 are identified as:

    ["409f1bcb-1710-49b0-80f8-e45b7ca51a96", "e242f16c-71fd-4e7b-8b28-ece6b1e37a1c", "9478b11c-61ab-4dac-9903-f8485c4770c6"]

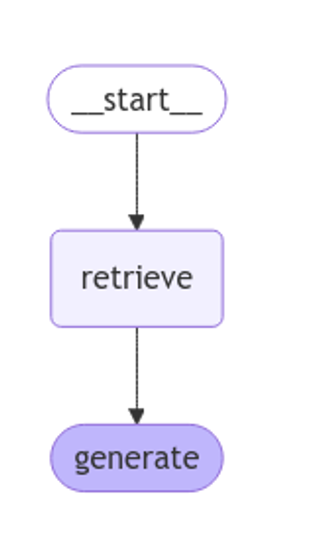

Block 5: Defining the Prompt and visualising the workflow

    from langchain import hub

    # Pull a predefined RAG prompt from the LangChain Hub
    prompt = hub.pull("rlm/rag-prompt")

    example_messages = prompt.invoke(
        {"context": "(context goes here)", "question": "(question goes here)"}
    ).to_messages()

    assert len(example_messages) == 1
    print(example_messages[0].content)

    from langchain_core.documents import Document
    from typing_extensions import List, TypedDict


    class State(TypedDict):
        question: str
        context: List[Document]
        answer: str


    def retrieve(state: State):
        # Look up the fragments most similar to the question
        retrieved_docs = vector_store.similarity_search(state["question"])
        return {"context": retrieved_docs}


    def generate(state: State):
        # Join the retrieved fragments and pass them to the LLM as context
        docs_content = "\n\n".join(doc.page_content for doc in state["context"])
        messages = prompt.invoke({"question": state["question"], "context": docs_content})
        response = llm.invoke(messages)
        return {"answer": response.content}


    from langgraph.graph import START, StateGraph

    graph_builder = StateGraph(State).add_sequence([retrieve, generate])
    graph_builder.add_edge(START, "retrieve")
    graph = graph_builder.compile()

    from IPython.display import Image, display

    display(Image(graph.get_graph().draw_mermaid_png()))

    result = graph.invoke({"question": "What is Task Decomposition?"})
    print(f"Context: {result['context']}\n\n")
    print(f"Answer: {result['answer']}")

    for step in graph.stream(
        {"question": "¿Cual es el futuro de la IA Generativa?"}, stream_mode="updates"
    ):
        print(f"{step}\n\n----------------\n")

This block defines the prompt to be used to interact with the LLM. A predefined LangChain Hub prompt (rlm/rag-prompt) is used which is designed for RAG tasks.

Two functions are defined:

- retrieve: searches the vector_store for the fragments most similar to the user's question.

- generate: generates a response using the LLM, taking into account the context provided by the retrieved fragments.
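A point worth making explicit about these two functions is that each one receives the current state and returns only the keys it updates. The toy sketch below imitates that pattern in plain Python; `run_sequence`, `toy_retrieve` and `toy_generate` are hypothetical stand-ins (keyword matching instead of vector search, string formatting instead of an LLM), and the real graph does considerably more than this loop.

```python
def run_sequence(state, steps):
    """Run each step in order and merge the partial update it returns into the state."""
    state = dict(state)
    for step in steps:
        state.update(step(state))
    return state


FRAGMENTS = [
    "RAG retrieves relevant fragments before generating an answer.",
    "Fine-tuning adjusts the weights of a model.",
]


def toy_retrieve(state):
    # Stand-in for the similarity search: keep fragments sharing a word with the question
    words = set(state["question"].lower().split())
    hits = [f for f in FRAGMENTS if words & set(f.lower().split())]
    return {"context": hits}


def toy_generate(state):
    # Stand-in for the LLM call: build an answer from the retrieved context
    summary = " ".join(state["context"])
    return {"answer": f"Based on {len(state['context'])} fragment(s): {summary}"}


result = run_sequence({"question": "what does rag do"}, [toy_retrieve, toy_generate])
print(result["answer"])
```

Because every step only adds or overwrites its own keys, new stages can be inserted in the sequence without changing the existing ones.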

langgraph is used to define the RAG workflow as a sequence of steps and to visualise it.

Figure 1: RAG workflow. Own elaboration.

Finally, the system is tested with two questions: one in English ("What is Task Decomposition?") and one in Spanish ("¿Cual es el futuro de la IA Generativa?").

The first question, "What is Task Decomposition?", is in English and is a generic question, unrelated to the content of our post. Therefore, although the system searches the knowledge base previously created by vectorising the document (post), it does not find any relation between the question and this context.

This text may vary with each execution

|

Answer: There is no explicit mention of the concept of "Task Decomposition" in the context provided. Therefore, I have no information on what Task Decomposition is. |

|

Answer: Task Decomposition is a process that decomposes a complex task into smaller, more manageable sub-tasks. This allows each subtask to be addressed independently, facilitating their resolution and improving overall efficiency. Although the context provided does not explicitly define Task Decomposition, this concept is common in AI and task optimisation. |


|

{ |

As you can see in the response, the system retrieves 4 documents (in the diagram above, this corresponds to the "retrieve" stage), each with its corresponding identifier. For example, the first document has "id": "53962c40-c08b-4547-a74a-26f63cced7e8" and corresponds to a fragment of the original post, with "title": "SLM, LLM, RAG and Fine-tuning: Pillars of Modern Generative AI | datos.gob.es".

With these 4 fragments the system considers that it has enough relevant information to provide (in the diagram above, the "generate" stage) a satisfactory answer to the question.

|

{ |

Block 6: Customising the prompt

    from langchain_core.prompts import PromptTemplate

    template = """Use the following pieces of context to answer the question at the end.
    If you don't know the answer, just say that you don't know, don't try to make up an answer.
    Use three sentences maximum and keep the answer as concise as possible.
    Always say "thanks for asking!" at the end of the answer.

    {context}

    Question: {question}

    Helpful Answer:"""
    custom_rag_prompt = PromptTemplate.from_template(template)

This block customises the prompt to make answers more concise and add a polite sentence at the end. PromptTemplate is used to create a new prompt with the desired instructions.
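To see what the model actually receives, the sketch below fills the two placeholders using Python's built-in string formatting, which follows the same `{name}` placeholder syntax as the template. The function `render_prompt` and the sample fragments are hypothetical; in the notebook this substitution is done by PromptTemplate.

```python
TEMPLATE = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""


def render_prompt(context_fragments, question):
    """Join the retrieved fragments and substitute both placeholders in the template."""
    context = "\n\n".join(context_fragments)
    return TEMPLATE.format(context=context, question=question)


print(render_prompt(["Fragment one.", "Fragment two."], "What is RAG?"))
```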

Block 7: Adding metadata and refining your search

    total_documents = len(all_splits)
    third = total_documents // 3

    # Tag each fragment with the third of the post it belongs to
    for i, document in enumerate(all_splits):
        if i < third:
            document.metadata["section"] = "beginning"
        elif i < 2 * third:
            document.metadata["section"] = "middle"
        else:
            document.metadata["section"] = "end"

    all_splits[0].metadata

    from langchain_core.vectorstores import InMemoryVectorStore

    # Rebuild the vector store so that it indexes the fragments with their new metadata
    vector_store = InMemoryVectorStore(embeddings)
    _ = vector_store.add_documents(all_splits)

    from typing import Literal
    from typing_extensions import Annotated


    class Search(TypedDict):
        """Search query."""

        query: Annotated[str, ..., "Search query to run."]
        section: Annotated[
            Literal["beginning", "middle", "end"],
            ...,
            "Section to query.",
        ]


    class State(TypedDict):
        question: str
        query: Search
        context: List[Document]
        answer: str


    def analyze_query(state: State):
        # Ask the LLM to turn the question into a structured query (text + section)
        structured_llm = llm.with_structured_output(Search)
        query = structured_llm.invoke(state["question"])
        return {"query": query}


    def retrieve(state: State):
        query = state["query"]
        # Search only among the fragments of the section chosen by the LLM
        retrieved_docs = vector_store.similarity_search(
            query["query"],
            filter=lambda doc: doc.metadata.get("section") == query["section"],
        )
        return {"context": retrieved_docs}


    def generate(state: State):
        docs_content = "\n\n".join(doc.page_content for doc in state["context"])
        messages = prompt.invoke({"question": state["question"], "context": docs_content})
        response = llm.invoke(messages)
        return {"answer": response.content}


    graph_builder = StateGraph(State).add_sequence([analyze_query, retrieve, generate])
    graph_builder.add_edge(START, "analyze_query")
    graph = graph_builder.compile()

    display(Image(graph.get_graph().draw_mermaid_png()))

    for step in graph.stream(
        {"question": "¿Cual es el futuro de la IA Generativa en palabras del autor?"},
        stream_mode="updates",
    ):
        print(f"{step}\n\n----------------\n")

In this block, metadata is added to the post fragments, dividing them into three sections: "beginning", "middle" and "end". This allows for more refined searches, limiting the search to a specific section of the post.

A new analyze_query function is introduced that uses the LLM to determine the section of the post most relevant to the user's question. The RAG workflow is updated to include this new step.
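The labelling and filtering logic can be tried in isolation with plain dictionaries. In this sketch, `label_sections` and `filter_by_section` are hypothetical helpers that mirror the integer division by three and the metadata comparison used in the block; they do not involve embeddings or the LLM.

```python
def label_sections(fragments):
    """Attach a 'section' label to each fragment according to its position in the post."""
    third = len(fragments) // 3
    labelled = []
    for i, text in enumerate(fragments):
        if i < third:
            section = "beginning"
        elif i < 2 * third:
            section = "middle"
        else:
            section = "end"
        labelled.append({"text": text, "metadata": {"section": section}})
    return labelled


def filter_by_section(labelled, section):
    """Keep only the fragments whose metadata matches the requested section."""
    return [d for d in labelled if d["metadata"].get("section") == section]


docs = label_sections([f"fragment {i}" for i in range(21)])
print({s: len(filter_by_section(docs, s)) for s in ("beginning", "middle", "end")})
```

With the 21 fragments of the post each section receives 7. When the total is not divisible by three, the remaining fragments fall into "end", and with fewer than three fragments every one is labelled "end".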

Finally, the system is tested with a question in Spanish ("¿Cual es el futuro de la IA Generativa en palabras del autor?", that is, "What is the future of Generative AI in the author's words?"), observing how the system uses the information in the "end" section of the post to generate a more accurate answer.

Let's look at the result:

Figure 2: RAG workflow. Own elaboration.

|

{ ---------------- { |

|

{ |

Conclusions

Through this tour of the notebook in Google Colab, we have experienced first-hand the construction of a conversational agent with RAG. We have learned to:

- Install the necessary libraries.

- Configure the development environment.

- Load and process data.

- Create embeddings and store them in a vector_store.

- Implement the RAG retrieval and generation stages.

- Customise the prompt to get more specific answers.

- Add metadata to refine the search.

This practical exercise provides you with the tools and knowledge you need to start exploring the potential of RAG and develop your own applications.

Dare to experiment with different information sources, language models and prompts to create increasingly sophisticated conversational agents!

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation. The contents and points of view reflected in this publication are the sole responsibility of its author.