In the field of geospatial data, encoding and standardisation play a key role in ensuring interoperability between systems and improving accessibility to information.

The INSPIRE Directive (Infrastructure for Spatial Information in Europe) lays down general rules for establishing an Infrastructure for Spatial Information in the European Community, based on the infrastructures of the Member States. Adopted by the European Parliament and the Council on March 14, 2007 (Directive 2007/2/EC), it aims to ensure that spatial information is consistent and accessible across EU Member States.

Among the various encodings available for INSPIRE datasets, the GeoPackage standard emerges as a flexible and efficient alternative to traditional formats such as GML or GeoJSON. This article will explore how GeoPackage can improve INSPIRE data management and how it can be implemented using tools such as hale studio, a visual platform that facilitates data transformation according to INSPIRE specifications.

What is GeoPackage?

GeoPackage is a standard developed by the Open Geospatial Consortium (OGC) that uses SQLite as the basis for storing geospatial information in a compact and accessible way. Unlike other formats that require intermediate transformation processes, the data in a GeoPackage file can be read and updated directly in its native format. This allows for more efficient read and write operations, especially in GIS applications.

Main features of GeoPackage

- Open format and standard: as an open standard, GeoPackage is suitable for the publication of open spatial data, facilitating access to geospatial data in formats that users can handle without costly licensing or usage restrictions.

- Unique container: a GeoPackage file can store vector data, image mosaics and non-spatial data.

- Compatibility: it is supported by several GIS platforms, including QGIS and ArcGIS, as well as ETL tools such as FME.

- Spatial indexing: the format includes spatial indexes (RTree) that allow faster data search and manipulation.

For more technical details, please refer to the GeoPackage standard on the OGC website.
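Because a GeoPackage is an SQLite database, its contents can be inspected with any SQLite client. The sketch below uses Python's built-in sqlite3 module. To stay self-contained it builds a simplified in-memory stand-in rather than opening a real file: the gpkg_contents table here keeps only a few of the columns the standard defines, and the three table names are hypothetical. With a real dataset you would instead connect to the path of the .gpkg file.

```python
import sqlite3

# 'GPKG' in ASCII: the application_id that recent versions of the
# GeoPackage standard store in the SQLite file header.
GPKG_APPLICATION_ID = 0x47504B47


def is_geopackage(conn):
    """Check the SQLite header for the GeoPackage application_id."""
    app_id = conn.execute("PRAGMA application_id").fetchone()[0]
    return app_id == GPKG_APPLICATION_ID


def list_contents(conn):
    """Return (table_name, data_type) pairs registered in gpkg_contents."""
    query = "SELECT table_name, data_type FROM gpkg_contents ORDER BY table_name"
    return conn.execute(query).fetchall()


# Stand-in for a real file (assumption): a reduced gpkg_contents table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE gpkg_contents ("
    "table_name TEXT NOT NULL PRIMARY KEY, "
    "data_type TEXT NOT NULL, "
    "identifier TEXT UNIQUE, "
    "srs_id INTEGER)"
)
conn.execute(f"PRAGMA application_id = {GPKG_APPLICATION_ID}")

# Hypothetical layers: one vector, one raster tile set, one non-spatial table.
conn.executemany(
    "INSERT INTO gpkg_contents (table_name, data_type, identifier, srs_id) "
    "VALUES (?, ?, ?, ?)",
    [
        ("noise_contours", "features", "Noise contours", 4258),
        ("ortho_mosaic", "tiles", "Orthophoto mosaic", 3857),
        ("station_codes", "attributes", "Station code list", None),
    ],
)

print(is_geopackage(conn))
for table_name, data_type in list_contents(conn):
    print(f"{table_name}: {data_type}")
```

The data_type values "features", "tiles" and "attributes" correspond to the vector, image mosaic and non-spatial content that a single GeoPackage can hold.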

Why use GeoPackage in INSPIRE?

INSPIRE requires spatial data to be interoperable at European level, and its default encoding standard is GML. However, GeoPackage is offered as an alternative that can reduce complexity in certain use cases, especially those where performance and usability are crucial.

The use of GeoPackage within INSPIRE is supported by good practices developed to create optimised logical models for ease of use in GIS environments. These practices allow the creation of use-case specific schemas and offer a flexibility that other formats do not provide. In addition, GeoPackage is especially useful in scenarios where medium to large datasets are handled, as its compact format reduces file size and therefore facilitates data exchange.

Implementation of GeoPackage in INSPIRE using hale studio

One of the recommended tools for implementing GeoPackage in INSPIRE is the open-source software hale studio. This data transformation tool allows data models to be mapped and transformed visually, without programming.

The following describes the basic steps for transforming an INSPIRE-compliant dataset using hale studio:

- Load the source model: import the dataset in its original format, such as GML.

- Define the target model (GeoPackage): load a blank GeoPackage file to act as the target model for storing the transformed data.

- Configure data mapping: through the hale visual interface, map attributes and apply transformation rules to ensure compliance with the INSPIRE GeoPackage model.

- Export the dataset: once the transformation has been validated, export the file in GeoPackage format.

Hale studio facilitates this transformation and enables data models to be optimised for improved performance in GIS environments. More information about hale studio and its transformation capabilities is available on its official website.

Examples of implementation

The application of the GeoPackage standard in INSPIRE has already been tested in several use cases, providing a solid framework for future implementations.

- Environmental Noise Directive (END): in this context, GeoPackage has been used to store and manage noise-related data, aligning the models with INSPIRE specifications. The European Environment Agency (EEA) provides templates and guidelines to facilitate this implementation, available in its repository.

- GO-PEG project: this project uses GeoPackage to develop 3D models in geology, allowing the detailed representation of geological areas, such as the Po basin in Italy. Guidelines and examples of the GO-PEG implementation are available from the project.

These examples illustrate how GeoPackage can improve the efficiency and usability of INSPIRE data in practical applications, especially in GIS environments that require fast and direct manipulation of spatial data.

The implementation of GeoPackage in the framework of INSPIRE demonstrates its applicability for open data at the European level. Initiatives such as the Environmental Noise Directive (END) and the GO-PEG Project have shown how open data in GeoPackage can serve multiple sectors, from environmental management to geological surveys.

Benefits of GeoPackage for data providers and data users

The adoption of GeoPackage in INSPIRE offers benefits for both data generators and data consumers:

- For providers: GeoPackage's simplified model reduces encoding errors and improves data harmonisation, making it easier to distribute data in compact formats.

- For users: compatibility with GIS tools allows access to data without the need for additional transformations, improving the consumption experience and reducing loading and query times.

Limitations and challenges

While GeoPackage is a robust alternative, there are some challenges to consider:

- Interoperability limitations: unlike GML, GeoPackage is not compatible with all network data publishing services, although advances in protocols such as STAC are reducing these limitations.

- Optimisation for large datasets: although GeoPackage is optimal for medium to large datasets, file size can be a constraint on extremely large data or low bandwidth networks.

Conclusion

The incorporation of the GeoPackage standard into INSPIRE represents a significant advance for the management and distribution of spatial data in Europe, promoting a more efficient and accessible spatial data infrastructure. This approach contributes to the interoperability of data and facilitates its use in various GIS systems, improving the experience of both providers and users.

For those wishing to implement this format, tools such as hale studio offer practical and accessible solutions that simplify the INSPIRE data transformation process. With the adoption of best practices and the use of optimised data models, GeoPackage can play a crucial role in the future of spatial data infrastructure in Europe. In addition, this approach, aligned with the principles of transparency and data reuse, allows administrations and organisations to take advantage of open data to support informed decision-making and the development of innovative applications in various areas.

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of its author.

Open data plays a relevant role in technological development for many reasons. For example, it is a fundamental component in informed decision making, in process evaluation or even in driving technological innovation. Provided they are of the highest quality, up-to-date and ethically sound, data can be the key ingredient for the success of a project.

In order to fully exploit the benefits of open data in society, the European Union has several initiatives to promote the data economy, a single digital model that encourages data sharing, emphasizing data sovereignty and data governance, the ideal and necessary framework for open data.

In the data economy, as stated in current regulations, the privacy of individuals and the interoperability of data are guaranteed. The regulatory framework is responsible for ensuring compliance with this premise. An example of this can be the modification of Law 37/2007 for the reuse of public sector information in compliance with European Directive 2019/1024. This regulation is aligned with the European Union's Data Strategy, which defines a horizon with a single data market in which a mutual, free and secure exchange between the public and private sectors is facilitated.

To achieve this goal, key issues must be addressed, such as preserving certain legal safeguards or agreeing on common metadata description characteristics that datasets must meet to facilitate cross-industry data access and use, i.e. using a common language to enable interoperability between dataset catalogs.

What are metadata standards?

A first step towards data interoperability and reuse is to develop mechanisms that describe data in a homogeneous way, so that the description is easy to interpret and process for both humans and machines. To this end, different vocabularies have been created and, over time, agreed upon until they have become standards.

Standardized vocabularies offer semantics that serve as a basis for the publication of data sets and act as a "legend" to facilitate understanding of the data content. In the end, it can be said that these vocabularies provide a collection of metadata to describe the data being published; and since all users of that data have access to the metadata and understand its meaning, it is easier to interoperate and reuse the data.

W3C: DCAT and DCAT-AP Standards

At the international level, several organizations that create and maintain standards can be highlighted:

- World Wide Web Consortium (W3C): developed the Data Catalog Vocabulary (DCAT), a description standard designed to facilitate interoperability between catalogs of datasets published on the web.

- Subsequently, taking DCAT as a basis, DCAT-AP was developed, a specification for the exchange of data descriptions published in data portals in Europe that has more specific DCAT-AP extensions such as:

- GeoDCAT-AP which extends DCAT-AP for the publication of spatial data.

- StatDCAT-AP which also extends DCAT-AP to describe statistical content datasets.
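To make the idea of a shared vocabulary concrete, the following sketch builds a minimal dataset description using DCAT and Dublin Core terms, serialized as JSON-LD with Python's standard library. It is a simplified illustration, not a complete DCAT-AP record: the helper name dcat_dataset, the example.org URL and the sample values are hypothetical, and real profiles require further properties and controlled-vocabulary URIs for some values.

```python
import json


def dcat_dataset(title, description, keywords, download_url, media_type):
    """Build a minimal DCAT dataset description as a JSON-LD dictionary."""
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
        },
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:description": description,
        "dcat:keyword": list(keywords),
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:downloadURL": {"@id": download_url},
                # Simplification: a plain string instead of a media type URI.
                "dcat:mediaType": media_type,
            }
        ],
    }


# Hypothetical dataset used only for illustration.
record = dcat_dataset(
    title="Strategic noise maps",
    description="Noise contours for major roads.",
    keywords=["noise", "environment"],
    download_url="https://example.org/data/noise.gpkg",
    media_type="application/geopackage+sqlite3",
)

print(json.dumps(record, indent=2))
```

Because every catalog that adopts the vocabulary uses the same property names, a harvester can read the title, keywords and download location of any dataset without portal-specific logic.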

ISO: International Organization for Standardization

In addition to the World Wide Web Consortium, other organizations are dedicated to standardization, for example the International Organization for Standardization (ISO).

- Among many other types of standards, ISO has also defined metadata standards for data catalogs:

- ISO 19115 for describing geographic information. As with DCAT, extensions and technical specifications have been developed from ISO 19115, for example:

- ISO 19115-2 for raster data and imagery.

- ISO 19139, which provides an XML implementation of the vocabulary.

The horizon in metadata standards: challenges and opportunities

It's been a long time since we first heard about the Apache Hadoop ecosystem for distributed data processing. Things have changed a lot since then, and we now use higher-level tools to build solutions based on big data payloads. However, it is important to highlight some best practices related to our data formats if we want to design truly efficient and scalable big data solutions.

Introduction

Those of us who work in the data sector know the importance of efficiency in multiple aspects of data solutions and architectures. We talk about efficiency in terms of processing times, but also in terms of occupied space and, of course, storage costs. A good decision in terms of data format types can be vital with respect to the future scalability of a data-driven solution.

To discuss this topic, in this post we bring you a reflection on the Apache Parquet data format, or simply Parquet. The first versions of Apache Parquet were released in 2013. Since 2015, Apache Parquet has been one of the flagship projects sponsored and maintained by the Apache Software Foundation (ASF). Let's get started!

What is Apache Parquet?

You may never have heard of the Apache Parquet file format before. A Parquet file contains tabular data, much like a CSV file. Although it may seem obvious, Parquet files have a .parquet extension and, unlike CSV files, they are not plain text (they are stored in binary form), which means that we cannot open and examine them with a simple text editor. Parquet is a column-oriented file format. As you may have guessed, there are also row-oriented formats, such as CSV, TSV or Avro.

But what does it mean for a data format to be row-oriented or column-oriented? In a CSV file (remember, row-oriented) each record is a row. In Parquet, however, each column is stored independently. The difference is most noticeable when we want to read only one column. In a CSV file, even though we only need the information in one column, the format forces us to read every row of the table. In Parquet, each column is accessible independently of the rest. And because the data in each column is expected to be homogeneous (of the same type), the Parquet format opens up many possibilities for encoding, compressing and optimizing data storage. Conversely, if we need to read many complete rows very often, the Parquet format will penalize those reads, since we would be using a column-oriented layout to read rows.
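The difference between the two layouts can be sketched in plain Python. This is a conceptual illustration only, not how Parquet is implemented internally: the sales records are made up, and run-length encoding is used here simply as an example of the kind of encoding that homogeneous, repetitive columns make possible.

```python
# The same table in row layout (made-up sales records).
rows = [
    {"date": "2024-01-01", "region": "north", "units": 10},
    {"date": "2024-01-01", "region": "north", "units": 12},
    {"date": "2024-01-02", "region": "south", "units": 7},
    {"date": "2024-01-02", "region": "south", "units": 7},
]


def to_columns(records):
    """Pivot a list of row dictionaries into a dictionary of column lists."""
    columns = {}
    for record in records:
        for name, value in record.items():
            columns.setdefault(name, []).append(value)
    return columns


def run_length_encode(values):
    """Collapse consecutive repeated values into [value, count] pairs."""
    runs = []
    for value in values:
        if runs and runs[-1][0] == value:
            runs[-1][1] += 1
        else:
            runs.append([value, 1])
    return runs


columns = to_columns(rows)

# Row layout: every record has to be visited to extract a single field.
units_from_rows = [record["units"] for record in rows]

# Column layout: the column is already stored on its own.
units_from_columns = columns["units"]

# Homogeneous columns with repeated values encode compactly.
region_runs = run_length_encode(columns["region"])

print(units_from_rows == units_from_columns)
print(region_runs)
```

In the row layout the cost of reading one field grows with the full width of the table, while in the column layout it depends only on the column requested.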

Another feature of Parquet is that it is a self-describing data format that embeds the schema or structure within the data itself. That is, properties (or metadata) of the data such as the type (whether it is an integer, a real or a string), the number of values, the type of compression (data can be compressed to save space), etc. are included in the file itself along with the data as such. In this way, any program used to read the data can access this metadata, for example, to determine unambiguously what type of data is expected to be read in a given column. Who has never imported a CSV into a program and found that the data is misinterpreted (numbers as text, dates as numbers, etc.)?
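The type ambiguity of CSV is easy to reproduce with Python's standard csv module, which returns every value as text. In the sketch below the column names follow the example file discussed in this post, but the values are made up, and the SCHEMA dictionary is a hypothetical stand-in for the type information that a Parquet file would carry inside itself.

```python
import csv
import io

# Made-up CSV content with a numeric, a text and a boolean column.
CSV_TEXT = "one,two,three\n-1.0,foo,True\n2.5,bar,False\n"

# A CSV reader has no type information: every value comes back as a string.
csv_rows = list(csv.DictReader(io.StringIO(CSV_TEXT)))

# Hypothetical external schema that the reader must know in advance.
SCHEMA = {
    "one": float,
    "two": str,
    "three": lambda text: text == "True",
}


def apply_schema(records, schema):
    """Convert the string values of each record using the given schema."""
    return [
        {name: schema[name](value) for name, value in record.items()}
        for record in records
    ]


typed_rows = apply_schema(csv_rows, SCHEMA)

print(csv_rows[0])
print(typed_rows[0])
```

With a self-describing format the schema travels with the data, so this conversion step, and the guesswork behind it, is unnecessary.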

As we have already mentioned, one of the disadvantages of parquet compared to CSV is that we cannot open it just by using a text editor. However, there are multiple tools to handle parquet files. To illustrate a simple example we can use parquet-tools in Python. In this example you can see the same dataset represented in parquet and csv format.

Earlier we mentioned that another differentiating feature of parquet versus CSV is that the former includes the schema of the data inside. To demonstrate it we are going to execute the command parquet-tools inspect test1.parquet.

Below we see how the tool shows us the schema of the data contained in the file organized by columns. We see, first, a summary of the number of columns, rows and format version and the size in bytes. Next, we see the name of the columns and then, for each column, the most important data, including the data type. We see how in column "one" data of type DOUBLE (suitable for real numbers) is stored, while in column "two" the data is of type BYTE_ARRAY which is used to store text strings.

############ file meta data ############

created_by: parquet-cpp version 1.5.1-SNAPSHOT

num_columns: 3

num_rows: 3

num_row_groups: 1

format_version: 1.0

serialized_size: 2226

############ Columns ############

one

two

three

############ Column(one) ############

name: one

path: one

max_definition_level: 1

max_repetition_level: 0

physical_type: DOUBLE

logical_type: None

converted_type (legacy): NONE

############ Column(two) ############

name: two

path: two

max_definition_level: 1

max_repetition_level: 0

physical_type: BYTE_ARRAY

logical_type: String

converted_type (legacy): UTF8

############ Column(three) ############

name: three

path: three

max_definition_level: 1

max_repetition_level: 0

physical_type: BOOLEAN

logical_type: None

converted_type (legacy): NONE

Summary of technical features of parquet files

- Apache Parquet is column-oriented and designed to provide efficient columnar storage compared to row-based file types such as CSV.

- Parquet files were designed with complex nested data structures in mind.

- Apache Parquet is designed to support very efficient compression and encoding schemes.

- Apache Parquet lowers storage costs for data files and maximizes the effectiveness of data queries with current cloud technologies such as Amazon Athena, Redshift Spectrum, BigQuery and Azure Data Lake.

- Licensed under the Apache license and available for any project.

What is Parquet used for?

Now that we know a little more about this data format, let's see when its use is most recommended. Undoubtedly, the natural home of Parquet is the Data Lake. Data Lakes are distributed file storage spaces widely used today to create large heterogeneous corporate data repositories in the cloud. Unlike a Data Warehouse, a Data Lake has no underlying database engine and no relational model of the data. But let's look at a practical example of the advantages of using Parquet over CSV in this type of storage.

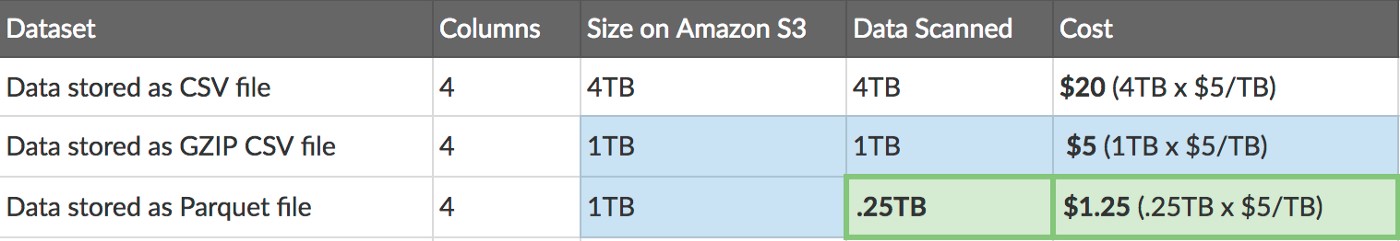

Suppose we have a dataset in table format (4 columns) representing the historical sales of a company over the last 10 years. If we store this table in CSV format in Amazon Web Services S3, it occupies 4TB. If we compress this file with GZIP, its size is reduced to a quarter (1TB). When we store this table in the same service (S3) in Parquet format, it occupies the same size as the compressed CSV. But in addition, when we want to access a part of the data - let's say 1 single column - in the case of the CSV file (as mentioned above) we have to read the entire table, since it is row-oriented storage. However, as the Parquet format is column-oriented storage, we can read a single column independently, accessing only a quarter of the information in the table, with the time and cost savings that this entails.

Original post by Thomas Spicer in Medium.com
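The arithmetic of the sales example can be sketched as follows. This is a rough illustration, not a benchmark: it assumes the four columns are of equal size, and the price per terabyte scanned is a hypothetical figure chosen only to make the comparison concrete.

```python
# Sizes taken from the example in the text, in terabytes.
CSV_SIZE_TB = 4.0
GZIP_CSV_SIZE_TB = CSV_SIZE_TB / 4    # compression reduces the file to a quarter
PARQUET_SIZE_TB = GZIP_CSV_SIZE_TB    # same size as the compressed CSV
TOTAL_COLUMNS = 4

# Hypothetical price per terabyte scanned (assumption, not a quoted rate).
PRICE_PER_TB_SCANNED = 5.0


def scanned_tb(stored_tb, columns_read, total_columns, columnar):
    """Estimate the data read by a query that needs only some columns.

    A row-oriented file must be read in full; a columnar file only needs
    the requested columns (assumed here to be of equal size).
    """
    if columnar:
        return stored_tb * columns_read / total_columns
    return stored_tb


scans = {
    "csv": scanned_tb(CSV_SIZE_TB, 1, TOTAL_COLUMNS, columnar=False),
    "csv_gzip": scanned_tb(GZIP_CSV_SIZE_TB, 1, TOTAL_COLUMNS, columnar=False),
    "parquet": scanned_tb(PARQUET_SIZE_TB, 1, TOTAL_COLUMNS, columnar=True),
}

for name, terabytes in scans.items():
    cost = terabytes * PRICE_PER_TB_SCANNED
    print(f"{name}: {terabytes} TB scanned, cost {cost}")
```

Under these assumptions, a single-column query against the Parquet file reads a quarter of what the compressed CSV requires and one sixteenth of the uncompressed CSV.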

Once we have understood the efficiency of reading data using Parquet due to the columnar access of the data, we can now understand why most of today's data storage and processing services favour Parquet over CSV. These cloud services for data processing are highly popular with data professionals since the analyst or data scientist only has to worry about the analysis. It is the services that ensure accessibility and read efficiency.

It might seem that only the most sophisticated analytical use cases use Parquet as a reference format, but many company teams already use Parquet from the source for their Business Intelligence applications, with tools for non-technical business users such as Power BI or Tableau.

In conclusion, in this post we have highlighted the positive features of the Parquet data format for data storage and processing when it comes to analytical use cases (machine learning, artificial intelligence) or with a strong column orientation (such as time series). As with everything in life, there is no perfect solution for all situations. There are and will continue to be row-oriented use cases, just as there are specific formats for storing images or maps. In any case, there is no doubt that if your application fits the characteristics of parquet, you will experience significant efficiency improvements if you opt for this format over other more conventional formats such as CSV. See you in the next post!

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and points of view reflected in this publication are the sole responsibility of its author.

In the digital world, data has become a fundamental asset for companies. Thanks to data, companies can better understand their environment, business and competition, and make appropriate decisions at the right time.

In this context, it is not surprising that an increasing number of companies are looking for professional profiles with advanced digital capabilities: workers who are able to search, find, process and communicate exciting stories based on data.

The report "How to generate value from data: formats, techniques and tools to analyse open data" aims to guide those professionals who wish to improve the digital skills highlighted above. It explores different techniques for the extraction and descriptive analysis of the data contained in the open data repositories.

The document is structured as follows:

- Data formats. Explanation of the most common data formats that can be found in an open data repository, paying special attention to csv and json.

- Mechanisms for data sharing through the Web. Collection of practical examples that illustrate how to extract data of interest from some of the most popular Internet repositories.

- Main licenses. The factors to be considered when working with different types of licenses are explained, guiding the reader towards their identification and recognition.

- Tools and technologies for data analysis. This section becomes slightly more technical. It shows different examples of extracting useful information from open data repositories, making use of some short code fragments in different programming languages.

- Conclusions. A technological vision of the future is offered, with an eye on the youngest professionals, who will be the workforce of the future.

The report is aimed at a general, non-specialist public, although readers familiar with data processing and sharing, or with the web world, will find its content familiar and recognizable.

Below, you can download the full text, as well as the executive summary and a presentation.

Note: The published code is intended as a guide for the reader, but may require external dependencies or specific settings for each user who wishes to run it.