It's been a long time since we first heard about the Apache Hadoop ecosystem for distributed data processing. Things have changed a lot since then, and we now use higher-level tools to build solutions for big data workloads. However, if we want to design truly efficient and scalable big data solutions, it is still important to highlight some best practices around data formats.

Introduction

Those of us who work in the data sector know how important efficiency is across many aspects of data solutions and architectures. We talk about efficiency in terms of processing time, but also in terms of storage space and, of course, storage costs. Choosing the right data format can be decisive for the future scalability of a data-driven solution.

To discuss this topic, in this post we reflect on the Apache Parquet (or simply Parquet) data format. The first versions of Apache Parquet were released in 2013. Since 2015, Apache Parquet has been one of the flagship projects sponsored and maintained by the Apache Software Foundation (ASF). Let's get started!

What is Apache Parquet?

You may never have heard of the Apache Parquet file format before. A Parquet file stores tabular data, much like a CSV file. Parquet files have a .parquet extension and, unlike CSV files, they are not plain text: they are stored in binary form, so we cannot open and examine them with a simple text editor. Parquet is a column-oriented file format. As you may have guessed, there are also row-oriented formats, such as CSV, TSV or Avro.
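One practical consequence of the binary layout: a Parquet file begins and ends with the 4-byte magic string PAR1, and just before the trailing magic sits a 4-byte little-endian integer giving the length of the metadata footer. The sketch below (plain Python, no Parquet library) uses that to tell a Parquet file apart from a text file. The function name parquet_footer_length is our own, and reading the whole file into memory is a simplification; real readers seek to the end of the file instead.

```python
import struct

MAGIC = b"PAR1"  # Parquet files start and end with these 4 bytes


def parquet_footer_length(data: bytes) -> int:
    """Return the metadata footer size of a Parquet file held in memory.

    Hypothetical helper for illustration; raises ValueError for non-Parquet data.
    Layout assumed: MAGIC | column data... | footer | footer_len (uint32 LE) | MAGIC
    """
    if len(data) < 12 or data[:4] != MAGIC or data[-4:] != MAGIC:
        raise ValueError("not a Parquet file: missing PAR1 magic bytes")
    # The 4 bytes right before the trailing magic hold the footer length.
    (footer_len,) = struct.unpack("<I", data[-8:-4])
    if footer_len > len(data) - 12:
        raise ValueError("corrupt Parquet file: footer length exceeds file size")
    return footer_len


# Usage on a real file (assumes test1.parquet exists in the working directory):
# with open("test1.parquet", "rb") as f:
#     print(parquet_footer_length(f.read()))
```

A CSV file, by contrast, starts with ordinary text such as its header row, so the check fails immediately.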

But what does it mean for a data format to be row-oriented or column-oriented? In a CSV file (remember, row-oriented), each record is a row. In Parquet, however, each column is stored separately (within blocks of rows called row groups). The difference is most striking when we want to read only one column. With a CSV file, even though we only need the information in one column, the format forces us to read every row of the table in full. With Parquet, each column can be accessed independently of the rest. And since the data in each column is expected to be homogeneous (of the same type), Parquet opens up many possibilities for encoding, compressing and optimizing data storage. Conversely, if our workload frequently reads many complete rows, Parquet will penalize those reads, because we are using a column-oriented layout to reconstruct rows.
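The difference can be made concrete with a toy model in plain Python. No real file format is involved: the table, its column names and the "values scanned" counter are hypothetical simplifications (real Parquet also splits columns into row groups and pages). Reading one column from the row layout means walking past every value; in the column layout, only the requested column is touched.

```python
COLUMNS = ["date", "store", "units", "price"]  # hypothetical 4-column sales table
ROWS = [
    ("2023-01-01", "A", 3, 9.5),
    ("2023-01-01", "B", 1, 4.0),
    ("2023-01-02", "A", 7, 9.5),
]


def row_layout(rows):
    """Serialize record by record, as a CSV file does."""
    return [value for row in rows for value in row]


def column_layout(rows, columns):
    """Serialize column by column, as a columnar format does (row groups ignored)."""
    return {name: [row[i] for row in rows] for i, name in enumerate(columns)}


def read_one_column_rows(flat, n_cols, col_idx):
    """Return (values, values_scanned): every stored value must be visited."""
    values = [v for i, v in enumerate(flat) if i % n_cols == col_idx]
    return values, len(flat)


def read_one_column_columns(store, name):
    """Return (values, values_scanned): only the requested column is visited."""
    values = store[name]
    return list(values), len(values)


flat = row_layout(ROWS)
store = column_layout(ROWS, COLUMNS)
print(read_one_column_rows(flat, len(COLUMNS), COLUMNS.index("units")))  # ([3, 1, 7], 12)
print(read_one_column_columns(store, "units"))  # ([3, 1, 7], 3)
```

With 4 columns, the row layout scans four times as many values to return the same result; the ratio grows with the number of columns. The reverse also holds: rebuilding a full row from the column layout means visiting every column.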

Another feature of Parquet is that it is a self-describing data format: it embeds the schema, or structure, within the file itself. In other words, metadata such as each column's type (integer, floating-point number, string, etc.), the number of values and the compression codec used (data can be compressed to save space) are stored in the file alongside the data. This way, any program that reads the file can access this metadata, for example to determine unambiguously what type of data to expect in a given column. Who has never imported a CSV into a program and found the data misinterpreted (numbers read as text, dates as numbers, etc.)?
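The CSV problem is easy to reproduce with Python's standard csv module: whatever types go in, every field comes back as a string, and the reader has to guess the types. In the sketch below, the records are hypothetical and the schema list is only a stand-in for the metadata that a self-describing format such as Parquet stores in the file.

```python
import csv
import io

# Hypothetical records mixing a float, a string and a boolean.
records = [(-1.0, "foo", True), (2.5, "baz", True)]

# Round-trip through CSV in memory.
buffer = io.StringIO()
csv.writer(buffer).writerows(records)
buffer.seek(0)
parsed = list(csv.reader(buffer))
print(parsed)  # [['-1.0', 'foo', 'True'], ['2.5', 'baz', 'True']]
print({type(v).__name__ for row in parsed for v in row})  # {'str'}

# With the types known in advance (the role Parquet's embedded schema plays),
# the values can be restored without guessing. Note: bool("False") is True in
# Python, so booleans need an explicit comparison rather than bool().
schema = [float, str, lambda s: s == "True"]
typed = [tuple(conv(v) for conv, v in zip(schema, row)) for row in parsed]
print(typed == records)  # True
```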

As we have already mentioned, one disadvantage of Parquet compared to CSV is that we cannot open it with a text editor. However, there are many tools for handling Parquet files. For a simple illustration, we can use parquet-tools, a command-line tool written in Python. In this example you can see the same dataset represented in both Parquet and CSV format.

We also mentioned earlier that, unlike CSV, Parquet embeds the data schema in the file itself. To demonstrate this, we run the command parquet-tools inspect test1.parquet.

Below, the tool shows the schema of the data in the file, organized by columns. First comes a summary with the number of columns, rows and row groups, the format version and the size in bytes of the serialized metadata. Next come the column names and then, for each column, its most important properties, including the data type. Column "one" stores data of type DOUBLE (suitable for real numbers), column "two" stores BYTE_ARRAY data annotated as String (UTF8), which is how text strings are stored, and column "three" stores BOOLEAN values.

```text
############ file meta data ############
created_by: parquet-cpp version 1.5.1-SNAPSHOT
num_columns: 3
num_rows: 3
num_row_groups: 1
format_version: 1.0
serialized_size: 2226

############ Columns ############
one
two
three

############ Column(one) ############
name: one
path: one
max_definition_level: 1
max_repetition_level: 0
physical_type: DOUBLE
logical_type: None
converted_type (legacy): NONE

############ Column(two) ############
name: two
path: two
max_definition_level: 1
max_repetition_level: 0
physical_type: BYTE_ARRAY
logical_type: String
converted_type (legacy): UTF8

############ Column(three) ############
name: three
path: three
max_definition_level: 1
max_repetition_level: 0
physical_type: BOOLEAN
logical_type: None
converted_type (legacy): NONE
```

Summary of technical features of Parquet files

- Apache Parquet is column-oriented and designed to provide efficient columnar storage compared to row-based file types such as CSV.

- Parquet files were designed with complex nested data structures in mind.

- Apache Parquet is designed to support very efficient compression and encoding schemes.

- Apache Parquet reduces storage costs for data files and makes queries more efficient in current cloud services such as Amazon Athena, Redshift Spectrum, Google BigQuery and Azure Data Lake.

- Licensed under the Apache license and available for any project.
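Part of why homogeneous columns compress so well is that repeated values can be encoded cheaply. Parquet supports dictionary encoding and a run-length/bit-packing hybrid; the sketch below is a deliberately simplified version of those two ideas in plain Python (it does not reproduce Parquet's actual byte layout), applied to a hypothetical low-cardinality "store" column.

```python
from itertools import groupby


def dictionary_encode(values):
    """Replace each value by an index into a list of distinct values."""
    dictionary, indices, positions = [], [], {}
    for v in values:
        if v not in positions:
            positions[v] = len(dictionary)
            dictionary.append(v)
        indices.append(positions[v])
    return dictionary, indices


def run_length_encode(indices):
    """Collapse consecutive repeats into (index, run_length) pairs."""
    return [(key, len(list(group))) for key, group in groupby(indices)]


def decode(dictionary, runs):
    """Invert both steps to recover the original column."""
    return [dictionary[i] for i, n in runs for _ in range(n)]


store_column = ["Madrid", "Madrid", "Madrid", "Sevilla", "Sevilla", "Madrid"]
d, idx = dictionary_encode(store_column)
runs = run_length_encode(idx)
print(d)     # ['Madrid', 'Sevilla']
print(idx)   # [0, 0, 0, 1, 1, 0]
print(runs)  # [(0, 3), (1, 2), (0, 1)]
print(decode(d, runs) == store_column)  # True
```

This only works because the column holds values of a single type with many repeats; interleaving dates, prices and store names row by row, as CSV does, breaks up those runs.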

What is Parquet used for?

Now that we know a little more about this data format, let's see when its use is most recommended. Parquet's natural home is undoubtedly the data lake. Data lakes are distributed file storage spaces, widely used today to build large, heterogeneous corporate data repositories in the cloud. Unlike a data warehouse, a data lake has neither an underlying database engine nor a relational model of the data. Let's look at a practical example of the advantages of using Parquet over CSV in this type of storage.

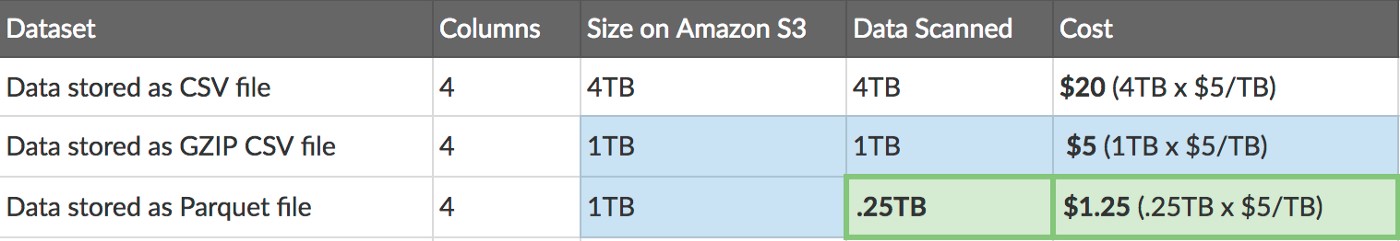

Suppose we have a table with 4 columns representing a company's historical sales over the last 10 years. If we store this table in CSV format in Amazon Web Services S3, it occupies 4 TB. If we compress the file with GZIP, its size drops to a quarter (1 TB). If we store the same table in S3 in Parquet format, it occupies about the same space as the compressed CSV. On top of that, when we want to access only part of the data (say, a single column), with the CSV file we have to read the entire table, as explained above, because it is row-oriented storage. With Parquet, which is column-oriented, we can read that single column independently; assuming the four columns are of similar size, that means reading only a quarter of the data, with the corresponding savings in time and cost.

Source: original post by Thomas Spicer on Medium.com
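The arithmetic of this example can be written out explicitly. The sketch below uses the sizes from the example above; the function scanned_tb is a hypothetical helper, and it assumes equal-sized columns and that a columnar reader touches only the requested columns (ignoring footer and row-group overhead).

```python
def scanned_tb(file_tb: float, n_columns: int, columns_read: int, columnar: bool) -> float:
    """Terabytes read to answer a query that needs `columns_read` of `n_columns` columns.

    Row-oriented files must be read in full; columnar files only for the needed
    columns. Equal column sizes are an assumption of this sketch.
    """
    if columnar:
        return file_tb * columns_read / n_columns
    return file_tb


N_COLUMNS = 4
print(scanned_tb(4.0, N_COLUMNS, 1, columnar=False))  # 4.0  plain CSV
print(scanned_tb(1.0, N_COLUMNS, 1, columnar=False))  # 1.0  GZIP CSV (then decompressed)
print(scanned_tb(1.0, N_COLUMNS, 1, columnar=True))   # 0.25 Parquet, one column
```

In services that bill by data scanned, the cost difference follows the same ratios.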

Once we understand how columnar access makes reading data with Parquet more efficient, it is easy to see why most of today's data storage and processing services favour Parquet over CSV. These cloud data processing services are very popular with data professionals because the analyst or data scientist only has to worry about the analysis, while the service takes care of accessibility and read efficiency.

It might seem that only the most sophisticated analytical use cases adopt Parquet as their reference format, but many teams in companies already use Parquet from the data source onwards for their Business Intelligence applications, with tools for non-technical business users such as Power BI or Tableau.

In conclusion, in this post we have highlighted the strengths of the Parquet format for data storage and processing in analytical use cases (machine learning, artificial intelligence) and in use cases with a strong column orientation (such as time series). As with everything in life, there is no perfect solution for every situation. There are, and will continue to be, row-oriented use cases, just as there are specific formats for storing images or maps. In any case, if your use case matches Parquet's strengths, you will undoubtedly see significant efficiency gains by choosing it over more conventional formats such as CSV. See you in the next post!

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and points of view reflected in this publication are the sole responsibility of its author.

Alejandro, thanks for this very clear intro to Parquet!

I'll try it using R, with and without DuckDB...

Thanks/Gracias!

SFdude

San Francisco

=========

Hi. Regarding the performance difference between importing large volumes of data from CSV or from Parquet into compute clusters (Redshift, for example): would there be any difference? Have you tested and measured it? Thanks in advance.

Hi Miguel, thank you very much for your comment.

AWS Redshift is a data warehouse with a modern architecture built on a PostgreSQL foundation. In other words, when you load data into Redshift tables, you are actually loading it into a columnar database structure designed for OLAP analysis. That columnar structure makes it more similar to Parquet than to CSV, but in any case Redshift is a database, whereas the other two are file formats. Thank you very much for your interest, and feel free to ask us about anything else.

https://docs.aws.amazon.com/redshift/latest/dg/c_redshift-and-postgres-…

My question is specifically about Redshift. Let me rephrase it:

Could importing from Parquet be faster than importing from CSV (using COPY, of course)? Not only because of the file size, but because of the structure of the .parquet data itself and Redshift's columnar format, and because Parquet is designed for this kind of repository.

Hi Alejandro,

Another important advantage of the .parquet format over .csv is that you can run SQL queries directly on the file, without first loading it into memory, using the DuckDB library (which does many other things too, by the way).

Moreover, these queries are much faster than the usual dataframe processing when there are joins and/or aggregations and the data volume is significant.

To give an idea of the improvement: we are applying it to files of around 25 million rows on which we were running "groupby" and "merge" operations with dataframes, and the time has dropped from just over a minute per file to ten seconds.

Needless to say, the trade-off is that any existing scripts have to be rewritten ;-)

Kind regards,

Juan Andrés

What a great contribution, Juan! I didn't know about the DuckDB library. I love it, and it has implementations in several languages.

Thank you very much for your input, and I hope you enjoyed the rest of the post, which is written for a general audience.

Warm regards,

Alejandro.