Did you know that Spain created the first state agency specifically dedicated to the supervision of artificial intelligence (AI) in 2023? Even anticipating the European Regulation in this area, the Spanish Agency for the Supervision of Artificial Intelligence (AESIA) was born with the aim of guaranteeing the ethical and safe use of AI, promoting responsible technological development.

Among its main functions is to ensure that both public and private entities comply with current regulations. To this end, it promotes good practices and advises on compliance with the European regulatory framework, which is why it has recently published a series of guides to ensure the consistent application of the European AI regulation.

In this post we will delve into what the AESIA is and we will learn relevant details of the content of the guides.

What is AESIA and why is it key to the data ecosystem?

The AESIA was created within the framework of Axis 3 of the Spanish AI Strategy. Its creation responds to the need to have an independent authority that not only supervises, but also guides the deployment of algorithmic systems in our society.

Unlike other purely sanctioning bodies, the AESIA is designed as a "Think & Do" body, i.e. an organisation that both investigates and proposes solutions. Its practical usefulness is divided into three aspects:

- Legal certainty: provides clear frameworks so that businesses, especially SMEs, know where they stand when innovating.

- International benchmark: it acts as the Spanish interlocutor before the European Commission, ensuring that the voice of our technological ecosystem is heard in the development of European standards.

- Citizen trust: ensures that AI systems used in public services or critical areas respect fundamental rights, avoiding bias and promoting transparency.

At datos.gob.es, we have always maintained that the value of data lies in its quality and accessibility. The AESIA complements this vision by ensuring that, once data is transformed into AI models, its use is responsible. As such, these guides are a natural extension of our regular resources on data governance and openness.

Resources for the use of AI: guides and checklists

The AESIA has recently published materials to support the implementation of, and compliance with, the European Artificial Intelligence Regulation and its applicable obligations. Although they are not binding and do not replace or develop existing regulations, they provide practical recommendations aligned with regulatory requirements pending the adoption of harmonised implementing rules for all Member States.

They are the direct result of the Spanish AI Regulatory Sandbox pilot. This sandbox allowed developers and authorities to collaborate in a controlled space to understand how to apply European regulations in real-world use cases.

It is essential to note that these documents are published without prejudice to the technical guides that the European Commission is preparing. Indeed, Spain is serving as a "laboratory" for Europe: the lessons learned here will provide a solid basis for the Commission's working group, ensuring consistent application of the regulation in all Member States.

The guides are designed to be a complete roadmap, from the conception of the system to its monitoring once it is on the market.

Figure 1. AESIA guidelines for regulatory compliance. Source: Spanish Agency for the Supervision of Artificial Intelligence

- 01. Introduction to the AI Regulation: provides an overview of obligations, implementation deadlines and roles (providers, deployers, etc.). It is the essential starting point for any organization that develops or deploys AI systems.

- 02. Practice and examples: grounds legal concepts in everyday use cases (e.g., is my personnel selection system a high-risk AI?). It includes decision trees and a glossary of key terms from Article 3 of the Regulation, helping to determine whether a specific system is regulated, what level of risk it has, and what obligations are applicable.

- 03. Conformity assessment: explains the technical steps necessary to obtain the "seal" that allows a high-risk AI system to be marketed, detailing the two possible procedures set out in Annexes VI and VII of the Regulation: assessment based on internal control, or assessment with the intervention of a notified body.

- 04. Quality management system: defines how organizations must structure their internal processes to maintain constant standards. It covers the regulatory compliance strategy, design techniques and procedures, examination and validation systems, among others.

- 05. Risk management: it is a manual on how to identify, evaluate and mitigate possible negative impacts of the system throughout its life cycle.

- 06. Human oversight: details the mechanisms that ensure AI decisions can always be monitored by people, avoiding the technological "black box". It establishes principles such as understanding capabilities and limitations, interpreting results, and having the authority not to use the system or to override its decisions.

- 07. Data and data governance: addresses the practices needed to train, validate, and test AI models ensuring that datasets are relevant, representative, accurate, and complete. It covers data management processes (design, collection, analysis, labeling, storage, etc.), bias detection and mitigation, compliance with the General Data Protection Regulation, data lineage, and design hypothesis documentation, being of particular interest to the open data community and data scientists.

- 08. Transparency: establishes how to inform the user that they are interacting with an AI and how to explain the reasoning behind an algorithmic result.

- 09. Accuracy: defines appropriate metrics based on the type of system to ensure that the AI model meets its goal.

- 10. Robustness: Provides technical guidance on how to ensure AI systems operate reliably and consistently under varying conditions.

- 11. Cybersecurity: instructs on protection against threats specific to the field of AI.

- 12. Logs: defines the measures to comply with the obligations of automatic registration of events.

- 13. Post-market surveillance: documents the processes for executing the monitoring plan, documentation and analysis of data on the performance of the system throughout its useful life.

- 14. Incident management: describes the procedure for reporting serious incidents to the competent authorities.

- 15. Technical documentation: establishes the complete structure that the technical documentation must include (development process, training/validation/test data, applied risk management, performance and metrics, human supervision, etc.).

- 16. Requirements Guides Checklist Manual: explains how to use the 13 self-diagnosis checklists that allow compliance assessment, identifying gaps, designing adaptation plans and prioritizing improvement actions.

All guides are available here and have a modular structure that accommodates different levels of knowledge and business needs.

The self-diagnostic tool and its advantages

In parallel, the AESIA publishes material that facilitates the translation of abstract requirements into concrete and verifiable questions, providing a practical tool for the continuous assessment of the degree of compliance.

These are checklists that allow an entity to assess its level of compliance autonomously.

The use of these checklists provides multiple benefits to organizations. First, they facilitate the early identification of compliance gaps, allowing organizations to take corrective action before the system is placed on the market or put into service. They also promote a systematic and structured approach to regulatory compliance. By following the structure of the Regulation, they ensure that no essential requirement is left unassessed.

On the other hand, they facilitate communication between technical, legal and management teams, providing a common language and a shared reference to discuss regulatory compliance. And finally, checklists serve as a documentary basis for demonstrating due diligence to supervisory authorities.

We must understand that these documents are not static. They are subject to an ongoing process of evaluation and review. In this regard, the AESIA continues to develop its operational capacity and expand its compliance support tools.

From the open data platform of the Government of Spain, we invite you to explore these resources. AI development must go hand in hand with well-governed data and ethical oversight.

Data possesses a fluid and complex nature: it changes, grows, and evolves constantly, displaying a volatility that profoundly differentiates it from source code. To respond to the challenge of reliably managing this evolution, we have developed the new 'Technical Guide: Data Version Control'.

This guide addresses an emerging discipline that adapts software engineering principles to the data ecosystem: Data Version Control (DVC). The document not only explores the theoretical foundations but also offers a practical approach to solving critical data management problems, such as the reproducibility of machine learning models, traceability in regulatory audits, and efficient collaboration in distributed teams.

Why is a guide on data versioning necessary?

Historically, data versioning has been done manually (files with suffixes like "_final_v2.csv"), an error-prone and unsustainable approach in professional environments. While tools like Git have revolutionized software development, they are not designed to efficiently handle large files or binaries, which are intrinsic characteristics of datasets.

This guide was created to bridge that technological and methodological gap, explaining the fundamental differences between code versioning and data versioning. The document details how specialized tools like DVC (Data Version Control) allow you to manage the data lifecycle with the same rigor as code, ensuring that you can always answer the question: "What exact data was used to obtain this result?"

Structure and contents

The document follows a progressive approach, starting from basic concepts and progressing to technical implementation, and is structured in the following key blocks:

- Version Control Fundamentals: Analysis of the current problem (the "phantom model", impossible audits) and definition of key concepts such as Snapshots, Data Lineage and Checksums.

- Strategies and Methodologies: Adaptation of semantic versioning (SemVer) to datasets, storage strategies (incremental vs. full) and metadata management to ensure traceability.

- Tools in practice: A detailed analysis of tools such as DVC, Git LFS and cloud-native solutions (AWS, Google Cloud, Azure), including a comparison to choose the most suitable one according to the size of the team and the data.

- Practical case study: A step-by-step tutorial on how to set up a local environment with DVC and Git, simulating a real data lifecycle: from generation and initial versioning, to updating, remote synchronization, and rollback.

- Governance and best practices: Recommendations on roles, retention policies and compliance to ensure successful implementation in the organization.
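As a rough illustration of the checksum and snapshot concepts listed above, here is a minimal Python sketch. It is not taken from the guide: the helper names `file_checksum` and `snapshot`, the file name and the version labels are all assumptions made for the example. It only shows that any change in a dataset's content produces a different fingerprint, which is the idea that data versioning tools build on. The hashing scheme and storage layout of DVC itself are not reproduced here.

```python
import hashlib
import tempfile
from pathlib import Path


def file_checksum(path: Path, chunk_size: int = 65536) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(path: Path, version: str) -> dict:
    """Build a small metadata record tying a version label to a checksum.

    Hypothetical helper: the record layout is an assumption for this sketch.
    """
    return {"file": path.name, "version": version, "sha256": file_checksum(path)}


with tempfile.TemporaryDirectory() as tmp:
    data_file = Path(tmp) / "dataset.csv"

    # Initial version of a toy dataset.
    data_file.write_text("id,value\n1,10\n2,20\n", encoding="utf-8")
    first = snapshot(data_file, "1.0.0")

    # A new row is appended: the content changes, so the checksum must change.
    data_file.write_text("id,value\n1,10\n2,20\n3,30\n", encoding="utf-8")
    second = snapshot(data_file, "1.1.0")

changed = first["sha256"] != second["sha256"]
print(changed)
```

Storing records like these alongside the code is one simple way to answer which exact data produced a given result, although a dedicated tool handles storage, remotes and rollback far more robustly.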

Figure 1: Practical example of using Git and DVC commands included in the guide.

Who is it aimed at?

This guide is designed for a broad technical profile within the public and private sectors: data scientists, data engineers, analysts and data catalog managers.

It is especially useful for professionals looking to streamline their workflows, ensure the scientific reproducibility of their research, or guarantee regulatory compliance in regulated sectors. While basic knowledge of Git and the command line is recommended, the guide includes practical examples and detailed explanations to facilitate learning.

The future new version of the Technical Standard for Interoperability of Public Sector Information Resources (NTI-RISP) incorporates DCAT-AP-ES as a reference model for the description of data sets and services. This is a key step towards greater interoperability, quality and alignment with European data standards.

This guide aims to help you migrate to this new model. It is aimed at technical managers and managers of public data catalogs who, without advanced experience in semantics or metadata models, need to update their RDF catalog to ensure its compliance with DCAT-AP-ES. In addition, the guidelines in the document are also applicable for migration from other RDF-based metadata models, such as local profiles, DCAT, DCAT-AP or sectoral adaptations, as the fundamental principles and verifications are common.

Why migrate to DCAT-AP-ES?

Since 2013, the Technical Standard for the Interoperability of Public Sector Information Resources has been the regulatory framework in Spain for the management and openness of public data. In line with the European and Spanish objectives of promoting the data economy, the standard has been updated in order to promote the large-scale exchange of information in distributed and federated environments.

This update, which at the time of publication of the guide is in the administrative process, incorporates a new metadata model aligned with the most recent European standards: DCAT-AP-ES. These standards facilitate the homogeneous description of the reusable data sets and information resources made available to the public. DCAT-AP-ES adopts the guidelines of the European metadata exchange scheme DCAT-AP (Data Catalog Vocabulary – Application Profile), thus promoting interoperability between national and European catalogues.

The advantages of adopting DCAT-AP-ES can be summarised as follows:

- Semantic and technical interoperability: ensures that different catalogs can understand each other automatically.

- Regulatory alignment: it responds to the new requirements provided for in the NTI-RISP and aligns the catalogue with Directive (EU) 2019/1024 on open data and the re-use of public sector information and with Implementing Regulation (EU) 2023/138, which establishes a list of specific High Value Datasets (HVD), facilitating the publication of HVDs and associated data services.

- Improved ability to find resources: Makes it easier to find, locate, and reuse datasets using standardized, comprehensive metadata.

- Reduction of incidents in the federation: minimizes errors and conflicts by integrating catalogs from different Administrations, guaranteeing consistency and quality in interoperability processes.

What has changed in DCAT-AP-ES?

DCAT-AP-ES expands and orders the previous model to make it more interoperable, more legally accurate and more useful for the maintenance and technical reuse of data catalogues.

The main changes are:

- In the catalog: It is now possible to link catalogs to each other, record who created them, add a supplementary statement of rights to the license, or describe each entry using records.

- In datasets: New properties are added to comply with regulations on high-value sets, support communication, document provenance and relationships between resources, manage versions, and describe spatial/temporal resolution or website. Likewise, the responsibility of the license is redefined, moving its declaration to the most appropriate level.

- For distributions: Expanded options to indicate planned availability, legislation, usage policy, integrity, packaged formats, direct download URL, own license, and lifecycle status.

A practical and gradual approach

Many catalogs already meet the requirements set out in the 2013 version of NTI-RISP. In these cases, the migration to DCAT-AP-ES requires a reduced adjustment, although the guide also contemplates more complex scenarios, following a progressive and adaptable approach.

The document distinguishes between the minimum compliance required and some extensions that improve quality and interoperability.

It is recommended to follow an iterative strategy: starting from the minimum core to ensure operational continuity and, subsequently, planning the phased incorporation of additional elements, such as data services, contact, applicable legislation, categorization of HVDs and contextual metadata. This approach reduces risks, distributes the effort of adaptation, and favors an orderly transition.
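The iterative strategy described above can be sketched as a two-stage check in Python. This is only an illustration under stated assumptions: the property lists `MINIMUM_CORE` and `PHASED_EXTENSIONS` are placeholders chosen for the example, not the official DCAT-AP-ES requirement sets, and the helper names and the sample record are hypothetical. The authoritative lists are those defined in the application profile itself.

```python
# Illustrative property lists only; NOT the official DCAT-AP-ES requirements.
MINIMUM_CORE = ["dct:title", "dct:description", "dct:publisher", "dcat:distribution"]
PHASED_EXTENSIONS = [
    "dcat:contactPoint",
    "dcatap:applicableLegislation",
    "dcatap:hvdCategory",
]


def missing_properties(record: dict, required: list) -> list:
    """Return the required properties that are absent or empty in the record."""
    return [prop for prop in required if not record.get(prop)]


def migration_report(record: dict) -> dict:
    """Summarise what blocks the first phase and what can be added later."""
    core_missing = missing_properties(record, MINIMUM_CORE)
    extensions_pending = missing_properties(record, PHASED_EXTENSIONS)
    return {
        "core_missing": core_missing,
        "extensions_pending": extensions_pending,
        "ready_for_first_phase": not core_missing,
    }


# Hypothetical dataset description, flattened to a dictionary for simplicity.
dataset = {
    "dct:title": "Air quality measurements",
    "dct:description": "Hourly readings from monitoring stations.",
    "dct:publisher": "http://example.org/org/city-council",
    "dcat:distribution": ["http://example.org/dist/air-quality-csv"],
    "dcat:contactPoint": "",
}

report = migration_report(dataset)
print(report)
```

Separating the two lists mirrors the idea of securing operational continuity first and scheduling the remaining elements in later phases. A real migration would validate the RDF graph against the profile rather than a flat dictionary.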

Once the first adjustments have been made, the catalogue can be federated with both the National Catalogue, hosted in datos.gob.es, and the Official European Data Catalogue, progressively increasing the quality and interoperability of the metadata.

The guide is a technical support material that facilitates a basic transition, in accordance with the minimum interoperability requirements. In addition, it complements other reference resources, such as the DCAT-AP-ES Application Profile Model and Implementation Technical Guide, the implementation examples (Migration from NTI-RISP to DCAT-AP-ES and Migration from NTI-RISP to DCAT-AP-ES HVD), and the complementary conventions to the DCAT-AP-ES model that define additional rules to address practical needs.

Data science has become a pillar of evidence-based decision-making in the public and private sectors. In this context, there is a need for a practical and universal guide that transcends technological fads and provides solid and applicable principles. This guide offers a decalogue of good practices that accompanies the data scientist throughout the entire life cycle of a project, from the conceptualization of the problem to the ethical evaluation of the impact.

- Understand the problem before looking at the data. The initial key is to clearly define the context, objectives, constraints, and indicators of success. A solid framing prevents later errors.

- Know the data in depth. Beyond the variables, it involves analyzing their origin, traceability and possible biases. Data auditing is essential to ensure representativeness and reliability.

- Ensure quality. Without clean data there is no science. EDA techniques, imputation, normalization and control of quality metrics make it possible to build solid and reproducible foundations.

- Document and version. Reproducibility is a scientific condition. Notebooks, pipelines, version control, and MLOps practices ensure traceability and replicability of processes and models.

- Choose the right model. Sophistication does not always win: the decision must balance performance, interpretability, costs and operational constraints.

- Measure meaningfully. Metrics should align with goals. Cross-validation, data drift control and rigorous separation of training, validation and test data are essential to ensure generalization.

- Visualize to communicate. Visualization is not an ornament, but a language to understand and persuade. Data-driven storytelling and clear design are critical tools for connecting with diverse audiences.

- Work as a team. Data science is collaborative: it requires data engineers, domain experts, and business leaders. The data scientist must act as a facilitator and translator between the technical and the strategic.

- Stay up-to-date (and critical). The ecosystem is constantly evolving. It is necessary to combine continuous learning with selective criteria, prioritizing solid foundations over passing fads.

- Be ethical. Models have a real impact. It is essential to assess bias, protect privacy, ensure explainability and anticipate misuse. Ethics is a compass and a condition of legitimacy.
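The "rigorous separation of training, validation and test data" mentioned in the decalogue can be sketched in a few lines of Python. This is a minimal illustration, not code from the report: the function name `split_dataset`, the 70/15/15 proportions and the fixed seed are assumptions made for the example.

```python
import random


def split_dataset(rows, train_frac=0.7, val_frac=0.15, seed=42):
    """Shuffle once with a fixed seed, then cut into three disjoint parts.

    Hypothetical helper: fractions and seed are illustrative defaults.
    """
    if not 0 < train_frac + val_frac < 1:
        raise ValueError("train_frac + val_frac must leave room for a test set")
    shuffled = list(rows)
    # A dedicated Random instance keeps the split reproducible.
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_frac)
    n_val = round(len(shuffled) * val_frac)
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


records = list(range(100))  # stand-in for real observations
train, val, test = split_dataset(records)
print(len(train), len(val), len(test))
```

Fixing the seed also supports the "document and version" practice: the same split can be regenerated later. For time-ordered or grouped data, a random shuffle like this one would not be appropriate, and a split that respects time or group boundaries would be needed instead.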

Finally, the report includes a bonus-track on Python and R, highlighting that both languages are complementary allies: Python dominates in production and deployment, while R offers statistical rigor and advanced visualization. Knowing both multiplies the versatility of the data scientist.

The Data Scientist's Decalogue is a practical, timeless and cross-cutting guide that helps professionals and organizations turn data into informed, reliable and responsible decisions. Its objective is to strengthen technical quality, collaboration and ethics in a discipline in full expansion and with great social impact.

Listen to the podcast (only available in Spanish)

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation. The contents and points of view reflected in this publication are the sole responsibility of the author.

Open data is a fundamental fuel for contemporary digital innovation, creating information ecosystems that democratise access to knowledge and foster the development of advanced technological solutions.

However, the mere availability of data is not enough. Building robust and sustainable ecosystems requires clear regulatory frameworks, sound ethical principles and management methodologies that ensure both innovation and the protection of fundamental rights. Therefore, the specialised documentation that guides these processes becomes a strategic resource for governments, organisations and companies seeking to participate responsibly in the digital economy.

In this post, we compile recent reports, produced by leading organisations in both the public and private sectors, which offer these key orientations. These documents not only analyse the current challenges of open data ecosystems, but also provide practical tools and concrete frameworks for their effective implementation.

State and evolution of the open data market

Knowing what the open data ecosystem looks like and what changes have occurred in it at European and national level is important to make informed decisions and adapt to the needs of the industry. In this regard, the European Commission periodically publishes a Data Markets Report. The latest version is dated December 2024, although use cases exemplifying the potential of data in Europe are published regularly (the latest in February 2025).

On the other hand, from a European regulatory perspective, the latest annual report on the implementation of the Digital Markets Act (DMA) takes a comprehensive view of the measures adopted to ensure fairness and competitiveness in the digital sector. This document is interesting to understand how the regulatory framework that directly affects open data ecosystems is taking shape.

At the national level, the ASEDIE sectoral report on the "Data Economy in its infomediary scope" 2025 provides quantitative evidence of the economic value generated by open data ecosystems in Spain.

The importance of open data in AI

It is clear that the intersection between open data and artificial intelligence is a reality that poses complex ethical and regulatory challenges that require collaborative and multi-sectoral responses. In this context, developing frameworks to guide the responsible use of AI becomes a strategic priority, especially when these technologies draw on public and private data ecosystems to generate social and economic value. Here are some reports that address this objective:

- Generative AI and Open Data: Guidelines and Best Practices: the U.S. Department of Commerce has published a guide with principles and best practices on how to apply generative artificial intelligence ethically and effectively in the context of open data. The document provides guidelines for optimising the quality and structure of open data in order to make it useful for these systems, including transparency and governance.

- Good Practice Guide for the Use of Ethical Artificial Intelligence: this guide demonstrates a comprehensive approach that combines strong ethical principles with clear and enforceable regulatory precepts. In addition to the theoretical framework, the guide serves as a practical tool for implementing AI systems responsibly, considering both the potential benefits and the associated risks. Collaboration between public and private actors ensures that recommendations are both technically feasible and socially responsible.

- Enhancing Access to and Sharing of Data in the Age of AI: this analysis by the Organisation for Economic Co-operation and Development (OECD) addresses one of the main obstacles to the development of artificial intelligence: limited access to quality data and effective models. Through examples, it identifies specific strategies that governments can implement to significantly improve access to, and sharing of, data and certain AI models.

- A Blueprint to Unlock New Data Commons for AI: Open Data Policy Lab has produced a practical guide that focuses on the creation and management of data commons specifically designed to enable public-interest use cases of artificial intelligence. The guide offers concrete methodologies on how to manage data in a way that facilitates the creation of these data commons, including aspects of governance, technical sustainability and alignment with public interest objectives.

- Practical guide to data-driven collaborations: the Data for Children Collaborative initiative has published a step-by-step guide to developing effective data collaborations, with a focus on social impact. It includes real-world examples, governance models and practical tools to foster sustainable partnerships.

In short, these reports define the path towards more mature, ethical and collaborative data systems. From growth figures for the Spanish infomediary sector to European regulatory frameworks to practical guidelines for responsible AI implementation, all these documents share a common vision: the future of open data depends on our ability to build bridges between the public and private sectors, between technological innovation and social responsibility.

The Spanish Data Protection Agency has recently published the Spanish translation of the Guide on Synthetic Data Generation, originally produced by the Data Protection Authority of Singapore. This document provides technical and practical guidance for data controllers, data processors and data protection officers on how to implement this technology, which makes it possible to simulate real data while maintaining their statistical characteristics without compromising personal information.

The guide highlights how synthetic data can drive the data economy, accelerate innovation and mitigate risks in security breaches. To this end, it presents case studies, recommendations and best practices aimed at reducing the risks of re-identification. In this post, we analyse the key aspects of the Guide highlighting main use cases and examples of practical application.

What are synthetic data? Concept and benefits

Synthetic data is artificial data generated using mathematical models specifically designed for artificial intelligence (AI) or machine learning (ML) systems. This data is created by training a model on a source dataset to imitate its characteristics and structure, but without exactly replicating the original records.

High-quality synthetic data retain the statistical properties and patterns of the original data. They therefore allow for analyses that produce results similar to those that would be obtained with real data. However, being artificial, they significantly reduce the risks associated with the exposure of sensitive or personal information.

For more information on this topic, you can read the monographic report "Synthetic data: What are they and what are they used for?", with detailed information on the theoretical foundations, methodologies and practical applications of this technology.

The implementation of synthetic data offers multiple advantages for organisations, for example:

- Privacy protection: allow data analysis while maintaining the confidentiality of personal or commercially sensitive information.

- Regulatory compliance: make it easier to follow data protection regulations while maximising the value of information assets.

- Risk reduction: minimise the chances of data breaches and their consequences.

- Driving innovation: accelerate the development of data-driven solutions without compromising privacy.

- Enhanced collaboration: Enable valuable information to be shared securely across organisations and departments.

Steps to generate synthetic data

To properly implement this technology, the Guide on Synthetic Data Generation recommends following a structured five-step approach:

- Know the data: clearly understand the purpose of the synthetic data and the characteristics of the source data to be preserved, setting precise targets for the threshold of acceptable risk and expected utility.

- Prepare the data: identify key insights to be retained, select relevant attributes, remove or pseudonymise direct identifiers, and standardise formats and structures in a well-documented data dictionary.

- Generate synthetic data: select the most appropriate methods according to the use case, assess quality through completeness, fidelity and usability checks, and iteratively adjust the process to achieve the desired balance.

- Assess re-identification risks: apply attack-based techniques to determine the possibility of inferring information about individuals or their membership of the original set, ensuring that risk levels are acceptable.

- Manage residual risks: implement technical, governance and contractual controls to mitigate identified risks, properly documenting the entire process.
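To give a feel for the "generate" and "assess quality" steps above, here is a deliberately simplified Python sketch. It is not the method described in the Guide: it assumes a single numeric attribute, fits a plain Gaussian to it and compares means as a crude fidelity check. The source values are toy numbers invented for illustration, and the function names are hypothetical. Real generators model joint distributions across many attributes and are evaluated far more thoroughly.

```python
import random
import statistics

# Toy source values invented for illustration only (e.g. ages in a small sample).
source_ages = [23, 35, 41, 29, 52, 38, 47, 31, 44, 36]


def fit_gaussian(values):
    """Estimate the mean and sample standard deviation of the source attribute."""
    return statistics.mean(values), statistics.stdev(values)


def generate_synthetic(mean, stdev, n, seed=0):
    """Draw n artificial values from the fitted Gaussian (no source record is copied)."""
    rng = random.Random(seed)
    return [rng.gauss(mean, stdev) for _ in range(n)]


def fidelity_gap(source, synthetic):
    """Absolute difference between means: a very crude fidelity indicator."""
    return abs(statistics.mean(source) - statistics.mean(synthetic))


mean, stdev = fit_gaussian(source_ages)
synthetic_ages = generate_synthetic(mean, stdev, n=1000)
gap = fidelity_gap(source_ages, synthetic_ages)
print(round(mean, 1), round(gap, 2))
```

A small gap only says that one summary statistic was preserved. It says nothing about re-identification risk, which is why the later steps of the approach remain necessary.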

Practical applications and success stories

To realise all these benefits, synthetic data can be applied in a variety of scenarios that respond to specific organisational needs. The Guide mentions, for example:

1. Generation of datasets for training AI/ML models: synthetic data solves the problem of the scarcity of labelled (i.e. usable) data for training AI models. Where real data are limited, synthetic data can be a cost-effective alternative. In addition, they make it possible to simulate extraordinary events or to increase the representation of minority groups in training sets, an interesting application for improving the performance of AI models and the representation of all social groups in them.

2. Data analysis and collaboration: this type of data facilitates the exchange of information for analysis, especially in sectors such as health, where the original data is particularly sensitive. In this sector as in others, they provide stakeholders with a representative sample of actual data without exposing confidential information, allowing them to assess the quality and potential of the data before formal agreements are made.

3. Software testing: synthetic data is very useful for system development and software testing because it allows the use of realistic, but not real, data in development environments, thus avoiding possible personal data breaches if the development environment is compromised.

The practical application of synthetic data is already showing positive results in various sectors:

I. Financial sector: fraud detection. J.P. Morgan has successfully used synthetic data to train fraud detection models, creating datasets with a higher percentage of fraudulent cases that significantly improved the models' ability to identify anomalous behaviour.

II. Technology sector: research on AI bias. Mastercard collaborated with researchers to develop methods to test for bias in AI using synthetic data that maintained the true relationships of the original data, but were private enough to be shared with outside researchers, enabling advances that would not have been possible without this technology.

III. Health sector: safeguarding patient data. Johnson & Johnson implemented AI-generated synthetic data as an alternative to traditional anonymisation techniques to process healthcare data, achieving a significant improvement in the quality of analysis by effectively representing the target population while protecting patients' privacy.

The balance between utility and protection

It is important to note that synthetic data are not inherently risk-free. The similarity to the original data could, in certain circumstances, allow information about individuals or sensitive data to be leaked. It is therefore crucial to strike a balance between data utility and data protection.

This balance can be achieved by implementing good practices during the process of generating synthetic data, incorporating protective measures such as:

- Adequate data preparation: removal of outliers, pseudonymisation of direct identifiers and generalisation of granular data.

- Re-identification risk assessment: analysis of the possibility that synthetic data can be linked to real individuals.

- Implementation of technical controls: adding noise to data, reducing granularity or applying differential privacy techniques.
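As an illustration of the last control, the sketch below adds Laplace noise to a count before it is released, which is the basic building block of many differential privacy schemes. It is a minimal example and not the method described in the guide: the function names, the `epsilon` parameter and the assumption that one individual changes the count by at most 1 (sensitivity 1) are chosen here for illustration only.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def noisy_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise calibrated to sensitivity 1.

    Assumes adding or removing one person changes the count by at most 1.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed only to make the demo repeatable
    print(noisy_count(true_count=120, epsilon=0.5, rng=rng))
```

A smaller `epsilon` means more noise and stronger protection, at the cost of less accurate figures, which is the utility and protection trade-off discussed above.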

Synthetic data represents an exceptional opportunity to drive data-driven innovation while respecting privacy and complying with data protection regulations. Its ability to provide statistically representative but artificial information makes it a versatile tool for multiple applications, from AI model training to inter-organisational collaboration and software development.

By properly implementing the best practices and controls described in the Guide on synthetic data generation translated by the AEPD, organisations can reap the benefits of synthetic data while minimising the associated risks, positioning themselves at the forefront of responsible digital transformation. The adoption of privacy-enhancing technologies such as synthetic data is not only a defensive measure, but a proactive step towards an organisational culture that values both innovation and data protection, which are critical to success in the digital economy of the future.

How can public administrations harness the value of data? This question is not a simple one to address; its answer is conditioned by several factors that have to do with the context of each administration, the data available to it and the specific objectives set.

However, there are reference guides that can help define a path to action. One of them is the Data Innovation Toolkit, published by the European Commission through the EU Publications Office, which serves as a strategic compass to navigate this complex data innovation ecosystem.

This tool is not a simple manual as it includes templates to make the implementation of the process easier. Aimed at a variety of profiles, from novice analysts to experienced policy makers and technology innovators, Data Innovation Toolkit is a useful resource that accompanies you through the process, step by step.

It aims to democratise data-driven innovation by providing a structured framework that goes beyond the mere collection of information. In this post, we will analyse the contents of the European guide, as well as the references it provides for good innovative use of data.

Structure covering the data lifecycle

The guide is organised in four main steps, which address the entire data lifecycle.

Planning

The first part of the guide focuses on establishing a strong foundation for any data-driven innovation project. Before embarking on any process, it is important to define objectives. To do so, the Data Innovation Toolkit suggests a deep reflection that requires aligning the specific needs of the project with the strategic objectives of the organisation. In this step, stakeholder mapping is also key. This implies a thorough understanding of the interests, expectations and possible contributions of each actor involved. This understanding enables the design of engagement strategies that maximise collaboration and minimise potential conflicts.

To create a proper data innovation team, we can use the RACI matrix (Responsible, Accountable, Consulted, Informed) to define precise roles and responsibilities. It is not just about bringing professionals together, but about building multidisciplinary teams where each member understands their exact role and contribution to the project. To assist in this task the guide provides:

- Challenge definition tool: to identify and articulate the key issues they seek to address, summarising them in a single statement.

- Stakeholder mapping tool: to visualise the network of individuals and organisations involved, assessing their influence and interests.

- Team definition tool: to make it easier to identify people in your organisation who can help you.

- Tool to define roles: to, once the necessary profiles have been defined, determine their responsibilities and role in the data project in more detail, using a RACI matrix.

- Tool to define Personas: Personas are a concept used to define specific types of users, called behavioural archetypes. This tool helps to create these detailed profiles, which represent the users or clients who will be involved in the project.

- Tool for mapping the Data Journey: to create a summary representation describing step by step how users can interact with their data. The process is represented from the user's perspective, describing what happens at each stage of the interaction and the touch points.
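The RACI matrix mentioned above can be represented as a simple table of tasks and roles. The sketch below is only an illustration: the tasks and team members are hypothetical, and the rule it checks (exactly one Accountable and at least one Responsible per task) is a common RACI convention rather than something the toolkit prescribes.

```python
from collections import Counter

# Hypothetical tasks and team members, for illustration only.
raci = {
    "Define challenge": {
        "project lead": "A",
        "data analyst": "R",
        "legal advisor": "C",
    },
    "Collect data": {
        "project lead": "A",
        "data engineer": "R",
        "data analyst": "C",
        "head of service": "I",
    },
}


def check_raci(matrix):
    """Return a list of problems found in a RACI matrix (empty if none)."""
    problems = []
    for task, assignments in matrix.items():
        counts = Counter(assignments.values())
        unknown = sorted(set(counts) - set("RACI"))
        if unknown:
            problems.append(f"{task}: unknown role codes {unknown}")
        if counts["A"] != 1:
            problems.append(
                f"{task}: expected exactly one Accountable, found {counts['A']}"
            )
        if counts["R"] < 1:
            problems.append(f"{task}: needs at least one Responsible")
    return problems


if __name__ == "__main__":
    print(check_raci(raci) or "RACI matrix looks consistent")
```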

Collection and processing

Once the team has been set up and the objectives have been identified, a classification of the data is made that goes beyond the traditional division between quantitative and qualitative data.

Quantitative:

- Discrete data, such as the number of complaints in a public service, represent not only a number, but an opportunity to systematically identify areas for improvement. They allow administrations to map recurrent problems and design targeted interventions.

- Continuous data, such as response times for administrative procedures, provide a snapshot of operational efficiency. It is not just a matter of measuring, but of understanding the factors that influence the variability of these times and designing more agile and efficient processes.

Qualitative:

- Nominal (name) data enable the categorisation of public services, allowing for a more structured understanding of the diversity of administrative interventions.

- Ordinal (ranked) data, such as satisfaction ratings, become a prioritisation tool for continuous improvement.
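The practical consequence of this classification is that each type of data calls for a different kind of summary. The following sketch uses made-up values and hypothetical names to show one reasonable choice per type: a total for discrete counts, a mean for continuous measurements, the most frequent value for nominal categories and a median based on rank for ordinal ratings.

```python
from statistics import mean, median_low, mode

# Assumed ordering of the rating scale, from worst to best.
SATISFACTION_ORDER = ["low", "medium", "high"]


def ordinal_median(values, order):
    """Median of ordinal values, computed on their rank in `order`."""
    ranks = [order.index(v) for v in values]
    return order[median_low(ranks)]


def summarise(complaints, response_days, categories, ratings):
    """Pick a summary statistic suited to each type of data."""
    return {
        "total_complaints": sum(complaints),            # discrete
        "mean_response_days": mean(response_days),      # continuous
        "most_common_category": mode(categories),       # nominal
        "median_satisfaction": ordinal_median(ratings, SATISFACTION_ORDER),  # ordinal
    }


if __name__ == "__main__":
    # Made-up sample values, for illustration only.
    print(
        summarise(
            complaints=[12, 9, 15, 11],
            response_days=[3.5, 7.25, 2.0, 5.25],
            categories=["health", "tax", "health"],
            ratings=["low", "high", "medium", "high", "medium"],
        )
    )
```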

A series of checklists are available in the document to review this aspect:

- Checklist of data gaps: to identify if there are any gaps in the data to be used and, if so, how to fill them.

- Template for data collection: to align the dataset to the objective of the innovative analysis.

- Checklist of data collection: to ensure access to the data sources needed to run the project.

- Checklist of data quality: to review the quality level of the dataset.

- Data processing letters: to check that data is being processed securely, efficiently and in compliance with regulations.

Sharing and analysis

At this point, the Data Innovation Toolkit proposes four analysis strategies that transform data into actionable knowledge.

- Descriptive analysis: goes beyond the simple visualisation of historical data, allowing the construction of narratives that explain the evolution of the phenomena studied.

- Diagnostic analysis: delves deeper into the investigation of causes, unravelling the hidden patterns that explain the observed behaviours.

- Predictive analytics: becomes a strategic planning tool, allowing administrations to prepare for future scenarios.

- Prescriptive analysis: goes a step further, not only projecting trends, but recommending concrete actions based on data modelling.

In addition to analysis, the ethical dimension is fundamental. The guide therefore sets out strict protocols to ensure secure data transfers, regulatory compliance, transparency and informed consent. In this section, the following templates and checklist are provided:

- Data sharing template: to ensure secure, legal and transparent sharing.

- Checklist for data sharing: to perform all the necessary steps to share data securely, ethically and achieving all the defined objectives.

- Data analysis template: to conduct a proper analysis and obtain useful and meaningful insights for the project.

Use and evaluation

The last stage focuses on converting the insights into real actions. The communication of results, the definition of key performance indicators (KPIs), impact measurement and scalability strategies become tools for continuous improvement.

A collaborative resource in continuous improvement

In short, the toolkit offers a comprehensive transformation: from evidence-based decision making to personalising public services, increasing transparency and optimising resources. The checklists and tools available in this section are:

- Checklist for data use: to review that the data and the conclusions drawn are used in an effective, accountable and goal-oriented manner.

- Data innovation through KPI tool: to define the KPIs that will measure the success of the process.

- Impact measurement and success evaluation tools: to assess the success and impact of the innovation in the data project.

- Data innovation scalability plan: to identify strategies to scale the project effectively.

In addition, this repository of innovation resources and data is a dynamic catalogue of knowledge including expertise articles, implementation guides, case studies and learning materials.

You can access here the list of materials provided by the Data Innovation Toolkit.

You can even contact the development team if you have any questions or would like to contribute to the repository.

To conclude, harnessing the value of data with an innovative perspective is not a magic leap, but a gradual and complex process. On this path, the Data Innovation Toolkit can be useful as it offers a structured framework. Effective implementation will require investment in training, cultural adaptation and long-term commitment.

We are living in a historic moment in which data is a key asset, on which many small and large decisions of companies, public bodies, social entities and citizens depend every day. It is therefore important to know where each piece of information comes from, to ensure that the issues that affect our lives are based on accurate information.

What is data citation?

When we talk about "citing" we refer to the process of indicating which external sources have been used to create content. This is a highly recommended practice that applies to all data, including public data, as enshrined in our legal system. In the case of data provided by administrations, Royal Decree 1495/2011 includes the need for the reuser to cite the source of origin of the information.

To assist users in this task, the Publications Office of the European Union published Data Citation: A guide to best practice, which discusses the importance of data citation and provides recommendations for good practice, as well as the challenges to be overcome in order to cite datasets correctly.

Why is data citation important?

The guide mentions the most relevant reasons why it is advisable to carry out this practice:

- Credit. Creating datasets takes work. Citing the author(s) allows them to receive feedback and to know that their work is useful, which encourages them to continue working on new datasets.

- Transparency. When data is cited, the reader can refer to it to review it, better understand its scope and assess its appropriateness.

- Integrity. Users should not engage in plagiarism. They should not take credit for the creation of datasets that are not their own.

- Reproducibility. Citing the data allows a third party to attempt to reproduce the same results, using the same information.

- Re-use. Data citation makes it easier for more and more datasets to be made available and thus to increase their use.

- Text mining. Data is not only consumed by humans, it can also be consumed by machines. Proper citation will help machines better understand the context of datasets, amplifying the benefits of their reuse.

General good practice

Of all the general good practices included in the guide, some of the most relevant are highlighted below:

- Be precise. It is necessary that the data cited are precisely defined. The data citation should indicate which specific data have been used from each dataset. It is also important to note whether they have been processed and whether they come directly from the originator or from an aggregator (such as an observatory that has taken data from various sources).

- Use "persistent identifiers" (PIDs). Just as every book in a library has an identifier, so too can (and should) every dataset. Persistent identifiers are formal schemes that provide a common nomenclature and uniquely identify datasets, avoiding ambiguities. When citing datasets, it is necessary to locate their identifiers and write them as an actionable hyperlink, which can be clicked on to access the cited dataset and its metadata. There are different families of PIDs, but the guide highlights two of the most common: the Handle system and the Digital Object Identifier (DOI).

- Indicate the time at which the data was accessed. This issue is of great importance when working with dynamic data (which are updated and changed periodically) or continuous data (to which additional data are added without modifying the old data). In such cases, it is important to cite the date of access. In addition, if necessary, the user can add "snapshots" of the dataset, i.e. copies taken at specific points in time.

- Consult the metadata of the dataset used and the functionalities of the portal in which it is located. Much of the information necessary for the citation is contained in the metadata.

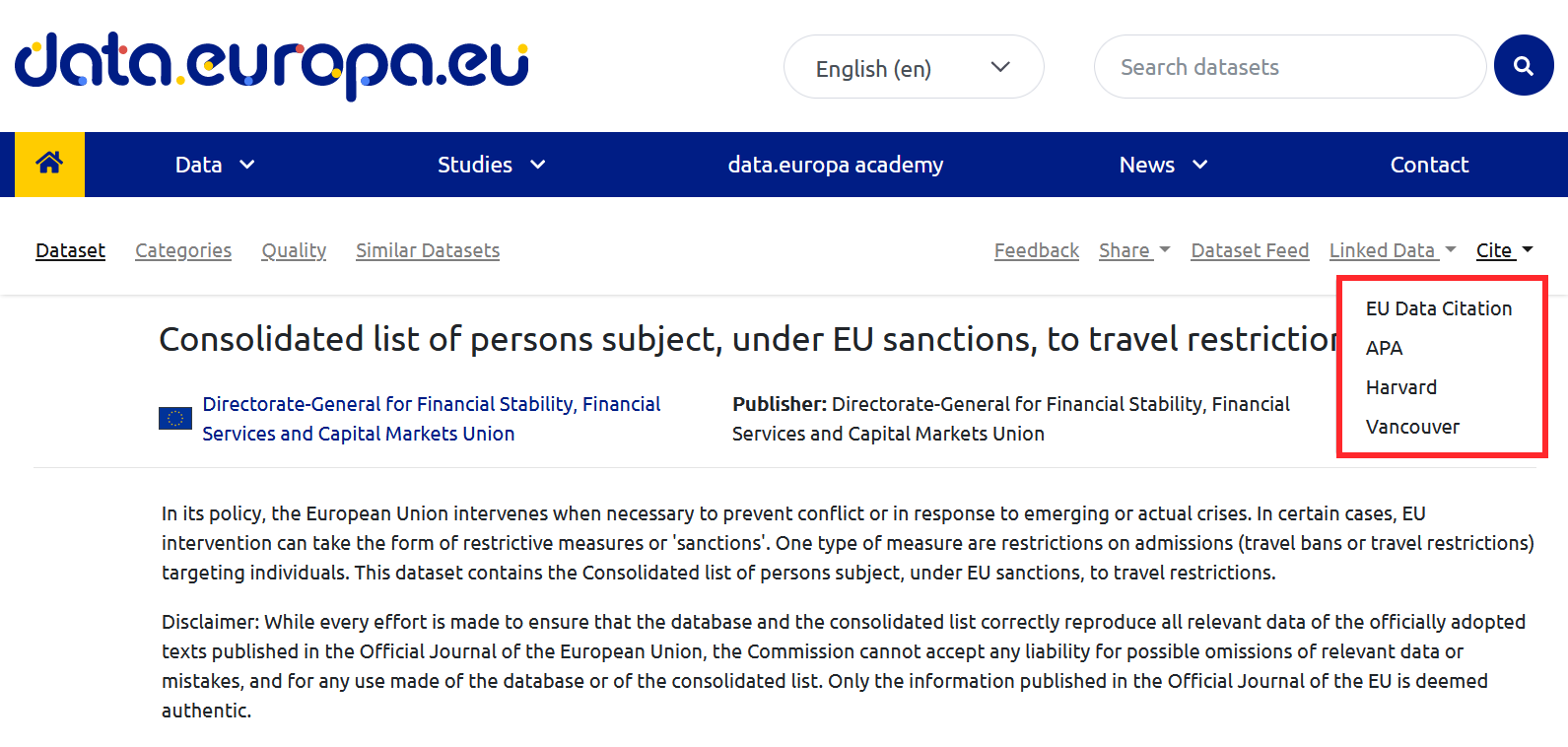

In addition, data portals can include tools to assist with citation. This is the case of data.europa.eu, where you can find the citation button in the top menu.

- Rely on software tools. Most of the software used to create documents allows for the automatic creation and formatting of citations, ensuring consistency. In addition, there are specific citation management tools such as BibTeX or Mendeley, which allow the creation of citation databases taking into account their peculiarities, a very useful function when it is necessary to cite numerous datasets in multiple documents.

With regard to the order of all this information, there are different guidelines for the general structure of citations. The guide shows the most appropriate forms of citation according to the type of document in which the citation appears (journalistic documents, online, etc.), including examples and recommendations. One example is the Interinstitutional Style Guide (ISG), which is published by the EU Publications Office. This style guide does not contain specific guidance on how to cite data, but it does contain a general citation structure that can be applied to datasets, shown in the image below.

How to cite correctly

The second part of the report contains the technical reference material for creating citations that meet the above recommendations. It covers the elements that a citation should include and how to arrange them for different purposes.

Elements that should be included in a citation include:

- Author, can refer to either the individual who created the dataset (personal author) or the responsible organisation (corporate author).

- Title of the dataset.

- Version/edition.

- Publisher, which is the entity that makes the dataset available and may or may not coincide with the author (in case of coincidence it is not necessary to repeat it).

- Date of publication, indicating the year in which it was created. It is important to include the time of the last update in brackets.

- Date of citation, which expresses the date on which the creator of the citation accessed the data, including the time if necessary. For date and time formats, the guide recommends using the DCAT specification, as it offers greater accuracy in terms of interoperability.

- Persistent identifier.
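To make the list above more concrete, the following sketch assembles these elements into a single citation string. It is only an illustration: the class name, the order and punctuation of the elements, and the sample dataset and DOI are hypothetical and do not reproduce the format recommended by the guide or the Interinstitutional Style Guide. Dates are written in ISO 8601 form (for example 2024-05-02), on the assumption that this is compatible with DCAT-style metadata.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


@dataclass
class DatasetCitation:
    author: str
    title: str
    publisher: str
    year: int
    accessed: date
    pid: str
    version: Optional[str] = None
    last_updated: Optional[date] = None

    def format(self) -> str:
        """Join the citation elements in one illustrative order."""
        parts = [self.author, self.title]
        if self.version:
            parts.append(f"version {self.version}")
        # The publisher is not repeated when it coincides with the author.
        if self.publisher != self.author:
            parts.append(self.publisher)
        published = str(self.year)
        if self.last_updated:
            # Last update shown in brackets after the publication year.
            published += f" (last updated {self.last_updated.isoformat()})"
        parts.append(published)
        parts.append(f"accessed {self.accessed.isoformat()}")
        parts.append(self.pid)
        return ", ".join(parts) + "."


if __name__ == "__main__":
    # Fictitious dataset and placeholder DOI, for illustration only.
    citation = DatasetCitation(
        author="Example Statistics Office",
        title="Example dataset",
        publisher="Example Statistics Office",
        year=2023,
        accessed=date(2024, 5, 2),
        pid="https://doi.org/10.1234/example",
        version="2.1",
        last_updated=date(2024, 1, 15),
    )
    print(citation.format())
```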

The guide ends with a series of annexes containing checklists, diagrams and examples.

If you want to know more about this document, we recommend you to watch this webinar where the most important points are summarised.

Ultimately, correctly citing datasets improves the quality and transparency of the data re-use process, while at the same time stimulating it. Encouraging the correct citation of data is therefore not only recommended, but increasingly necessary.

The Open Government Guide for Public Employees is a manual to guide the staff of public administrations at all levels (local, regional and state) on the concept and conditions necessary to achieve an "inclusive open government in a digital environment". Specifically, the document seeks for the administration to assume open government as a cross-cutting element of society, fostering its connection with the Sustainable Development Goals.

It is a comprehensive, practical and well-structured guide that facilitates the understanding and implementation of the principles of open government, providing examples and best practices that foster the development of the necessary skills to facilitate the long-term sustainability of open government.

What is open government?

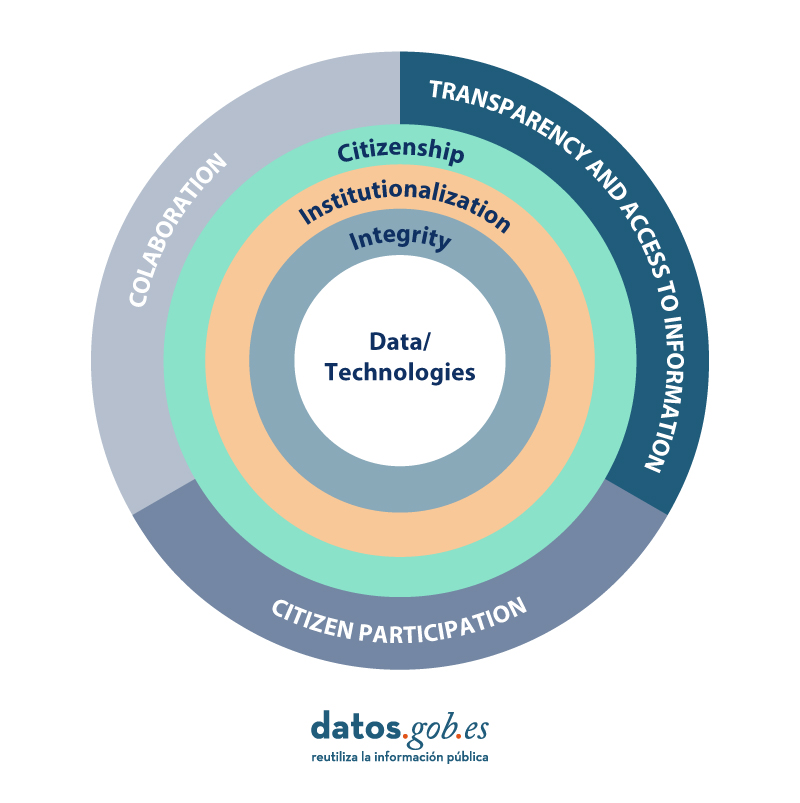

The guide adopts the most widely accepted definition of open government, based on three axes:

- Transparency and access to information (vision axis): Refers to open access to public information to facilitate greater accountability.

- Citizen participation (voice axis): It offers the possibility for citizens to be heard and intervene to improve decision-making and co-creation processes in public policies.

- Collaboration (value axis): Focuses on cooperation within the administration or externally, with citizens or civil society organizations, through innovation to generate greater co-production in the design and implementation of public services.

This manual defines these axes and breaks them down into their most relevant elements for better understanding and application. According to the guide, the basic elements of open administration are:

- An integrity that cuts across all public action.

- Data are "the raw material of governments and public administrations" and, for this reason, must be made available to "any actor", respecting the limits established by law. The use of information and communication technologies (digital) is conceived as a "space for the expansion of public action", without neglecting the digital divide.

- The citizenry is placed at the center of open administration, because it is not only the object of public action, but also "must enjoy a leading role in all the dynamics of transparency, participation and collaboration".

- Sustainability of government initiatives.

Adapted from a visual of the Open Government Guide for Public Employees. Source: https://funcionpublica.hacienda.gob.es/Secretaria-de-Estado-de-Funcion-Publica/Actualidad/ultimas-noticias/Noticias/2023/04/2023_04_11.html

Benefits of Open Government

With all this, a number of benefits are achieved:

- Increased institutional quality and legitimacy

- Increased trust in institutions

- More targeted policies to serve citizens

- More equitable access to policy formulation

How can I use the guide?

The guide is very useful because, in order to explain some concepts, it poses challenges so that civil servants themselves can reflect on them and even put them into practice. The authors also propose cases that provide an overview of open government in the world and its evolution, both in terms of the concepts related to it and the laws, regulations, relevant plans and areas of application (including Law 19/2013 on transparency, the Digital Spain 2025 agenda, the Digital Rights Charter and the General Data Protection Regulation, known as GDPR). As an example, the cases it mentions include the Elkar-EKIN Social Inclusion Plan of the Provincial Council of Gipuzkoa and Frena La Curva, an initiative launched by members of the Directorate General of Citizen Participation and the LAAAB of the Government of Aragon during COVID-19.

The guide also includes a self-diagnostic test on accountability, fostering collaboration, bibliographical references and proposals for improvement.

In addition, it offers diagrams and summaries to explain and schematize each concept, as well as specific guidelines to put them into practice. For example, it includes the question "Where are the limits on access to public information?". To answer this question, the guide cites the cases in which access can be given to information that refers to a person's ideology, beliefs, religious or union affiliation (p. 26). With adaptation to specific contexts, the manual could very well serve as a basis for organizing training workshops for civil servants because of the number of relevant issues it addresses and its organization.

The authors are right to also include warnings and constructive criticisms of the situation of open government in institutions. Although they do not single anyone out directly, they talk about:

- Black boxes: they are criticized for being closed systems. It is stated that black boxes should be opened and made transparent and that "the representation of sectors traditionally excluded from public decisions should be increased".

- Administrative language: This is a challenge for real transparency, since, according to a study mentioned in the guide, out of 760 official texts, 78% of them were not clear. Among the most difficult to understand are applications for scholarships, grants and subsidies, and employment-related procedures.

- The existence of a lack of transparency in some municipalities, according to another study mentioned in the guide. The global open government index, elaborated by the World Justice Project, places Spain in 24th place, behind countries such as Estonia (14th), Chile (18th), Costa Rica (19th) or Uruguay (21st) and ahead of Italy (28th), Greece (36th) or Romania (51st), among 102 countries. Open Knowledge Foundation has stopped updating its Global Open Data Index, specifically on open data.

In short, public administration is conceived as a step towards an open state, with the incorporation of the values of openness in all branches of government, including the legislative and judicial branches, in addition to government.

Additional issues to consider

For those who want to follow the path to open government, there are a number of issues to consider:

- The guide can be adapted to different spheres and scales of public administration. But public administration is not homogeneous, nor do the people in it have the same responsibilities, motivations, knowledge or attitudes to open government. A review of citizen use of open data in the Basque administration concluded that one obstacle to transparency is the lack of acceptance or collaboration in some sectors of the administration itself. A step forward, therefore, could be to conduct internal campaigns to disseminate the advantages for the administration of integrating citizen perspectives and to generate those spaces to integrate their contributions.

- Although the black box model is disappearing from the public administration, which is subject to great scrutiny, it has returned in the form of closed and opaque algorithmic systems applied to public administration. There are many studies in the scientific literature -for example, this one- that warn that erroneous opaque box systems may be operating in public administration without anyone noticing until harmful results are generated. This is an issue that needs to be reviewed.

- In order to adapt it to specific contexts, it should be possible to define more concretely what participation, collaboration and co-creation are. As the guide indicates, they imply not only transparency, but also the implementation of collaborative or innovative initiatives. But it is also necessary to ask a series of additional questions: what is a collaborative or innovation initiative, what methodologies exist, how is it organized and how is its success measured?

- The guide highlights the need to include citizens in open government. When talking about inclusion and participation, organized civil society and academia are mentioned above all, for example, in the Open Government Forum. But there is room for improvement to encourage individual participation and collaboration, especially for people with little access to technology. The guide mentions gender, territorial, age and disability digital divides, but does not explore them. However, when access to many public services, aid and assistance has been platformized (especially after the COVID-19 pandemic), such digital divides affect many people, especially the elderly, low-income and women. Since a generalist guide cannot address all relevant issues in detail, this would merit a separate guide.

Public institutions are increasingly turning to algorithmic decision-making for effective, fast and inclusive decision making. Therefore, it is also increasingly relevant to train the administration itself in open government in a digitized and platformized environment. This guide is a great first step for those who want to approach the subject.

Content prepared by Miren Gutiérrez, PhD and researcher at the University of Deusto, expert in data activism, data justice, data literacy and gender disinformation. The contents and views reflected in this publication are the sole responsibility of the author.

When launching an open data initiative, it is necessary that everyone involved in its development is aware of the benefits of open data, and that they are clear about the processes and workflows needed to achieve the goals. This is the only way to achieve an initiative with up-to-date data that meets the necessary quality parameters.

This idea was clear to the Alba Smart Initiative, which is why they have created various materials that not only serve to provide knowledge to all those involved, but also to motivate and raise awareness among heads of service and councillors about the need (and obligation) to publish as much information as possible for the use of citizens.

What is Alba Smart?

The Alba Smart project is jointly developed by the city councils of Almendralejo and Badajoz with the aim of advancing in their development as smart cities. Among the areas covered are the control of tourist mobility flows, the creation of an innovation hub, the installation of wifi access points in public buildings, the implementation of social wifi and the management of car parks and fleets.

Within the framework of this project, a platform has been developed to unify the information of devices and systems, thus facilitating the management of public services in a more efficient way. Alba Smart also incorporates a balanced scorecard, as well as an open data portal for each city council (Almendralejo's and Badajoz's are available here).

The Alba Smart initiative has the collaboration of Red.es through the National Plan for Smart Cities.

Activities to promote open data

Within the context of Alba Smart, there is an Open Data working group of the Badajoz City Council, which has launched several activities focused on the dissemination of open data in the framework of a local entity.

One of the first actions they have carried out is the creation of an internal WIKI in which they have been documenting all the materials that have been useful to them. This WIKI facilitates the sharing of internal content, so that all users have at their disposal materials of interest to answer questions such as: what is open data, what roles, tasks and processes are involved, why is it necessary to adopt this type of policies, etc.

In addition, on the public part of the Badajoz website, both Transparency and Open Data have shared a series of documents included in this WIKI that may also be of interest to other local initiatives:

Contents related to Transparency

The website includes a summary section with content on TRANSPARENCY. Among other issues, it includes a list of ITA2017 indicators and their assignment to each City Council Service.

This section also includes the regulatory framework that applies to the territory, as well as external references.

Content related to open data

It also includes a summary section on OPEN DATA, which includes the regulations to be applied and links of interest, in addition to:

- The list of 40 datasets recommended by the FEMP in 2019. This document includes a series of datasets that should be published by all local authorities in Spain in order to standardise the publication of open data and facilitate its management. The list generated by Alba Smart includes information on the local council service responsible for opening each dataset.

- A summary of the implementation plan followed by the council, which includes the workflow to be followed, emphasising the need for it to be carried out continuously: identifying new sources of information, reviewing internal processes, etc.

- A series of training videos, produced in-house, to assist colleagues in the preparation and publication of data. They include tutorials on how to organise data, how to catalogue data, and the review and approval process, among others.

- A (sample) manual on how to upload a file to their open data platform, which is developed with CKAN. It is a practical document, with screenshots, showing the whole process step by step.

- The list of vocabularies they use as a reference, which allow information to be systematically organised, categorised and tagged.

Among its next steps is the presentation of the datasets in the map viewer of the municipality. Data from the portal is already being fed into the corporate GIS to facilitate this function in the future.

All these actions are intended to ensure the sustainability of the portal, facilitating its continuous updating by a team with clear working procedures.