In any data management environment (companies, public administration, consortia, research projects), having data is not enough: if you don't know what data you have, where it is, what it means, who maintains it, with what quality, when it changed or how it relates to other data, then the value is very limited. Metadata —data about data—is essential for:

-

Visibility and access: Allow users to find what data exists and can be accessed.

-

Contextualization: knowing what the data means (definitions, units, semantics).

-

Traceability/lineage: Understanding where data comes from and how it has been transformed.

-

Governance and control: knowing who is responsible, what policies apply, permissions, versions, obsolescence.

-

Quality, integrity, and consistency: Ensuring data reliability through rules, metrics, and monitoring.

-

Interoperability: ensuring that different systems or domains can share data, using a common vocabulary, shared definitions, and explicit relationships.

In short, metadata is the lever that turns "siloed" data into a governed information ecosystem. As data grows in volume, diversity, and velocity, its function goes beyond simple description: metadata adds context, allows data to be interpreted, and makes it findable, accessible, interoperable, and reusable (FAIR).

In the new context driven by artificial intelligence, this metadata layer becomes even more relevant, as it provides the provenance information needed to ensure traceability, reliability, and reproducibility of results. For this reason, some recent frameworks extend these principles to FAIR-R, where the additional "R" highlights the importance of data being AI-ready, i.e. that it meets a series of technical, structural and quality requirements that optimize its use by artificial intelligence algorithms.

Thus, we are talking about enriched metadata, capable of connecting technical, semantic and contextual information to enhance machine learning, interoperability between domains and the generation of verifiable knowledge.

From traditional metadata to "rich metadata"

Traditional metadata

In the context of this article, when we talk about metadata with a traditional use, we think of catalogs, dictionaries, glossaries, database data models, and rigid structures (tables and columns). The most common types of metadata are:

-

Technical metadata: column type, length, format, foreign keys, indexes, physical locations.

-

Business/Semantic Metadata: Field Name, Description, Value Domain, Business Rules, Business Glossary Terms.

-

Operational/execution metadata: refresh rate, last load, processing times, usage statistics.

-

Quality metadata: percentage of null values, duplicates, validations.

-

Security/access metadata: access policies, permissions, sensitivity rating.

-

Lineage metadata: Transformation tracing in data pipelines .

This metadata is usually stored in repositories or cataloguing tools, often with tabular structures or relational bases, with predefined links.

Why "rich metadata"?

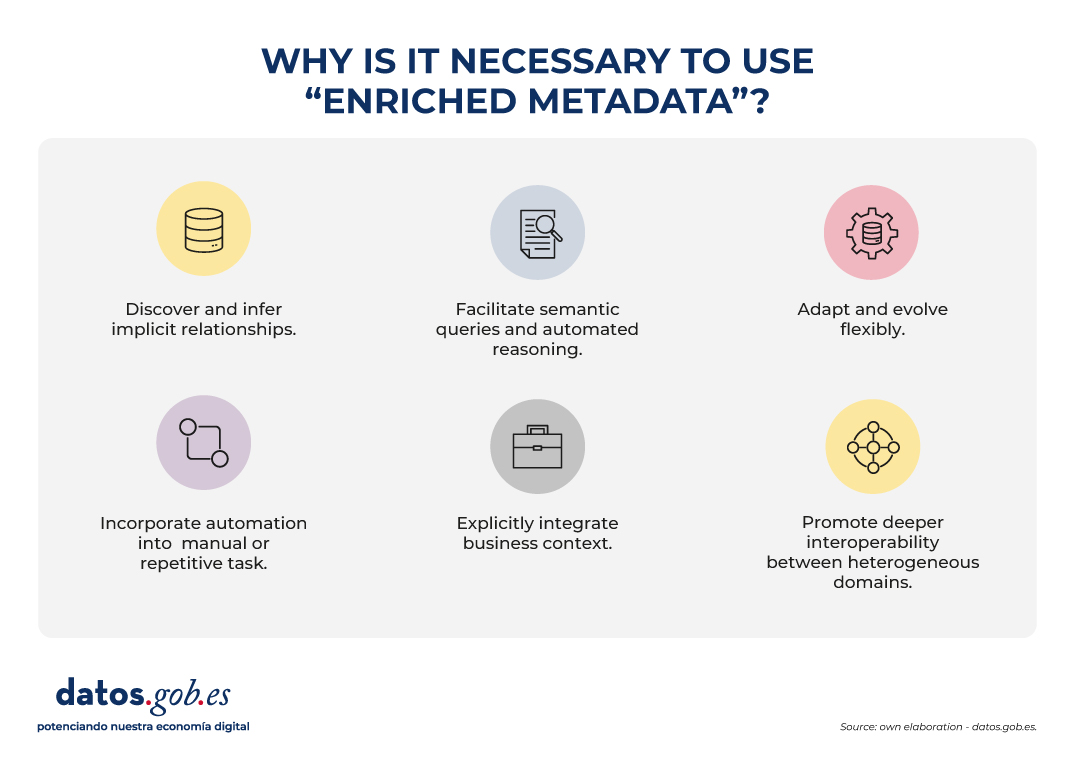

Rich metadata is a layer that not only describes attributes, but also:

- They discover and infer implicit relationships, identifying links that are not expressly defined in data schemas. This allows, for example, to recognize that two variables with different names in different systems actually represent the same concept ("altitude" and "elevation"), or that certain attributes maintain a hierarchical relationship ("municipality" belongs to "province").

- They facilitate semantic queries and automated reasoning, allowing users and machines to explore relationships and patterns that are not explicitly defined in databases. Rather than simply looking for exact matches of names or structures, rich metadata allows you to ask questions based on meaning and context. For example, automatically identifying all datasets related to "coastal cities" even if the term does not appear verbatim in the metadata.

- They adapt and evolve flexibly, as they can be extended with new entity types, relationships, or domains without the need to redesign the entire catalog structure. This allows new data sources, models or standards to be easily incorporated, ensuring the long-term sustainability of the system.

- They incorporate automation into tasks that were previously manual or repetitive, such as duplication detection, automatic matching of equivalent concepts, or semantic enrichment using machine learning. They can also identify inconsistencies or anomalies, improving the quality and consistency of metadata.

- They explicitly integrate the business context, linking each data asset to its operational meaning and its role within organizational processes. To do this, they use controlled vocabularies, ontologies or taxonomies that facilitate a common understanding between technical teams, analysts and business managers.

- They promote deeper interoperability between heterogeneous domains, which goes beyond the syntactic exchange facilitated by traditional metadata. Rich metadata adds a semantic layer that allows you to understand and relate data based on its meaning, not just its format. Thus, data from different sources or sectors – for example, Geographic Information Systems (GIS), Building Information Modeling (BIM) or the Internet of Things (IoT) – can be linked in a coherent way within a shared conceptual framework. This semantic interoperability is what makes it possible to integrate knowledge and reuse information between different technical and organizational contexts.

This turns metadata into a living asset, enriched and connected to domain knowledge, not just a passive "record".

The Evolution of Metadata: Ontologies and Knowledge Graphs

The incorporation of ontologies and knowledge graphs represents a conceptual evolution in the way metadata is described, related and used, hence we speak of enriched metadata. These tools not only document the data, but connect them within a network of meaning, allowing the relationships between entities, concepts, and contexts to be explicit and computable.

In the current context, marked by the rise of artificial intelligence, this semantic structure takes on a fundamental role: it provides algorithms with the contextual knowledge necessary to interpret, learn and reason about data in a more accurate and transparent way. Ontologies and graphs allow AI systems not only to process information, but also to understand the relationships between elements and to generate grounded inferences, opening the way to more explanatory and reliable models.

This paradigm shift transforms metadata into a dynamic structure, capable of reflecting the complexity of knowledge and facilitating semantic interoperability between different domains and sources of information. To understand this evolution, it is necessary to define and relate some concepts:

Ontologies

In the world of data, an ontology is a highly organized conceptual map that clearly defines:

- What entities exist (e.g., city, river, road).

- What properties they have (e.g. a city has a name, town, zip code).

- How they relate to each other (e.g. a river runs through a city, a road connects two municipalities).

The goal is for people and machines to share the same vocabulary and understand data in the same way. Ontologies allow:

- Define concepts and relationships: for example, "a plot belongs to a municipality", "a building has geographical coordinates".

- Set rules and restrictions: such as "each building must be exactly on a cadastral plot".

- Unify vocabularies: if in one system you say "plot" and in another "cadastral unit", ontology helps to recognize that they are analogous.

- Make inferences: from simple data, discover new knowledge (if a building is on a plot and the plot in Seville, it can be inferred that the building is in Seville).

- Establish a common language: they work as a dictionary shared between different systems or domains (GIS, BIM, IoT, cadastre, urban planning).

In short: an ontology is the dictionary and the rules of the game that allow different geospatial systems (maps, cadastre, sensors, BIM, etc.) to understand each other and work in an integrated way.

Knowledge Graphs

A knowledge graph is a way of organizing information as if it were a network of concepts connected to each other.

-

Nodes represent things or entities, such as a city, a river, or a building.

-

The edges (lines) show the relationships between them, for example: "is in", "crosses" or "belongs to".

-

Unlike a simple drawing of connections, a knowledge graph also explains the meaning of those relationships: it adds semantics.

A knowledge graph combines three main elements:

-

Data: specific cases or instances, such as "Seville", "Guadalquivir River" or "Seville City Hall Building".

-

Semantics (or ontology): the rules and vocabularies that define what kinds of things exist (cities, rivers, buildings) and how they can relate to each other.

-

Reasoning: the ability to discover new connections from existing ones (for example, if a river crosses a city and that city is in Spain, the system can deduce that the river is in Spain).

In addition, knowledge graphs make it possible to connect information from different fields (e.g. data on people, places and companies) under the same common language, facilitating analysis and interoperability between disciplines.

In other words, a knowledge graph is the result of applying an ontology (the data model) to several individual datasets (spatial elements, other territory data, patient records or catalog products, etc.). Knowledge graphs are ideal for integrating heterogeneous data, because they do not require a previously complete rigid schema: they can be grown flexibly. In addition, they allow semantic queries and navigation with complex relationships. Here's an example for spatial data to understand the differences:

|

Spatial data ontology (conceptual model) |

Knowledge graph (specific examples with instances) |

|---|---|

|

|

|

|

Use Cases

To better understand the value of smart metadata and semantic catalogs, there is nothing better than looking at examples where they are already being applied. These cases show how the combination of ontologies and knowledge graphs makes it possible to connect dispersed information, improve interoperability and generate actionable knowledge in different contexts.

From emergency management to urban planning or environmental protection, different international projects have shown that semantics is not just theory, but a practical tool that transforms data into decisions.

Some relevant examples include:

- LinkedGeoData that converted OpenStreetMap data into Linked Data, linking it to other open sources.

- Virtual Singapore is a 3D digital twin that integrates geospatial, urban and real-time data for simulation and planning.

- JedAI-spatial is a tool for interconnecting 3D spatial data using semantic relationships.

- SOSA Ontology, a standard widely used in sensor and IoT projects for environmental observations with a geospatial component.

- European projects on digital building permits (e.g. ACCORD), which combine semantic catalogs, BIM models, and GIS data to automatically validate building regulations.

Conclusions

The evolution towards rich metadata, supported by ontologies, knowledge graphs and FAIR-R principles, represents a substantial change in the way data is managed, connected and understood. This new approach makes metadata an active component of the digital infrastructure, capable of providing context, traceability and meaning, and not just describing information.

Rich metadata allows you to learn from data, improve semantic interoperability between domains, and facilitate more expressive queries, where relationships and dependencies can be discovered in an automated way. In this way, they favor the integration of dispersed information and support both informed decision-making and the development of more explanatory and reliable artificial intelligence models.

In the field of open data, these advances drive the transition from descriptive repositories to ecosystems of interconnected knowledge, where data can be combined and reused in a flexible and verifiable way. The incorporation of semantic context and provenance reinforces transparency, quality and responsible reuse.

This transformation requires, however, a progressive and well-governed approach: it is essential to plan for systems migration, ensure semantic quality, and promote the participation of multidisciplinary communities.

In short, rich metadata is the basis for moving from isolated data to connected and traceable knowledge, a key element for interoperability, sustainability and trust in the data economy.

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of the author.