In this episode we talk about the environment, focusing on the role that data plays in the ecological transition. Can open data help drive sustainability and protect the planet? We found out with our two guests:

- Francisco José Martínez García, conservation director of the natural parks of the south of Alicante.

- José Norberto Mazón, professor of computer languages and systems at the University of Alicante.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. You are both passionate about the use of data for society, how did you discover the potential of open data for environmental management?

Francisco José Martínez: For my part, I can tell you that when I arrived at the public administration, at the Generalitat Valenciana, the Generalitat launched a viewer called Visor Gva, which is open, which gives a lot of information on images, metadata, data in various fields... And the truth is that it made it much easier for me - and continues to make it easier - for me to work on the resolution of files and the work of a civil servant. Later, another database was also incorporated, which is the Biodiversity Data Bank, which offers data in grids of one kilometer by one kilometer. And finally, already applied to the natural spaces and wetlands that I direct, water quality data, all of them are open and can be the object of generating applied research by all researchers.

Jose Norberto Mazón: In my case, it was precisely with Francisco as director. He directs three natural parks that are wetlands in the south of Alicante and about one of them, in which we had special interest, which is the Natural Park of Laguna de la Mata and Torrevieja, Francisco told us about his experience -all this experience that he has just commented on-. We at the University of Alicante have been working for some time on data management, open data, data interoperability, etc., and we saw the opportunity to make a perspective of data management, data generation and reuse of data from the territory, from the Natural Park itself. Together with other entities such as Proyecto Mastral, Faunatura, AGAMED, and also colleagues from the Polytechnic University of Valencia, we saw the possibility of studying these useful data, focusing above all on the concept of high-value data, which the European Union was betting on them: data that has the potential to generate socio-economic or environmental benefits, benefit all users and contribute to making a European society based on the data economy. And well, we set out there to see how we could collaborate, especially to discover the potential of data at the territory level.

2. Through a strategy called the Green Deal, the European Union aims to become the world's first competitive and resource-efficient economy, achieving net-zero greenhouse gas emissions by 2050. What concrete measures are most urgent to achieve this and how can data help to achieve these goals?

Francisco José Martínez: The European Union has several lines, several projects such as the LIFE project, focused on endangered species, the ERDF funds to restore habitats... Here in Laguna de la Mata and Torrevieja, we have improved terrestrial habitats with these ERDF funds and it is precisely about these habitats being better CO2 capturers and generating more native plant communities, eliminating invasive species. Then we also have the regulation, at the regulatory level, on nature restoration, which has been in force since 2024, and which requires us to restore up to 30% of degraded terrestrial and marine ecosystems. I must also say that the Biodiversity Foundation, under the Ministry, generates quite a few projects related, for example, to the generation of climate shelters in urban areas. In other words, there are a series of projects and a lot of funding in everything that has to do with renaturalization, habitat improvement and species conservation.

Jose Norberto Mazón: I would also focus, to complement what Francis has said, on all data management, the importance given to data management at the level of the European Green Deal, specifically with data sharing projects, to make data more interoperable. In other words, in the end, all those actors that generate data can be useful through their combination and generate much more value in what are called data spaces and especially in the data space of the European Green Deal. Recently, in addition, they have just finished some initial projects. For example, to highlight a couple of them, the USAGE project (Urban Data Spaces for Green dEal), which I am going to comment on with two specific pilots that they have developed very interestingly. One on how everything that has to do with data to mitigate climate change has to be introduced into urban management in the city of Ferrara, in Italy. And another pilot on data governance and how it has to be done to comply with the FAIR principles, in this case in Zaragoza, with a concept of climate islands that is also very interesting. And then there is another project, AD4GD (All Data for Green Deal) that has also carried out pilots in relation to this interoperability of data. In this case, in the Berlin Lake Network. Berlin has about 300 lakes that have to monitor the quality of the water, the quantity of water, etc. and it has been done through sensorization. The management of biological corridors in Catalonia, too, with data on how species move and how it is necessary to manage these biological corridors. And they have also done some air quality initiatives with citizen science. These projects have already been completed, but there is a super interesting project at the European level that is going to launch this large data space of the European Pact, which is the SAGE (Sustainable Green Europe Data Space) project, which is developing ten use cases that encompass this entire great area of the European Green Deal. Specifically, to highlight one that is very pertinent, because it is aligned with what are the natural parks, the wetlands of the south of Alicante and that Francisco directs, is that of the commitments between nature and ecosystem services. That is, how nature must be protected, how we have to conserve, but we also have to allow these socio-economic activities in a sustainable way. This data space will integrate remote sensing, models based on artificial intelligence, data, etc.

3. Would you like to add any other projects at this local or regional level?

Francisco José Martínez: Yes, of course. Well, the one we have done with Norberto, his team and several teams, several departments of the Polytechnic University of Valencia and the University of Alicante, and it is the digital twin. Research has been carried out for the generation of a digital twin in the Natural Park of Las Lagunas, here in Torrevieja. And the truth is that it has been an applied research, a lot of data has been generated from sensors, also from direct observations or from image and sound recorders. A good record of information has been made at the level of noise, climate, meteorological data to be able to carry out good management and that it is an invaluable help for the management of those of us who have to make decisions day by day. Other data that have also been carried out in this project here has been the collection of data of a social nature, tourist use, people's feelings (whether they agree with what they see in the natural space or not). In other words, we have improved our knowledge of this natural space thanks to this digital twin and that is information that neither our viewer nor the Biodiversity Data Bank can provide us.

Jose Norberto Mazón: Francisco was talking, for example, about the knowledge of people, about the influx of people from certain areas of the natural park. And also to know what they feel, what the people who visit it think, because if it is not through surveys that are very cumbersome, etcetera is complicated. We have put at the service of discovering that knowledge, this digital twin with a multitude of that sensorization and with data that in the end are also interoperable and that allow us to know the territory very well. Obviously, the fact that it is territorial does not mean that it is not scalable. What we are doing with the digital twin project, the ChanTwin project, what we are doing is that it can be dumped or extrapolated to any other natural area, because the problems that we have had in the end we are going to find in any natural area, such as connectivity problems, interoperability problems of data that come from sensors, etc. We have sensors of many types, influx of people, water quality, temperatures and climatic variables, pollution, etc. and in the end also with all the guarantees of data privacy. I have to say this, which is very important because we always try to ensure that this data collection, of course, guarantees people's privacy. We can know the concerns of the people who visit the park and also, for example, the origin of those people. And this is very interesting information at the level of park management, because in this way, for example, Francisco can make more informed decisions to better manage the park. But, the people who visit the park come from a specific municipality, with a city council that, for example, has a Department of the Environment or has a Department of Tourism. And this information can be very interesting to highlight certain aspects, for example, environmental, biodiversity, or socio-economic activity.

Francisco José Martínez: Data are fundamental in the management of the natural environment of a wetland, a mountain, a forest, a pasture... in general of all natural spaces. Note that only with the follow-up and monitoring of certain environmental parameters do we serve to explain events that can happen, for example, a fish mortality. Without having had the history of the dissolved oxygen temperature data, it is very difficult to know if it is because of that or because of a pollutant. For example, the temperature of water, which is related to dissolved oxygen: the higher the temperature, the less dissolved oxygen. And without oxygen, it turns out that they appear in spring and summer – okay, whatever the ambient temperatures are, it moves to the water, to the lagoons, to the wetlands – a disease appears that is botulism and there have already been two years that more than a thousand animals have died every year. The way to control it is by anticipating that these temperatures are going to reach a specific one, that from there the oxygen almost disappears from the waters and gives us time to plan the work teams that are removing the corpses, which is the fundamental action to avoid it. Another, for example, is the monthly census of waterfowl, which are observed in person, which are recorded, which we also have recorders that record sounds. With that we can know the dynamics when species come in migration and with that we can also manage water. Another example can be that of the temperature of the lagoon here in La Mata, which we are monitoring with the digital twin, because we know that when it reaches almost thirty degrees, the main food of the birds disappears, which is brine shrimp, because they cannot live in those extreme temperatures with that salinity. but we can bring in sea water, which despite the fact that it has been very hot these last springs and summers, is always cooler and we can refresh and extend the life of this species that is precisely synchronized with the reproduction of birds. So we can manage the water thanks to the monitoring and thanks to the data we have on the temperatures of the waters.

Jose Norberto Mazón: Look at the importance of these examples that Francisco mentioned, which are paradigmatic, and also the importance of the use of data. I would simply add a question that in the end these data, the effort is to make them all open and that they comply with those FAIR principles, that is, that they are interoperable, because as we have heard Francis have commented, they are data from many sources, each with different characteristics, collected in different ways, etc. You're talking to us about sensor data, but also other data that is collected in another way. And then also that they allow us in some way to start co-creation processes of tools that use this data at various levels. Of course, at the level of management of the natural park itself to make informed decisions, but also at the level of citizenship, even at the level of other types of professionals. As Francisco said, in the parks, in these wetlands, economic activities are carried out and therefore also being able to co-create tools with these actors or with the university research staff themselves is very interesting. And here it is always a matter of encouraging third parties, both natural and legal, for example, companies or startups, entrepreneurs, etc. that they make various applications and value-added services with that data: that they design easy-to-use tools for decision-making, for example, or any other type of tool. This would be very interesting, because it would also give us an entrepreneurial ecosystem around that data. And what this would also do is make society itself more involved from this open data, from the reuse of open data, in environmental care and environmental awareness.

4. An important aspect of this transition is that it must be "fair and leave no one behind". What role can data play in ensuring that equity?

Francisco José Martínez: In our case, we have been carrying out citizen science actions with the Environmental Education and Dissemination technicians. We are collecting data with people who sign up for these activities. We do two activities a month and, for example, we have carried out censuses of bats of different species - because one sees bats and does not distinguish the species, sometimes not even seeing them - on night routes, to detect and record them. We have also done photo trapping activities to detect mammals that are very difficult to see. With this we get children, families, people in general to know a fauna that they do not know exists when they are walking in the mountains. And I believe that we reach a lot of people and that we are disseminating it to as many people, as many sectors as possible.

Jose Norberto Mazón: And from that data, in fact, look at all the amount of data that Francis is talking about. From there, and promoting that line that Francisco follows as director of the Natural Parks of the south of Alicante, what we ask ourselves is: can we go one step further using technology? And we have made video games that make it possible to have more awareness among those target groups that may otherwise be very difficult to reach. For example, teenagers, who must be instilled in some way that behavior, that importance of natural parks as well. And we think that video games can be a very interesting channel. And how have we done it? Basing these video games on data, on data that come from what Francisco has commented on and also from the data of the digital twin itself. That is, data we have on the water surface, noise levels... We include all this data in video games. They are dynamic video games that allow us to have a better awareness of what the natural park is and of the environmental values and conservation of biodiversity.

5. You've been talking to us for a while about all the data you use, which in the end comes from various sources. Can we summarize the type of data you use in your day-to-day life and what are the challenges you encounter when integrating it into specific projects?

Francisco José Martínez: The data are spatial, they are images with their metadata, censuses of birds, mammals, the different taxonomic groups, fauna, flora... We also carry out inventories of protected flora in danger of extinction. Fundamental meteorological data that, by the way, are also very important when it comes to the issue of civil protection. Look at all the disasters that there are with cold drops or cut-off lows. Very important data such as water quality, physical and chemical data, height of the water sheet that helps us to know evaporation, evaporation curves and thus manage water inputs and of course, social data for public use. Because public use is very important in natural spaces. It is a way of opening up to citizens, to people so that they can know their natural resources and know them, value them and thus protect them. As for the difficulty, it is true that there is a series of data, especially when research is carried out, which we cannot access. They are in repositories for technicians who are in the administration or even for consultants who are difficult to access. I think Norberto can explain this better: how this could be integrated into platforms, by sectors, by groups...

Jose Norberto Mazón: In fact, it is a core issue for us. In the end there is a lot of open data, as Francis has explained throughout this little time that we have been talking, but it is true that they are very dispersed because they are also generated to meet various objectives. In the end, the main objective of open data is that it is reused, that is, that it is used for purposes other than those for which it was initially granted. But what we find is that in the end there are many proposals that are, as we would say, top-down (very top down). But really, where the problem lies is in the territory, from below, in all the actors involved in the territory, which apart from a lot of data is generated in the territory itself. In other words, it is true that there is data, for example, satellite data with remote sensing, which is generated by the satellites themselves and then reused by us, but then the data that comes from sensors or the data that comes from citizen science, etc., are generated in the territory itself. And we find that many times, in the end of that data, for example, if there are researchers who do a job in a specific natural park, then obviously that research team publishes its articles and data in open (because by the law of science they have to publish them in open in repositories). But of course, that is very research-oriented. So, the other types of actors, for example, the management of the park, the managers of a local entity or even the citizens themselves, are perhaps not aware that this data is available and do not even have mechanisms to consult it and obtain value from it. The greatest difficulty, in fact, is this, in that the data generated from the territory is reused from the territory. It is very easy to reuse them from the territory to solve these problems as well. And that difficulty is what we are trying to tackle with these projects that we have underway, at the moment with the creation of a data lake, a data architecture that allows us to manage all that heterogeneity of the data and do it from the territory. But of course, here what we really have to do is try to do it in a federated way, with that philosophy of open data at the federated level and also with a plus as well, because it is true that the casuistry within the territory is very large. There are a multitude of actors, because we are talking about open data, but there may also be actors who say "I want to share certain data, but not certain other data yet, because I may lose a certain competitiveness, but I would not mind being able to share it in three months' time". In other words, it is also necessary to have control over a certain type of data and that open data coexists with another type of data that can be shared. Maybe not so broadly, but in a way, let's say, providing great value. We are looking at this possibility with a new project that we are creating: a space for environmental data, biodiversity in these three natural parks in the south of the province of Alicante, and we are working on that project: Heleade.

If you want to know more about these projects, we invite you to visit their websites:

Interview clips

1. How was the digital twin of the Lagunas de Torrevieja Natural Park conceived?

2. What projects are being promoted within the framework of the European Green Deal Data Space?

Open health data is one of the most valuable assets of our society. Well managed and shared responsibly, they can save lives, drive medical discoveries, or even optimize hospital resources. However, for decades, this data has remained fragmented in institutional silos, with incompatible formats and technical and legal barriers that made it difficult to reuse. Now, the European Union is radically changing the landscape with an ambitious strategy that combines two complementary approaches:

- Facilitate open access to statistics and non-sensitive aggregated data.

- Create secure infrastructures to share personal health data under strict privacy guarantees.

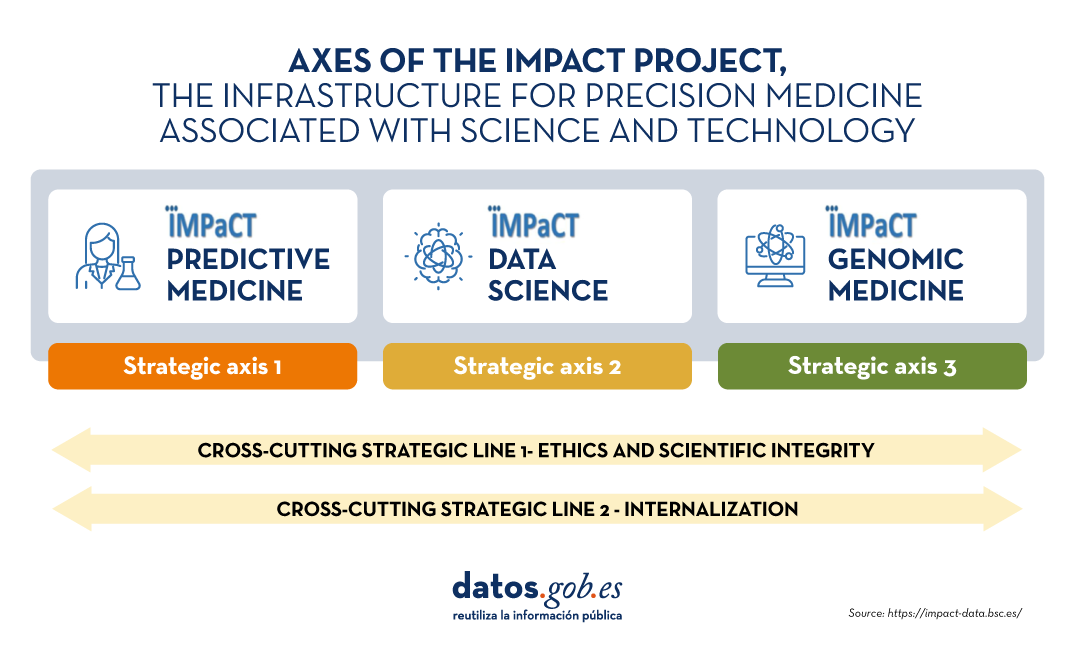

In Spain, this transformation is already underway through the National Health Data Space or research groups that are at the forefront of the innovative use of health data. Initiatives such as IMPACT-Data, which integrates medical data to drive precision medicine, demonstrate the potential of working with health data in a structured and secure way. And to make it easier for all this data to be easy to find and reuse, standards such as HealthDCAT-AP are implemented.

All this is perfectly aligned with the European strategy of the European Health Data Space Regulation (EHDS), officially published in March 2025, which is also integrated with the Open Data Directive (ODD), in force since 2019. Although the two regulatory frameworks have different scopes, their interaction offers extraordinary opportunities for innovation, research and the improvement of healthcare across Europe.

A recent report prepared by Capgemini Invent for data.europa.eu analyzes these synergies. In this post, we explore the main conclusions of this work and reflect on its relevance for the Spanish open data ecosystem.

-

Two complementary frameworks for a common goal

On the one hand, the European Health Data Space focuses specifically on health data and pursues three fundamental objectives:

- Facilitate international access to health data for patient care (primary use).

- Promote the reuse of this data for research, public policy, and innovation (secondary use).

- Technically standardize electronic health record (EHR) systems to improve cross-border interoperability.

For its part, the Open Data Directive has a broader scope: it encourages the public sector to make government data available to any user for free reuse. This includes High-Value Datasets that must be published for free, in machine-readable formats, and via APIs in six categories that did not originally include "health." However, in the proposal to expand the new categories published by the EU, the health category does appear.

The complementarity between the two regulatory frameworks is evident: while the ODD facilitates open access to aggregated and non-sensitive health statistics, the EHDS regulates controlled access to individual health data under strict conditions of security, consent and governance. Together, they form a tiered data sharing system that maximizes its social value without compromising privacy, in full compliance with the General Data Protection Regulation (GDPR).

Main benefits computer by user groups

The report looks at four main user groups and examines both the potential benefits and challenges they face in combining EHDS data with open data.

-

Patients: Informed Empowerment with Practical Barriers

European patients will gain faster and more secure access to their own electronic health records, especially in cross-border contexts thanks to infrastructures such as MyHealth@EU. This project is particularly useful for European citizens who are displaced in another European country. .

Another interesting project that informs the public is PatientsLikeMe, which brings together more than 850,000 patients with rare or chronic diseases in an online community that shares information of interest about treatments and other issues.

-

Potential health professionals subordinate to integration

On the other hand, healthcare professionals will be able to access clinical patient data earlier and more easily, even across borders, improving continuity of care and the quality of diagnosis and treatment.

The combination with open data could amplify these benefits if tools are developed that integrate both sources of information directly into electronic health record systems.

3. Policymakers: data for better decisions

Public officials are natural beneficiaries of the convergence between EHDS and open data. The possibility of combining detailed health data (upon request and authorisation through the Health Data Access Bodies that each Member State must establish) with open statistical and contextual information would allow for much more robust evidence-based policies to be developed.

The report mentions use cases such as combining health data with environmental information to assess health impacts. A real example is the French Green Data for Health project, which crosses open data on noise pollution with information on prescriptions for sleep medications from more than 10 million inhabitants, investigating correlations between environmental noise and sleep disorders.

4. Researchers and reusers: the main immediate beneficiaries

Researchers, academics and innovators are the group that will most directly benefit from the EHDS-ODD synergy as they have the skills and tools to locate, access, combine and analyse data from multiple sources. In addition, their work already routinely involves the integration of various data sets.

A recent study published in PLOS Digital Health on the case of Andalusia demonstrates how open data in health can democratize research in health AI and improve equity in treatment.

The development of EHDS is being supported by European programmes such as EU4Health, Horizon Europe and specific projects such as TEHDAS2, which help to define technical standards and pilot real applications.

-

Recommendations to maximize impact

The report concludes with four key recommendations that are particularly relevant to the Spanish open data ecosystem:

- Stimulate research at the EHDS-open data intersection through dedicated funding. It is essential to encourage researchers who combine these sources to translate their findings into practical applications: improved clinical protocols, decision tools, updated quality standards.

- Evaluate and facilitate direct use by professionals and patients. Promoting data literacy and developing intuitive applications integrated into existing systems (such as electronic health records) could change this.

- Strengthen governance through education and clear regulatory frameworks. As EHDS technical entities become operationalized, clear regulation defining common regulatory frameworks will be essential.

- Monitor, evaluate and adapt. The period 2025-2031 will see the gradual entry into force of the various EHDS requirements. Regular evaluations are recommended to assess how EHDS is actually being used, which combinations with open data are generating the most value, and what adjustments are needed.

Moreover, for all this to work, the report suggests that portals such as data.europa.eu (and by extension, datos.gob.es) should highlight practical examples that demonstrate how open data complements protected data from sectoral spaces, thus inspiring new applications.

Overall, the role of open data portals will be fundamental in this emerging ecosystem: not only as providers of quality datasets, but also as facilitators of knowledge, meeting spaces between communities and catalysts for innovation. The future of European healthcare is now being written, and open data plays a leading role in that story.

Collaborative culture and citizen open data projects are key to democratic access to information. This contributes to free knowledge that allows innovation to be promoted and citizens to be empowered.

In this new episode of the datos.gob.es podcast, we are joined by two professionals linked to citizen projects that have revolutionized the way we access, create and reuse knowledge. We welcome:

- Florencia Claes, professor and coordinator of Free Culture at the Rey Juan Carlos University, and former president of Wikimedia Spain.

- Miguel Sevilla-Callejo, researcher at the CSIC (Spanish National Research Council) and Vice-President of the OpenStreetMap Spain association.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. How would you define free culture?

Florencia Claes: It is any cultural, scientific, intellectual expression, etc. that as authors we allow any other person to use, take advantage of, reuse, intervene in and relaunch into society, so that another person does the same with that material.

In free culture, licenses come into play, those permissions of use that tell us what we can do with those materials or with those expressions of free culture.

2. What role do collaborative projects have within free culture?

Miguel Sevilla-Callejo: Having projects that are capable of bringing together these free culture initiatives is very important. Collaborative projects are horizontal initiatives in which anyone can contribute. A consensus is structured around them to make that project, that culture, grow.

3. You are both linked to collaborative projects such as Wikimedia and OpenStreetMap. How do these projects impact society?

Florencia Claes: Clearly the world would not be the same without Wikipedia. We cannot conceive of a world without Wikipedia, without free access to information. I think Wikipedia is associated with the society we are in today. It has built what we are today, also as a society. The fact that it is a collaborative, open, free space, means that anyone can join and intervene in it and that it has a high rigor.

So, how does it impact? It impacts that (it will sound a little cheesy, but...) we can be better people, we can know more, we can have more information. It has an impact on the fact that anyone with access to the internet, of course, can benefit from its content and learn without necessarily having to go through a paywall or be registered on a platform and change data to be able to appropriate or approach the information.

Miguel Sevilla-Callejo: We call OpenStreetMap the Wikipedia of maps, because a large part of its philosophy is copied or cloned from the philosophy of Wikipedia. If you imagine Wikipedia, what people do is they put encyclopedic articles. What we do in OpenStreetMap is to enter spatial data. We build a map collaboratively and this assumes that the openstreetmap.org page, which is where you could go to look at the maps, is just the tip of the iceberg. That's where OpenStreetMap is a little more diffuse and hidden, but most of the web pages, maps and spatial information that you are seeing on the Internet, most likely in its vast majority, comes from the data of the great free, open and collaborative database that is OpenStreetMap.

Many times you are reading a newspaper and you see a map and that spatial data is taken from OpenStreetMap. They are even used in agencies: in the European Union, for example, OpenStreetMap is being used. It is used in information from private companies, public administrations, individuals, etc. And, in addition, being free, it is constantly reused.

I always like to bring up projects that we have done here, in the city of Zaragoza. We have generated the entire urban pedestrian network, that is, all the pavements, the zebra crossings, the areas where you can circulate... and with this a calculation is made of how you can move around the city on foot. You can't find this information on sidewalks, crosswalks and so on on a website because it's not very lucrative, such as getting around by car, and you can take advantage of it, for example, which is what we did in some jobs that I directed at university, to be able to know how different mobility is with blind people. in a wheelchair or with a baby carriage.

4. You are telling us that these projects are open. If a citizen is listening to us right now and wants to participate in them, what should they do to participate? How can you be part of these communities?

Florencia Claes: The interesting thing about these communities is that you don't need to be formally associated or linked to them to be able to contribute. In Wikipedia you simply enter the Wikipedia page and become a user, or not, and you can edit. What is the difference between making your username or not? In that you will be able to have better access to the contributions you have made, but we do not need to be associated or registered anywhere to be able to edit Wikipedia.

If there are groups at the local or regional level related to the Wikimedia Foundation that receive grants and grants to hold meetings or activities. That's good, because you meet people with the same concerns and who are usually very enthusiastic about free knowledge. As my friends say, we are a bunch of geeks who have met and feel that we have a group of belonging in which we share and plan how to change the world.

Miguel Sevilla-Callejo: In OpenStreetMap it is practically the same, that is, you can do it alone. It is true that there is a bit of a difference with respect to Wikipedia. If you go to the openstreetmap.org page, where we have all the documentation – which is wiki.OpenStreetMap.org – you can go there and you have all the documentation.

It is true that to edit in OpenStreetMap you do need a user to better track the changes that people make to the map. If it were anonymous there could be more of a problem, because it is not like the texts in Wikipedia. But as Florencia said, it's much better if you associate yourself with a community.

We have local groups in different places. One of the initiatives that we have recently reactivated is the OpenStreetMap Spain association, in which, as Florencia said, we are a group of those who like data and free tools, and there we share all our knowledge. A lot of people come up to us and say "hey, I just entered OpenStreetMap, I like this project, how can I do this? How can I do the other?"And well, it's always much better to do it with other colleagues than to do it alone. But anyone can do it.

5. What challenges have you encountered when implementing these collaborative projects and ensuring their sustainability over time? What are the main challenges, both technical and social, that you face?

Miguel Sevilla-Callejo: One of the problems we find in all these movements that are so horizontal and in which we have to seek consensus to know where to move forward, is that in the end it is relatively problematic to deal with a very diverse community. There is always friction, different points of view... I think this is the most problematic thing. What happens is that, deep down, as we are all moved by enthusiasm for the project, we end up reaching agreements that make the project grow, as can be seen in Wikimedia and OpenStreetMap themselves, which continue to grow and grow.

From a technical point of view, for some things in particular, you have to have a certain computer prowess, but we are very, very basic. For example, we have made mapathons, which consist of us meeting in an area with computers and starting to put spatial information in areas, for example, where there has been a natural disaster or something like that. Basically, on a satellite image, people place little houses where they see - little houses there in the middle of the Sahel, for example, to help NGOs such as Doctors Without Borders. That's very easy: you open it in the browser, open OpenStreetMap and right away, with four prompts, you're able to edit and contribute.

It is true that, if you want to do things that are a little more complex, you have to have more computer skills. So it is true that we always adapt. There are people who are entering data in a very pro way, including buildings, importing data from the cadastre... and there are people like a girl here in Zaragoza who recently discovered the project and is entering the data they find with an application on their mobile phone.

I do really find a certain gender bias in the project. That within OpenStreetMap worries me a little, because it is true that a large majority of the people we are editing, including the community, are men and that in the end does mean that some data has a certain bias. But hey, we're working on it.

Florencia Claes: In that sense, in the Wikimedia environment, that also happens to us. We have, more or less worldwide, 20% of women participating in the project against 80% of men and that means that, for example, in the case of Wikipedia, there is a preference for articles about footballers sometimes. It is not a preference, but simply that the people who edit have those interests and as they are more men, we have more footballers, and we miss articles related, for example, to women's health.

So we do face biases and we face that coordination of the community. Sometimes people with many years participate, new people... and achieving a balance is very important and very difficult. But the interesting thing is when we manage to keep in mind or remember that the project is above us, that we are building something, that we are giving something away, that we are participating in something very big. When we become aware of that again, the differences calm down and we focus again on the common good which, after all, I believe is the goal of these two projects, both in the Wikimedia environment and OpenStreetMap.

6. As you mentioned, both Wikimedia and OpenStreetMap are projects built by volunteers. How do you ensure data quality and accuracy?

Miguel Sevilla-Callejo: The interesting thing about all this is that the community is very large and there are many eyes watching. When there is a lack of rigor in the information, both in Wikipedia – which people know more about – but also in OpenStreetMap, alarm bells go off. We have tracking systems and it's relatively easy to see dysfunctions in the data. Then we can act quickly. This gives a capacity, in OpenStreetMap in particular, to react and update the data practically immediately and to solve those problems that may arise also quite quickly. It is true that there has to be a person attentive to that place or that area.

I've always liked to talk about OpenStreetMap data as a kind of - referring to it as it is done in the software - beta map, which has the latest, but there can be some minimal errors. So, as a strongly updated and high-quality map, it can be used for many things, but for others of course not, because we have another reference cartography that is being built by the public administration.

Florencia Claes: In the Wikimedia environment we also work like this, because of the mass, because of the number of eyes that are looking at what we do and what others do. Each one, within this community, is assuming roles. There are roles that are scheduled, such as administrators or librarians, but there are others that simply: I like to patrol, so what I do is keep an eye on new articles and I could be looking at the articles that are published daily to see if they need any support, any improvement or if, on the contrary, they are so bad that they need to be removed from the main part or erased.

The key to these projects is the number of people who participate and everything is voluntary, altruistic. The passion is very high, the level of commitment is very high. So people take great care of those things. Whether data is curated to upload to Wikidata or an article is written on Wikipedia, each person who does it, does it with great affection, with great zeal. Then time goes by and he is aware of that material that he uploaded, to see how it continued to grow, if it was used, if it became richer or if, on the contrary, something was erased.

Miguel Sevilla-Callejo: Regarding the quality of the data, I find interesting, for example, an initiative that the Territorial Information System of Navarre has now had. They have migrated all their data for planning and guiding emergency routes to OpenStreetMap, taking their data. They have been involved in the project, they have improved the information, but taking what was already there [in OpenStreetMap], considering that they had a high quality and that it was much more useful to them than using other alternatives, which shows the quality and importance that this project can have.

7. This data can also be used to generate open educational resources, along with other sources of knowledge. What do these resources consist of and what role do they play in the democratization of knowledge?

Florencia Claes: OER, open educational resources, should be the norm. Each teacher who generates content should make it available to citizens and should be built in modules from free resources. It would be ideal.

What role does the Wikimedia environment have in this? From housing information that can be used when building resources, to providing spaces to perform exercises or to take, for example, data and do work with SPARQL. In other words, there are different ways of approaching Wikimedia projects in relation to open educational resources. You can intervene and teach students how to identify data, how to verify sources, to simply make a critical reading of how information is presented, how it is curated, and make, for example, an assessment between languages.

Miguel Sevilla-Callejo: OpenStreetMap is very similar. What's interesting and unique is what the nature of the data is. It's not exactly information in different formats like in Wikimedia. Here the information is that free spatial database that is OpenStreetMap. So the limits are the imagination.

I remember that there was a colleague who went to some conferences and made a cake with the OpenStreetMap map. He would feed it to the people and say, "See? These are maps that we have been able to eat because they are free." To make more serious or more informal or playful cartography, the limit is only your imagination. It happens exactly the same as with Wikipedia.

8. Finally, how can citizens and organizations be motivated to participate in the creation and maintenance of collaborative projects linked to free culture and open data?

Florencia Claes: I think we have to clearly do what Miguel said about the cake. You have to make a cake and invite people to eat cake. Seriously talking about what we can do to motivate citizens to reuse this data, I believe, especially from personal experience and from the groups with which I have worked on these platforms, that the interface is friendly is a very important step.

In Wikipedia in 2015, the visual editor was activated. The visual editor made us join many more women to edit Wikipedia. Before, it was edited only in code and code, because at first glance it can seem hostile or distant or "that doesn't go with me". So, to have interfaces where people don't need to have too much knowledge to know that this is a package that has such and such data and I'm going to be able to read it with such a program or I'm going to be able to dump it into such and such a thing and make it simple, friendly, attractive... I think that this is going to remove many barriers and that it will put aside the idea that data is for computer scientists. And I think that data goes further, that we can really take advantage of all of them in very different ways. So I think it's one of the barriers that we should overcome.

Miguel Sevilla-Callejo: It didn't happen to us that until about 2015 (forgive me if it's not exactly the date), we had an interface that was quite horrible, almost like the code edition you have in Wikipedia, or worse, because you had to enter the data knowing the labeling, etc. It was very complex. And now we have an editor that basically you're in OpenStreetMap, you hit edit and a super simple interface comes out. You don't even have to put labeling in English anymore, it's all translated. There are many things pre-configured and people can enter the data immediately and in a very simple way. So what that has allowed is that many more people come to the project.

Another very interesting thing, which also happens in Wikipedia, although it is true that it is much more focused on the web interface, is that around OpenStreetMap an ecosystem of applications and services has been generated that has made it possible, for example, to appear mobile applications that, in a very fast, very simple way, allow data to be put directly on foot on the ground. And this makes it possible for people to enter the data in a simple way.

I wanted to stress it again, although I know that we are reiterating all the time in the same circumstance, but I think it is important to comment on it, because I think that we forget that within the projects: we need people to be aware again that data is free, that it belongs to the community, that it is not in the hands of a private company, that it can be modified, that it can be transformed, that behind it there is a community of voluntary, free people, but that this does not detract from the quality of the data, and that it reaches everywhere. So that people come closer and don't see us as a weirdo. I think that Wikipedia is much more integrated into society's knowledge and now with artificial intelligence much more, but it happens to us in OpenStreetMap, that they look at you like saying "but what are you telling me if I use another application on my mobile?" or you're using ours, you're using OpenStreetMap data without knowing it. So we need to get closer to society, to get them to know us better.

Returning to the issue of association, that is one of our objectives, that people know us, that they know that this data is open, that it can be transformed, that they can use it and that they are free to have it to build, as I said before, what they want and the limit is their imagination.

Florencia Claes: I think we should somehow integrate through gamification, through games in the classroom, the incorporation of maps, of data within the classroom, within the day-to-day schooling. I think we would have a point in our favour there. Given that we are within a free ecosystem, we can integrate visualization or reuse tools on the same pages of the data repositories that I think would make everything much friendlier and give a certain power to citizens, it would empower them in such a way that they would be encouraged to use them.

Miguel Sevilla-Callejo: It's interesting that we have things that connect both projects (we also sometimes forget the people of OpenStreetMap and Wikipedia), that there is data that we can exchange, coordinate and add. And that would also add to what you just said.

Subscribe to our Spotify profile to keep up to date with our podcasts

Interview clips

1. What is OpenStreetMap?

2. How does Wikimedia help in the creation of Open Educational Resources?

Open data is a fundamental fuel for contemporary digital innovation, creating information ecosystems that democratise access to knowledge and foster the development of advanced technological solutions.

However, the mere availability of data is not enough. Building robust and sustainable ecosystems requires clear regulatory frameworks, sound ethical principles and management methodologies that ensure both innovation and the protection of fundamental rights. Therefore, the specialised documentation that guides these processes becomes a strategic resource for governments, organisations and companies seeking to participate responsibly in the digital economy.

In this post, we compile recent reports, produced by leading organisations in both the public and private sectors, which offer these key orientations. These documents not only analyse the current challenges of open data ecosystems, but also provide practical tools and concrete frameworks for their effective implementation.

State and evolution of the open data market

Knowing what it looks like and what changes have occurred in the open data ecosystem at European and national level is important to make informed decisions and adapt to the needs of the industry. In this regard, the European Commission publishes, on a regular basis, a Data Markets Report, which is updated regularly. The latest version is dated December 2024, although use cases exemplifying the potential of data in Europe are regularly published (the latest in February 2025).

On the other hand, from a European regulatory perspective, the latest annual report on the implementation of the Digital Markets Act (DMA)takes a comprehensive view of the measures adopted to ensure fairness and competitiveness in the digital sector. This document is interesting to understand how the regulatory framework that directly affects open data ecosystems is taking shape.

At the national level, the ASEDIE sectoral report on the "Data Economy in its infomediary scope" 2025 provides quantitative evidence of the economic value generated by open data ecosystems in Spain.

The importance of open data in AI

It is clear that the intersection between open data and artificial intelligence is a reality that poses complex ethical and regulatory challenges that require collaborative and multi-sectoral responses. In this context, developing frameworks to guide the responsible use of AI becomes a strategic priority, especially when these technologies draw on public and private data ecosystems to generate social and economic value. Here are some reports that address this objective:

- Generative IA and Open Data: Guidelines and Best Practices: the U.S. Department of Commerce. The US government has published a guide with principles and best practices on how to apply generative artificial intelligence ethically and effectively in the context of open data. The document provides guidelines for optimising the quality and structure of open data in order to make it useful for these systems, including transparency and governance.

- Good Practice Guide for the Use of Ethical Artificial Intelligence: This guide demonstrates a comprehensive approach that combines strong ethical principles with clear and enforceable regulatory precepts.. In addition to the theoretical framework, the guide serves as a practical tool for implementing AI systems responsibly, considering both the potential benefits and the associated risks. Collaboration between public and private actors ensures that recommendations are both technically feasible and socially responsible.

- Enhancing Access to and Sharing of Data in the Age of AI: this analysis by the Organisation for Economic Co-operation and Development (OECD) addresses one of the main obstacles to the development of artificial intelligence: limited access to quality data and effective models. Through examples, it identifies specific strategies that governments can implement to significantly improve data access and sharing and certain AI models.

- A Blueprint to Unlock New Data Commons for AI: Open Data Policy Lab has produced a practical guide that focuses on the creation and management of data commons specifically designed to enable cases of public interest artificial intelligence use. The guide offers concrete methodologies on how to manage data in a way that facilitates the creation of these data commons, including aspects of governance, technical sustainability and alignment with public interest objectives.

- Practical guide to data-driven collaborations: the Data for Children Collaborative initiative has published a step-by-step guide to developing effective data collaborations, with a focus on social impact. It includes real-world examples, governance models and practical tools to foster sustainable partnerships.

In short, these reports define the path towards more mature, ethical and collaborative data systems. From growth figures for the Spanish infomediary sector to European regulatory frameworks to practical guidelines for responsible AI implementation, all these documents share a common vision: the future of open data depends on our ability to build bridges between the public and private sectors, between technological innovation and social responsibility.

On 16 May, Lanzarote became the epicentre of open culture and open data in Spain with the celebration of the IV Encuentro Nacional de Datos Abiertos (ENDA). Under the slogan "Data in the culture of open knowledge", this edition brought together more than a hundred experts, professionals and open data enthusiasts to reflect on how to boost the development and progress of our society through free access to information.

The event, held in the emblematic Jameos del Agua Auditorium, was organised by the Government of the Canary Islands, through the Directorate General for the Digital Transformation of Public Services, the Directorate General for Transparency and Citizen Participation, the Canary Islands Institute of Statistics and the Island Council of Lanzarote under the brand "Canarias Datos Abiertos".

The transformation to data-driven organisations

The day began with the inauguration by Antonio Llorens de la Cruz, Vice Councillor for Administrations and Transparency of the Government of the Canary Islands, and Miguel Ángel Jiménez Cabrera, Councillor of the Area of Presidency, Human Resources, New Technologies, Energy, Housing, Transport, Mobility and Accessibility of the Island Council of Lanzarote.

This was followed by a talk by Óscar Corcho García, Professor at the Polytechnic University of Madrid, who addressed the " Challenges in the transformation of an organisation to be data-centric, using knowledge graphs. The case of the European Railway Agency ". Corcho presented the case study of the European Railway Agency (ERA).

In his presentation, Corcho insisted that the transformation from a traditional to a data-driven organisation goes far beyond technology implementation. This transformation process requires strengthening the legal framework, harmonising processes, vocabularies and master data, establishing governance of the ontology model and creating a community of users to further enrich the model.

In this process, metadata, data catalogues and reference data are key elements. In addition, knowledge graphs are essential tools for connecting and integrating data from proprietary systems.

Open data for science in the service of public decisions

The first of the roundtables addressed how open data can serve science to improve public decisions. Participants highlighted the need to strengthen the data economy, move towards technological sovereignty and promote effective citizen participation.

Diego Ramiro Fariñas, Director of the Institute of Economics, Geography and Demography of the Spanish National Research Council (CSIC), highlighted:

- The importance of longitudinal data infrastructures, i.e. data that are collected over time for the same units.

- The value of linked data in breaking down information silos.

- The need to preserve statistical heritage.

- The project Es_Datalab, which allows cross-referencing data such as those of the Tax Agency with those of Health.

- The potential of synthetic data to reduce bias in AI applications.

Ramiro Fariñas also emphasised that the National Statistics Institute has transformed its entire statistical production towards data mining, and that leading institutes such as the Canary Islands and Andalusia are improving the publication of data to improve public policies. He pointed out two fundamental aspects: the need for greater interlocution between data producers and the training of administration staff to overcome the main barriers to putting science at the service of public decisions.

Izaskun Lacunza Aguirrebengoa, Director of the Spanish Foundation for Science and Technology (FECYT), stressed the importance of transforming the model of science, making it easier for scientific institutions to protect and share research information. He explained the concept of open science in contrast to some of the current practices, where knowledge generated with public funds ends up being controlled by private oligopolies that subsequently sell this processed information to the very institutions that generated it. Lacunza advocated public-public collaboration through initiatives such as the Office of Science and Technology in Congress.

Another participant in this round table was Tania Gullón Muñoz-Repiso, Coordinator of the Innovation and Geospatial Analysis Area of the Ministry of Transport and Sustainable Mobility, who shared how data is crucial for the management of emergencies such as the DANA. The Ministry's mobility data has hundreds of reusers, drives new businesses and enables predictive modelling. Gullón insisted that it is key that the data provided by citizens include an explanation of how it has been used, considering this feedback fundamental to give value to open science.

Open culture: removing barriers to knowledge

The round table "Open culture: how data brings us closer to knowledge" discussed how to remove barriers to access, study and transformation of knowledge so that it can be returned to society and its potential can be harnessed.

In this thematic block, Florencia Claes, Academic Director of Free Culture at the Office of Free Knowledge and Culture (OfiLibre) of the Universidad Rey Juan Carlos (URJC), defined open culture as the current that seeks access to knowledge without barriers and the possibility of being able to appropriate that knowledge, study it and share it again with society. He highlighted interesting ideas such as that publishing content on the internet does not automatically mean that it is open, as open content must meet certain standards and conditions that are not always met.

Claes explained the value of Open Educational Resources (OER) and how the URJC has a specific office to disseminate open culture, open science and open data. He pointed out that there is a deficiency in the training of university teaching staff on licensing and OER, considering this training as a key element to advance in the culture of openness.

In addition, he stressed that mass access to data facilitates its control, error detection and improvement. For this, initiatives such as Wikimedia or OpenStreetMap are very interesting, both projects accept voluntary participation and your contribution is essential to building and maintaining online open environments.

At the same table, Julio Cordal Elviro, Head of the Area of Library Projects and responsible for relations with Europeana at the Ministry of Culture, explained the evolution of Europeana from simple harvester to digital library, with projects based on semantic metadata, highlighting the challenges of standardisation and digital preservation of more than 60 million cultural works. He explained that the emergence of Google Books acted as a catalyst to "get the ball rolling" in this area.

Cordal also presented the Hispanaproject, which compiles information on digitised collections throughout Spain and federates with Europeana, and mentioned that they have begun to generate OER. He underlined how the use of technologies such as OCR (rOptical Character Recognition) and the online availability of funds makes it easier for researchers to save infinite time in their work. "When you make data open and free, you are opening up new opportunities," he concluded.

On the other hand, José Luis Bueren Gómez-Acebo, Technical Directorof the National Library of Spain (BNE), shared the digital transformation process of the institution, its commitment to open licences and the importance of the emotional component that drives citizen participation in cultural projects.

Bueren explained how the BNE continues its work of compiling and digitising all the bibliographic works produced in Spain, keeping connected with Wikidata and other international libraries in a standardised way. Through initiatives such as BNE Data, they offer a more practical and didactic vision of the information they publish.

He stressed the importance of citizens re-appropriating the cultural content, feeling that it is theirs, recalling that the BNE is indebted to the scientific community and to all citizens. Among the innovative projects they are promoting, he mentioned the automatic transcription of manuscripts. As challenges for the future, he pointed to sustainability, the management of intellectual property and the need for cultural institutions to be able to adapt to new trends.

Prioritisation of public data openness

As in each edition, ENDA presented a specific challenge. This year, Casey Abernethy, Technical Manager of the Asociación Multisectorial de la Información (ASEDIE), and José de León Rojas, Head of the Negociado de Modernización del Cabildo Insular de Lanzarote, presented a methodology and tool to help public administrations decide what datasets they should publish and in what order of priority, based on:

- Data sets recommended by the FEMP.

- Priority sets defined in the UNE Standard on Smart Cities and Open Data.

- High-value assemblies according to European standards.

- Sets requested by ASEDIE (Top 10 ASEDIE).

- Sets derived from transparency indices or regulations.

The proposed methodology considers three fundamental indices: organisational maturity, technical difficulty and strategic relevance. The 4th challenge in the context of the Encuentro was specifically aimed at choosing the key datasets to be published in a public administration according to its open data maturity. This methodology has been implemented in an operational tool that can be found on the Meetings website.

The power of free software and open communities

During the afternoon, the panel "Unlocking the potential of open data" highlighted how free software and open communities drive the use and exploitation of open data:

- Emilio López Cano, Professor at the Universidad Rey Juan Carlos and president of the Hispanic R Community, showed how the R community facilitates the use of open data through specific packages.

- Miguel Sevilla Callejo, Research Assistant at the Pyrenean Institute of Ecology of the CSIC and vice-president of the OpenStreetMap Spain association, presented OpenStreetMap as an invaluable source of open spatial data and highlighted its importance in emergency situations.

- Patricio del Boca, Technical Lead and member of the Open Knowledge Foundation (OKFN) CKAN technical team, explained the advantages of CKAN as an open source platform for implementing open data portals and presented the new Open Data Editor tool.

Open administrations at the service of citizens

The last round table addressed how administrations can bring data and its value closer to citizens:

- Ascensión Hidalgo Bellota, Deputy Director General for Transparency of Madrid City Council, presented "View Madrid with Open Data". Hidalgo stressed that the project has significantly reduced the number of citizen consultations thanks to its clarifying nature, thus demonstrating a double benefit: bringing data closer to the population and optimising the administration's resources.

- Carlos Alonso Peña, Director of the Design, Innovation and Exploitation Division at the Directorate General for Data, highlighted the cultural change that the Administration is undergoing, moving from data protection to responsible openness. He presented the Data Directorate General's initiatives to move beyond open data towards a single data market: the data spaces, where concrete solutions are being developed to demonstrate the business potential in this area. He also pointed to the growing importance of private data in the wake of the General Data Regulation and the obligations it establishes.

- Joseba Asiain Albisu, Director General of the Directorate General of the Presidency, Open Government and Relations with the Parliament of Navarre of the Government of Navarre, explained Navarre's strategy to improve data quality, centralise information and promote continuous evaluation. He commented on how the Government of Navarra seeks to balance quantity and quality in the publication of data, centralising data from the entire region and submitting metadata to external evaluation, with the collaboration of, among others, datos.gob.es.

The value of open data meetings

The IV ENDA has demonstrated, once again, the importance of these spaces for reflection and debate for:

- Sharing good practices and experiences between public administrations.

- Encourage collaboration between institutions, academia and the private sector.

- Promote the culture of open data as a tool for social innovation.

- Promote the development of skills in public administration staff.

- Improving public policies through information sharing.

After four consecutive editions, the National Open Data Meeting has established itself as a must-attend event for all the people and entities involved in the open data ecosystem in Spain. This event contributes significantly to building a more informed, participatory and transparent society.

ENDA will continue in 2026 with its fifth edition, committed to continue promoting the culture of open data as a driver of economic and social development in our country. The organisation has already announced that the next edition of the event will be held in Navarre in 2026.. Follow us on social media to keep up to date with events on open data and related technologies. You can read us on Twitter (X), LinkedIn and Instagram.

Open knowledge is knowledge that can be reused, shared and improved by other users and researchers without noticeable restrictions. This includes data, academic publications, software and other available resources. To explore this topic in more depth, we have representatives from two institutions whose aim is to promote scientific production and make it available in open access for reuse:

- Mireia Alcalá Ponce de León, Information Resources Technician of the Learning, Research and Open Science Area of the Consortium of University Services of Catalonia (CSUC).

- Juan Corrales Corrillero, Manager of the data repository of the Madroño Consortium.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. Can you briefly explain what the institutions you work for do?

Mireia Alcalá: The CSUC is the Consortium of University Services of Catalonia and is an organisation that aims to help universities and research centres located in Catalonia to improve their efficiency through collaborative projects. We are talking about some 12 universities and almost 50 research centres.

We offer services in many areas: scientific computing, e-government, repositories, cloud administration, etc. and we also offer library and open science services, which is what we are closest to. In the area of learning, research and open science, which is where I am working, what we do is try to facilitate the adoption of new methodologies by the university and research system, especially in open science, and we give support to data management research.

Juan Corrales: The Consorcio Madroño is a consortium of university libraries of the Community of Madrid and the UNED (National University of Distance Education) for library cooperation.. We seek to increase the scientific output of the universities that are part of the consortium and also to increase collaboration between the libraries in other areas. We are also, like CSUC, very involved in open science: in promoting open science, in providing infrastructures that facilitate it, not only for the members of the Madroño Consortium, but also globally. Apart from that, we also provide other library services and create structures for them.

2. What are the requirements for an investigation to be considered open?

Juan Corrales: For research to be considered open there are many definitions, but perhaps one of the most important is given by the National Open Science Strategy, which has six pillars.

One of them is that it is necessary to put in open access both research data and publications, protocols, methodologies.... In other words, everything must be accessible and, in principle, without barriers for everyone, not only for scientists, not only for universities that can pay for access to these research data or publications. It is also important to use open source platforms that we can customise. Open source is software that anyone, in principle with knowledge, can modify, customise and redistribute, in contrast to the proprietary software of many companies, which does not allow all these things. Another important point, although this is still far from being achieved in most institutions, is allowing open peer review, because it allows us to know who has done a review, with what comments, etc. It can be said that it allows the peer review cycle to be redone and improved. A final point is citizen science: allowing ordinary citizens to be part of science, not only within universities or research institutes.

And another important point is adding new ways of measuring the quality of science.

Mireia Alcalá: I agree with what Juan says. I would also like to add that, for an investigation process to be considered open, we have to look at it globally. That is, include the entire data lifecycle. We cannot talk about a science being open if we only look at whether the data at the end is open. Already at the beginning of the whole data lifecycle, it is important to use platforms and work in a more open and collaborative way.

3. Why is it important for universities and research centres to make their studies and data available to the public?

Mireia Alcalá: I think it is key that universities and centres share their studies, because a large part of research, both here in Spain and at European and world level, is funded with public money. Therefore, if society is paying for the research, it is only logical that it should also benefit from its results. In addition, opening up the research process can help make it more transparent, more accountable, etc. Much of the research done to date has been found to be neither reusable nor reproducible. What does this mean? That the studies that have been done, almost 80% of the time someone else can't take it and reuse that data. Why? Because they don't follow the same standards, the same mannersand so on. So, I think we have to make it extensive everywhere and a clear example is in times of pandemics. With COVID-19, researchers from all over the world worked together, sharing data and findings in real time, working in the same way, and science was seen to be much faster and more efficient.

Juan Corrales: The key points have already been touched upon by Mireia. Besides, it could be added that bringing science closer to society can make all citizens feel that science is something that belongs to us, not just to scientists or academics. It is something we can participate in and this can also help to perhaps stop hoaxes, fake news, to have a more exhaustive vision of the news that reaches us through social networks and to be able to filter out what may be real and what may be false.

4.What research should be published openly?

Juan Corrales: Right now, according to the law we have in Spain, the latest Law of science, all publications that are mainly financed by public funds or in which public institutions participatemust be published in open access. This has not really had much repercussion until last year, because, although the law came out two years ago, the previous law also said so, there is also a law of the Community of Madrid that says the same thing.... but since last year it is being taken into account in the evaluation that the ANECA (the Quality Evaluation Agency) does on researchers.. Since then, almost all researchers have made it a priority to publish their data and research openly. Above all, data was something that had not been done until now.

Mireia Alcalá: At the state level it is as Juan says. We at the regional level also have a law from 2022, the Law of science, which basically says exactly the same as the Spanish law. But I also like people to know that we have to take into account not only the state legislation, but also the calls for proposals from where the money to fund the projects comes from. Basically in Europe, in framework programmes such as Horizon Europe, it is clearly stated that, if you receive funding from the European Commission, you will have to make a data management plan at the beginning of your research and publish the data following the FAIR principles.

5. Among other issues, both CSUC and Consorcio Madroño are in charge of supporting entities and researchers who want to make their data available to the public. How should a process of opening research data be? What are the most common challenges and how do you solve them?

Mireia Alcalá: In our repository, which is called RDR (from Repositori de Dades de Recerca), it is basically the participating institutions that are in charge of supporting the research staff.. The researcher arrives at the repository when he/she is already in the final phase of the research and needs to publish the data yesterday, and then everything is much more complex and time consuming. It takes longer to verify this data and make it findable, accessible, interoperable and reusable.

In our particular case, we have a checklist that we require every dataset to comply with to ensure this minimum data quality, so that it can be reused. We are talking about having persistent identifiers such as ORCID for the researcher or ROR to identify the institutions, having documentation explaining how to reuse that data, having a licence, and so on. Because we have this checklist, researchers, as they deposit, improve their processes and start to work and improve the quality of the data from the beginning. It is a slow process. The main challenge, I think, is that the researcher assumes that what he has is data, because most of them don't know it. Most researchers think of data as numbers from a machine that measures air quality, and are unaware that data can be a photograph, a film from an archaeological excavation, a sound captured in a certain atmosphere, and so on. Therefore, the main challenge is for everyone to understand what data is and that their data can be valuable to others.

And how do we solve it? Trying to do a lot of training, a lot of awareness raising. In recent years, the Consortium has worked to train data curation staff, who are dedicated to helping researchers directly refine this data. We are also starting to raise awareness directly with researchers so that they use the tools and understand this new paradigm of data management.

Juan Corrales: In the Madroño Consortium, until November, the only way to open data was for researchers to pass a form with the data and its metadata to the librarians, and it was the librarians who uploaded it to ensure that it was FAIR. Since November, we also allow researchers to upload data directly to the repository, but it is not published until it has been reviewed by expert librarians, who verify that the data and metadata are of high quality. It is very important that the data is well described so that it can be easily found, reusable and identifiable.

As for the challenges, there are all those mentioned by Mireia - that researchers often do not know they have data - and also, although ANECA has helped a lot with the new obligations to publish research data, many researchers want to put their data running in the repositories, without taking into account that they have to be quality data, that it is not enough to put them there, but that it is important that these data can be reused later.

6. What activities and tools do you or similar institutions provide to help organisations succeed in this task?

Juan Corrales: From Consorcio Madroño, the repository itself that we use, the tool where the research data is uploaded, makes it easy to make the data FAIR, because it already provides unique identifiers, fairly comprehensive metadata templates that can be customised, and so on. We also have another tool that helps create the data management plans for researchers, so that before they create their research data, they start planning how they're going to work with it. This is very important and has been promoted by European institutions for a long time, as well as by the Science Act and the National Open Science Strategy.

Then, more than the tools, the review by expert librarians is also very important. There are other tools that help assess the quality of adataset, of research data, such as Fair EVA or F-Uji, but what we have found is that those tools at the end what they are evaluating more is the quality of the repository, of the software that is being used, and of the requirements that you are asking the researchers to upload this metadata, because all our datasets have a pretty high and quite similar evaluation. So what those tools do help us with is to improve both the requirements that we're putting on our datasets, on our datasets, and to be able to improve the tools that we have, in this case the Dataverse software, which is the one we are using.

Mireia Alcalá: At the level of tools and activities we are on a par, because we have had a relationship with the Madroño Consortium for years, and just like them we have all these tools that help and facilitate putting the data in the best possible way right from the start, for example, with the tool for making data management plans. Here at CSUC we have also been working very intensively in recent years to close this gap in the data life cycle, covering issues of infrastructures, storage, cloud, etc. so that, when the data is analysed and managed, researchers also have a place to go. After the repository, we move on to all the channels and portals that make it possible to disseminate and make all this science visible, because it doesn't make sense for us to make repositories and they are there in a silo, but they have to be interconnected. For many years now, a lot of work has been done on making interoperability protocols and following the same standards. Therefore, data has to be available elsewhere, and both Consorcio Madroño and we are everywhere possible and more.

7. Can you tell us a bit more about these repositories you offer? In addition to helping researchers to make their data available to the public, you also offer a space, a digital repository where this data can be housed, so that it can be located by users.