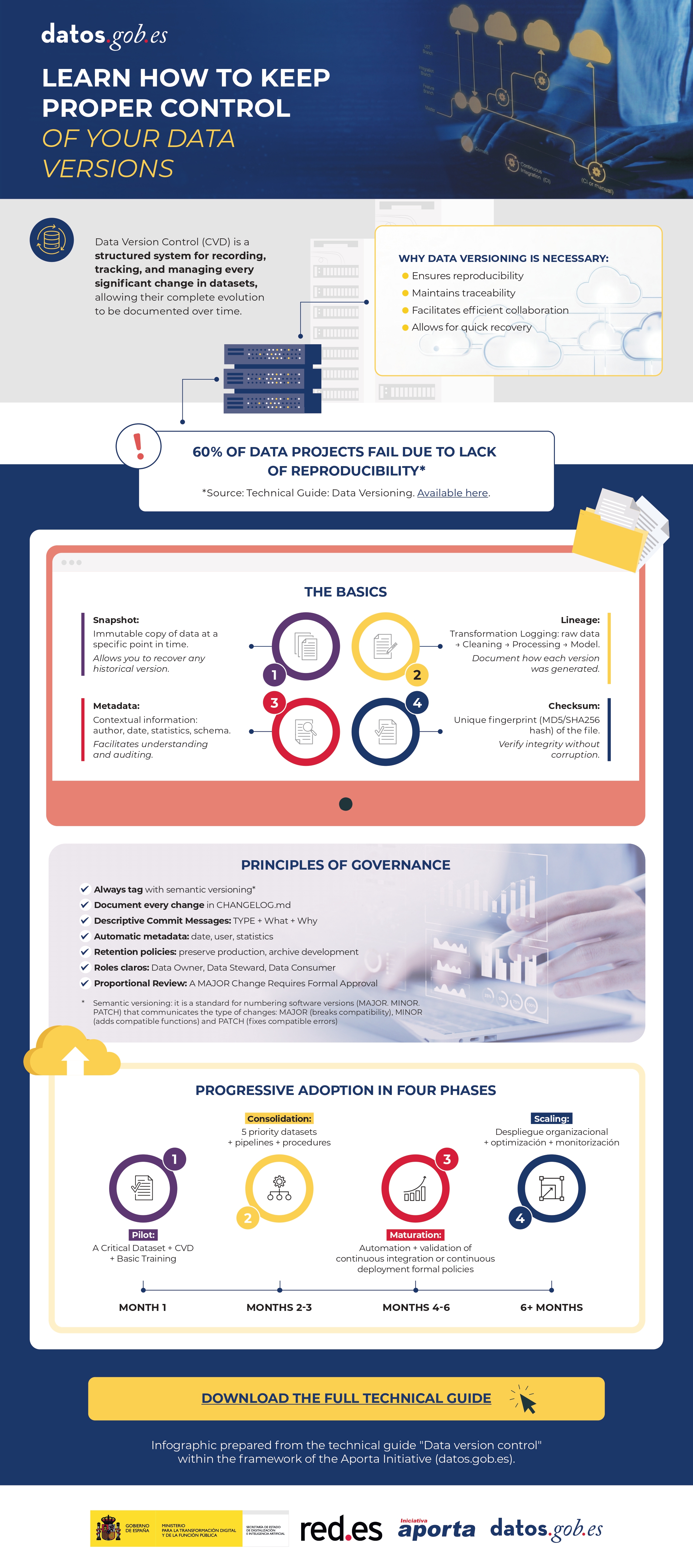

Data is fluid and complex: it changes, grows, and evolves constantly, with a volatility that sets it apart from source code. To address the challenge of managing this evolution reliably, we have developed the new 'Technical Guide: Data Version Control'.

This guide addresses an emerging discipline that adapts software engineering principles to the data ecosystem: Data Version Control (DVC). The document not only explores the theoretical foundations but also offers a practical approach to solving critical data management problems, such as the reproducibility of machine learning models, traceability in regulatory audits, and efficient collaboration in distributed teams.

Why is a guide on data versioning necessary?

Historically, data has been versioned manually, with files carrying suffixes like "_final_v2.csv", an error-prone approach that is unsustainable in professional environments. While tools like Git have revolutionized software development, they are not designed to handle large or binary files efficiently, and datasets typically consist of exactly such files.

This guide was created to bridge that technological and methodological gap, explaining the fundamental differences between code versioning and data versioning. The document details how specialized tools like DVC (Data Version Control) allow you to manage the data lifecycle with the same rigor as code, ensuring that you can always answer the question: "What exact data was used to obtain this result?"
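To make the question "What exact data was used?" concrete, here is a minimal sketch of the checksum idea that data versioning relies on: a dataset is identified by a hash of its content rather than by its file name. The function name `file_checksum`, the sample file `dataset.csv`, and the choice of SHA-256 are assumptions made for illustration; they are not taken from the guide, and the hash a given tool uses may differ.

```python
import hashlib
import tempfile
from pathlib import Path


def file_checksum(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the SHA-256 hex digest of a file, reading it in chunks
    so that large datasets do not have to fit in memory."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def demo() -> tuple[str, str, str]:
    """Hash a hypothetical dataset before and after a one-cell change."""
    with tempfile.TemporaryDirectory() as tmp:
        data = Path(tmp) / "dataset.csv"  # hypothetical file name
        data.write_text("id,value\n1,10\n2,20\n")
        original = file_checksum(data)
        unchanged = file_checksum(data)  # same content, same digest
        data.write_text("id,value\n1,10\n2,21\n")  # a single value changes
        modified = file_checksum(data)
    return original, unchanged, modified


if __name__ == "__main__":
    original, unchanged, modified = demo()
    print("same content, same checksum:", original == unchanged)
    print("changed content, new checksum:", original != modified)
```

Recording such a digest alongside a result is what lets a team later confirm whether the data on disk is the data that produced it, regardless of how the file has been renamed.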

Structure and contents

The document takes a progressive approach, moving from basic concepts to technical implementation, and is organized into the following key blocks:

- Version Control Fundamentals: Analysis of the current problem (the "phantom model", impossible audits) and definition of key concepts such as Snapshots, Data Lineage and Checksums.

- Strategies and Methodologies: Adaptation of semantic versioning (SemVer) to datasets, storage strategies (incremental vs. full) and metadata management to ensure traceability.

- Tools in practice: A detailed analysis of tools such as DVC, Git LFS and cloud-native solutions (AWS, Google Cloud, Azure), including a comparison to choose the most suitable one according to the size of the team and the data.

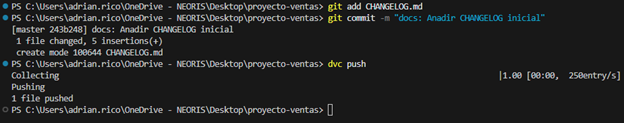

- Practical case study: A step-by-step tutorial on how to set up a local environment with DVC and Git, simulating a real data lifecycle: from generation and initial versioning, to updating, remote synchronization, and rollback.

- Governance and best practices: Recommendations on roles, retention policies and compliance to ensure successful implementation in the organization.

Figure 1: Practical example of using GIT and DVC commands included in the guide.
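The lifecycle covered by the case study (initial versioning, updating, rollback) can be illustrated without any tooling by a toy content-addressed store. This is only a conceptual sketch: it is not how DVC is implemented and it does not reproduce the guide's tutorial. The class `SnapshotStore` and its methods `commit`, `checkout` and `history` are hypothetical names introduced here.

```python
import hashlib


class SnapshotStore:
    """Toy in-memory store: each snapshot is kept under the hash of its content."""

    def __init__(self) -> None:
        self._objects: dict[str, bytes] = {}  # digest -> dataset bytes
        self._history: list[str] = []         # digests in commit order

    def commit(self, content: bytes) -> str:
        """Store a snapshot and return the digest that identifies it."""
        digest = hashlib.sha256(content).hexdigest()
        self._objects.setdefault(digest, content)  # identical content is stored once
        self._history.append(digest)
        return digest

    def checkout(self, digest: str) -> bytes:
        """Return the exact bytes of a previously committed snapshot."""
        return self._objects[digest]

    def history(self) -> list[str]:
        """Return the digests of all commits, oldest first."""
        return list(self._history)


store = SnapshotStore()
v1 = store.commit(b"id,value\n1,10\n")         # initial version
v2 = store.commit(b"id,value\n1,10\n2,20\n")   # updated dataset
rolled_back = store.checkout(v1)               # rollback to the first version
```

Because a version is addressed by its content hash, rolling back means asking for an earlier digest; nothing is overwritten, so every past state remains recoverable.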

Who is it aimed at?

This guide is designed for a broad range of technical profiles in the public and private sectors: data scientists, data engineers, analysts and data catalog managers.

It is especially useful for professionals looking to streamline their workflows, ensure the scientific reproducibility of their research, or meet compliance requirements in regulated sectors. While basic knowledge of Git and the command line is recommended, the guide includes practical examples and detailed explanations to facilitate learning.