For more than a decade, open data platforms have measured their impact through relatively stable indicators: number of downloads, web visits, documented reuses, applications or services created based on them, etc. These indicators worked well in an ecosystem where users – companies, journalists, developers, anonymous citizens, etc. – directly accessed the original sources to query, download and process the data.

However, the landscape has changed radically. The emergence of generative artificial intelligence models has transformed the way people access information. These systems generate answers without the user needing to visit the original source, which is contributing to a global drop in web traffic to media outlets, blogs and knowledge portals.

In this new context, measuring the impact of an open data platform requires rethinking traditional indicators and complementing them with new metrics that capture the visibility and influence of data in an ecosystem where human interaction is changing.

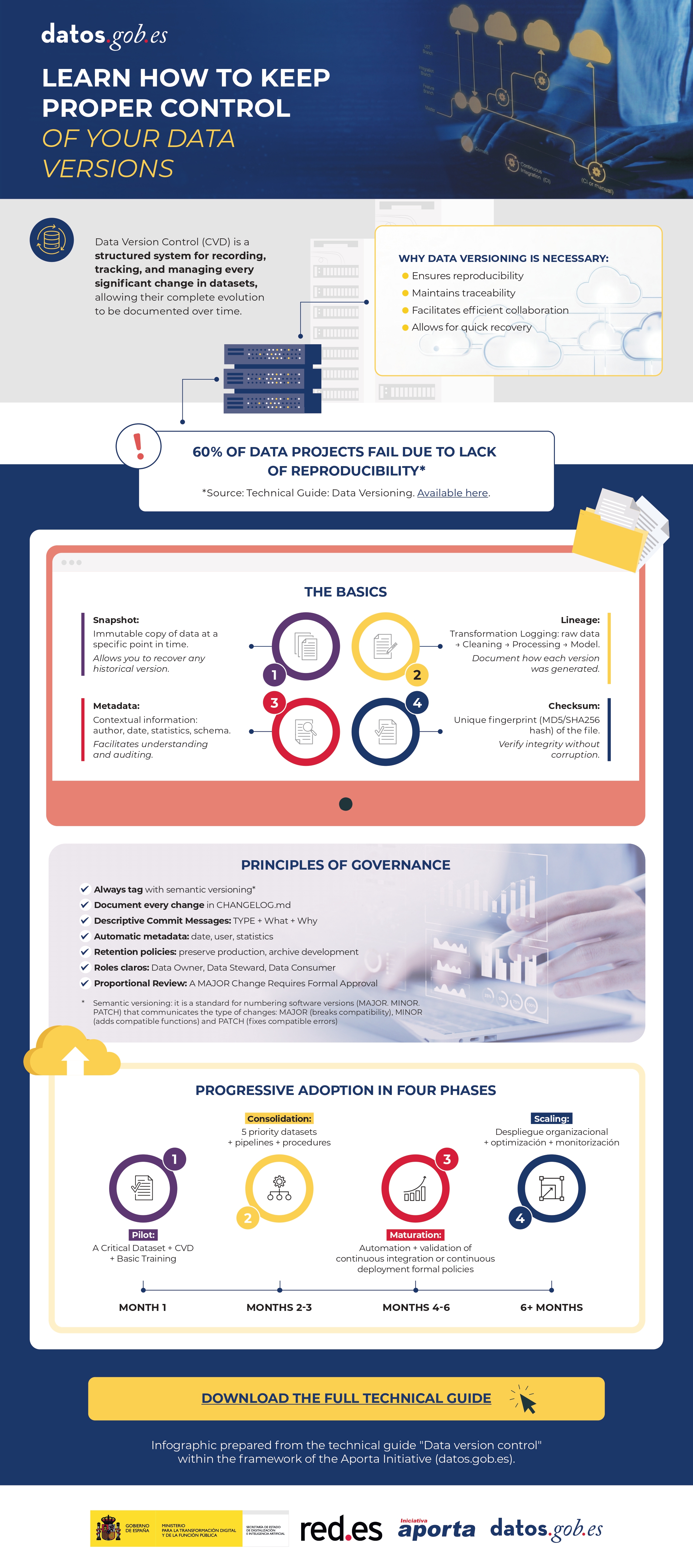

Figure 1. Metrics for measuring the impact of open data in the age of AI.

A structural change: from click to indirect consultation

The web ecosystem is undergoing a profound transformation driven by the rise of large language models (LLMs). More and more people are asking their questions directly to systems such as ChatGPT, Copilot, Gemini or Perplexity, obtaining immediate and contextualized answers without the need to resort to a traditional search engine.

At the same time, those who still use search engines such as Google or Bing are also seeing significant changes as artificial intelligence is integrated into these platforms. Google, for example, has incorporated features such as AI Overviews, which displays automatically generated summaries at the top of the results, and AI Mode, a conversational interface that lets users drill down into a query without browsing links. This gives rise to a phenomenon known as Zero-Click: the user performs a search on an engine such as Google and gets the answer directly on the results page. As a result, there is no need to click on any external link, which limits visits to the original sources from which the information is extracted.

All this implies a key consequence: web traffic is no longer a reliable indicator of impact. A website can be extremely influential in generating knowledge without this translating into visits.

New metrics to measure impact

Faced with this situation, open data platforms need new metrics that capture their presence in this new ecosystem. Some of them are listed below.


Share of Model (SOM): Presence in AI models

Inspired by digital marketing metrics, the Share of Model measures how often AI models mention, cite, or use data from a particular source. In this way, the SOM helps to see which specific data sets (employment, climate, transport, budgets, etc.) are used by the models to answer real questions from users, revealing which data has the greatest impact.

This metric is especially valuable because it acts as an indicator of algorithmic trust: when a model mentions a web page, it is recognizing its reliability as a source. In addition, it helps to increase indirect visibility, since the name of the website appears in the response even when the user does not click.
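As a rough illustration of how SOM could be computed, the sketch below assumes you already have a sample of AI-generated answers and a list of sources to track. Such a sample could be collected, for example, by periodically running a fixed set of prompts against several assistants. The code counts at most one mention per answer and reports each source's share of all tracked mentions, by analogy with share of voice. The function name, the sample answers and the plain substring matching are illustrative assumptions. Commercial tools may define SOM differently, for instance as the fraction of answers that mention a source at all.

```python
from collections import Counter


def share_of_model(answers, sources):
    """Each tracked source's share of all tracked mentions in `answers`.

    answers: AI-generated answer texts (hypothetical sample).
    sources: names or domains to track, e.g. your portal and comparable ones.
    """
    mentions = Counter()
    for answer in answers:
        text = answer.lower()
        for source in sources:
            # Count at most one mention per answer so repetition within a
            # single answer does not inflate a source's share.
            if source.lower() in text:
                mentions[source] += 1
    total = sum(mentions.values())
    if total == 0:
        return {source: 0.0 for source in sources}
    return {source: mentions[source] / total for source in sources}


answers = [
    "According to datos.gob.es, unemployment fell last quarter.",
    "Both Eurostat and datos.gob.es publish this series.",
    "Eurostat reports a slight increase.",
    "No source was cited for this figure.",
]
print(share_of_model(answers, ["datos.gob.es", "Eurostat"]))
# {'datos.gob.es': 0.5, 'Eurostat': 0.5}
```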


Sentiment analysis: tone of mentions in AI

Sentiment analysis goes a step beyond the Share of Model: it identifies not only whether an AI model mentions a brand or domain, but also how it does so. Typically, this metric classifies the tone of the mention into three main categories: positive, neutral and negative.

Applied to open data, this analysis helps to understand the algorithmic perception of a platform or dataset. For example, it can detect whether a model uses a source as an example of good practice, mentions it neutrally as part of an informative response, or associates it with problems, errors or outdated data.

This information can be useful to identify opportunities for improvement, strengthen digital reputation, or detect potential biases in AI models that affect the visibility of an open data platform.
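A toy version of this classification is sketched below. It assumes the tone of a mention can be approximated from a small, hypothetical list of cue words found in the sentences that name the source. Real tools would more likely rely on a trained sentiment model or on an LLM used as a classifier. The point is the shape of the pipeline: isolate the mentions, then label each one positive, neutral or negative.

```python
import re

# Illustrative cue lists (hypothetical). A production system would more
# likely use a trained sentiment model or an LLM acting as a classifier.
POSITIVE_CUES = ["reliable", "authoritative", "good practice", "recommended", "up-to-date"]
NEGATIVE_CUES = ["outdated", "error", "errors", "incomplete", "unreliable"]


def count_cues(text, cues):
    """Count cues that appear as whole words or phrases in the text."""
    return sum(1 for cue in cues if re.search(r"\b" + re.escape(cue) + r"\b", text))


def classify_tone(sentence):
    text = sentence.lower()
    score = count_cues(text, POSITIVE_CUES) - count_cues(text, NEGATIVE_CUES)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


def mention_tones(answer, source):
    """Classify only the sentences of an AI answer that mention `source`."""
    # Split after . ! or ? followed by whitespace, so "datos.gob.es" stays whole.
    sentences = re.split(r"(?<=[.!?])\s+", answer)
    return [classify_tone(s) for s in sentences if source.lower() in s.lower()]
```

Working at sentence level keeps the tone of unrelated parts of the answer from leaking into the label for the source.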


Categorization of prompts: in which topics a brand stands out

Analyzing the questions that users ask makes it possible to identify the types of queries in which a brand appears most frequently. This metric helps to understand in which thematic areas (such as economy, health, transport, education or climate) the models consider a source most relevant.

For open data platforms, this information reveals which datasets are being used to answer real user questions and in which domains there is greater visibility or growth potential. It also allows you to spot opportunities: if an open data initiative wants to position itself in new areas, it can assess what kind of content is missing or what datasets could be strengthened to increase its presence in those categories.
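The sketch below illustrates the idea under simple assumptions. Prompts are assigned to a topic with a hypothetical keyword map, and for each topic the code reports the share of AI answers that mention the source. In practice the categories might come from a classifier or from manual labelling. The topics, keywords and function names here are illustrative only.

```python
import re
from collections import defaultdict

# Hypothetical keyword map; categories could also come from a classifier
# or from manually labelling a sample of prompts.
TOPIC_KEYWORDS = {
    "economy": {"unemployment", "gdp", "inflation", "budget", "budgets"},
    "transport": {"bus", "train", "traffic", "mobility"},
    "climate": {"temperature", "rainfall", "emissions", "climate"},
}


def categorize(prompt):
    # Match whole words so that, e.g., "business" is not read as "bus".
    words = set(re.findall(r"[a-z]+", prompt.lower()))
    for topic, keywords in TOPIC_KEYWORDS.items():
        if words & keywords:
            return topic
    return "other"


def mention_rate_by_topic(records, source):
    """records: (prompt, answer) pairs. Returns, for each topic, the share
    of answers that mention `source`."""
    total = defaultdict(int)
    mentioned = defaultdict(int)
    for prompt, answer in records:
        topic = categorize(prompt)
        total[topic] += 1
        if source.lower() in answer.lower():
            mentioned[topic] += 1
    return {topic: mentioned[topic] / total[topic] for topic in total}
```

Topics with many prompts but a low mention rate are the "growth potential" areas the paragraph above describes.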


Traffic from AI: clicks from generated summaries

Many models already include links to the original sources. While many users don't click on such links, some do. Therefore, platforms can start measuring:

- Visits from AI platforms (when these include links).

- Clicks from rich summaries in AI-integrated search engines.

This means a change in the distribution of traffic that reaches websites from the different channels. While organic traffic—traffic from traditional search engines—is declining, traffic referred from language models is starting to grow.
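One way to start separating these channels is to classify visits by their HTTP referrer. The sketch below is a minimal illustration. It assumes that AI assistants appear in your logs under the hostnames listed (chatgpt.com, perplexity.ai, gemini.google.com, copilot.microsoft.com and so on). These are assumptions to check against your own analytics data, since hostnames change and some apps send no referrer at all.

```python
from collections import Counter
from urllib.parse import urlparse

# Assumed referrer domains; verify them against your own analytics data.
AI_DOMAINS = {"chatgpt.com", "chat.openai.com", "perplexity.ai",
              "gemini.google.com", "copilot.microsoft.com"}
SEARCH_DOMAINS = {"google.com", "google.es", "bing.com"}


def matches(host, domains):
    """True if host equals one of the domains or is a subdomain of it."""
    return any(host == d or host.endswith("." + d) for d in domains)


def classify_referrer(referrer):
    if not referrer:
        return "direct"
    host = urlparse(referrer).hostname or ""
    # Check AI domains first: gemini.google.com would otherwise match google.com.
    if matches(host, AI_DOMAINS):
        return "ai"
    if matches(host, SEARCH_DOMAINS):
        return "search"
    return "referral"


def traffic_by_channel(referrers):
    return Counter(classify_referrer(r) for r in referrers)
```

Clicks from AI Overviews or AI Mode may arrive with an ordinary Google referrer, so this simple approach can count them as search traffic. Separating them may require the search engine's own reporting tools.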

This traffic is likely to be smaller in volume than traditional traffic, but more qualified, since those who click through from an AI tool usually have a clear intention to dig deeper.

It is important that these aspects are taken into account when setting growth objectives on an open data platform.


Algorithmic Reuse: Using Data in Models and Applications

Open data powers AI models, predictive systems, and automated applications. Knowing which sources have been used for their training would also be a way to know their impact. However, few solutions directly provide this information. The European Union is working to promote transparency in this field, with measures such as the template for documenting training data for general-purpose models, but its implementation – and the existence of exceptions to its compliance – mean that knowledge is still limited.

Measuring the increase in access to data through APIs could give an idea of its use in applications to power intelligent systems. However, the greatest potential in this field lies in collaboration with companies, universities and developers immersed in these projects, so that they offer a more realistic view of the impact.
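As a minimal sketch of that kind of measurement, the code below assumes an API access log reduced to (date, client identifier) pairs. It counts calls and distinct clients per month and computes month-over-month growth in calls. The log format and function names are hypothetical. Distinct clients (for example, API keys) serve here as a rough proxy for the number of applications consuming the data.

```python
from collections import defaultdict
from datetime import date


def monthly_usage(log):
    """log: iterable of (date, client_id) pairs, one per API call.
    Returns {(year, month): (calls, distinct_clients)} in chronological order."""
    calls = defaultdict(int)
    clients = defaultdict(set)
    for day, client_id in log:
        key = (day.year, day.month)
        calls[key] += 1
        clients[key].add(client_id)
    return {key: (calls[key], len(clients[key])) for key in sorted(calls)}


def call_growth(usage):
    """Relative change in calls between consecutive months present in `usage`
    (months with no calls at all are not filled in)."""
    months = list(usage)
    return {
        cur: (usage[cur][0] - usage[prev][0]) / usage[prev][0]
        for prev, cur in zip(months, months[1:])
    }


# Example with a hypothetical log
log = [
    (date(2025, 1, 5), "app-a"),
    (date(2025, 1, 9), "app-b"),
    (date(2025, 2, 1), "app-a"),
    (date(2025, 2, 3), "app-a"),
    (date(2025, 2, 7), "app-c"),
]
usage = monthly_usage(log)
print(usage)               # {(2025, 1): (2, 2), (2025, 2): (3, 2)}
print(call_growth(usage))  # {(2025, 2): 0.5}
```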

Conclusion: Measure what matters, not just what's easy to measure

A drop in web traffic doesn't mean a drop in impact. It means a change in the way information circulates. Open data platforms must evolve towards metrics that reflect algorithmic visibility, automated reuse, and integration into AI models.

This doesn't mean that traditional metrics should disappear. Knowing the number of visits to the website and which datasets are most visited or downloaded remains invaluable for understanding the impact of the data provided through open platforms. It is also essential to monitor how data is used to generate or enrich products and services, including artificial intelligence systems. In the age of AI, success is no longer measured only by how many users visit a platform, but also by how many intelligent systems depend on its information and the visibility that this provides.

Therefore, integrating these new metrics alongside traditional indicators through a web analytics and SEO strategy* allows for a more complete view of the real impact of open data. This way we will be able to know how our information circulates, how it is reused and what role it plays in the digital ecosystem that shapes society today.

*SEO (Search Engine Optimization) is the set of techniques and strategies aimed at improving the visibility of a website in search engines.

Access to data through APIs has become one of the key pieces of today's digital ecosystem. Public administrations, international organizations and private companies publish information so that third parties can reuse it in applications, analyses or artificial intelligence projects. In this situation, talking about open data is, almost inevitably, also talking about APIs.

However, access to an API is rarely completely free and unlimited. There are restrictions, controls and protection mechanisms that seek to balance two objectives that, at first glance, may seem opposed: facilitating access to data and guaranteeing the stability, security and sustainability of the service. These limitations raise recurring questions: are they really necessary? Do they go against the spirit of open data? To what extent can they be applied without closing off access?

This article discusses how these constraints are managed, why they are necessary and how, contrary to what is sometimes thought, they fit within a coherent open data strategy.

Why you need to limit access to an API

An API is not simply a "faucet" of data. Behind it there is usually technological infrastructure, servers, update processes, operational costs and teams responsible for keeping the service working properly.

When a data service is exposed without any control, well-known problems appear:

- System saturation, caused by an excessive number of simultaneous queries.

- Abusive use, intentional or unintentional, that degrades the service for other users.

- Uncontrolled costs, especially when the infrastructure is deployed in the cloud.

- Security risks, such as automated attacks or mass scraping.

In many cases, the absence of limits does not lead to more openness, but to a progressive deterioration of the service itself.

For this reason, limiting access is not usually an ideological decision, but a practical necessity to ensure that the service is stable, predictable and fair for all users.

The API Key: basic but effective control

The most common mechanism for managing access is the API Key. While some catalogs, such as the datos.gob.es National Open Data Catalog API, require no key to access published information, others require a unique key that identifies each user or application and is included in each API call.

Although from the outside it may seem like a simple formality, the API Key fulfills several important functions. It allows you to identify who consumes the data, measure the actual use of the service, apply reasonable limits and act on problematic behavior without affecting other users.
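These functions can be illustrated with a small in-memory sketch. It issues a key, identifies the caller on each request, counts its calls and revokes a single key without affecting anyone else. The class and method names are hypothetical. A real service would persist hashed keys in a database and typically sit behind an API gateway, rather than keep plaintext keys in a dictionary.

```python
import secrets
from collections import Counter


class ApiKeyRegistry:
    """In-memory sketch of API key handling: issue, identify, meter, revoke."""

    def __init__(self):
        self._owners = {}       # key -> application or user it was issued to
        self._revoked = set()
        self.usage = Counter()  # key -> number of accepted calls

    def issue(self, owner):
        key = secrets.token_urlsafe(16)  # random, hard-to-guess key
        self._owners[key] = owner
        return key

    def revoke(self, key):
        """Act on one problematic consumer without affecting the others."""
        self._revoked.add(key)

    def authorize(self, key):
        """Return the owner of a valid key and count the call; else None."""
        if key not in self._owners or key in self._revoked:
            return None
        self.usage[key] += 1
        return self._owners[key]


registry = ApiKeyRegistry()
key = registry.issue("weather-dashboard")
print(registry.authorize(key))  # weather-dashboard
```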

In the Spanish context there are clear examples of open data platforms that work in this way. The State Meteorological Agency (AEMET), for example, offers open access to high-value meteorological data, but requires users to request a free API Key for automated queries. Access is free of charge, but not anonymous or uncontrolled.

So far, the approach is relatively familiar: consumer identification and basic limits of use. However, in many situations this is no longer enough.

When an API becomes a strategic asset

Leading API management platforms, such as MuleSoft or Kong, among others, were pioneers in implementing advanced mechanisms for controlling and protecting access to APIs. Their initial focus was on complex business environments, where multiple applications, organizations and countries consume data services intensively and continuously.

Over time, many of these practices have also been extended to open data platforms. As certain open data services gain relevance and become key dependencies for applications, research or business models, the challenges associated with their availability and stability become similar. The outage or degradation of large-scale open data services, such as those related to Earth observation, climate or science, can have a significant impact on the many systems that depend on them.

In this sense, advanced access management is no longer a purely technical issue; it becomes part of the very sustainability of a service that has become strategic. What matters is not so much who publishes the data as the role that data plays within a broader ecosystem of reuse. For this reason, many open data platforms are progressively adopting mechanisms already tested in other areas, adapting them to their principles of openness and public access. Some of them are detailed below.

Limiting the flow: regulating the pace, not the right of access

One of the first additional layers is limiting the rate of use, usually known as rate limiting. Instead of allowing an unlimited number of calls, it defines how many requests can be made in a given time interval.

The key here is not to prevent access, but to regulate its pace. Users can still access the data, but no single application can monopolize resources. This approach is common in weather, mobility and public statistics APIs, where many users access the service simultaneously.
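The article does not prescribe an algorithm, but one common way to implement rate limiting is a token bucket. Each client has a bucket that refills at a steady rate, and every request spends one token, so short bursts are tolerated while the sustained pace is capped. The sketch below assumes one bucket per API key. The class name, parameters and in-memory storage are illustrative choices, and the clock is injectable so the behaviour can be tested.

```python
import time


class TokenBucket:
    """Allows bursts of up to `capacity` requests and a sustained
    rate of `rate` requests per second."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller would typically answer HTTP 429 Too Many Requests


buckets = {}  # one bucket per API key (in memory, for illustration only)


def allow_request(api_key, rate=5, capacity=10):
    if api_key not in buckets:
        buckets[api_key] = TokenBucket(rate, capacity)
    return buckets[api_key].allow()
```

Because each key has its own bucket, a client that exhausts its tokens is slowed down without affecting anyone else's access.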

More advanced platforms go a step further and apply dynamic limits, which are adjusted based on system load, time of day, or historical consumer behavior. The result is fairer and more flexible control.
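A dynamic limit can be as simple as scaling the per-client limit with current system load. The sketch below assumes load is reported as a utilization value between 0 and 1. It uses a hypothetical linear policy: the full limit applies up to 50% load, then shrinks towards a floor of 25% of the base limit. Time of day or historical behaviour could feed into the same function in a similar way. The thresholds here are placeholders, not recommended values.

```python
def dynamic_limit(base_limit, system_load, min_fraction=0.25):
    """Scale a per-client request limit down as system load rises.

    system_load: utilization between 0.0 (idle) and 1.0 (saturated);
    values outside that range are clamped.
    """
    load = max(0.0, min(1.0, system_load))
    if load <= 0.5:
        return base_limit
    # Linear reduction from 100% of the limit at 50% load
    # down to `min_fraction` of the limit at full load.
    fraction = 1.0 - (load - 0.5) / 0.5 * (1.0 - min_fraction)
    return max(1, int(base_limit * fraction))


print(dynamic_limit(100, 0.3))   # 100
print(dynamic_limit(100, 0.75))  # 62
print(dynamic_limit(100, 1.0))   # 25
```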

Context, Origin, and Behavior: Beyond Volume

Another important evolution is to stop looking only at how many calls are made and start analyzing where they come from and how they are made. This includes measures such as IP address restrictions, geofencing, or separating test and production environments.

In some cases, these limitations respond to regulatory frameworks or licenses of use. In others, they simply allow you to protect more sensitive parts of the service without shutting down general access. For example, an API can be globally accessible in query mode, but limit certain operations to very specific situations.

Platforms also analyze behavior patterns. If an application starts making queries that are repetitive, inconsistent or very different from its usual use, the system can react automatically: temporarily reduce its rate, raise alerts or require an additional level of validation. The application is not blocked "just because", but because its behavior no longer fits reasonable use of the service.
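Both ideas can be sketched with the Python standard library under simplifying assumptions. First, a sensitive operation is restricted to a hypothetical list of allowed networks. Second, a client's recent request count is compared with its own history using a z-score, flagging values more than three standard deviations from the mean. The policy function, names and thresholds are illustrative. Production systems typically combine several signals and tune thresholds on real traffic.

```python
import ipaddress
import statistics


def ip_allowed(ip, allowed_networks):
    """Restrict a sensitive operation to callers in specific networks."""
    addr = ipaddress.ip_address(ip)
    return any(addr in ipaddress.ip_network(net) for net in allowed_networks)


def is_anomalous(history, current, threshold=3.0, min_history=5):
    """Flag `current` (e.g. requests in the last hour) if it is more than
    `threshold` standard deviations away from the client's own history."""
    if len(history) < min_history:
        return False  # too little data to judge; rely on static limits
    mean = statistics.mean(history)
    # A floor of 1 avoids flagging tiny changes when history is perfectly flat.
    stdev = max(statistics.pstdev(history), 1.0)
    return abs(current - mean) / stdev > threshold


def react(history, current):
    """Hypothetical policy: throttle and alert instead of blocking outright."""
    return "throttle_and_alert" if is_anomalous(history, current) else "allow"
```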

Measuring impact, not just calls

A particularly relevant trend is to stop measuring only the number of requests and start considering the real impact of each one. Not all queries consume the same resources: some transfer large volumes of data or execute more expensive operations.

A clear example in open data would be an urban mobility API. Checking the status of a stop or the traffic at a specific point involves little data and has limited impact. By contrast, downloading a city's entire vehicle-position history for several years at once places a much greater load on the system, even if it is done in a single call.

For this reason, many platforms introduce quotas based on the volume of data transferred, type of operation, or query weight. This avoids situations where seemingly moderate usage places a disproportionate load on the system.
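A weighted quota can be sketched by assigning each request a cost instead of counting it as one. The cost model below (a base cost per operation type plus a cost per megabyte transferred) is hypothetical, as are the operation names, which loosely follow the mobility example. A real platform would calibrate weights against measured load and reset budgets on a schedule, which this sketch omits.

```python
# Hypothetical cost model loosely based on the mobility example: small
# lookups are cheap, bulk history exports are expensive.
OPERATION_COST = {"stop_status": 1, "traffic_point": 1, "history_export": 50}
COST_PER_MB = 2


def request_cost(operation, megabytes):
    """Weight of one request: base cost for the operation plus data volume."""
    return OPERATION_COST[operation] + COST_PER_MB * megabytes


class CostQuota:
    """Per-client budget of cost units (daily reset not shown)."""

    def __init__(self, budget):
        self.budget = budget
        self.spent = 0

    def try_spend(self, operation, megabytes):
        cost = request_cost(operation, megabytes)
        if self.spent + cost > self.budget:
            return False  # reject without charging the client
        self.spent += cost
        return True


quota = CostQuota(budget=1000)
print(all(quota.try_spend("stop_status", 0) for _ in range(100)))  # True
print(quota.try_spend("history_export", 2000))  # False: costs 4050 units
```

A hundred small lookups fit comfortably within the budget, while a single bulk export exceeds it. This is the "seemingly moderate usage" case the paragraph describes.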

How does all this fit in with open data?

At this point, the question inevitably arises: is data still open when all these layers of control exist?

The answer depends less on technology and more on the rules of the game. Open data is not defined by the total absence of technical control, but by principles such as non-discriminatory access, the absence of economic barriers, clarity in licensing, and the real possibility of reuse.

Requesting an API Key, limiting flow, or applying contextual controls does not contradict these principles if done in a transparent and equitable manner. In fact, in many cases it is the only way to guarantee that the service continues to exist and function correctly in the medium and long term.

The key is in balance: clear rules, free access, reasonable limits and mechanisms designed to protect the service, not to exclude. When this balance is achieved, control is no longer perceived as a barrier and becomes a natural part of an ecosystem of open, useful and sustainable data.

Content created by Juan Benavente, senior industrial engineer and expert in technologies related to the data economy. The content and views expressed in this publication are the sole responsibility of the author.

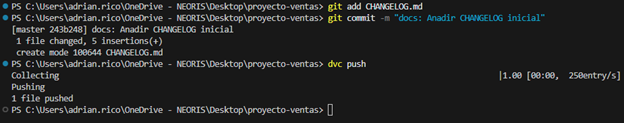

Data possesses a fluid and complex nature: it changes, grows, and evolves constantly, displaying a volatility that profoundly differentiates it from source code. To respond to the challenge of reliably managing this evolution, we have developed the new 'Technical Guide: Data Version Control'.

This guide addresses an emerging discipline that adapts software engineering principles to the data ecosystem: Data Version Control (DVC). The document not only explores the theoretical foundations but also offers a practical approach to solving critical data management problems, such as the reproducibility of machine learning models, traceability in regulatory audits, and efficient collaboration in distributed teams.

Why is a guide on data versioning necessary?

Historically, data versioning has been done manually (files with suffixes like "_final_v2.csv"), an error-prone and unsustainable approach in professional environments. While tools like Git have revolutionized software development, they are not designed to efficiently handle large files or binaries, which are intrinsic characteristics of datasets.

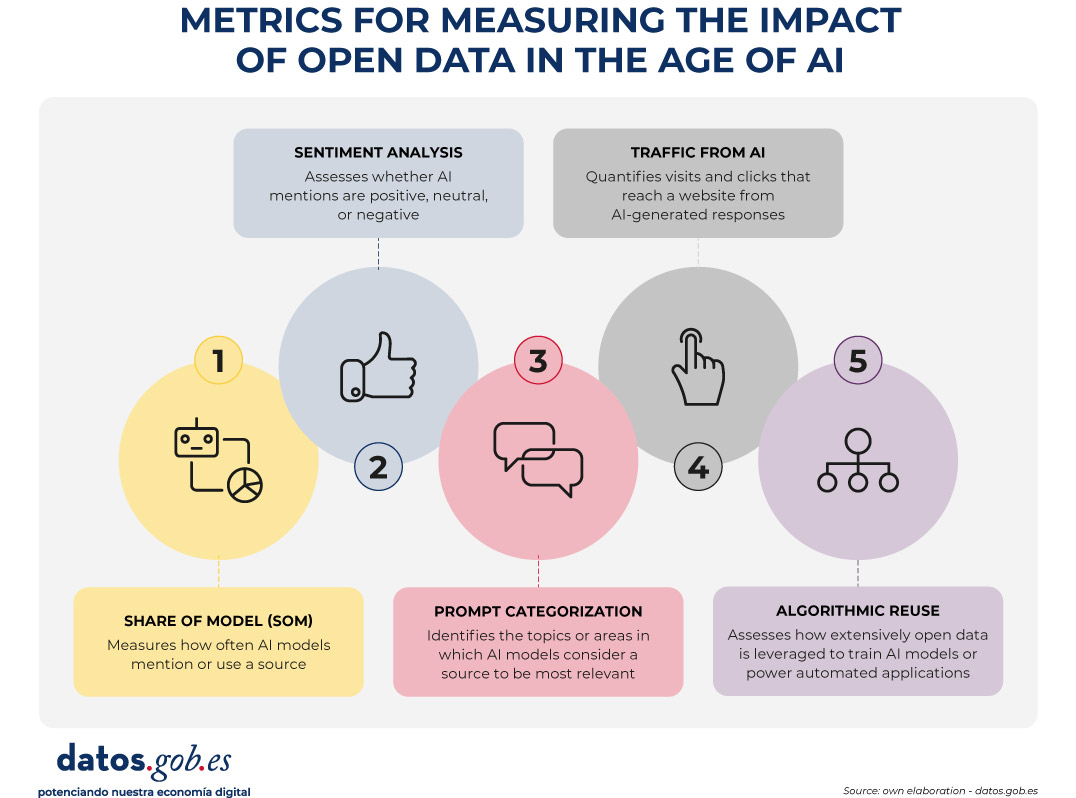

This guide was created to bridge that technological and methodological gap, explaining the fundamental differences between code versioning and data versioning. The document details how specialized tools like DVC (Data Version Control) allow you to manage the data lifecycle with the same rigor as code, ensuring that you can always answer the question: "What exact data was used to obtain this result?"
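To make that question concrete, the sketch below records a content hash for each data file and compares two snapshots to see which files changed. This is similar in spirit to the checksums DVC stores in its `.dvc` files. The sketch uses MD5 via Python's `hashlib`. The function names and the JSON manifest layout are illustrative assumptions, not DVC's actual file format or API.

```python
import hashlib
import json
from pathlib import Path


def file_md5(path, chunk_size=1 << 20):
    """Hash a file in 1 MiB chunks so large datasets need not fit in memory."""
    digest = hashlib.md5()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths):
    """Map each data file to the hash of its exact contents."""
    return {str(p): file_md5(p) for p in paths}


def save_manifest(paths, manifest_path):
    """Write the snapshot as JSON so it can be committed alongside the code."""
    manifest = snapshot(paths)
    Path(manifest_path).write_text(json.dumps(manifest, indent=2, sort_keys=True))
    return manifest


def changed_files(old, new):
    """Files whose contents differ between two snapshots (or are new)."""
    return sorted(p for p in new if old.get(p) != new[p])
```

Committing the small manifest to Git, rather than the data itself, links each code version to the exact data it used.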

Structure and contents

The document follows a progressive approach, starting from basic concepts and progressing to technical implementation, and is structured in the following key blocks:

- Version Control Fundamentals: Analysis of the current problem (the "phantom model", impossible audits) and definition of key concepts such as Snapshots, Data Lineage and Checksums.

- Strategies and Methodologies: Adaptation of semantic versioning (SemVer) to datasets, storage strategies (incremental vs. full) and metadata management to ensure traceability.

- Tools in practice: A detailed analysis of tools such as DVC, Git LFS and cloud-native solutions (AWS, Google Cloud, Azure), including a comparison to choose the most suitable one according to the size of the team and the data.

- Practical case study: A step-by-step tutorial on how to set up a local environment with DVC and Git, simulating a real data lifecycle: from generation and initial versioning, to updating, remote synchronization, and rollback.

- Governance and best practices: Recommendations on roles, retention policies and compliance to ensure successful implementation in the organization.

Figure 1: Practical example of using Git and DVC commands included in the guide.
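As an illustration of adapting semantic versioning to datasets, the sketch below applies one plausible set of rules. These rules are an assumption, not necessarily the guide's own scheme:

- Removing a column or changing its type breaks consumers and bumps the major version.
- Adding columns or appending rows bumps the minor version.
- Anything else, such as corrected values under the same schema, bumps the patch version.

Schemas are represented as simple column-to-type dictionaries, and the function name is hypothetical.

```python
def bump_dataset_version(version, old_schema, new_schema, rows_added=False):
    """Return the next MAJOR.MINOR.PATCH version for a dataset release.

    old_schema / new_schema: {column_name: type_name} dictionaries.
    """
    major, minor, patch = (int(part) for part in version.split("."))
    removed = set(old_schema) - set(new_schema)
    retyped = {c for c in set(old_schema) & set(new_schema)
               if old_schema[c] != new_schema[c]}
    added = set(new_schema) - set(old_schema)
    if removed or retyped:
        return f"{major + 1}.0.0"          # breaking change for consumers
    if added or rows_added:
        return f"{major}.{minor + 1}.0"    # backward-compatible extension
    return f"{major}.{minor}.{patch + 1}"  # corrections, same structure


old = {"id": "int", "amount": "float"}
print(bump_dataset_version("1.2.3", old, {"id": "int"}))                  # 2.0.0
print(bump_dataset_version("1.2.3", old, {**old, "region": "str"}))       # 1.3.0
print(bump_dataset_version("1.2.3", old, old))                            # 1.2.4
```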

Who is it aimed at?

This guide is designed for a broad technical profile within the public and private sectors: data scientists, data engineers, analysts and data catalog managers.

It is especially useful for professionals looking to streamline their workflows, ensure the scientific reproducibility of their research, or guarantee regulatory compliance in regulated sectors. While basic knowledge of Git and the command line is recommended, the guide includes practical examples and detailed explanations to facilitate learning.

We live surrounded by AI-generated summaries. We have been able to generate them for months, but now digital platforms impose them as the first content we see when we use a search engine or open an email thread. On platforms such as Microsoft Teams or Google Meet, video call meetings are transcribed and summarized in automatic minutes, not only for those who could not attend but also for those who were there. But is what a language model considers important really important to the person receiving the summary?

In this new context, the key is learning to recover the meaning behind so much summarized information. These three strategies will help you turn automatic content into a tool for understanding and decision-making.

1. Ask expansive questions

We tend to summarize in order to reduce content we cannot get through, but we run the risk of equating brief with significant, an equivalence that does not always hold. Therefore, we should not focus from the outset on summarizing, but on extracting the information that is relevant to us, our context, our view of the situation and our way of thinking. Going beyond the basic prompt "give me a summary", this new way of approaching content that escapes us consists of cross-referencing data, connecting dots and suggesting hypotheses, which is known as sensemaking. And it starts, first of all, with being clear about what we want to know.

Practical situation:

Imagine a long meeting that we were unable to attend. That afternoon, we receive a summary of the topics discussed by email. It is not always possible, but a good practice at this point is not to settle for the summary: if our organization allows it, and always respecting confidentiality guidelines, upload the full transcript to a conversational system such as Copilot or Gemini and ask specific questions:

- Which topic was repeated the most or received the most attention during the meeting?

- In a previous meeting, person X used this argument. Was it used again? Did anyone discuss it? Was it considered valid?

- What premises, assumptions or beliefs lie behind the decision that was made?

- At the end of the meeting, which elements seem most critical to the success of the project?

- What signs point to possible delays or blockages? Which ones relate to or could affect my team?

Beware of:

First of all, review and confirm attributions. Generative models are becoming more and more accurate, but they are very capable of mixing real information with false or invented information. For example, they can attribute a phrase to someone who did not say it, present ideas as cause and effect when they were not really connected and, perhaps most importantly, assign tasks or responsibilities for next steps to the wrong person.

2. Ask for structured content

Good summaries are not shorter, but better organized, and written text is not the only format we can use. Aim for efficiency and ask conversational systems to return tables, categories, decision lists or relationship maps. Form shapes thought: if you structure information well, you will understand it better and convey it better to others, and therefore you will go further with it.

Practical situation:

In this case, let's imagine that we have received a long report on the progress of several internal projects at our company. The document has many pages of descriptive paragraphs on status, feedback, dates, unforeseen events, risks and budgets. Reading it all line by line would be impossible, and we would not retain the information. The good practice here is to ask for a transformation of the document that is genuinely useful to us. If possible, upload the report to the conversational system and request structured content, being demanding and not skimping on details:

- Organize the report in a table with the following columns: project, person responsible, delivery date, status, and a final column indicating whether any unforeseen event has occurred or any risk has materialized. If all is well, write "CORRECT" in that column.

- Generate a visual calendar of deliverables, their due dates and assignees, from October 1, 2025 to January 31, 2026, in the form of a Gantt chart.

- I want a list that includes only the names of the projects, their start dates and their due dates. Sort by delivery date, closest first.

- From the customer feedback section in each project, create a table with the most repeated comments and the areas or teams they usually refer to. Order them from most to least repeated.

- List the projects at risk of missing their deadlines, with the billing amount for each one and the total.

Beware of:

The illusion of accuracy and completeness created by a clean, orderly, automatically generated text with sources is enormous. A clear format, such as a table, list or map, can give a false sense of accuracy. If the source data is incomplete or wrong, the structure only disguises the error, and we will have a harder time spotting it. AI output usually looks almost perfect. At the very least, and especially if the document is very long, do random checks, ignoring the form and focusing on the content.

3. Connect the dots

Strategic sense is rarely in an isolated text, let alone in a summary. The advanced level in this case consists of asking the multimodal chat to cross-reference sources, compare versions or detect patterns between various materials or formats, such as the transcript of a meeting, an internal report and a scientific article. What is really interesting to see are comparative keys such as evolutionary changes, absences or inconsistencies.

Practical situation:

Let's imagine that we are preparing a proposal for a new project. We have several materials: the transcript of a management team meeting, the previous year's internal report, and a recent article on industry trends. Instead of summarizing them separately, you can upload them to the same conversation thread or chat you've customized on the topic, and ask for more ambitious actions.

- Compare these three documents and tell me which priorities appear in all of them, even if they are expressed differently.

- Which topics in the internal report were not mentioned at the meeting? For each one, generate a hypothesis as to why it was not discussed.

- Which ideas in the article might reinforce or challenge ours? Give me ideas that are not reflected in our internal report.

- Look for press articles from the last six months that support the key ideas of the internal report.

- Find external sources that fill in the information missing from these three documents on topic X, and generate an overview report with references.

Beware of:

It is very common for AI systems to deceptively simplify complex discussions, not because they have a hidden purpose but because they have always been rewarded for simplicity and clarity in training. In addition, automatic generation introduces a risk of authority: because the text is presented with the appearance of precision and neutrality, we assume that it is valid and useful. And if that wasn't enough, structured summaries are copied and shared quickly. Before forwarding, make sure that the content is validated, especially if it contains sensitive decisions, names, or data.

AI-based models can help you visualize convergences, gaps or contradictions and, from there, formulate hypotheses or lines of action. The aim is to find more quickly what is so valuable that we call it insight. That is the step from summary to analysis: the most important thing is not to compress information, but to select it well, relate it and connect it with its context. Making the prompt more demanding is the most appropriate way to work with AI systems, but it also requires a prior personal effort of analysis and grounding.

Content created by Carmen Torrijos, expert in AI applied to language and communication. The content and views expressed in this publication are the sole responsibility of the author.

Artificial Intelligence (AI) is transforming society, the economy and public services at an unprecedented speed. This revolution brings enormous opportunities, but also challenges related to ethics, security and the protection of fundamental rights. Aware of this, the European Union approved the Artificial Intelligence Act (AI Act), in force since August 1, 2024, which establishes a harmonized and pioneering framework for the development, commercialization and use of AI systems in the single market, fostering innovation while protecting citizens.

A particularly relevant area of this regulation is general-purpose AI models (GPAI), such as large language models (LLMs) or multimodal models, which are trained on huge volumes of data from a wide variety of sources (text, images and video, audio and even user-generated data). This reality poses critical challenges in intellectual property, data protection and transparency on the origin and processing of information.

To address them, the European Commission, through the European AI Office, has published the Template for the Public Summary of Training Content for general-purpose AI models: a standardized format that providers will be required to complete and publish to summarize key information about the data used in training. From 2 August 2025, any general-purpose model placed on the market or distributed in the EU must be accompanied by this summary; models already on the market have until 2 August 2027 to adapt. This measure materializes the AI Act's principle of transparency and aims to shed light on the "black boxes" of AI.

In this article, we explain the key aspects of this template: from its objectives and structure to its deadlines, penalties and next steps.

Objectives and relevance of the template

General-purpose AI models are trained on data from a wide variety of sources and modalities, such as:

- Text: books, scientific articles, press, social networks.

- Images and videos: digital content from the Internet and visual collections.

- Audio: recordings, podcasts, radio programs, or conversations.

- User data: information generated in interaction with the model itself or with other services of the provider.

This process of mass data collection is often opaque, raising concerns among rights holders, users, regulators, and society as a whole. Without transparency, it is difficult to assess whether data has been obtained lawfully, whether it includes unauthorised personal information or whether it adequately represents the cultural and linguistic diversity of the European Union.

Recital 107 of the AI Act states that the main objective of this training-content summary is to increase transparency and facilitate the exercise and protection of rights. Among the benefits of the template, the following stand out:

- Intellectual property protection: allows authors, publishers and other rights holders to identify whether their works have been used during training, facilitating the defense of their rights and a fair use of their content.

- Privacy safeguard: helps detect whether personal data has been used, providing useful information so that affected individuals can exercise their rights under the General Data Protection Regulation (GDPR) and other regulations in the same field.

- Prevention of bias and discrimination: provides information on the linguistic and cultural diversity of the sources used, which is key to assessing and mitigating biases that may lead to discrimination.

- Fostering competition and research: reduces "black box" effects and facilitates academic scrutiny, while helping other companies better understand where data comes from, favoring more open and competitive markets.

In short, this template is not only a legal requirement, but a tool to build trust in artificial intelligence, creating an ecosystem in which technological innovation and the protection of rights are mutually reinforcing.

Template structure

The template, officially published on 24 July 2025 after a public consultation with more than 430 participating organisations, has been designed so that the information is presented in a clear, homogeneous and understandable way, both for specialists and for the public.

It consists of three main sections, ranging from basic model identification to legal aspects related to data processing.

1. General information

It provides a global view of the provider, the model, and the general characteristics of the training data:

- Identification of the provider, such as name and contact details.

- Identification of the model and its versions, including dependencies if it is a modification (fine-tuning) of another model.

- Date the model was placed on the market in the EU.

- Data modalities used (text, image, audio, video, or others).

- Approximate size of data by modality, expressed in wide ranges (e.g., less than 1 billion tokens, between 1 billion and 10 trillion, more than 10 trillion).

- Language coverage, with special attention to the official languages of the European Union.

This section provides a level of detail sufficient to understand the extent and nature of the training, without revealing trade secrets.
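To make the size ranges concrete, here is a minimal Python sketch that maps an approximate token count for the text modality to one of the three ranges listed above. How the exact boundary values (1 billion and 10 trillion) are assigned is our own assumption, not something stated in the template description above.

```python
def size_range(tokens: int) -> str:
    """Return the coarse size range for a text-modality training corpus.

    Boundary handling (exactly 1 billion or 10 trillion) is an assumption.
    """
    if tokens < 0:
        raise ValueError("token count cannot be negative")
    if tokens < 1_000_000_000:              # fewer than 1 billion tokens
        return "less than 1 billion tokens"
    if tokens <= 10_000_000_000_000:        # 1 billion to 10 trillion tokens
        return "between 1 billion and 10 trillion tokens"
    return "more than 10 trillion tokens"


if __name__ == "__main__":
    for n in (500_000_000, 2_500_000_000_000, 15_000_000_000_000):
        print(f"{n:,} tokens -> {size_range(n)}")
```

Reporting only a coarse range, rather than the exact count, is what lets the summary describe the scale of training without disclosing precise figures.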

2. List of data sources

It is the core of the template, where the origin of the training data is detailed. It is organized into six main categories, plus a residual category (other).

- Public datasets:
  - Data that is freely available and downloadable as a whole or in blocks (e.g., open data portals, Common Crawl, scholarly repositories).
  - "Large" datasets must be identified, defined as those that represent more than 3% of the total public data used in a specific modality.

- Licensed private datasets:
  - Data obtained through commercial agreements with rights holders or their representatives, such as licences with publishers for the use of digital books.
  - Only a general description is required.

- Other unlicensed private data:
  - Databases acquired from third parties that do not directly hold or manage the copyright.
  - If they are publicly known, they must be listed; otherwise, a general description (data type, nature, languages) is sufficient.

- Data obtained through web crawling/scraping:
  - Information collected by or on behalf of the provider using automated tools.
  - The following must be specified:
    - Name/identifier of the crawlers.
    - Purpose and behaviour (respect for robots.txt, captchas, paywalls, etc.).
    - Collection period.
    - Types of websites (media, social networks, blogs, public portals, etc.).
    - List of the most relevant domains, covering at least the top 10% by volume. For SMEs, this requirement is reduced to the top 5% or a maximum of 1,000 domains, whichever is lower.

- User data:
  - Information generated through interaction with the model or with other services of the provider.
  - The provider must indicate which services contribute and the modality of the data (text, image, audio, etc.).

- Synthetic data:
  - Data created by or for the provider using other AI models (e.g., model distillation or reinforcement learning from human feedback, RLHF).
  - Where appropriate, the generator model should be identified if it is available on the market.

- Additional category, Other: includes data that does not fit into the above categories, such as offline sources, self-digitization, manual tagging, or human generation.
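The two numeric thresholds in this list can be expressed as small helper functions. This is an illustrative Python sketch, not official tooling: the dataset names and sizes are hypothetical, sizes are assumed to be measured in tokens, and rounding the number of domains up is our own assumption.

```python
def large_public_datasets(sizes: dict[str, float], threshold: float = 0.03) -> list[str]:
    """Return the public datasets whose share of the modality total exceeds `threshold`."""
    total = sum(sizes.values())
    if total <= 0:
        return []
    return sorted(name for name, size in sizes.items() if size / total > threshold)


def domains_to_list(n_domains: int, is_sme: bool = False) -> int:
    """Number of top domains (ranked by scraped volume) to disclose, rounded up."""
    if is_sme:
        # Top 5% of domains or 1,000 domains, whichever is lower
        return min(-(-n_domains // 20), 1000)
    # Top 10% of domains (integer ceiling avoids floating-point rounding errors)
    return -(-n_domains // 10)


if __name__ == "__main__":
    # Hypothetical token counts for the public text data of one model
    public_text = {"dataset_a": 50_000, "dataset_b": 48_000, "dataset_c": 2_000}
    print(large_public_datasets(public_text))      # ['dataset_a', 'dataset_b']
    print(domains_to_list(70))                     # 7
    print(domains_to_list(100_000, is_sme=True))   # 1000
```

Integer ceiling division is used for the domain counts because multiplying by 0.1 in floating point can overshoot (for example, 0.1 * 70 is slightly above 7).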

3. Aspects of data processing

It focuses on how data has been handled before and during training, with a particular focus on legal compliance:

- Respect for the text and data mining (TDM) opt-out: measures taken to honour the rights reservation provided for in Article 4(3) of Directive (EU) 2019/790 on copyright, which allows rights holders to prevent the mining of their texts and data. This right is exercised through opt-out protocols, such as tags in files or directives in robots.txt, indicating that certain content cannot be used to train models. Providers should explain how they have identified and respected these opt-outs, both in their own datasets and in those acquired from third parties.

- Removal of illegal content: procedures used to prevent the collection of, or to remove, content that is illegal under EU law, such as child sexual abuse material, terrorist content or serious intellectual property infringements. These mechanisms may include blocklists, automatic classifiers or human review, and can be described without revealing trade secrets.
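As a rough illustration of honouring a robots.txt opt-out, the sketch below uses Python's standard `urllib.robotparser` module to check whether a given crawler may fetch a URL. The crawler name `ExampleAIBot` and the robots.txt content are hypothetical, and robots.txt is only one of the opt-out mechanisms mentioned above; reservations expressed through file metadata or other protocols would need separate handling.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt in which a site opts out of one AI crawler only
ROBOTS_TXT = """\
User-agent: ExampleAIBot
Disallow: /

User-agent: *
Allow: /
"""


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots.txt allows `user_agent` to fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse in-memory lines, no network access
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    url = "https://example.org/articles/1"
    print(may_crawl(ROBOTS_TXT, "ExampleAIBot", url))  # False
    print(may_crawl(ROBOTS_TXT, "OtherBot", url))      # True
```

Logging the result of each check alongside the crawl would give a provider a record of how opt-outs were respected, which is the kind of explanation the template asks for.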

The following diagram summarizes these three sections:

Balancing transparency and trade secrets

The European Commission has designed the template to strike a delicate balance: offering enough information to protect rights and promote transparency, without forcing providers to disclose information that could compromise their competitiveness.

- Public sources: the highest level of detail is required, including names and links to "large" datasets.

- Private sources: a more limited level of detail is allowed, through general descriptions when the information is not public.

- Web scraping: a summary list of domains is required, without the need to detail exact combinations.

- User and synthetic data: the information is limited to confirming their use and describing the modality.

Thanks to this approach, the summary is "generally comprehensive" in scope but not "technically detailed", balancing transparency with the protection of companies' intellectual and commercial property.

Compliance, deadlines and penalties

Article 53 of the AI Act sets out the obligations of providers of general-purpose AI models, most notably the publication of this summary of training data.

This obligation is complemented by other measures, such as:

- Have a public copyright policy.

- Implement risk assessment and mitigation processes, especially for models that may generate systemic risks.

- Establish mechanisms for traceability and supervision of data and training processes.

Non-compliance can lead to significant fines, up to €15 million or 3% of the company's annual global turnover, whichever is higher.
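The "whichever is higher" rule means the ceiling grows with company size. A minimal Python sketch of that arithmetic; it computes only the maximum possible fine, since actual amounts are set case by case.

```python
def max_fine_eur(annual_worldwide_turnover_eur: int) -> float:
    """Upper limit of the fine: EUR 15 million or 3% of turnover, whichever is higher."""
    three_percent = annual_worldwide_turnover_eur * 3 / 100
    return max(15_000_000, three_percent)


if __name__ == "__main__":
    print(f"{max_fine_eur(100_000_000):,.0f}")    # 15,000,000 (3% would be only 3 million)
    print(f"{max_fine_eur(2_000_000_000):,.0f}")  # 60,000,000
```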

Next steps for providers

To adapt to this new obligation, providers should:

- Review internal data collection and management processes to ensure that the necessary information is available and verifiable.

- Establish clear transparency and copyright policies, including protocols to respect the text and data mining (TDM) opt-out.

- Publish the summary on official channels before the applicable deadline (the obligations apply from 2 August 2025, while models already on the market before that date have until 2 August 2027).

- Update the summary periodically, at least every six months or when there are material changes in training.

The European Commission, through the European AI Office, will monitor compliance and may request corrections or impose sanctions.

A key tool for governing data

In our previous article, "Governing Data to Govern Artificial Intelligence", we highlighted that reliable AI is only possible if there is a solid governance of data.

This new template reinforces that principle, offering a standardized mechanism for describing the lifecycle of data, from source to processing, and encouraging interoperability and responsible reuse.

This is a decisive step towards AI that is more transparent, fair and aligned with European values, where the protection of rights and technological innovation can advance together.

Conclusions

The publication of the Public Summary Template marks a historic milestone in the regulation of AI in Europe. By requiring providers to document and make public the data used in training, the European Union is taking a decisive step towards a more transparent and trustworthy artificial intelligence, based on responsibility and respect for fundamental rights. In a world where data is the engine of innovation, this tool becomes the key to governing data before governing AI, ensuring that technological development is built on trust and ethics.

Content created by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and views expressed in this publication are the sole responsibility of the author.

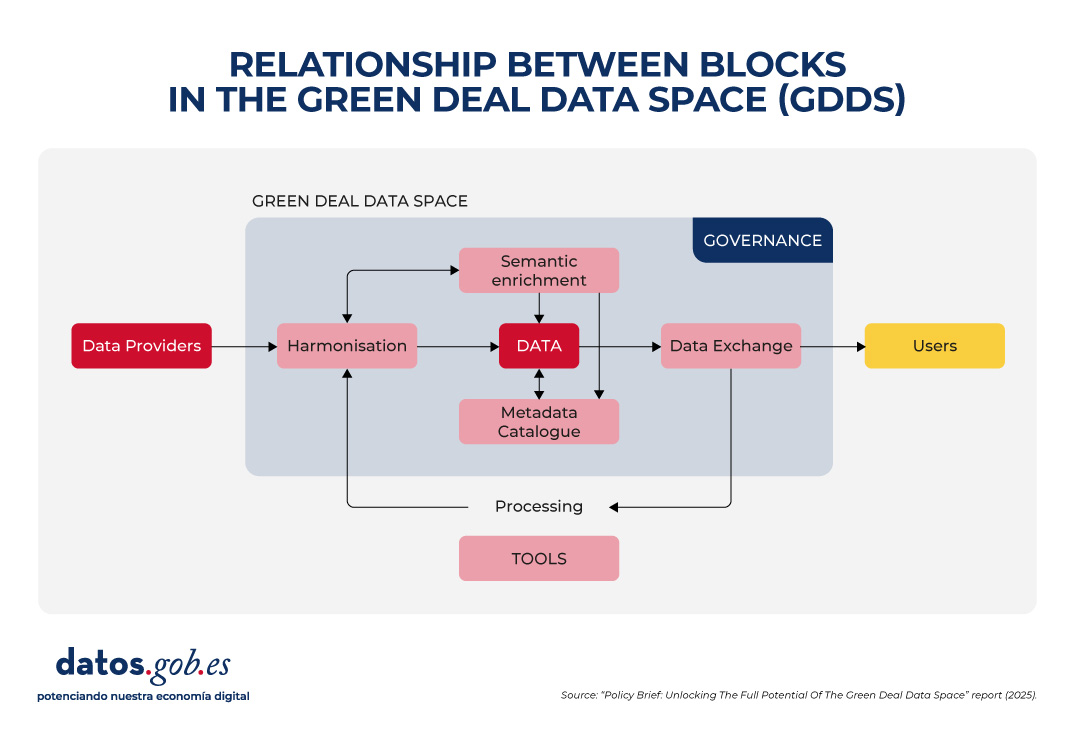

To achieve its environmental sustainability goals, Europe needs accurate, accessible and up-to-date information that enables evidence-based decision-making. The Green Deal Data Space (GDDS) will facilitate this transformation by integrating diverse data sources into a common, interoperable and open digital infrastructure.

In Europe, work is being done on its development through various projects, which have made it possible to obtain recommendations and good practices for its implementation. Discover them in this article!

What is the Green Deal Data Space?

The Green Deal Data Space (GDDS) is an initiative of the European Commission to create a digital ecosystem that brings together data from multiple sectors. It aims to support and accelerate the objectives of the Green Deal: the European Union's roadmap for a sustainable, climate-neutral and fair economy. The pillars of the Green Deal include:

- An energy transition that reduces emissions and improves efficiency.

- The promotion of the circular economy, encouraging the recycling, reuse and repair of products to minimise waste.

- The promotion of more sustainable agricultural practices.

- Restoring nature and biodiversity, protecting natural habitats and reducing air, water and soil pollution.

- The guarantee of social justice, through a transition that ensures no country or community is left behind.

Through this comprehensive strategy, the EU aims to become a modern, resource-efficient and competitive economy and the world's first climate-neutral continent, reaching net-zero greenhouse gas emissions by 2050. The Green Deal Data Space is positioned as a key tool to achieve these objectives. Within the European Data Strategy, data spaces are digital environments that enable the reliable exchange of data while preserving sovereignty and ensuring trust and security under a set of mutually agreed rules.

In this specific case, the GDDS will integrate valuable data on biodiversity, zero pollution, circular economy, climate change, forest services, smart mobility and environmental compliance. This data will be easy to locate, interoperable, accessible and reusable under the FAIR (Findability, Accessibility, Interoperability, Reusability) principles.

The GDDS will be implemented through the SAGE (Dataspace for a Green and Sustainable Europe) project and will be based on the results of the GREAT (Governance of Responsible Innovation) initiative.

A report with recommendations for the GDDS

As we saw in a previous article, four pioneering projects are laying the foundations for this ecosystem: AD4GD, B-Cubed, FAIRiCUBE and USAGE. These projects, funded under Horizon Europe, have spent several years analysing and documenting the requirements needed to ensure that the GDDS follows the FAIR principles. As a result of this work, they have published the report "Policy Brief: Unlocking The Full Potential Of The Green Deal Data Space", a set of recommendations intended to guide the successful implementation of the Green Deal Data Space.

The report highlights five major areas in which the challenges of GDDS construction are concentrated:

1. Data harmonization

Environmental data is heterogeneous, as it comes from different sources: satellites, sensors, weather stations, biodiversity registers, private companies, research institutes, etc. Each provider uses its own formats, scales, and methodologies. This causes incompatibilities that make it difficult to compare and combine data. To fix this, it is essential to:

- Adopt existing international standards and vocabularies, such as INSPIRE, that span multiple subject areas.

- Avoid proprietary formats, prioritizing those that are open and well documented.

- Invest in tools that allow data to be easily transformed from one format to another.
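As an example of the kind of format-transformation tool mentioned above, this Python sketch converts a hypothetical CSV export from weather stations into GeoJSON, an open, well-documented format standardized as IETF RFC 7946. The column names are assumptions; a real pipeline would also need to handle coordinate reference systems, units and missing values.

```python
import csv
import io
import json

# Hypothetical CSV export from a weather-station network
STATIONS_CSV = """station_id,lat,lon,temp_c
ST-001,40.4168,-3.7038,21.5
ST-002,41.3874,2.1686,19.8
"""


def csv_to_geojson(text: str) -> dict:
    """Convert station rows into a GeoJSON FeatureCollection."""
    features = []
    for row in csv.DictReader(io.StringIO(text)):
        features.append({
            "type": "Feature",
            # GeoJSON orders point coordinates as [longitude, latitude]
            "geometry": {"type": "Point",
                         "coordinates": [float(row["lon"]), float(row["lat"])]},
            "properties": {"station_id": row["station_id"],
                           "temp_c": float(row["temp_c"])},
        })
    return {"type": "FeatureCollection", "features": features}


if __name__ == "__main__":
    print(json.dumps(csv_to_geojson(STATIONS_CSV), indent=2))
```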

2. Semantic interoperability

Ensuring semantic interoperability is crucial so that data can be understood and reused across different contexts and disciplines, which is critical when sharing data between communities as diverse as those involved in the Green Deal objectives. In addition, the Data Act requires participants in data spaces to provide machine-readable descriptions of datasets, making them easier to find, access and reuse, and it requires the vocabularies, taxonomies and code lists used to be documented in a public and consistent manner. To achieve this, it is necessary to:

- Use linked data and metadata that offer clear and shared concepts, through vocabularies, ontologies and standards such as those developed by the OGC or ISO.

- Use existing standards to organize and describe data and only create new extensions when really necessary.

- Improve the already accepted international vocabularies, giving them more precision and taking advantage of the fact that they are already widely used by scientific communities.
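A machine-readable dataset description might look like the following sketch, which builds a JSON-LD record using terms from the W3C DCAT vocabulary. DCAT is our choice for illustration (the recommendations above do not prescribe a specific vocabulary), and all identifiers and URLs are hypothetical placeholders.

```python
import json


def dcat_dataset(identifier: str, title: str, keywords: list[str],
                 download_url: str, media_type: str, license_url: str) -> dict:
    """Build a minimal JSON-LD dataset description with DCAT and Dublin Core terms."""
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
        },
        "@type": "dcat:Dataset",
        "dct:identifier": identifier,
        "dct:title": title,
        "dcat:keyword": keywords,
        "dcat:distribution": [{
            "@type": "dcat:Distribution",
            "dcat:downloadURL": {"@id": download_url},
            "dcat:mediaType": {"@id": media_type},
            "dct:license": {"@id": license_url},
        }],
    }


if __name__ == "__main__":
    record = dcat_dataset(
        "air-quality-2025",                               # hypothetical identifier
        "Air quality measurements 2025",
        ["air quality", "zero pollution"],
        "https://data.example.org/air-quality-2025.geojson",
        "https://www.iana.org/assignments/media-types/application/geo+json",
        "https://creativecommons.org/licenses/by/4.0/",
    )
    print(json.dumps(record, indent=2))
```

Reusing an existing vocabulary like this, rather than inventing new field names, is what lets catalogues built by different communities read each other's records.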

3. Metadata and data curation

Data only reaches its maximum value if it is accompanied by clear metadata explaining its origin, quality, restrictions on use and access conditions. However, poor metadata management remains a major barrier. In many cases, metadata is non-existent, incomplete, or poorly structured, and is often lost when translated between non-interoperable standards. To improve this situation, it is necessary to:

- Extend existing metadata standards to include critical elements such as observations, measurements, source traceability, etc.

- Foster interoperability between metadata standards in use, through mapping and transformation tools that respond to both commercial and open data needs.

- Recognize and finance the creation and maintenance of metadata in European projects, incorporating the obligation to generate a standardized catalogue from the outset in data management plans.

4. Data exchange and federated provisioning

The GDDS does not merely seek to centralize all information in a single repository; it also aims to allow multiple actors to share data in a federated and secure way. It is therefore necessary to strike a balance between open access and the protection of rights and privacy. To achieve this, it is necessary to:

- Adopt and promote open and easy-to-use technologies that allow the integration between open and protected data, complying with the General Data Protection Regulation (GDPR).

- Ensure the integration of the various APIs used by data providers and user communities, accompanied by clear demonstrators and guidelines. At the same time, the use of standardized APIs, such as the OGC (Open Geospatial Consortium) APIs for geospatial assets, should be promoted to facilitate a smoother implementation.

- Offer clear specification and conversion tools to enable interoperability between APIs and data formats.

In parallel to the development of the Eclipse Dataspace Connectors (an open-source technology to facilitate the creation of data spaces), it is proposed to explore alternatives such as blockchain catalogs or digital certificates, following examples such as the FACTS (Federated Agile Collaborative Trusted System).
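To show what a standardized API offers in practice, this sketch builds a request URL following the OGC API - Features pattern (`/collections/{collectionId}/items` with `bbox` and `limit` query parameters). The server address and collection name are hypothetical; only the URL structure follows the standard.

```python
from urllib.parse import urlencode


def features_items_url(base_url: str, collection_id: str,
                       bbox: tuple[float, float, float, float], limit: int = 100) -> str:
    """Build an OGC API - Features request for the items of one collection."""
    # bbox order: min longitude, min latitude, max longitude, max latitude
    query = urlencode({"bbox": ",".join(str(v) for v in bbox), "limit": limit},
                      safe=",")  # keep the commas readable instead of %2C
    return f"{base_url.rstrip('/')}/collections/{collection_id}/items?{query}"


if __name__ == "__main__":
    print(features_items_url("https://example.org/ogcapi/", "air-quality-stations",
                             (-9.5, 36.0, 3.3, 43.8), limit=50))
```

Because every compliant server exposes the same paths and parameters, the same client code can query any provider in the data space, which is the smoother implementation the recommendation has in mind.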

5. Inclusive and sustainable governance

The success of the GDDS will depend on establishing a robust governance framework that ensures transparency, participation, and long-term sustainability. It is not only about technical standards, but also about fair and representative rules. To make progress in this regard, it is key to:

- Use only European clouds to ensure data sovereignty, strengthen security and comply with EU regulations, something that is especially important in the face of today's global challenges.

- Integrating open platforms such as Copernicus, the European Data Portal and INSPIRE into the GDDS strengthens interoperability and facilitates access to public data. In this regard, effective strategies are needed to attract open data providers and prevent the GDDS from becoming a commercial or restricted environment.

- Mandating the publication of data from publicly funded research in academic journals increases its visibility, and supporting standardization initiatives further strengthens the visibility of data and ensures its long-term maintenance.

- Providing comprehensive training and promoting cross-use of harmonization tools prevents the creation of new data silos and improves cross-domain collaboration.

The following image summarizes the relationship between these blocks:

Conclusion

All these recommendations converge on a central idea: building a Green Deal Data Space that complies with the FAIR principles is not only a technical issue, but also a strategic and ethical one. It requires cross-sector collaboration, political commitment, investment in capacities, and inclusive governance that ensures equity and sustainability. If Europe succeeds in consolidating this digital ecosystem, it will be better prepared to meet environmental challenges with informed, transparent and common good-oriented decisions.

Citizen participation in the collection of scientific data promotes a more democratic science by involving society in R&D&I processes and reinforcing accountability. In this sense, a variety of citizen science initiatives have been launched by entities such as CSIC, CENEAM or CREAF, among others. In addition, there are currently numerous citizen science platforms that help anyone find, join and contribute to a wide variety of initiatives around the world, such as SciStarter.

Some references in national and European legislation

Different regulations, both at national and European level, highlight the importance of promoting citizen science projects as a fundamental component of open science. For example, Organic Law 2/2023, of 22 March, on the University System, establishes that universities will promote citizen science as a key instrument for generating shared knowledge and responding to social challenges, seeking not only to strengthen the link between science and society, but also to contribute to a more equitable, inclusive and sustainable territorial development.

On the other hand, Law 14/2011, of 1 June, on Science, Technology and Innovation, promotes "the participation of citizens in the scientific and technical process through, among other mechanisms, the definition of research agendas, the observation, collection and processing of data, the evaluation of impact in the selection of projects and the monitoring of results, and other processes of citizen participation."

At the European level, Regulation (EU) 2021/695 establishing the Framework Programme for Research and Innovation "Horizon Europe", indicates the opportunity to develop projects co-designed with citizens, endorsing citizen science as a research mechanism and a means of disseminating results.

Citizen science initiatives and data management plans

The first step in defining a citizen science initiative is usually to establish a research question whose data collection can be addressed with the collaboration of citizens. Then, an accessible protocol is designed so that participants can collect or analyze data in a simple and reliable way (the process can even be gamified). Training materials must be prepared and a means of participation (an app, a website or even paper) must be developed. It is also necessary to plan how progress and results will be communicated to citizens, encouraging their participation.

As this is a data-intensive activity, it is advisable for citizen science projects to have a data management plan that defines the data life cycle, that is, how data is created, organized, shared, reused and preserved. However, most citizen science initiatives do not have such a plan: this recent research article found that only 38% of the citizen science projects consulted had a data management plan.

Figure 1. Data life cycle in citizen science projects. Source: own elaboration – datos.gob.es.

Furthermore, data from citizen science only reaches its full potential when it complies with the FAIR principles and is published in open access. To build a data management plan that makes data from citizen science initiatives FAIR, specific standards for citizen science, such as PPSR Core, are needed.

Open Data for Citizen Science with the PPSR Core Standard

The publication of open data should be considered from the early stages of a citizen science project, with the PPSR Core standard as a key piece. As mentioned earlier, when the research questions of a citizen science initiative are formulated, a data management plan should be drawn up indicating what data to collect, in what format and with what metadata, the cleaning and quality assurance needed for the data collected by citizens, and a publication schedule.

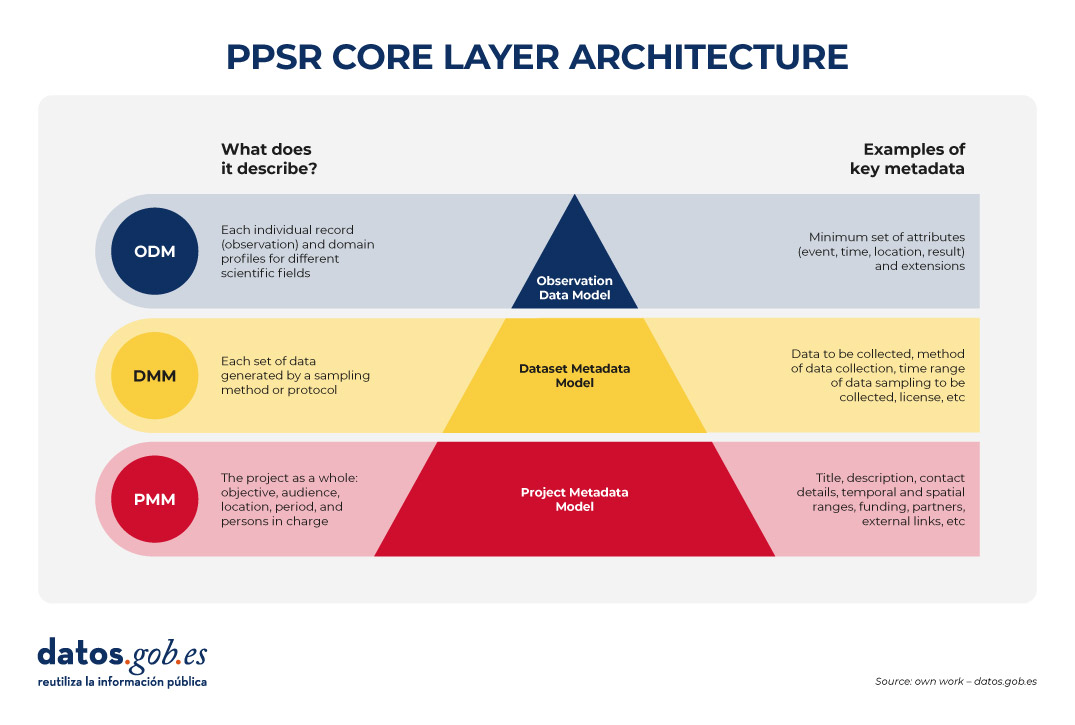

Next, the data must be standardized with PPSR (Public Participation in Scientific Research) Core. PPSR Core is a set of data and metadata standards specifically designed to encourage citizen participation in scientific research processes. It has a three-layer architecture based on a Common Data Model (CDM). The CDM organizes, in a coherent and connected way, the information about citizen science projects, the related datasets and the observations that make them up, thereby facilitating interoperability between citizen science platforms and scientific disciplines. This common model is structured in three main layers that describe the key elements of a citizen science project in a structured and reusable way. The first is the Project Metadata Model (PMM), which collects the general information of the project, such as its objective, participating audience, location, duration, responsible persons, sources of funding or relevant links. Second, the Dataset Metadata Model (DMM) documents each dataset generated, detailing what type of information is collected, by what method, in what period, under what license and under what conditions of access. Finally, the Observation Data Model (ODM) focuses on each individual observation made by the participants of the citizen science initiative, including the date and location of the observation and the result.

It is interesting to note that this PPSR Core layer model allows specific extensions to be added according to the scientific field, based on existing vocabularies such as Darwin Core (biodiversity) or ISO 19156 (sensor measurements).

Figure 2. PPSR Core layered architecture. Source: own elaboration – datos.gob.es.
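The three PPSR Core layers can be pictured as nested records. The following Python sketch models them with dataclasses; the field names are simplified and hypothetical, chosen for readability rather than taken from the official PPSR Core schema, which defines its own terms.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class Observation:   # ODM layer: one observation made by a participant
    observed_on: date
    latitude: float
    longitude: float
    result: str


@dataclass
class Dataset:       # DMM layer: one dataset generated by the project
    title: str
    method: str
    license: str
    observations: list[Observation] = field(default_factory=list)


@dataclass
class Project:       # PMM layer: general information about the project
    name: str
    aim: str
    funding: str
    datasets: list[Dataset] = field(default_factory=list)


if __name__ == "__main__":
    obs = Observation(date(2025, 5, 3), 38.3452, -0.4810, "Passer domesticus")
    ds = Dataset("Urban bird counts 2025", "point count", "CC-BY-4.0", [obs])
    project = Project("Hypothetical bird watch", "Monitor urban birds", "Regional grant", [ds])
    print(project.datasets[0].observations[0].result)
```

Keeping the layers as separate types mirrors the separation that lets each layer be sent to a different platform, as described next.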

This separation allows a citizen science initiative to automatically federate the project record (PMM) with platforms such as SciStarter, share a dataset (DMM) with an institutional repository of open scientific data, such as those aggregated in FECYT's RECOLECTA, and, at the same time, send verified observations (ODM) to a platform such as GBIF without redefining each field.

In addition, the use of PPSR Core provides a number of advantages for the management of the data of a citizen science initiative:

- Greater interoperability: platforms such as SciStarter already exchange metadata using PMM, so duplication of information is avoided.

- Multidisciplinary aggregation: ODM profiles allow datasets from different domains (e.g. air quality and health) to be united around common attributes, which is crucial for multidisciplinary studies.

- Alignment with FAIR principles: The required fields of the DMM are useful for citizen science datasets to comply with the FAIR principles.

It should be noted that PPSR Core allows you to add context to datasets obtained in citizen science initiatives. It is good practice to translate the content of the PMM into language that citizens can understand, as well as to derive a data dictionary from the DMM (a description of each field and its unit) and to document the mechanisms for transforming each record of the ODM. Finally, it is worth highlighting initiatives to improve PPSR Core, for example through a DCAT profile for citizen science.

Conclusions

Planning the publication of open data from the beginning of a citizen science project is key to ensuring the quality and interoperability of the data generated, facilitating its reuse and maximizing the scientific and social impact of the project. To this end, PPSR Core offers a layered standard (PMM, DMM, ODM) that connects the data generated by citizen science with various platforms, helping this data comply with the FAIR principles and bringing together various scientific disciplines in an integrated way. With PPSR Core, every citizen observation can easily be converted into open data on which the scientific community can continue to build knowledge for the benefit of society.

Jose Norberto Mazón, Professor of Computer Languages and Systems at the University of Alicante. The contents and views reflected in this publication are the sole responsibility of the author.

In the ongoing search for tricks to make our prompts more effective, one of the most popular is activating the chain of thought. It consists of posing a multi-step problem and asking the AI system to solve it not by giving us the solution all at once, but by making visible, step by step, the line of reasoning needed to solve it. This feature is available in both paid and free AI systems; it is all about knowing how to activate it.

Originally, the chain of thought was one of many semantic logic tests that developers ran on language models. However, in 2022, Google Brain researchers demonstrated for the first time that providing examples of chained reasoning in the prompt could unlock greater problem-solving capabilities in models.

Since then, it has gradually established itself as a useful technique for getting better results, while also being heavily questioned from a technical point of view. What is really striking about this process is that language models do not actually think in a chain: they are only simulating human reasoning in front of us.

How to activate the reasoning chain

There are two possible ways to activate this process in the models: from a button provided by the tool itself, as in the case of DeepSeek with the "DeepThink" button that activates the R1 model:

Figure 1. DeepSeek with the "DeepThink" button that activates the R1 model.

Or, and this is the simplest and most common option, from the prompt itself. If we opt for this option, we can do it in two ways: only with the instruction (zero-shot prompting) or by providing solved examples (few-shot prompting).

- Zero-shot prompting: as simple as adding an instruction such as "Reason step by step" or "Think before answering" at the end of the prompt. This is usually enough to activate the reasoning chain and make the logical process behind the answer visible.

Figure 2. Example of Zero-shot prompting.

- Few-shot prompting: if we want a very precise response pattern, it can help to provide a few solved question-answer examples. The model sees this demonstration and imitates the pattern when answering a new question.

Figure 3. Example of Few-shot prompting.
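Both techniques come down to how the prompt text is assembled. A minimal Python sketch that only builds the prompt strings; sending them to a model is left out, and the function names and the "Q:/A:" layout are our own choices.

```python
ZERO_SHOT_SUFFIX = "Reason step by step."


def zero_shot_cot(question: str) -> str:
    """Zero-shot: append a reasoning instruction to the question."""
    return f"{question}\n{ZERO_SHOT_SUFFIX}"


def few_shot_cot(examples: list[tuple[str, str]], question: str) -> str:
    """Few-shot: prepend solved examples, each a (question, worked answer) pair."""
    blocks = [f"Q: {q}\nA: {a}" for q, a in examples]
    blocks.append(f"Q: {question}\nA:")  # the model is expected to continue the pattern
    return "\n\n".join(blocks)


if __name__ == "__main__":
    print(zero_shot_cot("If Juan was born in October and is 15 years old, "
                        "how old was he in June of last year?"))
    worked = [("A bag has 2 red balls and 3 green balls. What fraction of the balls are green?",
               "There are 2 + 3 = 5 balls in total. 3 of them are green. The answer is 3/5.")]
    print(few_shot_cot(worked, "A bag has 4 red balls and 1 blue ball. "
                               "What fraction of the balls are blue?"))
```

The worked answers in few-shot examples should spell out intermediate steps, since that is the pattern the model will imitate.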

Benefits and three practical examples

When we activate the reasoning chain, we are asking the system to "show" its work before our eyes, as if it were solving the problem on a blackboard. Forcing the language model to spell out its logical steps reduces, although it does not eliminate, the likelihood of errors, because the model focuses its attention on one step at a time. In addition, if an error does occur, it is much easier for the user to spot it with the naked eye.

When is the reasoning chain useful? Especially in mathematical calculations, logic problems, puzzles, ethical dilemmas or questions involving several stages or jumps (known as multi-hop questions). In the latter, it is especially useful when the model has to bring in knowledge about the world that is not directly included in the question.

Let's see some examples in which we apply this technique to a chronological problem, a spatial problem and a probabilistic problem.

Chronological reasoning

Let's think about the following prompt:

If Juan was born in October and is 15 years old, how old was he in June of last year?

Figure 5. Example of chronological reasoning.

For this example we used the o3 model, available in ChatGPT Plus and specialized in reasoning, so the chain of thought is activated by default and does not need to be requested in the prompt. The interface also reports how long the model took to solve the problem, in this case 6 seconds. Both the answer and the explanation are correct, and to arrive at them the model had to incorporate external information such as the order of the months of the year, the current date as a temporal anchor, and the idea that age changes on the birthday, not at the beginning of the year.
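The reason the model needs the current date becomes clear when the calculation is written out. In this Python sketch, the birthday is assumed to fall on 15 October (a hypothetical day, since the prompt gives only the month), and the answer changes depending on whether Juan's birthday has already passed this year.

```python
from datetime import date


def age_on(birth: date, on: date) -> int:
    """Completed years on a given date; age increases on the birthday itself."""
    had_birthday = (on.month, on.day) >= (birth.month, birth.day)
    return on.year - birth.year - (0 if had_birthday else 1)


def age_last_june(today: date, age_now: int, birth_month: int = 10, birth_day: int = 15) -> int:
    """Age in mid-June of last year, given today's date and current age."""
    # Has this year's birthday already happened? That fixes the birth year.
    had_birthday = (today.month, today.day) >= (birth_month, birth_day)
    birth_year = today.year - age_now - (0 if had_birthday else 1)
    birth = date(birth_year, birth_month, birth_day)
    return age_on(birth, date(today.year - 1, 6, 15))


if __name__ == "__main__":
    print(age_last_june(date(2025, 8, 1), 15))    # 14: birthday still ahead this year
    print(age_last_june(date(2025, 11, 20), 15))  # 13: birthday already passed this year
```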

Spatial reasoning

A person is facing north. Turn 90 degrees to the right, then 180 degrees to the left. In what direction are you looking now?

Figure 6. Example of spatial reasoning.

This time we used the free version of ChatGPT, which uses the GPT-4o model by default (although with limitations), so it is safer to activate the reasoning chain with an instruction at the end of the prompt: Reason step by step. To solve this problem, the model needs general knowledge of the world learned during training, such as the orientation of the cardinal points, degrees of rotation, left and right, and the basic logic of movement.
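The rotation itself is modular arithmetic over the four cardinal points. A small Python sketch, assuming turns in multiples of 90 degrees, with right (clockwise) turns positive and left turns negative:

```python
DIRECTIONS = ["north", "east", "south", "west"]  # clockwise order


def turn(facing: str, degrees: int) -> str:
    """Positive degrees turn right (clockwise); negative degrees turn left."""
    if degrees % 90 != 0:
        raise ValueError("only multiples of 90 degrees are supported")
    index = (DIRECTIONS.index(facing) + degrees // 90) % 4
    return DIRECTIONS[index]


if __name__ == "__main__":
    facing = turn("north", 90)    # right 90 degrees -> east
    facing = turn(facing, -180)   # left 180 degrees -> west
    print(facing)                 # west
```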

Probabilistic reasoning

In a bag there are 3 red balls, 2 green balls and 1 blue ball. If you draw a ball at random without looking, what's the probability that it's neither red nor blue?

Figure 7. Example of probabilistic reasoning.

To run this prompt we used Gemini 2.5 Flash, in Google's Gemini Pro version. This model's training certainly included the fundamentals of basic arithmetic and probability, but what most effectively teaches the model to solve this type of exercise is the millions of solved examples it has seen. Probability problems and their step-by-step solutions are the pattern the model imitates when reconstructing this reasoning.
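For comparison, the calculation the model has to reconstruct is a simple ratio of favourable to total outcomes: only the 2 green balls out of 6 qualify. A Python sketch using exact fractions:

```python
from fractions import Fraction

BAG = {"red": 3, "green": 2, "blue": 1}


def prob_excluding(bag: dict[str, int], excluded: set[str]) -> Fraction:
    """Probability that a random draw is none of the excluded colours."""
    total = sum(bag.values())
    favourable = sum(n for colour, n in bag.items() if colour not in excluded)
    return Fraction(favourable, total)


if __name__ == "__main__":
    print(prob_excluding(BAG, {"red", "blue"}))  # 1/3
```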

The Great Simulation

And now, let's turn to the criticism. In recent months, the debate about whether we can trust these simulated explanations has grown, especially since, ideally, the chain of thought should faithfully reflect the internal process by which the model arrives at its answer, and in practice there is no guarantee that it does.

In 2025, the Anthropic team (creators of Claude, another major language model) carried out a trap experiment with Claude Sonnet, in which they slipped the model a key hint about the solution before asking for its reasoned response.

Think of it like passing a student a note that says "the answer is [A]" before an exam. If the student writes on the exam that they chose [A] at least in part because of the note, that's good news: they are being honest and faithful. But if they write down what claims to be their reasoning process without mentioning the note, we might have a problem.

Claude Sonnet mentioned the hint in its reasoning only 25% of the time. This shows that models sometimes generate explanations that sound convincing but do not correspond to the internal logic they actually used to reach the solution. Instead, they are a posteriori rationalizations: the model first finds the solution and then constructs a coherent process for the user. In other words, the model may be hiding steps or information that were relevant to solving the problem.

Closing

Despite the limitations revealed by the study mentioned above, we should not forget that the original Google Brain research documented that, when the reasoning chain was applied, the PaLM model's accuracy on mathematical problems rose from 17.9% to 58.1%. If we also combine this technique with searches of open data to bring in information external to the model, the reasoning becomes more verifiable, up to date and robust.

However, by making language models "think out loud", what we really improve in every case is the user experience in complex tasks. As long as we do not delegate too much of our thinking to AI, our own cognitive process can benefit. It is also a technique that greatly facilitates our new role as supervisors of automated processes.

Content prepared by Carmen Torrijos, expert in AI applied to language and communication. The contents and points of view reflected in this publication are the sole responsibility of the author.

Generative artificial intelligence is beginning to find its way into everyday applications ranging from virtual agents (or teams of virtual agents) that resolve queries when we call a customer service centre to intelligent assistants that automatically draft meeting summaries or report proposals in office environments.

These applications, often powered by large language models (LLMs) acting as foundation models, promise to revolutionise entire industries through huge productivity gains. However, their adoption brings new challenges because, unlike traditional software, a generative AI model does not follow fixed rules written by humans; its responses are based on statistical patterns learned from processing large volumes of data. This makes its behaviour less predictable and harder to explain, and it sometimes leads to unexpected results, errors that are difficult to foresee, or responses that do not align with the original intentions of the system's creator.

Therefore, the validation of these applications from multiple perspectives such as ethics, security or consistency is essential to ensure confidence in the results of the systems we are creating in this new stage of digital transformation.

What needs to be validated in generative AI-based systems?

Validating generative AI-based systems means rigorously checking that they meet certain quality and accountability criteria before relying on them to solve sensitive tasks.

It is not only about verifying that they ‘work’, but also about making sure that they behave as expected, avoiding biases, protecting users, maintaining their stability over time, and complying with applicable ethical and legal standards. The need for comprehensive validation is a growing consensus among experts, researchers, regulators and industry: deploying AI reliably requires explicit standards, assessments and controls.

We summarize four key dimensions that need to be checked in generative AI-based systems to align their results with human expectations:

- Ethics and fairness: a model must respect basic ethical principles and avoid harming individuals or groups. This involves detecting and mitigating biases in its responses so as not to perpetuate stereotypes or discrimination. It also requires filtering toxic or offensive content that could harm users. Fairness is assessed by ensuring that the system treats different demographic groups consistently, without unduly favouring or excluding anyone.

- Security and robustness: here we refer both to user safety (the system must not generate dangerous recommendations or facilitate illicit activities) and to technical robustness against errors and manipulation. A safe model must reliably reject requests that would lead, for example, to illegal behavior. In addition, robustness means that the system can withstand adversarial attacks (such as prompts designed to deceive it) and operates stably under different conditions.

- Consistency and reliability: generative AI results must be coherent, consistent and correct. In applications such as medical diagnosis or legal assistance, it is not enough for the answer to sound convincing; it must be true and accurate. For this reason, the logical coherence of the answers, their relevance to the question asked and the factual accuracy of the information are all validated. The system's stability is also checked (two similar requests made under the same conditions should yield equivalent results), as is its resilience (small changes in the input should not cause substantially different outputs).

- Transparency and explainability: To trust the decisions of an AI-based system, it is desirable to understand how and why it produces them. Transparency includes providing information about training data, known limitations, and model performance across different tests. Many companies are adopting the practice of publishing "model cards," which summarize how a system was designed and evaluated, including bias metrics, common errors, and recommended use cases. Explainability goes a step further and seeks to ensure that the model offers, when possible, understandable explanations of its results (for example, highlighting which data influenced a certain recommendation). Greater transparency and explainability increase accountability, allowing developers and third parties to audit the behavior of the system.
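The stability and resilience checks described under consistency and reliability can be sketched as repeated and reworded calls to a model whose outputs are then compared. In this minimal sketch, `fake_model` is a hypothetical stand-in for a real model call, and word-overlap (Jaccard) similarity is a deliberately crude proxy; practical evaluations more often rely on embedding similarity, exact-match grading or a judge model.

```python
from itertools import combinations
from typing import Callable


def jaccard(a: str, b: str) -> float:
    # Overlap of lower-cased word sets: 1.0 = same words, 0.0 = none shared.
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def mean_pairwise_similarity(outputs: list[str]) -> float:
    pairs = list(combinations(outputs, 2))
    if not pairs:
        return 1.0
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


def consistency_report(
    generate: Callable[[str], str], prompt: str, paraphrases: list[str], runs: int = 3
) -> dict[str, float]:
    # Stability: the same prompt repeated under the same conditions.
    repeated = [generate(prompt) for _ in range(runs)]
    # Resilience: the original prompt plus small rewordings of it.
    reworded = [generate(p) for p in [prompt, *paraphrases]]
    return {
        "stability": mean_pairwise_similarity(repeated),
        "resilience": mean_pairwise_similarity(reworded),
    }


def fake_model(prompt: str) -> str:
    # Hypothetical stand-in for a real model call (real models may vary between runs).
    if "capital" in prompt.lower():
        return "The capital of Spain is Madrid"
    return "I am not sure"


report = consistency_report(
    fake_model,
    "What is the capital of Spain?",
    ["Which city is the capital of Spain?", "Where is Spain's seat of government?"],
)
print(report)
```

Here the third wording trips up the stand-in model, so resilience drops to one third while stability stays at 1.0; what counts as an acceptable score would have to be decided for each application.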

Open data: transparency and more diverse evidence

Properly validating AI models and systems, particularly in terms of fairness and robustness, requires representative and diverse datasets that reflect the reality of different populations and scenarios.

On the other hand, if only the companies that own a system have data to test it, we have to rely on their own internal evaluations. However, when open datasets and public testing standards exist, the community (universities, regulators, independent developers, etc.) can test the systems autonomously, thus functioning as an independent counterweight that serves the interests of society.

A concrete example was given by Meta (Facebook) when it released its Casual Conversations v2 dataset in 2023. It is an open dataset, collected with informed consent, that contains videos of people from 7 countries (Brazil, India, Indonesia, Mexico, Vietnam, the Philippines and the USA), with 5,567 participants who provided attributes such as age, gender, language and skin tone.

Meta's objective with the publication was precisely to make it easier for researchers to evaluate the fairness and robustness of AI systems in vision and voice recognition. By extending the geographic origin of the data beyond the U.S., this resource makes it possible to check whether, for example, a facial recognition model works equally well with faces of different ethnicities, or whether a voice assistant understands accents from different regions.
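A check of this kind usually comes down to comparing a performance metric across groups. The sketch below computes accuracy per group and the largest gap between groups; the group labels and results are hypothetical placeholders, standing in for evaluation outcomes tagged with self-reported attributes like those in Casual Conversations v2.

```python
from collections import defaultdict


def accuracy_by_group(records: list[tuple[str, bool]]) -> dict[str, float]:
    # records: (group label, whether the model's output was correct)
    totals: dict[str, int] = defaultdict(int)
    hits: dict[str, int] = defaultdict(int)
    for group, correct in records:
        totals[group] += 1
        hits[group] += int(correct)
    return {group: hits[group] / totals[group] for group in totals}


def max_accuracy_gap(per_group: dict[str, float]) -> float:
    # Difference between the best-served and worst-served groups.
    return max(per_group.values()) - min(per_group.values())


# Hypothetical evaluation results for two demographic groups.
records = [
    ("group_A", True), ("group_A", True), ("group_A", True), ("group_A", False),
    ("group_B", True), ("group_B", False), ("group_B", True), ("group_B", False),
]
per_group = accuracy_by_group(records)
print(per_group, max_accuracy_gap(per_group))
```

A gap like the 25-point difference in this toy data is the signal that would prompt a closer look; with real data, per-group sample sizes also matter, since small groups produce noisy accuracy estimates.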

The diversity that open data brings also helps to uncover neglected areas in AI assessment. Researchers from Stanford's Human-Centered Artificial Intelligence (HAI) institute showed in the HELM (Holistic Evaluation of Language Models) project that many language models are not evaluated on minority dialects of English or on underrepresented languages, simply because the best-known benchmarks lack quality data for them.

The community can identify these gaps and create new test sets to fill them (e.g., an open dataset of FAQs in Swahili to validate the behavior of a multilingual chatbot). In this sense, HELM has incorporated broader evaluations precisely thanks to the availability of open data, making it possible to measure not only the performance of models in common tasks but also their behavior in other linguistic, cultural and social contexts. This has helped to expose the current limitations of the models and to promote the development of more inclusive systems that better represent the real world, or of models better adapted to the specific needs of local contexts, as is the case of the ALIA foundational model, developed in Spain.

In short, open data helps democratize the ability to audit AI systems, preventing the power of validation from resting in only a few hands. It also lowers costs and barriers, since a small development team can test its model with open datasets without investing heavily in collecting its own data. This not only fosters innovation but also ensures that local AI solutions from small businesses are subject to rigorous validation standards.

Validating applications based on generative AI is today an unquestionable necessity to ensure that these tools operate in tune with our values and expectations. It is not a trivial process: it requires new methodologies, innovative metrics and, above all, a culture of responsibility around AI. But the benefits are clear. A rigorously validated AI system will be more trustworthy, both for the individual user who, for example, interacts with a chatbot without fear of receiving a toxic response, and for society as a whole, which can accept decisions based on these technologies knowing that they have been properly audited. Open data helps cement this trust by fostering transparency, enriching evidence with diversity and involving the entire community in the validation of AI systems.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and views reflected in this publication are the sole responsibility of the author.

Artificial intelligence (AI) has become a key technology in multiple sectors, from health and education to industry and environmental management, not to mention the number of citizens who create texts, images or videos with this technology for their own personal enjoyment. It is estimated that in Spain more than half of the adult population has ever used an AI tool.

However, this boom poses sustainability challenges, both in terms of water and energy consumption and in terms of social and ethical impact. It is therefore necessary to seek solutions that help mitigate the negative effects, promoting efficient, responsible and accessible models for all. In this article we examine this challenge and some of the efforts under way to address it.

What is the environmental impact of AI?

In a landscape where artificial intelligence is all the rage, more and more users are wondering what price we are paying for being able to create memes in a matter of seconds.

To properly calculate the total impact of artificial intelligence, it is necessary to consider the full life cycles of both hardware and software, as the United Nations Environment Programme (UNEP) indicates. That is, everything must be taken into account, from raw material extraction, production, transport and construction of the data centre, and the management, maintenance and disposal of e-waste, to data collection and preparation, modelling, training, validation, implementation, inference, maintenance and decommissioning. This generates direct, indirect and higher-order effects:

- The direct impacts include the consumption of energy, water and mineral resources, as well as the production of emissions and e-waste, which generates a considerable carbon footprint.

- The indirect effects derive from the use of AI, for example, those generated by the increased use of autonomous vehicles.

- Finally, among the higher-order effects, the widespread use of artificial intelligence also carries an ethical dimension, as it may exacerbate existing inequalities, especially affecting minorities and low-income people. Training data are sometimes biased or of poor quality (e.g. under-representing certain population groups), which can lead to responses and decisions that favour majority groups.

Some of the figures compiled in the UN document that can help us to get an idea of the impact generated by AI include:

- A single ChatGPT request consumes ten times more electricity than a query on a search engine such as Google, according to data from the International Energy Agency (IEA).

- Training a single large language model (LLM) generates approximately 300,000 kg of carbon dioxide emissions, equivalent to 125 round-trip flights between New York and Beijing, according to the scientific paper "The carbon impact of artificial intelligence".

- Global AI water demand is projected to reach between 4.2 and 6.6 billion cubic metres by 2027, a figure that exceeds the total consumption of a country like Denmark, according to the study "Making AI Less 'Thirsty': Uncovering and Addressing the Secret Water Footprint of AI Models".
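Estimates of this kind are often built from a handful of measurable quantities. The sketch below shows one common way to approximate the operational footprint of a workload: IT energy from hardware power and running time, facility energy via the data centre's Power Usage Effectiveness (PUE), emissions via the grid's carbon intensity, and on-site water via Water Usage Effectiveness (WUE). All parameter values are hypothetical, and the estimate leaves out embodied emissions from manufacturing the hardware, which the life-cycle view described above would include.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    n_accelerators: int
    avg_power_kw: float          # average draw per accelerator, in kW
    hours: float                 # wall-clock running time
    pue: float                   # total facility energy / IT equipment energy
    grid_kg_co2e_per_kwh: float  # carbon intensity of the electricity used
    wue_l_per_kwh: float         # on-site water use / IT equipment energy


def estimate(w: Workload) -> dict[str, float]:
    it_energy_kwh = w.n_accelerators * w.avg_power_kw * w.hours
    facility_energy_kwh = it_energy_kwh * w.pue  # adds cooling, power losses, etc.
    return {
        "it_energy_kwh": it_energy_kwh,
        "facility_energy_kwh": facility_energy_kwh,
        "co2e_kg": facility_energy_kwh * w.grid_kg_co2e_per_kwh,
        "onsite_water_l": it_energy_kwh * w.wue_l_per_kwh,
    }


# Hypothetical job: 64 accelerators at 0.4 kW for 10 days.
workload = Workload(64, 0.4, 240, pue=1.2, grid_kg_co2e_per_kwh=0.25, wue_l_per_kwh=1.8)
for name, value in estimate(workload).items():
    print(f"{name}: {value:,.1f}")
```

The structure makes clear why standardised reporting matters: the same job can have very different emissions and water figures depending on the data centre's efficiency and the local electricity mix.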

Solutions for sustainable AI

In view of this situation, the UN itself highlights several areas that need attention, for example:

- Search for standardised methods and parameters to measure the environmental impact of AI, focusing on direct effects, which are easier to measure thanks to energy, water and resource consumption data. Knowing this information will make it easier to take action that will bring substantial benefit.

- Raise public awareness through mechanisms that oblige companies to make this information public in a transparent and accessible manner. This could eventually promote behavioural changes towards a more sustainable use of AI.

- Prioritise research on optimising algorithms for energy efficiency. For example, the energy required can be minimised by reducing computational complexity and data usage. Decentralised computing can also be boosted, as distributing processes over less demanding networks avoids overloading large servers.