Data visualization is a fundamental practice to democratize access to public information. However, creating effective graphics goes far beyond choosing attractive colors or using the latest technological tools. As Alberto Cairo, an expert in data visualization and professor at the academy of the European Open Data Portal (data.europa.eu), points out, "every design decision must be deliberate: inevitably subjective, but never arbitrary." Through a series of three webinars that you can watch again here, the expert offered innovative tips to be at the forefront of data visualization.

When working with data visualization, especially in the context of public information, it is crucial to debunk some myths ingrained in our professional culture. Phrases like "data speaks for itself," "a picture is worth a thousand words," or "show, don't tell" sound good, but they hide an uncomfortable truth: charts don't always communicate automatically.

The reality is more complex. A design professional may want to communicate something specific, but readers may interpret something completely different. How can you bridge the gap between intent and perception in data visualization? In this post, we share some key takeaways from the training series.

A structured framework for designing with purpose

Rather than following rigid "rules" or applying predefined templates, the course proposes a framework of thinking based on five interrelated components:

- Content: the nature, origin, and limitations of the data

- People: the audience we are targeting

- Intention: the purposes we define

- Constraints: the limitations we face

- Results: how the graph is received

This holistic approach forces us to constantly ask ourselves: what do our readers really need to know? For example, when communicating information about hurricane or health emergency risks, is it more important to show exact trajectories or communicate potential impacts? The correct answer depends on the context and, above all, on the information needs of citizens.

The danger of over-aggregation

Even without losing sight of the purpose, it is important not to fall into aggregating the information too much or presenting only averages. Imagine, for example, a dataset on citizen security at the national level: an average may hide the fact that most localities are very safe, while a few with extremely high rates distort the national indicator.
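The effect can be sketched with a few lines of Python. The rates below are invented for illustration (they are not real crime statistics); the point is only that the mean and the median of a skewed dataset tell very different stories.

```python
from statistics import mean, median

# Hypothetical incidents per 1,000 inhabitants in ten localities (invented values).
rates = [2, 3, 3, 4, 4, 5, 5, 6, 90, 120]

national_average = mean(rates)    # pulled upward by the two extreme localities
typical_locality = median(rates)  # closer to what most localities experience
above_average = [r for r in rates if r > national_average]

print(f"mean = {national_average:.1f}, median = {typical_locality:.1f}")
print(f"localities above the mean: {len(above_average)} of {len(rates)}")
```

In this toy dataset only two of the ten localities sit above the national average, which is why showing the distribution (or at least the median alongside the mean) is usually safer than showing a single aggregate.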

As Claus O. Wilke explains in his book "Fundamentals of Data Visualization," this practice can hide crucial patterns, outliers, and paradoxes that are precisely the most relevant to decision-making. To avoid this risk, the training proposes thinking of a graph as a system of layers that we must carefully build from the base:

1. Encoding

- It's the foundation of everything: how we translate data into visual attributes. Research in visual perception shows us that not all "visual channels" are equally effective. The hierarchy would be:

- Most effective: position, length and height

- Moderately effective: angle, area and slope

- Less effective: color, saturation, and shape

How do we put this into practice? For example, for accurate comparisons, a bar chart will almost always be a better choice than a pie chart. However, as the training materials point out, "effective" does not always mean "appropriate". A pie chart can be perfect when we want to express the idea of a "whole and its parts", even if accurate comparisons are more difficult.

2. Arrangement

- The positioning, ordering, and grouping of elements profoundly affects perception. Do we want the reader to compare between categories within a group, or between groups? The answer will determine whether we organize our visualization with grouped or stacked bars, with multiple panels, or in a single integrated view.

3. Scaffolding

Titles, introductions, annotations, scales and legends are fundamental. At datos.gob.es we've seen how interactive visualizations can condense complex information, but without proper scaffolding, interactivity can confuse rather than clarify.

The value of a correct scale

One of the most delicate – and often most manipulable – technical aspects of a visualization is the choice of scale. A simple modification in the Y-axis can completely change the reader's interpretation: a mild trend may seem like a sudden crisis, or sustained growth may go unnoticed.

As mentioned in the second webinar in the series, scales are not a minor detail: they are a narrative component. Deciding where an axis begins, what intervals are used, or how time periods are represented involves making choices that directly affect one's perception of reality. For example, if an employment graph starts the Y-axis at 90% instead of 0%, the decline may seem dramatic, even if it's actually minimal.
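To make the employment example concrete, the following sketch compares how much a bar visually shrinks with how much the data actually changed. The two employment rates and the helper name `visual_drop` are assumptions chosen for illustration; they do not come from the webinar.

```python
def visual_drop(before, after, axis_min, axis_max=100.0):
    """Relative shrinkage of a bar's drawn height when the axis starts at axis_min."""
    height_before = (before - axis_min) / (axis_max - axis_min)
    height_after = (after - axis_min) / (axis_max - axis_min)
    return (height_before - height_after) / height_before

# Hypothetical employment rates (percent) for two consecutive years.
before, after = 96.0, 94.0
real_drop = (before - after) / before  # relative change in the data itself

for axis_min in (0.0, 90.0):
    drop = visual_drop(before, after, axis_min)
    print(f"Y-axis from {axis_min:.0f}%: bar shrinks by {drop:.1%} "
          f"(the data fell by {real_drop:.1%})")
```

With the axis starting at zero, the bar shrinks by about as much as the data does. With the axis starting at 90%, the same two-point decline removes a third of the bar's height.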

Therefore, scales must be honest with the data. Being "honest" doesn't mean giving up on design decisions, but rather clearly showing what decisions were made and why. If there is a valid reason for starting the Y-axis at a non-zero value, it should be explicitly explained in the graph or in its footnote. Transparency must prevail over drama.

Visual integrity not only protects the reader from misleading interpretations, but also reinforces the credibility of the communicator. In the field of public data, this honesty is not optional: it is an ethical commitment to the truth and to citizen trust.

Accessibility: Visualize for everyone

On the other hand, one of the aspects often forgotten is accessibility. About 8% of men and 0.5% of women have some form of color blindness. Tools like Color Oracle allow you to simulate what our visualizations look like for people with different types of color perception impairments.

In addition, the webinar mentioned the Chartability project, a methodology to evaluate the accessibility of data visualizations. In the Spanish public sector, where web accessibility is a legal requirement, this is not optional: it is a democratic obligation. Under this premise, the Spanish Federation of Municipalities and Provinces published a Data Visualization Guide for Local Entities.
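One check that can be automated is the contrast between two colours. The sketch below implements the contrast ratio defined in the WCAG 2.x guidelines; this particular check is our own illustration and was not necessarily covered in the webinar, and it does not replace simulating colour vision deficiencies with a tool such as Color Oracle.

```python
def relative_luminance(hex_color):
    """WCAG 2.x relative luminance of an sRGB colour written as '#rrggbb'."""
    channels = [int(hex_color[i:i + 2], 16) / 255 for i in (1, 3, 5)]
    # Undo the sRGB gamma encoding before weighting the channels.
    linear = [c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4
              for c in channels]
    red, green, blue = linear
    return 0.2126 * red + 0.7152 * green + 0.0722 * blue

def contrast_ratio(color_a, color_b):
    """Contrast ratio between two colours, from 1 (identical) to 21 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(color_a), relative_luminance(color_b)), reverse=True)
    return (lighter + 0.05) / (darker + 0.05)

print(round(contrast_ratio("#000000", "#ffffff"), 2))  # black on white
print(round(contrast_ratio("#ff0000", "#00ff00"), 2))  # pure red next to pure green
```

A low ratio between two series colours suggests that readers who cannot rely on hue may struggle to tell them apart, so a second channel (labels, patterns or position) is worth adding.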

Visual Storytelling: When Data Tells Stories

Once the technical issues have been resolved, we can address the narrative aspect that is increasingly important to communicate correctly. In this sense, the course proposes a simple but powerful method:

- Write a long sentence that summarizes the points you want to communicate.

- Break that phrase down into components, taking advantage of natural pauses.

- Transform those components into sections of your infographic.
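The three steps above can be sketched in code. This is only a rough illustration: the summary sentence is invented, and treating commas, semicolons and colons as the "natural pauses" is our simplifying assumption.

```python
import re

def split_into_sections(summary):
    """Split a summary sentence at commas, semicolons and colons."""
    parts = re.split(r"[,;:]\s*", summary.strip().rstrip("."))
    return [part.strip() for part in parts if part.strip()]

# Hypothetical summary sentence for an infographic.
summary = ("Unemployment fell in most regions last year, "
           "but youth unemployment stayed high; "
           "the gap is widest in rural areas.")

for number, section in enumerate(split_into_sections(summary), start=1):
    print(f"Section {number}: {section}")
```

Each resulting fragment becomes a candidate heading or panel; in practice the split would be done by hand and refined, but the exercise shows how one sentence can fix the outline before any chart is drawn.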

This narrative approach is especially effective for projects like the ones found on data.europa.eu, where visualizations are combined with contextual explanations to communicate the value of high-value datasets, or in the data science and visualization exercises on datos.gob.es.

The future of data visualization also includes more creative and user-centric approaches. Projects that incorporate personalized elements, that allow readers to place themselves at the center of information, or that use narrative techniques to generate empathy, are redefining what we understand by "data communication".

Alternative forms of "data sensification" are even emerging: physicalization (creating three-dimensional objects with data) and sonification (translating data into sound) open up new possibilities for making information more tangible and accessible. The Spanish company Tangible Data, which we have featured on datos.gob.es because it reuses open datasets, is proof of this.

Figure 1. Examples of data sensification. Source: https://data.europa.eu/sites/default/files/course/webinar-data-visualisation-episode-3-slides.pdf

By way of conclusion, we can emphasize that integrity in design is not a luxury: it is an ethical requirement. Every graph we publish on official platforms influences how citizens perceive reality and make decisions. That is why mastering technical tools such as libraries and visualization APIs, which are discussed in other articles on the portal, is so relevant.

The next time you create a visualization with open data, don't just ask yourself "what tool do I use?" or "which graphic looks best?". Ask yourself: what does my audience really need to know? Does this visualization respect data integrity? Is it accessible to everyone? The answers to these questions are what transform a beautiful graphic into a truly effective communication tool.

The EU Open Data Days 2025 is an essential event for all those interested in the world of open data and innovation in Europe and the world. This meeting, to be held on 19-20 March 2025, will bring together experts, practitioners, developers, researchers and policy makers to share knowledge, explore new opportunities and address the challenges facing the open data community.

The event, organised by the European Commission through data.europa.eu, aims to promote the re-use of open data. Participants will have the opportunity to learn about the latest trends in the use of open data, discover new tools and discuss the policies and regulations that are shaping the digital landscape in Europe.

Where and when does it take place?

The event will be held at the European Convention Center Luxembourg, although it can also be followed online, with the following schedule:

- Wednesday 19 March 2025, from 13:30 to 18:30.

- Thursday 20 March 2025, from 9:00 to 15:30.

What issues will be addressed?

The agenda of the event is already available and covers a range of themes, such as:

- Success stories and best practices: the event will be attended by professionals working at the frontline of European data policy to share their experience. Among other issues, these experts will provide practical guidance on how to inventory and open up a country's public sector data, address the work involved in compiling high-value datasets or analyse perspectives on data reuse in business models. Good practices for quality metadata or improved data governance and interoperability will also be explained.

- Focus on the use of artificial intelligence (AI): open data offers an invaluable source for the development and advancement of AI. In addition, AI can optimise the discovery, management and use of this data, offering tools to help streamline processes and extract greater insight. In this regard, the event will address the potential of AI to transform open government data ecosystems, fostering innovation, improving governance and enhancing citizen participation. The managers of Norway's national data portal will explain how they use an AI-based search engine to make data easier to find. In addition, advances in linguistic data spaces and their use in language modelling will be explained, and ways to creatively combine open data for social impact will be explored.

- Learning about data visualisation: event attendees will be able to explore how data visualisation is transforming communication, policy making and citizen engagement. Through various cases (such as the family tree of 3,000 European royals or UNESCO's Intangible Cultural Heritage relationships), the sessions will show how iterative design processes can uncover hidden patterns in complex networks, providing insights into storytelling and data communication. They will also address how design elements such as colour, scale and focus influence the perception of data.

- Examples and use cases: multiple examples of concrete projects based on the reuse of data will be shown, in fields such as energy, urban development or the environment. Among the experiences that will be shared is that of a Spanish company, Tangible Data, which will explain how physical data sculptures turn complex datasets into accessible and engaging experiences.

These are just some of the topics to be addressed, but there will also be discussions on open science, the role of open data in transparency and accountability, etc.

Why are EU Open Data Days so important?

Access to open data has proven to be a powerful tool for improving decision-making, driving innovation and research, and improving the efficiency of organisations. At a time when digitisation is advancing rapidly, the importance of sharing and reusing data is becoming increasingly crucial to address global challenges such as climate change, public health or social justice.

The EU Open Data Days 2025 are an opportunity to explore how open data can be harnessed to build a more connected, innovative and participatory Europe.

In addition, for those who choose to attend in person, the event will also be an opportunity to establish contacts with other professionals and organisations in the sector, creating new collaborations that can lead to innovative projects.

How can I attend?

To attend in person, it is necessary to register through this link. However, registration is not required to attend the event online.

If you have any queries, an e-mail address has been set up to answer any questions you may have about the event: EU-Open-Data-Days@ec.europa.eu.

More information on the event website.

Today's climate crisis and environmental challenges demand innovative and effective responses. In this context, the European Commission's Destination Earth (DestinE) initiative is a pioneering project that aims to develop a highly accurate digital model of our planet.

Through this digital twin of the Earth it will be possible to monitor and prevent potential natural disasters, adapt sustainability strategies and coordinate humanitarian efforts, among other functions. In this post, we analyse what the project consists of and its current state of development.

Features and components of Destination Earth

Aligned with the European Green Deal and the Digital Europe Strategy, Destination Earth integrates digital modelling and climate science to provide a tool that is useful in addressing environmental challenges. To this end, it focuses on accuracy, local detail and speed of access to information.

In general, the tool makes it possible to:

- Monitor and simulate Earth system developments, including land, sea, atmosphere and biosphere, as well as human interventions.

- Anticipate environmental disasters and socio-economic crises, thus enabling the safeguarding of lives and the prevention of significant economic downturns.

- Generate and test scenarios that promote more sustainable development in the future.

To do this, DestinE is subdivided into three main components:

- Data lake:

- What is it? A centralised repository to store data from a variety of sources, such as the European Space Agency (ESA), EUMETSAT and Copernicus, as well as from the new digital twins.

- What does it provide? This infrastructure enables the discovery and access to data, as well as the processing of large volumes of information in the cloud.

- The DestinE Platform:

- What is it? A digital ecosystem that integrates services, data-driven decision-making tools and an open, flexible and secure cloud computing infrastructure.

- What does it provide? Users have access to thematic information, models, simulations, forecasts and visualisations that will facilitate a deeper understanding of the Earth system.

- Digital twins and engineering:

- What are they? There are several digital replicas covering different aspects of the Earth system. The first two are already developed, one on climate change adaptation and the other on extreme weather events.

- What do they provide? These twins offer multi-decadal simulations (temperature variation) and high-resolution forecasts.

Discover the services and contribute to improve DestinE

The DestinE platform offers a collection of applications and use cases developed within the framework of the initiative, for example:

- Digital twin of tourism (Beta): makes it possible to review and anticipate the viability of tourism activities according to the environmental and meteorological conditions of a territory.

- VizLab: offers an intuitive graphical user interface and advanced 3D rendering technologies to provide a storytelling experience by making complex datasets accessible and understandable to a wide audience.

- miniDEA: is an interactive and easy-to-use DEA-based web visualisation app for previewing DestinE data.

- GeoAI: is a geospatial AI platform for Earth observation use cases.

- Global Fish Tracking System (GFTS): is a project to help obtain accurate information on fish stocks in order to develop evidence-based conservation policies.

- More resilient urban planning: is a solution that provides a heat stress index that allows urban planners to understand best practices for adapting to extreme temperatures in urban environments.

- Danube Delta Water Reserve Monitoring: is a comprehensive and accurate analysis based on the DestinE data lake to inform conservation efforts in the Danube Delta, one of the most biodiverse regions in Europe.

Since October this year, the DestinE platform has been accepting registrations, a possibility that allows you to explore the full potential of the tool and access exclusive resources. This option serves to record feedback and improve the project system.

To become a user and be able to generate services, you must follow these steps.

Project roadmap:

The European Union sets out a series of time-bound milestones that will mark the development of the initiative:

- 2022 - Official launch of the project.

- 2023 - Start of development of the main components.

- 2024 - Development of all system components. Implementation of the DestinE platform and data lake. Demonstration.

- 2026 - Enhancement of the DestinE system, integration of additional digital twins and related services.

- 2030 - Full digital replica of the Earth.

Destination Earth not only represents a technological breakthrough, but is also a powerful tool for sustainability and resilience in the face of climate challenges. By providing accurate and accessible data, DestinE enables data-driven decision-making and the creation of effective adaptation and mitigation strategies.

As part of the European Cybersecurity Awareness Month, the European data portal, data.europa.eu, has organised a webinar focused on the protection of open data. This event comes at a critical time when organisations, especially in the public sector, face the challenge of balancing data transparency and accessibility with the need to protect against cyber threats.

The online seminar was attended by experts in the field of cybersecurity and data protection, both from the private and public sector.

The expert panel addressed the importance of open data for government transparency and innovation, as well as emerging risks related to data breaches, privacy issues and other cybersecurity threats. Data providers, particularly in the public sector, must manage this paradox of making data accessible while ensuring its protection against malicious use.

During the event, a number of malicious tactics used by some actors to compromise the security of open data were identified. These tactics can occur both before and after publication. Knowing about them is the first step in preventing and counteracting them.

Pre-publication threats

Before data is made publicly available, it may be subject to the following threats:

- Supply chain attacks: attackers can sneak malicious code into open data projects, such as commonly used libraries (Pandas, NumPy or visualisation modules), by exploiting the trust placed in these resources. This technique allows attackers to compromise larger systems and collect sensitive information in a gradual and difficult to detect manner.

- Manipulation of information: data may be deliberately altered to present a false or misleading picture. This may include altering numerical values, distorting trends or creating false narratives. These actions undermine the credibility of open data sources and can have significant consequences, especially in contexts where data is used to make important decisions.

- Data poisoning: attackers can inject misleading or incorrect data into datasets, especially those used for training AI models. This can result in models that produce inaccurate or biased results, leading to operational failures or poor business decisions.

Post-publication threats

Once data has been published, it remains vulnerable to a variety of attacks:

- Compromise data integrity: attackers can modify published data, altering files, databases or even data transmission. These actions can lead to erroneous conclusions and decisions based on false information.

- Re-identification and breach of privacy: data sets, even if anonymised, can be combined with other sources of information to reveal the identity of individuals. This practice, known as 're-identification', allows attackers to reconstruct detailed profiles of individuals from seemingly anonymous data. This represents a serious violation of privacy and may expose individuals to risks such as fraud or discrimination.

- Sensitive data leakage: open data initiatives may accidentally expose sensitive information such as medical records, personally identifiable information (emails, names, locations) or employment data. This information can be sold on illicit markets such as the dark web, or used to commit identity fraud or discrimination.

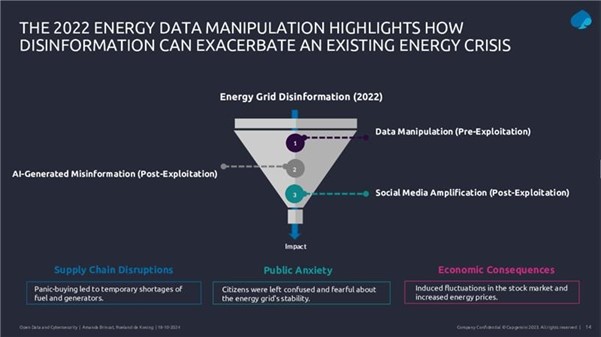

Following on from these threats, the webinar presented a case study on how cyber disinformation exploited open data during the energy and political crisis associated with the Ukraine war in 2022. Attackers manipulated data, generated false content with artificial intelligence and amplified misinformation on social media to create confusion and destabilise markets.

Figure 1. Slide from the webinar presentation "Safeguarding open data: cybersecurity essentials and skills for data providers".

Data protection and data governance strategies

In this context, the implementation of a robust governance structure emerges as a fundamental element for the protection of open data. This framework should incorporate rigorous quality management to ensure accuracy and consistency of data, together with effective updating and correction procedures. Security controls should be comprehensive, including:

- Technical protection measures.

- Integrity check procedures.

- Access and modification monitoring systems.
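As one illustration of an integrity check procedure, a publisher can release a checksum alongside each file so that reusers can detect later modifications. The sketch below uses SHA-256 from Python's standard library; the CSV content and the function names are hypothetical, and a real deployment would also need a trusted channel for distributing the checksum itself.

```python
import hashlib
import hmac

def sha256_of(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of the file contents."""
    return hashlib.sha256(data).hexdigest()

def is_unmodified(data: bytes, published_checksum: str) -> bool:
    """Compare the digest of the received bytes with the published one."""
    return hmac.compare_digest(sha256_of(data), published_checksum)

# Hypothetical dataset as released by the publisher.
original = b"municipality,population\nA,1200\nB,860\n"
published_checksum = sha256_of(original)

# The same dataset after someone altered a value in transit or at rest.
tampered = original.replace(b"860", b"8600")

print(is_unmodified(original, published_checksum))  # True
print(is_unmodified(tampered, published_checksum))  # False
```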

Risk assessment and risk management require a systematic approach starting with a thorough identification of sensitive and critical data. This involves not only the cataloguing of critical information, but also a detailed assessment of its sensitivity and strategic value. A crucial aspect is the identification and exclusion of personal data that could allow the identification of individuals, implementing robust anonymisation techniques where necessary.

For effective protection, organisations must conduct comprehensive risk analyses to identify potential vulnerabilities in their data management systems and processes. These analyses should lead to the implementation of robust security controls tailored to the specific needs of each dataset. In this regard, the implementation of data sharing agreements establishes clear and specific terms for the exchange of information with other organisations, ensuring that all parties understand their data protection responsibilities.

Experts stressed that data governance must be structured through well-defined policies and procedures that ensure effective and secure information management. This includes the establishment of clear roles and responsibilities, transparent decision-making processes and monitoring and control mechanisms. Mitigation procedures must be equally robust, including well-defined response protocols, effective preventive measures and continuous updating of protection strategies.

In addition, it is essential to maintain a proactive approach to security management: a strategy that anticipates potential threats and adapts protection measures as the risk landscape evolves. Ongoing staff training and regular updating of policies and procedures are key elements in maintaining the effectiveness of these protection strategies. All this must be done while maintaining a balance between the need for protection and the fundamental purpose of open data: its accessibility and usefulness to the public.

Legal aspects and compliance

In addition, the webinar explained the legal and regulatory framework surrounding open data. A crucial point was the distinction between anonymisation and pseudonymisation in the context of the GDPR (General Data Protection Regulation).

On the one hand, anonymised data are not considered personal data under the GDPR, because individuals can no longer be identified. Pseudonymisation, on the other hand, retains the possibility of re-identification if the data are combined with additional information. This distinction is crucial for organisations handling open data, as it determines which data can be freely published and which require additional protections.
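The difference can be illustrated with a small sketch. Here identifiers are replaced by keyed hashes, one common way of producing pseudonyms; the key, the records and the function name are all hypothetical. Because whoever holds the key can recompute a pseudonym for a known person, the records remain re-linkable, so this is not anonymisation.

```python
import hashlib
import hmac

# Hypothetical secret held only by the data controller.
SECRET_KEY = b"hypothetical-key-kept-by-the-data-controller"

def pseudonymise(identifier: str, key: bytes = SECRET_KEY) -> str:
    """Replace an identifier with a keyed hash (a pseudonym)."""
    digest = hmac.new(key, identifier.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]

# Invented records, for illustration only.
records = [
    {"email": "ana@example.org", "visits": 3},
    {"email": "luis@example.org", "visits": 7},
]
pseudonymised = [
    {"person": pseudonymise(r["email"]), "visits": r["visits"]} for r in records
]

# With the key, the pseudonym of a known person can be recomputed and re-linked.
target = pseudonymise("ana@example.org")
relinked = [r for r in pseudonymised if r["person"] == target]
print(relinked)
```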

To illustrate the risks of inadequate anonymisation, the webinar presented the Netflix case in 2006, when the company published a supposedly anonymised dataset to improve its recommendation algorithm. However, researchers were able to "re-identify" specific users by combining this data with publicly available information on IMDb. This case demonstrates how the combination of different datasets can compromise privacy even when anonymisation measures have been taken.
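The mechanism behind this kind of re-identification can be sketched as a join between two datasets on shared attributes. The example below is a toy with invented data and exact matching; it is not the method the researchers used, which had to tolerate noisy and partial matches.

```python
from collections import defaultdict

# Invented "anonymised" ratings: names removed, but films and dates kept.
anonymised_ratings = [
    {"user": "u1", "film": "Film A", "date": "2005-03-01", "rating": 5},
    {"user": "u1", "film": "Film B", "date": "2005-03-04", "rating": 2},
    {"user": "u2", "film": "Film A", "date": "2005-06-10", "rating": 4},
]

# Invented public reviews posted under a real name on another site.
public_reviews = [
    {"name": "Jane Doe", "film": "Film A", "date": "2005-03-01"},
    {"name": "Jane Doe", "film": "Film B", "date": "2005-03-04"},
]

def link(anonymised, public):
    """Map each public name to the anonymous ids matching all of its (film, date) pairs."""
    seen = defaultdict(set)
    for row in anonymised:
        seen[row["user"]].add((row["film"], row["date"]))
    wanted = defaultdict(set)
    for row in public:
        wanted[row["name"]].add((row["film"], row["date"]))
    return {
        name: sorted(user for user, pairs in seen.items() if keys <= pairs)
        for name, keys in wanted.items()
    }

print(link(anonymised_ratings, public_reviews))  # {'Jane Doe': ['u1']}
```

Once a name maps to a single anonymous id, every other rating by that id, including ones never posted publicly, is exposed.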

In general terms, the role of the Data Governance Act in providing a horizontal governance framework for data spaces was highlighted, establishing the need to share information in a controlled manner and in accordance with applicable policies and laws. The Data Governance Regulation is particularly relevant to ensure that data protection, cybersecurity and intellectual property rights are respected in the context of open data.

The role of AI and cybersecurity in data security

The conclusions of the webinar focused on several key issues for the future of open data. A key element was the discussion on the role of artificial intelligence and its impact on data security. It highlighted how AI can act as a cyber threat multiplier, facilitating the creation of misinformation and the misuse of open data.

On the other hand, the importance of implementing Privacy Enhancing Technologies (PETs) as fundamental tools to protect data was emphasised. These include anonymisation and pseudonymisation techniques, data masking, privacy-preserving computing and various encryption mechanisms. However, it was stressed that it is not enough to implement these technologies in isolation: they require a comprehensive engineering approach that considers their correct implementation, configuration and maintenance.

The importance of training

The webinar also emphasised the critical importance of developing specific cybersecurity skills. ENISA's cyber skills framework, presented during the session, identifies twelve key professional profiles, including the Cybersecurity Policy and Legal Compliance Officer, the Cybersecurity Implementer and the Cybersecurity Risk Manager. These profiles are essential to address today's challenges in open data protection.

Figure 2. Slide from the webinar presentation "Safeguarding open data: cybersecurity essentials and skills for data providers".

In summary, a key recommendation that emerged from the webinar was the need for organisations to take a more proactive approach to open data management. This includes the implementation of regular impact assessments, the development of specific technical competencies and the continuous updating of security protocols. The importance of maintaining transparency and public confidence while implementing these security measures was also emphasised.

In an increasingly information-driven world, open data is transforming the way we understand and shape our societies. This data is a valuable source of knowledge that also helps to drive research, promote technological advances and improve policy decision-making.

In this context, the Publications Office of the European Union organises the annual EU Open Data Days to highlight the role of open data in European society and all the new developments. The next edition will take place on 19-20 March 2025 at the European Conference Centre Luxembourg (ECCL) and online.

This event, organised by data.europa.eu, Europe's open data portal, will bring together data providers, enthusiasts and users from all over the world, and will be a unique opportunity to explore the potential of open data in various sectors. From success stories to new initiatives, this event is a must for anyone interested in the future of open data.

What are EU Open Data Days?

EU Open Data Days are an opportunity to exchange ideas and network with others interested in the world of open data and related technologies. This event is particularly aimed at professionals involved in data publishing and reuse, analysis, policy making or academic research. However, it is also open to the general public. After all, these are two days of sharing, learning and contributing to the future of open data in Europe.

What can you expect from EU Open Data Days 2025?

The event programme is designed to cover a wide range of topics that are key to the open data ecosystem, such as:

- Success stories and best practices: real experiences from those at the forefront of data policy in Europe, to learn how open data is being used in different business models and to address the emerging frontiers of artificial intelligence.

- Challenges and solutions: an overview of the challenges of using open data, from the perspective of publishers and users, addressing technical, ethical and legal issues.

- Visualising impact: analysis of how data visualisation is changing the way we communicate complex information and how it can facilitate better decision-making and encourage citizen participation.

- Data literacy: training to acquire new skills to maximise the potential of open data in each area of work or interest of the attendees.

An event open to all sectors

The EU Open Data Days are aimed at a wide audience: the private sector, the public sector, academia and the media, as well as the general public.

- Private sector: data analytics specialists, developers and technology solution providers will be able to learn new techniques and trends, and connect with other professionals in the sector.

- Public sector: policy makers and government officials will discover how open data can be used to improve decision-making, increase transparency and foster innovation in policy design.

- Academia and education: researchers, teachers and students will be able to engage in discussions on how open data is fuelling new research and advances in areas as diverse as social sciences, emerging technologies and economics.

- Journalism and media: data journalists and communicators will learn how to use data visualisation to tell more powerful and accurate stories, fostering better public understanding of complex issues.

Submit your proposal before 22 October

Would you like to present a paper at the EU Open Data Days 2025? You have until Tuesday 22 October to send your proposal on one of the above-mentioned themes. Papers that address open data or related areas are sought, such as data visualisation or the use of artificial intelligence in conjunction with open data.

The European data portal is looking for inspiring cases that demonstrate the impact of open data use in Europe and beyond. The call is open to participants from all over the world and from all sectors: from international, national and EU public organisations, to academics, journalists and data visualisation experts. Selected projects will be part of the conference programme, and presentations must be made in English.

Proposals should be between 20 and 35 minutes in length, including time for questions and answers. If your proposal is selected, travel and accommodation expenses (one night) will be reimbursed for participants from the academic sector, the public sector and NGOs.

For further details and clarifications, please contact the organising team by email: EU-Open-Data-Days@ec.europa.eu.

- Deadline for submission of proposals: 22 October 2024.

- Notification to selected participants: November 2024.

- Delivery of the draft presentation: 15 January 2025.

- Delivery of the final presentation: 18 February 2025.

- Conference dates: 19-20 March 2025.

The future of open data is now. The EU Open Data Days 2025 will not only be an opportunity to learn about the latest trends and practices in data use, but also to build a stronger and more collaborative community around open data. Registration for the event will open in late autumn 2024, and we will announce it through our social media channels on Twitter, LinkedIn and Instagram.

The transformative potential of open data initiatives is now widely recognised as they offer opportunities for fostering innovation, greater transparency and improved efficiency in many processes. However, reliable measurement of the real impact of these initiatives is difficult to obtain.

In this space we have repeatedly raised the question of how best to measure the impact of open data. We have reviewed different methods and best practices for quantifying it, and we have analysed it through detailed use cases and through its specific impact on topics and sectors such as employment, geographic data, transport or the Sustainable Development Goals. Now, thanks to the report "Indicators for Open Data Impact Assessment" by the data.europa.eu team, we have a new resource not only to understand but also to amplify the impact of open data initiatives by designing the right indicators. This post offers a quick analysis of the importance of these indicators and briefly explains how they can be used to maximise the potential of open data.

Understanding open data and its value chain

Open data refers to the practice of making data available to the public in a way that makes it freely accessible and usable. Beyond ensuring simple availability, the real value of open data lies in its use in various domains, fostering economic growth, improving public sector transparency and driving social innovation. However, quantifying the real impact of data openness poses significant challenges due to the multiple ways in which data is used and the wide-ranging implications it can have for society.

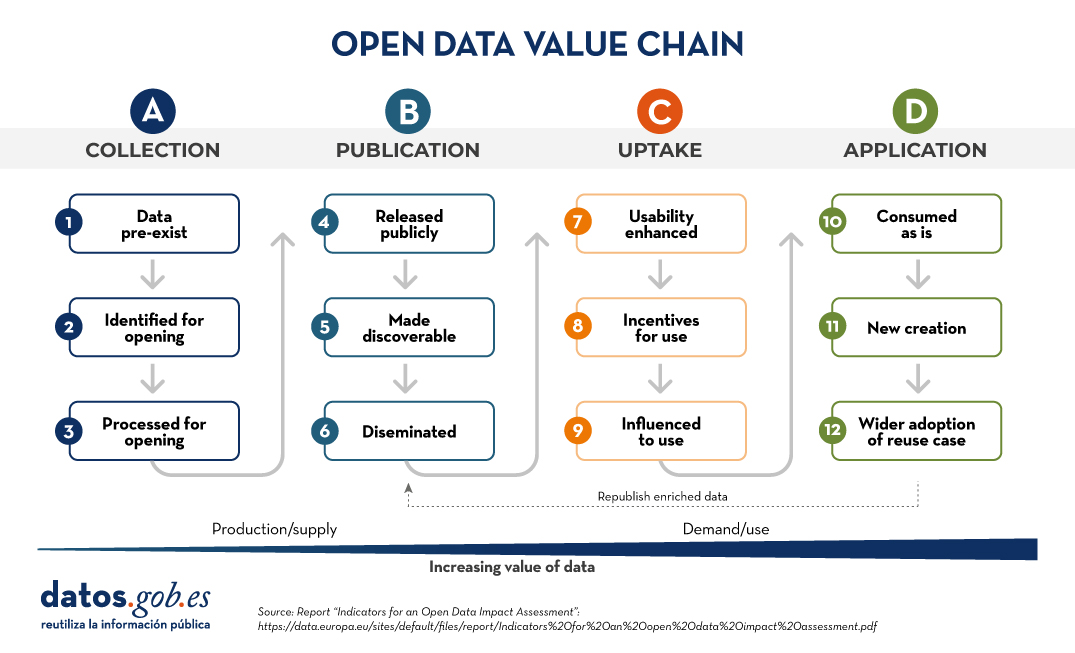

To understand the impact of open data, we must first understand its value chain, which will provide us with a structured and appropriate framework for transforming raw data into actionable insights. This chain includes four main stages that form a continuous process from the initial production to the final use of the data:

Figure 1: Open Data Value Chain

- Collection: identifying existing data and establishing the necessary procedures for cataloguing it.

- Publication: making data available in a form that is accessible and easy to locate.

- Uptake: this comes sooner when data is easy to use and accompanied by the right incentives to use it.

- Application: either through direct consumption of the data or through some transformation that adds new value to the initial data.

Each of these steps will play a critical role in contributing to the overall effectiveness and value derived from open data. The indicators developed to assess the impact of open data will also be closely linked to each of these stages, providing a holistic view of how data is transformed from simple information into a powerful tool for development.

Indicators for impact assessment

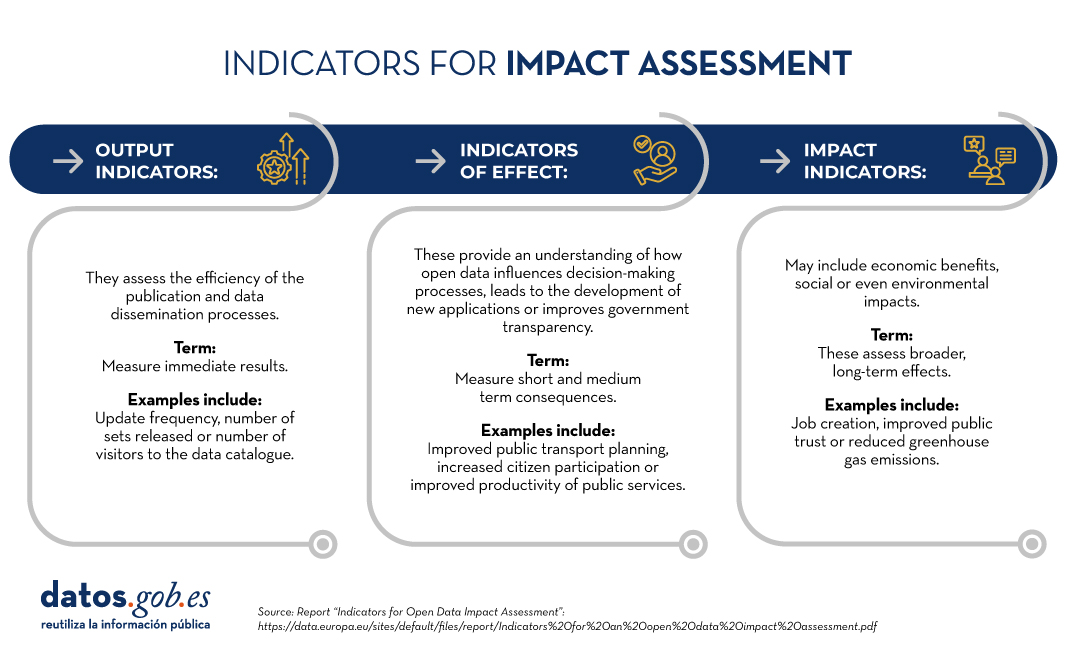

The report introduces a set of robust indicators that are specifically designed to monitor open data initiatives through their outputs, outcomes and impact as a result of their value chain. These indicators should not be seen as simple metrics, but as tools to help us understand the effectiveness of open data initiatives and make strategic improvements. Let us look at these indicators in a little more detail:

- Output indicators: these focus on measuring the immediate results of making open data available. Examples include the number of datasets released, the frequency of dataset updates, the number of visitors to the data catalogue, the accessibility of the data across various platforms, or even the efforts made to promote the data and increase its visibility. Output indicators help us to assess the efficiency of data publication and dissemination processes and are generally easy to measure, although they give only a fairly superficial measure of impact.

- Outcome indicators: these measure the short- and medium-term consequences of open data. They are crucial to understanding how open data influences decision-making processes, leads to the development of new applications or improves government transparency. Significant examples include improved public transport planning based on usage data, increased citizen participation in developing public policies to tackle climate change as a result of the increased availability of data and information, and improved productivity of public services through the use of data.

- Impact indicators: This is the deepest level of measurement, as impact indicators assess the broader, long-term effects of open data. These indicators may include economic benefits such as job creation or GDP growth, social impacts such as improved trust in public entities, or even environmental impacts such as the effective reduction of greenhouse gases. At this level, indicators are much more complex and specific, and should therefore be defined for each specific domain to be analysed and also according to the objectives set within that domain.

Figure 2: Indicators for impact assessment

Putting these indicators into practice requires a robust methodological framework that can capture and analyse data from a variety of sources. Combining automated and survey-based data collection methods is recommended in order to obtain more comprehensive data. This dual approach captures quantitative data through automated systems while also incorporating qualitative insights through user feedback and more in-depth use case analysis.
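As a rough illustration of this dual approach, the following Python sketch combines a few output indicators computed from catalogue records with signals taken from a user survey. It is only a minimal sketch: the record structure, the field names (`published`, `updates`, `visits`, `reused_data`, `usefulness`) and all values are hypothetical and do not come from the report.

```python
from datetime import date
from statistics import mean

# Hypothetical catalogue log: every field name and value is illustrative.
CATALOGUE = [
    {"id": "transport-usage", "published": date(2023, 1, 10),
     "updates": [date(2023, 4, 10), date(2023, 7, 9)], "visits": 1200},
    {"id": "air-quality", "published": date(2023, 3, 1),
     "updates": [date(2023, 3, 31)], "visits": 300},
    {"id": "public-budget", "published": date(2023, 6, 1),
     "updates": [], "visits": 500},
]

# Hypothetical survey answers collected from data users.
SURVEY = [
    {"reused_data": True, "usefulness": 4},
    {"reused_data": False, "usefulness": 5},
    {"reused_data": True, "usefulness": 3},
    {"reused_data": False, "usefulness": 4},
]


def automated_indicators(catalogue):
    """Output indicators derived from automatically collected catalogue data."""
    gaps = []
    for record in catalogue:
        # Days elapsed between consecutive releases of the same dataset.
        dates = sorted([record["published"], *record["updates"]])
        gaps.extend((later - earlier).days for earlier, later in zip(dates, dates[1:]))
    return {
        "datasets_released": len(catalogue),
        "catalogue_visits": sum(record["visits"] for record in catalogue),
        "mean_days_between_updates": mean(gaps) if gaps else None,
    }


def survey_indicators(responses):
    """Qualitative signals gathered through user feedback."""
    return {
        "share_reusing_data": sum(r["reused_data"] for r in responses) / len(responses),
        "mean_usefulness": mean(r["usefulness"] for r in responses),
    }


# Merge both sources into a single indicator report.
report = {**automated_indicators(CATALOGUE), **survey_indicators(SURVEY)}
print(report)
```

Keeping the two sources in separate functions makes it easy to refresh the automated figures continuously while the survey figures are updated only when a new round of feedback is collected.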

Looking to the future

The future of open data impact assessment looks towards refining the indicators used for measurement and consolidating them through the use of interactive monitoring tools. Such tools would enable the possibility of a more continuous assessment that would provide real-time information on how open data is being used and its effects in different sectors. In addition, the development of standardised metrics for these indicators would facilitate comparative analysis across regions and over time, further improving the overall understanding of the impact of open data.

Another important factor to take into account is the set of privacy and ethical considerations that may apply to the selected indicators. As in any other data-centric initiative, privacy and data protection considerations will be paramount and mandatory for the indicators developed. Generally, this should not be a particularly problematic issue when monitoring the data itself. However, once we start monitoring how users make use of it, more delicate situations could arise. Ensuring anonymity in indicators and secure practices in their management is also crucial to maintaining trust and integrity in open data processes.

In conclusion, the development and implementation of specific, detailed indicators following the recommendations of the report "Indicators for an Open Data Impact Assessment" would be a significant step forward in how we measure and understand the impact of open data. Continuous refinement and adaptation of these indicators will also be crucial as they evolve in tandem with the open data strategies they accompany and their growing sphere of influence. In the medium term, the European Commission will further develop its analysis in this area of work through the data.europa.eu project, with the ultimate goal of formulating a common methodology for assessing the impact of the re-use of public data and developing an interactive monitoring tool for its implementation.

Content prepared by Carlos Iglesias, Open data Researcher and consultant, World Wide Web Foundation. The contents and views expressed in this publication are the sole responsibility of the author.

The European Open Science Cloud (EOSC) is a European Union initiative that aims to promote open science through the creation of an open, collaborative and sustainable digital research infrastructure. EOSC's main objective is to provide European researchers with easier access to the data, tools and resources they need to conduct quality research.

EOSC on the European Research and Data Agenda

EOSC is one of the 20 actions of the European Research Area (ERA) agenda 2022-2024 and is recognised as the European data space for science, research and innovation, to be integrated with other sectoral data spaces defined in the European data strategy. Among the expected benefits of the platform are the following:

- An improvement in the confidence, quality and productivity of European science.

- The development of new innovative products and services.

- Improving the impact of research in tackling major societal challenges.

The EOSC platform

EOSC is in fact an ongoing process that sets out a roadmap in which all European states participate, based on the central idea that research data is a public good that should be available to all researchers, regardless of their location or affiliation. This model aims to ensure that scientific results comply with the FAIR (Findable, Accessible, Interoperable, Reusable) Principles to facilitate reuse, as in any other data space.

However, the most visible part of EOSC is its platform that gives access to millions of resources contributed by hundreds of content providers. This platform is designed to facilitate the search, discovery and interoperability of data and other content such as training resources, security, analysis, tools, etc. To this end, the key elements of the architecture envisaged in EOSC include two main components:

- EOSC Core: which provides all the basic elements needed to discover, share, access and reuse resources - authentication, metadata management, metrics, persistent identifiers, etc.

- EOSC Exchange: to ensure that common and thematic services for data management and exploitation are available to the scientific community.

In addition, the EOSC Interoperability Framework (EOSC-IF) is a set of policies and guidelines that enable interoperability between different resources and services and facilitate their subsequent combination.

The platform is currently available in 24 languages and is continuously updated to add new data and services. Over the next seven years, a joint investment by the EU partners of at least EUR 1 billion is foreseen for its further development.

Participation in EOSC

The evolution of EOSC is being guided by a tripartite coordinating body consisting of the European Commission itself, the participating countries represented on the EOSC Steering Board and the research community represented through the EOSC Association. In addition, to be part of the EOSC community, you only have to follow a series of minimum rules of participation:

- The whole EOSC concept is based on the general principle of openness.

- Existing EOSC resources must comply with the FAIR principles.

- Services must comply with the EOSC architecture and interoperability guidelines.

- EOSC follows the principles of ethical behaviour and integrity in research.

- EOSC users are also expected to contribute to EOSC.

- Users must comply with the terms and conditions associated with the data they use.

- EOSC users always cite the sources of the resources they use in their work.

- Participation in EOSC is subject to applicable policies and legislation.

EOSC in Spain

The Consejo Superior de Investigaciones Científicas (CSIC) of Spain was one of the four founding members of the EOSC Association and is currently a commissioned member, in charge of coordination at national level.

CSIC has been working for years on its open access repository DIGITAL.CSIC as a step towards its future integration into EOSC. Within its work in open science we can highlight, for example, the adoption of Current Research Information Systems (CRIS), which are information systems designed to help research institutions collect, organise and manage data on their research activity: researchers, projects, publications, patents, collaborations, funding, etc.

CRIS are already important tools in helping institutions track and manage their scientific output, promoting transparency and open access to research. But they can also play an important role as sources of information feeding into the EOSC, as data collected in CRIS can also be easily shared and used through the EOSC.

The road to open science

Collaboration between CRIS and EOSC has the potential to significantly improve the accessibility and re-use of research data, but there are also other transitional actions that can be taken on the road to producing increasingly open science:

- Ensure the quality of metadata to facilitate open data exchange.

- Disseminate the FAIR principles among the research community.

- Promote and develop common standards to facilitate interoperability.

- Encourage the use of open repositories.

- Contribute by sharing resources with the rest of the community.

This will help to boost open science, increasing the efficiency, transparency and replicability of research.

Content prepared by Carlos Iglesias, Open data Researcher and consultant, World Wide Web Foundation.

The contents and points of view reflected in this publication are the sole responsibility of its author.

On September 8, the webinar "Geospatial Trends 2023: Opportunities for data.europa.eu" was held, organized by the Data Europa Academy and focused on emerging trends in the geospatial field. Specifically, the online conference addressed the concept of GeoAI (Geospatial Artificial Intelligence), which involves the application of artificial intelligence (AI) combined with geospatial data.

Next, we will analyze the most cutting-edge technological developments of 2023 in this field, based on the knowledge provided by the experts participating in the aforementioned webinar.

What is GeoAI?

The term GeoAI, as defined by Kyoung-Sook Kim, co-chair of the GeoAI Working Group of the Open Geospatial Consortium (OGC), refers to "a set of methods or automated entities that use geospatial data to perceive, construct (automate), and optimize spaces in which humans, as well as everything else, can safely and efficiently carry out their geographically referenced activities."

GeoAI allows us to create unprecedented opportunities, such as:

- Extracting geospatial data enriched with deep learning: Automating the extraction, classification, and detection of information from data such as images, videos, point clouds, and text.

- Conducting predictive analysis with machine learning: Facilitating the creation of more accurate prediction models, pattern detection, and automation of spatial algorithms.

- Improving the quality, uniformity, and accuracy of data: Streamlining manual data generation workflows through automation to enhance efficiency and reduce costs.

- Accelerating the time to gain situational knowledge: Assisting in responding more rapidly to environmental needs and making more proactive, data-driven decisions in real-time.

- Incorporating location intelligence into decision-making: Offering new possibilities in decision-making based on data from the current state of the area that needs governance or planning.

Although this technology gained prominence in 2023, it was already discussed in the 2022 geospatial trends report, where it was indicated that integrating artificial intelligence into spatial data represents a great opportunity in the world of open data and the geospatial sector.

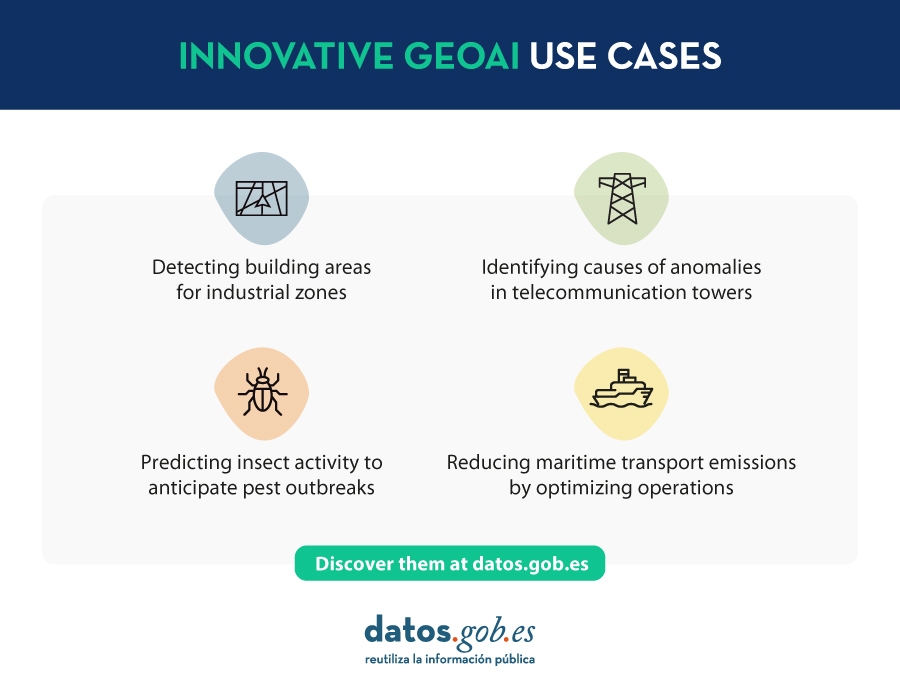

Use Cases of GeoAI

During the Geospatial Trends 2023 conference, two companies in the GIS sector, Con terra and 52°North, shared practical examples highlighting the use of GeoAI in various geospatial applications.

Examples presented by Con terra included:

- KINoPro: A research project using GeoAI to predict the activity of the "black arches" moth and its impact on German forests.

- Anomaly detection in cell towers: Using a neural network to detect causes of anomalies in towers that can affect the location in emergency calls.

- Automated analysis of construction areas: Aiming to detect building areas for industrial zones using open data and satellite imagery.

For its part, 52°North presented use cases such as MariData, which seeks to reduce emissions from maritime transport by using GeoAI to calculate optimal routes, considering ship position, environmental data, and maritime traffic regulations. They also presented KI:STE, which applies artificial intelligence technologies in environmental sciences for various projects, including classifying Sentinel-2 images into (un)protected areas.

These projects highlight the importance of GeoAI in various applications, from predicting environmental events to optimizing maritime transport routes. They all emphasize that this technology is a crucial tool for addressing complex problems in the geospatial community.

GeoAI not only represents a significant opportunity for the spatial sector but also underscores the importance of having open data that adheres to FAIR principles (Findable, Accessible, Interoperable, Reusable). These principles are essential for GeoAI projects as they ensure transparent, efficient, and ethical access to information. By adhering to FAIR principles, datasets become more accessible to researchers and developers, fostering collaboration and continuous improvement of models. Additionally, transparency and the ability to reuse open data contribute to building trust in results obtained through GeoAI projects.

Reference

| Reference video | https://www.youtube.com/watch?v=YYiMQOQpk8A |

The active participation of young people in civic and political life is one of the keys to strengthening democracy in Europe. Analyzing and understanding the voice of young people provides insight into their attitudes and opinions, which helps to anticipate future trends in society early enough to address their needs and concerns and to work towards a more prosperous and comfortable future for all.

In the mission to gain a clearer perspective on how they participate in Europe, open data has become a valuable tool. In this post, we will explore how young people in Europe actively engage in society and politics through relevant European Union (EU) open data published on the European open data portal.

Youth commitment in the European elections

The European Union has as one of its objectives to promote the active participation of young people in democracy and society. Their participation in elections and civic activities enriches European democracy. Young people bring diverse ideas and perspectives, which contributes to decision-making and ensures that policies are tailored to their needs and challenges. In addition, their participation contributes to a political system that reflects the interests of all citizens, which in turn fosters an inclusive and peaceful society.

The 2019 European Parliament elections recorded the highest turnout in 20 years, with more than 50% of eligible voters casting a ballot, as corroborated by the EU's Eurobarometer post-election survey. This increase in turnout was largely due to an increase in youth participation.

The data show that, between the 2014 and 2019 European elections, turnout among the youngest voters (under 25) rose by 14 percentage points to 42%, while turnout among 25-39 year olds rose by 12 percentage points to 47%. This growth in youth participation raises a question: what motivated young people to participate more? According to the 2021 Eurobarometer Youth Survey, a sense of duty as a citizen (32%) and a willingness to take responsibility for the future (32%) were the main factors motivating young people to vote in the European elections.
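To make the size of these increases concrete, the short Python sketch below derives the implied 2014 turnout and the relative growth for each age group. It assumes the quoted increases are expressed in percentage points; the function name is purely illustrative.

```python
# 2019 turnout (%) and the increase since 2014, as quoted in the text.
# Assumption: the increases are percentage points, not relative percentages.
turnout_2019 = {"under 25": 42, "25-39": 47}
increase_points = {"under 25": 14, "25-39": 12}


def baseline_and_relative_change(current, increase):
    """Return the implied earlier turnout and the relative growth in percent."""
    baseline = current - increase
    relative = increase / baseline * 100
    return baseline, relative


for group, current in turnout_2019.items():
    baseline, relative = baseline_and_relative_change(current, increase_points[group])
    print(f"{group}: {baseline}% in 2014, {current}% in 2019 "
          f"(relative growth of about {relative:.0f}%)")
```

Under that assumption, a rise of 14 points from a low starting level represents a much larger relative change than the headline figure alone suggests.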

Why do young people want to participate in the EU?

In addition to voting in elections, there are other ways in which young people demonstrate that they are an active part of citizenship. The Youth Survey 2021 reveals interesting data about their interest in politics and civic life.

In general, politics is a topic that interests them. The majority of participants in the Youth Survey 2021 claimed to discuss politics with friends or family (85%). In addition, many said they understand how their country's national government works (58%). However, most young people feel they have little influence on important decisions, laws, and policies that affect them. Young people feel they have more say in their local government (47%), than in the national government (40%) or at the EU level (30%).

The next step, after understanding politics, is action. Young people believe that certain political and civic activities are more effective than others in getting their voice to decision-makers. In order, voting in elections (41%), participating in protests or street demonstrations (33%) and participating in petitions (30%) were considered the three most effective activities by respondents. Many young people had voted in their last local, national or European elections (46%) and had created or signed a petition (42%).

However, the survey reveals an interesting divergence between young people's perceptions and their actions. On some occasions, youth get involved in activities even though they are not what they consider to be the most effective, as in the case of online signature petitions. On the other hand, they do not always participate in activities that they perceive to be effective, such as street protests or contact with politicians.

The youth impulse for European democracy

Young people want the issues they see as priorities to be on the political agenda of the next European elections. A more recent special Eurobarometer on democracy in action in 2023 revealed that young people aged 15-24 are the age group most satisfied with the functioning of democracy in the EU (61%, compared to the EU average of 54%).

Climate change is a particularly prominent concern among young people, with 40% of respondents aged 15-24 considering this issue a priority, compared to 31% of the general EU population.

To encourage youth participation in the European political agenda, initiatives have been developed that use open data to bring politics closer to citizens. Examples such as TrackmyEU and Democracy Game seek to engage young people in politics and enable them to access information on EU policies and participate in debates and civic activities.

In general, open data provides valuable insights into many realities, including those affecting young people and their interaction with society and politics. This analysis enables governments and public administrations to make informed decisions on issues affecting this social group. Young Europeans are interested in politics, actively participate in elections and get involved in youth organizations; they are concerned about issues such as inequality and climate change. Open data is also used in initiatives that promote the participation of young people in political and civic life, further strengthening European democracy.

In an increasingly digital and data-driven society, access to open data is essential to understand the concerns and interests of youth and their participation in civic and political decision-making. As a part of an active and engaged citizenry, youth have an important role to play in Europe's future, and open data is an essential tool to support their participation.

Content based on the post from the European open data portal Understanding youth engagement in Europe through open data.

IATE, which stands for Interactive Terminology for Europe, is a dynamic database designed to support the multilingual drafting of European Union texts. It aims to provide relevant, reliable and easily accessible data with a distinctive added value compared to other sources of lexical information such as electronic archives, translation memories or the Internet.

This tool, which the EU institutions have been using since 2004, is of interest to anyone: language professionals, academics, public administrations, companies or the general public. The project, launched in 1999 by the Translation Center, is available to any organization or individual who needs to draft, translate or interpret a text on the EU.

Origin and usability of the platform

IATE was created in 2004 by merging different EU terminology databases. The original Eurodicautom, TIS, Euterpe, Euroterms and CDCTERM databases were imported into IATE. This process resulted in a large number of duplicate entries, with the consequence that many concepts are covered by several entries instead of just one. To solve this problem, a cleaning working group was formed and since 2015 has been responsible for organizing analyses and data cleaning initiatives to consolidate duplicate entries into a single entry. This explains why statistics on the number of entries and terms show a downward trend, as more content is deleted and updated than is created.

In addition to performing queries, you can download IATE datasets, together with the IATExtract extraction tool, which allows you to generate filtered exports.

This inter-institutional terminology base was initially designed to manage and standardize the terminology of EU agencies. Subsequently, however, it also began to be used as a support tool in the multilingual drafting of EU texts, and has now become a complex and dynamic terminology management system. Although its main purpose is to facilitate the work of translators working for the EU, it is also of great use to the general public.

IATE has been available to the public since 2007 and brings together the terminology resources of all EU translation services. The Translation Center manages the technical aspects of the project on behalf of the partners involved: European Parliament (EP), Council of the European Union (Consilium), European Commission (COM), Court of Justice (CJEU), European Central Bank (ECB), European Court of Auditors (ECA), European Economic and Social Committee (EESC/CoR), European Committee of the Regions (EESC/CoR), European Investment Bank (EIB) and the Translation Centre for the Bodies of the European Union (CdT).

The IATE data structure is based on a concept-oriented approach, which means that each entry corresponds to a concept (terms are grouped by their meaning), and each concept should ideally be covered by a single entry. Each IATE entry is divided into three levels:

- Language Independent Level (LIL)

- Language Level (LL)

- Term Level (TL)

For more information, see Section 3 ('Structure Overview') below.

Reference source for professionals and useful for the general public

IATE reflects the needs of translators in the European Union, so that any field that has appeared or may appear in the texts of the publications of the EU environment, its agencies and bodies can be covered. The financial crisis, the environment, fisheries and migration are areas that have been worked on intensively in recent years. To achieve the best result, IATE uses the EuroVoc thesaurus as a classification system for thematic fields.

As we have already pointed out, this database can be used by anyone who is looking for the right term about the European Union. IATE allows exploration in fields other than that of the term consulted and filtering of the domains in all EuroVoc fields and descriptors. The technologies used mean that the results obtained are highly accurate and are displayed as an enriched list that also includes a clear distinction between exact and fuzzy matches of the term.

The public version of IATE includes the official languages of the European Union, as defined in Regulation No. 1 of 1958. In addition, a systematic feed is carried out through proactive projects: if it is known that a certain topic is to be covered in EU texts, files relating to this topic are created or improved so that, when the texts arrive, the translators already have the required terminology in IATE.

How to use IATE

To search in IATE, simply type in a keyword or part of a collection name. You can define further filters for your search, such as institution, type or date of creation. Once the search has been performed, a collection and at least one display language are selected.

To download subsets of IATE data you need to be registered, a completely free option that allows you to store some user preferences in addition to downloading. Downloading is also a simple process and can be done in CSV or TBX format.

The IATE download file, whose information can also be accessed in other ways, contains the following fields:

- Language independent level:

  - Token number: the unique identifier of each concept.

  - Subject field: the concepts are linked to fields of knowledge in which they are used. The conceptual structure is organized around twenty-one thematic fields with various subfields. It should be noted that concepts can be linked to more than one thematic field.

- Language level:

  - Language code: each language has its own ISO code.

- Term level:

  - Term: concept of the token.

  - Type of term: term, abbreviation, phrase, formula or short formula.

  - Reliability code: IATE uses four codes to indicate the reliability of terms: untested, minimal, reliable or very reliable.

  - Evaluation: when several terms are stored in a language, specific evaluations can be assigned as follows: preferable, admissible, discarded, obsolete and proposed.
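As a rough illustration of how these fields fit together, the following Python sketch reads a small CSV extract and groups the terms by concept and language, keeping only those marked as reliable or very reliable. The column names, the delimiter and the sample rows are hypothetical: a real IATE export may be laid out differently, so treat this as a sketch under those assumptions rather than a description of the actual file.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample rows; column names and values are illustrative only
# and are not taken from a real IATE export.
SAMPLE = """entry_id,subject_field,language,term,term_type,reliability,evaluation
1001,ENVIRONMENT,en,greenhouse gas,term,reliable,preferable
1001,ENVIRONMENT,en,GHG,abbreviation,reliable,admissible
1001,ENVIRONMENT,es,gas de efecto invernadero,term,very reliable,preferable
1002,FINANCE,en,sovereign debt,term,minimal,proposed
"""

# Reliability codes accepted by default (two of the four codes listed above).
TRUSTED = frozenset({"reliable", "very reliable"})


def group_terms(csv_text, allowed=TRUSTED):
    """Group terms by concept (entry) and language, filtering by reliability."""
    entries = defaultdict(lambda: defaultdict(list))
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["reliability"] in allowed:
            entries[row["entry_id"]][row["language"]].append(row["term"])
    # Convert nested defaultdicts into plain dicts for easier inspection.
    return {entry: dict(languages) for entry, languages in entries.items()}


terms_by_concept = group_terms(SAMPLE)
for entry_id, languages in terms_by_concept.items():
    print(entry_id, languages)
```

Grouping by entry first mirrors the concept-oriented structure: one concept, several languages, and one or more terms per language.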

A continuously updated terminology database

The IATE database is constantly growing and open to public participation: anyone can contribute by proposing new terminology to be added to existing files, or by proposing new files. You can send your proposal to iate@cdt.europa.eu, or use the 'Comments' link that appears at the bottom right of the file of the term you are looking for. You can provide as much relevant information as you wish to justify the reliability of the proposed term, or suggest a new term for inclusion. A terminologist of the language in question will study each citizen's proposal and evaluate its inclusion in IATE.

In August 2023, IATE announced the availability of version 2.30.0 of this data system, adding new fields to its platform and improving functions, such as the export of enriched files to optimize data filtering. As we have seen, this EU inter-institutional terminology database will continue to evolve to meet the needs of EU translators and IATE users in general.

Another important aspect is that this database is used for the development of computer-assisted translation (CAT) tools, which helps to ensure the quality of the translation work of the EU translation services. The results of translators' terminology work are stored in IATE and translators, in turn, use this database for interactive searches and to feed domain- or document-specific terminology databases for use in their CAT tools.

IATE, with more than 7 million terms in over 700,000 entries, is a reference in the field of terminology and is considered the largest multilingual terminology database in the world. More than 55 million queries are made to IATE each year from more than 200 countries, which is a testament to its usefulness.