In the last six months, the open data ecosystem in Spain has experienced intense activity marked by regulatory and strategic advances, the implementation of new platforms and functionalities in data portals, and the launch of innovative solutions based on public information.

In this article, we review some of those advances, so you can stay up to date. We also invite you to review the article on the news of the first half of 2025 so that you can have an overview of what has happened this year in the national data ecosystem.

Cross-cutting strategic, regulatory and policy developments

Data quality, interoperability and governance have been placed at the heart of both the national and European agenda, with initiatives seeking to foster a robust framework for harnessing the value of data as a strategic asset.

One of the main developments has been the European Commission's launch of a new digital package to consolidate a robust, secure and competitive European data ecosystem. This package includes a digital omnibus to simplify the application of the Artificial Intelligence (AI) Regulation. It is complemented by the new Data Union Strategy, which is structured around three pillars:

- Expand access to quality data to drive artificial intelligence and innovation.

- Simplify the existing regulatory framework to reduce barriers and bureaucracy.

- Protect European digital sovereignty from external dependencies.

Its implementation will take place gradually over the next few months. Only then will we be able to assess its effects on Spain and the rest of the EU.

Activity in Spain has also been - and will be - marked by the V Open Government Plan 2025-2029, approved last October. This plan has more than 200 initiatives and contributions from both civil society and administrations, many of them related to the opening and reuse of data. Spain's commitment to open data has also been evident in its adherence to the International Open Data Charter, a global initiative that promotes the openness and reuse of public data as tools to improve transparency, citizen participation, innovation and accountability.

Along with the promotion of data openness, work has also been done on the development of data sharing spaces. In this regard, the UNE 0087 standard was presented. It joins the existing UNE specifications on data and defines, for the first time in Spain, the key principles and requirements for creating and operating in data spaces, improving their interoperability and governance.

More innovative data-driven solutions

Spanish bodies continue to harness the potential of data as a driver of solutions and policies that optimise the provision of services to citizens. Some examples are:

- The Ministry of Health and the citizen science initiative Mosquito Alert are using artificial intelligence and automated image analysis to improve real-time detection and tracking of tiger mosquitoes and invasive species.

- The Valenciaport Foundation, together with other European organisations, has launched a free tool that allows the benefits of installing wind and photovoltaic energy systems in ports to be assessed.

- The Cabildo de La Palma has opted for smart agriculture with the new Smart Agro website: farmers receive personalised irrigation recommendations according to climate and location. The Cabildo has also launched a viewer to monitor mobility on the island.

- The City Council of Segovia has implemented a digital twin that centralizes high-value applications and geographic data, allowing the city to be visualized and analyzed in an interactive three-dimensional environment. It improves municipal management and promotes transparency and citizen participation.

- Vila-real City Council has launched a digital application that integrates public transport, car parks and tourist spots in real time. The project seeks to optimize urban mobility and promote sustainability through smart technology.

- Sant Boi City Council has launched an interactive map made with open data that centralises information on urban transport, parking and sustainable options on a single platform, in order to improve urban mobility.

- The DataActive International Research Network has been inaugurated, an initiative funded by the Higher Sports Council that seeks to promote the design of active urban environments through the use of open data.

It is not only public bodies that reuse open data; universities are also working on projects linked to digital innovation based on public information:

- Students from the Universitat de València have designed projects that use AI and open data to prevent natural disasters.

- Researchers from the University of Castilla-La Mancha have shown that it is feasible to reuse air quality prediction models in different areas of Madrid using transfer learning.

In addition to solutions, open data can also be used to shape other types of products, including sculptures. This is the case of "The skeleton of climate change", a figure presented by the National Museum of Natural Sciences, based on data on changes in global temperature from 1880 to 2024.

New portals and functionalities to extract value from data

The solutions and innovations mentioned above are possible thanks to the many platforms for opening or sharing data, which keep incorporating new datasets and functionalities to extract value from them. Some of the developments we have seen in this regard in recent months are:

- The National Observatory of Technology and Society (ONTSI) has launched a new website. One of its new features is Ontsi Data, a tool for preparing reports with indicators from both its portal and third parties.

- The General Council of Notaries has launched a Housing Statistical Portal, an open tool with reliable and up-to-date data on the real estate market in Spain.

- The Spanish Agency for Food Safety and Nutrition (AESAN) has inaugurated on its website an open data space with microdata on the composition of food and beverages marketed in Spain.

- The Centre for Sociological Research (CIS) launched a renewed website, adapted to any device and with a more powerful search engine to facilitate access to its studies and data.

- The National Geographic Institute (IGN) has presented a new website for SIOSE, the Information System on Land Occupation in Spain, with a more modern, intuitive and dynamic design. In addition, it has made available to the public a new version of the Geographic Reference Information of Transport Networks (IGR-RT), segmented by provinces and modes of transport, and available in Shapefile and GeoPackage.

- The AKIS Advisors Platform, promoted by the Ministry of Agriculture, Fisheries and Food, has launched a new open data API that allows registered users to download and reuse content related to the agri-food sector in Spain.

- The Government of Catalonia launched a new corporate website that centralises key aspects of European funds, public procurement, transparency and open data in a single point. It has also launched a website where it collects information on the AI systems it uses.

- PortCastelló has published its 2024 Proceedings in open data format. All the management, traffic, infrastructures and economic data of the port are now accessible and reusable by any citizen.

- Researchers from the Universitat Oberta de Catalunya and the Institute of Photonic Sciences have created an open library with data on 140 biomolecules. It is a pioneering resource that promotes open science and the use of open data in biomedicine.

- CitriData, a federated space for data, models and services in the Andalusian citrus value chain, was also presented. Its goal is to transform the sector through the intelligent and collaborative use of data.

Other organizations are still working on their new developments. For example, we will soon see the new Open Data Portal of Aguas de Alicante, which will allow public access to key information on water management, promoting the development of solutions based on Big Data and AI.

These months have also seen strategic advances linked to improving the quality and use of data, such as the Data Government Model of the Generalitat Valenciana and the Roadmap for the Provincial Artificial Intelligence Strategy of the Provincial Council of Castellón.

Datos.gob.es also introduced a new platform aimed at optimizing both data publishing and data access. If you want to learn about this and other news from the Aporta Initiative in 2025, we invite you to read this post.

Encouraging the use of data through events, resources and citizen actions

The second half of 2025 was the time chosen by a large number of public bodies to launch competitions aimed at promoting the reuse of the data they publish. This was the case of the Junta de Castilla y León, the Madrid City Council, the Valencia City Council and the Provincial Council of Bizkaia. Our country has also participated in international events such as the NASA Space Apps Challenge.

Among the events where the power of open data was showcased, the Open Government Partnership (OGP) Global Summit, the Iberian Conference on Spatial Data Infrastructures (JIIDE), the International Congress on Transparency and Open Government and the 17th International Conference on the Reuse of Public Sector Information of ASEDIE stand out, although there were many more.

Work has also been done on reports that highlight the impact of data on specific sectors, such as the DATAGRI Chair 2025 Report of the University of Cordoba, focused on the agri-food sector. Other published documents seek to help improve data management, such as "Fundamentals of Data Governance in the context of data spaces", led by DAMA Spain, in collaboration with Gaia-X Spain.

Citizen participation is also critical to the success of data-driven innovation. In this regard, we have seen activities aimed both at promoting the publication of data and at improving or reusing data already published:

- The Barcelona Open Data Initiative requested citizen help to draw up a ranking of digital solutions based on open data to promote healthy ageing. They also organized a participatory activity to improve the iCuida app, aimed at domestic and care workers. This app allows you to search for public toilets, climate shelters and other points of interest for the day-to-day life of caregivers.

- The Spanish Space Agency launched a survey to find out the needs and uses of Earth Observation images and data within the framework of strategic projects such as the Atlantic Constellation.

In conclusion, the activities carried out in the second half of 2025 highlight the consolidation of the open data ecosystem in Spain as a driver of innovation, transparency and citizen participation. Regulatory and strategic advances, together with the creation of new platforms and solutions based on data, show a firm commitment on the part of institutions and society to take advantage of public information as a key resource for sustainable development, the improvement of services and the generation of knowledge.

As always, this article is just a small sample of the activities carried out. We invite you to share other activities that you know about through the comments.

Spain once again stands out in the European open data landscape. The Open Data Maturity 2025 report places our country among the leaders in the opening and reuse of public sector information, consolidating an upward trajectory in digital innovation.

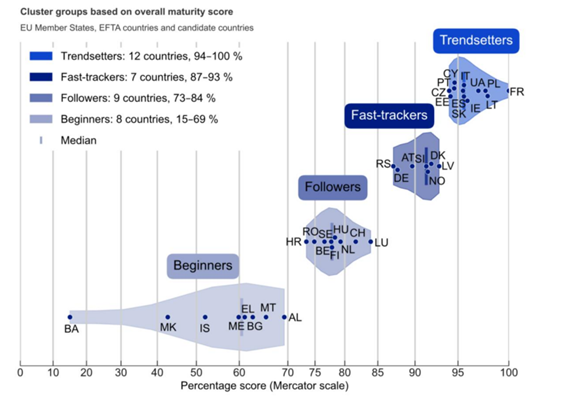

The report, produced annually by the European data portal, data.europa.eu, assesses the degree of maturity of open data in Europe. To do this, it analyzes several indicators, grouped into four dimensions: policy, portal, quality and impact. This year's edition has involved 36 countries, including the 27 Member States of the European Union (EU), three European Free Trade Association countries (Iceland, Norway and Switzerland) and six candidate countries (Albania, Bosnia and Herzegovina, Montenegro, North Macedonia, Serbia and Ukraine).

This year, Spain is in fifth position among the countries of the European Union and sixth out of the total number of countries analysed, tied with Italy. Specifically, a total score of 95.6% was obtained, well above the average of the countries analysed (81.1%). With this data, Spain improves its score compared to 2024, when it obtained 94.8%.

Spain, among the European leaders

With this position, Spain is once again among the open data trendsetters, i.e. the countries that set trends and serve as an example of good practice for other States. Spain shares this group with France, Lithuania, Poland, Ukraine, Ireland, the aforementioned Italy, Slovakia, Cyprus, Portugal, Estonia and the Czech Republic.

The countries in this group have advanced open data policies, aligned with the technical and political progress of the European Union, including the publication of high-value datasets. In addition, there is strong coordination of open data initiatives at all levels of government. Their national portals offer comprehensive features and quality metadata, with few limitations on publication or use. This means that published data can be more easily reused for multiple purposes, helping to generate a positive impact in different areas.

Figure 1. Member countries of the different clusters.

The keys to Spain's progress

According to the report, Spain strengthened its leadership in open data through strategic policy development, technical modernization, and reuse-driven innovation. In particular, improvements in the policy dimension are what have driven Spain's growth:

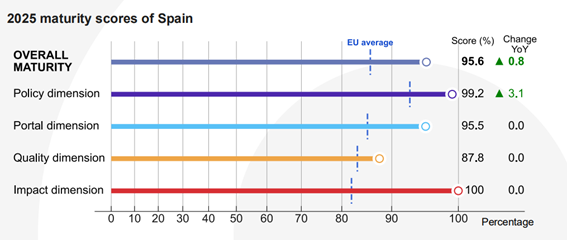

Figure 2. Spain's score in the different dimensions together with growth over the previous year.

As shown in the image, the policy dimension has reached a score of 99.2% compared to 96% last year, well above the European average of 93.1%. The reason for this growth is the progress in the regulatory framework. In this regard, the report highlights the V Open Government Plan, developed through a co-creation process in which all stakeholders participated. This plan has introduced new initiatives related to the governance and reuse of open data. Another noteworthy point is that Spain promoted the publication of high-value datasets, in line with Implementing Regulation (EU) 2023/138.

The rest of the dimensions remain stable, all of them with scores above the European average: the portal dimension scored 95.5% compared to 85.45% in Europe, while the quality dimension scored 87.8% compared to 83.4% in the rest of the countries analysed. The impact dimension continues to be our great asset, with 100% compared to 82.1% in Europe. In this dimension, Spain remains a clear leader, thanks to a clear definition of reuse, the systematic measurement of data use and the existence of examples of impact in the governmental, social, environmental and economic spheres.
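As a quick arithmetic check, the margins between Spain's scores and the European averages quoted above can be computed with a few lines of Python. This is only an illustrative sketch: the figures are copied from the text, and the variable names are our own.

```python
# Scores (%) quoted in the text: (Spain, European average) for each dimension.
scores = {
    "policy": (99.2, 93.1),
    "portal": (95.5, 85.45),
    "quality": (87.8, 83.4),
    "impact": (100.0, 82.1),
}

# Margin, in percentage points, between Spain and the European average.
gaps = {dim: round(spain - europe, 2) for dim, (spain, europe) in scores.items()}

# List the dimensions from the widest margin to the narrowest.
for dim, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{dim}: +{gap} points")
```

On these figures, the widest margin is in impact and the narrowest in quality.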

Although there have not been major movements in the score of these dimensions, the report does highlight milestones in Spain in all areas. For example, the datos.gob.es platform underwent a major redesign, including adjustments to the DCAT-AP-ES metadata profile, in order to improve quality and interoperability. In this regard, a specific implementation guide was published and a learning and development community was consolidated through GitHub. In addition, the portal's search engine and monitoring tools were improved, including tracking external reuse through GitHub references and rich analytics through interactive dashboards.

The involvement of the infomediary sector has been key in strengthening Spain's leadership in open data. The report highlights the importance of activities such as the National Open Data Meeting, with challenges that are worked on jointly by a multidisciplinary team with representatives of public, private and academic institutions, edition after edition. In addition, the Spanish Federation of Municipalities and Provinces identified 80 essential data sets on which local governments should focus when advancing in the opening of information, promoting coherence and reuse at the municipal level.

The following image shows the specific score for each of the subdimensions analyzed:

Figure 3. Spain's score in the different dimensions and subcategories.

You can see the details of the report for Spain on the website of the European portal.

Next steps and common challenges

The report concludes with a series of specific recommendations for each group of countries. For the group of trendsetters, in which Spain is located, the recommendations are not so much focused on reaching maturity – already achieved – but on deepening and expanding their role as European benchmarks. Some of the recommendations are:

- Consolidate thematic ecosystems (supplier and reuser communities) and prioritize high-value data in a systematic way.

- Align local action with the national strategy, enabling "data-driven" policies.

- Cooperate with data.europa.eu and other countries to implement and adapt an impact assessment framework with domain-by-domain metrics.

- Develop user profiles and allow their contributions to the national portal.

- Improve data and metadata quality and localization through validation tools, artificial intelligence, and user-centric flows.

- Apply domain-specific standards to harmonize datasets and maximize interoperability, quality, and reusability.

- Offer advanced and certified training in regulations and data literacy.

- Collaborate internationally on reusable solutions, such as shared or open source software.

Spain is already working on many of these points to continue improving its open data offer. The aim is for more and more reusers to be able to easily take advantage of the potential of public information to create services and solutions that generate a positive impact on society as a whole.

The position achieved by Spain in this European ranking is the result of the work of all the public initiatives, companies, user communities and reusers linked to open data, which drive an ecosystem that keeps growing. Thank you for the effort!

Open data from public sources has evolved over the years, from being simple repositories of information to constituting dynamic ecosystems that can transform public governance. In this context, artificial intelligence (AI) emerges as a catalytic technology that benefits from the value of open data and exponentially enhances its usefulness. In this post we will see what the mutually beneficial symbiotic relationship between AI and open data looks like.

Traditionally, the debate on open data has focused on portals: the platforms on which governments publish information so that citizens, companies and organizations can access it. But the so-called "Third Wave of Open Data," a term coined by New York University's GovLab, emphasizes that it is no longer enough to publish datasets on demand or by default. The important thing is to think about the entire ecosystem: the life cycle of data, its exploitation, maintenance and, above all, the value it generates in society.

What role can open data play in AI?

In this context, AI appears as a catalyst capable of automating tasks, enriching open government data (OGD), facilitating its understanding and stimulating collaboration between actors.

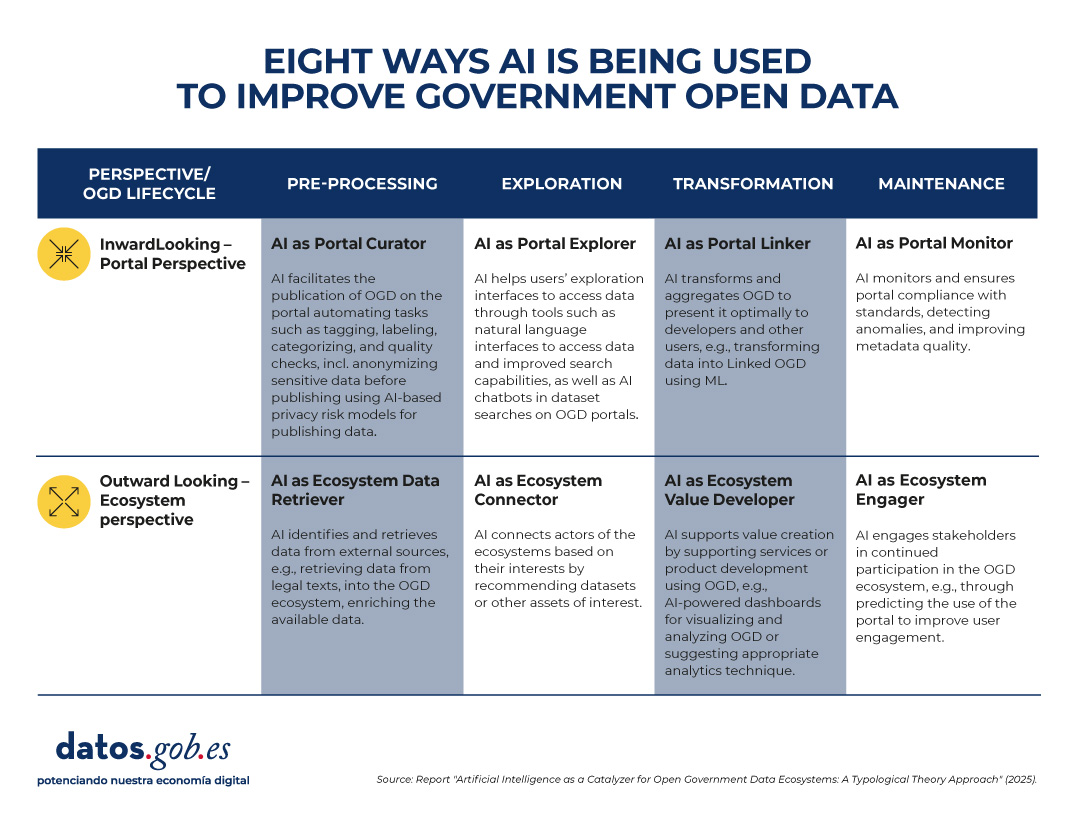

Recent research, developed by European universities, maps how this silent revolution is happening. The study proposes a classification of uses according to two dimensions:

- Perspective, which in turn is divided into two possible paths:

  - Inward-looking (portal): the focus is on the internal functions of data portals.

  - Outward-looking (ecosystem): the focus extends to interactions with external actors (citizens, companies, organizations).

- Phases of the data life cycle, which can be divided into pre-processing, exploration, transformation and maintenance.

In summary, the report identifies these eight types of AI use in government open data, based on perspective and phase in the data lifecycle.

Figure 1. Eight uses of AI to improve government open data. Source: presentation “Data for AI or AI for data: artificial intelligence as a catalyst for open government ecosystems”, based on the report of the same name, from EU Open Data Days 2025.

Each of these uses is detailed below:

1. Portal curator

This application focuses on pre-processing data within the portal. AI helps organize, clean, anonymize, and tag datasets before publication. Some examples of tasks are:

- Automation and improvement of data publication tasks.

- Performing auto-tagging and categorization functions.

- Data anonymization to protect privacy.

- Automatic cleaning and filtering of datasets.

- Feature extraction and missing data handling.

2. Ecosystem data retriever

Also in the pre-processing phase, but with an external focus, AI expands the coverage of portals by identifying and collecting information from diverse sources. Some tasks are:

- Retrieve structured data from legal or regulatory texts.

- News mining to enrich datasets with contextual information.

- Integration of urban data from sensors or digital records.

- Discovery and linking of heterogeneous sources.

- Conversion of complex documents into structured information.

3. Portal explorer

In the exploration phase, AI systems can also make it easier to find and interact with published data, with a more internal approach. Some use cases:

- Develop semantic search engines to locate datasets.

- Implement chatbots that guide users in data exploration.

- Provide natural language interfaces for direct queries.

- Optimize the portal's internal search engines.

- Use language models to improve information retrieval.

4. Ecosystem connector

Also operating in the exploration phase, AI acts as a bridge between actors and ecosystem resources. Some examples are:

- Recommend relevant datasets to researchers or companies.

- Identify potential partners based on common interests.

- Extract emerging themes to support policymaking.

- Visualize data from multiple sources in interactive dashboards.

- Personalize data suggestions based on social media activity.

5. Portal linker

This functionality focuses on the transformation of data within the portal. Its function is to facilitate the combination and presentation of information for different audiences. Some tasks are:

- Convert data into knowledge graphs (structures that connect related information, known as Linked Open Data).

- Summarize and simplify data with NLP (Natural Language Processing) techniques.

- Apply automatic reasoning to generate derived information.

- Enhance multivariate visualization of complex datasets.

- Integrate diverse data into accessible information products.

6. Ecosystem value developer

In the transformation phase and with an external perspective, AI generates products and services based on open data that provide added value. Some tasks are:

- Suggest appropriate analytical techniques based on the type of dataset.

- Assist in the coding and processing of information.

- Create dashboards based on predictive analytics.

- Ensure the correctness and consistency of the transformed data.

- Support the development of innovative digital services.

7. Portal monitor

This use focuses on portal maintenance, from an internal perspective. Its role is to ensure quality, consistency, and compliance with standards. Some tasks are:

- Detect anomalies and outliers in published datasets.

- Evaluate the consistency of metadata and schemas.

- Automate data updating and purification processes.

- Identify incidents in real time for correction.

- Reduce maintenance costs through intelligent monitoring.
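To make the first task in this list more concrete, the following sketch flags outliers in a numeric column. The study does not prescribe any particular method, so this uses the classic interquartile range (IQR) rule as a simple statistical baseline rather than an AI model. The function name and the sample readings are hypothetical.

```python
from statistics import quantiles


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    q1, _, q3 = quantiles(values, n=4)  # quartile cut points
    spread = q3 - q1
    low, high = q1 - k * spread, q3 + k * spread
    return [v for v in values if v < low or v > high]


# Hypothetical readings from a published dataset, with one suspicious entry.
readings = [21, 23, 22, 24, 20, 22, 23, 250, 21, 22]
print(iqr_outliers(readings))
```

A portal could run a check of this kind whenever a dataset is updated and route flagged values to a human reviewer, leaving more sophisticated models for cases where a simple rule is not enough.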

8. Ecosystem engager

Finally, this use operates in the maintenance phase, but with an outward-looking perspective. It seeks to promote citizen participation and continuous interaction. Some tasks are:

- Predict usage patterns and anticipate user needs.

- Provide personalized feedback on datasets.

- Facilitate citizen auditing of data quality.

- Encourage participation in open data communities.

- Identify user profiles to design more inclusive experiences.
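The eight uses described above result from crossing the two perspectives with the four life cycle phases. One way to visualise this is as a simple lookup table. The sketch below is only an illustration of the typology: the role names come from the text, while the class and function names are our own.

```python
from enum import Enum


class Perspective(Enum):
    INWARD = "portal"
    OUTWARD = "ecosystem"


class Phase(Enum):
    PRE_PROCESSING = "pre-processing"
    EXPLORATION = "exploration"
    TRANSFORMATION = "transformation"
    MAINTENANCE = "maintenance"


# Each (perspective, phase) pair maps to one of the eight uses of AI.
AI_ROLES = {
    (Perspective.INWARD, Phase.PRE_PROCESSING): "Portal curator",
    (Perspective.OUTWARD, Phase.PRE_PROCESSING): "Ecosystem data retriever",
    (Perspective.INWARD, Phase.EXPLORATION): "Portal explorer",
    (Perspective.OUTWARD, Phase.EXPLORATION): "Ecosystem connector",
    (Perspective.INWARD, Phase.TRANSFORMATION): "Portal linker",
    (Perspective.OUTWARD, Phase.TRANSFORMATION): "Ecosystem value developer",
    (Perspective.INWARD, Phase.MAINTENANCE): "Portal monitor",
    (Perspective.OUTWARD, Phase.MAINTENANCE): "Ecosystem engager",
}


def role_for(perspective, phase):
    """Look up the AI use that corresponds to a perspective and a phase."""
    return AI_ROLES[(perspective, phase)]


print(role_for(Perspective.OUTWARD, Phase.MAINTENANCE))
```

A portal team could use a table like this as a checklist to see which combinations it already covers and which remain unexplored.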

What does the evidence tell us?

The study is based on a review of more than 70 academic papers examining the intersection between AI and OGD (open government data). From these cases, the authors observe that:

- Some of the defined profiles, such as portal curator, portal explorer and portal monitor, are relatively mature and have multiple examples in the literature.

- Others, such as ecosystem value developer and ecosystem engager, are less explored, although they have the most potential to generate social and economic impact.

- Most applications today focus on automating specific tasks, but there is a lot of scope to design more comprehensive architectures, combining several types of AI in the same portal or across the entire data lifecycle.

From an academic point of view, this typology provides a common language and conceptual structure to study the relationship between AI and open data. It allows identifying gaps in research and guiding future work towards a more systemic approach.

In practice, the framework is useful for:

- Data portal managers: helps them identify what types of AI they can implement according to their needs, from improving the quality of datasets to facilitating interaction with users.

- Policymakers: guides them on how to design AI adoption strategies in open data initiatives, balancing efficiency, transparency, and participation.

- Researchers and developers: it offers them a map of opportunities to create innovative tools that address specific ecosystem needs.

Limitations and next steps of the synergy between AI and open data

In addition to the advantages, the study recognizes some pending issues that, in a way, serve as a roadmap for the future. To begin with, several of the applications that have been identified are still in early stages or are conceptual. And, perhaps most relevantly, the debate on the risks and ethical dilemmas of the use of AI in open data has not yet been addressed in depth: bias, privacy, technological sustainability.

In short, the combination of AI and open data is still a field under construction, but with enormous potential. The key will be to move from isolated experiments to comprehensive strategies, capable of generating social, economic and democratic value. AI, in this sense, does not work independently of open data: it multiplies it and makes it more relevant for governments, citizens and society in general.

Open data is a fundamental fuel for contemporary digital innovation, creating information ecosystems that democratise access to knowledge and foster the development of advanced technological solutions.

However, the mere availability of data is not enough. Building robust and sustainable ecosystems requires clear regulatory frameworks, sound ethical principles and management methodologies that ensure both innovation and the protection of fundamental rights. Therefore, the specialised documentation that guides these processes becomes a strategic resource for governments, organisations and companies seeking to participate responsibly in the digital economy.

In this post, we compile recent reports, produced by leading organisations in both the public and private sectors, which offer these key orientations. These documents not only analyse the current challenges of open data ecosystems, but also provide practical tools and concrete frameworks for their effective implementation.

State and evolution of the open data market

Understanding the current state of the open data ecosystem at European and national level, and how it has changed, is important for making informed decisions and adapting to the needs of the industry. In this regard, the European Commission regularly publishes a Data Markets Report. The latest version is dated December 2024, although use cases exemplifying the potential of data in Europe are also published periodically (the latest in February 2025).

On the other hand, from a European regulatory perspective, the latest annual report on the implementation of the Digital Markets Act (DMA) takes a comprehensive view of the measures adopted to ensure fairness and competitiveness in the digital sector. This document helps to understand how the regulatory framework that directly affects open data ecosystems is taking shape.

At the national level, the ASEDIE sectoral report on the "Data Economy in its infomediary scope" 2025 provides quantitative evidence of the economic value generated by open data ecosystems in Spain.

The importance of open data in AI

It is clear that the intersection between open data and artificial intelligence is a reality that poses complex ethical and regulatory challenges that require collaborative and multi-sectoral responses. In this context, developing frameworks to guide the responsible use of AI becomes a strategic priority, especially when these technologies draw on public and private data ecosystems to generate social and economic value. Here are some reports that address this objective:

- Generative AI and Open Data: Guidelines and Best Practices: the U.S. Department of Commerce has published a guide with principles and best practices on how to apply generative artificial intelligence ethically and effectively in the context of open data. The document provides guidelines for optimising the quality and structure of open data in order to make it useful for these systems, including transparency and governance.

- Good Practice Guide for the Use of Ethical Artificial Intelligence: This guide demonstrates a comprehensive approach that combines strong ethical principles with clear and enforceable regulatory precepts. In addition to the theoretical framework, the guide serves as a practical tool for implementing AI systems responsibly, considering both the potential benefits and the associated risks. Collaboration between public and private actors ensures that recommendations are both technically feasible and socially responsible.

- Enhancing Access to and Sharing of Data in the Age of AI: this analysis by the Organisation for Economic Co-operation and Development (OECD) addresses one of the main obstacles to the development of artificial intelligence: limited access to quality data and effective models. Through examples, it identifies specific strategies that governments can implement to significantly improve access to, and sharing of, data and certain AI models.

- A Blueprint to Unlock New Data Commons for AI: the Open Data Policy Lab has produced a practical guide that focuses on the creation and management of data commons specifically designed to enable public-interest use cases of artificial intelligence. The guide offers concrete methodologies on how to manage data in a way that facilitates the creation of these data commons, including aspects of governance, technical sustainability and alignment with public interest objectives.

- Practical guide to data-driven collaborations: the Data for Children Collaborative initiative has published a step-by-step guide to developing effective data collaborations, with a focus on social impact. It includes real-world examples, governance models and practical tools to foster sustainable partnerships.

In short, these reports define the path towards more mature, ethical and collaborative data systems. From growth figures for the Spanish infomediary sector to European regulatory frameworks to practical guidelines for responsible AI implementation, all these documents share a common vision: the future of open data depends on our ability to build bridges between the public and private sectors, between technological innovation and social responsibility.

Data reuse continues to grow in Spain, as confirmed by the latest report of the Multisectoral Association of Information (ASEDIE), which analyses and describes the situation of the infomediary sector in the country. The document, now in its 13th edition, was presented last Friday, 4 April, at an event highlighting the rise of the data economy in the current landscape.

The main points of the report are set out below.

An overall profit of 146 million euros in 2023

Since 2013, ASEDIE's Infomediary sector report has been continuously monitoring this sector, made up of companies and organisations that reuse data - generally from the public sector, but also from private sources - to generate value-added products or services. Under the title "Data Economy in its infomediary scope", this year's report underlines the importance of public-private partnerships in driving the data economy and presents relevant data on the current state of the sector.

It should be noted that the financial information used for sales and employees corresponds to the financial year 2023, as financial information for the year 2024 was not yet available at the time of reporting. The main conclusions are:

- Since the first edition of the report, the number of infomediaries identified has risen from 444 to 757, an increase of 70%. This growth reflects the sector's dynamism: despite annual peaks and troughs, the overall trend is positive and consolidates the recovery after the pandemic, although there is still room for development.

- The sector is present in all the country's Autonomous Communities, as well as in the Autonomous City of Melilla. The Community of Madrid leads the ranking with 38% of infomediaries, followed by Catalonia, Andalusia and the Community of Valencia, which represent 15%, 11% and 9%, respectively. The remaining 27% is distributed among the other autonomous communities.

- 75% of infomediary companies operate in the sub-sectors of geographic information, market, economic and financial studies, and infomediation informatics (focused on the development of technological solutions for the management, analysis, processing and visualisation of data).

- The infomediary sector shows a trend of growth and consolidation, with 66% of companies operating for less than 20 years. This figure breaks down into 32% that are between 11 and 20 years old and 34% that are less than a decade old. Furthermore, the increase in companies between 11 and 40 years old indicates that more companies have managed to sustain themselves over time.

- In terms of sales, the estimated volume amounts to 2,646 million euros, and average sales have grown by 10.4%. The average turnover per company is over 4.4 million euros, while the median is 442,000 euros. Compared to the previous year, the average has increased by 200,000 euros, while the median has decreased by 30,000 euros.

- It is estimated that the infomediary sector employs some 24,620 people, 64% of whom are concentrated in three sub-sectors. These figures represent a growth of 6% over the previous year. Although the overall average is 39 employees per company, the median per sub-sector is no more than 6, indicating that much of the employment is concentrated in a small number of large companies. The average turnover per employee was 108,000 euros this year, an increase of 8% compared to the previous year.

- The subscribed capital of the sector amounts to EUR 252 million. This represents an increase of 6%, which breaks the negative trend of recent years.

- 74% of the companies have reported profits. The aggregate net profit of the 539 companies for which data is available exceeded 145 million euros.
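As a quick cross-check of the figures above, the following Python sketch recomputes two of the reported ratios from the numbers quoted in the list. The function and variable names are illustrative only, and small differences from the published values are to be expected, since the report presumably works from unrounded data.

```python
def percent_change(old: float, new: float) -> float:
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100


def per_capita(total: float, count: int) -> float:
    """Total divided evenly by a head count."""
    return total / count


# Figures quoted in the report summary above.
infomediaries_first_edition = 444
infomediaries_now = 757
estimated_sales_eur = 2_646_000_000
estimated_employees = 24_620

growth = percent_change(infomediaries_first_edition, infomediaries_now)
sales_per_employee = per_capita(estimated_sales_eur, estimated_employees)

print(f"Growth in identified infomediaries: {growth:.1f}%")
print(f"Estimated sales per employee: {sales_per_employee:,.0f} euros")
```

Both results land close to the values quoted in the report (an increase of about 70% and a turnover per employee of around 108,000 euros).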

The following visual summarises some of this data:

Figure 1. Source: Asedie Infomediary Sector Report. "Data Economy in its infomediary scope" (2025).

Significant advances in the ASEDIE Top 10

The ASEDIE Top 10 aims to identify and promote the openness of selected datasets for reuse. This initiative seeks to foster collaboration between the public and private sectors, facilitating access to information that can generate significant economic and social benefits. Its development has taken place in three phases, each focusing on different datasets, the evolution of which has been analysed in this report:

- Phase 1 (2019), which promoted the opening of databases of associations, cooperatives and foundations. Currently, 16 Autonomous Communities allow access to the three databases and 11 already offer NIF data. Access to the cooperatives database is still missing in one community.

- Phase 2 (2020), focusing on datasets related to energy efficiency certificates, SAT registers and industrial estates. All communities have made energy efficiency data available to citizens, but industrial estate data is still missing in one community and SAT register data in three.

- Phase 3 (2023), focusing on datasets of economic agents, education centres, health centres and ERES-ERTES (Expediente de Regulación de Empleo y Expediente de Regulación Temporal de Empleo). Progress has been made compared to last year, but work is ongoing to achieve greater uniformity of information.

New success stories and best practices

The report concludes with a section compiling several success stories of products and services developed with public information and contributing to the growth of our economy, for example:

- Energy Efficiency Improvement Calculator: makes it possible to identify the necessary interventions and estimate the associated costs and the impact on the energy efficiency certification (EEC).

- GEOPUBLIC: a tool designed to help Public Administrations better understand their territory. It allows for an analysis of strengths, opportunities and challenges in comparison with other similar regions, provinces or municipalities. Thanks to its ability to segment business and socio-demographic data at different scales, it facilitates the monitoring of the life cycle of enterprises and their influence on the local economy.

- New website of the DBK sectoral observatory: improves the search for sectoral information, thanks to the continuous monitoring of some 600 Spanish and Portuguese sectors. Every year it publishes more than 300 in-depth reports and 1,000 sectoral information sheets.

- Data assignment and repair service: facilitates the updating of information on the customers of electricity retailers by allowing this information to be enriched with the cadastral reference associated with the supply point. This complies with a requirement of the State Tax Administration Agency (AEAT).

The report also includes good practices of public administrations such as:

- The Callejero Digital de Andalucía Unificado (CDAU), which centralises, standardises and keeps the region's geographical and postal data up to date.

- The Geoportal of the Madrid City Council, which integrates metadata, OGC map services, a map viewer and a geolocator that comply with the INSPIRE Directive and the LISIGE law. It is easy to use for both professionals and citizens thanks to its intuitive and accessible interface.

- The Canary Statistics Institute (ISTAC), which has made an innovative technological ecosystem available to society. It features eDatos, an open source infrastructure for statistical data management ensuring transparency and interoperability.

- The Spanish National Forest Inventory (IFN) and its web application Download IFN, a basic resource for forest management, research and education. It allows easy filtering of plots for downloading.

- The Statistical Interoperability Node, which provides legal, organisational, semantic and technical coverage for the integration of the different information systems of the different levels of administrative management.

- The Open Cohesion School, an innovative educational programme of the Generalitat de Catalunya aimed at secondary school students. Students investigate publicly funded projects to analyse their impact, while developing digital skills, critical thinking and civic engagement.

- The National Publicity System for Public Subsidies and Grants, which has unveiled a completely redesigned website. It has improved its functionality with REST API queries and downloads. More information here.

In conclusion, the infomediary sector in Spain is consolidating its position as a key driver for the economy, showing a solid evolution and steady growth. With a record number of companies and a turnover exceeding 2.6 billion euros in 2023, the sector not only generates employment, but also positions itself as a benchmark for innovation. Information as a strategic resource drives a more efficient and connected economic future. Its proper use, always from an ethical perspective, promises to continue to be a source of progress both nationally and internationally.

Open data portals help municipalities to offer structured and transparent access to the data they generate in the exercise of their functions and in the provision of the services they are responsible for, while also fostering the creation of applications, services and solutions that generate value for citizens, businesses and public administrations themselves.

The report aims to provide a practical guide for municipal administrations to design, develop and maintain effective open data portals, integrating them into the overall smart city strategy. The document is structured in several sections ranging from strategic planning to technical and operational recommendations necessary for the creation and maintenance of open data portals. Some of the main keys are:

Fundamental principles

The report highlights the importance of integrating open data portals into municipal strategic plans, aligning portal objectives with local priorities and citizens' expectations. It also recommends drawing up a Plan of measures for the promotion of openness and re-use of data (RISP Plan, by its Spanish acronym), including governance models, clear licences, an open data agenda and actions to stimulate re-use of data. Finally, it emphasises the need for trained staff in strategic, technical and functional areas, capable of managing, maintaining and promoting the reuse of open data.

General requirements

In terms of general requirements to ensure the success of the portal, the report stresses the importance of offering high-quality, consistent and up-to-date data in open formats such as CSV and JSON, but also in XLS; of favouring interoperability with national and international platforms through open standards such as DCAT-AP; and of guaranteeing effective accessibility of the portal through an intuitive and inclusive design, adapted to different devices. It also points out the obligation to strictly comply with privacy and data protection regulations, especially the General Data Protection Regulation (GDPR).
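To give an idea of what describing a dataset with DCAT vocabulary can look like, here is a minimal Python sketch that builds a JSON-LD record with one CSV and one JSON distribution. It is not taken from the report: the helper name `build_dataset_record` and all example.org URLs are hypothetical, and a record that fully conforms to DCAT-AP needs additional properties and validation that are not shown here.

```python
import json

# Namespace prefixes for the DCAT and Dublin Core Terms vocabularies.
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/",
}


def build_dataset_record(dataset_uri, title, description, distributions):
    """Return a JSON-LD dict describing one dataset and its downloadable files.

    `distributions` is a list of (access_url, media_type_uri) pairs.
    """
    return {
        "@context": CONTEXT,
        "@id": dataset_uri,
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:description": description,
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:accessURL": {"@id": url},
                "dcat:mediaType": {"@id": media_type_uri},
            }
            for url, media_type_uri in distributions
        ],
    }


# Hypothetical municipal dataset published in two open formats.
record = build_dataset_record(
    dataset_uri="https://opendata.example.org/dataset/street-trees",
    title="Street trees",
    description="Inventory of trees on public streets.",
    distributions=[
        ("https://opendata.example.org/files/street-trees.csv",
         "https://www.iana.org/assignments/media-types/text/csv"),
        ("https://opendata.example.org/files/street-trees.json",
         "https://www.iana.org/assignments/media-types/application/json"),
    ],
)

print(json.dumps(record, indent=2))
```

Publishing the same metadata structure for every dataset is what allows national and European catalogues to harvest a municipal portal automatically.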

To promote re-use, the report advises fostering dynamic ecosystems through community events such as hackathons and workshops, highlighting successful examples of practical application of open data. Furthermore, it insists on the need to provide useful tools such as APIs for dynamic queries, interactive data visualisations and full documentation, as well as to implement sustainable funding and maintenance mechanisms.

Technical and functional guidelines

Regarding technical and functional guidelines, the document details the importance of building a robust and scalable technical infrastructure based on cloud technologies, using diverse storage systems such as relational databases, NoSQL and specific solutions for time series or geospatial data. It also highlights the importance of integrating advanced automation tools to ensure consistent data quality and recommends specific solutions to manage real-time data from the Internet of Things (IoT).

In relation to the usability and structure of the portal, the importance of a user-centred design is emphasised, with clear navigation and a powerful search engine to facilitate quick access to data. Furthermore, it stresses the importance of complying with international accessibility standards and providing tools that simplify interaction with data, including clear graphical displays and efficient technical support mechanisms.

The report also highlights the key role of APIs as fundamental tools to facilitate automated and dynamic access to portal data, offering granular queries, clear documentation, robust security mechanisms and reusable standard formats. It also suggests a variety of tools and technical frameworks to implement these APIs efficiently.
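As an illustration of what a granular query might look like from the reuser's side, the sketch below builds a request URL with filters and pagination using only the Python standard library. The endpoint layout, the dataset identifier and the parameter names (`limit`, `offset`) are assumptions made for the example and do not correspond to any specific portal or to a design prescribed by the report.

```python
from urllib.parse import urlencode


def build_query_url(base_url, dataset_id, filters=None, limit=100, offset=0):
    """Build a GET URL selecting a filtered, paginated slice of one dataset."""
    # Sort the filters so the same query always yields the same URL.
    params = dict(sorted((filters or {}).items()))
    params["limit"] = limit
    params["offset"] = offset
    return f"{base_url.rstrip('/')}/datasets/{dataset_id}/records?{urlencode(params)}"


# Hypothetical portal and dataset: first 50 records for one district and year.
url = build_query_url(
    "https://opendata.example.org/api/v1",
    "air-quality",
    filters={"district": "Centro", "year": 2024},
    limit=50,
)
print(url)
```

Keeping filters and pagination in the query string lets reusers request only the slice they need instead of downloading the whole dataset.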

Another critical aspect highlighted in the document is the identification and prioritisation of datasets for publication, as the progressive planning of data openness allows adjusting technical and organisational processes in an agile way, starting with the data of greatest strategic relevance and citizen demand.

Finally, the guide recommends establishing a system of metrics and indicators according to the UNE 178301:2015 standard to assess the degree of maturity and the real impact of open data portals. These metrics span strategic, legal, organisational, technical, economic and social domains, providing a holistic approach to measure both the effectiveness of data publication and its tangible impact on society and the local economy.

Conclusions

In conclusion, the report provides a strategic, technical and practical framework that serves as a reference for the deployment of municipal open data portals, so that cities can maximise their potential as drivers of economic and social development. In addition, the integration of artificial intelligence at various points in open data portal projects represents a strategic opportunity to expand their capabilities and generate a greater impact on citizens.

You can read the full report here.

Open source artificial intelligence (AI) is an opportunity to democratise innovation and avoid the concentration of power in the technology industry. However, its development is highly dependent on the availability of high quality datasets and the implementation of robust data governance frameworks. A recent report by Open Future and the Open Source Initiative (OSI) analyses the challenges and opportunities at this intersection, proposing solutions for equitable and accountable data governance. You can read the full report here.

In this post, we will analyse the most relevant ideas of the document, as well as the advice it offers to ensure correct and effective data governance in open source artificial intelligence and to take advantage of all its benefits.

The challenges of data governance in AI

Despite the vast amount of data available on the web, accessing and using it to train AI models poses significant ethical, legal and technical challenges. For example:

- Balancing openness and rights: In line with the Data Governance Regulation (DGA), broad access to data should be guaranteed without compromising intellectual property rights, privacy and fairness.

- Lack of transparency and openness standards: It is important that models labelled as "open" meet clear criteria for transparency in the use of data.

- Structural biases: Many datasets reflect linguistic, geographic and socio-economic biases that can perpetuate inequalities in AI systems.

- Environmental sustainability: The intensive use of resources to train AI models poses sustainability challenges that must be addressed with more efficient practices.

- Engage more stakeholders: Currently, developers and large corporations dominate the conversation on AI, leaving out affected communities and public organisations.

Having identified the challenges, the report proposes a strategy for achieving the main goal: adequate data governance in open source AI models. This approach is based on two fundamental pillars.

Towards a new paradigm of data governance

Currently, access to and management of data for training AI models is marked by increasing inequality. While some large corporations have exclusive access to vast data repositories, many open source initiatives and marginalised communities lack the resources to access quality, representative data. To address this imbalance, a new approach to data management and use in open source AI is needed. The report highlights two fundamental changes in the way data governance is conceived:

On the one hand, it proposes adopting a data commons approach, that is, an access model that ensures a balance between data openness and rights protection. To this end, it would be important to use innovative licences that allow data sharing without undue exploitation, to create governance structures that regulate access to and use of data and, finally, to implement compensation mechanisms for communities whose data is used in artificial intelligence.

On the other hand, it is necessary to transcend the vision focused on AI developers and include more actors in data governance, such as:

- Data owners and content-generating communities.

- Public institutions that can promote openness standards.

- Civil society organisations that ensure fairness and responsible access to data.

By adopting these changes, the AI community will be able to establish a more inclusive system, in which the benefits of data access are distributed in a manner that is equitable and respectful of the rights of all stakeholders. According to the report, the implementation of these models will not only increase the amount of data available for open source AI, but will also encourage the creation of fairer and more sustainable tools for society as a whole.

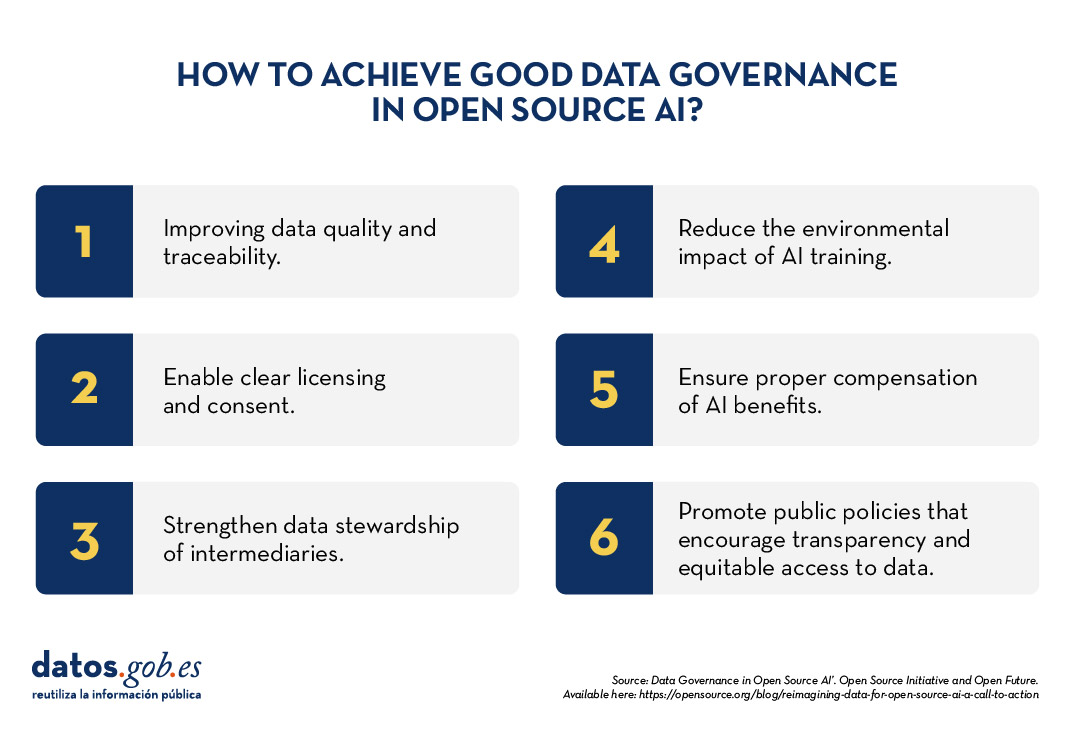

Advice and strategy

To make robust data governance effective in open source AI, the report proposes six priority areas for action:

- Data preparation and traceability: Improve the quality and documentation of data sets.

- Licensing and consent mechanisms: allow data creators to clearly define their use.

- Data stewardship: strengthen the role of intermediaries who manage data ethically.

- Environmental sustainability: Reduce the impact of AI training with efficient practices.

- Compensation and reciprocity: ensure that the benefits of AI reach those who contribute data.

- Public policy interventions: promote regulations that encourage transparency and equitable access to data.

Open source artificial intelligence can drive innovation and equity, but achieving this requires a more inclusive and sustainable approach to data governance. Adopting data commons models and broadening the ecosystem of actors will help build AI systems that are fairer, more representative and accountable to the common good.

The report published by Open Future and Open Source Initiative calls for action from developers, policymakers and civil society to establish shared standards and solutions that balance open data with the protection of rights. With strong data governance, open source AI will be able to deliver on its promise to serve the public interest.

The last days of the year are always a good time to look back and assess the progress made. If a few weeks ago we took stock of what happened in the Aporta initiative, now it is time to compile the news related to data sharing, open data and the technologies linked to them.

Six months ago, we already made a first collection of milestones in the sector. On this occasion, we will summarise some of the innovations, improvements and achievements of the last half of the year.

Regulating and driving artificial intelligence

Artificial intelligence (AI) continues to be one of the fields where new advances are being made every day. This is a relatively new and booming sector in need of regulation. Therefore, last July, the European Union published the Artificial Intelligence Regulation, a piece of legislation that will shape the European and global regulatory environment. Aligned with Europe, Spain had already presented its new Artificial Intelligence Strategy 2024 a few months earlier, with the aim of establishing a framework to accelerate the development and expansion of AI in Spain.

In addition, in October, Spain took over the co-presidency of the Open Government Partnership. Its roadmap includes promoting innovative ideas, taking advantage of the opportunities offered by open data and artificial intelligence. As part of the position, Spain will host the next OGP World Summit in Vitoria.

Innovative new data-driven tools

Data drives a host of disruptive technological tools that can generate benefits for all citizens. Some of those launched by public bodies in recent months include:

- The Ministry of Transport and Sustainable Mobility has started to use Big Data technology to analyse road traffic and improve investments and road safety.

- The Principality of Asturias has announced a plan to use Artificial Intelligence to end traffic jams during the summer, through the development of a digital twin.

- The Government of Aragon presented a new tourism intelligence system, which uses Big Data and AI to improve decision-making in the sector.

- The Region of Murcia has launched “Murcia Business Insight”, a business intelligence application that allows dynamic analysis of data on the region's companies: turnover, employment, location, sector of activity, etc.

- The Granada City Council has used Artificial Intelligence to improve sewerage. The aim is to achieve "more efficient" maintenance planning and execution, with on-site data.

- The Segovia City Council and Visa have signed a collaboration agreement to develop an online tool with real, aggregated and anonymous data on the spending patterns of foreign Visa cardholders in the capital. This initiative will provide relevant information to help tailor strategies to promote international tourism.

Researchers and students from various centers have also reported advances resulting from working with data:

- Researchers from the Center for Genomic Regulation (CRG) in Barcelona, the University of the Basque Country (UPV/EHU), the Donostia International Physics Center (DIPC) and the Fundación Biofísica Bizkaia have trained an algorithm to detect tissue alterations in the early stages and improve cancer diagnosis.

- Researchers from the Spanish National Research Council (CSIC) and KIDO Dynamics have launched a project to extract metadata from mobile antennas to understand the flow of people in natural landscapes. The objective is to identify and monitor the impact of tourism.

- A student at the University of Valladolid (UVa) has designed a project to improve the management and analysis of forest ecosystems in Spain at the local level, by converting municipal boundaries into a linked open data format. The results are available for re-use.

Advances in data spaces

The Ministry for Digital Transformation and the Civil Service and, specifically, the Secretariat of State for Digitalisation and Artificial Intelligence continues to make progress in the implementation of data spaces, through various actions:

- A Plan for the Promotion of Sectoral Data Spaces has been presented to promote secure data sharing.

- The development of Data Spaces for Intelligent Urban Infrastructures (EDINT) has been launched. This project, which will be carried out through the Spanish Federation of Municipalities and Provinces (FEMP), contemplates the creation of a multi-sectoral data space that will bring together all the information collected by local entities.

- In the field of digitalisation, aid has been launched for the digital transformation of strategic productive sectors through the development of technological products and services for data spaces.

Functionalities that bring data closer to reusers

The open data platforms of the various agencies have also introduced new developments, such as new datasets, functionalities, strategies or reports:

- The Ministry for Ecological Transition and the Demographic Challenge has launched a new application for viewing the National Air Quality Index (AQI) in real time. It includes health recommendations for the general population and the sensitive population.

- The Andalusian Government has published a "Guide for the design of Public Policy Pilot Studies". It proposes a methodology for designing pilot studies and a system for collecting evidence for decision-making.

- The Government of Catalonia has initiated steps to implement a new data governance model that will improve relations with citizens and companies.

- The Madrid City Council is implementing a new 3D cartography and thermal map. In the Blog IDEE (Spatial Data Infrastructure of Spain) they explain how this 3D model of the capital was created using various data capture technologies.

- The Canary Islands Statistics Institute (ISTAC) has published 6,527 thematic maps with labor indicators on the Canary Islands in its open data catalog.

- Open Data Initiative and the Democratic Union of Pensioners and Retirees of Spain, with support from the Ministry of Social Rights, Consumption and Agenda 2030, presented the first Data website of the Data Observatory x Seniors. Its aim is to facilitate the analysis of healthy ageing in Spain and strategic decision-making. The Barcelona Initiative also launched a challenge to identify 50 datasets related to healthy ageing, a project supported by the Barcelona Provincial Council.

- The Centre for Technological Development and Innovation (CDTI) has presented a dashboard in beta phase with open data in exploitable format.

In addition, work continues to promote the opening up of data from various institutions:

- Asedie and the King Juan Carlos University (Madrid) have launched the Open Data Reuse Observatory to promote the reuse of open data. It already has the commitment of the Madrid City Council and they are looking for more institutions to join their Manifesto.

- The Cabildo of Tenerife and the University of La Laguna have developed a Sustainable Mobility Strategy in the Macizo de Anaga Biosphere Reserve. The aim is to obtain real-time data in order to take measures adapted to demand.

Data competitions and events to encourage the use of open data

Summer was the time chosen by various public bodies to launch competitions for products and/or services based on open data. These include the following:

- The Community of Madrid held DATAMAD 2024 at the Universidad Rey Juan Carlos de Madrid. The event included a workshop on how to reuse open data and a datathon.

- More than 200 students registered for the I Malackathon, organised by the University of Malaga, a competition that awarded projects that used open data to propose solutions for water resource management.

- The Junta de Castilla y León held the VIII Open Data Competition, whose winners were announced in November.

- The II UniversiData Datathon was also launched. Sixteen finalists have been selected, and the winners will be announced on 13 February 2025.

- The Cabildo of Tenerife also organised its I Open Data Competition: Ideas for reuse. They are currently evaluating the applications received. They will later launch their 2nd Open Data Competition: APP development.

- The Government of Euskadi held its V Open Data Competition. The finalists in both the Applications and Ideas categories are now known.

Also in these months there have been multiple events, which can be seen online, such as:

- The III GeoEuskadi Congress and XVI Iberian Conference on Spatial Data Infrastructures (JIIDE).

- DATAforum Justice 2024.

Other examples of events that were held but are not available online are the III Congress & XIV Conference of R Users, the Novagob 2024 Public Innovation Congress, DATAGRI 2024 or the Data Governance for Local Entities Conference, among others.

These are just a few examples of the activity carried out during the last six months in the Spanish data ecosystem. We encourage you to share other experiences you know of in the comments or via our email address dinamizacion@datos.gob.es.

The European data portal, data.europa.eu, has published the Open Data Maturity Index 2024, an annual report that assesses the level of open data maturity of European countries.

The survey covered 34 countries: the 27 EU Member States, four candidate countries (Bosnia and Herzegovina, Albania, Serbia and Ukraine) and three European Free Trade Association countries (Iceland, Norway and Switzerland).

In this year's edition, Spain obtains an overall score of 95%, which places it sixth overall. As reflected in the following image, for yet another year Spain is in the group of so-called trendsetter countries, those with the best scores in the ranking, which also includes France, Poland, Ukraine, Slovakia, Ireland, Lithuania, Czech Republic, Italy, Estonia and Cyprus.

Figure 1: Groups of participating countries according to their overall open data maturity score.

Above the EU27 average in all four dimensions analysed

The Policy Dimension, focusing on open data policies in different countries, analyses the existence of national governance models for open data management and the measures that have been put in place to implement existing strategies. In these aspects, Spain scored 96% compared to the European average of 91%. The most positive aspects identified are:

- Alignment with European policies: The report highlights that Spain is fully aligned with the European Open Data Directive, among other recent data-related regulations that have come into force.

- Well-defined action plans: It highlights the strategies deployed in different public administrations focused on incentivising the publication and re-use of data generated in real time and data from citizens.

- Strengthening competences: It focuses on how Spain has developed training programmes to improve the skills of civil servants in managing and publishing open data, ensuring quality standards and fostering a data culture in public administration.

The Impact Dimension analyses the activities carried out to monitor and measure both the re-use of open data and the impact created as a result of this re-use. Year after year, this has been the least mature dimension in Europe. Against an EU average of 80%, Spain obtains a score of 100% thanks to the development of numerous actions, among which the following stand out:

- Multi-sectoral collaboration: The report highlights how our country is presented as an example of interaction between public administrations, private companies and civil society, materialized in examples such as the close ties between the public sector and the Multisectoral Association of Information (ASEDIE), which produces year after year the ASEDIE report on the reuse of public sector information.

- Examples of re-use in key sectors: It shows how Spain has promoted numerous cases of open data reuse in strategic areas such as the environment, mobility and energy.

- Innovation in communication: The document highlights the effort invested in innovative communication strategies to raise awareness of the value of data among the public, and especially among young audiences. Also noteworthy is the production of podcasts featuring interviews with open data experts, accompanied by short promotional videos.

The Portal Dimension focuses on analysing the functionalities of the national platform to enable users to access open data and interact within the community. With 96% compared to 82% in the EU27, Spain is positioned as one of the European benchmarks in improving user experience and optimising national portals. The highlights of the report are:

- Sustainability and continuous improvement: According to the report, Spain has demonstrated a strong commitment to the sustainability of the national open data platform (datos.gob.es) and its adaptation to new technological demands.

- Interaction with users: One of the great strengths is the active promotion from the platform of the datasets available and of the channels through which users can request data that are not available in the National Catalogue.

Finally, the Quality Dimension examines the mechanisms for ensuring the quality of (meta)data. Here Spain scores 88% compared to 79% in the EU. Spain continues to stand out with initiatives that ensure the reliability, accessibility and standardisation of open data. Some of the strengths highlighted in the report are:

- Metadata automation: It highlights the use of advanced techniques for automatic metadata collection, reducing reliance on manual processes and improving accuracy and real-time updating.

- Guidelines for data and metadata quality: Spain provides many practical guidelines to improve the publication and quality of open data, including anonymisation techniques, publication in tabular formats (CSV) and the use of APIs.

Continuing to innovate to maintain Spain's advanced position in open data maturity

While Spain continues to stand out in the EU thanks to its open data ecosystem, efforts must continue. To this end, the same report identifies lines of work for countries, such as Spain, that seek to maintain their advanced position in open data maturity and to continue innovating. Among others, the following recommendations are made:

- Consolidate open data ecosystems: Strengthen thematic communities of providers and re-users by prioritising High Value Datasets (HVDs) in their development and promotion.

- Promote coordination: Align the national strategy with the needs of agencies and local authorities.

- Develop country-specific impact metrics: Collaborate with universities, research institutions and others to develop impact assessment frameworks.

- Measure and disseminate the impact of open data: Conduct regular (annual or biannual) assessments of the economic, environmental and social impact of open data, promoting the results to generate political support.

- Facilitate the participation of the open data community: Ensure that providers improve the publication of data based on user feedback and ratings.

- Increase the quality of data and metadata: Use automated tools and validations to improve publication standards, including adopting artificial intelligence technology to optimise metadata quality.

- Promote successful reuse cases: Publish and promote success stories in the use of open data, and interact with providers and users to identify innovative needs and applications.

Overall, the report shows good progress on open data across Europe. Although there are areas for improvement, the European open data landscape is consolidating, with Spain at the top of the table. The complete Open Data Maturity Index 2024 is available for further reading.

Data sandboxes are tools that provide us with environments to test new data-related practices and technologies, making them powerful instruments for managing and using data securely and effectively. These spaces are very useful in determining whether and under what conditions it is feasible to open the data. Some of the benefits they offer are:

- Controlled and secure environments: they provide a workspace where information can be explored and its usefulness and quality assessed before committing to wider sharing. This is particularly important in sensitive sectors, where privacy and data security are paramount.

- Innovation: they provide a safe space for experimentation and rapid prototyping, serving as a test bench where new ideas and data-driven solutions can be iterated, tested and refined quickly before being launched to the public.

- Multi-sectoral collaboration: they facilitate collaboration between diverse actors, including government entities, private companies, academia and civil society. This multi-sectoral approach helps to break down data silos and promotes the sharing of knowledge and good practices across sectors.

- Adaptive and scalable use: they can be adjusted to suit different data types, use cases and sectors, making them a versatile tool for a variety of data-driven initiatives.

- Cross-border data exchange: they provide a viable solution to manage the challenges of data exchange between different jurisdictions, especially with regard to international privacy regulations.

The report "Data Sandboxes: Managing the Open Data Spectrum" explores the concept of data sandboxes as a tool to strike the right balance between the benefits of open data and the need to protect sensitive information.

Value proposition for innovation

In addition to all the benefits outlined above, data sandboxes also offer a strong value proposition for organisations looking to innovate responsibly. These environments help us to improve data quality by making it easier for users to identify inconsistencies so that improvements can be made. They also contribute to reducing risks by providing secure environments for working with sensitive data. By fostering cross-disciplinary experimentation, collaboration and innovation, they contribute to increasing the usability of data and developing a data-driven culture within organisations. In addition, data sandboxes help reduce barriers to data access, improving transparency and accountability, which strengthens citizens' trust and leads to an expansion of data exchanges.

Types of data sandboxes and characteristics

Depending on the main objective when implementing a sandbox, there are three different types of sandboxes:

- Regulatory sandboxes, which allow companies and organisations to test innovative services under the close supervision of regulators in a specific sector or area.

- Innovation sandboxes, which are frequently used by developers to test new features and get quick feedback on their work.

- Research sandboxes, which make it easier for academia and industry to safely test new algorithms or models by focusing on the objective of their tests, without having to worry about breaching established regulations.

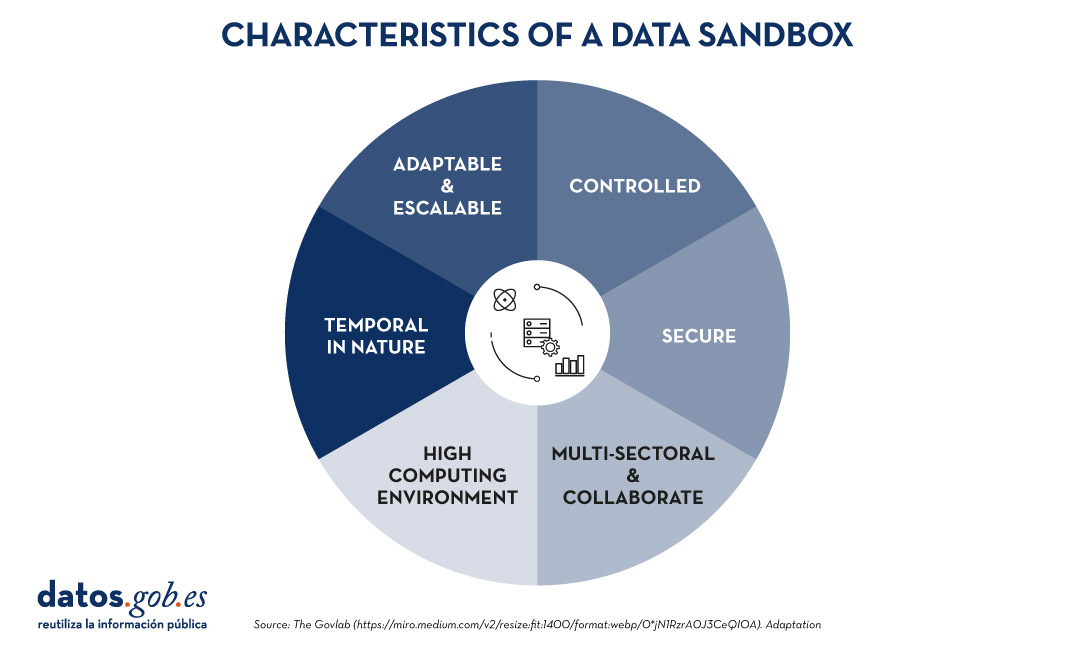

In any case, regardless of the type of sandbox we are working with, they are all characterised by the following common key aspects:

Figure 1. Characteristics of a data sandbox. Adaptation of a visual of The Govlab.

Each of these is described below:

- Controlled: these are restricted environments where sensitive data can be accessed and analysed securely, ensuring compliance with relevant regulations.

- Secure: they protect the privacy and security of data, often using anonymised or synthetic data.

- Collaborative: they facilitate collaboration between different regions, sectors and roles, strengthening data ecosystems.

- High computational capacity: they provide advanced computational resources capable of performing complex tasks on the data when needed.

- Temporal in nature: they are designed for temporary use with a short life cycle, allowing for rapid and focused experimentation that either concludes once its objective is achieved or becomes a new long-term project.

- Adaptable: they are flexible enough to be customised and scaled according to needs and to different data types, use cases and contexts.

Examples of data sandboxes

Data sandboxes have long been successfully implemented in multiple sectors across Europe and around the world, so we can easily find several examples of their implementation on our continent:

- Data science lab in Denmark: it provides access to sensitive administrative data useful for research, fostering innovation under strict data governance policies.

- TravelTech in Lithuania: an open access sandbox that provides tourism data to improve business and workforce development in the sector.

- INDIGO Open Data Sandbox: it promotes data sharing across sectors to improve social policies, with a focus on creating a secure environment for bilateral data sharing initiatives.

- Health data science sandbox in Denmark: a training platform for researchers to practice data analysis using synthetic biomedical data without having to worry about strict regulation.

Future direction and challenges

As we have seen, data sandboxes can be a powerful tool for fostering open data, innovation and collaboration, while ensuring data privacy and security. By providing a controlled environment for experimentation with data, they enable all interested parties to explore new applications and knowledge in a reliable and safe way. Sandboxes can therefore help overcome initial barriers to data access and contribute to fostering a more informed and purposeful use of data, thus promoting the use of data-driven solutions to public policy problems.

However, despite their many benefits, data sandboxes also present a number of implementation challenges. The main problems we might encounter in implementing them include:

- Relevance: ensure that the sandbox contains high quality and relevant data, and that it is kept up to date.

- Governance: establish clear rules and protocols for data access, use and sharing, as well as monitoring and compliance mechanisms.

- Scalability: successfully export the solutions developed within the sandbox and be able to translate them into practical applications in the real world.

- Risk management: comprehensively address all risks associated with the re-use of data throughout its lifecycle, without compromising its integrity.

Even so, as technologies and policies continue to evolve, it is clear that data sandboxes are set to be a useful tool and play an important role in managing the spectrum of data openness, thereby driving the use of data to solve increasingly complex problems. Furthermore, the future of data sandboxes will be influenced by new regulatory frameworks (such as the Data Act and the Data Governance Act) that reinforce data security and promote data reuse, and by integration with privacy-preserving and privacy-enhancing technologies that allow us to use data without exposing any sensitive information. Together, these trends will drive more secure data innovation within the environments provided by data sandboxes.

Content prepared by Carlos Iglesias, open data researcher and consultant, World Wide Web Foundation. The contents and views expressed in this publication are the sole responsibility of the author.