In the current landscape of data analysis and artificial intelligence, the automatic generation of comprehensive and coherent reports represents a significant challenge. While traditional tools allow for data visualization or generating isolated statistics, there is a need for systems that can investigate a topic in depth, gather information from diverse sources, and synthesize findings into a structured and coherent report.

In this practical exercise, we will explore the development of a report generation agent based on LangGraph and artificial intelligence. Unlike traditional approaches based on templates or predefined statistical analysis, our solution leverages the latest advances in language models to:

- Create virtual teams of analysts specialized in different aspects of a topic.

- Conduct simulated interviews to gather detailed information.

- Synthesize the findings into a coherent and well-structured report.

Access the data laboratory repository on Github.

Run the data preprocessing code on Google Colab.

As shown in Figure 1, the complete agent flow follows a logical sequence that goes from the initial generation of questions to the final drafting of the report.

Figure 1. Agent flow diagram.

Application Architecture

The core of the application is based on a modular design implemented as an interconnected state graph, where each module represents a specific functionality in the report generation process. This structure allows for a flexible workflow, recursive when necessary, and with capacity for human intervention at strategic points.

Main Components

The system consists of three fundamental modules that work together:

1. Virtual Analysts Generator

This component creates a diverse team of virtual analysts specialized in different aspects of the topic to be investigated. The flow includes:

- Initial creation of profiles based on the research topic.

- Human feedback point that allows reviewing and refining the generated profiles.

- Optional regeneration of analysts incorporating suggestions.

This approach ensures that the final report includes diverse and complementary perspectives, enriching the analysis.
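
The generate, review, and regenerate loop described above can be sketched in plain Python. This is a minimal illustration, not the code from the repository: `Analyst`, `generate_analysts`, and `build_team` are hypothetical names, and the language model call is replaced by a deterministic stand-in so that the example runs without any API key.

```python
from dataclasses import dataclass


@dataclass
class Analyst:
    name: str
    role: str
    focus: str


def generate_analysts(topic: str, feedback: str = "", n: int = 3) -> list[Analyst]:
    """Stand-in for the LLM call that proposes analyst profiles (hypothetical)."""
    focuses = ["regulation", "technology", "economic impact", "citizen reuse"]
    if feedback:
        # A real implementation would pass the feedback to the model;
        # here it simply becomes the focus of the first analyst.
        focuses = [feedback] + focuses
    return [
        Analyst(name=f"Analyst {i + 1}", role=f"{topic} specialist", focus=focus)
        for i, focus in enumerate(focuses[:n])
    ]


def build_team(topic: str, feedback_rounds: list[str]) -> list[Analyst]:
    """Generate profiles, then regenerate once per non-empty feedback message."""
    team = generate_analysts(topic)
    for feedback in feedback_rounds:
        if not feedback.strip():
            break  # empty feedback is treated as approval of the current team
        team = generate_analysts(topic, feedback=feedback)
    return team


team = build_team("Open data in Spain", ["data quality", ""])
for analyst in team:
    print(analyst)
```

Treating an empty message as approval is an assumption made for this sketch; the key idea is that the flow pauses for a human decision before any interview starts.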

2. Interview System

Once the analysts are generated, each one participates in a simulated interview process that includes:

- Generation of relevant questions based on the analyst's profile.

- Information search in sources via Tavily Search and Wikipedia.

- Generation of informative responses combining the obtained information.

- Automatic decision on whether to continue or end the interview based on the information gathered.

- Storage of the transcript for subsequent processing.
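
The steps above form a loop that can be sketched as follows. All functions here are hypothetical stand-ins: the searches do not call Tavily or Wikipedia, and the stopping rule (a fixed number of collected documents) is an assumption chosen only to make the conditional exit visible.

```python
def ask_question(profile: str, turn: int) -> str:
    """Stand-in for the model generating a question from the analyst's profile."""
    return f"[{profile}] question {turn}"


def search_sources(question: str) -> list[str]:
    """Placeholder for the web and encyclopedia lookups (no network access here)."""
    return [f"web result for '{question}'", f"wiki result for '{question}'"]


def answer(question: str, documents: list[str]) -> str:
    """Stand-in for the model writing an answer from the retrieved documents."""
    return f"Answer to '{question}' based on {len(documents)} documents."


def interview(profile: str, max_turns: int = 3, enough_documents: int = 4) -> dict:
    """Run question/search/answer turns until enough material has been gathered."""
    transcript, sources = [], []
    for turn in range(1, max_turns + 1):
        question = ask_question(profile, turn)
        documents = search_sources(question)
        sources.extend(documents)
        transcript.append((question, answer(question, documents)))
        if len(sources) >= enough_documents:
            break  # conditional decision point: end the interview early
    return {"profile": profile, "transcript": transcript, "sources": sources}


result = interview("Regulation expert")
print(len(result["transcript"]), "turns,", len(result["sources"]), "sources")
```

The returned dictionary plays the role of the stored transcript that later stages consume.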

The interview system represents the heart of the agent, where the information that will nourish the final report is obtained. As shown in Figure 2, this process can be monitored in real time through LangSmith, an observability tool that allows tracking each step of the flow.

Figure 2. System monitoring via LangSmith. Concrete example of an analyst-interviewer interaction.

3. Report Generator

Finally, the system processes the interviews to create a coherent report through:

- Writing individual sections based on each interview.

- Creating an introduction that presents the topic and structure of the report.

- Organizing the main content that integrates all sections.

- Generating a conclusion that synthesizes the main findings.

- Consolidating all sources used.
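
A minimal sketch of this assembly step, assuming each interview has already been summarized into a section. The dictionary keys (`analyst`, `summary`, `sources`) and both function names are illustrative choices, and the introduction and conclusion are placeholders for text that the model would write.

```python
def consolidate_sources(interviews: list[dict]) -> list[str]:
    """Merge the sources of all interviews, dropping duplicates but keeping order."""
    return list(dict.fromkeys(s for item in interviews for s in item["sources"]))


def assemble_report(topic: str, interviews: list[dict]) -> str:
    """Join introduction, per-interview sections, conclusion, and sources."""
    sections = [f"{item['analyst']}\n{item['summary']}" for item in interviews]
    sources = consolidate_sources(interviews)
    parts = [
        topic,
        f"Introduction: this report covers {len(sections)} perspectives on {topic}.",
        *sections,
        "Conclusion: (synthesis of the main findings goes here).",
        "Sources:\n" + "\n".join(f"[{i}] {s}" for i, s in enumerate(sources, 1)),
    ]
    return "\n\n".join(parts)


interviews = [
    {"analyst": "Analyst A", "summary": "Findings A.", "sources": ["s1", "s2"]},
    {"analyst": "Analyst B", "summary": "Findings B.", "sources": ["s2", "s3"]},
]
report = assemble_report("Open data in Spain", interviews)
print(report)
```

Using `dict.fromkeys` keeps the first occurrence of each source, so a reference cited by several analysts appears only once in the final list.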

Figure 3 shows an example of the report resulting from the complete process, demonstrating the quality and structure of the final document generated automatically.

Figure 3. View of the report resulting from the automatic generation process for the prompt "Open data in Spain".

What can you learn?

This practical exercise allows you to learn:

Integration of advanced AI in information processing systems:

- How to communicate effectively with language models.

- Techniques to structure prompts that generate coherent and useful responses.

- Strategies to simulate virtual teams of experts.

Development with LangGraph:

- Creation of state graphs to model complex flows.

- Implementation of conditional decision points.

- Design of systems with human intervention at strategic points.

Parallel processing with LLMs:

- Parallelization techniques for tasks with language models.

- Coordination of multiple independent subprocesses.

- Methods for consolidating scattered information.
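
As a generic illustration of these ideas, independent tasks can be dispatched with a thread pool from the standard library. This is not how the exercise implements parallelism (LangGraph provides its own mechanisms for that); `run_interview` and `run_all` are hypothetical names, and the interview itself is a placeholder.

```python
from concurrent.futures import ThreadPoolExecutor


def run_interview(analyst: str) -> dict:
    """Placeholder for one complete interview (model calls plus searches)."""
    return {"analyst": analyst, "section": f"Findings from {analyst}"}


def run_all(analysts: list[str], max_workers: int = 4) -> list[dict]:
    """Run the independent interviews concurrently and collect their results."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Executor.map returns results in the same order as the inputs.
        return list(pool.map(run_interview, analysts))


results = run_all(["Analyst A", "Analyst B", "Analyst C"])
print([r["section"] for r in results])
```

Threads suit this kind of workload because each task spends most of its time waiting on network responses rather than computing.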

Good design practices:

- Modular structuring of complex systems.

- Error handling and retries.

- Tracking and debugging workflows through LangSmith.

Conclusions and future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between data and end users. Through the practical case developed, we can observe how the combination of advanced language models with flexible architectures based on graphs opens new possibilities for automatic report generation.

The ability to simulate virtual expert teams, perform parallel research, and synthesize findings into coherent documents represents a significant step towards democratizing the analysis of complex information.

For those interested in expanding the capabilities of the system, there are multiple promising directions for its evolution:

- Incorporation of automatic data verification mechanisms to ensure accuracy.

- Implementation of multimodal capabilities that allow incorporating images and visualizations.

- Integration with more sources of information and knowledge bases.

- Development of more intuitive user interfaces for human intervention.

- Expansion to specialized domains such as medicine, law or sciences.

In summary, this exercise not only demonstrates the feasibility of automating the generation of complex reports through artificial intelligence, but also points to a promising path towards a future where deep analysis of any topic is within everyone's reach, regardless of their level of technical experience. The combination of advanced language models, graph architectures and parallelization techniques opens a range of possibilities to transform the way we generate and consume information.

Open data portals are an invaluable source of public information. However, extracting meaningful insights from this data can be challenging for users without advanced technical knowledge.

In this practical exercise, we will explore the development of a web application that democratizes access to this data through the use of artificial intelligence, allowing users to make queries in natural language.

The application, developed using the datos.gob.es portal as a data source, integrates modern technologies such as Streamlit for the user interface and Google's Gemini language model for natural language processing. Its modular design allows other artificial intelligence models to be used with minimal changes. The complete project is available in the GitHub repository.

Access the data laboratory repository on Github.

Run the data preprocessing code on Google Colab.

In this video, the author explains what you will find both on Github and Google Colab.

Application Architecture

The core of the application is based on four main interconnected sections that work together to process user queries:

- Context Generation

- Analyzes the characteristics of the chosen dataset.

- Generates a detailed description including dimensions, data types, and statistics.

- Creates a structured template with specific guidelines for code generation.

- Context and Query Combination

- Combines the generated context with the user's question, creating the prompt that the artificial intelligence model will receive.

- Response Generation

- Sends the prompt to the model and obtains the Python code that answers the user's question.

- Code Execution

- Safely executes the generated code with a retry and automatic correction system.

- Captures and displays the results in the application frontend.
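
The execute, capture, and retry cycle can be sketched as follows. This is an illustration under several assumptions: `fake_model` is a hypothetical stand-in for the model call, the first "generated" snippet is deliberately wrong so that the retry path is exercised, and restricting `__builtins__` is only a basic precaution, not a real sandbox.

```python
import contextlib
import io

# Only these built-ins are visible to the generated code (not a real sandbox).
ALLOWED_BUILTINS = {"print": print, "sum": sum, "len": len}


def fake_model(prompt: str, error: str = "") -> str:
    """Hypothetical stand-in for the language model call."""
    if not error:
        return "print(total / count)"  # first attempt uses undefined names
    return "print(sum(values) / len(values))"  # "corrected" attempt


def run_with_retries(prompt: str, data: dict, max_attempts: int = 3) -> str:
    """Execute generated code, feeding any error back to the model and retrying."""
    error = ""
    for _ in range(max_attempts):
        code = fake_model(prompt, error)
        output = io.StringIO()
        try:
            with contextlib.redirect_stdout(output):
                exec(code, {"__builtins__": ALLOWED_BUILTINS}, dict(data))
            return output.getvalue().strip()
        except Exception as exc:
            # The error message becomes context for the next attempt.
            error = f"{type(exc).__name__}: {exc}"
    raise RuntimeError(f"No working code after {max_attempts} attempts: {error}")


result = run_with_retries("What is the average?", {"values": [2, 3, 4]})
print(result)
```

Passing the exception text back to the model is what turns a plain retry into an automatic correction step; in production, stronger isolation than a restricted namespace would be advisable.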

Figure 1. Request processing flow

Development Process

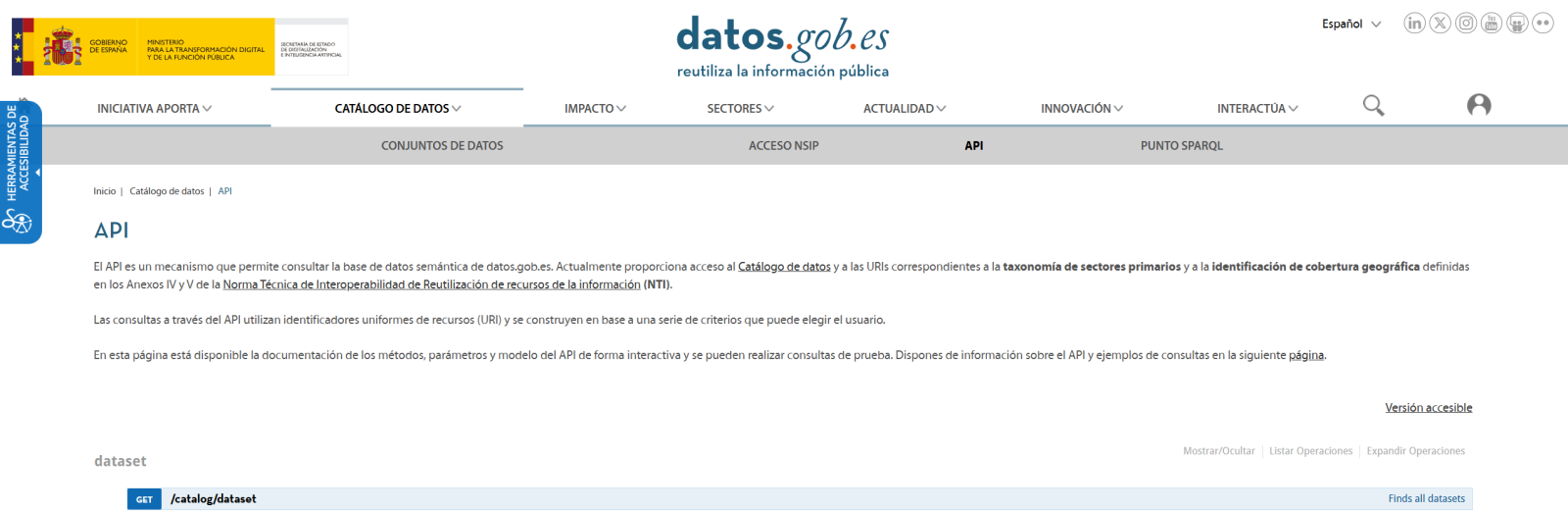

The first step is to establish a way to access public data. The datos.gob.es portal offers datasets via API. Functions have been developed to navigate the catalog and download these files efficiently.

Figure 2. datos.gob.es API

The second step addresses the question: how to convert natural language questions into useful data analysis? This is where Gemini, Google's language model, comes in. However, it's not enough to simply connect the model; it's necessary to teach it to understand the specific context of each dataset.

A three-layer system has been developed:

- A function that analyzes the dataset and generates a detailed "technical sheet".

- Another that combines this sheet with the user's question.

- And a third that translates all this into executable Python code.
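
The first two layers can be sketched without any model or external library. The function names, the prompt wording, and the sample rows are assumptions made for illustration; the repository builds its description from a pandas DataFrame, whereas this sketch uses a list of dictionaries to stay dependency-free.

```python
def describe_dataset(rows: list[dict]) -> str:
    """Build a short 'technical sheet': dimensions, types, missing values, ranges."""
    columns = list(rows[0])
    lines = [f"Rows: {len(rows)}, columns: {len(columns)}"]
    for col in columns:
        values = [r[col] for r in rows if r[col] is not None]
        kind = type(values[0]).__name__ if values else "unknown"
        line = f"- {col} ({kind}), {len(rows) - len(values)} missing"
        if values and all(isinstance(v, (int, float)) for v in values):
            line += f", min={min(values)}, max={max(values)}"
        lines.append(line)
    return "\n".join(lines)


def build_prompt(sheet: str, question: str) -> str:
    """Combine the dataset description with the user's question."""
    return (
        "The dataset is loaded in a pandas DataFrame named df.\n"
        f"Dataset description:\n{sheet}\n\n"
        f"Question: {question}\n"
        "Reply only with Python code."
    )


# Invented sample rows, for illustration only.
rows = [
    {"brand": "Seat", "year": 2010},
    {"brand": "Renault", "year": None},
    {"brand": "Ford", "year": 2016},
]
sheet = describe_dataset(rows)
prompt = build_prompt(sheet, "What is the average age of the vehicle fleet?")
print(prompt)
```

The third layer would send `prompt` to the model and receive code in return; the more precise the sheet, the less the model has to guess about column names and types.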

The image below shows how this process unfolds and, after it, the results obtained once the generated code has been executed.

Figure 3. Visualization of the application's response processing

Finally, with Streamlit, a web interface has been built that shows the process and its results to the user. The interface is as simple as choosing a dataset and asking a question, but also powerful enough to display complex visualizations and allow data exploration.

The final result is an application that allows anyone, regardless of their technical knowledge, to perform data analysis and learn about the code executed by the model. For example, a municipal official can ask "What is the average age of the vehicle fleet?" and get a clear visualization of the age distribution.

Figure 4. Complete use case. Visualizing the distribution of registration years of the municipal vehicle fleet of Almendralejo in 2018

What Can You Learn?

This practical exercise allows you to learn:

- AI Integration in Web Applications:

- How to communicate effectively with language models like Gemini.

- Techniques for structuring prompts that generate precise code.

- Strategies for safely handling and executing AI-generated code.

- Web Development with Streamlit:

- Creating interactive interfaces in Python.

- Managing state and sessions in web applications.

- Implementing visual components for data.

- Working with Open Data:

- Connecting to and consuming public data APIs.

- Processing Excel files and DataFrames.

- Data visualization techniques.

- Development Best Practices:

- Modular structuring of Python code.

- Error handling and retries.

- Implementation of visual feedback systems.

- Web application deployment using ngrok.

Conclusions and Future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between public data and end users. Through the practical case developed, we have been able to observe how the combination of advanced language models with intuitive interfaces allows us to democratize access to data analysis, transforming natural language queries into meaningful analysis and informative visualizations.

For those interested in expanding the system's capabilities, there are multiple promising directions for its evolution:

- Incorporation of more advanced language models that allow for more sophisticated analysis.

- Implementation of learning systems that improve responses based on user feedback.

- Integration with more open data sources and diverse formats.

- Development of predictive and prescriptive analysis capabilities.

In summary, this exercise not only demonstrates the feasibility of democratizing data analysis through artificial intelligence, but also points to a promising path toward a future where access to and analysis of public data is truly universal. The combination of modern technologies such as Streamlit, language models, and visualization techniques opens up a range of possibilities for organizations and citizens to make the most of the value of open data.

Open data portals play a fundamental role in accessing and reusing public information. A key aspect in these environments is the tagging of datasets, which facilitates their organization and retrieval.

Word embeddings represent a transformative technology in the field of natural language processing, allowing words to be represented as vectors in a multidimensional space where semantic relationships are mathematically preserved. This exercise explores their practical application in a tag recommendation system, using the datos.gob.es open data portal as a case study.

The exercise is developed in a notebook that integrates the environment configuration, data acquisition, and recommendation system processing, all implemented in Python. The complete project is available in the Github repository.

Access the data lab repository on GitHub.

Run the data preprocessing code on Google Colab.

In this video, the author explains what you will find both on Github and Google Colab (English subtitles available).

Understanding word embeddings

Word embeddings are numerical representations of words that revolutionize natural language processing by transforming text into a mathematically processable format. This technique encodes each word as a numerical vector in a multidimensional space, where the relative position between vectors reflects semantic and syntactic relationships between words. The true power of embeddings lies in three fundamental aspects:

- Context capture: unlike traditional techniques such as one-hot encoding, embeddings learn from the context in which words appear, allowing them to capture meaning nuances.

- Semantic algebra: the resulting vectors allow mathematical operations that preserve semantic relationships. For example, vector('Madrid') - vector('Spain') + vector('France') ≈ vector('Paris'), demonstrating the capture of capital-country relationships.

- Quantifiable similarity: similarity between words can be measured through metrics, allowing identification of not only exact synonyms but also terms related in different degrees and generalizing these relationships to new word combinations.
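
The last two properties can be illustrated with a few lines of Python. The three-dimensional vectors below are invented for the example (real GloVe vectors have 50 or more dimensions and are learned from text), and `analogy` is a hypothetical helper name.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    """Cosine of the angle between two vectors (1 = same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def analogy(vectors: dict, a: str, b: str, c: str) -> str:
    """Return the word closest to vector(a) - vector(b) + vector(c)."""
    target = [x - y + z for x, y, z in zip(vectors[a], vectors[b], vectors[c])]
    candidates = (w for w in vectors if w not in {a, b, c})
    return max(candidates, key=lambda w: cosine_similarity(vectors[w], target))


# Toy vectors: the axes loosely mean "Spain", "France", and "is a capital".
toy = {
    "Madrid": [1.0, 0.0, 1.0],
    "Spain": [1.0, 0.0, 0.0],
    "France": [0.0, 1.0, 0.0],
    "Paris": [0.0, 1.0, 1.0],
    "Rome": [0.1, 0.1, 1.0],
}
print(analogy(toy, "Madrid", "Spain", "France"))
```

Excluding the three input words from the candidates is a common convention in analogy tests, since the nearest vector to the result is often one of the inputs themselves.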

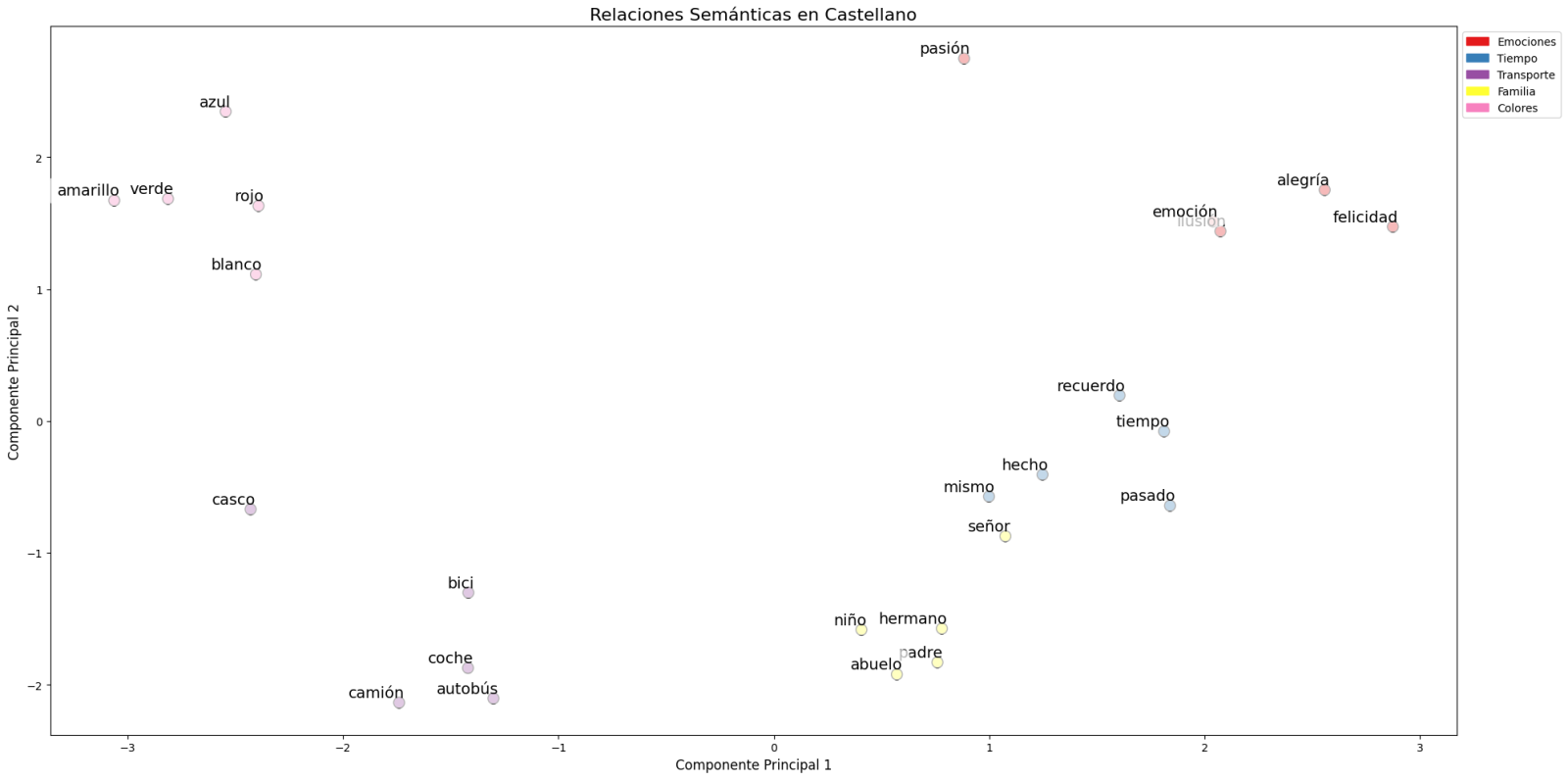

This exercise uses pre-trained GloVe (Global Vectors for Word Representation) embeddings, a model developed at Stanford that stands out for its ability to capture global semantic relationships in text. In our case, we use 50-dimensional vectors, a balance between computational complexity and semantic richness. To comprehensively evaluate the model's ability to represent the Spanish language, multiple tests were conducted:

- Word similarity was analyzed using cosine similarity, a metric that evaluates the angle between two word vectors. This measure results in values between -1 and 1, where values close to 1 indicate high semantic similarity, while values close to 0 indicate little or no relationship. Terms like "amor" (love), "trabajo" (work), and "familia" (family) were evaluated to verify that the model correctly identified semantically related words.

- The model's ability to solve linguistic analogies was tested, for example, "hombre es a mujer lo que rey es a reina" (Man is to woman what king is to queen), confirming its ability to capture complex semantic relationships.

- Vector operations were performed (such as "rey - hombre + mujer") to check if the results maintained semantic coherence.

- Finally, dimensionality reduction techniques were applied to a representative sample of 40 Spanish words, allowing visualization of semantic relationships in a two-dimensional space. The results revealed natural grouping patterns among semantically related terms, as observed in the figure:

- Emotions: alegría (joy), felicidad (happiness) or pasión (passion) appear grouped in the upper right.

- Family-related terms: padre (father), hermano (brother) or abuelo (grandfather) concentrate at the bottom.

- Transport: coche (car), autobús (bus), or camión (truck) form a distinctive group.

- Colors: azul (blue), verde (green) or rojo (red) appear close to each other.

Figure 1. Principal Component Analysis of the 50-dimensional embeddings. The two components shown explain 46% of the variance.

To systematize this evaluation process, a unified function has been developed that encapsulates all the tests described above. This modular architecture allows automatic and reproducible evaluation of different pre-trained embedding models, thus facilitating objective comparison of their performance in Spanish language processing. The standardization of these tests not only optimizes the evaluation process but also establishes a consistent framework for future comparisons and validations of new models by the public.

This ability to capture semantic relationships in the Spanish language is what we leverage in our tag recommendation system.

Embedding-based Recommendation System

Leveraging the properties of embeddings, we developed a tag recommendation system that follows a three-phase process:

- Embedding generation: for each dataset in the portal, we generate a vector representation combining the title and description. This allows us to compare datasets by their semantic similarity.

- Similar dataset identification: using cosine similarity between vectors, we identify the most semantically similar datasets.

- Tag extraction and standardization: from similar sets, we extract their associated tags and map them with Eurovoc thesaurus terms. This thesaurus, developed by the European Union, is a multilingual controlled vocabulary that provides standardized terminology for cataloging documents and data in the field of European policies. Again, leveraging the power of embeddings, we identify the semantically closest Eurovoc terms to our tags, thus ensuring coherent standardization and better interoperability between European information systems.
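
The first two phases, plus the tag extraction, can be sketched as follows. Everything in this example is illustrative: the two-dimensional word vectors stand in for pre-trained GloVe vectors, averaging word vectors is one simple way (an assumption here) to embed a title and description, and the small catalog is invented. The Eurovoc mapping is omitted.

```python
import math
from collections import Counter

# Toy 2-D stand-ins for pre-trained word vectors.
WORD_VECTORS = {
    "agenda": [0.9, 0.1], "cultural": [0.8, 0.2], "activities": [0.85, 0.15],
    "traffic": [0.1, 0.9], "accidents": [0.05, 0.95],
}


def embed(text: str):
    """Average the vectors of the known words; None if no word is known."""
    vectors = [WORD_VECTORS[w] for w in text.lower().split() if w in WORD_VECTORS]
    if not vectors:
        return None
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(u, v) -> float:
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.hypot(*u) * math.hypot(*v))


def recommend_tags(text: str, catalog: list[dict], k: int = 2) -> list[str]:
    """Collect the tags of the k most similar datasets, most frequent first."""
    query = embed(text)
    embedded = [(d, embed(d["text"])) for d in catalog]
    scored = sorted(
        ((cosine(query, vec), d) for d, vec in embedded if vec is not None),
        key=lambda pair: pair[0],
        reverse=True,
    )
    counts = Counter(tag for _, d in scored[:k] for tag in d["tags"])
    return [tag for tag, _ in counts.most_common()]


catalog = [
    {"text": "Cultural agenda", "tags": ["CULTURE", "THEATER"]},
    {"text": "Activities agenda", "tags": ["CULTURE", "EXHIBITIONS"]},
    {"text": "Traffic accidents", "tags": ["TRANSPORT"]},
]
print(recommend_tags("Agenda of cultural activities", catalog))
```

Ranking tags by how many similar datasets share them is a design choice of this sketch; it favors tags that recur across neighbors over tags that appear only once.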

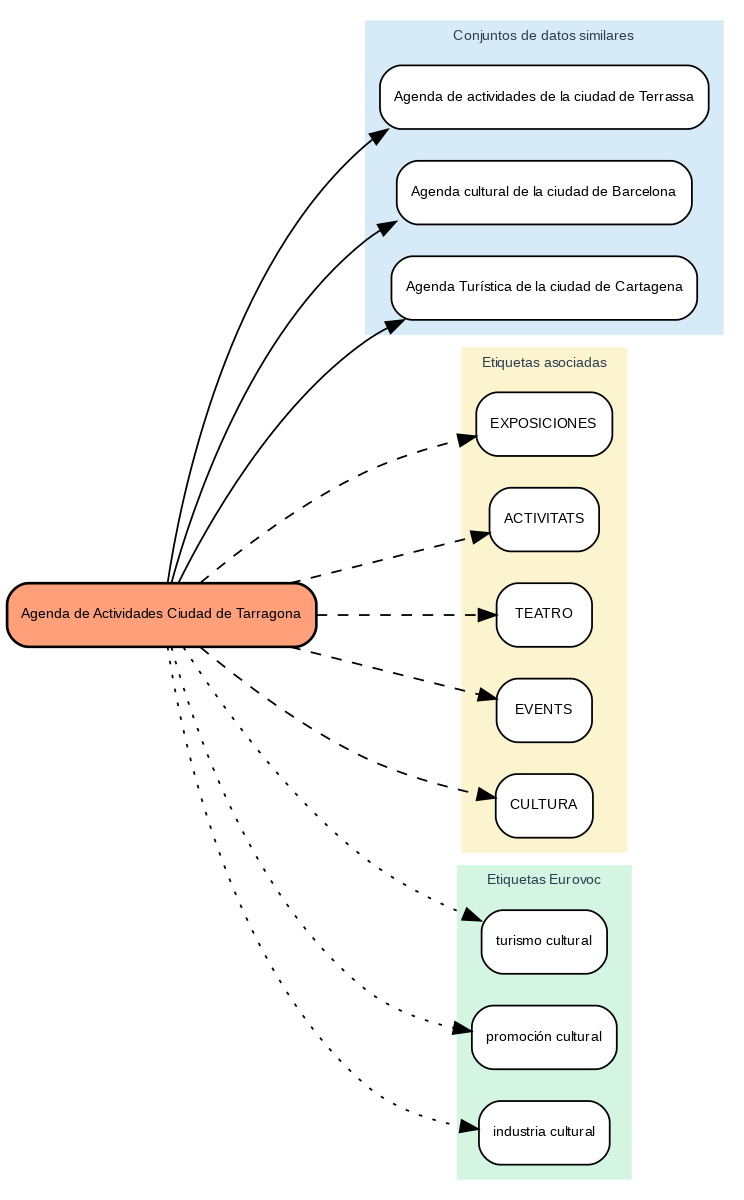

The results show that the system can generate coherent and standardized tag recommendations. To illustrate the system's operation, let's take the case of the dataset "Tarragona City Activities Agenda":

Figure 2. Tarragona City Activities Agenda

The system:

- Finds similar datasets like "Terrassa Activities Agenda" and "Barcelona Cultural Agenda".

- Identifies common tags from these datasets, such as "EXHIBITIONS", "THEATER", and "CULTURE".

- Suggests related Eurovoc terms: "cultural tourism", "cultural promotion", and "cultural industry".

Advantages of the Approach

This approach offers significant advantages:

- Contextual Recommendations: the system suggests tags based on the real meaning of the content, not just textual matches.

- Automatic Standardization: integration with Eurovoc ensures a controlled and coherent vocabulary.

- Continuous Improvement: the system learns and improves its recommendations as new datasets are added.

- Interoperability: the use of Eurovoc facilitates integration with other European systems.

Conclusions

This exercise demonstrates the great potential of embeddings as a tool for associating texts based on their semantic nature. Through the analyzed practical case, it has been possible to observe how, by identifying similar titles and descriptions between datasets, precise recommendations of tags or keywords can be generated. These tags, in turn, can be linked with keywords from a standardized thesaurus like Eurovoc, applying the same principle.

Despite the challenges that may arise, implementing these types of systems in production environments presents a valuable opportunity to improve information organization and retrieval. The accuracy in tag assignment can be influenced by various interrelated factors in the process:

- The specificity of dataset titles and descriptions is fundamental, as correct identification of similar content and, therefore, adequate tag recommendation depends on it.

- The quality and representativeness of existing tags in similar datasets directly determines the relevance of generated recommendations.

- The thematic coverage of the Eurovoc thesaurus is extensive, but it may not include the specific terms needed to describe certain datasets precisely.

- The vectors' capacity to faithfully capture semantic relationships between content, which directly impacts the precision of assigned tags.

For those who wish to delve deeper into the topic, there are other interesting approaches to using embeddings that complement what we've seen in this exercise, such as:

- Using more complex and computationally expensive embedding models (like BERT, GPT, etc.).

- Training models on a custom domain-adapted corpus.

- Applying deeper data cleaning techniques.

In summary, this exercise not only demonstrates the effectiveness of embeddings for tag recommendation but also invites readers to explore everything this powerful tool has to offer.

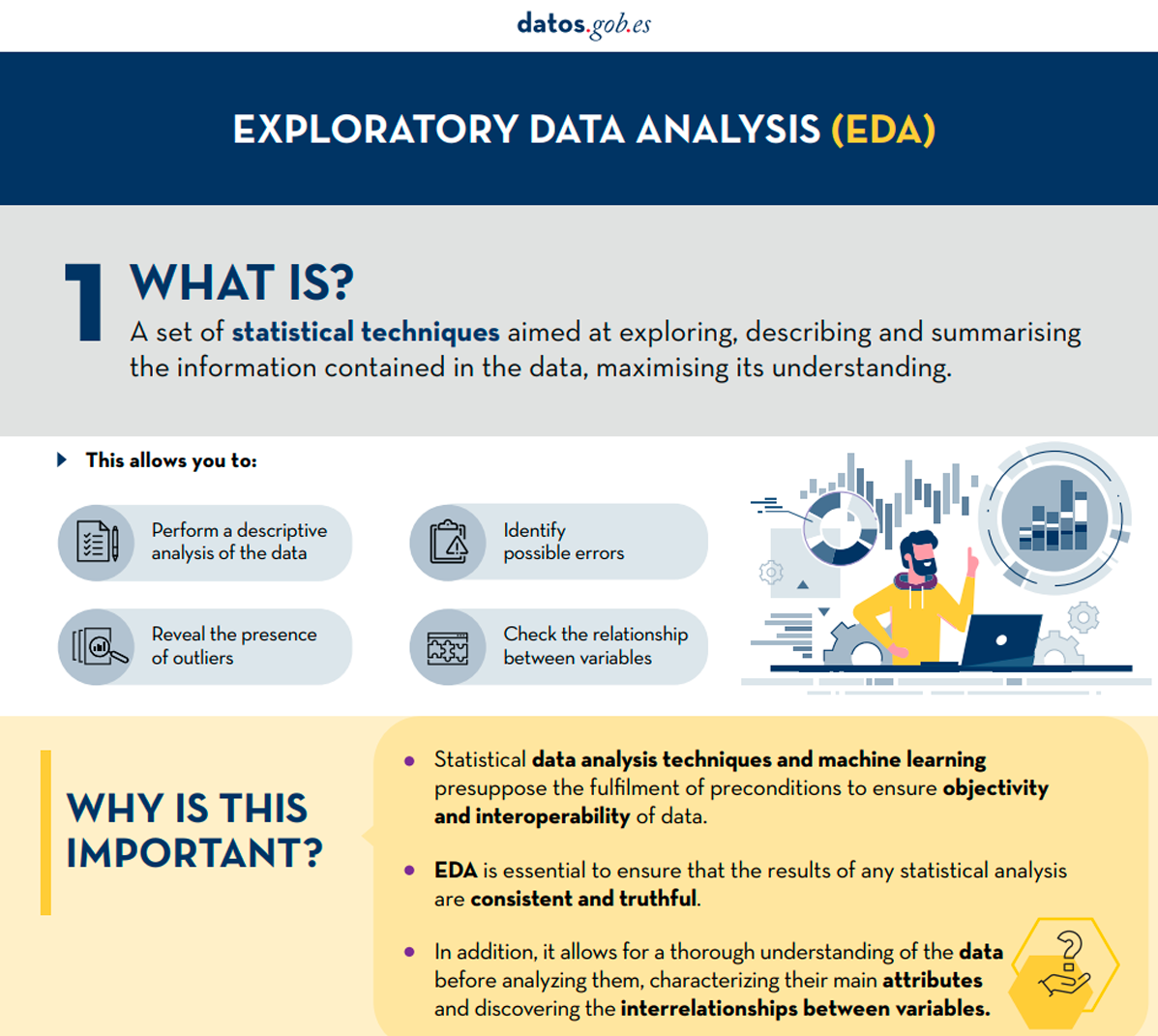

The following presents a new guide to Exploratory Data Analysis (EDA) implemented in Python, which evolves and complements the version published in R in 2021. This update responds to the needs of an increasingly diverse community in the field of data science.

Exploratory Data Analysis (EDA) represents a critical step prior to any statistical analysis, as it allows:

- Comprehensive understanding of the data before analyzing it.

- Verification of statistical requirements that will ensure the validity of subsequent analyses.

To exemplify its importance, let's take the case of detecting and treating outliers, one of the tasks to be performed in an EDA. This phase has a significant impact on fundamental statistics such as the mean, standard deviation, or coefficient of variation.
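
A small example makes this impact concrete. The readings below are invented, and the 1.5 × IQR rule used to flag outliers is one common convention, chosen here as an assumption; the guide may apply a different criterion.

```python
import statistics


def iqr_bounds(values: list[float], k: float = 1.5) -> tuple[float, float]:
    """Lower and upper limits of the k * IQR rule for flagging outliers."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr


# Invented readings with one anomalous value at the end.
readings = [12, 14, 13, 15, 14, 13, 12, 16, 15, 140]
low, high = iqr_bounds(readings)
cleaned = [x for x in readings if low <= x <= high]

for label, data in (("with outlier", readings), ("without outlier", cleaned)):
    mean = statistics.mean(data)
    stdev = statistics.stdev(data)
    # Coefficient of variation: standard deviation relative to the mean.
    print(f"{label}: mean={mean:.2f}, stdev={stdev:.2f}, cv={stdev / mean:.2f}")
```

A single extreme value roughly doubles the mean of this sample and inflates its standard deviation far more, which is why outliers should be examined before any summary statistic is reported.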

In addition to explaining the different phases of an EDA, the guide illustrates them with a practical case: as in the R version, the analysis of air quality data from Castilla y León. Through explanations that users can replicate, public data is transformed into valuable information using fundamental Python libraries such as pandas, matplotlib, and seaborn, along with modern automated analysis tools like ydata-profiling.

Why a new guide in Python?

The choice of Python as the language for this new guide reflects its growing relevance in the data science ecosystem. Its intuitive syntax and extensive catalog of specialized libraries have made it a fundamental tool for data analysis. By maintaining the same dataset and analytical structure as the R version, understanding the differences between both languages is facilitated. This is especially valuable in environments where multiple technologies coexist. This approach is particularly relevant in the current context, where numerous organizations are migrating their analyses from traditional languages/tools like R, SAS, or SPSS to Python. The guide seeks to facilitate these transitions and ensure continuity in the quality of analyses during the migration process.

New features and improvements

The content has been enriched with the introduction to automated EDA and data profiling tools, thus responding to one of the latest trends in the field. The document delves into essential aspects such as environmental data interpretation, offers a more rigorous treatment of outliers, and presents a more detailed analysis of correlations between variables. Additionally, it incorporates good practices in code writing.

The practical application of these concepts is illustrated through the analysis of air quality data, where each technique makes sense in a real context. For example, when analyzing correlations between pollutants, it not only shows how to calculate them but also explains how these patterns reflect real atmospheric processes and what implications they have for air quality management.

Structure and contents

The guide follows a practical and systematic approach, covering the five fundamental stages of EDA:

- Descriptive analysis to obtain a representative view of the data.

- Variable type adjustment to ensure consistency.

- Detection and treatment of missing data.

- Identification and management of outliers.

- Correlation analysis between variables.
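
Two of these stages, missing data detection and correlation analysis, can be sketched in a few lines. The guide itself works with pandas; this version uses only the standard library so that it runs anywhere, and the pollutant values are invented, so the resulting coefficient says nothing about real air quality.

```python
import math


def count_missing(rows: list[dict]) -> dict:
    """Number of missing (None) values per column."""
    return {col: sum(1 for r in rows if r[col] is None) for col in rows[0]}


def pearson(xs: list[float], ys: list[float]) -> float:
    """Pearson correlation coefficient between two equally long series."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Invented measurements; one NO2 value is missing.
rows = [
    {"NO2": 10.0, "O3": 60.0},
    {"NO2": 20.0, "O3": 50.0},
    {"NO2": None, "O3": 55.0},
    {"NO2": 30.0, "O3": 40.0},
]
missing = count_missing(rows)
complete = [r for r in rows if None not in r.values()]
corr = pearson([r["NO2"] for r in complete], [r["O3"] for r in complete])
print(missing, round(corr, 2))
```

Dropping incomplete rows before computing the correlation is the simplest treatment of missing data; imputation is an alternative when too many rows would be lost.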

Figure 1. Phases of exploratory data analysis. Source: own elaboration.

As a novelty in the structure, a section on automated exploratory analysis is included, presenting modern tools that facilitate the systematic exploration of large datasets.

Who is it for?

This guide is designed for open data users who wish to conduct exploratory analyses and reuse the valuable sources of public information found in this and other data portals worldwide. While basic knowledge of the language is recommended, the guide includes resources and references to improve Python skills, as well as detailed practical examples that facilitate self-directed learning.

The complete material, including both documentation and source code, is available in the portal's GitHub repository. The implementation has been done using open-source tools such as Jupyter Notebook in Google Colab, which allows reproducing the examples and adapting the code according to the specific needs of each project.

The community is invited to explore this new guide, experiment with the provided examples, and take advantage of these resources to develop their own open data analyses.

Click to see the full infographic, in accessible version

Figure 2. Capture of the infographic. Source: own elaboration.

1. Introduction

Visualizations are graphical representations of data that allow for the simple and effective communication of information linked to them. The possibilities for visualization are very broad, from basic representations such as line graphs, bar charts or relevant metrics, to visualizations configured on interactive dashboards.

In the "Visualizations step by step" section, we periodically present practical exercises using open data available on datos.gob.es or other similar catalogs. They describe, in a simple way, the steps needed to obtain the data and to perform the relevant transformations and analyses, ending with conclusions that summarize the information.

Each practical exercise uses documented code developments and free-to-use tools. All generated material is available for reuse in the GitHub repository of datos.gob.es.

In this specific exercise, we will explore tourist flows at a national level, creating visualizations of tourists moving between autonomous communities (CCAA) and provinces.

Access the data laboratory repository on Github.

Execute the data pre-processing code on Google Colab.

In this video, the author explains what you will find on both Github and Google Colab.

2. Context

Analyzing national tourist flows allows us to observe certain well-known movements, for example that the province of Alicante is a very popular summer tourism destination. In addition, this analysis is interesting for observing trends in the economic impact that tourism may have, year after year, in certain CCAA or provinces. The article on experiences for the management of visitor flows in tourist destinations illustrates the impact of data in the sector.

3. Objective

The main objective of the exercise is to create interactive visualizations in Python that present complex information in a comprehensible and attractive way. This objective will be met using an open dataset that contains information on national tourist flows, posing several questions about the data and answering them graphically. We will be able to answer questions such as those posed below:

- In which CCAA is there the most tourism from within the same CCAA?

- Which CCAA's residents travel outside their own CCAA the most?

- What differences are there between tourist flows throughout the year?

- Which Valencian province receives the most tourists?

The understanding of the proposed tools will provide the reader with the ability to modify the code contained in the notebook that accompanies this exercise to continue exploring the data on their own and detect more interesting behaviors from the dataset used.

In order to create interactive visualizations and answer questions about tourist flows, a data cleaning and reformatting process will be necessary, which is described in the notebook that accompanies this exercise.

4. Resources

Dataset

The open dataset used contains information on tourist flows in Spain at the CCAA and provincial level, also indicating the total values at the national level. The dataset has been published by the National Institute of Statistics (INE) in several file formats. For this exercise we only use the .csv file, whose fields are separated by ";". The data span July 2019 to March 2024 (at the time of writing this exercise) and are updated monthly.

Number of tourists by CCAA and destination province disaggregated by PROVINCE of origin

The dataset is also available for download in this Github repository.

Analytical tools

The Python programming language has been used for data cleaning and visualization creation. The code created for this exercise is made available to the reader through a Google Colab notebook.

The Python libraries we will use to carry out the exercise are:

- pandas: a library used for data analysis and manipulation.

- holoviews: a library for creating interactive visualizations that combines the functionalities of other libraries such as Bokeh and Matplotlib.

5. Exercise development

To interactively visualize data on tourist flows, we will create two types of diagrams: chord diagrams and Sankey diagrams.

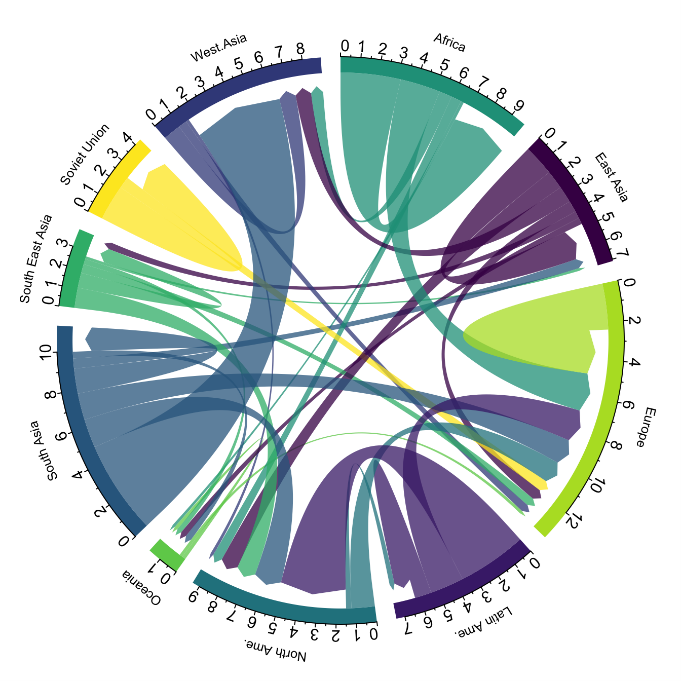

Chord diagrams are a type of diagram composed of nodes and edges, see Figure 1. The nodes are located in a circle and the edges symbolize the relationships between the nodes of the circle. These diagrams are usually used to show types of flows, for example, migratory or monetary flows. The different volume of the edges is visualized in a comprehensible way and reflects the importance of a flow or a node. Due to its circular shape, the chord diagram is a good option to visualize the relationships between all the nodes in our analysis (many-to-many type relationship).

Figure 1. Chord Diagram (Global Migration). Source.

Sankey diagrams, like chord diagrams, are a type of diagram composed of nodes and edges, see Figure 2. The nodes are represented at the margins of the visualization, with the edges between the margins. Due to this linear grouping of nodes, Sankey diagrams are better than chord diagrams for analyses in which we want to visualize the relationship between:

- several nodes and other nodes (many-to-many, or many-to-few, or vice versa)

- several nodes and a single node (many-to-one, or vice versa)

Figure 2. Sankey Diagram (UK Internal Migration). Source.
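
Both diagram types are drawn from the same kind of input: a list of edges, each with an origin node, a destination node, and a value. The sketch below shows how such an edge list could be aggregated with the standard library; the figures are invented and `aggregate_flows` is a hypothetical helper. The holoviews elements for chord and Sankey diagrams take data of this shape.

```python
from collections import defaultdict


def aggregate_flows(records: list[tuple]) -> list[tuple]:
    """Sum the tourists of repeated (origin, destination) pairs into single edges."""
    totals = defaultdict(int)
    for origin, destination, tourists in records:
        totals[(origin, destination)] += tourists
    return [(o, d, t) for (o, d), t in sorted(totals.items())]


# Invented monthly records: (origin CCAA, destination CCAA, tourists).
records = [
    ("Madrid", "Andalucía", 100),
    ("Madrid", "Andalucía", 50),
    ("Andalucía", "Madrid", 70),
    ("Madrid", "Madrid", 30),
]
edges = aggregate_flows(records)

# Flows that start and end in the same community (internal tourism).
internal = [edge for edge in edges if edge[0] == edge[1]]
print(edges)
print(internal)
```

Keeping internal flows as ordinary edges is convenient for a chord diagram, where a node can link to itself, while for a Sankey diagram they are usually handled separately because origin and destination sit on opposite margins.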

The exercise is divided into 5 parts, with part 0 ("initial configuration") only setting up the programming environment. Below, we describe the five parts and the steps carried out.

5.1. Load data

This section can be found in point 1 of the notebook.

In this part, we load the dataset to process it in the notebook. We check the format of the loaded data and create a pandas.DataFrame that we will use for data processing in the following steps.
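
As a dependency-free illustration of this step, the sketch below reads a semicolon-separated sample with the standard `csv` module. The notebook itself uses pandas; the sample reproduces the two rows of the fragment shown later in Figure 3, with column names translated into English, so the header of the real file may differ.

```python
import csv
import io

# Two sample rows in the layout of the original file (English column names assumed).
SAMPLE = (
    "Province of origin;Province of origin;CCAA and destination province;"
    "CCAA and destination province;CCAA and destination province;"
    "Tourist concept;Period;Total\n"
    "National Total;;National Total;;;Tourists;2024M03;13.731.096\n"
    "National Total;Ourense;National Total;Andalucía;Almería;Tourists;2024M03;373\n"
)


def load_rows(handle) -> tuple[list[str], list[list[str]]]:
    """Read a ';'-separated file and return its header and data rows."""
    reader = csv.reader(handle, delimiter=";")
    header = next(reader)
    return header, list(reader)


header, rows = load_rows(io.StringIO(SAMPLE))
print(len(header), "columns,", len(rows), "rows")
print(rows[1])
```

With pandas, the equivalent call is `pd.read_csv(path, sep=";")`. Because several columns share the same name, reading by position (as done here) avoids ambiguity until the columns are renamed.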

5.2. Initial data exploration

This section can be found in point 2 of the notebook.

In this part, we perform an exploratory data analysis to understand the format of the dataset we have loaded and to have a clearer idea of the information it contains. Through this initial exploration, we can define the cleaning steps we need to carry out to create interactive visualizations.

If you want to learn more about how to approach this task, you have at your disposal this introductory guide to exploratory data analysis.

5.3. Data format analysis

This section can be found in point 3 of the notebook.

In this part, we summarize the observations we have been able to make during the initial data exploration. We recapitulate the most important observations here:

| Province of origin | Province of origin | CCAA and destination province | CCAA and destination province | CCAA and destination province | Tourist concept | Period | Total |
|---|---|---|---|---|---|---|---|
| National Total | | National Total | | | Tourists | 2024M03 | 13.731.096 |
| National Total | Ourense | National Total | Andalucía | Almería | Tourists | 2024M03 | 373 |

Figure 3. Fragment of the original dataset.

We can observe in columns one to four that the origins of tourist flows are disaggregated by province, while for destinations, provinces are aggregated by CCAA. We will take advantage of the mapping of CCAA and their provinces that we can extract from the fourth and fifth columns to aggregate the origin provinces by CCAA.

We can also see that the information contained in the first column is sometimes superfluous, so we will combine it with the second column. In addition, we have found that the fifth and sixth columns do not add value to our analysis, so we will remove them. We will rename some columns to have a more comprehensible pandas.DataFrame.

5.4. Data cleaning

This section can be found in point 4 of the notebook.

In this part, we carry out the necessary steps to better format our data. For this, we take advantage of several functionalities that pandas offers us, for example, to rename the columns. We also define a reusable function that we need to concatenate the values of the first and second columns with the aim of not having a column that exclusively indicates "National Total" in all rows of the pandas.DataFrame. In addition, we will extract from the destination columns a mapping of CCAA to provinces that we will apply to the origin columns.

We want to obtain a more compressed version of the dataset with greater transparency of the column names and that does not contain information that we are not going to process. The final result of the data cleaning process is the following:

| Origin | Province of origin | Destination | Province of destination | Period | Total |
|---|---|---|---|---|---|
| National Total | | National Total | | 2024M03 | 13731096.0 |
| Galicia | Ourense | Andalucía | Almería | 2024M03 | 373.0 |

Figure 4. Fragment of the clean dataset.
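The cleaning steps described above could be sketched roughly as follows. The raw column names (`origin_total`, `dest_ccaa`, and so on), the helper `fill_from` and the third sample row are assumptions made for illustration; the notebook's own names and code may differ.

```python
import pandas as pd

# Hypothetical raw fragment with simplified column names (values are illustrative).
raw = pd.DataFrame({
    "origin_total": ["National Total"] * 3,
    "origin_province": [None, "Ourense", "Almería"],
    "dest_total": ["National Total"] * 3,
    "dest_ccaa": [None, "Andalucía", "Galicia"],
    "dest_province": [None, "Almería", "Ourense"],
    "period": ["2024M03"] * 3,
    "total": [13731096.0, 373.0, 120.0],
})

# Province -> CCAA mapping extracted from the destination columns.
province_to_ccaa = (
    raw.dropna(subset=["dest_ccaa", "dest_province"])
       .drop_duplicates("dest_province")
       .set_index("dest_province")["dest_ccaa"]
       .to_dict()
)

def fill_from(specific: pd.Series, general: pd.Series) -> pd.Series:
    """Prefer the specific value; fall back to the general one when it is empty."""
    return specific.fillna(general)

# Build the compact frame with clearer column names.
clean = pd.DataFrame({
    "Origin": fill_from(raw["origin_province"].map(province_to_ccaa), raw["origin_total"]),
    "Province of origin": raw["origin_province"],
    "Destination": fill_from(raw["dest_ccaa"], raw["dest_total"]),
    "Province of destination": raw["dest_province"],
    "Period": raw["period"],
    "Total": raw["total"],
})
print(clean)
```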

5.5. Create visualizations

This section can be found in point 5 of the notebook.

In this part, we create our interactive visualizations using the Holoviews library. In order to draw chord or Sankey graphs that visualize the flow of people between CCAA, or between CCAA and provinces, we have to structure our data as nodes and edges. In our case, the nodes are the names of the CCAA or provinces, and the edges, that is, the relationships between the nodes, are the numbers of tourists. In the notebook we define a function to obtain the nodes and edges that we can reuse for the different diagrams we want to make, changing the time period according to the season of the year we are interested in analyzing.
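As an illustration of the nodes-and-edges idea, here is a minimal sketch. The function names `get_nodes_and_edges` and `draw_chord` and the sample values are hypothetical, and the notebook's own function may be organised differently. The plotting helper imports Holoviews lazily and assumes the bokeh backend is installed.

```python
import pandas as pd

# Hypothetical clean fragment; column names follow Figure 4, values are made up.
clean = pd.DataFrame({
    "Origin": ["Madrid", "Madrid", "Andalucía", "Madrid"],
    "Destination": ["Andalucía", "Andalucía", "Andalucía", "Madrid"],
    "Period": ["2024M03", "2024M03", "2024M03", "2023M08"],
    "Total": [100.0, 50.0, 300.0, 80.0],
})

def get_nodes_and_edges(df: pd.DataFrame, period: str):
    """Return (nodes, edges) for one period, aggregated by CCAA."""
    flows = df[df["Period"] == period]
    edges = (
        flows.groupby(["Origin", "Destination"], as_index=False)["Total"].sum()
             .rename(columns={"Origin": "source", "Destination": "target", "Total": "value"})
    )
    nodes = sorted(set(edges["source"]) | set(edges["target"]))
    return nodes, edges

def draw_chord(edges: pd.DataFrame):
    """Render the edges as a chord diagram (requires holoviews and bokeh)."""
    import holoviews as hv  # imported lazily so the data step works without it
    hv.extension("bokeh")
    return hv.Chord(edges)

nodes, edges = get_nodes_and_edges(clean, "2024M03")
print(nodes)
print(edges)
```

Changing the `period` argument (for example to `"2023M08"`) is what lets the same function feed diagrams for different seasons.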

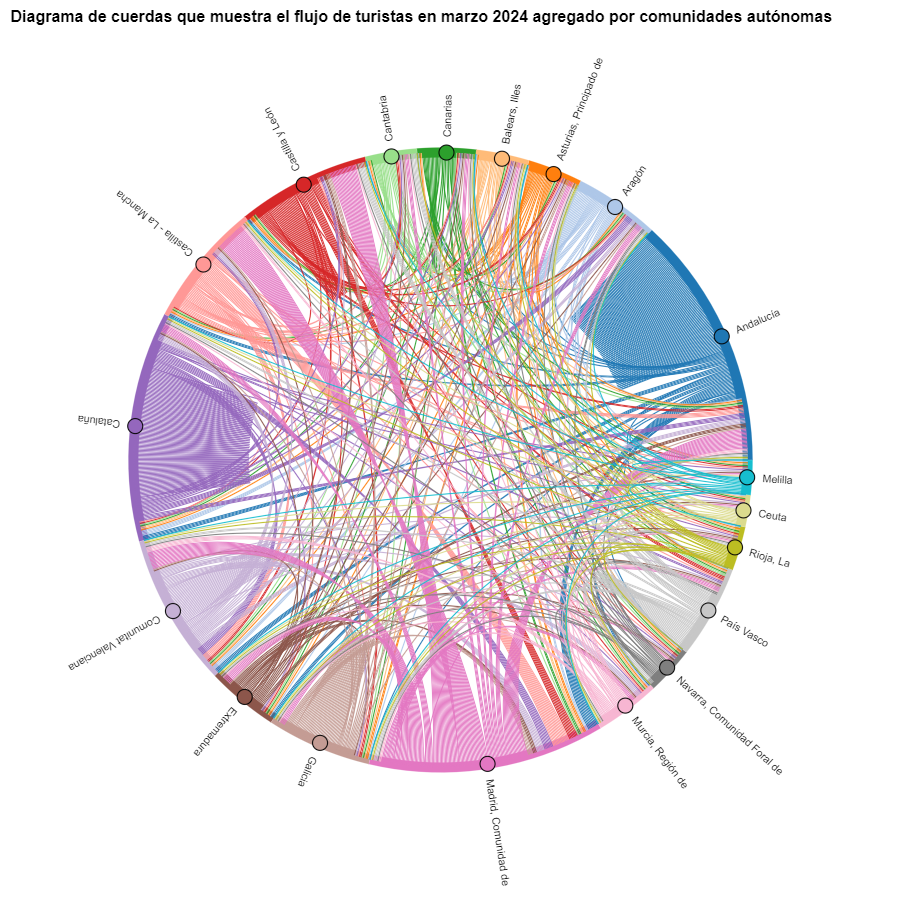

We will first create a chord diagram using exclusively data on tourist flows from March 2024. In the notebook, this chord diagram is dynamic. We encourage you to try its interactivity.

Figure 5. Chord diagram showing the flow of tourists in March 2024 aggregated by autonomous communities.

The chord diagram visualizes the flow of tourists between all CCAA. Each CA has a color and the movements made by tourists from this CA are symbolized with the same color. We can observe that tourists from Andalucía and Catalonia travel a lot within their own CCAA. On the other hand, tourists from Madrid leave their own CA a lot.

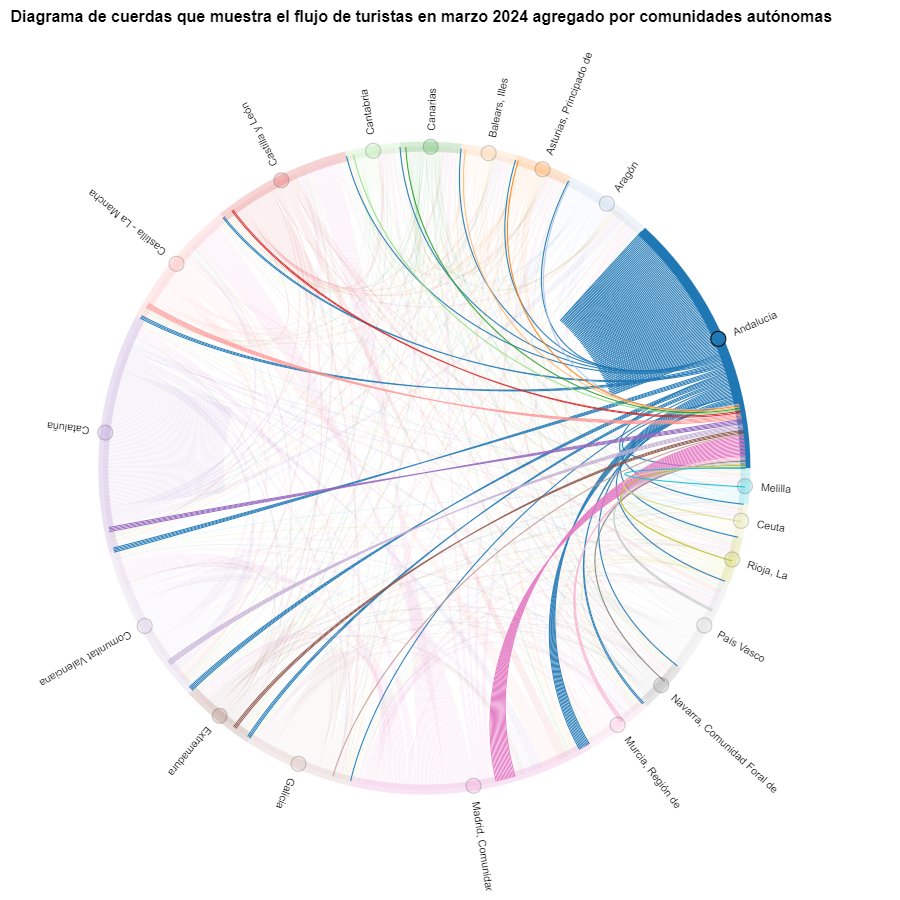

Figure 6. Chord diagram showing the flow of tourists entering and leaving Andalucía in March 2024 aggregated by autonomous communities.

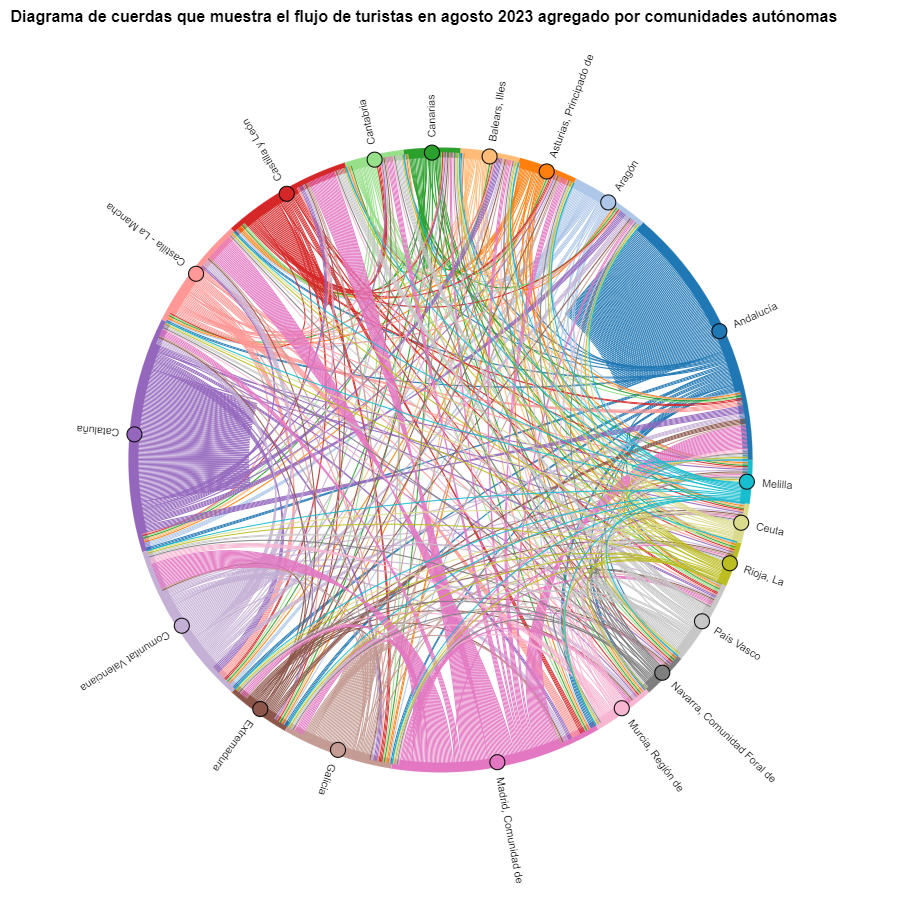

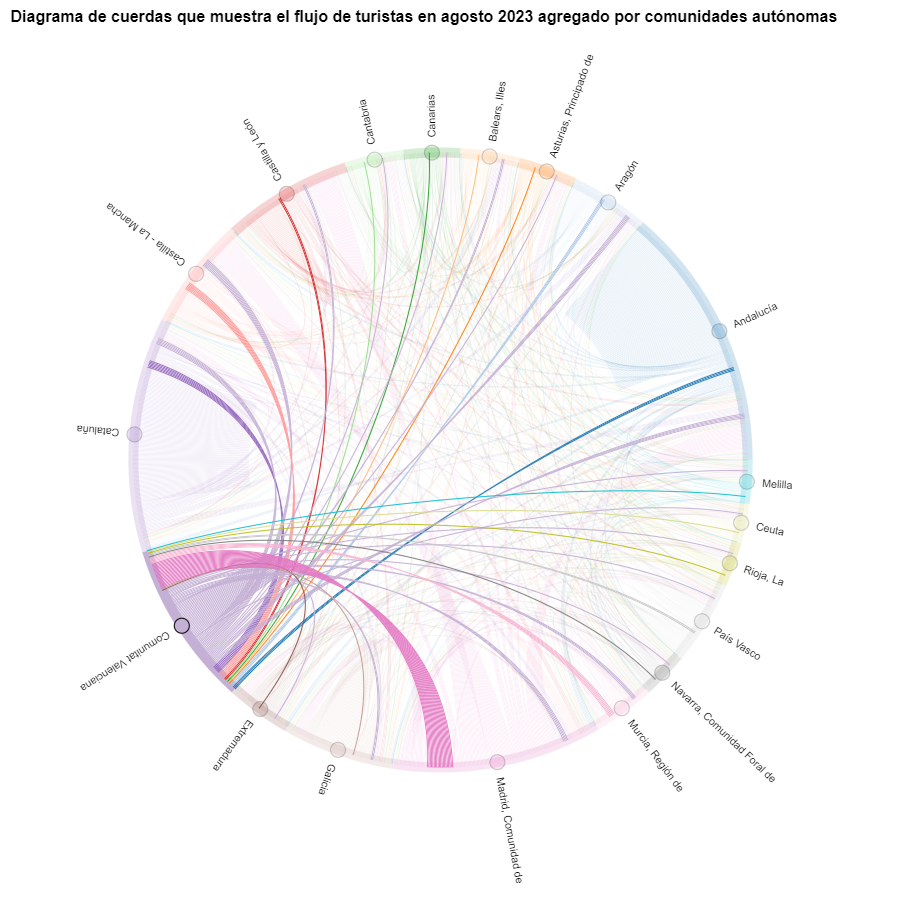

We create another chord diagram using the function we have created and visualize tourist flows in August 2023.

Figure 7. Chord diagram showing the flow of tourists in August 2023 aggregated by autonomous communities.

We can observe that, broadly speaking, tourist movements do not change, only that the movements we have already observed for March 2024 intensify.

Figure 8. Chord diagram showing the flow of tourists entering and leaving the Valencian Community in August 2023 aggregated by autonomous communities.

The reader can create the same diagram for other time periods, for example, for the summer of 2020, in order to visualize the impact of the pandemic on summer tourism, reusing the function we have created.

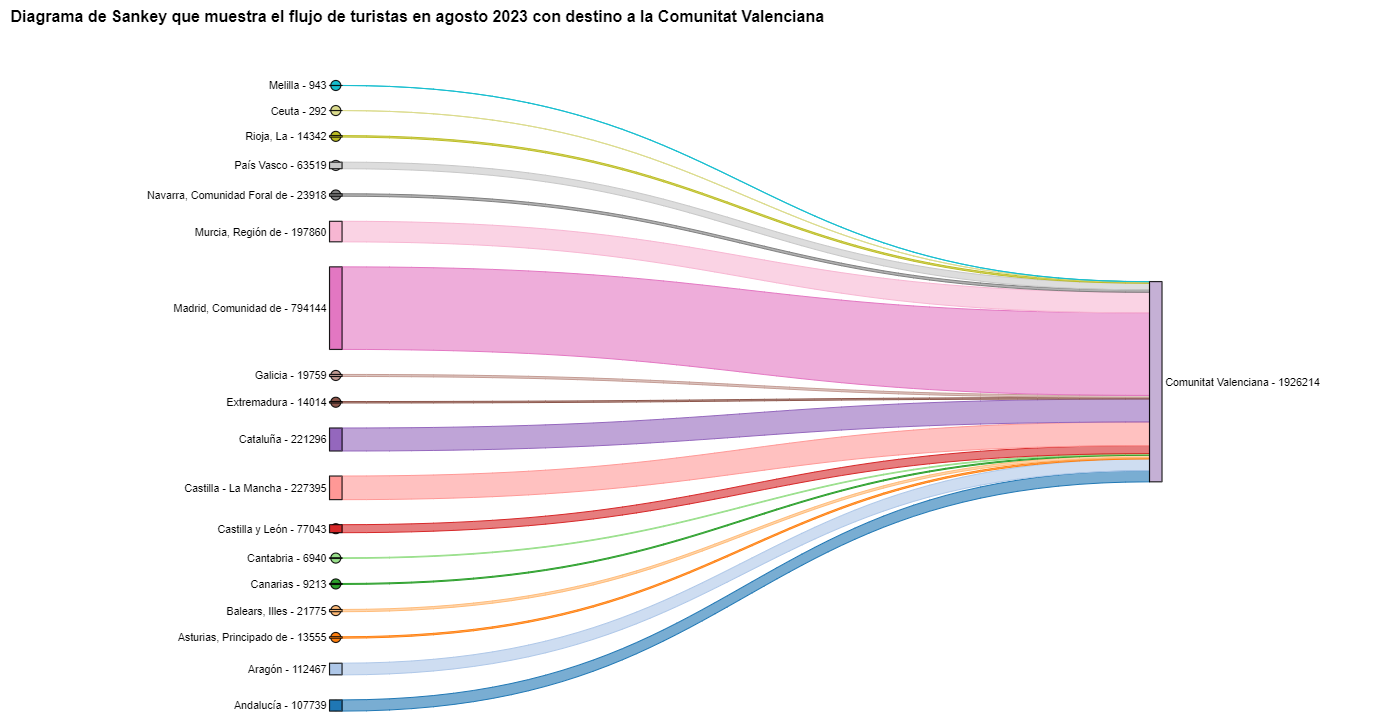

For the Sankey diagrams, we will focus on the Valencian Community, as it is a popular holiday destination. We filter the edges we created for the previous chord diagram so that they only contain flows that end in the Valencian Community. The same procedure could be applied to study any other CA or could be inverted to analyze where Valencians go on vacation. We visualize the Sankey diagram which, like the chord diagrams, is interactive within the notebook. The visual aspect would be like this:

Figure 9. Sankey diagram showing the flow of tourists in August 2023 destined for the Valencian Community.
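The filtering step can be sketched as below, assuming the edges are held in a DataFrame with `source`, `target` and `value` columns. These column names, the helper names and the sample figures are assumptions for illustration only.

```python
import pandas as pd

# Hypothetical edges; values are illustrative, not taken from the dataset.
edges = pd.DataFrame({
    "source": ["Madrid", "Murcia", "Madrid"],
    "target": ["Valencian Community", "Valencian Community", "Andalucía"],
    "value": [500.0, 200.0, 100.0],
})

def flows_into(edges: pd.DataFrame, destination: str) -> pd.DataFrame:
    """Keep only the edges that end in the given destination."""
    return edges[edges["target"] == destination].reset_index(drop=True)

def flows_out_of(edges: pd.DataFrame, origin: str) -> pd.DataFrame:
    """Inverse view: keep only the edges that start at the given origin."""
    return edges[edges["source"] == origin].reset_index(drop=True)

def draw_sankey(edges: pd.DataFrame):
    """Render the edges as a Sankey diagram (requires holoviews and bokeh)."""
    import holoviews as hv  # imported lazily so the filters work without it
    hv.extension("bokeh")
    return hv.Sankey(edges)

to_valencia = flows_into(edges, "Valencian Community")
print(to_valencia)
```

If a region appears as both origin and destination, the labels may need to be made distinct before plotting, since Sankey layouts are designed for acyclic graphs.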

As we could already intuit from the chord diagram above, see Figure 8, the largest group of tourists arriving in the Valencian Community comes from Madrid. We also see that there is a high number of tourists visiting the Valencian Community from neighboring CCAA such as Murcia, Andalucía, and Catalonia.

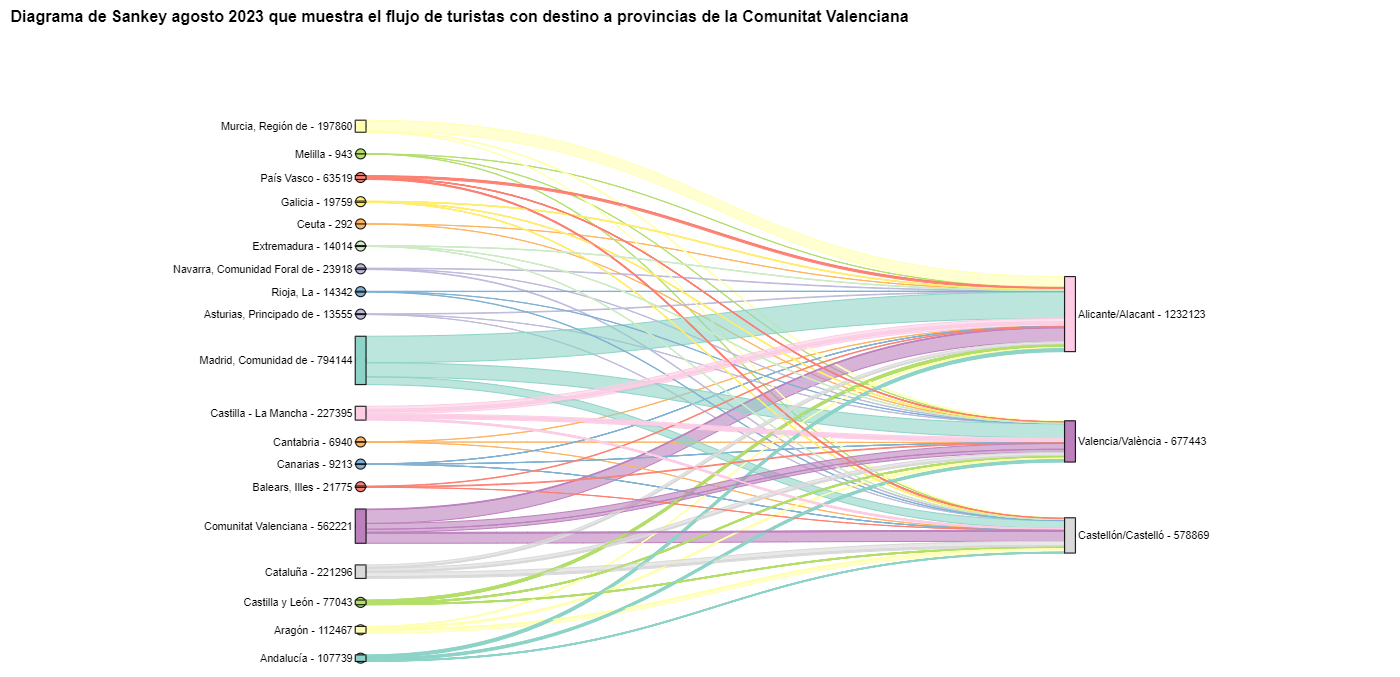

To verify that these trends occur in the three provinces of the Valencian Community, we are going to create a Sankey diagram that shows on the left margin all the CCAA and on the right margin the three provinces of the Valencian Community.

To create this Sankey diagram at the provincial level, we have to filter our initial pandas.DataFrame to extract the relevant information from it. The steps in the notebook can be adapted to perform this analysis at the provincial level for any other CA. Although we are not reusing the function we used previously, we can also change the analysis period.

The Sankey diagram that visualizes the tourist flows that arrived in August 2023 to the three Valencian provinces would look like this:

Figure 10. Sankey diagram August 2023 showing the flow of tourists destined for provinces of the Valencian Community.

We can observe that, as we already assumed, the largest number of tourists arriving in the Valencian Community in August comes from the Community of Madrid. However, we can verify that this is not true for the province of Castellón, where in August 2023 the majority of tourists were Valencians who traveled within their own CA.

6. Conclusions of the exercise

Thanks to the visualization techniques used in this exercise, we have been able to observe the tourist flows that move within the national territory, focusing on making comparisons between different times of the year and trying to identify patterns. In both the chord diagrams and the Sankey diagrams that we have created, we have been able to observe the influx of Madrilenian tourists on the Valencian coasts in summer. We have also been able to identify the autonomous communities where tourists leave their own autonomous community the least, such as Catalonia and Andalucía.

7. Do you want to do the exercise?

We invite the reader to execute the code contained in the Google Colab notebook that accompanies this exercise to continue with the analysis of tourist flows. We leave here some ideas of possible questions and how they could be answered:

- The impact of the pandemic: we have already mentioned it briefly above, but an interesting question would be to measure the impact that the coronavirus pandemic has had on tourism. We can compare the data from previous years with 2020 and also analyze the following years to detect stabilization trends. Given that the function we have created allows easily changing the time period under analysis, we suggest you do this analysis on your own.

- Time intervals: it is also possible to modify the function we have been using in such a way that it not only allows selecting a specific time period, but also allows time intervals.

- Provincial level analysis: likewise, a reader with advanced pandas skills can challenge themselves to create a Sankey diagram that visualizes which provinces the inhabitants of a certain province travel to, for example, Ourense. To avoid having so many destination provinces that the Sankey diagram becomes illegible, only the 10 most visited could be visualized. To obtain the data for this visualization, the reader would have to experiment with the filters applied to the dataset and with the groupby method of pandas, taking inspiration from the code already executed.
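As a starting point for the last two ideas, the following sketch shows one possible way to select a time interval and to keep only the most visited destination provinces. The helper names and the sample values are hypothetical; the column names follow the clean dataset in Figure 4.

```python
import pandas as pd

# Hypothetical sample in the shape of the clean dataset (values are made up).
clean = pd.DataFrame({
    "Province of origin": ["Ourense"] * 4,
    "Province of destination": ["Madrid", "Madrid", "Lugo", "Almería"],
    "Period": ["2020M06", "2020M07", "2020M07", "2020M09"],
    "Total": [10.0, 20.0, 5.0, 99.0],
})

def filter_period_range(df: pd.DataFrame, start: str, end: str) -> pd.DataFrame:
    """Select rows whose Period lies in [start, end], both inclusive."""
    # Periods such as '2024M03' are zero-padded, so string order matches time order.
    return df[df["Period"].between(start, end)]

def top_destinations(df: pd.DataFrame, origin_province: str, n: int = 10) -> pd.Series:
    """Total tourists per destination province for one origin, n largest only."""
    return (
        df[df["Province of origin"] == origin_province]
        .groupby("Province of destination")["Total"].sum()
        .nlargest(n)
    )

summer = filter_period_range(clean, "2020M06", "2020M08")
top = top_destinations(summer, "Ourense", n=1)
print(top)
```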

We hope that this practical exercise has provided you with sufficient knowledge to develop your own visualizations. If you have any data science topic that you would like us to cover soon, do not hesitate to propose your interest through our contact channels.

In addition, remember that you have more exercises available in the section "Data science exercises".

1. Introduction

Visualisations are graphical representations of data that make it possible to communicate, in a simple and effective way, the information linked to the data. The visualisation possibilities are very wide, ranging from basic representations such as line graphs, bar charts or relevant metrics to interactive dashboards.

In this "Step-by-Step Visualisations" section we regularly present practical exercises that make use of open data available at datos.gob.es or other similar catalogues. They address and describe in a simple way the steps necessary to obtain the data, carry out the relevant transformations and analyses, and finally draw conclusions, summarizing the information.

Documented code developments and free-to-use tools are used in each practical exercise. All the material generated is available for reuse in the GitHub repository of datos.gob.es.

In this particular exercise, we will explore the current state of electric vehicle penetration in Spain and the future prospects for this disruptive technology in transport.

Access the data lab repository on Github.

Run the data pre-processing code on Google Colab.

In this video (available with English subtitles), the author explains what you will find both on Github and Google Colab.

2. Context: why is the electric vehicle important?

The transition towards more sustainable mobility has become a global priority, placing the electric vehicle (EV) at the centre of many discussions on the future of transport. In Spain, this trend towards the electrification of the car fleet not only responds to a growing consumer interest in cleaner and more efficient technologies, but also to a regulatory and incentive framework designed to accelerate the adoption of these vehicles. With a growing range of electric models available on the market, electric vehicles represent a key part of the country's strategy to reduce greenhouse gas emissions, improve urban air quality and foster technological innovation in the automotive sector.

However, the penetration of EVs in the Spanish market faces a number of challenges, from charging infrastructure to consumer perception and knowledge of EVs. Expansion of the charging network, together with supportive policies and fiscal incentives, is key to overcoming existing barriers and stimulating demand. As Spain moves towards its sustainability and energy transition goals, analysing the evolution of the electric vehicle market becomes an essential tool to understand the progress made and the obstacles that still need to be overcome.

3. Objective

This exercise focuses on showing the reader techniques for the processing, visualisation and advanced analysis of open data using Python. We will adopt a "learning-by-doing" approach so that the reader can understand the use of these tools in the context of solving a real and topical challenge such as the study of EV penetration in Spain. This hands-on approach not only enhances understanding of data science tools, but also prepares readers to apply this knowledge to solve real problems, providing a rich learning experience that is directly applicable to their own projects.

The questions we will try to answer through our analysis are:

- Which vehicle brands led the market in 2023?

- Which vehicle models were the best-selling in 2023?

- What market share did electric vehicles capture in 2023?

- Which electric vehicle models were the best-selling in 2023?

- How have vehicle registrations evolved over time?

- Are we seeing any trends in electric vehicle registrations?

- How do we expect electric vehicle registrations to develop next year?

- How much CO2 emission reduction can we expect from the registrations achieved over the next year?

4. Resources

To complete the development of this exercise we will require the use of two categories of resources: Analytical Tools and Datasets.

4.1. Dataset

To complete this exercise we will use a dataset provided by the Dirección General de Tráfico (DGT) through its statistical portal, also available from the National Open Data catalogue (datos.gob.es). The DGT statistical portal is an online platform aimed at providing public access to a wide range of data and statistics related to traffic and road safety. This portal includes information on traffic accidents, offences, vehicle registrations, driving licences and other relevant data that can be useful for researchers, industry professionals and the general public.

In our case, we will use their dataset of vehicle registrations in Spain available via:

- Open Data Catalogue of the Spanish Government.

- Statistical portal of the DGT.

Although during the development of the exercise we will show the reader the necessary mechanisms for downloading and processing, we include pre-processed data in the associated GitHub repository, so that the reader can proceed directly to the analysis of the data if desired.

*The data used in this exercise were downloaded on 04 March 2024. The licence applicable to this dataset can be found at https://datos.gob.es/avisolegal.

4.2. Analytical tools

- Programming language: Python - a programming language widely used in data analysis due to its versatility and the wide range of libraries available. These tools allow users to clean, analyse and visualise large datasets efficiently, making Python a popular choice among data scientists and analysts.

- Platform: Jupyter Notebooks - a web application that allows you to create and share documents containing live code, equations, visualisations and narrative text. It is widely used for data science, data analytics, machine learning and interactive programming education.

- Main libraries and modules:

- Data manipulation: Pandas - an open source library that provides high-performance, easy-to-use data structures and data analysis tools.

- Data visualisation:

- Matplotlib: a library for creating static, animated and interactive visualisations in Python.

- Seaborn: a library based on Matplotlib. It provides a high-level interface for drawing attractive and informative statistical graphs.

- Statistics and algorithms:

- Statsmodels: a library that provides classes and functions for estimating many different statistical models, as well as for testing and exploring statistical data.

- Pmdarima: a library specialised in automatic time series modelling, facilitating the identification, fitting and validation of models for complex forecasts.

5. Exercise development

It is advisable to run the Notebook with the code while reading the post, as the two teaching resources complement each other.

The proposed exercise is divided into three main phases.

5.1 Initial configuration

This section can be found in point 1 of the Notebook.

In this short first section, we will configure our Jupyter Notebook and our working environment to be able to work with the selected dataset. We will import the necessary Python libraries and create some directories where we will store the downloaded data.

5.2 Data preparation

This section can be found in point 2 of the Notebook.

All data analysis requires a phase of accessing and processing to obtain the appropriate data in the desired format. In this phase, we will download the data from the statistical portal and transform it into the Apache Parquet format before proceeding with the analysis.
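A rough sketch of what this transformation might involve is shown below. The column names, the `ddmmyyyy` date format and the helpers `prepare` and `save_parquet` are assumptions for illustration; the notebook's actual processing of the DGT files may differ. Writing Parquet with pandas requires an engine such as pyarrow to be installed.

```python
import pandas as pd

def prepare(df: pd.DataFrame) -> pd.DataFrame:
    """Parse dates and store repetitive text columns as categories."""
    out = df.copy()
    out["date"] = pd.to_datetime(out["date"], format="%d%m%Y")  # assumed format
    for col in ("brand", "propulsion"):
        out[col] = out[col].astype("category")
    return out

def save_parquet(df: pd.DataFrame, path: str) -> None:
    """Write the frame to Parquet (needs pyarrow or fastparquet installed)."""
    df.to_parquet(path, index=False)

# Hypothetical records standing in for the downloaded registrations.
raw = pd.DataFrame({
    "date": ["15012023", "03022023"],
    "brand": ["SEAT", "TESLA"],
    "propulsion": ["petrol", "electric"],
})
tidy = prepare(raw)
print(tidy.dtypes)
```

Calling `save_parquet(tidy, "registrations.parquet")` would then store the result in a columnar file that later steps can reload quickly.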

Users who want to go deeper into this task can read the Practical Introductory Guide to Exploratory Data Analysis.

5.3 Data analysis

This section can be found in point 3 of the Notebook.

5.3.1 Descriptive analysis

In this third phase, we will begin our data analysis. To do so, we will answer the first questions using data visualisation tools to familiarise ourselves with the data. Some examples of the analysis are shown below:

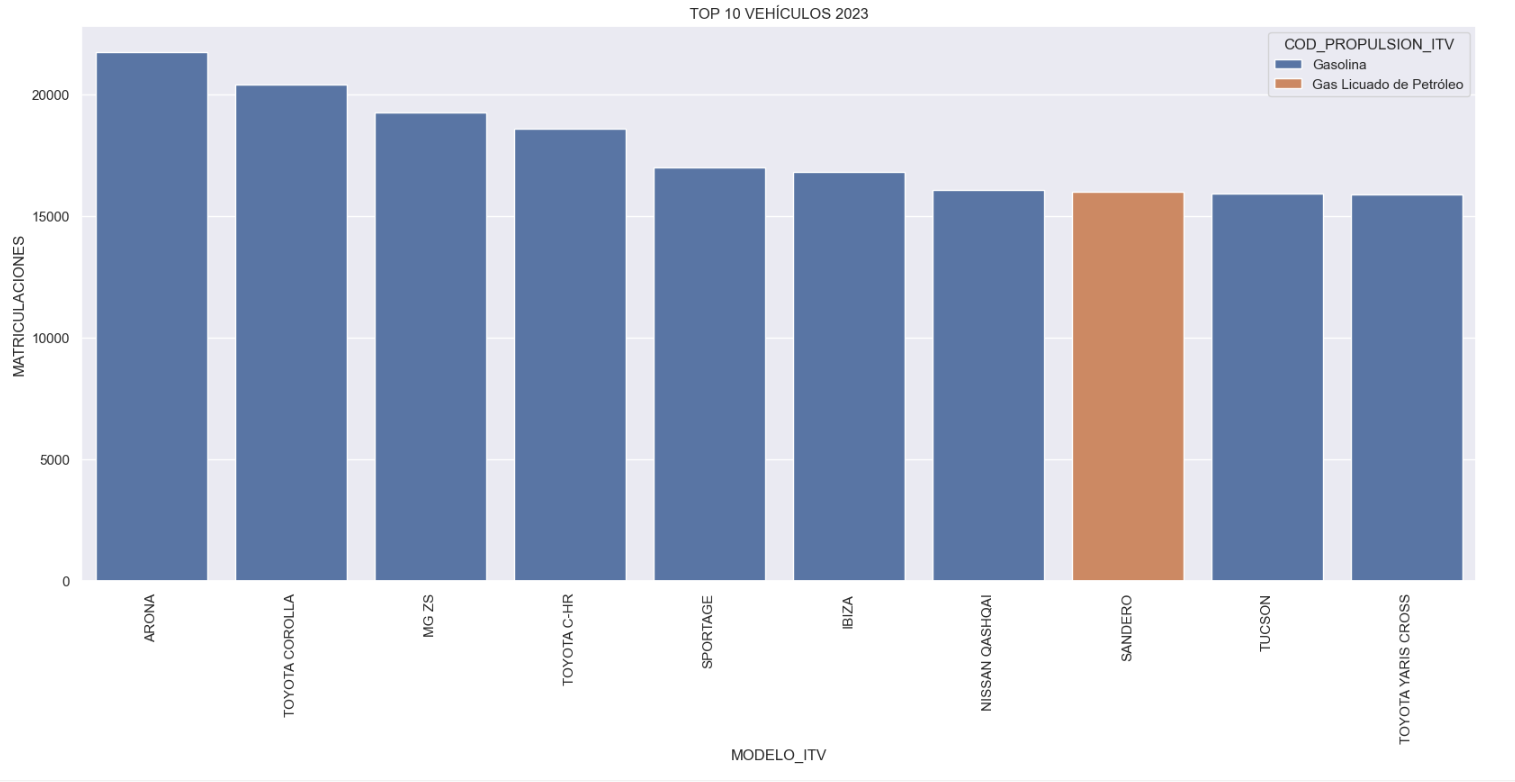

- Top 10 Vehicles registered in 2023: In this visualisation we show the ten vehicle models with the highest number of registrations in 2023, also indicating their combustion type. The main conclusions are:

- The only European-made vehicles in the Top 10 are the Arona and the Ibiza from Spanish brand SEAT. The rest are Asian.

- Nine of the ten vehicles are powered by petrol.

- The only vehicle in the Top 10 with a different type of propulsion is the DACIA Sandero LPG (Liquefied Petroleum Gas).

Figure 1. Graph "Top 10 vehicles registered in 2023"

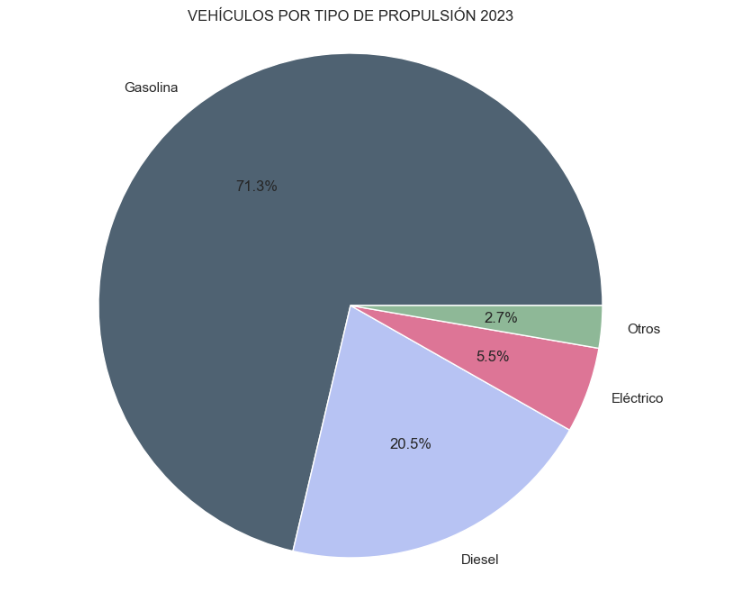

- Market share by propulsion type: In this visualisation we represent the percentage of vehicles registered by each type of propulsion (petrol, diesel, electric or other). We see how the vast majority of the market (>70%) was taken up by petrol vehicles, with diesel being the second choice, and how electric vehicles reached 5.5%.

Figure 2. Graph "Market share by propulsion type".

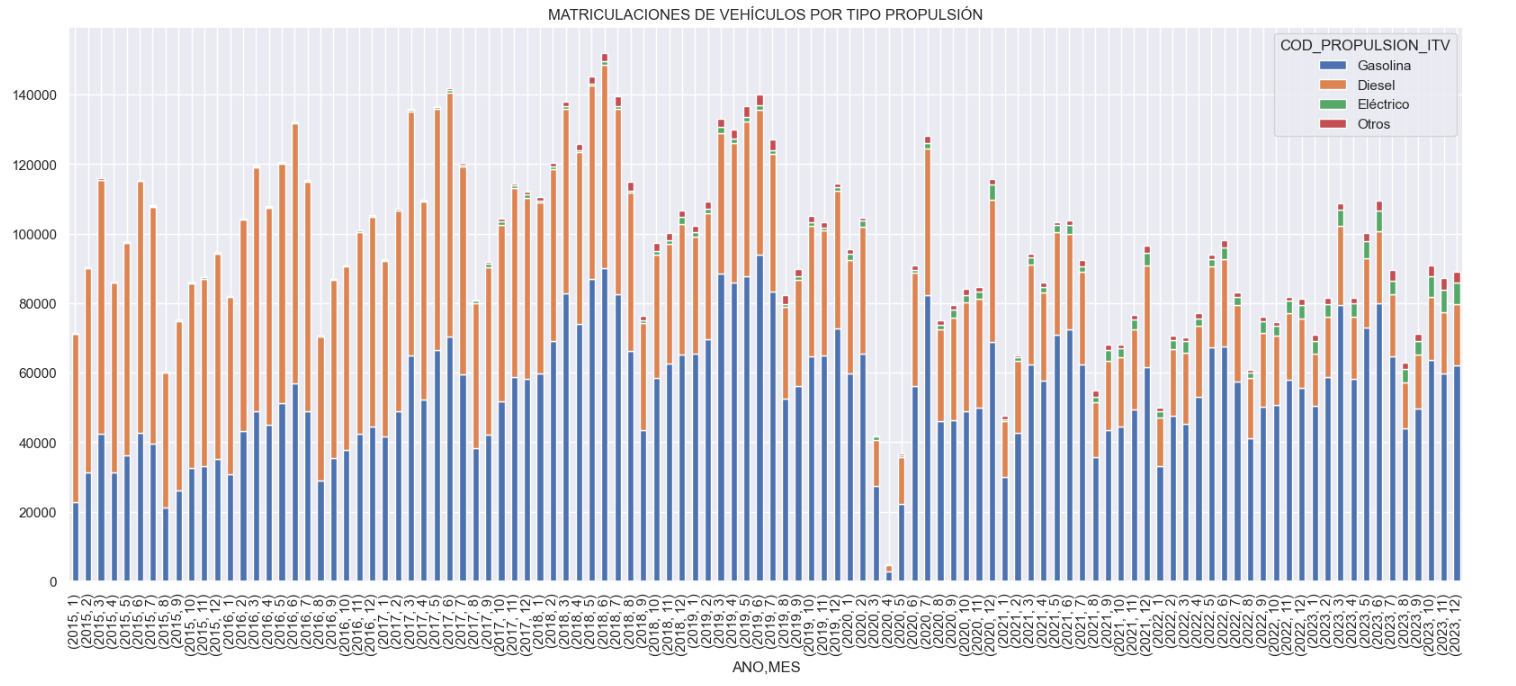

- Historical development of registrations: This visualisation represents the evolution of vehicle registrations over time. It shows the monthly number of registrations between January 2015 and December 2023 distinguishing between the propulsion types of the registered vehicles, and there are several interesting aspects of this graph:

- We observe an annual seasonal behaviour, i.e. patterns or variations that repeat at regular time intervals. Registrations are recurrently high in June/July and drop sharply in August/September. This is very relevant, as the analysis of time series with a seasonal factor has certain particularities.

- The huge drop in registrations during the first months of COVID is also very remarkable.

- We also see that post-COVID registration levels are lower than before.

- Finally, we can see how the registration of electric vehicles gradually increased between 2015 and 2023.

Figure 3. Graph "Vehicle registrations by propulsion type".

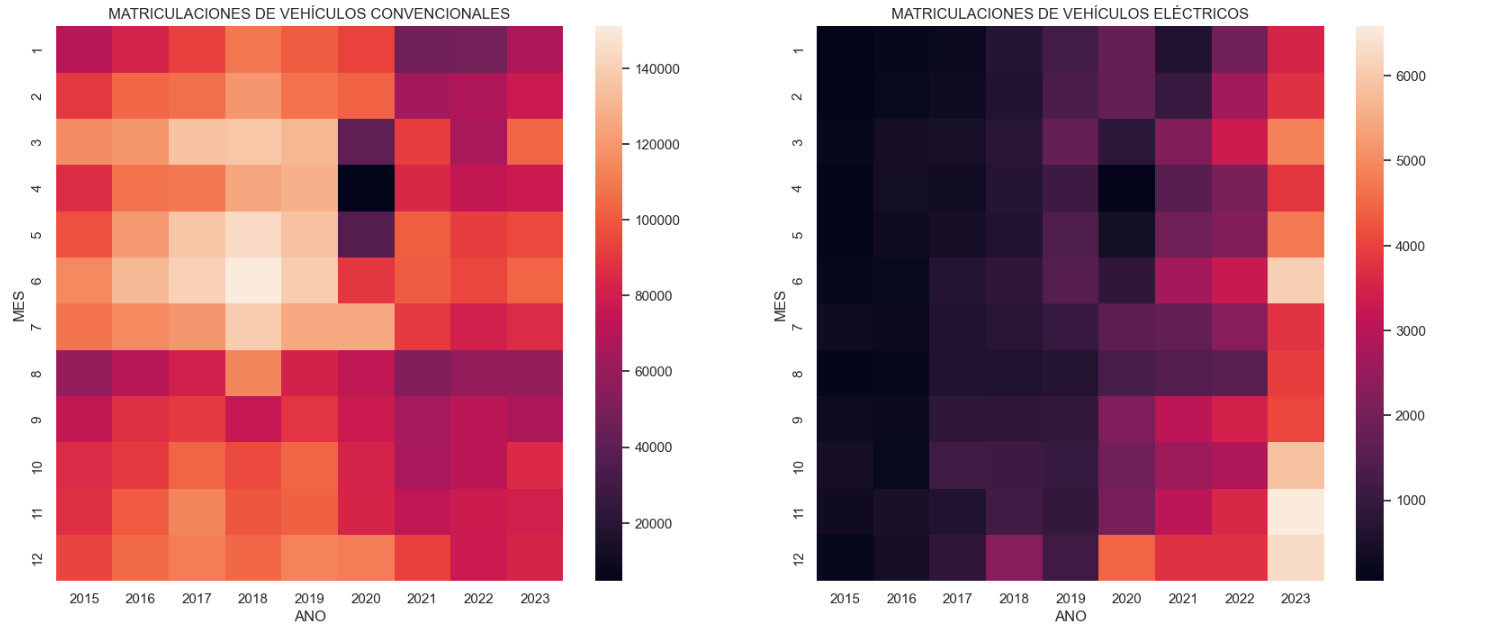

- Trend in the registration of electric vehicles: We now analyse the evolution of electric and non-electric vehicles separately using heat maps as a visual tool. We can observe very different behaviours between the two graphs. Electric vehicle registrations show an increasing trend year by year and, although COVID brought vehicle registrations to a halt, subsequent years have maintained the upward trend.

Figure 4. Graph "Trend in registration of conventional vs. electric vehicles".
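The aggregations behind charts such as the market share and the monthly evolution can be sketched with pandas as follows. The records and the column names `date` and `propulsion` are hypothetical and only illustrate the shape of the calculation.

```python
import pandas as pd

# Hypothetical registration records: one row per registered vehicle.
registrations = pd.DataFrame({
    "date": pd.to_datetime(
        ["2023-01-15", "2023-01-20", "2023-02-03", "2023-02-10", "2023-02-25"]
    ),
    "propulsion": ["petrol", "electric", "petrol", "diesel", "petrol"],
})

# Market share (%) by propulsion type.
share = registrations["propulsion"].value_counts(normalize=True).mul(100).round(1)

# Monthly registrations per propulsion type (rows: month, columns: propulsion).
monthly = (
    registrations
    .groupby([registrations["date"].dt.to_period("M"), "propulsion"])
    .size()
    .unstack(fill_value=0)
)

print(share)
print(monthly)
```

A table like `monthly` is the kind of input that line charts or heat maps (for example with Matplotlib or Seaborn) can be drawn from.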

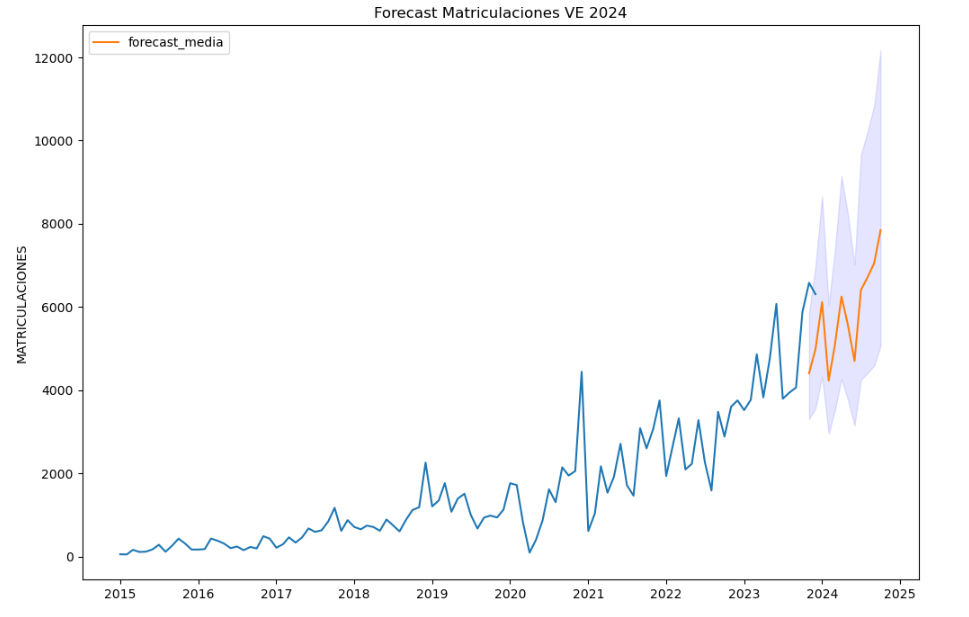

5.3.2. Predictive analytics

To answer the last question objectively, we will use predictive models that allow us to make estimates regarding the evolution of electric vehicles in Spain. As we can see, the model constructed projects continued growth, with around 70,000 registrations over the course of the year and values close to 8,000 registrations in the month of December 2024 alone.

Figure 5. Graph "Predicted electric vehicle registrations".
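To give an idea of how such a forecast can be produced, the sketch below pairs a seasonal-naive baseline (a deliberately simple stand-in, not the model used in the notebook) with a thin wrapper around `pmdarima.auto_arima`. The function names, the synthetic series and the choice of a 12-month season are assumptions.

```python
import pandas as pd

def seasonal_naive(series: pd.Series, periods: int = 12, season: int = 12) -> pd.Series:
    """Baseline forecast: repeat the last observed season."""
    last_season = series.iloc[-season:].to_numpy()
    future_index = pd.period_range(start=series.index[-1] + 1, periods=periods)
    values = [last_season[i % season] for i in range(periods)]
    return pd.Series(values, index=future_index)

def auto_arima_forecast(series: pd.Series, periods: int = 12):
    """Fit a seasonal ARIMA automatically and forecast (requires pmdarima)."""
    import pmdarima as pm  # imported lazily: only needed for this function
    model = pm.auto_arima(series.to_numpy(), seasonal=True, m=12)
    return model.predict(n_periods=periods)

# Synthetic monthly history (not real registration data).
history = pd.Series(
    range(1, 25),
    index=pd.period_range("2022-01", periods=24, freq="M"),
)
forecast = seasonal_naive(history)
print(forecast)
```

Comparing a fitted model against a baseline of this kind is a common way to judge whether the extra complexity pays off.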

6. Conclusions

As a conclusion of the exercise, the analysis techniques used show that the electric vehicle, a segment for now led by the manufacturer Tesla, is penetrating the Spanish vehicle fleet at an increasing speed, although it is still a long way behind other alternatives such as diesel or petrol. We will see in the coming years whether the pace grows at the level needed to meet the sustainability targets set and whether Tesla remains the leader despite the strong entry of Asian competitors.

7. Do you want to do the exercise?

If you want to learn more about the Electric Vehicle and test your analytical skills, go to this code repository where you can develop this exercise step by step.

Also, remember that you have more exercises at your disposal in the "Step-by-step visualisations" section.

Content elaborated by Juan Benavente, industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

Today, 23 April, is World Book Day, an occasion to highlight the importance of reading, writing and the dissemination of knowledge. Active reading promotes the acquisition of skills and critical thinking by bringing us closer to specialised and detailed information on any subject that interests us, including the world of data.

Therefore, we would like to take this opportunity to showcase some examples of books and manuals regarding data and related technologies that can be found on the web for free.

1. Fundamentals of Data Science with R, edited by Gema Fernandez-Avilés and José María Montero (2024)

Access the book here.

- What is it about? The book guides the reader from the problem statement to the completion of the report containing the solution to the problem. It explains some thirty data science techniques in the fields of modelling, qualitative data analysis, discrimination, supervised and unsupervised machine learning, etc. It includes more than a dozen use cases in sectors as diverse as medicine, journalism, fashion and climate change, among others. All this, with a strong emphasis on ethics and the promotion of reproducibility of analyses.

- Who is it aimed at? It is aimed at users who want to get started in data science. It starts with basic questions, such as what is data science, and includes short sections with simple explanations of probability, statistical inference or sampling, for those readers unfamiliar with these issues. It also includes replicable examples for practice.

- Language: Spanish.

2. Telling stories with data, Rohan Alexander (2023).

Access the book here.

- What is it about? The book explains a wide range of topics related to statistical communication and data modelling and analysis. It covers the various operations from data collection, cleaning and preparation to the use of statistical models to analyse the data, with particular emphasis on the need to draw conclusions and write about the results obtained. Like the previous book, it also focuses on ethics and reproducibility of results.

- Who is it aimed at? It is ideal for students and entry-level users, equipping them with the skills to effectively conduct and communicate a data science exercise. It includes extensive code examples for replication and activities to be carried out as evaluation.

- Language: English.

3. The Big Book of Small Python Projects, Al Sweigart (2021)

Access the book here.

- What is it about? It is a collection of simple Python projects to learn how to create digital art, games, animations, numerical tools, etc. through a hands-on approach. Each of its 81 chapters independently explains a simple step-by-step project - limited to a maximum of 256 lines of code. It includes a sample run of the output of each programme, source code and customisation suggestions.

- Who is it aimed at? The book is written for two groups of people. On the one hand, those who have already learned the basics of Python, but are still not sure how to write programs on their own. On the other hand, those who are new to programming, but are adventurous, enthusiastic and want to learn as they go along. However, the same author has other resources for beginners to learn basic concepts.

- Language: English.

4. Mathematics for Machine Learning, Marc Peter Deisenroth, A. Aldo Faisal, Cheng Soon Ong (2024)

Access the book here.

- What is it about? Most books on machine learning focus on machine learning algorithms and methodologies, and assume that the reader is proficient in mathematics and statistics. This book foregrounds the mathematical foundations of the basic concepts behind machine learning.

- Who is it aimed at? The authors assume that the reader has mathematical knowledge commonly learned in high school mathematics and physics subjects, such as derivatives and integrals or geometric vectors. Thereafter, the remaining concepts are explained in detail, but in an academic style, in order to be precise.

- Language: English.

5. Dive into Deep Learning, Aston Zhang, Zack C. Lipton, Mu Li, Alex J. Smola (2021, continually updated)

Access the book here.

- What is it about? The authors are Amazon employees who use the MXNet library to teach deep learning. The book aims to make deep learning accessible, teaching basic concepts, context and code in a practical way through examples and exercises. It is divided into three parts: introductory concepts, deep learning techniques and advanced topics focusing on real systems and applications.

- Who is it aimed at? This book is aimed at students (undergraduate and postgraduate), engineers and researchers, who are looking for a solid grasp of the practical techniques of deep learning. Each concept is explained from scratch, so no prior knowledge of deep or machine learning is required. However, knowledge of basic mathematics and programming is necessary, including linear algebra, calculus, probability and Python programming.

- Language: English.

6. Artificial intelligence and the public sector: challenges, limits and means, Eduardo Gamero and Francisco L. Lopez (2024)

Access the book here.

- What is it about? This book focuses on analysing the challenges and opportunities presented by the use of artificial intelligence in the public sector, especially when used to support decision-making. It begins by explaining what artificial intelligence is and what its applications in the public sector are, and then moves on to its legal framework, the means available for its implementation and aspects linked to organisation and governance.

- Who is it aimed at? It is a useful book for all those interested in the subject, but especially for policy makers, public workers and legal practitioners involved in the application of AI in the public sector.

- Language: Spanish.

7. A Business Analyst’s Introduction to Business Analytics, Adam Fleischhacker (2024)

Access the book here.

- What is it about? The book covers a complete business analytics workflow, including data manipulation, data visualisation, modelling business problems, translating graphical models into code and presenting results to stakeholders. The aim is to learn how to drive change within an organisation through data-driven knowledge, interpretable models and persuasive visualisations.

- Who is it aimed at? According to the author, the content is accessible to everyone, including beginners in analytical work. The book does not assume any knowledge of the programming language, but provides an introduction to R, RStudio and the "tidyverse", a series of open source packages for data science.

- Language: English.

We invite you to browse through this selection of books. We would also like to remind you that this is only a list of examples of the materials that you can find on the web. Do you know of any other books you would like to recommend? Let us know in the comments or email us at dinamizacion@datos.gob.es!

1. Introduction

Visualizations are graphical representations of data that allow you to communicate, in a simple and effective way, the information linked to it. The visualization possibilities are very extensive, from basic representations such as line graphs, bar graphs or relevant metrics, to visualizations configured on interactive dashboards.

In the section of “Step-by-step visualizations” we are periodically presenting practical exercises making use of open data available in datos.gob.es or other similar catalogs. They address and describe in a simple way the steps necessary to obtain the data, carry out the transformations and analyses that are pertinent to finally obtain conclusions as a summary of this information.

In each of these hands-on exercises, conveniently documented code developments are used, as well as free-to-use tools. All generated material is available for reuse in the datos.gob.es GitHub repository.

Access the data lab repository on Github.

Run the data pre-processing code on Google Colab.

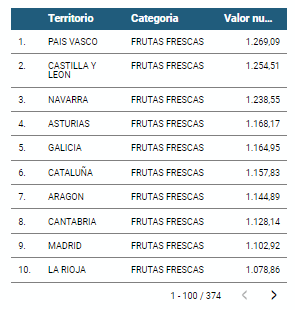

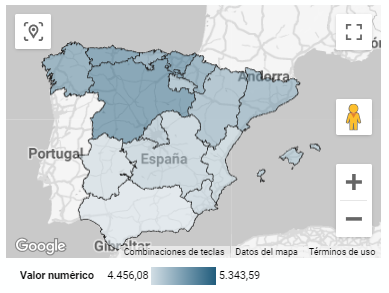

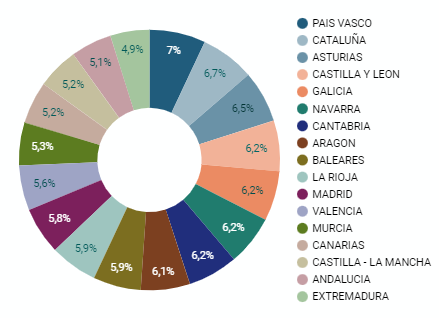

2. Objective

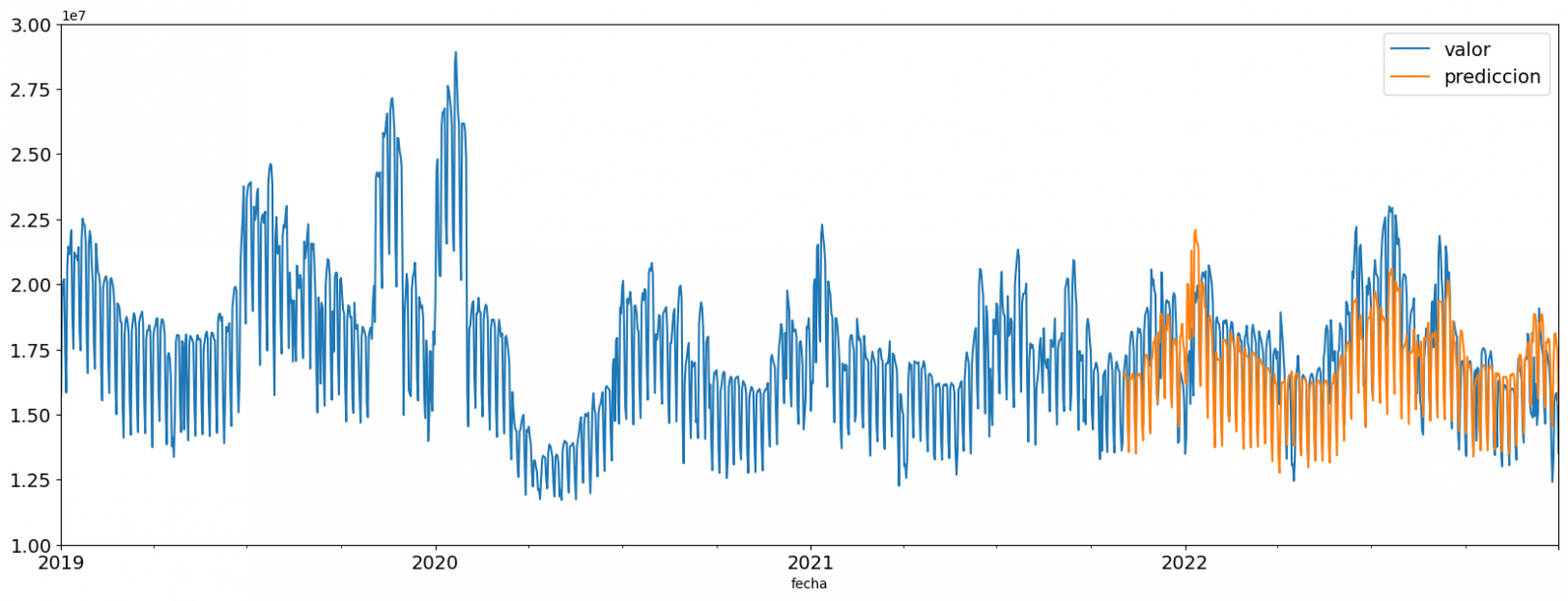

The main objective of this exercise is to show how to carry out, in a didactic way, a predictive analysis of time series based on open data on electricity consumption in the city of Barcelona. To do this, we will carry out an exploratory analysis of the data, define and validate the predictive model, and finally generate the predictions together with their corresponding graphs and visualizations.

Predictive time series analytics are statistical and machine learning techniques used to forecast future values in datasets that are collected over time. These predictions are based on historical patterns and trends identified in the time series, with their primary purpose being to anticipate changes and events based on past data.

The initial open dataset consists of records from 2019 to 2022 inclusive. The predictions, on the other hand, will be made for the year 2023, for which we do not have real data.

Once the analysis has been carried out, we will be able to answer questions such as the following:

- What is the future prediction of electricity consumption?

- How accurate has the model been with the prediction of already known data?

- Which days will have maximum and minimum consumption based on future predictions?

- Which months will have a maximum and minimum average consumption according to future predictions?

These and many other questions can be solved through the visualizations obtained in the analysis, which will show the information in an orderly and easy-to-interpret way.

3. Resources

3.1. Datasets

The open datasets used contain information on electricity consumption in the city of Barcelona in recent years. The information they provide is the consumption in MWh broken down by day, economic sector, zip code and time slot.

These open datasets are published by Barcelona City Council in the datos.gob.es catalogue, through files that collect the records on an annual basis. It should be noted that the publisher updates these datasets with new records frequently, so we have used only the data provided from 2019 to 2022 inclusive.

These datasets are also available for download from the following Github repository.

3.2. Tools

The analysis was carried out in Python, in a Jupyter Notebook hosted on the Google Colab cloud service.

"Google Colab", also called Google Colaboratory, is a cloud service from Google Research that allows you to program, execute and share code written in Python or R on top of a Jupyter Notebook from your browser, so it requires no configuration. This service is free of charge.

The Looker Studio tool was used to create the interactive visualizations.

"Looker Studio", formerly known as Google Data Studio, is an online tool that allows you to make interactive visualizations that can be inserted into websites or exported as files.

If you want to know more about tools that can help you in data processing and visualization, you can refer to the "Data processing and visualization tools" report.

4. Predictive time series analysis

Predictive time series analysis is a technique that uses historical data to predict future values of a variable that changes over time. A time series is data collected at regular intervals, such as days, weeks, months, or years. This exercise does not explain the characteristics of time series in detail, since it focuses on briefly explaining the prediction model. However, if you want to know more about them, you can consult the following manual.

This type of analysis assumes that the future values of a variable will be correlated with historical values. Using statistical and machine learning techniques, patterns in historical data can be identified and used to predict future values.

The predictive analysis carried out in the exercise has been divided into five phases (data preparation, exploratory data analysis, model training, model validation, and prediction of future values), which will be explained in the following sections.

The processes described below are developed and commented on in the following Notebook, which can be run from Google Colab, along with the source code that is available in our Github account.

It is advisable to run the Notebook while reading the post, since the two resources complement each other.

4.1 Data preparation

This section can be found in point 1 of the Notebook.

In this section, we import the open datasets described above. We pay special attention to how they are obtained and to validating their content, checking that they are in an appropriate and consistent format for processing and that they do not contain errors that could condition later steps.

4.2 Exploratory Data Analysis (EDA)

This section can be found in point 2 of the Notebook.

In this section we will carry out an exploratory data analysis (EDA) in order to properly interpret the source data and detect anomalies, missing data, errors or outliers that could affect the quality of subsequent processes and results.

In the following interactive visualization, you can inspect the data table with the historical consumption values generated in the previous point and filter it by a specific period. In this way, we can visually understand the main information in the data series.

Once you have inspected the interactive visualization of the time series, you will have observed several values that could potentially be considered outliers, as shown in the figure below. We can also numerically calculate these outliers, as shown in the notebook.
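As an illustration of how outliers can be calculated numerically, the following minimal sketch applies the interquartile range (IQR) rule. This is an assumption: the post does not state which criterion the notebook uses, and the function name, the factor `k=1.5` and the sample values are hypothetical.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return the values lying outside [Q1 - k*IQR, Q3 + k*IQR]."""
    # statistics.quantiles with n=4 returns the three quartile cut points.
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# Hypothetical daily consumption values (not taken from the dataset).
sample = [10, 11, 12, 11, 10, 12, 11, 30]
outliers = iqr_outliers(sample)
print(outliers)
```

A rule like this only flags candidates. Whether a flagged value is really an error still has to be judged in context, as is done in the next step.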

Once the outliers have been evaluated, for this year it has been decided to modify only the one registered on the date "2022-12-05". To do this, the value will be replaced by the average of the value recorded the previous day and the day after.
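The replacement described above can be sketched as follows. This is a simplified illustration rather than the notebook's code: the series is represented as a plain dictionary keyed by date, the function name is hypothetical and the consumption values are invented.

```python
from datetime import date, timedelta


def replace_with_neighbour_mean(series, day):
    """Return a copy of `series` where `day` holds the mean of the previous and next day."""
    one_day = timedelta(days=1)
    fixed = dict(series)  # copy, so the original series is left untouched
    fixed[day] = (series[day - one_day] + series[day + one_day]) / 2
    return fixed


# Hypothetical values around the corrected date (not taken from the dataset).
series = {
    date(2022, 12, 4): 100.0,
    date(2022, 12, 5): 900.0,  # the value treated as an outlier
    date(2022, 12, 6): 120.0,
}
cleaned = replace_with_neighbour_mean(series, date(2022, 12, 5))
print(cleaned[date(2022, 12, 5)])
```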

The rest of the outliers are not removed because they were recorded on consecutive days, so it is assumed that they are correct values affected by external variables that are beyond the scope of the exercise. Once the problem detected with the outliers has been solved, this is the time series that we will use in the following sections.

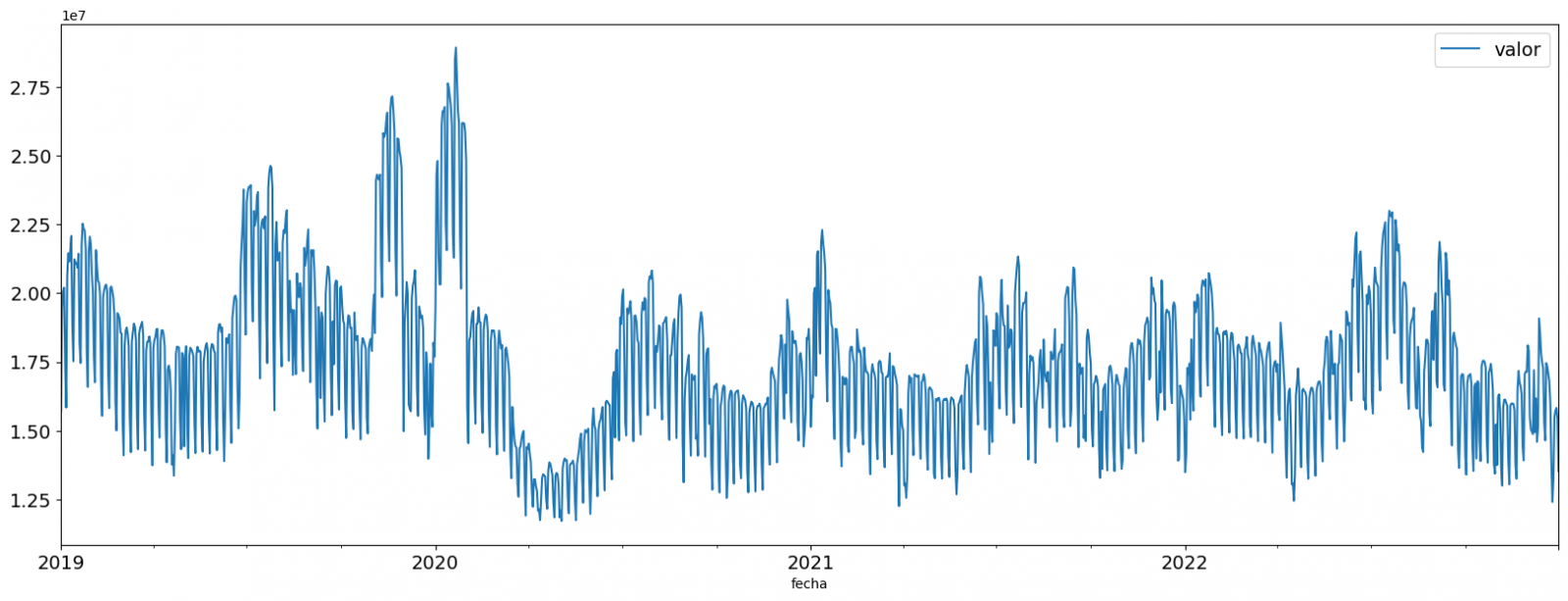

Figure 2. Time series of historical data after outliers have been processed.

If you want to know more about these processes, you can refer to the Practical Guide to Introduction to Exploratory Data Analysis.

4.3 Model training

This section can be found in point 3 of the Notebook.

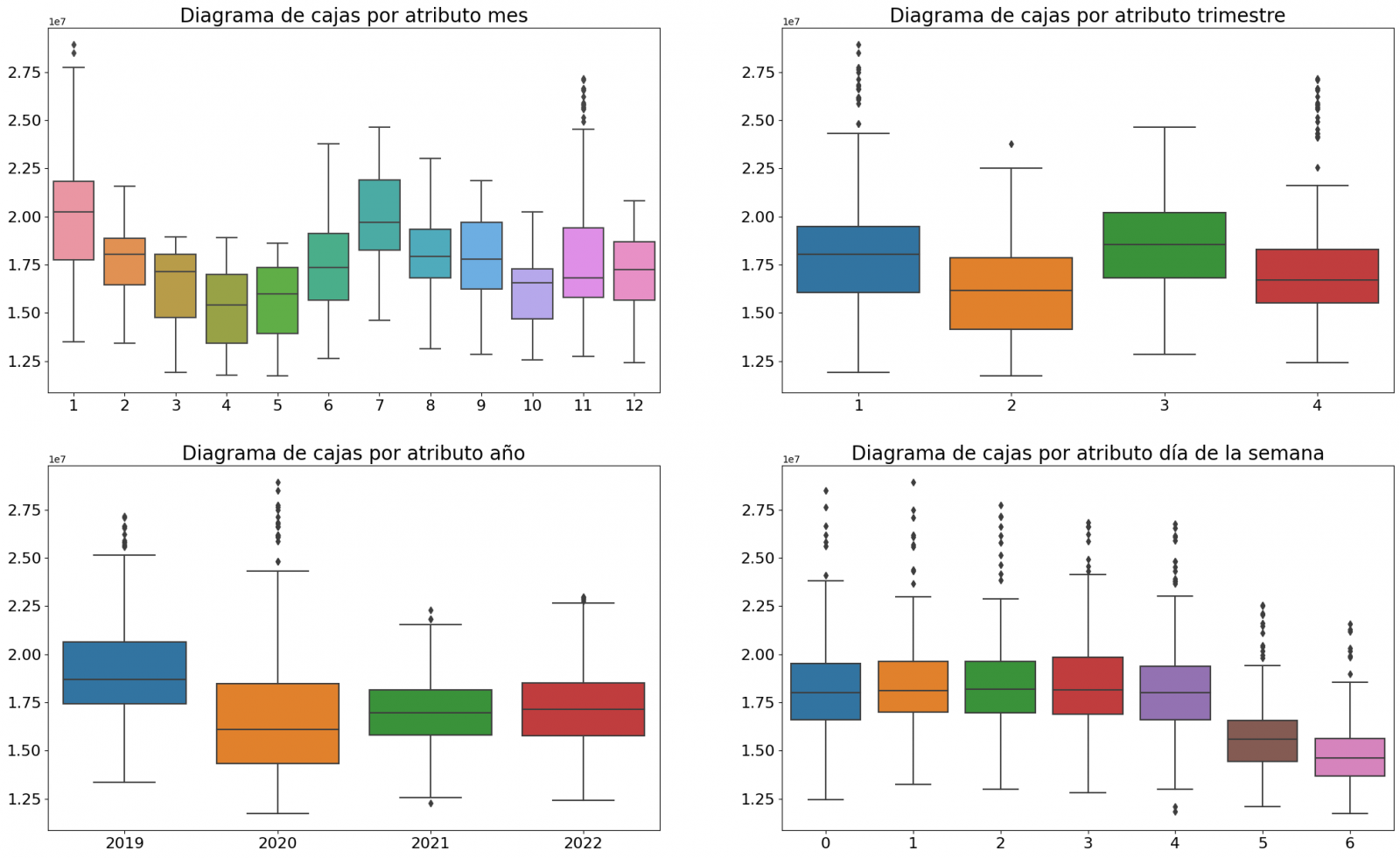

First, we create the temporal attributes (year, month, day of the week, and quarter) within the data table. These attributes are categorical variables that help the model capture the seasonal patterns associated with each of them. The following box plots show their relevance within the time series values.

Figure 3. Box plots of the generated temporal attributes
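The four temporal attributes can be derived from each record's date. The sketch below uses only the standard library to keep it self-contained; the function and key names are hypothetical, and the notebook may well build these columns directly on its data table.

```python
from datetime import date


def temporal_attributes(day):
    """Derive the four temporal attributes used as model features from a date."""
    return {
        "year": day.year,
        "month": day.month,
        "day_of_week": day.weekday(),  # 0 = Monday ... 6 = Sunday
        "quarter": (day.month - 1) // 3 + 1,
    }


attrs = temporal_attributes(date(2022, 12, 5))
print(attrs)
```

With a pandas datetime column, the equivalent accessors are `.dt.year`, `.dt.month`, `.dt.dayofweek` and `.dt.quarter`.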

We can observe certain patterns in the charts above, such as the following:

- Weekdays (Monday to Friday) have higher consumption than weekends.

- The year with the lowest consumption values is 2020, which we understand is due to the reduction in service and industrial activity during the pandemic.

- The month with the highest consumption is July, which is understandable due to the use of air conditioners.

- The second quarter is the one with the lowest consumption values, with April standing out as the month with the lowest values.

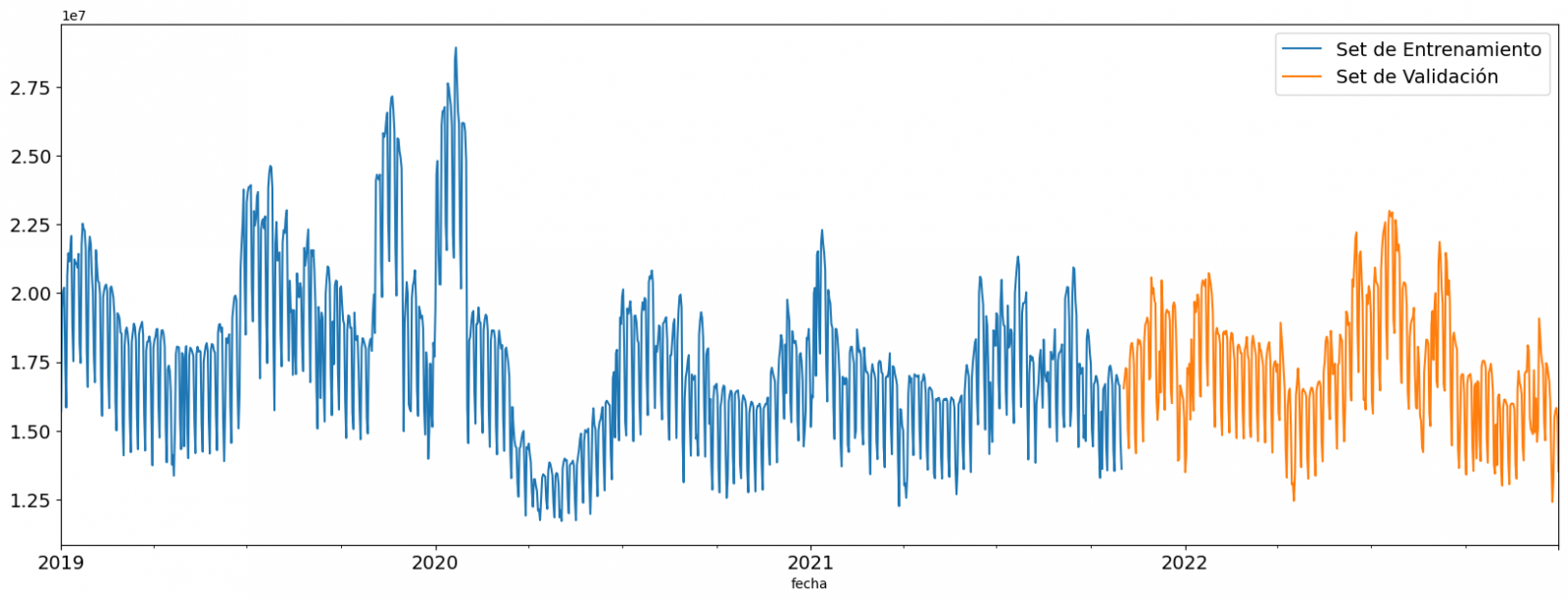

Next, we divide the data table into training set and validation set. The training set is used to train the model, i.e., the model learns to predict the value of the target variable from that set, while the validation set is used to evaluate the performance of the model, i.e., the model is evaluated against the data from that set to determine its ability to predict the new values.

Splitting the data in this way is important for detecting overfitting, since the model is evaluated on data it has not seen during training. A typical proportion is about 70% of the data for the training set and about 30% for the validation set. For this exercise we have decided to generate the training set with the data from "01-01-2019" to "01-10-2021", and the validation set with the data from "01-10-2021" to "31-12-2022", as we can see in the following graph.

Figure 4. Historical data time series divided into training set and validation set
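A chronological split like the one in Figure 4 can be sketched as follows. The text gives "01-10-2021" as the boundary for both sets, so this sketch assumes that the boundary day itself belongs to the validation set. The function name and the placeholder values are hypothetical.

```python
from datetime import date, timedelta

SPLIT_DATE = date(2021, 10, 1)  # assumption: the boundary day goes to validation


def chronological_split(rows, split_date=SPLIT_DATE):
    """Split (date, value) rows into training rows before split_date and validation rows from it on."""
    train = [row for row in rows if row[0] < split_date]
    valid = [row for row in rows if row[0] >= split_date]
    return train, valid


# One placeholder row per day from 2019 to 2022 (values are dummies, not real data).
start, end = date(2019, 1, 1), date(2022, 12, 31)
rows = [(start + timedelta(days=i), 0.0) for i in range((end - start).days + 1)]

train, valid = chronological_split(rows)
print(len(train), len(valid), round(len(train) / len(rows), 3))
```

With daily records, this boundary leaves roughly 69% of the days for training and 31% for validation, close to the typical 70/30 proportion. The split is made by date rather than at random so that the model is always validated on data later than the data it was trained on.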

This type of exercise requires a regression algorithm, and there are several models and libraries that can be used for time series prediction. In this exercise we will use "Gradient Boosting", a supervised machine learning regression model. It predicts a continuous value after being trained on a dataset that contains known values of the target variable (in our example, the variable "value") and the values of the independent variables (in our exercise, the temporal attributes).

It is based on decision trees and uses a technique called "boosting" to improve the accuracy of the model, being known for its efficiency and ability to handle a variety of regression and classification problems.

Its main advantages are the high degree of accuracy, robustness and flexibility, while some of its disadvantages are its sensitivity to outliers and that it requires careful optimization of parameters.

We will use the supervised regression model offered in the XGBoost library, which can be adjusted with the following parameters:

- n_estimators: Indicates the number of trees used, which affects the performance of the model. A larger number of trees generally results in a more accurate model, but it can also take more time to train.

- early_stopping_rounds: Controls how many consecutive training rounds are allowed without improvement in the validation set before training stops.

- learning_rate: Controls the learning speed of the model. A higher value will make the model learn faster, but it can lead to overfitting.

- max_depth: Controls the maximum depth of each tree. A higher value can provide a more accurate model, but it can also lead to overfitting.

- min_child_weight: Controls the minimum weight required in a leaf. A higher value can help prevent overfitting.

- gamma: Controls the minimum loss reduction needed to split a node. A higher value can help prevent overfitting.

- colsample_bytree: Controls the proportion of features that are used to build each tree. A lower value can help prevent overfitting.

- subsample: Controls the proportion of the data that is used to construct each tree. A lower value can help prevent overfitting.

These parameters can be adjusted to improve model performance on a specific dataset. It's a good idea to experiment with different values to find the combination that provides the best performance on your dataset.
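As a sketch of how these parameters fit together, the block below collects them in a dictionary and passes them to XGBoost's `XGBRegressor`. The values are hypothetical starting points, not the ones used in the notebook. Passing `early_stopping_rounds` to the constructor assumes a recent XGBoost release; older versions expected it in `fit()` instead.

```python
# Hypothetical starting values; the notebook's actual settings may differ.
PARAMS = {
    "n_estimators": 1000,
    "early_stopping_rounds": 50,
    "learning_rate": 0.01,
    "max_depth": 5,
    "min_child_weight": 5,
    "gamma": 10,
    "colsample_bytree": 1.0,
    "subsample": 0.5,
}


def build_model(params=PARAMS):
    """Create an untrained XGBoost regressor from a parameter dictionary."""
    # Imported here so the dictionary can be inspected without xgboost installed.
    from xgboost import XGBRegressor

    return XGBRegressor(**params)


def fit_model(model, X_train, y_train, X_valid, y_valid):
    """Train the model; eval_set supplies the validation data that early stopping monitors."""
    model.fit(X_train, y_train, eval_set=[(X_valid, y_valid)], verbose=False)
    return model
```

Here `X_train` and `X_valid` would hold the temporal attributes and `y_train` and `y_valid` the consumption values of each set.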

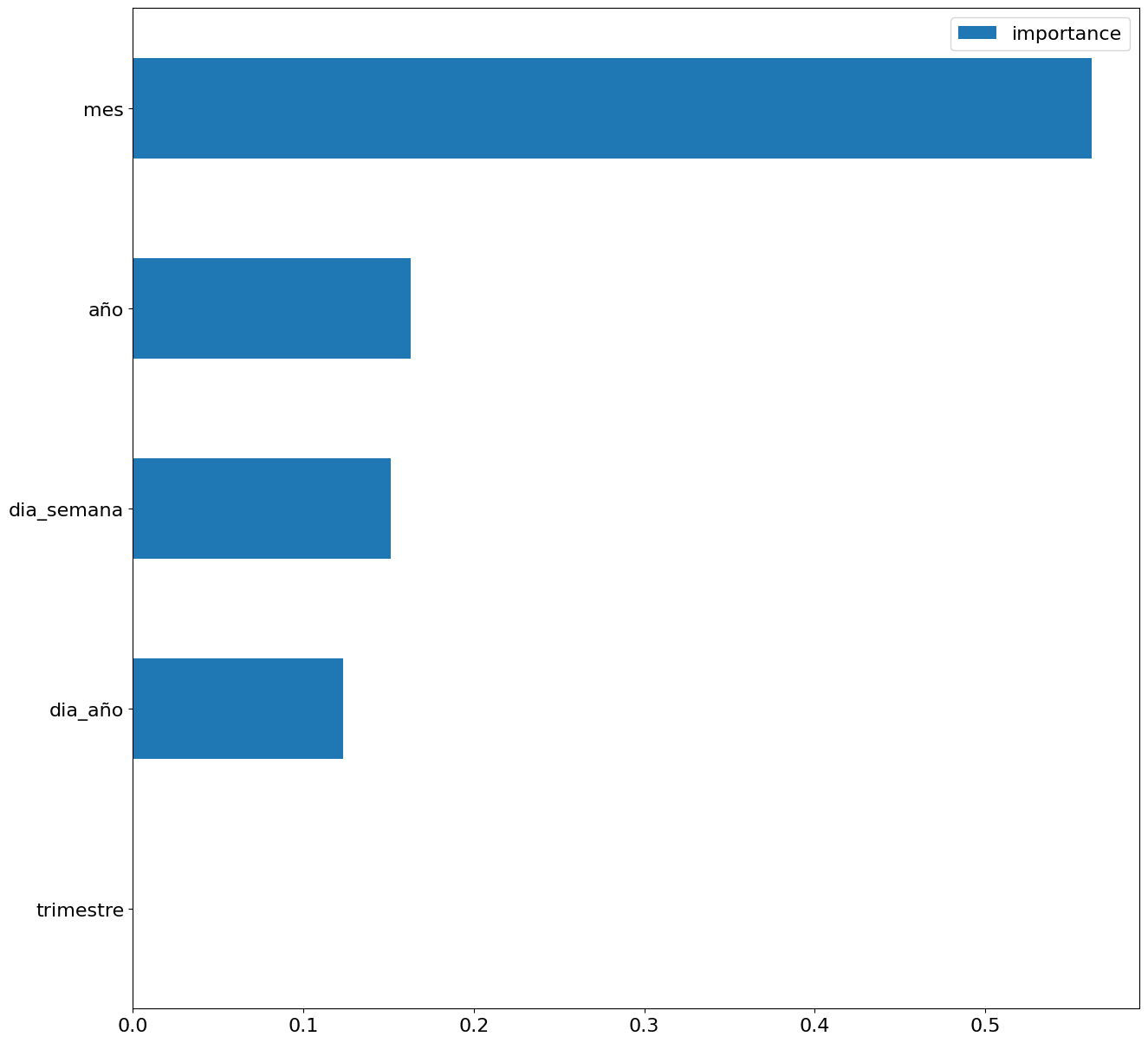

Finally, a bar chart shows the importance of each of the attributes during the training of the model. This chart can be used to identify the most important attributes in a dataset, which is useful for model interpretation and feature selection.

Figure 5. Bar Chart with Importance of Temporal Attributes

4.4 Model validation

This section can be found in point 4 of the Notebook.

Once the model has been trained, we will evaluate how accurate it is for the known values in the validation set.

We can visually evaluate the model by plotting the time series with the known values along with the predictions made for the validation set as shown in the figure below.

Figure 6. Time series with validation set data next to prediction data.

We can also numerically evaluate the accuracy of the model using different metrics. In this exercise, we have chosen the mean absolute percentage error (MAPE), which was 6.58%. Whether the accuracy of a model is considered high or low depends on the context and on what is expected of the model. In general, a MAPE is considered low when it is less than 5% and high when it is greater than 10%. In this exercise, the result of the model validation can be considered acceptable.
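The mean absolute percentage error averages the absolute error of each prediction relative to the real value and expresses it as a percentage. The following minimal sketch shows the calculation on invented numbers; the function name and the sample values are hypothetical.

```python
def mape(actual, predicted):
    """Mean absolute percentage error, in percent. Actual values must be non-zero."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    errors = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100 * sum(errors) / len(errors)


# Hypothetical values: relative errors of 10%, 5% and 0%.
actual = [100.0, 200.0, 400.0]
predicted = [110.0, 190.0, 400.0]
score = mape(actual, predicted)
print(round(score, 2))
```

Because each error is divided by the real value, the metric is undefined when a real value is zero, which is not a practical concern for a city's daily electricity consumption.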

If you want to consult other types of metrics to evaluate the accuracy of models applied to time series, you can consult the following link.

4.5 Predictions of future values

This section can be found in point 5 of the Notebook.

Once the model has been generated and its performance evaluated (MAPE = 6.58%), we will apply it to all known data in order to predict the unknown electricity consumption values for 2023.

First of all, we retrain the model with the known values until the end of 2022, without dividing it into a training and validation set. Finally, we calculate future values for the year 2023.

Figure 7. Time series with historical data and prediction for 2023
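To predict values for 2023, the retrained model needs one row of temporal attributes for each day of that year. The sketch below builds those rows with the standard library. The function and key names are hypothetical, and it assumes the same four attributes that were used for training.

```python
from datetime import date, timedelta


def future_feature_rows(year):
    """Build one row of temporal attributes for every day of the given year."""
    day = date(year, 1, 1)
    rows = []
    while day.year == year:
        rows.append({
            "date": day,
            "year": day.year,
            "month": day.month,
            "day_of_week": day.weekday(),  # 0 = Monday ... 6 = Sunday
            "quarter": (day.month - 1) // 3 + 1,
        })
        day += timedelta(days=1)
    return rows


rows_2023 = future_feature_rows(2023)
print(len(rows_2023), rows_2023[0])
```

The attribute columns of these rows would then be passed to the model's prediction method to obtain the estimated consumption for each day.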

In the following interactive visualization you can see the predicted values for the year 2023 along with their main metrics, and filter them by time period.

Improving the results of predictive time series models is an important goal in data science and data analytics. Strategies that can help improve the accuracy of the model in this exercise include the use of exogenous variables, the use of more historical data or the generation of synthetic data, and the optimization of parameters.

Due to the informative nature of this exercise and to promote the understanding of less specialized readers, we have tried to explain the exercise in a way that is as simple and didactic as possible. You may come up with many ways to optimize your predictive model to achieve better results, and we encourage you to do so!

5. Conclusions of the exercise

Once the exercise has been carried out, we can draw conclusions such as the following:

- The maximum values of the consumption predictions for 2023 occur in the second half of July, exceeding 22,500,000 MWh.

- The month with the highest consumption according to the predictions for 2023 will be July, while the month with the lowest average consumption will be November, with a percentage difference between the two of 25.24%.

- The average daily consumption forecast for 2023 is 17,259,844 MWh, 1.46% lower than that recorded between 2019 and 2022.
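Conclusions of this kind can be derived from the daily predictions with a few aggregations. The sketch below illustrates the idea on invented values; the function name and the data are hypothetical and do not reproduce the figures above.

```python
from collections import defaultdict
from datetime import date


def monthly_means(daily):
    """Average the daily values of each month; returns {month: mean}."""
    by_month = defaultdict(list)
    for day, value in daily.items():
        by_month[day.month].append(value)
    return {month: sum(vals) / len(vals) for month, vals in by_month.items()}


# Hypothetical daily predictions (not the exercise's results).
daily = {
    date(2023, 7, 20): 30.0,
    date(2023, 7, 21): 34.0,
    date(2023, 11, 5): 20.0,
    date(2023, 11, 6): 28.0,
}

peak_day = max(daily, key=daily.get)        # day with the highest prediction
means = monthly_means(daily)
highest_month = max(means, key=means.get)   # month with the highest average
lowest_month = min(means, key=means.get)    # month with the lowest average
print(peak_day, highest_month, lowest_month)
```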

We hope that this exercise has been useful for you to learn some common techniques in the study and analysis of open data. We'll be back to show you new reuses. See you soon!

1. Introduction

Visualizations are graphical representations of data that allow the information linked to them to be communicated in a simple and effective way. The visualization possibilities are very wide, from basic representations, such as line, bar or sector graphs, to visualizations configured on interactive dashboards.

In this "Step-by-Step Visualizations" section we regularly present practical exercises on visualizing open data available in datos.gob.es or other similar catalogs. They describe in a simple way the stages needed to obtain the data, perform the relevant transformations and analyses, and finally create interactive visualizations from which we can draw conclusions that summarize the information. Each of these practical exercises uses simple and well-documented code, as well as tools that are free to use. All generated material is available for reuse in the GitHub Data Lab repository.

As a complement to the explanation below, you can access the code that we will use in the exercise and that we will explain and develop in the following sections of this post.

Access the data lab repository on Github.

Run the data pre-processing code on Google Colab.

2. Objective

The main objective of this exercise is to show how to perform a network or graph analysis based on open data on rental bicycle trips in the city of Madrid. To do this, we will preprocess the data to obtain the tables that we will then use in the visualization tool to create the visualizations of the graph.