On 13 March, the Mobility Working Group of the Gaia-X Spain Hub held a session on the sector's main challenges in projects related to data sharing and exploitation. The session, which took place at the Technical School of Civil Engineers of the Polytechnic University of Madrid, gave attendees a first-hand look at those challenges and at some of the cutting-edge data projects in the mobility industry. The event was also a meeting point where key players in the sector shared ideas and reflections.

The session began with a presentation from the Ministry of Transport, Mobility, and Urban Agenda, which highlighted the great importance of the National Access Point for Multimodal Transport, a European project that allows all information on passenger transport services in the country to be centralized in a single national point, with the aim of providing the foundation for driving the development of future mobility services.

Next, the Data Office of the State Secretariat for Digitalisation and Artificial Intelligence (SEDIA) presented its vision of the Data Spaces development model and the design principles of such spaces, aligned with European values. It highlighted the importance of business networks based on data ecosystems, the cross-sectoral nature of the mobility industry and the significant role of open data in the sector's data spaces.

Use cases were then presented by Vicomtech, Amadeus, i2CAT and the Alcobendas City Council, giving attendees concrete examples of how technology is used in data sharing projects (both data spaces and data lakes).

Finally, an initial study by the i2CAT Foundation, FACTUAL Consulting and EIT Urban Mobility on the basic components of future mobility data spaces in Spain was presented. The study, which can be downloaded here in Spanish, addresses the potential of mobility data spaces for the Spanish market. Although it focuses on Spain, it draws on national and international research and is framed within the European effort to establish standards, develop the technical components that enable data spaces, launch the first flagship projects and address common challenges in order to reach sustainable mobility milestones in Europe.

The presentations used in the session are available at this link.

Updated: 21/03/2024

In January 2023, the European Commission published a list of high-value datasets that public sector bodies must make available to the public within a maximum of 16 months. The main objective of establishing this list was to ensure that the public data with the highest socio-economic potential are made available for re-use with minimal legal and technical restrictions, and at no cost. Among these public sector datasets, some, such as meteorological or air quality data, are particularly interesting for developers and creators of services such as apps or websites, which bring added value and important benefits for society, the environment or the economy.

The publication of the Regulation has been accompanied by a set of frequently asked questions to help public bodies understand the benefits of high-value datasets (HVDS) for society and the economy, and to explain aspects such as their mandatory nature and the support available for publishing them.

On this occasion, Margrethe Vestager, Executive Vice-President for a Europe Fit for the Digital Age, stated the following in the press release issued by the European Commission:

"Making high-value datasets available to the public will benefit both the economy and society, for example by helping to combat climate change, reducing urban air pollution and improving transport infrastructure. This is a practical step towards the success of the Digital Decade and building a more prosperous digital future".

Internal Market Commissioner Thierry Breton also commented on the list of high-value datasets: "Data is a cornerstone of our industrial competitiveness in the EU. With the new list of high-value datasets we are unlocking a wealth of public data for the benefit of all". Start-ups and SMEs will be able to use this to develop new innovative products and solutions to improve the lives of citizens in the EU and around the world.

Six categories to bring together new high-value datasets

The regulation is adopted under the umbrella of the European Open Data Directive, which defines six thematic categories into which the new high-value datasets are grouped:

- Geospatial

- Earth observation and environmental

- Meteorological

- Statistical

- Business

- Mobility

However, as stated in the European Commission's press release, this thematic range could be extended at a later stage depending on technological and market developments. The datasets will have to be made available in a machine-readable format, via an application programming interface (API) and, where relevant, as a bulk download.

In addition, the reuse of datasets such as mobility or building geolocation data can expand the business opportunities available for sectors such as logistics or transport. In parallel, weather observation, radar, air quality or soil pollution data can also support research and digital innovation, as well as policy making in the fight against climate change.

Ultimately, greater availability of data, especially high-value data, has the potential to boost entrepreneurship as these datasets can be an important resource for SMEs to develop new digital products and services, which in turn can also attract new investors.

Find out more in this infographic:

An accessible version of the infographic, split over two pages, is also available.

1. Introduction

Visualizations are graphical representations of data that communicate the information linked to it simply and effectively. The possibilities are very wide, ranging from basic representations, such as line, bar or pie charts, to visualizations built into interactive dashboards. Visualizations play a fundamental role in drawing conclusions through visual language, and they also help detect patterns, trends and anomalous data or project predictions, among many other functions.

In this "Step-by-Step Visualizations" section we periodically present practical exercises with open data visualizations available on datos.gob.es or other similar catalogs. Each exercise describes simply the stages needed to obtain the data, perform the relevant transformations and analyses and, finally, create interactive visualizations. From these visualizations we can extract information and summarize it in the final conclusions. Every exercise uses simple, well-documented code and free tools. All the material generated is available for reuse in the datos.gob.es data lab repository on GitHub.

In this practical exercise, we have written simple, well-documented code using free tools.

Access the data lab repository on GitHub.

Run the data pre-processing code on Google Colab.

2. Objective

The main objective of this post is to show how to build an interactive visualization based on open data. For this exercise we have used a dataset provided by the Ministry of Justice containing the results of toxicological analyses carried out after traffic accidents. We cross it with data published by the Central Traffic Headquarters (DGT) detailing the fleet of vehicles registered in Spain.

By cross-referencing these data, we will be able to analyze the ratio of positive toxicological results to the fleet of registered vehicles.

It should be noted that the Ministry of Justice already offers citizens several dashboards with data on toxicological results in traffic accidents. The difference is that this exercise focuses on the didactic side: we show how to process the data and how to design and build the visualizations.

3. Resources

3.1. Datasets

For this case study, we have used a dataset provided by the Ministry of Justice containing the results of toxicological analyses carried out after traffic accidents. This dataset is in the following GitHub repository:

The datasets of the fleet of vehicles registered in Spain have also been used. These data sets are published by the Central Traffic Headquarters (DGT), an agency under the Ministry of the Interior. They are available on the following page of the datos.gob.es Data Catalog:

3.2. Tools

The data preprocessing was done in Python, in a Jupyter Notebook hosted on the Google Colab cloud service.

Google Colab (also called Google Colaboratory) is a free cloud service from Google Research that lets you write, run and share Python or R code from your browser, with no tools to install or configure.

For the creation of the interactive visualization, the Google Data Studio tool has been used.

Google Data Studio is an online tool that allows you to make graphs, maps or tables that can be embedded in websites or exported as files. This tool is simple to use and allows multiple customization options.

If you want to know more about tools that can help you process and visualize data, you can consult the report "Data processing and visualization tools".

4. Data processing or preparation

Before building an effective visualization, we must prepare the data. This means paying special attention to how the data is obtained and validating its content, making sure it is in an appropriate, consistent format for processing and contains no errors.

The processes described below are covered in the notebook, which you can also run in Google Colab. Link to Google Colab notebook

The first step is an exploratory data analysis (EDA). Its purpose is to interpret the source data properly and to detect anomalies, missing data or errors that could affect the quality of later processes and results. Pre-processing the data is essential to ensure that the analyses and visualizations created from it are reliable and consistent. If you want to know more about this process, you can consult the Practical Guide to Introduction to Exploratory Data Analysis.

The next step is to generate the preprocessed data tables that we will use for the visualizations. To do this, we adjust the variables, cross-reference the two datasets and filter or group as appropriate.

The steps followed in this data preprocessing are as follows:

- Importing libraries

- Loading data files to use

- Detection and processing of missing data (NAs)

- Modifying and adjusting variables

- Generating tables with preprocessed data for visualizations

- Storage of tables with preprocessed data
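The notebook in the GitHub repository is the reference for these steps. As a rough illustration only, the following Python sketch walks through the same sequence with the standard library: load, detect missing values, adjust variables, aggregate and serialize. The column names (`vehiculo`, `sustancia`, `resultado`, `ccaa`), the sample rows and every helper function are hypothetical stand-ins. They do not reflect the actual structure of the Ministry of Justice file.

```python
import csv
import io
from collections import Counter

# Hypothetical sample rows standing in for the real source file;
# the actual column names and values will differ.
RAW_RESULTS = """vehiculo,sustancia,resultado,ccaa
Turismo,Cocaina,Positivo,Madrid
Motocicleta,Cannabis,Positivo,Andalucia
Turismo,Alcohol,,Madrid
camion,Alcohol,Negativo,La Rioja
"""


def load_rows(text):
    """Load CSV text into a list of dicts, one per row."""
    return list(csv.DictReader(io.StringIO(text)))


def is_missing(value):
    """Treat absent (None) or blank cells as missing data (NA)."""
    return value is None or value.strip() == ""


def count_missing(rows):
    """Count missing cells per column, a minimal NA check."""
    missing = Counter()
    for row in rows:
        for column, value in row.items():
            if is_missing(value):
                missing[column] += 1
    return missing


def clean(rows):
    """Drop incomplete rows and normalize the vehicle-type spelling."""
    cleaned = []
    for row in rows:
        if any(is_missing(value) for value in row.values()):
            continue  # simplest NA strategy: discard incomplete records
        tidy = {column: value.strip() for column, value in row.items()}
        tidy["vehiculo"] = tidy["vehiculo"].capitalize()  # "camion" -> "Camion"
        cleaned.append(tidy)
    return cleaned


def positives_by_vehicle(rows):
    """Count positive results per (vehicle type, substance) pair."""
    return Counter(
        (row["vehiculo"], row["sustancia"])
        for row in rows
        if row["resultado"] == "Positivo"
    )


def to_csv(table, header):
    """Serialize a {(vehicle, substance): count} table to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(header)
    for (vehicle, substance), count in sorted(table.items()):
        writer.writerow([vehicle, substance, count])
    return buffer.getvalue()


if __name__ == "__main__":
    rows = load_rows(RAW_RESULTS)
    print(count_missing(rows))
    table = positives_by_vehicle(clean(rows))
    print(to_csv(table, ["vehiculo", "sustancia", "positivos"]))
```

In a notebook, the returned CSV text would then be written to a file, for example with `open(...)`, so that it can be uploaded to the visualization tool.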

You can reproduce this analysis, since the source code is available in our GitHub account. The code is provided as a Jupyter Notebook which, once loaded into your development environment, you can easily run or modify. Given the informative nature of this post, and to help non-specialist readers, the code prioritizes readability over efficiency. You will probably come up with many ways to optimize it for similar purposes. We encourage you to do so!

5. Generating visualizations

Once the data has been preprocessed, we move on to the visualizations, which were built with Google Data Studio. Because it is an online tool, no software needs to be installed to interact with or create visualizations. However, the data tables we provide must be properly structured, which is why we carried out the data preparation steps described above.

The starting point is a series of questions that the visualizations will help us answer. We propose the following:

- How is the fleet of vehicles in Spain distributed by Autonomous Communities?

- Which types of vehicle are most and least involved in traffic accidents with positive toxicological results?

- Where are there more toxicological findings in traffic fatalities?

Let's look for the answers by looking at the data!

5.1. Fleet of vehicles registered by Autonomous Communities

This visual representation shows the number of vehicles registered in each Autonomous Community, with the total broken down by vehicle type. The data correspond to the average of the monthly records for 2020 and 2021 and are stored in the "parque_vehiculos.csv" table generated while preprocessing the source data.

A choropleth map shows which Autonomous Communities have the largest vehicle fleets. The map is complemented by a ring chart showing each Autonomous Community's percentage of the total.
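The following minimal sketch shows how a table like "parque_vehiculos.csv" could be derived. It averages hypothetical monthly fleet counts for each Autonomous Community and vehicle type. It then computes each community's percentage of the total, which is the quantity a ring chart displays. The record layout, the sample figures and the function names are assumptions for illustration. The optional `vehicle` argument mimics the vehicle-type filter offered in the dashboard.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical monthly records: (autonomous community, vehicle type, month, vehicles).
MONTHLY_FLEET = [
    ("Madrid", "Turismo", "2020-01", 100),
    ("Madrid", "Turismo", "2020-02", 110),
    ("Madrid", "Motocicleta", "2020-01", 20),
    ("Madrid", "Motocicleta", "2020-02", 30),
    ("La Rioja", "Turismo", "2020-01", 40),
    ("La Rioja", "Turismo", "2020-02", 50),
]


def average_fleet(records):
    """Average the monthly counts for each (community, vehicle type) pair."""
    grouped = defaultdict(list)
    for ccaa, vehicle_type, _month, count in records:
        grouped[(ccaa, vehicle_type)].append(count)
    return {key: mean(counts) for key, counts in grouped.items()}


def share_by_ccaa(avg_fleet, vehicle=None):
    """Percentage of the national fleet held by each community.

    If `vehicle` is given, only that vehicle type is considered,
    similar to filtering the dashboard by vehicle type.
    """
    totals = defaultdict(float)
    for (ccaa, vehicle_type), value in avg_fleet.items():
        if vehicle is None or vehicle_type == vehicle:
            totals[ccaa] += value
    national = sum(totals.values())
    if national == 0:
        return {}
    return {ccaa: 100 * value / national for ccaa, value in totals.items()}


if __name__ == "__main__":
    averages = average_fleet(MONTHLY_FLEET)
    print(share_by_ccaa(averages))
    print(share_by_ccaa(averages, vehicle="Motocicleta"))
```

Averaging the monthly records before summing keeps each community's figure comparable regardless of how many months of data it has.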

According to the "Data visualization guide of the Generalitat Catalana", choropleth maps show the values of a variable on a map by coloring each region according to its value. They are used to find geographical patterns in data that are categorized by zones or regions.

Ring charts, a variant of pie charts, show how the data is distributed proportionally.

In the visualization, a drop-down menu lets you filter by vehicle type.

View full screen visualization

5.2. Ratio of positive toxicological results for different types of vehicles

This visual representation shows the ratios of positive toxicological results to the number of vehicles nationwide. We count a positive result each time a subject tests positive for a given substance, so the same subject can be counted several times if they test positive for several substances. For this purpose, the "resultados_vehiculos.csv" table was generated during data preprocessing.
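The counting rule above gives one positive per subject and substance. It can be sketched in Python as follows. This is a hypothetical example. The data structure, the sample values and the choice to express ratios per 100,000 vehicles (the `per` parameter) are assumptions, and the notebook may scale its ratios differently.

```python
from collections import Counter

# Hypothetical per-subject results: the substances each subject tested positive for.
SUBJECTS = [
    {"vehicle": "Motocicleta", "positive_for": ["Cannabis", "Cocaina"]},
    {"vehicle": "Motocicleta", "positive_for": ["Cannabis"]},
    {"vehicle": "Turismo", "positive_for": ["Alcohol"]},
    {"vehicle": "Turismo", "positive_for": []},
]
# Hypothetical average fleet per vehicle type.
FLEET = {"Motocicleta": 1_000, "Turismo": 10_000}


def count_positives(subjects):
    """One positive per (subject, substance): a subject may count several times."""
    counts = Counter()
    for subject in subjects:
        for substance in set(subject["positive_for"]):  # ignore duplicate entries
            counts[(subject["vehicle"], substance)] += 1
    return counts


def ratios(counts, fleet, per=100_000):
    """Positive results per `per` registered vehicles of each type."""
    return {
        (vehicle, substance): n * per / fleet[vehicle]
        for (vehicle, substance), n in counts.items()
        if fleet.get(vehicle)  # skip types with no (or zero) fleet figure
    }


if __name__ == "__main__":
    positives = count_positives(SUBJECTS)
    print(sum(positives.values()), "positives from", len(SUBJECTS), "subjects")
    print(ratios(positives, FLEET))
```

In this sample, three subjects with positive results produce four positives, because one of them tested positive for two substances.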

Using a stacked bar chart, we can evaluate the ratios of positive toxicological results by number of vehicles for different substances and different types of vehicles.

According to the "Data visualization guide of the Generalitat Catalana", stacked bar charts are used to compare the totals formed by the segments that make up each bar, while also showing the size of each segment.

When the stacked bars add up to 100%, so that each segmented bar spans the full height of the chart, the graph represents parts of a whole.
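The 100% case can be made concrete with a small sketch that rescales each bar's segments so that they sum to 100. The function names and sample values are hypothetical. This illustrates only the arithmetic behind a 100% stacked bar, not how any particular charting tool implements it.

```python
def to_percentages(segments):
    """Rescale one bar's segments so that they add up to 100."""
    total = sum(segments.values())
    if total == 0:
        return {key: 0.0 for key in segments}  # avoid dividing by zero
    return {key: 100 * value / total for key, value in segments.items()}


def normalise_bars(bars):
    """Apply the 100% rescaling to every bar (e.g. one bar per vehicle type)."""
    return {bar: to_percentages(segments) for bar, segments in bars.items()}


if __name__ == "__main__":
    # Hypothetical ratios per substance for a single vehicle type.
    print(normalise_bars({"Motocicleta": {"Cannabis": 200, "Cocaina": 100, "Alcohol": 100}}))
```

After rescaling, bars of very different totals can be compared by their composition alone, at the cost of hiding those totals.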

The table provides the same information in a complementary way.

In the visualization, a drop-down menu lets you filter by type of substance.

View full screen visualization

5.3. Ratio of positive toxicological results for the Autonomous Communities

This visual representation shows the ratios of positive toxicological results relative to the vehicle fleet of each Autonomous Community. We count a positive result each time a subject tests positive for a given substance, so the same subject can be counted several times if they test positive for several substances. For this purpose, the "resultados_ccaa.csv" table was generated during data preprocessing.

It should be noted that the Autonomous Community in which a vehicle is registered need not be the one where the accident was recorded. However, since this is a didactic exercise and we assume that the two coincide in most cases, we have taken as a working assumption that they always do.
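This working assumption amounts to joining two tables. Positives are counted by the community where the accident was recorded, and the fleet is counted by community of registration. The minimal sketch below performs that join. Its names, sample figures and per-100,000-vehicles scale are hypothetical and chosen purely for illustration. When a community has no fleet figure, the sketch raises an error instead of silently dropping that community. The optional `substance` argument mirrors the substance filter in the dashboard.

```python
from collections import Counter

# Hypothetical positives: one entry per (subject, substance) positive,
# tagged with the community where the accident was recorded.
POSITIVES = [
    ("Aragón", "Alcohol"),
    ("Aragón", "Cannabis"),
    ("Madrid", "Cocaina"),
    ("Madrid", "Alcohol"),
]
# Hypothetical average fleet by community of registration.
FLEET_BY_CCAA = {"Aragón": 200_000, "Madrid": 5_000_000}


def ratio_by_ccaa(positives, fleet, substance=None, per=100_000):
    """Positives per `per` vehicles, assuming accident community == registration community."""
    counts = Counter(
        ccaa for ccaa, found in positives if substance is None or found == substance
    )
    unmatched = sorted(set(counts) - set(fleet))
    if unmatched:
        raise KeyError(f"No fleet data for: {unmatched}")
    return {ccaa: counts[ccaa] * per / fleet[ccaa] for ccaa in counts}


if __name__ == "__main__":
    print(ratio_by_ccaa(POSITIVES, FLEET_BY_CCAA))
    print(ratio_by_ccaa(POSITIVES, FLEET_BY_CCAA, substance="Alcohol"))
```

If data on where each vehicle is registered and where each accident occurred were both available, this join key is the place where the simplification would be removed.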

A choropleth map shows which Autonomous Communities have the highest ratios. In addition to what was said about this type of chart in the first visualization, note the following.

According to the "Data Visualization Guide for Local Entities", a choropleth map requires a numerical measure, a categorical field identifying the territory and a polygon geographic field.

The table and the bar chart provide the same information in a complementary way.

In the visualization, a drop-down menu lets you filter by type of substance.

View full screen visualization

6. Conclusions of the study

Data visualization is one of the most powerful mechanisms for exploiting and analyzing the implicit meaning of data, regardless of the type of data and the degree of technological knowledge of the user. Visualizations allow us to build meaning on top of data and create narratives based on graphical representation. In the set of graphical representations of data that we have just implemented, the following can be observed:

- The vehicle fleets of Andalusia, Catalonia and Madrid together account for about 50% of the country's total.

- The highest ratios of positive toxicological results occur in motorcycles and, for most substances, are roughly three times higher than the next highest ratio, that of passenger cars.

- The lowest positive toxicology result ratios occur in trucks.

- Two-wheeled vehicles (motorcycles and mopeds) have higher "cannabis" ratios than "cocaine" ratios, while four-wheeled vehicles (cars, vans and trucks) have higher "cocaine" ratios than "cannabis" ratios.

- The Autonomous Community where the ratio for the total of substances is highest is La Rioja.

Remember that the visualizations let you filter by type of vehicle and type of substance. We encourage you to use these filters to draw more specific conclusions about the information you're most interested in.

We hope that this step-by-step visualization has been useful for learning some very common techniques in the treatment and representation of open data. We will return to show you new reuses. See you soon!

This report, published by the European Data Portal, analyses the potential for re-using real-time data. Real-time data provide frequently updated information about the environment around us (for example, traffic information, weather data, environmental pollution measurements, information on natural hazards, etc.).

The document summarises the results and conclusions of a webinar organised by the European Data Portal team on 5 April 2022, in which different ways of sharing real-time data from open data platforms were explained.

First, the report reviews the fundamentals of real-time data and includes examples that demonstrate the value of this type of data. It then describes two technological approaches to sharing real-time data in the IoT and transport domains. It also includes a section summarising the main conclusions drawn from participants' questions and comments. These mainly concern the different data sources they need and the functionalities required to re-use them.

Finally, based on the feedback and discussion generated, the report sets out short- and medium-term recommendations and actions for improving the ability to locate real-time data sources through the European Data Portal.

This report is available at the following link: "Real-time data: Approaches to integrating real-time data sources in data.europa.eu"

Open data portals are seeing significant growth in the number of datasets published in the transport and mobility category. For example, the EU's open data portal already has almost 48,000 datasets in the transport category. Spain's own portal, datos.gob.es, has around 2,000 if we include those in the public sector category. One of the main reasons for this growth is the existence of three directives that aim to maximise the re-use of data in this area:

- the PSI Directive on the re-use of public sector information;
- the INSPIRE Directive on spatial information infrastructure;
- the ITS Directive on the deployment of intelligent transport systems.

Together with other legislative developments, they make it increasingly difficult to justify keeping transport and mobility data closed.

In Spain, for example, Law 37/2007, as amended in November 2021, extends the obligation to publish open data to commercial companies in the institutional public sector that operate as airlines. This goes a step further than the more common obligations regarding data on public passenger transport services by rail and road.

In addition, open data is at the heart of smart, connected and environmentally friendly mobility strategies, both in the case of the Spanish "es.movilidad" strategy and in the case of the sustainable mobility strategy proposed by the European Commission. In both cases, open data has been introduced as one of the key innovation vectors in the digital transformation of the sector to contribute to the achievement of the objectives of improving the quality of life of citizens and protecting the environment.

However, much less is said about the importance of open data during the research phase that leads to the innovations we all enjoy. In this stage, researchers work to better understand the transport and mobility dynamics we are all part of, and open data plays a fundamental role. Without it, relevant innovations and well-informed public policies would not be possible. Below, we review two highly relevant initiatives in which coordinated multinational efforts are being made in mobility and transport research.

The information and monitoring system for transport research and innovation

At the European level, the EU also strongly supports research and innovation in transport, aware that it needs to adapt to global realities such as climate change and digitalisation. The Strategic Transport Research and Innovation Agenda (STRIA) describes what the EU is doing to accelerate the research and innovation needed to radically change transport by supporting priorities such as electrification, connected and automated transport or smart mobility.

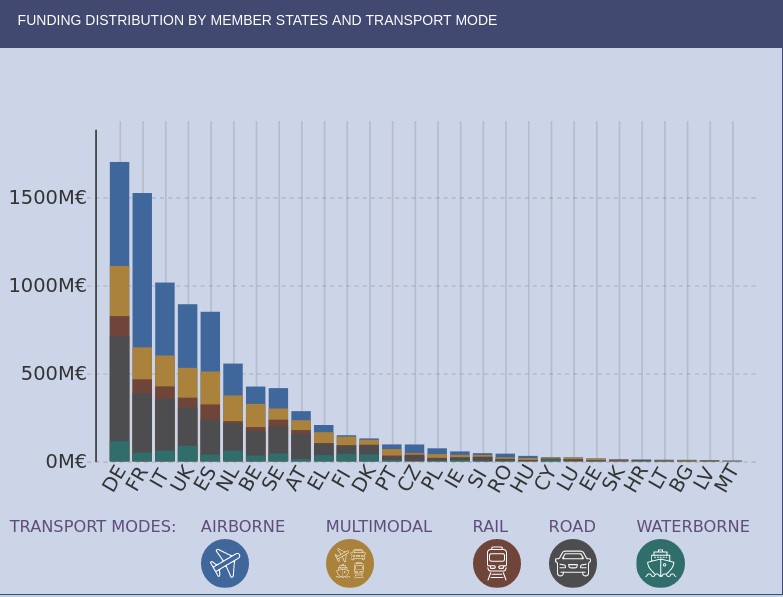

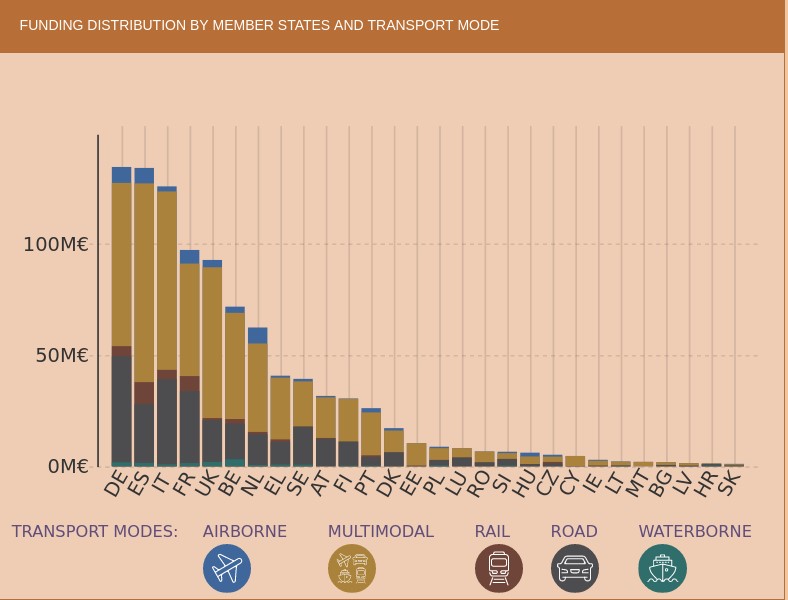

In this sense, the Transport Research and Innovation Monitoring and Information System (TRIMIS) is the tool maintained by the European Commission to provide open access information on research and innovation (R&I) in transport and was launched with the mission to support the formulation of public policies in the field of transport and mobility.

TRIMIS maintains an up-to-date dashboard to visualise data on transport research and innovation and provides an overview and detailed data on the funding and organisations involved in this research. The information can be filtered by the seven STRIA priorities and also includes data on the innovation capacity of the transport sector.

If we look at the geographical distribution of research funding reported by TRIMIS, Spain ranks fifth, far behind Germany and France. The transport modes receiving the greatest effort are road and air transport, which together account for more than half of the total.

However, we find that in the strategic area of Smart Mobility and Services (SMO), which are evaluated in terms of their contribution to the overall sustainability of the energy and transport system, Spain is leading the research effort at the same level as Germany. It should also be noted that the effort being made in Spain in terms of multimodal transport is higher than in other countries.

As an example of the research effort in Spain, the General Directorate of Traffic publishes a pilot dataset that adds semantic capabilities to information on safety-related traffic incidents on the Spanish state road network (excluding the Basque Country and Catalonia). The dataset uses a traffic incident ontology developed by the University of Valencia.

The area of intelligent mobility systems and services aims to contribute to the decarbonisation of the European transport sector and its main priorities include the development of systems that connect urban and rural mobility services and promote modal shift, sustainable land use, travel demand sufficiency and active and light travel modes; the development of mobility data management solutions and public digital infrastructure with fair access or the implementation of intermodality, interoperability and sectoral coupling.

The 100 mobility questions initiative

The 100 Questions Initiative, launched by The GovLab in collaboration with Schmidt Futures, aims to identify the world's 100 most important questions in a number of domains critical to the future of humanity, such as gender, migration or air quality.

One of these domains is dedicated precisely to transport and urban mobility and aims to identify questions where data and data science have great potential to provide answers that will help drive major advances in knowledge and innovation on the most important public dilemmas and the most serious problems that need to be solved.

In accordance with the methodology used, the initiative completed the fourth stage on 28 July, in which the general public voted to decide on the final 10 questions to be addressed. The initial 48 questions were proposed by a group of mobility experts and data scientists and are designed to be data-driven and planned to have a transformative impact on urban mobility policies if they can be solved.

In the next stage, the GovLab working group will identify which datasets could provide answers to the selected questions, some as complex as "where do commuters want to go but really can't and what are the reasons why they can't reach their destination easily?" or "how can we incentivise people to make trips by sustainable modes, such as walking, cycling and/or public transport, rather than personal motor vehicles?"

Other questions relate to the difficulties encountered by reusers and have been frequently highlighted in research articles such as "Open Transport Data for maximising reuse in multimodal route": "How can transport/mobility data collected with devices such as smartphones be shared and made available to researchers, urban planners and policy makers?"

In some cases it is foreseeable that the datasets needed to answer the questions may not be available or may belong to private companies, so an attempt will also be made to define what new datasets should be generated to help fill the gaps identified. The ultimate goal is to provide a clear definition of the data requirements to answer the questions and to facilitate the formation of data collaborations that will contribute to progress towards these answers.

Ultimately, changes in the way we use transport and lifestyles, such as the use of smartphones, mobile web applications and social media, together with the trend towards renting rather than owning a particular mode of transport, have opened up new avenues towards sustainable mobility and enormous possibilities in the analysis and research of the data captured by these applications.

Global initiatives to coordinate research efforts are therefore essential as cities need solid knowledge bases to draw on for effective policy decisions on urban development, clean transport, equal access to economic opportunities and quality of life in urban centres. We must not forget that all this knowledge is also key to proper prioritisation so that we can make the best use of the scarce public resources that are usually available to meet the challenges.

Content written by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and views reflected in this publication are the sole responsibility of the author.

This report published by the European Data Portal (EDP) aims to help open data users in harnessing the potential of the data generated by the Copernicus program.

The Copernicus program produces high-value satellite data, generating a large volume of Earth observation data. This is in line with the European Data Portal's objective of increasing the accessibility and value of open data.

The report addresses the following questions: What can I do with Copernicus data? How can I access the data? What tools do I need to use the data? To answer them, it draws on information from the European Data Portal and specialized catalogues, and examines practical examples of applications that use Copernicus data.

This report is available at this link: "Copernicus data for the open data community"

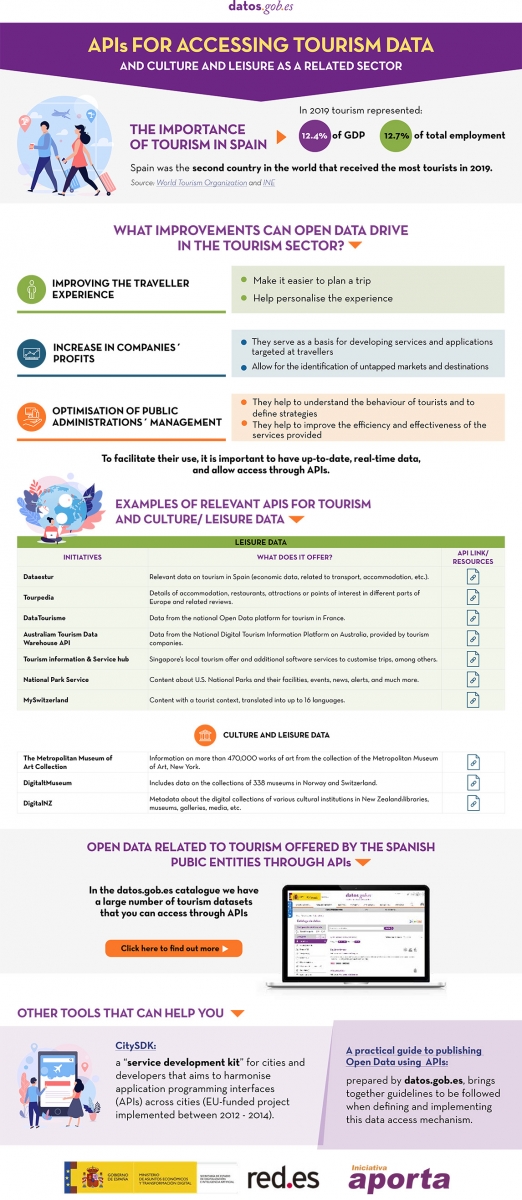

Spain was the second country in the world that received the most tourists during 2019, with 83.8 million visitors. That year, tourism activity represented 12.4% of GDP, employing more than 2.2 million people (12.7% of the total). It is therefore a fundamental sector for our economy.

These figures have been reduced due to the pandemic, but the sector is expected to recover in the coming months. Open data can help. Up-to-date information can bring benefits to all actors involved in this industry:

- Tourists: Open data helps tourists plan their trips, providing them with the information they need to choose where to stay or what activities to do. The up-to-date information that open data can provide is particularly important in times of COVID. There are several portals that collect information and visualisations of travel restrictions, such as the UN's Humanitarian Data Exchange. This website hosts a daily updated interactive map of travel restrictions by country and airline.

- Businesses. Businesses can generate various applications targeted at travellers, with useful information. In addition, by analysing the data, tourism establishments can detect untapped markets and destinations. They can also personalise their offers and even create recommendation systems that help to promote different activities, with a positive impact on the travellers' experience.

- Public administrations. More and more governments are implementing solutions to capture and analyse data from different sources in real time, in order to better understand the behaviour of their visitors. Examples include Segovia, Mallorca and Gran Canaria. Thanks to these tools, they will be able to define strategies and make informed decisions, for example, aimed at avoiding overcrowding. In this sense, tools such as Affluences allow them to report on the occupation of museums, swimming pools and shops in real time, and to obtain predictions for successive time slots.

The benefits of having quality tourism-related data are such that it is not surprising that the Spanish Government has chosen this sector as a priority when it comes to creating data spaces that allow voluntary data sharing between organisations. In this way, data from different sources can be cross-referenced, enriching the various use cases.

The data used in this field are very diverse: data on consumption, transport, cultural activities, economic trends or even weather forecasts. But in order to make good use of this highly dynamic data, it needs to be available to users in appropriate, up-to-date formats and access needs to be automated through application programming interfaces (APIs).

Many organisations already offer data through APIs. In this infographic you can see several examples from Spain at national, regional and local level. In addition to general data portals, there are also APIs on open data platforms devoted exclusively to the tourism sector. The following infographic shows several examples:


Do you know more examples of APIs or other resources that facilitate access to tourism-related open data? Leave us a comment or write to datos.gob.es!

Content prepared by the datos.gob.es team.

Mobility is a key economic driver. Increasing the efficiency and quality of a country's mobility system contributes both to the strength of its economy and to improving the quality of life of its citizens. This is particularly important in the mobility systems of cities and their metropolitan areas, where most of the population and, thus, most of the economic activity is concentrated.

Aware of this, and because we citizens demand it, local authorities have for decades allocated a significant part of their annual resources to expanding and improving their transport and mobility networks and making them more efficient.

In the last decade, open data has been one of the most important vectors of innovation in the mobility strategies developed by cities, giving rise to initiatives that would have been difficult to imagine in previous periods. Despite all the complexities involved, opening both static and real-time mobility datasets for reuse is actually cheap and simple compared to the cost of building new transport infrastructure or of acquiring and maintaining the operational support systems (OSS) associated with mobility services. In addition, the growing deployment of sensor networks, accessible through the control systems rolled out as part of "smart city" strategies, makes the task a little easier.

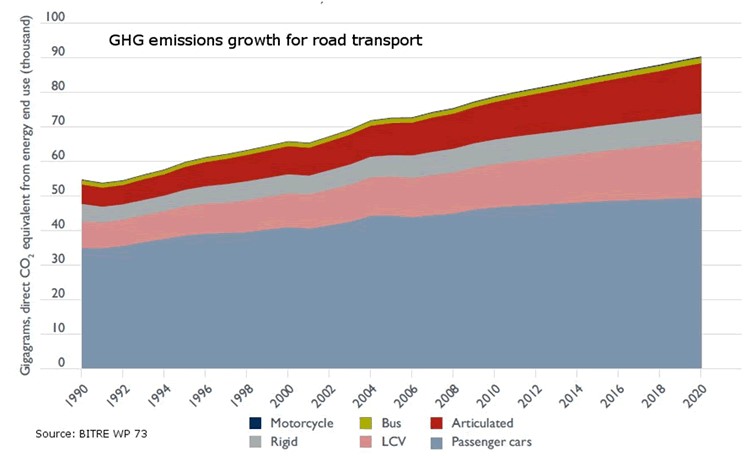

We should not forget, moreover, that public transport is key to tackling climate change. Transport is one of the fastest-growing sources of greenhouse gas emissions, and public transport offers the best mobility solution for moving people quickly and efficiently in cities around the world. As shown in the figure, simply shifting passengers from their private vehicles to public transport has a major impact on reducing greenhouse gas emissions. The Bus Industry Confederation estimates that shifting passengers from cars to public transport can reduce emissions by 65% during peak hours, and by as much as 95% for commuters who switch from private cars to public transport during off-peak hours.

For all these reasons, there are already numerous examples where freeing up transport and mobility data to put it in the hands of travellers is proving to be a policy with important benefits for many cities: it allows better use of resources and contributes to more efficient mobility in urban space.

Let's look at some examples that may not be as well-known as the ones that usually reach the media, but which demonstrate how the release of data allows for innovations that benefit both users and, in some cases, the authorities themselves.

Redesigning New York City bus routes

All cities are constantly looking for ways to improve their bus routes in order to provide the best possible service to citizens. In New York City, however, the open data policy had an unplanned consequence: analysis of the data by the bus network's own users became an important aid to the authorities.

The rider-driven Bus Turnaround Coalition campaign, supported by TransitCenter, a foundation working to improve public transport in US cities, and the Riders Alliance, is using open data to raise awareness about the state of New York City's bus network, proposing solutions for improvement to the Metropolitan Transportation Authority (MTA).

To formulate their recommendations, the organisations analysed bus arrival times using the MTA's own location maps, incorporated real-time data through the GTFS specification, reviewed ridership data, and mapped (and optimised) bus routes.
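The GTFS static format stores scheduled times in a `stop_times.txt` file with columns such as `trip_id`, `arrival_time`, `stop_id` and `stop_sequence`. As a minimal sketch of this kind of analysis (not the coalition's actual code), the Python below computes the scheduled gap between consecutive buses at each stop; comparing these gaps with observed arrivals from a real-time feed is one way to spot bunching and delays. The sample rows are hypothetical.

```python
import csv
import io
from collections import defaultdict


def gtfs_time_to_seconds(value):
    """Convert a GTFS HH:MM:SS time to seconds; hours may exceed 23 after midnight."""
    hours, minutes, seconds = (int(part) for part in value.strip().split(":"))
    return hours * 3600 + minutes * 60 + seconds


def headways_by_stop(stop_times_csv):
    """Minutes between consecutive scheduled arrivals at each stop."""
    arrivals = defaultdict(list)
    for row in csv.DictReader(io.StringIO(stop_times_csv)):
        if row["arrival_time"]:  # arrival_time may be empty for untimed stops
            arrivals[row["stop_id"]].append(gtfs_time_to_seconds(row["arrival_time"]))
    headways = {}
    for stop_id, times in arrivals.items():
        times.sort()
        headways[stop_id] = [(later - earlier) / 60 for earlier, later in zip(times, times[1:])]
    return headways


sample = """trip_id,arrival_time,departure_time,stop_id,stop_sequence
T1,08:00:00,08:00:00,S1,1
T2,08:12:00,08:12:00,S1,1
T3,08:30:00,08:30:00,S1,1
"""
print(headways_by_stop(sample))  # {'S1': [12.0, 18.0]}
```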

Among the most innovative proposals is the shift in approach to route design criteria. Instead of trying to cater to all types of travellers, the Bus Turnaround Coalition, after analysing how people actually move around the city and what type of transport they would need to achieve their goals efficiently, proposed the following recommendations:

- Add lines that take passengers from the outskirts of the city directly to the subway lines, making for a quick trip.

- Improve lines to offer short, fast routes within a neighbourhood for people who want to run a quick errand or visit a close friend.

- Split routes that are too long to minimise the risk of delays.

- Readjust the spacing between stops, which are often too close together, and fill gaps in metro coverage.
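Checking stop spacing only needs stop coordinates, which GTFS publishes as `stop_lat` and `stop_lon` in `stops.txt`. The sketch below uses the haversine formula to measure the straight-line distance between consecutive stops on a route and flags pairs closer than a threshold. The 250 m threshold and the coordinates are hypothetical, and the distance along the street network will be longer than this great-circle estimate.

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def stop_spacings_m(stops):
    """Distances between consecutive stops given as (lat, lon) pairs in route order."""
    return [haversine_m(*a, *b) for a, b in zip(stops, stops[1:])]


def close_stop_pairs(stops, min_spacing_m=250):
    """Indices i where stop i and stop i + 1 are closer than min_spacing_m (hypothetical threshold)."""
    return [i for i, d in enumerate(stop_spacings_m(stops)) if d < min_spacing_m]


# Hypothetical stops along a north-south avenue
route = [(40.7000, -73.9900), (40.7010, -73.9900), (40.7100, -73.9900)]
print([round(d) for d in stop_spacings_m(route)], close_stop_pairs(route))
```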

Open data has turned frequent rider protests and complaints about poor network performance into a set of reasoned, data-driven inputs, which have been captured in a series of MTA commitments to improve New York's bus network, such as redesigning the network by 2021, increasing journey speeds by 25%, and proactively managing bus maintenance.

Bicycle usage data in San Francisco

Like many other cities, San Francisco, through its Municipal Transportation Agency (SFMTA), records travel data from users of its public bike-sharing system and makes it available as open data. In this case, the transport authority itself publishes regular reports, both on the overall use of the system and on the conclusions it draws for the improvement of the city's own mobility.

By documenting and analysing the volumes and trends of bicycle use in San Francisco, the SFMTA is able to support the goals of its Strategic Plan, which aims to prioritise forms of travel other than the private car.

For example, ongoing analysis of cyclist volumes at key intersections in the city, together with citizen input, has reduced traffic congestion and accidents by readjusting traffic priorities according to actual road use at each time of day.
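A minimal sketch of the time-of-day analysis described above: given hypothetical counter records of the form (intersection, timestamp, bikes counted), it totals bicycle volumes by intersection and hour and finds each intersection's peak hour. The record format is an assumption, not the SFMTA's published schema.

```python
from collections import Counter
from datetime import datetime


def hourly_volumes(records):
    """Total bicycles per (intersection, hour of day).

    records: iterable of (intersection_id, ISO 8601 timestamp, bikes_counted).
    """
    totals = Counter()
    for intersection, timestamp, bikes in records:
        hour = datetime.fromisoformat(timestamp).hour
        totals[(intersection, hour)] += bikes
    return totals


def peak_hour(totals, intersection):
    """Hour of day with the highest recorded volume at one intersection."""
    by_hour = {hour: n for (inter, hour), n in totals.items() if inter == intersection}
    return max(by_hour, key=by_hour.get)


records = [
    ("A", "2021-05-03T08:15:00", 30),
    ("A", "2021-05-03T08:45:00", 25),
    ("A", "2021-05-03T17:30:00", 40),
]
totals = hourly_volumes(records)
print(totals[("A", 8)], peak_hour(totals, "A"))  # 55 8
```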

Efficient parking in Sacramento

Many cities try to address traffic congestion from different angles, including efficient parking management. As a result, public parking occupancy is one of the datasets most frequently published by cities with open data initiatives.

In the city of Sacramento, California, the open data initiative publishes data from the citywide sensor network that monitors parking availability not only in the city's public car parks but also at parking meters. This has reduced emissions, as vehicles spend less time looking for parking, while significantly improving traffic flow and the satisfaction of citizens using the Sacpark app.
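As a sketch of how occupancy sensor data can be turned into the availability information an app displays, the Python below computes the share of occupied spaces per block and lists the blocks below a target occupancy. The reading format, block names and the 85% target are assumptions for illustration, not Sacramento's actual data model.

```python
from collections import defaultdict


def occupancy_by_block(readings):
    """Fraction of occupied spaces per block.

    readings: iterable of (block_id, sensor_id, is_occupied), one latest reading per sensor.
    """
    occupied = defaultdict(int)
    total = defaultdict(int)
    for block, _sensor, is_occupied in readings:
        total[block] += 1
        occupied[block] += int(is_occupied)
    return {block: occupied[block] / total[block] for block in total}


def blocks_with_space(readings, target_occupancy=0.85):
    """Blocks whose occupancy is below the target (a hypothetical threshold)."""
    rates = occupancy_by_block(readings)
    return sorted(block for block, rate in rates.items() if rate < target_occupancy)


readings = [
    ("Block A", "s1", True), ("Block A", "s2", True),
    ("Block B", "s3", False), ("Block B", "s4", True),
]
print(occupancy_by_block(readings), blocks_with_space(readings))
```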

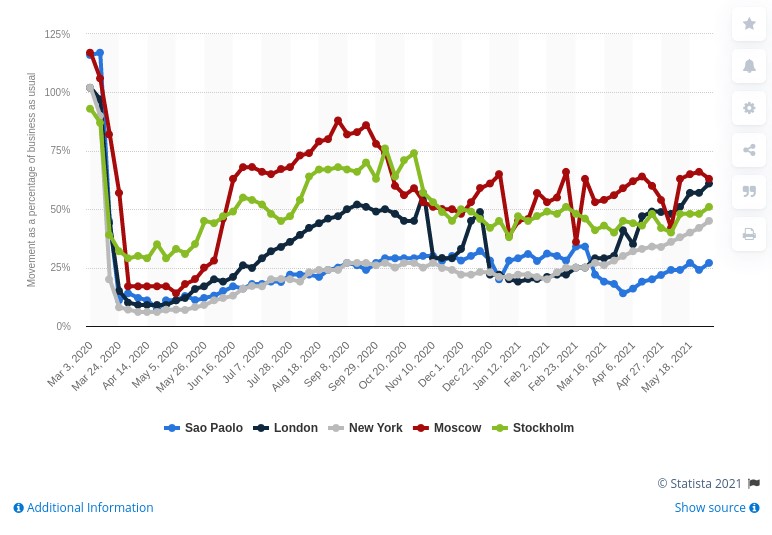

In 2020, passenger transport around the world fell drastically because of the mobility restrictions that governments had to impose to curb the spread of the virus, as the image shows.

As of June 2021, cities are still far from recovering the mobility levels they had in March 2020, but progress continues in making data the foundation for building useful information and an essential ingredient of the new innovations driven by artificial intelligence.

So, as the pandemic recedes, and many initiatives resume, we continue to see how open data is at the heart of smart, connected and environmentally friendly mobility strategies.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and views reflected in this publication are the sole responsibility of the author.

A symptom of the maturity of an open data ecosystem is the possibility of finding datasets and use cases across different sectors of activity. The European Open Data Portal itself takes this into account in its maturity index. Classifying data and their uses by thematic category boosts reuse by allowing users to locate and access them in a more targeted way. It also makes it easier to detect needs in specific areas, identify priority sectors and estimate impact.

In Spain we find different thematic repositories, such as UniversiData for higher education or TURESPAÑA for the tourism sector. However, because responsibility for certain subjects is distributed among the Autonomous Communities and City Councils, data on the same subject can be difficult to locate.

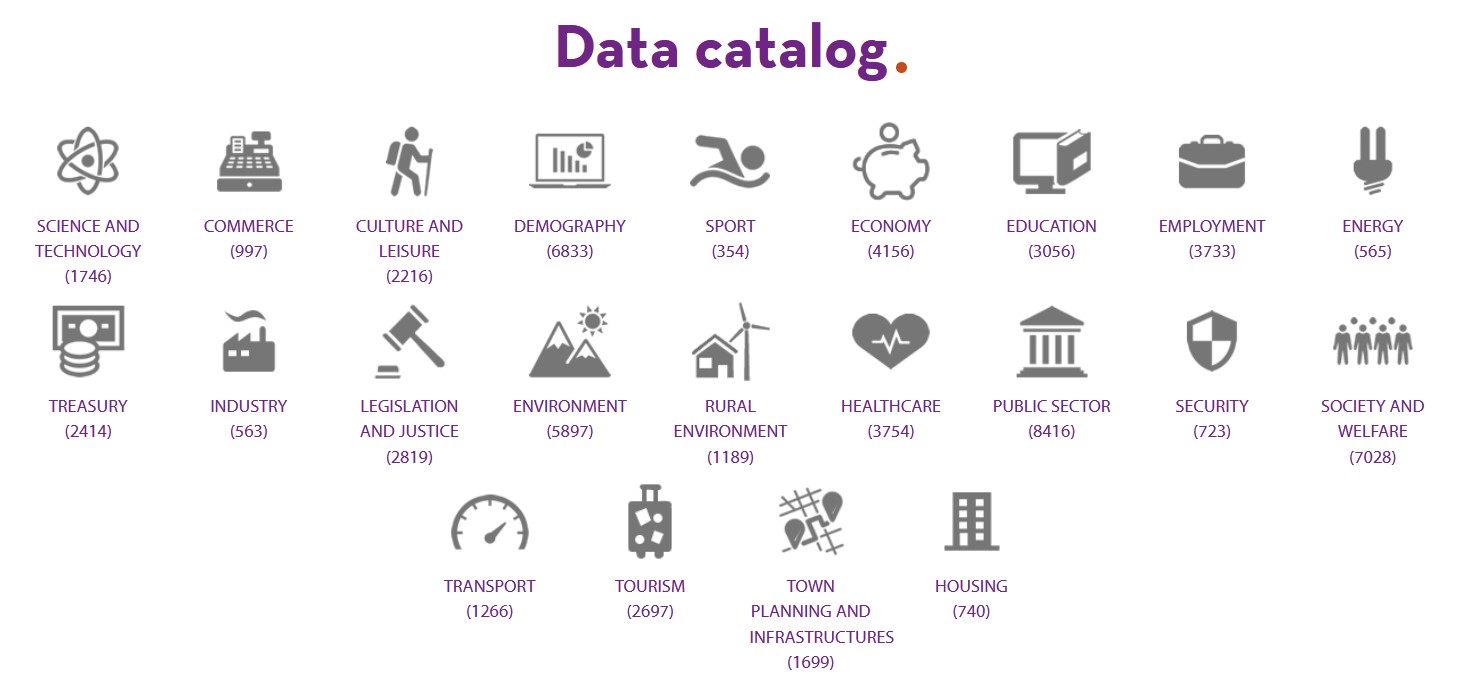

Datos.gob.es brings together the open data of all the Spanish public bodies that have federated with the portal. Our catalogue therefore contains datasets from different publishers, organised into the 22 thematic categories defined by the Technical Interoperability Standard.

Number of datasets by category as of June 2021

But in addition to showing the datasets divided by subject area, it is also important to show highlighted datasets, use cases, guides and other help resources by sector, so that users can more easily access content related to their areas of interest. For this reason, at datos.gob.es we have launched a series of web sections focused on different sectors of activity, with specific content for each area.

4 sectorial sections that will be gradually extended to other areas of interest

Datos.gob.es currently features 4 sectors: Environment, Culture and leisure, Education and Transport. These sectors were chosen for different strategic reasons:

- Environment: Environmental data are essential to understand how our environment is changing in order to fight climate change, pollution and deforestation. Directive 2019/1024 itself identifies environmental data as high-value data. At datos.gob.es you can find data on air quality, weather forecasting, water scarcity, etc., all of which are essential to promote solutions for a more sustainable world.

- Transport: Directive 2019/1024 also highlights the importance of transport data. Often available in real time, these data facilitate decision-making aimed at efficient service management and improving the passenger experience. Transport data are among the most widely used for creating services and applications (e.g. those that report traffic conditions, bus timetables, etc.). This category includes datasets such as real-time traffic incidents or fuel prices.

- Education: With the advent of COVID-19, many students had to follow their studies from home, using digital solutions that were not always ready. In recent months, through initiatives such as the Aporta Challenge, an effort has been made to promote the creation of solutions that incorporate open data in order to improve the efficiency of the educational sphere, drive improvements - such as the personalisation of education - and achieve more universal access to knowledge. Some of the education datasets that can be found in the catalogue are the degrees offered by Spanish universities or surveys on household spending on education.

- Culture and leisure: Culture and leisure data are particularly valuable for reuse in developing, for example, educational and learning content. Cultural data can generate new knowledge that helps us understand our past, present and future. Examples of datasets are the location of monuments or listings of works of art.

Structure of each sector

Each sector page follows the same structure, which makes it easier to locate content that is also available in other sections.

It opens with a highlights area showing some outstanding datasets in the category, together with a link to all the datasets on this subject in the catalogue.

It continues with news related to the data and the sector in question, which can range from events or information on specific initiatives (such as Procomún in the field of educational data or the Green Deal in the environment) to the latest developments at strategic and operational level.

Finally, there are three sections related to use cases: innovation, reusing companies and applications. In the first section, articles provide examples of innovative uses, often linked to disruptive technologies such as Artificial Intelligence. The last two sections contain profiles of companies and applications that use open data from this category to generate a benefit for society or the economy.

Highlights section on the home page

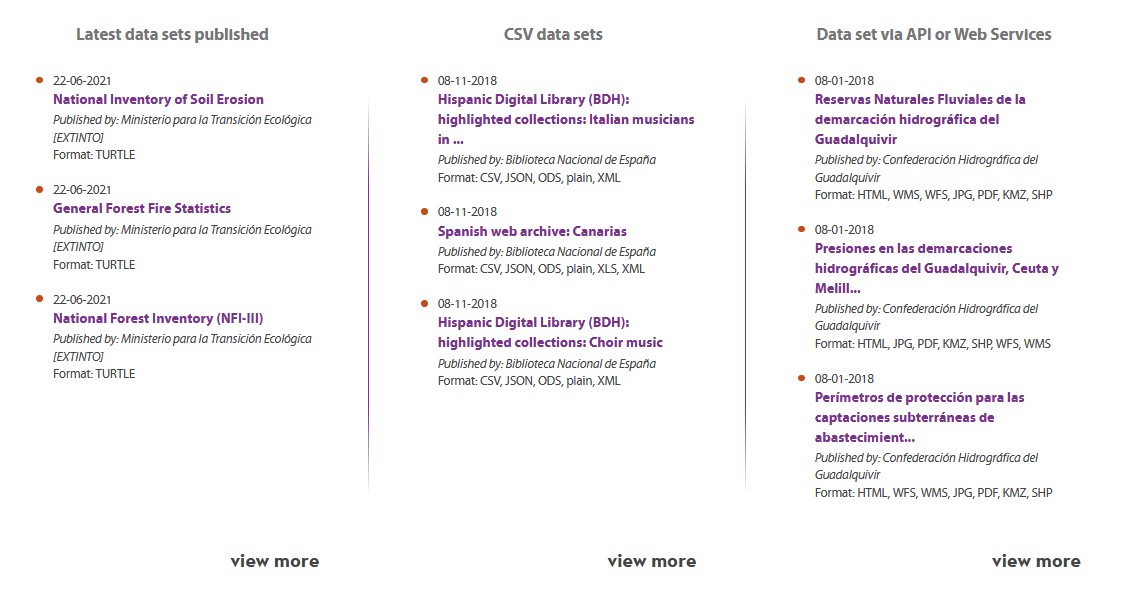

In addition to creating sectoral pages, over the last year datos.gob.es has also added a section of highlighted datasets. The aim is to give greater visibility to datasets that meet a series of characteristics: they are up to date, are in CSV format or can be accessed via APIs or web services.
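Selection criteria like these can be expressed as a simple filter. The sketch below assumes one reading of them, namely that a dataset must be up to date and either offer a CSV distribution or be reachable through an API or web service; the `Dataset` class, the one-year freshness window and the sample records are hypothetical, not the portal's actual rules.

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class Dataset:
    title: str
    last_updated: date
    formats: set = field(default_factory=set)  # distribution formats, e.g. {"CSV", "JSON"}
    has_api: bool = False                      # reachable through an API or web service


def is_highlight_candidate(dataset, today, max_age_days=365):
    """True if the dataset is recent and machine-friendly (CSV or API/web service)."""
    is_recent = today - dataset.last_updated <= timedelta(days=max_age_days)
    is_machine_friendly = "CSV" in {f.upper() for f in dataset.formats} or dataset.has_api
    return is_recent and is_machine_friendly


today = date(2021, 6, 30)
catalogue = [
    Dataset("Air quality", date(2021, 6, 1), {"csv"}),
    Dataset("Fuel prices", date(2021, 6, 29), {"XML"}, has_api=True),
    Dataset("Old census", date(2015, 1, 1), {"CSV"}),
    Dataset("Monuments", date(2021, 5, 1), {"PDF"}),
]
print([d.title for d in catalogue if is_highlight_candidate(d, today)])
```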

What other sectors would you like to highlight?

Datos.gob.es plans to keep increasing the number of highlighted sectors, so we invite you to leave your proposals in the comments.

Artificial intelligence is transforming companies, and supply chain processes are one of the areas benefiting most. Supply chain management covers all resource management activities, including the acquisition of materials, manufacturing, storage and transportation from origin to final destination.

In recent years, business systems have been modernized and are now supported by increasingly ubiquitous computer networks. Within these networks, sensors, machines, systems, vehicles, smart devices and people are interconnected and continuously generate information. Added to this is the growth in computing capacity, which allows these large volumes of data to be processed quickly and efficiently. All these advances have stimulated the application of Artificial Intelligence technologies, which offer a sea of possibilities.

In this article we are going to review some Artificial Intelligence applications at different points in the supply chain.

Technological implementations in the different phases of the supply chain

Planning

According to Gartner, volatility in demand is one of the aspects that most concerns business leaders. The COVID-19 crisis has highlighted weaknesses in planning capacity within the supply chain. To organize production properly, it is necessary to know customers' needs. This can be done through predictive analytics techniques that forecast demand, that is, estimate the probable future demand for a product or service. This process is also the starting point for many other activities, such as warehousing, shipping, product pricing, purchasing raw materials, production planning, and other processes that aim to meet demand.

Access to real-time data allows the development of Artificial Intelligence models that take advantage of all the contextual information to obtain more precise results, reducing the error significantly compared to more traditional forecasting methods such as ARIMA or exponential smoothing.
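For reference, this is what one of the traditional baselines named above looks like. The Python below implements simple exponential smoothing, in which each forecast is a weighted average of the latest observation and the previous forecast, and measures its one-step-ahead mean absolute error, the kind of figure an AI model would be compared against. The smoothing factor `alpha` and the demand series are hypothetical.

```python
def simple_exponential_smoothing(series, alpha):
    """Smoothed levels; element i is the forecast for period i + 1.

    level_t = alpha * y_t + (1 - alpha) * level_{t-1}, initialised with the first value.
    """
    if not 0 < alpha <= 1:
        raise ValueError("alpha must be in (0, 1]")
    level = series[0]
    levels = []
    for value in series:
        level = alpha * value + (1 - alpha) * level
        levels.append(level)
    return levels


def one_step_mae(series, forecasts):
    """Mean absolute error of forecasts[i] against the next observation series[i + 1]."""
    errors = [abs(actual - predicted) for predicted, actual in zip(forecasts, series[1:])]
    return sum(errors) / len(errors)


demand = [10, 20, 30]  # hypothetical weekly units sold
forecasts = simple_exponential_smoothing(demand, alpha=0.5)
print(forecasts, one_step_mae(demand, forecasts))  # [10.0, 15.0, 22.5] 12.5
```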

Production planning is another recurring problem in which many kinds of variables play an important role. To optimize production planning and satisfactorily meet its objectives, artificial intelligence systems can handle information on material resources; the availability of human resources (taking into account shifts, vacations, leave or assignments to other projects) and their skills; the available machines and their maintenance; and the manufacturing process and its dependencies.

Production

Among the stages of the production process, one of those most driven by the application of artificial intelligence is quality control, and more specifically defect detection. According to the European Commission, 50% of production can end up as scrap due to defects, while in complex manufacturing lines the percentage can rise to 90%. Furthermore, non-automated quality control is expensive, as people need to be trained to perform inspections properly, and these manual inspections can cause bottlenecks in the production line, delaying delivery times. In addition, the number of inspectors does not grow as production increases.

In this scenario, computer vision algorithms can address these problems. These systems learn from examples of defects and extract common patterns that allow them to classify future production defects. Their advantage is that they can match or even exceed human precision, process thousands of images in a very short time and scale easily.
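Production vision systems usually rely on deep neural networks trained on labelled images, which is beyond a short example. As a deliberately simplified stand-in for the idea of learning from defect examples, the sketch below trains a nearest-centroid classifier on feature vectors that are assumed to have already been extracted from images; the two features and their values are hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of equal-length feature vectors."""
    return [sum(column) / len(vectors) for column in zip(*vectors)]


def train_nearest_centroid(examples):
    """examples: dict mapping a label to a list of feature vectors for that label."""
    return {label: centroid(vectors) for label, vectors in examples.items()}


def classify(model, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical features per part: [scratch_area_ratio, edge_irregularity]
examples = {
    "ok": [[0.01, 0.10], [0.02, 0.12], [0.00, 0.08]],
    "defect": [[0.20, 0.60], [0.35, 0.70], [0.25, 0.55]],
}
model = train_nearest_centroid(examples)
print(classify(model, [0.30, 0.65]), classify(model, [0.01, 0.09]))  # defect ok
```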

On the other hand, it is very important to ensure the reliability of the machinery and reduce the chances of production stoppage due to breakdowns. In this sense, many companies are betting on predictive maintenance systems that are capable of analyzing monitoring data to assess the condition of the machinery and schedule maintenance if necessary.

Open data can help train these algorithms. For example, NASA offers a collection of datasets donated by various universities, agencies and companies that are useful for developing prediction algorithms. Most of them are time series running from a normal operating state to a failed state. This article shows how one of these datasets (the Turbofan Engine Degradation Simulation Data Set, which includes sensor data from 100 engines of the same model) can be used to perform an exploratory analysis and build a baseline linear regression model.
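As a sketch of the first steps such an analysis usually involves (not the referenced article's code), the Python below parses rows in the whitespace-separated layout used by this data set (unit number, cycle, three operational settings, then sensor readings), labels each row with its remaining useful life (RUL) and fits a one-feature least-squares line. Deriving RUL as the unit's last cycle minus the current cycle assumes, as in the training files, that each engine is recorded until failure; the chosen sensor and the truncated sample rows are hypothetical.

```python
from collections import defaultdict


def parse_rows(lines):
    """Parse rows: unit, cycle, 3 operational settings, then sensor readings."""
    rows = []
    for line in lines:
        parts = line.split()
        if parts:
            rows.append((int(parts[0]), int(parts[1]), [float(p) for p in parts[2:]]))
    return rows


def add_rul(rows):
    """Label each row with remaining useful life = unit's last cycle - current cycle."""
    last_cycle = defaultdict(int)
    for unit, cycle, _ in rows:
        last_cycle[unit] = max(last_cycle[unit], cycle)
    return [(unit, cycle, features, last_cycle[unit] - cycle) for unit, cycle, features in rows]


def fit_line(xs, ys):
    """Ordinary least squares for y = intercept + slope * x."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


SENSOR = 4                  # hypothetical choice of sensor (1-based)
FEATURE_INDEX = 2 + SENSOR  # features[0:3] are settings, so sensor s sits at features[2 + s]

# Hypothetical, heavily truncated rows (real rows carry 21 sensor columns)
sample = [
    "1 1 0.0 0.0 100.0 1.0 2.0 3.0 1400.0",
    "1 2 0.0 0.0 100.0 1.0 2.0 3.0 1405.0",
    "1 3 0.0 0.0 100.0 1.0 2.0 3.0 1410.0",
]
labelled = add_rul(parse_rows(sample))
xs = [features[FEATURE_INDEX] for _, _, features, _ in labelled]
ys = [rul for _, _, _, rul in labelled]
intercept, slope = fit_line(xs, ys)
print(ys, intercept, slope)
```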

Transport

Route optimization is one of the most critical elements in transportation planning and business logistics in general. Optimal planning ensures that the load arrives on time while keeping cost and energy to a minimum. Many variables come into play, such as workload peaks, traffic incidents and weather conditions. And that's where artificial intelligence comes in. A route optimizer based on artificial intelligence can combine all this information to offer the best possible route or modify it in real time depending on the incidents that occur during the journey.
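Real route optimizers combine many data sources and constraints, but at the core of many of them is a shortest-path search over a road graph. The Python sketch below applies Dijkstra's algorithm to travel times in minutes and adds per-segment delays (for incidents or weather, say), so re-running it with updated delays re-plans the route. The graph, node names and delay figures are hypothetical, and this is a classical algorithm rather than an AI model.

```python
import heapq


def shortest_route(graph, start, goal, delays=None):
    """Dijkstra's algorithm over travel times in minutes.

    graph: {node: {neighbour: base_minutes}}
    delays: optional {(node, neighbour): extra_minutes} from incidents, weather, etc.
    Returns (total_minutes, path), or (inf, []) if the goal is unreachable.
    """
    delays = delays or {}
    best = {start: 0}
    queue = [(0, start, [start])]
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale entry: a cheaper route to this node was already found
        for neighbour, minutes in graph.get(node, {}).items():
            new_cost = cost + minutes + delays.get((node, neighbour), 0)
            if new_cost < best.get(neighbour, float("inf")):
                best[neighbour] = new_cost
                heapq.heappush(queue, (new_cost, neighbour, path + [neighbour]))
    return float("inf"), []


roads = {
    "Depot": {"A": 10, "B": 15},
    "A": {"Client": 10},
    "B": {"Client": 10},
}
print(shortest_route(roads, "Depot", "Client"))  # (20, ['Depot', 'A', 'Client'])
print(shortest_route(roads, "Depot", "Client", delays={("A", "Client"): 15}))  # (25, ['Depot', 'B', 'Client'])
```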

Logistics organizations use transport data and official maps to optimize routes in all modes of transport, avoiding highly congested areas and improving efficiency and safety. According to the study “Open Data Impact Map”, the open data most demanded by these companies are those directly related to means of transport (routes, public transport schedules, number of accidents, etc.), but also geospatial data, which allow them to better plan their trips.

In addition, some companies share their data in B2B models. As stated in the Cotec Foundation report “Guide for opening and sharing data in the business environment”, the Spanish company Primafrio shares data with its customers as a source of value in their operations: for locating and positioning the fleet and products (real-time data that can be useful to the customer, such as the truck license plate, position or driver) and for billing or accounting tasks. As a result, its customers have improved order tracking and can bring billing forward.

Closing the transport section, one of the objectives of companies in the logistics sector is to ensure that goods reach their destination in optimal condition. This is especially critical when working with companies in the food industry, so the state of the cargo must be monitored during transport. Controlling variables such as temperature and location, or detecting impacts, is crucial to know how and when the load deteriorated and thus take the necessary corrective actions to avoid future problems. Technologies such as IoT, Blockchain and Artificial Intelligence are already being applied to these types of solutions, sometimes including the use of open data.
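As a minimal sketch of how monitoring data can reveal when a load deteriorated, the Python below scans chronologically ordered temperature readings and returns the moment the temperature first stayed above a limit for a minimum duration. The reading format, the 5 °C limit and the five-minute duration are hypothetical values, not a cold-chain standard; impact detection could follow the same pattern with accelerometer readings.

```python
from datetime import datetime


def first_excursion(readings, max_temp_c, min_duration_s=0):
    """Start time of the first period above max_temp_c lasting at least min_duration_s.

    readings: (ISO 8601 timestamp, temperature in °C) pairs in chronological order.
    Returns the ISO timestamp where that period began, or None if there was none.
    """
    start = None
    for timestamp, temperature in readings:
        moment = datetime.fromisoformat(timestamp)
        if temperature > max_temp_c:
            if start is None:
                start = moment  # a new excursion begins
            if (moment - start).total_seconds() >= min_duration_s:
                return start.isoformat()
        else:
            start = None  # back within limits, so reset
    return None


# Hypothetical readings from a refrigerated trailer
readings = [
    ("2021-06-01T10:00:00", 3.5),
    ("2021-06-01T10:05:00", 6.2),
    ("2021-06-01T10:10:00", 6.8),
    ("2021-06-01T10:15:00", 4.0),
]
print(first_excursion(readings, max_temp_c=5.0, min_duration_s=300))  # 2021-06-01T10:05:00
```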

Customer service

Offering good customer service is essential for any company. Conversational assistants can enrich the customer experience by allowing users to interact with computer applications conversationally, through text, graphics or voice. Using speech recognition and natural language processing techniques, these systems can interpret users' intentions and take the necessary actions to respond to their requests. In this way, users could interact with the assistant to track their shipment or to modify or place an order. Training these conversational assistants requires quality data to achieve optimal results.
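Production assistants rely on trained natural language understanding models, but the task they perform, mapping an utterance to an intent such as tracking a shipment, can be sketched with simple keyword matching. The intents and keyword lists below are hypothetical, and keyword matching is a deliberate simplification rather than the NLP techniques described above.

```python
import re

# Hypothetical intents and trigger words for a logistics assistant
INTENT_KEYWORDS = {
    "track_shipment": {"track", "tracking", "where", "shipment", "delivery"},
    "modify_order": {"change", "modify", "update", "cancel"},
    "place_order": {"buy", "purchase", "place", "new"},
}


def detect_intent(utterance):
    """Return the intent sharing the most words with the utterance, or "fallback"."""
    words = set(re.findall(r"[a-z]+", utterance.lower()))
    scores = {intent: len(words & keywords) for intent, keywords in INTENT_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "fallback"


print(detect_intent("Where is my shipment?"))      # track_shipment
print(detect_intent("I need to change my order"))  # modify_order
print(detect_intent("Hello there"))                # fallback
```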

In this article we have seen only some of the applications of artificial intelligence to different phases of the supply chain, but its potential is not limited to them. Other applications include the automated storage Amazon uses in its facilities, demand-based dynamic pricing and the application of artificial intelligence in marketing, which give an idea of how artificial intelligence is revolutionizing consumption and society.

Content prepared by Jose Antonio Sanchez, Data Science expert and Artificial Intelligence enthusiast.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.