Our language, both written and spoken, constitutes a peculiar type of data. Language is the human means of communication par excellence, and its main features are ambiguity and complexity. Because language is unstructured data, processing it has traditionally been a challenge for machines, which makes it difficult to use in information analysis processes. With the explosion of social networks and the advance of supercomputing and data analytics in fields as diverse as medicine and call centers, it is not surprising that a large part of the subfields of artificial intelligence are dedicated to developing strategies and algorithms to process and generate natural language.

Introduction

In the domain of data analysis applied to natural language processing, there are two major goals: Understanding and Generating.

- Understand: a set of techniques, tools and algorithms whose main objective is to process and understand natural language and ultimately transform this information into structured data that a machine can exploit. The level of complexity varies depending on whether the message transmitted in natural language has a written or spoken format. In addition, the language of the message adds considerably to the processing complexity, since the recognition algorithms must be trained in that specific language or a similar one.

- Generate: algorithms that try to generate messages in natural language. That is, algorithms that consume classical or structured data to generate a communication in natural language, whether written or spoken.

Figure 1, "Natural language processing and its two associated approaches", shows a diagram that helps to better understand these two tasks that are part of natural language processing.

To better understand these techniques, let's give some examples:

Natural Language Understanding

In the first group, we find applications that a person uses to request certain information or describe a problem, while a machine is responsible for receiving and processing that message with the aim of resolving or addressing the request. For example, when we call the information services of almost any company, our call is answered by a machine that, through a guided or assisted dialogue, tries to direct us to the most appropriate service or department. In this specific example, the difficulty is twofold: the system first has to convert our spoken message into a written message and store it, and then process it with a natural language processing routine or algorithm that interprets the request and makes the correct decision based on a pre-established decision tree.
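The second half of that process, going from the transcribed message to a department, can be illustrated with a minimal sketch. It assumes the speech has already been converted to text, and it uses simple keyword rules as a stand-in for the pre-established decision tree. The departments, the keywords and the function names are hypothetical and chosen only for illustration.

```python
import re

# Hypothetical routing rules: each department is triggered by a few keywords.
# Departments and keywords are illustrative assumptions, not a real system.
ROUTING_RULES = [
    ("billing", {"invoice", "bill", "charge", "payment"}),
    ("technical_support", {"broken", "error", "internet", "outage"}),
    ("sales", {"buy", "upgrade", "offer", "contract"}),
]
DEFAULT_DEPARTMENT = "human_operator"


def tokenize(message: str) -> set:
    """Lowercase the transcribed message and return its set of words."""
    return set(re.findall(r"[a-z']+", message.lower()))


def route_call(transcript: str) -> str:
    """Return the department whose keywords overlap most with the transcript."""
    words = tokenize(transcript)
    best_department, best_hits = DEFAULT_DEPARTMENT, 0
    for department, keywords in ROUTING_RULES:
        hits = len(words & keywords)
        if hits > best_hits:
            best_department, best_hits = department, hits
    return best_department


print(route_call("There is a charge on my last invoice that I do not understand"))
print(route_call("Good morning, I would like to speak to someone"))
```

When no keyword matches, the sketch falls back to a human operator, which mirrors the guided dialogue handing the call over when the request cannot be interpreted.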

Another application of these techniques arises in situations that involve a lot of written information from routine reports, for example a medical or police report. Reports of this type usually contain structured data, entered in the different fields of a specific software application (for example, date, subject, name of the declarant or patient, etc.). However, they also usually contain one or several free-format fields where the events are described.

Currently, that free-format text (written digitally) is stored but not processed to interpret its content. In the health field, for example, the Apache cTAKES tool could be used to change this situation. It is a software development framework that allows clinical terms included in medical reports to be interpreted and extracted for later use as a diagnostic assistant or for the creation of medical dictionaries (you can find more information in this interesting video that explains how cTAKES works).
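To give an idea of what extracting terms from a free-format field means, here is a minimal sketch based on a tiny hand-written dictionary. It does not use cTAKES or reproduce its API. The vocabulary, the sample record and the function name are invented for illustration, and a real system would rely on a full clinical terminology and far richer linguistic analysis.

```python
import re

# Tiny illustrative vocabulary: surface form -> normalized term.
# These entries are assumptions made up for this example.
TERM_DICTIONARY = {
    "headache": "headache",
    "high blood pressure": "hypertension",
    "hypertension": "hypertension",
    "fever": "fever",
}


def extract_terms(free_text: str) -> list:
    """Return the normalized terms found in the text, in order of appearance."""
    text = free_text.lower()
    found = []
    for surface, normalized in TERM_DICTIONARY.items():
        match = re.search(r"\b" + re.escape(surface) + r"\b", text)
        if match:
            found.append((match.start(), normalized))
    # Sort by position and drop duplicates, keeping the first occurrence.
    ordered = []
    for _, term in sorted(found):
        if term not in ordered:
            ordered.append(term)
    return ordered


# Invented sample record: one structured field plus one free-format field.
report = {
    "subject": "routine check-up",
    "notes": "Patient reports fever and a history of high blood pressure.",
}
report["terms"] = extract_terms(report["notes"])
print(report["terms"])
```

The extracted terms become one more structured field of the report, which is what makes the free-format text usable in later analysis.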

Natural Language Generation

On the other hand, the applications and examples that demonstrate the potential of natural language generation, whether as text or as speech, are many and varied. One of the best-known examples is the generation of weather summaries. Most of the summaries and meteorological reports found on the Web have been generated with a natural language generation engine based on the quantitative forecast data offered by official agencies. In a very simplified way, a natural language generation algorithm would generate the expression “The weather is getting worse” as a linguistic description of the following quantitative variable (∆P = Pt0 - Pt1), a calculation that shows that atmospheric pressure is going down. Changes in atmospheric pressure are a well-known indicator of how the weather will evolve in the short term. The obvious question now is how the algorithm determines what to write. The answer is simple: most of these techniques use templates to generate natural language, that is, phrases that are combined depending on the outputs determined by the algorithm. Although this might seem simple, the system can reach a high degree of complexity if fuzzy logic is introduced to determine (in the example) to what degree the weather is getting worse.
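The template idea described above can be sketched in a few lines. The example below assumes pressures measured in hPa and uses a single linear membership function as a very reduced form of fuzzy logic. The 10 hPa scale, the 0.5 cut-off, the wording of the template and the function names are all assumptions made for illustration, not values taken from any real forecasting engine.

```python
# Template with one slot, filled according to the computed degree.
TEMPLATE = "The weather is getting {intensity} worse."


def worsening_degree(p_t0: float, p_t1: float, full_drop_hpa: float = 10.0) -> float:
    """Map the pressure drop (p_t0 - p_t1) to a degree between 0 and 1.

    A drop of `full_drop_hpa` or more counts as fully "getting worse";
    a stable or rising pressure gives a degree of 0.
    """
    delta_p = p_t0 - p_t1
    return max(0.0, min(1.0, delta_p / full_drop_hpa))


def describe_weather(p_t0: float, p_t1: float) -> str:
    """Turn two pressure readings into a short sentence in natural language."""
    degree = worsening_degree(p_t0, p_t1)
    if degree == 0.0:
        return "The weather is stable or improving."
    intensity = "slightly" if degree < 0.5 else "clearly"
    return TEMPLATE.format(intensity=intensity)


print(describe_weather(1015.0, 1013.0))
print(describe_weather(1015.0, 1003.0))
print(describe_weather(1010.0, 1012.0))
```

A production system would combine many such variables and templates, but the principle is the same: numbers go in, and a phrase selected and filled in by rules comes out.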

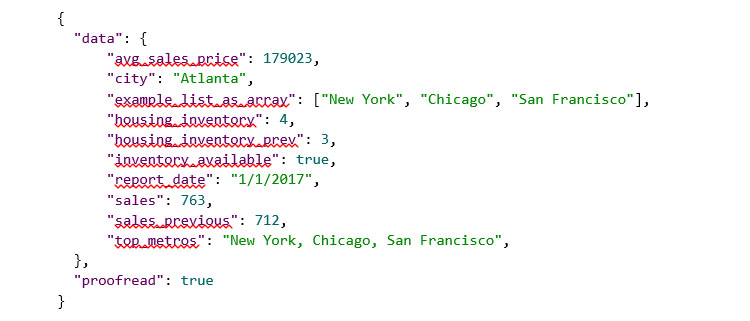

Other typical examples where natural language generation is commonly found are summaries of sporting events and periodic reports on markets such as the stock market or real estate. For example, a simple tool can be used to generate natural language from the following set of structured data (in JSON format):

We will obtain the following summary in natural language, in addition to some details regarding the readability and metrics of the returned text:

Another example is machine translation, one of the applications of natural language processing with the greatest impact. It covers both understanding and generation, with an added complexity: understanding and processing are based on the source language, while the message is generated in the target language.

All these examples show that the benefits of using natural language processing tools are many. These tools make it possible to scale up, so that some companies can generate 10,000 or 100,000 narratives per week that give value to their clients. Without these generation and automation tools it would be impossible to reach these levels. They also democratize certain services for users who are not experts in handling quantitative variables. This is the case of narratives that summarize our electricity consumption without requiring us to be experts familiar with variables such as kWh.

From the point of view of natural language processing, these technologies have radically changed the way after-sales customer service is understood. Combined with technologies such as chatbots or conversational robots, they improve the customer experience by attending to clients quickly and at any time. Executed by machines, these natural language processing technologies can also consult customer data efficiently while the customer is guided through an (assisted) dialogue in their natural language.

Given all these possibilities for the future, it is not surprising that an increasing number of companies and organizations are developing natural language processing, a field that can bring great benefits to our society. Noteworthy is the initiative of the State Secretariat for Digital Progress, which has designed a specific plan for the promotion of language technologies (www.PlanTL.es), meaning natural language processing, machine translation and conversational systems.

Content prepared by Alejandro Alija, expert in Digital Transformation and innovation.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.