Updated: 21/03/2024

In January 2023, the European Commission published a list of high-value datasets that public sector bodies must make available to the public within a maximum of 16 months. The main objective of establishing the list was to ensure that public data with the highest socio-economic potential are made available for re-use with minimal legal and technical restrictions, and at no cost. Some of these public sector datasets, such as meteorological or air quality data, are particularly interesting for developers and creators of services such as apps or websites, which bring added value and important benefits for society, the environment or the economy.

The publication of the Regulation has been accompanied by a set of frequently asked questions to help public bodies understand the benefit of high-value datasets (HVDs) for society and the economy, as well as to explain some aspects of their obligatory nature and the support available for publication.

In line with this proposal, Executive Vice-President for a Digitally Ready Europe, Margrethe Vestager, stated the following in the press release issued by the European Commission:

"Making high-value datasets available to the public will benefit both the economy and society, for example by helping to combat climate change, reducing urban air pollution and improving transport infrastructure. This is a practical step towards the success of the Digital Decade and building a more prosperous digital future".

In parallel, Internal Market Commissioner Thierry Breton also added the following words on the announcement of the list of high-value data: "Data is a cornerstone of our industrial competitiveness in the EU. With the new list of high-value datasets we are unlocking a wealth of public data for the benefit of all". Start-ups and SMEs will be able to use this to develop new innovative products and solutions to improve the lives of citizens in the EU and around the world.

Six categories to bring together new high-value datasets

The regulation is thus created under the umbrella of the European Open Data Directive, which defines six categories to differentiate the new high-value datasets requested:

- Geospatial

- Earth observation and environmental

- Meteorological

- Statistical

- Business

- Mobility

However, as stated in the European Commission's press release, this thematic range could be extended at a later stage depending on technological and market developments. The datasets will be available in machine-readable format, via an application programming interface (API) and, where relevant, also with a bulk download option.

In addition, the reuse of datasets such as mobility or building geolocation data can expand the business opportunities available for sectors such as logistics or transport. In parallel, weather observation, radar, air quality or soil pollution data can also support research and digital innovation, as well as policy making in the fight against climate change.

Ultimately, greater availability of data, especially high-value data, has the potential to boost entrepreneurship as these datasets can be an important resource for SMEs to develop new digital products and services, which in turn can also attract new investors.

Find out more in this infographic:

Access the accessible version on two pages.

Digital Earth Solutions is a technology company whose aim is to contribute to the conservation of marine ecosystems through innovative ocean modelling solutions.

Based on more than 20 years of CSIC studies in ocean dynamics, Digital Earth Solutions has developed unique software capable of predicting, in a few minutes and with high precision, the geographical evolution of any spill or floating body (plastics, people, algae...). It can forecast the trajectory in the sea for the following days or trace the origin by analysing the movement back in time.

Thanks to this technology, it is possible to minimise the impact of oil and other waste spills on coasts, seas and oceans.

For years now we have been announcing that Artificial Intelligence is undergoing one of its most prolific, exciting periods: a time when applications and use cases are emerging in which human intelligence merges with artificial intelligence. Some occupations are changing forever. Journalists and writers now have software tools that can write for them. Content creators (images or video) can ask the machine to create for them just by saying a phrase. In this post we have taken a closer look at this last example. We have been able to test DALL-E 2 and the results have left us speechless.

Introduction

Nowadays, in the technological community worldwide, there is an underlying buzz, a collective excitement among all lovers of digital technologies and in particular of artificial intelligence. On several occasions we have mentioned the innovations of the company OpenAI in this communication space. We have written several articles where we talk about the GPT-3 algorithm and what it is capable of in the field of natural language processing. Recently, OpenAI has been doing away with the waiting lists (on which many of us had been enrolled for a long time) to allow us to test, in a limited way, the capabilities of the GPT-3 algorithm implemented in different types of applications.

Example of the multiple applications of GPT-3 in the field of natural language.

We recommend that our readers try out the text completion tool with which, merely by providing a short sentence, the AI completes the text with several paragraphs indistinguishable from human writing. The last few days have been hectic, with crowds of people testing the ChatGPT tool. The degree of naturalness with which AI can hold a conversation is simply amazing. The results are having an impact on a wide variety of use cases, such as support for software developers. ChatGPT has been able to programme simple code routines or algorithms just from a natural-language description of what you want to programme. The result is even more impressive when we realise that AI is capable of correcting its own programming errors.

DALL-E

Leaving aside the capabilities of generating natural language indistinguishable from that written by a human, let's now take a look at the main theme of this post. One of the most amazing applications of OpenAI's AI is the solution known as DALL-E. What better way to introduce DALL-E than to ask ChatGPT what DALL-E is.

The more formal description of DALL-E, according to its own website, is as follows:

DALL·E is a 12-billion parameter version of GPT-3 trained to generate images from text descriptions. DALL-E has a diverse set of capabilities, including creating anthropomorphised versions of animals and objects, combining unrelated concepts in plausible ways, rendering text, and applying transformations to existing images.

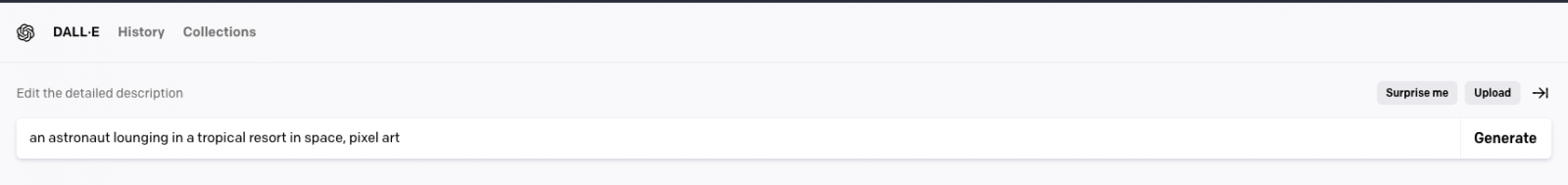

There is currently a second version of the algorithm, DALL-E 2, capable of generating more realistic and precise images with a resolution 4 times higher. The tool for trying out DALL-E is available at https://labs.openai.com/. To use it, we first need to create an OpenAI account, which gives access to all the company's tools. On the test website we can write our own text or ask the tool to generate random natural-language descriptions from which to create images. For example, by clicking the Surprise me button:

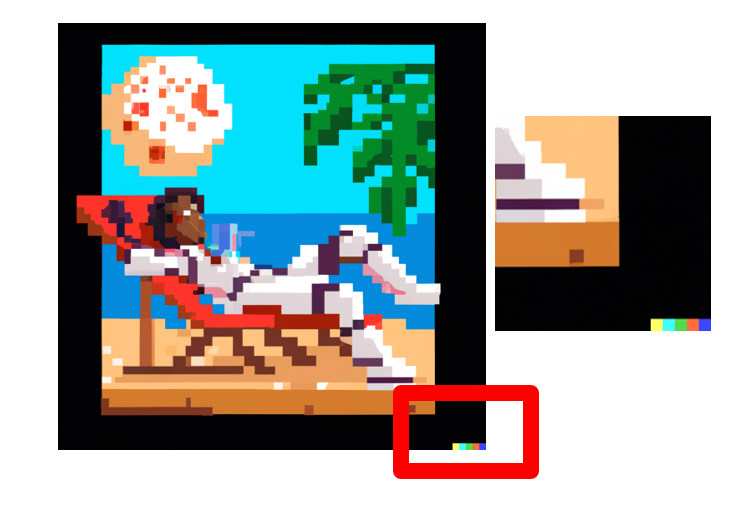

The web generates this random description for us: an astronaut lounging in a tropical resort in space, pixel art

And this is the result:

We repeat: An expressive oil painting of a basketball player dunking, depicted as an explosion of a nebula

We can assure you that the exercise is somewhat addictive and we admit that some of us have spent hours of our weekends playing with the descriptions and waiting, over and over again, for the amazing result.

About DALL-E 2 training

DALL-E 2 (arXiv:2204.06125) is a refined version of the original DALL-E system (arXiv:2102.12092). To train the original DALL-E model, which contains 12 billion parameters, a set of 250 million text-image pairs was used (publicly available online). This data set is a mixture of several prior datasets comprising: Conceptual Captions by Google; Wikipedia's text-image pairs and a filtered subset of YFCC100M.

DALL-E 2 trivia

Beyond the tests that we can run to generate our own images, there are some interesting details worth knowing. OpenAI has created a specific GitHub repository which describes the risks and limitations of DALL-E. The repository reports, for example, that, for the time being, the use of DALL-E is limited to non-commercial purposes, so it is not possible to make any commercial use of the images generated. In other words, they cannot be sold or licensed under any circumstances. In this regard, all the images generated by DALL-E include a distinctive mark that lets you know that they have been generated by AI. In the GitHub repository we can also find plenty of information about the generation of explicit content, the risks related to the bias that AI can introduce into the generation of images and the inappropriate uses of DALL-E, such as the harassment, bullying or exploitation of individuals.

Along national lines, MarIA

Along national lines, after months of tests and adjustments, MarIA, the first supermassive artificial intelligence, has seen the light of day. It was trained with open data from the web archives of the National Library of Spain (BNE), thanks to the computing resources of the National Supercomputing Centre. With regard to this post, MarIA has been trained using the GPT-2 algorithm, which we talked about many months ago in this space. To carry out the MarIA training, 135 billion words from the National Library's documentary bank were used, with a total volume of 570 gigabytes of information.

Conclusions

As the days and weeks go by since the general opening of the OpenAI APIs and tools, there has been a torrent of publications in all kinds of media, social media and specialised blogs about the capabilities and possibilities of ChatGPT and DALL-E. I don't think that at this time anyone is capable of predicting the potential commercial, scientific and social applications of this technology. What is clear is that many of us think that OpenAI has shown only a sample of what it is capable of, and it seems that we may be on the verge of a historic milestone in the development of AI after many years of inflated expectations and unfulfilled promises. We will continue to report on the progress of GPT-3 but, for the time being, all we can do is keep enjoying, playing and learning with the simple tools that we have at our disposal!

Content prepared by Alejandro Alija, an expert in Digital Transformation.

The contents and points of view reflected in this publication are the sole responsibility of its author.

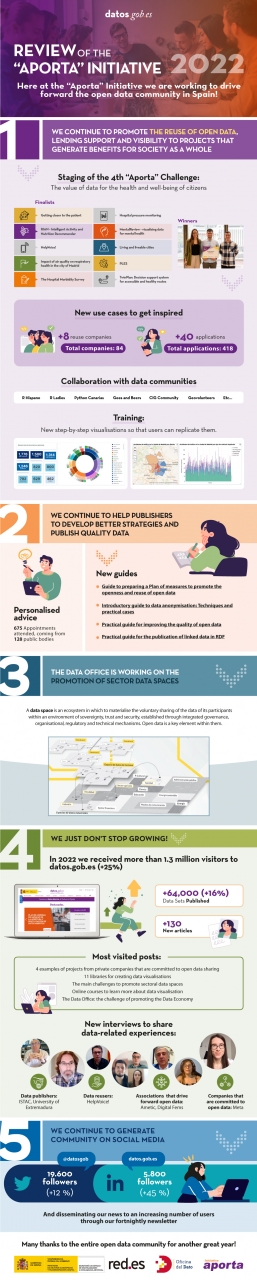

Just a few days before the end of 2022, we’d like to take this opportunity to take stock of the year that is drawing to a close, a period during which the open data community has not stopped growing in Spain and in which we have joined forces with the Data Office. This unit is responsible for boosting the management, sharing and use of data throughout all the production sectors of the Spanish economy and society, focusing its efforts in particular on promoting spaces for sharing and making use of sectoral data.

It is precisely thanks to the incorporation of the Data Office under the Aporta Initiative that we have been able to double the dissemination effect and promote the role that open data plays in the development of the data economy.

Concurrently, during 2022 we have continued working to bring open data closer to the public, the professional community and public administrations. Thus, and with the aim of promoting the reuse of open data for social purposes, we have once again organised a new edition of the Aporta Challenge.

Focusing on the health and well-being of citizens, the fourth edition of this competition featured three winners of the very highest level and the common denominator of their digital solutions is to improve the physical and mental health of people, thanks to services developed with open data.

New examples of use cases and step-by-step visualisations

In turn, throughout this year we have continued to characterise new examples of use cases that help to increase the catalogue of open data reuse companies and applications. With the new admissions, datos.gob.es already has a catalogue of 84 reuse companies and a total of 418 applications developed from open data. Of the latter, more than 40 were identified in 2022.

Furthermore, since we inaugurated the step-by-step visualisations section last year, we have continued to explore their potential so that users can be inspired and easily replicate the examples.

Reports, guides and audio-visual material to promote the use of open data

To continue providing advice to the communities of open data publishers and reusers, another of the mainstays in 2022 has been a focus on offering innovative reports on the latest trends in artificial intelligence and other emerging technologies, as well as the development of guides, infographics and videos which foster an up-close knowledge of new use cases and trends related to open data.

Some of the most frequently read articles at the datos.gob.es portal have been '4 examples of projects by private companies that are committed to open data sharing', 'How is digital transformation evolving in Spain?' and 'The main challenges to promote sectoral data spaces', among others. As far as the interviews are concerned, we would highlight those held with the winners of the 4th “Aporta” Challenge, with Hélène Verbrugghe, Public Policy Manager for Spain and Portugal of Meta, and with Alberto González Yanes, Head of the Economic Statistics Service of the Canary Islands Institute of Statistics (ISTAC), among others.

Finally, we would like to thank the open data community for its support for another year. During 2022, we have managed to ensure that the National Data Catalogue exceeds 64,000 published data sets. In addition, datos.gob.es has received more than 1,300,000 visits, 25% more than in 2021, and the profiles of datos.gob.es on LinkedIn and Twitter have grown by 45% and 12%, respectively.

Here at datos.gob.es and the Data Office we are taking on this new year full of enthusiasm and a desire to work so that open data keep making progress in Spain through publishers and reusers.

Here's to a highly successful 2023!

If you’d like to see the infographic in full size you can click here.

** In order to access the links included in the image itself, please download the pdf version available below.

In this post we have described step-by-step a data science exercise in which we try to train a deep learning model with a view to automatically classifying medical images of healthy and sick people.

Diagnostic imaging has been around for many years in the hospitals of developed countries; however, there has always been a strong dependence on highly specialised personnel, from the technician who operates the instruments to the radiologist who interprets the images. With our current analytical capabilities, we are able to extract numerical measures such as volume, dimension, shape and growth rate (among others) from image analysis. Throughout this post we will try to explain, through a simple example, the power of artificial intelligence models to expand human capabilities in the field of medicine.

This post explains the practical exercise (Action section) associated with the report “Emerging technologies and open data: introduction to data science applied to image analysis”. Said report introduces the fundamental concepts that allow us to understand how image analysis works, detailing the main application cases in various sectors and highlighting the role of open data in their implementation.

Previous projects

However, we could not have prepared this exercise without the prior work and effort of other data science lovers. Below we have provided you with a short note and the references to these previous works.

- This exercise is an adaptation of the original project by Michael Blum on the STOIC2021 - COVID-19 AI challenge. Michael's original project was based on a set of images of patients with COVID-19 pathology, along with other healthy patients to serve as a comparison.

- In a second approach, Olivier Gimenez used a data set similar to that of the original project, published in a Kaggle competition. This new dataset (250 MB) was considerably more manageable than the original one (280 GB). The new dataset contained just over 1,000 images of healthy and sick patients. Olivier's project code can be found at the following repository.

Datasets

In our case, inspired by these two amazing previous projects, we have built an educational exercise based on a series of tools that facilitate the execution of the code and the possibility of examining the results in a simple way. The original data set (chest x-ray) comprises 112,120 x-ray images (front view) from 30,805 unique patients. The images are accompanied by the associated labels of fourteen diseases (where each image can have multiple labels), extracted from associated radiological reports using natural language processing (NLP). From the original set of medical images we have extracted (using some scripts) a smaller, delimited sample (only healthy people compared with people with just one pathology) to facilitate this exercise. In particular, the chosen pathology is pneumothorax.
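The selection step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the scripts used for the exercise: the metadata rows, the file names, the "|" separator between labels and the "No Finding" spelling are all hypothetical.

```python
# Hypothetical metadata rows: (image file name, labels separated by "|").
# The separator and the label spellings are assumptions made for this sketch.
records = [
    ("img_001.png", "No Finding"),
    ("img_002.png", "Pneumothorax"),
    ("img_003.png", "Pneumothorax|Effusion"),
    ("img_004.png", "Cardiomegaly"),
    ("img_005.png", "No Finding"),
]


def select_sample(rows, pathology="Pneumothorax", healthy="No Finding"):
    """Keep healthy images and images whose only label is the chosen pathology."""
    selected = {healthy: [], pathology: []}
    for file_name, label_field in rows:
        labels = label_field.split("|")
        # Images with several labels, or with another single pathology, are skipped.
        if labels == [healthy] or labels == [pathology]:
            selected[labels[0]].append(file_name)
    return selected


sample = select_sample(records)
print(sample)
```

Images carrying more than one label are discarded, so each remaining image belongs to exactly one of the two categories used in the exercise.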

If you want further information about the field of natural language processing, you can consult the following report which we already published at the time. Also, in the post 10 public data repositories related to health and wellness the NIH is referred to as an example of a source of quality health data. In particular, our data set is publicly available here.

Tools

To carry out the prior processing of the data (work environment, programming and drafting), R (version 4.1.2) and RStudio (2022-02-3) were used. The small scripts that help download and sort files were written in Python 3.

Accompanying this post, we have created a Jupyter notebook with which to experiment interactively through the different code snippets that our example develops. The purpose of this exercise is to train an algorithm to automatically classify a chest X-ray image into two categories (sick person vs. non-sick person). To make the exercise easier for readers who wish to carry it out, we have prepared the Jupyter notebook in the Google Colab environment, which contains all the necessary elements to reproduce the exercise step-by-step. Google Colab or Colaboratory is a free Google tool that allows you to programme and run code in Python (and also in R) without the need to install any additional software. It is an online service and to use it you only need to have a Google account.

Logical flow of data analysis

Our Jupyter Notebook carries out the following differentiated activities which you can follow in the interactive document itself when you run it on Google Colab.

- Installing and loading dependencies.

- Setting up the work environment.

- Downloading, uploading and pre-processing of the necessary data (medical images) in the work environment.

- Pre-visualisation of the loaded images.

- Data preparation for algorithm training.

- Model training and results.

- Conclusions of the exercise.

Next, we carry out a didactic review of the exercise, focusing our explanations on those activities that are most relevant to the data analysis exercise:

- Description of data analysis and model training

- Modelling: creating the set of training images and model training

- Analysis of the training result

- Conclusions

Description of data analysis and model training

The first steps that we will find going through the Jupyter notebook are the activities prior to the image analysis itself. As in all data analysis processes, it is necessary to prepare the work environment and load the necessary libraries (dependencies) to execute the different analysis functions. The most representative R package of this set of dependencies is Keras. In this article we have already commented on the use of Keras as a Deep Learning framework. Additionally, the following packages are also required: httr; tidyverse; reshape2; patchwork.

Then we have to download to our environment the set of images (data) we are going to work with. As we have previously commented, the images are in remote storage and we only download them to Colab at the time we analyse them. After executing the code sections that download and unzip the work files containing the medical images, we will find two folders (No-finding and Pneumothorax) that contain the work data.

Once we have the work data in Colab, we must load them into the memory of the execution environment. To this end, we have created a function that you will see in the notebook called process_pix(). This function will search for the images in the previous folders and load them into the memory, in addition to converting them to grayscale and normalising them all to a size of 100x100 pixels. In order not to exceed the resources that Google Colab provides us with for free, we limit the number of images that we load into memory to 1000 units. In other words, the algorithm will be trained with 1000 images, including those that it will use for training and those that it will use for subsequent validation.
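To make these preprocessing steps more concrete, here is a minimal Python sketch. It is not the notebook's process_pix() function, which is written in R. The helper names, the luminance weights and the nearest-neighbour resizing are assumptions chosen to keep the example dependency-free.

```python
MAX_IMAGES = 1000   # cap on images loaded into memory, as stated in the post
TARGET_SIZE = 100   # images are normalised to 100x100 pixels


def to_grayscale(rgb_image):
    """Convert rows of (r, g, b) tuples into rows of luminance values in [0, 1]."""
    return [
        [(0.299 * r + 0.587 * g + 0.114 * b) / 255.0 for (r, g, b) in row]
        for row in rgb_image
    ]


def resize_nearest(image, size=TARGET_SIZE):
    """Resize a 2D list to size x size using nearest-neighbour sampling."""
    height, width = len(image), len(image[0])
    return [
        [image[y * height // size][x * width // size] for x in range(size)]
        for y in range(size)
    ]


def preprocess(images, limit=MAX_IMAGES):
    """Grayscale, resize and cap the number of images kept in memory."""
    return [resize_nearest(to_grayscale(img)) for img in images[:limit]]


# Tiny synthetic 2x2 RGB image standing in for an X-ray file (hypothetical data).
demo = [[(0, 0, 0), (255, 255, 255)], [(255, 255, 255), (0, 0, 0)]]
processed = preprocess([demo] * 3, limit=2)
print(len(processed), len(processed[0]), len(processed[0][0]))
```

In practice an image library would read the files from the two folders; the point here is only the order of operations: convert to grayscale, resize to a fixed shape and stop once the limit is reached.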

Once we have the images perfectly classified, formatted and loaded into memory, we carry out a quick visualisation to verify that they are correct. We obtain the following results:

Self-evidently, in the eyes of a non-expert observer, there are no significant differences that allow us to draw any conclusions. In the steps below we will see how the artificial intelligence model actually has a better clinical eye than we do.

Modelling

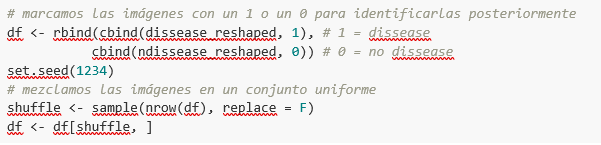

Creating the training image set

As we mentioned in the previous steps, we have a set of 1000 starting images loaded in the work environment. So far, these images have been classified (by an x-ray specialist) into patients with signs of pneumothorax (on the path "./data/Pneumothorax") and healthy patients (on the path "./data/No-Finding").

The aim of this exercise is precisely to demonstrate the capacity of an algorithm to assist the specialist in the classification (or detection of signs of disease in the x-ray image). With this in mind, we have to mix the images to achieve a homogeneous set that the algorithm will have to analyse and classify using only their characteristics. The following code snippet associates an identifier (1 for sick people and 0 for healthy people) so that, later, after the algorithm's classification process, it is possible to verify those that the model has classified correctly or incorrectly.

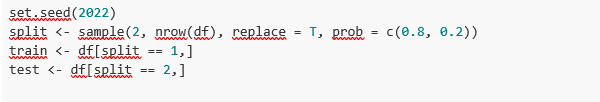

So, now we have a uniform “df” set of 1000 images mixed with healthy and sick patients. Next, we split this original set into two. We are going to use 80% of the original set to train the model. In other words, the algorithm will use the characteristics of the images to create a model that allows us to conclude whether an image matches the identifier 1 or 0. On the other hand, we are going to use the remaining 20% of the homogeneous mixture to check whether the model, once trained, is capable of taking any image and assigning it 1 or 0 (sick, not sick).
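The labelling, mixing and 80/20 split described above could look roughly like the following Python sketch. The notebook does this in R, so the function names here are hypothetical. The 500/500 balance between sick and healthy images and the fixed random seed are also assumptions for illustration.

```python
import random


def build_dataset(sick_images, healthy_images, seed=42):
    """Attach identifiers (1 = sick, 0 = healthy) and shuffle the combined set."""
    labelled = [(img, 1) for img in sick_images] + [(img, 0) for img in healthy_images]
    rng = random.Random(seed)  # fixed seed so the shuffle is reproducible
    rng.shuffle(labelled)
    return labelled


def train_validation_split(dataset, train_fraction=0.8):
    """Use the first 80% for training and keep the remaining 20% for validation."""
    cut = int(len(dataset) * train_fraction)
    return dataset[:cut], dataset[cut:]


# Placeholder names stand in for the image arrays loaded earlier.
sick = [f"pneumothorax_{i}" for i in range(500)]
healthy = [f"no_finding_{i}" for i in range(500)]

df = build_dataset(sick, healthy)
train, validation = train_validation_split(df)
print(len(train), len(validation))
```

Shuffling before splitting matters: without it, the validation set would contain images from only one of the two folders.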

Model training

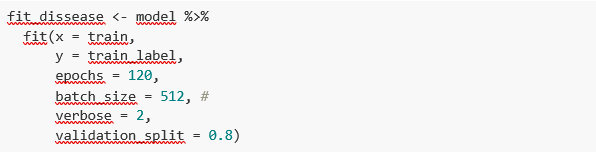

Right, now all we have left to do is to configure the model and train with the previous data set.

Before training, you will see some code snippets which are used to configure the model that we are going to train. The model we are going to train is of the binary classifier type. This means that it is a model that is capable of classifying the data (in our case, images) into two categories (in our case, healthy or sick). The model selected is called CNN or Convolutional Neural Network. Its very name already tells us that it is a neural networks model and thus falls under the Deep Learning discipline. These models are based on layers of data features that get deeper as the complexity of the model increases. We would remind you that the term deep refers precisely to the depth of the number of layers through which these models learn.
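As a rough intuition for what a convolutional layer computes, the following Python sketch applies a single hand-picked filter to a toy image and squashes the result into a value between 0 and 1. It is not the notebook's Keras model: a real CNN stacks many such filters and learns their values during training, and the kernel and bias below are assumptions chosen only for illustration.

```python
import math


def conv2d(image, kernel):
    """Valid 2D convolution (cross-correlation, as used in deep learning libraries)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[y + i][x + j] * kernel[i][j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu(feature_map):
    """Keep positive activations and set negative ones to zero."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def sigmoid(z):
    """Map any real number to (0, 1), the usual output of a binary classifier."""
    return 1.0 / (1.0 + math.exp(-z))


# 4x4 toy image with a vertical edge, and a filter that responds to that edge.
image = [[0, 0, 1, 1] for _ in range(4)]
kernel = [[-1, 1], [-1, 1]]

features = relu(conv2d(image, kernel))
score = sigmoid(sum(sum(row) for row in features) - 3.0)  # -3.0 is a made-up bias
print(features, score)
```

The filter produces a strong response only where the dark-to-bright edge sits, which is the kind of local feature that deeper layers combine into more complex patterns.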

Note: the following code snippets are the most technical in the post. Introductory documentation can be found here, whilst all the technical documentation on the model's functions is accessible here.

Finally, after configuring the model, we are ready to train the model. As we mentioned, we train with 80% of the images and validate the result with the remaining 20%.

Training result

Well, now we have trained our model. So what's next? The graphs below provide a quick visualisation of how the model behaves on the images that we have reserved for validation. Basically, these figures (in particular the one in the lower panel) represent the capability of the model to predict the presence (identifier 1) or absence (identifier 0) of disease (in our case pneumothorax). The conclusion is that when the model trained with the training images (those for which the result 1 or 0 is known) is applied to the 20% of the images held back for validation, the model is correct approximately 87% (0.87309) of the time.

Indeed, when we request the evaluation of the model to know how well it classifies diseases, the result indicates the capability of our newly trained model to correctly classify 0.87309 of the validation images.

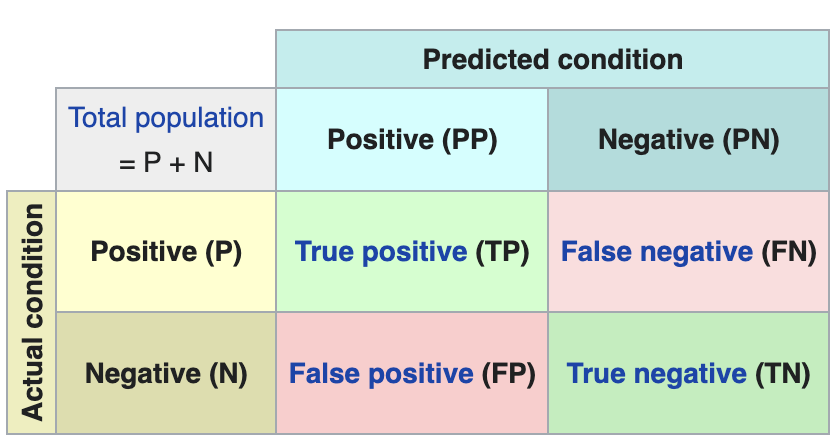

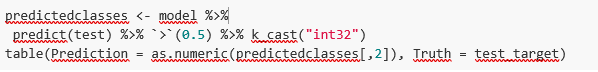

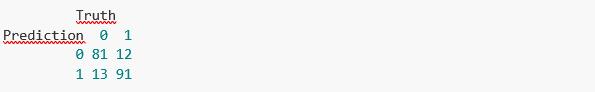

Now let’s make some predictions about patient images. In other words, once the model has been trained and validated, we wonder how it is going to classify the images that we are going to give it now. As we know "the truth" (what is called the ground truth) about the images, we compare the result of the prediction with the truth. To check the results of the prediction (which will vary depending on the number of images used in the training) we use that which in data science is called the confusion matrix. The confusion matrix:

- Places in position (1,1) the cases that DID have disease and the model classifies as "with disease"

- Places in position (2,2), the cases that did NOT have disease and the model classifies as "without disease"

In other words, these are the positions in which the model "hits" its classification.

The opposite positions, (1,2) and (2,1), are those in which the model is "wrong". Position (1,2) holds the cases that the model classifies as WITH disease when in reality they were healthy patients. Position (2,1) holds the very opposite: sick patients that the model classifies as healthy.

Explanatory example of how the confusion matrix works. Source: Wikipedia https://en.wikipedia.org/wiki/Confusion_matrix

In our exercise, the model gives us the following results:

In other words, 81 patients had this disease and the model classifies them correctly. Similarly, 91 patients were healthy and the model also classifies them correctly. However, the model classifies as sick 13 patients who were healthy. Conversely, the model classifies 12 patients who were actually sick as healthy. When we add the model's hits (81 + 91) and divide the sum by the total validation sample (197 images), we obtain 87% accuracy.
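The arithmetic above can be reproduced with a short Python sketch. It follows the layout described in this post (rows follow the model's prediction, columns the ground truth) and rebuilds label lists that match the reported counts. The function names are hypothetical and this is not the notebook's own code.

```python
def confusion_matrix(predicted, actual):
    """Rows follow the prediction, columns the ground truth; index 0 = sick, 1 = healthy."""
    matrix = [[0, 0], [0, 0]]
    for p, a in zip(predicted, actual):
        row = 0 if p == 1 else 1
        col = 0 if a == 1 else 1
        matrix[row][col] += 1
    return matrix


def accuracy(matrix):
    """Share of hits (the diagonal) over the whole validation sample."""
    hits = matrix[0][0] + matrix[1][1]
    total = sum(sum(row) for row in matrix)
    return hits / total


# Label lists rebuilt from the counts reported in the post (1 = sick, 0 = healthy).
predicted = [1] * 81 + [0] * 91 + [1] * 13 + [0] * 12
actual = [1] * 81 + [0] * 91 + [0] * 13 + [1] * 12

cm = confusion_matrix(predicted, actual)
print(cm)                        # [[81, 13], [12, 91]]
print(f"{accuracy(cm):.4f}")     # 0.8731
```

The diagonal holds the 172 hits and the off-diagonal cells hold the 25 errors, so the accuracy is 172 / 197.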

Conclusions

In this post we have guided you through a didactic exercise consisting of training an artificial intelligence model to carry out chest x-ray imaging classifications with the aim of determining automatically whether someone is sick or healthy. For the sake of simplicity, we have chosen healthy patients and patients with pneumothorax (only two categories) previously diagnosed by a doctor. The journey we have taken gives us an insight into the activities and technologies involved in automated image analysis using artificial intelligence. The result of the training affords us a reasonable classification system for automatic screening with 87% accuracy in its results. Algorithms and advanced image analysis technologies are, and will increasingly be, an indispensable complement in multiple fields and sectors, such as medicine. In the coming years, we will see the consolidation of systems which naturally combine the skills of humans and machines in expensive, complex or dangerous processes. Doctors and other workers will see their capabilities increased and strengthened thanks to artificial intelligence. The joining of forces between machines and humans will allow us to reach levels of precision and efficiency never seen before. We hope that through this exercise we have helped you to understand a little more about how these technologies work. Don't forget to complete your learning with the rest of the materials that accompany this post.

Content prepared by Alejandro Alija, an expert in Digital Transformation. The contents and points of view reflected in this publication are the sole responsibility of its author.

We present a new report in the series 'Emerging Technologies and Open Data', by Alejandro Alija. The aim of these reports is to help the reader understand how various technologies work, what role open data plays in them and what impact they will have on our society. This series includes monographs on data analysis techniques such as natural language analysis and predictive analytics. This new volume of the series analyzes the key aspects of data analysis applied to images and delves into the more practical side of the monograph through the exercise 'Artificial Intelligence applied to the identification and classification of diseases by means of medical radio imaging'.

Image analysis goes by different names. Some of the most common are visual analytics, computer vision or image processing. This type of analysis is highly relevant nowadays, since many of the most modern algorithmic techniques of artificial intelligence have been designed specifically for this purpose. Some of its applications can be seen in our daily lives, such as the identification of license plates to access a parking lot or the digitization of scanned text so that it can be manipulated.

The report introduces the fundamental concepts that allow us to understand how image analysis works, detailing the main application cases in various sectors. After a brief introduction by the author, which will serve as a basis for contextualizing the subject matter, the full report is presented, following the traditional structure of the series:

- Awareness. The Awareness section explains the key concepts of image analysis techniques. Through this section, readers can find answers to questions such as: how are images manipulated as data, how are images classified, and discover some of the most prominent applications in image analysis.

- Inspire. The Inspire section takes a detailed look at some of the main use cases in sectors as diverse as agriculture, industry and real estate. It also includes examples of applications in the field of medicine, where the author shows some particularly important challenges in this area.

- Action. In this case, the Action section has been published in notebook format, separately from the theoretical report. It shows a practical example of Artificial Intelligence applied to the identification and classification of diseases using medical radio imaging. This post includes a step-by-step explanation of the exercise. The source code is available so that readers can learn and experiment with the intelligent analysis of images by themselves.

Below, you can download the report - Awareness and Inspire sections - in pdf and word (reusable version).

The last few months of the year are always accompanied by numerous innovations in the open data ecosystem. It is the time chosen by many organisations to stage conferences and events to show the latest trends in the field and to demonstrate their progress.

New functionalities and partnerships

Public bodies have continued to make progress in their open data strategies, incorporating new functionalities and datasets on their open data platforms. Examples include:

- On 11 November, the Ministry for the Ecological Transition and the Demographic Challenge and The Information Lab Spain presented the SIDAMUN platform (Integrated Municipal Data System). It is a data visualisation tool with interactive dashboards which show detailed information about the current status of the territory.

- The Ministry of Agriculture, Food and Fisheries has published four interactive reports to exploit more than 500 million data elements and thus provide information in a simple way about the status and evolution of the Spanish primary sector.

- The Open Data Portal of the Regional Government of Andalusia has been updated in order to promote the reuse of information, expanding the possibilities of access through APIs in a more efficient, automated way.

- The National Geographic Institute has updated the information on green routes (reconditioned railway lines) which are already available for download in KML, GPX and SHP.

- The Institute for Statistics and Cartography of Andalusia has published data on the Natural Movement of the Population for 2021, which provides information on births, marriages and deaths.

We have also seen advances made from a strategic perspective and in terms of partnerships. The Regional Ministry of Participation and Transparency of the Valencian Regional Government set in motion a participatory process to design the first action plan of the 'OGP Local' programme of the Open Government Partnership. In turn, the Government of the Canary Islands has applied for admission to the International Open Government Partnership and it will strengthen collaboration with the local entities of the islands, thereby mainstreaming the Open Government policies.

In addition, various organisations have announced news for the coming months. This is the case of Cordoba City Council, which is set to launch a new open data portal in the near future, and of Torrejon City Council, which has included in its local action plan the creation of an open data portal, as well as the promotion of the use of big data in institutions.

Open data contests, a showcase for finding talent and new use cases

During the autumn, the winners of various contests that sought to promote the reuse of open data were announced. Thanks to these contests, we have also learned of numerous cases of reuse which demonstrate open data's capacity to generate social and economic benefits.

- At the end of October we met the winners of our “Aporta” Challenge. First prize went to HelpVoice!, a service that seeks to help the elderly using speech recognition techniques based on machine learning. A web environment to facilitate the analysis and interactive visualisation of microdata from the Hospital Morbidity Survey and an app to promote healthy habits won second and third prizes, respectively.

- The winners of the ideas and applications contest of Open Data Euskadi were also announced. The winners include a smart assistant for energy saving and an app to locate free parking spaces.

- Aragon Open Data, the open data portal of the Government of Aragon, celebrated its tenth anniversary with a face-to-face datathon to prototype services that help people through portal data. The award for the most innovative solution with the greatest impact went to Certifica-Tec, a website that allows you to geographically view the status of energy efficiency certificates.

- The Biscay Open Data Datathon set out to transform Biscay based on its open data. At the end of November, the final event of the Datathon was held. The winner was Argilum, followed by Datoston.

- UniversiData launched its first datathon, whose winning projects have just been announced.

In addition, in the last few months other initiatives related to the reuse of data have been announced, such as:

- Researchers from the Technical University of Madrid have carried out a study in which they use artificial intelligence algorithms to analyse clinical data on lung cancer patients, scientific publications and open data. The aim is to obtain statistical patterns that allow the treatments to be improved.

- The Research Report 2021 that the University of Extremadura has just published was generated automatically from the open data portal. It is a document containing more than 1,200 pages which covers the research of all the departments of the centre.

- F4map is a 3D map that has been produced thanks to the open data of the OpenStreetMap collaborative community. Alternating between 2D and 3D views, it offers a detailed view of different cities, buildings and monuments from all around the world.

Dissemination of open data and their use cases through events

Autumn has also stood out for the staging of events focused on the world of data, many of which were recorded and can be viewed again online. Examples include:

- The Ministry of Justice and the University of Salamanca organised the symposium “Justice and Law in Data: The role of Data as an enabler and engine for change for the transformation of Justice and Law”. During the event, participants reflected on data as a public asset. All the presentations are available on the YouTube channel of the University.

- In October Madrid hosted a new edition of the Data Management Summit Spain. The day before, a preliminary session was held, organised in collaboration with DAMA España and the Data Office, aimed exclusively at representatives of the public administration and focused on open data and the exchange of information between administrations. This can be seen on YouTube too.

- The Barcelona Provincial Council, the Castellon Provincial Council and the Government of Aragon organised the National Open Data Meeting, with the aim of highlighting the importance of open data for territorial cohesion.

- The Iberian Conference on Spatial Data Infrastructure was held in Seville, where geographic information trends were discussed.

- A recording of the Associationism Seminars 2030, organised by the Government of the Canary Islands, can also be viewed. Among the presentations, we would highlight the one related to the 'Map of Associationism in the Canary Islands', which makes this type of data visible in an interactive way.

- ASEDIE organised the 14th edition of its International Conference on the reuse of public sector Information which featured various round tables, including one on 'The Data Economy: rights, obligations, opportunities and barriers'.

Guides and courses

During these months, guides have also been published which seek to help publishers and reusers in their work with open data. At datos.gob.es we have published documents on How to prepare a Plan of measures to promote the opening and reuse of open data, the guide Introduction to data anonymisation: Techniques and practical cases and the Practical guide for improving the quality of open data. In addition, other organisations have also published help documents such as:

- The Regional Government of Valencia has published a guide that compiles transparency obligations established by the Valencian law for public sector entities.

- The Spanish Data Protection Agency (AEPD) has translated the Singapore Data Protection Authority’s Guide to Basic Anonymisation, in view of its educational value and special interest to data protection officers. The guide is complemented by a free data anonymisation tool, which the AEPD makes available to organisations.

- The Network of Local Entities for Transparency and Citizen Participation of the FEMP has just presented the Data visualisation guide for Local Entities, a document with good practices and recommendations. The document refers to a previous work of the City Council of L'Hospitalet.

International news

During this period, we have also seen developments at the European and international level. Some of the ones we would highlight are:

- In October, the final of the EUdatathon 2022 was held. The finalist teams were previously selected from a total of 156 initial proposals.

- The European Data Portal has launched the initiative Use Case Observatory to measure the impact of open data by monitoring 30 use cases over 3 years.

- A group of scientists from the Dutch Institute for Fundamental Energy Research has created a database of 31,618 molecules thanks to algorithms trained with artificial intelligence.

- The World Bank has developed a new food and nutrition security dashboard which offers the latest global and national data.

These are just a few examples of what the open data ecosystem has produced in recent months. If you would like to share any other news with us, leave us a comment or send an e-mail to dinamizacion@datos.gob.es.

Effective equality between men and women is a common goal to be achieved as a society. This is stated by the United Nations (UN), which includes "Achieve gender equality and empower all women and girls" as one of the Sustainable Development Goals to be achieved by 2030.

For this, it is essential to have quality data that show us the reality and the situations of risk and vulnerability that women face. This is the only way to design effective policies that are more equitable and informed, in areas such as violence against women or the fight to break glass ceilings. This has led to an increasing number of organisations opening up data related to gender inequality. However, according to the UN itself, less than half of the data needed to monitor gender inequality is currently available.

What data are needed?

In order to understand the real situation of women and girls in the world, it is necessary to systematically include a gender analysis in all stages of the production of statistics. This ranges from using gender-sensitive concepts to broadening the sources of information in order to highlight phenomena that are currently not being measured.

Gender data does not only refer to sex-disaggregated data. Data also need to be based on concepts and definitions that adequately reflect the diversity of women and men, capturing all aspects of their lives and especially those areas that are most susceptible to inequalities. In addition, data collection methods need to take into account stereotypes and social and cultural factors that may induce gender bias in the data.
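As a minimal sketch of the first step mentioned above, disaggregating by sex, the following example shows how an overall indicator can hide a gap that only appears once the data is split. The records, field names and resulting rates are hypothetical values invented for illustration and are not real statistics.

```python
from collections import defaultdict

# Hypothetical survey records (illustrative values only, not real statistics).
records = [
    {"sex": "female", "employed": True},
    {"sex": "female", "employed": False},
    {"sex": "female", "employed": False},
    {"sex": "female", "employed": True},
    {"sex": "male", "employed": True},
    {"sex": "male", "employed": True},
    {"sex": "male", "employed": True},
    {"sex": "male", "employed": False},
]


def employment_rate(rows):
    """Share of rows where the person is employed."""
    return sum(row["employed"] for row in rows) / len(rows)


def rate_by_sex(rows):
    """Group the rows by sex and compute the employment rate of each group."""
    groups = defaultdict(list)
    for row in rows:
        groups[row["sex"]].append(row)
    return {sex: employment_rate(group) for sex, group in groups.items()}


overall = employment_rate(records)
by_sex = rate_by_sex(records)

print("overall:", overall)
print("by sex:", by_sex)
```

Disaggregation of this kind is only a starting point: as the text notes, the concepts being measured and the way the data is collected also need to be free of gender bias.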

Resources for gender mainstreaming in data

At datos.gob.es we have already addressed this issue in other articles, providing some initial guidance on the creation of datasets with a gender perspective, but more and more organisations are becoming involved in this area, producing materials that can help to close this data gap.

The UN Statistics Division produced the report “Integrating a Gender Perspective into Statistics” to provide the methodological and analytical information needed to improve the availability, quality and use of gender statistics. The report focuses on 10 themes: education; work; poverty; environment; food security; power and decision-making; population, households and families; health; migration, displaced persons and refugees; and violence against women. For each theme, the report details the gender issues to be addressed, the data needed to address them, data sources to be considered, and specific conceptual and measurement issues. The report also discusses in a cross-cutting manner how to generate surveys, conduct data analysis or generate appropriate visualisations.

UN agencies are also working on this issue in their various areas of action. For example, UNICEF has developed guides of interest such as “Gender statistics and administrative data systems”, which compiles resources such as conceptual and strategic frameworks, practical tools and use cases, among others.

Another example is the World Bank. This organisation has a gender-sensitive data portal, where it offers indicators and statistics on various aspects such as health, education, violence or employment. The data can be downloaded in CSV or Excel and accessed through an API, and it is also presented through narratives and visualisations, which make it easier to understand. This portal also includes a section where tools and guidelines are compiled to improve the collection, use and dissemination of gender statistics. These materials are focused on specific sectors, such as agri-food or domestic work. It also has a section on courses, where we can find, among others, training on how to communicate and use gender statistics.

Initiatives in Spain

If we focus on our country, we also find very interesting initiatives. We have already talked about GenderDataLab.org, a repository of open data with a gender perspective. Its website also includes guides on how to generate and share these datasets. If you want to know more about this project, we invite you to watch this interview with Thais Ruiz de Alda, founder and CEO of Digital Fems, one of the entities behind this initiative.

In addition, an increasing number of agencies are implementing mechanisms to publish gender-sensitive datasets. The Government of the Canary Islands has created the web tool “Canary Islands in perspective” to bring together different statistical sources and provide a scorecard with data disaggregated by sex, which is continuously updated. Another project worth mentioning is the “Women and Men in the Canary Islands” website, the result of a statistical operation designed by the Canary Islands Statistics Institute (ISTAC) in collaboration with the Canary Islands Institute for Equality. It compiles information from different statistical operations and analyses it from a gender perspective.

The Government of Catalonia has also included this issue in its Government Plan. In the report "Prioritisation of open data relating to gender inequality for the Government of Catalonia" they compile bibliography and local and international experiences that can serve as inspiration for both the publication and use of this type of datasets. The report also proposes a series of indicators to be taken into account and details some datasets that need to be opened up.

These are just a few examples that show the commitment of civil associations and public bodies in this area. It is a field in which we must continue to work in order to obtain the data needed to assess the real situation of women in the world and thus design political solutions that will enable a fairer world for all.

The first National Open Data Meeting will take place in Barcelona on 21 November. It is an initiative promoted and co-organised by the Barcelona Provincial Council, the Government of Aragon and the Provincial Council of Castellón, with the aim of identifying and developing specific proposals to promote the reuse of open data.

This first meeting will focus on the role of open data in developing territorial cohesion policies that contribute to overcoming the demographic challenge.

Agenda

The day will begin at 9:00 a.m. and will last until 6:00 p.m.

After the opening, which will be given by Marc Verdaguer, Deputy for Innovation, Local Governments and Territorial Cohesion of the Barcelona Provincial Council, there will be a keynote speech, where Carles Ramió, Vice-Rector for Institutional Planning and Evaluation at Pompeu Fabra University, will present the context of the subject.

Then, the event will be divided into four sessions where the following topics will be discussed:

- 10:30 a.m. State of the art: lights and some shadows of opening and reusing data.

- 12:30 p.m. What does society need and expect from public administrations' open data portals?

- 3:00 p.m. Local commitment to fight against depopulation through open data.

- 4:30 p.m. What can Public Administrations do using their data to jointly fight depopulation?

Experts linked to various open data initiatives, public organisations and business associations will participate in the conference. Specifically, the Aporta Initiative will participate in the first session, where the challenges and opportunities of the use of open data will be discussed.

The importance of addressing the demographic challenge

The conference will address how the ageing of the population, the geographical isolation that hinders access to health, administrative and educational centres and the loss of economic activity affect the smaller municipalities, both rural and urban. This situation has major repercussions on the sustainability and supply of the whole country, as well as on the preservation of culture and diversity.

A few months ago, Facebook surprised us all with a name change: it became Meta. This change alludes to the concept of "metaverse" that the brand wants to develop, uniting the real and virtual worlds, connecting people and communities.

Among the initiatives within Meta is Data for Good, which focuses on sharing data while preserving people's privacy. Helene Verbrugghe, Public Policy Manager for Spain and Portugal at Meta, spoke to datos.gob.es to tell us more about data sharing and its usefulness for the advancement of the economy and society.

Full interview:

1. What types of data are provided through the Data for Good Initiative?

Meta's Data For Good team offers a range of tools including maps, surveys and data to support our 600 or so partners around the world, ranging from large UN institutions such as UNICEF and the World Health Organization, to local universities in Spain such as the Universitat Politècnica de Catalunya and the University of Valencia.

To support the international response to COVID-19, data such as those included in our Movement Range Maps have been used extensively to measure the effectiveness of stay-at-home measures, and our COVID-19 Trends and Impact Survey has been used to understand issues such as vaccine hesitancy and to inform outreach campaigns. Other tools, such as our high-resolution population density maps, have been used to develop rural electrification plans and five-year water and sanitation investments in places such as Rwanda and Zambia. We also have AI-based poverty maps that have helped extend social protection in Togo and an international social connectivity index that has been useful for understanding cross-border trade and financial flows. Finally, we have recently worked to support groups such as the International Federation of the Red Cross and the International Organization for Migration in their response to the Ukraine crisis, providing aggregated information on the volumes of people leaving the country and arriving in places such as Poland, Germany and the Czech Republic.

Privacy is built into all our products by default; we aggregate and de-identify information from Meta platforms, and we do not share anyone's personal information.

2. What is the value for citizens and businesses? Why is it important for private companies to share their data?

Decision-making, especially in public policy, requires information that is as accurate as possible. As more people connect and share content online, Meta provides a unique window into the world. The reach of Facebook's platform across billions of people worldwide allows us to help fill key data gaps. For example, Meta is uniquely positioned to understand what people need in the first hours of a disaster or in the public conversation around a health crisis - information that is crucial for decision-making but was previously unavailable or too expensive to collect in time.

For example, to support the response to the crisis in Ukraine, we can provide up-to-date information on population changes in neighbouring countries in near real-time, faster than other estimates. We can also collect data at scale by promoting Facebook surveys such as our COVID-19 Trends and Impact Survey, which has been used to better understand how mask-wearing behaviour will affect transmission in 200 countries and territories around the world.

3. The information shared through Data for Good is anonymised, but what is the process like? How is the security and privacy of user data guaranteed?

Data For Good respects the choices of Facebook users. For example, all Data For Good surveys are completely voluntary. For location data used for Data For Good maps, users can choose whether they want to share that information from their location history settings.

We also strive to share how we protect privacy by publishing blogs about our methods and approaches. For example, you can read about our differential privacy approach to protecting mobility data used in the response to COVID-19 here.
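To give an intuition for what a differential privacy approach involves, here is a minimal sketch of the classic Laplace mechanism applied to aggregated counts. It is a textbook illustration, not Meta's actual method: the tile names, counts, `epsilon` value and function names are hypothetical, and the sketch assumes each person contributes to at most one count (sensitivity of 1).

```python
import random


def laplace_noise(scale, rng):
    """Draw one sample from a Laplace(0, scale) distribution.

    The difference of two independent exponential samples with mean `scale`
    follows a Laplace distribution with that scale.
    """
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_counts(counts, epsilon, sensitivity=1.0, seed=None):
    """Add Laplace noise to each aggregated count (the Laplace mechanism).

    A smaller epsilon means more noise and therefore stronger privacy.
    """
    rng = random.Random(seed)
    scale = sensitivity / epsilon
    return {key: count + laplace_noise(scale, rng) for key, count in counts.items()}


# Hypothetical aggregated counts of people per map tile (made-up values).
tile_counts = {"tile_a": 1200, "tile_b": 35, "tile_c": 480}

# The seed is fixed here only to make the illustration reproducible.
noisy = private_counts(tile_counts, epsilon=0.5, seed=42)
for tile, value in noisy.items():
    print(tile, round(value, 1))
```

The noise barely changes large counts but can noticeably alter small ones, which is the intended trade-off: the presence or absence of a single individual becomes hard to infer from the published figures.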

4. What other challenges have you encountered in setting up an initiative of this kind and how have you overcome them?

When we started Data For Good, the vast majority of our datasets were only available through a licensing agreement, which was a cumbersome process for some partners and unfeasible for many governments. However, at the onset of the COVID-19 pandemic, we realised that, in order to operate at scale, we would need to make more of our work publicly available, while incorporating stringent measures, such as differential privacy, to ensure security. In recent years, most of our datasets have been made public on platforms such as the Humanitarian Data Exchange, and through this tool and other APIs, our public tools have been queried more than 55 million times in the past year. We are proud of the move towards open source sharing, which has helped us overcome early difficulties in scaling up and meeting the demand for our data from partners around the world.

5. What are Meta's future plans for Data for Good?

Our goal is to continue to help our partners get the most out of our tools, while continuing to evolve and create new ways to help solve real-world problems. In the past year, we have focused on growing our toolkit to respond to issues such as climate change through initiatives such as our Climate Change Opinion Survey, which will be expanded this year; as well as evolving our knowledge of cross-border population flows, which is proving critical in supporting the response to the crisis in Ukraine.