The Asociación Multisectorial de la Información (ASEDIE) has published the eleventh edition of its Report on the Infomediary Sector, which reviews the health of companies that generate applications, products and/or services based on information from the public sector, the holder of the most valuable data.

Many of the datasets that enable the infomediary sector to develop solutions are included in the lists of High Value Datasets (HVDS) recently published by the European Union, an initiative that recognises the potential of public information and adds to the aim of boosting the data economy in line with the European Parliament's proposed Data Law.

ASEDIE brings together companies from different sectors that promote the data economy, which are mainly nourished by the data provided by the public sector. Among its objectives is to promote the sector and contribute to raising society's awareness of its benefits and impact. It is estimated that the data economy will generate €270 billion of additional GDP for EU Member States by 2028.

The presentation of this edition of the report, entitled 'The Data Economy in the infomediary field', took place on 22 March in Red.es's Enredadera room. This year's edition identifies 710 active companies, with a turnover of more than 2,278 million euros. The first report, in 2013, counted 444 companies, so the number of companies in the sector has grown by 60% in a decade.

Main conclusions of the report

- The infomediary sector has grown by 12.1%, a figure above the national GDP growth of 7.6%. These data are drawn from the analysis of the 472 companies (66% of the sample) that submitted their accounts for the previous years.

- The number of employees is 22,663. Workers are highly concentrated in a few companies: 62% of the companies have fewer than 10 workers. The subsector with the highest number of professionals is Geographic information, with 30% of the total. Together with the sub-sectors Financial economics, Technical consultancy and Market research, it accounts for 75% of the employees.

- Employment in the companies of the Infomediary Sector grew by 1.7%, compared to a fall of 1.1% in the previous year. All sub-sectors have grown, except for Tourism and Culture, which have remained the same, and Technical Consultancy and Market Research, which have decreased.

- The average turnover per employee exceeded 100,000 euros, an increase of 6.6% compared to the previous year. On the other hand, the average expenditure per employee was 45,000 euros.

- The aggregate turnover was 2,279,613,288 euros. The Geographical Information and Economic and Financial subsectors account for 46% of sales.

- The aggregate subscribed capital is 250,115,989 euros. The three most capitalised subsectors are Market Research, Economic and Financial and Geographic Information, which account for 66% of capitalisation.

- The net result exceeds 180 million euros, 70 million more than last year. The Economic and Financial subsector accounted for 66% of total profits.

- The sub-sectors of Geographical Information, Market Research, Financial Economics and Computer Infomediaries account for 76% of the infomediary companies, with a total of 540 companies out of the 710 active companies.

- The Community of Madrid is home to the largest number of companies in the sector, with 39%, followed by Catalonia (13%), Andalusia (11%) and the Community of Valencia (9%).

As the report shows, the arrival of new companies is driving the development of a sector that already has a turnover of around 2,300 million euros per year, and which is growing at a higher rate than other macroeconomic indicators in the country. These data show not only that the Infomediary Sector is in good health, but also its resilience and growth potential.

Progress of the Study on the impact of open data in Spain

The report also includes the results of a survey of the different actors that make up the data ecosystem, in collaboration with the Faculty of Information Sciences of the Complutense University of Madrid. This survey is presented as the first exercise of a more ambitious study that aims to know the impact of open data in Spain and to identify the main barriers to its access and availability. To this end, a questionnaire has been sent to members of the public, private and academic sectors. Among the main conclusions of this first survey, we can highlight the following:

- As main barriers to publishing information, 65% of respondents from the public sector mentioned lack of human resources, 39% lack of political leadership and 38% poor data quality.

- For public sector respondents, the biggest obstacle to accessing public data for re-use is that the information provided in the data is not homogeneous (41.9%). Respondents from the academic sector point to the lack of data quality (43%), while those from the private sector consider the main barrier to be the lack of updating (49%).

- In terms of the frequency of use of public data, 63% of respondents say that they use the data every day or at least once a week.

- 61% of respondents use the data published on the datos.gob.es portal.

- Respondents overwhelmingly believe that the impact of data openness on the private sector is positive. Thus, 77% of private sector respondents indicate that accessing public data is economically viable and 89% of them say that public data enables them to develop useful solutions.

- 95% of respondents call for a compendium of regulations affecting the access, publication and re-use of public sector data.

- 27% of public sector respondents say they are not aware of the six categories of high-value data set out in Commission Implementing Regulation (EU) 2023/138.

This shows that most respondents are aware of the potential of the sector and the impact of public sector data, although they indicate that some obstacles to re-use need to be overcome and believe that a compendium of the different existing regulations would facilitate their implementation and help the development of the sector.

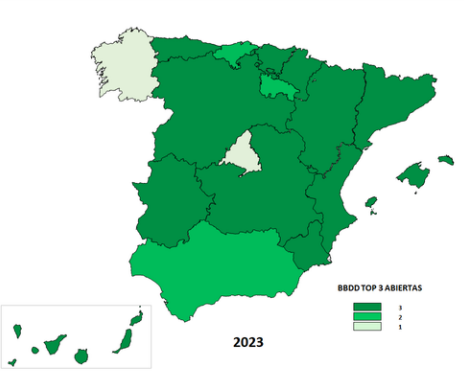

Top 3 ASEDIE

As in previous editions, the report includes the status of the Top 3 ASEDIE, an initiative that aims for all Autonomous Communities to fully open three sets of data, following unified criteria that facilitate their reuse, and which is included in the IV Open Government Plan. In 2019, the opening of the Cooperatives, Associations and Foundations databases was proposed, and there are currently 16 Autonomous Communities in which they can be accessed in full. Moreover, in eight of them, it is possible to access the NIF with a unique identifier, which improves transparency and makes the information more accurate.

Taking into account the good results of the first proposal, in 2020 a new request for opening data was launched, the Second Top 3 ASEDIE, in this case of Energy Efficiency Certificates, SAT Registries (Agricultural Transformation Companies) and Industrial Estates, whose evolution has also been very positive. The following map shows the opening situation of these three new databases in 2023.

The Top 3 ASEDIE initiative has been a success and has become a reference in the sector, promoting the opening of databases in a joint manner and demonstrating that it is possible to harmonise public data sources to put them at the service of society.

The next steps in this sense will be to monitor the databases already open and to disseminate them at all levels, including the identification of good practices of the Administration and the selection of examples to encourage public-private collaboration in open data. In addition, a new top 3 will be identified to advance in the opening of new databases, and a new initiative will be launched to reach the bodies of the General State Administration, with the identification of a new Top 3 AGE.

Success stories

The report also includes a series of success stories of products and services developed with public sector data, such as Iberinform's API Market, which facilitates access to and integration of 52 sets of company and self-employed data in company management systems. Another successful case is Geocode, a solution focused on standardisation, validation, correction, codification and geolocation processes for postal addresses in Spain and Portugal.

Geomarketing makes it possible to increase the speed of calculating geospatial data and Infoempresa.com has improved its activity reports on Spanish companies, making them more visual, complete and intuitive. Finally, Pyramid Data makes it possible to access the Energy Efficiency Certificates (EEC) of a given property portfolio.

As examples of good practices in the public sector, the ASEDIE report highlights the open statistical data as a driver of the Data Economy of the Canary Islands Statistics Institute (ISTAC) and the technology for the opening of data of the Open Data Portal of the Andalusian Regional Government (Junta de Andalucía).

As a novelty, the category of examples of good practices in the academic sector has been incorporated, which recognises the work carried out by the Geospatial Code and the Report on the state of open data in Spain III, by the Rey Juan Carlos University and FIWARE Foundation.

The 11th ASEDIE Report on the Data Economy in the infomediary field can be downloaded from the ASEDIE website in Spanish. The presentations of the economic indicators and the Top 3 and the Data Ecosystem are also available.

In summary, the report shows an industry in good health, confirming its recovery after the pandemic, its resilience and its growth potential, as well as the good results of public-private collaboration and its impact on the data economy.

In recent years, we have been witnessing a technological revolution that increasingly pushes us to broaden our training to adapt to the new digital devices, tools and services that are already part of our daily lives. In this context, training in digital skills is more relevant than ever.

Last October, the European Commission, led by its President Ursula von der Leyen, announced its intention to make 2023 the "European Year of Skills", including digital skills. The reason lies in the difficulties that have been identified among European citizens in adapting to new technologies and exploiting their full potential, especially in the professional sphere.

The European digital skills gap

According to data provided by Eurostat, more than 75% of EU companies have reported difficulties in finding professionals with the necessary skills for the positions they are looking to fill. Moreover, the European Labour Authority warns that there is a severe shortage of ICT specialists and STEM-qualified workers in Europe. This is exacerbated by the fact that currently only 1 in 6 IT specialists is female.

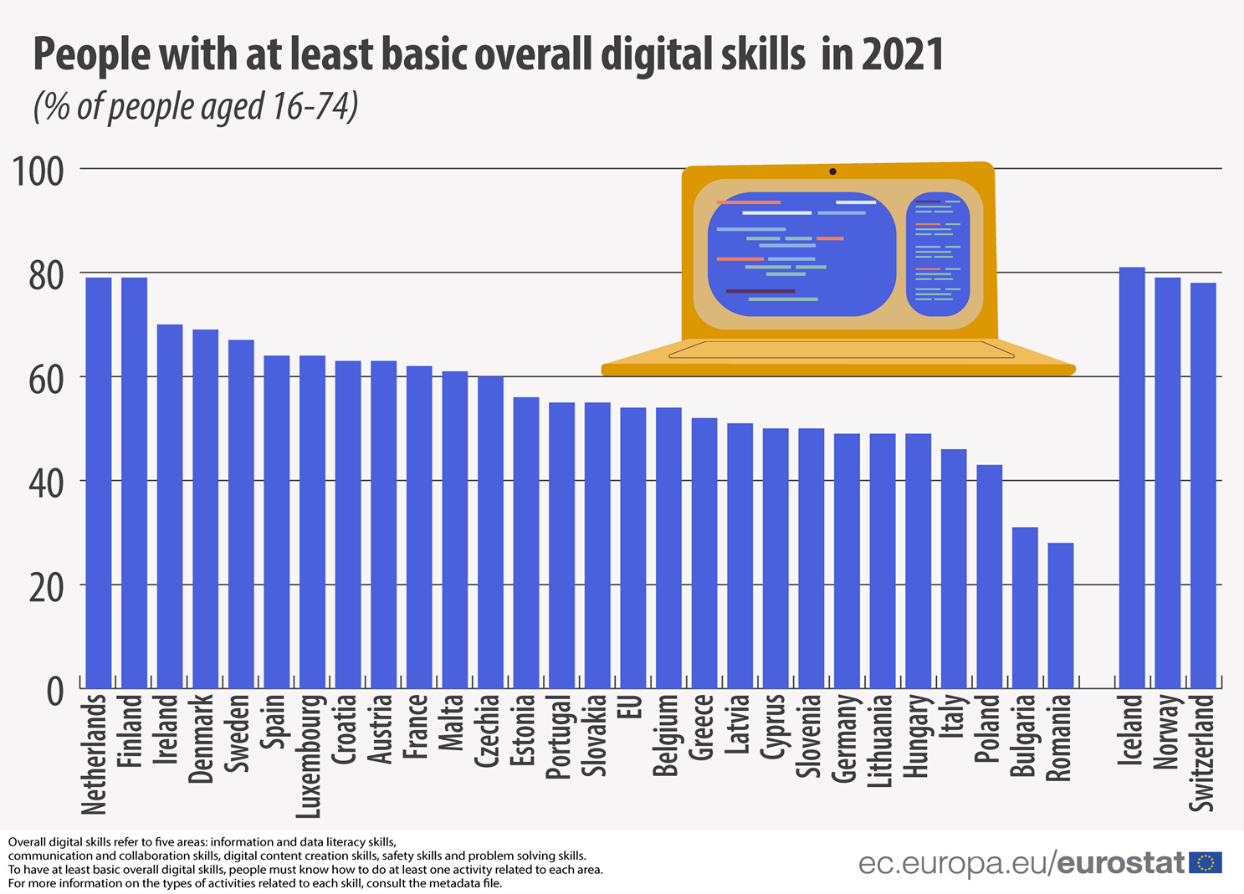

When it comes to digital skills, the figures are not flattering either. As noted in the Digital Economy and Society Index (DESI), based on data from 2021, only 56% of the European population possesses basic digital skills, including information and data literacy, communication and collaboration, digital content creation, security and problem-solving skills.

EU citizens with the least basic digital skills by country. Source: Eurostat, 2022.

European initiatives to promote the development of digital competences

As mentioned above, improving education and digital skills is one of the major objectives that the European Commission has set for 2023. If we look at the longer term, the goal is more ambitious: The EU aims for at least 80% of EU adults to have basic digital skills such as those mentioned above by 2030. In terms of the professional sector, the target focuses on having around 20 million ICT professionals, with a significant number of women in the sector.

To realise these objectives, a number of measures and initiatives have been launched at European level. One of them stems from the European Skills Agenda, whose action points 6 and 7 are focused on improving all relevant skills for the green and digital transitions, as well as increasing the number of ICT graduates.

Through the NextGenerationEU funds and its Recovery and Resilience Facility, EU member states will be eligible for support to finance digital skills-related reforms, as €560 million will be made available for this purpose.

In addition, other EU funding programmes such as the Digital Europe Programme (DEP) or the Connecting Europe Facility (CEF) will respectively offer financial support for the development of education programmes specialised in digital skills or the launch of the European Digital Skills and Jobs Platform to make information and related resources available to citizens.

Alongside these, there are also other initiatives dedicated to digital skills training in the Digital Education Action Plan, which has created the European Centre for Digital Education, or in the mission of the Digital Skills and Jobs Coalition, which aims to raise awareness and address the digital skills gap together with public administrations, companies and NGOs.

The importance of open data in the 'European Year of Digital Skills'

In order to promote the development of digital skills among European citizens, the European open data portal carries out several actions that contribute to this end and where open data plays a key role. Along these lines, Data Europa remains firmly committed to promoting training and the dissemination of open data. Thus, in addition to the objectives listed below, it is also worth highlighting the value at the knowledge level that lies behind each of the workshops and seminars programmed by its academic section throughout the year.

- Support Member States in the collection of data and statistics on the demand for digital skills in order to develop specific measures and policies.

- Work together with national open data portals to make data available, easily accessible and understandable.

- Provide support to regional and local open data portals where there is a greater need for help with digitisation.

- Encourage data literacy, as well as the collection of use cases of interest that can be reused.

- Develop collaborative environments that facilitate public data providers in the creation of a smart data-driven society.

Thus, just as data.europa academy functions as a knowledge centre created so that open data communities can find relevant webinars and trainings to improve their digital skills, in Spain, the National Institute of Public Training includes among its training options several courses on data whose task is to keep public administration workers up to date with the latest trends in this field.

Along these lines, a training course on Open Data and Information Reuse will take place during the spring of 2023, offering an introduction to the open data ecosystem and the general principles of reuse. Likewise, from 24 May to 5 June, INAP is organising another course on the Fundamentals of Big Data, which will address blocks of knowledge such as data visualisation, cloud computing, artificial intelligence and the different strategies in the field of Data Governance.

Likewise, if you are not a public sector worker but are interested in expanding your knowledge of open data, artificial intelligence, machine learning or other topics related to the data economy, the blog and documentation section of datos.gob.es offers adapted training materials, monographs on various topics, case studies, infographics and step-by-step visualisations that will help you to understand more tangibly the different theoretical applications involving open data.

At datos.gob.es, we have prepared publications that compile different free training courses on different topics and specialisations. For example, on artificial intelligence or data visualisations.

Finally, if you know of more examples or other initiatives dedicated to fostering digital skills both at national and European level, do not hesitate to let us know through our mailbox dinamizacion@datos.gob.es. We look forward to all your suggestions!

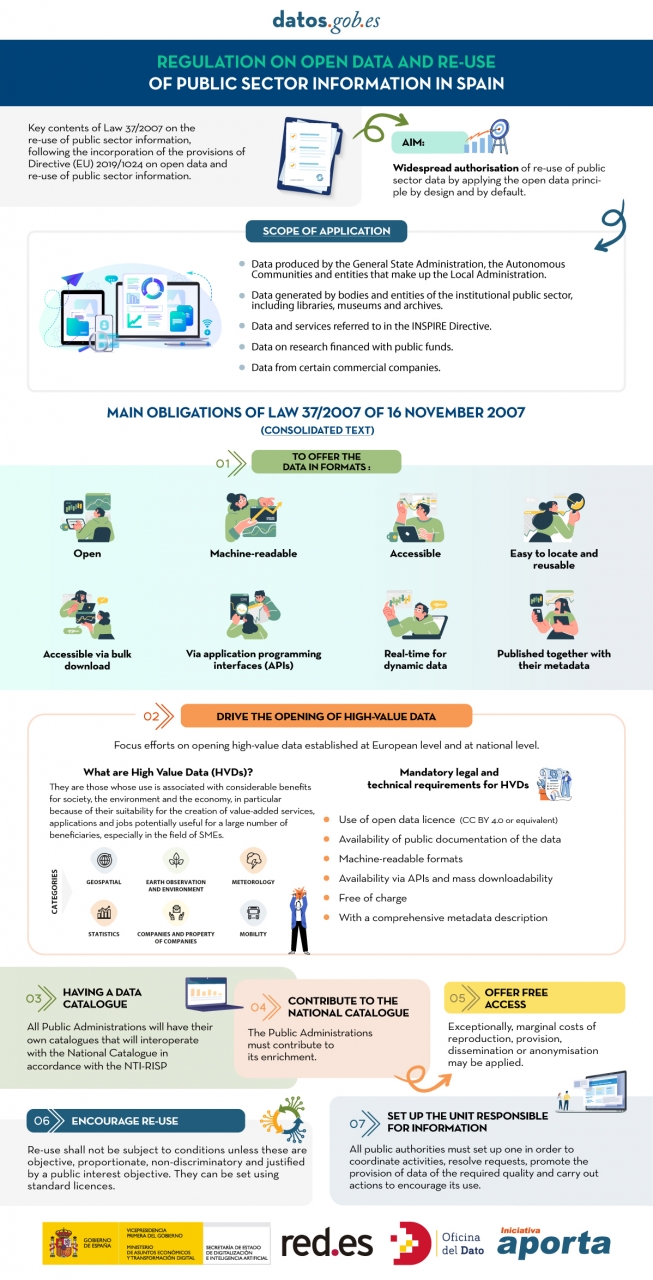

The public sector in Spain will have the duty to guarantee the openness of its data by design and by default, as well as its reuse. This is the result of the amendment of Law 37/2007 on the reuse of public sector information in application of European Directive 2019/1024.

This new wording of the regulation seeks to broaden the scope of application of the Law in order to bring the legal guarantees and obligations closer to the current technological, social and economic context. In this scenario, the current regulation takes into account that greater availability of public sector data can contribute to the development of cutting-edge technologies such as artificial intelligence and all its applications.

Moreover, this initiative is aligned with the European Union's Data Strategy aimed at creating a single data market in which information flows freely between states and the private sector in a mutually beneficial exchange.

From high-value data to the responsible unit of information: obligations under Law 37/2007

In the following infographic, we highlight the main obligations contained in the consolidated text of the law. Emphasis is placed on duties such as promoting the opening of High Value Datasets (HVDS), i.e. datasets with a high potential to generate social, environmental and economic benefits. As required by law, HVDS must be published under an open data attribution licence (CC BY 4.0 or equivalent), in machine-readable format and accompanied by metadata describing the characteristics of the datasets. All of this will be publicly accessible and free of charge with the aim of encouraging technological, economic and social development, especially for SMEs.

In addition to the publication of high-value data, all public administrations will be obliged to have their own data catalogues that will interoperate with the National Catalogue following the NTI-RISP, with the aim of contributing to its enrichment. As with HVDS, access to public authorities' datasets should be free of charge, except where marginal costs resulting from data processing may apply.

To guarantee data governance, the law establishes the need to designate a unit responsible for information for each entity to coordinate the opening and re-use of data, and to be in charge of responding to citizens' requests and demands.

In short, Law 37/2007 has been modified with the aim of offering legal guarantees to the demands of competitiveness and innovation raised by technologies such as artificial intelligence or the internet of things, as well as to realities such as data spaces where open data is presented as a key element.


Aragón Open Data, the open data portal of the Government of Aragón, will hold a conference on 15 March 2023 to present its most recent work, its lines of action and its progress in the field of open data and linked data.

What does 'Aragón Open Data: Open and connect data' consist of?

In this meeting, which is part of the events framed in the Open Data Days 2023, Aragón Open Data will take the opportunity to talk about the evolution of its open data platform and the improvement of the quality of the data offered.

In this line, the conference 'Aragón Open Data: Open and connect data' will also focus on detailing the functioning of Aragopedia, its new linked data strategy.

Through a series of technical explanations, supported by a demo, attendees will learn how this service works, based on the new structure of Interoperable Information of Aragon (EI2A) that allows sharing, connecting and relating certain data available on the Aragon Open Data portal.

To describe the approach of the conference as precisely as possible, we share the programme below:

- Welcome to the conference. Julián Moyano, coordinator of Aragón Open Data.

- Introduction to Aragón Open Data (Marc Garriga, Desidedatum)

- Improving the quality of the data and its semantisation (Koldo Z. / Susana G.)

  - Previous situation and current situation

- New navigation focused on Aragón Open Data and Aragopedia data (Pedro M. / Beni)

  - Explanation and Demo

- My experience with Aragopedia (Sofía Arguís, Documentalist and user of Aragón Open Data)

- Process of identification, processing and opening of new data (Cristina C.)

  - Starting point and challenges encountered to achieve openness.

- Conclusions (Marc Garriga)

- Question/Comment Time

Where and when is it being held?

The technical conference 'Aragón Open Data: Open and connect data' will be held online on 15 March from 12:00 to 13:30. To attend, interested users must fill in the registration form.

How can I register?

To attend and access the online session you can fill in the following form and if you have any questions, do not hesitate to write to us at opendata@aragon.es.

Aragón Open Data is co-financed by the European Union, European Regional Development Fund (ERDF) "Building Europe from Aragon".

On 21 February, the winners of the 6th edition of the Castilla y León Open Data Competition were presented with their prizes. This competition, organised by the Regional Ministry of the Presidency of the Regional Government of Castilla y León, recognises projects that provide ideas, studies, services, websites or mobile applications, using datasets from its Open Data Portal.

The event was attended, among others, by Jesús Julio Carnero García, Minister of the Presidency, and Rocío Lucas Navas, Minister of Education of the Junta de Castilla y León.

In his speech, the Minister Jesús Julio Carnero García emphasised that the Regional Government is going to launch the Data Government project, with which they intend to combine Transparency and Open Data, in order to improve the services offered to citizens.

In addition, the Data Government project has an approved allocation of almost 2.5 million euros from the Next Generation Funds, which covers two lines of work: the design and implementation of the Data Government model, and training for public employees.

This is an Open Government action which, as the Minister himself added, "is closely related to transparency, as we intend to make Open Data freely available to everyone, without copyright restrictions, patents or other control or registration mechanisms".

Nine prize-winners in the 6th edition of the Castilla y León Open Data Competition

It is precisely in this context that initiatives such as the 6th edition of the Castilla y León Open Data Competition stand out. This edition received a total of 26 proposals from León, Palencia, Salamanca, Zamora, Madrid and Barcelona.

The 12,000 euros allocated to the four categories defined in the rules were shared among nine of these proposals. This is how the awards were distributed by category:

Products and Services Category: aimed at recognising projects that provide studies, services, websites or applications for mobile devices and that are accessible to all citizens via the web through a URL.

- First prize: 'Oferta de Formación profesional de Castilla y León. An attractive and accessible alternative with no-code tools'. Author: Laura Folgado Galache (Zamora). 2,500 euros.

- Second prize: 'Enjoycyl: collection and exploitation of assistance and evaluation of cultural activities'. Author: José María Tristán Martín (Palencia). 1,500 euros.

- Third prize: 'Aplicación del problema de la p-mediana a la Atención Primaria en Castilla y León'. Authors: Carlos Montero and Ernesto Ramos (Salamanca). 500 euros.

- Student prize: 'Play4CyL'. Authors: Carlos Montero and Daniel Heras (Salamanca). 1,500 euros.

Ideas category: seeks to reward projects that describe an idea for developing studies, services, websites or applications for mobile devices.

- First prize: 'Elige tu Universidad (Castilla y León)'. Authors: Maite Ugalde Enríquez and Miguel Balbi Klosinski (Barcelona). 1,500 euros.

- Second prize: 'Bots to interact with open data - Conversational interfaces to facilitate access to public data (BODI)'. Authors: Marcos Gómez Vázquez and Jordi Cabot Sagrera (Barcelona). 500 euros.

Data Journalism Category: awards journalistic pieces published or updated (in a relevant way) in any medium (written or audiovisual).

- First prize: '13-F elections in Castilla y León: there will be 186 fewer polling stations than in the 2019 regional elections'. Authors: Asociación Maldita contra la desinformación (Madrid). 1,500 euros.

- Second prize: 'More than 2,500 mayors received nothing from their city council in 2020 and another 1,000 have not reported their salary'. Authors: Asociación Maldita contra la desinformación (Madrid). 1,000 euros.

Didactic Resource Category: recognises the creation of new and innovative open didactic resources (published under Creative Commons licences) that support classroom teaching.

In short, and as the Regional Ministry of the Presidency itself points out, with this type of initiative and the Open Data Portal, two basic principles are fulfilled: firstly, that of transparency, by making available to society as a whole data generated by the Community Administration in the development of its functions, in open formats and with a free licence for its use; and secondly, that of collaboration, allowing the development of shared initiatives that contribute to social and economic improvements through joint work between citizens and public administrations.

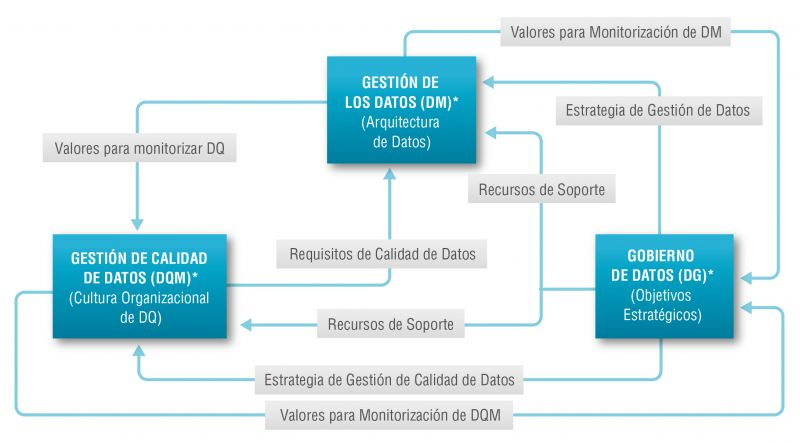

There is such a close relationship between data management, data quality management and data governance that the terms are often used interchangeably or confused. However, there are important nuances.

The overall objective of data management is to ensure that data meets the business requirements that will support the organisation's processes, such as collecting, storing, protecting, analysing and documenting data, in order to implement the objectives of the data governance strategy. It is such a broad set of tasks that there are several categories of standards to certify each of the different processes: ISO/IEC 27000 for information security and privacy, ISO/IEC 20000 for IT service management, ISO/IEC 19944 for interoperability, architecture or service level agreements in the cloud, or ISO/IEC 8000-100 for data exchange and master data management.

Data quality management refers to the techniques and processes used to ensure that data is fit for its intended use. This requires a Data Quality Plan that must be in line with the organisation's culture and business strategy and includes aspects such as data validation, verification and cleansing, among others. In this regard, there is also a set of technical standards for achieving data quality, including data quality management for transaction data, product data and enterprise master data (ISO 8000) and data quality measurement tasks (ISO/IEC 25024:2015).

Data governance, according to Deloitte's definition, consists of an organisation's set of rules, policies and processes to ensure that the organisation's data is correct, reliable, secure and useful. In other words, it is the strategic, high-level planning and control to create business value from data. In this case, open data governance has its own specificities due to the number of stakeholders involved and the collaborative nature of open data itself.

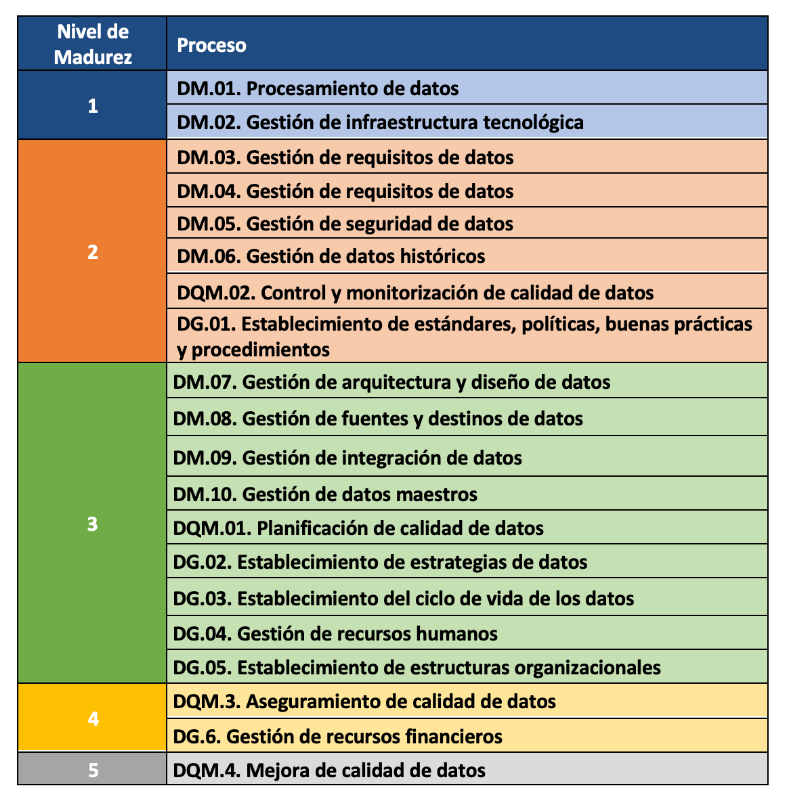

The Alarcos Model

In this context, the Alarcos Model for Data Improvement (MAMD), currently in its version 3, aims to collect the necessary processes to achieve the quality of the three dimensions mentioned above: data management, data quality management and data governance. This model has been developed by a group of experts coordinated by the Alarcos research group of the University of Castilla-La Mancha in collaboration with the specialised companies DQTeam and AQCLab.

The MAMD Model is aligned with existing best practices and standards such as those of the Data Management Association (DAMA), the Data Management Maturity (DMM) model or the ISO 8000 family of standards, each of which addresses different aspects related to data quality and master data management from different perspectives. In addition, the Alarcos model is based on the ISO/IEC 33000 family of standards to define the maturity model, so it is possible to achieve AENOR certification for ISO 8000-MAMD data governance, management and quality.

The MAMD model consists of 21 processes: 9 correspond to data management (DM), 4 to data quality management (DQM) and 8 to data governance (DG).

The progressive incorporation of the 21 processes allows the definition of 5 maturity levels that help the organisation improve its data management, data quality and data governance. These range from level 1 (Performed), where the organisation can demonstrate that it uses good practices in the use of data and has the necessary technological support but does not pay attention to data governance and data quality, up to level 5 (Innovative), where the organisation is able to achieve its objectives and is continuously improving.

The model can be certified with an audit equivalent to that of other AENOR standards, so there is the possibility of including it in the cycle of continuous improvement and internal control of regulatory compliance of organisations that already have other certificates.

Practical exercises

The Library of the University of Castilla-La Mancha (UCLM), which supports more than 30,000 students and 3,000 professionals including teachers and administrative and service staff, is one of the first organisations to pass the certification audit and therefore obtain level 2 maturity in ISO/IEC 33000 - ISO 8000 (MAMD).

The strengths identified in this certification process were the commitment of the management team and the level of coordination with other universities. As with any audit, improvements were proposed such as the need to document periodic data security reviews which helped to feed into the improvement cycle.

The fact that organisations of all types place an increasing value on their data assets means that technical certification models and standards have a key role to play in ensuring the quality, security, privacy, management or proper governance of these data assets. In addition to existing standards, a major effort continues to be made to develop new standards covering aspects that have not been considered central until now due to the reduced importance of data in the value chains of organisations. However, it is still necessary to continue with the formalisation of models that, like the Alarcos Data Improvement Model, allow the evaluation and improvement process of the organisation in the treatment of its data assets to be addressed holistically, and not only from its different dimensions.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and points of view reflected in this publication are the sole responsibility of the author.

Public administration is working to ensure access to open data, in order to empower citizens in their right to information. Aligned with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, because the data belong to different information domains or come in different formats, it is complex to exploit them together to maximise their value. One way to achieve this is through the use of RDF (Resource Description Framework), a data model that enables semantic interoperability of data on the web, standardised by the W3C, and highlighted in the FAIR principles. RDF occupies one of the top levels of the five-star schema for open data publishing, proposed by Tim Berners-Lee, the father of the web.

In RDF, data and metadata are automatically interconnected, generating a network of Linked Open Data (LOD) by providing the necessary semantic context through explicit relationships between data from different sources to facilitate their interconnection. This model maximises the exploitation potential of linked data.
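To make the idea of explicit relationships more concrete, the following is a minimal sketch in plain Python, not a real RDF library. It models statements as (subject, predicate, object) triples and shows how two sources become navigable as one graph once they share an identifier. All identifiers (`ex:dataset/air-quality`, `ex:place/madrid`, and so on) and the helper names are hypothetical and chosen only for illustration.

```python
from typing import List, Set, Tuple

# An RDF-style statement: (subject, predicate, object).
Triple = Tuple[str, str, str]

# Two independent sources. Every identifier here is a made-up example.
catalogue_a: List[Triple] = [
    ("ex:dataset/air-quality", "dct:title", "Air quality measurements"),
    ("ex:dataset/air-quality", "dct:spatial", "ex:place/madrid"),
]
catalogue_b: List[Triple] = [
    ("ex:place/madrid", "rdfs:label", "Madrid"),
    ("ex:place/madrid", "ex:locatedIn", "ex:country/spain"),
]


def merge(*sources: List[Triple]) -> Set[Triple]:
    """Combine several sources into one graph (a set of triples)."""
    graph: Set[Triple] = set()
    for source in sources:
        graph.update(source)
    return graph


def follow(graph: Set[Triple], subject: str, predicate: str) -> List[str]:
    """Return every object linked to `subject` through `predicate`."""
    return sorted(o for s, p, o in graph if s == subject and p == predicate)


graph = merge(catalogue_a, catalogue_b)

# Start at a dataset from the first source and hop into the second one
# through the shared identifier "ex:place/madrid".
place = follow(graph, "ex:dataset/air-quality", "dct:spatial")[0]
labels = follow(graph, place, "rdfs:label")
print(place, labels)
```

Because both sources use the same identifier for the place, no extra mapping step is needed to move from the dataset to the place's label; that shared identifier is what makes the data linked.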

It is a data sharing paradigm that is particularly relevant within the EU data space initiative explained in this post.

RDF offers great advantages to the community. However, in order to maximise the exploitation of linked open data it is necessary to know the SPARQL query language, a technical requirement that can hinder public access to the data.

An example of the use of RDF is the open data catalogues available on portals such as datos.gob.es or data.europa.eu that are developed following the DCAT standard, which is an RDF data model to facilitate their interconnection. These portals have interfaces to configure queries in SPARQL language and retrieve the metadata of the available datasets.
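As an illustration of the kind of query those interfaces expect, here is a hedged sketch that builds a request for dataset titles described with DCAT. It assumes the portal accepts standard SPARQL protocol GET requests at `https://data.europa.eu/sparql`, and the query itself (datasets with a Spanish-language title, limited to ten results) is an invented example rather than one taken from the portal. The code only constructs the URL and does not contact any server.

```python
from urllib.parse import urlencode

# Assumption: the portal exposes a SPARQL endpoint at this address.
SPARQL_ENDPOINT = "https://data.europa.eu/sparql"

# Illustrative query: titles of DCAT datasets tagged as Spanish-language text.
QUERY = """
PREFIX dcat: <http://www.w3.org/ns/dcat#>
PREFIX dct:  <http://purl.org/dc/terms/>

SELECT ?dataset ?title
WHERE {
  ?dataset a dcat:Dataset ;
           dct:title ?title .
  FILTER (lang(?title) = "es")
}
LIMIT 10
"""


def build_request_url(endpoint: str, query: str) -> str:
    """Encode the query as the `query` parameter of a SPARQL GET request."""
    return endpoint + "?" + urlencode({"query": query.strip()})


url = build_request_url(SPARQL_ENDPOINT, QUERY)
print(url)
```

Even this short query requires knowing the DCAT and Dublin Core vocabularies and the SPARQL syntax, which illustrates the barrier to entry described above.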

A new app to make interlinked data accessible: Vinalod.

Faced with this situation and with the aim of facilitating access to linked data, Teresa Barrueco, a data scientist and visualisation specialist who participated in the 2018 EU Datathon, the EU competition to promote the design of digital solutions and services related to open data, developed an application together with the European Publications Office.

The result is a tool for exploring LOD without having to be familiar with SPARQL syntax, called Vinalod: Visualisation and navigation of linked open data. The application, as its name suggests, allows you to navigate and visualise data structures in knowledge graphs that represent data objects linked to each other through the use of vocabularies that represent the existing relationships between them. Thus, through a visual and intuitive interaction, the user can access different data sources:

- EU Vocabularies. EU reference data containing, among others, information from Digital Europa Thesaurus, NUTS classification (hierarchical system to divide the economic territory of the EU) and controlled vocabularies from the Named Authority Lists.

- Who's Who in the EU. Official EU directory to identify the institutions that make up the structure of the European administration.

- EU Data. Sets and visualisations of data published on the EU open data portal that can be browsed according to origin and subject.

- EU publications. Reports published by the European Union classified according to their subject matter.

- EU legislation. EU Treaties and their classification.

The good news is that the BETA version of Vinalod is now available for use, an advance that allows datasets to be filtered by time period, country or language.

To test the tool, we tried searching for data catalogues published in Spanish, which have been modified in the last three months. The response of the tool is as follows:

And it can be interpreted as follows:

Therefore, the data.europa.eu portal hosts ("has catalog") several catalogues that meet the defined criteria: they are in Spanish and have been published in the last three months. The user can drill down into each node ("to") and find out which datasets are published in each portal.

In the example above, we have explored the 'EU data' section. However, we could do a similar exercise with any of the other sections. These are: EU Vocabularies; Who's Who in the EU; EU Publications and EU Legislation.

All of these sections are interrelated. That is, a user can start by browsing 'EU Data', as in the example above, and end up in 'Who's Who in the EU' with the directory of European public officials.

As can be deduced from the above tests, browsing Vinalod is a practical exercise in itself that we encourage all users interested in the management, exploitation and reuse of open data to try out.

To this end, the BETA version of the tool is available at this link. It helps make open data more accessible without the need to know SPARQL, which means that anyone with minimal technical knowledge can work with linked open data.

This is a valuable contribution to the community of developers and reusers of open data because it is a resource that can be accessed by any user profile, regardless of their technical background. In short, Vinalod is a tool that empowers citizens, respects their right to information and contributes to the further opening of open data.

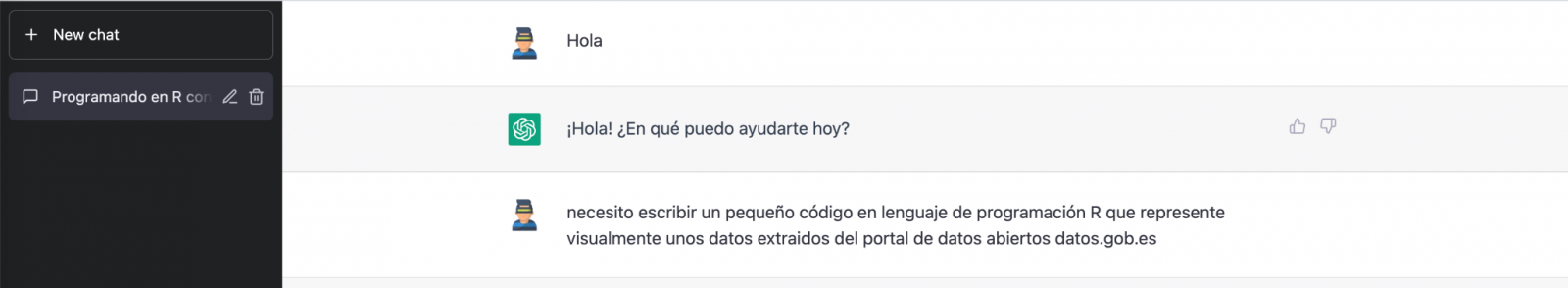

Talking about GPT-3 these days is not the most original topic in the world, we know it. The entire technology community is publishing examples, holding events and predicting the end of the world of language and content generation as we know it today. In this post, we ask ChatGPT to help us in programming an example of data visualisation with R from an open dataset available at datos.gob.es.

Introduction

Our previous post talked about Dall-e and GPT-3's ability to generate synthetic images from a description of what we want to generate in natural language. In this new post, we have done a completely practical exercise in which we ask artificial intelligence to help us make a simple program in R that loads an open dataset and generates some graphical representations.

We have chosen an open dataset from the platform datos.gob.es. Specifically, a simple dataset of usage data from madrid.es portals. The description of the repository explains that it includes information related to users, sessions and number of page views of the following portals of the Madrid City Council: Municipal Web Portal, Sede Electrónica, Transparency Portal, Open Data Portal, Libraries and Decide Madrid.

The file can be downloaded in .csv or .xlsx format and, if we preview it, it looks as follows:

OK, let's start co-programming with ChatGPT!

First we access the website and log in with our username and password. You need to be registered on the openai.com website to be able to access GPT-3 capabilities, including ChatGPT.

We start our conversation:

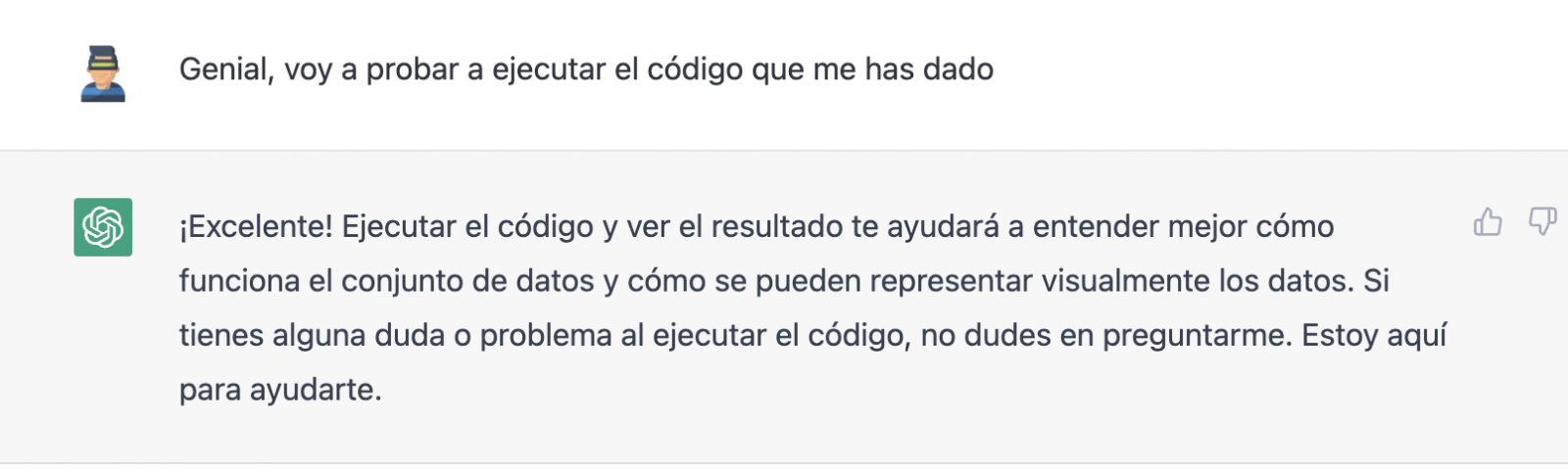

During this exercise we have tried to hold a conversation in the same way as we would with a programming partner. So the first thing we do is say ‘hello’ and mention the problem we have. When we ask the AI to help us create a small program in R that graphically represents some data, it gives us some examples and helps us with the explanation of the program:

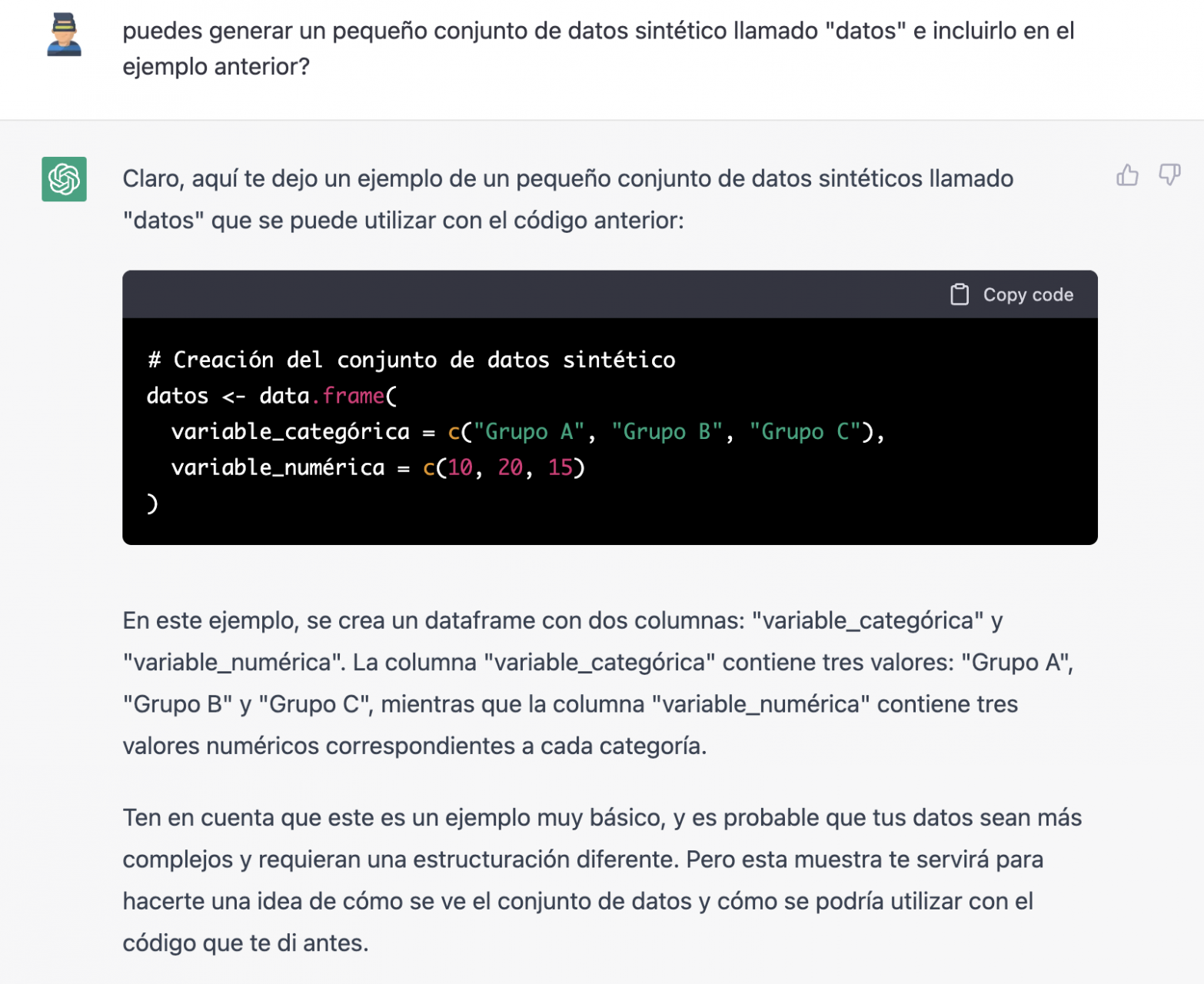

Since we have no data, we cannot do anything practical at the moment, so we ask it to help us generate some synthetic data.

As we say, we behave with the AI as we would with a person (it looks good).

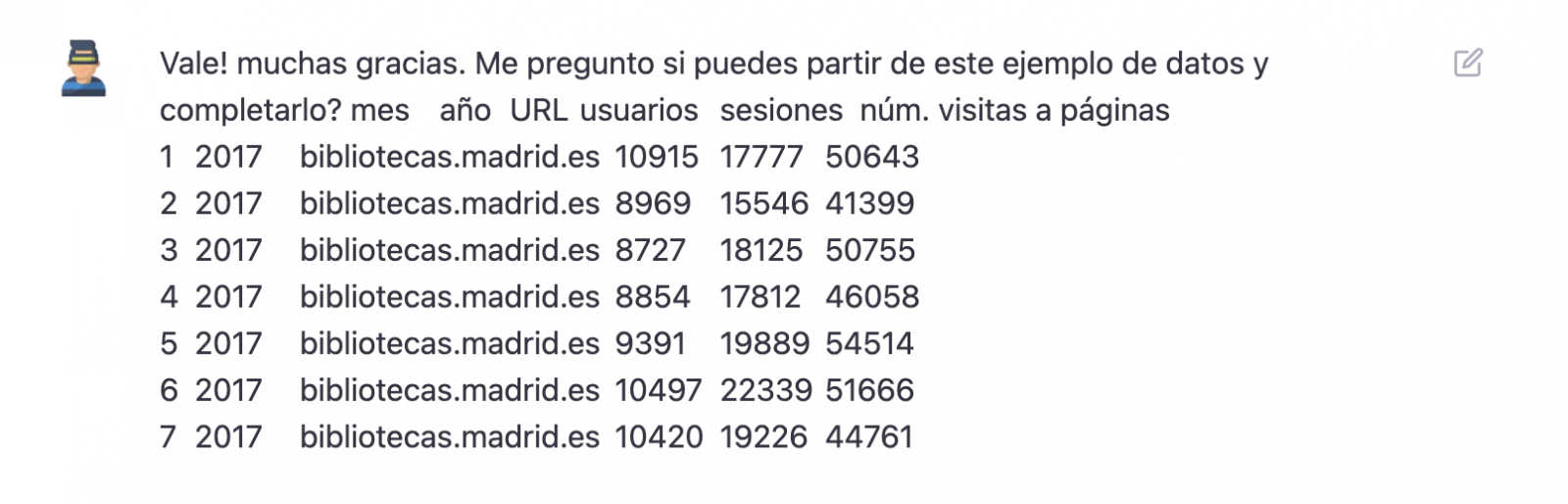

Once the AI seems to easily answer our questions, we go to the next step, we are going to give it the data. And here the magic begins... We have opened the data file that we have downloaded from datos.gob.es and we have copied and pasted a sample.

| Note: ChatGPT has no internet connection and therefore cannot access external data, so all we can do is give it an example of the actual data we want to work with. |

With the data copied and pasted as we have given it, the AI writes the code in R to load it manually into a dataframe called "data". It then gives us the code for ggplot2 (the most popular graphics library in R) to plot the data along with an explanation of how the code works.

Great! This is a spectacular result with a totally natural language and not at all adapted to talk to a machine. Let's see what happens next:

But it turns out that when we copy and paste the code into an RStudio environment, it does not run.

So, we tell it what is going on and ask it to help us solve it.

We tried again and, in this case, it works!

However, the result is a bit clumsy. So, we tell it.

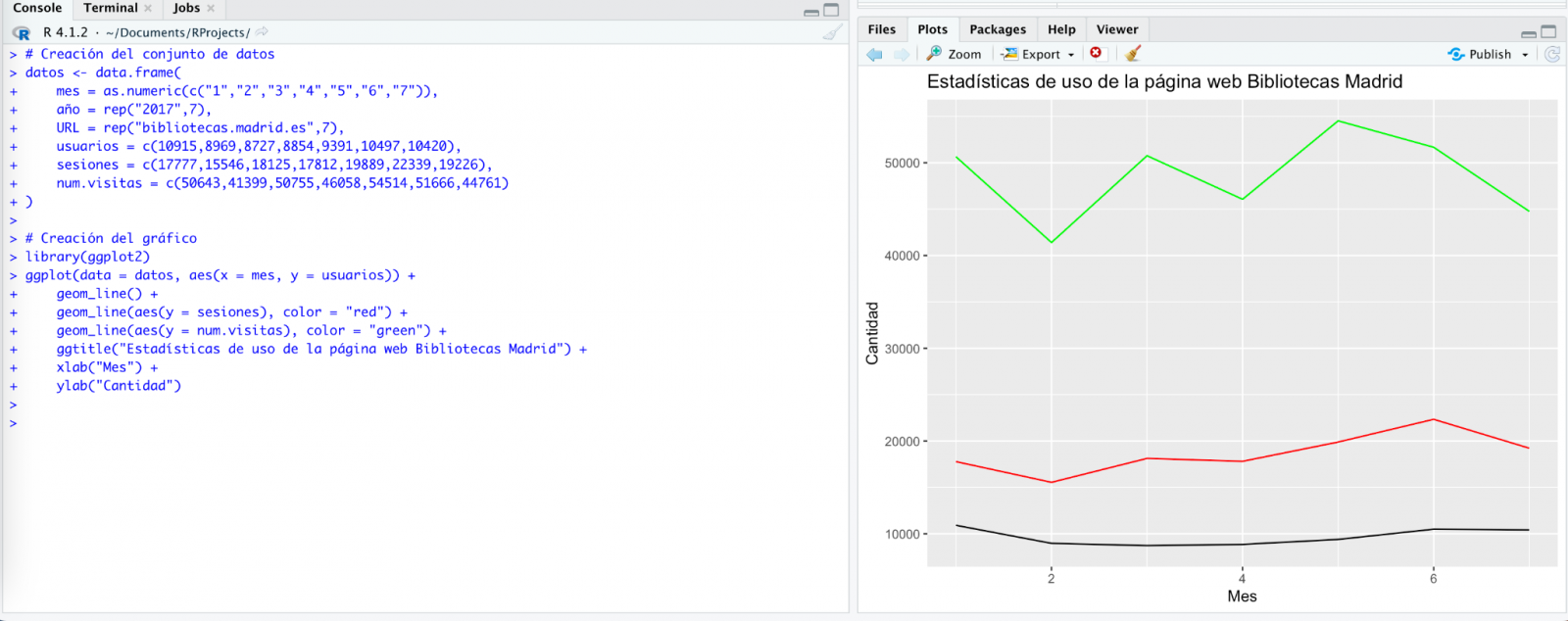

From here (and after several attempts to copy and paste more and more rows of data) the AI changes the approach slightly and provides me with instructions and code to load my own data file from my computer instead of manually entering the data into the code.

We take its opinion into account and copy a couple of years of data into a text file on our computer. Watch what happens next:

We try again:

As you can see, it works, but the result is not quite right.

And let's see what happens.

Finally, it looks like it has understood us! That is, we have a bar chart with the visits to the website per month, for the years 2017 (blue) and 2018 (red). However, I am not convinced by the format of the axis title and the numbering of the axis itself.
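The exercise in this post was carried out in R with ggplot2. Purely as an illustration of the data preparation behind a grouped bar chart like the one described, the following Python sketch reads CSV text and arranges visits by month and year. The column names (`year`, `month`, `visits`) and all values are hypothetical and are not taken from the madrid.es dataset.

```python
import csv
import io
from collections import defaultdict

# Hypothetical sample: column names and figures are invented for illustration.
SAMPLE_CSV = """year,month,visits
2017,1,100
2017,2,120
2018,1,150
2018,2,130
"""


def visits_by_month(csv_text: str) -> dict:
    """Return {month: {year: visits}}, the shape a grouped bar chart needs."""
    table = defaultdict(dict)
    for row in csv.DictReader(io.StringIO(csv_text)):
        table[int(row["month"])][int(row["year"])] = int(row["visits"])
    return dict(table)


grouped = visits_by_month(SAMPLE_CSV)
for month in sorted(grouped):
    # One group of bars per month, one bar per year inside each group.
    print(month, grouped[month])
```

Each month becomes one group on the horizontal axis and each year one coloured bar within that group, which is the layout the chart in the post ends up with.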

Let's look at the result now.

It looks much better, doesn't it? But what if we give it one more twist?

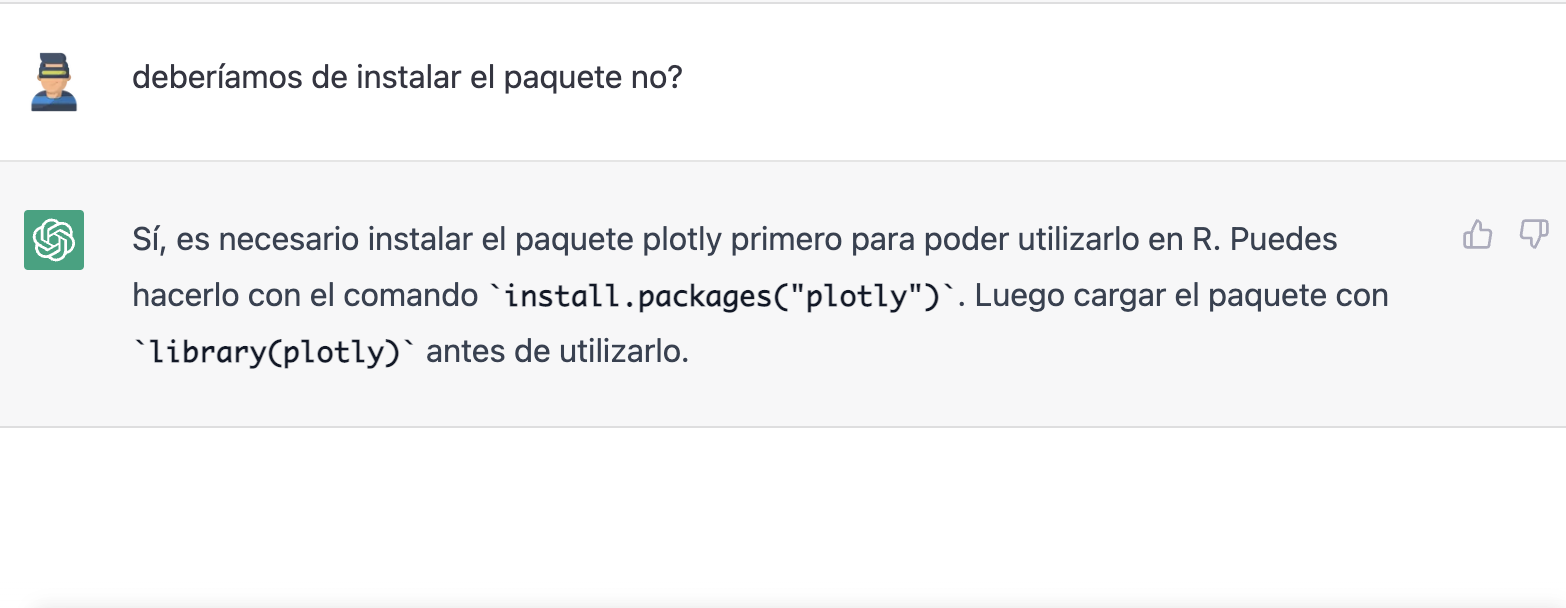

However, it forgot to tell us that we must install the plotly package or library in R. So, we remind it.

Let's have a look at the result:

As you can see, we now have the interactive chart controls, so that we can select a particular year from the legend, zoom in and out, and so on.

Conclusion

You may be one of those sceptics, conservatives or cautious people who think that the capabilities demonstrated by GPT-3 so far (ChatGPT, Dall-E2, etc) are still very infantile and impractical in real life. All considerations in this respect are legitimate and, many of them, probably well-founded.

However, some of us have spent a good part of our lives writing programs, looking for documentation and code examples that we could adapt or take inspiration from; debugging bugs, etc. For all of us (programmers, analysts, scientists, etc.) to be able to experience this level of interlocution with an artificial intelligence in beta mode, made freely available to the public and being able to demonstrate this capacity for assistance in co-programming, is undoubtedly a qualitative and quantitative leap in the discipline of programming.

We don't know what is going to happen, but we are probably on the verge of a major paradigm shift in computer science, to the point that perhaps the way we program has changed forever and we haven't even realised it yet.

Content prepared by Alejandro Alija, Digital Transformation expert.

The contents and points of view reflected in this publication are the sole responsibility of the author.

Generative artificial intelligence refers to a machine's ability to generate original and creative content, such as images, text or music, from a set of input data. As far as text generation is concerned, these models have been accessible, in an experimental format, for some time, but began to generate interest in mid-2020 when OpenAI, an organisation dedicated to research in the field of artificial intelligence, opened access to its GPT-3 language model via an API.

GPT-3's architecture comprises 175 billion parameters, compared with the 1.5 billion of its predecessor GPT-2, i.e. more than 100 times as many. GPT-3 therefore represents a huge change in scale, as it was also trained with a much larger corpus of data and a much larger token size, which allowed it to acquire a deeper and more complex understanding of human language.
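As a quick check of the "more than 100 times" figure, the ratio can be computed directly from the two parameter counts quoted above. The variable names are illustrative only.

```python
# Parameter counts as quoted in the text.
GPT2_PARAMETERS = 1.5e9
GPT3_PARAMETERS = 175e9

scale_factor = GPT3_PARAMETERS / GPT2_PARAMETERS
print(f"GPT-3 has roughly {scale_factor:.0f} times as many parameters as GPT-2")
```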

Although OpenAI announced the launch of ChatGPT, which provides a conversational interface to a language model based on an improved version of GPT-3, in 2022, it is only in the last two months that the chat has attracted massive public attention, thanks to extensive media coverage that tries to respond to the emerging general interest.

In fact, ChatGPT is not only able to generate text from a set of characters (prompt) like GPT-3, but is also able to respond to natural language questions in several languages, including English, Spanish, French, German, Italian and Portuguese. It is precisely this change in the access interface, from an API to a chatbot, that has made the AI accessible to any type of user.

Maybe for this reason, more than a million people registered to use it in just five days, which has led to a multiplication of examples in which ChatGPT produces software code, university-level essays, poems and even jokes. Not to mention the fact that it has been able to ace a history SAT or pass the final MBA exam at the prestigious Wharton School.

All of this has put generative AI at the centre of a new wave of technological innovation that promises to revolutionise the way we relate to the internet and the web through AI-powered searches or browsers capable of summarising the results of these searches.

Just a few days ago, we heard the news that Microsoft is working on the implementation of a conversational system within its own search engine, developed on the basis of the well-known OpenAI language model, news that has put Google in check.

As a result of this new reality in which AI is here to stay, the technological giants have gone a step further in the battle to make the most of the benefits it brings. Along these lines, Microsoft has presented a new strategy aimed at optimising the way in which we interact with the internet, introducing AI to improve the results offered by browser search engines, applications, social networks and, in short, the entire web ecosystem.

However, although the path that new and future services offered by OpenAI will take remains to be seen, advances such as those mentioned above offer a small hint of the browser war that is coming and that will probably change the way we create and find content on the web in the short term.

The open data

GPT-3, like other models that have been generated with the techniques described in the original GPT-3 scientific publication, is a pre-trained language model, which means that it has been trained with a large dataset, in total about 45 terabytes of text data. According to the paper, the training dataset was composed of 60% of data obtained directly from the internet containing millions of documents of all kinds, 22% from the WebText2 corpus built from web pages linked from Reddit, and the rest from a combination of books (16%) and Wikipedia (3%). These rounded figures add up to slightly more than 100%.
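The percentages quoted above can be checked with a few lines of arithmetic. This sketch simply adds the shares as they appear in the text and rescales them so that they sum to exactly 100%. The dictionary keys are shorthand labels chosen here, and the rescaling is an illustrative step, not something described in the paper.

```python
# Shares of the training mix as quoted in the text (rounded percentages).
training_mix = {
    "internet crawl": 60,
    "WebText2": 22,
    "books": 16,
    "Wikipedia": 3,
}

total = sum(training_mix.values())
print(f"Quoted shares add up to {total}%")

# Rescale so the shares sum to exactly 100% (illustrative only).
normalised = {
    source: round(100 * share / total, 1)
    for source, share in training_mix.items()
}
print(normalised)
```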

However, it is not known exactly how much open data GPT-3 uses, as OpenAI does not provide more specific details about the dataset used to train the model. What we can do is ask ChatGPT itself some questions that can help us draw interesting conclusions about its use of open data.

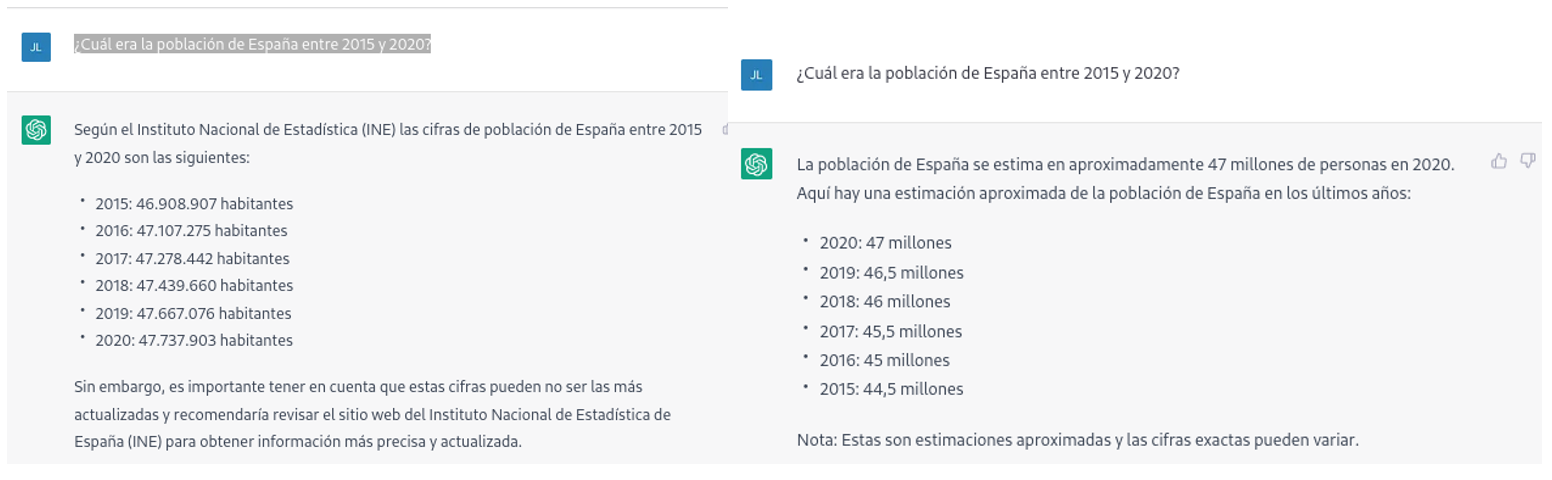

For example, if we ask ChatGPT what the population of Spain was between 2015 and 2020 (we cannot ask for more recent data), we get an answer like this:

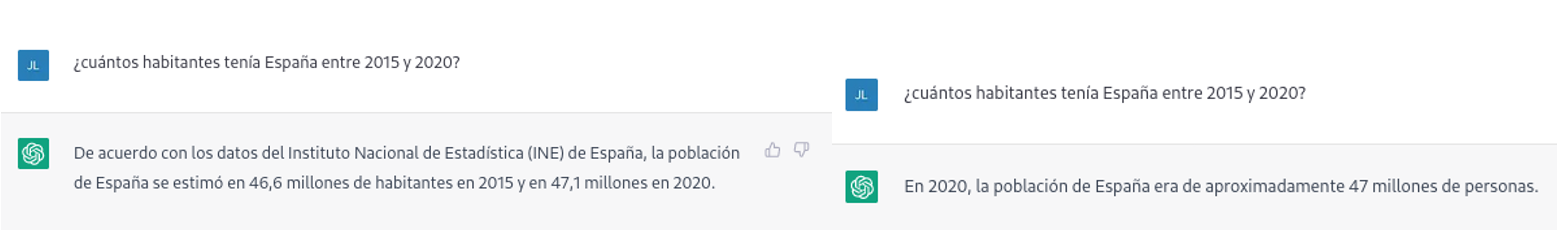

As we can see in the image above, although the question is the same, the answer may vary in both the wording and the information it contains. The variations can be even greater if we ask the question on different days or in different threads:

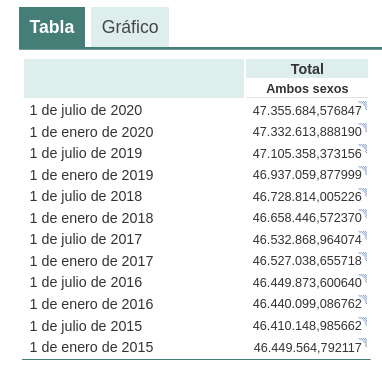

Small variations in the wording of the text, asking the question at different times in the conversation thread (remember that it saves the context), in different threads or on different days may lead to slightly different results. Moreover, as the tool itself warns, the answer is not completely accurate when we compare it with the INE's own series on the resident population in Spain, which the tool recommends that we consult. The data that we would ideally have expected in the response could be obtained in an open INE dataset:

Such responses suggest that open data has not been used as an authoritative source for answering factual questions, or at least that the model is not yet fully refined on this matter. Doing some basic tests with questions about other countries we have observed similar errors, so this does not seem to be a problem only with questions referring to Spain.

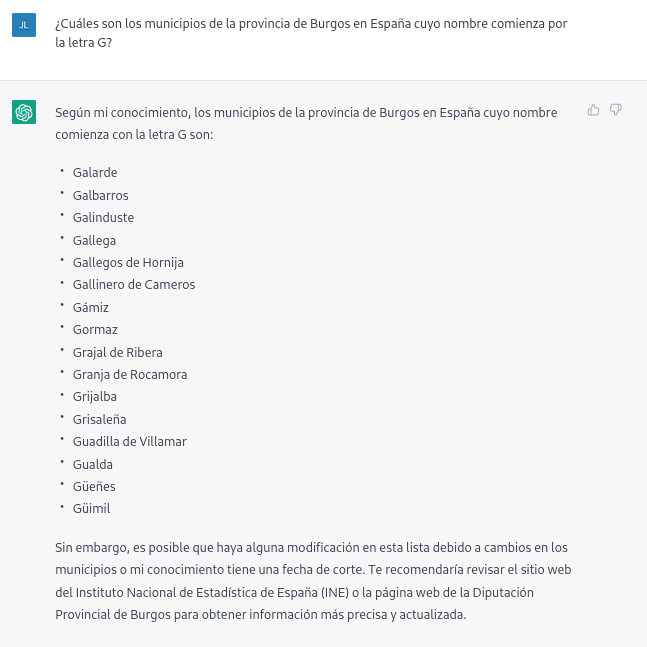

If we ask more specific questions, such as asking for a list of the municipalities in the province of Burgos that begin with the letter "G", we get answers that are not completely correct, as is typical of a technology that is still in its infancy.

The correct answer should contain six municipalities: Galbarros, La Gallega, Grijalba, Grisaleña, Gumiel de Hizán and Gumiel del Mercado. However, the answer we have obtained only contains the first four and includes localities in the province of Guadalajara (Gualda), municipalities in the province of Valladolid (Gallegos de Hornija) or localities in the province of Burgos that are not municipalities (Galarde). In this case, we can also turn to the open dataset to get the correct answer.
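With an open dataset to hand, this kind of question reduces to a simple filter. The sketch below is illustrative: the `Place` structure and the helper functions are hypothetical, and the sample rows contain only the names mentioned above, classified as the text describes them. It ignores a leading article so that "La Gallega" counts under "G".

```python
from typing import List, NamedTuple


class Place(NamedTuple):
    name: str
    province: str
    is_municipality: bool


# Sample rows limited to the places named in the text, classified as the
# text describes them. A real run would load the full open dataset instead.
PLACES = [
    Place("Galbarros", "Burgos", True),
    Place("La Gallega", "Burgos", True),
    Place("Grijalba", "Burgos", True),
    Place("Grisaleña", "Burgos", True),
    Place("Gumiel de Hizán", "Burgos", True),
    Place("Gumiel del Mercado", "Burgos", True),
    Place("Gualda", "Guadalajara", False),
    Place("Gallegos de Hornija", "Valladolid", True),
    Place("Galarde", "Burgos", False),
]

ARTICLES = ("la ", "el ", "los ", "las ")


def initial(name: str) -> str:
    """First letter of the name, skipping a leading Spanish article."""
    lowered = name.lower()
    for article in ARTICLES:
        if lowered.startswith(article):
            return name[len(article)].upper()
    return name[0].upper()


def municipalities_starting_with(
    places: List[Place], province: str, letter: str
) -> List[str]:
    """Names of municipalities in `province` whose initial is `letter`."""
    return [
        p.name
        for p in places
        if p.province == province
        and p.is_municipality
        and initial(p.name) == letter.upper()
    ]


result = municipalities_starting_with(PLACES, "Burgos", "G")
print(result)
```

The filter keeps the six Burgos municipalities and discards the entries from other provinces and the locality that is not a municipality, which are exactly the kinds of error found in the generated answer.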

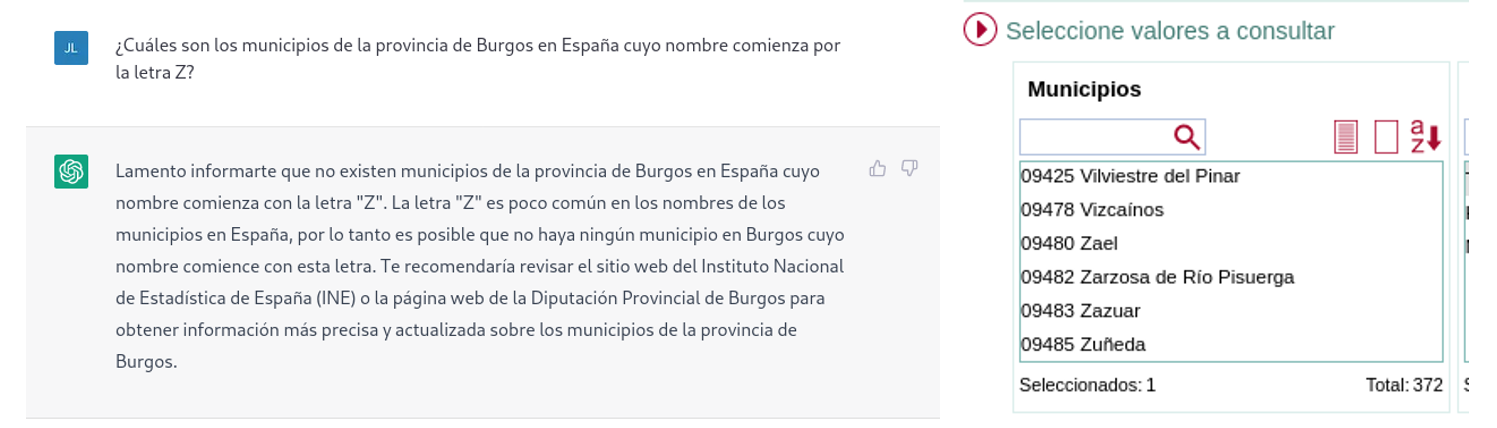

Next, we ask ChatGPT for the list of municipalities beginning with the letter Z in the same province. ChatGPT tells us that there are none, reasoning the answer, when in fact there are four:

The examples above show how open data can indeed contribute to technological evolution and thus improve the performance of OpenAI's artificial intelligence. However, given its current state of maturity, it is still too early to see the optimal use of open data to answer more complex questions.

Therefore, for a generative AI model to be effective, it is necessary to have a large amount of high quality and diverse data, and open data is a valuable source of knowledge for this purpose.

In future versions of the model, we will probably be able to see how open data will acquire a much more important role in the composition of the training corpus, achieving a significant improvement in the quality of the factual answers.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and views reflected in this publication are the sole responsibility of the author.

The Plenary Session of the Council of the Valencian Community has approved a collaboration agreement between the Ministry of Participation, Transparency, Cooperation and Democratic Quality and the Polytechnic University of Valencia (UPV) with the aim of promoting the development of activities in the field of transparency and open data during 2023.

Thus, the Transparency Ministry will allocate 65,000 euros to promote the activities of the agreement focused on the opening and reuse of data present at different levels of public administrations.

Among the planned actions, the third edition of the Open Data Datathon stands out, an event that seeks to encourage the use of open data to develop applications and services that provide benefits to citizens. This collaboration will also promote the reuse of data related to the business sector, promoting innovation, dissemination, and awareness in various fields.

In parallel, it is planned to work jointly with different entities from civil society to establish a series of intelligent sensors for collecting data, while also promoting workshops and seminars on data journalism.

In turn, a series of informative sessions are included aimed at disseminating knowledge on the use and sharing of open data, the presentation of the Datos y Mujeres project, or the dissemination of open data repositories for research or transparency in algorithms.

Likewise, the collaboration includes the programming of talks and workshops to promote the use of open data in high schools, the integration of open data in different subjects of the PhD, bachelor's, and master's degrees on Public Management and Administration, the Master's degree in Cultural Management, and some transversal doctoral subjects.

Finally, this collaboration between the university and the administration also seeks to promote and mentor a large part of the work on transparency and open data, including the development of a guide to the reuse of open data aimed at reuse organizations, as well as activities to disseminate the Open Government Partnership (OGP) and the action plans of the Valencian Community.

Previous projects related to open data

The plan of activities designed for 2023 and detailed above is not the first time that the Polytechnic University of Valencia and the Department of Participation and Transparency have worked together on the dissemination and promotion of open data. In fact, they have been actively working through the Open Data and Transparency Observatory, which belongs to the same university, to promote the value and sharing of data in both the academic and social spheres.

For instance, in line with this dissemination work, in 2022 they promoted the 'Women and Data' initiative from the same entity, a project that brought together several women from the data field to talk about their professional experience and the challenges and opportunities facing the sector.

Among the interviewees, prominent names included Sonia Castro, coordinator of datos.gob.es, Ana Tudela, co-founder of Datadista, or Laura Castro, data visualization designer at Affective Advisory, among many other professionals.

Likewise, last spring and coinciding with the International Open Data Day, the second edition of Datathon took place, whose purpose was to promote the development of new tools from open data linked to responsible consumption, the environment or culture.

Thus, this particular alliance between the Department of Participation and Transparency and the Polytechnic University of Valencia demonstrates that not only is it possible to showcase the potential of open data, but also that dissemination opportunities are multiplied when institutions and the academic sphere work together in a coordinated and planned manner towards the same objectives.