Climate modeling and prediction: planning for a sustainable future

Climate models make it possible to predict how the climate will change in the future and, when properly trained, also help to identify potential impacts in specific regions. This enables governments and communities to take measures to adapt to rapidly changing conditions.

Increasingly, these models are fed by open datasets, and some climate models have even begun to be published freely and openly. Examples include the climate models published on the MIT Climate Portal and the data and models published by NOAA's Climate.gov. This allows all kinds of institutions, scientists and even citizens to help identify ways of mitigating the effects of climate change.

Carbon emissions monitoring: carbon footprint tracking

Thanks to open data, complemented by some paid datasets, it is now possible to track the carbon emissions of countries, cities and even companies accurately and continuously. As illustrated by the International Energy Agency's (IEA) World Energy Outlook 2022 and the U.S. Environmental Protection Agency's Global Greenhouse Gas Emissions Data, these data are essential not only for measuring and analyzing emissions globally but also for assessing progress towards emission reduction targets.

Adapting agriculture: cultivating a resilient future

Climate change clearly has a direct impact on agriculture, and this impact threatens food security, which is already a global challenge in itself. Open data on weather patterns, rainfall, temperature, land use, and fertilizer and pesticide use, combined with local data collected in the field, allows farmers to adapt their practices and move towards precision agriculture. Choosing crops that are resilient to changing conditions, and using these data to manage inputs more efficiently, is crucial to keeping agriculture sustainable and productive in the new scenarios.

Among other organizations, the Food and Agriculture Organization of the United Nations (FAO) highlights the importance of open data in climate-smart agriculture. It publishes datasets on pesticide use, inorganic fertilizers, greenhouse gas emissions, agricultural production and more, which contribute to better management of land, water and food security.

Natural disaster response: minimizing impact

Analyzing data on extreme weather events, such as hurricanes or floods, makes it possible to design strategies for a faster and more effective response when these events occur. This saves lives and partially mitigates the heavy impact on affected communities.

Open data such as those published by the US National Hurricane Center (NHC) or the European Environment Agency are valuable tools in natural disaster management as they help streamline disaster preparedness decision-making and provide an objective basis for assessment and prioritization.

Biodiversity and conservation: protecting our natural wealth

Biodiversity is vital to the health of the Earth, yet human activity, combined with climate change, continues to put it under great pressure and threaten its stability. Open data on species populations, deforestation and other ecological indicators, such as those published by governments and organizations around the world through the Global Biodiversity Information Facility (GBIF), helps us identify areas at risk more quickly and accurately and thus prioritize conservation efforts.

With the increased availability of open data, governments, institutions, companies and citizens can make informed decisions to mitigate the consequences of climate change and work together towards a more sustainable future.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and points of view reflected in this publication are the sole responsibility of its author.

The digitalization of Spain's public sector has also reached the judicial field. The first regulation to establish a legal framework in this regard was the reform introduced by Law 18/2011, of July 5 (LUTICAJ). Since then, progress has been made in the technological modernization of the Administration of Justice. Last year, the Council of Ministers approved a new legislative package to definitively address the digital transformation of the public justice service: the Digital Efficiency Bill.

This project incorporates various measures specifically aimed at promoting data-driven management, in line with the overall approach formulated through the so-called Data Manifesto promoted by the Data Office.

Once the decision to embrace data-driven management has been made, it must be implemented with the requirements and implications of Open Government in mind. This strengthens not only the possibilities for improving the internal management of judicial activity, but also the possibilities for re-using the information generated in the course of this public service (re-use of public sector information, RISP).

Open data: a premise for the digital transformation of justice

Data openness is a fundamental requirement for meeting the challenge of the digital transformation of justice. Open data, in turn, requires conditions that allow its automated integration in the judicial field. First, the accessibility of the datasets must be improved, and they should be published in interoperable, reusable formats. More broadly, there is a need to promote an institutional model based on interoperability and on homogeneous conditions that, through standardization adapted to the particularities of the judicial field, facilitate automated integration.

To explore the synergy between open data and justice in more depth, the report prepared by expert Julián Valero identifies the keys to digital transformation in the judicial field, as well as a series of valuable open data sources in the sector.

If you want to learn more about the content of this report, you can watch the interview with its author.

Below, you can download the full report, the executive summary, and a summary presentation.

Summer is coming to an end. August is winding down, and September is on the horizon, bringing with it the return to routine and all that it entails. The start of the school year and the end of vacations can be challenging. However, this time of year, along with January, is a time for fresh beginnings and resolutions. As you head back to school, we at datos.gob.es propose a challenge: to learn more about open data and new technologies.

Whether you're looking for a career change, seeking to enrich your professional profile, or simply curious about this burgeoning field, we've selected content on disruptive technologies that we hope will pique your interest. In this post, you'll find articles, books, and even interviews covering data and the innovative technologies surrounding it.

Take note and pack your backpack with open data reading!

"Piensa claro: Ocho reglas para descifrar el mundo y tener éxito en la era de los datos" (Think Clearly: Eight Rules for Deciphering the World and Succeeding in the Age of Data); Kiko Llaneras; Book (2022)

In this compilation of data-based curiosities, El País journalist Kiko Llaneras offers practical advice for making reliable predictions, avoiding common mistakes, and questioning our intuition.

- What's it about? The book uses data to shed light on situations such as the fact that footballers are disproportionately born in January, and to explain the relationship between data and the Chernobyl disaster. These and other topics are the starting point for eight independent chapters, in which Llaneras draws on his experience to offer advice on using and handling data to reach sound conclusions.

- Who's it for? It's a very easy-to-understand book, and no prior knowledge of the subject is required. Readers familiar with statistics and data analysis will appreciate some of the references. However, the examples the journalist uses to explain each piece of advice make the book an ideal choice for the general public.

Yasmín Belén Quiroga: “Promoting Transparency and Confidence in the Justice System through Gender-Perspective Open Data”; UN Women; Interview (03/24/2023)

The fifth United Nations Sustainable Development Goal (SDG 5) sets the target of achieving gender equality and empowering all women and girls. Open data plays a significant role both in measuring progress towards it and in shaping the measures to achieve it. Lawyer and gender and data specialist Yasmín Belén Quiroga is one of the authors of "Gender-Perspective Open Data and Open Justice," a research project conducted within the framework of the Spotlight Initiative. In this project, she analyzes the experience of the court where she works, which makes all of its resolutions and judgments available digitally.

- What's it about? The lawyer discusses topics such as the importance of having a gender-perspective open data observatory, the role of open justice in social development, and recommendations for ensuring ethical data re-use. It's a light read that takes no more than 5 minutes.

- Who's it for? It may be of interest to anyone curious about the application of open data in the judicial system and gender perspective in the sector.

- For further reading: The United Nations portal has published "Gender-Perspective Open Data and Open Justice: The Experience of Court 10," a research project in which Quiroga participated. It analyzes the importance of having a source of open, accessible data to help tackle problems such as gender inequality.

"The Data Science Handbook: Advice and Insights from 25 Amazing Data Scientists"; Book (2020)

In this book, authored by four professionals in the data field, you'll find 25 interviews with leading American data scientists, including several leaders from major companies.

- What's it about? The book provides firsthand information from experienced data scientists and offers advice for a successful career in the field.

- Who's it for? It's designed for data professionals, whether beginners or more experienced individuals. Each interview offers a professional and personal perspective on the world of data, as well as practical advice.

"10 Breakthrough Technologies 2023"; MIT Technology Review; Article (01/09/2023)

Every year, the world's oldest technology magazine publishes a compilation of the year's most disruptive technological advances. The 2023 list highlights technologies such as gene-editing tools, generative AI and its possibilities, and expanded geospatial data analysis.

- What's it about? It's a list of articles that delve into each technology in depth, discussing its current and future applications, as well as the contributions it can make to society.

- Who's it for? Anyone curious about developments in the world of technology.

Content on data and technology is endless, and the works mentioned above are just a small sample. To enrich this selection, we encourage you to add to this list in the comments. Would you like to recommend a book or article? We're all ears!

On August 1, the Junta de Castilla y León opened the submission period for new open data proposals. With the aim of "recognizing the realization of projects that provide any type of idea, study, service, website or applications for mobile devices, and that use datasets from the Open Data Portal of the Junta de Castilla y León", it has launched a new edition of its open data contest.

The initiative, which has been running since 2016, aims to spark interest in open data and the many economic opportunities associated with it. In doing so, it encourages the creation of services and projects linked to the re-use of public information and to the data economy of Castilla y León.

Projects can be submitted in the different categories set out in the rules (Ideas, Products and Services, Educational Resource and Data Journalism) for two months, until October 2. The application procedure is the same as in previous years: participants can apply in person or electronically. Electronic applications are submitted through the electronic office (sede electrónica) of Castilla y León and can be filed by both individuals and legal entities.

Promoting open data through four differentiated categories

As in previous editions, the projects and associated prizes are divided into four different categories:

Educational Resource: Creation of new, innovative open educational resources (published under Creative Commons licenses) that use datasets from the Junta de Castilla y León's Open Data portal and support classroom teaching. In the 6th edition of the contest, the GeoChef project won in this category, earning its author €1,500.

Products and Services: Projects that provide studies, services, websites or applications for mobile devices using datasets from the Junta de Castilla y León's Open Data portal. In the 2022 edition, the first prize in this category went to 'Oferta de Formación profesional de Castilla y León, una alternativa atractiva y accesible con herramientas no-cod'. Its author won €2,500.

Data Journalism: This category includes journalistic pieces published or substantially updated in any medium (written or audiovisual) that use datasets from the Open Data portal of the Junta de Castilla y León. In the previous edition, Asociación Maldita took first place with the news piece 'Elections 13-F in Castilla y León: there will be 186 fewer polling stations than in the 2019 regional elections'.

Ideas: This includes those projects that describe an idea that can be used to create studies, services, websites or applications for mobile devices. The main requirement they must meet is to use datasets from the Junta de Castilla y León's Open Data portal. Last year the project 'Elige tu Universidad (Castilla y León)' was awarded the first prize of €1,500.

The prizes in this seventh edition carry a total endowment of €12,000, distributed according to category and final ranking.

Ideas category

- First prize: €1,500

- Second prize: €500

Products and services category

- First prize: €2,500

- Second prize: €1,500

- Third prize: €500

- Students prize: €1,500

Educational resource category

- First prize: €1,500

Data journalism category

- First prize: €1,500

- Second prize: €1,000
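The category amounts listed above can be checked against the stated €12,000 total with a quick sum. The following Python sketch does that. The dictionary layout and the helper name `category_totals` are illustrative choices of ours and are not part of the contest rules.

```python
# Prize amounts (in euros) for each category of the seventh edition,
# transcribed from the list of awards above.
PRIZES = {
    "Ideas": {"First prize": 1500, "Second prize": 500},
    "Products and services": {
        "First prize": 2500,
        "Second prize": 1500,
        "Third prize": 500,
        "Students prize": 1500,
    },
    "Educational resource": {"First prize": 1500},
    "Data journalism": {"First prize": 1500, "Second prize": 1000},
}


def category_totals(prizes):
    """Return the total endowment of each category (hypothetical helper)."""
    return {category: sum(awards.values()) for category, awards in prizes.items()}


totals = category_totals(PRIZES)
grand_total = sum(totals.values())

for category, amount in totals.items():
    print(f"{category}: {amount:,} EUR")
print(f"Total: {grand_total:,} EUR")
```

The per-category subtotals are 2,000, 6,000, 1,500 and 2,500 EUR. Together they match the 12,000 EUR endowment announced for this edition.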

As in previous editions of the competition, the final verdict will be issued by a jury whose members have proven experience in open data, information analysis or the digital economy. The jury's decisions will be made by majority vote; in the event of a tie, the president will have the casting vote.

Once the results are announced, the winners will have five working days to accept the award. If a prize is not accepted within this period, it will be deemed to have been waived. The full conditions and legal rules of the contest are available through this link.

Open data is a valuable tool for making informed decisions that help a process succeed and enhance its effectiveness. From a sectoral perspective, open data provides relevant information about areas such as law, education and health. These and many other areas use open sources to measure progress towards their targets or to develop tools that streamline the work of professionals.

The benefits of using open data are extensive, and their variety goes hand in hand with technological innovation: every day, more opportunities arise to employ open data in the development of innovative solutions. An example of this can be seen in urban development aligned with the sustainability values advocated by the United Nations (UN).

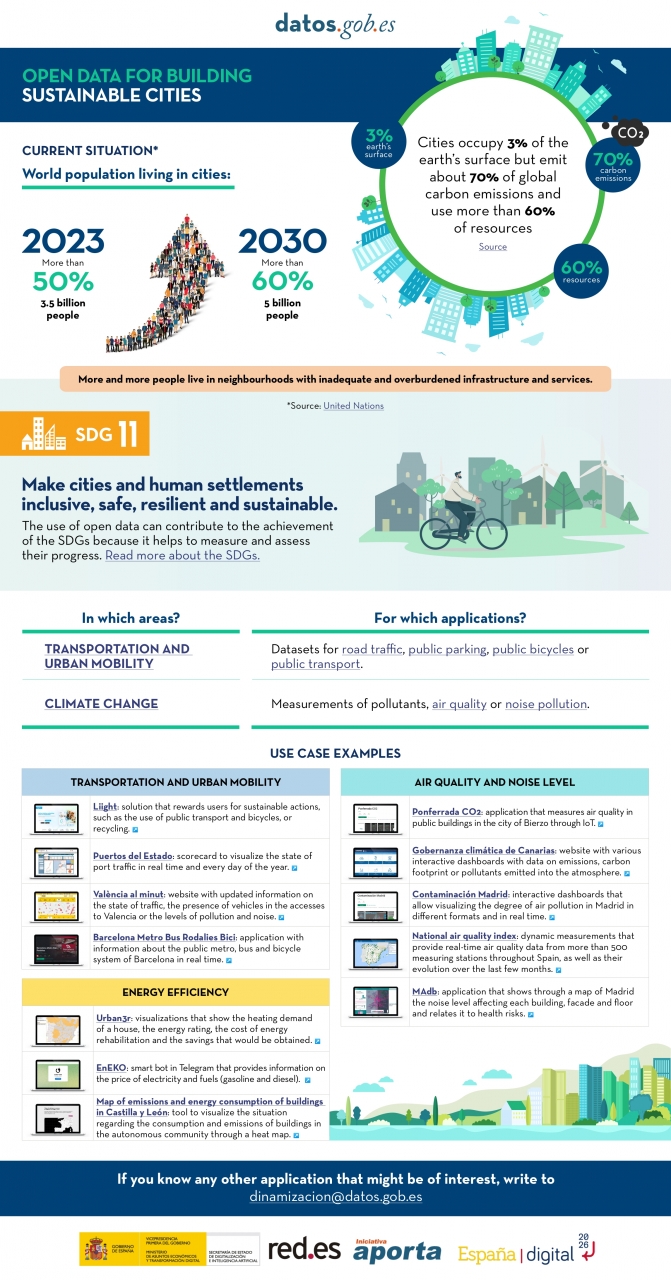

Cities cover only 3% of the Earth's surface; however, according to the UN, they produce 70% of carbon emissions and consume over 60% of the world's resources. As of 2023, more than half of the global population lives in cities, and this share is projected to keep growing. By 2030, it is estimated that over 5 billion people will live in cities, more than 60% of the world's population.

Despite this trend, infrastructures and neighborhoods do not meet the conditions needed for sustainable development. Hence the goal to "Make cities and human settlements inclusive, safe, resilient and sustainable," set out in Sustainable Development Goal (SDG) 11. Proper planning and management of urban resources are key factors in creating and maintaining sustainable communities. In this context, open data plays a crucial role in measuring progress towards this SDG and thus in achieving the goal of sustainable cities.

In conclusion, open data stands as a fundamental tool for the strengthening and progress of sustainable city development.

This infographic gathers use cases that rely on open datasets to monitor or improve energy efficiency, transport and urban mobility, air quality and noise levels. All of these issues contribute to the proper functioning of urban centers.


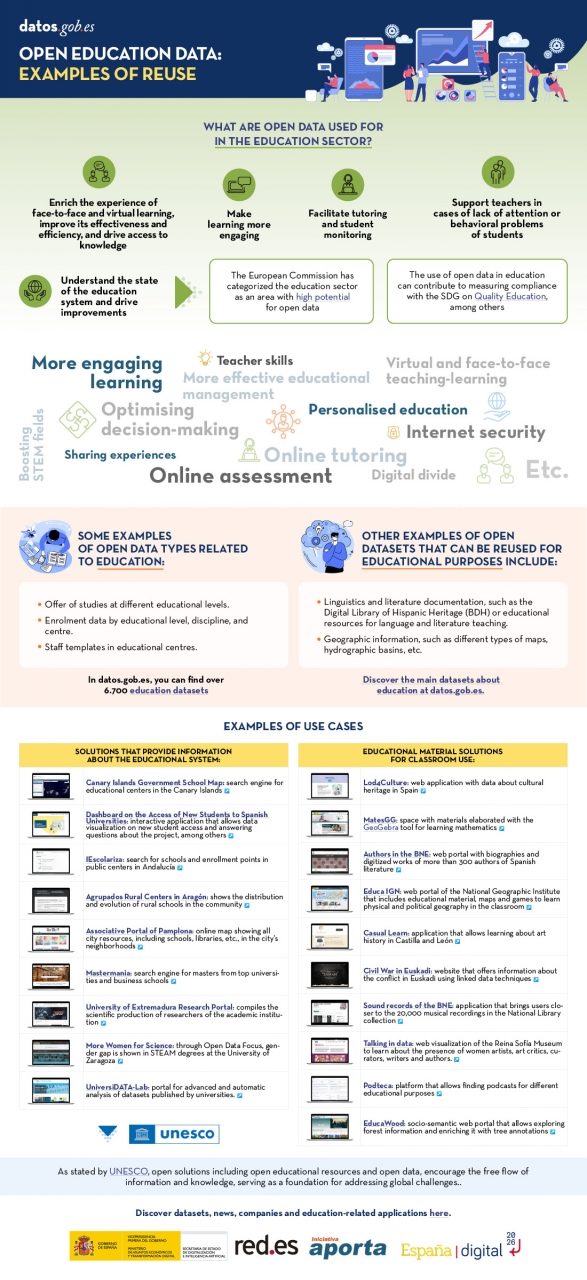

Open solutions, including Open Educational Resources (OER), Open Access to Scientific Information (OA), Free and Open-Source Software (FOSS) and open data, encourage the free flow of information and knowledge and serve as a foundation for addressing global challenges, as UNESCO points out.

The United Nations Educational, Scientific and Cultural Organization (UNESCO) recognizes the value of open data in education and believes that its use can help measure progress towards the Sustainable Development Goals, especially Goal 4, Quality Education. Other international organizations also recognize the potential of open data in education. For example, the European Commission has classified education as a sector with high potential for open data.

Open data can be used as a tool for education and training in different ways. It can be used to develop new educational materials and to collect and analyze information about the state of the education system, which in turn can drive improvements.

The global pandemic marked a milestone in the education field, as the use of new technologies became essential in the teaching and learning process, which became entirely virtual for months. Although the benefits of incorporating ICT and open solutions into education, a trend known as Edtech, had been talked about for years, COVID-19 accelerated this process.

Benefits of Using Open Data in the Classroom

In the following infographic, we summarize the benefits of utilizing open data in education and training, from the perspective of both students and educators, as well as administrators of the education system.

There are many datasets that can be used for developing educational solutions. At datos.gob.es, there are more than 6,700 datasets available, which can be supplemented by others used for educational purposes in different fields, such as literature, geography, history, etc.

Many solutions have been developed using open data for these purposes. We group some of them by purpose: first, solutions that provide information on the education system to help understand its situation and plan new measures; and second, those that offer educational material for use in the classroom.

In essence, open data is a key tool for the strengthening and progress of education, and we must not forget that education is a universal right and one of the main tools for the progress of humanity.

The emergence of artificial intelligence (AI), and ChatGPT in particular, has become one of the main topics of debate in recent months. This tool has even eclipsed other emerging technologies that had gained prominence in a wide range of fields (legal, economic, social and cultural). This is the case, for example, of web 3.0, the metaverse, decentralised digital identity or NFTs and, in particular, cryptocurrencies.

There is an unquestionable, direct relationship between this type of technology and the need for data that is both sufficient and appropriate, and it is precisely this qualitative dimension that explains why open data is called upon to play a particularly important role. Although, at least for the time being, it is not possible to know how much open data provided by public sector entities ChatGPT uses to train its model, there is no doubt that open data is key to improving the performance of these systems.

Regulation on the use of data by AI

From a legal point of view, AI is attracting particular interest with regard to the guarantees that must be respected in its practical application. Various initiatives are therefore being promoted to specifically regulate the conditions for its use. The most notable is the proposal being processed by the European Union (the AI Act), in which data receive special attention.

At the national level, Law 15/2022, of 12 July, on equal treatment and non-discrimination, was approved a few months ago. This regulation requires public administrations to promote mechanisms that include guarantees of bias minimisation, transparency and accountability, specifically with regard to the data used to train the algorithms used for decision-making.

The autonomous communities are also showing growing interest in regulating the use of data by AI systems, in some cases reinforcing transparency guarantees. At the municipal level, too, protocols are being promoted for implementing AI in municipal services, and these protocols treat the guarantees applicable to the data, particularly regarding their quality, as a priority requirement.

The possible collision with other rights and legal interests: the protection of personal data

Beyond regulatory initiatives, the use of data in this context has been the subject of particular attention as regards the legal conditions under which it is admissible. Thus, it may be the case that the data to be used are protected by third party rights that prevent - or at least hinder - their processing, such as intellectual property or, in particular, the protection of personal data. This concern is one of the main motivations for the European Union to promote the Data Governance Regulation, a regulation that proposes technical and organisational solutions that attempt to make the re-use of information compatible with respect for these legal rights.

The possible collision with the right to the protection of personal data is precisely what has motivated the main measures adopted in Europe regarding the use of ChatGPT. The Italian Garante per la Protezione dei Dati Personali ordered a precautionary measure to limit the processing of Italian citizens' data. The Spanish Data Protection Agency initiated ex officio inspections of OpenAI as data controller. At the supranational level, the European Data Protection Board (EDPB) created a specific working group.

The impact of the regulation on open data and re-use

The Spanish regulation on open data and re-use of public sector information contains some provisions that AI systems must take into account. In general, re-use is admissible if the data have been published without conditions or, where conditions are set, if the re-use complies with those established through licences or other legal instruments. When such conditions are defined, however, they must be objective, proportionate, non-discriminatory and justified by a public interest objective.

As regards the conditions for re-using information provided by public sector bodies, processing is only allowed if the content is not altered and its meaning is not distorted. In addition, the source of the data and the date of its most recent update must be cited.

High-value datasets, for their part, are of particular interest to these AI systems. Such systems are characterised by intensive re-use of third-party content, given the massive scale of their data processing and the immediacy of users' requests for information. Specifically, the legal conditions under which public bodies must provide high-value datasets impose very few limitations and greatly facilitate their re-use: the data must be available free of charge, be machine-readable, be provided through APIs and, where appropriate, be available for bulk download.

In short, given the particularities of this technology and the very specific circumstances in which it processes data, it seems appropriate that public entities review the licences and, more generally, the conditions under which they allow re-use. Where appropriate, these should be updated to meet the legal challenges that are beginning to arise.

Content prepared by Julián Valero, Professor at the University of Murcia and Coordinator of the "Innovation, Law and Technology" Research Group (iDerTec).

The contents and points of view reflected in this publication are the sole responsibility of the author.

As more of our daily lives take place online, and as personal data gains importance and value in our society, standards protecting the universal and fundamental rights to privacy and security become increasingly important. These rights are backed by frameworks such as the Universal Declaration of Human Rights and the European Declaration on Digital Rights and Principles.

Today, we are also facing a number of new challenges in relation to our privacy and personal data. According to the latest Lloyd's Register Foundation report, at least three out of four internet users are concerned that their personal information could be stolen or otherwise used without their permission. It is therefore becoming increasingly urgent to ensure that people are in a position to know and control their personal data at all times.

At present, the balance is clearly tilted towards the large platforms that have the resources to collect, trade and make decisions based on our personal data, while individuals can only aspire to gain some control over what happens to their data, usually with a great deal of effort.

This is why initiatives such as MyData Global are emerging. This non-profit organisation has for several years promoted a human-centred approach to personal data management and advocated securing the right of individuals to participate actively in the data economy. Its aim is to redress the balance and move towards a people-centred view of data in order to build a more just, sustainable and prosperous digital society, whose pillars would be:

- Establishing relationships of trust and security between individuals and organisations.

- Achieving data empowerment, not only through legal protection but also through measures to share and distribute the power of data.

- Maximising the collective benefits of personal data, sharing them equitably between organisations, individuals and society.

To bring about the changes needed for this new, more humane approach to personal data, the following principles have been set out:

1 - People-centred control of data

Individuals must have decision-making power over everything that concerns their personal lives. They must have the practical means to understand and effectively control who has access to their data and how it is used and shared.

Privacy, security and minimal use of data should be standard practice in the design of applications, and the conditions of use of personal data should be fairly negotiated between individuals and organisations.

2 - People as the focal point of integration

The value of personal data grows exponentially with its diversity, while the potential threat to privacy grows at the same time. This apparent contradiction could be resolved if we place people at the centre of any data exchange, always focusing on their own needs above all other motivations.

Any use of personal data must revolve around the individual through deep personalisation of tools and services.

3 - Individual autonomy

In a data-driven society, individuals should not be seen solely as customers or users of services and applications. They should be seen as free and autonomous agents, able to set and pursue their own goals.

Individuals should be able to securely manage their personal data in the way they choose, with the necessary tools, skills and support.

4 - Portability, access and re-use

Enabling individuals to obtain and reuse their personal data for their own purposes and in different services is the key to moving from silos of isolated data to data as reusable resources.

Data portability should not merely be a legal right; it should be combined with practical means for individuals to move data effectively, securely and simply to other services or to their personal devices.

5 - Transparency and accountability

Organisations using an individual's data must be transparent about how they use it and for what purpose. At the same time, they must be accountable for their handling of that data, including any security incidents.

User-friendly and secure channels must be created so that individuals can know and control what happens to their data at all times, and thus also be able to challenge decisions based solely on algorithms.

6 - Interoperability

There is a need to minimise friction in the flow of data from the originating sources to the services that use it. This requires incorporating the positive effects of open and interoperable ecosystems, including protocols, applications and infrastructure. This will be achieved through the implementation of common norms and practices and technical standards.

The MyData community has been applying these principles for years in its work to spread a more human-centred vision of data management, processing and use, as it is currently doing for example through its role in the Data Spaces Support Centre, a reference project that is set to define the future responsible use and governance of data in the European Union.

And for those who want to delve deeper into people-centric data use, we will soon have a new edition of the MyData Conference, which this year will focus on showcasing case studies where the collection, processing and analysis of personal data primarily serves the needs and experiences of human beings.

Content prepared by Carlos Iglesias, Open Data Researcher and Consultant, World Wide Web Foundation.

The contents and views expressed in this publication are the sole responsibility of the author.

Books are an inexhaustible source of knowledge and of experiences lived by others before us, which we can reuse to move forward in our own lives. Libraries, therefore, are places where readers look for books, borrow them and, once they have used them and extracted what they need, return them. It is intriguing to imagine the reasons why a reader needs to find a particular book on a particular subject.

When several books meet the required characteristics, which criteria weigh most heavily in choosing the one the reader feels best serves his or her task? And once the loan period is over, the librarians' work of bringing everything back to its initial state is almost magical.

The process of putting books back on the shelves can be repeated indefinitely. It happens both on the huge shelves in the reading rooms, open to all readers, and on the smaller shelves out of sight, where books that for some reason cannot be made publicly available are kept in custody. This process has been going on for centuries, ever since humans began to write and to share their knowledge with contemporaries and across generations.

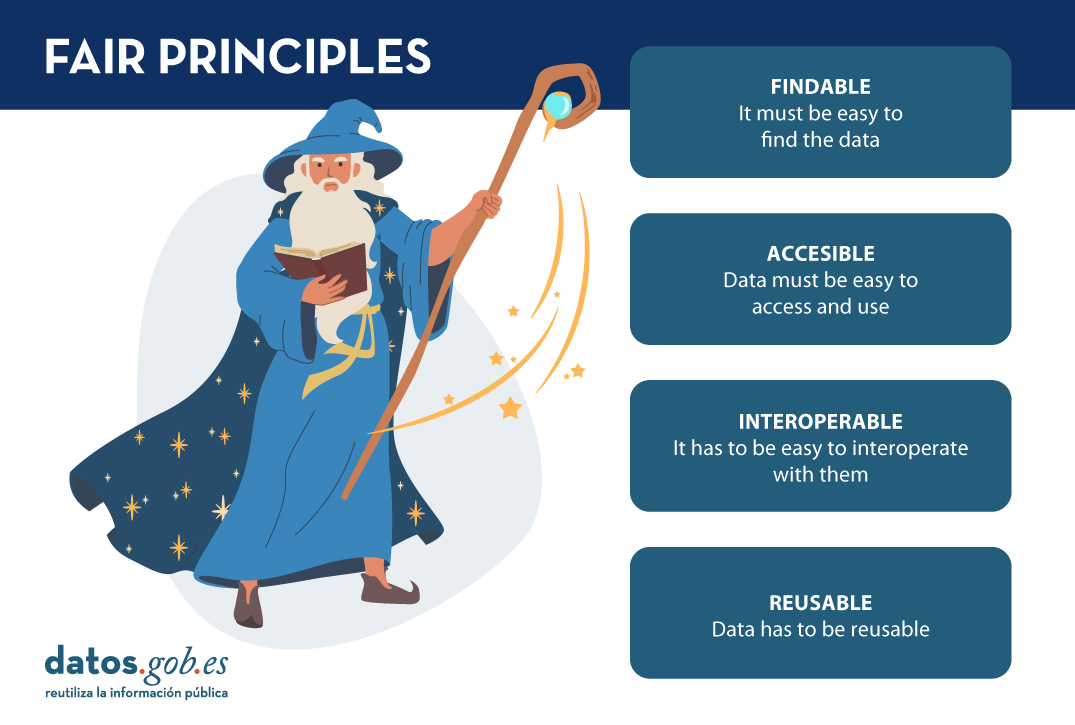

In a sense, data are like books, and data repositories are like libraries: in our daily lives, both professionally and personally, we need data that sit on the "shelves" of numerous "libraries". Some of these data, still very few, are open and can be used freely; others are restricted, and we need permission to use them.

In any case, data contribute to the development of personal and professional projects, which is why data are increasingly understood as the pillar of the new data economy, just as books have been the pillar of knowledge for thousands of years.

As with libraries, in order to choose and use the most appropriate data for our tasks, we need "data librarians to work their magic" and arrange everything so that data are easy to find, access, interoperate and reuse. That is the secret of the "data wizards", something they cryptically call the FAIR principles so that the rest of us humans cannot discover it. However, it is always possible to give a few clues so that we can make better use of their magic:

- It must be easy to find the data. This is the "F" in FAIR, for "findable". To achieve this, the data must be described by an adequate set of metadata so that they can be easily searched. Just as libraries put a spine label on each book, data need their own label. The "data wizards" have to find ways of writing these labels so that the data are easy to locate, on the one hand, and provide tools (such as search engines) so that users can search for them, on the other. We users, for our part, need to understand what the different labels mean and how the search tools work (it is impossible not to recall here the protagonists of Dan Brown's "Angels and Demons" searching the Vatican Library).

- Once you have located the data you intend to use, they must be easy to access and use. This is the "A" in FAIR, for "accessible". Just as you have to become a member and get a library card to borrow a book, to access data you need a licence that allows you to do so. Ideally, any book could be borrowed without prior restrictions, as is the case with open data licensed under CC BY 4.0 or equivalent. But being a member of the "data library" does not necessarily give you access to the whole library: for certain data kept on those guarded shelves, out of everyone's sight, you may need special permissions (it is impossible not to recall here Umberto Eco's "The Name of the Rose").

- It is not enough to be able to access the data: it must also be easy to interoperate with them, which means understanding their meaning and descriptions. This is the "I" for "interoperable" in FAIR. The "data wizards" therefore have to use appropriate techniques to ensure that the data are described in a way that can be understood and used in each user's own context; although, on many occasions, it will be the users who have to adapt in order to work with the data (impossible not to recall the elvish runes in J.R.R. Tolkien's "The Lord of the Rings").

- Finally, data, like books, has to be reusable to help others again and again to meet their own needs. Hence the "R" for "reusable" in FAIR. To do this, the "data wizards" have to set up mechanisms to ensure that, after use, everything can be returned to that initial state, which will be the starting point from which others will begin their own journeys.
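To make the "findable" and "accessible" clues a little more concrete, here is a minimal Python sketch of a toy data catalogue: each dataset carries a metadata "label" (title, description, keywords, licence, access level), and a simple search tool matches a term against those labels, optionally skipping the "restricted shelves". The `DatasetRecord` fields, the `find` helper and the catalogue entries are all hypothetical and invented for illustration; real catalogues typically describe datasets with a standard vocabulary such as W3C DCAT and offer much richer search.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    """Hypothetical, simplified metadata record: the dataset's "spine label"."""
    identifier: str
    title: str
    description: str
    keywords: list[str] = field(default_factory=list)
    license: str = "unknown"   # e.g. "CC-BY-4.0" for openly licensed data
    access: str = "open"       # "open" or "restricted" (needs permission)


def find(catalogue, term, open_only=False):
    """Return records whose title, description or keywords mention `term`.

    The match is a case-insensitive substring search. With open_only=True,
    records on the "restricted shelves" are skipped.
    """
    term = term.lower()
    hits = []
    for record in catalogue:
        searchable = " ".join([record.title, record.description, *record.keywords]).lower()
        if term in searchable and (not open_only or record.access == "open"):
            hits.append(record)
    return hits


# Illustrative catalogue: every entry here is invented for this example.
catalogue = [
    DatasetRecord("ds-001", "Daily rainfall by station",
                  "Precipitation measured at public weather stations",
                  ["rainfall", "weather"], "CC-BY-4.0", "open"),
    DatasetRecord("ds-002", "Farm sensor readings",
                  "Soil moisture and rainfall from private farm sensors",
                  ["agriculture"], "proprietary", "restricted"),
    DatasetRecord("ds-003", "Road network",
                  "Roads and tracks digitised by volunteers",
                  ["transport"], "ODbL-1.0", "open"),
]

print([r.identifier for r in find(catalogue, "rainfall")])                  # ['ds-001', 'ds-002']
print([r.identifier for r in find(catalogue, "rainfall", open_only=True)])  # ['ds-001']
```

The second search shows the "accessible" side of the metaphor: a dataset can be findable through its label and still sit on a shelf that requires permission before it can be used.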

As our society moves into the digital economy, our data needs are changing. It is not that we need more data, but that we need to handle differently the data that is held, the data that is produced and the data that is made available to users. We also need to be more respectful of the data that is generated and of how we use it, so that we do not violate the rights and freedoms of citizens. In short, we face new challenges that require new solutions. This forces our "data wizards" to perfect their tricks while always keeping the essence of their magic, i.e. the FAIR principles.

Recently, at the end of February 2023, an assembly of these data wizards took place, where they discussed how to revise the FAIR principles to perfect their magic tricks for scenarios as relevant as European data spaces and geospatial data, and even how to measure how well the FAIR principles are being applied to these new challenges. If you want to see what they talked about, you can watch the videos and consult the materials at the following link: https://www.go-peg.eu/2023/03/07/go-peg-final-workshop-28-february-20203-1030-1300-cet/

Content prepared by Dr. Ismael Caballero, Lecturer at UCLM

The contents and views reflected in this publication are the sole responsibility of the author.

The humanitarian crisis following the 2010 earthquake in Haiti was the starting point for a voluntary initiative to create maps that identified the level of damage and vulnerability in each area, and thus helped to coordinate emergency teams. Since then, the collaborative mapping project known as the Humanitarian OpenStreetMap Team (Hot OSM) has played a key role in crisis situations and natural disasters.

Today, the organisation has evolved into a global network of volunteers who contribute their online mapping skills to help in crisis situations around the world. The initiative is an example of data-driven collaboration to solve societal problems, a theme we explore in this data.gob.es report.

Hot OSM works to accelerate data-driven collaboration with humanitarian and governmental organisations, as well as local communities and volunteers around the world, to provide accurate and detailed maps of areas affected by natural disasters or humanitarian crises. These maps are used to help coordinate emergency response, identify needs and plan for recovery.

In its work, Hot OSM prioritises collaboration with, and empowerment of, local communities. The organisation works to ensure that people living in affected areas have a voice and real influence in the mapping process. In practice, this means that Hot OSM works closely with local communities to make sure that the areas that matter to them are mapped. In this way, the needs of communities are taken into account when emergency response and recovery are planned.

Hot OSM's educational work

In addition to its work in crisis situations, Hot OSM is dedicated to promoting access to free and open geospatial data, and works in collaboration with other organisations to build tools and technologies that enable communities around the world to harness the power of collaborative mapping.

Through its online platform, Hot OSM provides free access to a wide range of tools and resources to help volunteers learn and participate in collaborative mapping. The organisation also offers training for those interested in contributing to its work.

One example of a HOT project is the work the organisation carried out during the Ebola epidemic in West Africa. In 2014, an Ebola outbreak affected several West African countries, including Sierra Leone, Liberia and Guinea. The lack of accurate and detailed maps of these areas made it difficult to coordinate the emergency response.

In response to this need, HOT initiated a collaborative mapping project involving more than 3,000 volunteers worldwide. Volunteers used online tools to map Ebola-affected areas, including roads, villages and treatment centres.

This mapping allowed humanitarian workers to better coordinate the emergency response, identify high-risk areas and prioritize resource allocation. In addition, the project also helped local communities to better understand the situation and participate in the emergency response.

This case in West Africa is just one example of HOT's work around the world to assist in humanitarian crisis situations. The organisation has worked in a variety of contexts, including earthquakes, floods and armed conflict, and has helped provide accurate and detailed maps for emergency response in each of these contexts.

The platform is also active in areas with no map coverage, as is the case in many African countries. In these areas, humanitarian aid projects are often very challenging in their early stages, because it is very difficult to estimate how many people live in an area and where they are located. Recording where these people live and showing the routes to reach them "puts them on the map" and allows them to gain access to resources.

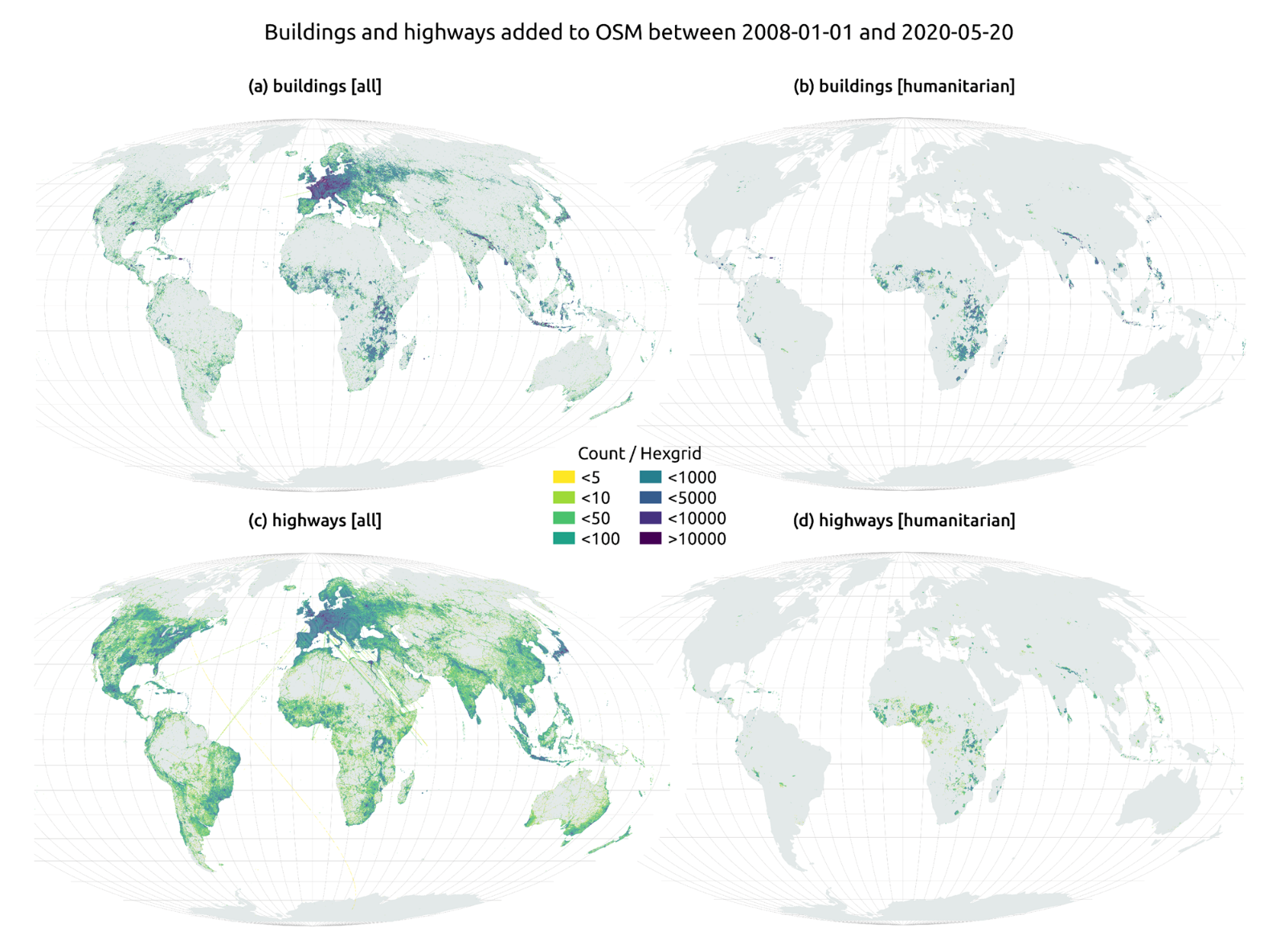

The article "The evolution of humanitarian mapping within the OpenStreetMap community", published by Nature, shows some of the platform's achievements graphically.

How to collaborate

It is easy to start collaborating with Hot OSM: just go to https://tasks.hotosm.org/explore and browse the open projects that need help.

This screen offers many options for searching projects, which can be filtered by difficulty level, organisation, location or interests, among others.

To participate, simply click on the Register button.

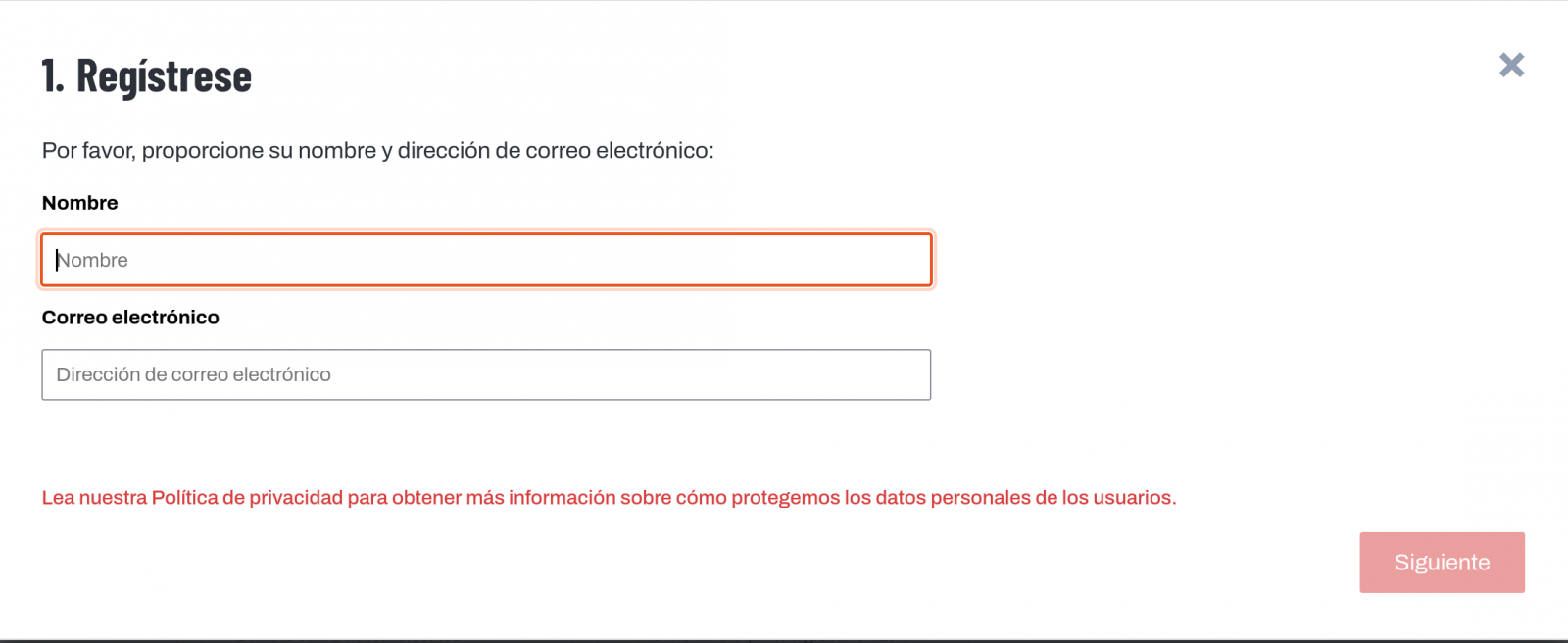

Enter a name and an e-mail address on the next screen:

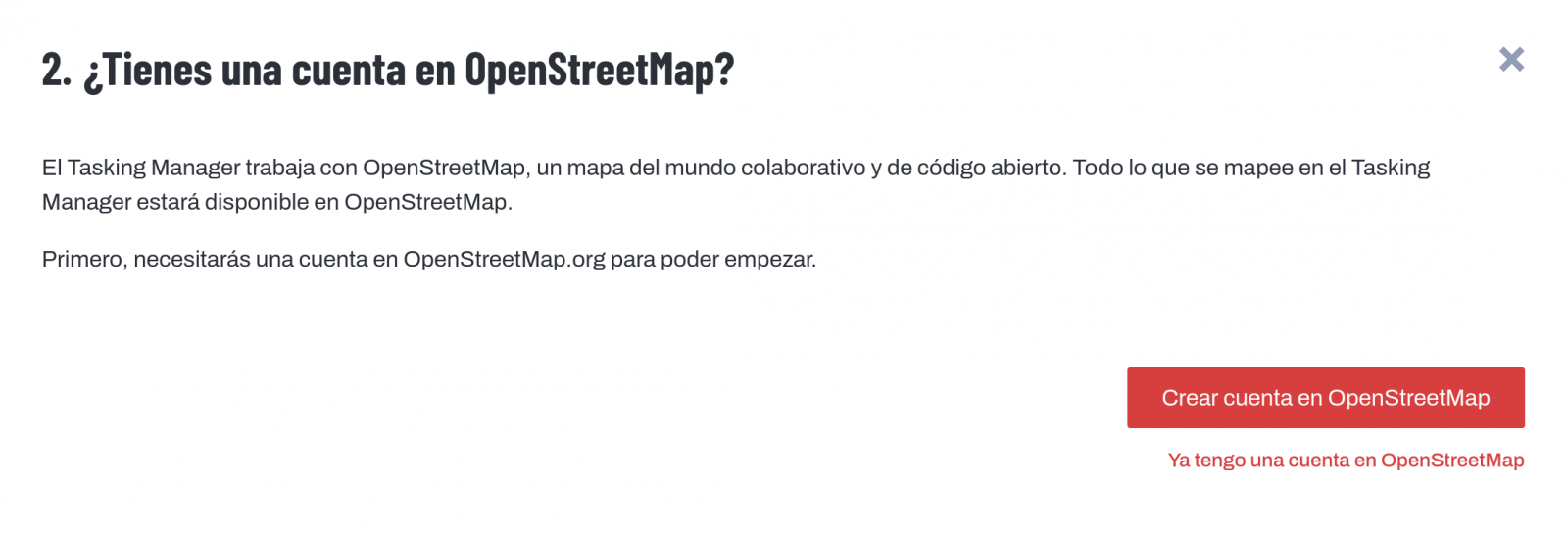

We will then be asked whether we already have an OpenStreetMap account or want to create one.

If we want to see the process in more detail, this website makes it very easy.

Once the account has been created, the learning page provides help on how to participate in the project.

It is important to note that volunteers' contributions are reviewed: a second tier of volunteers, the validators, checks and validates the work of beginners. While developing the tool, the HOT team has taken great care to make it user-friendly, so that its use is not limited to people with computer skills.

In addition, organisations such as the Red Cross and the United Nations regularly organise mapathons that bring groups of people together for specific projects or teach new volunteers how to use the tool. Above all, these meetings help new users overcome their fear of "breaking something" and let them see how their voluntary work serves concrete purposes and helps other people.

Another of the project's great strengths is that it is based on free software that can be reused. The code is available in the Missing Maps project's GitHub repository, and if we want to create a community based on the software, the Missing Maps organisation facilitates the process and gives visibility to our group.

In short, Hot OSM is a citizen science and data altruism project that benefits society by developing collaborative maps that are very useful in emergency situations. This type of initiative is aligned with the European approach to data governance, which seeks to encourage data altruism, that is, making data available voluntarily for the common good.

Content by Santiago Mota, senior data scientist.

The contents and views reflected in this publication are the sole responsibility of the author.