1. Introduction

Data visualization is a task linked to data analysis that aims to represent graphically the information underlying the data. Visualizations play a fundamental role in the communication function of data, since they allow conclusions to be drawn in a visual and understandable way, and also make it possible to detect patterns, trends and anomalous data or to project predictions, among other functions. This makes visualization applicable to any process in which data intervenes. The visualization possibilities are numerous, from basic representations such as line graphs, bar charts or pie charts, to complex visualizations configured as interactive dashboards.

Before we start to build an effective visualization, we must carry out a pre-treatment of the data, paying attention to how to obtain them and validating the content, ensuring that they do not contain errors and are in an adequate and consistent format for processing. Pre-processing of data is essential to start any data analysis task that results in effective visualizations.

A series of practical data visualization exercises based on open data available on the datos.gob.es portal or other similar catalogues will be presented periodically. They will address and describe, in a simple way, the stages necessary to obtain the data and to perform the transformations and analyses that are relevant for the creation of interactive visualizations, from which we will be able to extract the maximum amount of information, summarized in the final conclusions. In each of the exercises, simple and adequately documented code will be used, as well as free and open tools. All generated material will be available for reuse in the Data Lab repository on GitHub.

Visualization of the teaching staff of Castilla y León classified by Province, Locality and Teaching Specialty

2. Objectives

The main objective of this post is to learn how to treat a dataset from its download to the creation of one or more interactive graphs. For this, datasets containing relevant information on teachers and students enrolled in public schools in Castilla y León during the 2019-2020 academic year have been used. Based on these data, several indicators are analysed that relate teachers, specialties and students enrolled in the centers of each province or locality of the autonomous community.

3. Resources

3.1. Datasets

For this study, datasets on Education published by the Junta de Castilla y León have been selected, available on the open data portal datos.gob.es. Specifically:

- Dataset of the legal figures of the public centers of Castilla y León of all the teaching positions, except for the schoolteachers, during the academic year 2019-2020. This dataset is disaggregated by specialty of the teacher, educational center, town and province.

- Dataset of student enrolments in schools during the 2019-2020 academic year. This dataset is obtained through a query that supports different configuration parameters. Instructions for doing this are available at the dataset download point. The dataset is disaggregated by educational center, town and province.

3.2. Tools

To carry out this analysis (work environment setup, programming and writing), the Python programming language (version 3.7) and JupyterLab (version 2.2) have been used. These tools are integrated in Anaconda, one of the most popular platforms to install, update and manage software for Data Science. All these tools are open and available for free.

JupyterLab is a web-based user interface that provides an interactive development environment where the user can work with so-called Jupyter notebooks on which you can easily integrate and share text, source code and data.

To create the interactive visualization, the Kibana tool (version 7.10) has been used.

Kibana is an open source application that is part of the Elastic Stack product suite (Elasticsearch, Logstash, Beats and Kibana). It provides visualization and exploration capabilities for data indexed on top of the Elasticsearch analytics engine.

If you want to know more about these tools or others that can help you in the treatment and visualization of data, you can see the recently updated "Data Processing and Visualization Tools" report.

4. Data processing

As a first step of the process, it is necessary to perform an exploratory data analysis (EDA) to properly interpret the starting data, detect anomalies, missing data or errors that could affect the quality of subsequent processes and results. Pre-processing of data is essential to ensure that analyses or visualizations subsequently created from it are consistent and reliable.

Due to the informative nature of this post and to favor the understanding of non-specialized readers, the code is not intended to be the most efficient, but the easiest to understand. So you will probably come up with many ways to optimize the proposed code to get similar results. We encourage you to do so! You will be able to reproduce this analysis since the source code is available in our GitHub account. The code is provided as a JupyterLab notebook that, once loaded into the development environment, you can easily execute or modify.

4.1. Installation and loading of libraries

The first thing we must do is import the libraries for the pre-processing of the data. There are many libraries available in Python, but one of the most popular and suitable for manipulating and analyzing datasets like these is Pandas.

import pandas as pd

4.2. Loading datasets

First, we download the datasets from the open data catalog datos.gob.es and load them into our development environment as tables to explore them and perform some basic data cleaning and processing tasks. To load the data we use the function read_csv(), where we indicate the download URL of the dataset and the delimiter (";" in this case), and we set the parameter "encoding" to the value "latin-1" so that it correctly interprets special characters, such as accented letters or "ñ", present in the text strings of the dataset.
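As a minimal sketch of what these read_csv() arguments do, the following example reads a small in-memory sample instead of the real file. The sample rows and column values are made up for illustration; only the arguments (delimiter, header, encoding) come from the text.

```python
import io

import pandas as pd

# Made-up sample that mimics a semicolon-delimited file encoded as latin-1.
raw = "Provincia;Localidad;Plantilla\nLeón;Ponferrada;12\nÁvila;Arévalo;7\n".encode("latin-1")

# Same arguments as described in the text: delimiter, header row and encoding.
sample = pd.read_csv(io.BytesIO(raw), delimiter=";", header=0, encoding="latin-1")

print(sample)
```

If the encoding did not match the bytes of the file, accented characters would either be garbled or raise a decoding error, which is why it is worth setting it explicitly.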

# Load the dataset of the legal staffing figures of the public centers of Castilla y León for all teaching bodies, except schoolteachers
url = "https://datosabiertos.jcyl.es/web/jcyl/risp/es/educacion/plantillas-centros-educativos/1284922684978.csv"
docentes = pd.read_csv(url, delimiter=";", header=0, encoding="latin-1")
docentes.head(3)

# Load the dataset of the students enrolled in the public schools of Castilla y León
alumnos = pd.read_csv("matriculaciones.csv", delimiter=",", names=["Municipio", "Matriculaciones"], encoding="latin-1")
alumnos.head(3)

The column "Municipio" of the table "alumnos" is composed of the code of the municipality followed by its name. We must divide this column in two, so that its treatment is more efficient.
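To see why the split should be limited to a single cut, here is a small sketch on made-up values. The codes and names below are invented, and the "code, space, name" layout is an assumption based on the description above. With n=1, only the first space is used as a separator, so municipality names made of several words stay in one piece.

```python
import pandas as pd

# Invented values following the assumed "code name" layout.
demo = pd.DataFrame({"Municipio": ["11111 Aldea del Ejemplo", "22222 Villanueva"]})

# n=1 splits at the first space only; expand=True returns the parts as columns.
partes = demo["Municipio"].str.split(" ", n=1, expand=True)
demo["Codigo_Municipio"] = partes[0]
demo["Nombre_Municipio"] = partes[1]

print(demo)
```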

# Split at the first space only: municipality code on the left, name on the right
columnas_Municipios = alumnos["Municipio"].str.split(" ", n=1, expand=True)
alumnos["Codigo_Municipio"] = columnas_Municipios[0]
alumnos["Nombre_Municipio"] = columnas_Municipios[1]
alumnos.head(3)

4.3. Creating a new table

Once we have both tables with the variables of interest, we create a new table resulting from their union. The union variables will be "Localidad" in the table "docentes" and "Nombre_Municipio" in the table "alumnos".
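A union on text keys silently drops the rows whose names do not match exactly in both tables. The following sketch, on invented toy tables, shows one possible way to check this with the `how` and `indicator` parameters of pd.merge() before relying on the default inner join. The table contents are hypothetical; only the column names come from the text.

```python
import pandas as pd

# Invented toy tables that reuse the column names from the text.
docentes_demo = pd.DataFrame({"Localidad": ["A", "B", "C"], "Plantilla": [10, 4, 6]})
alumnos_demo = pd.DataFrame({"Nombre_Municipio": ["A", "B", "D"], "Matriculaciones": [200, 80, 50]})

# An outer join with indicator=True labels each row as "both", "left_only" or "right_only".
check = pd.merge(docentes_demo, alumnos_demo,
                 left_on="Localidad", right_on="Nombre_Municipio",
                 how="outer", indicator=True)
unmatched = check[check["_merge"] != "both"]

# The default (inner) join keeps only the localities present in both tables.
inner = pd.merge(docentes_demo, alumnos_demo,
                 left_on="Localidad", right_on="Nombre_Municipio")

print(unmatched)
print(len(inner))
```

If the unmatched rows are numerous, differences in spelling, accents or capitalization between the two sources are a likely cause worth inspecting.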

docentes_alumnos = pd.merge(docentes, alumnos, left_on="Localidad", right_on="Nombre_Municipio")
docentes_alumnos.head(3)

4.4. Exploring the dataset

Once we have the table that interests us, we must spend some time exploring the data and interpreting each variable. In these cases, it is very useful to have the data dictionary that always accompanies each downloaded dataset to know all its details, but this time we do not have this essential tool. Observing the table, in addition to interpreting the variables that make it up (data types, units, ranges of values), we can detect possible errors such as mistyped variables or the presence of missing values (NAs) that can reduce analysis capacity.

docentes_alumnos.info()

In the output of this section of code, we can see the main characteristics of the table:

- Contains a total of 4,512 records

- It is composed of 13 variables: 5 numerical variables (integer type) and 8 categorical variables ("object" type)

- There are no missing values.
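The characteristics listed above can also be checked programmatically. The sketch below uses an invented toy table (with one missing value added on purpose) to show how the data types and the number of missing values per column could be obtained; it is an illustration, not the output for the real dataset.

```python
import pandas as pd

# Invented toy table; the None simulates a missing value.
demo = pd.DataFrame({
    "Codigo_centro": [101, 102, 103],
    "Localidad": ["A", "B", None],
    "Plantilla": [10, 4, 6],
})

# Data type of each column.
print(demo.dtypes)

# Number of missing values per column.
missing_per_column = demo.isna().sum()
print(missing_per_column)
```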

Once we know the structure and content of the table, we must rectify errors, such as variables that are not properly typed, for example the variable that holds the center code ("Código.centro").

# Codes are identifiers, not quantities, so we store them as "object" instead of integers
docentes_alumnos.Codigo_centro = docentes_alumnos.Codigo_centro.astype("object")
docentes_alumnos.Codigo_cuerpo = docentes_alumnos.Codigo_cuerpo.astype("object")
docentes_alumnos.Codigo_especialidad = docentes_alumnos.Codigo_especialidad.astype("object")

Once we have the table free of errors, we obtain a description of the numerical variables, "Plantilla" and "Matriculaciones", which will help us to know important details. In the output of the code below we can observe the mean, the standard deviation, and the maximum and minimum values, among other statistical descriptors.

docentes_alumnos.describe()

4.5. Save the dataset

Once we have the table free of errors and with the variables that we are interested in graphing, we will save it in a folder of our choice to use it later in other analysis or visualization tools. We will save it in CSV format encoded as UTF-8 (Unicode Transformation Format) so that special characters are correctly identified by any tool we might use later.
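As a quick sanity check of the encoding choice, a table can be written as UTF-8 and read back to confirm that special characters survive the round trip. The sketch below uses invented values and a temporary folder, both chosen only for illustration.

```python
import os
import tempfile

import pandas as pd

# Invented values containing accented letters and "ñ".
demo = pd.DataFrame({"Localidad": ["León", "Arévalo", "Peñafiel"], "Plantilla": [12, 7, 3]})

# Write to a temporary folder as UTF-8, without the row index.
path = os.path.join(tempfile.mkdtemp(), "demo.csv")
demo.to_csv(path, index=False, encoding="utf-8")

# Read it back with the same encoding and compare the text column.
restored = pd.read_csv(path, encoding="utf-8")
print(restored["Localidad"].tolist() == demo["Localidad"].tolist())
```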

df = pd.DataFrame(docentes_alumnos)
filename = "docentes_alumnos.csv"
df.to_csv(filename, index=False, encoding="utf-8")

5. Creation of the visualization on the teachers of the public educational centers of Castilla y León using the Kibana tool

For the realization of this visualization, we have used the Kibana tool in our local environment. To do this it is necessary to have Elasticsearch and Kibana installed and running. The company Elastic makes all the information about the download and installation available in this tutorial.

Attached below are two video tutorials, which show the process of creating the visualization and the interaction with the generated dashboard.

In this first video, you can see the creation of the dashboard by generating different graphic representations, following these steps:

- We load the table of previously processed data into Elasticsearch and generate an index that allows us to interact with the data from Kibana. This index allows search and management of data, practically in real time.

- Generation of the following graphical representations:

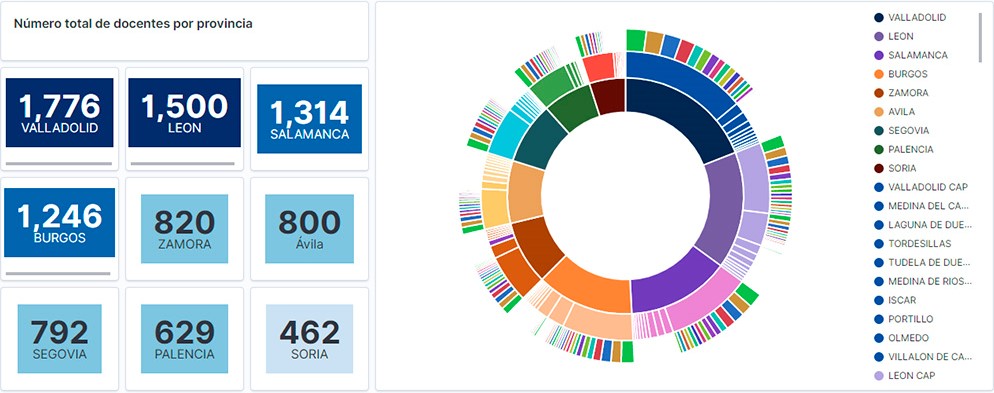

- Pie chart showing the teaching staff by province, locality and specialty.

- Metrics of the number of teachers by province.

- Bar chart, where we will show the number of registrations by province.

- Filter by province, locality and teaching specialty.

- Construction of the dashboard.
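The first step in the list above, loading the processed table into Elasticsearch, is done in the video through the tool itself. As a possible alternative, not the method shown in the video, the rows of the table could be turned into indexing actions in Python. The sketch below only builds the actions; the index name `docentes_alumnos`, the helper `to_bulk_actions` and the toy rows are hypothetical, and sending the actions assumes the official `elasticsearch` Python package and a cluster running locally.

```python
import pandas as pd


def to_bulk_actions(df, index_name):
    """Yield one indexing action per row, in the dict shape used by elasticsearch.helpers.bulk."""
    for record in df.to_dict(orient="records"):
        yield {"_index": index_name, "_source": record}


# Invented toy rows; the index name is a hypothetical choice.
demo = pd.DataFrame({"Provincia": ["Valladolid", "Soria"], "Plantilla": [10, 4]})
actions = list(to_bulk_actions(demo, "docentes_alumnos"))
print(actions[0])

# With a running cluster and the `elasticsearch` package installed (assumptions),
# the actions could then be sent like this:
# from elasticsearch import Elasticsearch, helpers
# helpers.bulk(Elasticsearch("http://localhost:9200"), actions)
```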

In this second video, you will be able to observe the interaction with the dashboard generated previously.

6. Conclusions

Observing the visualization of the data on the number of teachers in public schools in Castilla y León, in the academic year 2019-2020, the following conclusions can be obtained, among others:

- The province of Valladolid has both the largest number of teachers and the largest number of students enrolled, while Soria has the lowest number of both.

- As expected, the localities with the highest number of teachers are the provincial capitals.

- In all provinces, the specialty with the highest number of teachers is English, followed by Spanish Language and Literature and Mathematics.

- It is striking that the province of Zamora, although it has a low number of enrolled students, is in fifth position in the number of teachers.
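Rankings like the ones above are read from the dashboard, but they could also be cross-checked in pandas. The sketch below uses invented toy numbers, not the real figures. It assumes that, after the union, the enrolment value of a locality is repeated on every teacher row of that locality, so localities are de-duplicated before adding up enrolments.

```python
import pandas as pd

# Invented toy rows: one row per locality and specialty, enrolments repeated per locality.
demo = pd.DataFrame({
    "Provincia": ["P1", "P1", "P1", "P2", "P2"],
    "Localidad": ["L1", "L1", "L2", "L3", "L3"],
    "Plantilla": [5, 4, 2, 3, 1],
    "Matriculaciones": [300, 300, 100, 150, 150],
})

# Teachers per province: a plain sum over all rows.
teachers = demo.groupby("Provincia")["Plantilla"].sum().sort_values(ascending=False)

# Students per province: keep one row per locality first to avoid counting enrolments twice.
students = (
    demo.drop_duplicates(subset=["Provincia", "Localidad"])
    .groupby("Provincia")["Matriculaciones"]
    .sum()
)

print(teachers)
print(students)
```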

This simple visualization has helped us to synthesize a large amount of information and to obtain a series of conclusions at a glance, and if necessary, make decisions based on the results obtained. We hope you have found this new post useful and we will return to show you new reuses of open data. See you soon!

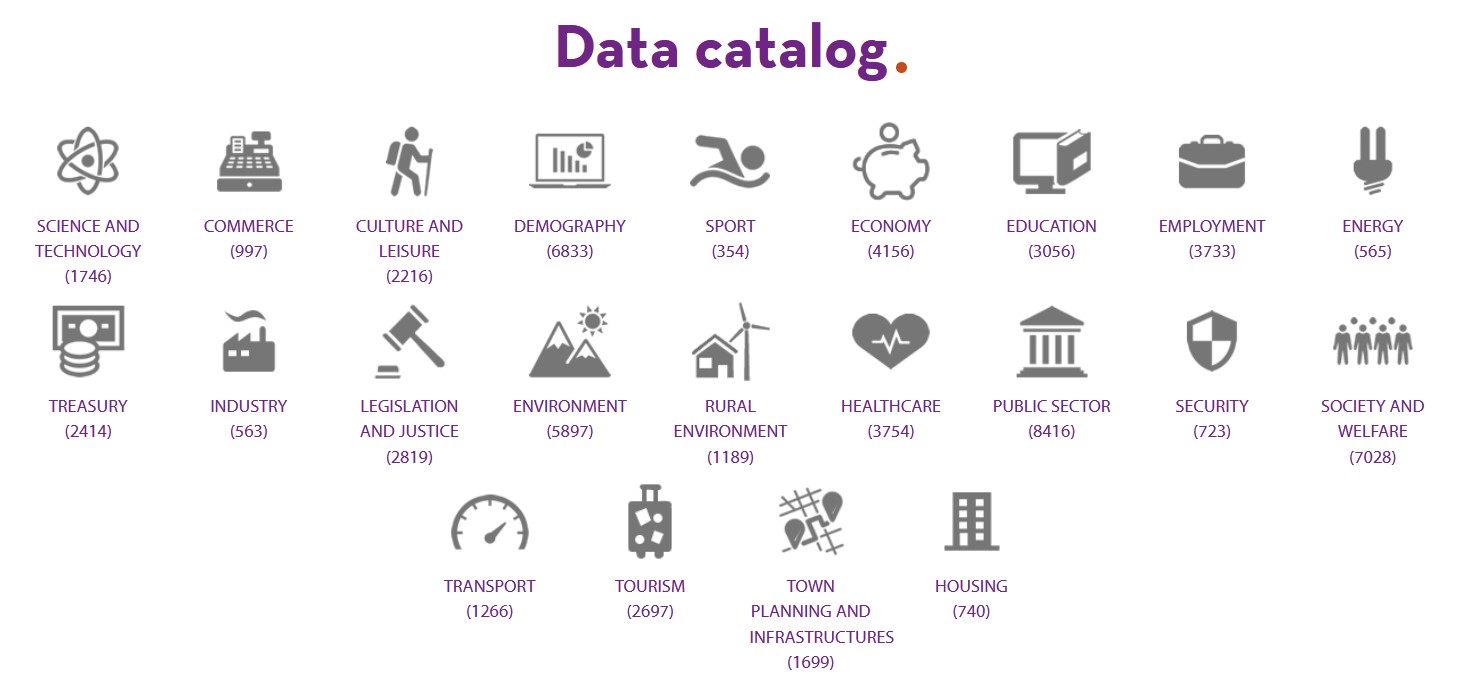

A symptom of the maturity of an open data ecosystem is the possibility of finding datasets and use cases across different sectors of activity. This is considered by the European Open Data Portal itself in its maturity index. The classification of data and their uses by thematic categories boosts re-use by allowing users to locate and access them in a more targeted way. It also allows needs in specific areas to be detected, priority sectors to be identified and impact to be estimated more easily.

In Spain we find different thematic repositories, such as UniversiData, in the case of higher education, or TURESPAÑA, for the tourism sector. However, the fact that the competences of certain subjects are distributed among the Autonomous Communities or City Councils complicates the location of data on the same subject.

Datos.gob.es brings together the open data of all the Spanish public bodies that have carried out a federation process with the portal. Therefore, in our catalogue you can find datasets from different publishers segmented by 22 thematic categories, those considered by the Technical Interoperability Standard.

Number of datasets by category as of June 2021

But in addition to showing the datasets divided by subject area, it is also important to show highlighted datasets, use cases, guides and other help resources by sector, so that users can more easily access content related to their areas of interest. For this reason, at datos.gob.es we have launched a series of web sections focused on different sectors of activity, with specific content for each area.

4 sectorial sections that will be gradually extended to other areas of interest

Currently in datos.gob.es you can find 4 sectors: Environment, Culture and leisure, Education and Transport. These sectors have been highlighted for different strategic reasons:

- Environment: Environmental data are essential to understand how our environment is changing in order to fight climate change, pollution and deforestation. The European Commission itself considers environmental data to be highly valuable data in Directive 2019/1024. At datos.gob.es you can find data on air quality, weather forecasting, water scarcity, etc. All of them are essential to promote solutions for a more sustainable world.

- Transport: Directive 2019/1024 also highlights the importance of transport data. Often in real time, this data facilitates decision-making aimed at efficient service management and improving the passenger experience. Transport data are among the most widely used data to create services and applications (e.g. those that inform about traffic conditions, bus timetables, etc.). This category includes datasets such as real-time traffic incidents or fuel prices.

- Education: With the advent of COVID-19, many students had to follow their studies from home, using digital solutions that were not always ready. In recent months, through initiatives such as the Aporta Challenge, an effort has been made to promote the creation of solutions that incorporate open data in order to improve the efficiency of the educational sphere, drive improvements - such as the personalisation of education - and achieve more universal access to knowledge. Some of the education datasets that can be found in the catalogue are the degrees offered by Spanish universities or surveys on household spending on education.

- Culture and leisure: Culture and leisure data is a category of great importance when it comes to reusing it to develop, for example, educational and learning content. Cultural data can help generate new knowledge to help us understand our past, present and future. Examples of datasets are the location of monuments or listings of works of art.

Structure of each sector

Each sector page has a homogeneous structure, which facilitates the location of contents also available in other sections.

It starts with a highlight where you can see some examples of outstanding datasets belonging to this category, and a link to access all the datasets of this subject in the catalogue.

It continues with news related to the data and the sector in question, which can range from events or information on specific initiatives (such as Procomún in the field of educational data or the Green Deal in the environment) to the latest developments at strategic and operational level.

Finally, there are three sections related to use cases: innovation, reusing companies and applications. In the first section, articles provide examples of innovative uses, often linked to disruptive technologies such as Artificial Intelligence. In the last two sections, we find specific files on companies and applications that use open data from this category to generate a benefit for society or the economy.

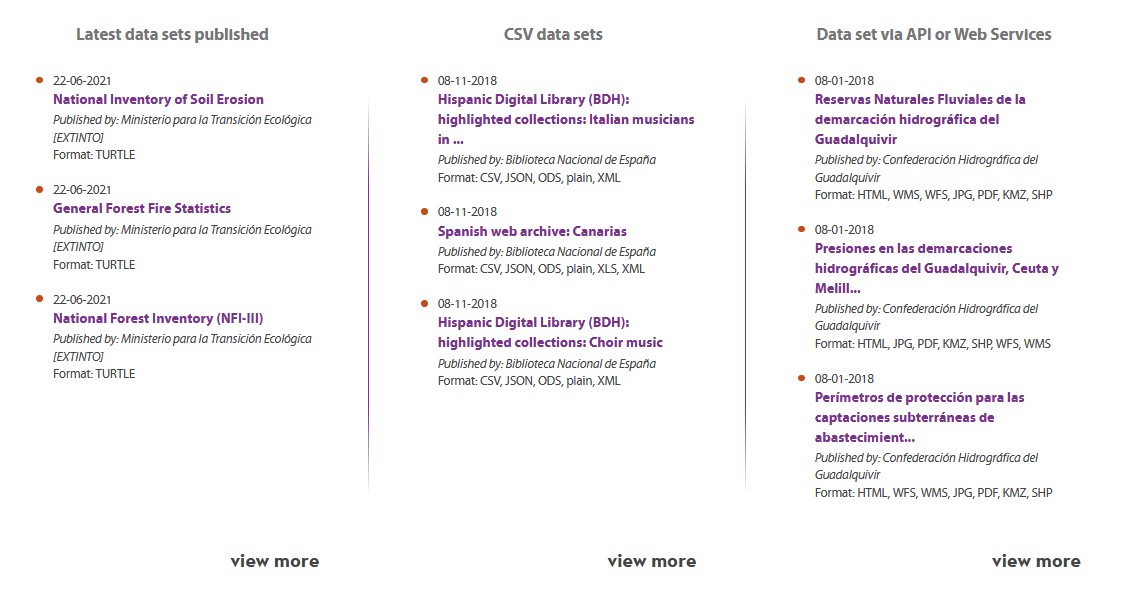

Highlights section on the home page

In addition to the creation of sectoral pages, over the last year, datos.gob.es has also incorporated a section of highlighted datasets. The aim is to give greater visibility to those datasets that meet a series of characteristics: they have been updated, are in CSV format or can be accessed via API or web services.

What other sectors would you like to highlight?

The plans of datos.gob.es include continuing to increase the number of sectors to be highlighted. Therefore, we invite you to leave in comments any proposal you consider appropriate.

The Hercules initiative was launched in November 2017, through an agreement between the University of Murcia and the Ministry of Economy, Industry and Competitiveness, with the aim of developing a Research Management System (RMS) based on semantic open data that offers a global view of the research data of the Spanish University System (SUE), to improve management, analysis and possible synergies between universities and the general public.

This initiative is complementary to UniversiDATA, where several Spanish universities collaborate to promote open data in the higher education sector by publishing datasets through standardised and common criteria. Specifically, a Common Core is defined with 42 dataset specifications, of which 12 have been published for version 1.0. Hercules, on the other hand, is a research-specific initiative, structured around three pillars:

- Innovative RMS prototype

- Unified knowledge graph (ASIO) [1]

- Data Enrichment and Semantic Analysis (EDMA)

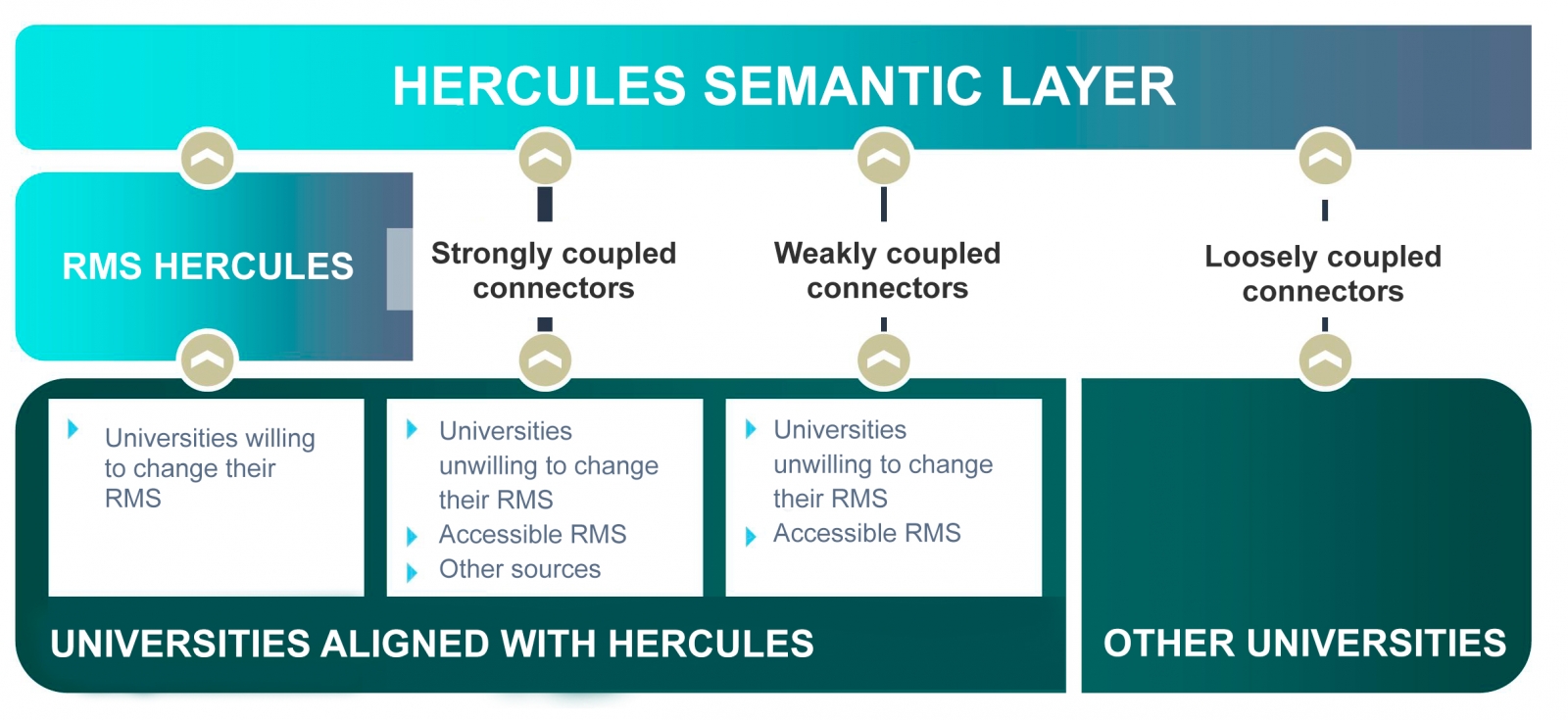

The ultimate goal is the publication of a unified knowledge graph integrating all research data that participating universities wish to make public. Hercules foresees the integration of universities at different levels, depending on their willingness to replace their RMS with the Hercules RMS. In the case of external RMSs, the degree of accessibility they offer will also have an impact on the volume of data they can share through the unified network.

General organisation chart of the Hercules initiative

The ASIO Project (Semantic Architecture and Ontology Infrastructure) is part of the Hercules initiative. The purpose of this sub-project is to define an Ontology Network for Research Management (Ontology Infrastructure). An ontology is a formal definition that describes a particular domain of discourse faithfully and with high granularity; in this case, the research domain, in a way that can be extrapolated to other Spanish and international universities (at the moment the pilot is being developed with the University of Murcia). In other words, the aim is to create a common data vocabulary.

Additionally, through the Semantic Data Architecture module, an efficient platform has been developed to store, manage and publish SUE research data, based on ontologies, with the capacity to synchronise instances installed in different universities, as well as the execution of distributed federated queries on key aspects of scientific production, lines of research, search for synergies, etc.

As a solution to this innovation challenge, two complementary lines have been proposed, one centralised (synchronisation in writing) and the other decentralised (synchronisation in consultation). The architecture of the decentralised solution is explained in detail in the following sections.

Domain Driven Design

The data model follows the Domain Driven Design approach, modelling common entities and vocabulary, which can be understood by both developers and domain experts. This model is independent of the database, the user interface and the development environment, resulting in a clean software architecture that can adapt to changes in the model.

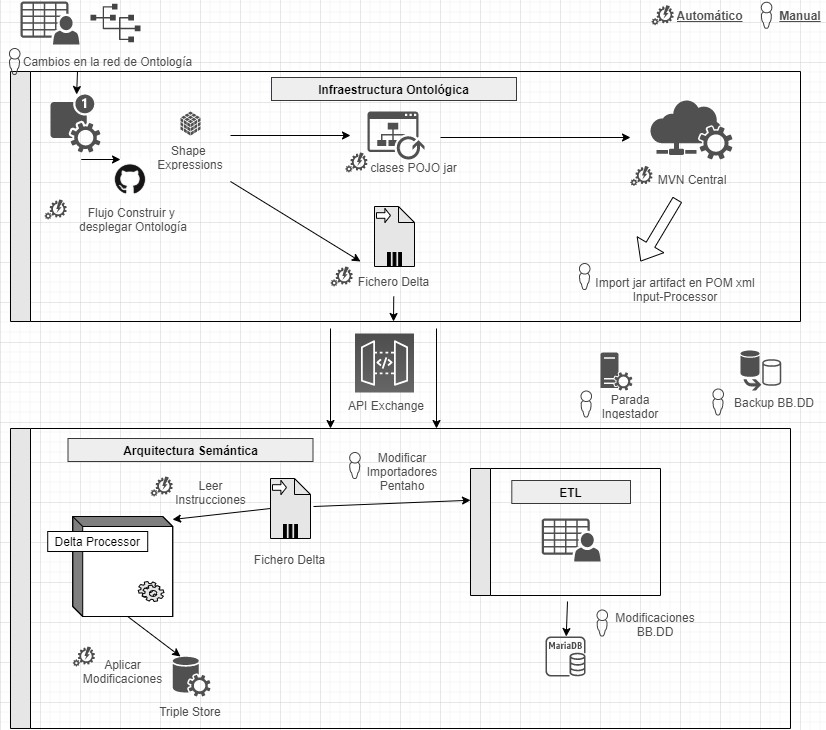

This is achieved by using Shape Expressions (ShEx), a language for validating and describing RDF datasets, with human-readable syntax. From these expressions, the domain model is automatically generated and allows orchestrating a continuous integration (CI) process, as described in the following figure.

Continuous integration process using Domain Driven Design (only available in Spanish)

By means of a system based on version control as a central element, it offers the possibility for domain experts to build and visualise multilingual ontologies. These in turn rely on ontologies both from the research domain: VIVO, EuroCRIS/CERIF or Research Object, as well as general purpose ontologies for metadata tagging: Prov-O, DCAT, etc.

Linked Data Platform

The linked data server is the core of the architecture, in charge of rendering information about all entities. It does this by collecting HTTP requests from the outside and redirecting them to the corresponding services, applying content negotiation, which provides the best representation of a resource based on browser preferences for different media types, languages, characters and encoding.
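Content negotiation can be illustrated with a short sketch. This is not the ASIO implementation: it is a simplified, hypothetical version that only looks at the media types of an HTTP Accept header, honours their q-values and the `*/*` wildcard, and ignores languages, character sets and partial wildcards such as `text/*`. The function names are invented for the example.

```python
def parse_accept(header):
    """Return (media_type, q) pairs from an Accept header, highest q first."""
    items = []
    for part in header.split(","):
        fields = [field.strip() for field in part.split(";")]
        media_type = fields[0]
        if not media_type:
            continue
        q = 1.0  # default weight when no q parameter is given
        for field in fields[1:]:
            if field.startswith("q="):
                try:
                    q = float(field[2:])
                except ValueError:
                    q = 0.0
        items.append((media_type, q))
    # sorted() is stable, so equal weights keep the order of the header.
    return sorted(items, key=lambda item: item[1], reverse=True)


def negotiate(header, available):
    """Pick the representation the client prefers among those the server offers."""
    for media_type, q in parse_accept(header):
        if q <= 0:
            continue
        if media_type in available:
            return media_type
        if media_type == "*/*":
            return available[0]
    return None


offered = ["text/html", "application/ld+json", "text/turtle"]
print(negotiate("text/html;q=0.8, text/turtle, application/ld+json;q=0.9", offered))
```

In this sketch a browser asking for HTML and a script asking for Turtle would receive different representations of the same URI, which is the behaviour the paragraph above describes.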

All resources are published following a custom-designed persistent URI scheme. Each entity represented by a URI (researcher, project, university, etc.) has a series of actions to consult and update its data, following the patterns proposed by the Linked Data Platform (LDP) and the 5-star model.

This system also ensures compliance with the FAIR (Findable, Accessible, Interoperable, Reusable) principles and automatically publishes the results of applying these metrics to the data repository.

Open data publication

The data processing system is responsible for the conversion, integration and validation of third-party data, as well as the detection of duplicates, equivalences and relationships between entities. The data comes from various sources, mainly the Hercules unified RMS, but also from alternative RMSs, or from other sources offering data in FECYT/CVN (Standardised Curriculum Vitae), EuroCRIS/CERIF and other possible formats.

The import system converts all these sources to RDF format and registers them in a specific purpose repository for linked data, called Triple Store, because of its capacity to store subject-predicate-object triples.
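The idea of subject-predicate-object triples can be sketched with plain Python data structures. This is a toy illustration, not a real Triple Store or the ASIO import system; the `ex:` identifiers and the helper `match` are invented for the example.

```python
# Each fact is one (subject, predicate, object) triple; all identifiers are invented.
triples = {
    ("ex:project1", "rdf:type", "ex:Project"),
    ("ex:project1", "ex:startDate", "2019-03-01"),
    ("ex:alice", "ex:worksOn", "ex:project1"),
}


def match(store, s=None, p=None, o=None):
    """Return the triples that fit a pattern; None acts as a wildcard."""
    return sorted(
        t for t in store
        if (s is None or t[0] == s)
        and (p is None or t[1] == p)
        and (o is None or t[2] == o)
    )


# Everything known about project1.
print(match(triples, s="ex:project1"))

# All resources declared as projects.
print(match(triples, p="rdf:type", o="ex:Project"))
```

Because every fact has the same three-part shape, data from different sources can be added to the same set and queried with the same patterns, which is what makes the graph easy to extend.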

Once imported, they are organised into a knowledge graph, easily accessible, allowing advanced searches and inferences to be made, enhanced by the relationships between concepts.

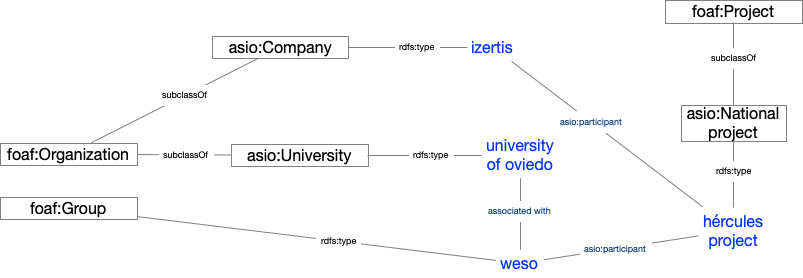

Example of a knowledge network describing the ASIO project

Results and conclusions

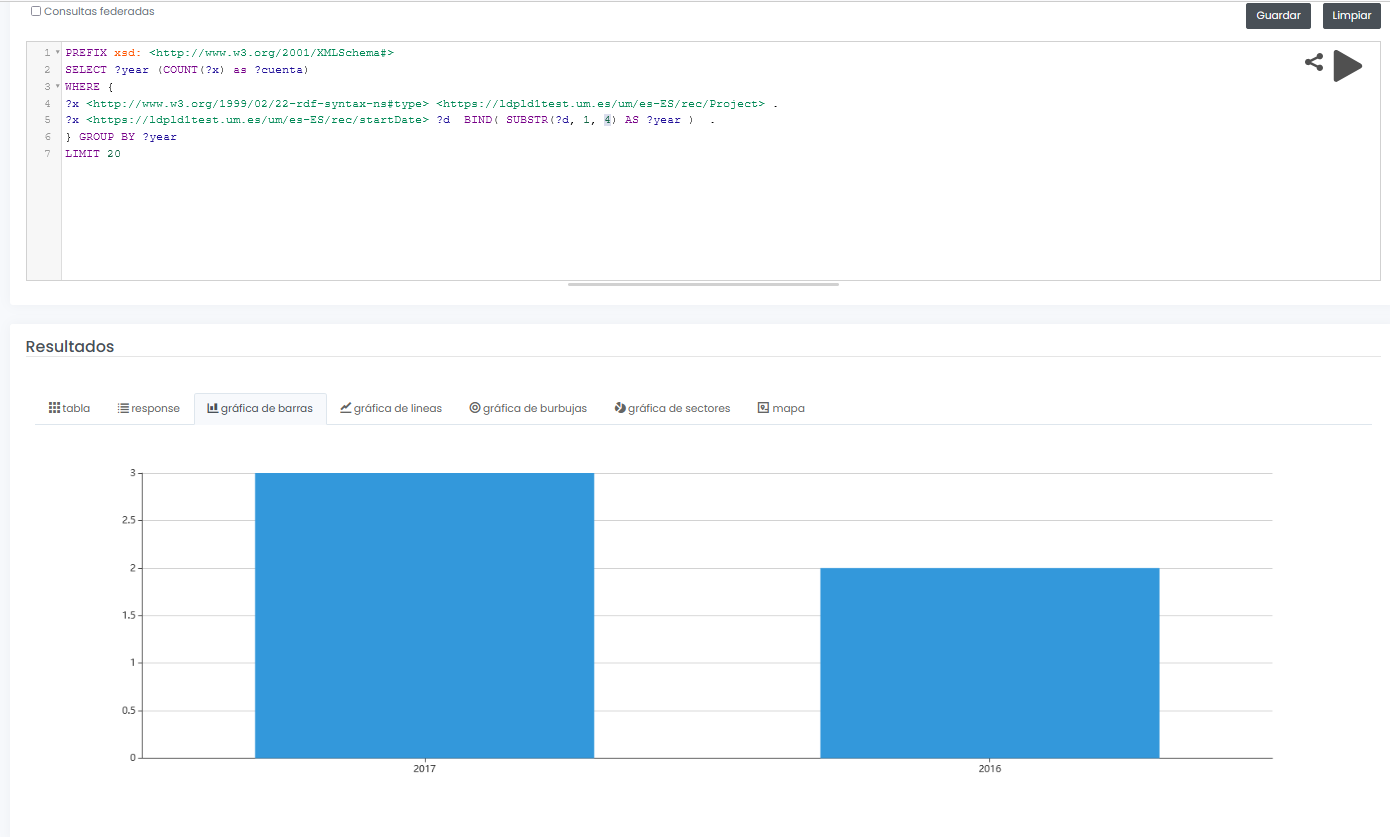

The final system not only offers a graphical interface for interactive and visual querying of research data, but also makes it possible to design SPARQL queries, such as the one shown below, with the option of running the query in a federated way on all nodes of the Hercules network and displaying the results dynamically in different types of graphs and maps.

In this example, a query is shown (with limited test data) of all available research projects grouped graphically by year:

PREFIX xsd: <http://www.w3.org/2001/XMLSchema#>

SELECT ?year (COUNT(?x) as ?cuenta)

WHERE {

?x <http://www.w3.org/1999/02/22-rdf-syntax-ns#type> <https://ldpld1test.um.es/um/es-ES/rec/Project> .

?x <https://ldpld1test.um.es/um/es-ES/rec/startDate> ?d . BIND(SUBSTR(?d, 1, 4) as ?year) .

} GROUP BY ?year LIMIT 20


Example of a SPARQL query with graphical result
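For readers less familiar with SPARQL, the logic of the query above (select the resources typed as projects, take the first four characters of their start date and count per year) can be mirrored in plain Python. The triples below are invented test data with shortened `ex:` identifiers; this is a sketch of what the query computes, not a way of running it against the Hercules endpoint.

```python
from collections import Counter

# Invented triples: three projects and one resource that is not a project.
triples = [
    ("ex:p1", "rdf:type", "ex:Project"), ("ex:p1", "ex:startDate", "2018-01-15"),
    ("ex:p2", "rdf:type", "ex:Project"), ("ex:p2", "ex:startDate", "2019-06-01"),
    ("ex:p3", "rdf:type", "ex:Project"), ("ex:p3", "ex:startDate", "2019-09-30"),
    ("ex:u1", "rdf:type", "ex:University"), ("ex:u1", "ex:startDate", "1915-01-01"),
]

# First pattern of the WHERE clause: resources whose type is Project.
projects = {s for s, p, o in triples if p == "rdf:type" and o == "ex:Project"}

# Second pattern plus BIND and GROUP BY: year prefix of the start date, counted.
per_year = Counter(o[:4] for s, p, o in triples if p == "ex:startDate" and s in projects)

print(dict(per_year))
```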

In short, ASIO offers a common framework for publishing linked open data, offered as open source and easily adaptable to other domains. For such adaptation, it would be enough to design a specific domain model, including the ontology and the import and validation processes discussed in this article.

Currently the project, in its two variants (centralised and decentralised), is in the process of being put into pre-production within the infrastructure of the University of Murcia, and will soon be publicly accessible.

[1] Graphs are a form of knowledge representation that allow concepts to be related through the integration of data sets, using semantic web techniques. In this way, the context of the data can be better understood, which facilitates the discovery of new knowledge.

Content prepared by Jose Barranquero, expert in Data Science and Quantum Computing.

The contents and views expressed in this publication are the sole responsibility of the author.

During 2020 the education sector has been exposed to great changes due to the global pandemic. Homes were transformed into classrooms, which was a challenge for everyone involved in the educational ecosystem.

The COTEC Foundation, a private non-profit organization charged with promoting innovation for economic and social development, closely followed this situation. Already in April, they published the first report, "Covid-19 and Education I: problems, responses and scenarios", where the educational challenges derived from the health emergency were analyzed and various action scenarios were proposed. It was then followed by "Covid-19 and Education II: homeschooling and inequality" and "Covid-19 and Education III: the response of the Administrations".

We have spoken with Ainara Zubillaga, Director of Education and Training of the COTEC Foundation and co-author of the report, to analyze the situation of Spanish R&D in general and to learn about the current situation of our education system and the evolution it is expected to experience in the years to come thanks to technology and data.

Complete interview

1. In March 2020, schools closed practically overnight. What challenges did this situation bring to light?

The closure of schools exposed the seams of the educational system. It did not create problems that were not already there, but it put the magnifying glass on existing structural deficits, just as it accelerated trends that were already knocking on the door.

The impact of the closure of educational centers has been twofold: educational and social. From the educational perspective, it has revealed the overload of our curriculum, a volume of content that cannot be covered even under normal conditions, which made remote teaching even more complicated. Digitization has been the great challenge that schools and teachers have faced: from the lack of resources (platforms, applications, digitization of materials, etc.) to the limited training of teachers, who were forced to migrate their daily teaching activity to a format and a channel that many did not know how to operate or how to exploit didactically.

And from the social perspective, the closure of schools has made the educational gap visible, which has been shown to the general public through the digital gap. The educational gap is not limited to the digital one, but the digital gap is what has allowed everyone to see the problems of equity and educational segregation beyond the classroom. The data are clear: the socioeconomic level is fundamentally the variable that affects the three digital gaps (access, use and school), above comparisons with other countries or between Autonomous Communities.

2. When reviewing the educational policies of the different Autonomous Communities, what aspects could help to alleviate the different gaps detected and also avoid disparities between territories?

The most obvious seems to be any action linked to the reduction of the digital divide, but in its broadest sense, that is, we are not only talking about the provision of devices and the guarantee of connectivity, but also about digital competence and the didactic use of technologies.

And the other great aspect, without a doubt, are all the school reinforcement and support programs. The first data that we began to have on the impact of school closings in terms of learning loss clearly show that there is a significantly higher incidence on the most vulnerable students. The gaps that already existed, therefore, are widening, and it is necessary to reverse the trend.

3. In what way can open data drive improvements in the education sector that help overcome these challenges?

Data is a fundamental element for the transparency and evaluation of the functioning of the educational system and its policies. Only evidence on the degree of impact and operation of public policies can help us both to focus investments correctly, and to reinforce what does not work.

On the other hand, open data allows the different Ministries to share information, share good practices, and, ultimately, make public administration more efficient and put it at the service of the citizen. The COVID-19 AND EDUCATION III study: the response of the Administrations, which we launched from Cotec last December, reflects among its conclusions that a more accessible, transparent, direct and coordinated information system is necessary that allows sharing, replicating and transmitting good practices within the educational Administration.

Data is a fundamental element for the transparency and evaluation of the functioning of the educational system and its policies, which allow the sharing of information and good practices to make public administration more efficient.

4. You are part of the jury of the Aporta 2020 Challenge, which this year has focused on finding data-based solutions that help solve challenges in the education system. Can you tell us something about the solutions presented?

The proposals that have been submitted to the contest are an example of those trends that have accelerated and that I discussed earlier. They are clear examples, for the most part, of solutions aimed at the personalization of learning, which is undoubtedly one of the lines of development of digitization in education, and is thus also included in the Educa en Digital program of the Ministry of Education itself.

The personalization of the learning process is a clear example of the added value that technology brings to education: not only does it allow adjusting what is taught, and how, to the student and their needs, but it also frees the teacher from routine monitoring functions, allowing them to focus on more personalized attention, especially for those students who need more support.

5. You have just started the project La escuela, lo primero, what can you tell us about it?

"La escuela, lo primero" is an innovation project whose objective is to offer the Administration, educational centers, teaching staff and other institutions the necessary tools to face the challenges of the educational system in the different scenarios and situations, face-to-face and remote, derived from the Covid-19 pandemic.

We started in July, with the first edition of the teaching innovation laboratories, and we have had the participation of more than 200 teachers from all over Spain, who have generated more than thirty proposals, all of them innovative solutions that include games, guides, decalogues or virtual resources, valid to be applied in any school.

The practical proposals address, among other challenges, the transfer of active methodologies to the virtual environment, the flexible organization of times and spaces, the promotion of collaborative remote work and of student autonomy, support for special educational needs, and the promotion of coexistence.

"La escuela, lo primero" is an innovation project that offers tools to face the challenges faced by the educational system.

6. What other projects related to the educational field have you started or are you planning to develop?

We continue with our work of analysis and design of solutions and proposals aimed at responding to all the challenges that have arisen from the pandemic. The teaching laboratories of "La escuela, lo primero" are not only continuing, but will be complemented by follow-up laboratories that allow us to evaluate the implementation of the proposed solutions: what has worked and what has not, how this peculiar school year has evolved, and what new challenges have arisen. All of this is aimed at defining the lines of the school that we want to build.

And from the dimension most linked to public policies, we are working on the preparation of a Digitization Plan, which we hope will give guidance to both the Administration and the centers, in this process that is sometimes not being approached in the most appropriate way.

7. The COTEC Foundation prepares a report each year on the R&D situation in Spain. This year's report shows that R&D has gained weight in the production structure for the second consecutive year. What are we doing well in Spain? What is left to do?

If we look at the country as a whole, the data tell us that we are not a particularly innovative country (we occupy middle positions in international rankings, which do not correspond to the positions we have as an economic or scientific power).

Now, if our point of view goes down from the country scale to the individual scale, from the institutional to the personal, the perspective changes. In both the public and private sectors, innovative initiatives are increasingly proliferating, driven by strong creativity and individual enthusiasm. In addition, many of them are being developed in environments and through channels outside the classical structure of the science, technology and university system. Therefore, I believe that we are good individually, in projects that act as catalysts, but that, lacking a properly defined, articulated, connected and coordinated system, we lose strength in collective change.

And something similar happens in education: there is a gap between the innovative vocation of a large part of the teaching staff, reflected in this multitude of pilot projects and experiences, and the innovative performance of schools and the system as a whole. That gap slows down the transformation of the educational system.

8. This year's COTEC report also highlights that Spain has a higher rate of STEM graduates than the European average, but with a much wider gender gap. What can we do to reverse this situation?

It seems clear that there is a gap between technology and women (and I emphasize technology because, for example, this gap does not occur in the health field, which is also a STEM, scientific area, yet has a significant female presence). I think that this distancing is generated by the discourse underlying technology, which is focused on the device (the what), not on the process (the what for). We need to clearly link technologies to their purpose, contextualize them, and give them a greater sense and social focus, and I believe that in this way we would reduce the distance that the general population, and women in particular, feel towards technology. It would allow us to approach the problem from another perspective: it is not about bringing women closer to technology, but about bringing technology closer to women.

Last October, the Aporta Initiative, together with the Secretary of State for Digitalization and Artificial Intelligence and Red.es, launched the third edition of the Aporta Challenge. Under the slogan "The value of data in digital education", the aim was to reward ideas and prototypes capable of identifying new opportunities to capture, analyse and use data intelligence in the development of solutions in the field of education.

The proposals submitted in Phase I came from a wide range of entrants, from individuals to university academic teams, educational institutions and private companies, which have devised web platforms, mobile applications and interactive solutions with data analytics and machine learning techniques as protagonists.

A jury of renowned prestige has been in charge of evaluating the proposals submitted based on a series of public criteria. The 10 solutions selected as finalists are:

EducaWood

- Team: Jimena Andrade, Guillermo Vega, Miguel Bote, Juan Ignacio Asensio, Irene Ruano, Felipe Bravo and Cristóbal Ordóñez.

What is it?

EducaWood is a socio-semantic web portal that allows users to explore the forest information of an area of the Spanish territory and to enrich it with tree annotations. Teachers can propose environmental learning activities contextualized to their environment. Students carry out these activities during field visits by means of tree annotations (location and identification of species, measurements, microhabitats, photos, etc.) through their mobile devices. In addition, EducaWood allows virtual field visits and remote activities with the available forestry information and annotations generated by the community, thus enabling its use by vulnerable groups and in Covid scenarios.

EducaWood uses sources such as the Spanish Forest Map, the National Forest Inventory or GeoNames, which have been integrated and republished as linked open data. The annotations generated by the students' activities will also be published as linked open data, thus contributing to community benefit.

Data Education. Innovation and Human Rights.

- Team: María Concepción Catalán, Asociación Innovación y Derechos Humanos (ihr.world).

What is it?

This proposal presents a data education web portal for students and teachers focused on the Sustainable Development Goals (SDGs). Its main objective is to propose to its users different challenges to be solved through the use of data, such as 'What were women doing in Spain in 1920' or 'How much energy is needed to maintain a farm of 200 pigs'.

This initiative uses data from various sources such as the UN, the World Bank, Our World in Data, the European Union and each of its countries. In the case of Spain, it uses data from datos.gob.es and INE, among others.

UniversiDATA-Lab

- Team: Rey Juan Carlos University, Complutense University of Madrid, Autonomous University of Madrid, Carlos III University of Madrid and DIMETRICAL The Analytics Lab S.L.

What is it?

UniversiDATA-Lab is a public and open portal whose function is to host a catalog of advanced and automatic analyses of the datasets published in the UniversiDATA portal, and which is the result of the collaborative work of universities. It arises as a natural evolution of the current "laboratory" section of UniversiDATA, opening the scope of potential analysis to all present and future datasets/universities, in order to improve the aspects analysed and encourage universities to be laboratories of citizenship, providing a differential value to society.

All the datasets that universities are publishing or will publish in UniversiDATA are potentially usable to carry out in-depth analyses, always considering the respect for the protection of personal data. The specific sources of the analyses will be published on GitHub to encourage the collaboration of other users to contribute improvements.

LocalizARTE

- Team: Pablo García, Adolfo Ruiz, Miguel Luis Bote, Guillermo Vega, Sergio Serrano, Eduardo Gómez, Yannis Dimitriadis, Alejandra Martínez and Juan Ignacio Asensio.

What is it?

This web application aims to support the learning of art history through different educational environments. It allows students to visualize and perform geotagged tasks on a map. Teachers can propose new tasks, which are added to the public repository, as well as select the tasks that may be more interesting for their students and visualize the ones they perform. In addition, a mobile version of LocalizARTE will be developed in the future, in which the user will need to be close to the place where the tasks are geotagged in order to perform them.

The open data used in the first version of LocalizARTE comes from the list of historical monuments of Castilla y León, DBpedia, Wikidata, Casual Learn SPARQL and OpenStreetMap.

Study PISA data and datos.gob.es

- Team: Antonio Benito, Iván Robles and Beatriz Martínez.

What is it?

This project is based on the creation of a dashboard that allows users to view information from the PISA report, conducted by the OECD, or from other educational assessments, along with socioeconomic, demographic, educational or scientific data provided by datos.gob.es. The objective is to detect which aspects favour an increase in academic performance using a machine learning model, so that effective decisions can be made. The idea is that schools themselves can adapt their educational practices and curricula to the learning needs of students to ensure greater success.

This application uses various open data from INE, the Ministry of Education and Vocational Training or PISA Spain.

Big Data in Secondary Education... and Secondary in Education

- Team: Carmen Navarro, Nazaret Oporto School.

What is it?

This proposal pursues two objectives: on the one hand, to improve the training of secondary school students in digital skills, such as the control of their digital profiles on the Internet or the use of open data for their work and projects; on the other hand, to use the data generated by students in an e-learning platform such as Moodle to determine patterns and metrics that help personalize learning. All of this is aligned with the SDGs and the 2030 Agenda.

Data used for its development come from the WHO and the datathon "Big Data in the fight against obesity", where several students proposed measures to mitigate global obesity based on the study of public data.

DataLAB: the Data Lab in Education

- Team: iteNlearning, Ernesto Ferrández Bru.

What is it?

Data obtained with empirical Artificial Intelligence techniques such as big data or machine learning offer correlations, not causes. iteNlearning bases its technology on scientific models with evidence, as well as on data (from sources such as INE or the Basque Institute of Statistics - Eustat). These data are curated in order to assist teachers in decision making, once DataLAB identifies the specific needs of each student.

DataLAB Mathematics is a professional educational tool that, based on neuropsychological and cognitive models, measures the level of neurodevelopment of the specific cognitive processes developed by each student. This generates an educational scorecard that, based on data, informs us of the specific needs of each person (high ability, dyscalculia...) so that they can be enhanced and/or reinforced, allowing an evidence-based education.

The value of podcasting in digital education

- Team: Adrián Pradilla Pórtoles and Débora Núñez Morales.

What is it?

2020 has been the year in which podcasts have taken off as a new digital format for the consumption of different areas of information. This idea seeks to take advantage of the boom of this tool to use it in the educational field so that students can learn in a more enjoyable and different way.

The proposal includes the official syllabus of secondary or university education, as well as of competitive examinations, which can be obtained from open data sources and official websites. Through natural language processing technologies, these syllabi are associated with existing audio recordings by teachers on history, English, philosophy, etc. on platforms such as iVoox or Spotify, resulting in a list of podcasts by course and subject.

The data sources used for this proposal include the Public Employment Offer of Castilla-La Mancha and the educational competences in different stages.

MIPs Project

- Team: Aday Melián Carrillo, Daydream Software.

What is it?

A MIP (Marked Information Picture) is a new interactive information tool, consisting of a series of interactive layers on static images that facilitate the retention of information and the identification of elements.

This project consists of a service for creating MIPs quickly and easily by manually drawing regions of interest on any image imported through the web. The created MIPs will be accessible from any device and have multiple applications as a teaching, personal and professional resource.

In addition to manual creation, the authors have implemented an automatic GeoJSON to MIP data converter in Python. As a first step, they have developed a MIP of Spanish provinces from a public database.

FRISCHLUFT

- Team: Harut Alepoglian and Benito Cuezva, German School Cultural Association, Zaragoza.

What is it?

The Frischluft (Fresh Air) project is a hardware and software solution for measuring environmental parameters in the school. It aims to improve the thermal comfort of the classrooms and increase the protection of the students through intelligent ventilation, while establishing itself as a flagship project that drives the digital transformation of the school.

This proposal uses data sources from Zaragoza City Council on CO2 levels in the urban environment of the city and international data repositories to measure global emissions, which are compared through statistical techniques and machine learning models.

Next steps

All of these ideas have been able to capture how to best use data intelligence to develop real solutions in the education sector. The finalists now have 3 months to develop a prototype. The three prototypes that receive the best evaluation from the jury, according to the established evaluation criteria, will be awarded 4,000, 3,000 and 2,000 euros respectively.

Good luck to all participants!

When we think of open data our first intuition is usually directed towards data generated by public sector bodies in the exercise of their functions and made available for reuse by citizens and businesses, i.e. public sector open data or open public data. This is natural, because public sector information represents an extraordinary source of data and the intelligent use of this data, including its processing through artificial intelligence applications, has great transformative potential in all sectors of the economy, as recognised by the European directive on open data and re-use of public sector information.

One of the most interesting novelties introduced by the directive was the initial but expandable definition of 6 thematic categories of high-value datasets, whose re-use is associated with considerable benefits for society, the environment and the economy. These six areas (Geospatial, Earth Observation and Environment, Meteorology, Statistics, Companies and Company Ownership, and Mobility) are the ones that in 2019 were considered to have the greatest potential for the creation of value-added services and applications based on such datasets. However, looking ahead to 2021, almost a year into the global health crisis, it seems clear that this list misses two key areas with a high potential impact on society, namely health and education.

Indeed, we find that on the one hand, educational institutions are explicitly exempted from some obligations in the directive, and on the other hand, health sector data are hardly mentioned at all. The directive, therefore, does not provide for a development of these two areas that the circumstances of the covid-19 pandemic have brought to the forefront of society's priorities.

The availability of health and education data

Although health systems, both public and private, generate and store an enormous amount of valuable data in people's medical records, the availability of these data is very limited due to the very high complexity of processing them in a secure way. Health-related datasets are usually only available to the entity that generates them, despite the great value that their release could have for the advancement of scientific research.

The same could be said for data generated by student interaction with educational platforms, which is also generally not available as open data. As in the health sector, these datasets are usually only available to their owners, for whom they are a valuable asset for the improvement of the platforms, which is only a small part of their potential value to society.

The directive states that high-value data should be published in open formats that can be freely used, re-used and shared by anyone for any purpose. Furthermore, in order to ensure maximum impact and facilitate re-use, high-value datasets should be made available for re-use with very few legal restrictions and at no cost.

Health data are highly sensitive with respect to the privacy of individuals, so the delicate trade-off between respect for privacy and the need to support the advancement of scientific research must always be kept in mind. The consideration of health and education data as high-value open data should probably maintain some particular restrictions due to the nature and sensitivity of these data, and promote mechanisms such as the donation of data for research purposes by patients or the exchange of data for the same purpose between researchers. In this sense, the 2018 regulation on data protection introduced the possibility of reusing data for research purposes, provided that the appropriate pseudonymisation measures and the rest of the legally stipulated guarantees are adopted.
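To make the idea of pseudonymisation more concrete, the following is a minimal sketch in Python of one common technique: replacing a direct identifier with a keyed hash (HMAC-SHA256). It is only an illustration under stated assumptions, not the procedure stipulated by the regulation. The records, the field names `patient_id` and `diagnosis`, the function `pseudonymise` and the key are all hypothetical.

```python
import hashlib
import hmac


def pseudonymise(identifier: str, secret_key: bytes) -> str:
    """Return a keyed SHA-256 digest that stands in for the identifier."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


# Hypothetical records: field names and values are invented for illustration.
records = [
    {"patient_id": "A-001", "diagnosis": "covid-19"},
    {"patient_id": "A-002", "diagnosis": "asthma"},
    {"patient_id": "A-001", "diagnosis": "pneumonia"},
]

# Assumption: the key is generated randomly and stored separately
# from the dataset that is shared with researchers.
SECRET_KEY = b"replace-with-a-randomly-generated-key"

# Replace the direct identifier in every record, keeping the other fields.
released = [
    {**record, "patient_id": pseudonymise(record["patient_id"], SECRET_KEY)}
    for record in records
]
```

In this sketch the same patient always maps to the same pseudonym, so records can still be linked for research, while the original identifier cannot be recomputed without the key. Pseudonymised data are still personal data, so the remaining legal guarantees mentioned above continue to apply.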

The importance of public-private partnerships

Education and health are two areas where the private sector or public-private partnerships are making exciting strides in converting some of the potential of open data into benefits for society. Open data publishing is not the exclusive preserve of the public sector and there is a long tradition of private-public collaboration, largely channelled through universities. Let us look at some examples:

- There are a number of initiatives such as the pioneering UCI Machine Learning Repository, founded in 1987 as a repository of datasets used by the artificial intelligence community for empirical analysis of machine learning algorithms. This repository has been cited more than 1000 times, which places it among the most cited resources in the computer science domain. In this and other repositories also managed by universities or foundations with donations from private companies, we can also find open datasets released by companies or created and developed with their active collaboration.

- Large technology companies, no doubt inspired by these initiatives, also maintain open data search engines or repositories such as Google's dataset search engine, AWS's open data registry, or Microsoft Azure's datasets, where datasets related to health or education are increasingly common.

- In terms of data that can contribute to improving education, The Open University, for example, publishes OULAD (Open University Learning Analytics Dataset), an open learning analytics dataset containing data on courses, students and their interactions with the virtual learning environment for seven courses. However, there are very few comparable datasets, even though their joint use in projects would undoubtedly allow further progress to be made in areas such as detecting the risk of students dropping out.

- As far as the health sector is concerned, it is worth highlighting the case of the Spanish platform HealthData 29, developed by Fundación 29, which aims to create the necessary infrastructure to make it possible to securely publish open health datasets so that they are available to the community for research purposes. As part of this infrastructure, Fundación 29 has published the Health Data Playbook, which is a guide for the creation, within the current technical and legal framework, of a public repository of data from health systems, so that they can be used in medical research. Microsoft has collaborated in the preparation of this guide as a technological partner and Garrigues as a legal partner, and it is aimed at organisations that carry out health research.

At the moment the platform only has available the Covid Data Save Lives (COVIDDSL) dataset published by the HM Hospitales University Hospital Group, composed of clinical data on interactions recorded in the covid-19 treatment process. However, it is an excellent example of the potential that we may be missing out on globally by not collecting and publishing more and better data on patients diagnosed with covid-19 in a systematised way and on a global scale. The creation of predictive models of disease progression in patients, the development of epidemiological models on the spread of the virus, or the extraction of knowledge on the behaviour of the virus for vaccine development are just some of the use cases that would benefit from greater availability of this data.

Education and health are two of the great concerns of all developed societies in the world because they are closely related to the well-being of their citizens. But perhaps we have never been more aware of this than in the last year, and this represents an extraordinary opportunity to drive initiatives that contribute to unlocking more open health and education data. Whether as high-value data or in any other form, these datasets are key to enabling us to react better to future health crises and also to help us overcome the aftermath of the current one.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and points of view reflected in this publication are the sole responsibility of its author.

The world of technology and data is constantly evolving. Keeping up with the latest developments and trends can be a difficult task. Therefore, spaces for dialogue where knowledge, doubts and recommendations can be shared are important.

What are communities?

Communities are open channels through which different people interested in the same subject or technology meet physically or virtually to contribute, ask questions, discuss and resolve issues related to that technology. They are commonly created through an online platform, although there are communities that organise regular meetings and events where they share experiences, establish objectives and strengthen the bonds created through the screen.

How do they work?

Many developer communities use platforms such as GitHub or Stack Overflow, through which they store and manage their code, as well as share and discuss related topics.

As for how they are organised, some communities have an organisational chart as such and others do not; there is no single rule that governs the organisation of communities in general. However, there may be roles defined according to the skills and knowledge of each of their members.

Developer communities as data reusers

There are a significant number of communities that bring knowledge about data and its associated technologies to different user groups. Some of them are made up of developers, who come together to expand their skills through webinars, competitions or projects. Sometimes these activities help drive innovation and transformation in the world of technology and data, and can act as a showcase to promote the use of open data.

Here are three examples of data-related developer communities that may be of interest to you if you want to expand your knowledge in this field:

Hackathon Lovers

Since its creation in 2013, this community of developers, designers and entrepreneurs who love hackathons has been organising meetings to test new platforms, APIs, products, hardware, etc. Among its main objectives is to create new projects and learn, while users have fun and strengthen ties with other professionals.

The topics they address in their events are varied. In the #SerchathonSalud hackathon, they focused on promoting training and research in the field of health based on bibliographic searches in 3 databases (PubMed, Embase, Cochrane). Other events have focused on the use of specific APIs. This is the case of #OpenApiHackathon, a development event on Open Banking, and #hackaTrips, a hackathon to find ideas on sustainable tourism.

Through which channels can you follow their news?

Hackathon Lovers is present on the main social networks such as Twitter and Facebook, as well as on YouTube, GitHub and Flickr, and has its own blog.

Comunidad R Hispano

It was created in November 2011, as part of the 3rd R User Conference held at the Escuela de Organización Industrial in Madrid. Organised through local user groups, its main objective is to promote the advancement of knowledge and use of the R programming language, as well as the development of the profession in all its aspects, especially research, teaching and business.

One of its main fields is training its users in R and associated technologies, in which open data has a place. The activities they carry out include:

- Annual conferences: so far there have been eleven editions based on talks and workshops for attendees, with R software as the protagonist.

- Local initiatives: although the association is the main promoter of the annual conferences, the feeling of community is forged thanks to local groups such as those in Madrid, sponsored by RConsortium, the Canary Islands, which communicates aspects such as public and geographic data, or Seville, which during its latest hackathons has developed several packages linked to open data.

- Collaboration with groups and initiatives focused on data: such as UNED, Grupo de Periodismo de Datos, Grupo Machine Learning Spain or companies such as Kabel or Kernel Analytics.

- Collaboration with Spanish academic institutions: such as EOI, Universidad Francisco de Vitoria, ESIC, or K-School, among others.

- Relationship with international institutions: such as RConsortium or RStudio.

- Creation of data-centric packages in Spain: participation in ROpenSpain, an initiative for R and open data enthusiasts aimed at creating top quality R packages for the reuse of Spanish data of general interest.

Through which channels can you follow their news?

This community is made up of more than 500 members. The main communication channel for contacting its users is Twitter, although its local groups have their own accounts, as is the case in Malaga, the Canary Islands and Valencia, among others.

R-Ladies Madrid

R-Ladies Madrid, born in 2016, is a local branch of R-Ladies Global (a project funded by the R Consortium-Linux Foundation). It is an open source community developed by women who support each other and help each other grow within the R sector.

The main activity of this community lies in holding monthly meetings or meetups where female speakers share knowledge and projects they are working on, or teach functionalities related to R. Its members range from professionals who have R as their main working tool to amateurs who are looking to learn and improve their skills.

R-Ladies Madrid is very active within the software community and supports different technological initiatives, from the creation of open source working groups to participation in different technological events. In some of their working groups they use open data from sources such as the BOE or Open Data NASA. In addition, they have helped to set up a working group with data on Covid-19. In previous years they have organised gender hackathons where all participating teams were made up of 50% women and were invited to work with data from non-profit organisations.

Through which channels can you follow their news?

R-Ladies Madrid is present on Twitter and also has a Meetup group.

This has been a first approximation, but there are more communities of developers related to the world of data in our country. These are essential not only to bring theoretical and technical knowledge to users, but also to promote the reuse of public data through various projects like the ones we have seen. Do you know of any other organisation with similar aims? Do not hesitate to write to us at dinamizacion@datos.gob.es or leave us all the information in the comments.

The pandemic that originated last year has brought about a significant change in the way we see the world and how we relate to it. As far as the education sector is concerned, students and teachers at all levels have been forced to replace face-to-face teaching and learning with remote methods.

In this context, within the framework of the Aporta Initiative, the study "Data-based educational technology to improve learning in the classroom and at home", by José Luis Marín, has been developed. This report offers several keys to reflect on the new challenges posed by this situation, which can be turned into opportunities if we manage to introduce changes that promote the improvement of the teaching-learning process beyond simply replacing face-to-face classes with online training.

The importance of data to improve the education sector

Through innovative educational technology based on data and artificial intelligence, some of the challenges facing the education system can be addressed. For this report, 4 of these challenges have been selected:

· Remote supervision of assessment tests: monitoring and invigilation of assessment tests through online resources.

· Identification of behavioral or attention problems: alerting teachers to activities and behaviors that indicate attention, motivation or behavioral problems.

· Personalized and more attractive training programs: adaptation of learning paths and pace to each student.

· Improved performance on standardized tests: use of online learning platforms to improve results on standardized tests, to reinforce mastery of a particular subject, and to achieve fairer and more equitable assessment.

To address each of these four challenges, a simple structure divided into three sections is proposed:

1. Description of the problem, which allows us to put the challenge in context.

2. Analysis of some of the approaches based on the use of data and artificial intelligence that are used to offer a technological solution to the challenge in question.

3. Examples of relevant or highly innovative solutions or experiences.

The report also highlights the enormous complexity involved in these types of issues, so they should be approached with caution to avoid negative consequences for individuals, such as cybersecurity issues, invasion of privacy or the risk of exclusion of some groups, among others. To this end, the document ends with a series of conclusions that converge in the idea that the best way to generate better results for all students, alleviating inequalities, is to combine excellent teachers and excellent technology that enhances their capabilities. In this process, open data can play an even more relevant role in improving the state of the art in educational technology and ensuring more widespread access to certain innovations that are largely based on machine learning or artificial intelligence technologies.

Open data is an increasingly used resource for the training of students at different stages of the education system and for the continuous training of professionals from all sectors. There is already little doubt about the growing importance of all the skills related to data analysis and processing in relation to almost any knowledge discipline. Similarly, skills related to visualization and the construction of stories based on the conclusions drawn from any data analysis or modeling are increasingly needed to complement and extend the ever-necessary skills to communicate and present results of any kind of work.

Throughout the process of training professionals related to data science and artificial intelligence, open data is a valuable resource for gaining practical experience with the techniques and tools that are common in the profession. However, the effects that the use of data, usually open, has on the learning of other subjects, on the acquisition of other types of skills and even on the motivation of students towards learning, are also beginning to be appreciated.

As early as 2013, research that conducted a detailed quantitative comparison of different educational approaches adopted by 39 schools in New York City showed that the use of data to guide the educational programme was one of the five main policies that had an effect on improving academic performance.

Although the use of open data in the classroom has not been widely studied, the limited research conducted so far suggests at first glance that there is a lack of awareness of open data among educators. While we do not have a consolidated understanding of the effect of the use of open data in educational settings as it is not currently a widespread educational resource, there does appear to be a set of early adopter educators who make substantial use of open data in their teaching programmes.

The research "The use of open data as a material for learning" by the Institute of Educational Technology is based on the qualitative analysis of the experience of a group of these pioneering educators to draw a number of conclusions about the value of using open data in teaching.

One of the starting points is that open data does not seem to offer completely new educational or pedagogical methodologies, but rather its use complements existing concepts of teaching and learning such as research-based or project-based learning or personalisation of learning. Two conclusions stand out in this respect:

- Open datasets used as part of learning projects in any subject are usually relevant to the learner, either because they describe issues in their geographical or social environment, or because they relate to current issues or their own hobbies. Research shows that the mere use of these datasets that arouse student curiosity during the learning of any concept has positive effects on the motivation of students to go deeper into the subject and appreciate its usefulness.

- The use of open datasets offers the possibility to propose more advanced activities without increasing the difficulty of the training programme. Examples are cited in the research ranging from the use of open data to support the statistics training of high school students to the use of open scientific databases in the area of genomics to support the teaching of bioinformatics concepts. In this way students can acquire more advanced knowledge and skills that would otherwise probably only have been acquired in professional practice or would have been discarded due to insufficient time in the programme. This effect, especially at higher education levels, would also contribute to closing the gap between the education system and professional practice.

Although their effect has not yet been studied, open data competitions are another vehicle for channelling the practical training of students and for creating new educational resources. Increasingly, universities or secondary schools are encouraging teams to participate in regional or national open data competitions as an activity within certain subjects. Some competitions, such as the Castilla y León open data competition, even have a special category with a corresponding prize reserved for the participation of students.

Along the same lines, Barcelona City Council has been organising the Barcelona Dades Obertes Challenge for four years, which aims to bring the benefits of open data closer to the public and to promote its use in the city's educational centres. The challenge combines competition between schools, which have to develop a data-based project, with a specific training plan on open data for teachers, so that they can guide their students.

The fact that open datasets are not more widely used in educational programmes can be attributed to factors such as the lack of teacher training or the difficulty of adapting existing data. Most open datasets come from professional environments such as scientific research or public service administration, and learners and educators may not have the literacy or resources to take advantage of them, even though tools are emerging that simplify some of the complexities of working with open data. In this regard, a stronger relationship and joint work between educators, learners and dataset producers could also encourage the deployment of more learning programmes.

This is why there are interesting initiatives such as UDIT (Use Open Research Data In Teaching) launched in 2017 with the aim of encouraging and helping higher education teachers to incorporate open research data and other open science concepts into their teaching to improve the learning process.

The International Open Data Charter already recognises the importance of engaging "with schools and higher education institutions to support further open data research and to incorporate data literacy into education programmes". The value of open data in the learning process has not yet been sufficiently explored. As an example, the usual discourse of the open data community always highlights the potential economic and social value of reuse, but not so much the potential of its use in education.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and points of view reflected in this publication are the sole responsibility of its author.

2020 is coming to an end, and in this unusual year we are going to experience a different, calmer Christmas with those closest to us. What better way to enjoy those moments of calm than to train and improve your knowledge of data and new technologies?

Whether you are looking for a read that will improve your professional profile during your free time over the holidays, or you want to give your loved ones an educational and interesting gift, at datos.gob.es we would like to propose some book recommendations on data and disruptive technologies that we hope will be of interest to you. We have selected books in Spanish and English, so that you can also put your language skills into practice.

Take note because you still have time to include one in your letter to Santa Claus!

INTELIGENCIA ARTIFICIAL, naturalmente. Nuria Oliver, ONTSI, red.es (2020)

What is it about?: This book is the first of the new collection published by the ONTSI called “Pensamiento para la sociedad digital”. Its pages offer a brief journey through the history of artificial intelligence, describing its impact today and addressing the challenges it presents from various points of view.

Who is it for?: It is aimed especially at decision makers, professionals from the public and private sectors, university professors and students, third sector organizations, researchers and the media, but it is also a good option for readers who want an introduction to the complex world of artificial intelligence.

Artificial Intelligence: A Modern Approach, Stuart Russell, Peter Norvig

What is it about?: An interesting manual that introduces the reader to the field of Artificial Intelligence through an orderly structure and understandable writing.

Who is it for?: This textbook is a good option to use as documentation and reference in different courses and studies in Artificial Intelligence at different levels, and for those who want to become experts in the field.

Situating Open Data: Global Trends in Local Contexts, Danny Lämmerhirt, Ana Brandusescu, Natalia Domagala – African Minds (October 2020)

What is it about?: This book provides several empirical accounts of open data practices, the local implementation of global initiatives, and the development of new open data ecosystems.

Who is it for?: It will be of great interest to researchers and advocates of open data and to those in or advising government administrations in the design and implementation of effective open data initiatives. You can download its PDF version through this link.

The Elements of Statistical Learning: Data Mining, Inference, and Prediction, Second Edition (Springer Series in Statistics), Trevor Hastie, Robert Tibshirani, Jerome Friedman – Springer (May 2017)

What is it about?: This book describes various statistical concepts in a variety of fields such as medicine, biology, finance, and marketing in a common conceptual framework. While the focus is statistical, the emphasis is on definitions rather than mathematics.

Who is it for?: It is a valuable resource for statisticians and anyone interested in data mining in science or industry. You can also download its digital version here.

Europa frente a EEUU y China: Prevenir el declive en la era de la inteligencia artificial, Luis Moreno, Andrés Pedreño – Kdp (2020)

What is it about?: This interesting book addresses the reasons why Europe lags behind the power of the US and China, and the consequences of that lag, but above all it proposes solutions to the problem it describes.

Who is it for?: It is a reflection for those interested in thinking about the change that Europe would need, in the words of its author, "increasingly removed from the revolution imposed by the new technological paradigm".

What is it about?: This book calls attention to the problems that the misuse of algorithms can lead to and proposes some ideas to avoid making mistakes.

Who is it for?: These pages contain no overly technical concepts, formulas or complex explanations, although they do deal with dense problems that need the reader's attention.

Data Feminism (Strong Ideas), Catherine D’Ignazio, Lauren F. Klein. MIT Press (2020)

What is it about?: These pages address a new way of thinking about data science and its ethics based on the ideas of feminist thought.

Who is it for?: For all those who are interested in reflecting on the biases built into the algorithms of the digital tools that we use in all areas of life.

Open Cities | Open Data: Collaborative Cities in the Information Era, Scott Hawken, Hoon Han, Chris Pettit – Palgrave Macmillan, Singapore (2020)

What is it about?: This book explains the importance of opening data in cities through a variety of critical perspectives, and presents strategies, tools, and use cases that facilitate both data openness and reuse.

Who is it for?: Perfect for those involved in the data value chain in cities and those who have to develop open data strategies within the framework of a smart city, but also for citizens who are concerned about privacy and want to know what happens, and what can happen, with the data generated by cities.

We would love to include them all on this list, but there are many more interesting books on data and technology filling the shelves of hundreds of bookstores and online stores. If you have any extra recommendations, do not hesitate to leave your favorite title in the comments. The members of the datos.gob.es team will be delighted to read your recommendations this Christmas.