The main motivation of this first article - of a series of three - is to explain how to use the UNE 0077 data governance specification (see Figure 1) to establish approved and validated mechanisms that provide organizational support for aspects related to data openness and publication for subsequent use by citizens and other organizations.

To understand the need and utility of data governance, it must be noted that, as a premise, every organization should start with an organizational strategy. To better illustrate the article, consider the example of the municipality of an imaginary town called Vistabella. Suppose the organizational strategy of the Vistabella City Council is to maximize the transparency and quality of public services by reusing public service information.

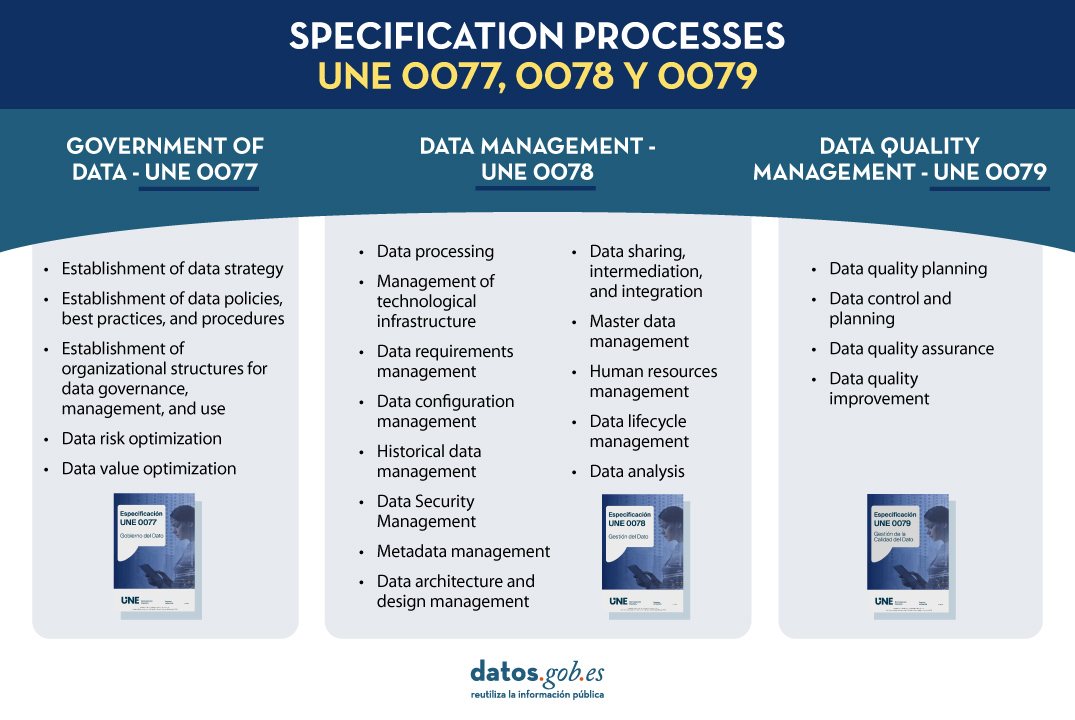

Fig. 1. Processes of the UNE 0077, 0078 and 0079 specifications

To support this organizational strategy, the Vistabella City Council needs a data strategy, the main objective of which is to promote the publication of open data on the respective open data portals and encourage their reuse to provide quality data to its residents transparently and responsibly. The Mayor of the Vistabella City Council must launch a data governance program to achieve this main objective. For this purpose, a working group composed of specialized data experts from the City Council is assigned to tackle this program. This group of experts is given the necessary authority, a budget, and a set of responsibilities.

When starting, these experts decide to follow the process approach proposed in UNE 0077, as it provides them with a suitable guide to carry out the necessary data governance actions, identifying the expected process outcomes for each of the processes and how these can be materialized into specific artifacts or work products.

This article explains how the experts have used the processes in the UNE 0077 specification to achieve their goal. Out of the five processes detailed in the specification, we will focus, by way of example, on only three of them: the one describing how to establish the data strategy, the one describing how to establish policies and best practices, and the one describing how to establish organizational structures.

Before we begin, it is important to remember the structure of the process descriptions in the different UNE specifications (UNE 0077, UNE 0078, and UNE 0079). All processes are described with a purpose, a list of expected process outcomes (i.e., what is expected to be achieved when the process is executed), a set of tasks that can be followed, and a set of artifacts or work products that are the manifestation of the process outcomes.

"Data Strategy Establishment Process"

The team of experts from the Vistabella City Council decided to follow each of the tasks proposed in UNE 0077 for this process. Below are some aspects of the execution of these tasks:

T1. Evaluate the capabilities, performance, and maturity of the City Council for the publication of open data. To do this, the working group gathered all possible information about the skills, competencies, and experiences in publishing open data that the Vistabella City Council already had. They also collected information about the downloads that have been made so far of published data, as well as a description of the data itself and the different formats in which it has been published. They also analyzed the City Council's environment to understand how open data is handled. The work product generated was an Evaluation Report on the organization's data capabilities, performance, and maturity.

T2. Develop and communicate the data strategy. Given its importance, to develop the data strategy, the working group used the Plan to promote the opening and reuse of open data as a reference to shape the data strategy stated earlier, which is to "promote the publication of open data on the respective open data portals and encourage their reuse to provide quality data to its residents transparently and responsibly." Additionally, it is important to note that data openness projects will be designed to eventually become part of the structural services of the Vistabella City Council. The work products generated will be the adapted Data Strategy itself and a specific communication plan for this strategy.

T3. Identify which data should be governed according to the data strategy. The Vistabella City Council has decided to publish more data about urban public transport and cultural events in the municipality, so these are the data that should be governed. This would include data of different types: statistical data, geospatial data, and some financial data. To do this, they propose using the Plan to promote the opening and reuse of open data again. The work product will be a list of the data that should be governed, and in this case, also published on the platform. Later on, the experts will be asked to reach an agreement on the meaning of the data and choose the most representative metadata to describe the different business, technical, and operational characteristics.

T4. Develop the portfolio of data programs and projects. To achieve the specific objective of the data strategy, a series of specific projects related to each other are identified, and their viability is determined. The work product generated through this task will be a portfolio of projects that covers these objectives:

- Planning, control, and improvement of the quality of open data

- Ensuring compliance with security standards

- Deployment of control mechanisms for data intermediation

- Management of the configuration of data published on the portal

T5. Monitor the degree of compliance with the data strategy. To do this, the working group defines a series of key performance indicators that are measured periodically to monitor key aspects related to the quality of open data, compliance with security standards, use of data intermediation mechanisms, and management of changes to the data published on the portal. The work product generated consists of periodic reports on the monitoring of the data strategy.
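As an illustration of the periodic monitoring described in T5, the following minimal Python sketch compares measured indicator values against targets. The indicator names, targets and measurements are hypothetical and only mirror the four areas mentioned above; UNE 0077 does not prescribe them.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    """A key performance indicator with a target (all values hypothetical)."""
    name: str
    target: float    # minimum acceptable value, as a ratio in [0, 1]
    measured: float  # value observed in the current period

    def is_met(self) -> bool:
        return self.measured >= self.target


def monitoring_report(indicators: list[Indicator]) -> dict[str, bool]:
    """Return, per indicator, whether the target was met in this period."""
    return {ind.name: ind.is_met() for ind in indicators}


# Hypothetical indicators, one for each area named in T5.
indicators = [
    Indicator("datasets_meeting_quality_rules", target=0.95, measured=0.97),
    Indicator("datasets_passing_security_review", target=1.00, measured=0.98),
    Indicator("requests_served_via_intermediation", target=0.80, measured=0.85),
    Indicator("changes_with_recorded_version", target=0.90, measured=0.90),
]

report = monitoring_report(indicators)
for name, met in report.items():
    print(f"{name}: {'met' if met else 'NOT met'}")
```

A report like this, produced each period, could feed the periodic monitoring reports that the task names as its work product.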

"Establishment of Data Policies, Best Practices, and Procedures Process"

The data strategy is implemented through a series of policies, best practices, and procedures. To determine these policies or procedures, you can follow the process of Establishing Data Policies, Best Practices, and Procedures detailed in UNE 0077. For each of the data identified in the previous process, it may be necessary to define specific policies for each area of action described in the established data strategy.

To have a systematic and consistent way of working and to avoid errors, the Vistabella City Council's working group decides to model and publish its own process for defining strategies based on the generic definition of that process contained in Specification UNE 0077, tailored to the specific characteristics of the Vistabella City Council.

This process could be followed by the working group as many times as necessary to define and approve data policies, best practices, and procedures.

In any case, it is important for the customization of this process to identify and select the principles, standards, ethical aspects, and relevant legislation related to open data. To do this, a framework is defined, consisting of a regulatory framework and a framework of standards.

The regulatory framework includes:

- The legal framework related to the reuse of public sector information.

- The General Data Protection Regulation (GDPR) to ensure that the minimum requirements for the security and privacy of information are met when publishing open data on the portal.

The framework of standards includes, among others:

- The practical guide for improving the quality of open data, which provides support to ensure that the shared data is of quality.

- The UNE specifications 0077, 0078, and 0079 themselves, which contain best practices for data governance, management, and quality.

This framework, along with the defined process, will be used by the working group to develop specific data policies that should be communicated through the appropriate publication, taking into account the most appropriate legal tools available. Some of these policies may be published, for example, as municipal resolutions or announcements, in compliance with the current regional or national legislation.

"Establishment of Organizational Structures for Data Governance, Management, and Use Process"

Even though the established Working Group is making initial efforts to address the strategy, it is necessary to create an organizational structure responsible for coordinating the necessary work related to the governance, management, and quality management of open data. For this purpose, the corresponding process detailed in UNE 0077 will be followed. Similar to the first section, the explanation is provided with the structure of the tasks to be developed:

T1. Define an organizational structure for data governance, management, and use. It helps to view the Vistabella City Council as a federated set of council offices and other municipal services that share a common way of working, each with the independence it needs to define and publish its own open data. Remember that, initially, this data pertains to urban transport and cultural events. The task involves identifying individual and collective roles, chains of responsibility and accountability, and a way for these roles to communicate with each other. The main work product will be an organizational structure to support the various activities. These organizational structures must be compatible with the functional role structures that already exist in the City Council. One example is the unit responsible for information, which Law 37/2007 highlights as one of the most important roles. This unit primarily has the following four functions:

- Coordinate information reuse activities with existing policies regarding publications, administrative information, and electronic administration.

- Facilitate information about competent bodies within their scope for receiving, processing, and resolving reuse requests transmitted.

- Promote the provision of information in appropriate formats and keep it updated as much as possible.

- Coordinate and promote awareness, training, and promotional activities.

T2. Establish the necessary skills and knowledge. For each of the functions of the units responsible for information listed above, it will be necessary to identify the skills and knowledge required to manage and publish the open data for which they are responsible. These should cover both the technical aspects of open data publication and domain-specific knowledge of the data being opened. All of this knowledge and these skills should be appropriately recognized and listed. Later on, a working group may be tasked with designing training plans to ensure that the people in the units responsible for information have them.

T3. Monitor the performance of organizational structures. In order to quantify the performance of organizational structures, it will be necessary to define and measure a series of indicators that allow modeling different aspects of the work of the people included in the organizational structures. This may include aspects such as the efficiency and effectiveness of their work or their problem-solving ability.

We have reached the end of this first article in which some aspects of how to use three of the five processes in the UNE 0077:2023 specification have been described to outline what open data governance should look like. This was done using an example of a City Council in an imaginary town called Vistabella, which is interested in publishing open data on urban transport and cultural events.

The content of this guide can be downloaded freely and free of charge from the AENOR portal through the link below by accessing the purchase section. Access to this family of UNE data specifications is sponsored by the Secretary of State for Digitalization and Artificial Intelligence, Directorate General for Data. Although the download requires prior registration, a 100% discount on the total price is applied at the time of finalizing the purchase. After finalizing the purchase, the selected standard or standards can be accessed from the customer area in the my products section.

https://tienda.aenor.com/norma-une-especificacion-une-0077-2023-n0071116

Content developed by Dr. Ismael Caballero, Associate Professor at UCLM, and Dr. Fernando Gualo, PhD in Computer Science, and Chief Executive Officer and Data Quality and Data Governance Consultant. The content and viewpoints reflected in this publication are the sole responsibility of the authors.

Open data plays a relevant role in technological development for many reasons. For example, it is a fundamental component in informed decision making, in process evaluation or even in driving technological innovation. Provided they are of the highest quality, up-to-date and ethically sound, data can be the key ingredient for the success of a project.

In order to fully exploit the benefits of open data in society, the European Union has several initiatives to promote the data economy, a single digital model that encourages data sharing, emphasizing data sovereignty and data governance, the ideal and necessary framework for open data.

In the data economy, as stated in current regulations, the privacy of individuals and the interoperability of data are guaranteed. The regulatory framework is responsible for ensuring compliance with this premise. An example of this can be the modification of Law 37/2007 for the reuse of public sector information in compliance with European Directive 2019/1024. This regulation is aligned with the European Union's Data Strategy, which defines a horizon with a single data market in which a mutual, free and secure exchange between the public and private sectors is facilitated.

To achieve this goal, key issues must be addressed, such as preserving certain legal safeguards or agreeing on common metadata description characteristics that datasets must meet to facilitate cross-industry data access and use, i.e. using a common language to enable interoperability between dataset catalogs.

What are metadata standards?

A first step towards data interoperability and reuse is to develop mechanisms that enable a homogeneous description of the data and that, in addition, this description is easily interpretable and processable by both humans and machines. In this sense, different vocabularies have been created that, over time, have been agreed upon until they have become standards.

Standardized vocabularies offer semantics that serve as a basis for the publication of data sets and act as a "legend" to facilitate understanding of the data content. In the end, it can be said that these vocabularies provide a collection of metadata to describe the data being published; and since all users of that data have access to the metadata and understand its meaning, it is easier to interoperate and reuse the data.

W3C: DCAT and DCAT-AP Standards

At the international level, several organizations that create and maintain standards can be highlighted:

- World Wide Web Consortium (W3C): developed the Data Catalog Vocabulary (DCAT): a description standard designed with the aim of facilitating interoperability between catalogs of datasets published on the web.

- Subsequently, taking DCAT as a basis, DCAT-AP was developed, a specification for the exchange of data descriptions published in data portals in Europe that has more specific DCAT-AP extensions such as:

- GeoDCAT-AP which extends DCAT-AP for the publication of spatial data.

- StatDCAT-AP which also extends DCAT-AP to describe statistical content datasets.
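To make the idea of a catalog description more concrete, here is a minimal Python sketch that builds a DCAT-style record for one dataset and prints it as JSON-LD. The dataset, its URL and the `describe_dataset` helper are hypothetical. The record is deliberately simplified: a real DCAT-AP description has further mandatory and recommended properties and uses controlled vocabularies for values such as the media type.

```python
import json

# Namespaces of the DCAT and Dublin Core Terms vocabularies.
CONTEXT = {
    "dcat": "http://www.w3.org/ns/dcat#",
    "dct": "http://purl.org/dc/terms/",
}


def describe_dataset(title, description, keywords, download_url, media_type):
    """Build a minimal DCAT description of one dataset with one distribution."""
    return {
        "@context": CONTEXT,
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:description": description,
        "dcat:keyword": list(keywords),
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:downloadURL": {"@id": download_url},
                # Simplified: written as a plain string for readability.
                "dcat:mediaType": media_type,
            }
        ],
    }


# Hypothetical dataset; the URL is a placeholder, not a real portal.
dataset = describe_dataset(
    title="Urban bus timetables",
    description="Scheduled departures for all urban bus lines.",
    keywords=["transport", "bus", "timetable"],
    download_url="https://example.org/data/bus-timetables.csv",
    media_type="text/csv",
)

print(json.dumps(dataset, indent=2))
```

Because every catalog that follows the vocabulary uses the same property names, a harvester can read this record without knowing anything about the portal that produced it.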

ISO: International Organization for Standardization

In addition to the World Wide Web Consortium, there are other organizations dedicated to standardization, for example the International Organization for Standardization (ISO).

- Among many other types of standards, ISO has also defined metadata standards for data catalogs:

- ISO 19115 for describing geographic information. As with DCAT, extensions and technical specifications have also been developed from ISO 19115, for example:

- ISO 19115-2 for raster data and imagery.

- ISO 19139, which provides an XML implementation of the vocabulary.

The horizon in metadata standards: challenges and opportunities

The digitalization in the public sector in Spain has also reached the judicial field. The first regulation to establish a legal framework in this regard was the reform that took place through Law 18/2011, of July 5th (LUTICAJ). Since then, there have been advances in the technological modernization of the Administration of Justice. Last year, the Council of Ministers approved a new legislative package to definitively address the digital transformation of the public justice service, the Digital Efficiency Bill.

This project incorporates various measures specifically aimed at promoting data-driven management, in line with the overall approach formulated through the so-called Data Manifesto promoted by the Data Office.

Once the decision to embrace data-driven management has been made, it must be approached taking into account the requirements and implications of Open Government, so that not only the possibilities for improvement in the internal management of judicial activity are strengthened, but also the possibilities for reuse of the information generated as a result of the development of said public service (RISP).

Open data: a premise for the digital transformation of justice

To address the challenge of the digital transformation of justice, data openness is a fundamental requirement. In this regard, open data requires conditions that allow their automated integration in the judicial field. First, an improvement in the accessibility conditions of the data sets must be carried out, which should be in interoperable and reusable formats. In fact, there is a need to promote an institutional model based on interoperability and the establishment of homogeneous conditions that, through standardization adapted to the singularities of the judicial field, facilitate their automated integration.

In order to deepen the synergy between open data and justice, the report prepared by expert Julián Valero identifies the keys to digital transformation in the judicial field, as well as a series of valuable open data sources in the sector.

If you want to learn more about the content of this report, you can watch the interview with its author.

Below, you can download the full report, the executive summary, and a summary presentation.

Books are an inexhaustible source of knowledge and experiences lived by others before us, which we can reuse to move forward in our lives. Libraries, therefore, are places where readers look for books, borrow them and, once they have used them and extracted what they need, return them. It is curious to imagine the reasons why a reader needs to find a particular book on a particular subject.

If several books meet the required characteristics, which criteria weigh most heavily in choosing the one the reader feels best contributes to his or her task? And once the loan period of the book is over, the work of the librarians to bring everything back to an initial state is almost magical.

The process of putting books back on the shelves can be repeated indefinitely. Both on those huge shelves that are publicly available to all readers in the halls, and on those smaller shelves, out of sight, where books that for some reason cannot be made publicly available rest in custody. This process has been going on for centuries since man began to write and to share his knowledge among contemporaries and between generations.

In a sense, data are like books. And data repositories are like libraries: in our daily lives, both professionally and personally, we need data that are on the "shelves" of numerous "libraries". Some, still very few, are open and can be used; others are restricted, and we need permissions to use them.

In any case, they contribute to the development of personal and professional projects; and so, we are understanding that data is the pillar of the new data economy, just as books have been the pillar of knowledge for thousands of years.

As with libraries, in order to choose and use the most appropriate data for our tasks, we need "data librarians to work their magic" to arrange everything in such a way that it is easy to find, access, interoperate and reuse data. That is the secret of the "data wizards": something they warily call FAIR principles so that the rest of us humans cannot discover them. However, it is always possible to give some clues, so that we can make better use of their magic:

- It must be easy to find the data. This is where the "F" in the FAIR principles comes from: "findable". For this, it is important that the data is sufficiently described by an adequate collection of metadata, so that it can be easily searched. In the same way that libraries put a spine label on each book, data needs its own label. The "data wizards" have to find ways to write the labels so that the books are easy to locate, on the one hand, and provide tools (such as search engines) so that users can search for them, on the other. Users, for our part, have to know how to interpret what the different labels mean and how the search tools work (it is impossible not to remember here the protagonists of Dan Brown's "Angels and Demons" searching in the Vatican Library).

- Once you have located the data you intend to use, it must be easy to access and use. This is the "A" in FAIR, for "accessible". Just as you have to become a member and get a library card to borrow a book from a library, the same applies to data: you have to get a licence to access the data. In this sense, it would be ideal to be able to access any book without any kind of prior lock-in, as is the case with open data licensed under CC BY 4.0 or equivalent. But being a member of the "data library" does not necessarily give you access to the entire library. Perhaps for certain data resting on those shelves guarded out of reach of all eyes, you may need certain permissions (it is impossible not to remember here Umberto Eco's "The Name of the Rose").

- It is not enough to be able to access the data, it has to be easy to interoperate with them, understanding their meaning and descriptions. This principle is represented by the "I" for "interoperable" in FAIR. Thus, the "data wizards" have to ensure, by means of the corresponding techniques, that the data are described and can be understood so that they can be used in the users' context of use; although, on many occasions, it will be the users who will have to adapt to be able to operate with the data (impossible not to remember the elvish runes in J.R.R. Tolkien's "The Lord of the Rings").

- Finally, data, like books, has to be reusable to help others again and again to meet their own needs. Hence the "R" for "reusable" in FAIR. To do this, the "data wizards" have to set up mechanisms to ensure that, after use, everything can be returned to that initial state, which will be the starting point from which others will begin their own journeys.
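The four clues above can be turned into a very rough checklist. The Python sketch below is only an illustration of that idea: the field names, the list of open formats and the rules themselves are assumptions made for this example, and a real FAIR assessment is considerably richer.

```python
# Assumed list of open, machine-readable formats (not taken from the article).
OPEN_FORMATS = {"text/csv", "application/json", "application/xml"}


def fair_checklist(record: dict) -> dict[str, bool]:
    """Very rough per-principle checks on a metadata record (illustrative only)."""
    return {
        # Findable: the data carries a "label" that search tools can use.
        "findable": all(record.get(k) for k in ("identifier", "title", "keywords")),
        # Accessible: there is a known place from which to obtain the data.
        "accessible": bool(record.get("access_url")),
        # Interoperable: the format can be processed by common tools.
        "interoperable": record.get("media_type") in OPEN_FORMATS,
        # Reusable: terms of use and origin are stated.
        "reusable": bool(record.get("license")) and bool(record.get("provenance")),
    }


# Hypothetical record: published as PDF and without provenance information.
record = {
    "identifier": "vistabella-cultural-events-2023",
    "title": "Cultural events 2023",
    "keywords": ["culture", "events"],
    "access_url": "https://example.org/data/cultural-events.pdf",
    "media_type": "application/pdf",
    "license": "CC BY 4.0",
}

result = fair_checklist(record)
for principle, ok in result.items():
    print(f"{principle}: {'ok' if ok else 'needs work'}")
```

In this example the record is findable and accessible, but the PDF format and the missing provenance would flag it as needing work on interoperability and reusability.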

As our society moves into the digital economy, our data needs are changing. It is not that we need more data, but that we need to dispose differently of the data that is held, the data that is produced and the data that is made available to users. And we need to be more respectful of the data that is generated, and how we use that data so that we don't violate the rights and freedoms of citizens. So it can be said, we face new challenges, which require new solutions. This forces our "data wizards" to perfect their tricks, but always keeping the essence of their magic, i.e. the FAIR principles.

Recently, at the end of February 2023, an Assembly of these data wizards took place. They discussed how to revise the FAIR principles to perfect these magic tricks for scenarios as relevant as European data spaces and geospatial data, and even how to measure how well the FAIR principles are applied to these new challenges. If you want to see what they talked about, you can watch the videos and review the material at the following link: https://www.go-peg.eu/2023/03/07/go-peg-final-workshop-28-february-20203-1030-1300-cet/

Content prepared by Dr. Ismael Caballero, Lecturer at UCLM

The contents and views reflected in this publication are the sole responsibility of the author.

Motivation

According to the proposal for the European Data Act, data is a fundamental component of the digital economy and an essential resource for ensuring ecological and digital transitions. In recent years, the volume of data generated by humans and machines has experienced an exponential increase. It is essential to unlock the potential of this data by creating opportunities for its reuse, removing obstacles to the development of the data economy, and respecting European norms and values. In line with the mission of reducing the digital divide, measures must be promoted that allow everyone to benefit from these opportunities fairly and equitably.

However, a downside of the high availability of data is that as more data accumulates, chaos ensues when it is not managed properly. The increase in volume, velocity, and variety of data also implies a greater difficulty in ensuring its quality. And in situations where data quality levels are inadequate, as analytical techniques used to process datasets become more sophisticated, individuals and communities can be affected in new and unexpected ways.

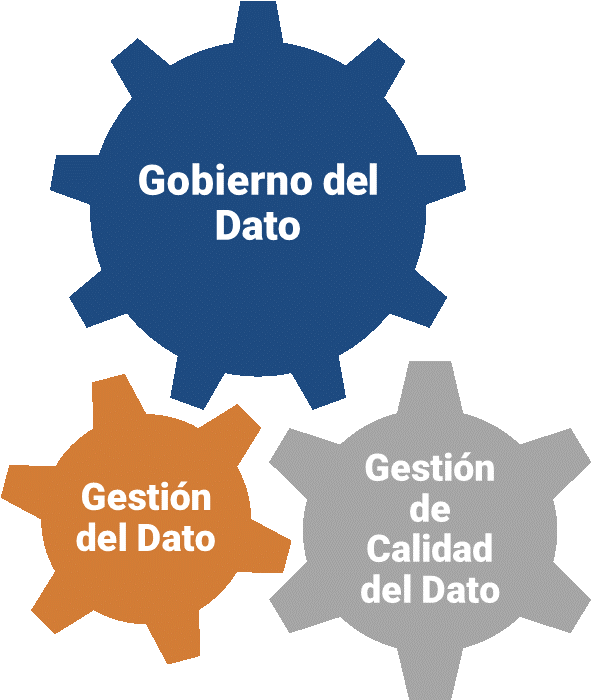

In this changing scenario, it is necessary to establish common processes applicable to data assets throughout an organization's lifecycle, maximizing their value through data governance initiatives that ensure a structured, managed, coherent, and standardized approach to all activities, operations, and services related to data. Ultimately, it must be ensured that the definition, creation, storage, maintenance, access, and use of data (data management) are done following a data strategy aligned with organizational strategies (data governance), and that the data used is suitable for the intended use (data quality).

UNE Specifications for Data Governance, Management, and Quality

The Data Office, a unit responsible for promoting the sharing, management, and use of data across all productive sectors of the Spanish economy and society, in response to the need for a reference framework that supports both public and private organizations in their efforts to ensure adequate data governance, management, and quality, has sponsored, promoted, and participated in the development of national UNE specifications in this regard.

The UNE 0077:2023 Data Governance, UNE 0078:2023 Data Management, and UNE 0079:2023 Data Quality Management specifications are designed to be applied jointly, enabling the creation of a solid and harmonized reference framework that promotes the adoption of sustainable and effective data practices.

Coordination is driven by data governance, which establishes the necessary mechanisms to ensure the proper use and exploitation of data through the implementation and execution of data management processes and data quality management processes, all in accordance with the needs of the relevant business process and taking into account the limitations and possibilities of the organizations that use the data.

Each regulatory specification is presented with a process-oriented approach, and each of the presented processes is described in terms of its contribution to the seven components of a data governance and management system, as introduced in COBIT 2019:

- Process, detailing its purpose, outcome, tasks, and products according to ISO 8000-61.

- Principles, policies, and frameworks.

- Organizational structures, identifying the data governance bodies and decision-making structures.

- Information, required and generated in each process.

- Culture, ethics, and behavior, as a set of individual and collective behaviors of people and the organization.

- People, skills, and competencies needed to complete all activities and make decisions and corrective actions.

- Services, infrastructure, and applications that include technology-related aspects to support data management, data quality management, and data governance processes.

UNE 0077:2023 Specification: Data Governance

The UNE 0077:2023 specification covers aspects related to data governance. It describes the creation of a data governance framework to evaluate, direct, and monitor the use of an organization's data, so that it contributes to its overall performance by obtaining the maximum value from the data while mitigating risks associated with its use. Therefore, data governance has a strategic character, while data management has a more operational focus aimed at achieving the goals set in the strategy.

The implementation of proper data governance involves the correct execution of the following processes:

- Establishment of data strategy

- Establishment of data policies, best practices, and procedures

- Establishment of organizational structures

- Optimization of data risks

- Optimization of data value

UNE 0078:2023 Specification: Data Management

The UNE 0078:2023 specification covers the aspects related to data management. Data management is defined as the set of activities aimed at ensuring the successful delivery of relevant data with adequate levels of quality to the agents involved throughout the data life cycle, supporting the business processes established in the organizational strategy, following the guidelines of data governance, and in accordance with the principles of data quality management.

The implementation of adequate data management involves the development of thirteen processes:

- Data processing

- Management of the technological infrastructure

- Management of data requirements

- Management of data configuration

- Historical data management

- Data security management

- Metadata management

- Management of data architecture and design

- Data sharing, intermediation and integration

- Master data management

- Human resource management

- Data lifecycle management

- Data analysis

UNE 0079:2023 Specification: Data Quality Management

The UNE 0079:2023 specification covers the data quality management processes necessary to establish a framework for improving data quality. Data quality management is defined as the set of activities aimed at ensuring that data has adequate quality levels for use that allows an organization's strategy to be satisfied. Having quality data will allow an organization to achieve the maximum potential of data through its business processes.

According to Deming's continuous improvement PDCA cycle, data quality management involves four processes:

- Data quality planning,

- Data quality control and monitoring,

- Data quality assurance, and

- Data quality improvement.

The data quality management processes are intended to ensure that data meets the data quality requirements expressed in accordance with the ISO/IEC 25012 standard.
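As a small illustration of what checking data against a quality requirement can look like, the Python sketch below measures completeness, one of the data quality characteristics defined in ISO/IEC 25012. The records, the required fields and the 0.9 threshold are hypothetical, and the measure is a simple ratio chosen for this example rather than one prescribed by the specifications.

```python
def completeness(records, required_fields):
    """Share of required field values that are present (not None or empty)."""
    total = len(records) * len(required_fields)
    if total == 0:
        return 1.0  # nothing is required, so nothing is missing
    present = sum(
        1
        for rec in records
        for field in required_fields
        if rec.get(field) not in (None, "")
    )
    return present / total


# Hypothetical records about cultural events; two required values are missing.
events = [
    {"name": "Jazz night", "date": "2023-06-01", "venue": "Main square"},
    {"name": "Book fair", "date": "", "venue": "Library"},
    {"name": "Film cycle", "date": "2023-06-10", "venue": None},
]
REQUIRED = ["name", "date", "venue"]
THRESHOLD = 0.9  # assumed requirement, for illustration only

ratio = completeness(events, REQUIRED)
meets_requirement = ratio >= THRESHOLD
print(f"completeness = {ratio:.2f}, requirement met: {meets_requirement}")
```

A measurement like this belongs to quality control and monitoring; deciding the threshold is part of planning, and acting on a failed check is part of improvement.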

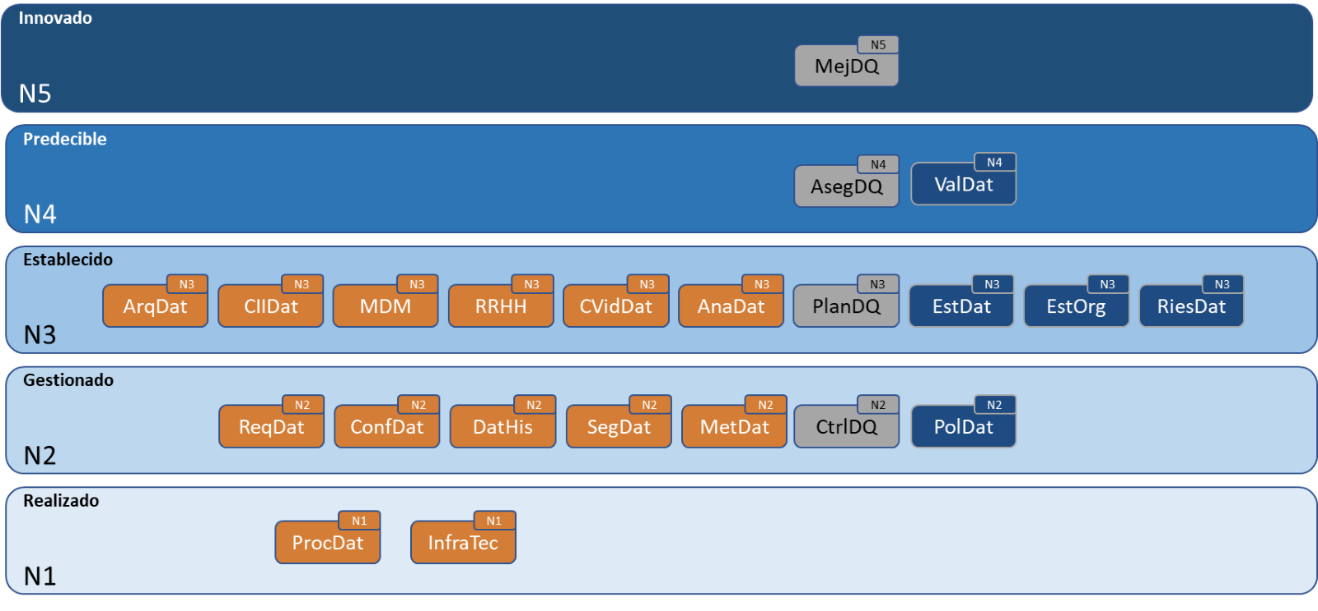

Maturity Model

As a joint application framework for the different specifications, a data maturity model is outlined that integrates the governance, management, and data quality management processes. It shows how processes and their capabilities can be implemented progressively, defining a path of improvement and excellence across different levels towards becoming a mature data organization.

The Data Office will promote the development of the UNE 0080 specification to provide a data maturity assessment model that complies with the content of the governance, management, and data quality management specifications and the aforementioned framework.

The content of this guide, as well as the rest of the UNE specifications mentioned, can be viewed free of charge on the AENOR portal through the links below: go to the purchase section and select “read” in the dropdown where “pdf” is pre-selected. Access to this family of UNE data specifications is sponsored by the Secretary of State for Digitalization and Artificial Intelligence, Directorate General for Data. Although viewing requires prior registration, a 100% discount on the total price is applied when the purchase is finalized. After that, the selected standard or standards can be accessed from the customer area, in the "my products" section.

- UNE 0077:2023 SPECIFICATION: https://tienda.aenor.com/norma-une-especificacion-une-0077-2023-n0071116

- UNE 0078:2023 SPECIFICATION: https://tienda.aenor.com/norma-une-especificacion-une-0078-2023-n0071117

- UNE 0079:2023 SPECIFICATION: https://tienda.aenor.com/norma-une-especificacion-une-0079-2023-n0071118

- UNE 0080:2023 SPECIFICATION: https://tienda.aenor.com/norma-une-especificacion-une-0080-2023-n0071383

The Manifesto for a public data space has recently been published. The document stresses the need to reinforce the importance of data in the ongoing digital transformation of the Justice system. It was drawn up within the State Technical Committee of the Electronic Judicial Administration and subsequently ratified by the public administrations competent in matters of Justice, i.e. the General State Administration through the Ministry of Justice and the Autonomous Communities that have assumed competence in this field, as well as the General Council of the Judiciary and the General State Prosecutor's Office.

Specifically, as the document itself states, it is "an instrument that seeks to improve the efficiency of Justice through data processing and to design public policies in the field of Justice, based on the consideration of data as a public good, in such a way as to guarantee both its production and its free access".

What are the main objectives to be achieved?

The document is part of a wider initiative called Data-driven Justice which, within the broader framework of the transformation of the public service of Justice, is conceived as a priority project for the Administration of Justice. Its main purpose is the creation of a secure, interoperable and reuse-oriented public data space. Specifically, it aims to:

- Promote a data-driven management model underpinning the transformation of Justice.

- Guarantee free access to data as a priority, given that data must be considered a public good.

- Promote a secure, interoperable and reuse-oriented public data space, which implies the need to address technical, organisational and, ultimately, legal challenges and problems. To this end, a governance model is proposed based on the configuration of access to data as a right, the promotion of interoperability, as well as, among other principles, the promotion of data literacy and the rejection of practices that prevent the re-use of data or, where appropriate, imply the recognition of exclusive rights.

- Ensure innovation in the field of Justice with a solution-oriented approach to concrete problems, in particular to promote cohesion and equality.

Difficulties and challenges from an open data and re-use perspective

This is undoubtedly an appealing approach which, nevertheless, faces important challenges that go beyond the mere approval of formal documents and the promotion of legislative reforms.

Firstly, a plurality of actors is involved, and the public management of the judicial sphere has a dual perspective. On the one hand, the Ministry of Justice or, as the case may be, the Autonomous Communities with transferred powers are the administrations that provide the material and personal resources to support management and, therefore, are responsible for exercising the powers relating to access and re-use of the information linked to their own sphere of competence. On the other hand, the Constitution reserves the exercise of the judicial function exclusively to judges and courts, which implies a significant role in the processing and management of documents. In this respect, the legislation grants an important role to the General Council of the Judiciary as regards access to and re-use of judicial decisions. Undoubtedly, the fact that the judicial governing body has ratified the Manifesto represents an important commitment beyond the legal regulation.

Secondly, although there has been significant progress since the approval in 2011 of a legislative framework aimed at promoting the digitisation of Justice, the daily reality of courts and tribunals often demonstrates the continued importance of paper-based management. Furthermore, major interoperability problems sometimes persist and, ultimately, the interconnection of the different technological tools and information systems is not always guaranteed in practice.

In order to address these challenges, two major initiatives have been promoted in recent months. The first is the reform envisaged in the Draft Act on Procedural Efficiency Measures in the Public Justice Service, which shows that the modernisation of the judiciary is still a pending objective. However, this is not a purely technological challenge: it also requires important reforms in the organisational structure, document management and, in short, the culture that pervades a highly formalised area of the public sector. A major effort is therefore needed to manage the change that the Manifesto aims to promote.

With regard to open data and the re-use of public sector information, it is necessary to distinguish between purely administrative management, where the competence corresponds to the public administrations, as mentioned above, and judicial decisions, the latter being in the hands of the General Council of the Judiciary. In this respect, the important effort made by the judges' governing body to facilitate access to statistical information must be acknowledged. However, access to judicial decisions for re-use is subject to significant restrictions which should be reconsidered in the light of European regulation. Even taking into account the progress made at the time with the service of access to judicial decisions available through the CENDOJ, this remains a model with significant limitations that may hinder the promotion of advanced digital services based on the use of data.

Even though the last attempt to regulate the singularities of the re-use of judicial information by the General Council of the Judiciary ended up being annulled by the Supreme Court, the aforementioned Draft Act contemplates a relevant measure in this respect. Specifically, within the framework of the electronic archiving of documents and files, it entrusts the General Council of the Judiciary with the regulation of "the re-use of judgments and other judicial decisions by digital means of reference or forwarding of information, whether or not for commercial purposes, by natural or legal persons to facilitate access to them by third parties".

More recently, at the end of July, the Council of Ministers approved a second legislative initiative that is already being processed in the Spanish Parliament and which incorporates some measures specifically dedicated to the promotion of digital efficiency. Specifically, in relation to the electronic judicial file, the reform aims to go beyond the document-based management model and proposes a paradigm shift based on the establishment of the general principle of a data-based justice system that, among other possibilities, facilitates "automated, proactive and assisted actions". With regard to open data and the reuse of information, the draft legislation includes a specific title that provides for the publication of open data on the Justice Administration Portal according to interoperability criteria and, whenever possible, in formats that allow automatic processing.

In short, data-driven management in the judicial sphere and, in particular, access to judicial information for reuse purposes require a process of in-depth reflection in which not only the competent public bodies and the legal publishers offering access to jurisprudence can participate but also, more broadly, the various legal professions and society in general. Beyond the promotion of promising initiatives such as the Forum on the Digital Transformation of Justice, the first edition of which took place a few months ago, and the timely organisation of academic events where this debate can take place, such as the one held last October, ultimately we must start from an elementary principle: the need to promote a management model based on the opening up of information by default and by design. Only on this premise can the effective re-use of information in the public service of Justice be promoted definitively and with the appropriate legal guarantees.

Therefore, in view of the important legal reforms that are being processed, the time seems to have come to make a definitive commitment to the value of data in the judicial sphere, in line with the objectives that the aforementioned Manifesto intends to address.

Content prepared by Julián Valero, professor at the University of Murcia and Coordinator of the Research Group "Innovation, Law and Technology" (iDerTec).

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

Nowadays we can find a great deal of legislative information on the web. Countries, regions and municipalities make their regulatory and legal texts public through various spaces and official bulletins. This information can be very valuable in driving improvements in the sector: from making legal information easier to locate to developing chatbots capable of resolving citizens' legal queries.

However, locating, accessing and reusing these documents is often complex, due to differences in legal systems, languages and the different technical systems used to store and manage the data.

To address this challenge, the European Union has a standard for identifying and describing legislation called the European Legislation Identifier (ELI).

What is the European Legislation Identifier?

The ELI emerged in 2012 through Council Conclusions (2012/C 325/02) in which the European Union invited Member States to adopt a standard for the identification and description of legal documents. This initiative has been further developed and enriched by new conclusions published in 2017 (2017/C 441/05) and 2019 (2019/C 360/01).

The ELI, which is based on a voluntary agreement between EU countries, aims to facilitate access, sharing and interconnection of legal information published in national, European and global systems. This facilitates its availability as open datasets, fostering its re-use.

Specifically, the ELI makes it possible to:

- Identify legislative documents, such as regulations or legal resources, by means of a unique identifier (URI) that is understandable by both humans and machines.

- Define the characteristics of each document through automatically processable metadata. To this end, it uses vocabularies defined by means of ontologies agreed and recommended for each field.

Thanks to this, a series of advantages are achieved:

- It provides higher quality and reliability.

- It increases efficiency in information flows, reducing time and saving costs.

- It optimises and speeds up access to legislation from different legal systems by providing information in a uniform manner.

- It improves the interoperability of legal systems, facilitating cooperation between countries.

- It facilitates the re-use of legal data as a basis for new value-added services and products that improve the efficiency of the sector.

- It boosts transparency and accountability of Member States.

Implementation of the ELI in Spain

The ELI is a flexible system that must be adapted to the peculiarities of each territory. In the case of the Spanish legal system, there are various legal and technical aspects that condition its implementation.

One of the main conditioning factors is the plurality of issuers, with regulations at national, regional and local level, each of which has its own means of official publication. In addition, each body publishes documents in the formats it considers appropriate (pdf, html, xml, etc.) and with different metadata. To this must be added linguistic plurality, whereby each bulletin is published in the official languages concerned.

It was therefore agreed that the implementation of the ELI would be carried out in a coordinated manner by all administrations, within the framework of the Sectoral Commission for e-Government (CSAE), in two phases:

- Due to the complexity of local regulations, in the first phase, it was decided to address only the technical specification applicable to the State and the Autonomous Communities, by agreement of the CSAE of 13 March 2018.

- In February 2022, a new version was drafted to include local regulations in its application.

With this new specification, the common guidelines for the implementation of the ELI in the Spanish context are established, but respecting the particularities of each body. In other words, it only includes the minimum elements necessary to guarantee the interoperability of the legal information published at all levels of administration, but each body is still allowed to maintain its own official journals, databases, internal processes, etc.

With regard to the temporal scope, bodies have to apply these specifications in the following way:

- State regulations: apply to those published from 29/12/1978, as well as those published before if they have a consolidated version.

- Autonomous Community legislation: applies to legislation published on or after 29/12/1978.

- Local regulations: each entity may apply its own criteria.
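The temporal scope rules above can be expressed as a small decision function. This is only a sketch of the three rules as stated here: the level names and the function name are hypothetical, and for local regulations the function returns `None` because each entity applies its own criteria.

```python
from datetime import date
from typing import Optional

# Cut-off date given in the text: 29/12/1978.
CUTOFF = date(1978, 12, 29)


def eli_applies(level: str, published: date, has_consolidated_version: bool = False) -> Optional[bool]:
    """Return True/False when the rule is fixed, or None when the entity decides."""
    if level == "state":
        # Published from the cut-off date, or earlier if a consolidated version exists.
        return published >= CUTOFF or has_consolidated_version
    if level == "autonomous_community":
        # Published on or after the cut-off date.
        return published >= CUTOFF
    if level == "local":
        # Each local entity may apply its own criteria.
        return None
    raise ValueError(f"unknown level: {level!r}")


print(eli_applies("state", date(1970, 1, 1), has_consolidated_version=True))
print(eli_applies("autonomous_community", date(1970, 1, 1)))
print(eli_applies("local", date(2020, 5, 4)))
```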

How to implement the ELI?

The website https://www.elidata.es/ offers technical resources for the application of the identifier. It explains the contextual model, provides different templates to facilitate its implementation and offers the list of common minimum metadata, among other resources.

In addition, to facilitate national coordination and the sharing of experiences, information on the implementation carried out by the different administrations can also be found on the website.

The ELI is already applied, for example, in the Official State Gazette (BOE). From its website it is possible to access all the regulations in the BOE identified with ELI, distinguishing between state and autonomous community regulations. If we take as a reference a regulation such as Royal Decree-Law 24/2021, which transposed several European directives (including the one on open data and reuse of public sector information), we can see that it includes an ELI permalink.
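To give an idea of how such a permalink can be composed and read by a machine, here is a minimal sketch. The path layout used (jurisdiction, document type, year, month, day, number) and the type code `rdl` are assumptions made for illustration; the authoritative URI templates are those in the technical specification published at elidata.es. The class and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class EliParts:
    jurisdiction: str  # e.g. "es" for State regulations (assumed code)
    doc_type: str      # e.g. "rdl" for a Royal Decree-Law (assumed code)
    doc_date: date     # date of the regulation
    number: str        # official number of the regulation


def build_eli(base: str, parts: EliParts) -> str:
    """Compose an ELI-style URI following the assumed path layout."""
    d = parts.doc_date
    return (
        f"{base.rstrip('/')}/eli/{parts.jurisdiction}/{parts.doc_type}/"
        f"{d.year:04d}/{d.month:02d}/{d.day:02d}/{parts.number}"
    )


def parse_eli(uri: str) -> EliParts:
    """Split an ELI-style URI back into its components."""
    _, _, path = uri.partition("/eli/")
    segments = path.strip("/").split("/")
    if len(segments) < 6:
        raise ValueError(f"not an ELI-style URI under the assumed layout: {uri!r}")
    jurisdiction, doc_type, year, month, day, number = segments[:6]
    return EliParts(jurisdiction, doc_type, date(int(year), int(month), int(day)), number)


# Royal Decree-Law 24/2021, of 2 November.
parts = EliParts("es", "rdl", date(2021, 11, 2), "24")
uri = build_eli("https://www.boe.es", parts)
print(uri)
print(parse_eli(uri))
```

Because every component of the identifier is explicit, the same URI is readable by a person and trivially parseable by software, which is the property the ELI relies on.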

In short, we are faced with a very useful common mechanism to facilitate the interoperability of legal information, which can promote its reuse not only at a national level, but also at a European level, favouring the creation of the European Union's area of freedom, security and justice.

Content prepared by the datos.gob.es team.

This report published by the European Data Portal (EDP) covers the following topics.

Making data available as open data in all EU Member States is vital to harnessing its potential for European society and economy. In order to increase impact effectively, efforts must target the datasets that have the greatest potential in society and the economy.

In the regulation on open data and re-use of public sector information, the European Commission is mandated to adopt an implementing regulation specifying high-value datasets.

The line of argument developed in this report parallels what the Commission has done during the first quarter of 2021, to prepare the implementing regulation that includes a list of high-value datasets. This report reviews relevant literature, policy decisions and national initiatives to enable a deeper understanding of the situation around assessing the value of datasets.

The report is available at this link: "High-Value Datasets: Understanding the Data Providers' Perspective"

The current Directive 2019/1024 on open data and re-use of public sector information, adopted in June 2019, was established to replace and improve the former Directive 2003/98/EC. Among its objectives was to boost the availability of public sector data for re-use by establishing some minimum harmonisation rules that favour its use as a raw material for innovation in all economic sectors. It should be noted that this directive has been incorporated into Spanish law through Royal Decree-Law 24/2021, of 2 November, transposing several European Union directives.

Among the most significant changes introduced by Directive 2019/1024 was the drawing up of a list of high-value datasets to be highlighted among those held by public bodies.

High-value data: definition and characteristics

The Directive describes high-value data as "documents whose re-use is associated with considerable benefits for society, the environment and the economy, in particular because of their suitability for the creation of new, decent and quality value-added services, applications and jobs, and the number of potential beneficiaries of value-added services and applications based on such datasets".

This definition provides some clues as to how such high-value datasets can be identified. Identification can be carried out through a series of indicators including:

- Potential to generate:

  - Significant social or environmental benefits

  - Economic benefits and new revenues

  - Innovative services

- Potential in terms of number of users benefited, with a particular focus on SMEs.

- Ability to be combined with other datasets.

How should high-value data be published?

According to the Directive, the publication of these datasets has to meet the following requirements:

- Be reusable free of charge.

- Be available through application programming interfaces (APIs).

- Be available in a machine-readable format.

- Feature a bulk download option, where possible.

In addition, they should be compatible with open standard licences.
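The requirements above lend themselves to a simple self-assessment checklist. The sketch below is one hypothetical way a publisher might record them for a dataset and list what is still missing; the class, field names and messages are illustrative and do not come from the Directive.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DatasetPublication:
    free_of_charge: bool
    has_api: bool
    machine_readable: bool
    bulk_download: bool
    # Bulk download is only required "where possible".
    bulk_download_feasible: bool = True
    open_standard_licence: bool = True


def unmet_requirements(pub: DatasetPublication) -> List[str]:
    """Return the publication requirements that the dataset does not yet meet."""
    unmet = []
    if not pub.free_of_charge:
        unmet.append("reusable free of charge")
    if not pub.has_api:
        unmet.append("available through an API")
    if not pub.machine_readable:
        unmet.append("machine-readable format")
    if pub.bulk_download_feasible and not pub.bulk_download:
        unmet.append("bulk download option")
    if not pub.open_standard_licence:
        unmet.append("compatible with open standard licences")
    return unmet


# Hypothetical dataset: free and machine-readable, but without an API,
# and for which bulk download is not feasible.
example = DatasetPublication(
    free_of_charge=True,
    has_api=False,
    machine_readable=True,
    bulk_download=False,
    bulk_download_feasible=False,
)
print(unmet_requirements(example))  # ['available through an API']
```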

Which thematic categories are considered high-value data?

The European Data Strategy incorporates high-value data as a common data layer that facilitates, together with data from the private sector, the deployment of sectoral data spaces in strategic areas.

Originally, the directive included in its annex a number of priority themes that could be considered high-value data: geospatial data, earth observation and environmental data, meteorological data, statistical data, business registers or transport data.

However, these categories were very broad. The EU has therefore launched an initiative to establish a list indicating more precisely what types of data are considered high-value and how they should be published. Following an extensive consultation of stakeholders and taking into account the outcome of the impact assessment, the Commission identified, within each of the six data categories, a number of datasets of particular value and the arrangements for their publication and re-use.

The list takes the form of a binding implementing act. The granularity and modality of publication varies from one dataset to another, trying to strike a balance between the potential socio-economic and environmental benefits and the financial and organisational burden to be borne by public data holders. Existing sectoral legislation governing these datasets should also be taken into account.

Open comment period on the draft law "Open Data: Availability of public datasets".

The next step is to gather citizens' feedback on the proposed datasets. The European Commission currently has a specific section open on its website where any citizen of the European Union can provide comments to help improve and enrich this initiative. The public consultation will run for four weeks, from 24 May to 21 June 2022.

In order to submit your comments, you need to register using your email address or popular social networks such as Twitter or Facebook.

Remember that, for your opinion to be taken into account by the public body, your comment must comply with the established rules and standards. In addition, you can consult the comments already made by other citizens from different countries, which are publicly available. The website also includes a visualisation that presents data on the number of opinions offered per country or the category to which the participants belong (private companies, academic institutions, research institutions, NGOs, citizens, etc.).

This list will be a really important milestone as, for the first time in many years, it will be possible to establish an explicit and common guide on which minimum datasets should always be available and under what conditions they can be re-used throughout the European Union.

At the Spanish level, the Data Office, in collaboration with stakeholders, will be in charge of putting this list into practice and specifying other additional datasets, both public and private, based on what is indicated in Royal Decree-Law 24/2021.

Data is a key pillar of digital transformation. Reliable, quality data underpins everything from the main strategic decisions to routine operational processes. It is fundamental to the development of data spaces and is the basis of disruptive solutions linked to fields such as artificial intelligence or Big Data.

In this sense, the correct management and governance of data has become a strategic activity for all types of organizations, public and private.

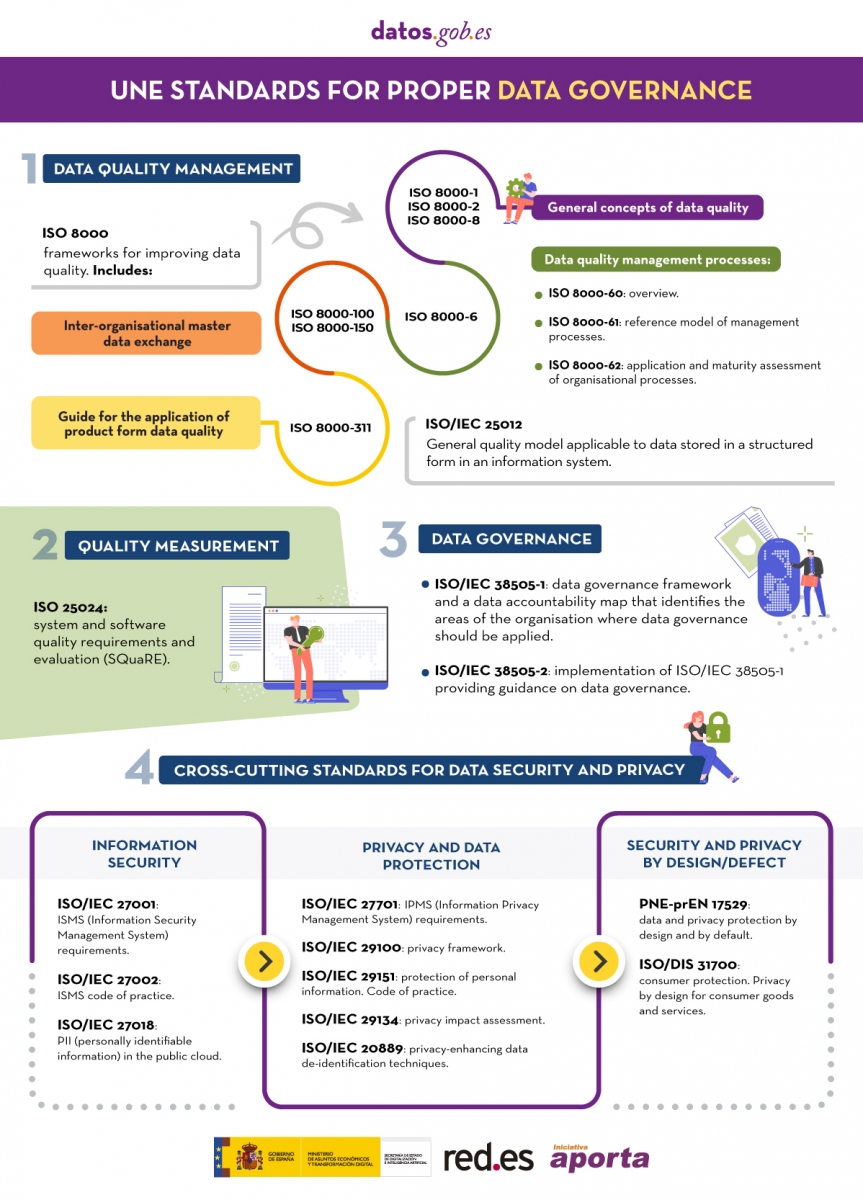

Data governance standardization is based on 4 principles:

- Governance

- Management

- Quality

- Security and data privacy

Those organizations that want to implement a solid governance framework based on these pillars have at their disposal a series of technical standards that provide guiding principles to ensure that an organization's data is properly managed and governed, both internally and by external contracts.

To help clarify doubts in this matter, the Spanish Association for Standardization (UNE) has published various support materials.

The first is an article on the different technical standards to consider when developing effective data governance. The standards covered in that article, together with some additional ones, are summarized in the following infographic:

In addition, the UNE has also published the report "Standards for the data economy", which can be downloaded at the end of this article. The report begins with an introduction that examines in depth the European legislative context promoting the Data Economy and its recognition of technical standardization as a key tool for achieving the objectives set. The technical standards included in the previous infographic are then analyzed in more detail.