"Visualization is critical for data analysis. It provides a first line of attack, revealing intricate structures in data that cannot be absorbed otherwise. We discover unimaginable effects and question those that have been imagined."

William S. Cleveland (from Visualizing Data, Hobart Press)

Over the years an enormous amount of public information has been generated and stored. This information, if viewed in a linear fashion, consists of a large number of disjointed numbers and facts that, out of context, lack any meaning. For this reason, visualization is presented as an easy solution towards understanding and interpreting information.

To obtain good visualizations, it is necessary to work with data that meets two characteristics:

- It must be quality data: accurate, complete, reliable, current and relevant.

- It must be well treated: conveniently identified, correctly extracted, structured, etc.

Therefore, it is important to process the information properly before its graphic treatment. Data processing and visualization form an attractive tandem for users, who increasingly demand to interpret data quickly and with agility.

There are a large number of tools for this purpose. The report "Data processing and visualization tools" offers a list of tools that help at every stage of data processing, from obtaining the data to creating a visualization that allows us to interpret it in a simple way.

What can you find in the report?

The guide includes a collection of tools for:

- Web scraping

- Data cleaning

- Data conversion

- Data analysis for programmers and non-programmers

- Data visualization: generic services, geospatial services, and libraries and APIs

- Network analysis

All the tools present in the guide have a freely available version so that any user can access them.

New edition 2021: incorporation of new tools

The first version of this report was published in 2016. Five years later it has been updated. The new features and changes are:

- New currently popular data visualization and processing tools such as Talend Open Studio, Python, Kibana or Knime have been incorporated.

- Some outdated tools have been removed.

- The layout has been updated.

If you know of any additional tools, not currently included in the guide, we invite you to share the information in the comments.

In addition, we have prepared a series of posts explaining the different types of tools that can be found in the report.

The saying "a picture is worth a thousand words" is a clear example of popular wisdom based on science. 90% of the information we process is visual, thanks to a million nerve fibers that link the eye to the brain and more than 20 billion neurons that process the impulses received at high speed. That is why we are able to remember 80% of the images we see, while for text and sound the percentages drop to 20% and 10%, respectively.

These figures explain the importance of data visualization in any sector of activity. Describing how an indicator evolves is not the same as seeing it through visual elements such as graphs or maps. Data visualization helps us understand complex concepts and is an accessible way to detect and understand trends and patterns in the data.

Data Visualization and Smart Cities

In Smart Cities, where so much information is generated and captured, data visualization is fundamental. Throughout a smart city there are a large number of sensors and smart devices, with different detection capabilities, which generate a large amount of raw data. To give an example, the city of Barcelona alone has more than 18,000 sensors spread throughout the city that capture millions of data points. This data supports everything from real-time monitoring of the environment to informed decision making and accountability. Visualizing this data through dashboards speeds up all these processes.

To help Smart Cities in this task, the Open Cities project, led by Red.es and four city councils (A Coruña, Madrid, Santiago de Compostela and Zaragoza), has selected a series of visualization tools and developed a CKAN extension similar to the "Open With Apps" functionality, initially designed for the Data.gov portal, which facilitates integration with this type of tool.

The integration method inspired by "Open with Apps"

The idea behind "Open With Apps" is to allow integration with some third party services, for some formats published in the open data portals, such as CSV or XLS, without the need to download and upload data manually, through the APIs or URIs of the external service.

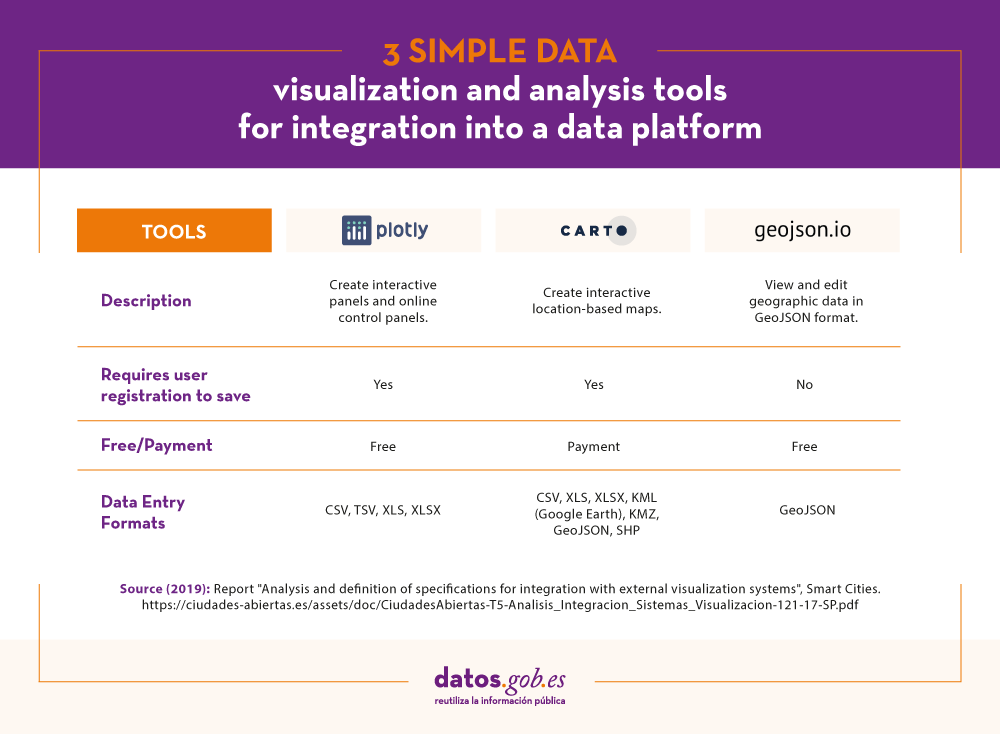

But not all visualization systems allow this functionality. Therefore, the Open Cities project has analyzed several platforms and online tools for creating visualizations and analyzing data, and has selected 3 that meet the characteristics required for the operation described:

- The integration is done through links to websites without the need to download any software.

- In the invocation it is only necessary to pass as a parameter the download URL of the data file.
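
The two requirements above amount to building a link whose only parameter is the download URL of the data file. The following is a minimal sketch in R, not the project's actual implementation: the service endpoint (`https://viz.example.org/open`), the parameter name (`url`) and the data file URL are hypothetical placeholders, since the real values for each tool are documented in the report rather than here.

```r
# Build an "Open with"-style link: the external service receives only the
# download URL of the data file as a query parameter.
# NOTE: the endpoint and the parameter name are hypothetical placeholders.
build_open_with_link <- function(download_url,
                                 service_endpoint = "https://viz.example.org/open",
                                 param_name = "url") {
  # Percent-encode reserved characters (?, &, =, /, :) so the data URL
  # survives being nested inside another URL.
  encoded <- utils::URLencode(download_url, reserved = TRUE)
  paste0(service_endpoint, "?", param_name, "=", encoded)
}

# Hypothetical download URL of a CSV file published in an open data portal
data_url <- "https://data.example.org/files/air.csv?year=2020&format=csv"
link <- build_open_with_link(data_url)
print(link)
```

Encoding the nested URL matters because an unescaped `&` in the data file's address would otherwise be read as a separate parameter of the external service.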

The result of this analysis has given rise to the report "Analysis and definition of specifications for integration with external visualization systems", where 3 tools that comply with these functionalities are highlighted.

3 simple data visualization and analysis tools

According to the aforementioned report, the 3 platforms that meet the necessary characteristics to achieve such operation are:

- Plotly: facilitates the creation of interactive data visualizations and control panels to share online with the audience. More advanced users can process data with any custom function, as well as create simulations with Python scripts. Supported formats are CSV, TSV, XLS and XLSX.

- Carto: formerly known as CartoDB, it generates interactive maps from geospatial data. The maps are automatically created and the user can filter and refine the data for more information. It accepts files in CSV, XLS, XLSX, KML (Google Earth), KMZ, GeoJSON and SHP formats.

- Geojson.io: allows users to visualize and edit geographic data in GeoJSON format, as well as to export it to a large number of formats.

For each of these tools the report includes a description of its requirements and limitations, its mode of use, a generic call and specific examples of calls along with the result obtained.

The "Open with" extension

As mentioned above, a CKAN extension called "Open with" has also been developed within the project. This extension makes it possible to visualize data files using the external visualization systems described above. It can be accessed through the project's GitHub account.

The report explains how to carry out its installation in a simple way, although if any doubt arises about its operation, users can contact Open Cities through the e-mail contacto@ciudadesabiertas.es.

Those interested in other CKAN extensions related to data visualization have at their disposal the report Analysis of the Visualization Extensions for CKAN, carried out within the framework of the same initiative. Examples of the visualizations produced are expected to be published in the GitHub account.

In short, data visualization is a fundamental pillar of Smart Cities, and thanks to the work of the Open Cities team it will now be easier for any initiative to integrate simple data visualization solutions into its information management platforms.

Introduction

In this new post we introduce a topic that is important in the data analysis sector and yet tends to go unnoticed by most of the non-specialist audience. When we talk about advanced data analytics, we tend to think of sophisticated tools and advanced knowledge of machine learning and artificial intelligence. Without detracting from these skills, so much in demand today, there are far more basic aspects of data analysis that have a much greater impact on the end user or consumer of results. This time we talk about the communication of data analysis. Good communication of the results and process of a data analysis can make the difference between the success and failure of a data analytics project.

Communication. An integrated process

We might think that communicating a data analysis is a later process, decoupled from the technical analysis itself; after all, it is something that is left for last. This is a very common mistake among analysts and data scientists. Communication must be integrated with the analysis process, from the more tactical level of documenting the code and the analysis process to communication with the final audience (in the form of presentations and/or reports). Everything must be one integrated process. In the same way that the DevOps philosophy has prevailed in the world of software development, in the data analysis space the DataOps philosophy must prevail. In both cases, the goal is continuous and agile delivery of value in the form of software and data.

Gartner defines DataOps as "a collaborative data management practice focused on improving communication, integration and automation of data flows between data managers and consumers in an enterprise."

Innovation Insight for DataOps

Benefits of using an integrated data analysis and communication methodology.

- A single controlled and governed process. When we adopt DataOps we can be sure that all stages of data analysis are under control, governed and highly automated. This results in control and security of the data pipeline.

- Reproducible data science. When we communicate results and/or part of the data analysis process, it is common for other collaborators to start from our work to try to improve or modify the results. Sometimes they will just try to reproduce the same results. If the final communication has been part of the process in an integrated and automated way, collaborators will have no problem reproducing those results themselves. Otherwise, if the communication was done at the end of the process and decoupled from the analysis (both in time and in the tools used), there is a high probability that the attempt to reproduce it will fail. Software development processes, whether they include data or not, are highly iterative: hundreds if not thousands of code changes are made before the desired results are obtained. If these iterative changes, no matter how small, are decoupled from the final communication, the result obtained will almost certainly have omitted changes, which makes direct reproduction impossible.

- Continuous delivery of value. On many occasions I have experienced situations in which the preparation of results is left as the last phase of a project or data analysis process. Most of the effort is focused on developing the data analysis and the algorithms (where applicable). This has a clear consequence: the last task is to prepare the communication, and therefore it is the one that ends up receiving the least attention. All the team's effort has been spent in previous phases. We are exhausted, and documentation and communication are all that separate us from delivering the project. As a consequence, project documentation will be insufficient and communication poor. However, when we present the results to our clients, we will desperately try to convince them that an excellent job of analysis has been done (and it will have been), but our only weapon is the communication we have prepared, and it predictably leaves much to be desired.

- Improvement in the quality of communication. When we integrate development and communication, we are monitoring at all times what our clients are going to consume. In this way, during the analysis process, we have the agility to modify the results we are producing (in the form of graphs, tables, etc.) at analysis time. On multiple occasions I have seen how, after closing the analysis phase and reviewing the results produced, we realize that something is not well understood or can be improved in terms of communication. These can be simple things like the colors in a legend or the decimal digits in a table. However, if the analysis has been carried out with very different tools and decoupled from the production of results (for example, a presentation), the very idea of rerunning the analysis project to modify small details will set off alarms for any analyst or data scientist. With the DataOps methodology and the right tools, we just have to rerun the data pipeline with the corresponding changes and everything will be re-created automatically.
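
The last point, rerunning the pipeline to change a presentation detail, can be illustrated with a minimal sketch in base R. The function name `run_pipeline`, the column names and the toy values are assumptions made for illustration only; they do not come from the project described in this post.

```r
# Toy pipeline: raw values -> summary -> presentation table.
# The numbers are invented; only the structure matters.
run_pipeline <- function(raw, digits = 1) {
  # Analysis step: mean value per group
  summary_values <- tapply(raw$value, raw$group, mean)
  # Communication step: format the table with the requested decimals
  data.frame(
    group = names(summary_values),
    mean  = formatC(as.vector(summary_values), format = "f", digits = digits),
    stringsAsFactors = FALSE
  )
}

raw <- data.frame(group = c("a", "a", "b"), value = c(1, 2, 4))

draft <- run_pipeline(raw, digits = 1)    # first draft of the table
revised <- run_pipeline(raw, digits = 3)  # more decimals requested: just rerun
print(draft)
print(revised)
```

Because the formatting lives in the same function as the calculation, changing the number of decimals is a one-argument change followed by a rerun, rather than a manual edit in a separate presentation tool.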

Integrated communication tools

We have talked about the methodology and its benefits, but we must know that the tools to correctly implement the strategy play an important role. Without going any further, this post has been made entirely with a data pipeline that in the same process integrates: (1) the writing of this post, (2) the creation of a website for publication, (3) the versioning of the code and (4) the data analysis, although in this case, it is not relevant, as it only serves to illustrate that it is one more part of the process.

Without going into too many technical details, in a single working environment for both programming and document writing, RStudio, and using the R Markdown and blogdown packages, we can create a complete website where we can publish our posts, in this case about data analysis. The detailed explanation of how to create the website that hosts these posts will be left for another time. For now we are going to focus on generating this post, in which we will show an example of data analysis.

To illustrate the process we are going to use this dataset available at datos.gob.es. It is a set of data that collects the uses of the citizen card of the Gijón City Council, during 2019 in the different services available in the city.

As we can see, at this point we are already integrating the communication of a data analysis with the analysis itself. The first thing we are going to do is load the dataset and see a preview of it.

```r
library(readr)

# Download the Gijón citizen card dataset and preview the first rows
file <- "http://opendata.gijon.es/descargar.php?id=590&tipo=EXCEL"
Citicard <- read_csv2(file, col_names = TRUE)
head(Citicard)
```

| Date | Service | Installation | Uses |
|---|---|---|---|
| 2019-01-01 | Public toilets | Avda Carlos Marx | 642 |
| 2019-01-01 | Public toilets | Avda del Llano | 594 |
| 2019-01-01 | Public toilets | C/ Puerto el Pontón | 139 |
| 2019-01-01 | Public toilets | Cerro Santa Catalina | 146 |
| 2019-01-01 | Public toilets | Donato Argüelles | 1095 |
| 2019-01-01 | Public toilets | El Rinconín | 604 |

Next we are going to run a simple analysis that is an obvious step in any exploratory phase (EDA, Exploratory Data Analysis) of a new data set. We are going to aggregate the data set by date and service, thus obtaining the sum of card uses by date and type of service.

```r
library(dplyr)

# Total card uses per date and type of service
Citi_agg <- Citicard %>%
  group_by(Fecha, Servicio) %>%
  summarise(Usos = sum(Usos))

head(Citi_agg)
```

| Date | Service | Uses |
|---|---|---|
| 2019-01-01 | Public toilets | 17251 |
| 2019-01-01 | Library loans | 15369 |
| 2019-01-01 | Transport | 1201471 |
| 2019-02-01 | Public toilets | 18186 |
| 2019-02-01 | Library loans | 14716 |
| 2019-02-01 | Transport | 1158109 |

We plot the result and observe that the citizen card is used mostly to pay for public transport. Since we have generated an interactive graph, we can use the Autoscale control and click on the legend to hide the transport series and analyze in detail the differences between the use of public toilets and library loans.

```r
library(ggplot2)
library(plotly)

# Stacked bars of card uses (in thousands) per date, coloured by service
Citi_fig <- ggplot(Citi_agg, aes(x = Fecha, y = Usos / 1000, fill = Servicio)) +
  geom_bar(stat = "identity", colour = "white") +
  labs(x = "Fecha", y = "Uso Tarjeta (en miles)") +  # x axis shows dates
  theme(
    axis.title.x = element_text(color = "black", size = 14, face = "bold"),
    axis.title.y = element_text(color = "black", size = 10, face = "bold")
  )

# Convert the static ggplot into an interactive plotly chart
ggplotly(Citi_fig)
```

When we set aside for a moment the use of the card as a means of payment on public transport, we see that the card is used more for access to public toilets and, to a lesser extent, for loans in public libraries. Likewise, zooming in lets us see these differences in specific months more comfortably and in greater detail.

If we ask ourselves what is the distribution of the total use of the Citizen Card throughout 2019, we can generate the following visualization and verify the evident result that the use in public transport represents 97%.

```r
# Share of each service in the total card uses of 2019, in percent
Tot_2019_uses <- sum(Citi_agg$Usos)
Citi_agg_tot <- Citicard %>%
  group_by(Servicio) %>%
  summarise(Usos = 100 * sum(Usos) / Tot_2019_uses) %>%
  arrange(desc(Usos))

knitr::kable(Citi_agg_tot, "pipe", digits = 0,
             col.names = c("Servicio Usado", "Uso Tarjeta en %"))
```

| Used service | Card use (%) |
|---|---|
| Transport | 97 |
| Public toilets | 2 |
| Library loans | 1 |

```r
# Bar chart of the percentage share per service
Citi_fig2 <- ggplot(Citi_agg_tot, aes(x = Servicio, y = Usos, fill = Servicio)) +
  geom_bar(stat = "identity", colour = "white") +
  labs(x = "Servicio", y = "Uso en %") +
  theme(
    axis.title.x = element_text(color = "black", size = 14, face = "bold"),
    axis.title.y = element_text(color = "black", size = 14, face = "bold")
  )

ggplotly(Citi_fig2)
```

And that is it. In this post, we have seen how an integrated communication strategy allows us to integrate our technical analysis with the generation of consumable results in the form of tables and graphs ready for the end user. In the same process we integrate the calculations (aggregations, normalizations, etc.) with the production of quality results in a format adapted to the non-specialist reader. In a non-integrated communication strategy, we would have post-processed the results of the technical analysis at a later point in time, and probably in a different tool. This would have made us less productive while losing track of the steps we followed to generate the final result.

Conclusions

Communication is a fundamental aspect of data analysis. Poor communication can ruin excellent data analysis work, no matter how sophisticated it is. Good communication requires an integrated communication strategy, which means adopting the DataOps philosophy to produce excellent, reproducible and automated data flows. We hope you liked the topic of this post and we will return later with content on DataOps and data communication. See you soon!

Content elaborated by Alejandro Alija, expert in Digital Transformation and Innovation.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

Within this technological maelstrom in which we are constantly immersed, every day that passes, humanity is creating a great amount of information that, in many cases, we are unable to deal with.

Public administrations also generate large volumes of information, which they make available to citizens so that we can reuse it from open data portals, but how can we take advantage of this data?

On many occasions, we think that only experts can analyse these large amounts of information, but this is not the case. In this article we are going to see what opportunities open data presents for users without technical knowledge or experience in data analysis and visualisation.

Generating knowledge in 4 simple steps with a use case

Within the Spanish Government's open data platform, we can find a multitude of data at our disposal. These data are grouped by category, subject, publishing administration, format or other tags that describe their content.

We can load this data into informational analysis applications, such as PowerBI, Qlik, Tableau, TIBCO, Excel, etc., which will help us to create our own graphs and tables with hardly any computer knowledge. These tools allow us to develop our own informational analysis product, with which we can create filters or ad hoc queries. All this without needing other components such as databases or ETL (Extract, Transform, Load) tools.

Next we will see how we can build a first dashboard in a very simple way.

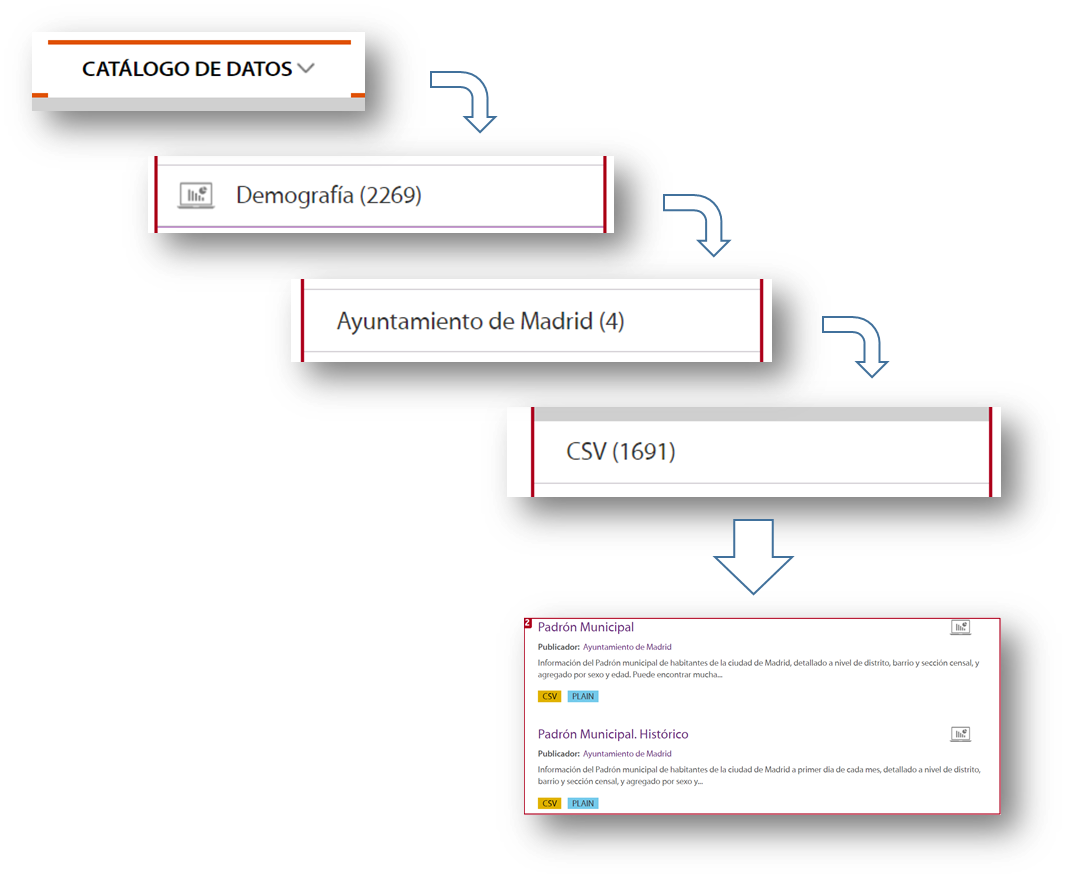

1.- Data selection

Before we start collecting meaningless data, the first thing we must decide is for what purpose we will use the data. The datos.gob.es catalogue is very extensive and it is very easy to get lost in this sea of data, so we must focus on the subject matter we are looking for and the administration that publishes it, if we know it. With this simple action we will greatly reduce the scope of our search.

Once we know what to look for, we must focus on the format of the data:

- If we want to collect the information directly to write our doctoral thesis, write an article for a media outlet with statistical data, or simply acquire new knowledge for our own interest, we will focus on taking information that is already prepared and worked on. We should then use data formats such as pdf, html, jpg, docx, etc. These formats will allow us to gather that knowledge without the need for additional technological tools, since the information is served in visual formats, the so-called unstructured formats.

- If we want to work on the information applying different calculation metrics and cross them with other data in our possession, in that case we must use structured information, that is, XLS, CSV, JSON, XML formats.

As an example, let's imagine that we want to analyse the population of each of the districts of the city of Madrid. In this case the dataset we need is the census of the Madrid City Council.

To locate this set of data, we select Data Catalogue, the Demography category, the City Council of Madrid as publisher and the CSV format, and the information we need appears on the right side of the screen. Another simple and complementary way to locate the information is to use the search engine included in the platform and type in "Padrón "+"Madrid".

With this search, the platform offers, among others, two sets of data: the historical census and the census of the last month published. For this example we will take the document corresponding to the August 2020 update.

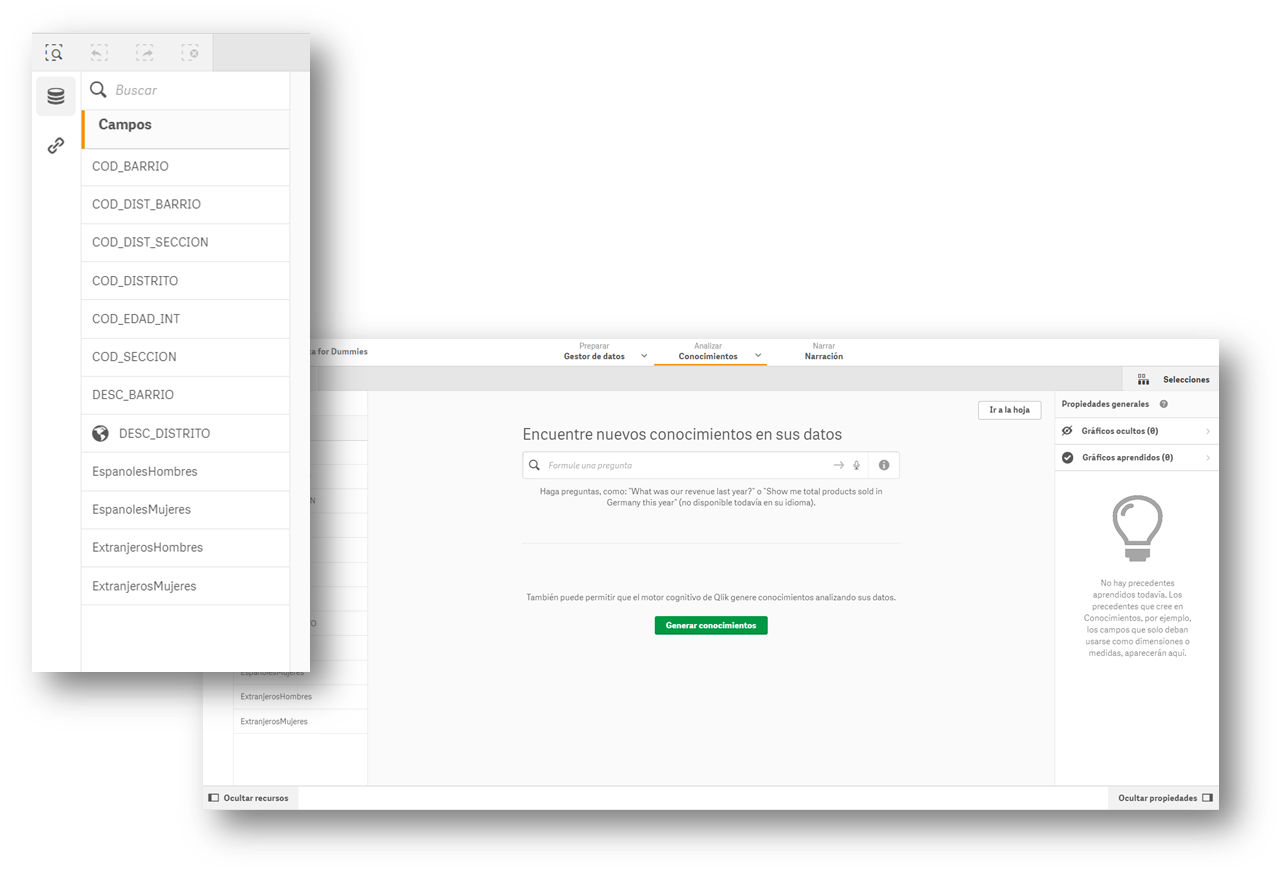

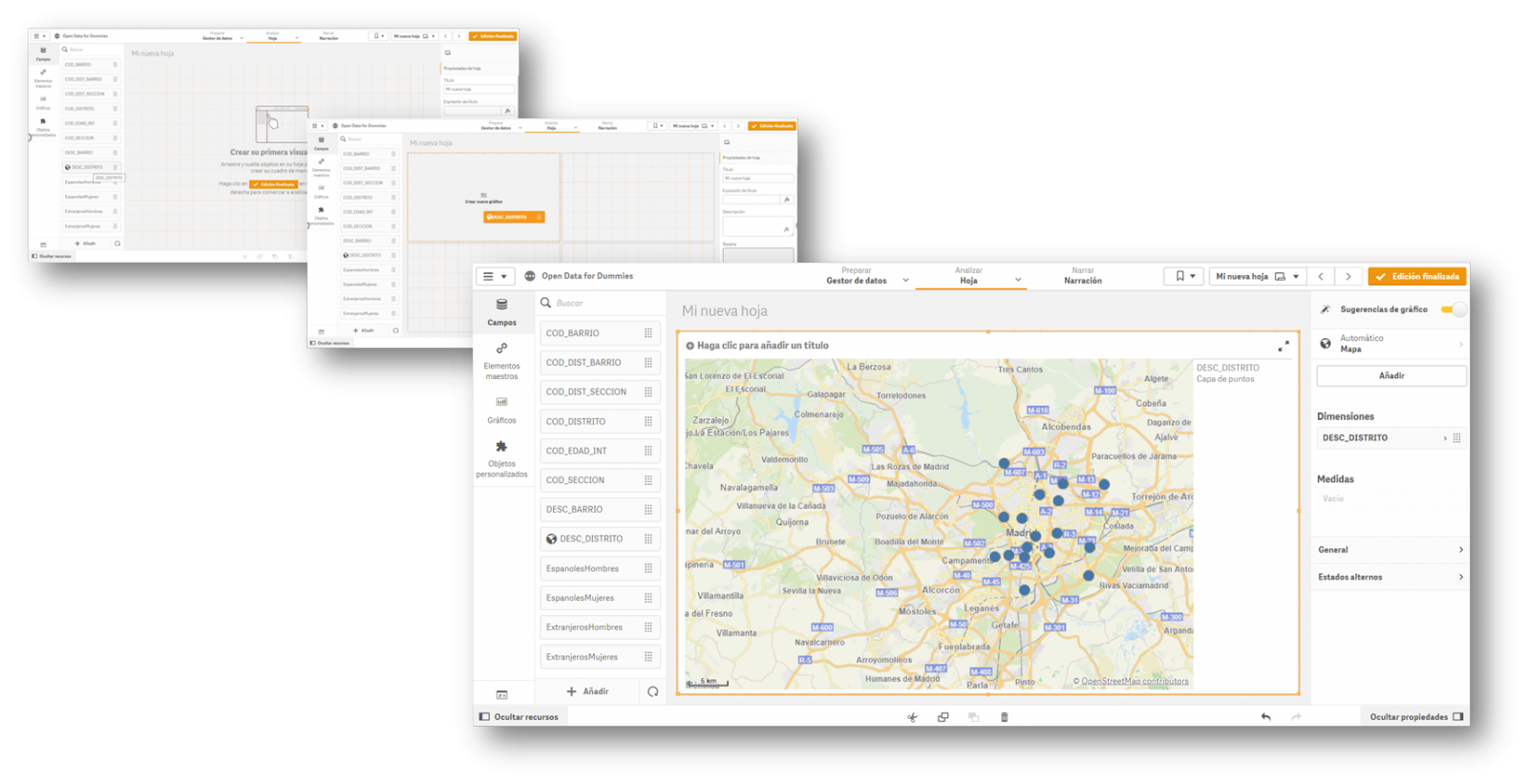

2.- Loading the information into an information display tool

Many of the information visualisation tools usually have built-in wizards to collect data that can be downloaded from an open data portal. The images in this article are from the Business version of QlikSense (which has a free 30-day trial), but any of the tools mentioned above work in a similar way. With a simple "drag and drop", you will already have the information inside the tool, to start creating indicators and thus generate knowledge.

Most of these tools interpret the content of the fields directly and propose a use for these values, distinguishing between data that can be used as filters, geographical data and data to be used in calculations.

3.- Creation of the first graph or indicator

Now all that remains is to drag the fields on which we want to generate knowledge and create the first indicator on our dashboard. We will drag the field DESC_DISTRITO, which contains the description of the district, to see what happens.

Once the action has been carried out, we see that it has geo-positioned all the districts of Madrid on a map, although at first we do not have any information to analyse. In this first automatic visualization it shows us a point in the centre of the district, but it does not provide us with any other type of additional information.

4.- Creating value in our indicator

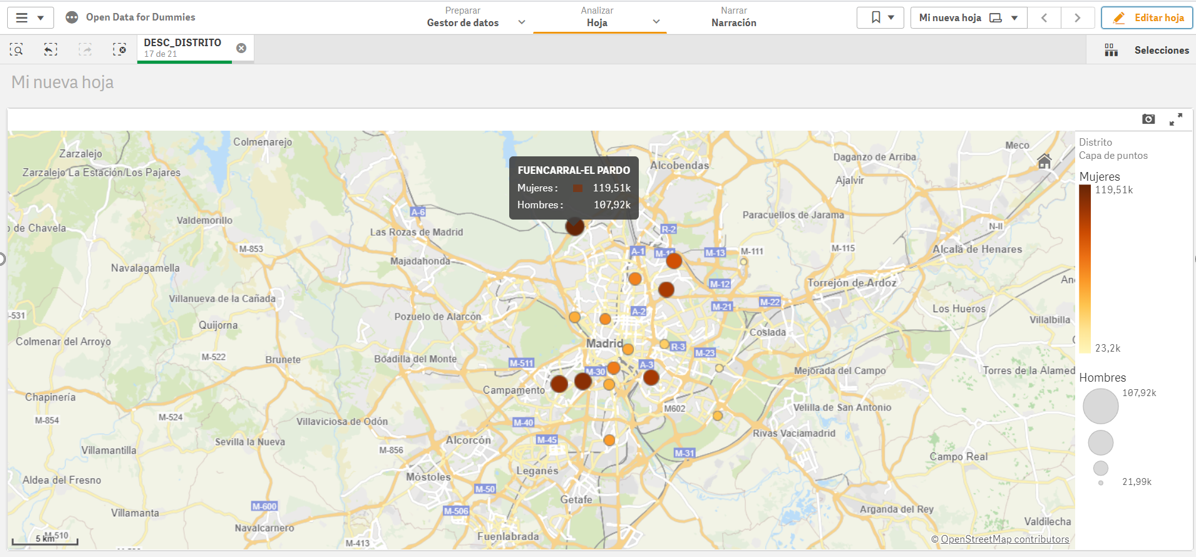

Once we have the points on the map, we need to know what we want to see within those points. We will continue with the "Drag and Drop" to count the men and women of Spanish nationality. Let's see what happens...

We see that, for each of the points, the tool has added up the citizens by sex in each of the districts where they are registered.
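
For readers curious about what the tool does behind the drag and drop, the same count can be sketched in a few lines of base R. This is only an illustration: `DESC_DISTRITO` is the field named above, while the columns `SPANISH_MEN` and `SPANISH_WOMEN` and all the values are invented placeholders rather than the real names and figures of the census file.

```r
# Toy rows standing in for the census file; the values are invented.
# DESC_DISTRITO comes from the text; the other two column names are assumed.
census <- data.frame(
  DESC_DISTRITO = c("CENTRO", "CENTRO", "RETIRO", "RETIRO"),
  SPANISH_MEN   = c(10, 20, 5, 15),
  SPANISH_WOMEN = c(12, 18, 7, 13)
)

# Sum both columns within each district, as the drag-and-drop step does
by_district <- aggregate(
  cbind(SPANISH_MEN, SPANISH_WOMEN) ~ DESC_DISTRITO,
  data = census,
  FUN = sum
)
print(by_district)
```

Each row of the result corresponds to one point on the map, carrying the two totals the tool displays for that district.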

In short, with four simple steps in which we have only selected the set of data and we have dragged and dropped the file into a visualisation tool, we have created the first indicator on our dashboard, where we can continue to generate knowledge.

If we continue to explore these tools further, we will be able to create new graphics, such as pivot tables, pie charts or interactive visualisations.

The interesting thing about this type of analysis is that it allows us to incorporate new sets of open data, such as the number of pharmacies in a district or the number and type of accidents in a particular area. By crossing the different data, we will be able to acquire more knowledge about the city and make informed decisions, such as which is the best area to set up a new pharmacy according to the population or to install a new traffic light.

Content elaborated by David Puig, Graduate in Information and Documentation and responsible for the Master Data and Reference Group at DAMA ESPAÑA

The visual representation of data helps our brain to digest large amounts of information quickly and easily. Interactive visualizations make it easier for non-experts to analyze complex situations represented as data.

As we introduced in our last post on this topic, graphical data visualization is a whole discipline within the universe of data science. In this new post we want to put the focus on interactive data visualizations. Dynamic visualizations allow the user to interact with data and transform it into graphs, tables and indicators that have the ability to display different information according to the filters set by the user. To a certain extent, interactive visualizations are an evolution of classic visualizations, allowing us to condense much more information in a space similar to the usual reports and presentations.

The evolution of digital technologies has shifted the focus of visual data analytics to the web and mobile environments. The tools and libraries that allow the generation and conversion of classic or static visualizations into dynamic or interactive ones are countless. However, despite the new formats for representing and generating visualizations, there is sometimes a risk of forgetting good design and composition practices, which must always be present. The ease of condensing large amounts of information into interactive visualisations can mean that, on many occasions, users try to include too much information in a single graph and make even the simplest of reports unreadable. But let's go back to the positive side of interactive visualizations and analyse some of their most significant advantages.

Benefits of interactive displays

The benefits of interactive data displays are several:

- Web and mobile technologies mainly. Interactive visualizations are designed to be consumed from modern software applications, many of them 100% web and mobile oriented. This makes them easy to read from any device.

- More information in the same space. Interactive visualizations show different information depending on the filters applied by the user. Thus, if we want to show the monthly evolution of a company's sales by geography, in a classic visualization we would use one bar chart (months on the horizontal axis and sales on the vertical axis) for each geography. By contrast, in an interactive visualization we use a single bar chart with a filter next to it, where we select the geography we want to visualize at each moment.

- Customizations. With interactive visualizations, the same report or dashboard can be customized for each user or groups of users. In this way, using filters as a menu, we can select some data or others, depending on the type and level of the user-consumer.

- Self-service. There are very simple interactive visualization technologies that allow users to configure their own graphics and panels on demand simply by having access to the source data. In this way, a user who is not a visualization expert can configure their own report just by dragging and dropping the fields they want to represent.
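
The "more information in the same space" idea can be sketched in base R as a single chart definition driven by a filter value. The data frame, its column names and the helper `sales_for` are hypothetical, and the sales figures are invented for illustration.

```r
# Toy monthly sales; the figures are invented for illustration only.
sales <- data.frame(
  month     = rep(c("Jan", "Feb", "Mar"), times = 2),
  geography = rep(c("North", "South"), each = 3),
  amount    = c(100, 120, 90, 80, 95, 110)
)

# One chart definition, parameterised by the filter value: this is the role
# the geography selector plays in an interactive report.
sales_for <- function(data, selected_geography) {
  data[data$geography == selected_geography, c("month", "amount")]
}

north <- sales_for(sales, "North")
south <- sales_for(sales, "South")
print(north)
# barplot(north$amount, names.arg = north$month)  # draws the filtered chart
```

A classic report would need one static chart per geography, whereas here the same code serves every value the user might pick in the filter.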

Practical example

To illustrate the above reasoning with a practical example, we will select a data set available in the datos.gob.es data catalogue. In particular, we have chosen the air quality data of the Madrid City Council for the year 2020. This dataset contains the measurements (hourly granularity) of pollutants collected by the air quality network of the City of Madrid. In this dataset, we have the hourly time series for each pollutant at each measurement station of the Madrid City Council, from January to May 2020. To interpret the dataset, it is also necessary to obtain the interpretation file in pdf format. Both files can be downloaded from the following website (it is also available through datos.gob.es).

Interactive data visualization tools

Thanks to the use of modern data visualization tools (in this case Microsoft Power BI, a free and easily accessible tool) we have been able to download the air quality data for 2020 (approximately half a million records) in just 30 minutes and create an interactive report. In this report, the end user can choose the measuring station, either by using the filter on the left or by selecting the station on the map below. In addition, the user can choose the pollutant he/she is interested in and a range of dates. In this static capture of the report, we have represented all the stations and all the pollutants. The objective is to see the significant reduction of pollution in all pollutants (except ozone due to the suppression of nitrogen oxides) due to the situation of sudden confinement caused by the Covid-19 pandemic since mid-March. To carry out this exercise we could have used other tools such as MS Excel, Qlik, Tableau or interactive visualization packages on programming environments such as R or Python. These tools are perfect for communicating data without the need for programming or coding skills.
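
For those who prefer a programming environment such as R, the before-and-after comparison that the report shows visually can be sketched as follows. This is a toy illustration under stated assumptions: the column names, the readings and the exact cutoff date chosen for "mid-March" are all invented and are not taken from the Madrid dataset.

```r
# Toy hourly readings for one pollutant at one station; values are invented.
readings <- data.frame(
  timestamp = as.POSIXct(c("2020-03-02 08:00", "2020-03-02 09:00",
                           "2020-03-20 08:00", "2020-03-20 09:00"), tz = "UTC"),
  value = c(60, 50, 20, 30)
)

# Assumed cutoff for "mid-March"; adjust to the date you want to compare.
cutoff <- as.POSIXct("2020-03-15 00:00", tz = "UTC")
readings$period <- ifelse(readings$timestamp < cutoff, "before", "after")

# Mean concentration per period, the comparison the report makes visually
period_means <- tapply(readings$value, readings$period, mean)
print(period_means)
```

An interactive report performs this kind of grouping every time the user changes the station, pollutant or date range filter.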

In conclusion, the discipline of data visualization (Visual Analytics) is a huge field that is becoming very relevant today thanks to the proliferation of web and mobile interfaces wherever we look. Interactive visualizations empower the end user and democratize access to data analysis with codeless tools, improving transparency and rigor in communication in any aspect of life and society, such as science, politics and education.

Content elaborated by Alejandro Alija, expert in Digital Transformation and Innovation.

"The simple graph has brought more information to the data analyst’s mind than any other device.” — John Tukey

The graphic visualization of data constitutes a discipline within the data science universe. This practice has marked important milestones in the history of data analytics. In this post we help you discover and understand its importance and impact in an enjoyable and practical way.

But let's start the story at the beginning. In 1975, a 33-year-old man began to teach a course in statistics at Princeton University, laying the foundations of the future discipline of visual analytics. That young man, named Edward Tufte, is considered the Leonardo da Vinci of data. Currently, Tufte is a professor emeritus of political science, statistics and computer science at Yale University. Between 1983 and 2006, Professor Tufte wrote a series of 4 books, now considered classics, on the graphic visualization of data. Some central ideas of Tufte's thesis concern the elimination of useless and non-informative elements in graphs. Tufte advocates removing non-quantitative and decorative elements from visualizations, arguing that these elements distract attention from the elements that are really explanatory and valuable.

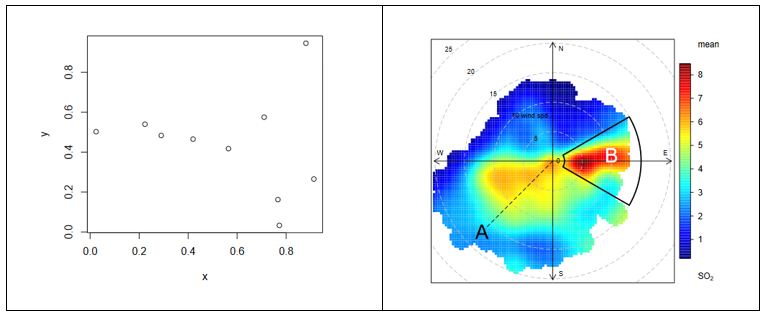

From the simplest graph to the most complex and refined one (figure 1), all graphs offer high value both to the analyst, during the data science process, and to the end user, to whom we are communicating a data-based story.

Figure 1. The figure shows the difference between two graphical visualizations of data. On the left, an example of the simplest data visualization that can be performed: points represented in Cartesian coordinates x | y. On the right, an example of a complex data visualization in which the distribution of a pollutant (SO2) is represented in polar coordinates. The axes represent the wind directions N | S and E | W (in degrees), while the radius of the distribution represents the wind speed in each direction in m/s. The colour scale represents the average concentration of SO2 (in ppb) for those directions and wind speeds. With this type of visualization we can graphically represent three variables (wind direction, wind speed and concentration of pollutants) in a "flat" graph with two dimensions (2D). 2D visualization is very convenient because it is easier for the human brain to interpret.
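To make the polar chart on the right less mysterious, here is a minimal Python sketch of the aggregation that such a plot relies on: readings are grouped by wind direction sector and wind speed band, and the mean concentration is computed for each cell. The function names, the 30-degree sectors and the 2 m/s bands are assumptions chosen for illustration; this is not the code behind the figure. The optional `plot_polar` helper assumes matplotlib is installed.

```python
import math
from collections import defaultdict


def bin_by_wind(records, dir_step=30, speed_step=2.0):
    """Average concentration per (direction sector, speed band).

    records: iterable of (wind_direction_deg, wind_speed_ms, concentration_ppb).
    Returns {(sector_start_deg, band_start_ms): mean concentration}.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for direction, speed, conc in records:
        # Lower edge of the direction sector and of the speed band.
        sector = int((direction % 360) // dir_step) * dir_step
        band = int(speed // speed_step) * speed_step
        sums[(sector, band)] += conc
        counts[(sector, band)] += 1
    return {key: sums[key] / counts[key] for key in sums}


def plot_polar(binned, dir_step=30, speed_step=2.0):
    """Draw one coloured wedge per cell (requires matplotlib)."""
    import matplotlib.pyplot as plt  # imported lazily: optional dependency

    fig = plt.figure()
    ax = fig.add_subplot(projection="polar")
    ax.set_theta_zero_location("N")  # north at the top, like a compass
    ax.set_theta_direction(-1)       # angles increase clockwise
    cmap = plt.get_cmap("viridis")
    top = max(binned.values()) or 1.0
    for (sector, band), mean in binned.items():
        ax.bar(math.radians(sector), speed_step,
               width=math.radians(dir_step), bottom=band,
               align="edge", color=cmap(mean / top))
    return fig
```

Calling `bin_by_wind` on a list of readings returns a small dictionary that already contains the three variables of the figure: angle, radius and colour.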

Why is graphic visualization of data so important?

In data science there are many different types of data to analyze. One way of classifying data is according to their level of logical structure. For example, data in spreadsheet-like formats, organized in rows and columns, have a well-defined structure and are known as structured data. However, data such as the 140 characters of a Twitter post are considered data without structure, or unstructured data. Between these two extremes lies a whole range of greys, from files delimited by special characters (commas, semicolons, spaces, etc.) to images or videos on YouTube. It is evident that images and videos only make sense to humans once they are visually represented. It would be useless (for a human) to represent an image as a matrix of numbers encoding combinations of RGB colors (Red, Green, Blue).

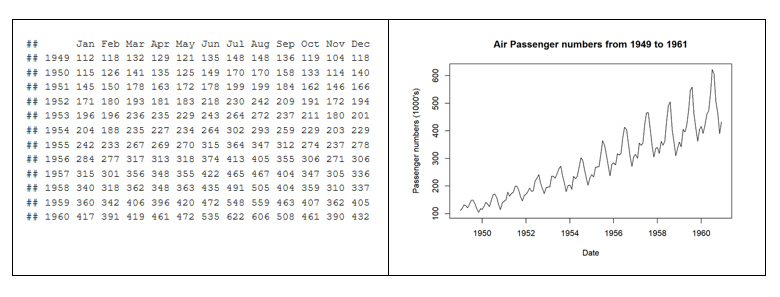
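As a small illustration of the point about images, the sketch below stores a tiny image as a matrix of RGB triples and converts it to grey levels. The 2 x 3 image is hypothetical, and the weights are the widely used ITU-R BT.601 luminance coefficients, chosen here as an assumption.

```python
# A hypothetical 2 x 3 image: each pixel is an (R, G, B) triple in 0..255.
image = [
    [(255, 0, 0), (0, 255, 0), (0, 0, 255)],        # red, green, blue
    [(255, 255, 0), (255, 255, 255), (0, 0, 0)],    # yellow, white, black
]


def to_grayscale(img):
    """Convert each RGB pixel to a single luminance value (BT.601 weights)."""
    return [
        [round(0.299 * r + 0.587 * g + 0.114 * b) for r, g, b in row]
        for row in img
    ]


if __name__ == "__main__":
    for row in image:
        print(row)                 # what the computer stores
    for row in to_grayscale(image):
        print(row)                 # still only numbers until it is drawn
```

Even for six pixels the printed matrix tells a human reader very little, which is exactly why images must be rendered to be understood.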

In the case of structured data, its graphic representation is necessary for all stages of the analysis process, from the exploratory stage to the final presentation of results. Let's see an example:

In 1963, the American airline Pan Am used a graphic representation (time series 1949-1960) of the monthly number of international passengers to forecast the future demand for aircraft and place a purchase order. In the example, we see the difference between the matrix representation of the data and its graphic representation. The advantage of graphically representing the data is obvious with the example of Figure 2.

Figure 2. Difference between the tabular representation of the data and the graphic representation or visualization.

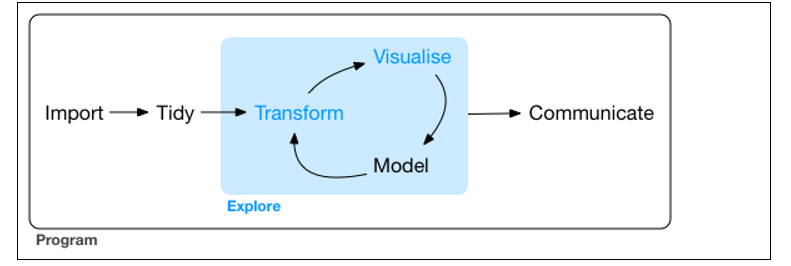
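The same contrast can be reproduced with a few lines of Python. The sketch below prints a monthly series first as a table and then as a crude text bar chart. The values are synthetic (a linear trend plus a 12-month cycle) and are not the airline's figures; all function names and parameters are illustrative assumptions.

```python
import math


def synthetic_series(months=24, base=100.0, growth=2.0, amplitude=15.0):
    """Illustrative values only: linear trend plus a 12-month cycle."""
    return [
        base + growth * m + amplitude * math.sin(2 * math.pi * m / 12)
        for m in range(months)
    ]


def as_table(values):
    """One 'month value' row per observation."""
    return "\n".join(f"{i + 1:>5} {v:>8.1f}" for i, v in enumerate(values))


def as_bars(values, width=40):
    """One bar per observation, scaled so the largest value fills `width`."""
    top = max(values)
    return "\n".join("#" * round(width * v / top) for v in values)


if __name__ == "__main__":
    series = synthetic_series()
    print(as_table(series))   # hard to spot the pattern
    print(as_bars(series))    # trend and seasonality are visible at once
```

In the table the trend and the yearly cycle have to be reconstructed mentally, while in the bars they are visible at a glance.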

The graphic visualization of the data plays a fundamental role in all stages of data analysis. There are multiple approaches on how to perform a data analysis process correctly and completely. According to Garrett Grolemund and Hadley Wickham in their recent book R for Data Science, a standard process in data analysis would be as follows (figure 3):

Figure 3. Representation of a standard process using advanced data analytics.

Data visualization is at the core of the process. It is a basic tool for the data analyst or data scientist who, through an iterative process, transforms the data and builds a logical model from them. Based on the visualization, the analyst discovers the secrets buried in the data. Visualization makes it possible to quickly:

- Discard unrepresentative or erroneous data.

- Identify those variables that depend on each other and, therefore, contain redundant information.

- Slice the data to be able to observe them from different perspectives.

- Finally, check that those models, trends, predictions and groups that we have applied to the data give us back the expected result.
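Two of the checks listed above have simple numerical counterparts that often accompany the plots. The following sketch, written under the assumption that plain Python lists are enough for illustration, flags suspicious values with the 1.5 x IQR rule (Tukey's fences) and measures how strongly two variables move together with the Pearson correlation coefficient. The function names are hypothetical.

```python
import math
import statistics


def flag_outliers(values, k=1.5):
    """Return the values lying beyond k * IQR from the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    return [v for v in values if v < q1 - k * spread or v > q3 + k * spread]


def pearson(xs, ys):
    """Pearson correlation: close to +1 or -1 suggests redundant variables."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)
```

A box plot shows the first check graphically and a scatter plot shows the second; the numbers simply confirm what the eye has already picked up.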

Tools for visual data analysis

The graphic visualization of data is so important in all areas of science, engineering, business, banking, environment, etc. that many tools exist to design, develop and communicate it.

These tools cover a broad spectrum of the target audience, from software developers, to data scientists, journalists or communication professionals.

- For software developers, there are hundreds of libraries and software packages containing thousands of types of visualizations. Developers just have to load these libraries in their respective programming frameworks and indicate the data source they want to represent, the type of graph (lines, bars, etc.) and the parameterization of that graph (scales, colors, labels, etc.). In the last few years, web visualization has been in fashion, and the most popular libraries are based on JavaScript frameworks (most of them open source). Perhaps one of the most popular, given its power, is D3.js, although there are many more.

- The data scientist is accustomed to working with a specific analysis framework that normally includes all the necessary components, among them the visual analysis engine. Currently, the most popular environments for data science are R and Python, and both include native libraries for visual analytics. Perhaps the most popular and powerful library in R is ggplot2, while matplotlib and Plotly are among the most popular in Python.

- For professional communicators or non-technical personnel from the different business areas (Marketing, Human Resources, Production, etc.) who need to make decisions based on data, there are tools, not limited to visual analytics, with functionalities to generate graphic representations of the data. Modern self-service Business Intelligence tools such as MS Excel, MS Power BI, Qlik, Tableau, etc. are great tools to communicate data without the need for programming or coding skills.
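The developer workflow described in the first point (indicate the data, the type of graph and its parameters) can be sketched in Python with matplotlib. The `ChartSpec` class and the `render` function are hypothetical names introduced only for this illustration; the matplotlib calls inside `render` are standard, and the library is imported lazily so that the specification can be built even where it is not installed.

```python
from dataclasses import dataclass

SUPPORTED_KINDS = {"line", "bar"}


@dataclass
class ChartSpec:
    """Hypothetical container: data source, type of graph and parameters."""
    x: list
    y: list
    kind: str = "line"
    title: str = ""
    color: str = "tab:blue"
    log_y: bool = False

    def __post_init__(self):
        if self.kind not in SUPPORTED_KINDS:
            raise ValueError(f"unsupported chart type: {self.kind}")
        if len(self.x) != len(self.y):
            raise ValueError("x and y must have the same length")


def render(spec):
    """Turn a ChartSpec into a matplotlib figure (requires matplotlib)."""
    import matplotlib.pyplot as plt  # imported lazily: optional dependency

    fig, ax = plt.subplots()
    if spec.kind == "line":
        ax.plot(spec.x, spec.y, color=spec.color)
    else:
        ax.bar(spec.x, spec.y, color=spec.color)
    if spec.log_y:
        ax.set_yscale("log")
    ax.set_title(spec.title)
    return fig
```

For example, `render(ChartSpec(x=[1, 2, 3], y=[4, 5, 6], kind="bar", title="Demo"))` returns a figure that can then be saved with `savefig`.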

In conclusion, visualization tools allow all these professionals to access data in a more agile and simple way. In a universe where the amount of useful data to be analysed is continuously growing, tools of this type are becoming more and more necessary. They facilitate the creation of value from data and, with this, improve decision making regarding the present and the future of our business or activity.

If you want to know more about data visualization tools, we recommend the report Data visualization: definition, technologies and tools, as well as the training material Use of basic data processing tools.

Content prepared by Alejandro Alija, expert in Digital Transformation and innovation.

Until relatively recently, talking about art and data in the same conversation might have seemed strange. However, recent advances in data science and artificial intelligence seem to open the door to a new discipline in which science, art and technology go hand in hand.

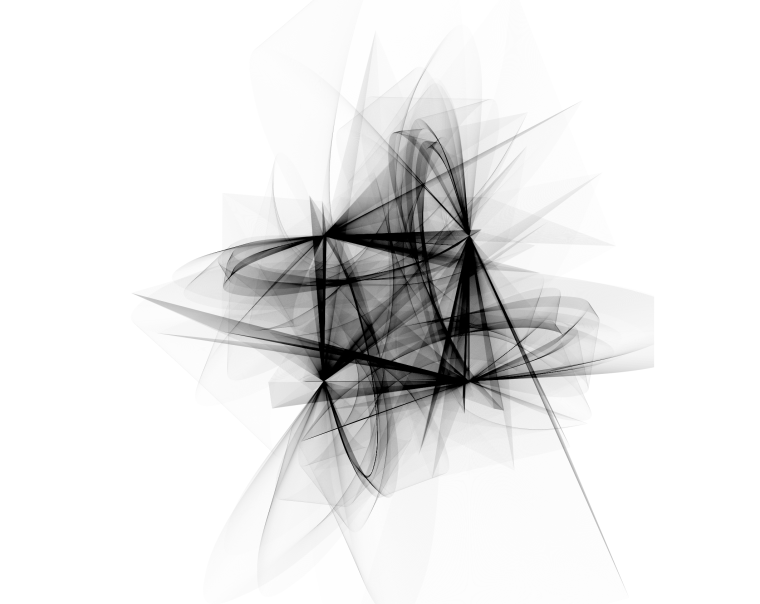

The cover image has been extracted from the blog https://www.r-graph-gallery.com and was originally created by Marcus Volz on his website.

The image above could be an abstract painting created by a modern artist and exhibited at the MoMA in New York. However, it is an image created with a few lines of R code that use complex mathematical expressions. Spectacular as the resulting figure is, the beautiful shape of the strokes does not represent a real form. But the ability to create art with data is not limited to generating abstract forms. The possibilities of creating art with code go much further. Here are two examples:

Real art and representation of plants

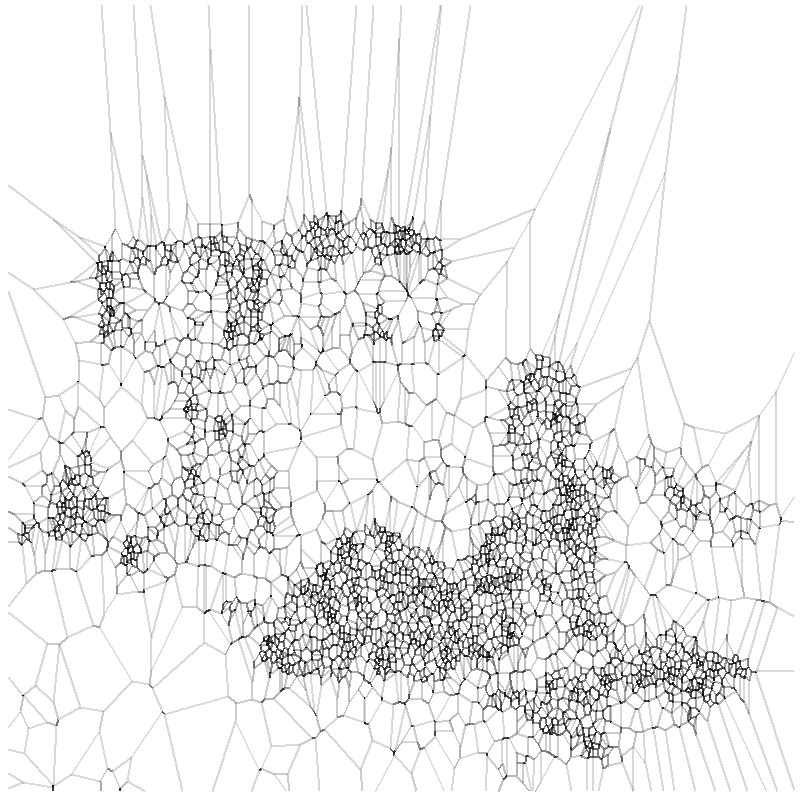

With fewer than 100 lines of R code we can create this plant and infinite variations in terms of branches, symmetry and complexity. Without being an expert in plants and algae, I am sure that I have seen plants and algae similar to this on many occasions. With these representations, we simply try to reproduce what nature creates by itself, following the laws of physics and mathematics. The figures shown below have been created using R code originally taken from Antonio Sánchez Chinchón's blog.

Variations of plants artificially created by R code and fractal expressions.

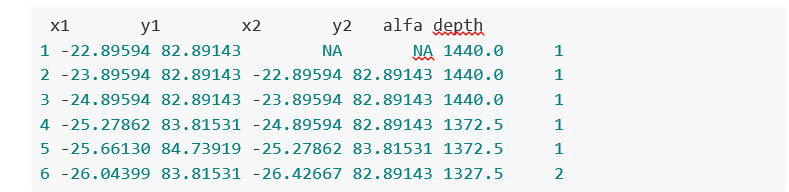
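The idea behind these figures can be illustrated with a generic recursive branching rule. The Python sketch below is not the R code from the blog mentioned above; it is a minimal fractal tree, with assumed parameter names, in which every branch spawns two shorter branches turned to the left and to the right.

```python
import math


def grow(x, y, angle, length, depth, spread=25.0, shrink=0.7):
    """Return the line segments ((x0, y0), (x1, y1)) of a recursive tree.

    angle is in degrees (90 points straight up); each level turns by
    +/- spread degrees and multiplies the branch length by shrink.
    """
    if depth == 0:
        return []
    x1 = x + length * math.cos(math.radians(angle))
    y1 = y + length * math.sin(math.radians(angle))
    segments = [((x, y), (x1, y1))]
    for turn in (-spread, spread):
        segments += grow(x1, y1, angle + turn, length * shrink,
                         depth - 1, spread, shrink)
    return segments
```

Changing `spread`, `shrink` and `depth` produces variations in branching, symmetry and complexity; the resulting segments can be drawn with any plotting library.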

As an example, these are the data that make up some of the figures discussed above:

Photography and art with data

But we are not limited to constructing abstract figures or representations that imitate the forms of plants. With the help of data tools and artificial intelligence we can imitate existing works, and even create new ones. In the following example, we obtain simplified versions of photographs, using subsets of pixels from the original photograph. Let's see this example in detail.

We take a photograph from a repository of open images, in this case Wikimedia Commons, such as the following:

By Finetooth - Own work, CC BY-SA 3.0, https://commons.wikimedia.org/w/index.php?curid=11692574

Next, we execute a relatively simple algorithm that generates polygonal shapes around the main pixels of the original image. In addition to some simple image processing that turns the photograph into a flat black and white image, this algorithm applies a mathematical method called a Voronoi diagram. When the subset of data (on which we apply the Voronoi diagram) is small, the result of the treatment is poor and we can barely distinguish the underlying form of the figure.

However, as we increase the subset of points used to reproduce the initial photograph, we begin to find fascinating results. Finally, with less than 20% of all the points that make up the original image, we obtain a really beautiful and artistic result. This experiment is based on the original post by Antonio Sánchez Chinchón on his blog Fronkonstin.
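The effect of the sample size can be explored with a brute-force sketch in Python. It is not the R code of the original post: it assumes a greyscale image stored as a list of rows, samples a fraction of its pixels at random, and recolours every pixel with the value of its nearest sampled pixel, which is equivalent to filling each Voronoi cell with a flat tone. The function name and parameters are hypothetical.

```python
import random


def voronoi_simplify(gray, fraction=0.2, seed=0):
    """Recolour each pixel with the value of its nearest sampled pixel.

    gray: list of rows of grey levels. Returns (new_image, sampled_sites).
    Brute force, so only suitable for small images.
    """
    rng = random.Random(seed)
    height, width = len(gray), len(gray[0])
    pixels = [(r, c) for r in range(height) for c in range(width)]
    # Always keep at least one site so every pixel has a nearest neighbour.
    sites = rng.sample(pixels, max(1, int(fraction * len(pixels))))

    def nearest(r, c):
        return min(sites, key=lambda s: (s[0] - r) ** 2 + (s[1] - c) ** 2)

    out = []
    for r in range(height):
        row = []
        for c in range(width):
            sr, sc = nearest(r, c)
            row.append(gray[sr][sc])
        out.append(row)
    return out, sites
```

With a very small `fraction` the output collapses into a few flat regions, and as the fraction grows the original shapes emerge, mirroring the progression described above.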

The ability to generate art by combining mathematics and programming code is remarkable. In the following link it is possible to appreciate some of the most impressive works that exist in this art form. The author of this blog is Marcus Volz, researcher at the University of Melbourne. Marcus works with R to generate two-dimensional figures and with Houdini for 3D and animation.

Content prepared by Alejandro Alija, expert in Digital Transformation and innovation.

Vizzuality uses geospatial and big data to create digital products designed to empower people to make the right decisions and to enable and encourage positive change.

They work, together with NGOs, governments, corporations and citizens on challenges concerning the climate emergency, the global loss of biodiversity, supply-chain transparency and inequality.

dotGIS is focused on the development of solutions and applications related to the management, integration and analysis of data with geospatial component.

EpData is the platform created by Europa Press to facilitate the use of public data by journalists, with the aim of both enriching news with graphics and context analysis and verifying the figures offered by various sources. The database is maintained by a multidisciplinary team of computer scientists and journalists who use new technologies and data analysis to improve the efficiency of data consumption and find relevant and informative patterns in the data.