A digital twin is a virtual, interactive representation of a real-world object, system or process. We are talking, for example, about a digital replica of a factory, a city or even a human body. These virtual models allow simulating, analysing and predicting the behaviour of the original element, which is key for optimisation and maintenance in real time.

Due to their functionalities, digital twins are being used in various sectors such as health, transport or agriculture. In this article, we review the benefits of their use and show two examples related to open data.

Advantages of digital twins

Digital twins use real data sources from the environment, obtained through sensors and open platforms, among others. As a result, the digital twins are updated in real time to reflect reality, which brings a number of advantages:

- Increased performance: one of the main differences with traditional simulations is that digital twins use real-time data for modelling, allowing better decisions to be made to optimise equipment and system performance according to the needs of the moment.

- Improved planning: using technologies based on artificial intelligence (AI) and machine learning, the digital twin can analyse performance issues or perform virtual "what-if" simulations. In this way, failures and problems can be predicted before they occur, enabling proactive maintenance.

- Cost reduction: improved data management thanks to a digital twin generates benefits equivalent to 25% of total infrastructure expenditure. In addition, by avoiding costly failures and optimizing processes, operating costs can be significantly reduced. They also enable remote monitoring and control of systems from anywhere, improving efficiency by centralizing operations.

- Customization and flexibility: by creating detailed virtual models of products or processes, organizations can quickly adapt their operations to meet changing environmental demands and individual customer/citizen preferences. For example, in manufacturing, digital twins enable customized mass production, adjusting production lines in real time to create unique products according to customer specifications. On the other hand, in healthcare, digital twins can model the human body to customize medical treatments, thereby improving efficacy and reducing side effects.

- Boosting experimentation and innovation: digital twins provide a safe and controlled environment for testing new ideas and solutions, without the risks and costs associated with physical experiments. Among other issues, they allow experimentation with large objects or projects that, due to their size, do not usually lend themselves to real-life experimentation.

- Improved sustainability: by enabling simulation and detailed analysis of processes and systems, organizations can identify areas of inefficiency and waste, thus optimizing the use of resources. For example, digital twins can model energy consumption and production in real time, enabling precise adjustments that reduce consumption and carbon emissions.
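The idea of a twin that is fed with sensor data and then used for "what-if" questions can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how any real platform works: the `PumpTwin` class, its safety threshold and the simple linear projection are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class PumpTwin:
    """Toy digital twin of a pump (hypothetical asset, for illustration only)."""
    max_safe_temp_c: float = 80.0  # assumed safety threshold
    readings: list[float] = field(default_factory=list)

    def ingest(self, temp_c: float) -> None:
        """Update the twin with a new sensor reading."""
        self.readings.append(temp_c)

    def trend_per_step(self) -> float:
        """Average temperature change between consecutive readings."""
        if len(self.readings) < 2:
            return 0.0
        return (self.readings[-1] - self.readings[0]) / (len(self.readings) - 1)

    def what_if(self, steps: int, extra_load_c: float = 0.0) -> float:
        """Project the temperature `steps` readings ahead.

        `extra_load_c` models a hypothetical scenario, such as running the
        pump harder, as an additional rise per step.
        """
        if not self.readings:
            raise ValueError("no sensor data ingested yet")
        return self.readings[-1] + steps * (self.trend_per_step() + extra_load_c)

    def needs_maintenance(self, steps: int, extra_load_c: float = 0.0) -> bool:
        """Flag the asset if the projection crosses the safety threshold."""
        return self.what_if(steps, extra_load_c) > self.max_safe_temp_c


twin = PumpTwin()
for reading in [60.0, 62.0, 64.0, 66.0]:
    twin.ingest(reading)

print(twin.what_if(steps=5))                               # current trend
print(twin.needs_maintenance(steps=5))                     # current conditions
print(twin.needs_maintenance(steps=5, extra_load_c=1.0))   # heavier-load scenario
```

A real digital twin would replace the linear projection with a physical or machine learning model, but the loop is the same: ingest data, simulate a scenario, act before the failure occurs.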

Examples of digital twins in Spain

The following two examples illustrate these advantages.

GeDIA project: artificial intelligence to predict changes in territories

GeDIA is a tool for strategic planning of smart cities, which allows scenario simulations. It uses artificial intelligence models based on existing data sources and tools in the territory.

The scope of the tool is very broad, but its creators highlight two use cases:

- Future infrastructure needs: the platform performs detailed analyses considering trends, thanks to artificial intelligence models. In this way, growth projections can be made and the needs for infrastructures and services, such as energy and water, can be planned in specific areas of a territory, guaranteeing their availability.

- Growth and tourism: GeDIA is also used to study and analyse urban and tourism growth in specific areas. The tool identifies patterns of gentrification and assesses their impact on the local population, using census data. In this way, demographic changes and their impact, such as housing needs, can be better understood and decisions can be made to facilitate equitable and sustainable growth.

This initiative has the participation of various companies and the University of Malaga (UMA), as well as the financial backing of Red.es and the European Union.

Digital twin of the Mar Menor: data to protect the environment

The Mar Menor, the salt lagoon of the Region of Murcia, has suffered serious ecological problems in recent years, influenced by agricultural pressure, tourism and urbanisation.

To better understand the causes and assess possible solutions, TRAGSATEC, a state-owned environmental protection agency, developed a digital twin. It mapped a surrounding area of more than 1,600 square kilometres, known as the Campo de Cartagena Region. In total, 51,000 nadir images, 200,000 oblique images and more than four terabytes of LiDAR data were obtained.

Thanks to this digital twin, TRAGSATEC has been able to simulate various flooding scenarios and the impact of installing containment elements or obstacles, such as a wall, to redirect the flow of water. They have also been able to study the distance between the soil and the groundwater, to determine the impact of fertiliser seepage, among other issues.

Challenges and the way forward

These are just two examples, but they highlight the potential of an increasingly popular technology. For adoption to spread further, however, some challenges need to be addressed, such as the initial costs of both technology and training, and security, since digital twins increase the attack surface. Another challenge is the interoperability problems that arise when different public administrations establish digital twins and local data spaces. To help address this issue, the European Commission has published a guide that identifies the main organisational and cultural challenges to interoperability and offers good practices to overcome them.

In short, digital twins offer numerous advantages, such as improved performance or cost reduction. These benefits are driving their adoption in various industries and it is likely that, as current challenges are overcome, digital twins will become an essential tool for optimising processes and improving operational efficiency in an increasingly digitised world.

Almost half of European adults lack basic digital skills. According to the latest State of the Digital Decade report, in 2023, only 55.6% of citizens reported having such skills. This percentage rises to 66.2% in the case of Spain, ahead of the European average.

Having basic digital skills is essential in today's society because it enables access to a wider range of information and services, as well as effective communication in online environments, facilitating greater participation in civic and social activities. It is also a great competitive advantage in the world of work.

In Europe, more than 90% of professional roles require a basic level of digital skills. Technological knowledge has long since ceased to be required only for technical professions and is spreading to all sectors, from business to transport and even agriculture. In this respect, more than 70% of companies said that the lack of staff with the right digital skills is a barrier to investment.

A key objective of the Digital Decade is therefore to ensure that at least 80% of people aged 16-74 have at least basic digital skills by 2030.
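To give a sense of the scale of that target, the gap can be worked out from the figures quoted above. The sketch below assumes a simple linear path from the 2023 figure to 2030, which is an illustrative simplification and not a projection taken from the report.

```python
current_share = 55.6   # % of EU citizens with basic digital skills in 2023
target_share = 80.0    # Digital Decade target for 2030
years_left = 2030 - 2023

gap_points = target_share - current_share
required_per_year = gap_points / years_left  # assumes linear progress

print(f"Gap to target: {gap_points:.1f} percentage points")
print(f"Required average gain: {required_per_year:.2f} points per year")
```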

Basic technology skills that everyone should have

When we talk about basic technological capabilities, we refer, according to the DigComp framework, to a number of areas, including:

- Information and data literacy: includes locating, retrieving, managing and organising data, judging the relevance of the source and its content.

- Communication and collaboration: involves interacting, communicating and collaborating through digital technologies taking into account cultural and generational diversity. It also includes managing one's own digital presence, identity and reputation.

- Digital content creation: this would be defined as the enhancement and integration of information and content to generate new messages, respecting copyrights and licences. It also involves knowing how to give understandable instructions to a computer system.

- Security: this covers the protection of devices, content, personal data and privacy in digital environments, as well as the protection of physical and mental health.

- Problem solving: this involves identifying and solving needs and problems in digital environments. It also focuses on the use of digital tools to innovate processes and products, keeping up with digital evolution.

Which data-related jobs are most in demand?

Now that the core competences are clear, it is worth noting that in a world where digitalisation is becoming increasingly important, it is not surprising that the demand for advanced technological and data-related skills is also growing.

According to data from the LinkedIn employment platform, among the 25 fastest growing professions in Spain in 2024 are security analysts (position 1), software development analysts (2), data engineers (11) and artificial intelligence engineers (25). Similar data is offered by Fundación Telefónica's Employment Map, which also highlights four of the most in-demand profiles related to data:

- Data analyst: responsible for the management and exploitation of information, they are dedicated to the collection, analysis and exploitation of data, often through the creation of dashboards and reports.

- Database designer or database administrator: focused on designing, implementing and managing databases, as well as maintaining their security by implementing backup and recovery procedures in case of failures.

- Data engineer: responsible for the design and implementation of data architectures and infrastructures to capture, store, process and access data, optimising its performance and guaranteeing its security.

- Data scientist: focused on data analysis and predictive modelling, optimisation of algorithms and communication of results.

These are all jobs with good salaries and future prospects, but where there is still a large gap between men and women. According to European data, only 1 in 6 ICT specialists and 1 in 3 science, technology, engineering and mathematics (STEM) graduates are women.

To work in data-related professions, you need, among other things, knowledge of popular programming languages such as Python, R or SQL, and of multiple data processing and visualisation tools, such as those detailed in these articles:

- Debugging and data conversion tools

- Data analysis tools

- Data visualisation tools

- Data visualisation libraries and APIs

- Geospatial visualisation tools

- Network analysis tools

The range of training courses on all these skills is growing all the time.

Future prospects

Nearly a quarter of all jobs (23%) will change in the next five years, according to the World Economic Forum's Future of Jobs 2023 Report. Technological advances will create new jobs, transform existing jobs and destroy those that become obsolete. Technical knowledge, related to areas such as artificial intelligence or Big Data, and the development of cognitive skills, such as analytical thinking, will provide great competitive advantages in the labour market of the future. In this context, policy initiatives to boost society's re-skilling, such as the European Digital Education Action Plan (2021-2027), will help to generate common frameworks and certificates in a constantly evolving world.

The technological revolution is here to stay and will continue to change our world. Therefore, those who start acquiring new skills earlier will be better positioned in the future employment landscape.

Citizen science is consolidating itself as one of the most relevant sources of reference in contemporary research. This is recognised by the Consejo Superior de Investigaciones Científicas (CSIC), which defines citizen science as a methodology and a means for the promotion of scientific culture in which science and citizen participation strategies converge.

We talked some time ago about the importance of citizen science in society. Today, citizen science projects have not only increased in number, diversity and complexity, but have also driven a significant process of reflection on how citizens can actively contribute to the generation of data and knowledge.

To reach this point, programmes such as Horizon 2020, which explicitly recognised citizen participation in science, have played a key role. More specifically, the chapter "Science with and for society" gave an important boost to this type of initiative in Europe and also in Spain. In fact, as a result of Spanish participation in this programme, as well as in parallel initiatives, Spanish projects have been increasing in size and in connections with international initiatives.

This growing interest in citizen science also translates into concrete policies. An example of this is the current Spanish Strategy for Science, Technology and Innovation (EECTI), for the period 2021-2027, which includes "the social and economic responsibility of R&D&I through the incorporation of citizen science".

In short, as we commented some time ago, citizen science initiatives seek to encourage a more democratic science that responds to the interests of all citizens and generates information that can be reused for the benefit of society. Here are some examples of citizen science projects that help collect data whose reuse can have a positive impact on society:

AtmOOs Academic Project: Education and citizen science on air pollution and mobility.

In this programme, Thigis developed a citizen science pilot on mobility and the environment with pupils from a school in Barcelona's Eixample district. This project, which is already replicable in other schools, consists of collecting data on student mobility patterns in order to analyse issues related to sustainability.

On the AtmOOs Academic website you can view the results of all the editions carried out annually since the 2017-2018 academic year, which show information on the vehicles used by students to go to class and the emissions generated according to school stage.

WildINTEL: Research project on wildlife monitoring in Huelva

The University of Huelva and the Spanish National Research Council (CSIC) are collaborating to build a wildlife monitoring system to obtain essential biodiversity variables. To do this, camera traps for remote data capture and artificial intelligence are used.

The wildINTEL project focuses on the development of a monitoring system that is scalable and replicable, thus facilitating the efficient collection and management of biodiversity data. This system will incorporate innovative technologies to provide accurate and objective demographic estimates of populations and communities.

This project, which started in December 2023 and will continue until December 2026, is expected to provide tools and products to improve the management of biodiversity not only in the province of Huelva but throughout Europe.

IncluScience-Me: Citizen science in the classroom to promote scientific culture and biodiversity conservation.

This citizen science project combining education and biodiversity arises from the need to address scientific research in schools. To do this, students take on the role of a researcher to tackle a real challenge: to track and identify the mammals that live in their immediate environment to help update a distribution map and, in turn, support their conservation.

IncluScience-Me was born at the University of Cordoba and, specifically, in the Research Group on Education and Biodiversity Management (Gesbio), and has been made possible thanks to the participation of the University of Castilla-La Mancha and the Research Institute for Hunting Resources of Ciudad Real (IREC), with the collaboration of the Spanish Foundation for Science and Technology - Ministry of Science, Innovation and Universities.

The Memory of the Herd: Documentary corpus of pastoral life.

This citizen science project, which has been active since July 2023, aims to gather knowledge and experiences from shepherds and retired shepherds about herd management and livestock farming.

The entity responsible for the programme is the Institut Català de Paleoecologia Humana i Evolució Social, although the Museu Etnogràfic de Ripoll, Institució Milà i Fontanals-CSIC, Universitat Autònoma de Barcelona and Universitat Rovira i Virgili also collaborate.

The programme helps to interpret the archaeological record and contributes to preserving knowledge of pastoral practice. It also values the experience and knowledge of older people, which helps to counter the negative connotation of "old age" in a society that gives priority to "youth": older people are treated not as passive subjects but as active social subjects.

Plastic Pirates Spain: Study of plastic pollution in European rivers.

This citizen science project, which has been carried out over the last year with young people between 12 and 18 years of age in the communities of Castilla y León and Catalonia, aims to contribute to generating scientific evidence and environmental awareness about plastic waste in rivers.

To this end, groups of young people from different educational centres, associations and youth groups have taken part in sampling campaigns to collect data on the presence of waste and rubbish, mainly plastics and microplastics, in riverbanks and water.

In Spain, this project has been coordinated by the BETA Technology Centre of the University of Vic - Central University of Catalonia together with the University of Burgos and the Oxygen Foundation. You can access more information on their website.

These are just some examples of citizen science projects. You can find out more at the Observatory of Citizen Science in Spain, an initiative that brings together a wide range of educational resources, reports and other interesting information on citizen science and its impact in Spain. Do you know of any other projects? Send them to us at dinamizacion@datos.gob.es and we can publicise them through our dissemination channels.

Artificial intelligence (AI) is revolutionising the way we create and consume content. From automating repetitive tasks to personalising experiences, AI offers tools that are changing the landscape of marketing, communication and creativity.

These artificial intelligences need to be trained with data that are fit for purpose and not copyrighted. Open data is therefore emerging as a very useful tool for the future of AI.

The Govlab has published the report "A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI" to explore this issue in more detail. It analyses the emerging relationship between open data and generative AI, presenting various scenarios and recommendations. Its key points are set out below.

The role of data in generative AI

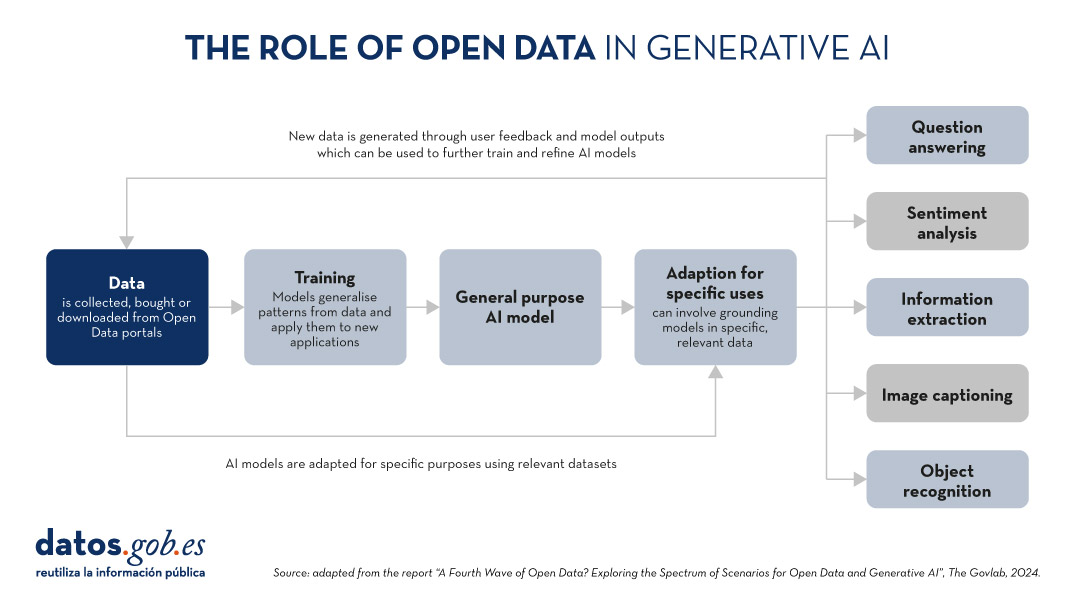

Data is the fundamental basis for generative artificial intelligence models. Building and training such models requires a large volume of data, the scale and variety of which is conditioned by the objectives and use cases of the model.

The following graphic explains how data functions as a key input and output of a generative AI system. Data is collected from various sources, including open data portals, in order to train a general-purpose AI model. This model will then be adapted to perform specific functions and different types of analysis, which in turn generate new data that can be used to further train models.

Figure 1. The role of open data in generative AI, adapted from the report “A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI”, The Govlab, 2024.

5 scenarios where open data and artificial intelligence converge

In order to help open data providers "prepare" their data for generative AI, The Govlab has defined five scenarios outlining five different ways in which open data and generative AI can intersect. These scenarios are intended as a starting point, to be expanded in the future, based on available use cases.

| Scenario | Function | Quality requirements | Metadata requirements | Example |
|---|---|---|---|---|
| Pre-training | Training the foundational layers of a generative AI model with large amounts of open data. | High volume of data, diverse and representative of the application domain and non-structured usage. | Clear information on the source of the data. | Data from NASA's Harmonized Landsat Sentinel-2 (HLS) project were used to train the geospatial foundational model watsonx.ai. |
| Adaptation | Refinement of a pre-trained model with task-specific open data, using fine-tuning or RAG techniques. | Tabular and/or unstructured data of high accuracy and relevance to the target task, with a balanced distribution. | Metadata focused on the annotation and provenance of data to provide contextual enrichment. | Building on the LLaMA 70B model, the French Government created LLaMandement, a refined large language model for the analysis and drafting of legal project summaries. They used data from SIGNALE, the French government's legislative platform. |
| Inference and Insight Generation | Extracting information and patterns from open data using a trained generative AI model. | High quality, complete and consistent tabular data. | Descriptive metadata on the data collection methods, source information and version control. | Wobby is a generative interface that accepts natural language queries and produces answers in the form of summaries and visualisations, using datasets from different offices such as Eurostat or the World Bank. |
| Data Augmentation | Leveraging open data to generate synthetic data or provide ontologies to extend the amount of training data. | Tabular and/or unstructured data which is a close representation of reality, ensuring compliance with ethical considerations. | Transparency about the generation process and possible biases. | A team of researchers adapted the US Synthea model to include demographic and hospital data from Australia. Using this model, the team was able to generate approximately 117,000 region-specific synthetic medical records. |
| Open-Ended Exploration | Exploring and discovering new knowledge and patterns in open data through generative models. | Tabular data and/or unstructured, diverse and comprehensive. | Clear information on sources and copyright, understanding of possible biases and limitations, identification of entities. | NEPAccess is a pilot to unlock access to data related to the US National Environmental Policy Act (NEPA) through a generative AI model. It will include functions for drafting environmental impact assessments, data analysis, etc. |

Figure 2. Five scenarios where open data and Artificial Intelligence converge, adapted from the report “A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI”, The Govlab, 2024.
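The Adaptation scenario mentions RAG (retrieval-augmented generation), where relevant open data is looked up and passed to the model as context. The sketch below shows only the retrieval and prompt-building steps, using simple word overlap as the ranking. The records, function names and scoring method are assumptions made for illustration; production systems typically use vector embeddings and a real language model call.

```python
import re

# Hypothetical open-data records; a real system would load them from a portal.
RECORDS = [
    {"id": "air-2023", "text": "Air quality measurements by monitoring station, 2023"},
    {"id": "bus-stops", "text": "Location of municipal bus stops and routes"},
    {"id": "water-use", "text": "Monthly water consumption by district"},
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, records: list[dict], top_k: int = 1) -> list[dict]:
    """Rank records by how many words they share with the question."""
    q_tokens = tokenize(question)
    scored = sorted(
        records,
        key=lambda r: len(q_tokens & tokenize(r["text"])),
        reverse=True,
    )
    return scored[:top_k]


def build_prompt(question: str, records: list[dict]) -> str:
    """Assemble the context that would be sent to a language model."""
    context = "\n".join(f"[{r['id']}] {r['text']}" for r in records)
    return f"Context:\n{context}\n\nQuestion: {question}"


question = "What was the air quality at each station?"
hits = retrieve(question, RECORDS)
prompt = build_prompt(question, hits)
print(prompt)
```

Keeping the record identifier in the prompt is one simple way to preserve provenance, which ties in with the metadata requirements listed in the table.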

You can read the details of these scenarios in the report, where more examples are explained. In addition, The Govlab has also launched an observatory where it collects examples of intersections between open data and generative artificial intelligence. It includes the examples in the report along with additional examples. Any user can propose new examples via this form. These examples will be used to further study the field and improve the scenarios currently defined.

Among the cases that can be seen on the web, we find a Spanish company: Tendios. This is a software-as-a-service company that has developed a chatbot to assist in the analysis of public tenders and bids in order to facilitate competition. This tool is trained on public documents from government tenders.

Recommendations for data publishers

To extract the full potential of generative AI, improving its efficiency and effectiveness, the report highlights that open data providers need to address a number of challenges, such as improving data governance and management. In this regard, it sets out five recommendations:

- Improve transparency and documentation. Using standards, data dictionaries, vocabularies, metadata templates and similar resources helps to implement documentation practices on lineage, quality, ethical considerations and impact of results.

- Maintain quality and integrity. Training and routine quality assurance processes are needed, including automated or manual validation, as well as tools to update datasets quickly when necessary. In addition, mechanisms for reporting and addressing data-related issues that may arise are needed to foster transparency and facilitate the creation of a community around open datasets.

- Promote interoperability and standards. This involves adopting and promoting international data standards, with a special focus on synthetic data and AI-generated content.

- Improve accessibility and user-friendliness. This involves enhancing open data portals through intelligent search algorithms and interactive tools. It is also essential to establish a shared space where data publishers and users can exchange views and express needs in order to match supply and demand.

- Address ethical considerations. Protecting data subjects is a top priority when talking about open data and generative AI. Comprehensive ethics committees and ethical guidelines are needed around the collection, sharing and use of open data, as well as advanced privacy-preserving technologies.
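As an illustration of the automated validation mentioned in the quality recommendation, the following sketch checks a small dataset for missing required fields and duplicate rows. The schema (`station_id`, `date`, `value`) and the sample rows are hypothetical; a publisher would adapt the rules to its own datasets.

```python
REQUIRED_FIELDS = ("station_id", "date", "value")  # assumed schema


def validate_rows(rows: list[dict]) -> list[str]:
    """Return a list of human-readable problems found in the dataset."""
    problems = []
    seen = set()
    for index, row in enumerate(rows):
        # Check that every required field is present and not empty.
        for name in REQUIRED_FIELDS:
            if row.get(name) in (None, ""):
                problems.append(f"row {index}: missing '{name}'")
        # Flag rows that repeat an earlier station/date combination.
        key = (row.get("station_id"), row.get("date"))
        if key in seen:
            problems.append(f"row {index}: duplicate of {key}")
        seen.add(key)
    return problems


rows = [
    {"station_id": "A1", "date": "2024-01-01", "value": 12.5},
    {"station_id": "A1", "date": "2024-01-01", "value": 12.5},   # duplicate
    {"station_id": "B2", "date": "2024-01-01", "value": None},   # missing value
]
issues = validate_rows(rows)
for issue in issues:
    print(issue)
```

Running a check like this before each publication, and exposing the resulting report, is one way to combine routine quality assurance with the transparency the report asks for.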

This is an evolving field that needs constant updating by data publishers. These must provide technically and ethically adequate datasets for generative AI systems to reach their full potential.

Digital transformation has become a fundamental pillar for the economic and social development of countries in the 21st century. In Spain, this process has become particularly relevant in recent years, driven by the need to adapt to an increasingly digitalised and competitive global environment. The COVID-19 pandemic acted as a catalyst, accelerating the adoption of digital technologies in all sectors of the economy and society.

However, digital transformation involves not only the incorporation of new technologies, but also a profound change in the way organisations operate and relate to their customers, employees and partners. In this context, Spain has made significant progress, positioning itself as one of the leading countries in Europe in several aspects of digitisation.

The following are some of the most prominent reports analysing this phenomenon and its implications.

State of the Digital Decade 2024 report

The State of the Digital Decade 2024 report examines the evolution of European policies aimed at achieving the agreed objectives and targets for successful digital transformation. It assesses the degree of compliance on the basis of various indicators, which fall into four groups: digital infrastructure, digital business transformation, digital skills and digital public services.

Figure 1. Taking stock of progress towards the Digital Decade goals set for 2030, “State of the Digital Decade 2024 Report”, European Commission.

In recent years, the European Union (EU) has significantly improved its performance by adopting regulatory measures - with 23 new legislative developments, including, among others, the Data Governance Regulation and the Data Regulation - to provide itself with a comprehensive governance framework: the Digital Decade Policy Agenda 2030.

The document includes an assessment of the strategic roadmaps of the various EU countries. In the case of Spain, two main strengths stand out:

- Progress in the use of artificial intelligence by companies (9.2% compared to 8.0% in Europe), where Spain's annual growth rate (9.3%) is more than three times that of the EU (2.6%).

- The large number of citizens with basic digital skills (66.2%), compared to the European average (55.6%).

On the other hand, the main challenges to overcome are the adoption of cloud services (27.2% versus 38.9% in the EU) and the number of ICT specialists (4.4% versus 4.8% in Europe).
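The four comparisons quoted above can be put side by side with a short calculation. This is only a sketch that restates the figures from the text as percentage-point differences; the indicator labels are shortened for readability.

```python
# (Spain %, EU %) as quoted in the text above
indicators = {
    "AI adoption by companies": (9.2, 8.0),
    "Basic digital skills": (66.2, 55.6),
    "Cloud adoption": (27.2, 38.9),
    "ICT specialists": (4.4, 4.8),
}

# Positive values mean Spain is ahead of the EU average.
gaps = {name: round(spain - eu, 1) for name, (spain, eu) in indicators.items()}

for name, gap in gaps.items():
    position = "ahead of" if gap > 0 else "behind"
    print(f"{name}: {abs(gap):.1f} points {position} the EU average")
```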

The following image shows the forecast evolution in Spain of the key indicators analysed for 2024, compared to the targets set by the EU for 2030.

Figure 2. Key performance indicators for Spain, “State of the Digital Decade 2024 Report”, European Commission.

Spain is expected to reach 100% on virtually all indicators by 2030. The planned budget amounts to 26.7 billion euros (1.8% of GDP), without taking into account private investments. This roadmap demonstrates the commitment to achieving the goals and targets of the Digital Decade.

In addition to investment, to achieve the objective, the report recommends focusing efforts in three areas: the adoption of advanced technologies (AI, data analytics, cloud) by SMEs; the digitisation and promotion of the use of public services; and the attraction and retention of ICT specialists through the design of incentive schemes.

European Innovation Scoreboard 2024

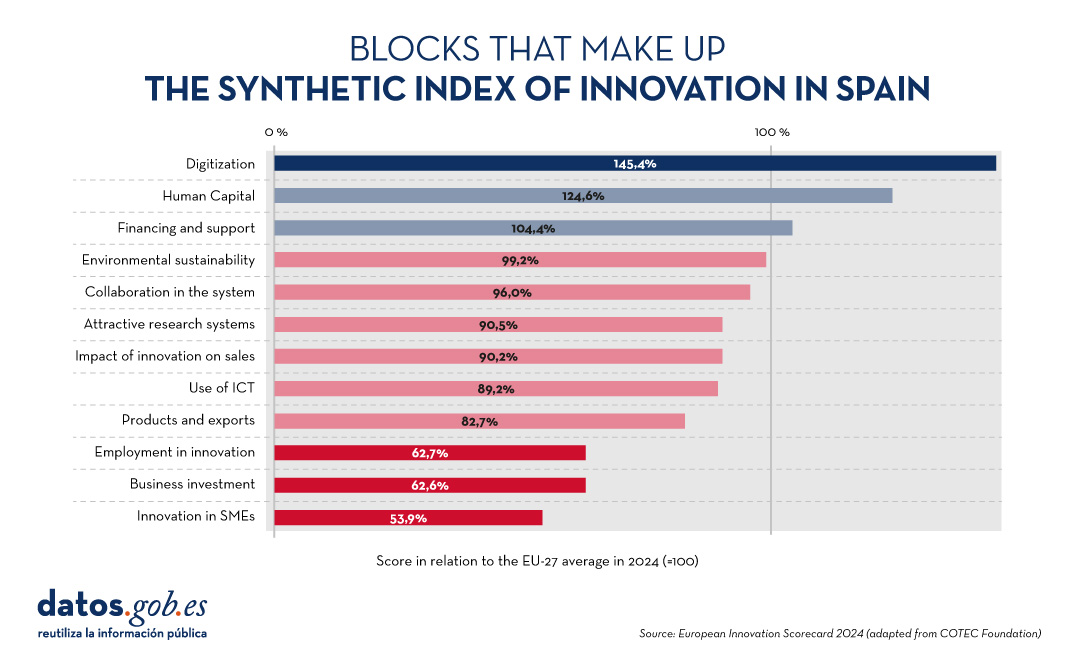

The European Innovation Scoreboard carries out an annual benchmarking of research and innovation developments in a number of countries, not only in Europe. The report classifies regions into four innovation groups, ranging from the most innovative to the least innovative: Innovation Leaders, Strong Innovators, Moderate Innovators and Emerging Innovators.

Spain is leading the group of moderate innovators, with a performance of 89.9% of the EU average. This represents an improvement compared to previous years and exceeds the average of other countries in the same category, which is 84.8%. Our country is above the EU average in three indicators: digitisation, human capital and financing and support. On the other hand, the areas in which it needs to improve the most are employment in innovation, business investment and innovation in SMEs. All this is shown in the following graph:

Figure 3. Blocks that make up the synthetic index of innovation in Spain, European Innovation Scoreboard 2024 (adapted from the COTEC Foundation).

Spain's Digital Society Report 2023

The Telefónica Foundation also periodically publishes a report which analyses the main changes and trends that our country is experiencing as a result of the technological revolution.

The edition currently available is the 2023 edition. It notes that "Spain continues to deepen its digital transformation process at a good pace and occupies a prominent position in this aspect among European countries", highlighting above all the area of connectivity. However, digital divides remain, mainly due to age.

Progress is also being made in the relationship between citizens and digital administrations: 79.7% of people aged 16-74 used websites or mobile applications of an administration in 2022. On the other hand, the Spanish business fabric is advancing in its digitalisation, incorporating digital tools, especially in the field of marketing. However, there is still room for improvement in aspects of big data analysis and the application of artificial intelligence, activities that are currently implemented, in general, only by large companies.

Artificial Intelligence and Data Talent Report

IndesIA, an association that promotes the use of artificial intelligence and Big Data in Spain, has carried out a quantitative and qualitative analysis of the data and artificial intelligence talent market in 2024 in our country.

According to the report, the data and artificial intelligence talent market represents almost 19% of the total number of ICT professionals in our country. In total, there are 145,000 professionals (+2.8% from 2023), of which only 32% are women. Even so, there is a gap between supply and demand, especially for natural language processing engineers. To address this situation, the report analyses six areas for improvement: workforce strategy and planning, talent identification, talent activation, engagement, training and development, and data-driven culture.

Other reports of interest

The COTEC Foundation also regularly produces various reports on the subject. On its website we can find documents on the budget execution of R&D in the public sector, the social perception of innovation or the regional talent map.

For their part, the Orange Foundation in Spain and the consultancy firm Nae have produced a report to analyse digital evolution over the last 25 years, the same period that the Foundation has been operating in Spain. The report highlights that, between 2013 and 2018, the digital sector contributed around €7.5 billion annually to the country's GDP.

In short, all of them highlight Spain's position among the European leaders in terms of digital transformation, but with the need to make progress in innovation. This requires not only boosting economic investment, but also promoting a cultural change that fosters creativity. A more open and collaborative mindset will allow companies, administrations and society in general to adapt quickly to technological changes and take advantage of the opportunities they bring to ensure a prosperous future for Spain.

Do you know of any other reports on the subject? Leave us a comment or write to us at dinamizacion@datos.gob.es.

For many people, summer means the arrival of the vacations, a time to rest or disconnect. But those days off are also an opportunity to train in various areas and improve our competitive skills.

For those who want to take advantage of the next few weeks and acquire new knowledge, Spanish universities have a wide range of courses on a variety of subjects. In this article, we have compiled some examples of courses related to data training.

Geographic Information Systems (GIS) with QGIS. University of Alcalá de Henares (link not available).

The course aims to train students in basic GIS skills so that they can perform common processes such as creating maps for reports, downloading data from a GPS, performing spatial analysis, etc. Each student will have the possibility to develop their own GIS project with the help of the faculty. The course is aimed at university students of any discipline, as well as professionals interested in learning basic concepts to create their own maps or use geographic information systems in their activities.

- Date and place: June 27-28 and July 1-2 in online mode.

Citizen science applied to biodiversity studies: from the idea to the results. Pablo de Olavide University (Seville).

This course addresses all the necessary steps to design, implement and analyze a citizen science project: from the acquisition of basic knowledge to its applications in research and conservation projects. Among other issues, there will be a workshop on citizen science data management, focusing on platforms such as Observation.org and GBIF. It will also teach how to use citizen science tools for the design of research projects. The course is aimed at a broad audience, especially researchers, conservation project managers and students.

- Date and place: From July 1 to 3, 2024, online and on-site (Seville).

Big Data. Data analysis and machine learning with Python. Complutense University of Madrid.

This course aims to provide students with an overview of the broad Big Data ecosystem, its challenges and applications, focusing on new ways of obtaining, managing and analyzing data. During the course, the Python language is presented, and different machine learning techniques are shown for the design of models that allow obtaining valuable information from a set of data. It is aimed at any university student, teacher, researcher, etc. with an interest in the subject, as no previous knowledge is required.

- Date and place: July 1 to 19, 2024 in Madrid.

Introduction to Geographic Information Systems with R. University of Santiago de Compostela.

Organized by the Working Group on Climate Change and Natural Hazards of the Spanish Association of Geography together with the Spanish Association of Climatology, this course will introduce the student to two major areas of great interest: 1) the handling of the R environment, showing the different ways of managing, manipulating and visualizing data. 2) spatial analysis, visualization and work with raster and vector files, addressing the main geostatistical interpolation methods. No previous knowledge of Geographic Information Systems or the R environment is required to participate.

- Date and place: July 2-5, 2024 in Santiago de Compostela.

Artificial Intelligence and Large Language Models: Operation, Key Components and Applications. University of Zaragoza.

Through this course, students will be able to understand the fundamentals and practical applications of artificial intelligence focused on Large Language Models (LLMs). Students will be taught how to use specialized libraries and frameworks to work with LLMs, and will be shown examples of use cases and applications through hands-on workshops. It is aimed at professionals and students in the information and communications technology sector.

- Date and place: July 3 to 5 in Zaragoza.

Deep into Data Science. University of Cantabria.

This course focuses on the study of big data using Python. The emphasis of the course is on Machine Learning, including sessions on artificial intelligence, neural networks or Cloud Computing. This is a technical course, which presupposes previous knowledge in science and programming with Python.

- Date and place: From July 15 to 19, 2024 in Torrelavega.

Data management for the use of artificial intelligence in tourist destinations. University of Alicante.

This course approaches the concept of Smart Tourism Destination (ITD) and addresses the need to have an adequate technological infrastructure to ensure its sustainable development, as well as to carry out an adequate data management that allows the application of artificial intelligence techniques. During the course, open data and data spaces and their application in tourism will be discussed. It is aimed at all audiences with an interest in the use of emerging technologies in the field of tourism.

- Date and place: From July 22 to 26, 2024 in Torrevieja.

The challenges of digital transformation of productive sectors from the perspective of artificial intelligence and data processing technologies. University of Extremadura.

Once the summer is over, this course addresses the fundamentals of digital transformation and its impact on productive sectors through the exploration of key data processing technologies, such as the Internet of Things, Big Data, Artificial Intelligence, etc. During the sessions, case studies and implementation practices of these technologies in different industrial sectors will be analyzed. All this without leaving aside the ethical, legal and privacy challenges. It is aimed at anyone interested in the subject, without the need for prior knowledge.

- Date and place: From September 17 to 19, in Cáceres.

These courses are just examples that highlight the importance that data-related skills are acquiring in Spanish companies, and how this is reflected in university offerings. Do you know of any other courses offered by public universities? Let us know in the comments.

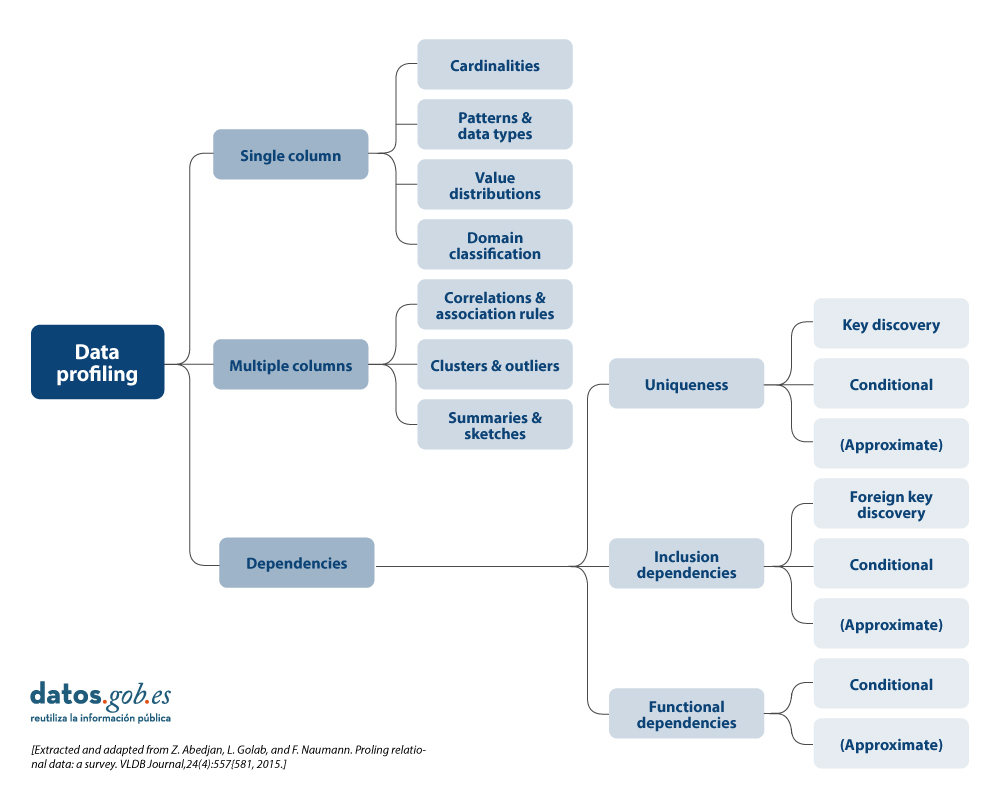

What is data profiling?

Data profiling is the set of activities and processes aimed at determining the metadata about a particular dataset. This process, considered as an indispensable technique during exploratory data analysis, includes the application of different statistics with the main objective of determining aspects such as the number of null values, the number of distinct values in a column, the types of data and/or the most frequent patterns of data values. Its ultimate goal is to provide a clear and detailed understanding of the structure, content and quality of the data, which is essential prior to its use in any application.

Types of data profiling

There are different alternatives in terms of the statistical principles to be applied during data profiling, as well as their typology. For this article, a review of various approaches by different authors has been carried out. On this basis, the article groups data profiling techniques into three high-level categories: single-column profiling, multi-column profiling and dependency profiling. For each category, possible techniques and uses are identified.

More detail on each of the categories and the benefits they bring is presented below.

1. Profiling of a column

Single-column profiling focuses on analysing each column of a dataset individually. This analysis includes the collection of descriptive statistics such as:

- Count of distinct values, to determine the exact number of unique records in a list and to be able to sort them. For example, in the case of a dataset containing grants awarded by a public body, this task will allow us to know how many different beneficiaries there are for the beneficiaries column, and whether any of them are repeated.

- Distribution of values (frequency), which refers to the analysis of the frequency with which different values occur within the same column. This can be represented by histograms that divide the values into intervals and show how many values are in each interval. For example, in an age column, we might find that 20 people are between 25-30 years old, 15 people are between 30-35 years old, and so on.

- Counting null or missing values, which involves counting the number of null or empty values in each column of a dataset. It helps to determine the completeness of the data and can point to potential quality problems. For example, in a column of email addresses, 5 out of 100 records could be empty, indicating 5% missing data.

- Minimum, maximum and average length of values (for text columns), which is oriented to calculate what is the length of the values in a text column. This is useful for identifying unusual values and for defining length restrictions in databases. For example, in a column of names, we might find that the shortest name is 3 characters, the longest is 20 characters, and the average is 8 characters.

The main benefits of using this data profiling include:

- Anomaly detection: allows the identification of unusual or out-of-range values.

- Improved data preparation: assists in normalising and cleaning data prior to use in more advanced analytics or machine learning models.
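As an illustration of the single-column statistics described above, the following is a minimal sketch in plain Python. The function name `profile_column`, the sample `beneficiaries` values and the decision to treat `None` and empty strings as missing are assumptions made for this example, not part of any specific profiling tool.

```python
from collections import Counter
from statistics import mean


def profile_column(values):
    """Return basic single-column profiling statistics for a list of values.

    Assumption of this sketch: None and empty strings count as missing.
    """
    missing = [v for v in values if v is None or v == ""]
    present = [v for v in values if v is not None and v != ""]
    frequencies = Counter(present)  # distribution of values

    profile = {
        "total": len(values),
        "distinct": len(frequencies),
        "missing": len(missing),
        "missing_pct": 100 * len(missing) / len(values) if values else 0.0,
        "most_common": frequencies.most_common(3),
    }

    # Length statistics only make sense for text values.
    lengths = [len(v) for v in present if isinstance(v, str)]
    if lengths:
        profile["min_len"] = min(lengths)
        profile["max_len"] = max(lengths)
        profile["avg_len"] = mean(lengths)
    return profile


# Hypothetical "beneficiaries" column with repeated and missing entries.
beneficiaries = ["Ana", "Luis", "Ana", None, "Marta", "", "Luis", "Ana"]
result = profile_column(beneficiaries)
print(result)
```

In a real project, libraries such as the ones listed at the end of this article would normally produce these figures; the sketch only shows what is being computed.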

2. Multi-column profiling

Multi-column profiling analyses the relationship between two or more columns within the same data set. This type of profiling may include:

- Correlation analysis, used to identify relationships between numerical columns in a data set. A common technique is to calculate pairwise correlations between all numerical columns to discover patterns of relationships. For example, in a table of researchers, we might find that age and number of publications are correlated, indicating that as the age of researchers and their category increases, their number of publications tends to increase. A Pearson correlation coefficient could quantify this relationship.

- Outliers, which involves identifying data that deviate significantly from other data points. Outliers may indicate errors, natural variability or interesting data points that merit further investigation. For example, in a column of budgets for annual R&D projects, a value of one million euros could be an outlier if most of the budgets are between 30,000 and 100,000 euros. However, if the amount is represented in relation to the duration of the project, it could be a normal value if the 1 million euro project has 10 times the duration of the 100,000 euro project.

- Frequent value combination detection, focused on finding sets of values that occur together frequently in the data. They are used to discover associations between elements, as in transaction data. For example, in a shopping dataset, we might find that the products "nappies" and "baby formula" are frequently purchased together. An association rule algorithm could generate the rule {nappies} → {baby formula}, indicating that customers who buy nappies also tend to buy baby formula with a high probability.

The main benefits of using this data profiling include:

- Trend detection: allows the identification of significant relationships and correlations between columns, which can help in the detection of patterns and trends.

- Improved data consistency: ensures that there is referential integrity and that, for example, similar data type formats are followed between data across multiple columns.

- Dimensionality reduction: makes it possible to reduce the number of columns containing redundant or highly correlated data.
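Two of the multi-column techniques above can be sketched in a few lines of plain Python. The helper names `pearson` and `frequent_pairs`, the sample data and the 0.5 support threshold are assumptions for illustration; counting co-occurring pairs is a simplified stand-in for a full association rule algorithm.

```python
from collections import Counter
from itertools import combinations
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long numeric lists."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


def frequent_pairs(transactions, min_support):
    """Return item pairs whose share of transactions is at least min_support."""
    counts = Counter()
    for items in transactions:
        # Sort so that each pair is always counted under the same key.
        for pair in combinations(sorted(set(items)), 2):
            counts[pair] += 1
    total = len(transactions)
    return {pair: c / total for pair, c in counts.items() if c / total >= min_support}


# Hypothetical researcher data: age and number of publications.
ages = [30, 40, 50, 60]
publications = [5, 10, 15, 20]
r = pearson(ages, publications)

# Hypothetical shopping baskets.
baskets = [
    ["nappies", "baby formula", "bread"],
    ["nappies", "baby formula"],
    ["bread", "butter"],
    ["nappies", "baby formula", "butter"],
]
pairs = frequent_pairs(baskets, min_support=0.5)
print(round(r, 3), pairs)
```

A coefficient close to 1 or -1 suggests a strong linear relationship, while the support value is the fraction of baskets that contain both items.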

3. Profiling of dependencies

Dependency profiling focuses on discovering and validating logical relationships between different columns, such as:

- Foreign key discovery, which is aimed at establishing which values or combinations of values from one set of columns also appear in the other set of columns, a prerequisite for a foreign key. For example, in the Researcher table, the ProjectID column contains the values [101, 102, 101, 103]. To set ProjectID as a foreign key, we verify that these values are also present in the ProjectID column of the Project table [101, 102, 103]. As all values match, ProjectID in Researcher can be a foreign key referring to ProjectID in Project.

- Functional dependencies, which establish relationships in which the value of one column depends on the value of another. They are also used for the validation of specific rules that must be complied with (e.g. a discount value must not exceed the total value).

The main benefits of using this data profiling include:

- Improved referential integrity: ensures that relationships between tables are valid and maintained correctly.

- Consistency validation between values: makes it possible to ensure that the data comply with certain constraints or calculations defined by the organisation.

- Data repository optimisation: makes it possible to improve the structure and design of databases by validating and adjusting dependencies.
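The two dependency checks described above can be sketched as follows. The function names and the postcode/city rows are hypothetical; the ProjectID values are the ones used in the foreign key example.

```python
def is_foreign_key_candidate(child_values, parent_values):
    """True if every non-null child value also appears in the parent column."""
    parent = set(parent_values)
    return all(v in parent for v in child_values if v is not None)


def functional_dependency_violations(rows, determinant, dependent):
    """Return determinant values that map to more than one dependent value."""
    seen = {}
    for row in rows:
        seen.setdefault(row[determinant], set()).add(row[dependent])
    return {key: values for key, values in seen.items() if len(values) > 1}


# Values from the Researcher and Project tables in the example above.
researcher_project_ids = [101, 102, 101, 103]
project_ids = [101, 102, 103]
fk_ok = is_foreign_key_candidate(researcher_project_ids, project_ids)

# Hypothetical rows: a postcode is expected to determine a single city name.
rows = [
    {"postcode": "06001", "city": "Badajoz"},
    {"postcode": "06001", "city": "Badajoz"},
    {"postcode": "06800", "city": "Mérida"},
    {"postcode": "06800", "city": "Merida"},
]
violations = functional_dependency_violations(rows, "postcode", "city")
print(fk_ok, violations)
```

Here the foreign key check passes, while the second check flags postcode 06800 because it appears with two different spellings of the city.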

Uses of data profiling

The above-mentioned statistics can be used in many areas in organisations. One use case would be in data science and data engineering initiatives where it allows for a thorough understanding of the characteristics of a dataset prior to analysis or modelling.

- By generating descriptive statistics, identifying outliers and missing values, uncovering hidden patterns, identifying and correcting problems such as null values, duplicates and inconsistencies, data profiling facilitates data cleaning and data preparation, ensuring data quality and consistency.

- It is also crucial for the early detection of problems, such as duplicates or errors, and for the validation of assumptions in predictive analytics projects.

- It is also essential for the integration of data from multiple sources, ensuring consistency and compatibility.

- In the area of data governance, management and quality, profiling can help establish sound policies and procedures, while in compliance it ensures that data complies with applicable regulations.

- Finally, in terms of management, it helps optimise Extract, Transform and Load (ETL) processes, supports data migration between systems and prepares data sets for machine learning and predictive analytics, improving the effectiveness of data-driven models and decisions.

Difference between data profiling and data quality assessment

The term data profiling is sometimes confused with data quality assessment. While data profiling focuses on discovering and understanding the metadata and characteristics of the data, data quality assessment goes one step further and focuses for example on analysing whether the data meets certain requirements or quality standards predefined in the organisation through business rules. Likewise, data quality assessment involves verifying the quality value for different characteristics or dimensions such as those included in the UNE 0081 specification: accuracy, completeness, consistency or timeliness, etc., and ensuring that the data is suitable for its intended use in the organisation: analytics, artificial intelligence, business intelligence, etc.
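The distinction can be illustrated with a small sketch: profiling measures a characteristic, and assessment compares that measure with a requirement. The function names, the sample `emails` column and the 95% completeness threshold are assumptions for this example and are not taken from the UNE 0081 specification.

```python
def completeness(values):
    """Profiling step: share of non-missing values in a column (0.0 to 1.0)."""
    if not values:
        return 0.0
    present = [v for v in values if v is not None and v != ""]
    return len(present) / len(values)


def meets_requirement(measured, threshold):
    """Assessment step: compare a profiled measure with a business rule."""
    return measured >= threshold


# Hypothetical column of email addresses with one missing value.
emails = ["a@example.org", None, "b@example.org", "c@example.org"]
measured = completeness(emails)
passed = meets_requirement(measured, threshold=0.95)
print(measured, passed)
```

The first function only describes the data; the second one encodes a decision that depends on what the organisation considers acceptable.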

Data profiling tools or solutions

Finally, there are several outstanding open source solutions (tools, libraries, or dependencies) for data profiling that facilitate the understanding of the data. These include:

- Pandas Profiling and YData Profiling, which offer detailed reporting and advanced visualisations in Python.

- Great Expectations and Dataprep, which validate and prepare data, ensuring data integrity throughout the data lifecycle.

- R dtables, which allows the generation of detailed reports and visualisations for exploratory analysis and data profiling in the R ecosystem.

In summary, data profiling is an important part of exploratory data analysis that provides a detailed understanding of the structure, content and quality of the data, and it should be taken into account in any data analysis initiative. It is important to dedicate time to this activity, with the necessary resources and tools, in order to better understand the data being handled. It is also one more technique within data quality management, and it can be used as a step prior to data quality assessment.

Content elaborated by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and the point of view reflected in this publication are the sole responsibility of its author.

The unstoppable advance of ICTs in cities and rural territories, and the social, economic and cultural context that sustains it, requires skills and competences that position us advantageously in new scenarios and environments of territorial innovation. In this context, the Provincial Council of Badajoz has been able to adapt and anticipate the circumstances, and in 2018 it launched the initiative "Badajoz Es Más - Smart Provincia".

What is "Badajoz Es Más"?

The project "Badajoz Es Más" is an initiative carried out by the Provincial Council of Badajoz with the aim of achieving more efficient services, improving the quality of life of its citizens and promoting entrepreneurship and innovation through technology and data governance in a region made up of 135 municipalities. The aim is to digitally transform the territory, favouring the creation of business opportunities, social improvement and settlement of the population.

Traditionally, "Smart Cities" projects have focused their efforts on cities, renovation of historic centres, etc. However, "Badajoz Es Más" is focused on the transformation of rural areas, smart towns and their citizens, putting the focus on rural challenges such as depopulation of rural municipalities, the digital divide, talent retention or the dispersion of services. The aim is to avoid isolated "silos" and transform these challenges into opportunities by improving information management, through the exploitation of data in a productive and efficient way.

Citizens at the Centre

The "Badajoz es Más" project aims to carry out the digital transformation of the territory by making available to municipalities, companies and citizens the new technologies of IoT, Big Data, Artificial Intelligence, etc. The main lines of the project are set out below.

Provincial Platform for the Intelligent Management of Public Services

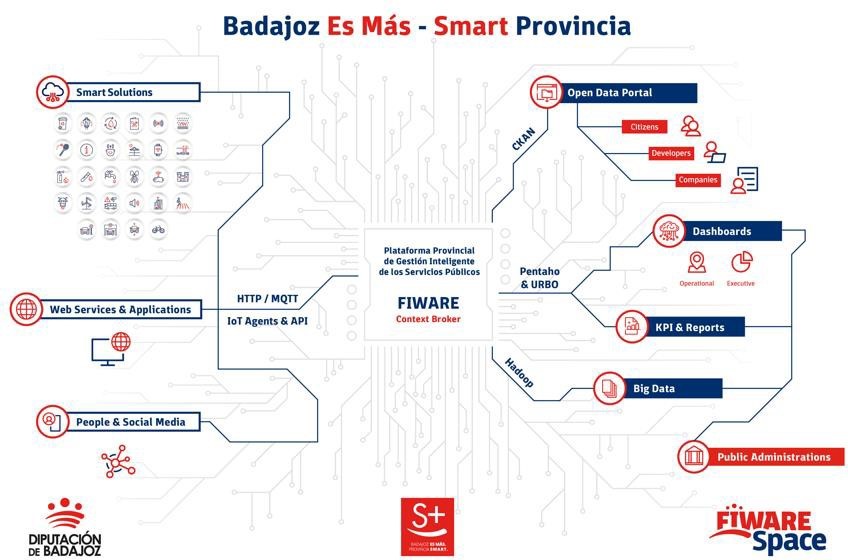

It is the core component of the initiative, as it allows for the integration of information from any IoT device, information system or data source in a single place for storage, visualisation and analysis. Specifically, data is collected from a variety of sources: the various sensors of smart solutions deployed in the region, web services and applications, citizen feedback and social networks.

All information is collected on a platform based on the open source standard FIWARE, an initiative promoted by the European Commission that provides the capacity to homogenise data (FIWARE Data Model) and favour its interoperability. Built according to the guidelines set by AENOR (UNE 178104), it has a central module, Orion Context Broker (OCB), which allows the entire information life cycle to be managed. In this way, it offers the ability to centrally monitor and manage a scalable set of public services through internal dashboards.

The platform is "multi-entity", i.e. it provides information, knowledge and services to both the Provincial Council itself and its associated Municipalities (also known as "Smart Villages"). The visualisation of the different information exploitation models processed at the different levels of the Platform is carried out on different dashboards, which can provide service to a specific municipality or locality only showing its data and services, or also provide a global view of all the services and data at the level of the Provincial Council of Badajoz.

Some of the information collected on the platform is also made available to third parties through various channels:

- Open data portal. Collected data that can be opened to third parties for reuse is shared through its open data portal. In it we can find information as diverse as real-time data on the blue flag beaches in the region (air quality, water quality, noise pollution, capacity, etc. are monitored) or traffic flow, which makes it possible to predict traffic jams.

- Portal for citizens Digital Province Badajoz. This portal offers information on the solutions currently implemented in the province and their data in real time in a user-friendly way, with a simple user experience that allows non-technical people to access the projects developed.

The following graph shows the cycle of information, from its collection, through the platform and distribution to the different channels. All this under strong data governance.

Efficient public services

In addition to the implementation and start-up of the Provincial Platform for the Intelligent Management of Public Services, this project has already integrated various existing services or "verticals" in order to:

- Start implementing these new services in the province and be the example and the "spearhead" of this technological transformation.

- Show the benefits of the implementation of these technologies in order to disseminate and demonstrate them, with the aim of causing sufficient impact so that other local councils and organisations will gradually join the initiative.

There are currently more than 40 companies sending data to the Provincial Platform, more than 60 integrated data sources, more than 800 connected devices, more than 500 transactions per minute... It should be noted that work is underway to ensure that the new calls for tender include a clause so that data from the various works financed with public money can also be sent to the platform.

The idea is to be able to standardise management, so that the solution that has been implemented in one municipality can also be used in another. This not only improves efficiency, but also makes it possible to compare results between municipalities. You can see some of the services already implemented in the province, as well as their dashboards built from the Provincial Platform, in this video.

Innovation Ecosystem

In order for the initiative to reach its target audience, the Provincial Council of Badajoz has developed an innovation ecosystem that serves as a meeting point for:

- Citizens, who demand these services.

- Entrepreneurs and educational entities, which have an interest in these technologies.

- Companies, which have the capacity to implement these solutions.

- Public entities, which can implement this type of project.

The aim is to facilitate and provide the necessary tools, knowledge and advice so that the projects that emerge from this meeting can be carried out.

At the core of this ecosystem is a physical innovation centre called the FIWARE Space. FIWARE Space carries out tasks such as the organisation of events for the dissemination of Smart technologies and concepts among companies and citizens, demonstrative and training workshops, Hackathons with universities and study centres, etc. It also has a Showroom for the exhibition of solutions, organises financially endowed Challenges and is present at national and international congresses.

In addition, they carry out mentoring work for companies and other entities. In total, around 40 companies have been mentored by FIWARE Space, launching their own solutions on several occasions on the FIWARE Market, or proposing the generated data models as standards for the entire global ecosystem. These companies are offered a free service to acquire the necessary knowledge to work in a standardised way, generating uniform data for the rest of the region, and to connect their solutions to the platform, helping and advising them on the challenges that may arise.

One of the keys to FIWARE Space is its open nature, having signed many collaboration agreements with both local and international entities. For example, work on the standardisation of advanced data models for tourism is ongoing with the Future Cities Institute (Argentina). Those who would like more information can follow the centre's activity through its weekly blog.

Next steps: convergence with Data Spaces and Gaia-X

As a result of the collaborative and open nature of the project, the Data Space concept fits perfectly with the philosophy of "Badajoz Es Más". The Badajoz Provincial Council currently has a multitude of verticals with interesting information for sharing (and future exploitation) of data in a reliable, sovereign and secure way. As a Public Entity, comparing and obtaining other sources of data will greatly enrich the project, providing an external view that is essential for its growth. Gaia-X is the proposal for the creation of a data infrastructure for Europe, and it is the standard towards which the "Badajoz Es Más" project is currently converging, as a result of its collaboration with the Gaia-X Spain hub.

Today, 23 April, is World Book Day, an occasion to highlight the importance of reading, writing and the dissemination of knowledge. Active reading promotes the acquisition of skills and critical thinking by bringing us closer to specialised and detailed information on any subject that interests us, including the world of data.

Therefore, we would like to take this opportunity to showcase some examples of books and manuals regarding data and related technologies that can be found on the web for free.

1. Fundamentals of Data Science with R, edited by Gema Fernandez-Avilés and José María Montero (2024)

Access the book here.

- What is it about? The book guides the reader from the problem statement to the completion of the report containing the solution to the problem. It explains some thirty data science techniques in the fields of modelling, qualitative data analysis, discrimination, supervised and unsupervised machine learning, etc. It includes more than a dozen use cases in sectors as diverse as medicine, journalism, fashion and climate change, among others. All this, with a strong emphasis on ethics and the promotion of reproducibility of analyses.

- Who is it aimed at? It is aimed at users who want to get started in data science. It starts with basic questions, such as what is data science, and includes short sections with simple explanations of probability, statistical inference or sampling, for those readers unfamiliar with these issues. It also includes replicable examples for practice.

- Language: Spanish.

2. Telling stories with data, Rohan Alexander (2023).

Access the book here.

- What is it about? The book explains a wide range of topics related to statistical communication and data modelling and analysis. It covers the various operations from data collection, cleaning and preparation to the use of statistical models to analyse the data, with particular emphasis on the need to draw conclusions and write about the results obtained. Like the previous book, it also focuses on ethics and reproducibility of results.

- Who is it aimed at? It is ideal for students and entry-level users, equipping them with the skills to effectively conduct and communicate a data science exercise. It includes extensive code examples for replication and activities to be carried out as evaluation.

- Language: English.

3. The Big Book of Small Python Projects, Al Sweigart (2021)

Access the book here.

- What is it about? It is a collection of simple Python projects to learn how to create digital art, games, animations, numerical tools, etc. through a hands-on approach. Each of its 81 chapters independently explains a simple step-by-step project - limited to a maximum of 256 lines of code. It includes a sample run of the output of each programme, source code and customisation suggestions.

- Who is it aimed at? The book is written for two groups of people. On the one hand, those who have already learned the basics of Python, but are still not sure how to write programs on their own. On the other hand, those who are new to programming, but are adventurous, enthusiastic and want to learn as they go along. However, the same author has other resources for beginners to learn basic concepts.

- Language: English.

4. Mathematics for Machine Learning, Marc Peter Deisenroth, A. Aldo Faisal and Cheng Soon Ong (2024)

Access the book here.

- What is it about? Most books on machine learning focus on machine learning algorithms and methodologies, and assume that the reader is proficient in mathematics and statistics. This book foregrounds the mathematical foundations of the basic concepts behind machine learning.

- Who is it aimed at? The authors assume that the reader has mathematical knowledge commonly learned in high school mathematics and physics subjects, such as derivatives and integrals or geometric vectors. Thereafter, the remaining concepts are explained in detail, but in an academic style, in order to be precise.

- Language: English.

5. Dive into Deep Learning, Aston Zhang, Zack C. Lipton, Mu Li, Alex J. Smola (2021, continually updated)

Access the book here.

- What is it about? The authors are Amazon employees who use the MXNet library to teach Deep Learning. It aims to make deep learning accessible, teaching basic concepts, context and code in a practical way through examples and exercises. The book is divided into three parts: introductory concepts, deep learning techniques and advanced topics focusing on real systems and applications.

- Who is it aimed at? This book is aimed at students (undergraduate and postgraduate), engineers and researchers, who are looking for a solid grasp of the practical techniques of deep learning. Each concept is explained from scratch, so no prior knowledge of deep or machine learning is required. However, knowledge of basic mathematics and programming is necessary, including linear algebra, calculus, probability and Python programming.

- Language: English.

6. Artificial intelligence and the public sector: challenges, limits and means, Eduardo Gamero and Francisco L. Lopez (2024)

Access the book here.

- What is it about? This book focuses on analysing the challenges and opportunities presented by the use of artificial intelligence in the public sector, especially when used to support decision-making. It begins by explaining what artificial intelligence is and what its applications in the public sector are, and then moves on to its legal framework, the means available for its implementation and aspects linked to organisation and governance.

- Who is it aimed at? It is a useful book for all those interested in the subject, but especially for policy makers, public workers and legal practitioners involved in the application of AI in the public sector.

- Language: Spanish.

7. A Business Analyst’s Introduction to Business Analytics, Adam Fleischhacker (2024)

Access the book here.

- What is it about? The book covers a complete business analytics workflow, including data manipulation, data visualisation, modelling business problems, translating graphical models into code and presenting results to stakeholders. The aim is to learn how to drive change within an organisation through data-driven knowledge, interpretable models and persuasive visualisations.

- Who is it aimed at? According to the author, the content is accessible to everyone, including beginners in analytical work. The book does not assume any prior programming knowledge, and provides an introduction to R, RStudio and the "tidyverse", a collection of open source packages for data science.

- Language: English.

We invite you to browse through this selection of books. We would also like to remind you that this list is only a sample of the materials you can find on the web. Do you know of any other books you would like to recommend? Let us know in the comments or email us at dinamizacion@datos.gob.es!

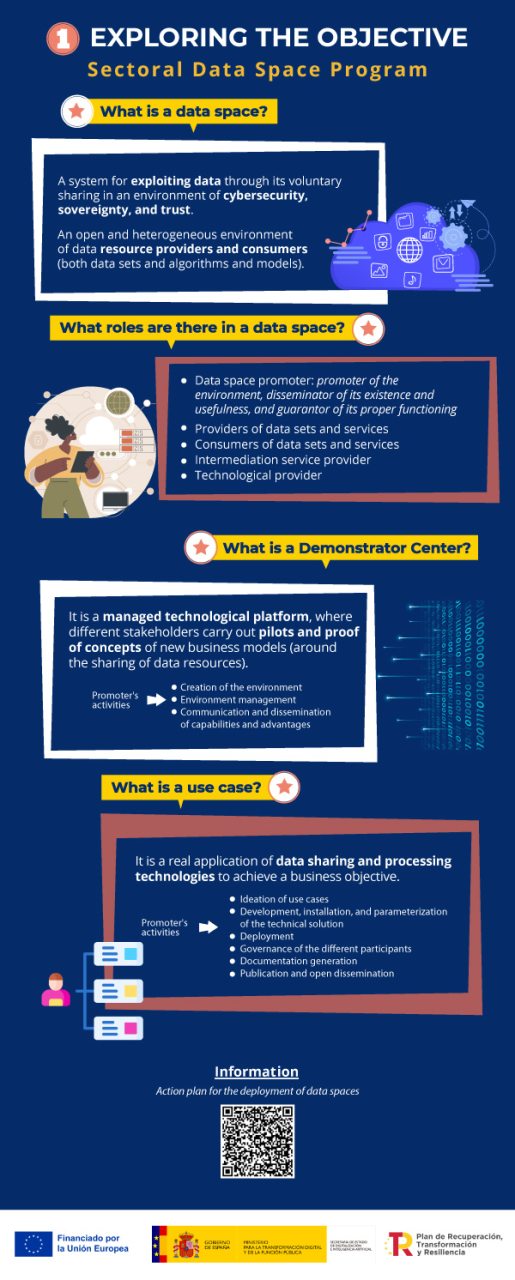

Between 2 April and 16 May, applications for the call for aid for the digital transformation of strategic productive sectors may be submitted at the electronic headquarters of the Ministry for Digital Transformation and Civil Service. Order TDF/1461/2023, of 29 December, modified by Order TDF/294/2024, regulates grants totalling 150 million euros for the creation of demonstrators and use cases, as part of the broader Sectoral Data Spaces Program, promoted by the State Secretary for Digitalisation and Artificial Intelligence and framed within the Recovery, Transformation and Resilience Plan (PRTR). The objective is to finance the development of data spaces and promote disruptive innovation in strategic sectors of the economy, in line with the strategic lines set out in the Digital Spain Agenda 2026.

Lines, sectors and beneficiaries

The current call includes funding lines for experimental development projects in two complementary areas of action: the creation of demonstration centres (development of technological platforms for data spaces) and the promotion of specific use cases of these spaces. The call is open to all sectors except tourism, which has its own call. Beneficiaries may be single entities with their own legal personality, tax domicile in the European Union, and an establishment or branch located in Spain. For the demonstration centres line, they must also be associative in nature or representative of the value chains of the productive sectors, whether in territorial areas or in scientific or technological domains.

Infographic-summary

The following infographics show the key information on this call for proposals:

Would you like more information?

- Access the grant portal for applications at the following link. On the portal you will find the regulatory bases and the call for applications, a summary of its content, documentation and informative material with presentations and videos, as well as a complete list of questions and answers. For help with the call and the application procedure, write to espaciosdedatos@digital.gob.es. From this portal you can also access the electronic office to submit your application.

- Quick guide to the call for proposals in PDF + downloadable infographics (on the Sectoral Data Program and Technical Information)

- Link to other documents of interest:

- Additional information on the data space concept