In the public sector ecosystem, subsidies represent one of the most important mechanisms for promoting projects, companies and activities of general interest. However, understanding how these funds are distributed, which agencies call for the largest grants or how the budget varies according to the region or beneficiaries is not trivial when working with hundreds of thousands of records.

Along these lines, we present a new practical exercise in the series "Step-by-step data exercises", in which we will learn how to explore and model open data using Apache Spark, one of the most widely used platforms for distributed processing and large-scale machine learning.

In this lab we will work with real data from the National System for the Publicity of Subsidies and Public Aid (BDNS) and build a model capable of predicting the budget range of new calls based on their main characteristics.

All the code used is available in the corresponding GitHub repository so that you can run it, understand it, and adapt it to your own projects.

Access the datalab repository on GitHub

Run the data pre-processing code on Google Colab

Context: why analyze public subsidies?

The BDNS collects detailed information on hundreds of thousands of calls published by different Spanish administrations: from ministries and regional ministries to provincial councils and city councils. This dataset is an extraordinarily valuable source for:

- analysing the evolution of public spending,

- understanding which bodies are most active in certain areas,

- identifying patterns in the types of beneficiaries,

- and studying the budget distribution by sector or territory.

In our case, we will use the dataset to address a very specific question, but of great practical interest:

Can we predict the budget range of a call based on its administrative characteristics?

This capability would facilitate initial classification, decision-making support or comparative analysis within a public administration.

Objective of the exercise

The objective of the laboratory is twofold:

- Learn how to use Spark in a practical way:

  - Load a real high-volume dataset

  - Perform transformations and cleaning

  - Manipulate categorical and numeric columns

  - Structure a machine learning pipeline

- Build a predictive model

We will train a classifier capable of estimating which of three budget ranges a call belongs to: low (up to €20k), medium (between €20k and €150k) or high (greater than €150k), based on variables such as:
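
As a minimal sketch of how the three target classes could be derived from a budget figure, the function below applies the thresholds stated above. The function name and the treatment of the exact boundary values (€20k counted as low, €150k counted as medium) are assumptions made for illustration; the repository may label the data differently.

```python
def budget_range(amount_eur):
    """Map a call's budget in euros to one of the three target labels."""
    if amount_eur <= 20_000:       # "up to EUR 20k"
        return "low"
    if amount_eur <= 150_000:      # "between EUR 20k and EUR 150k"
        return "medium"
    return "high"                  # "greater than EUR 150k"


labels = [budget_range(x) for x in (5_000, 20_000, 75_000, 150_000, 400_000)]
print(labels)  # ['low', 'low', 'medium', 'medium', 'high']
```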

- Granting body

- Autonomous community

- Type of beneficiary

- Year of publication

- Administrative descriptions

Resources used

To complete this exercise we use:

Analytical tools

- Python, the main language of the project

- Google Colab, to run Spark and create Notebooks in a simple way

- PySpark, for data processing in the cleaning and modeling stages

- Pandas, for small auxiliary operations

- Plotly, for some interactive visualizations

Data

Official dataset of the National System for the Publicity of Subsidies and Public Aid (BDNS), downloaded from the subsidy portal of the Ministry of Finance.

The data used in this exercise were downloaded on August 28, 2025. The reuse of data from the National System for the Publicity of Subsidies and Public Aid is subject to the legal conditions set out in https://www.infosubvenciones.es/bdnstrans/GE/es/avisolegal.

Development of the exercise

The project is divided into several phases, following the natural flow of a real Data Science case.

5.1. Data Dump and Transformation

In this first section we automatically download the subsidy dataset from the API of the portal of the National System for the Publicity of Subsidies and Public Aid (BDNS). We then transform the data into an optimized format such as Parquet (a columnar data format) to facilitate its exploration and analysis.

In this process we will use some complex concepts, such as:

- Asynchronous functions: they allow two or more independent operations to run concurrently rather than one after another, which makes the process more efficient.

- Rotating writer: when the amount of information written exceeds a set limit, the current file is closed and a new one is opened with the next auto-incremental index. This avoids producing files that are too large and improves efficiency.
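
The two ideas above can be sketched together in a few lines of standard-library Python. This is only an illustration under stated assumptions: `fetch_page` is a hypothetical stand-in that simulates a request instead of calling the real BDNS API, the class name `RotatingJsonlWriter` and the JSON Lines output are invented for the example, and the record limit is deliberately tiny so that the rotation is visible.

```python
import asyncio
import json
import tempfile
from pathlib import Path


class RotatingJsonlWriter:
    """Write records as JSON lines, opening a new file every `max_records` records."""

    def __init__(self, directory, prefix="part", max_records=1000):
        self.directory = Path(directory)
        self.prefix = prefix
        self.max_records = max_records
        self.index = 0       # auto-incremental index of the next file
        self.count = 0       # records written to the current file
        self.handle = None

    def _open_next(self):
        if self.handle is not None:
            self.handle.close()
        path = self.directory / f"{self.prefix}-{self.index:05d}.jsonl"
        self.handle = path.open("w", encoding="utf-8")
        self.index += 1
        self.count = 0

    def write(self, record):
        # Rotate when no file is open yet or the current one is full.
        if self.handle is None or self.count >= self.max_records:
            self._open_next()
        self.handle.write(json.dumps(record, ensure_ascii=False) + "\n")
        self.count += 1

    def close(self):
        if self.handle is not None:
            self.handle.close()
            self.handle = None


async def fetch_page(page, page_size=4):
    """Hypothetical stand-in for an HTTP request: returns fake records for one page."""
    await asyncio.sleep(0.01)  # simulates network latency
    return [{"page": page, "row": i} for i in range(page_size)]


async def download(pages, out_dir, max_records):
    # Launch all page requests concurrently and wait for them together.
    results = await asyncio.gather(*(fetch_page(p) for p in range(pages)))
    writer = RotatingJsonlWriter(out_dir, max_records=max_records)
    for rows in results:
        for row in rows:
            writer.write(row)
    writer.close()
    return sorted(p.name for p in Path(out_dir).glob("*.jsonl"))


out_dir = tempfile.mkdtemp()
files = asyncio.run(download(pages=3, out_dir=out_dir, max_records=5))
print(files)  # ['part-00000.jsonl', 'part-00001.jsonl', 'part-00002.jsonl']
```

With 12 simulated records and a limit of 5 per file, the writer produces three files holding 5, 5 and 2 records. In a real download the limit would be far larger, and each closed file could then be converted to Parquet.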

Figure 1. Screenshot of the API of the National System for the Publicity of Subsidies and Public Aid

5.2. Exploratory analysis

The aim of this phase is to get a first idea of the characteristics of the data and its quality.

We will analyze, among others, aspects such as:

- Which types of subsidies have the highest number of calls.

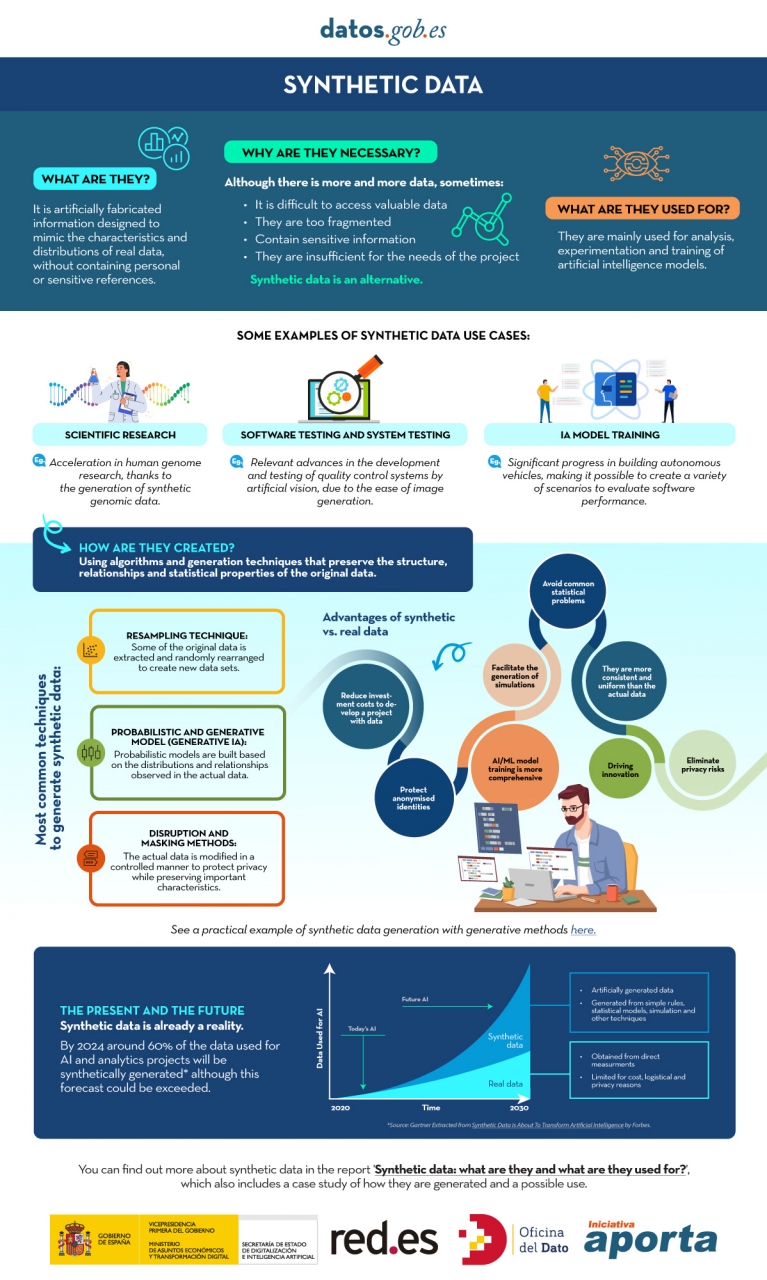

Figure 2. Types of grants with the highest number of calls for applications.

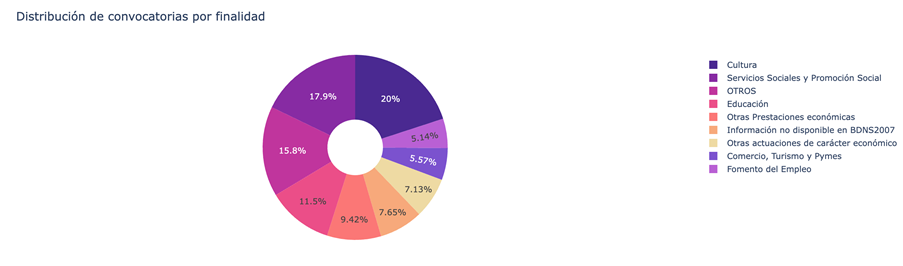

- What is the distribution of subsidies according to their purpose (i.e. Culture, Education, Promotion of employment...).

Figure 3. Distribution of grants according to their purpose.

- Which purposes account for the largest budget volume.

Figure 4. Purposes with the largest budgetary volume.

5.3. Modelling: construction of the budget classifier

At this point, we enter the most analytical part of the exercise: teaching a machine to predict whether a new call will have a low, medium or high budget based on its administrative characteristics. To achieve this, we designed a complete machine learning pipeline in Spark that allows us to transform the data, train the model, and evaluate it in a uniform and reproducible way.

First, we prepare all the variables – many of them categorical, such as the convening body – so that the model can interpret them. We then combine all that information into a single vector that serves as the starting point for the learning phase.
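
To make this preparation step concrete, here is a plain-Python sketch of the idea: each category is mapped to an integer index, the index is expanded into a one-hot vector, and everything is concatenated into a single feature vector. In Spark this kind of work is commonly done with pipeline stages such as `StringIndexer`, `OneHotEncoder` and `VectorAssembler`; the code below does not use Spark, the sample rows are invented, and the repository may encode the variables differently.

```python
from collections import Counter

# Invented sample rows, for illustration only.
calls = [
    {"body": "Ministry A", "region": "Madrid", "year": 2023},
    {"body": "City Council B", "region": "Andalusia", "year": 2024},
    {"body": "Ministry A", "region": "Andalusia", "year": 2024},
]


def build_index(values):
    """Assign an integer to each category, most frequent first (ties alphabetical)."""
    counts = Counter(values)
    ordered = sorted(counts, key=lambda v: (-counts[v], v))
    return {value: i for i, value in enumerate(ordered)}


def one_hot(index, size):
    """Vector of zeros with a single 1.0 at the category's position."""
    vec = [0.0] * size
    vec[index] = 1.0
    return vec


body_index = build_index(c["body"] for c in calls)
region_index = build_index(c["region"] for c in calls)


def to_features(call):
    """Concatenate the encoded categorical columns and the numeric column."""
    return (
        one_hot(body_index[call["body"]], len(body_index))
        + one_hot(region_index[call["region"]], len(region_index))
        + [float(call["year"])]
    )


features = [to_features(c) for c in calls]
print(features[0])  # [1.0, 0.0, 0.0, 1.0, 2023.0]
```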

With that foundation built, we train a classification model that learns to distinguish subtle patterns in the data: which agencies tend to publish larger calls or how specific administrative elements influence the size of a grant.

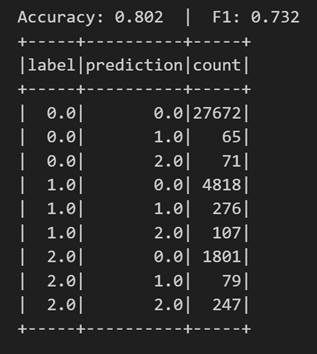

Once the model is trained, we analyze its performance from different angles. We evaluate its ability to correctly classify the three budget ranges and examine its behavior using metrics such as accuracy and the confusion matrix.
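
For readers unfamiliar with these two metrics, the following sketch computes them by hand for the three classes. The label lists are invented for illustration and are not results from the exercise.

```python
LABELS = ["low", "medium", "high"]


def confusion_matrix(actual, predicted, labels=LABELS):
    """Rows are actual classes, columns are predicted classes."""
    position = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for a, p in zip(actual, predicted):
        matrix[position[a]][position[p]] += 1
    return matrix


def accuracy(matrix):
    """Share of predictions on the diagonal, i.e. correctly classified."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total


# Invented labels, not output of the real model.
actual = ["low", "low", "medium", "medium", "high", "high", "high", "low"]
predicted = ["low", "medium", "medium", "medium", "high", "medium", "high", "low"]

matrix = confusion_matrix(actual, predicted)
print(matrix)            # [[2, 1, 0], [0, 2, 0], [0, 1, 2]]
print(accuracy(matrix))  # 0.75
```

Reading the matrix row by row shows where the errors concentrate: in this toy case, every mistake is a call wrongly assigned to the medium range.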

Figure 5. Accuracy metrics.

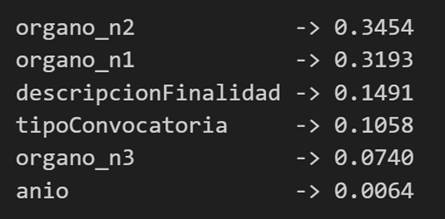

But we do not stop there: we also study which variables have had the greatest weight in the decisions of the model, which allows us to understand which factors seem most decisive when it comes to anticipating the budget of a call.

Figure 6. Variables that have had the greatest weight in the model's decisions.

Conclusions of the exercise

This laboratory will allow us to see how Spark simplifies the processing and modelling of high-volume data, especially useful in environments where administrations generate thousands of records per year, and to better understand the subsidy system after analysing some key aspects of the organisation of these calls.

Do you want to do the exercise?

If you're interested in learning more about using Spark and advanced public data analysis, you can access the repository and run the full Notebook step-by-step.

Content created by Juan Benavente, senior industrial engineer and expert in technologies related to the data economy. The content and views expressed in this publication are the sole responsibility of the author.

We live in an age where more and more phenomena in the physical world can be observed, measured, and analyzed in real time. The temperature of a crop, the air quality of a city, the state of a dam, the flow of traffic or the energy consumption of a building are no longer data that are occasionally reviewed: they are continuous flows of information that are generated second by second.

This revolution would not be possible without cyber-physical systems (CPS), a technology that integrates sensors, algorithms and actuators to connect the physical world with the digital world. But CPS does not only generate data: it can also be fed by open data, multiplying its usefulness and enabling evidence-based decisions.

In this article, we will explore what CPS is, how it generates massive data in real time, what challenges it poses to turn that data into useful public information, what principles are essential to ensure its quality and traceability, and what real-world examples demonstrate the potential for its reuse. We will close with a reflection on the impact of this combination on innovation, citizen science and the design of smarter public policies.

What are cyber-physical systems?

A cyber-physical system is a tight integration between digital components – such as software, algorithms, communication and storage – and physical components – sensors, actuators, IoT devices or industrial machines. Its main function is to observe the environment, process information and act on it.

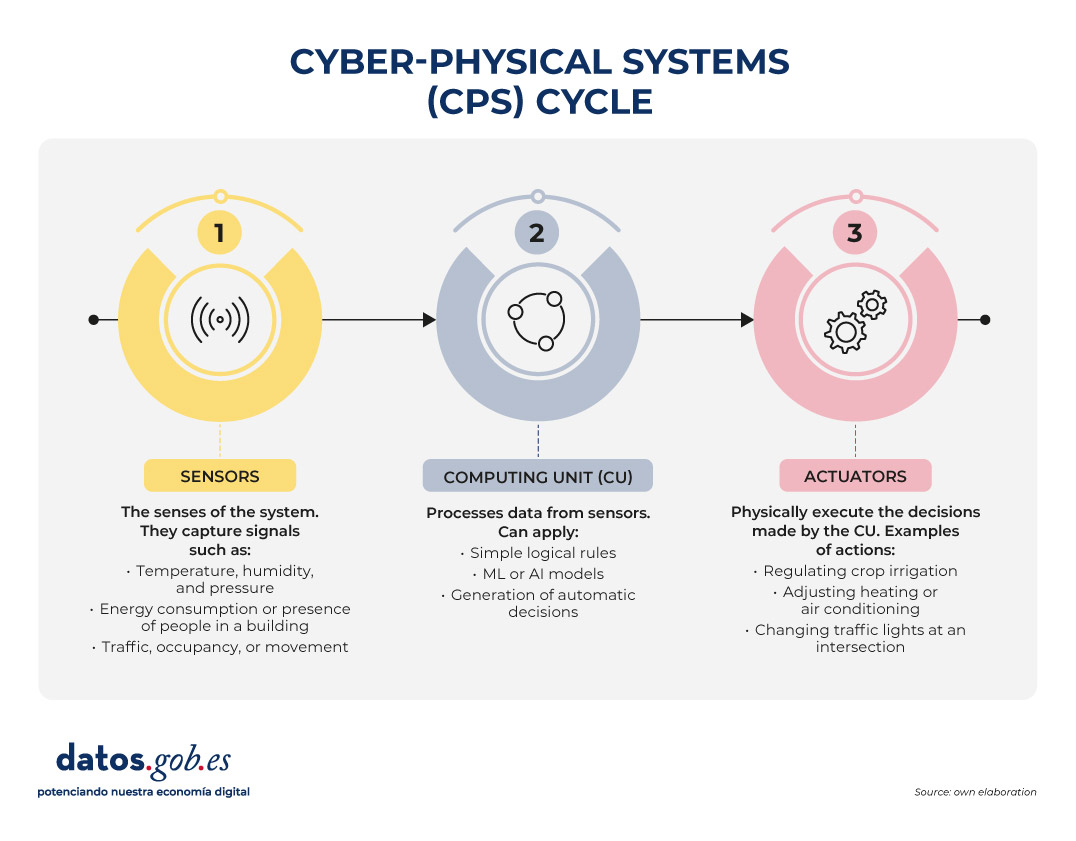

Unlike traditional monitoring systems, a CPS is not limited to measuring: it closes a complete loop between perception, decision, and action. This cycle can be understood through three main elements:

Figure 1. Cyber-physical systems cycle. Source: own elaboration

An everyday example that illustrates this complete cycle of perception, decision and action very well is smart irrigation, which is increasingly present in precision agriculture and home gardening systems. In this case, sensors distributed throughout the terrain continuously measure soil moisture, ambient temperature, and even solar radiation. All this information flows to the computing unit, which analyzes the data, compares it with previously defined thresholds or with more complex models – for example, those that estimate the evaporation of water or the water needs of each type of plant – and determines whether irrigation is really necessary.

When the system concludes that the soil has reached a critical level of dryness, the third element of the CPS comes into play: the actuators. They open the valves, activate the water pump or regulate the flow rate, and they do so for exactly the time needed to return the moisture to optimal levels. If conditions change (if it starts raining, if the temperature drops, or if the soil recovers moisture faster than expected), the system itself adjusts its behavior accordingly.

This whole process happens without human intervention, autonomously. The result is a more sustainable use of water, better cared for plants and a real-time adaptability that is only possible thanks to the integration of sensors, algorithms and actuators characteristic of cyber-physical systems.
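
The perception, decision and action loop of this irrigation example can be sketched as follows. All names, thresholds and the linear watering rule are assumptions chosen for illustration; a real controller would rely on calibrated sensors and agronomic models.

```python
from dataclasses import dataclass


@dataclass
class Reading:
    """Perception: what the sensors report at one moment."""
    soil_moisture: float  # percent
    raining: bool


def decide(reading, dry_threshold=25.0, target=35.0):
    """Decision: minutes of irrigation needed (0 means keep the valve closed)."""
    if reading.raining or reading.soil_moisture >= dry_threshold:
        return 0
    # Assumed rule: one minute of watering per moisture point below the target.
    return round(target - reading.soil_moisture)


class Valve:
    """Stand-in actuator that only records the commands it receives."""

    def __init__(self):
        self.log = []

    def open_for(self, minutes):
        self.log.append(minutes)


def control_step(reading, valve):
    minutes = decide(reading)
    if minutes > 0:
        valve.open_for(minutes)  # action
    return minutes


valve = Valve()
readings = [Reading(40.0, False), Reading(20.0, False), Reading(18.0, True)]
actions = [control_step(r, valve) for r in readings]
print(actions)    # [0, 15, 0]
print(valve.log)  # [15]
```

The third reading is drier than the second, yet no water is released because it is raining: the decision depends on the combined state, not on a single sensor.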

CPS as real-time data factories

One of the most relevant characteristics of cyber-physical systems is their ability to generate data continuously, massively and with a very high temporal resolution. This constant production can be seen in many day-to-day situations:

- A hydrological station can record level and flow every minute.

- An urban mobility sensor can generate hundreds of readings per second.

- A smart meter records electricity consumption every few minutes.

- An agricultural sensor measures humidity, salinity, and solar radiation several times a day.

- A mapping drone captures GPS positions with decimetre-level accuracy in real time.

Beyond these specific examples, the important thing is to understand what this capability means for the system as a whole: CPS become true data factories, and in many cases come to function as digital twins of the physical environment they monitor. This almost instantaneous equivalence between the real state of a river, a crop, a road or an industrial machine and its digital representation allows us to have an extremely accurate and up-to-date portrait of the physical world, practically at the same time as the phenomena occur.

This wealth of data opens up a huge field of opportunity when published as open information. Data from CPS can drive innovative services developed by companies, fuel high-impact scientific research, empower citizen science initiatives that complement institutional data, and strengthen transparency and accountability in the management of public resources.

However, for all this value to really reach citizens and the reuse community, it is necessary to overcome a series of technical, organisational and quality challenges that determine the final usefulness of open data. Below, we look at what those challenges are and why they are so important in an ecosystem that is increasingly reliant on real-time generated information.

The challenge: from raw data to useful public information

Just because a CPS generates data does not mean that it can be published directly as open data. Before reaching the public and reusers, the information needs prior preparation, validation, filtering and documentation. Administrations must ensure that such data is understandable, interoperable and reliable. And along the way, several challenges appear.

One of the first is standardization. Each manufacturer, sensor and system can use different formats, different sample rates or its own structures. If these differences are not harmonized, what we obtain is a mosaic that is difficult to integrate. For data to be interoperable, common models, homogeneous units, coherent structures, and shared standards are needed. Regulations such as INSPIRE or the OGC (Open Geospatial Consortium) and IoT-TS standards are key so that data generated in one city can be understood, without additional transformation, in another administration or by any reuser.

The next big challenge is quality. Sensors can fail, freeze and keep reporting the same value, generate physically impossible readings, suffer electromagnetic interference or be poorly calibrated for weeks without anyone noticing. If this information is published as is, without a prior review and cleaning process, the open data loses value and can even lead to errors. Validation, with automatic checks and periodic review, is therefore indispensable.

Another critical point is contextualization. An isolated piece of information is meaningless. A "12.5" says nothing if we don't know if it's degrees, liters or decibels. A measurement of "125 ppm" is useless if we do not know what substance is being measured. Even something as seemingly objective as coordinates needs a specific frame of reference. And any environmental or physical data can only be properly interpreted if it is accompanied by the date, time, exact location and conditions of capture. This is all part of metadata, which is essential for third parties to be able to reuse information unambiguously.

It's also critical to address privacy and security. Some CPS can capture information that, directly or indirectly, could be linked to sensitive people, property, or infrastructure. Before publishing the data, it is necessary to apply anonymization processes, aggregation techniques, security controls and impact assessments that guarantee that the open data does not compromise rights or expose critical information.

Finally, there are operational challenges such as refresh rate and robustness of data flow. Although CPS generates information in real time, it is not always appropriate to publish it with the same granularity: sometimes it is necessary to aggregate it, validate temporal consistency or correct values before sharing it. Similarly, for data to be useful in technical analysis or in public services, it must arrive without prolonged interruptions or duplication, which requires a stable infrastructure and monitoring mechanisms.
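
As a small illustration of publishing at a coarser granularity than the one captured, the sketch below removes duplicated timestamps and averages readings per minute. The input format (pairs of epoch seconds and value) and the function name are assumptions made for the example.

```python
from collections import defaultdict
from statistics import mean


def aggregate_per_minute(readings):
    """readings: iterable of (epoch_seconds, value). Returns {minute_start: mean value}."""
    unique = dict(readings)  # duplicated timestamps collapse; the last value wins
    buckets = defaultdict(list)
    for ts, value in unique.items():
        buckets[ts - ts % 60].append(value)
    return {minute: mean(values) for minute, values in sorted(buckets.items())}


# The reading at second 20 arrives twice and must be counted once.
raw = [(0, 10.0), (20, 12.0), (20, 12.0), (40, 17.0), (60, 20.0), (90, 22.0)]
per_minute = aggregate_per_minute(raw)
print(per_minute)  # {0: 13.0, 60: 21.0}
```

Without the deduplication step, the first minute would average 12.75 instead of 13.0, which shows how a duplicated message can silently bias a published value.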

Quality and traceability principles needed for reliable open data

Once these challenges have been overcome, the publication of data from cyber-physical systems must be based on a series of principles of quality and traceability. Without them, information loses value and, above all, loses trust.

The first is accuracy. The data must faithfully represent the phenomenon it measures. This requires properly calibrated sensors, regular checks, removal of clearly erroneous values, and checking that readings are within physically possible ranges. A sensor that reads 200°C at a weather station or a meter that records the same consumption for 48 hours are signs of a problem that needs to be detected before publication.
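
Both warning signs mentioned above can be detected with simple automatic checks. The following sketch flags readings outside a plausible range and runs of identical consecutive values; the limits and the minimum run length are assumptions that would have to be tuned for each sensor type.

```python
def out_of_range(values, low, high):
    """Indices of readings outside the physically plausible interval [low, high]."""
    return [i for i, v in enumerate(values) if not (low <= v <= high)]


def stuck_runs(values, min_length):
    """(start, length) of each run of identical consecutive readings of at least min_length."""
    runs, start = [], 0
    for i in range(1, len(values) + 1):
        # A run ends at the end of the series or when the value changes.
        if i == len(values) or values[i] != values[start]:
            if i - start >= min_length:
                runs.append((start, i - start))
            start = i
    return runs


temps = [18.2, 18.9, 200.0, 19.4, 19.4, 19.4, 19.4, 20.1]
print(out_of_range(temps, low=-50.0, high=60.0))  # [2]
print(stuck_runs(temps, min_length=4))            # [(3, 4)]
```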

The second principle is completeness. A dataset should indicate when there are missing values, time gaps, or periods when a sensor has been disconnected. Hiding these gaps can lead to wrong conclusions, especially in scientific analyses or in predictive models that depend on the continuity of the time series.
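
One way to make such gaps explicit is to compare consecutive timestamps with the expected sampling interval, as in this sketch. The function name and the 10-minute interval are assumptions for the example.

```python
from datetime import datetime, timedelta


def find_gaps(timestamps, expected_step, tolerance=timedelta(0)):
    """Return (previous, next, missing_count) for each interval longer than expected."""
    gaps = []
    for prev, nxt in zip(timestamps, timestamps[1:]):
        delta = nxt - prev
        if delta > expected_step + tolerance:
            missing = int(delta / expected_step) - 1
            gaps.append((prev, nxt, missing))
    return gaps


start = datetime(2025, 1, 1, 0, 0)
step = timedelta(minutes=10)
# Readings every 10 minutes, with the 00:30 and 00:40 readings missing.
stamps = [start + step * k for k in (0, 1, 2, 5, 6)]

gaps = find_gaps(stamps, step)
for prev, nxt, missing in gaps:
    print(prev.time(), "->", nxt.time(), "missing readings:", missing)
```

The detected gaps can then be published alongside the dataset, for example as explicit null values or as a note in the metadata, so that reusers do not mistake a disconnected sensor for a stable measurement.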

The third key element is traceability, i.e. the ability to reconstruct the history of the data. Knowing which sensor generated it, where it is installed, what transformations it has undergone, when it was captured or if it went through a cleaning process allows us to evaluate its quality and reliability. Without traceability, trust erodes and data loses value as evidence.

Proper updating is another fundamental principle. The frequency with which information is published must be adapted to the phenomenon measured. Air pollution levels may need updates every few minutes; urban traffic, every second; hydrology, every minute or every hour depending on the type of station; and meteorological data, with variable frequencies. Publishing too frequently can generate noise; publishing too slowly can render the data useless for certain uses.

The last principle is that of rich metadata. Metadata explains the data: what it measures, how it is measured, with what unit, how accurate the sensor is, what its operating range is, where it is located, what limitations the measurement has and what this information is generated for. They are not a footnote, but the piece that allows any reuser to understand the context and reliability of the dataset. With good documentation, reuse isn't just possible: it skyrockets.

Examples: CPS that reuses public data to be smarter

In addition to generating data, many cyber-physical systems also consume public data to improve their performance. This feedback makes open data a central resource for the functioning of smart territories. When a CPS integrates information from its own sensors with external open sources, its anticipation, efficiency, and accuracy capabilities are dramatically increased.

Precision agriculture: In agriculture, sensors installed in the field allow variables such as soil moisture, temperature or solar radiation to be measured. However, smart irrigation systems do not rely solely on this local information: they also incorporate weather forecasts from AEMET, open IGN maps on slope or soil types, and climate models published as public data. By combining their own measurements with these external sources, agricultural CPS can determine much more accurately which areas of the land need water, when to plant, and how much moisture should be maintained in each crop. This fine management allows water and fertilizer savings that, in some cases, exceed 30%.

Water management: Something similar happens in water management. A cyber-physical system that controls a dam or irrigation canal needs to know not only what is happening at that moment, but also what may happen in the coming hours or days. For this reason, it integrates its own level sensors with open data on river gauging, rain and snow predictions, and even public information on ecological flows. With this expanded vision, the CPS can anticipate floods, optimize the release of the reservoir, respond better to extreme events or plan irrigation sustainably. In practice, the combination of proprietary and open data translates into safer and more efficient water management.

Impact: innovation, citizen science, and data-driven decisions

The union between cyber-physical systems and open data generates a multiplier effect that is manifested in different areas.

- Business innovation: Companies have fertile ground to develop solutions based on reliable and real-time information. From open data and CPS measurements, smarter mobility applications, water management platforms, energy analysis tools, or predictive systems for agriculture can emerge. Access to public data lowers barriers to entry and allows services to be created without the need for expensive private datasets, accelerating innovation and the emergence of new business models.

- Citizen science: the combination of CPS and open data also strengthens social participation. Neighbourhood communities, associations or environmental groups can deploy low-cost sensors to complement public data and better understand what is happening in their environment. This gives rise to initiatives that measure noise in school zones, monitor pollution levels in specific neighbourhoods, follow the evolution of biodiversity or build collaborative maps that enrich official information.

- Better public decision-making: finally, public managers benefit from this strengthened data ecosystem. The availability of reliable and up-to-date measurements makes it possible to design low-emission zones, plan urban transport more effectively, optimise irrigation networks, manage drought or flood situations or regulate energy policies based on real indicators. Without open data that complements and contextualizes the information generated by the CPS, these decisions would be less transparent and, above all, less defensible to the public.

In short, cyber-physical systems have become an essential piece for understanding and managing the world around us. Thanks to them, we can measure phenomena in real time, anticipate changes and act in a precise and automated way. But their true potential unfolds when their data is integrated into a quality open data ecosystem, capable of providing context, enriching decisions and multiplying uses.

The combination of CPS and open data allows us to move towards smarter territories, more efficient public services and more informed citizen participation. It provides economic value, drives innovation, facilitates research and improves decision-making in areas as diverse as mobility, water, energy or agriculture.

For all this to be possible, it is essential to guarantee the quality, traceability and standardization of the published data, as well as to protect privacy and ensure the robustness of information flows. When these foundations are well established, CPS not only measure the world: they help it improve, becoming a solid bridge between physical reality and shared knowledge.

Content created by Dr. Fernando Gualo, Professor at UCLM and Government and Data Quality Consultant. The content and views expressed in this publication are the sole responsibility of the author.

Quantum computing promises to solve problems in hours that would take millennia for the world's most powerful supercomputers. From designing new drugs to optimizing more sustainable energy grids, this technology will radically transform our ability to address humanity's most complex challenges. However, its true democratizing potential will only be realized through convergence with open data, allowing researchers, companies, and governments around the world to access both quantum computing power in the cloud and the public datasets needed to train and validate quantum algorithms.

Trying to explain quantum theory has always been a challenge, even for the most brilliant minds humanity has produced in the last two centuries. The celebrated physicist Richard Feynman (1918-1988) put it with his trademark humor:

"There was a time when newspapers said that only twelve men understood the theory of relativity. I don't think it was ever like that [...] On the other hand, I think I can safely say that no one understands quantum mechanics."

And that was said by one of the most brilliant physicists of the twentieth century, a Nobel Prize winner and one of the fathers of quantum electrodynamics. Quantum behavior is so strange to human eyes that even Albert Einstein, in a letter written to the German physicist Max Born in 1926, expressed his disbelief about its probabilistic, non-deterministic properties with his now legendary phrase: "God does not play dice with the universe". To which Niels Bohr, another titan of twentieth-century physics, replied: "Einstein, stop telling God what to do."

Classical computing

If we want to understand why quantum mechanics proposes a revolution in computer science, we have to understand its fundamental differences from classical mechanics and, therefore, from classical computing. Almost all of us have heard of bits of information at some point in our lives. Humans have developed a way of performing complex mathematical calculations by reducing all information to bits, the fundamental units of information with which a machine knows how to work, which are the famous zeros and ones (0 and 1). With two simple values, we have been able to model our entire mathematical world. And why? Some will ask. Why base 2 and not 5 or 7? Well, in the classical physical world in which we live day to day, differentiating between 0 and 1 is relatively simple: on and off, as in the case of an electrical switch, or north and south magnetization, in the case of a magnetic hard drive. For a binary world, we have developed an entire coding language based on two states: 0 and 1.

Quantum computing

In quantum computing, instead of bits, we use qubits. Qubits exploit several "strange" properties of quantum mechanics that allow them to hold a combination of zero and one at the same time, with a continuous range of possible combinations, rather than just one of the two values of a classical bit. By way of analogy, it is as if a bit could only represent a light bulb that is on or off, while a qubit can represent all of the bulb's illumination intensities. This property is known as "quantum superposition" and allows a quantum computer to explore millions of possible solutions at the same time. But this is not all there is to quantum computing. If quantum superposition seems strange to you, wait until you see quantum entanglement. Thanks to this property, two "entangled" particles (or two qubits) are connected "at a distance" so that the state of one determines the state of the other. So, with these two properties we have qubits that can represent a continuum of states and are connected to each other. Such a system potentially has an exponentially greater computing capacity than our computers based on classical computing.
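
Both properties can be illustrated with a tiny classical simulation of two qubits. The sketch below stores the four amplitudes of the joint state, puts the first qubit into superposition with a Hadamard gate and then entangles the pair with a CNOT gate. It is a textbook toy written for this article, not code for a real quantum device, and the function names are invented.

```python
import math

# Two-qubit state as four amplitudes for |00>, |01>, |10>, |11>.
state = [1.0, 0.0, 0.0, 0.0]  # both qubits start at 0


def hadamard_on_first(s):
    """Put the first qubit into an equal superposition of 0 and 1."""
    h = 1 / math.sqrt(2)
    return [
        h * (s[0] + s[2]),
        h * (s[1] + s[3]),
        h * (s[0] - s[2]),
        h * (s[1] - s[3]),
    ]


def cnot_first_controls_second(s):
    """Flip the second qubit when the first is 1: swaps the |10> and |11> amplitudes."""
    return [s[0], s[1], s[3], s[2]]


def probabilities(s):
    """Probability of measuring each of the four outcomes."""
    return [abs(a) ** 2 for a in s]


superposed = hadamard_on_first(state)
bell = cnot_first_controls_second(superposed)

print([round(p, 3) for p in probabilities(superposed)])  # [0.5, 0.0, 0.5, 0.0]
print([round(p, 3) for p in probabilities(bell)])        # [0.5, 0.0, 0.0, 0.5]
```

In the final state only the outcomes 00 and 11 are possible, each with probability 0.5: measuring one qubit fixes the result of the other, which is the signature of entanglement. Note also that a classical simulation needs 2^n amplitudes for n qubits, which is why it quickly becomes impractical as the number of qubits grows.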

Two application cases of quantum computing

1. Drug discovery and personalized medicine. Quantum computers can simulate complex molecular interactions that are impossible to compute with classical computing. For example, protein folding – fundamental to understanding diseases such as Alzheimer's – requires analyzing trillions of possible configurations. A quantum computer could cut years of research down to weeks, speeding up the development of new drugs and personalized treatments based on each patient's genetic profile.

2. Logistics optimization and climate change. Companies like Volkswagen already use quantum computing to optimize traffic routes in real time. On a larger scale, these systems could revolutionize the energy management of entire cities by optimizing smart grids that integrate renewables efficiently, or help design new materials for CO2 capture that contribute to combating climate change.

A good read recommended for a complete review of quantum computing here.

The role of open data (and computing resources)

The democratization of access to quantum computing will depend crucially on two pillars: open computing resources and quality public datasets. This combination is creating an ecosystem where quantum innovation no longer requires millions of dollars in infrastructure. Here are some options available for each of these pillars.

- Free access to real quantum hardware:

  - IBM Quantum Platform: provides free monthly access to quantum systems of more than 100 qubits for anyone in the world. With more than 400,000 registered users who have generated more than 2,800 scientific publications, it demonstrates how open access accelerates research. Any researcher can sign up for the platform and start experimenting in minutes.

  - Open Quantum Institute (OQI): launched at CERN (the European Organization for Nuclear Research) in 2024, it goes further, providing not only access to quantum computing but also mentoring and educational resources for underserved regions. Its hackathon program in 2025 includes events in Lebanon, the United Arab Emirates, and other countries, specifically designed to mitigate the quantum digital divide.

- Public datasets for the development of quantum algorithms:

  - QDataSet: offers 52 public datasets with simulations of one- and two-qubit quantum systems, freely available for training quantum machine learning (ML) algorithms. Researchers without the resources to generate their own simulation data can access its repository on GitHub and start developing algorithms immediately.

  - ClimSim: a public climate-modeling dataset that is already being used to demonstrate the first quantum ML algorithms applied to climate change. It allows any team, regardless of its budget, to work on real climate problems using quantum computing.

  - PennyLane Datasets: an open collection of molecules, quantum circuits, and physical systems that allows pharmaceutical startups lacking the resources to run expensive simulations to experiment with quantum-assisted drug discovery.

Real cases of inclusive innovation

The possibilities that open data offers quantum computing are evident in various use cases, the result of specific research and calls for grants, such as:

- The Government of Canada launched in 2022 "Quantum Computing for Climate", a specific call for SMEs and startups to develop quantum applications using public climate data, demonstrating how governments can catalyze innovation by providing both data and financing for its use.

- The UK Quantum Catalyst Fund (£15 million) funds projects that combine quantum computing with public data from the UK's National Health Service (NHS) for problems such as optimising energy grids and medical diagnostics, creating solutions of public interest verifiable by the scientific community.

- The Open Quantum Institute's (OQI) 2024 report details 10 use cases for the UN Sustainable Development Goals developed collaboratively by experts from 22 countries, where the results and methodologies are publicly accessible, allowing any institution to replicate or improve this work.

- Red.es has opened an expression of interest aimed at agents in the quantum technologies ecosystem to collect ideas, proposals and needs that contribute to the design of the future lines of action of the National Strategy for Quantum Technologies 2025–2030, financed with 40 million euros from the ERDF Funds.

Current State of Quantum Computing

We are in the NISQ (Noisy Intermediate-Scale Quantum) era, a term coined by physicist John Preskill in 2018, which describes quantum computers with 50-100 physical qubits. These systems are powerful enough to perform certain calculations beyond classical capabilities, but they suffer from decoherence and frequent errors that make them unviable for commercial applications.

IBM, Google, and startups like IonQ offer cloud access to their quantum systems. IBM has provided public access through the IBM Quantum Platform since 2016, when it offered one of the first publicly accessible quantum processors connected to the cloud.

In 2019, Google achieved "quantum supremacy" with its 53-qubit Sycamore processor, which performed a calculation in about 200 seconds that, by Google's estimate, would take a state-of-the-art classical supercomputer about 10,000 years.

The latest independent analyses suggest that practical quantum applications may emerge around 2035-2040, assuming continued exponential growth in quantum hardware capabilities. IBM has committed to delivering a large-scale fault-tolerant quantum computer, IBM Quantum Starling, by 2029, with the goal of running quantum circuits comprising 100 million quantum gates on 200 logical qubits.

The Global Race for Quantum Leadership

International competition for dominance in quantum technologies has triggered an unprecedented wave of investment. According to McKinsey, up to 2022 the officially recognized level of public investment in China (15,300 million dollars) exceeded that of the European Union (7,200 million dollars), the United States (1,900 million dollars) and Japan (1,800 million dollars) combined.
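The comparison can be checked with simple arithmetic. The short sketch below uses only the four figures quoted in the paragraph (in millions of US dollars); the variable names are illustrative.

```python
# Public investment in quantum technologies up to 2022, in millions of US dollars,
# as quoted in the paragraph above (figures attributed to McKinsey).
investment_musd = {
    "China": 15_300,
    "European Union": 7_200,
    "United States": 1_900,
    "Japan": 1_800,
}

# Combined total of the European Union, the United States and Japan.
others_combined = sum(v for k, v in investment_musd.items() if k != "China")

# Difference between China's figure and that combined total.
gap = investment_musd["China"] - others_combined

print(f"EU + US + Japan: {others_combined:,} million dollars")
print(f"China exceeds that total by {gap:,} million dollars")
```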

At the national level, the UK has committed £2.5 billion over ten years to its National Quantum Strategy to make the country a global hub for quantum computing, and Germany has made one of the largest strategic investments in quantum computing, allocating €3 billion under its economic stimulus plan.

Investment in the first quarter of 2025 shows explosive growth: quantum computing companies raised more than $1.25 billion, more than double the previous year, an increase of 128%, reflecting a growing confidence that this technology is approaching commercial relevance.

To end the section, a fantastic short interview with Ignacio Cirac, one of the "Spanish fathers" of quantum computing.

Quantum Spain Initiative

In the case of Spain, 60 million euros have been invested in Quantum Spain, coordinated by the Barcelona Supercomputing Center. The project includes:

- Installation of the first quantum computer in southern Europe.

- Network of 25 research nodes distributed throughout the country.

- Training of quantum talent in Spanish universities.

- Collaboration with the business sector for real-world use cases.

This initiative positions Spain as a quantum hub in southern Europe, crucial for not being technologically dependent on other powers.

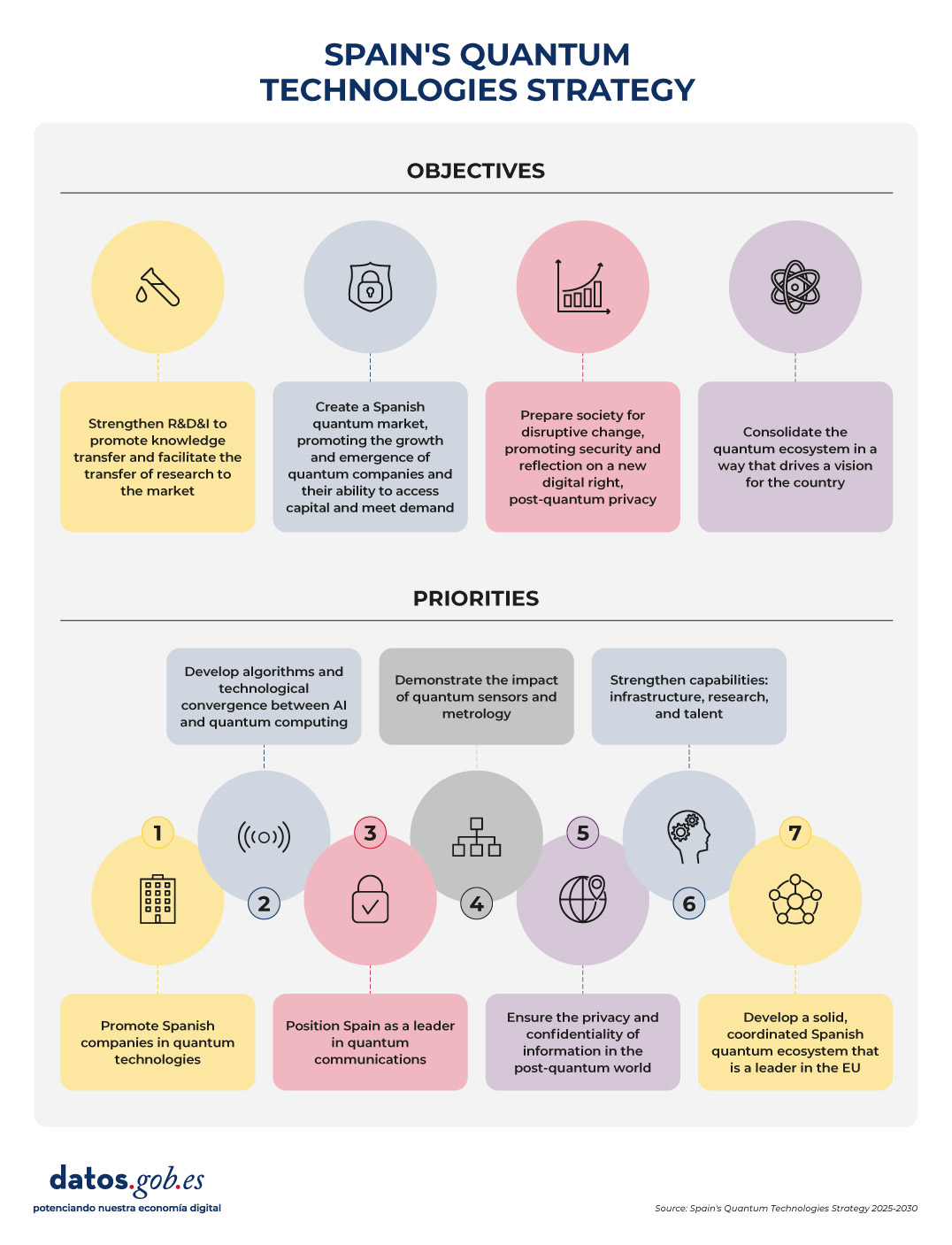

In addition, Spain's Quantum Technologies Strategy has recently been presented with an investment of 800 million euros. This strategy is structured into 4 strategic objectives and 7 priority actions.

Strategic objectives:

- Strengthen R+D+I to promote the transfer of knowledge and facilitate research reaching the market.

- Create a Spanish quantum market, promoting the growth and emergence of quantum companies and their ability to access capital and meet demand.

- Prepare society for disruptive change, promoting security and reflection on a new digital right, post-quantum privacy.

- Consolidate the quantum ecosystem in a way that drives a vision of the country.

Priority actions:

- Priority 1: Promote Spanish companies in quantum technologies.

- Priority 2: Develop algorithms and technological convergence between AI and Quantum.

- Priority 3: Position Spain as a benchmark in quantum communications.

- Priority 4: Demonstrate the impact of quantum sensing and metrology.

- Priority 5: Ensure the privacy and confidentiality of information in the post-quantum world.

- Priority 6: Strengthen capacities: infrastructure, research and talent.

- Priority 7: Develop a solid, coordinated and leading Spanish quantum ecosystem in the EU.

Figure 1. Spain's quantum technology strategy. Source: Author's own elaboration

In short, quantum computing and open data represent a major technological evolution that affects the way we generate and apply knowledge. If we can build a truly inclusive ecosystem—where access to quantum hardware, public datasets, and specialized training is within anyone's reach—we will open the door to a new era of collaborative innovation with a major global impact.

Content created by Alejandro Alija, expert in Digital Transformation and Innovation. The content and views expressed in this publication are the sole responsibility of the author.

The convergence between open data, artificial intelligence and environmental sustainability poses one of the main challenges for the digital transformation model that is being promoted at European level. This interaction is mainly materialized in three outstanding manifestations:

- The opening of high-value data directly related to sustainability, which can help the development of artificial intelligence solutions aimed at climate change mitigation and resource efficiency.

- The promotion of the so-called green algorithms in the reduction of the environmental impact of AI, which must be materialized both in the efficient use of digital infrastructure and in sustainable decision-making.

- The commitment to environmental data spaces, generating digital ecosystems where data from different sources is shared to facilitate the development of interoperable projects and solutions with a relevant impact from an environmental perspective.

Below, we will delve into each of these points.

High-value data for sustainability

Directive (EU) 2019/1024 on open data and re-use of public sector information introduced for the first time the concept of high-value datasets, defined as those with exceptional potential to generate social, economic and environmental benefits. These sets should be published free of charge, in machine-readable formats, using application programming interfaces (APIs) and, where appropriate, be available for bulk download. A number of priority categories have been identified for this purpose, including environmental and Earth observation data.

This is a particularly relevant category, as it covers data on climate, ecosystems or environmental quality, as well as data linked to the INSPIRE Directive, which spans very diverse areas such as hydrography, protected sites, energy resources, land use, mineral resources and natural hazard zones, and also includes orthoimages.

These data are particularly relevant for monitoring variables related to climate change, such as land use; for biodiversity management, taking into account the distribution of species, habitats and protected sites; for monitoring invasive species; and for assessing natural risks. Data on air quality and pollution are crucial for public and environmental health: access to them allows exhaustive analyses that inform public policies aimed at improving both. The management of water resources can also be optimized through hydrography and environmental monitoring data, so their massive and automated processing is an essential prerequisite for digitalizing water cycle management.

Combining these datasets with other quality environmental data facilitates the development of AI solutions geared towards specific climate challenges. Specifically, they allow predictive models to be trained to anticipate extreme phenomena (heat waves, droughts, floods), optimize the management of natural resources or monitor critical environmental indicators in real time. They also make it possible to promote high-impact economic projects, such as the use of AI algorithms in precision agriculture, enabling the intelligent adjustment of irrigation systems, the early detection of pests or the optimization of fertilizer use.

Green algorithms and digital responsibility: towards sustainable AI

Training and deploying AI systems, particularly general-purpose models and large language models, involves significant energy consumption. According to estimates by the International Energy Agency, data centers accounted for around 1.5% of global electricity consumption in 2024. This represents a growth of around 12% per year since 2017, more than four times faster than the rate of total electricity consumption. Data center power consumption is expected to double to around 945 TWh by 2030.
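To get a feel for what these growth rates imply, the following back-of-the-envelope sketch compounds the figures quoted above. It is not the International Energy Agency's methodology: it assumes that "double" is meant literally and that 2024 is the base year for the 2030 projection, and all variable names are illustrative.

```python
# Figures quoted in the paragraph above (International Energy Agency estimates).
annual_growth = 0.12          # around 12% per year since 2017
start_year, ref_year = 2017, 2024
projected_2030_twh = 945      # expected data center consumption by 2030

# Cumulative growth implied by compounding 12% per year from 2017 to 2024.
growth_factor = (1 + annual_growth) ** (ref_year - start_year)

# ASSUMPTION: "double" is read literally, so the 2024 level is half the 2030 figure.
implied_2024_twh = projected_2030_twh / 2

# Annual rate that would double consumption over the six years from 2024 to 2030.
implied_rate_to_2030 = 2 ** (1 / (2030 - ref_year)) - 1

print(f"Growth factor 2017-2024: x{growth_factor:.2f}")
print(f"Implied 2024 consumption: about {implied_2024_twh:.1f} TWh")
print(f"Annual rate needed to double by 2030: {implied_rate_to_2030:.1%}")
```

Under these assumptions, doubling by 2030 corresponds to an annual rate close to the roughly 12% observed since 2017, so the projection amounts to a continuation of the recent trend.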

Against this backdrop, green algorithms are an alternative that must necessarily be taken into account when it comes to minimising the environmental impact posed by the implementation of digital technology and, specifically, AI. In fact, both the European Data Strategy and the European Green Deal explicitly integrate digital sustainability as a strategic pillar. For its part, Spain has launched a National Green Algorithm Programme, framed in the 2026 Digital Agenda and with a specific measure in the National Artificial Intelligence Strategy.

One of the main objectives of the Programme is to promote the development of algorithms that minimise their environmental impact from conception (green by design), so the requirement of exhaustive documentation of the datasets used to train AI models, including origin, processing, conditions of use and environmental footprint, is essential to fulfil this aspiration. In this regard, the Commission has published a template to help general-purpose AI providers summarise the data used for the training of their models. This makes it possible to demand greater transparency which, in this context, would also facilitate traceability and responsible governance from an environmental perspective, as well as the performance of eco-audits.

The European Green Deal Data Space

The European Green Deal Data Space is one of the common European data spaces contemplated in the European Data Strategy that is at a more advanced stage, as demonstrated by the numerous initiatives and dissemination events that have been promoted around it. Traditionally, access to environmental information has been one of the fields with the most favourable regulation. The promotion of high-value data and the firm commitment to creating a European data space for the environment therefore represent a remarkable qualitative advance that reinforces an already consolidated trend.

Specifically, the data spaces model facilitates interoperability between public and private open data, reducing barriers to entry for startups and SMEs in sectors such as smart forest management, precision agriculture or, among many other examples, energy optimization. At the same time, it reinforces the quality of the data available for Public Administrations to carry out their public policies, since their own sources can be contrasted and compared with other data sets. Finally, shared access to data and AI tools can foster collaborative innovation initiatives and projects, accelerating the development of interoperable and scalable solutions.

However, the legal ecosystem of data spaces entails a complexity inherent in its own institutional configuration, since it brings together several subjects and, therefore, various interests and applicable legal regimes:

- On the one hand, public entities, which have a particularly reinforced leadership role in this area.

- On the other hand, private entities and citizens, who can not only contribute their own datasets, but also offer digital developments and tools that value data through innovative services.

- And, finally, the providers of the infrastructure necessary for interaction within the space.

Consequently, advanced governance models are essential to deal with this complexity, reinforced by technological innovation and especially AI, since the traditional approaches of legislation regulating access to environmental information are clearly too limited for this purpose.

Towards strategic convergence

The convergence of high-value open data, responsible green algorithms and environmental data spaces is shaping a new digital paradigm that is essential to address climate and ecological challenges in Europe, and that requires a legal approach that is both robust and flexible. This unique ecosystem not only allows innovation and efficiency to be promoted in key sectors such as precision agriculture or energy management, but also reinforces the transparency and quality of the environmental information available for the formulation of more effective public policies.

Beyond the current regulatory framework, it is essential to design governance models that help to interpret and apply diverse legal regimes in a coherent manner, that protect data sovereignty and, ultimately, guarantee transparency and responsibility in the access and reuse of environmental information. From the perspective of sustainable public procurement, it is essential to promote procurement processes by public entities that prioritise technological solutions and interoperable services based on open data and green algorithms, encouraging the choice of suppliers committed to environmental responsibility and transparency in the carbon footprints of their digital products and services.

Only on the basis of this approach can we aspire to make digital innovation technologically advanced and environmentally sustainable, thus aligning the objectives of the Green Deal, the European Data Strategy and the European approach to AI.

Content prepared by Julián Valero, professor at the University of Murcia and coordinator of the Innovation, Law and Technology Research Group (iDerTec). The content and views expressed in this publication are the sole responsibility of the author.

Last October, Spain hosted the OGP 2025 Global Summit, an international benchmark event on open government. More than 2,000 representatives of governments, civil society organisations and public policy experts from around the world met in Vitoria-Gasteiz to discuss the importance of maintaining open, participatory and transparent governments as pillars of society.

The location chosen for this meeting was no coincidence: Spain has been building an open government model for more than a decade that has positioned it as an international benchmark. In this article we are going to review some of the projects that have been launched in our country to transform its public administration and bring it closer to citizens.

The strategic framework: action plans and international commitments

Open government is a culture of governance that promotes the principles of transparency, integrity, accountability, and stakeholder participation in support of democracy and inclusive growth.

Spain's commitment to open government has a consolidated track record. Since Spain joined the Open Government Partnership in 2011, the country has developed five consecutive action plans that have been broadening and deepening government openness initiatives. Each plan has been an advance over the previous one, incorporating new commitments and responding to the emerging challenges of the digital society.

The V Open Government Plan (2024-2028) represents the evolution of this strategy. Its development process incorporated a co-creation methodology that involved multiple actors from civil society, public administrations at all levels and experts in the field. This participatory approach made it easier for the plan to respond to real needs and to have the support of all the sectors involved.

Justice 2030: the biggest transformation of the judicial system in decades

Under the slogan "The greatest transformation of Justice in decades", the Justice 2030 programme is proposed as a roadmap to modernise the Spanish judicial system. Its objective is to build a more accessible, efficient, sustainable and people-centred justice system, through a co-governance model that involves public administrations, legal operators and citizens.

The plan is structured around three strategic axes:

1. Accessibility and people-centred justice

This axis seeks to ensure that justice reaches all citizens, reducing territorial, social and digital gaps. Among the main measures are:

- Face-to-face and digital access and attention: promotion of more accessible judicial headquarters, both physically and technologically, with services adapted to vulnerable groups.

- Basic legal education: legal literacy initiatives for the general population, promoting understanding of the judicial system.

- Inclusive justice: mediation and restorative justice programmes, with special attention to victims and groups in vulnerable situations.

- New social realities: adaptation of the judicial system to contemporary challenges (digital violence, environmental crimes, digital rights, etc.).

2. Efficiency of the public justice service

The programme argues that technological and organisational transformation is key to a more agile and efficient justice. This second axis incorporates advances aimed at modern management and digitalization:

- Justice offices in the municipalities: creation of access points to justice in small towns, bringing judicial services closer to the territory.

- Procedural and organisational reform: updating the Criminal Procedure Law and the procedural framework to improve coordination between courts.

- Electronic judicial file: consolidation of the digital file and interoperable tools between institutions.

- Artificial intelligence and judicial data: responsible use of advanced technologies to improve file management and workload prediction.

3. Sustainable and territorially cohesive justice

The third axis seeks to ensure that judicial modernisation contributes to the Sustainable Development Goals (SDGs) and territorial cohesion.

The main lines are:

- Environmental and climate justice: promotion of legal mechanisms that favor environmental protection and the fight against climate change.

- Territorial cohesion: coordination with autonomous communities to guarantee equal access to justice throughout the country.

- Institutional collaboration: strengthening cooperation between public authorities, local entities and civil society.

The Transparency Portal: the heart of the right to know

If Justice 2030 represents the transformation of access to justice, the Transparency Portal is designed to guarantee the citizen's right to public information. This digital platform, operational since 2014, centralises information on administrative organisations and allows citizens to exercise their right of access to public information in a simple and direct way. Its main functions are:

- Proactive publication of information on government activities, budgets, contracts, grants, agreements and administrative decisions, without the need for citizens to request it.

- Information request system to access documentation that is not publicly available, with legally established deadlines for the administrative response.

- Participatory processes that allow citizens to actively participate in the design and evaluation of public policies.

- Transparency indicators that objectively measure compliance with the obligations of the different administrations, allowing comparisons and encouraging continuous improvement.

This portal is based on three fundamental rights:

- Right to know: every citizen can access public information, either through direct consultation on the portal or by formally exercising their right of access when the information is not available.

- Right to understand: information must be presented in a clear, understandable way and adapted to different audiences, avoiding unnecessary technicalities and facilitating interpretation.

- Right to participate: citizens can intervene in the management of public affairs through the citizen participation mechanisms enabled on the platform.

The platform complies with Law 19/2013, of 9 December, on transparency, access to public information and good governance, a regulation that represented a paradigm shift, recognising access to information as a fundamental right of the citizen and not as a gracious concession of the administration.

Consensus for Open Government: National Open Government Strategy

Another project advocating for open government is the "Consensus for Open Administration." According to this reference document, it is not only a matter of opening data or creating transparency portals, but of radically transforming the way in which public policies are designed and implemented. This consensus replaces the traditional vertical model, where administrations decide unilaterally, with a permanent dialogue between administrations, legal operators and citizens. The document is structured in four strategic axes:

1. Administration Open to the capacities of the public sector:

- Development of proactive, innovative and inclusive public employment.

- Responsible implementation of artificial intelligence systems.

- Creating secure and ethical shared data spaces.

2. Administration Open to evidence-informed public policies and participation:

- Development of interactive maps of public policies.

- Systematic evaluation based on data and evidence.

- Incorporation of the citizen voice in all phases of the public policy cycle.

3. Administration Open to citizens:

- Evolution of "My Citizen Folder" towards more personalized services.

- Implementation of digital tools such as SomosGob.

- Radical simplification of administrative procedures and formalities.

4. Administration Open to Transparency, Participation and Accountability:

- Complete renovation of the Transparency Portal.

- Improvement of the transparency mechanisms of the General State Administration.

- Strengthening accountability systems.

Figure 1: Consensus for Open Administration. Source: own elaboration

The Open Government Forum: a space for permanent dialogue

All these projects and commitments need an institutional space where they can be continuously discussed, evaluated and adjusted. That is precisely the function of the Open Government Forum, a body for participation and dialogue made up of representatives of the central, regional and local administrations, together with 32 members of civil society carefully selected to ensure diversity of perspectives.

This balanced composition ensures that all voices are heard in the design and implementation of open government policies. The Forum meets regularly to assess the progress of commitments, identify obstacles and propose new initiatives that respond to emerging challenges.

Its transparent and participatory operation, with public minutes and open consultation processes, makes it an international benchmark for good practices in collaborative governance. The Forum is not simply a consultative body, but a space of co-decision where consensus is built that is later translated into concrete public policies.

HazLab: innovation laboratory for citizen participation

Promoted by the General Directorate of Public Governance of the Ministry for Digital Transformation and Public Function, HazLab is part of the Plan for the Improvement of Citizen Participation in Public Affairs, included in Commitment 3 of the IV Open Government Plan of Spain (2020-2024).

HazLab is a virtual space designed to promote collaboration between the Administration, citizens, academia, professionals and social groups. Its purpose is to promote a new way of building public policies based on innovation, dialogue and cooperation. Specifically, there are three areas of work:

- Virtual spaces for collaboration, which facilitate joint work between administrations, experts and citizens.

- Projects for the design and prototyping of public services, based on participatory and innovative methodologies.

- Resource Library, a repository with audiovisual materials, articles, reports and guides on open government, participation, integrity and transparency.

Registration in HazLab is free and allows you to participate in projects, events and communities of practice. In addition, the platform offers a user manual and a code of conduct to facilitate responsible participation.

In conclusion, the open government projects that Spain is promoting represent much more than isolated initiatives of administrative modernization or technological updates. They constitute a profound cultural change in the very conception of public service, where citizens cease to be mere passive recipients of services to become active co-creators of public policies.

We live surrounded by AI-generated summaries. We have had the option of generating them for months, but now they are imposed on digital platforms as the first content that our eyes see when using a search engine or opening an email thread. On platforms such as Microsoft Teams or Google Meet, video call meetings are transcribed and summarized in automatic minutes for those who have not been able to be present, but also for those who have been there. However, is what a language model has considered important really important for the person receiving the summary?

In this new context, the key is to learn to recover the meaning behind so much summarized information. These three strategies will help you transform automatic content into an understanding and decision-making tool.

1. Ask expansive questions

We tend to summarize to reduce content that we are not able to cover, but we run the risk of equating brief with significant, an equivalence that does not always hold. Therefore, we should not focus from the beginning on summarizing, but on extracting information that is relevant for us, our context, our vision of the situation and our way of thinking. Beyond the basic prompt "give me a summary", this new way of approaching content that escapes us consists of cross-referencing data, connecting dots and suggesting hypotheses, a practice known as sensemaking. And it starts with being clear about what we want to know.

Practical situation:

Imagine a long meeting that we have not been able to attend. That afternoon, we receive by email a summary of the topics discussed. It is not always possible, but a good practice at this point is not to settle for the summary: if our organization allows it, and always respecting confidentiality guidelines, upload the full transcript to a conversational system such as Copilot or Gemini and ask specific questions:

- Which topic was repeated the most or received the most attention during the meeting?

- In a previous meeting, person X used this argument. Was it used again? Did anyone discuss it? Was it considered valid?

- What premises, assumptions or beliefs are behind this decision that has been made?

- At the end of the meeting, what elements seem most critical to the success of the project?

- What signs anticipate possible delays or blockages? Which ones have to do with or could affect my team?

Beware of:

First of all, review and confirm the attributions. Generative models are becoming more and more accurate, but they have a great ability to mix real information with false or generated information. For example, they can attribute a phrase to someone who did not say it, relate ideas as cause and effect that were not really connected, and, perhaps most importantly, assign tasks or responsibilities for next steps to the wrong person.

2. Ask for structured content

Good summaries are not shorter, but more organized, and written text is not the only format we can use. Look for efficiency and ask conversational systems to return tables, categories, decision lists or relationship maps. Form shapes thought: if you structure information well, you will understand it better and also transmit it better to others, and therefore you will go further with it.

Practical situation:

In this case, let's imagine that we have received a long report on the progress of several internal projects of our company. The document has many pages of descriptive paragraphs on status, feedback, dates, unforeseen events, risks and budgets. Reading everything line by line would be impractical, and we would not retain the information. The good practice here is to ask for a transformation of the document that is really useful to us. If possible, upload the report to the conversational system and request structured content in a demanding way and without skimping on details:

- Organize the report in a table with the following columns: project, responsible, delivery date, status, and a final column that indicates if any unforeseen event has occurred or any risk has materialized. If all goes well, print in that column "CORRECT".

- Generate a visual calendar with deliverables, their due dates, and assignees, starting on October 1, 2025 and ending on January 31, 2026, in the form of a Gantt chart.

- I want a list that only includes the name of the projects, their start date, and their due date. Sort by delivery date, closest first.

- From the customer feedback section that you will find in each project, create a table with the most repeated comments and which areas or teams they usually refer to. Place them in order, from the most repeated to the least.

- Give me the billing of the projects that are at risk of not meeting deadlines, indicate the price of each one and the total.

Beware of:

A clean, orderly, automatically generated text with sources creates an enormous illusion of veracity and completeness. A clear format, such as a table, list, or map, can give a false sense of accuracy. If the source data is incomplete or wrong, the structure only disguises the error and makes it harder to spot. AI outputs usually look almost perfect. At the very least, and especially if the document is very long, do random checks, ignoring the form and focusing on the content.

3. Connect the dots

Strategic sense is rarely in an isolated text, let alone in a summary. The advanced level in this case consists of asking the multimodal chat to cross-reference sources, compare versions or detect patterns between various materials or formats, such as the transcript of a meeting, an internal report and a scientific article. What is really interesting to see are comparative keys such as evolutionary changes, absences or inconsistencies.

Practical situation:

Let's imagine that we are preparing a proposal for a new project. We have several materials: the transcript of a management team meeting, the previous year's internal report, and a recent article on industry trends. Instead of summarizing them separately, you can upload them to the same conversation thread or chat you've customized on the topic, and ask for more ambitious actions.

- Compare these three documents and tell me which priorities coincide in all of them, even if they are expressed in different ways.

- What topics in the internal report were not mentioned at the meeting? Generate a hypothesis for each one as to why they have not been treated.

- What ideas in the article might reinforce or challenge ours? Give me ideas that are not reflected in our internal report.

- Look for articles in the press from the last six months that support the strong ideas of the internal report.

- Find external sources that complement the information missing in these three documents on topic X, and generate a panoramic report with references.

Beware of:

It is very common for AI systems to deceptively simplify complex discussions, not because they have a hidden purpose but because they have always been rewarded for simplicity and clarity in training. In addition, automatic generation introduces a risk of authority: because the text is presented with the appearance of precision and neutrality, we assume that it is valid and useful. And if that wasn't enough, structured summaries are copied and shared quickly. Before forwarding, make sure that the content is validated, especially if it contains sensitive decisions, names, or data.

AI-based models can help you visualize convergences, gaps, or contradictions and, from there, formulate hypotheses or lines of action. The aim is to find, with greater agility, those valuable findings that we call insights. That is the step from summary to analysis: the most important thing is not to compress the information, but to select it well, relate it and connect it with the context. Making more demanding prompts is the most appropriate way to work with AI systems, but it also requires a prior personal effort of analysis and grounding.

Content created by Carmen Torrijos, expert in AI applied to language and communication. The content and views expressed in this publication are the sole responsibility of the author.

Data visualization is a fundamental practice to democratize access to public information. However, creating effective graphics goes far beyond choosing attractive colors or using the latest technological tools. As Alberto Cairo, an expert in data visualization and professor at the academy of the European Open Data Portal (data.europa.eu), points out, "every design decision must be deliberate: inevitably subjective, but never arbitrary." Through a series of three webinars that you can watch again here, the expert offered innovative tips to be at the forefront of data visualization.

When working with data visualization, especially in the context of public information, it is crucial to debunk some myths ingrained in our professional culture. Phrases like "data speaks for itself," "a picture is worth a thousand words," or "show, don't tell" sound good, but they hide an uncomfortable truth: charts don't always communicate automatically.

The reality is more complex. A design professional may want to communicate something specific, but readers may interpret something completely different. How can you bridge the gap between intent and perception in data visualization? In this post, we offer some key takeaways from the training series.

A structured framework for designing with purpose

Rather than following rigid "rules" or applying predefined templates, the course proposes a framework of thinking based on five interrelated components:

- Content: the nature, origin, and limitations of the data

- People: the audience we are targeting

- Intention: the purposes we define

- Constraints: the limitations we face

- Results: how the graph is received

This holistic approach forces us to constantly ask ourselves: what do our readers really need to know? For example, when communicating information about hurricane or health emergency risks, is it more important to show exact trajectories or communicate potential impacts? The correct answer depends on the context and, above all, on the information needs of citizens.

The danger of over-aggregation

Even without losing sight of the purpose, it is important not to aggregate the information too much or to present only averages. Imagine, for example, a dataset on citizen security at the national level: an average may hide the fact that most localities are very safe, while a few with extremely high rates distort the national indicator.
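A minimal sketch of this effect in Python, assuming ten hypothetical localities whose incident rates are invented purely for illustration. It compares the mean with the median and counts how many localities actually sit above the national average.

```python
from statistics import mean, median

# Hypothetical incident rates (per 1,000 inhabitants) for ten localities.
# Eight are very safe and two have extreme rates. All values are invented.
rates = [2, 3, 2, 4, 3, 2, 3, 3, 95, 110]

national_mean = mean(rates)      # pulled upwards by the two extreme localities
national_median = median(rates)  # closer to what a typical locality looks like

# Localities whose rate is above the national average.
above_mean = [r for r in rates if r > national_mean]

print(f"mean = {national_mean:.1f}, median = {national_median:.1f}")
print(f"localities above the mean: {len(above_mean)} of {len(rates)}")
```

With these made-up figures the average describes almost no locality well, which is why showing the distribution (or at least the median and the outliers) tends to be more informative than a single aggregate.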

As Claus O. Wilke explains in his book "Fundamentals of Data Visualization," this practice can hide crucial patterns, outliers, and paradoxes that are precisely the most relevant to decision-making. To avoid this risk, the training proposes to visualize a graph as a system of layers that we must carefully build from the base:

1. Encoding

- It's the foundation of everything: how we translate data into visual attributes. Research in visual perception shows us that not all "visual channels" are equally effective. The hierarchy would be:

- Most effective: position, length and height

- Moderately effective: angle, area and slope

- Less effective: color, saturation, and shape

How do we put this into practice? For example, for accurate comparisons, a bar chart will almost always be a better choice than a pie chart. However, as the training materials point out, "effective" does not always mean "appropriate". A pie chart can be perfect when we want to express the idea of a "whole and its parts", even if accurate comparisons are more difficult.

2. Arrangement

- The positioning, ordering, and grouping of elements profoundly affects perception. Do we want the reader to compare between categories within a group, or between groups? The answer will determine whether we organize our visualization with grouped or stacked bars, with multiple panels, or in a single integrated view.

3. Scaffolding

Titles, introductions, annotations, scales and legends are fundamental. In datos.gob.es we've seen how interactive visualizations can condense complex information, but without proper scaffolding, interactivity can confuse rather than clarify.

The value of a correct scale

One of the most delicate – and often most manipulable – technical aspects of a visualization is the choice of scale. A simple modification in the Y-axis can completely change the reader's interpretation: a mild trend may seem like a sudden crisis, or sustained growth may go unnoticed.

As mentioned in the second webinar in the series, scales are not a minor detail: they are a narrative component. Deciding where an axis begins, what intervals are used, or how time periods are represented involves making choices that directly affect one's perception of reality. For example, if an employment graph starts the Y-axis at 90% instead of 0%, the decline may seem dramatic, even if it's actually minimal.
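The arithmetic behind this example can be sketched in a few lines of Python. The employment figures (95% falling to 93%) and the helper name `visual_drop` are assumptions made for illustration; the function simply measures how much a bar appears to shrink depending on where the axis starts.

```python
def visual_drop(before, after, baseline):
    """Relative shrinkage of a bar drawn from `baseline` when the value
    goes from `before` to `after`."""
    return (before - after) / (before - baseline)

# Hypothetical employment rates, in percent.
before, after = 95.0, 93.0

drop_from_zero = visual_drop(before, after, baseline=0.0)   # axis starts at 0%
drop_from_90 = visual_drop(before, after, baseline=90.0)    # axis starts at 90%
exaggeration = drop_from_90 / drop_from_zero

print(f"axis from 0%:  bar shrinks by {drop_from_zero:.1%}")
print(f"axis from 90%: bar shrinks by {drop_from_90:.1%}")
print(f"the truncated axis magnifies the change about {exaggeration:.0f} times")
```

Under these assumed values, a two-point decline that barely changes the bar on a zero-based axis removes a large share of its height on the truncated one, even though the underlying data are identical.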

Therefore, scales must be honest with the data. Being "honest" doesn't mean giving up on design decisions, but rather clearly showing what decisions were made and why. If there is a valid reason for starting the Y-axis at a non-zero value, it should be explicitly explained in the graph or in its footnote. Transparency must prevail over drama.

Visual integrity not only protects the reader from misleading interpretations, but also reinforces the credibility of the communicator. In the field of public data, this honesty is not optional: it is an ethical commitment to the truth and to citizen trust.

Accessibility: visualize for everyone

On the other hand, one of the aspects often forgotten is accessibility. About 8% of men and 0.5% of women have some form of color blindness. Tools like Color Oracle allow you to simulate what our visualizations look like for people with different types of color perception impairments.

In addition, the webinar mentioned the Chartability project, a methodology to evaluate the accessibility of data visualizations. In the Spanish public sector, where web accessibility is a legal requirement, this is not optional: it is a democratic obligation. Under this premise, the Spanish Federation of Municipalities and Provinces published a Data Visualization Guide for Local Entities.

Visual storytelling: when data tells stories

Once the technical issues have been resolved, we can address the narrative aspect that is increasingly important to communicate correctly. In this sense, the course proposes a simple but powerful method:

- Write a long sentence that summarizes the points you want to communicate.

- Break that phrase down into components, taking advantage of natural pauses.

- Transform those components into sections of your infographic.

This narrative approach is especially effective for projects like the ones found on data.europa.eu, where visualizations are combined with contextual explanations to communicate the value of high-value datasets, or in the data science and visualization exercises on datos.gob.es.

The future of data visualization also includes more creative and user-centric approaches. Projects that incorporate personalized elements, that allow readers to place themselves at the center of information, or that use narrative techniques to generate empathy, are redefining what we understand by "data communication".

Alternative forms of "data sensification" are even emerging: physicalization (creating three-dimensional objects with data) and sonification (translating data into sound) open up new possibilities for making information more tangible and accessible. The Spanish company Tangible Data, which we have featured on datos.gob.es because it reuses open datasets, is proof of this.

Figure 1. Examples of data sensification. Source: https://data.europa.eu/sites/default/files/course/webinar-data-visualisation-episode-3-slides.pdf

By way of conclusion, we can emphasize that integrity in design is not a luxury: it is an ethical requirement. Every graph we publish on official platforms influences how citizens perceive reality and make decisions. That is why mastering technical tools such as libraries and visualization APIs, which are discussed in other articles on the portal, is so relevant.

The next time you create a visualization with open data, don't just ask yourself "what tool do I use?" or "which graphic looks best?". Ask yourself: what does my audience really need to know? Does this visualization respect data integrity? Is it accessible to everyone? The answers to these questions are what transform a beautiful graphic into a truly effective communication tool.

Artificial Intelligence (AI) is becoming one of the main drivers of increased productivity and innovation in both the public and private sectors, and it is increasingly relevant in tasks ranging from the creation of content in any format (text, audio, video) to the optimization of complex processes through AI agents.

However, advanced AI models, and in particular large language models, require massive amounts of data for training, optimization, and evaluation. This dependence generates a paradox: while AI demands more and higher-quality data, access to real data is limited by the growing concern for privacy and confidentiality (General Data Protection Regulation or GDPR), new rules on data access and use (Data Act), quality and governance requirements for high-risk systems (AI Act), and the inherent scarcity of data in sensitive domains.

In this context, synthetic data can be an enabling mechanism for new advances, reconciling innovation and privacy protection. On the one hand, it allows AI to be trained without exposing sensitive information; on the other, when combined with quality open data, it expands access to domains where real data is scarce or heavily regulated.

What is synthetic data and how is it generated?

Simply put, synthetic data can be defined as artificially generated information that mimics the characteristics and distributions of real data. Its main function is to reproduce the statistical characteristics, structure and patterns of the underlying real data. In the domain of official statistics, there are cases such as the United States Census Bureau, which publishes partially or fully synthetic products such as OnTheMap (mobility of workers between place of residence and workplace) or SIPP Synthetic Beta (socioeconomic microdata linked to taxes and social security).

The generation of synthetic data is a field still in development, supported by various methodologies. Approaches range from rule-based methods or statistical modeling (simulations, Bayesian or causal networks), which mimic predefined distributions and relationships, to advanced deep learning techniques. Among the most prominent architectures are:

- Generative Adversarial Networks (GANs): a generator, trained on real data, learns to mimic its characteristics, while a discriminator tries to distinguish between real and synthetic data. Through this iterative process, the generator improves its ability to produce artificial data that is statistically indistinguishable from the originals. Once trained, the generator can create new artificial records that are statistically similar to the original sample, but completely new and secure.

- Variational Autoencoders (VAEs): these models are based on neural networks that learn a probability distribution over a latent space of the input data. Once trained, the model uses this distribution to obtain new synthetic observations by sampling latent vectors and decoding them. VAEs are often considered more stable and easier to train than GANs for tabular data generation.

- Autoregressive/hierarchical models and domain simulators: used, for example, for electronic medical record data, where they capture temporal and hierarchical dependencies. Hierarchical models structure the problem by levels, first sampling higher-level variables and then lower-level variables conditioned on the previous ones. Domain simulators encode process rules and calibrate them with real data, providing control and interpretability and ensuring compliance with business rules.
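The level-by-level sampling used by hierarchical models can be illustrated with a toy sketch in Python. Everything in it is an assumption made for the example: the two levels (patient and visits), the age groups, their weights and the maximum number of visits are invented, and a real generator would estimate such parameters from data rather than hard-code them.

```python
import random

rng = random.Random(42)  # fixed seed so the sketch is reproducible

# Hypothetical two-level structure: a patient (higher level) and visits (lower level).
AGE_GROUPS = ["18-39", "40-64", "65+"]
AGE_WEIGHTS = [0.4, 0.4, 0.2]
# Invented upper bound on yearly visits, conditioned on the age group.
MAX_VISITS = {"18-39": 2, "40-64": 4, "65+": 8}

def sample_patient(rng):
    # Step 1: sample the higher-level variable.
    age_group = rng.choices(AGE_GROUPS, weights=AGE_WEIGHTS, k=1)[0]
    # Step 2: sample the lower-level variables conditioned on it.
    n_visits = rng.randint(0, MAX_VISITS[age_group])
    visits = [{"month": rng.randint(1, 12)} for _ in range(n_visits)]
    return {"age_group": age_group, "visits": visits}

patients = [sample_patient(rng) for _ in range(1000)]
print(patients[0])
```

Because the number of visits is drawn only after the age group is known, the synthetic records keep the dependency between the two levels, which is the property that flat, column-by-column sampling would lose.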

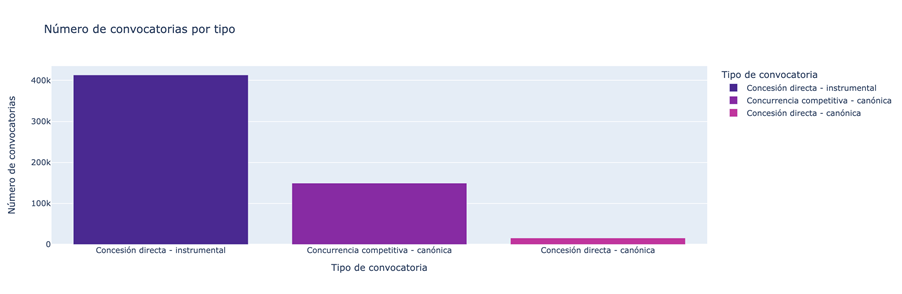

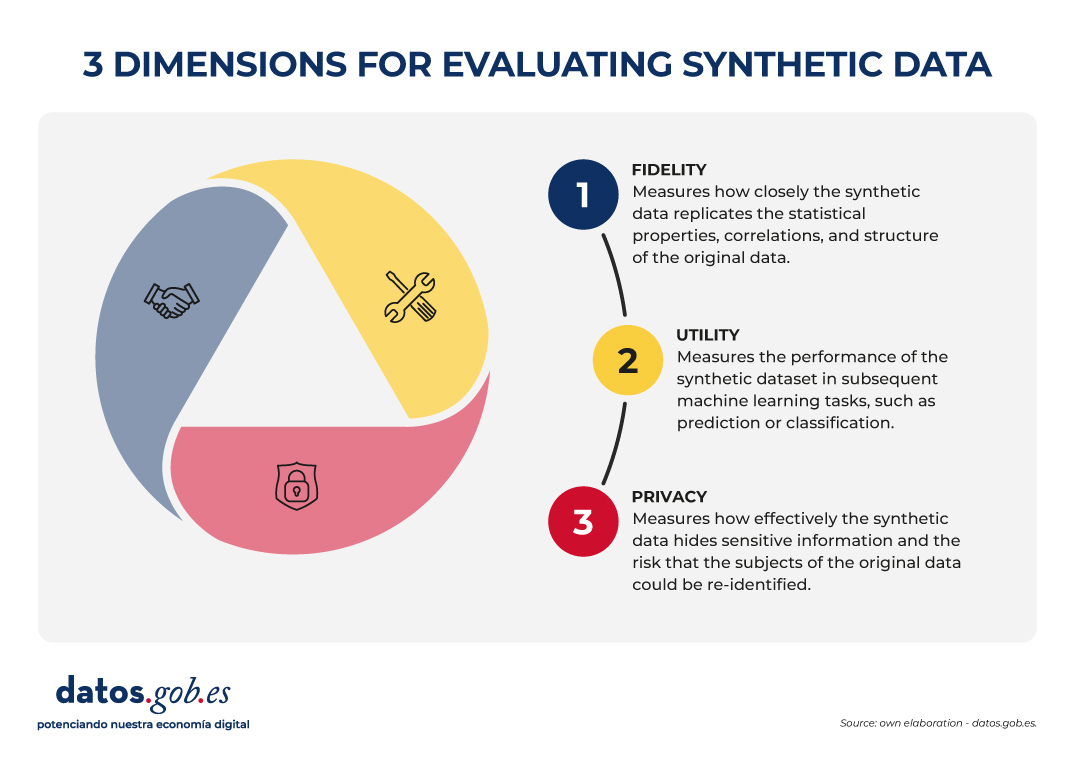

You can learn more about synthetic data and how it's created in this infographic:

Figure 1. Infographic on synthetic data. Source: Authors' elaboration - datos.gob.es.

While synthetic generation inherently reduces the risk of personal data disclosure, it does not eliminate it entirely. Synthetic does not automatically mean anonymous because, if the generators are trained inappropriately, traces of the real set can leak out and be vulnerable to membership inference attacks. Hence, it is necessary to use Privacy Enhancing Technologies (PET) such as differential privacy and to carry out specific risk assessments. The European Data Protection Supervisor (EDPS) has also underlined the need to carry out a privacy assurance assessment before synthetic data can be shared, ensuring that the result does not allow re-identifiable personal data to be obtained.

Differential Privacy (DP) is one of the main technologies in this domain. Its mechanism is to add controlled noise to the training process or to the data itself, mathematically ensuring that the presence or absence of any individual in the original dataset does not significantly alter the final result of the generation. The use of secure methods, such as Differentially Private Stochastic Gradient Descent (DP-SGD), helps ensure that the samples generated do not compromise the privacy of users who contributed their data to the sensitive set.
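To make the idea of "controlled noise" concrete, here is a minimal sketch of the classic Laplace mechanism applied to a simple count. It is not DP-SGD and not a synthetic data generator; it only illustrates the principle on the simplest possible query. The dataset, the helper names and the privacy budget `epsilon=1.0` are assumptions chosen for the example.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two independent Exp(1) draws follows a Laplace(0, 1)
    # distribution; multiplying by `scale` gives Laplace(0, scale).
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))

def private_count(records, predicate, epsilon, rng):
    """Count the records matching `predicate` and add Laplace noise."""
    true_count = sum(1 for record in records if predicate(record))
    # Adding or removing one person changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(0)
ages = [rng.randint(18, 90) for _ in range(500)]  # invented dataset

true_count = sum(1 for age in ages if age >= 65)
noisy_count = private_count(ages, lambda age: age >= 65, epsilon=1.0, rng=rng)
print(true_count, round(noisy_count, 2))
```

A smaller `epsilon` means more noise and stronger protection, while a larger one gives more accurate but less private answers. Choosing that trade-off is a policy decision as much as a technical one.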

What is the role of open data?

Obviously, synthetic data does not appear out of nowhere: it needs real, high-quality data as a seed and, in addition, it requires good validation practices. For this reason, open data, as well as data that cannot be opened for privacy-related reasons, is on the one hand an excellent raw material for learning real-world patterns and, on the other, an independent reference to verify that the synthetic data resembles reality without exposing people or companies.
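One simple way to check whether a synthetic variable resembles its real counterpart is to compare their empirical distributions. The sketch below is an illustration under stated assumptions, not a complete validation protocol: it computes the two-sample Kolmogorov-Smirnov statistic in plain Python, and the "real" and "synthetic" samples are themselves simulated with invented parameters.

```python
import random
from bisect import bisect_right

def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: the largest gap between
    the empirical cumulative distribution functions of `a` and `b`."""
    a_sorted, b_sorted = sorted(a), sorted(b)
    return max(
        abs(bisect_right(a_sorted, x) / len(a_sorted)
            - bisect_right(b_sorted, x) / len(b_sorted))
        for x in a_sorted + b_sorted
    )

rng = random.Random(7)
# Invented reference sample standing in for a real open dataset.
real = [rng.gauss(50, 10) for _ in range(500)]
# Two hypothetical generators: one faithful, one with a shifted mean.
good_synthetic = [rng.gauss(50, 10) for _ in range(500)]
bad_synthetic = [rng.gauss(70, 10) for _ in range(500)]

ks_good = ks_statistic(real, good_synthetic)
ks_bad = ks_statistic(real, bad_synthetic)
print(f"faithful generator: KS = {ks_good:.3f}")
print(f"shifted generator:  KS = {ks_bad:.3f}")
```

Values close to 0 indicate similar distributions and values close to 1 indicate very different ones. In practice this kind of check would be repeated per variable and complemented with tests on the relationships between variables and with the privacy assessments discussed above.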