Imagine a machine that can tell if you're happy, worried, or about to make a decision, even before you know clearly. Although it sounds like science fiction, that future is already starting to take shape. Thanks to advances in neuroscience and technology, today we can record, analyze, and even predict certain patterns of brain activity. The data that is generated from these records is known as neurodata.

In this article we will explain this concept, as well as potential use cases, based on the report "TechDispatch on Neurodata", of the Spanish Data Protection Agency (AEPD).

What is neurodata and how is it collected?

The term neurodata refers to data that is collected directly from the brain and nervous system, using technologies such as electroencephalography (EEG), functional magnetic resonance imaging (fMRI), neural implants, or even brain-computer interfaces. In this sense, its uptake is driven by neurotechnologies.

According to the OECD, neurotechnologies are identified with "devices and procedures that are used to access, investigate, evaluate, manipulate, and emulate the structure and function of neural systems." Neurotechnologies can be invasive (if they require brain-computer interfaces that are surgically implanted in the brain) or non-invasive, with interfaces that are placed outside the body (such as glasses or headbands).

There are also two common ways to collect data:

- Passive collection, where data is captured on a regular basis without the subject having to perform any specific activity.

- Active collection, where data is collected while users perform a specific activity. For example, thinking explicitly about something, answering questions, performing physical tasks, or receiving certain stimuli.

Potential use cases

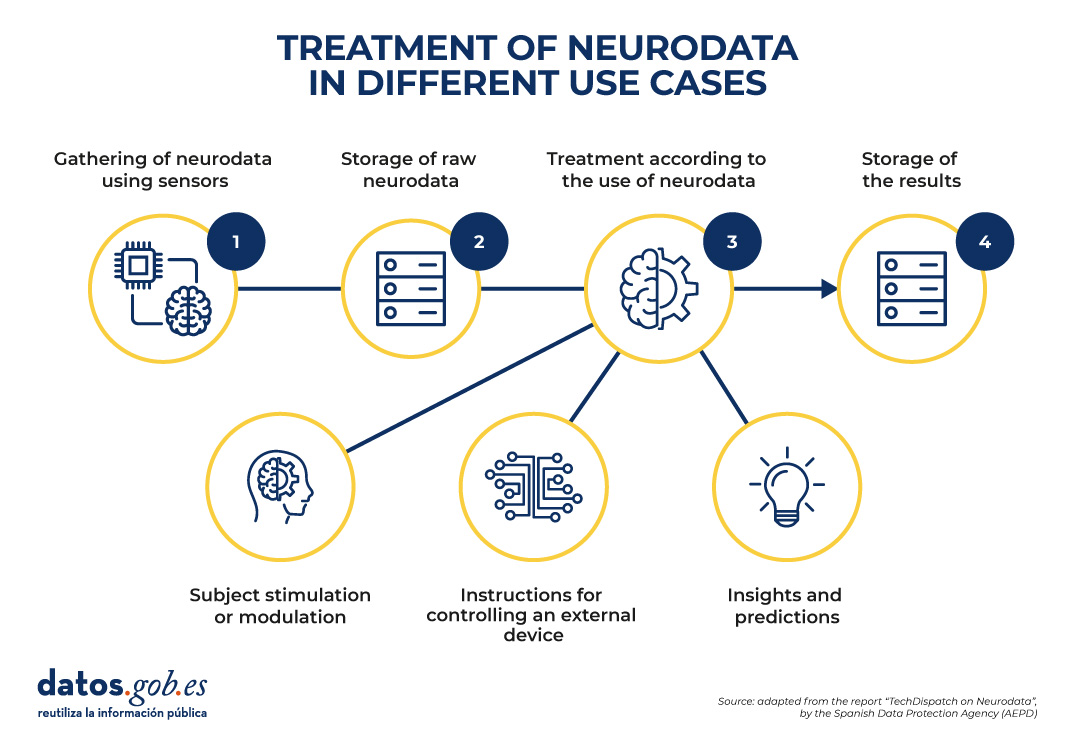

Once the raw data has been collected, it is stored and processed. The treatment will vary according to the purpose and the intended use of the neurodata.

Figure 1. Common structure to understand the processing of neurodata in different use cases. Source: "TechDispatch Report on Neurodata", by the Spanish Data Protection Agency (AEPD).

As can be seen in the image above, the Spanish Data Protection Agency has identified 3 possible purposes:

-

Neurodata processing to acquire direct knowledge and/or make predictions.

Neurodata can uncover patterns that decode brain activity in a variety of industries, including:

-

Health: Neurodata facilitates research into the functioning of the brain and nervous system, making it possible to detect signs of neurological or mental diseases, make early diagnoses, and predict their behavior. This facilitates personalized treatment from very early stages. Its impact can be remarkable, for example, in the fight against Alzheimer's, epilepsy or depression.

-

Education: through brain stimuli, students' performance and learning outcomes can be analyzed. For example, students' attention or cognitive effort can be measured. By cross-referencing this data with other internal (such as student preferences) and external (such as classroom conditions or teaching methodology) aspects, decisions can be made aimed at adapting the pace of teaching.

-

Marketing, economics and leisure: the brain's response to certain stimuli can be analysed to improve leisure products or advertising campaigns. The objective is to know the motivations and preferences that impact decision-making. They can also be used in the workplace, to track employees, learn about their skills, or determine how they perform under pressure.

- Safety and surveillance: Neurodata can be used to monitor factors that affect drivers or pilots, such as drowsiness or inattention, and thus prevent accidents.

-

Neurodata processing to control applications or devices.

As in the previous stage, it involves the collection and analysis of information for decision-making, but it also involves an additional operation: the generation of actions through mental impulses. Let's look at several examples:

-

Orthopaedic or prosthetic aids, medical implants or environment-assisted living: thanks to technologies such as brain-computer interfaces, it is possible to design prostheses that respond to the user's intention through brain activity. In addition, neurodata can be integrated with smart home systems to anticipate needs, adjust the environment to the user's emotional or cognitive states, and even issue alerts for early signs of neurological deterioration. This can lead to an improvement in patients' autonomy and quality of life.

- Robotics: The user's neural signals can be interpreted to control machinery, precision devices or applications without the need to use hands. This allows, for example, a person to operate a robotic arm or a surgical tool simply with their thinking, which is especially valuable in environments where extreme precision is required or when the operator has reduced mobility.

- Video games, virtual reality and metaverse: since neurodata allows software devices to be controlled, brain-computer interfaces can be developed that make it possible to manage characters or perform actions within a game, only with the mind, without the need for physical controls. This not only increases player immersion, but opens the door to more inclusive and personalized experiences.

- Defense: Soldiers can operate weapons systems, unmanned vehicles, drones, or explosive ordnance disposal robots remotely, increasing personal safety and operational efficiency in critical situations.

-

Neurodata treatment for the stimulation or modulation of the subject, achieving neurofeedback.

In this case, signals from the brain (outputs) are used to generate new signals that feed back into the brain (such as inputs), which involves the control of brain waves. It is the most complex field from an ethical point of view, since actions could be generated that the user is not aware of. Some examples are:

-

Psychology: Neurodata has the potential to change the way the brain responds to certain stimuli. They can therefore be used as a therapy method to treat ADHD (Attention Deficit Hyperactivity Disorder), anxiety, depression, epilepsy, autism spectrum disorder, insomnia or drug addiction, among others.

- Neuroenhancement: They can also be used to improve cognitive and affective abilities in healthy people. Through the analysis and personalized stimulation of brain activity, it is possible to optimize functions such as memory, concentration, decision-making or emotional management.

Ethical challenges of the use of neurodata

As we have seen, although the potential of neurodata is enormous, it also poses great ethical and legal challenges. Unlike other types of data, neurodata can reveal deeply intimate aspects of a person, such as their desires, emotions, fears, or intentions. This opens the door to potential misuses, such as manipulation, covert surveillance, or discrimination based on neural features. In addition, they can be collected remotely and acted upon without the subject being aware of the manipulation.

This has generated a debate about the need for new rights, such as neurorights, which seek to protect mental privacy, personal identity and cognitive freedom. Various international organizations, including the European Union, are taking measures to address these challenges and advance in the creation of regulatory and ethical frameworks that protect fundamental rights in the use of neurotechnological technologies. We will soon publish an article that will delve into these aspects.

In conclusion, neurodata is a very promising advance, but not without challenges. Its ability to transform sectors such as health, education or robotics is undeniable, but so are the ethical and legal challenges posed by its use. As we move towards a future where mind and machine are increasingly connected, it is crucial to establish regulatory frameworks that ensure the protection of human rights, especially mental privacy and individual autonomy. In this way, we will be able to harness the full potential of neurodata in a fair, safe and responsible way, for the benefit of society as a whole.

Data sharing has become a critical pillar for the advancement of analytics and knowledge exchange, both in the private and public sectors. Organizations of all sizes and industries—companies, public administrations, research institutions, developer communities, and individuals—find strong value in the ability to share information securely, reliably, and efficiently.

This exchange goes beyond raw data or structured datasets. It also includes more advanced data products such as trained machine learning models, analytical dashboards, scientific experiment results, and other complex artifacts that have significant impact through reuse. In this context, the governance of these resources becomes essential. It is not enough to simply move files from one location to another; it is necessary to guarantee key aspects such as access control (who can read or modify a given resource), traceability and auditing (who accessed it, when, and for what purpose), and compliance with regulations or standards, especially in enterprise and governmental environments.

To address these requirements, Unity Catalog emerges as a next-generation metastore, designed to centralize and simplify the governance of data and data-related resources. Originally part of the services offered by the Databricks platform, the project has now transitioned into the open source community, becoming a reference standard. This means that it can now be freely used, modified, and extended, enabling collaborative development. As a result, more organizations are expected to adopt its cataloging and sharing model, promoting data reuse and the creation of analytical workflows and technological innovation.

Figure 1. Image. Source: https://docs.unitycatalog.io/

Access the data lab repository on Github.

Run the data preprocessing code on Google Colab

Objectives

In this exercise, we will learn how to configure Unity Catalog, a tool that helps us organize and share data securely in the cloud. Although we will use some code, each step will be explained clearly so that even those with limited programming experience can follow along through a hands-on lab.

We will work with a realistic scenario in which we manage public transportation data from different cities. We’ll create data catalogs, configure a database, and learn how to interact with the information using tools like Docker, Apache Spark, and MLflow.

Difficulty level: Intermediate.

Figure 2: Unity catalogue schematic

Required Resources

In this section, we’ll explain the prerequisites and resources needed to complete this lab. The lab is designed to be run on a standard personal computer (Windows, macOS, or Linux).

We will be using the following tools and environments:

- Docker Desktop: Docker allows us to run applications in isolated environments called containers. A container is like a "box" that includes everything needed for the application to run properly, regardless of the operating system.

- Visual Studio Code: Our main working environment will be a Python Notebook, which we will run and edit using the widely adopted code editor Visual Studio Code (VS Code).

- Unity Catalog: Unity Catalog is a data governance tool that allows us to organize and control access to resources such as tables, data volumes, functions, and machine learning models. In this lab, we will use its open source version, which can be deployed locally, to learn how to manage data catalogs with permission control, traceability, and hierarchical structure. Unity Catalog acts as a centralized metastore, making data collaboration and reuse more secure and efficient.

- Amazon Web Services (AWS): AWS will serve as our cloud provider to host some of the lab’s data—specifically, raw data files (such as JSON) that we will manage using data volumes. We’ll use the Amazon S3 service to store these files and configure the necessary credentials and permissions so that Unity Catalog can interact with them in a controlled manner

Key Learnings from the Lab

Throughout this hands-on exercise, participants will deploy the application, understand its architecture, and progressively build a data catalog while applying best practices in organization, access control, and data traceability.

Deployment and First Steps

-

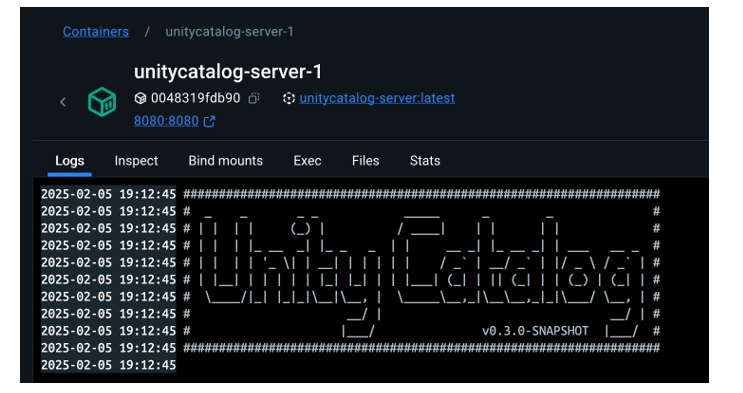

We clone the Unity Catalog repository and launch it using Docker.

-

We explore its architecture: a backend accessible via API and CLI, and an intuitive graphical user interface.

- We navigate the core resources managed by Unity Catalog: catalogs, schemas, tables, volumes, functions, and models.

Figure 2. Screenshot

What Will We Learn Here?

How to launch theapplication, understand its core components, and start interacting with it through different interfaces: the web UI, API, and CLI.

Resource Organization

-

We configure an external MySQL database as the metadata repository.

-

We create catalogs to represent different cities and schemas for various public services.

Figure 3. Screenshot

What Will We Learn Here?

How to structure data governance at different levels (city, service, dataset) and manage metadata in a centralized and persistent way.

Data Construction and Real-World Usage

-

We create structured tables to represent routes, buses, and bus stops.

-

We load real data into these tables using PySpark.

-

We set up an AWS S3 bucket as raw data storage (volumes).

- We upload JSON telemetry event files and govern them from Unity Catalog.

Figure 4. Diagram

What Will We Learn Here?

How to work with different types of data (structured and unstructured), and how to integrate them with external sources like AWS S3.

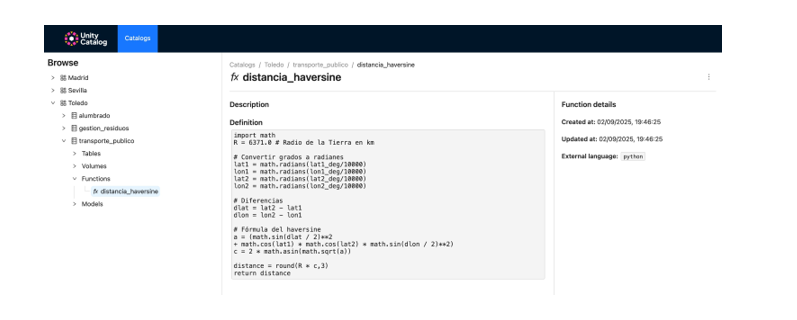

Reusable Functions and AI Models

-

We register custom functions (e.g., distance calculation) directly in the catalog.

-

We create and register machine learning models using MLflow.

- We run predictions from Unity Catalog just like any other governed resource.

Figure 5. Screenshot

What Will We Learn Here?

How to extend data governance to functions and models, and how to enable their reuse and traceability in collaborative environments.

Results and Conclusions

As a result of this hands-on lab, we gained practical experience with Unity Catalog as an open platform for the governance of data and data-related resources, including machine learning models. We explored its capabilities, deployment model, and usage through a realistic use case and a tool ecosystem similar to what you might find in an actual organization.

Through this exercise, we configured and used Unity Catalog to organize public transportation data. Specifically, you will be able to:

- Learn how to install tools like Docker and Spark.

- Create catalogs, schemas, and tables in Unity Catalog.

- Load data and store it in an Amazon S3 bucket.

- Implement a machine learning model using MLflow.

In the coming years, we will see whether tools like Unity Catalog achieve the level of standardization needed to transform how data resources are managed and shared across industries.

We encourage you to keep exploring data science! Access the full repository here

Content prepared by Juan Benavente, senior industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

Generative artificial intelligence is beginning to find its way into everyday applications ranging from virtual agents (or teams of virtual agents) that resolve queries when we call a customer service centre to intelligent assistants that automatically draft meeting summaries or report proposals in office environments.

These applications, often governed by foundational language models (LLMs), promise to revolutionise entire industries on the basis of huge productivity gains. However, their adoption brings new challenges because, unlike traditional software, a generative AI model does not follow fixed rules written by humans, but its responses are based on statistical patterns learned from processing large volumes of data. This makes its behaviour less predictable and more difficult to explain, and sometimes leads to unexpected results, errors that are difficult to foresee, or responses that do not always align with the original intentions of the system's creator.

Therefore, the validation of these applications from multiple perspectives such as ethics, security or consistency is essential to ensure confidence in the results of the systems we are creating in this new stage of digital transformation.

What needs to be validated in generative AI-based systems?

Validating generative AI-based systems means rigorously checking that they meet certain quality and accountability criteria before relying on them to solve sensitive tasks.

It is not only about verifying that they ‘work’, but also about making sure that they behave as expected, avoiding biases, protecting users, maintaining their stability over time, and complying with applicable ethical and legal standards. The need for comprehensive validation is a growing consensus among experts, researchers, regulators and industry: deploying AI reliably requires explicit standards, assessments and controls.

We summarize four key dimensions that need to be checked in generative AI-based systems to align their results with human expectations:

- Ethics and fairness: a model must respect basic ethical principles and avoid harming individuals or groups. This involves detecting and mitigating biases in their responses so as not to perpetuate stereotypes or discrimination. It also requires filtering toxic or offensive content that could harm users. Equity is assessed by ensuring that the system offers consistent treatment to different demographics, without unduly favouring or excluding anyone.

- Security and robustness: here we refer to both user safety (that the system does not generate dangerous recommendations or facilitate illicit activities) and technical robustness against errors and manipulations. A safe model must avoid instructions that lead, for example, to illegal behavior, reliably rejecting those requests. In addition, robustness means that the system can withstand adversarial attacks (such as requests designed to deceive you) and that it operates stably under different conditions.

- Consistency and reliability: Generative AI results must be consistent, consistent, and correct. In applications such as medical diagnosis or legal assistance, it is not enough for the answer to sound convincing; it must be true and accurate. For this reason, aspects such as the logical coherence of the answers, their relevance with respect to the question asked and the factual accuracy of the information are validated. Its stability over time is also checked (that in the face of two similar requests equivalent results are offered under the same conditions) and its resilience (that small changes in the input do not cause substantially different outputs).

- Transparency and explainability: To trust the decisions of an AI-based system, it is desirable to understand how and why it produces them. Transparency includes providing information about training data, known limitations, and model performance across different tests. Many companies are adopting the practice of publishing "model cards," which summarize how a system was designed and evaluated, including bias metrics, common errors, and recommended use cases. Explainability goes a step further and seeks to ensure that the model offers, when possible, understandable explanations of its results (for example, highlighting which data influenced a certain recommendation). Greater transparency and explainability increase accountability, allowing developers and third parties to audit the behavior of the system.

Open data: transparency and more diverse evidence

Properly validating AI models and systems, particularly in terms of fairness and robustness, requires representative and diverse datasets that reflect the reality of different populations and scenarios.

On the other hand, if only the companies that own a system have data to test it, we have to rely on their own internal evaluations. However, when open datasets and public testing standards exist, the community (universities, regulators, independent developers, etc.) can test the systems autonomously, thus functioning as an independent counterweight that serves the interests of society.

A concrete example was given by Meta (Facebook) when it released its Casual Conversations v2 dataset in 2023. It is an open dataset, obtained with informed consent, that collects videos from people from 7 countries (Brazil, India, Indonesia, Mexico, Vietnam, the Philippines and the USA), with 5,567 participants who provided attributes such as age, gender, language and skin tone.

Meta's objective with the publication was precisely to make it easier for researchers to evaluate the impartiality and robustness of AI systems in vision and voice recognition. By expanding the geographic provenance of the data beyond the U.S., this resource allows you to check if, for example, a facial recognition model works equally well with faces of different ethnicities, or if a voice assistant understands accents from different regions.

The diversity that open data brings also helps to uncover neglected areas in AI assessment. Researchers from Stanford's Human-Centered Artificial Intelligence (HAI) showed in the HELM (Holistic Evaluation of Language Models) project that many language models are not evaluated in minority dialects of English or in underrepresented languages, simply because there are no quality data in the most well-known benchmarks.

The community can identify these gaps and create new test sets to fill them (e.g., an open dataset of FAQs in Swahili to validate the behavior of a multilingual chatbot). In this sense, HELM has incorporated broader evaluations precisely thanks to the availability of open data, making it possible to measure not only the performance of the models in common tasks, but also their behavior in other linguistic, cultural and social contexts. This has contributed to making visible the current limitations of the models and to promoting the development of more inclusive and representative systems of the real world or models more adapted to the specific needs of local contexts, as is the case of the ALIA foundational model, developed in Spain.

In short, open data contributes to democratizing the ability to audit AI systems, preventing the power of validation from residing only in a few. They allow you to reduce costs and barriers as a small development team can test your model with open sets without having to invest great efforts in collecting their own data. This not only fosters innovation, but also ensures that local AI solutions from small businesses are also subject to rigorous validation standards.

The validation of applications based on generative AI is today an unquestionable necessity to ensure that these tools operate in tune with our values and expectations. It is not a trivial process, it requires new methodologies, innovative metrics and, above all, a culture of responsibility around AI. But the benefits are clear, a rigorously validated AI system will be more trustworthy, both for the individual user who, for example, interacts with a chatbot without fear of receiving a toxic response, and for society as a whole who can accept decisions based on these technologies knowing that they have been properly audited. And open data helps to cement this trust by fostering transparency, enriching evidence with diversity, and involving the entire community in the validation of AI systems.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and views reflected in this publication are the sole responsibility of the author.

Data is a fundamental resource for improving our quality of life because it enables better decision-making processes to create personalised products and services, both in the public and private sectors. In contexts such as health, mobility, energy or education, the use of data facilitates more efficient solutions adapted to people's real needs. However, in working with data, privacy plays a key role. In this post, we will look at how data spaces, the federated computing paradigm and federated learning, one of its most powerful applications, provide a balanced solution for harnessing the potential of data without compromising privacy. In addition, we will highlight how federated learning can also be used with open data to enhance its reuse in a collaborative, incremental and efficient way.

Privacy, a key issue in data management

As mentioned above, the intensive use of data requires increasing attention to privacy. For example, in eHealth, secondary misuse of electronic health record data could violate patients' fundamental rights. One effective way to preserve privacy is through data ecosystems that prioritise data sovereignty, such as data spaces. A dataspace is a federated data management system that allows data to be exchanged reliably between providers and consumers. In addition, the data space ensures the interoperability of data to create products and services that create value. In a data space, each provider maintains its own governance rules, retaining control over its data (i.e. sovereignty over its data), while enabling its re-use by consumers. This implies that each provider should be able to decide what data it shares, with whom and under what conditions, ensuring compliance with its interests and legal obligations.

Federated computing and data spaces

Data spaces represent an evolution in data management, related to a paradigm called federated computing, where data is reused without the need for data flow from data providers to consumers. In federated computing, providers transform their data into privacy-preserving intermediate results so that they can be sent to data consumers. In addition, this enables other Data Privacy-Enhancing Technologies(Privacy-Enhancing Technologies)to be applied. Federated computing aligns perfectly with reference architectures such as Gaia-X and its Trust Framework, which sets out the principles and requirements to ensure secure, transparent and rule-compliant data exchange between data providers and data consumers.

Federated learning

One of the most powerful applications of federated computing is federated machine learning ( federated learning), an artificial intelligence technique that allows models to be trained without centralising data. That is, instead of sending the data to a central server for processing, what is sent are the models trained locally by each participant.

These models are then combined centrally to create a global model. As an example, imagine a consortium of hospitals that wants to develop a predictive model to detect a rare disease. Every hospital holds sensitive patient data, and open sharing of this data is not feasible due to privacy concerns (including other legal or ethical issues). With federated learning, each hospital trains the model locally with its own data, and only shares the model parameters (training results) centrally. Thus, the final model leverages the diversity of data from all hospitals without compromising the individual privacy and data governance rules of each hospital.

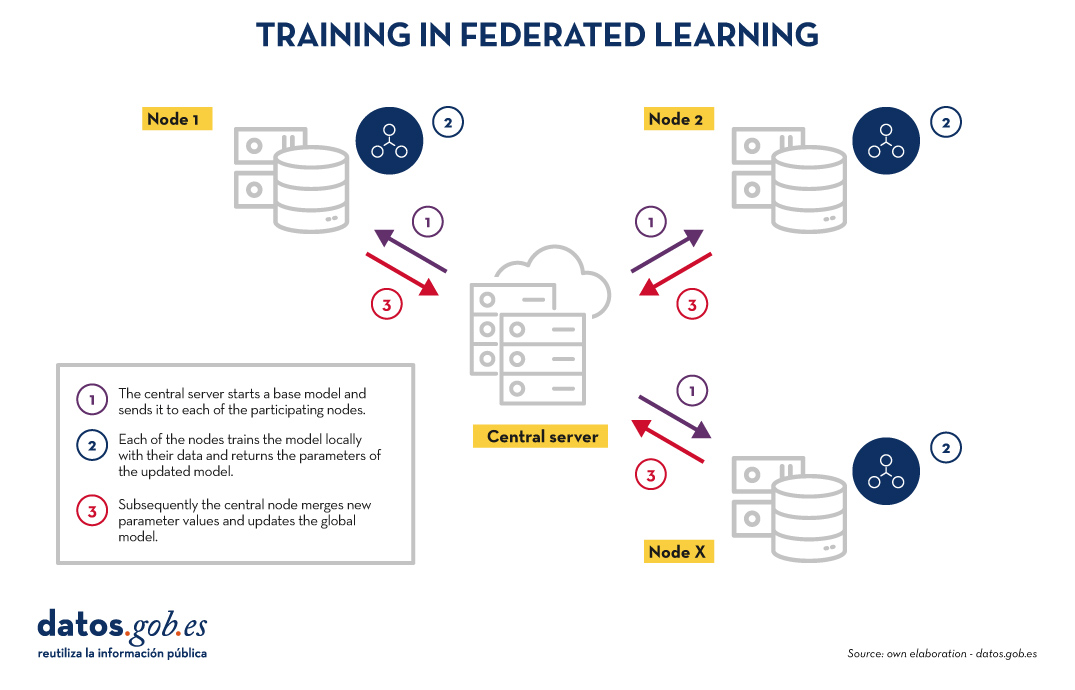

Training in federated learning usually follows an iterative cycle:

- A central server starts a base model and sends it to each of the participating distributed nodes.

- Each node trains the model locally with its data.

- Nodes return only the parameters of the updated model, not the data (i.e. data shuttling is avoided).

- The central server aggregates parameter updates, training results at each node and updates the global model.

- The cycle is repeated until a sufficiently accurate model is achieved.

Figure 1. Visual representing the federated learning training process. Own elaboration

This approach is compatible with various machine learning algorithms, including deep neural networks, regression models, classifiers, etc.

Benefits and challenges of federated learning

Federated learning offers multiple benefits by avoiding data shuffling. Below are the most notable examples:

- Privacy and compliance: by remaining at source, data exposure risks are significantly reduced and compliance with regulations such as the General Data Protection Regulation (GDPR) is facilitated.

- Data sovereignty: Each entity retains full control over its data, which avoids competitive conflicts.

- Efficiency: avoids the cost and complexity of exchanging large volumes of data, speeding up processing and development times.

- Trust: facilitates frictionless collaboration between organisations.

There are several use cases in which federated learning is necessary, for example:

- Health: Hospitals and research centres can collaborate on predictive models without sharing patient data.

- Finance: banks and insurers can build fraud detection or risk-sharing analysis models, while respecting the confidentiality of their customers.

- Smart tourism: tourist destinations can analyse visitor flows or consumption patterns without the need to unify the databases of their stakeholders (both public and private).

- Industry: Companies in the same industry can train models for predictive maintenance or operational efficiency without revealing competitive data.

While its benefits are clear in a variety of use cases, federated learning also presents technical and organisational challenges:

- Data heterogeneity: Local data may have different formats or structures, making training difficult. In addition, the layout of this data may change over time, which presents an added difficulty.

- Unbalanced data: Some nodes may have more or higher quality data than others, which may skew the overall model.

- Local computational costs: Each node needs sufficient resources to train the model locally.

- Synchronisation: the training cycle requires good coordination between nodes to avoid latency or errors.

Beyond federated learning

Although the most prominent application of federated computing is federated learning, many additional applications in data management are emerging, such as federated data analytics (federated analytics). Federated data analysis allows statistical and descriptive analyses to be performed on distributed data without the need to move the data to the consumers; instead, each provider performs the required statistical calculations locally and only shares the aggregated results with the consumer according to their requirements and permissions. The following table shows the differences between federated learning and federated data analysis.

|

Criteria |

Federated learning |

Federated data analysis |

|

Target |

Prediction and training of machine learning models. | Descriptive analysis and calculation of statistics. |

| Task type | Predictive tasks (e.g. classification or regression). | Descriptive tasks (e.g. means or correlations). |

| Example | Train models of disease diagnosis using medical images from various hospitals. | Calculation of health indicators for a health area without moving data between hospitals. |

| Expected output | Modelo global entrenado. | Resultados estadísticos agregados. |

| Nature | Iterativa. | Directa. |

| Computational complexity | Alta. | Media. |

| Privacy and sovereignty | High | Average |

| Algorithms | Machine learning. | Statistical algorithms. |

Figure 1. Comparative table. Source: own elaboration

Federated learning and open data: a symbiosis to be explored

In principle, open data resolves privacy issues prior to publication, so one would think that federated learning techniques would not be necessary. Nothing could be further from the truth. The use of federated learning techniques can bring significant advantages in the management and exploitation of open data. In fact, the first aspect to highlight is that open data portals such as datos.gob.es or data.europa.eu are federated environments. Therefore, in these portals, the application of federated learning on large datasets would allow models to be trained directly at source, avoiding transfer and processing costs. On the other hand, federated learning would facilitate the combination of open data with other sensitive data without compromising the privacy of the latter. Finally, the nature of a wide variety of open data types is very dynamic (such as traffic data), so federated learning would enable incremental training, automatically considering new updates to open datasets as they are published, without the need to restart costly training processes.

Federated learning, the basis for privacy-friendly AI

Federated machine learning represents a necessary evolution in the way we develop artificial intelligence services, especially in contexts where data is sensitive or distributed across multiple providers. Its natural alignment with the concept of the data space makes it a key technology to drive innovation based on data sharing, taking into account privacy and maintaining data sovereignty.

As regulation (such as the European Health Data Space Regulation) and data space infrastructures evolve, federated learning, and other types of federated computing, will play an increasingly important role in data sharing, maximising the value of data, but without compromising privacy. Finally, it is worth noting that, far from being unnecessary, federated learning can become a strategic ally to improve the efficiency, governance and impact of open data ecosystems.

Jose Norberto Mazón, Professor of Computer Languages and Systems at the University of Alicante. The contents and views reflected in this publication are the sole responsibility of the author.

In the current landscape of data analysis and artificial intelligence, the automatic generation of comprehensive and coherent reports represents a significant challenge. While traditional tools allow for data visualization or generating isolated statistics, there is a need for systems that can investigate a topic in depth, gather information from diverse sources, and synthesize findings into a structured and coherent report.

In this practical exercise, we will explore the development of a report generation agent based on LangGraph and artificial intelligence. Unlike traditional approaches based on templates or predefined statistical analysis, our solution leverages the latest advances in language models to:

- Create virtual teams of analysts specialized in different aspects of a topic.

- Conduct simulated interviews to gather detailed information.

- Synthesize the findings into a coherent and well-structured report.

Access the data laboratory repository on Github.

Run the data preprocessing code on Google Colab.

As shown in Figure 1, the complete agent flow follows a logical sequence that goes from the initial generation of questions to the final drafting of the report.

Figure 1. Agent flow diagram.

Application Architecture

The core of the application is based on a modular design implemented as an interconnected state graph, where each module represents a specific functionality in the report generation process. This structure allows for a flexible workflow, recursive when necessary, and with capacity for human intervention at strategic points.

Main Components

The system consists of three fundamental modules that work together:

1. Virtual Analysts Generator

This component creates a diverse team of virtual analysts specialized in different aspects of the topic to be investigated. The flow includes:

- Initial creation of profiles based on the research topic.

- Human feedback point that allows reviewing and refining the generated profiles.

- Optional regeneration of analysts incorporating suggestions.

This approach ensures that the final report includes diverse and complementary perspectives, enriching the analysis.

2. Interview System

Once the analysts are generated, each one participates in a simulated interview process that includes:

- Generation of relevant questions based on the analyst's profile.

- Information search in sources via Tavily Search and Wikipedia.

- Generation of informative responses combining the obtained information.

- Automatic decision on whether to continue or end the interview based on the information gathered.

- Storage of the transcript for subsequent processing.

The interview system represents the heart of the agent, where the information that will nourish the final report is obtained. As shown in Figure 2, this process can be monitored in real time through LangSmith, an open observability tool that allows tracking each step of the flow.

Figure 2. System monitoring via LangGraph. Concrete example of an analyst-interviewer interaction.

3. Report Generator

Finally, the system processes the interviews to create a coherent report through:

- Writing individual sections based on each interview.

- Creating an introduction that presents the topic and structure of the report.

- Organizing the main content that integrates all sections.

- Generating a conclusion that synthesizes the main findings.

- Consolidating all sources used.

The Figure 3 shows an example of the report resulting from the complete process, demonstrating the quality and structure of the final document generated automatically.

Figure 3. View of the report resulting from the automatic generation process to the prompt "Open data in Spain".

What can you learn?

This practical exercise allows you to learn:

Integration of advanced AI in information processing systems:

- How to communicate effectively with language models.

- Techniques to structure prompts that generate coherent and useful responses.

- Strategies to simulate virtual teams of experts.

Development with LangGraph:

- Creation of state graphs to model complex flows.

- Implementation of conditional decision points.

- Design of systems with human intervention at strategic points.

Parallel processing with LLMs:

- Parallelization techniques for tasks with language models.

- Coordination of multiple independent subprocesses.

- Methods for consolidating scattered information.

Good design practices:

- Modular structuring of complex systems.

- Error handling and retries.

- Tracking and debugging workflows through LangSmith.

Conclusions and future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between data and end users. Through the practical case developed, we can observe how the combination of advanced language models with flexible architectures based on graphs opens new possibilities for automatic report generation.

The ability to simulate virtual expert teams, perform parallel research and synthesize findings into coherent documents, represents a significant step towards the democratization of analysis of complex information.

For those interested in expanding the capabilities of the system, there are multiple promising directions for its evolution:

- Incorporation of automatic data verification mechanisms to ensure accuracy.

- Implementation of multimodal capabilities that allow incorporating images and visualizations.

- Integration with more sources of information and knowledge bases.

- Development of more intuitive user interfaces for human intervention.

- Expansion to specialized domains such as medicine, law or sciences.

In summary, this exercise not only demonstrates the feasibility of automating the generation of complex reports through artificial intelligence, but also points to a promising path towards a future where deep analysis of any topic is within everyone's reach, regardless of their level of technical experience. The combination of advanced language models, graph architectures and parallelization techniques opens a range of possibilities to transform the way we generate and consume information.

The Spanish Data Protection Agency has recently published the Spanish translation of the Guide on Synthetic Data Generation, originally produced by the Data Protection Authority of Singapore. This document provides technical and practical guidance for data protection officers, managers and data protection officers on how to implement this technology that allows simulating real data while maintaining their statistical characteristics without compromising personal information.

The guide highlights how synthetic data can drive the data economy, accelerate innovation and mitigate risks in security breaches. To this end, it presents case studies, recommendations and best practices aimed at reducing the risks of re-identification. In this post, we analyse the key aspects of the Guide highlighting main use cases and examples of practical application.

What are synthetic data? Concept and benefits

Synthetic data is artificial data generated using mathematical models specifically designed for artificial intelligence (AI) or machine learning (ML) systems. This data is created by training a model on a source dataset to imitate its characteristics and structure, but without exactly replicating the original records.

High-quality synthetic data retain the statistical properties and patterns of the original data. They therefore allow for analyses that produce results similar to those that would be obtained with real data. However, being artificial, they significantly reduce the risks associated with the exposure of sensitive or personal information.

For more information on this topic, you can read this Monographic report on synthetic data:. What are they and what are they used for? with detailed information on the theoretical foundations, methodologies and practical applications of this technology.

The implementation of synthetic data offers multiple advantages for organisations, for example:

- Privacy protection: allow data analysis while maintaining the confidentiality of personal or commercially sensitive information.

- Regulatory compliance: make it easier to follow data protection regulations while maximising the value of information assets.

- Risk reduction: minimise the chances of data breaches and their consequences.

- Driving innovation: accelerate the development of data-driven solutions without compromising privacy.

- Enhanced collaboration: Enable valuable information to be shared securely across organisations and departments.

Steps to generate synthetic data

To properly implement this technology, the Guide on Synthetic Data Generation recommends following a structured five-step approach:

- Know the data: cClearly understand the purpose of the synthetic data and the characteristics of the source data to be preserved, setting precise targets for the threshold of acceptable risk and expected utility.

- Prepare the data: iidentify key insights to be retained, select relevant attributes, remove or pseudonymise direct identifiers, and standardise formats and structures in a well-documented data dictionary .

- Generate synthetic data: sselect the most appropriate methods according to the use case, assess quality through completeness, fidelity and usability checks, and iteratively adjust the process to achieve the desired balance.

- Assess re-identification risks: aApply attack-based techniques to determine the possibility of inferring information about individuals or their membership of the original set, ensuring that risk levels are acceptable.

- Manage residual risks: iImplement technical, governance and contractual controls to mitigate identified risks, properly documenting the entire process.

Practical applications and success stories

To realise all these benefits, synthetic data can be applied in a variety of scenarios that respond to specific organisational needs. The Guide mentions, for example:

1 Generation of datasets for training AI/ML models: lSynthetic data solves the problem of the scarcity of labelled (i.e. usable) data for training AI models. Where real data are limited, synthetic data can be a cost-effective alternative. In addition, they allow to simulate extraordinary events or to increase the representation of minority groups in training sets. An interesting application to improve the performance and representativeness of all social groups in AI models.

2 Data analysis and collaboration: eThis type of data facilitates the exchange of information for analysis, especially in sectors such as health, where the original data is particularly sensitive. In this sector as in others, they provide stakeholders with a representative sample of actual data without exposing confidential information, allowing them to assess the quality and potential of the data before formal agreements are made.

3 Software testing: sis very useful for system development and software testing because it allows the use of realistic, but not real data in development environments, thus avoiding possible personal data breaches in case of compromise of the development environment..

The practical application of synthetic data is already showing positive results in various sectors:

I. Financial sector: fraud detection. J.P. Morgan has successfully used synthetic data to train fraud detection models, creating datasets with a higher percentage of fraudulent cases that significantly improved the models' ability to identify anomalous behaviour.

II. Technology sector: research on AI bias. Mastercard collaborated with researchers to develop methods to test for bias in AI using synthetic data that maintained the true relationships of the original data, but were private enough to be shared with outside researchers, enabling advances that would not have been possible without this technology.

III. Health sector: safeguarding patient data. Johnson & Johnson implemented AI-generated synthetic data as an alternative to traditional anonymisation techniques to process healthcare data, achieving a significant improvement in the quality of analysis by effectively representing the target population while protecting patients' privacy.

The balance between utility and protection

It is important to note that synthetic data are not inherently risk-free. The similarity to the original data could, in certain circumstances, allow information about individuals or sensitive data to be leaked. It is therefore crucial to strike a balance between data utility and data protection.

This balance can be achieved by implementing good practices during the process of generating synthetic data, incorporating protective measures such as:

- Adequate data preparation: removal of outliers, pseudonymisation of direct identifiers and generalisation of granular data.

- Re-identification risk assessment: analysis of the possibility that synthetic data can be linked to real individuals.

- Implementation of technical controls: adding noise to data, reducing granularity or applying differential privacy techniques.

Synthetic data represents a exceptional opportunity to drive data-driven innovation while respecting privacy and complying with data protection regulations. Their ability to generate statistically representative but artificial information makes them a versatile tool for multiple applications, from AI model training to inter-organisational collaboration and software development.

By properly implementing the best practices and controls described in Guide on synthetic data generation translated by the AEPD, organisations can reap the benefits of synthetic data while minimising the associated risks, positioning themselves at the forefront of responsible digital transformation. The adoption of privacy-enhancing technologies such as synthetic data is not only a defensive measure, but a proactive step towards an organisational culture that values both innovation and data protection, which are critical to success in the digital economy of the future.

The evolution of generative AI has been dizzying: from the first great language models that impressed us with their ability to reproduce human reading and writing, through the advanced RAG (Retrieval-Augmented Generation) techniques that quantitatively improved the quality of the responses provided and the emergence of intelligent agents, to an innovation that redefines our relationship with technology: Computer use.

At the end of April 2020, just one month after the start of an unprecedented period of worldwide home confinement due to the SAR-Covid19 global pandemic, we spread from datos.gob.es the large GPT-2 and GPT-3 language models. OpenAI, founded in 2015, had presented almost a year earlier (February 2019) a new language model that was able to generate written text virtually indistinguishable from that created by a human. GPT-2 had been trained on a corpus (set of texts prepared to train language models) of about 40 GB (Gigabytes) in size (about 8 million web pages), while the latest family of models based on GPT-4 is estimated to have been trained on TB (Terabyte) sized corpora; a thousand times more.

In this context, it is important to talk about two concepts:

- LLLMs (Large Language Models ): are large-scale language models, trained on vast amounts of data and capable of performing a wide range of linguistic tasks. Today, we have countless tools based on these LLMs that, by field of expertise, are able to generate programming code, ultra-realistic images and videos, and solve complex mathematical problems. All major companies and organisations in the digital-technology sector have embarked on integrating these tools into their different software and hardware products, developing use cases that solve or optimise specific tasks and activities that previously required a high degree of human intervention.

- Agents: The user experience with artificial intelligence models is becoming more and more complete, so that we can ask our interface not only to answer our questions, but also to perform complex tasks that require integration with other IT tools. For example, we not only ask a chatbot for information on the best restaurants in the area, but we also ask them to search for table availability for specific dates and make a reservation for us. This extended user experience is what artificial intelligence agentsprovide us with. Based on the large language models, these agents are able to interact with the outside world (to the model) and "talk" to other services via APIs and programming interfaces prepared for this purpose.

Computer use

However, the ability of agents to perform actions autonomously depends on two key elements: on the one hand, their concrete programming - the functionality that has been programmed or configured for them; on the other hand, the need for all other programmes to be ready to "talk" to these agents. That is, their programming interfaces must be ready to receive instructions from these agents. For example, the restaurant reservation application has to be prepared, not only to receive forms filled in by a human, but also requests made by an agent that has been previously invoked by a human using natural language. This fact imposes a limitation on the set of activities and/or tasks that we can automate from a conversational interface. In other words, the conversational interface can provide us with almost infinite answers to the questions we ask it, but it is severely limited in its ability to interact with the outside world due to the lack of preparation of the rest of computer applications.

This is where Computer use comes in. With the arrival of the Claude 3.5 Sonnet model, Anthropic has introduced Computer use, a beta capability that allows AI to interact directly with graphical user interfaces.

How does Computer use work?

Claude can move your computer cursor as if it were you, click buttons and type text, emulating the way humans operate a computer. The best way to understand how Computer use works in practice is to see it in action. Here is a link directly to the YouTube channel of the specific Computer use section.

Figure 1. Screenshot from Anthropic's YouTube channel, Computer use specific section.

Would you like to try it?

If you've made it this far, you can't miss out without trying it with your own hands.

Here is a simple guide to testing Computer use in an isolated environment. It is important to take into account the security recommendations that Antrophic proposes in its Computer use guidelines. This feature of the Claude Sonet model can perform actions on a computer and this can be potentially dangerous, so it is recommended to carefully review the security warning of Computer use.

All official developer documentation can be found in the antrophic's official Github repository. In this post, we have chosen to run Computer use in a Docker container environment. It is the easiest and safest way to test it. If you don't already have it, you can follow the simple official guidelines to pre-install it on your system.

To reproduce this test we propose to follow this script step by step:.

- Anthropic API Key. To interact with Claude Sonet you need an Anthropic account which you can create for free here. Once inside, you can go to the API Keys section and create a new one for your test

- Once you have your API Key, you must run this command in your terminal, substituting your key where it says "%your_api_key%":

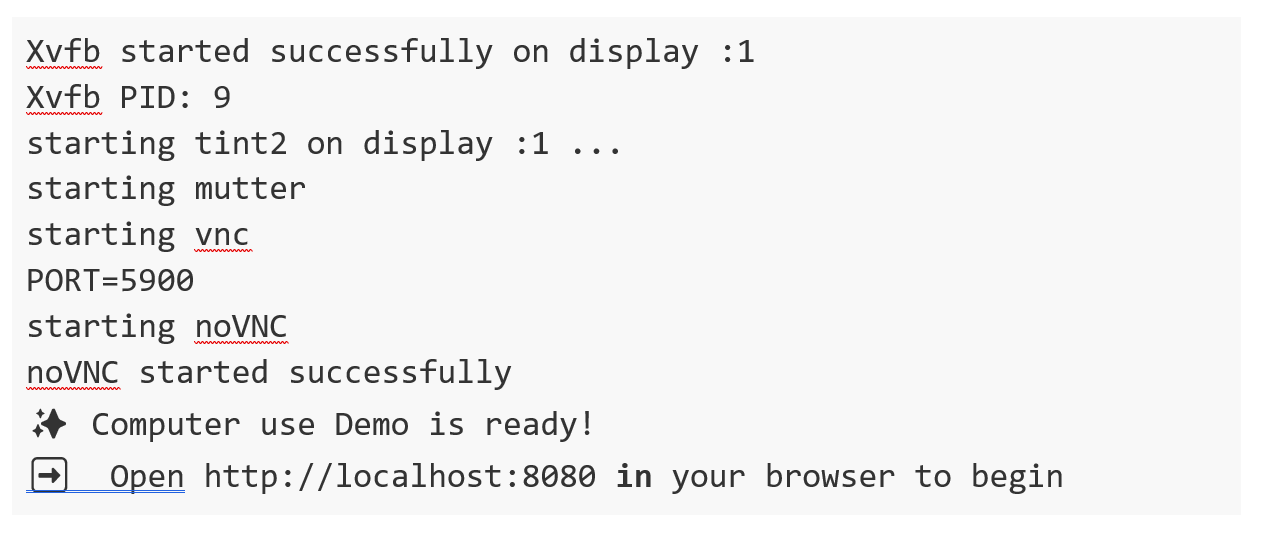

3. If everything went well, you will see these messages in your terminal and now you just have to open your browser and type this url in the navigation bar: htttp://localhost:8080/.

You will see your interface open:

Figure 2. Computer use interface.

You can now go to explore how Computer use works. We suggest the following prompt to get you started:

We suggest you start small. For example, ask them to open a browser and search for something. You can also ask him to give you information about your computer or operating system. Gradually, you can ask for more complex tasks. We have tested this prompt and after several trials we have managed to get Computer use to perform the complete task.

Open a browser, navigate to the datos.gob.es catalogue, use the search engine to locate a dataset on: Public security. Traffic accidents. 2014; Locate the file in csv format; download and open it with free Office.

Potential uses in data platforms such as datos.gob.es

In view of this first experimental version of Computer use, it seems that the potential of the tool is very high. We can imagine how many more things we can do thanks to this tool. Here are some ideas:

- We could ask the system to perform a complete search of datasets related to a specific topic and summarise the main results in a document. In this way, if for example we write an article on traffic data in Spain, we could unattended obtain a list of the main open datasets of traffic data in Spain in the datos.gob.es catalogue.

- In the same way, we could request a summary in the same way, but in this case, not of dataset, but of platform items.

- A slightly more sophisticated example would be to ask Claude, through the conversational interface of Computer use, to make a series of calls to the data API.gob.es to obtain information from certain datasets programmatically. To do this, we open a browser and log into an application such as Postman (remember at this point that Computer use is in experimental mode and does not allow us to enter sensitive data such as user credentials on web pages). We can then ask you to search for information about the datos.gob.es API and execute an http call, taking advantage of the fact that this API does not require authentication.

Through these simple examples, we hope that we have introduced you to a new application of generative AI and that you have understood the paradigm shift that this new capability represents. If the machine is able to emulate the use of a computer as we humans do, unimaginable new opportunities will open up in the coming months.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation. The contents and points of view reflected in this publication are the sole responsibility of the author.

The enormous acceleration of innovation in artificial intelligence (AI) in recent years has largely revolved around the development of so-called "foundational models". Also known as Large [X] Models (Large [X] Models or LxM), Foundation Models, as defined by the Center for Research on Foundation Models (CRFM) of the Institute for Human-Centered Artificial Intelligence's (HAI) Stanford University's models that have been trained on large and highly diverse datasets and can be adapted to perform a wide range of tasks using techniques such as fine-tuning (fine-tuning).

It is precisely this versatility and adaptability that has made foundational models the cornerstone of the numerous applications of artificial intelligence being developed, since a single base architecture can be used across a multitude of use cases with limited additional effort.

Types of foundational models

The "X" in LxM can be replaced by several options depending on the type of data or tasks for which the model is specialised. The best known by the public are the LLM (Large Language Models), which are at the basis of applications such as ChatGPT or Gemini, and which focus on natural language understanding and generation.. LVMs (Large Vision Models), such as DINOv2 or CLIP, are designed tointerpret images and videos, recognise objects or generate visual descriptions.. There are also models such as Operator or Rabbit R1 that fall into the LAM (Large Action Models) category and are aimed atexecuting actions from complex instructions..

As regulations have emerged in different parts of the world, so have other definitions that seek to establish criteria and responsibilities for these models to foster confidence and security. The most relevant definition for our context is that set out in the European Union AI Regulation (AI Act), which calls them "general-purpose AI models" and distinguishes them by their "ability to competently perform a wide variety of discrete tasks" and because they are "typically trained using large volumes of data and through a variety of methods, such as self-supervised, unsupervised or reinforcement learning".

Foundational models in Spanish and other co-official languages

Historically, English has been the dominant language in the development of large AI models, to the extent that around 90% of the training tokens of today's large models are drawn from English texts. It is therefore logical that the most popular models, for example OpenAI's GPT family, Google's Gemini or Meta's Llama, are more competent at responding in English and perform less well when used in other languages such as Spanish.

Therefore, the creation of foundational models in Spanish, such as ALIA, is not simply a technical or research exercise, but a strategic move to ensure that artificial intelligence does not further deepen the linguistic and cultural asymmetries that already exist in digital technologies in general. The development of ALIA, driven by the Spain's Artificial Intelligence Strategy 2024, "based on the broad scope of our languages, spoken by 600 million people, aims to facilitate the development of advanced services and products in language technologies, offering an infrastructure marked by maximum transparency and openness".

Such initiatives are not unique to Spain. Other similar projects include BLOOM, a 176-billion-parameter multilingual model developed by more than 1,000 researchers worldwide and supporting 46 natural languages and 13 programming languages. In China, Baidu has developed ERNIE, a model with strong Mandarin capabilities, while in France the PAGNOL model has focused on improving French capabilities. These parallel efforts show a global trend towards the "linguistic democratisation" of AI.

Since the beginning of 2025, the first language models in the four co-official languages, within the ALIA project, have been available. The ALIA family of models includes ALIA-40B, a model with 40.40 billion parameters, which is currently the most advanced public multilingual foundational model in Europeand which was trained for more than 8 months on the MareNostrum 5 supercomputer, processing 6.9 trillion tokens equivalent to about 33 terabytes of text (about 17 million books!). Here all kinds of official documents and scientific repositories in Spanish are included, from congressional journals to scientific repositories or official bulletins to ensure the richness and quality of your knowledge.

Although this is a multilingual model, Spanish and co-official languages have a much higher weight than usual in these models, around 20%, as the training of the model was designed specifically for these languages, reducing the relevance of English and adapting the tokens to the needs of Spanish, Catalan, Basque and Galician.. As a result, ALIA "understands" our local expressions and cultural nuances better than a generic model trained mostly in English.

Applications of the foundational models in Spanish and co-official languages

It is still too early to judge the impact on specific sectors and applications that ALIA and other models that may be developed from this experience may have. However, they are expected to serve as a basis for improving many Artificial Intelligence applications and solutions:.

- Public administration and government: ALIA could give life to virtual assistants that attend to citizens 24 hours a day for procedures such as paying taxes, renewing ID cards, applying for grants, etc., as it is specifically trained in Spanish regulations. In fact, a pilot for the Tax Agency using ALIA, which would aim to streamline internal procedures, has already been announced.

- Education: A model such as ALIA could also be the basis for personalised virtual tutors to guide students in Spanish and co-official languages. For example, assistants who explain concepts of mathematics or history in simple language and answer questions from the students, adapting to their level since, knowing our language well, they would be able to provide important nuances in the answers and understand the typical doubts of native speakers in these languages. They could also help teachers by generating exercises or summaries of readings or assisting them in correcting students' work.

- Health: ALIA could be used to analyse medical texts and assist healthcare professionals with clinical reports, medical records, information leaflets, etc. For example, it could review patient files to extract key elements, or assist professionals in the diagnostic process. In fact, the Ministry of Health is planning a pilot application with ALIA to improve early detection of heart failure in primary care.

- Justice: In the legal field, ALIA would understand technical terms and contexts of Spanish law much better than a non-specialised model as it has been trained with legal vocabulary from official documents. An ALIA-based virtual paralegal could be able to answer basic citizen queries, such as how to initiate a given legal procedure, citing the applicable law. The administration of justice could also benefit from much more accurate machine translations of court documents between co-official languages.

Future lines

The development of foundation models in Spanish, as in other languages, is beginning to be seen outside the United States as a strategic issue that contributes to guaranteeing the technological sovereignty of countries. Of course, it will be necessary to continue training more advanced versions (models with up to 175 billion parameters are targeted, which would be comparable to the most powerful in the world), incorporating new open data, and fine-tuning applications. From the Data Directorate and the SEDIA it is intended to continue to support the growth of this family of models, to keep it at the forefront and ensure its adoption.

On the other hand, these first foundational models in Spanish and co-official languages have initially focused on written language, so the next natural frontier could be multimodality. Integrating the capacity to manage images, audio or video in Spanish together with the text would multiply its practical applications, since the interpretation of images in Spanish is one of the areas where the greatest deficiencies are detected in the large generic models.

Ethical issues will also need to be monitored to ensure that these models do not perpetuate bias and are useful for all groups, including those who speak different languages or have different levels of education. In this respect, Explainable Artificial Intelligence (XAI) is not optional, but a fundamental requirement to ensure its responsible adoption.. The National AI Supervisory Agency, the research community and civil society itself will have an important role to play here.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and views reflected in this publication are the sole responsibility of the author.

Did you know that data science skills are among the most in-demand skills in business? In this podcast, we are going to tell you how you can train yourself in this field, in a self-taught way. For this purpose, we will have two experts in data science:

- Juan Benavente, industrial and computer engineer with more than 12 years of experience in technological innovation and digital transformation. In addition, it has been training new professionals in technology schools, business schools and universities for years.

- Alejandro Alija, PhD in physics, data scientist and expert in digital transformation. In addition to his extensive professional experience focused on the Internet of Things (internet of things), Alejandro also works as a lecturer in different business schools and universities.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. What is data science? Why is it important and what can it do for us?

Alejandro Alija: Data science could be defined as a discipline whose main objective is to understand the world, the processes of business and life, by analysing and observing data.Data science is a discipline whose main objective is to understand the world, the processes of business and life, by analysing and observing the data.. In the last 20 years it has gained exceptional relevance due to the explosion in data generation, mainly due to the irruption of the internet and the connected world.

Juan Benavente: The term data science has evolved since its inception. Today, a data scientist is the person who is working at the highest level in data analysis, often associated with the building of machine learning or artificial intelligence algorithms for specific companies or sectors, such as predicting or optimising manufacturing in a plant.

The profession is evolving rapidly, and is likely to fragment in the coming years. We have seen the emergence of new roles such as data engineers or MLOps specialists. The important thing is that today any professional, regardless of their field, needs to work with data. There is no doubt that any position or company requires increasingly advanced data analysis. It doesn't matter if you are in marketing, sales, operations or at university. Anyone today is working with, manipulating and analysing data. If we also aspire to data science, which would be the highest level of expertise, we will be in a very beneficial position. But I would definitely recommend any professional to keep this on their radar.

2. How did you get started in data science and what do you do to keep up to date? What strategies would you recommend for both beginners and more experienced profiles?

Alejandro Alija: My basic background is in physics, and I did my PhD in basic science. In fact, it could be said that any scientist, by definition, is a data scientist, because science is based on formulating hypotheses and proving them with experiments and theories. My relationship with data started early in academia. A turning point in my career was when I started working in the private sector, specifically in an environmental management company that measures and monitors air pollution. The environment is a field that is traditionally a major generator of data, especially as it is a regulated sector where administrations and private companies are obliged, for example, to record air pollution levels under certain conditions. I found historical series up to 20 years old that were available for me to analyse. From there my curiosity began and I specialised in concrete tools to analyse and understand what is happening in the world.

Juan Benavente: I can identify with what Alejandro said because I am not a computer scientist either. I trained in industrial engineering and although computer science is one of my interests, it was not my base. In contrast, nowadays, I do see that more specialists are being trained at the university level. A data scientist today has manyskills on their back such as statistics, mathematics and the ability to understand everything that goes on in the industry. I have been acquiring this knowledge through practice. On how to keep up to date, I think that, in many cases, you can be in contact with companies that are innovating in this field. A lot can also be learned at industry or technology events. I started in the smart cities and have moved on to the industrial world to learn little by little.

Alejandro Alija:. To add another source to keep up to date. Apart from what Juan has said, I think it's important to identify what we call outsiders, the manufacturers of technologies, the market players. They are a very useful source of information to stay up to date: identify their futures strategies and what they are betting on.

3. If someone with little or no technical knowledge wants to learn data science, where do they start?

Juan Benavente: In training, I have come across very different profiless: from people who have just graduated from university to profiles that have been trained in very different fields and find in data science an opportunity to transform themselves and dedicate themselves to this. Thinking of someone who is just starting out, I think the best thing to do is put your knowledge into practice. In projects I have worked on, we defined the methodology in three phases: a first phase of more theoretical aspects, taking into account mathematics, programming and everything a data scientist needs to know; once you have those basics, the sooner you start working and practising those skills, the better. I believe that skill sharpens the wit and, both to keep up to date and to train yourself and acquire useful knowledge, the sooner you enter into a project, the better. And even more so in a world that is so frequently updated. In recent years, the emergence of the Generative AI has brought other opportunities. There are also opportunities for new profiles who want to be trained . Even if you are not an expert in programming, you have tools that can help you with programming, and the same can happen in mathematics or statistics.

Alejandro Alija:. To complement what Juan says from a different perspective. I think it is worth highlighting the evolution of the data science profession.. I remember when that paper about "the sexiest profession in the world" became famous and went viral, but then things adjusted. The first settlers in the world of data science did not come so much from computer science or informatics. There were more outsiders: physicists, mathematicians, with a strong background in mathematics and physics, and even some engineers whose work and professional development meant that they ended up using many tools from the computer science field. Gradually, it has become more and more balanced. It is now a discipline that continues to have those two strands: people who come from the world of physics and mathematics towards the more basic data, and people who come with programming skills. Everyone knows what they have to balance in their toolbox. Thinking about a junior profile who is just starting out, I think a very important thing - and we see this when we teach - is programming skills. I would say that having programming skills is not just a plus, but a basic requirement for advancement in this profession. It is true that some people can do well without a lot of programming skills, but I would argue that a beginner needs to have those first programming skills with a basic toolset . We're talking about languages such as Python and R, which are the headline languages. You don't need to be a great coder, but you do need to have some basic knowledge to get started. Then, of course, specific training in the mathematical foundations of data science is crucial. The fundamental statistics and more advanced statistics are complements that, if present, will move a person along the data science learning curve much faster. Thirdly, I would say that specialisation in particular tools is important. Some people are more oriented towards data engineering, others towards the modelling world. Ideally, specialise in a few frameworks and use them together, as optimally as possible.

4. In addition to teaching, you both work in technology companies. What technical certifications are most valued in the business sector and what open sources of knowledge do you recommend to prepare for them?

Juan Benavente: Personally, it's not what I look at most, but I think it can be relevant, especially for people who are starting out and need help in structuring their approach to the problem and understanding it. I recommend certifications of technologies that are in use in any company where you want to end up working. Especially from providers of cloud computing and widespread data analytics tools. These are certifications that I would recommend for someone who wants to approach this world and needs a structure to help them. When you don't have a knowledge base, it can be a bit confusing to understand where to start. Perhaps you should reinforce programming or mathematical knowledge first, but it can all seem a bit complicated. Where these certifications certainly help you is, in addition to reinforcing concepts, to ensure that you are moving well and know the typical ecosystem of tools you will be working with tomorrow. It is not just about theoretical concepts, but about knowing the ecosystems that you will encounter when you start working, whether you are starting your own company or working in an established company. It makes it much easier for you to get to know the typical ecosystem of tools. Call it Microsoft Computing, Amazon or other providers of such solutions. This will allow you to focus more quickly on the work itself, and less on all the tools that surround it. I believe that this type of certification is useful, especially for profiles that are approaching this world with enthusiasm. It will help them both to structure themselves and to land well in their professional destination. They are also likely to be valued in selection processes.

Alejandro Alija: If someone listens to us and wants more specific guidelines, it could be structured in blocks. There are a series of massive online courses that, for me, were a turning point. In my early days, I tried to enrol in several of these courses on platforms such as Coursera, edX, where even the technology manufacturers themselves design these courses. I believe that this kind of massive, self-service, online courses provide a good starting base. A second block would be the courses and certifications of the big technology providers, such as Microsoft, Amazon Web Services, Google and other platforms that are benchmarks in the world of data. These companies have the advantage that their learning paths are very well structured, which facilitates professional growth within their own ecosystems. Certifications from different suppliers can be combined. For a person who wants to go into this field, the path ranges from the simplest to the most advanced certifications, such as being a data solutions architect or a specialist in a specific data analytics service or product. These two learning blocks are available on the internet, most of them are open and free or close to free. Beyond knowledge, what is valued is certification, especially in companies looking for these professional profiles.

5. In addition to theoretical training, practice is key, and one of the most interesting methods of learning is to replicate exercises step by step. In this sense, from datos.gob.es we offer didactic resources, many of them developed by you as experts in the project, can you tell us what these exercises consist of?. How are they approached?