The Centre de documentació i biblioteca of the Institut Català d'Arqueologia Clàssica (ICAC) maintains the Open Science ICAC repository. This website is a space where science is shared in an accessible and inclusive way. It offers recommendations and advice on the process of publishing content, and on how to make the data generated during the research process available for future research work.

The website, in addition to being a repository of scientific research texts, is also a place to find tools and tips on how to approach the research data management process in each of its phases: before, during and at the time of publication.

- Before you begin: create a data management plan to ensure that your research proposal is as robust as possible. The Data Management Plan (DMP) is a methodological document that describes the life cycle of the data collected, generated and processed during a research project, a doctoral thesis, etc.

- During the research process: the website stresses the need to agree on a naming convention for documents before starting to collect files or data, in order to avoid an accumulation of disorganised content that will lead to lost or misplaced data. This section also provides information on directory structure, folder names and file names, the creation of a txt file (README) describing the naming conventions, and the use of short, descriptive names built from fields such as project name/acronym, file creation date, sample number or version number. Recommendations on how to structure each of these fields so that they are reusable and easily searchable can also be found on the website.

- Publication of research data: in addition to the results of the research in the form of a thesis, dissertation, paper, etc., the website recommends publishing the data generated by the research process. The ICAC points out that research data remains valuable after the research project for which it was generated has ended, and that sharing data can open up new avenues of research without future researchers having to recreate and collect identical data. Finally, it outlines how, when and what to consider when publishing research data.
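The naming fields mentioned for the research phase (project acronym, creation date, sample number, version number) can be combined mechanically. The following is a minimal sketch; the function name `build_file_name`, the field order and the separators are assumptions made for illustration, not a convention prescribed by the ICAC.

```python
import re
from datetime import date


def build_file_name(project: str, created: date, sample: int, version: int, ext: str) -> str:
    """Compose a short, descriptive file name from the naming fields."""
    # Keep only letters and digits in the acronym so the name stays portable.
    acronym = re.sub(r"[^A-Za-z0-9]", "", project).upper()
    # A YYYYMMDD date sorts chronologically when file names are sorted as text.
    stamp = created.strftime("%Y%m%d")
    # Zero-padded sample and version numbers keep names aligned and sortable.
    extension = ext.lstrip(".").lower()
    return f"{acronym}_{stamp}_s{sample:03d}_v{version:02d}.{extension}"


# Hypothetical values, used only to show the resulting pattern.
print(build_file_name("icac-demo", date(2023, 5, 4), 12, 3, "csv"))
# ICACDEMO_20230504_s012_v03.csv
```

Whatever pattern is chosen, describing it in the README file keeps the names interpretable by other researchers.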

Graphical content for improving the quality of open data

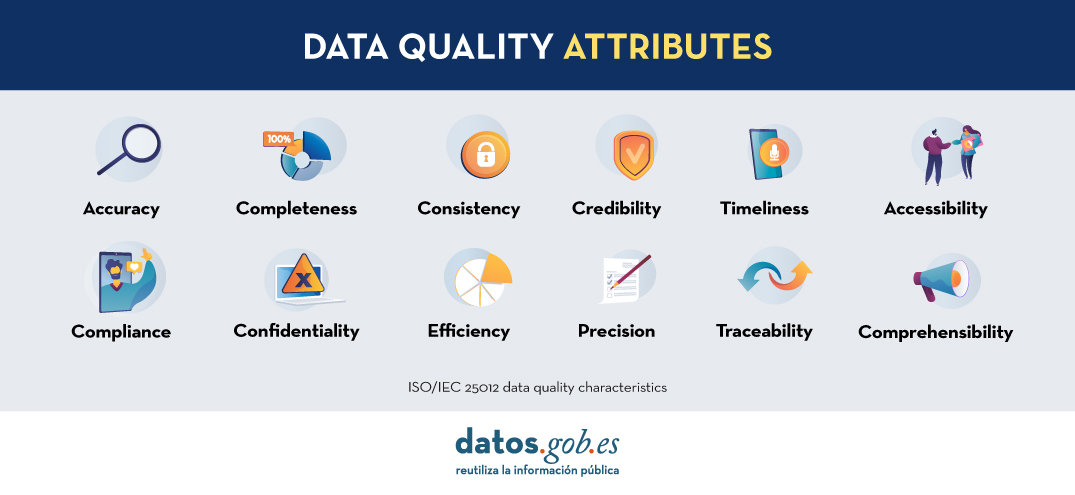

Recently, the ICAC has taken a further step to encourage good practice in the use of open data. To this end, it has developed a series of graphic materials based on the "Practical guide for the improvement of the quality of open data" produced by datos.gob.es. Specifically, the cultural body has produced four easy-to-understand infographics, in Catalan and English, on good practices with open data when working with databases and spreadsheets, texts and documents, and the CSV format.

All the infographics resulting from the adaptation of the guide are available to the general public and to the centre's research staff at Recercat, Catalonia's research repository. They will soon also be available on the ICAC's Open Science website, Open Science ICAC.

The infographics produced by the ICAC review various aspects. The first ones contain general recommendations to ensure the quality of open data, such as using a standardised character encoding like UTF-8, or naming columns correctly, using only lowercase letters and replacing spaces with hyphens. Among the recommendations for generating quality data, they also explain how to mark null or missing data and how to manage data duplication: data collection and processing should be centralised in a single system so that any duplicates can be easily detected and eliminated.

The latter deal with how to format numerical figures and other data such as dates so that they follow the standardised ISO system, as well as the use of dots as decimal separators. For geographic information, as recommended by the Guide, the materials also point out the need to reserve two separate columns for the longitude and latitude of the geographic points used.
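Several of these recommendations translate directly into small clean-up steps. The sketch below is illustrative only: the helper names, the assumed input date format (`DD/MM/YYYY`) and the assumed `latitude;longitude` cell layout are hypothetical and are not taken from the infographics.

```python
from datetime import datetime


def normalise_column(name: str) -> str:
    """Lowercase a column name and replace runs of whitespace with hyphens."""
    return "-".join(name.strip().lower().split())


def to_iso_date(text: str, source_format: str = "%d/%m/%Y") -> str:
    """Convert a date string to the ISO 8601 form YYYY-MM-DD."""
    return datetime.strptime(text, source_format).date().isoformat()


def to_decimal_point(text: str) -> float:
    """Parse a figure written with a decimal comma (no thousands separators)."""
    return float(text.strip().replace(",", "."))


def split_coordinates(cell: str) -> tuple[float, float]:
    """Split a combined 'latitude;longitude' cell into two numeric values."""
    latitude, longitude = cell.split(";")
    return to_decimal_point(latitude), to_decimal_point(longitude)


# Made-up sample values, used only to exercise the helpers.
print(normalise_column("Excavation  Date"))   # excavation-date
print(to_iso_date("04/05/2023"))              # 2023-05-04
print(split_coordinates("41,1189;1,2445"))    # (41.1189, 1.2445)
```

When writing the cleaned table back to disk, passing `encoding="utf-8"` to `open()` covers the character-encoding recommendation.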

The third theme of these infographics focuses on building good databases or spreadsheets so that they are easily reusable and do not cause problems when working with them. The recommendations that stand out include consistency in generating names or codes for each item included in the data collection, and developing a help guide for coded cells so that they are intelligible to those who need to reuse them.

In the section on texts and documents, the infographics produced by the Institut Català d'Arqueologia Clàssica include some of the most important recommendations for creating texts and ensuring that they are preserved in the best possible way. Among them, they point to the need to save attachments to text documents, such as images or spreadsheets, separately from the text document. This ensures that each item retains its original quality, such as the resolution of an image.

Finally, the fourth infographic contains the most important recommendations for working with the CSV (comma-separated values) format, such as creating one CSV document per table and, when working with a document that contains several spreadsheets, making each of them available independently. It also notes that every row in the CSV document should have the same number of columns so that the data are easy to work with and reuse without further clean-up.
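The rule that every row must have the same number of columns is easy to check automatically before publishing. A minimal sketch using Python's standard `csv` module follows; the function name `inconsistent_rows` and the sample content are assumptions made for illustration.

```python
import csv
import io


def inconsistent_rows(csv_text: str, delimiter: str = ",") -> list[int]:
    """Return the record numbers whose column count differs from the header's.

    The header is record 1, so the first data row is record 2.
    """
    reader = csv.reader(io.StringIO(csv_text), delimiter=delimiter)
    header = next(reader, None)
    if header is None:
        return []  # An empty document has nothing to compare.
    problems = []
    for number, row in enumerate(reader, start=2):
        if len(row) != len(header):
            problems.append(number)
    return problems


# Made-up sample: record 3 is one column short, record 4 has one too many.
sample = "id,name,period\n1,amphora,roman\n2,coin\n3,lamp,roman,extra\n"
print(inconsistent_rows(sample))  # [3, 4]
```

An empty result means the table is rectangular and can be loaded without further clean-up of its shape.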

As mentioned above, all infographics follow the recommendations already included in the Practical guide for improving the quality of open data.

The guide to improving open data quality

The "Practical guide for improving the quality of open data" is a document produced by datos.gob.es as part of the Aporta Initiative and published in September 2022. The document provides a compendium of guidelines for action on each of the defining characteristics of quality, driving quality improvement. In turn, this guide takes the data.europe.eu data quality guide, published in 2021 by the Publications Office of the European Union, as a reference and complements it so that both publishers and re-users of data can follow guidelines to ensure the quality of open data.

In summary, the guide aims to be a reference framework for all those involved in both the generation and use of open data, so that they have a starting point to ensure the suitability of data, both when making it available and when assessing whether a dataset is of sufficient quality to be reused in studies, applications, services or other uses.

At the end of 2023, as reported by datos.gob.es, the Canary Islands Statistics Institute (ISTAC) made public more than 500 semantic assets, including 404 classifications and 100 concept schemes.

All these resources are available in the Open Data Catalog of the Canary Islands, an environment in which there is room for both semantic and statistical resources and which, therefore, may involve an extra difficulty for a user looking only for semantic assets.

To facilitate the reuse of these datasets with information so relevant to society, the Canary Islands Statistics Institute, with the collaboration of the Directorate General for the Digital Transformation of Public Services of the Canary Islands Government, published the Bank of Semantic Assets.

In this portal, the user can perform searches more easily by providing a keyword, identifier, name of the dataset or institution that prepares and maintains it.

The Bank of Semantic Assets of the Canary Islands Statistics Institute is an application for exploring the structural resources used by the ISTAC. It makes it possible to reuse the semantic assets with which the ISTAC works, since it makes direct use of the eDatos APIs, the infrastructure that supports the Canary Islands Statistics Institute.

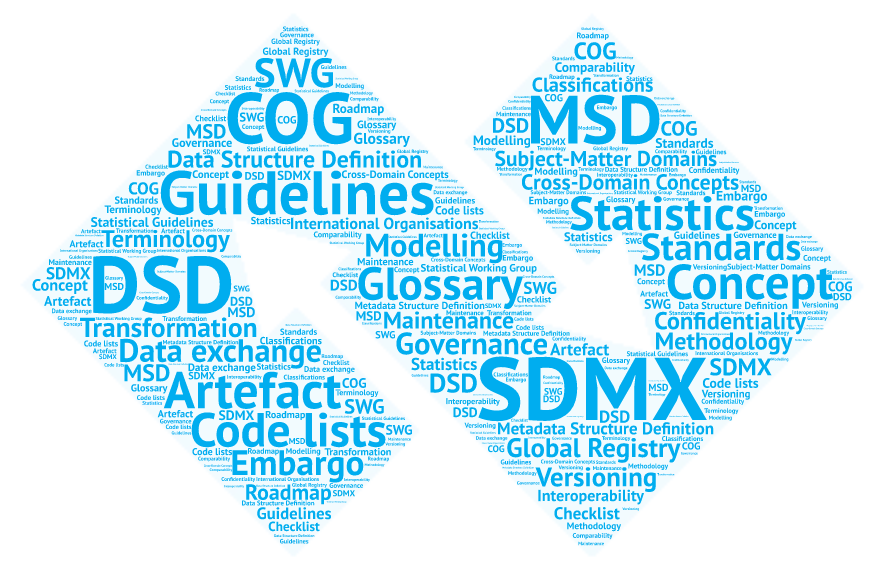

The number of resources that can be consulted increases enormously with respect to the data available in the Catalog: on the one hand, it includes the DSDs (Data Structure Definitions) with which the final data tables are built; on the other hand, it includes not only the schemes and classifications, but also each of the codes, concepts and elements that compose them.

This tool is the equivalent of the Fusion Metadata Registry used by SDMX, Eurostat or the United Nations, but with a much more practical and accessible approach that does not sacrifice advanced functionalities. SDMX is the data and metadata sharing standard on which these organizations rely. The use of this standard in applications such as ISTAC's makes it possible to homogenize in a simple way all the resources associated with the statistical data to be published.

The publication of data under the SDMX standard is a more laborious process, as it requires the generation of not only the data but also the publication keys, but in the long run it allows the creation of templates or statistical operations that can be compared with data from another country or region.

The application recently launched by the ISTAC allows you to navigate through all the structural resources of the ISTAC, including families of classifications or concepts, in an interconnected way, so it operates as a network.

Functionalities of the Semantic Asset Bank

The main advantage of this new tool over the aforementioned registries is its ease of use, which in this case is measured directly by how easy it is to find a specific resource.

Thanks to the advanced search, specific resources can be filtered by ID, name, description and maintainer; to which is added the option of including only the results of interest, discriminating both by version and by whether they are recommended by the ISTAC or not.

In addition, it is designed as a large interconnected bank: when the user opens a concept, related classifications are recommended, and in a DSD all the representations of the dimensions and attributes are linked.
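The filtering behaviour described above can be pictured with a small in-memory model. This is only a sketch of the idea: the `Asset` class, the `search` function and the sample records are hypothetical, and none of it reflects the actual eDatos APIs or the Bank's implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Asset:
    """Hypothetical record holding the fields the advanced search filters on."""
    identifier: str
    name: str
    description: str
    maintainer: str
    version: str
    recommended: bool


def search(assets, text="", maintainer=None, version=None, recommended_only=False):
    """Filter assets by free text, maintainer, version and recommendation flag."""
    needle = text.lower()
    results = []
    for asset in assets:
        # Free text is matched against the ID, name and description.
        haystack = " ".join((asset.identifier, asset.name, asset.description)).lower()
        if needle not in haystack:
            continue
        if maintainer is not None and asset.maintainer != maintainer:
            continue
        if version is not None and asset.version != version:
            continue
        if recommended_only and not asset.recommended:
            continue
        results.append(asset)
    return results


# Made-up records, not real entries from the Bank.
catalogue = [
    Asset("CL_AGE", "Age groups", "Five-year age bands", "ISTAC", "1.0", False),
    Asset("CL_AGE", "Age groups", "Five-year age bands", "ISTAC", "2.0", True),
    Asset("CL_AREA", "Territories", "Islands and municipalities", "ISTAC", "1.0", True),
]
for hit in search(catalogue, text="age", recommended_only=True):
    print(hit.identifier, hit.version)  # CL_AGE 2.0
```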

These features not only differentiate the Semantic Asset Bank from other similar tools, but also represent a step forward in terms of interoperability and transparency by not only offering semantic resources but also their relationships with each other.

The new ISTAC resource complies with the provisions both at national level with the National Interoperability Scheme (Article 10, semantic assets), and at European level with the European Interoperability Framework (Article 3.4, semantic interoperability). Both documents defend the need and value of using common resources for the exchange of information, a maxim that is being implemented transversally in the Government of the Canary Islands.

Training Pill

To disseminate this new search engine for semantic assets, the ISTAC has published a short video explaining the Bank and its features, as well as providing the necessary information about SDMX. The video shows, in a simple way and in just a few minutes, how to use and get the most out of the ISTAC's new Semantic Asset Bank through simple and complex searches, and how to organize the data to respond to a previous analysis.

In summary, with the Semantic Asset Bank, the Canary Islands Statistics Institute has taken a significant step towards facilitating the reuse of its semantic assets. This tool, which brings together tens of thousands of structural resources, allows easy access to an interconnected network that complies with national and European interoperability standards.

The Canary Islands Statistics Institute (ISTAC) has added more than 500 semantic assets and more than 2100 statistical cubes to its catalogue.

This vast amount of information represents decades of work by the ISTAC in standardisation and adaptation to leading international standards, enabling better sharing of data and metadata between national and international information producers and consumers.

The increase in datasets not only quantitatively improves the directory at datos.canarias.es and datos.gob.es, but also broadens the uses it offers due to the type of information added.

New semantic assets

Semantic resources, unlike statistical resources, do not present measurable numerical data, such as unemployment data or GDP, but provide homogeneity and reproducibility.

These assets represent a step forward in interoperability, as provided for both at national level with the National Interoperability Scheme (Article 10, semantic assets) and at European level with the European Interoperability Framework (Article 3.4, semantic interoperability). Both documents outline the need and value of using common resources for information exchange, a maxim that is being implemented transversally across the Canary Islands Government. These semantic assets are already being used in the forms of the electronic headquarters, and it is expected that in the future they will be the semantic assets used by the entire Canary Islands Government.

Specifically in this data load there are 4 types of semantic assets:

- Classifications (408 uploaded): Lists of codes that are used to represent the concepts associated with variables or categories that are part of standardised datasets, such as the National Classification of Economic Activities (CNAE), country classifications such as M49, or gender and age classifications.

- Concept schemes (115 uploaded): Concepts are the definitions of the variables into which the data are disaggregated and which are finally represented by one or more classifications. They can be cross-sectional, such as "Age", "Place of birth" and "Business activity", or specific to each statistical operation, such as "Type of household chores" or "Consumer confidence index".

- Topic schemes (2 uploaded): They incorporate lists of topics that may correspond to the thematic classification of statistical operations or to the INSPIRE topic register.

- Organisation schemes (6 uploaded): These include schemes of entities such as organisational units, universities, maintaining agencies or data providers.

All these types of resources are part of the international SDMX (Statistical Data and Metadata Exchange) standard, which is used for the exchange of statistical data and metadata. SDMX provides a common format and structure to facilitate interoperability between different organisations producing, publishing and using statistical data.
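The relationship between concepts and classifications described in the list above can be sketched as a small data structure. The class names, identifiers and codes below are illustrative assumptions; they are not taken from the ISTAC catalogue or from the SDMX information model itself.

```python
from dataclasses import dataclass, field


@dataclass
class Classification:
    """A code list: maps each code to a human-readable label."""
    identifier: str
    codes: dict[str, str]


@dataclass
class Concept:
    """A variable definition, represented by one or more classifications."""
    identifier: str
    name: str
    classifications: list[Classification] = field(default_factory=list)

    def is_valid(self, code: str) -> bool:
        """Check whether a code belongs to any classification linked to the concept."""
        return any(code in item.codes for item in self.classifications)


# Illustrative identifiers and codes only.
age_groups = Classification(
    "AGE_GROUPS_EXAMPLE",
    {"Y0-14": "0 to 14 years", "Y15-64": "15 to 64 years", "Y65+": "65 years and over"},
)
age = Concept("AGE", "Age", [age_groups])

print(age.is_valid("Y15-64"))  # True
print(age.is_valid("Y200"))    # False
```

Sharing the same classifications across datasets is what makes values comparable: two tables that both use the same age codes can be combined without manual recoding.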

From September 25th to 27th, Madrid will be hosting the fourth edition of the Open Science Fair, an international event on open science that will bring together experts from all over the world with the aim of identifying common practices, bringing positions closer together and, in short, improving synergies between the different communities and services working in this field.

This event is an initiative of OpenAIRE, an organisation that aims to create more open and transparent academic communication. This edition of the Open Science Fair is co-organised by the Spanish Foundation for Science and Technology (FECYT), which depends on the Ministry of Science and Innovation, and is one of the events sponsored by the Spanish Presidency of the Council of the European Union.

The current state of open science

Science is no longer the preserve of scientists. Researchers, institutions, funding agencies and scientific publishers are part of an ecosystem that carries out work with a growing resonance with the public and a greater impact on society. In addition, it is becoming increasingly common for research groups to open up to collaborations with institutions around the world. Key to making this collaboration possible is the availability of data that is open and available for reuse in research.

However, to enable international and interdisciplinary research to move forward, it is necessary to ensure interoperability between communities and services, while maintaining the capacity to support different workflows and knowledge systems.

The objectives and programme of the Open Science Fair

In this context, the Open Science Fair 2023 is being held with the aim of bringing together and empowering open science communities and services, identifying common practices related to open science in order to analyse the most suitable synergies and, ultimately, sharing experiences developed in different parts of the world.

The event has an interesting programme that includes keynote speeches from relevant speakers, round tables, workshops, and training sessions, as well as a demonstration session. Attendees will be able to share experiences and exchange views, which will help define the most efficient ways for communities to work together and draw up tailor-made roadmaps for the implementation of open science.

This fourth edition of the Open Science Fair will focus on 'Open Science for Future Generations' and the main themes it will address, as highlighted on the event's website, are:

- Progress and reform of research evaluation and open science. Connections, barriers and the way forward.

- Impact of artificial intelligence on open science and impact of open science on artificial intelligence.

- Innovation and disruption in academic publishing.

- FAIR data, software and hardware.

- Openness in research and education.

- Public engagement and citizen science.

Open science and artificial intelligence

Artificial intelligence is gaining momentum in academia through data analysis. By analysing large amounts of data, researchers can identify patterns and correlations that would be difficult to reach through other methods. The use of open data in open science opens up an exciting and promising future, but it is important to ensure that the benefits of artificial intelligence are available to all in a fair and equitable way.

Given its high relevance, the Open Science Fair will host two keynote lectures and a panel discussion on 'AI with and for open science'. The combination of the benefits of open data and artificial intelligence is one of the areas with the greatest potential for significant scientific breakthroughs and, as such, will have its place at the event. The sessions will look from three perspectives (ethics, infrastructure and algorithms) at how artificial intelligence supports researchers and what the key ingredients are for open infrastructures to make this happen.

The programme of the Open Science Fair 2023 also includes the presentation of a demo of a tool for mapping the research activities of the European University of Technology EUt+ by leveraging open data and natural language processing. This project includes the development of a set of data-driven tools. Demo attendees will be able to see the developed platform that integrates data from public repositories, such as European research and innovation projects from CORDIS, patents from the European Patent Office database and scientific publications from OpenAIRE. National and regional project data have also been collected from different repositories, processed and made publicly available.

These are just some of the events that will take place within the Open Science Fair, but the full programme includes a wide range of events to explore multidisciplinary knowledge and research evaluation.

Although registration for the event is now closed, you can keep up to date with all the latest news through the hashtag #OSFAIR2023 on Twitter, LinkedIn and Facebook, as well as on the event's website.

In addition, on the website of datos.gob.es and on our social networks you can keep up to date on the most important events in the field of open data, such as those that will take place during this autumn.

This free software application offers a map with all the trees in the city of Barcelona geolocated by GPS. The user can access in-depth information on the subject. For example, the program identifies the number of trees in each street, their condition and even the species.

The application's developer, Pedro López Cabanillas, has used datasets from Barcelona's open data portal (Open Data Barcelona) and states, in his blog, that it can be useful for botany students or "curious users". The Barcelona Trees application is now in its third beta version.

The program uses the Qt framework and the C++ and QML languages, and can be built (with a suitable modern compiler) for the most common targets: the Windows, macOS, Linux and Android operating systems.

Gaia-X represents an innovative paradigm for linking data more closely to the technological infrastructure underneath, so as to ensure the transparency, origin and functioning of these resources. This model allows us to deploy a sovereign and transparent data economy, which respects European fundamental rights, and which in Spain will take shape around the sectoral data spaces (C12.I1 and C14.I2 of the Recovery, Transformation and Resilience Plan). These data spaces will be aligned with the European regulatory framework, as well as with governance and instruments designed to ensure interoperability, and on which to articulate the sought-after single data market.

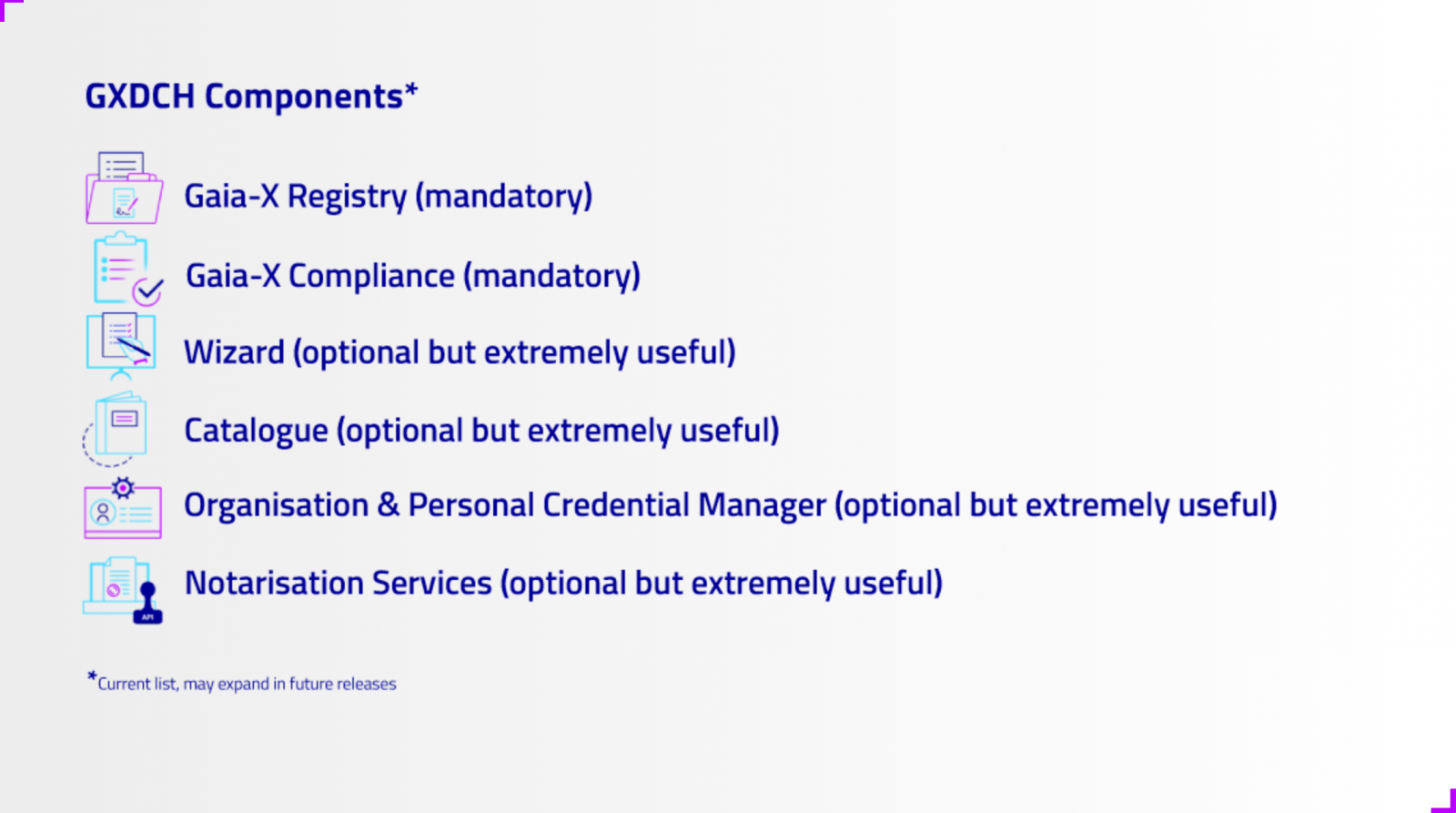

In this sense, Gaia-X interoperability nodes, or Gaia-X Digital Clearing House (GXDCH), aim to offer automatic validation services of interoperability rules to developers and participants of data spaces. The creation of such nodes was announced at the Gaia-X Summit 2022 in Paris last November. The Gaia-X architecture, promoted by the Gaia-X European Association for Data & Cloud AISBL, has established itself as a promising technological alternative for the creation of open and transparent ecosystems of data sets and services.

These ecosystems, federated by nature, will serve to develop the data economy at scale. But in order to do so, a set of minimum rules must be complied with to ensure interoperability between participants. Compliance with these rules is precisely the function of the GXDCH, serving as an "anchor" to deploy certified market services. Therefore, the creation of such a node in Spain is a crucial element for the deployment of federated data spaces at national level, which will stimulate development and innovation around data in an environment of respect for data sovereignty, privacy, transparency and fair competition.

The GXDCH is defined as a node where operational services of an ecosystem compliant with the Gaia-X interoperability rules are provided. "Operational services" should be understood as services that are necessary for the operation of a data space, but are not in themselves data sharing services, data exploitation applications or cloud infrastructures. Gaia-X defines six operational services, of which at least two are mandatory for the nodes hosting a GXDCH:

Mandatory services

- Gaia-X Registry: Defined as an immutable, non-repudiable, distributed database with code execution capabilities. Typically it would be a blockchain infrastructure supporting a decentralised identity service ('Self Sovereign Identity') in which, among others, the list of Trust Anchors or other data necessary for the operation of identity management in Gaia-X is stored.

- Gaia-X Compliance Service: Belongs to the so-called Gaia-X Federation Services and its function is to verify compliance with the minimum interoperability rules defined by the Gaia-X Association (e.g. the Trust Framework).

Optional services

- Self-Descriptions (SDs) or Wizard Edition Service: SDs are verifiable credentials according to the standard defined by the W3C by means of which both the participants of a Gaia-X ecosystem and the products made available by the providers describe themselves. The aforementioned compliance service consists of validating that the SDs comply with the interoperability standards. The Wizard is a convenience service for the creation of Self-Descriptions according to pre-defined schemas.

- Catalogue: Storage service of the service offer available in the ecosystem for consultation.

- e-Wallet: For the management of verifiable credentials (SDs) by participants in a system based on distributed identities.

- Notary Service: Service for issuing verifiable credentials signed by accreditation authorities (Trust Anchors).

What is the Gaia-X Compliance Service?

The Gaia-X Compliance Service belongs to the so-called Gaia-X Federation Services and its function is to verify compliance with the minimum interoperability rules defined by the Gaia-X Association. Gaia-X calls these minimum interoperability rules the Trust Framework. It should be noted that the establishment of the Trust Framework is one of the differentiating contributions of the Gaia-X technology framework compared to other solutions on the market. But the objective is not just to establish interoperability standards, but to create a service that is operable and, as far as possible, automated, that validates compliance with the Trust Framework. This service is the Gaia-X Compliance Service.

The key element of these rules is the so-called "Self-Descriptions" (SDs). SDs are verifiable credentials according to the standard defined by the W3C by which both the participants of a data space and the products made available by the providers describe themselves. The Gaia-X Compliance Service validates compliance with the Trust Framework by checking the SDs from the following points of view:

- Format and syntax of the SDs

- Validation of the SDs schemas (vocabulary and ontology)

- Validation of the cryptography of the signatures of the issuers of the SDs

- Attribute consistency

- Attribute value veracity.
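Only the first of these checks, format and syntax, lends itself to a short illustration. The sketch below assumes that an SD arrives as a JSON document and uses property names from the W3C Verifiable Credentials Data Model 1.1; it is not Gaia-X code, and it does not attempt the schema, signature, consistency or veracity checks.

```python
import json

# Property names assumed from the W3C Verifiable Credentials Data Model 1.1.
REQUIRED_PROPERTIES = (
    "@context",
    "type",
    "issuer",
    "issuanceDate",
    "credentialSubject",
    "proof",
)


def syntax_problems(document: str) -> list[str]:
    """Return format problems; an empty list means this first check passed."""
    try:
        credential = json.loads(document)
    except json.JSONDecodeError as error:
        return [f"not valid JSON: {error.msg}"]
    if not isinstance(credential, dict):
        return ["top-level value must be a JSON object"]
    return [
        f"missing property: {name}"
        for name in REQUIRED_PROPERTIES
        if name not in credential
    ]


# Placeholder credential with made-up values and no proof section.
unsigned = json.dumps({
    "@context": ["https://www.w3.org/2018/credentials/v1"],
    "type": ["VerifiableCredential"],
    "issuer": "did:example:provider",
    "issuanceDate": "2023-01-01T00:00:00Z",
    "credentialSubject": {"id": "did:example:service"},
})
print(syntax_problems(unsigned))  # ['missing property: proof']
```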

Once the Self-Descriptions have been validated, the compliance service operator issues a verifiable credential that attests to compliance with interoperability standards, providing confidence to ecosystem participants. Gaia-X AISBL provides the necessary code to implement the Compliance Service and authorises the provision of the service to trusted entities, but does not directly operate the service and therefore requires the existence of partners to carry out this task.

Data anonymization comprises the methodology and the set of best practices and techniques that reduce the risk of identifying individuals, ensure the irreversibility of the anonymization process, and allow the exploitation of anonymized data to be audited by monitoring who uses them, when, and for what purpose.

This process is essential, both for open data and for data in general, to protect people's privacy and to guarantee regulatory compliance and fundamental rights.

The report "Introduction to Data Anonymization: Techniques and Practical Cases," prepared by Jose Barranquero, defines the key concepts of an anonymization process, including terms, methodological principles, types of risks, and existing techniques.

The objective of the report is to provide a sufficient and concise introduction, mainly aimed at data publishers who need to ensure the privacy of their data. It is not intended to be a comprehensive guide but rather a first approach to understand the risks and available techniques, as well as the inherent complexity of any data anonymization process.

What techniques are included in the report?

After an introduction in which the most relevant terms and basic anonymization principles are defined, the report discusses three general approaches to data anonymization, each of which comprises various techniques:

- Randomization: data treatment, eliminating correlation with the individual, through the addition of noise, permutation, or Differential Privacy.

- Generalization: alteration of scales or orders of magnitude through aggregation-based techniques such as K-Anonymity, L-Diversity, or T-Closeness.

- Pseudonymization: replacement of values with encrypted versions or tokens, usually through hash algorithms, which prevent direct identification of the individual unless combined with additional data, which must be adequately safeguarded.
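To make the generalization approach concrete, the sketch below measures K-Anonymity before and after replacing exact ages with ten-year ranges. It is an independent illustration rather than the code that accompanies the report; the function names and the sample records are made up.

```python
from collections import Counter


def generalise_age(age: int, width: int = 10) -> str:
    """Replace an exact age with a range such as '30-39'."""
    low = (age // width) * width
    return f"{low}-{low + width - 1}"


def k_anonymity(records, quasi_identifiers) -> int:
    """Return k: the size of the smallest group sharing the same quasi-identifier values."""
    groups = Counter(
        tuple(record[column] for column in quasi_identifiers) for record in records
    )
    return min(groups.values()) if groups else 0


# Made-up records: age and postcode act as quasi-identifiers.
records = [
    {"age": 31, "postcode": "08001", "diagnosis": "A"},
    {"age": 34, "postcode": "08001", "diagnosis": "B"},
    {"age": 38, "postcode": "08001", "diagnosis": "A"},
    {"age": 52, "postcode": "08002", "diagnosis": "C"},
    {"age": 57, "postcode": "08002", "diagnosis": "B"},
]
generalised = [{**record, "age": generalise_age(record["age"])} for record in records]

print(k_anonymity(records, ["age", "postcode"]))      # 1
print(k_anonymity(generalised, ["age", "postcode"]))  # 2
```

With exact ages every record is unique (k = 1); after generalization each combination of age range and postcode is shared by at least two records (k = 2), at the cost of less precise data.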

The document describes each of these techniques, as well as the risks they entail, providing recommendations to avoid them. However, the final decision on which technique or set of techniques is most suitable depends on each particular case.

The report concludes with a set of simple practical examples that demonstrate the application of K-Anonymity and pseudonymization techniques through encryption with key erasure. To simplify the execution of the case, users are provided with the code and data used in the exercise, available on GitHub. To follow the exercise, it is recommended to have minimal knowledge of the Python language.
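The idea behind pseudonymization with key erasure can be sketched with Python's standard library. Note that this example swaps in a keyed hash (HMAC-SHA256) in place of the encryption used in the report's exercise, and it is not the code published on GitHub; the function name and the identifiers are made up.

```python
import hashlib
import hmac
import secrets


def pseudonymise(values, key: bytes) -> list[str]:
    """Replace each value with a keyed HMAC-SHA256 token in hexadecimal."""
    return [
        hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()
        for value in values
    ]


# A random key generated for this run only and never stored.
key = secrets.token_bytes(32)

# Made-up identifiers; the first and third belong to the same person.
identifiers = ["12345678A", "87654321B", "12345678A"]
tokens = pseudonymise(identifiers, key)

# Key erasure: without the key the tokens cannot be recomputed from candidate
# identifiers. Deleting the variable only drops the reference; it does not
# securely wipe memory.
del key

print(tokens[0] == tokens[2])  # True: the same person maps to the same token
print(tokens[0] == tokens[1])  # False
```

Because equal inputs still produce equal tokens, records belonging to the same individual remain linkable within the dataset, which preserves analytical value but should be weighed when assessing re-identification risk.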

You can now download the complete report, as well as the executive summary and a summary presentation.

After several months of tests and different types of training, the first massive Artificial Intelligence system in the Spanish language is capable of generating its own texts and summarising existing ones. MarIA is a project that has been promoted by the Secretary of State for Digitalisation and Artificial Intelligence and developed by the National Supercomputing Centre, based on the web archives of the National Library of Spain (BNE).

This is a very important step forward in this field, as it is the first artificial intelligence system expert in understanding and writing in Spanish. As part of the Language Technology Plan, this tool aims to contribute to the development of a digital economy in Spanish, thanks to the potential that developers can find in it.

The challenge of creating the language assistants of the future

MarIA-style language models are the cornerstone of the development of the natural language processing, machine translation and conversational systems that are so necessary to understand and automatically replicate language. MarIA is an artificial intelligence system made up of deep neural networks that have been trained to acquire an understanding of the language, its lexicon and its mechanisms for expressing meaning and writing at an expert level.

Thanks to this groundwork, developers can create language-related tools capable of classifying documents, making corrections or developing translation tools.

The first version of MarIA was developed with RoBERTa, a technology that creates language models of the "encoder" type, capable of generating an interpretation that can be used to categorise documents, find semantic similarities in different texts or detect the sentiments expressed in them.

The latest version of MarIA, in turn, has been developed with GPT-2, a more advanced technology that creates generative decoder models and adds features to the system. Thanks to these decoder models, the latest version of MarIA is able to generate new text from a previous example, which is very useful for summarising, simplifying large amounts of information, generating questions and answers and even holding a dialogue.

Advances such as the above make MarIA a tool that, with training adapted to specific tasks, can be of great use to developers, companies and public administrations. Along these lines, similar models that have been developed in English are used to generate text suggestions in writing applications, summarise contracts or search for specific information in large text databases in order to subsequently relate it to other relevant information.

In other words, in addition to writing texts from headlines or words, MarIA can understand not only abstract concepts, but also their context.

More than 135 billion words at the service of artificial intelligence

To be precise, MarIA has been trained with 135,733,450,668 words from millions of web pages collected by the National Library, which occupy a total of 570 Gigabytes of information. The MareNostrum supercomputer at the National Supercomputing Centre in Barcelona was used for the training, and a computing power of 9.7 trillion operations (969 exaflops) was required.

Bearing in mind that one of the first steps in designing a language model is to build a corpus of words and phrases that serves as a database to train the system itself, in the case of MarIA, it was necessary to carry out a screening to eliminate all the fragments of text that were not "well-formed language" (numerical elements, graphics, sentences that do not end, erroneous encodings, etc.) and thus train the AI correctly.
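The kind of screening described above can be sketched with a few simple heuristics. The following Python example is only an illustration under stated assumptions: the rules, the 0.6 threshold and the sample fragments are invented here and are not the filters actually used to build the MarIA corpus.

```python
def is_well_formed(fragment: str) -> bool:
    """Heuristic check that a text fragment looks like well-formed language."""
    text = fragment.strip()
    if not text:
        return False
    # Erroneous encodings: the Unicode replacement character, or "Ã",
    # which typically appears when UTF-8 Spanish text is decoded as Latin-1.
    if "\ufffd" in text or "Ã" in text:
        return False
    # Sentences that do not end: require closing punctuation.
    if text[-1] not in ".!?\"»":
        return False
    # Numerical elements: require that most characters are letters
    # (the 0.6 threshold is an arbitrary assumption).
    letters = sum(ch.isalpha() for ch in text)
    return letters / len(text) >= 0.6


# Hypothetical fragments, one per problem mentioned in the text.
samples = [
    "La biblioteca conserva millones de documentos.",
    "1234 5678 90.12 33.",
    "Esta frase se queda a medias y no",
    "La informaciÃ³n estÃ¡ mal codificada.",
]
kept = [s for s in samples if is_well_formed(s)]
print(kept)
```

Only the first fragment passes; the others are dropped for being mostly numeric, unfinished and badly encoded, respectively.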

Due to the volume of information it handles, MarIA already places Spanish third among the languages with massive open-access artificial intelligence models for understanding and writing. Only the language models developed for English and Mandarin are ahead of it. This has been possible mainly for two reasons: on the one hand, the high level of digitisation of the National Library's heritage and, on the other, the existence of a National Supercomputing Centre with supercomputers such as the MareNostrum 4.

The role of BNE datasets

Since it launched its own open data portal (datos.bne.es) in 2014, the BNE has been committed to bringing the data it holds and has in its custody closer to users: data on the works it preserves, but also on authors, controlled vocabularies of subjects and geographical terms, among others.

In recent years, the educational platform BNEscolar has also been developed, which seeks to offer digital content from the Hispánica Digital Library's documentary collection that may be of interest to the educational community.

Likewise, in order to comply with international standards of description and interoperability, the BNE data are identified by means of URIs and linked conceptual models through semantic technologies, and are offered in open and reusable formats. They are also highly standardised.

Next steps

Thus, and with the aim of perfecting and expanding the possibilities of use of MarIA, it is intended that the current version will give way to others specialised in more specific areas of knowledge. Given that it is an artificial intelligence system dedicated to understanding and generating text, it is essential for it to be able to cope with lexicons and specialised sets of information.

To this end, the PlanTL will continue to expand MarIA to adapt to new technological developments in natural language processing (more complex models than the GPT-2 now implemented, trained with larger amounts of data) and will seek ways to create workspaces to facilitate the use of MarIA by companies and research groups.

Content prepared by the datos.gob.es team.

The rise of smart cities, the distribution of resources during pandemics and the fight against natural disasters have awakened interest in geographic data. In the same way that open data in the healthcare field helps to implement social improvements related to the diagnosis of diseases or the reduction of waiting lists, Geographic Information Systems help to streamline and simplify some of the challenges of the future, with the aim of making them more environmentally sustainable, more energy efficient and more livable for citizens.

As in other fields, professionals dedicated to optimizing Geographic Information Systems (GIS) also build their own working groups, associations and training communities. GIS communities are groups of volunteers interested in using geographic information to maximize the social benefits that this type of data can bring in collective terms.

Thus, by addressing the different approaches offered by the field of geographic information, data communities work on the development of applications, the analysis of geospatial information, the generation of cartographies and the creation of informative content, among others.

In the following lines, we analyze step by step the commitment and objectives of three examples of GIS communities that are currently active.

Gis and Beers

What is it and what is its objective?

Gis and Beers is an association focused on the dissemination, analysis and design of tools linked to geographic information and cartographic data. Specialized in sustainability and environment, they use open data to propose and disseminate solutions that seek to design a sustainable and nature-friendly environment.

What functions does it perform?

In addition to disseminating specialized content such as reports and data analysis, the members of Gis and Beers offer training resources dedicated to facilitating the understanding of geographic information systems from an environmental perspective. It is common to read articles on their website focused on new environmental data or watch tutorials on how to access open data platforms specialized in the environment or the tools available for their management. Likewise, every time they detect the publication of a new open data catalog, they share on their website the necessary instructions for downloading the data, managing it and representing it cartographically.

Next steps

In line with the environmental awareness that marks the project, Gis and Beers is devoting more and more effort to strengthening two key pillars for its content: raising awareness of the importance of citizen science (a collaborative movement that provides data observed by citizens) and promoting access to data that facilitate modeling without previously adapting them to cartographic analysis needs.

The role of open data

Most of the open data they use comes from state sources such as the IGN, Aemet or INE, although they also draw on other options such as those offered by Google Earth Engine and Google Public Data.

How to contact them?

If you are interested in learning more about the work of this community or need to contact Gis and Beers, you can visit their website or write directly to this email account.

Geovoluntarios

What is it and what is its objective?

It is a non-profit organization formed by professionals experienced in the use and remote application of geospatial technology. Its objective is to cooperate with other organizations that provide support in emergency situations and in projects aligned with the Sustainable Development Goals.

The association's main objectives are:

- Provide help to organizations in any of the phases of an emergency, prioritizing non-profit, life-saving organizations or those supporting the third sector, such as the Red Cross, Civil Protection and humanitarian organizations.

- Encourage digital volunteering among people with knowledge or interest in geospatial technologies and working with geolocated data.

- Find ways to support organizations working towards the Sustainable Development Goals (SDGs).

- Provide geospatial tools and geolocated data to non-profit projects that would otherwise not be technically or economically feasible.

What functions does it perform?

The professional experience accumulated by the members of Geovoluntarios allows them to offer support in tasks related to the analysis of geographic data, the design of models or the monitoring of special emergency situations. The most common functions carried out as an NGO can be summarized as follows:

- Train volunteers and organizations, and provide them with resources, in all the aspects needed to deliver aid with guarantees: geographic information systems, spatial analysis, GDPR, security, etc.

- Facilitate the creation of temporary work teams to respond to requests for assistance received and that are in line with the organization's goals.

- Create working groups that maintain data that serve a general purpose.

- Seek collaboration agreements with other entities, organize and participate in events and carry out campaigns to promote digital volunteering.

More specifically, among all the projects in which Geovoluntarios has participated, two initiatives in which its members were particularly involved are worth mentioning. The first is the Covid data project, in which a community of digital volunteers committed to finding and analyzing reliable data was created to provide quality information on the situation in each of Spain's autonomous communities. The second is Reactiva Madrid, an event organized by the Madrid City Council and Esri Spain to identify and develop work that, through citizen participation, would help to prevent and/or solve problems related to the COVID-19 pandemic in the areas of the economy, mobility and society.

Next steps

After two years focused on solving part of the problems generated by the COVID-19 crisis, Geovoluntarios continues to focus on collaborating with organizations that assist the most vulnerable people in emergency situations, without forgetting its commitment to the Sustainable Development Goals.

Thus, one of the projects in which the volunteers are most active is the implementation and improvement of GeoObs, an app to geolocate different observation projects on: dirty spots, fire danger, dangerous areas for bikers, improving a city, safe cycling, etc.

The role of open data

For an NGO like Geovoluntarios, open data is essential both to carry out its solidarity work with other associations and to design its own services and applications. Hence, these resources are part of the new functionalities on which the association wants to focus.

So much so that data collection is the starting point for the pilot projects currently under the Geovoluntarios umbrella. For instance, the GeoObs application mentioned above shows how generating data by observation can enrich the available open data catalogs.
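One common way to publish geolocated observations as open data is the GeoJSON format (RFC 7946). The Python sketch below shows how a few observations could be turned into a GeoJSON feature collection. It is purely illustrative: the field names, categories and sample observations are hypothetical and do not describe the actual data model of GeoObs.

```python
import json


def observation_to_feature(lon, lat, category, note):
    """Build a GeoJSON Feature for a single citizen observation."""
    if not (-180 <= lon <= 180 and -90 <= lat <= 90):
        raise ValueError("coordinates out of range")
    return {
        "type": "Feature",
        # GeoJSON orders coordinates as [longitude, latitude].
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": {"category": category, "note": note},
    }


# Made-up observations: (longitude, latitude, category, note).
observations = [
    (-3.7038, 40.4168, "dirty_spot", "Overflowing bins"),
    (2.1734, 41.3851, "safe_cycling", "New segregated bike lane"),
]

collection = {
    "type": "FeatureCollection",
    "features": [observation_to_feature(*o) for o in observations],
}
geojson_text = json.dumps(collection, indent=2)
print(geojson_text)
```

A file in this format can be loaded directly by most GIS tools, which makes it a convenient candidate for publication in an open data catalog.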

GIS Community

What is it and what is its objective?

GIS Community is a virtual collective that brings together professionals in the field of geographic data and related information systems. Founded in 2009, it disseminates its work through social networks such as Facebook, Twitter or Instagram, where it also shares news and relevant information on geotechnology, geoprocessing or land use planning, among other topics.

Its objective is to help expand the informative and relevant content available to the geographic data community, which had little online presence when the project began.

What functions does it perform?

In line with the objectives mentioned above, the work of GIS Community focuses on sharing and generating content related to Geographic Information Systems. Given the diversity of fields and sectors within this area, its members try to balance the content of their publications to bring together both those who seek information and those who provide opportunities. For this reason it is possible to find news about events, training, research projects, entrepreneurs or literature, among many others.

Next steps

Aware of its weight as a community within the field of geographic data, GIS Community plans to strengthen four axes that directly affect the work of the project: organizing lectures and webinars, contacting organizations and institutions capable of funding projects in the GIS area, seeking entities that provide open geospatial information and, finally, getting part of the private sector to participate financially in the education and training of GIS professionals.

The role of open data

This is a community that is closely linked to the universe of open data, because it shares content that can be used, supplemented and redistributed freely by users. In fact, according to its own members, there is an increasing acceptance and preference for this trend, with community collaborators and their own projects driving the debate and interest in using open data in all active phases of their tasks or activities.

How to contact them?

As in the previous cases, if you are interested in contacting GIS Community (Comunidad SIG) you can do so through their Facebook page, Twitter or Instagram, or by email.

Communities like Gis and Beers, GIS Community or Geovoluntarios are just a small sample of the work that the GIS collective is currently carrying out. If you are part of a data community in this or any other field, or you know of communities whose work may be of interest to datos.gob.es, do not hesitate to send us an email at dinamizacion@datos.gob.es.

Geo Developers

What is it and what is its purpose?

Geodevelopers is a community whose objective is to bring together developers and surveyors in the field of geographic data. The main function of this community is to share different professional experiences related to geographic data and, for this purpose, they organize talks where everyone can share their experience and knowledge with the rest.

Through their YouTube channel it is possible to access the training sessions and talks held to date, as well as to find out about upcoming ones.

The role of open data

Although this is not a community focused on the reuse of open data as such, they use it to develop some projects and extract new learnings that they then incorporate into their workflows.

Next steps and contact

The main objective for the future of Geodevelopers is to grow the community in order to continue sharing experiences and knowledge with the rest of the GIS stakeholders. If you want to get in touch and follow the evolution of this project you can do it through its Twitter profile.

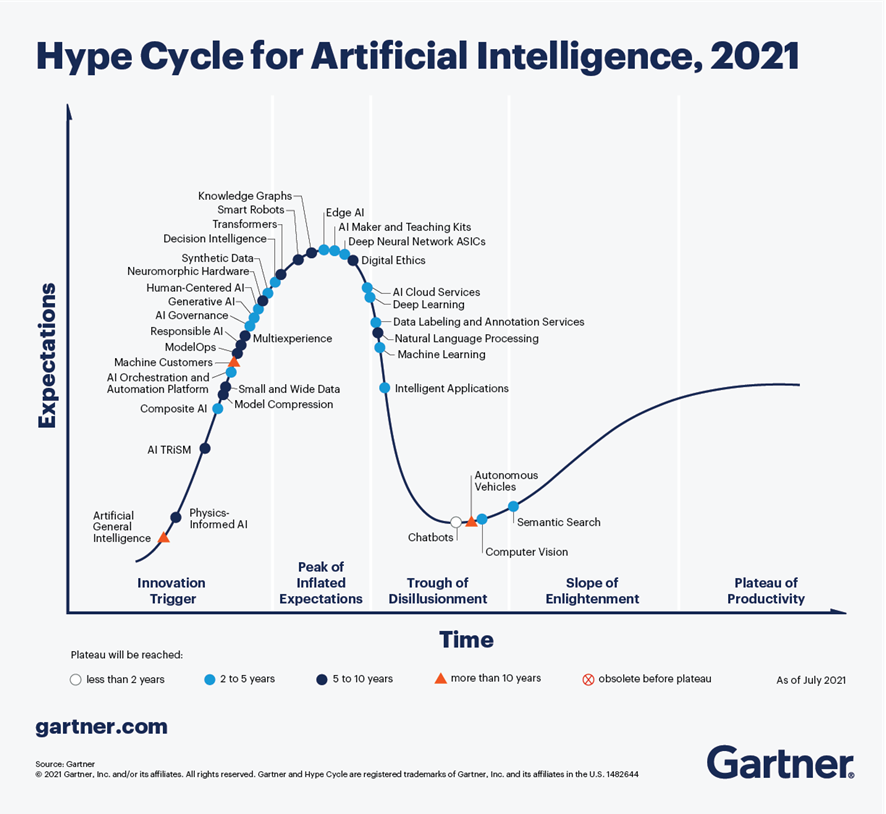

According to Gartner's latest analysis of Artificial Intelligence trends, conducted in September 2021, chatbots are one of the technologies closest to delivering effective productivity in less than 2 years. Figure 1, extracted from this report, shows four technologies that are well past the peak of inflated expectations and are already starting to move out of the trough of disillusionment towards greater maturity and stability: chatbots, semantic search, machine vision and autonomous vehicles.

Figure 1. Trends in AI for the coming years.

In the specific case of chatbots, there are great expectations for productivity in the coming years thanks to the maturity of the different platforms available, both in Cloud Computing options and in open source projects, especially RASA or Xatkit. Currently it is relatively easy to develop a chatbot or virtual assistant without AI knowledge, using these platforms.

How does a chatbot work?

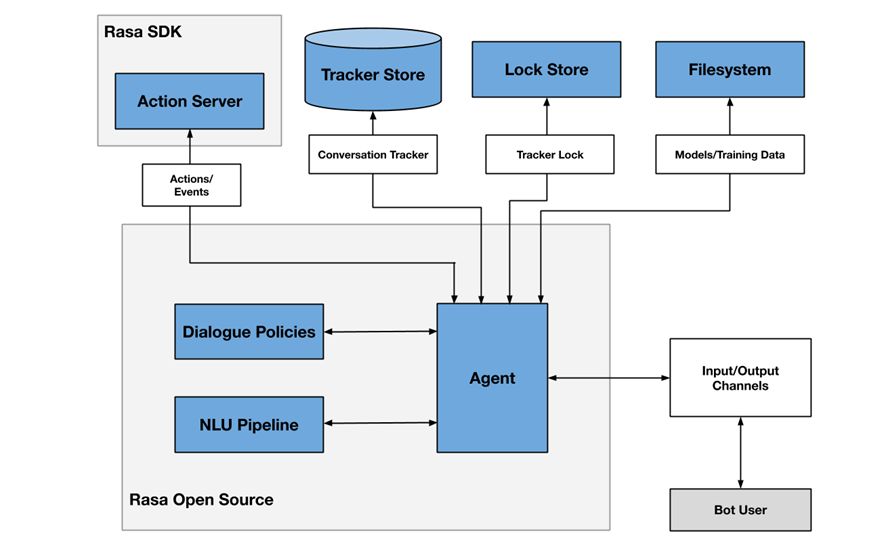

As an example, Figure 2 shows a diagram of the different components that a chatbot usually includes, in this case focused on the architecture of the RASA project.

Figure 2. RASA project architecture.

One of the main components is the agent module, which acts as a controller of the data flow and is normally the system interface with the different input/output channels offered to users, such as chat applications, social networks, web or mobile applications, etc.

The NLU (Natural Language Understanding) module is responsible for identifying the user's intention (what they want to consult or do), extracting entities (what they are talking about) and generating the response. It is considered a pipeline because it chains several processes of differing complexity, in many cases relying on pre-trained Artificial Intelligence models.

Finally, the dialogue policies module defines the next step in a conversation, based on context and message history. This module is integrated with other subsystems such as the conversation store (tracker store) or the server that processes the actions necessary to respond to the user (action server).
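The interplay between these components can be sketched in a few lines of Python. The example below is a toy illustration and does not use the RASA API: the intents, keywords, topics, action names and responses are all hypothetical, and keyword matching stands in for the trained models a real NLU pipeline would use.

```python
import re
from dataclasses import dataclass, field

# Hypothetical intents, keywords and topics, chosen only for illustration.
INTENT_KEYWORDS = {
    "search_dataset": {"dataset", "data", "find"},
    "greet": {"hello", "hi"},
}
KNOWN_TOPICS = {"tourism", "transport", "agriculture"}


@dataclass
class Tracker:
    """Conversation store: keeps (message, action) pairs for one user."""
    history: list = field(default_factory=list)


def nlu(message: str) -> dict:
    """Toy NLU pipeline: tokenize, pick an intent, extract entities."""
    tokens = set(re.findall(r"[a-z]+", message.lower()))
    intent = next(
        (name for name, words in INTENT_KEYWORDS.items() if tokens & words),
        "fallback",
    )
    return {"intent": intent, "entities": sorted(tokens & KNOWN_TOPICS)}


def policy(parsed: dict, tracker: Tracker) -> str:
    """Dialogue policy: choose the next action from the parse and the history."""
    if parsed["intent"] == "greet":
        return "action_greet"
    if parsed["intent"] == "search_dataset":
        return "action_search" if parsed["entities"] else "action_ask_topic"
    # A bare topic is read as the answer to a previous "which topic?" question.
    asked_topic = bool(tracker.history) and tracker.history[-1][1] == "action_ask_topic"
    if parsed["entities"] and asked_topic:
        return "action_search"
    return "action_fallback"


def run_action(action: str, parsed: dict) -> str:
    """Stand-in for the action server: turn the chosen action into a reply."""
    if action == "action_greet":
        return "Hello! Which open data are you looking for?"
    if action == "action_ask_topic":
        return "Which topic are you interested in?"
    if action == "action_search":
        return f"Searching datasets about {', '.join(parsed['entities'])}."
    return "Sorry, I did not understand."


def agent(message: str, tracker: Tracker) -> str:
    """Agent: controls the flow between NLU, policy, tracker and actions."""
    parsed = nlu(message)
    action = policy(parsed, tracker)
    tracker.history.append((message, action))
    return run_action(action, parsed)


tracker = Tracker()
print(agent("I need a dataset", tracker))
print(agent("agriculture", tracker))
```

The second message contains no intent keyword, yet the policy resolves it as a search because the tracker remembers that the bot had just asked for a topic. This is the role of context and message history described above.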

Chatbots in open data portals as a mechanism to locate data and access information

There are more and more initiatives to empower citizens to consult open data through the use of chatbots, using natural language interfaces, thus increasing the net value offered by such data. The use of chatbots makes it possible to automate data collection based on interaction with the user and to respond in a simple, natural and fluid way, allowing the democratization of the value of open data.

SOM Research Lab (Universitat Oberta de Catalunya) pioneered the application of chatbots to improve citizens' access to open data through the Open Data for All and BODI (Bots to interact with open data - Conversational interfaces to facilitate access to public data) projects. You can find more information about the latter project in this article.

It is also worth mentioning the Aragón Open Data chatbot, from the open data portal of the Government of Aragón, which aims to bring the large amount of available data closer to citizens, so that they can take advantage of its information and value without any technical or knowledge barrier between their query and the existing open data. The domains on which it offers information are:

- General information about Aragon and its territory

- Tourism and travel in Aragon

- Transportation and agriculture

- Technical assistance or frequently asked questions about the information society.

Conclusions

These are just a few examples of the practical use of chatbots in the valorization of open data and their potential in the short term. In the coming years we will see more and more examples of virtual assistants in different scenarios, both in the field of public administrations and in private services, especially focused on improving user service in e-commerce applications and services arising from digital transformation initiatives.

Content prepared by José Barranquero, expert in Data Science and Quantum Computing.

The contents and points of view reflected in this publication are the sole responsibility of the author.