It is possible that our ability to be surprised by new generative artificial intelligence (AI) tools is beginning to wane. The best example is GPT-o1, a new language model with the highest reasoning ability achieved so far, capable of verbalising something resembling its own logical processes, but which did not arouse as much enthusiasm at its launch as might have been expected. In contrast to the previous two years, in recent months we have had less of a sense of disruption and have reacted less strongly to new developments.

One possible reading is that, for now, we do not need more intelligence in the models; we need to see that intelligence land in concrete uses that make our lives easier: how do I use the power of a language model to consume content faster, to learn something new or to move information from one format to another? Beyond the big general-purpose applications, such as ChatGPT or Copilot, there are free and lesser-known tools that help us think better, and offer AI-based capabilities to discover, understand and share knowledge.

Generate podcasts from a file: NotebookLM

NotebookLM's automated podcasts first arrived in Spain in the summer of 2024 and caused a considerable stir, despite not even being available in Spanish. In keeping with Google's style, the system is simple: upload a PDF file as a source and obtain different variations of the content generated by Gemini 2.0 (Google's AI system), such as a summary of the document, a study guide, a timeline or a list of frequently asked questions. In this case, we have used a report on artificial intelligence and democracy published by UNESCO in 2024 as an example.

Figure 1. Different summary options in NotebookLM.

While the study guide is an interesting output, offering a system of questions and answers to memorise and a glossary of terms, the star of NotebookLM is the so-called "audio summary": a completely natural conversational podcast between two synthetic interlocutors who comment in a pleasant way on the content of the PDF.

Figure 2. Audio summary in NotebookLM.

The quality of the content of this podcast still has room for improvement, but it can serve as a first approach to the content of the document, or help us to internalise it more easily from the audio while we take a break from screens, exercise or move around.

The trick: apparently, you can't generate the podcast in Spanish, only in English, but you can try with this prompt: "Make an audio summary of the document in Spanish". It almost always works.

Create visualisations from text: Napkin AI

Napkin offers us something very valuable: creating visualisations, infographics and mind maps from text content. In its free version, the system only asks you to log in with an email address. Once inside, it asks us how we want to enter the text from which we are going to create the visualisations. We can paste it or directly generate with AI an automatic text on any topic.

Figure 3. Starting points in Napkin.ai.

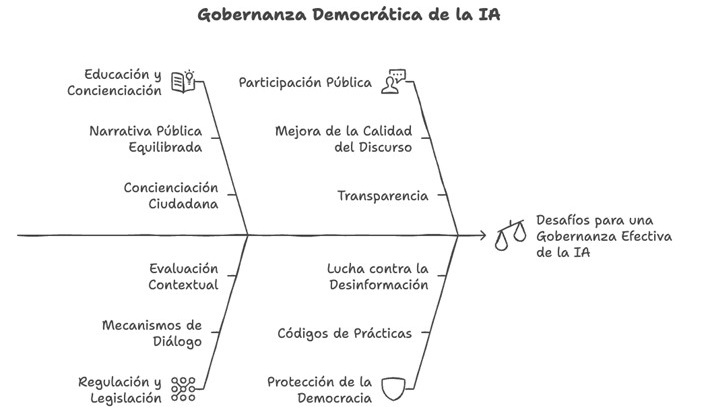

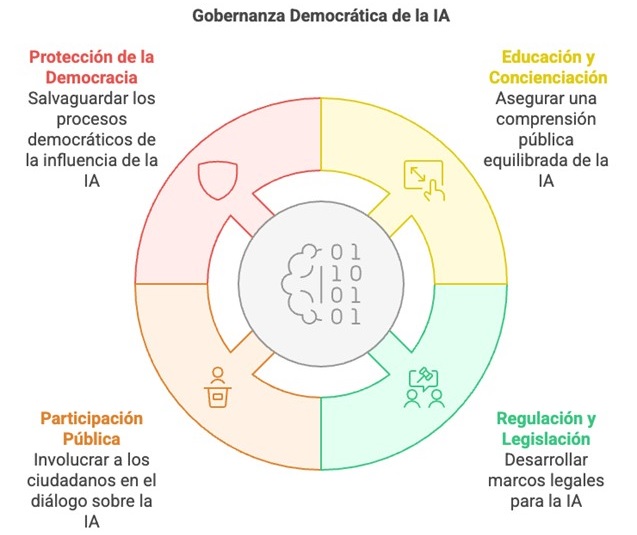

In this case, we will copy and paste an excerpt from the UNESCO report which contains several recommendations for the democratic governance of AI. From the text it receives, Napkin.ai provides illustrations and various types of diagrams. The proposals range from simple ones, with text organised in braces and quadrants, to others illustrated with drawings and icons.

Figure 4. Proposed scheme in Napkin.ai.

Although they are far from the quality of professional infographics, these visualisations can be useful on a personal and learning level, to illustrate a post in networks, to explain concepts internally to our team or to enrich our own content in the educational field.

The trick: if you click on Styles in each scheme proposal, you will find more variations of the scheme with different colours and lines. You can also modify the texts by simply clicking on them once you select a visualisation.

Automatic presentations and slides: Gamma

Of all the content formats that AI is capable of generating, slideshows are probably the least successful. Sometimes the designs are not very elaborate, sometimes the template we want to use is not respected, and almost always the texts are too simple. The particularity of Gamma, and what makes it more practical than other options such as Beautiful.ai, is that we can create a presentation directly from text content that we can paste, generate with AI or upload in a file.

Figure 5. Starting points for Gamma.

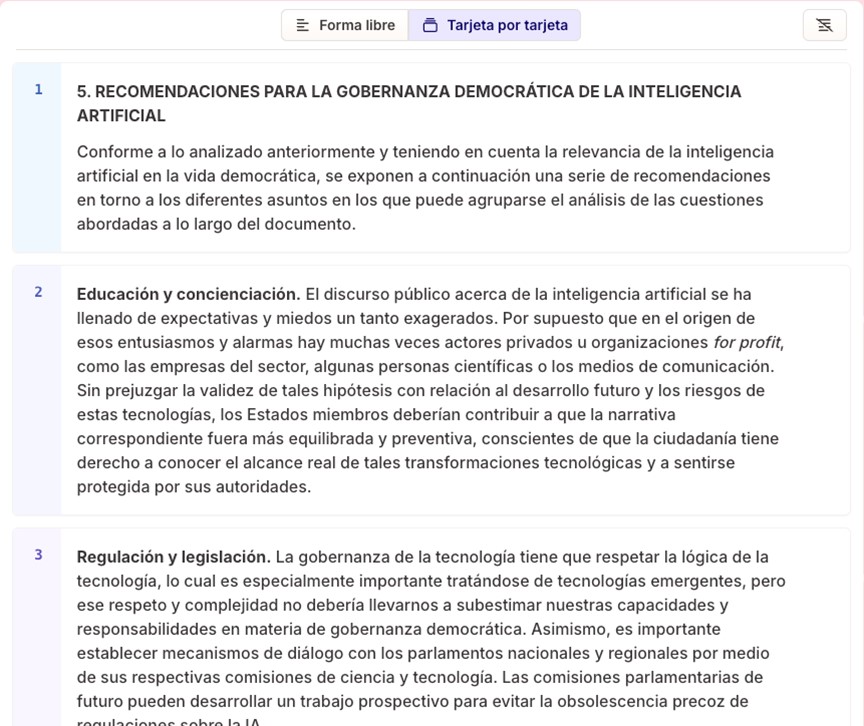

If we paste the same text as in the previous example, about UNESCO's recommendations for democratic governance of AI, in the next step Gamma gives us the choice between "free form" or "card by card". In the first option, the system's AI organises the content into slides while preserving the full meaning of each slide. In the second, it proposes that we divide the text to indicate the content we want on each slide.

Figure 6. Text automatically split into slides by Gamma.

We select the second option, and the text is automatically divided into different blocks that will be our future slides. By clicking on "Continue", we are asked to select a base theme. Finally, by clicking on "Generate", the complete presentation is automatically created.

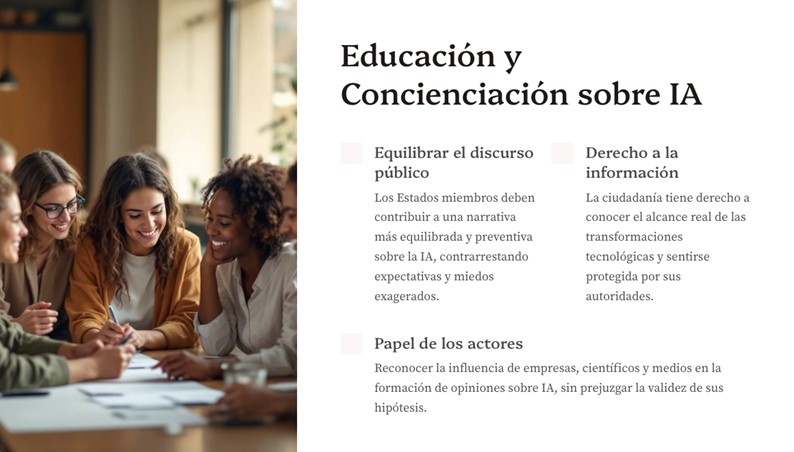

Figure 7. Example of a slide created with Gamma.

Gamma accompanies the slides with AI-created images that keep a certain coherence with the content, and gives us the option of modifying the texts or generating different images. Once ready, we can export the presentation directly to PowerPoint format.

A trick: in the "edit with AI" button on each slide we can ask it to automatically translate it into another language, correct the spelling or even convert the text into a timeline.

Summarise from any format: NoteGPT

The aim of NoteGPT is very clear: to summarise content that we can import from many different sources. We can copy and paste a text, upload a file or an image, or directly extract the information from a link, something very useful and not so common in AI tools. Although the latter option does not always work well, it is one of the few tools that offer it.

Figure 8. Starting points for NoteGPT.

In this case, we introduce the link to a YouTube video containing an interview with Daniel Innerarity on the intersection between artificial intelligence and democratic processes. On the results screen, the first thing you get on the left is the full transcript of the interview, in good quality. We can locate the transcript of a specific fragment of the video, translate it into different languages, copy it or download it, even in an SRT file of time-mapped subtitles.

Figure 9. Example of transcription with minutes in NoteGPT

Meanwhile, on the right, we find the summary of the video with the most important points, sorted and illustrated with emojis. Also in the "AI Chat" button we can interact with a conversational assistant and ask questions about the content.

Figure 10. NoteGPT summary from a YouTube interview.

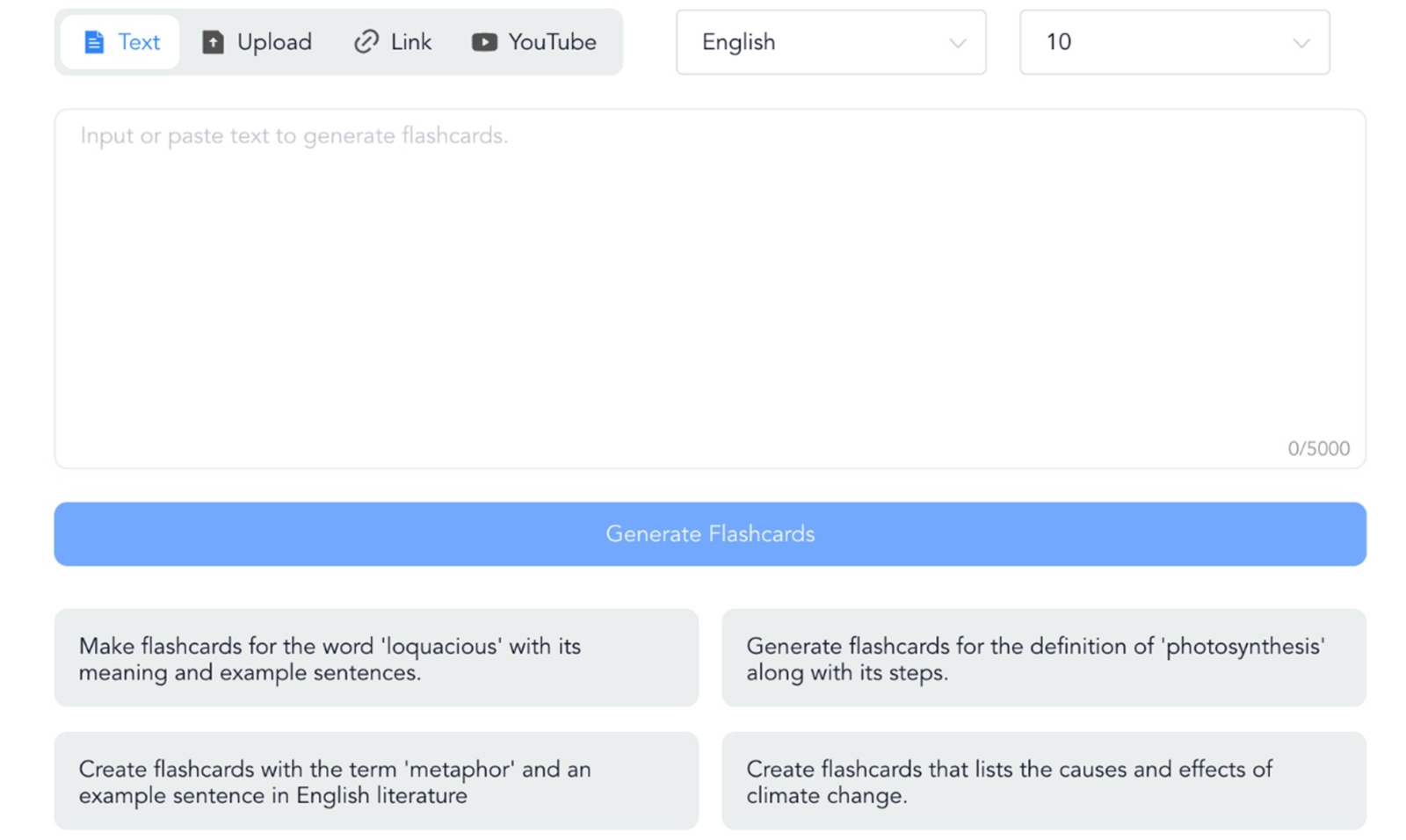

And although this is already very useful, the best thing we can find in NoteGPT are the flashcards, learning cards with questions and answers to internalise the concepts of the video.

Figure 11. NoteGPT learning card (question and answer).

A trick: if the summary only appears in English, try changing the language in the three dots on the right, next to "Summarize", and click "Summarize" again. The summary will appear in the selected language below. In the case of flashcards, to generate them in another language, do not try from the home page, but from "AI flashcards". Under "Create" you can select the language.

Figure 12. Creation of flashcards in NoteGPT.

Create videos about anything: Lumen5

Lumen5 makes it easy to create videos with AI by creating the script and images automatically from text or voice content. The most interesting thing about Lumen5 is the starting point, which can be a text, a document, simply an idea or also an existing audio recording or video.

Figure 13. Lumen5 options.

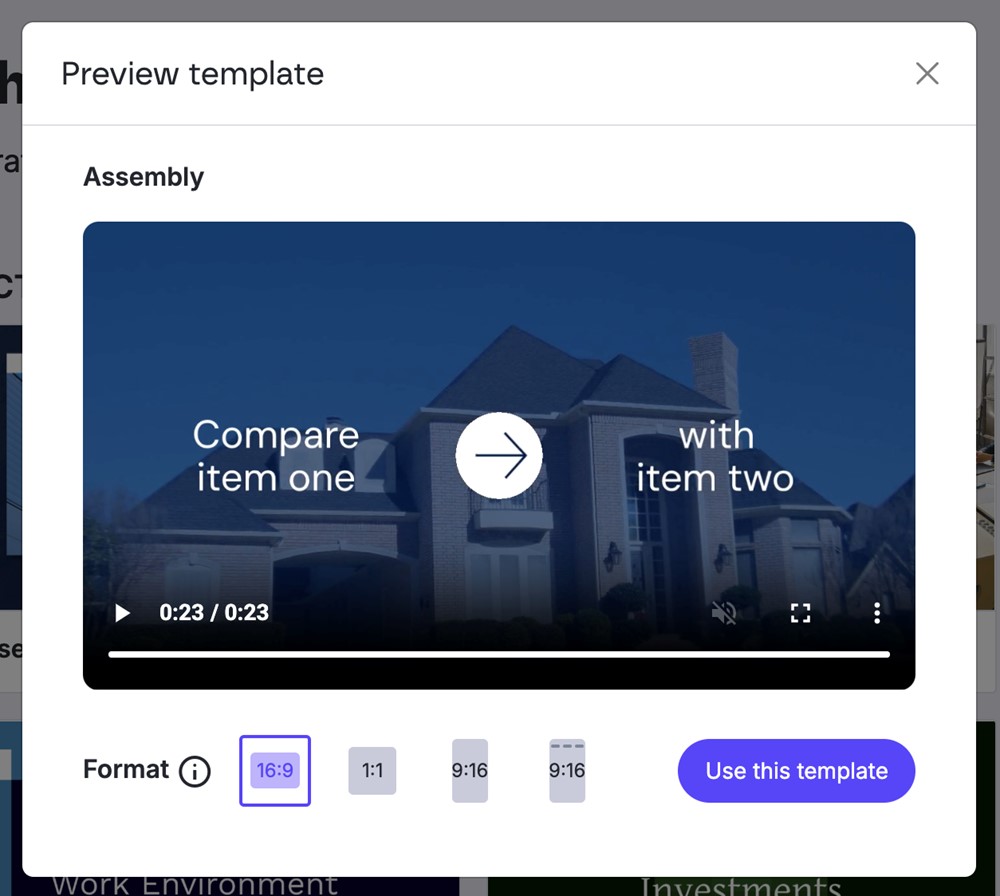

The system allows us, before creating the video and also once created, to change the format from 16:9 (horizontal) to 1:1 (square) or 9:16 (vertical), even with a special 9:16 option for Instagram stories.

Figure 14. Video preview and aspect ratio options.

In this case, we will start from the same text as in previous tools: UNESCO's recommendations for democratic governance of AI. Select the starting option "Text on media", paste it directly into the box and click on "Compose script". The result is a very simple and schematic script, divided into blocks with the basic points of the text, and a very interesting indication: a prediction of the length of the video with that script, approximately 1 minute and 19 seconds.

An important note: the script is not a voice-over, but the text that will be written on the different screens. Once the video is finished, you can translate the whole video into any other language.

Figure 15. Script proposal in Lumen5.

Clicking on "Continue" will take you to the last opportunity to modify the script, where you can add new text blocks or delete existing ones. Once ready, click on "Convert to video" and you will find the storyboard ready for you to modify images, colours or the order of the screens. The video will have background music, which you can also change, and at this point you can record your voice over the music to narrate the script. Without too much effort, this is the end result:

Figure 16. Final result of a video created with Lumen5.

From the wide range of AI-based digital products that have flourished in recent years, perhaps thousands of them, we have gone through just five examples that show us that individual and collaborative knowledge and learning are more accessible than ever before. The ease of converting content from one format to another and the automatic creation of study guides and materials should promote a more informed and agile society, not only through text or images but also through information condensed in files or databases.

It would be a great boost to collective progress if we understood that the value of AI-based systems is not as simple as writing or creating content for us, but to support our reasoning processes, objectify our decision-making and enable us to handle much more information in an efficient and useful way. Harnessing new AI capabilities together with open data initiatives may be key to the next step in the evolution of human thinking.

Content prepared by Carmen Torrijos, expert in AI applied to language and communication. The contents and points of view reflected in this publication are the sole responsibility of the author.

Web API design is a fundamental discipline for the development of applications and services, facilitating the fluid exchange of data between different systems. In the context of open data platforms, APIs are particularly important as they allow users to access the information they need automatically and efficiently, saving costs and resources.

This article explores the essential principles that should guide the creation of effective, secure and sustainable web APIs, based on the principles compiled by the Technical Architecture Group linked to the World Wide Web Consortium (W3C), following ethical and technical standards. Although these principles refer to API design, many are applicable to web development in general.

The aim is to enable developers to ensure that their APIs not only meet technical requirements, but also respect users' privacy and security, promoting a safer and more efficient web for all.

In this post, we will look at some tips for API developers and how they can be put into practice.

Prioritise user needs

When designing an API, it is crucial to follow the hierarchy of needs established by the W3C:

- First, the needs of the end-user.

- Second, the needs of web developers.

- Third, the needs of browser implementers.

- Finally, theoretical purity.

In this way we can drive a user experience that is intuitive, functional and engaging. This hierarchy should guide design decisions, while recognising that sometimes these levels are interrelated: for example, an API that is easier for developers to use often results in a better end-user experience.

Ensure security

Ensuring security when developing an API is crucial to protect both user data and the integrity of the system. An insecure API can be an entry point for attackers seeking to access sensitive information or compromise system functionality. Therefore, when adding new functionalities, we must meet the user's expectations and ensure their security.

In this sense, it is essential to consider factors related to user authentication, data encryption, input validation, request rate management (or Rate Limiting, to limit the number of requests a user can make in a given period and avoid denial of service attacks), etc. It is also necessary to continually monitor API activities and keep detailed logs to quickly detect and respond to any suspicious activity.
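As an illustration of the rate limiting mentioned above, the following is a minimal sketch of a sliding-window limiter, one of several possible strategies. The class name, its parameters and the injectable `clock` are assumptions made for this example; they do not come from the W3C principles or from any particular framework.

```python
import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Allow at most `max_requests` per client within `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._hits = defaultdict(deque)  # client id -> timestamps of accepted requests

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        hits = self._hits[client_id]
        # Forget accepted requests that have fallen out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # an HTTP API would typically answer 429 Too Many Requests
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=60)
    for attempt in range(5):
        print(attempt, limiter.allow("client-a"))
```

Keeping one queue of timestamps per client is simple but holds state in memory; a production service would more likely rely on a shared store or on the limiter built into its gateway.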

Develop a user interface that conveys trust and confidence

It is necessary to consider how new functionalities impact on user interfaces. Interfaces must be designed so that users can trust and verify that the information provided is genuine and has not been falsified. Aspects such as the address bar, security indicators and permission requests should make it clear who you are interacting with and how.

For example, the JavaScript alert function, which displays a modal dialogue box that appears to be part of the browser, is a historical case that illustrates this need. This feature, created in the early days of the web, has often been used to trick users into thinking they are interacting with the browser, when in fact they are interacting with the web page. If this functionality were proposed today, it would probably not be accepted because of these security risks.

Ask for explicit consent from users

In the context of satisfying a user need, a website may use a function that poses a threat. For example, access to the user's geolocation may be helpful in some contexts (such as a mapping application), but it also affects privacy.

In these cases, the user's consent to their use is required. To do this:

- The user must understand what he or she is accessing. If you cannot explain to a typical user what he or she is consenting to in an intelligible way, you will have to reconsider the design of the function.

- The user must be able to effectively grant or refuse such permission. If a permission request is rejected, the website must not be able to do anything the user believes they have refused.

By asking for consent, we can inform the user of what capabilities the website has or does not have, reinforcing their confidence in the security of the site. However, the benefit of a new feature must justify the additional burden on the user in deciding whether or not to grant permission for a feature.

Use identification mechanisms appropriate to the context

It is necessary to be transparent and allow individuals to control their identifiers and the information attached to them that they provide in different contexts on the web.

Functionalities that use or rely on identifiers linked to data about an individual carry privacy risks that may go beyond a single API or system. This includes passively generated data (such as your behaviour on the web) and actively collected data (e.g. through a form). In this regard, it is necessary to understand the context in which they will be used and how they will be integrated with other web functionalities, ensuring that the user can give appropriate consent.

It is advisable to design APIs that collect the minimum amount of data necessary and use short-lived temporary identifiers, unless a persistent identifier is absolutely necessary.
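A short-lived identifier of the kind recommended above could look like the following sketch. The `TemporaryIdStore` class and its time-to-live parameter are hypothetical names chosen for illustration; the only library calls used are `secrets.token_urlsafe` and `time.monotonic` from the Python standard library.

```python
import secrets
import time


class TemporaryIdStore:
    """Issue random identifiers that stop being valid after `ttl_seconds`."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without waiting
        self._expiry = {}  # identifier -> time at which it expires

    def issue(self) -> str:
        identifier = secrets.token_urlsafe(16)  # random, derived from no user data
        self._expiry[identifier] = self.clock() + self.ttl_seconds
        return identifier

    def is_valid(self, identifier: str) -> bool:
        expires_at = self._expiry.get(identifier)
        if expires_at is None:
            return False
        if self.clock() >= expires_at:
            del self._expiry[identifier]  # forget expired identifiers
            return False
        return True
```

Because the identifier is random and expires, it cannot easily be used to link a person's activity across contexts or over long periods, which is the risk that persistent identifiers carry.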

Create functionalities compatible with the full range of devices and platforms

As far as possible, ensure that the web functionalities are operational on different input and output devices, screen sizes, interaction modes, platforms and media, favouring user flexibility.

For example, the 'display: block', 'display: flex' and 'display: grid' layout models in CSS, by default, place content within the available space and without overlaps. This way they work on different screen sizes and allow users to choose their own font and size without causing text overflow.

Add new capabilities with care

Adding new capabilities to the website requires consideration of existing functionality and content, to assess how it will be integrated. Do not assume that a change is possible or impossible without first verifying it.

There are many extension points that allow you to add functionality, but there are changes that cannot be made by simply adding or removing elements, because they could generate errors or affect the user experience. It is therefore necessary to verify the current situation first, as we will see in the next section.

Before removing or changing functionality, understand its current use

It is possible to remove or change functions and capabilities, but the nature and extent of their impact on existing content must first be understood. This may require investigating how current functions are used.

The obligation to understand existing use applies to any function on which the content depends. Web functions are not only defined in the specifications, but also in the way users use them.

Best practice is to prioritise compatibility of new features with existing content and user behaviour. Sometimes a significant amount of content may depend on a particular behaviour. In these situations, it is not advisable to remove or change such behaviour.

Leave the website better than you found it

The way to add new capabilities to a web platform is to improve the platform as a whole, e.g. its security, privacy or accessibility features.

The existence of a defect in a particular part of the platform should not be used as an excuse to add or extend additional functionalities in order to fix it, as this may duplicate problems and diminish the overall quality of the platform. Wherever possible, new web capabilities should be created that improve the overall quality of the platform, mitigating existing shortcomings across the board.

Minimise user data

Functionalities must be designed to be operational with the minimal amount of user input necessary to achieve their objectives. In doing so, we limit the risks of disclosure or misuse.

It is recommended to design APIs so that websites find it easier to request, collect and/or transmit a small amount of data (more granular or specific data), than to work with more generic or massive data. APIs should provide granularity and user controls, in particular if they work on personal data.
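One way to picture this granularity is an endpoint that returns only the fields a caller names explicitly, rather than the whole record. The sketch below assumes a hypothetical `PROFILE` record and a hypothetical `ALLOWED_FIELDS` policy; neither comes from the W3C document.

```python
from typing import Any, Dict, Iterable

# Hypothetical user record held by the service.
PROFILE: Dict[str, Any] = {
    "name": "Ana",
    "email": "ana@example.org",
    "city": "Madrid",
    "postcode": "28001",
    "birth_date": "1990-05-17",
}

# Hypothetical policy: the only fields this API is willing to expose.
ALLOWED_FIELDS = {"name", "city", "postcode"}


def select_fields(record: Dict[str, Any], requested: Iterable[str]) -> Dict[str, Any]:
    """Return only the requested fields, and only if the policy exposes them."""
    requested = set(requested)
    if not requested:
        # Asking for everything is deliberately impossible.
        raise ValueError("callers must name the fields they need")
    forbidden = requested - ALLOWED_FIELDS
    if forbidden:
        raise PermissionError(f"fields not exposed by this API: {sorted(forbidden)}")
    return {key: record[key] for key in requested if key in record}


if __name__ == "__main__":
    print(select_fields(PROFILE, ["city"]))
```

Making the narrow request the easy path, and the broad request an error, nudges client code towards collecting less.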

Other recommendations

The document also provides tips for API design using various programming languages. In this sense, it provides recommendations linked to HTML, CSS, JavaScript, etc. You can read the recommendations here.

In addition, if you are thinking of integrating an API into your open data platform, we recommend reading the Practical guide to publishing Open Data using APIs.

By following these guidelines, you will be able to develop consistent and useful websites for users, allowing them to achieve their objectives in an agile and resource-optimised way.

Over the last decade, the amount of data that organisations generate and need to manage has grown exponentially. With the rise of the cloud, Internet of Things (IoT), edge computing and artificial intelligence (AI), enterprises face the challenge of integrating and governing data from multiple sources and environments. In this context, two key approaches to data management have emerged that seek to solve the problems associated with data centralisation: Data Mesh and Data Fabric. Although these concepts complement each other, each offers a different approach to solving the data challenges of modern organisations.

Why is a data lake not enough?

Many companies have implemented data lakes or centralised data warehouses with dedicated teams as a strategy to drive company data analytics. However, this approach often creates problems as the company scales up, for example:

- Centralised data teams become a bottleneck. These teams cannot respond quickly enough to the variety and volume of questions that arise from different areas of the business.

- Centralisation creates a dependency that limits the autonomy of domain teams, who know their data needs best.

This is where the Data Mesh approach comes in.

Data Mesh: a decentralised, domain-driven approach

Data Mesh breaks the centralisation of data and distributes it across specific domains, allowing each business team (or domain team) to manage and control the data it knows and uses most. This approach is based on four basic principles:

- Domain ownership: instead of a central data team having all the control, each team is responsible for the data it generates. That is, if you are the sales team, you manage the sales data; if you are the marketing team, you manage the marketing data. Nobody knows this data better than the team that uses it on a daily basis.

- Data as a product: this idea reminds us that data is not only for the use of the domain that generates it, but can be useful for the entire enterprise. So each team should think of its data as a "product" that other teams can also use. This implies that the data must be accessible, reliable and easy to find, almost as if it were a public API.

- Self-service platform: decentralisation does not mean that every team has to reinvent the wheel. To prevent each domain team from specialising in complex data tools, the Data Mesh is supported by a self-service infrastructure that facilitates the creation, deployment and maintenance of data products. This platform should allow domain teams to consume and generate data without relying on high technical expertise.

- Federated governance: although data is distributed, there are still common rules for all. In a Data Mesh, governance is "federated", i.e. each team follows globally defined interoperability standards. This ensures that all data is secure, high quality and compliant.

These principles make Data Mesh an ideal architecture for organisations seeking greater agility and team autonomy without losing sight of quality and compliance. Despite decentralisation, Data Mesh does not create data silos because it encourages collaboration and standardised data sharing between teams, ensuring common access and governance across the organisation.
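To make the ideas of "data as a product" and federated governance more tangible, here is a minimal sketch in which each domain describes its data product and a shared rule set checks it. The `DataProduct` fields and the `REQUIRED_METADATA` standard are hypothetical; real Data Mesh implementations define their own contracts.

```python
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical global standard agreed across domains: every data product must declare these.
REQUIRED_METADATA = ("owner_domain", "description", "schema", "access_endpoint")


@dataclass
class DataProduct:
    """Descriptor that a domain team publishes for one of its datasets."""

    name: str
    owner_domain: str
    description: str = ""
    schema: Dict[str, str] = field(default_factory=dict)  # column name -> type
    access_endpoint: str = ""


def governance_issues(product: DataProduct) -> List[str]:
    """Return the federated rules that a data product does not yet satisfy."""
    return [
        f"{product.name}: missing {attribute}"
        for attribute in REQUIRED_METADATA
        if not getattr(product, attribute)  # empty string or empty dict counts as missing
    ]


if __name__ == "__main__":
    draft = DataProduct(name="monthly_sales", owner_domain="sales")
    print(governance_issues(draft))
```

The domain keeps ownership of the product, while the check itself is defined once and applied to everyone, which is the balance federated governance aims for.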

Data Fabric: architecture for secure and efficient access to distributed data

While the Data Mesh focuses on organising and owning data around domains, the Data Fabric is an architecture that allows connecting and exposing an organisation's data, regardless of its location. Unlike approaches based on data centralisation, such as the data lake, the Data Fabric acts as a unified layer, providing seamless access to data distributed across multiple systems without the need to physically move it to a single repository.

In general terms, the Data Fabric is based on three fundamental aspects:

- Access to data: in a modern enterprise, data is scattered across multiple locations, such as data lakes, data warehouses, relational databases and numerous SaaS (Software as a Service) applications. Instead of consolidating all this data in one place, the Data Fabric employs a virtualisation layer that allows it to be accessed directly from its original sources. This approach minimises data duplication and enables real-time access, thus facilitating agile decision-making. In cases where an application requires low latencies, the Data Fabric also has robust integration tools, such as ETL (extract, transform and load), to move and transform data when necessary.

- Data lifecycle management: the Data Fabric not only facilitates access, but also ensures proper management throughout the entire data lifecycle. This includes critical aspects such as governance, privacy and compliance. The architecture of the Data Fabric relies on active metadata that automates the application of security and access policies, ensuring that only users with the appropriate permissions access the corresponding information. It also offers advanced traceability (lineage) functionalities, which allow tracking the origin of data, knowing its transformations and assessing its quality, which is essential in environments regulated under regulations such as the General Data Protection Regulation (GDPR).

- Data exposure: after connecting the data and applying the governance and security policies, the next step of the Data Fabric is to make that data available to end users. Through enterprise catalogues, data is organised and presented in a way that is accessible to analysts, data scientists and developers, who can locate and use it efficiently.

In short, the Data Fabric does not replace data lakes or data warehouses, but facilitates the integration and management of the organisation's existing data. It aims to create a secure and flexible environment that enables the controlled flow of data and a unified view, without the need to physically move it, thus driving more agile and informed decision-making.
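The three aspects described above (access, policy-driven management and exposure through a catalogue) can be sketched as a toy virtual access layer. Everything here is an assumption for illustration: the `DataFabric` class, the role names and the in-memory "sources" stand in for real connectors, metadata services and enterprise catalogues.

```python
from typing import Callable, Dict, Iterable, List, Set, Tuple

Reader = Callable[[], Iterable[dict]]  # a function that fetches rows from the original source


class DataFabric:
    """Toy virtual layer: datasets stay in their sources and are read on demand."""

    def __init__(self) -> None:
        # dataset name -> (reader, roles allowed to read it)
        self._sources: Dict[str, Tuple[Reader, Set[str]]] = {}

    def register(self, name: str, reader: Reader, allowed_roles: Iterable[str]) -> None:
        """Connect a source and attach its access policy as metadata."""
        self._sources[name] = (reader, set(allowed_roles))

    def catalogue(self) -> List[str]:
        """Expose the list of datasets that users can discover."""
        return sorted(self._sources)

    def read(self, name: str, role: str) -> List[dict]:
        """Apply the access policy, then fetch the rows from where they live."""
        reader, allowed_roles = self._sources[name]
        if role not in allowed_roles:
            raise PermissionError(f"role {role!r} may not read {name!r}")
        return list(reader())  # nothing is copied into the fabric beforehand


if __name__ == "__main__":
    fabric = DataFabric()
    fabric.register("sales", lambda: [{"order": 1, "amount": 120}], allowed_roles={"analyst"})
    fabric.register("payroll", lambda: [{"employee": "A"}], allowed_roles={"hr_manager"})
    print(fabric.catalogue())
    print(fabric.read("sales", role="analyst"))
```

The point of the design is that callers see one interface and one policy check, while each dataset remains in its original system.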

Data Mesh vs. Data Fabric. Competitors or allies?

While Data Mesh and Data Fabric have some objectives in common, each solves different problems and, in fact, benefits can be found in applying mechanisms from both approaches in a complementary manner. The following table shows a comparison of the two approaches:

| ASPECT | DATA MESH | DATA FABRIC |
|---|---|---|
| Approach | Organisational and structural, domain-oriented. | Technical, focusing on data integration. |
| Purpose | Decentralise ownership and responsibility for data to domain teams. | Create a unified data access layer distributed across multiple environments. |
| Data management | Each domain manages its own data and defines quality standards. | Data is integrated through services and APIs, allowing a unified view without physically moving data. |
| Governance | Federated, with rules established by each domain, maintaining common standards. | Centralised at platform level, with automation of access and security policies through active metadata. |

Figure 1. Comparative table of Data Mesh vs. Data Fabric. Source: Own elaboration.

Conclusion

Both Data Mesh and Data Fabric are designed to solve the challenges of data management in modern enterprises. Data Mesh brings an organisational approach that empowers domain teams, while Data Fabric enables flexible and accessible integration of distributed data without the need to physically move it. The choice between the two, or a combination of the two, will depend on the specific needs of each organisation, although it is important to consider the investment in infrastructure, training and possible organisational changes that these approaches require. For small to medium-sized companies, a traditional data warehouse can be a practical and cost-effective alternative, especially if their data volumes and organisational complexity are manageable. However, given the growth of data ecosystems in organisations, both models represent a move towards a more agile, secure and useful data environment, facilitating data management that is better aligned with strategic objectives.

Definitions

- Data Lake: it is a storage repository that allows large volumes of data to be stored in their original format, whether structured, semi-structured or unstructured. Its flexible structure allows raw data to be stored and used for advanced analytics and machine learning.

- Data Warehouse: it is a structured data storage system that organises, processes and optimises data for analysis and reporting. It is designed for quick queries and analysis of historical data, following a predefined scheme for easy access to information.

References

- Dehghani, Zhamak. Data Mesh Principles and Logical Architecture. https://martinfowler.com/articles/data-mesh-principles.html.

- Dehghani, Zhamak. Data Mesh: Delivering Data-Driven Value at Scale. O'Reilly Media. Book detailing the implementation and fundamental principles of Data Mesh in organisations.

- Data Mesh Architecture. Website about Data Mesh and data architectures. https://www.datamesh-architecture.com/

- IBM Data Fabric. IBM Topics. https://www.ibm.com/topics/data-fabric

- IBM Technology. Data Fabric. Unifying Data Across Hybrid and Multicloud Environments. YouTube. https://www.youtube.com/watch?v=0Zzn4eVbqfk&t=4s&ab_channel=IBMTechnology

Content prepared by Juan Benavente, senior industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

The increasing complexity of machine learning models and the need to optimise their performance has been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data processing and hyperparameter optimisation.

AutoML allows users to develop models more easily and quickly. It is an approach that facilitates access to the discipline, making it accessible to professionals with less programming experience and speeding up processes for those with more experience. Thus, for a user with in-depth programming knowledge, AutoML can also be of interest. Thanks to auto machine learning, this user could automatically apply the necessary technical settings, such as defining variables or interpreting the results in a more agile way.

In this post, we will discuss the keys to these automation processes and compile a series of free and/or freemium open source tools that can help you to deepen your knowledge of AutoML.

Learn how to create your own machine learning model

As indicated above, thanks to automation, the training and evaluation process of models based on AutoML tools is faster than in a usual machine learning (ML) process, although the stages for model creation are similar.

In general, the key components of AutoML are:

- Data processing: automates tasks such as data cleaning, transformation and feature selection.

- Model selection: examines a variety of machine learning algorithms and chooses the most appropriate one for the specific task.

- Hyperparameter optimisation: automatically adjusts model parameters to improve model performance.

- Model evaluation: provides performance metrics and validates models using techniques such as cross-validation.

- Implementation and maintenance: facilitates the implementation of models in production and, in some cases, their upgrade.
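The components listed above can be illustrated with a deliberately small, library-free sketch that tries several candidate models and hyperparameter values and keeps the one with the best cross-validated accuracy. It is not the API of any AutoML tool: the two toy models, the function names and the search space are assumptions made for this example, and real tools explore far larger spaces with smarter strategies.

```python
from collections import Counter


def majority_model(train, _params):
    """Baseline: always predict the most common training label."""
    label = Counter(y for _, y in train).most_common(1)[0][0]
    return lambda x: label


def knn_model(train, params):
    """k-nearest neighbours on a single numeric feature."""
    k = params["k"]

    def predict(x):
        nearest = sorted(train, key=lambda row: abs(row[0] - x))[:k]
        return Counter(y for _, y in nearest).most_common(1)[0][0]

    return predict


def cross_val_accuracy(build, params, data, folds=4):
    """Model evaluation: mean accuracy over `folds` train/validation splits."""
    scores = []
    for i in range(folds):
        valid = data[i::folds]  # every folds-th row, starting at row i
        train = [row for j, row in enumerate(data) if j % folds != i]
        model = build(train, params)
        scores.append(sum(model(x) == y for x, y in valid) / len(valid))
    return sum(scores) / len(scores)


def auto_select(data, search_space):
    """Model selection and hyperparameter search: score every candidate, keep the best."""
    results = [
        (cross_val_accuracy(build, params, data), name, params)
        for name, build, grid in search_space
        for params in grid
    ]
    return max(results, key=lambda result: result[0]), results


if __name__ == "__main__":
    # Toy dataset: one feature, label depends on a threshold.
    data = [(x, "high" if x >= 5 else "low") for x in range(12)]
    space = [
        ("majority", majority_model, [{}]),
        ("knn", knn_model, [{"k": 1}, {"k": 3}]),
    ]
    best, _ = auto_select(data, space)
    print(best)
```

An exhaustive loop like this is only feasible for tiny search spaces, which is precisely why dedicated AutoML tools exist.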

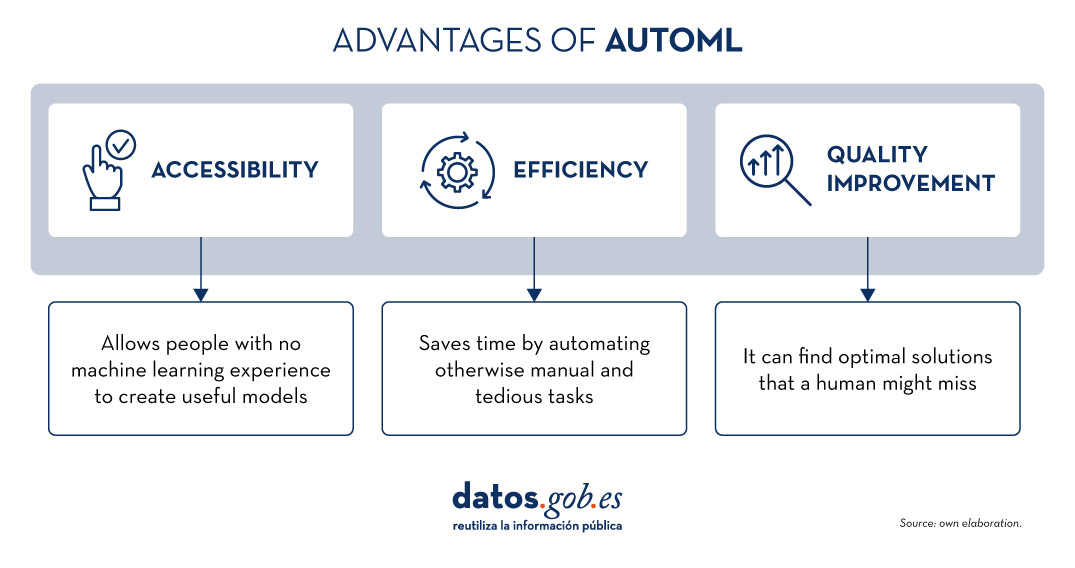

All these elements together offer a number of advantages, as shown in Figure 1.

Figure 1. Source: Own elaboration

Examples of AutoML tools

Although AutoML can be very useful, it is important to highlight some of its limitations such as the risk of overfitting (when the model fits too closely to the training data and does not generalise knowledge well), the loss of control over the modelling process or the interpretability of certain results.

However, as AutoML continues to gain ground in the field of machine learning, a number of tools have emerged to facilitate its implementation and use. In the following, we will explore some of the most prominent open source AutoML tools:

H2O.ai, versatile and scalable, ideal for enterprises

H2O.ai is an AutoML platform that includes deep learning and machine learning models such as XGBoost (machine learning library designed to improve model efficiency) and a graphical user interface. This tool is used in large-scale projects and allows a high level of customisation. H2O.ai includes options for classification, regression and time series models, and stands out for its ability to handle large volumes of data.

Although H2O makes machine learning accessible to non-experts, some knowledge and experience in data science is necessary to get the most out of the tool. In addition, it enables a large number of modelling tasks that would normally require many lines of code, making it easier for the data analyst. H2O offers a freemium model and also has an open source community version.

TPOT, based on genetic algorithms, good option for experimentation

TPOT (Tree-based Pipeline Optimization Tool) is a free and open source Python machine learning tool that optimises processes through genetic programming.

This solution looks for the best combination of data pre-processing and machine learning models for a specific dataset. To do so, it uses genetic algorithms that allow it to explore and optimise different pipelines, data transformation and models. This is a more experimental option that may be less intuitive, but offers innovative solutions.

In addition, TPOT is built on top of the popular scikit-learn library, so models generated by TPOT can be used and adjusted with the same techniques that would be used in scikit-learn.
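To give an intuition of how a genetic search over pipelines works, the following toy sketch evolves a population of pipeline configurations through selection, crossover and mutation. It is not TPOT's code or API: the search space, the `toy_score` function (a stand-in for a cross-validated score) and all names are hypothetical.

```python
import random

# Hypothetical search space: each "pipeline" is one choice per step.
SEARCH_SPACE = {
    "scaler": ["none", "standard", "minmax"],
    "selector": ["all_features", "top_10", "top_5"],
    "model": ["tree", "forest", "logistic"],
    "depth": [2, 4, 8, 16],
}


def toy_score(pipeline):
    """Stand-in for a cross-validated score; a real tool would train the pipeline."""
    score = 0.5
    score += {"none": 0.0, "standard": 0.10, "minmax": 0.05}[pipeline["scaler"]]
    score += {"all_features": 0.0, "top_10": 0.08, "top_5": 0.03}[pipeline["selector"]]
    score += {"tree": 0.05, "forest": 0.15, "logistic": 0.10}[pipeline["model"]]
    score += {2: 0.0, 4: 0.04, 8: 0.06, 16: 0.02}[pipeline["depth"]]
    return score


def random_pipeline(rng):
    return {step: rng.choice(options) for step, options in SEARCH_SPACE.items()}


def crossover(a, b, rng):
    """Child takes each step from one of its two parents."""
    return {step: rng.choice([a[step], b[step]]) for step in SEARCH_SPACE}


def mutate(pipeline, rng, rate=0.2):
    """Randomly replace some steps with another option from the search space."""
    return {
        step: (rng.choice(SEARCH_SPACE[step]) if rng.random() < rate else value)
        for step, value in pipeline.items()
    }


def evolve(generations=20, population_size=12, seed=42):
    rng = random.Random(seed)
    population = [random_pipeline(rng) for _ in range(population_size)]
    for _ in range(generations):
        population.sort(key=toy_score, reverse=True)
        parents = population[: population_size // 2]  # selection: keep the top half
        children = [
            mutate(crossover(rng.choice(parents), rng.choice(parents), rng), rng)
            for _ in range(population_size - len(parents))
        ]
        population = parents + children  # the best pipelines are never discarded
    return max(population, key=toy_score)


best = evolve()
print(best, round(toy_score(best), 2))
```

Because every candidate must be trained and scored in a real setting, the number of generations and the population size largely determine the running time, which is why this family of methods becomes expensive on large datasets.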

Auto-sklearn, accessible to scikit-learn users and efficient on structured problems

Like TPOT, Auto-sklearn is based on scikit-learn and serves to automate algorithm selection and hyperparameter optimisation in machine learning models in Python.

In addition to being a free and open source option, it includes techniques for handling missing data, a very useful feature when working with real-world datasets. Auto-sklearn also offers a simple and easy-to-use API, allowing users to start the modelling process with few lines of code.

BigML, integration via REST APIs and flexible pricing models

BigML is a consumable, programmable and scalable machine learning platform that, like the other tools mentioned above, facilitates the resolution and automation of classification, regression, time series forecasting, cluster analysis, anomaly detection, association discovery and topic modelling tasks. It features an intuitive interface and a focus on visualisation that makes it easy to create and manage ML models, even for users with little programming knowledge.

In addition, BigML has a REST API that enables integration with various applications and languages, and is scalable to handle large volumes of data. It also offers a flexible pricing model based on usage, and has an active community that regularly updates the learning resources available.

The following table shows a comparison between these tools:

| | H2O.ai | TPOT | Auto-sklearn | BigML |

|---|---|---|---|---|

| Use | For large-scale projects. | To experiment with genetic algorithms and optimise pipelines. | For users of scikit-learn who want to automate the model selection process and for structured tasks. | To create and deploy ML models in an accessible and simple way. |

| Configuration difficulty | Simple, with advanced options. | Medium difficulty. A more technical option because of its genetic algorithms. | Medium difficulty. It requires technical configuration, but is easy for scikit-learn users. | Simple. Intuitive interface with customisation options. |

| Ease of use | Easy to use with the most common programming languages. It has a graphical interface and APIs for R and Python. | Easy to use, but requires knowledge of Python. | Easy to use, but requires prior knowledge. Easy option for scikit-learn users. | Easy to use, focused on visualisation, no programming skills required. |

| Scalability | Scalable to large volumes of data. | Focus on small and medium-sized datasets. Less efficient on large datasets. | Effective on small and medium-sized datasets. | Scalable for different sizes of datasets. |

| Interoperability | Compatible with several libraries and languages, such as Java, Scala, Python and R. | Based on Python. | Based on Python integrating scikit-learn. | Compatible with REST APIs and various languages. |

| Community | Extensive and active sharing of reference documentation. | Less extensive, but growing. | It is supported by the scikit-learn community. | Active community and support available. |

| Disadvantages | Although versatile, its advanced customisation could be challenging for beginners without technical experience. | May be less efficient on large datasets due to the intensive nature of genetic algorithms. | Its performance is optimised for structured tasks (structured data), which may limit its use for other types of problems. | Its advanced customisation could be challenging for beginners without technical experience. |

Figure 2. Comparative table of AutoML tools. Source: Own elaboration

Each tool has its own value proposition, and the choice will depend on the specific needs and environment in which the user works.

Here are some examples of free and open source tools that you can explore to get into AutoML. We invite you to share your experience with these or other tools in the comments section below.

If you are looking for tools to help you with data processing, datos.gob.es offers the report "Tools for data processing and visualisation", as well as a number of monographic articles.

Language models are at the epicentre of the technological paradigm shift that has been taking place in generative artificial intelligence (AI) over the last two years. From the tools with which we interact in natural language to generate text, images or videos and which we use to create creative content, design prototypes or produce educational material, to more complex applications in research and development that have even been instrumental in winning the 2024 Nobel Prize in Chemistry, language models are proving their usefulness in a wide variety of applications that we are still exploring.

Since Google's influential 2017 paper "Attention is all you need" described the Transformer architecture, the technology underpinning the new capabilities that OpenAI popularised in late 2022 with the launch of ChatGPT, the evolution of language models has been more than dizzying. In just two years, we have moved from models focused solely on text generation to multimodal versions that integrate interaction and generation of text, images and audio.

This rapid evolution has given rise to two categories of language models: SLMs (Small Language Models), which are lighter and more efficient, and LLMs (Large Language Models), which are heavier and more powerful. Far from considering them as competitors, we should analyse SLMs and LLMs as complementary technologies. While LLMs offer general processing and content generation capabilities, SLMs can provide support for more agile and specialised solutions for specific needs. However, both share one essential element: they rely on large volumes of data for training, and at the heart of their capabilities is open data, which is part of the fuel used to train the language models on which generative AI applications are based.

LLM: power driven by massive data

LLMs are large-scale language models with billions, even trillions, of parameters. These parameters are the mathematical units that allow the model to identify and learn patterns in the training data, giving it an extraordinary ability to generate text (or other formats) that is consistent and adapted to the user's context. These models, such as the GPT family from OpenAI, Gemini from Google or Llama from Meta, are trained on immense volumes of data and are capable of performing complex tasks, including some for which they were not explicitly trained.

Thus, LLMs are able to perform tasks such as generating original content, answering questions with relevant and well-structured information or generating software code, all with a level of competence equal to or higher than humans specialised in these tasks and always maintaining complex and fluent conversations.

LLMs rely on massive amounts of data to achieve their current level of performance: from repositories such as Common Crawl, which collects data from millions of web pages, to structured sources such as Wikipedia or specialised sets such as PubMed Open Access in the biomedical field. Without access to these massive bodies of open data, the ability of these models to generalise and adapt to multiple tasks would be much more limited.

However, as LLMs continue to evolve, the need for open data increases to achieve specific advances such as:

- Increased linguistic and cultural diversity: although today's LLMs are multilingual, they are generally dominated by data in English and other major languages. The lack of open data in other languages limits the ability of these models to be truly inclusive and diverse. More open data in diverse languages would ensure that LLMs can be useful to all communities, while preserving the world's cultural and linguistic richness.

- Bias reduction: LLMs, like any AI model, are prone to reflecting the biases present in the data on which they are trained. This sometimes leads to responses that perpetuate stereotypes or inequalities. Incorporating more carefully selected open data, especially from sources that promote diversity and equality, is fundamental to building models that fairly and equitably represent different social groups.

- Constant updating: data on the web and other open resources is constantly changing. Without access to up-to-date data, LLMs generate outdated responses very quickly. Therefore, increasing the availability of fresh and relevant open data would allow LLMs to keep in line with current events.

- More accessible training: as LLMs grow in size and capability, so does the cost of training and fine-tuning them. Open data allows independent developers, universities and small businesses to train and refine their own models without the need for costly data acquisitions. This democratises access to artificial intelligence and fosters global innovation.

To address some of these challenges, the new Artificial Intelligence Strategy 2024 includes measures aimed at generating models and corpora in Spanish and co-official languages, including the development of evaluation datasets that consider ethical evaluation.

SLM: optimised efficiency with specific data

On the other hand, SLMs have emerged as an efficient and specialised alternative that uses a smaller number of parameters (usually in the millions) and is designed to be lightweight and fast. Although they do not match the versatility and competence of LLMs in complex tasks, SLMs stand out for their computational efficiency, speed of deployment and ability to specialise in specific domains.

For this, SLMs also rely on open data, but in this case, the quality and relevance of the datasets are more important than their volume, so the challenges they face are more related to data cleaning and specialisation. These models require sets that are carefully selected and tailored to the specific domain for which they are to be used, as any errors, biases or unrepresentativeness in the data can have a much greater impact on their performance. Moreover, due to their focus on specialised tasks, the SLMs face additional challenges related to the accessibility of open data in specific fields. For example, in sectors such as medicine, engineering or law, relevant open data is often protected by legal and/or ethical restrictions, making it difficult to use it to train language models.
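As a rough illustration of the data cleaning and specialisation work mentioned above, the following sketch filters a small corpus before it would be used to train a domain-specific model. It is a simplified assumption of what such a step might look like, not a description of any real training pipeline: the `curate` function, its thresholds, the sample texts and the keyword list are all hypothetical.

```python
import re


def normalise(text):
    """Lower-case and collapse whitespace so near-identical copies compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def curate(documents, domain_terms, min_words=5, min_hits=1):
    """Keep documents that are long enough, on-topic and not duplicated."""
    seen = set()
    kept = []
    for doc in documents:
        key = normalise(doc)
        if key in seen:
            continue  # duplicate after normalisation
        seen.add(key)
        words = re.findall(r"\w+", key)
        if len(words) < min_words:
            continue  # too short to be informative
        hits = sum(1 for word in words if word in domain_terms)
        if hits >= min_hits:
            kept.append(doc)
    return kept


corpus = [
    "The contract shall be governed by the law of the seller's country.",
    "the contract shall be governed   by the law of the seller's country.",
    "Great recipe, thanks!",
    "Top ten beaches to visit this summer with your family and friends.",
    "The tenant may terminate the lease with thirty days of written notice.",
]
legal_terms = {"contract", "law", "lease", "tenant", "clause"}

curated = curate(corpus, legal_terms)
print(curated)
```

In this toy run only the first and last texts survive: the second is a duplicate of the first, the third is too short and the fourth is off-topic. Real curation would also need to address bias, representativeness and licensing, which simple keyword rules cannot capture.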

SLMs are trained with carefully selected data aligned to the domain in which they will be used, allowing them to outperform LLMs in accuracy and specificity on specific tasks, such as:

- Text autocompletion: an SLM for Spanish autocompletion can be trained with a selection of books, educational texts or corpora such as those to be promoted in the aforementioned AI Strategy, being much more efficient than a general-purpose LLM for this task.

- Legal consultations: an SLM trained with open legal datasets can provide accurate and contextualised answers to legal questions or process contractual documents more efficiently than an LLM.

- Customised education: in the education sector, SLMs trained with open teaching resources can generate specific explanations, personalised exercises or even automatic assessments, adapted to the level and needs of the student.

- Medical diagnosis: An SLM trained with medical datasets, such as clinical summaries or open publications, can assist physicians in tasks such as identifying preliminary diagnoses, interpreting medical images through textual descriptions or analysing clinical studies.

Ethical Challenges and Considerations

We should not forget that, despite the benefits, the use of open data in language modelling presents significant challenges. One of the main challenges is, as we have already mentioned, to ensure the quality and neutrality of the data so that they are free of biases, as these can be amplified in the models, perpetuating inequalities or prejudices.

Even if a dataset is technically open, its use in artificial intelligence models always raises some ethical implications. For example, it is necessary to prevent personal or sensitive information from being leaked or inferred from the results generated by the models, as this could harm the privacy of individuals.

The issue of data attribution and intellectual property must also be taken into account. The use of open data in business models must address how the original creators of the data are recognised and adequately compensated so that incentives for creators continue to exist.

Open data is the engine that drives the amazing capabilities of language models, both SLM and LLM. While the SLMs stand out for their efficiency and accessibility, the LLMs open doors to advanced applications that not long ago seemed impossible. However, the path towards developing more capable, but also more sustainable and representative models depends to a large extent on how we manage and exploit open data.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and points of view reflected in this publication are the sole responsibility of its author.

In February 2024, the European geospatial community took a major step forward with the first major update of the INSPIRE application schemas in almost a decade. This update, which produces version 5.0 of the schemas, introduces changes that affect the way spatial data are harmonised, transformed and published in Europe. For implementers, policy makers and data users, these changes present both challenges and opportunities.

In this article, we will explain what these changes entail, how they impact on data validation and what steps need to be taken to adapt to this new scenario.

What is INSPIRE and why does it matter?

The INSPIRE Directive (Infrastructure for Spatial Information in Europe) lays down the general rules for establishing an Infrastructure for Spatial Information in the European Community, based on the Member States' infrastructures. Adopted by the European Parliament and the Council on March 14, 2007 (Directive 2007/2/EC), it is designed to ensure that spatial information is consistent and accessible across EU member countries.

What changes with the 5.0 upgrade?

The transition to version 5.0 brings significant modifications, some of which are not backwards compatible. Among the most notable changes are:

- Removal of mandatory properties: this simplifies data models, but requires implementers to review their previous configurations and adjust the data to comply with the new rules.

- Renaming of types and properties: with the update of the INSPIRE schemas to version 5.0, some element names and definitions have changed. This means that data that were harmonised following the 4.x schemas no longer exactly match the new specifications. In order to keep these data compliant with current standards, it is necessary to re-transform them using up-to-date tools. This re-transformation ensures that data continues to comply with INSPIRE standards and can be shared and used seamlessly across Europe. The complete table with these updates is as follows:

| Schema | Description of the change | Type of change | Latest version |

|---|---|---|---|

| ad | Changed the data type for the "building" association of the entity type Address. | Non-disruptive | v4.1 |

| au | Removed the enumeration from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| BaseTypes.xsd | Removed VerticalPositionValue enumeration from BaseTypes schema. | Disruptive | v4.0 |

| ef | Added a new attribute "thematicId" to the AbstractMonitoringObject spatial object type. | Non-disruptive | v4.1 |

| el-cov | Changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| ElevationBaseTypes.xsd | Deleted outline enumeration. | Disruptive | v5.0 |

| el-tin | Changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| el-vec | Removed the enumeration from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| hh | Added new attributes to the EnvHealthDeterminantMeasure type, new entity types and removed some data types. | Disruptive | v5.0 |

| hy | Updated to version 5.0 as the schema imports the hy-p schema, which was updated to version 5.0. | Disruptive and non-disruptive | v5.0 |

| hy-p | Changed the data type of the geometry attribute of the DrainageBasin type. | Disruptive and non-disruptive | v5.0 |

| lcv | Added association role to the LandCoverUnit entity type. | Disruptive | v5.0 |

| mu | Changed the encoding of attributes referring to enumerations. | Disruptive | v4.0 |

| nz-core | Removed the enumeration from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| ObservableProperties.xsd | Removed the enumeration from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v4.0 |

| pf | Changed the definition of the ProductionInstallation entity type. | Non-disruptive | v4.1 |

| plu | Fixed typo in the "backgroudMapURI" attribute of the BackgroundMapValue data type. | Disruptive | v4.0.1 |

| ps | Fixed typo in inspireId, added new attribute, and moved attributes to data type. | Disruptive | v5.0 |

| sr | Changed the stereotype of the ShoreSegment object from featureType to datatype. | Disruptive | v4.0.1 |

| su-vector | Added a new attribute StatisticalUnitType to entity type VectorStatisticalUnit. | Non-disruptive | v4.1 |

| tn | Removed the enumeration from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| tn-a | Changed the data type for the "controlTowers" association of the AerodromeNode entity type. | Non-disruptive | v4.1 |

| tn-ra | Removed enumerations from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| tn-ro | Removed enumerations from the schema and changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| tn-w | Removed the abstract stereotype for the entity type TrafficSeparationScheme. Removed enumerations from the schema and changed the encoding of attributes referring to enumerations. | Disruptive and non-disruptive | v5.0 |

| us-govserv | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

| us-net-common | Defined the data type for the authorityRole attribute. Changed the encoding of attributes referring to enumerations. | Disruptive | v5.0 |

| us-net-el | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

| us-net-ogc | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

| us-net-sw | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

| us-net-th | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

| us-net-wa | Updated the version of the imported us-net-common schema (from 4.0 to 5.0). | Disruptive | v5.0 |

Figure 1. Latest INSPIRE updates.

- Major changes in version 4.0: although a major change in a schema would normally lead to a new major version (e.g. from 4.0 to 5.0), some INSPIRE schemas in version 4.0 have received significant updates without changing version number. A notable example of this is the Planned Land Use (PLU) schema. These updates imply that projects and services using the PLU schema in version 4.0 must be reviewed and modified to adapt to the new specifications. This is particularly relevant for those working with XPlanung, a standard used in urban and land use planning in some European countries. The changes made to the PLU schema oblige implementers to update their transformation projects and republish data to ensure that they comply with the new INSPIRE rules.

Impact on validation and monitoring

The update affects not only how data is structured, but also how it is validated. The official INSPIRE tools, such as the INSPIRE Validator, have incorporated the new versions of the schemas, which generates different validation scenarios:

- Data conforming to previous versions: data harmonised to version 4.x can still pass basic validation tests, but may fail specific tests requiring the use of the updated schemas.

- Specific tests for updated themes: some themes, such as Protected Sites, require data to follow the most recent versions of the schemas to pass all compliance tests.

In addition, the Joint Research Centre (JRC) has indicated that these updated versions will be used in official INSPIRE monitoring from 2025 onwards, underlining the importance of adapting as soon as possible.

What does this mean for consumers?

To ensure that data conforms to the latest versions of the schemas and can be used in European systems, it is essential to take concrete steps:

- If you are publishing new datasets: use the updated versions of the schemas from the beginning.

- If you are working with existing data: update the schemas of your datasets to reflect the changes that have been introduced. This may involve adjusting feature types and carrying out new transformations.

- Publishing services: If your data is already published, you will need to re-transform and republish it to ensure it conforms to the new specifications.

These actions are essential not only to comply with INSPIRE standards, but also to ensure long-term data interoperability.
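As a first step before re-transforming, it can help to find out which schema versions a dataset currently declares. The sketch below reads the `xsi:schemaLocation` attribute of a GML document and compares the referenced version with the "Latest version" column of the table above (only a few rows are copied into the `LATEST` dictionary). It assumes that schema URLs follow the layout `.../schemas/<schema>/<version>/<file>.xsd`. The sample document and the function names are illustrative, and the check is no substitute for the official validation tools.

```python
import re
import xml.etree.ElementTree as ET

XSI = "http://www.w3.org/2001/XMLSchema-instance"

# Subset of the "Latest version" column from the table above.
LATEST = {"ps": "5.0", "au": "5.0", "tn": "5.0", "ad": "4.1", "pf": "4.1"}

# Assumed URL layout: .../schemas/<schema>/<version>/<file>.xsd
SCHEMA_URL = re.compile(r"/schemas/(?P<schema>[\w-]+)/(?P<version>\d+(?:\.\d+)*)/")


def referenced_versions(gml_text):
    """Return {schema: version} for every schema file listed in xsi:schemaLocation."""
    root = ET.fromstring(gml_text)
    location = root.get(f"{{{XSI}}}schemaLocation", "")
    found = {}
    for token in location.split():
        match = SCHEMA_URL.search(token)
        if match and token.endswith(".xsd"):
            found[match["schema"]] = match["version"]
    return found


def outdated(gml_text):
    """Map each schema to (referenced, latest) when the two versions differ."""
    return {
        schema: (version, LATEST[schema])
        for schema, version in referenced_versions(gml_text).items()
        if schema in LATEST and version != LATEST[schema]
    }


# Illustrative document header that still points at a 4.0 schema.
sample = """<wfs:FeatureCollection
    xmlns:wfs="http://www.opengis.net/wfs/2.0"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
    xsi:schemaLocation="http://inspire.ec.europa.eu/schemas/ps/4.0
        http://inspire.ec.europa.eu/schemas/ps/4.0/ProtectedSites.xsd"/>"""

print(outdated(sample))
```

A dataset flagged in this way is a candidate for re-transformation against the updated schema, followed by a full run through the official validator.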

Conclusion

The update to version 5.0 of the INSPIRE schemas represents a technical challenge, but also an opportunity to improve the interoperability and usability of spatial data in Europe. Adopting these modifications not only ensures regulatory compliance, but also positions implementers as leaders in the modernisation of spatial data infrastructure.

Although the updates may seem complex, they have a clear purpose: to strengthen the interoperability of spatial data in Europe. With better harmonised data and updated tools, it will be easier for governments, businesses and organisations to collaborate and make informed decisions on crucial issues such as sustainability, land management and climate change.

Furthermore, these improvements reinforce INSPIRE's commitment to technological innovation, making European spatial data more accessible, useful and relevant in an increasingly interconnected world.

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of its author.

Data governance is crucial for the digital transformation of organisations. It is developed through various axes within the organisation, forming an integral part of the organisational digital transformation plan. In a world where organisations need to constantly reinvent themselves and look for new business models and opportunities to innovate, data governance becomes a key part of moving towards a fairer and more inclusive digital economy, while remaining competitive.

Organisations need to maximise the value of their data, identify new challenges and manage the role of data in the use and development of disruptive technologies such as Artificial Intelligence. Thanks to data governance, it is possible to make informed decisions, improve operational efficiency and ensure regulatory compliance, while ensuring data security and privacy.

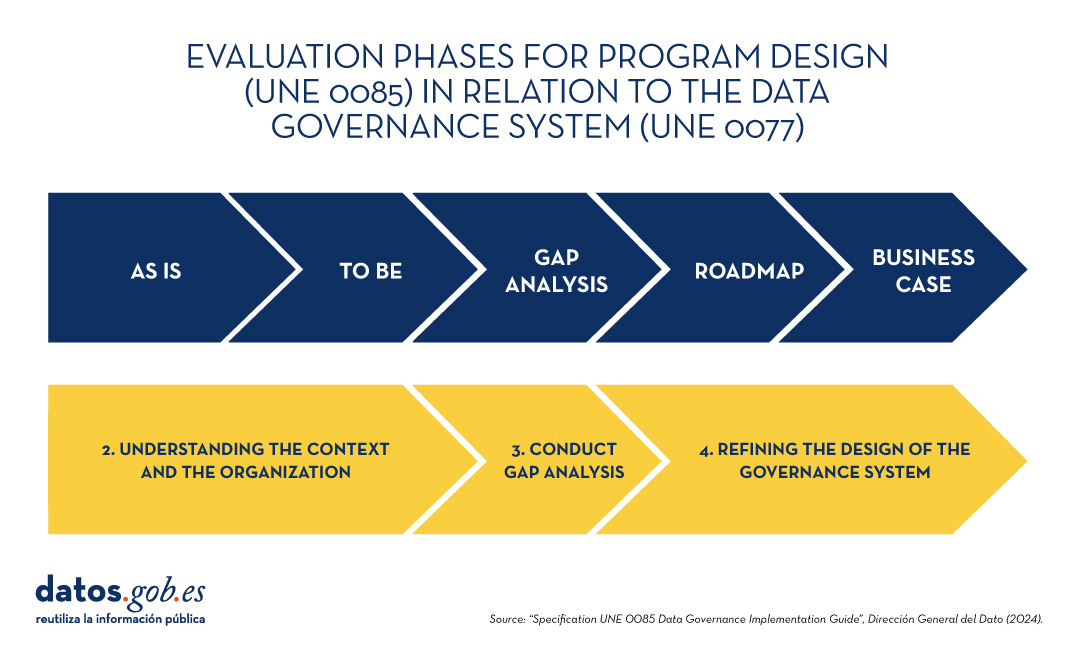

To achieve this, it is essential to carry out a planned digital transformation, centred on a strategic data governance plan that complements the organisation's strategic plan. The UNE 0085 guide helps to implement data governance in any organisation and does so by placing special emphasis on the design of the programme through an evaluation cycle based on gap analysis, which must be relevant and decisive for senior management to approve the launch of the programme.
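In its simplest form, a gap analysis compares where the organisation is today with where it wants to be and ranks the differences. The sketch below shows that arithmetic only. The dimensions, the 0 to 5 maturity scale and the scores are hypothetical and are not taken from UNE 0085.

```python
# Hypothetical maturity scores (0-5) per dimension; names are illustrative only.
as_is = {"data quality": 2, "metadata": 1, "security": 3, "roles and ownership": 1}
to_be = {"data quality": 4, "metadata": 3, "security": 4, "roles and ownership": 4}


def gap_analysis(current, target):
    """Return (dimension, gap) pairs, largest gap first."""
    gaps = {dim: target[dim] - current.get(dim, 0) for dim in target}
    return sorted(gaps.items(), key=lambda item: item[1], reverse=True)


for dimension, gap in gap_analysis(as_is, to_be):
    print(f"{dimension}: gap of {gap} level(s)")
```

The ranked list gives a first indication of which initiatives to prioritise when building the case for senior management.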

The data governance office, key body of the programme

A data governance programme should identify what data is critical to the organisation, where it resides and how it is used. This must be accompanied by a management system that coordinates the deployment of data governance, management and quality processes. An integrated approach with other management systems that the organisation may have, such as the business continuity management system or the information security system, is necessary.

The Data Governance Office is the area in charge of coordinating the development of the different components of the data governance and management system, i.e. it is the area that participates in the creation of the guidelines, rules and policies that allow the appropriate treatment of data, as well as ensuring compliance with the different regulations.

The Data Governance Office should be a key body of the programme. It serves as a bridge between business areas, coordinating data owners and data stewards at the organisational level.

UNE 0085: guidelines for implementing data governance

Implementing a data governance programme is not an easy task. To help organisations with this challenge, the new UNE 0085 has been developed, which follows a process approach as opposed to an artefact approach and summarises as a guide the steps to follow to implement such a programme, thus complementing the family of UNE standards on data governance, management and quality 0077, 0078, 0079 and 0080.

This guide:

- Emphasises the importance of the programme being aligned from the outset with the strategic objectives of the organisation, with strong sponsorship.

- Describes at a high level the key aspects that should be covered by the programme.

- Details different typical scenarios, which can help an organisation clarify where to start and which initiatives to prioritise, as well as the operating model and roles it will need for deployment.

- Presents the design of the data governance programme through an evaluation cycle based on gap analysis. The cycle starts with an initial assessment phase (As Is) that shows the starting situation of the organisation, followed by a second phase (To Be) in which the scope and objectives of the programme are defined and aligned with the strategic objectives of the organisation; the gap analysis is then carried out between the two. It ends with a business case that includes deliverables such as scope, frameworks, programme objectives and milestones, budget, roadmap and measurable benefits with associated KPIs, among other aspects. This business case will serve as the basis for management to launch the data governance programme and thus implement it throughout the organisation. The different phases of the cycle in relation to the UNE 0077 data governance system are presented below:

Finally, beyond processes and systems, we cannot forget people and the roles they play in this digital transformation. Data controllers and the entities involved are central to this organisational culture change. It is necessary to manage this change effectively in order to deploy a data governance operating model that fits the needs of each organisation.

It may seem complex to orchestrate and define an exercise of this magnitude, especially with abstract concepts related to data governance. This is where the new data governance office, which each organisation must establish, comes into play. This office will assist in these essential tasks, always following the appropriate frameworks and standards.

It is recommended to follow a methodology that facilitates this work, such as the UNE specifications for data governance, management and quality (0077, 0078, 0079 and 0080). These specifications are now complemented by the new UNE 0085, a practical implementation guide that can be downloaded free of charge from the AENOR website.

This guide can be downloaded free of charge from the AENOR portal through the link below by accessing the purchase section. Access to this family of UNE data specifications is sponsored by the Secretary of State for Digitalization and Artificial Intelligence, Directorate General for Data. Although the download requires prior registration, a 100% discount on the total price is applied at the time of finalizing the purchase. After finalizing the purchase, the selected standard or standards can be accessed from the customer area in the “my products” section.

Today's climate crisis and environmental challenges demand innovative and effective responses. In this context, the European Commission's Destination Earth (DestinE) initiative is a pioneering project that aims to develop a highly accurate digital model of our planet.

Through this digital twin of the Earth it will be possible to monitor and prevent potential natural disasters, adapt sustainability strategies and coordinate humanitarian efforts, among other functions. In this post, we analyse what the project consists of and its current state of development.

Features and components of Destination Earth

Aligned with the European Green Deal and the Digital Europe Strategy, Destination Earth integrates digital modelling and climate science to provide a tool that is useful in addressing environmental challenges. To this end, it focuses on accuracy, local detail and speed of access to information.

In general, the tool allows:

- Monitor and simulate Earth system developments, including land, sea, atmosphere and biosphere, as well as human interventions.

- Anticipate environmental disasters and socio-economic crises, thus enabling the safeguarding of lives and the prevention of significant economic downturns.

- Generate and test scenarios that promote more sustainable development in the future.

To do this, DestinE is subdivided into three main components :

- Data lake:

- What is it? A centralised repository to store data from a variety of sources, such as the European Space Agency (ESA), EUMETSAT and Copernicus, as well as from the new digital twins.

- What does it provide? This infrastructure enables the discovery and access to data, as well as the processing of large volumes of information in the cloud.

·The DestinE Platform:.

- What is it? A digital ecosystem that integrates services, data-driven decision-making tools and an open, flexible and secure cloud computing infrastructure.

- What does it provide? Users have access to thematic information, models, simulations, forecasts and visualisations that will facilitate a deeper understanding of the Earth system.

- Digital cufflinks and engineering:

- What are they? There are several digital replicas covering different aspects of the Earth system. The first two are already developed, one on climate change adaptation and the other on extreme weather events.

- What do they provide? These twins offer multi-decadal simulations (temperature variation) and high-resolution forecasts.

Discover the services and contribute to improve DestinE

The DestinE platform offers a collection of applications and use cases developed within the framework of the initiative, for example:

- Digital twin of tourism (Beta): makes it possible to review and anticipate the viability of tourism activities according to the environmental and meteorological conditions of a territory.

- VizLab: offers an intuitive graphical user interface and advanced 3D rendering technologies to provide a storytelling experience by making complex datasets accessible and understandable to a wide audience.

- miniDEA: is an interactive and easy-to-use DEA-based web visualisation app for previewing DestinE data.

- GeoAI: is a geospatial AI platform for Earth observation use cases.

- Global Fish Tracking System (GFTS): is a project to help obtain accurate information on fish stocks in order to develop evidence-based conservation policies.

- More resilient urban planning: is a solution that provides a heat stress index that allows urban planners to understand best practices for adapting to extreme temperatures in urban environments.

- Danube Delta Water Reserve Monitoring: is a comprehensive and accurate analysis based on the DestinE data lake to inform conservation efforts in the Danube Delta, one of the most biodiverse regions in Europe.

Since October this year, the DestinE platform has been accepting registrations, a possibility that allows you to explore the full potential of the tool and access exclusive resources. This option serves to record feedback and improve the project system.

To become a user and be able to generate services, you must follow these steps.

Project roadmap:

The European Union sets out a series of time-bound milestones that will mark the development of the initiative:

- 2022 - Official launch of the project.

- 2023 - Start of development of the main components.

- 2024 - Development of all system components. Implementation of the DestinE platform and data lake. Demonstration.

- 2026 - Enhancement of the DestinE system, integration of additional digital twins and related services.

- 2030 - Full digital replica of the Earth.

Destination Earth not only represents a technological breakthrough, but is also a powerful tool for sustainability and resilience in the face of climate challenges. By providing accurate and accessible data, DestinE enables data-driven decision-making and the creation of effective adaptation and mitigation strategies.

There is no doubt that data has become the strategic asset for organisations. Today, it is essential to ensure that decisions are based on quality data, whatever the approach taken: data analytics, artificial intelligence or reporting. However, keeping data repositories at a high level of quality is not an easy task, given that in many cases the data comes from heterogeneous sources where data quality principles have not been taken into account and no context about the domain is available.

To help address these situations, in this article we explore one of the most widely used libraries in data analysis: Pandas. We will see how this Python library can be an effective tool for improving data quality. We will also review how some of its functions relate to the data quality dimensions and properties included in the UNE 0081 data quality specification, along with some concrete examples of its application in data repositories.

Using Pandas for data profiling

Although data profiling and data quality assessment are closely related, their approaches differ:

- Data Profiling: is the process of exploratory analysis performed to understand the fundamental characteristics of the data, such as its structure, data types, distribution of values, and the presence of missing or duplicate values. The aim is to get a clear picture of what the data looks like, without necessarily making judgements about its quality.

- Data quality assessment: involves the application of predefined rules and standards to determine whether data meets certain quality requirements, such as accuracy, completeness, consistency, credibility or timeliness. In this process, errors are identified and actions to correct them are determined. A useful guide for data quality assessment is the UNE 0081 specification.

Data profiling consists of exploring and analysing a dataset to gain a basic understanding of its structure, content and characteristics, before conducting a more in-depth analysis or assessment of the quality of the data. The main objective is to obtain an overview of the data by analysing the distribution, types of data, missing values, relationships between columns and possible anomalies. Pandas has several functions to perform this data profiling.

In short, data profiling is an initial exploratory step that prepares the ground for a deeper assessment of data quality, providing essential information to identify problem areas and to define the appropriate quality rules for the subsequent assessment.
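
As a minimal sketch of that first exploratory pass, the snippet below builds a small, made-up DataFrame and collects a basic profile of it: data type, missing values and distinct values per column, plus the number of duplicate rows. The column names and values are illustrative assumptions, not taken from any real repository.

```python
import pandas as pd

# Hypothetical sample data, used only to illustrate the calls
df = pd.DataFrame({
    "id": [1, 2, 3, 3, 5],
    "age": [34, None, 27, 27, 51],
    "city": ["Madrid", "Sevilla", None, None, "Bilbao"],
})

# One row per column: type, number of missing values, number of distinct values
profile = pd.DataFrame({
    "dtype": df.dtypes.astype(str),
    "missing": df.isnull().sum(),
    "distinct": df.nunique(),
})

# Fully duplicated rows (every repeat after the first occurrence is counted)
duplicate_rows = int(df.duplicated().sum())

print(profile)
print("Duplicate rows:", duplicate_rows)
```

A profile like this makes no judgement about quality by itself; it simply shows where quality rules are likely to be needed.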

What is Pandas and how does it help ensure data quality?

Pandas is one of the most popular Python libraries for data manipulation and analysis. Its ability to handle large volumes of structured information makes it a powerful tool in detecting and correcting errors in data repositories. With Pandas, complex operations can be performed efficiently, from data cleansing to data validation, all of which are essential to maintain quality standards. The following are some examples of how to improve data quality in repositories with Pandas:

1. Detection of missing or inconsistent values: One of the most common data errors is missing or inconsistent values. Pandas makes it easy to identify these values with isnull() and, where appropriate, to discard the affected records with dropna(). This is key for the completeness property of the records and the data consistency dimension, as missing values in critical fields can distort the results of the analyses.

# Identify null values in a dataframe.

df.isnull().sum()
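
To show how these calls might fit together, here is a small sketch that measures the share of missing values per column and separates the records that lack a critical field. The data and the choice of "email" as the critical field are assumptions made for illustration.

```python
import pandas as pd

# Hypothetical records; "email" is assumed to be a critical field
df = pd.DataFrame({
    "name": ["Ana", "Luis", None, "Marta"],
    "email": ["ana@example.org", None, "x@example.org", None],
})

# Share of missing values per column, as a percentage
missing_pct = df.isnull().mean() * 100

# Rows where the critical field is missing, kept aside for review
incomplete = df[df["email"].isnull()]

# Copy of the data without those rows; the original DataFrame is left untouched
complete = df.dropna(subset=["email"])

print(missing_pct)
print(len(incomplete), "rows lack an email;", len(complete), "rows remain")
```

Setting the incomplete rows aside before calling dropna() keeps a trace of what was discarded, which is usually preferable to deleting records silently.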

2. Data standardisation and normalisation: Errors in naming or coding consistency are common in large repositories. For example, in a dataset containing product codes, some may be misspelled or may not follow a standard convention. Pandas provides functions like merge() to compare the data against a reference database and correct these values. This is key to maintaining the dimension and property of semantic consistency of the data.

# Substitution of incorrect values using a reference table

df = df.merge(product_codes, left_on='product_code', right_on='ref_code', how='left')
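
A left merge on its own does not correct anything; it only brings in the reference columns for the codes that match. The following sketch, with made-up product codes and a made-up reference table, uses the indicator option of merge() to list the codes that have no match and therefore need attention.

```python
import pandas as pd

# Hypothetical data: codes as recorded in the repository
df = pd.DataFrame({"product_code": ["A-01", "a01", "B-02", "C-03"]})

# Hypothetical reference table of valid codes
product_codes = pd.DataFrame({
    "ref_code": ["A-01", "B-02", "C-03"],
    "product_name": ["Chair", "Table", "Lamp"],
})

# indicator=True adds a "_merge" column saying where each row was found
checked = df.merge(
    product_codes,
    left_on="product_code",
    right_on="ref_code",
    how="left",
    indicator=True,
)

# "left_only" means the code has no match in the reference table
unmatched = checked.loc[checked["_merge"] == "left_only", "product_code"].tolist()
print(unmatched)
```

How the unmatched codes are then corrected (a mapping table, manual review, fuzzy matching) depends on the repository and is outside this sketch.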

3. Validation of data requirements: Pandas allows the creation of customised rules to validate the compliance of data with certain standards. For example, if an age field should only contain positive integer values, we can apply a function to identify and correct values that do not comply with this rule. In this way, any business rule of any of the data quality dimensions and properties can be validated.

# Identify records with invalid age values (negative or decimals)

age_errors = df[(df['age'] < 0) | (df['age'] % 1 != 0)]

4. Exploratory analysis to identify anomalous patterns: Functions such as describe() or groupby() in Pandas allow you to explore the general behaviour of your data. This type of analysis is essential for detecting anomalous or out-of-range patterns in any data set, such as unusually high or low values in columns that should follow certain ranges.

# Statistical summary of the data

df.describe()

# Group by a category column ('category' is a placeholder name) and count the records in each group

df.groupby('category').size()
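
To make the idea of out-of-range values concrete, the sketch below combines describe() and groupby() with one common heuristic, the 1.5 x IQR rule. That rule is an assumption chosen for illustration, not something prescribed by the article or by UNE 0081, and the data is made up.

```python
import pandas as pd

# Hypothetical measurements; 250.0 is deliberately out of line with the rest
df = pd.DataFrame({
    "category": ["a", "a", "a", "b", "b", "b", "b"],
    "value": [10.0, 12.0, 11.0, 9.0, 10.0, 250.0, 11.0],
})

# Summary statistics for the whole column and per category
print(df["value"].describe())
print(df.groupby("category")["value"].agg(["count", "mean", "min", "max"]))

# 1.5 x IQR rule: values far outside the central 50 % are flagged for review
q1 = df["value"].quantile(0.25)
q3 = df["value"].quantile(0.75)
iqr = q3 - q1
outliers = df[(df["value"] < q1 - 1.5 * iqr) | (df["value"] > q3 + 1.5 * iqr)]
print(outliers)
```

Flagged rows are candidates for review rather than confirmed errors; a domain rule (for example, a valid range for the field) is normally needed to decide.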

5. Duplicate removal: Duplicate data is a common problem in data repositories. Pandas provides methods such as drop_duplicates() to identify and remove these records, ensuring that there is no redundancy in the dataset. This capability relates to the completeness and consistency dimensions.

# Remove duplicate rows

df = df.drop_duplicates()
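
In practice, duplicates are often defined by a key rather than by the whole row. As a sketch under that assumption, the example below inspects the repeated keys first and then keeps the most recent record for each one. The column names and dates are hypothetical.

```python
import pandas as pd

# Hypothetical records: id 2 appears twice with different update dates
df = pd.DataFrame({
    "id": [1, 2, 2, 3],
    "name": ["Ana", "Luis", "Luis", "Marta"],
    "updated": ["2024-01-10", "2024-01-05", "2024-03-01", "2024-02-20"],
})

# Inspect every row that shares its id with another row before deleting anything
repeated = df[df.duplicated(subset=["id"], keep=False)]

# Keep only the most recent row for each id (ISO dates sort correctly as text)
deduplicated = (
    df.sort_values("updated")
      .drop_duplicates(subset=["id"], keep="last")
      .sort_values("id")
      .reset_index(drop=True)
)
print(repeated)
print(deduplicated)
```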

Practical example of the application of Pandas

Having presented the above functions that help us to improve the quality of data repositories, we now consider a case to put the process into practice. Suppose we are managing a repository of citizens' data and we want to ensure:

- That age data do not contain invalid values (such as negatives or decimals).

- That nationality codes are standardised.

- That the unique identifiers follow a correct format.

- That the place of residence is consistent.

With Pandas, we could perform the following actions:

1. Age validation without incorrect values:

# Identify records with ages outside the allowed ranges (e.g. less than 0 or non-integers)

age_errors = df[(df['age'] < 0) | (df['age'] % 1 != 0)]

2. Correction of nationality codes:

# Use of an official dataset of nationality codes to correct incorrect entries

df_corregida = df.merge(nacionalidades_ref, left_on='nacionalidad', right_on='codigo_ref', how='left')

3. Validation of unique identifiers:

# Check if the format of the identification number follows a correct pattern

df['valid_id'] = df['identificacion'].str.match(r'^[A-Z0-9]{8}$')

errores_id = df[df['valid_id'] == False]

4. Verification of consistency in place of residence:

# Detect possible inconsistencies in residency (e.g. the same citizen residing in two places at the same time).

duplicados_residencia = df.groupby(['id_ciudadano', 'fecha_residencia'])['lugar_residencia'].nunique()

inconsistencias_residencia = duplicados_residencia[duplicados_residencia > 1]
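
The four checks can be tried together on a toy dataset. The sketch below reuses the column names from the snippets above, while the records and the reference table are invented for illustration. It uses str.fullmatch() for the identifier check, which is a slightly stricter variant of the str.match() pattern shown earlier.

```python
import pandas as pd

# Hypothetical citizen records, with column names as in the snippets above
df = pd.DataFrame({
    "id_ciudadano": [1, 2, 3, 3],
    "age": [34.0, -2.0, 41.5, 41.5],
    "nacionalidad": ["ES", "FR", "XX", "XX"],
    "identificacion": ["12345678", "ABC1234", "A1B2C3D4", "A1B2C3D4"],
    "fecha_residencia": ["2024-01-01", "2024-01-01", "2024-01-01", "2024-01-01"],
    "lugar_residencia": ["Madrid", "Lyon", "Porto", "Lisboa"],
})
# Hypothetical reference table of accepted nationality codes
nacionalidades_ref = pd.DataFrame({"codigo_ref": ["ES", "FR", "PT"]})

# 1. Ages that are negative or not whole numbers
age_errors = df[(df["age"] < 0) | (df["age"] % 1 != 0)]

# 2. Nationality codes with no match in the reference table
merged = df.merge(nacionalidades_ref, left_on="nacionalidad",
                  right_on="codigo_ref", how="left")
nationality_errors = merged[merged["codigo_ref"].isnull()]

# 3. Identifiers that are not exactly eight upper-case letters or digits
valid_id = df["identificacion"].str.fullmatch(r"[A-Z0-9]{8}")
id_errors = df[~valid_id]

# 4. Citizens with more than one place of residence on the same date
places = df.groupby(["id_ciudadano", "fecha_residencia"])["lugar_residencia"].nunique()
residence_errors = places[places > 1]

# Number of findings per rule
report = {
    "age": len(age_errors),
    "nationality": len(nationality_errors),
    "identifier": len(id_errors),
    "residence": len(residence_errors),
}
print(report)
```

A summary like report gives a quick count of findings per rule; the individual DataFrames hold the records to review.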

Integration with a variety of technologies

Pandas is an extremely flexible and versatile library that integrates easily with many technologies and tools in the data ecosystem. Some of the main technologies with which Pandas is integrated or can be used are:

- SQL databases:

Pandas integrates very well with relational databases such as MySQL, PostgreSQL, SQLite, and others that use SQL. The SQLAlchemy library or directly the database-specific libraries (such as psycopg2 for PostgreSQL or sqlite3) allow you to connect Pandas to these databases, perform queries and read/write data between the database and Pandas.

- Common function: pd.read_sql() to read a SQL query into a DataFrame, and to_sql() to export the data from Pandas to a SQL table.
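
As a small illustration of that round trip, the following sketch uses an in-memory SQLite database from the Python standard library, so it needs no server. The table name and its contents are assumptions.

```python
import sqlite3
import pandas as pd

# In-memory SQLite database, so the example needs no server or file
conn = sqlite3.connect(":memory:")

# Hypothetical table contents
citizens = pd.DataFrame({"id": [1, 2, 3], "age": [34, 27, 51]})

# Write the DataFrame to a SQL table (the table name is an assumption)
citizens.to_sql("citizens", conn, index=False, if_exists="replace")

# Read the result of a SQL query back into a DataFrame
over_30 = pd.read_sql("SELECT id, age FROM citizens WHERE age > 30 ORDER BY id", conn)
print(over_30)

conn.close()
```

For other engines such as PostgreSQL or MySQL, the connection would typically be created through SQLAlchemy instead, with the same two Pandas calls.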

- REST and HTTP-based APIs:

Pandas can be used to process data obtained from APIs using HTTP requests. Libraries such as requests allow you to get data from APIs and then transform that data into Pandas DataFrames for analysis.
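
The sketch below shows the transformation step only: it starts from a made-up payload shaped like the parsed JSON body of an API response and flattens it into a DataFrame with pd.json_normalize(). The field names are hypothetical; a real call would obtain the payload with the requests library.

```python
import pandas as pd

# Hypothetical payload, shaped like the parsed JSON body of an API response.
# With the requests library it would typically come from something like
# requests.get(url, timeout=10).json(), where url is the endpoint being queried.
payload = {
    "results": [
        {"id": 1, "title": "Air quality", "publisher": {"name": "City A"}},
        {"id": 2, "title": "Bus stops", "publisher": {"name": "City B"}},
    ]
}

# json_normalize flattens nested objects into dotted column names
df = pd.json_normalize(payload["results"])
print(df.columns.tolist())
print(df)
```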

- Big Data (Apache Spark):

Pandas can be used in combination with PySpark, an API for Apache Spark in Python. Although Pandas is primarily designed to work with in-memory data, Koalas, a library based on Pandas and Spark (since integrated into PySpark as the pandas API on Spark), allows you to work with Spark distributed structures using a Pandas-like interface. Tools like Koalas help Pandas users scale their scripts to distributed data environments without having to learn all the PySpark syntax.

- Hadoop and HDFS:

Pandas can be used in conjunction with Hadoop technologies, especially the HDFS distributed file system. Although Pandas is not designed to handle large volumes of distributed data, it can be used in conjunction with libraries such as pyarrow or dask to read or write data to and from HDFS on distributed systems. For example, pyarrow can be used to read or write Parquet files in HDFS.

- Popular file formats:

Pandas is commonly used to read and write data in different file formats, such as:

- CSV: pd.read_csv()

- Excel: pd.read_excel() and to_excel().

- JSON: pd.read_json()

- Parquet: pd.read_parquet() for working with space and time efficient files.

- Feather: a fast file format for interchange between languages such as Python and R (pd.read_feather()).
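
As a brief sketch of the reader and writer pairs, the example below round-trips a small made-up DataFrame through CSV and JSON using in-memory buffers, so no files are created. Parquet and Feather follow the same pattern but need an additional engine such as pyarrow, which is why they are left out here.

```python
import io
import pandas as pd

# Hypothetical data
df = pd.DataFrame({"id": [1, 2], "city": ["Madrid", "Bilbao"]})

# CSV: write to an in-memory buffer and read it back
csv_buffer = io.StringIO()
df.to_csv(csv_buffer, index=False)
csv_buffer.seek(0)
from_csv = pd.read_csv(csv_buffer)

# JSON: one object per record, then parsed back into a DataFrame
json_text = df.to_json(orient="records")
from_json = pd.read_json(io.StringIO(json_text))

print(from_csv)
print(from_json)
```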

- Data visualisation tools:

Pandas can be easily integrated with visualisation tools such as Matplotlib, Seaborn, and Plotly. These libraries allow you to generate graphs directly from Pandas DataFrames.

- Pandas includes its own lightweight integration with Matplotlib to generate fast plots using df.plot().

- For more sophisticated visualisations, it is common to use Pandas together with Seaborn or Plotly for interactive graphics.

- Machine learning libraries:

Pandas is widely used in pre-processing data before applying machine learning models. Some popular libraries with which Pandas integrates are:

- Scikit-learn: most machine learning pipelines start with data preparation in Pandas before the data is passed to Scikit-learn models.

- TensorFlow and PyTorch: although these frameworks are more oriented towards handling numerical arrays (NumPy), Pandas is frequently used to load and clean data before training deep learning models.

- XGBoost, LightGBM, CatBoost: these high-performance machine learning libraries accept Pandas DataFrames as input to train models.

- Jupyter Notebooks:

Pandas is central to interactive data analysis within Jupyter Notebooks, which allow you to run Python code and visualise the results immediately, making it easy to explore data and visualise it in conjunction with other tools.

- Cloud Storage (AWS, GCP, Azure):

Pandas can be used to read and write data directly from cloud storage services such as Amazon S3, Google Cloud Storage and Azure Blob Storage. Additional libraries such as boto3 (for AWS S3) or google-cloud-storage facilitate integration with these services. Below is an example for reading data from Amazon S3.

import pandas as pd

import boto3

# Create an S3 client

s3 = boto3.client('s3')

# Obtain an object from the bucket

obj = s3.get_object(Bucket='mi-bucket', Key='datos.csv')

# Read the CSV file into a DataFrame

df = pd.read_csv(obj['Body'])

- Docker and containers: