The European Union is at the forefront of the development of safe, ethical and people-centred artificial intelligence (AI). Through a robust regulatory framework, based on human rights and fundamental values, the EU is building an AI ecosystem that simultaneously benefits citizens, businesses and public administrations. As part of its commitment to the proper development of this technology, the European Commission has proposed a set of actions to promote its excellence.

In this regard, a pioneering piece of legislation that establishes a comprehensive legal framework stands out: the AI Act. It classifies artificial intelligence models according to their level of risk and establishes specific obligations for providers regarding data and data governance. In parallel, the Coordinated Plan on AI updated in 2021 sets out a roadmap to boost investment, harmonise policies and encourage the uptake of AI across the EU.

Spain is aligned with Europe in this area and therefore has a strategy to accelerate its development and expansion. In addition, the preliminary draft law for an ethical, inclusive and beneficial use of artificial intelligence, which transposes the AI Act, has recently been approved.

European projects transforming key sectors

In this context, the EU is funding numerous projects that use artificial intelligence technologies to solve challenges in various fields. Below, we highlight some of the most innovative ones, some of which have already been completed and some of which are underway:

Agriculture and food sustainability

Projects currently underway:

- ANTARES: develops smart sensor technologies and big data to help farmers produce more food in a sustainable way, benefiting society, farm incomes and the environment.

Examples of other completed projects:

- Pantheon: developed a control and data acquisition system, equivalent to industrial SCADA, for precision farming in large hazelnut orchards, increasing production, reducing chemical inputs and simplifying management.

- Trimbot2020: researched robotics and vision technologies to create the first outdoor gardening robot, capable of navigating varied terrain and trimming rose bushes, hedges and topiary.

Industry and manufacturing

Projects currently underway:

- SERENA: applies AI techniques to predict maintenance needs of industrial equipment, reducing costs and time, and improving the productivity of production processes.

- SecondHands: has developed a robot capable of proactively assisting maintenance technicians by recognising human activity and anticipating their needs, increasing efficiency and productivity in industrial environments.

Examples of other completed projects:

- QU4LITY: combined data and AI to increase manufacturing sustainability, providing a data-shared, SME-friendly, standardised and transformative zero-defect manufacturing model.

- KYKLOS 4.0: explored how cyber-physical systems, product lifecycle management, augmented reality and AI can transform circular manufacturing through seven large-scale pilot projects.

Transport and mobility

Projects currently underway:

- VI-DAS: a project by a Spanish company working on advanced driver assistance systems and navigation aids, combining traffic understanding with consideration of the driver's physical, mental and behavioural state to improve road safety.

- PILOTING: adapts, integrates and demonstrates robotic solutions in an integrated platform for the inspection and maintenance of refineries, bridges and tunnels. One of its focuses is on boosting production and access to inspection data.

Examples of other completed projects:

- FABULOS: has developed and tested a local public transport system using autonomous minibuses, demonstrating its viability and promoting the introduction of robotic technologies in public infrastructure.

Social impact research

Projects currently underway:

- HUMAINT: provides a multidisciplinary understanding of the current state and future evolution of machine intelligence and its potential impact on human behaviour, focusing on cognitive and socio-emotional capabilities.

- AI Watch: monitors industrial, technological and research capacity, policy initiatives in Member States, AI adoption and technical developments, and their impact on the economy, society and public services.

Examples of other completed projects:

- TECHNEQUALITY: examined the potential social consequences of the digital age, looking at how AI and robots affect work and how automation may impact various social groups differently.

Health and well-being

Projects currently underway:

- DeepHealth: develops advanced tools for medical image processing and predictive modelling, facilitating the daily work of healthcare personnel without the need to combine multiple tools.

- BigO: collects and analyses anonymised data on child behaviour patterns and their environment to extract evidence on local factors involved in childhood obesity.

Examples of other completed projects:

- PRIMAGE: has created a cloud-based platform to support decision making for malignant solid tumours, offering predictive tools for diagnosis, prognosis and monitoring, using imaging biomarkers and simulation of tumour growth.

- SelfBACK: provided personalised support to patients with low back pain through a mobile app, using sensor-collected data to tailor recommendations to each user.

- EYE-RISK: developed tools that predict the likelihood of developing age-related eye diseases and measures to reduce this risk, including a diagnostic panel to assess genetic predisposition.

- Solve-RD: improved diagnosis of rare diseases by pooling patient data and advanced genetic methods.

The future of AI in Europe

These examples, both past and present, are very interesting use cases of the development of artificial intelligence in Europe. However, the EU's commitment to AI is also forward-looking, and this is reflected in an ambitious investment plan: the Commission plans to invest EUR 1 billion per year in AI, from the Digital Europe and Horizon Europe programmes, with the aim of attracting more than EUR 20 billion of total AI investment per year during this decade.

The development of an ethical, transparent and people-centred AI is already an EU objective that goes beyond the legal framework. With a hands-on approach, the European Union funds projects that not only drive technological innovation, but also address key societal challenges, from health to climate change, building a more sustainable, inclusive and prosperous future for all European citizens.

There is no doubt that artificial intelligence has become a fundamental pillar of technological innovation. Today, artificial intelligence (AI) makes it possible to create chatbots specialised in open data, applications that facilitate professional work and even a digital Earth model to anticipate natural disasters.

The possibilities are endless. However, the future of AI also has challenges to overcome to make models more inclusive, accessible and transparent. In this respect, the European Union is developing various initiatives to make progress in this field.

European regulatory framework for a more open and transparent AI

The EU's approach to AI seeks to give citizens the confidence to adopt these technologies and to encourage businesses to develop them. To this end, the European AI Regulation sets out guidelines for the development of artificial intelligence in line with European values of privacy, security and cultural diversity. For its part, the Data Governance Regulation (DGA) establishes that broad access to data must be guaranteed without compromising intellectual property rights, privacy and fairness.

Together with the Artificial Intelligence Act, the update of the Coordinated Plan on AI ensures the security and fundamental rights of individuals and businesses, while strengthening investment and innovation in all EU countries. The Commission has also launched an Artificial Intelligence Innovation Package to help European start-ups and SMEs develop reliable AI that respects EU values and standards.

Other institutions are also working to boost artificial intelligence by promoting open source AI models as a very interesting solution. A recent report by Open Future and the Open Source Initiative (OSI) defines what data governance should look like in open source AI models. One of the challenges highlighted in the report is precisely to strike a balance between open data and data rights, to achieve more transparency and to avoid cultural bias. In fact, Ricard Martínez and Carmen Torrijos, experts in the field, debated this issue in the datos.gob.es podcast.

The OpenEuroLLM project

To address these challenges with an innovative and open solution, the European Union, through the Digital Europe programme, has presented OpenEuroLLM, an open source artificial intelligence project that is expected to create efficient, transparent language models aligned with European AI regulations.

The OpenEuroLLM project has as its main goal the development of state-of-the-art language models for a wide variety of public and private applications. Among the most important objectives, we can mention the following:

- Extend the multilingual capabilities of existing models: this includes not only the official languages of the European Union, but also other languages that are of social and economic interest. Europe is a continent rich in linguistic diversity, and the project seeks to reflect this diversity in AI models.

- Sustainable access to foundation models: the models developed within the project will be easy to access and ready to be adjusted to various applications. This will not only benefit large enterprises, but also small and medium-sized enterprises (SMEs) that wish to integrate AI into their processes without facing technological barriers.

- Evaluation of results and alignment with European regulations: models will be evaluated according to rigorous safety standards and alignment with the European AI Regulation and other European regulatory frameworks. This will ensure that AI solutions are safe and respect fundamental rights.

- Transparency and accessibility: One of the premises of the project is to openly share the tools, processes and intermediate results of the training processes. This will allow other researchers and developers to reproduce, improve and adapt the models for their own purposes.

- Community building: OpenEuroLLM is not limited to modelling but also aims to build an active and engaged community, both in the public and private sector, that can collaborate, share knowledge and work together to advance AI research.

The OpenEuroLLM Consortium: a collaborative and multinational project

The OpenEuroLLM project is being developed by a consortium of 20 European research institutions, technology companies and supercomputing centres, under the coordination of Charles University (Czech Republic) and the collaboration of Silo GenAI (Finland). The consortium brings together some of the leading institutions and companies in the field of artificial intelligence in Europe, creating a multinational collaboration to develop open source language models.

The main institutions participating in the project include renowned universities such as the University of Helsinki (Finland) and the University of Oslo (Norway), as well as technology companies such as Aleph Alpha Research (Germany) or the Elche-based company Prompsit (Spain), among others. In addition, supercomputing centres such as the Barcelona Supercomputing Center (Spain) or SURF (The Netherlands) provide the infrastructure needed to train large-scale models.

Linguistic diversity, transparency and compliance with EU standards

One of the biggest challenges of globalised artificial intelligence is the inclusion of multiple languages and the preservation of cultural differences. Europe, with its vast linguistic diversity, presents a unique environment in which to address these issues. OpenEuroLLM is committed to preserving this diversity and ensuring that the AI models developed are sensitive to the linguistic and cultural variations of the region.

As we saw at the beginning of this post, technological development must go hand in hand with ethical and responsible values. In this respect, one of the key features of the OpenEuroLLM project is its focus on transparency. Models, data, documentation, training code and evaluation metrics will be fully available to the public. This will allow researchers and developers to audit, modify and improve the models, ensuring an open and collaborative approach.

In addition, the project is aligned with strict European AI regulations. OpenEuroLLM is designed to comply with the EU's AI Act, which sets stringent criteria to ensure safety, fairness and privacy in artificial intelligence systems.

Democratising access to AI

One of the most important achievements of OpenEuroLLM is the democratisation of access to high-performance AI. Open source models will enable businesses, academic institutions and public sector organisations across Europe to have access to cutting-edge technology, regardless of their size or budget.

This is especially relevant for small and medium-sized enterprises (SMEs), which often face difficulties in accessing AI solutions due to high licensing costs or technological barriers. OpenEuroLLM will remove these barriers and enable companies to develop innovative products and services using AI, which will contribute to Europe's economic growth.

The OpenEuroLLM project also reflects the EU's commitment to digital sovereignty: it is a strategic investment in technological infrastructure that reduces dependence on global players and strengthens European competitiveness in the field of artificial intelligence. This is an important step towards artificial intelligence that is not only more advanced, but also fairer, safer and more responsible.

The increasing adoption of artificial intelligence (AI) systems in critical areas such as public administration, financial services or healthcare has brought the need for algorithmic transparency to the forefront. The complexity of AI models used to make decisions such as granting credit or making a medical diagnosis, especially when it comes to deep learning algorithms, often gives rise to what is commonly referred to as the "black box" problem, i.e. the difficulty of interpreting and understanding how and why an AI model arrives at a certain conclusion. The LLMs or SLMs that we use so much lately are a clear example of a black box system, where not even the developers themselves are able to foresee their behaviour.

In regulated sectors, such as finance or healthcare, AI-based decisions can significantly affect people's lives, so doubts about possible bias or about the attribution of responsibility are not acceptable. As a result, governments have begun to develop regulatory frameworks, such as the Artificial Intelligence Regulation, that require greater explainability and oversight in the use of these systems, with the additional aim of generating confidence in the advances of the digital economy.

Explainable artificial intelligence (XAI) is the discipline that has emerged in response to this challenge, proposing methods to make the decisions of AI models understandable. As in other areas related to artificial intelligence, such as LLM training, open data is an important ally of explainable artificial intelligence to build audit and verification mechanisms for algorithms and their decisions.

What is explainable AI (XAI)?

Explainable AI refers to methods and tools that allow humans to understand and trust the results of machine learning models. The U.S. National Institute of Standards and Technology (NIST) sets out four key principles of explainable artificial intelligence, intended to ensure that AI systems are transparent, understandable and trusted by users:

- Explanation: the AI must provide clear and understandable explanations of how it arrives at its decisions and recommendations.

- Meaningful: explanations must be meaningful and understandable to users.

- Explanation accuracy: AI must generate accurate and reliable results, and the explanation of these results must accurately reflect how the system reached them.

- Knowledge limits: AI must recognise when it does not have sufficient information or confidence in a decision and refrain from issuing responses in such cases.
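
The last principle, recognising knowledge limits, can be illustrated with a minimal sketch. It assumes a classifier that exposes a probability per class; the function name `predict_or_abstain` and the 0.8 threshold are hypothetical choices made for this illustration, not part of any NIST specification.

```python
from typing import Mapping, Optional


def predict_or_abstain(class_probabilities: Mapping[str, float],
                       min_confidence: float = 0.8) -> Optional[str]:
    """Return the most likely class, or None when confidence is too low.

    `min_confidence` is an illustrative threshold, not a recommended value.
    """
    if not class_probabilities:
        return None  # no information at all: abstain
    best_class = max(class_probabilities, key=class_probabilities.get)
    if class_probabilities[best_class] < min_confidence:
        return None  # below the threshold: refrain from answering
    return best_class


confident = predict_or_abstain({"approve": 0.93, "deny": 0.07})
uncertain = predict_or_abstain({"approve": 0.55, "deny": 0.45})
print(confident)  # approve
print(uncertain)  # None
```

In a real system, an abstention would typically be routed to a human reviewer rather than silently dropped.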

Unlike traditional "black box" AI systems, which generate results without revealing their internal logic, XAI works on the traceability, interpretability and accountability of these decisions. For example, if a neural network rejects a loan application, XAI techniques can highlight the specific factors that influenced the decision. Thus, while a traditional model would simply return a numerical rating of the credit file, an XAI system could also tell us something like "Payment history (23%), job stability (38%) and current level of indebtedness (32%) were the determining factors in the loan denial". This transparency is vital not only for regulatory compliance, but also for building user confidence and improving AI systems themselves.
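
As a rough illustration of how percentage figures like these could be produced, the sketch below assumes the simplest possible case: a linear scoring model, where each feature's contribution is its weight multiplied by the applicant's deviation from a reference profile. The weights, feature names and values are hypothetical. A neural network would need a dedicated attribution technique such as those described in the next section.

```python
def contribution_shares(weights, applicant, baseline):
    """Percentage share of each feature in a linear score, relative to a baseline."""
    contributions = {
        name: weights[name] * (applicant[name] - baseline[name])
        for name in weights
    }
    total = sum(abs(value) for value in contributions.values())
    if total == 0:
        # The applicant matches the baseline: nothing to attribute.
        return {name: 0.0 for name in contributions}
    return {name: 100.0 * abs(value) / total
            for name, value in contributions.items()}


# Hypothetical weights and normalised feature values, for illustration only.
weights = {"payment_history": 2.0, "job_stability": 1.5, "indebtedness": -3.0}
baseline = {"payment_history": 0.7, "job_stability": 0.6, "indebtedness": 0.5}
applicant = {"payment_history": 0.4, "job_stability": 0.2, "indebtedness": 0.8}

shares = contribution_shares(weights, applicant, baseline)
for name, share in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{name}: {share:.0f}%")
```

Taking absolute values means the shares describe how strongly each factor weighed on the decision, not whether it pushed the score up or down.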

Key techniques in XAI

The Catalogue of trusted AI tools and metrics from the OECD's Artificial Intelligence Policy Observatory (OECD.AI) collects and shares tools and metrics designed to help AI actors develop trusted systems that respect human rights and are fair, transparent, explainable, robust, safe and reliable. For example, two widely adopted methodologies in XAI are Local Interpretable Model-agnostic Explanations (LIME) and SHapley Additive exPlanations (SHAP).

- LIME approximates complex models with simpler, interpretable versions to explain individual predictions. It is a generally useful technique for quick interpretations, but not very stable in assigning the importance of variables from one example to another.

- SHAP quantifies the exact contribution of each input to a prediction using game theory principles. This is a more precise and mathematically sound technique, but much more computationally expensive.

For example, in a medical diagnostic system, both LIME and SHAP could help us interpret that a patient's age and blood pressure were the main factors that led to a diagnosis of high risk of infarction, although SHAP would give us the exact contribution of each variable to the decision.
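
The game-theory idea behind SHAP can be sketched for a toy case by enumerating every coalition of features. This is a hand-written illustration under stated assumptions, not the SHAP library: the risk formula, the feature names and the reference patient are all hypothetical, and absent features are simply replaced by their reference value.

```python
from itertools import combinations
from math import factorial


def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every coalition of features.

    Features outside a coalition take their baseline value. The cost grows
    exponentially with the number of features, so this only suits toy cases.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {k: (instance[k] if k in coalition else baseline[k]) for k in names}
        return model(mixed)

    result = {}
    for name in names:
        others = [k for k in names if k != name]
        phi = 0.0
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                with_feature = value(set(subset) | {name})
                without_feature = value(set(subset))
                phi += weight * (with_feature - without_feature)
        result[name] = phi
    return result


def toy_risk_model(patient):
    # Hypothetical scoring rule with an interaction term; not a clinical model.
    age_term = 0.01 * patient["age"]
    pressure_term = 0.005 * patient["systolic_bp"]
    interaction = 0.00005 * patient["age"] * patient["systolic_bp"]
    return age_term + pressure_term + interaction


patient = {"age": 70, "systolic_bp": 160}
reference = {"age": 50, "systolic_bp": 120}
phi = shapley_values(toy_risk_model, patient, reference)
print(phi)
```

The contributions add up to the difference between the patient's score and the reference score, which is the property that makes this kind of attribution easy to audit. The exponential cost of the enumeration is also why practical tools rely on approximations.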

One of the most important challenges in XAI is to find the balance between the predictive ability of a model and its explainability. Hybrid approaches are therefore often used, integrating a posteriori explanatory methods of decision making with complex models. For example, a bank could implement a deep learning system for fraud detection, but use SHAP values to audit its decisions and ensure that no discriminatory decisions are made.

Open data in XAI

There are at least two scenarios in which value can be generated by combining open data with explainable artificial intelligence techniques:

- The first of these is the enrichment and validation of the explanations obtained with XAI techniques. Open data makes it possible to add layers of context to many technical explanations, which is also true for the explainability of AI models. For example, if an XAI system indicates that air pollution influenced an asthma diagnosis, linking this result to open air quality datasets from patients' areas of residence would allow validation of the correctness of the result.

- Improving the performance of AI models themselves is another area where open data brings value. For example, if an XAI system identifies that the density of urban green space significantly affects cardiovascular risk diagnoses, open urban planning data could be used to improve the accuracy of the algorithm.

It would be ideal if AI model training datasets could be shared as open data, so that it would be possible to verify model training and replicate the results. What is possible, however, is the open sharing of detailed metadata on such training, as promoted by Google's Model Cards initiative, thus facilitating post-hoc explanations of the models' decisions. In this case, the tool is oriented more towards developers than towards the end users of the algorithms.

In Spain, in a more citizen-driven initiative, but equally aimed at fostering transparency in the use of artificial intelligence algorithms, the Open Administration of Catalonia has started to publish comprehensible factsheets for each AI algorithm applied to digital administration services. Some are already available, such as the AOC Conversational Chatbots or the Video ID for Mobile idCat.

Real examples of open data and XAI

A recent paper published in Applied Sciences by Portuguese researchers exemplifies the synergy between XAI and open data in the field of real estate price prediction in smart cities. The research highlights how the integration of open datasets covering property characteristics, urban infrastructure and transport networks, with explainable artificial intelligence techniques such as SHAP analysis, unravels the key factors influencing property values. This approach aims to support the generation of urban planning policies that respond to the evolving needs and trends of the real estate market, promoting sustainable and equitable growth of cities.

Another study by researchers at INRIA (French Institute for Research in Digital Sciences and Technologies), also on real estate data, delves into the methods and challenges associated with interpretability in machine learning based on linked open data. The article discusses both intrinsic techniques, which integrate explainability into model design, and post hoc methods, which examine and explain the decisions of complex systems, to encourage the adoption of transparent, ethical and trustworthy AI systems.

As AI continues to evolve, ethical considerations and regulatory measures play an increasingly important role in creating a more transparent and trustworthy AI ecosystem. Explainable artificial intelligence and open data are interconnected in their aim to foster transparency, trust and accountability in AI-based decision-making. While XAI provides the tools to dissect AI decision-making, open data provides the raw material not only for training, but also for testing some XAI explanations and improving model performance. As AI continues to permeate every facet of our lives, fostering this synergy will contribute to building systems that are not only smarter, but also fairer.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and views reflected in this publication are the sole responsibility of the author.

There is no doubt that digital skills training is necessary today. Basic digital skills are essential to be able to interact in a society where technology already plays a cross-cutting role. In particular, it is important to know the basics of the technology for working with data.

In this context, public sector workers must also keep themselves constantly updated. Training in this area is key to optimising processes, ensuring information security and strengthening trust in institutions.

In this post, we identify digital skills related to open data, aimed at both publishing and using it. We not only identify the professional competencies that public employees working with open data must have and maintain, but also compile a series of training resources that are available to them.

Professional competencies for working with data

A working group was set up at the 2024 National Open Data Gathering with one objective: to identify the digital competencies required of public administration professionals working with open data. Beyond the conclusions of this event of national relevance, the working group defined the profiles and roles needed for data opening, gathering information on their functions and the skills and knowledge required. The main roles identified were:

- Responsible role: has technical responsibility for the promotion of open data policies and organises activities to define policies and data models. Some of the skills required are:

  - Leadership in promoting strategies to drive data openness.

  - Steering the data strategy to drive openness with purpose.

  - Understanding the regulatory framework related to data in order to act within the law throughout the data lifecycle.

  - Encouraging the use of tools and processes for data management.

  - Ability to generate synergies in order to reach a consensus on cross-cutting instructions for the entire organisation.

- Open data technical role (ICT profile): carries out implementation activities more closely linked to the management of systems, extraction processes, data cleansing, etc. This profile must, for example:

  - Know how to structure the dataset, the metadata vocabulary, data quality, the strategy to follow, etc.

  - Be able to analyse a dataset and identify debugging and cleaning processes quickly and intuitively.

  - Generate data visualisations, connecting databases of different formats and origins to obtain dynamic and interactive graphs, indicators and maps.

  - Master the functionalities of the platform, i.e. know how to apply technological solutions for open data management or know techniques and strategies to access, extract and integrate data from different platforms.

- Open data functional role (technician of a service): executes activities more related to the selection of data to be published, quality, promotion of open data, visualisation, data analytics, etc. For example:

  - Handling visualisation and dynamisation tools.

  - Knowing the data economy and the full range of data-related concepts (generation by public administrations, open data, infomediaries, reuse of public information, Big Data, Data Driven, roles involved, etc.).

  - Knowing and applying the ethical and personal data protection aspects that apply to the opening of data.

- Public workers who use data: this profile uses data for decision making and basic data analytics, among other activities. To do so, it needs these competences:

  - Navigation, search and filtering of data.

  - Data assessment.

  - Data storage and export.

  - Data analysis and exploitation.

In addition, as part of this challenge to increase capacities for open data, a list of free training courses and guides on open data and data analytics was developed. Below, we compile some of them that are available online and in open format.

| Institution | Resource | Link | Level |
|---|---|---|---|
| Knight Center for Journalism in the Americas | Data journalism and visualisation with free tools | https://journalismcourses.org/es/course/dataviz/ | Beginner |
| Data Europa Academy | Introduction to open data | https://data.europa.eu/en/academy/introducing-open-data | Beginner |
| Data Europa Academy | Understanding the legal side of open data | https://data.europa.eu/en/academy/understanding-legal-side-open-data | Beginner |
| Data Europa Academy | Improve the quality of open data and metadata | https://data.europa.eu/en/academy/improving-open-data-and-metadata-quality | Advanced |
| Data Europa Academy | Measuring success in open data initiatives | https://data.europa.eu/en/training/elearning/measuring-success-open-data-initiatives | Advanced |
| Escuela de Datos | Data Pipeline Course | https://escueladedatos.online/curso/curso-tuberia-de-datos-data-pipeline/ | Intermediate |
| FEMP | Strategic guidance for its implementation - Minimum data sets to be published | https://redtransparenciayparticipacion.es/download/guia-estrategica-para-su-puesta-en-marcha-conjuntos-de-datos-minimos-a-publicar/ | Intermediate |
| Datos.gob.es | Methodological guidelines for data opening | /es/conocimiento/pautas-metodologicas-para-la-apertura-de-datos | Beginner |
| Datos.gob.es | Practical guide to publishing open data using APIs | /es/conocimiento/guia-practica-para-la-publicacion-de-datos-abiertos-usando-apis | Intermediate |
| Datos.gob.es | Practical guide to publishing spatial data | /es/conocimiento/guia-practica-para-la-publicacion-de-datos-espaciales | Intermediate |
| Junta de Andalucía | Processing datasets with Open Refine | https://www.juntadeandalucia.es/datosabiertos/portal/tutoriales/usar-openrefine.html | Beginner |

Figure 1. Table of training resources, compiled by the authors. Source: https://encuentrosdatosabiertos.es/wp-content/uploads/2024/05/Reto-2.pdf

INAP's continuing professional development training offer

The Instituto Nacional de Administración Pública (INAP) has a Training Activities Programme for 2025, framed in the INAP Learning Strategy 2025-2028. This training catalogue includes more than 180 activities organised in different learning programmes, which will take place throughout the year with the aim of strengthening the competences of public staff in key areas such as open data management and the use of related technologies.

INAP's 2025 training programme offers a wide range of courses aimed at improving digital skills and open data literacy. Some of the highlighted courses include:

- Fundamentals and tools of data analysis.

- Introduction to Oracle SQL.

- Open data and re-use of information.

- Data analysis and visualisation with Power BI.

- Blockchain: technical aspects.

- Advanced Python programming.

These courses, aimed at different profiles of public employees, from open data managers to information management technicians, allow participants to acquire knowledge on data extraction, processing and visualisation, as well as on strategies for the opening and reuse of open data in the Public Administration. You can consult the full catalogue here.

Other training references

Some public administrations or entities offer training courses related to open data. For more information on their training offer, please see their catalogues of scheduled courses:

- FEMP's Network of Local Entities for Transparency and Citizen Participation: https://redtransparenciayparticipacion.es/

- Government of Aragon: Aragon Open Data: https://opendata.aragon.es/informacion/eventos-de-datos-abiertos

- School of Public Administration of Catalonia (EAPC): https://eapc.gencat.cat/ca/inici/index.html#googtrans(ca|es

- Diputació de Barcelona: http://aplicacions.diba.cat/gestforma/public/cercador_baf_ens_locals

- Instituto Geográfico Nacional (IGN): https://cursos.cnig.es/

In short, training in digital skills, in general, and in open data, in particular, is a practice that we recommend at datos.gob.es. Do you need a specific training resource? Write to us in the comments; we read them all!

Open source artificial intelligence (AI) is an opportunity to democratise innovation and avoid the concentration of power in the technology industry. However, its development is highly dependent on the availability of high quality datasets and the implementation of robust data governance frameworks. A recent report by Open Future and the Open Source Initiative (OSI) analyses the challenges and opportunities at this intersection, proposing solutions for equitable and accountable data governance. You can read the full report here.

In this post, we will analyse the most relevant ideas of the document, as well as the advice it offers to ensure correct and effective data governance in open source artificial intelligence and to take advantage of all its benefits.

The challenges of data governance in AI

Despite the vast amount of data available on the web, accessing and using it to train AI models poses significant ethical, legal and technical challenges. For example:

- Balancing openness and rights: In line with the Data Governance Regulation (DGA), broad access to data should be guaranteed without compromising intellectual property rights, privacy and fairness.

- Lack of transparency and openness standards: It is important that models labelled as "open" meet clear criteria for transparency in the use of data.

- Structural biases: Many datasets reflect linguistic, geographic and socio-economic biases that can perpetuate inequalities in AI systems.

- Environmental sustainability: the intensive use of resources to train AI models poses sustainability challenges that must be addressed with more efficient practices.

- Engage more stakeholders: Currently, developers and large corporations dominate the conversation on AI, leaving out affected communities and public organisations.

Having identified the challenges, the report proposes a strategy for achieving the main goal: adequate data governance in open source AI models. This approach is based on two fundamental pillars.

Towards a new paradigm of data governance

Currently, access to and management of data for training AI models is marked by increasing inequality. While some large corporations have exclusive access to vast data repositories, many open source initiatives and marginalised communities lack the resources to access quality, representative data. To address this imbalance, a new approach to data management and use in open source AI is needed. The report highlights two fundamental changes in the way data governance is conceived:

On the one hand, adopting a data commons approach, that is, an access model that ensures a balance between data openness and rights protection. To this end, it would be important to use innovative licences that allow data sharing without undue exploitation. It is also relevant to create governance structures that regulate access to and use of data and, finally, to implement compensation mechanisms for communities whose data is used in artificial intelligence.

On the other hand, it is necessary to transcend the vision focused on AI developers and include more actors in data governance, such as:

- Data owners and content-generating communities.

- Public institutions that can promote openness standards.

- Civil society organisations that ensure fairness and responsible access to data.

By adopting these changes, the AI community will be able to establish a more inclusive system, in which the benefits of data access are distributed in a manner that is equitable and respectful of the rights of all stakeholders. According to the report, the implementation of these models will not only increase the amount of data available for open source AI, but will also encourage the creation of fairer and more sustainable tools for society as a whole.

Advice and strategy

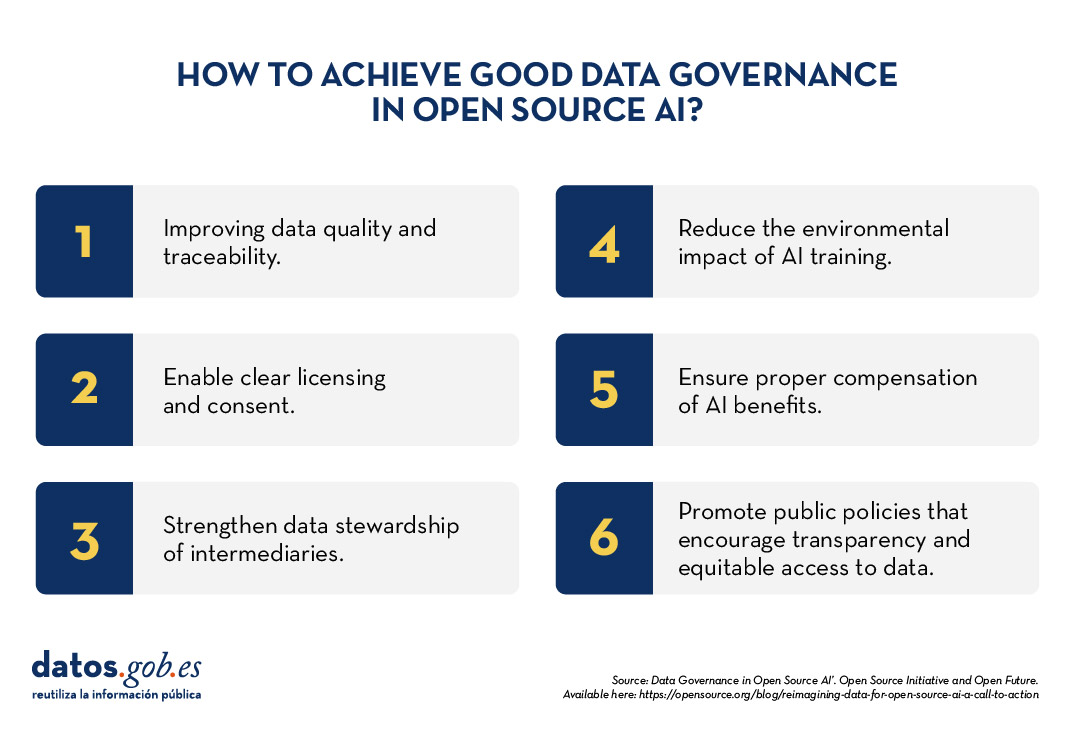

To make robust data governance effective in open source AI, the report proposes six priority areas for action:

- Data preparation and traceability: Improve the quality and documentation of data sets.

- Licensing and consent mechanisms: allow data creators to clearly define their use.

- Data stewardship: strengthen the role of intermediaries who manage data ethically.

- Environmental sustainability: Reduce the impact of AI training with efficient practices.

- Compensation and reciprocity: ensure that the benefits of AI reach those who contribute data.

- Public policy interventions: promote regulations that encourage transparency and equitable access to data.

Open source artificial intelligence can drive innovation and equity, but achieving this requires a more inclusive and sustainable approach to data governance. Adopting data commons models and broadening the ecosystem of actors will help build AI systems that are fairer, more representative and accountable to the common good.

The report published by Open Future and Open Source Initiative calls for action from developers, policymakers and civil society to establish shared standards and solutions that balance open data with the protection of rights. With strong data governance, open source AI will be able to deliver on its promise to serve the public interest.

Introduction

In previous content, we have explored in depth the exciting world of Large Language Models (LLMs) and, in particular, the Retrieval Augmented Generation (RAG) techniques that are revolutionising the way we interact with conversational agents. This exercise marks a milestone in our series, as we will not only explain the concepts, but also guide you step-by-step in building your own RAG-powered conversational agent. For this, we will use a Google Colab notebook.

Access the data lab repository on Github.

Execute the data pre-processing code on Google Colab.

Through this notebook, we will build a chat that uses RAG to improve its responses, starting from scratch. The notebook will guide the user through the whole process:

- Installation of dependencies.

- Setting up the environment.

- Integration of a source of information in the form of a post.

- Incorporation of this source into the chat knowledge base using RAG techniques.

- Finally, we can see how the model's response changes before and after providing the post and asking a specific question about its content.

Tools used

Before starting, it is necessary to introduce and explain which tools we have used and why we have chosen them. For the construction of this RAG application we have used 3 pieces of technology or tools: Google Colab, OpenAI and LangChain. Both Google Colab and OpenAI are old acquaintances and we have used them several times in previous content. Therefore, in this section, we pay special attention to explaining what LangChain is, as it is a new tool that we have not used in previous posts.

- Google Colab. As usual in our exercises, when computing resources and a user-friendly programming environment are needed, we use Google Colab as far as possible. Google Colab allows any user who wants to reproduce the exercise to do so without complications derived from the configuration of each programmer's particular environment. It should be noted that adapting this exercise, inspired by previous resources available in LangChain, to the Google Colab environment has been a challenge.

- OpenAI. As the provider of the large language models (LLMs) behind ChatGPT, OpenAI offers a variety of powerful language models, such as GPT-4, GPT-4o, GPT-4o mini, etc., that are used to process and generate natural language text. In this case, the OpenAI language model is used in the answer generation stage, where the user's question and the retrieved documents are combined to produce an accurate answer.

- LangChain. It is an open source framework (set of libraries) designed to facilitate the development of applications based on large language models (LLMs). This framework is especially useful for integrating and managing complex flows that combine multiple components, such as language models, vector databases, and information retrieval tools, among others.

LangChain is widely used in the development of applications such as:

- Question and answer systems (QA systems).

- Virtual assistants with specific knowledge.

- Customised text generation systems.

- Data analysis tools based on natural language.

Key features of LangChain

- Modularity and flexibility. LangChain is designed with a modular architecture that allows developers to connect different tools and services. This includes language models (such as OpenAI, Hugging Face, or local LLMs) and vector databases (such as Pinecone, ChromaDB or Weaviate). The list of chat models that can be used through LangChain is extensive.

- Support for RAG (Retrieval Augmented Generation) techniques. LangChain facilitates the implementation of RAG techniques by enabling the direct integration of information retrieval and text generation models. This improves the accuracy of responses by enabling LLMs to work with up-to-date and specific knowledge.

- Optimising the handling of prompts. LangChain helps to design and manage complex prompts efficiently. It makes it possible to dynamically build a relevant context for the model, optimising the use of tokens and ensuring that responses are accurate and useful.

- Tokens represent the basic units that an AI model uses to process text. A token can be a whole word, a part of a word or a punctuation mark. In the sentence "Hello world!" there are, for example, three tokens: "Hello", "world" and "!". Text processing requires more computational resources as the number of tokens increases. Free versions of AI models, including the one we use in this exercise, set limits on the number of tokens that can be processed.

- Integrate multiple data sources. The framework can connect to various data sources, such as databases, APIs or user uploaded documents. This makes it ideal for building applications that need access to large volumes of structured or unstructured information.

- Interoperability with multiple LLMs. LangChain is agnostic with respect to the language model provider, which means that you can use OpenAI, Cohere, Anthropic or even locally hosted language models.
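Returning to the idea of tokens mentioned in the list above, the following minimal sketch splits a sentence into words and punctuation marks. It is only an approximation for illustration: real language models use subword tokenisers, so their token counts will usually differ. The function name `rough_tokens` is hypothetical and is not part of LangChain or OpenAI.

```python
import re


def rough_tokens(text: str) -> list[str]:
    """Split text into word and punctuation tokens (a rough approximation)."""
    # \w+ matches a run of letters or digits; [^\w\s] matches one punctuation mark
    return re.findall(r"\w+|[^\w\s]", text)


tokens = rough_tokens("Hello world!")
print(tokens)       # ['Hello', 'world', '!']
print(len(tokens))  # 3
```

Counting tokens in this rough way can help to estimate whether a prompt is likely to approach the limits of a model, although the exact figure always depends on the tokeniser of the model in use.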

To conclude this section, it is worth noting the open source nature of LangChain, which facilitates collaboration and innovation in the development of applications based on language models. In addition, LangChain gives us great flexibility because it allows developers to easily integrate different LLMs, vectorisers and even final web interfaces into their applications.

Step-by-step exploration of the exercise: introduction to the Repository

The Github repository that we will use contains all the resources needed to build our RAG application. Inside, you will find:

- README: this file provides an overview of the project, instructions for use and additional resources.

- Jupyter Notebook: the example has been developed using the Jupyter Notebook format, which we have already used in the past to code practical exercises combining a text document with code snippets executable in Google Colab. It contains the detailed implementation of the application, including data loading and processing, integration with language models such as GPT-4, configuration of information retrieval systems and generation of responses based on the retrieved data.

Notebook: preparing the Environment

Before starting, it is advisable to have the following requirements.

- Basic knowledge of Python and Natural Language Processing (NLP): although the notebook is self-explanatory, a basic understanding of these concepts will facilitate learning.

- Access to Google Colab: the notebook runs in this environment, which provides the necessary infrastructure.

- Active accounts in OpenAI and LangChain with valid API keys. These services are free and essential for running the notebook. Once you have registered, you will need to generate an API key for each service and keep it handy so that you can paste it when executing the corresponding code snippet. If you need help obtaining these keys, any conversational assistant such as ChatGPT or Google Gemini can guide you step by step. If you prefer visual guidance, you will find many tutorials on YouTube.

- OpenAI API: https://openai.com/api/

- LangChain API: https://www.langchain.com/

Exploring the Notebook: block by block

The notebook is divided into several blocks, each dedicated to a specific stage of the development of our RAG application. In the following, we will describe each block in detail, including the code used and its explanation.

Note to user. In the following, we are going to reproduce blocks of the code present in the Colab notebook. For clarity we have divided the code into self-contained units and formatted the code to highlight the syntax of the Python programming language. In addition, the outputs provided by the Notebook have been formatted and highlighted in JSON format to make them more readable. Note that this Notebook invokes language model APIs and therefore the model response changes with each run. This means that the outputs (the answers) presented in this post may not be exactly the same as what the user receives when running the Notebook in Colab.

Block 1: Installation and initial configuration

```python
import os
```

It is very important that you run these two lines at the beginning of the exercise and do not run them again until you close and exit Google Colab.

```python
%%capture
```

```python
!pip install langchain --quiet
```

```python
import getpass

os.environ["LANGCHAIN_TRACING_V2"] = "true"
```

When you run this snippet, a small dialogue box will appear below the snippet. There you must paste your Langchain API Key.

```python
!pip install -qU langchain-openai
```

```python
import getpass
```

When you run this snippet, a small dialogue box will appear below the snippet. There you must paste your OpenAI API Key.

In this first block, we have installed the necessary libraries for our project. Some of the most relevant are:

- openai: To interact with the OpenAI API and access models such as GPT-4.

- langchain: A framework that simplifies LLM application development.

- langchain-text-splitters: To break up long texts into smaller fragments that can be processed by language models.

- langchain-community: A collection of additional tools and components for LangChain.

- langchain-openai: To integrate LangChain with the OpenAI API.

- langgraph: To visualise the workflow of our RAG application.

In addition to installing the libraries, we also set up the API keys for OpenAI and LangChain, using the getpass.getpass() function to enter them securely.
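As an illustration of the key handling described above, here is a minimal sketch that asks for a key only when it is not already stored in the environment. The helper name `ensure_api_key` is hypothetical, and the variable names `OPENAI_API_KEY` and `LANGCHAIN_API_KEY` in the usage comment are assumptions about what the notebook sets; check the notebook itself for the exact names.

```python
import getpass
import os


def ensure_api_key(var_name: str, ask=getpass.getpass) -> str:
    """Return the key stored in var_name, asking for it only if it is missing."""
    if not os.environ.get(var_name):
        # getpass hides the typed value, so the key is not echoed in the notebook
        os.environ[var_name] = ask(f"Enter the value for {var_name}: ")
    return os.environ[var_name]


# Possible usage in the notebook (variable names are assumptions):
# ensure_api_key("OPENAI_API_KEY")
# ensure_api_key("LANGCHAIN_API_KEY")
```

Checking the environment first means that re-running the cell does not prompt for the key again during the same session.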

Block 2: Initialising the interaction with the LLM

Next, we start the first programmatic interaction (we pass our first prompt) with the language model. To check that everything works, we ask it to translate a simple sentence.

```python
import getpass
import os
```

If everything went well, the model will return the Italian translation of the sentence as output.

This block is a basic introduction to using an LLM for a simple task: translation. The OpenAI API key is configured and a gpt-4o-mini language model is instantiated using ChatOpenAI.

Two messages are defined:

- SystemMessage: Instruction to the model for translating from English into Italian.

- HumanMessage: The text to be translated ("hi!").

Finally, the model is invoked with llm.invoke(messages) to get the translation.
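The message structure described above can be sketched as follows. This is not the notebook's code: instead of the SystemMessage and HumanMessage classes it uses plain (role, content) pairs, which, to our understanding, LangChain chat models also accept. The helper name `build_translation_messages` and the exact wording of the system instruction are assumptions, and the `llm` object in the final comment is the gpt-4o-mini model instantiated in the notebook, not created here.

```python
def build_translation_messages(text: str) -> list[tuple[str, str]]:
    """Build the (role, content) pairs for a simple translation request."""
    return [
        # Instruction for the model (plays the role of SystemMessage)
        ("system", "Translate the following from English into Italian"),
        # Text to be translated (plays the role of HumanMessage)
        ("human", text),
    ]


messages = build_translation_messages("hi!")
print(messages)

# With the notebook's model object available, the call would look like:
# response = llm.invoke(messages)
# print(response.content)
```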

Block 3: Creating Embeddings

To understand the concept of Embeddings applied to the context of natural language processing, we recommend reading this post.

```python
import getpass

!pip install -qU langchain-core

from langchain_core.vectorstores import InMemoryVectorStore
```

When you run this snippet, a small dialogue box will appear below the snippet. There you must paste your OpenAI API Key.

This block focuses on the creation of embeddings (vector representations of text) that capture their semantic meaning. We use the OpenAIEmbeddings class to access OpenAI's text-embedding-3-large model, which generates high-quality embeddings.

The embeddings will be stored in an InMemoryVectorStore, an in-memory data structure that allows efficient searches based on semantic similarity.
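To give an intuition of what a search based on semantic similarity involves, here is a minimal sketch of an in-memory store that ranks texts by cosine similarity. It is a toy illustration, not LangChain's implementation: the class `TinyVectorStore` is hypothetical and the three-dimensional vectors are made up by hand, whereas real embeddings are produced by the model and have many more dimensions.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


class TinyVectorStore:
    """Keeps (text, vector) pairs in a list and searches them by similarity."""

    def __init__(self) -> None:
        self.items: list[tuple[str, list[float]]] = []

    def add(self, text: str, vector: list[float]) -> None:
        self.items.append((text, vector))

    def similarity_search(self, query_vector: list[float], k: int = 2) -> list[str]:
        # Sort all stored items from most to least similar to the query
        ranked = sorted(
            self.items,
            key=lambda item: cosine_similarity(query_vector, item[1]),
            reverse=True,
        )
        return [text for text, _ in ranked[:k]]


store = TinyVectorStore()
store.add("post about open data", [0.9, 0.1, 0.0])
store.add("post about language models", [0.1, 0.9, 0.2])
store.add("post about cooking", [0.0, 0.1, 0.9])

print(store.similarity_search([0.2, 0.8, 0.1], k=1))  # ['post about language models']
```

Comparing every stored vector with the query is enough for a single post; larger collections would normally rely on a dedicated vector database.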

Block 4: Implementing RAG

```python
# RAG
import bs4
from langchain_community.document_loaders import WebBaseLoader

# Keep only the post title, headers and content from the HTML
bs4_strainer = bs4.SoupStrainer(class_=("post-title", "post-header", "post-content"))
loader = WebBaseLoader(
    web_paths=("https://datos.gob.es/es/blog/slm-llm-rag-y-fine-tuning-pilares-de-la-ia…",)
)
docs = loader.load()

# The loader returns a list with a single document (the post)
assert len(docs) == 1
print(f"Total characters: {len(docs[0].page_content)}")

from langchain_text_splitters import RecursiveCharacterTextSplitter

# Split the post into overlapping fragments
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,
    chunk_overlap=200,
    add_start_index=True,
)
all_splits = text_splitter.split_documents(docs)
print(f"Split blog post into {len(all_splits)} sub-documents.")

# Add the fragments to the vector store created in the previous block
document_ids = vector_store.add_documents(documents=all_splits)
print(document_ids[:3])
```

This block is the heart of the RAG implementation. It starts by loading the content of a post, using WebBaseLoader and the URL of the post on SLM, LLM, RAG and Fine-tuning.

To prepare our Retrieval Augmented Generation (RAG) system, we start by processing the text of the post using segmentation techniques. This initial step is crucial, as we break down the content into smaller fragments that are complete in meaning. We use LangChain tools to perform this segmentation, assigning each fragment a unique identifier (id). This prior preparation allows us to subsequently perform efficient and accurate searches when the system needs to retrieve relevant information to answer queries.

The bs4.SoupStrainer is used to extract only the relevant sections of the HTML. The text of the post is split into smaller fragments with RecursiveCharacterTextSplitter, ensuring overlap between fragments to maintain context. These fragments are added to the vector_store created in the previous block, generating embeddings for each one.
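The effect of the overlap between fragments can be seen in the following minimal sketch. It is a simplified, fixed-size splitter written only for illustration: RecursiveCharacterTextSplitter additionally tries to cut at natural separators such as paragraphs, so its fragments will not match these exactly. The function name `split_with_overlap` is hypothetical; the values 1000 and 200 are the ones used in the notebook.

```python
def split_with_overlap(text: str, chunk_size: int = 1000, chunk_overlap: int = 200) -> list[dict]:
    """Cut text into fixed-size fragments that share chunk_overlap characters."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far each new fragment advances
    chunks = []
    for start in range(0, len(text), step):
        chunks.append({"start_index": start, "text": text[start:start + chunk_size]})
        if start + chunk_size >= len(text):
            break  # the last fragment already reaches the end of the text
    return chunks


sample = "abcdefghij" * 250  # 2,500 characters of dummy text
chunks = split_with_overlap(sample)
print(len(chunks))                          # 3
print([c["start_index"] for c in chunks])   # [0, 800, 1600]
# The end of one fragment is repeated at the start of the next one
print(chunks[0]["text"][-200:] == chunks[1]["text"][:200])  # True
```

The repeated characters are what allow a sentence that falls on a boundary to remain readable in at least one fragment.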

The output tells us that the document has been split into 21 sub-documents.

```
Split blog post into 21 sub-documents.
```

Each document has its own identifier. For example, the first 3 are identified as:

```
["409f1bcb-1710-49b0-80f8-e45b7ca51a96", "e242f16c-71fd-4e7b-8b28-ece6b1e37a1c", "9478b11c-61ab-4dac-9903-f8485c4770c6"]
```

Block 5: Defining the Prompt and visualising the workflow

```python
from langchain import hub

# Predefined RAG prompt from the LangChain Hub
prompt = hub.pull("rlm/rag-prompt")

example_messages = prompt.invoke(
    {"context": "(context goes here)", "question": "(question goes here)"}
).to_messages()

assert len(example_messages) == 1
print(example_messages[0].content)

from langchain_core.documents import Document
from typing_extensions import List, TypedDict


# State shared by the steps of the workflow
class State(TypedDict):
    question: str
    context: List[Document]
    answer: str


def retrieve(state: State):
    # Search the vector store for the fragments most similar to the question
    retrieved_docs = vector_store.similarity_search(state["question"])
    return {"context": retrieved_docs}


def generate(state: State):
    # Join the retrieved fragments and pass them to the LLM as context
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}


from langgraph.graph import START, StateGraph

graph_builder = StateGraph(State).add_sequence([retrieve, generate])
graph_builder.add_edge(START, "retrieve")
graph = graph_builder.compile()

from IPython.display import Image, display

display(Image(graph.get_graph().draw_mermaid_png()))

result = graph.invoke({"question": "What is Task Decomposition?"})
print(f"Context: {result['context']}\n\n")
print(f"Answer: {result['answer']}")

for step in graph.stream(
    {"question": "¿Cual es el futuro de la IA Generativa?"}, stream_mode="updates"
):
    print(f"{step}\n\n----------------\n")
```

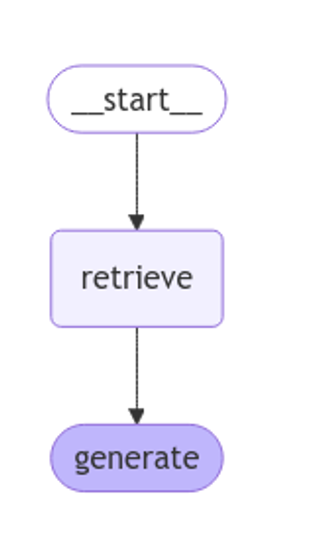

This block defines the prompt to be used to interact with the LLM. A predefined LangChain Hub prompt (rlm/rag-prompt) is used which is designed for RAG tasks.

Two functions are defined:

- retrieve: searches the vector_store for the fragments most similar to the user's question.

- generate: generates a response using the LLM, taking into account the context provided by the retrieved fragments.

langgraph is used to visualise the RAG workflow.

Figure 1: RAG workflow. Own elaboration.

Finally, the system is tested with two questions: one in English ("What is Task Decomposition?") and one in Spanish ("¿Cual es el futuro de la IA Generativa?").

The first question, "What is Task Decomposition?", is in English and is a generic question, unrelated to our content post. Therefore, although the system searches in its knowledge base previously created with the vectorisation of the document (post), it does not find any relation between the question and this context.

This text may vary with each execution:

```
Answer: There is no explicit mention of the concept of "Task Decomposition" in the context provided. Therefore, I have no information on what Task Decomposition is.
```

```
Answer: Task Decomposition is a process that decomposes a complex task into smaller, more manageable sub-tasks. This allows each subtask to be addressed independently, facilitating their resolution and improving overall efficiency. Although the context provided does not explicitly define Task Decomposition, this concept is common in AI and task optimisation.
```

As you can see in the response, the system retrieves 4 documents (in the diagram above, this corresponds to the "retrieve" stage) with their corresponding identifiers ("id"). For example, the first document has the "id": "53962c40-c08b-4547-a74a-26f63cced7e8", which corresponds to a fragment of the original post with the "title": "SLM, LLM, RAG and Fine-tuning: Pillars of Modern Generative AI | datos.gob.es".

With these 4 fragments the system considers that it has enough relevant information to provide (in the diagram above, the "generate" stage) a satisfactory answer to the question.


Block 6: Customising the prompt

```python
from langchain_core.prompts import PromptTemplate

# Custom instructions: concise answers that end with a polite sentence
template = """Use the following pieces of context to answer the question at the end.
If you don't know the answer, just say that you don't know, don't try to make up an answer.
Use three sentences maximum and keep the answer as concise as possible.
Always say "thanks for asking!" at the end of the answer.

{context}

Question: {question}

Helpful Answer:"""

custom_rag_prompt = PromptTemplate.from_template(template)
```

This block customises the prompt to make answers more concise and add a polite sentence at the end. PromptTemplate is used to create a new prompt with the desired instructions.
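To see how the placeholders of a template of this kind are filled in, the following minimal sketch uses Python's built-in str.format as a stand-in for PromptTemplate, which by default uses the same curly-brace placeholders. The shortened template and the example values are assumptions made for illustration only.

```python
# Shortened version of the template, with the same two placeholders
template = (
    "Use the following pieces of context to answer the question at the end.\n"
    "\n"
    "{context}\n"
    "\n"
    "Question: {question}\n"
    "\n"
    "Helpful Answer:"
)

# Each placeholder is replaced by the value passed with the same name
filled = template.format(
    context="(retrieved fragments go here)",
    question="(user question goes here)",
)
print(filled)
```

In the RAG workflow, the context value would be the text of the retrieved fragments and the question value would be the user's query.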

Block 7: Adding metadata and refining your search

```python
total_documents = len(all_splits)
third = total_documents // 3

# Label each fragment according to its position in the post
for i, document in enumerate(all_splits):
    if i < third:
        document.metadata["section"] = "beginning"
    elif i < 2 * third:
        document.metadata["section"] = "middle"
    else:
        document.metadata["section"] = "end"

all_splits[0].metadata

from langchain_core.vectorstores import InMemoryVectorStore

# Rebuild the vector store so that it includes the new metadata
vector_store = InMemoryVectorStore(embeddings)
_ = vector_store.add_documents(all_splits)

from typing import Literal
from typing_extensions import Annotated


class Search(TypedDict):
    """Search query."""

    query: Annotated[str, ..., "Search query to run."]
    section: Annotated[
        Literal["beginning", "middle", "end"],
        ...,
        "Section to query.",
    ]


class State(TypedDict):
    question: str
    query: Search
    context: List[Document]
    answer: str


def analyze_query(state: State):
    # Ask the LLM for a structured query (search text and section)
    structured_llm = llm.with_structured_output(Search)
    query = structured_llm.invoke(state["question"])
    return {"query": query}


def retrieve(state: State):
    # Restrict the similarity search to the section chosen by the LLM
    query = state["query"]
    retrieved_docs = vector_store.similarity_search(
        query["query"],
        filter=lambda doc: doc.metadata.get("section") == query["section"],
    )
    return {"context": retrieved_docs}


def generate(state: State):
    docs_content = "\n\n".join(doc.page_content for doc in state["context"])
    messages = prompt.invoke({"question": state["question"], "context": docs_content})
    response = llm.invoke(messages)
    return {"answer": response.content}


graph_builder = StateGraph(State).add_sequence([analyze_query, retrieve, generate])
graph_builder.add_edge(START, "analyze_query")
graph = graph_builder.compile()

display(Image(graph.get_graph().draw_mermaid_png()))

for step in graph.stream(
    {"question": "¿Cual es el futuro de la IA Generativa en palabras del autor?"},
    stream_mode="updates",
):
    print(f"{step}\n\n----------------\n")
```

In this block, metadata is added to the post fragments, dividing them into three sections: "beginning", "middle" and "end". This allows for more refined searches, limiting the search to a specific section of the post.
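The labelling and filtering logic can be tried in isolation with the minimal sketch below. It uses plain dictionaries instead of LangChain Document objects, and the function name `label_sections` and the dummy fragments are hypothetical; the figure of 21 fragments is the one reported earlier for the post.

```python
def label_sections(fragments: list[dict]) -> None:
    """Tag each fragment as 'beginning', 'middle' or 'end' by its position."""
    third = len(fragments) // 3
    for i, fragment in enumerate(fragments):
        if i < third:
            section = "beginning"
        elif i < 2 * third:
            section = "middle"
        else:
            section = "end"
        fragment["metadata"]["section"] = section


# 21 dummy fragments, the same number of sub-documents obtained from the post
fragments = [{"text": f"fragment {i}", "metadata": {}} for i in range(21)]
label_sections(fragments)

# Keep only the fragments of one section, as the filter in retrieve does
end_only = [f for f in fragments if f["metadata"]["section"] == "end"]
print(len(end_only))        # 7
print(end_only[0]["text"])  # fragment 14
```

When the number of fragments is not a multiple of three, the remaining fragments fall into the "end" section, because of the integer division used to compute each third.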

A new analyze_query function is introduced that uses the LLM to determine the section of the post most relevant to the user's question. The RAG workflow is updated to include this new step.

Finally, the system is tested with a question in Spanish ("¿Cual es el futuro de la IA Generativa en palabras del autor?", that is, "What is the future of Generative AI in the author's words?"), observing how the system uses the information in the "end" section of the post to generate a more accurate answer.

Let's look at the result:

Figure 2: RAG workflow. Own elaboration.


Conclusions

Through this tour of the Google Colab notebook, we have experienced first-hand the construction of a conversational agent with RAG. We have learned to:

- Install the necessary libraries.

- Configure the development environment.

- Load and process data.

- Create embeddings and store them in a vector_store.

- Implement the RAG retrieval and generation stages.

- Customise the prompt to get more specific answers.

- Add metadata to refine the search.

This practical exercise provides you with the tools and knowledge you need to start exploring the potential of RAG and develop your own applications.

Dare to experiment with different information sources, language models and prompts to create increasingly sophisticated conversational agents!

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation. The contents and points of view reflected in this publication are the sole responsibility of its author.

Open data portals are an invaluable source of public information. However, extracting meaningful insights from this data can be challenging for users without advanced technical knowledge.

In this practical exercise, we will explore the development of a web application that democratizes access to this data through the use of artificial intelligence, allowing users to make queries in natural language.

The application, developed using the datos.gob.es portal as a data source, integrates modern technologies such as Streamlit for the user interface and Google's Gemini language model for natural language processing. The modular nature allows any Artificial Intelligence model to be used with minimal changes. The complete project is available in the Github repository.

Access the data laboratory repository on Github.

Run the data preprocessing code on Google Colab.

In this video, the author explains what you will find both on Github and Google Colab.

Application Architecture

The core of the application is based on four main interconnected sections that work together to process user queries:

- Context Generation

- Analyzes the characteristics of the chosen dataset.

- Generates a detailed description including dimensions, data types, and statistics.

- Creates a structured template with specific guidelines for code generation.

- Context and Query Combination

- Combines the generated context with the user's question, creating the prompt that the artificial intelligence model will receive.

- Response Generation

- Sends the prompt to the model and obtains the Python code that answers the user's question.

- Code Execution

- Safely executes the generated code with a retry and automatic correction system.

- Captures and displays the results in the application frontend.

Figure 1. Request processing flow
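The retry and automatic correction idea in the last stage can be sketched as follows. This is a simplified illustration under stated assumptions, not the application's code: `run_with_retries` and `fake_fix` are hypothetical names, the fixing function stands in for a new call to the language model, and Python's exec is not a sandbox, so a real application would need proper isolation before running generated code.

```python
def run_with_retries(code: str, fix_code, max_attempts: int = 3) -> dict:
    """Run generated code; if it fails, ask fix_code for a corrected version."""
    last_error = None
    for attempt in range(1, max_attempts + 1):
        namespace: dict = {}
        try:
            # NOTE: exec offers no isolation; shown only to illustrate the loop
            exec(code, namespace)
            return {"ok": True, "attempts": attempt, "result": namespace.get("result")}
        except Exception as error:
            last_error = f"{type(error).__name__}: {error}"
            if attempt < max_attempts:
                # Pass the failing code and the error message to the "corrector"
                code = fix_code(code, last_error)
    return {"ok": False, "attempts": max_attempts, "error": last_error}


def fake_fix(code: str, error: str) -> str:
    # Stand-in for asking the model to repair the code using the error message
    return "result = sum([1, 2, 3]) / 3"


outcome = run_with_retries("result = total / 3", fake_fix)
print(outcome)  # {'ok': True, 'attempts': 2, 'result': 2.0}
```

The first attempt fails because the variable total does not exist, and the corrected version succeeds on the second attempt; limiting the number of attempts avoids endless loops when the code cannot be repaired.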

Development Process

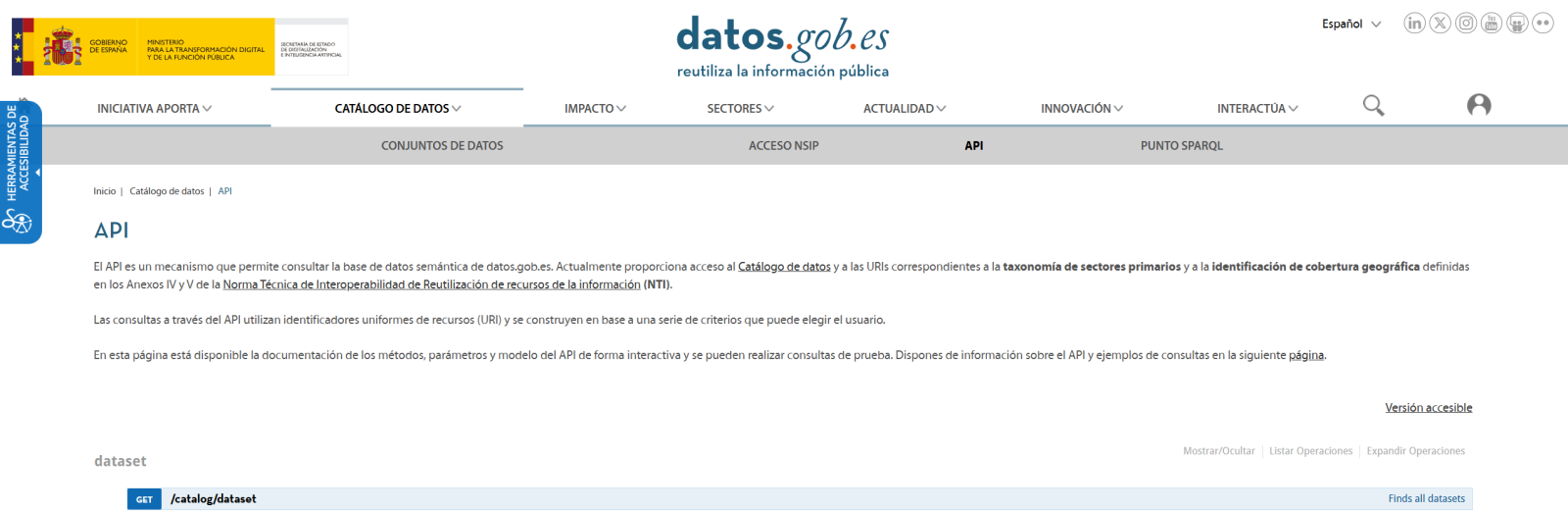

The first step is to establish a way to access public data. The datos.gob.es portal offers datasets via API. Functions have been developed to navigate the catalog and download these files efficiently.

Figure 2. datos.gob API
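As a rough idea of what navigating the catalogue could look like, the sketch below only builds the URL of a paginated request, without sending it. The base path, the `catalog/dataset` resource, the `.json` suffix and the `_pageSize` and `_page` parameters are assumptions based on our understanding of the portal's API and should be checked against its official documentation; the function name `catalog_url` is hypothetical.

```python
from urllib.parse import urlencode

# Assumed base path of the datos.gob.es API (verify in the portal documentation)
BASE_URL = "https://datos.gob.es/apidata"


def catalog_url(page: int = 0, page_size: int = 10) -> str:
    """Build the URL for one page of the dataset catalogue (assumed endpoint)."""
    query = urlencode({"_pageSize": page_size, "_page": page})
    return f"{BASE_URL}/catalog/dataset.json?{query}"


print(catalog_url(page=0, page_size=5))
# https://datos.gob.es/apidata/catalog/dataset.json?_pageSize=5&_page=0

# The request itself could then be made with, for example:
# import json, urllib.request
# with urllib.request.urlopen(catalog_url()) as response:
#     data = json.load(response)
```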

The second step addresses the question: how to convert natural language questions into useful data analysis? This is where Gemini, Google's language model, comes in. However, it's not enough to simply connect the model; it's necessary to teach it to understand the specific context of each dataset.

A three-layer system has been developed:

- A function that analyzes the dataset and generates a detailed "technical sheet".

- Another that combines this sheet with the user's question.

- And a third that translates all this into executable Python code.
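The first two layers can be illustrated with the minimal sketch below, which describes a small dataset and combines that description with the user's question. It is an assumption-laden illustration and not the application's code: the function names `describe_dataset` and `build_prompt`, the prompt wording and the three sample records are made up, and plain lists of dictionaries are used instead of DataFrames to keep the example self-contained.

```python
def describe_dataset(rows: list[dict]) -> str:
    """Build a short 'technical sheet': size, column types and basic statistics."""
    columns = list(rows[0].keys()) if rows else []
    lines = [f"Rows: {len(rows)}", f"Columns: {len(columns)}"]
    for name in columns:
        values = [row[name] for row in rows if row.get(name) is not None]
        type_name = type(values[0]).__name__ if values else "unknown"
        line = f"- {name} ({type_name})"
        # Add simple statistics only for numeric columns
        if values and all(isinstance(v, (int, float)) for v in values):
            mean = sum(values) / len(values)
            line += f": min={min(values)}, max={max(values)}, mean={mean:.1f}"
        lines.append(line)
    return "\n".join(lines)


def build_prompt(sheet: str, question: str) -> str:
    """Combine the dataset description with the user's question."""
    return (
        "You are given a dataset with this description:\n"
        f"{sheet}\n\n"
        "Write Python code that answers the question below and stores "
        "the answer in a variable named result.\n"
        f"Question: {question}"
    )


# Made-up sample records, for illustration only
vehicles = [
    {"model": "van", "registration_year": 2005},
    {"model": "truck", "registration_year": 2012},
    {"model": "car", "registration_year": 2016},
]
sheet = describe_dataset(vehicles)
print(build_prompt(sheet, "What is the average age of the vehicle fleet?"))
```

The resulting text is what would be sent to the language model, which covers the third layer by returning executable Python code.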

You can see in the image below how this process develops and, subsequently, the results of the generated code are shown once executed.

Figure 3. Visualization of the application's response processing

Finally, with Streamlit, a web interface has been built that shows the process and its results to the user. The interface is as simple as choosing a dataset and asking a question, but also powerful enough to display complex visualizations and allow data exploration.

The final result is an application that allows anyone, regardless of their technical knowledge, to perform data analysis and learn about the code executed by the model. For example, a municipal official can ask "What is the average age of the vehicle fleet?" and get a clear visualization of the age distribution.

Figure 4. Complete use case. Visualizing the distribution of registration years of the municipal vehicle fleet of Almendralejo in 2018

What Can You Learn?

This practical exercise allows you to learn:

- AI Integration in Web Applications:

- How to communicate effectively with language models like Gemini.

- Techniques for structuring prompts that generate precise code.

- Strategies for safely handling and executing AI-generated code.

- Web Development with Streamlit:

- Creating interactive interfaces in Python.

- Managing state and sessions in web applications.

- Implementing visual components for data.

- Working with Open Data:

- Connecting to and consuming public data APIs.

- Processing Excel files and DataFrames.

- Data visualization techniques.

- Development Best Practices:

- Modular structuring of Python code.

- Error handling and retries.

- Implementation of visual feedback systems.

- Web application deployment using ngrok.

Conclusions and Future

This exercise demonstrates the extraordinary potential of artificial intelligence as a bridge between public data and end users. Through the practical case developed, we have been able to observe how the combination of advanced language models with intuitive interfaces allows us to democratize access to data analysis, transforming natural language queries into meaningful analysis and informative visualizations.

For those interested in expanding the system's capabilities, there are multiple promising directions for its evolution:

- Incorporation of more advanced language models that allow for more sophisticated analysis.

- Implementation of learning systems that improve responses based on user feedback.

- Integration with more open data sources and diverse formats.

- Development of predictive and prescriptive analysis capabilities.

In summary, this exercise not only demonstrates the feasibility of democratizing data analysis through artificial intelligence, but also points to a promising path toward a future where access to and analysis of public data is truly universal. The combination of modern technologies such as Streamlit, language models, and visualization techniques opens up a range of possibilities for organizations and citizens to make the most of the value of open data.

Artificial Intelligence (AI) is no longer a futuristic concept and has become a key tool in our daily lives. From movie or series recommendations on streaming platforms to virtual assistants like Alexa or Google Assistant on our devices, AI is everywhere. But how do you build an AI model? Despite what it might seem, the process is less intimidating if we break it down into clear and understandable steps.

Step 1: Define the problem

Before we start, we need to be very clear about what we want to solve. AI is not a magic wand: different models will work better in different applications and contexts so it is important to define the specific task we want to execute. For example, do we want to predict the sales of a product? Classify emails as spam or non-spam? Having a clear definition of the problem will help us structure the rest of the process.

In addition, we need to consider what kind of data we have and what the expectations are. This includes determining the desired level of accuracy and the constraints of available time or resources.

Step 2: Collect the data

The quality of an AI model depends directly on the quality of the data used to train it. This step consists of collecting and organising the data relevant to our problem. For example, if we want to predict sales, we will need historical data such as prices, promotions or buying patterns.

Data collection starts with identifying relevant sources, which can be internal databases, sensors, surveys and so on. In addition to the company's own data, there is a wide ecosystem of data, both open and proprietary, that can be drawn upon to build more powerful models. For example, the Government of Spain makes available through the datos.gob.es portal multiple sets of open data published by public institutions. Similarly, Amazon Web Services (AWS), through its AWS Data Exchange portal, allows access and subscription to thousands of proprietary datasets published and maintained by different companies and organisations.

The amount of data required must also be considered here. AI models often require large volumes of information to learn effectively. It is also crucial that the data are representative and do not contain biases that could affect the results. For example, if we train a model to predict consumption patterns and only use data from a limited group of people, it is likely that the predictions will not be valid for other groups with different behaviours.

Step 3: Prepare and explore the data

Once the data have been collected, it is time to clean and normalise them. Raw data often contain problems such as errors, duplicates, missing values, inconsistencies or non-standardised formats. For example, you might find empty cells in a sales dataset or dates that do not follow a consistent format. Before feeding the data to the model, it is essential to correct these problems so that the analysis is accurate and reliable. This step not only improves the quality of the results, but also ensures that the model can correctly interpret the information.
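The cleaning tasks described above can be sketched in a few lines. The following is a minimal illustration using only the Python standard library; the records, the field names and the two date formats are assumptions made up for the example, not part of any real dataset.

```python
from datetime import datetime

# Hypothetical raw sales records with typical problems:
# a duplicated sale, a missing price and inconsistent date formats.
raw_rows = [
    {"date": "2024-01-05", "product": "A", "price": "10.0"},
    {"date": "05/01/2024", "product": "A", "price": "10.0"},  # same sale, other date format
    {"date": "2024-01-06", "product": "B", "price": ""},      # empty cell
    {"date": "2024-01-07", "product": "B", "price": "12.5"},
]

# Assumed formats present in the data (ISO and day/month/year).
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")


def parse_date(text):
    """Try each known format and return a date, or None if none matches."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date()
        except ValueError:
            continue
    return None


def clean(rows):
    """Normalise dates, drop rows with missing values and remove duplicates."""
    seen = set()
    cleaned = []
    for row in rows:
        date = parse_date(row["date"])
        if date is None or row["price"] == "":
            continue  # drop rows that cannot be interpreted
        price = float(row["price"])
        key = (date, row["product"], price)
        if key in seen:
            continue  # drop duplicates detected after normalisation
        seen.add(key)
        cleaned.append({"date": date, "product": row["product"], "price": price})
    return cleaned


clean_rows = clean(raw_rows)
for row in clean_rows:
    print(row)
```

Dropping incomplete rows is only one option; depending on the problem, missing values could instead be filled in, for example with the average of the column.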

Once the data are clean, it is essential to perform feature engineering, a creative process that can make the difference between a basic model and an excellent one. This phase consists of creating new variables that better capture the nature of the problem we want to solve. For example, if we are analysing online sales, in addition to using the direct price of the product, we could create new features such as the ratio between the price and the category average, the days since the last promotion, or variables that capture the seasonality of sales. Experience shows that well-designed features are often more important for the success of the model than the choice of the algorithm itself.
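As an illustration of the features mentioned above, the sketch below derives a price-to-category-average ratio, the days since the last promotion and a simple seasonality indicator. The records and field names are hypothetical, and using the month as a seasonality signal is an assumption chosen for simplicity.

```python
from datetime import date
from statistics import mean

# Hypothetical sales records; all field names are illustrative.
sales = [
    {"product": "A", "category": "toys", "price": 10.0,
     "date": date(2024, 3, 10), "last_promo": date(2024, 3, 1)},
    {"product": "B", "category": "toys", "price": 30.0,
     "date": date(2024, 3, 10), "last_promo": date(2024, 2, 9)},
    {"product": "C", "category": "books", "price": 15.0,
     "date": date(2024, 3, 10), "last_promo": date(2024, 3, 10)},
]

# Average price per category, needed for the ratio feature.
prices_by_category = {}
for row in sales:
    prices_by_category.setdefault(row["category"], []).append(row["price"])
category_average = {cat: mean(prices) for cat, prices in prices_by_category.items()}

# Build the derived features for each record.
features = []
for row in sales:
    features.append({
        "product": row["product"],
        "price_to_category_avg": row["price"] / category_average[row["category"]],
        "days_since_promo": (row["date"] - row["last_promo"]).days,
        "month": row["date"].month,  # crude seasonality signal
    })

for f in features:
    print(f)
```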

In this phase, we will also carry out a first exploratory analysis of the data, seeking to familiarise ourselves with the data and detect possible patterns, trends or irregularities that may influence the model. Further details on how to conduct an exploratory data analysis can be found in this guide.

Another typical activity at this stage is to divide the data into training, validation and test sets. For example, if we have 10,000 records, we could use 70% for training, 20% for validation and 10% for testing. Keeping these sets separate lets us tune the model on the validation set and then check on the test set that it has not overfitted to the training data.
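The 70/20/10 split from the example can be sketched as follows. This is a minimal standard-library version; the function name `split_dataset` and the fixed seed are assumptions for illustration, and libraries such as scikit-learn offer ready-made helpers (for instance `train_test_split`) for the same purpose.

```python
import random


def split_dataset(records, train=0.7, validation=0.2, seed=42):
    """Shuffle the records and split them into train, validation and test sets.

    Whatever remains after the train and validation shares becomes the test set.
    """
    shuffled = list(records)
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps the split reproducible
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * validation)
    train_set = shuffled[:n_train]
    val_set = shuffled[n_train:n_train + n_val]
    test_set = shuffled[n_train + n_val:]
    return train_set, val_set, test_set


# 10,000 placeholder records, as in the example in the text.
records = list(range(10_000))
train_set, val_set, test_set = split_dataset(records)
print(len(train_set), len(val_set), len(test_set))
```

Shuffling before splitting avoids accidental ordering effects, although for chronologically ordered data a split by date is usually more appropriate.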

To ensure that our evaluation is robust, especially when working with limited datasets, it is advisable to implement cross-validation techniques. This methodology divides the data into multiple subsets and performs several iterations of training and validation. For example, in a 5-fold cross-validation, we split the data into 5 parts and train 5 times, each time using a different part as the validation set. This gives us a more reliable estimate of the real performance of the model and helps us to detect problems of overfitting or variability in the results.
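The 5-fold procedure described above can be sketched in plain Python. The helper `k_fold_indices`, the toy values and the "model" (which simply predicts the mean of the training targets) are all assumptions made for illustration; in practice the data would normally be shuffled first, and scikit-learn provides a `KFold` class for this.

```python
from statistics import mean


def k_fold_indices(n_samples, k=5):
    """Yield (train_indices, validation_indices) for each of the k folds."""
    # Distribute any remainder over the first folds so sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n_samples))
        yield train, val
        start += size


# Toy target values; the stand-in model predicts the mean of its training fold.
y = [3.0, 5.0, 4.0, 6.0, 5.0, 7.0, 6.0, 8.0, 7.0, 9.0]

fold_errors = []
for train_idx, val_idx in k_fold_indices(len(y), k=5):
    prediction = mean(y[i] for i in train_idx)            # "train" on 4 parts
    mae = mean(abs(y[i] - prediction) for i in val_idx)   # validate on the 5th
    fold_errors.append(mae)

cv_estimate = mean(fold_errors)
print("Error per fold:", fold_errors)
print("Cross-validated error:", cv_estimate)
```

A large spread between the per-fold errors is itself a useful signal: it suggests that the result depends heavily on which records end up in the validation set.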

Step 4: Select a model

There are multiple types of AI models, and the choice depends on the problem we want to solve. Common examples are regression, decision tree models, clustering models, time series models or neural networks. In general, there are supervised models, unsupervised models and reinforcement learning models. More detail can be found in this post on how machines learn.

When selecting a model, it is important to consider factors such as the nature of the data, the complexity of the problem and the ultimate goal. For example, a simple model such as linear regression may be sufficient for simple, well-structured problems, while neural networks or other advanced models might be needed for tasks such as image recognition or natural language processing. In addition, the balance between accuracy, training time and computational resources must also be considered. A more accurate model generally requires more complex configurations, such as more data, deeper neural networks or optimised parameters. Increasing the complexity of the model or working with large datasets can significantly lengthen training time and may require specialised hardware, such as GPUs or TPUs, and larger amounts of memory and storage. This can be a problem in environments where decisions must be made quickly or resources are limited.

Today, many open source libraries facilitate the implementation of these models, such as TensorFlow, PyTorch or scikit-learn.

Step 5: Train the model

Training is at the heart of the process. During this stage, we feed the model with training data so that it learns to perform its task. This is achieved by adjusting the parameters of the model to minimise the error between its predictions and the actual results.

Here it is key to constantly evaluate the performance of the model with the validation set and make adjustments if necessary. For example, in a neural network-type model we could test different hyperparameter settings such as learning rate, number of hidden layers and neurons, batch size, number of epochs, or activation function, among others.
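To make the idea of "adjusting parameters to minimise the error" concrete, the sketch below trains a one-variable linear model by gradient descent and compares several learning rates on a validation set. The data, the function names and the candidate learning rates are assumptions chosen for illustration; a real neural network would be trained with a library such as TensorFlow or PyTorch.

```python
def train_linear_model(xs, ys, learning_rate=0.01, epochs=2000):
    """Fit y = w * x + b by gradient descent on the mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Prediction error for every training example with current parameters.
        errors = [(w * x + b) - y for x, y in zip(xs, ys)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        # Move the parameters a small step against the gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


def mean_squared_error(xs, ys, w, b):
    """Average squared difference between predictions and actual values."""
    return sum(((w * x + b) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Toy data generated from y = 2x + 1 (illustrative only).
train_x, train_y = [0, 1, 2, 3, 4], [1, 3, 5, 7, 9]
val_x, val_y = [5, 6], [11, 13]

# Try several learning rates and keep the one with the lowest validation error.
results = {}
for lr in (0.001, 0.01, 0.05):
    w, b = train_linear_model(train_x, train_y, learning_rate=lr)
    results[lr] = mean_squared_error(val_x, val_y, w, b)

best_lr = min(results, key=results.get)
print("Validation error per learning rate:", results)
print("Best learning rate:", best_lr)
```

The same loop structure applies to other hyperparameters such as the number of epochs or the batch size: train with each candidate setting and compare the results on the validation set, never on the test set.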

Step 6: Evaluate the model

Once trained, it is time to test the model using the test data set we set aside during data preparation. This step is crucial to measure how it performs on data that is new to the model and to check that it is not overfitted ("overtrained"), i.e. that it not only performs well on training data, but can also apply what it has learned to new data generated on a day-to-day basis.

When evaluating a model, in addition to accuracy, it is also common to consider:

- Confidence in predictions: assess how confident the predictions made are.

- Response speed: time taken by the model to process and generate a prediction.

- Resource efficiency: measure how much memory and computational usage the model requires.

- Adaptability: how well the model can be adjusted to new data or conditions without complete retraining.
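Some of these criteria can be measured with very little code. The sketch below computes accuracy, average confidence and response time per prediction on a small test set. The function `predict_spam_probability` is a hypothetical keyword-based stand-in for a trained model, and the messages and labels are invented for the example.

```python
import time


def predict_spam_probability(text):
    """Stand-in for a trained model: a hypothetical keyword-based scorer."""
    keywords = ("free", "winner", "prize")
    hits = sum(word in text.lower() for word in keywords)
    return min(1.0, 0.2 + 0.4 * hits)


# Invented test set: (message, label) with 1 = spam and 0 = not spam.
test_set = [
    ("You are a WINNER, claim your free prize", 1),
    ("Meeting moved to 10am", 0),
    ("Free shipping on your order", 1),
    ("Free for lunch tomorrow?", 0),  # a legitimate message the scorer gets wrong
]

correct = 0
confidences = []
start = time.perf_counter()
for text, label in test_set:
    probability = predict_spam_probability(text)
    predicted = 1 if probability >= 0.5 else 0
    correct += int(predicted == label)
    # Confidence: probability assigned to whichever class was predicted.
    confidences.append(max(probability, 1 - probability))
elapsed = time.perf_counter() - start

accuracy = correct / len(test_set)
mean_confidence = sum(confidences) / len(confidences)
latency_ms = 1000 * elapsed / len(test_set)

print(f"Accuracy: {accuracy:.2f}")
print(f"Mean confidence: {mean_confidence:.2f}")
print(f"Average response time: {latency_ms:.4f} ms per prediction")
```

Note that the misclassified message is still predicted with some confidence, which is why confidence should be read alongside accuracy rather than instead of it.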

Step 7: Deploy and maintain the model

When the model meets our expectations, it is ready to be deployed in a real environment. This could involve integrating the model into an application, automating tasks or generating reports.

However, the work does not end here. The AI needs continuous maintenance to adapt to changes in data or real-world conditions. For example, if buying patterns change due to a new trend, the model will need to be updated.
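One possible way to notice that buying patterns have changed is to compare recent data with the data the model was trained on. The check below is a deliberately simple sketch of that idea, not a standard procedure: the function name, the threshold of two standard deviations and the basket values are all assumptions for illustration.

```python
from statistics import mean, stdev


def needs_retraining(training_values, recent_values, threshold=2.0):
    """Flag a shift when the recent mean lies more than `threshold`
    training standard deviations away from the training mean."""
    baseline_mean = mean(training_values)
    baseline_std = stdev(training_values)
    shift = abs(mean(recent_values) - baseline_mean)
    return shift > threshold * baseline_std


# Hypothetical average basket sizes (in euros).
training_baskets = [20, 22, 19, 21, 20, 23, 18, 21]
stable_week = [21, 20, 22, 19]       # similar to the training period
new_trend_week = [30, 32, 29, 31]    # buying pattern has changed

print("Stable week needs retraining:", needs_retraining(training_baskets, stable_week))
print("New trend needs retraining:", needs_retraining(training_baskets, new_trend_week))
```

In a deployed system a check of this kind would typically run on a schedule, and a positive result would trigger a review or a retraining of the model with more recent data.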

Building AI models is not an exact science; it is the result of a structured process that combines logic, creativity and perseverance. This is because multiple factors are involved, such as data quality, model design choices and human decisions during optimisation. Although clear methodologies and advanced tools exist, model building requires experimentation, fine-tuning and often an iterative approach to obtain satisfactory results. While each step requires attention to detail, the tools and technologies available today make this challenge accessible to anyone interested in exploring the world of AI.

ANNEX I - Definitions: types of models

- Regression: supervised techniques that model the relationship between a dependent variable (outcome) and one or more independent variables (predictors). Regression is used to predict continuous values, such as future sales or temperatures, and may include approaches such as linear or polynomial regression, depending on the complexity of the problem and the relationship between the variables. Logistic regression, despite its name, is normally used to predict categories rather than continuous values.

- Decision tree models: supervised methods that represent decisions and their possible consequences in the form of a tree. At each node, a decision is made based on a characteristic of the data, dividing the set into smaller subsets. These models are intuitive and useful for classification and prediction, as they generate clear rules that explain the reasoning behind each decision.

- Clustering models: unsupervised techniques that group data into subsets called clusters, based on similarities or proximity between the data. For example, customers with similar buying habits can be grouped together to personalise marketing strategies. Models such as k-means or DBSCAN allow useful patterns to be identified without the need for labelled data.

- Time series models: designed to work with chronologically ordered data, these models analyse temporal patterns and make predictions based on history. They are used in cases such as demand forecasting, financial analysis or meteorology. They incorporate trends, seasonality and relationships between past and future data.

- Neural networks: models inspired by the workings of the human brain, where layers of artificial neurons process information and detect complex patterns. They are especially useful in tasks such as image recognition, natural language processing and game playing. Neural networks can be shallow or deep (deep learning), depending on the problem and the amount of data.

- Supervised models: these models learn from labelled data, i.e., sets in which each input has a known outcome. The aim is for the model to generalise to predict outcomes in new data. Examples include spam and non-spam mail classification and price predictions.

- Unsupervised models: these models work with unlabelled data, looking for hidden patterns, structures or relationships within the data. They are ideal for exploratory tasks where the expected outcome is not known in advance, such as market segmentation or dimensionality reduction.

- Reinforcement learning models: in this approach, an agent learns by interacting with an environment, making decisions and receiving rewards or penalties based on performance. This type of learning is useful in problems where decisions affect a long-term goal, such as training robots, playing video games or developing investment strategies.
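As an illustration of the clustering models defined in this annex, the sketch below applies the two steps of k-means (assign each value to the nearest centroid, then move each centroid to the mean of its group) to one-dimensional data. The spending figures and starting centroids are invented, and fixing the starting centroids by hand is a simplification; library implementations usually choose them automatically.

```python
def k_means_1d(values, centroids, iterations=10):
    """Plain k-means on one-dimensional data with given starting centroids."""
    centroids = list(centroids)
    clusters = []
    for _ in range(iterations):
        # Assignment step: attach each value to its nearest centroid.
        clusters = [[] for _ in centroids]
        for v in values:
            nearest = min(range(len(centroids)), key=lambda i: abs(v - centroids[i]))
            clusters[nearest].append(v)
        # Update step: move each centroid to the mean of its cluster
        # (an empty cluster keeps its previous centroid).
        centroids = [
            sum(c) / len(c) if c else centroids[i]
            for i, c in enumerate(clusters)
        ]
    return centroids, clusters


# Hypothetical monthly spend per customer, with two visible groups.
monthly_spend = [12, 15, 14, 80, 85, 90, 13, 88]
centroids, clusters = k_means_1d(monthly_spend, centroids=[0.0, 100.0])

print("Centroids:", centroids)
print("Clusters:", clusters)
```

No labels are supplied at any point: the two customer groups emerge only from the proximity between the values, which is what makes the technique unsupervised.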

Content prepared by Juan Benavente, senior industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

In this episode we will discuss artificial intelligence and its challenges, based on the European Regulation on Artificial Intelligence that entered into force this year. Come and find out about the challenges, opportunities and new developments in the sector from two experts in the field:

- Ricard Martínez, professor of constitutional law at the Universitat de València, where he directs the Microsoft-Universitat de València Chair of Privacy and Digital Transformation.

- Carmen Torrijos, computational linguist, expert in AI applied to language and professor of text mining at the Carlos III University.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. It is a fact that artificial intelligence is constantly evolving. To get into the subject, what are the latest developments in AI?

Carmen Torrijos: Many new applications are emerging. For example, this past weekend there has been a lot of buzz about an AI for image generation in X (Twitter), I don't know if you've been following it, called Grok. It has had quite an impact, not because it brings anything new, as image generation is something we have been doing since December 2023. But this is an AI that has less censorship. That is, until now it was very difficult to get generalist systems to make images that showed celebrities' faces or certain situations, and this was closely monitored in every tool. What Grok does is lift all those restrictions so that anyone can make any kind of image with any famous person or any well-known face. It is probably a passing fad. We will make images for a while and then it will pass.

And then there are also automatic podcast creation systems, such as NotebookLM. We've been watching them for a couple of months now and it's been one of the things that has really surprised me in the last few months. Because it already seems that they are all incremental innovations: on top of what we already have, they give us something better. But this is something really new and surprising. You upload a PDF and it can generate a podcast of two people talking in a totally natural, totally realistic way about that PDF. This is something that NotebookLM, which is owned by Google, can do.