The Smart Agro – Irrigation Recommendations website is a free platform developed by the Cabildo de La Palma as part of the La Palma Smart Island project. It aims to improve the efficiency of water use in local agriculture, especially for banana and avocado crops.

To get a personalized recommendation, the user selects a crop and an area of the island, either from a drop-down menu or on the map. In return, the app:

- Provides detailed graphs showing the evolution of precipitation and reference evapotranspiration (ETo) over the past 7 days in the selected area.

- Generates irrigation recommendations adjusted to the municipality and local climatic conditions.

To carry out the calculations, the platform uses data from the island's network of weather and air quality stations, together with a calculation engine that processes this information to generate weekly recommendations. The data generated by this engine is also integrated into the open data portal, promoting an open innovation ecosystem in which data and services feed back into each other.
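As a rough illustration of the kind of calculation such an engine might perform, the sketch below uses the widely used crop-coefficient approach (FAO-56): crop water demand is estimated as ETo multiplied by a crop coefficient (Kc), and effective rainfall is subtracted. This is not the Smart Agro engine, whose method is not described here; the function name, the Kc value and the 80% effective-rainfall fraction are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class DailyWeather:
    eto_mm: float     # reference evapotranspiration, mm/day
    precip_mm: float  # precipitation, mm/day


def weekly_irrigation_mm(
    days: Iterable[DailyWeather],
    crop_coefficient: float,
    effective_rain_fraction: float = 0.8,  # assumption, not a Smart Agro value
) -> float:
    """Net irrigation need (mm) for the period: Kc * sum(ETo) minus effective rain."""
    days = list(days)
    crop_et = crop_coefficient * sum(d.eto_mm for d in days)
    effective_rain = effective_rain_fraction * sum(d.precip_mm for d in days)
    # A wet week can exceed crop demand; irrigation is never negative.
    return max(0.0, crop_et - effective_rain)


# Hypothetical week: 4 mm/day ETo, 1 mm/day rain, illustrative Kc of 1.0.
week = [DailyWeather(eto_mm=4.0, precip_mm=1.0) for _ in range(7)]
print(round(weekly_irrigation_mm(week, crop_coefficient=1.0), 1))
```

In practice, Kc depends on the crop and its growth stage, and a real recommendation would also account for soil type and irrigation system efficiency.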

CLIMA TERRA is a progressive web application (PWA) that provides real-time environmental information in a clear and accessible way. It allows users to view key parameters such as temperature, relative humidity, wind speed and UV index, based on open meteorological and geospatial data.

The app has been designed with a minimalist and bilingual (Spanish/English) approach, with the aim of bringing open data closer to the public and promoting more informed and sustainable everyday decisions.

embalses.info is a web platform that provides up-to-date information on the status of Spain’s reservoirs and dams. The application offers real-time hydrological data with weekly updates, allowing citizens, researchers, and public managers to consult water levels, capacities, and historical trends for more than 400 reservoirs organized into 16 river basins.

The application includes an interactive dashboard showing the overall status of Spanish reservoirs, an interactive basin map with filling levels (coming soon), and detailed pages for each reservoir with weekly trend charts, comparisons with previous years, and historical records dating back to the 1980s. It features a powerful search engine, data analysis with interactive charts, and a contact form for suggestions.

From a technical standpoint, the platform uses Next.js 14+ with TypeScript on the frontend, Prisma ORM for data access, and PostgreSQL/SQL Server as the database. It is SEO-optimized with a dynamic XML sitemap, optimized meta tags, structured data, and friendly URLs. The site is fully responsive, accessible, and includes automatic light/dark mode.

The public value of the application lies in providing transparency and accessible information on Spain’s water resources, enabling farmers, public administrations, researchers, and the media to make informed decisions based on reliable and up-to-date data.

In this episode we talk about the environment, focusing on the role that data plays in the ecological transition. Can open data help drive sustainability and protect the planet? We find out with our two guests:

- Francisco José Martínez García, conservation director of the natural parks of the south of Alicante.

- José Norberto Mazón, professor of computer languages and systems at the University of Alicante.

Listen to the full episode here (in Spanish)

Summary of the interview


You are both passionate about the use of data for society. How did you discover the potential of open data for environmental management?

Francisco José Martínez: For my part, when I joined the public administration, at the Generalitat Valenciana, the Generalitat launched an open viewer called Visor Gva, which offers a great deal of information: images, metadata and data in various fields. It made my work as a civil servant, including the processing of case files, much easier, and it still does. Later, another database was incorporated, the Biodiversity Data Bank, which offers data in grids of one kilometer by one kilometer. And finally, applied to the natural spaces and wetlands that I direct, there is water quality data. All of these are open and can be used by any researcher for applied research.

José Norberto Mazón: In my case, it was precisely through Francisco as director. He directs three natural parks that are wetlands in the south of Alicante, and he told us about his experience, everything he has just described, with one of them in which we had a special interest: the Natural Park of Laguna de la Mata and Torrevieja. At the University of Alicante we have been working for some time on data management, open data, data interoperability, etc., and we saw the opportunity to look at data management, data generation and data reuse from the perspective of the territory, of the Natural Park itself. Together with other entities such as Proyecto Mastral, Faunatura, AGAMED, and also colleagues from the Polytechnic University of Valencia, we saw the possibility of studying these useful data, focusing above all on the concept of high-value data, which the European Union was betting on: data that has the potential to generate socio-economic or environmental benefits, benefit all users and contribute to building a European society based on the data economy. So we set out to see how we could collaborate, especially to discover the potential of data at the territory level.


Through a strategy called the Green Deal, the European Union aims to become the world's first competitive and resource-efficient economy, achieving net-zero greenhouse gas emissions by 2050. What concrete measures are most urgent to achieve this and how can data help to achieve these goals?

Francisco José Martínez: The European Union has several lines of action and projects, such as LIFE, focused on endangered species, and the ERDF funds to restore habitats. Here in Laguna de la Mata and Torrevieja, we have improved terrestrial habitats with these ERDF funds, precisely so that these habitats capture more CO2 and support more native plant communities, while eliminating invasive species. Then, at the regulatory level, we also have the regulation on nature restoration, which has been in force since 2024 and which requires us to restore up to 30% of degraded terrestrial and marine ecosystems. I must also say that the Biodiversity Foundation, under the Ministry, generates quite a few projects related, for example, to the creation of climate shelters in urban areas. In other words, there are a series of projects and a lot of funding for everything that has to do with renaturalization, habitat improvement and species conservation.

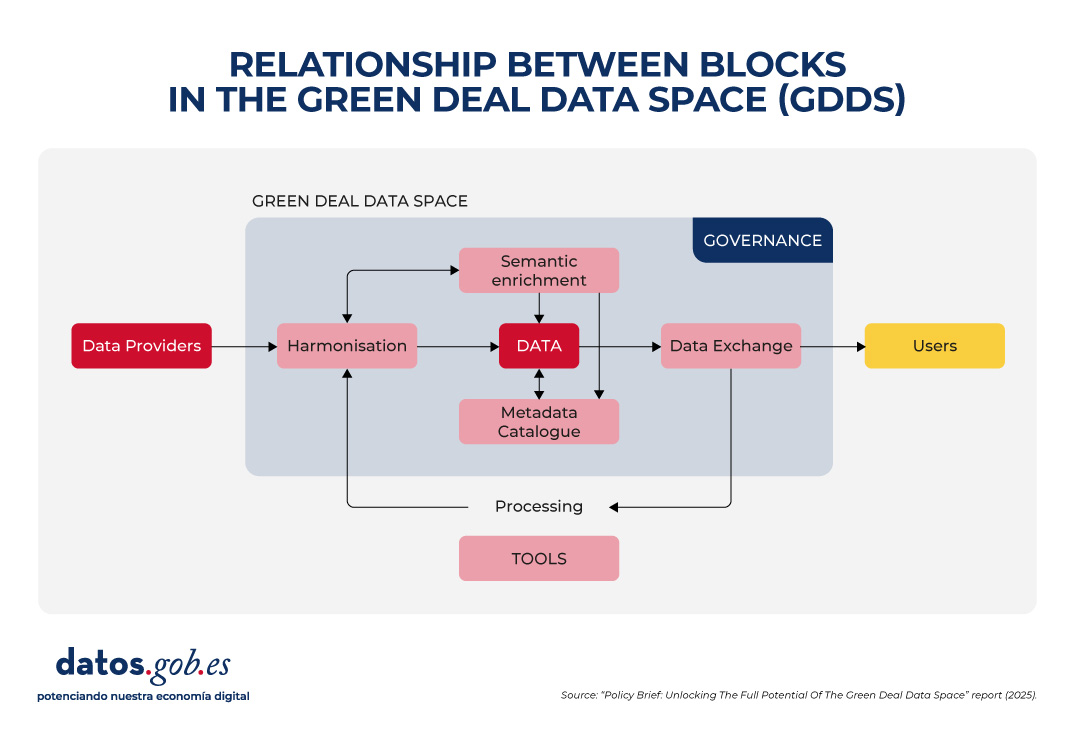

José Norberto Mazón: To complement what Francisco has said, I would focus on data management and the importance it is given within the European Green Deal, specifically through data sharing projects that make data more interoperable. In other words, the data generated by all those actors can become more useful when combined, and generate much more value, in what are called data spaces, and especially in the European Green Deal data space. Some initial projects have recently been completed. To highlight a couple of them: the USAGE project (Urban Data Spaces for Green dEal) has developed two particularly interesting pilots. One, in the city of Ferrara, in Italy, looks at how data for mitigating climate change should be brought into urban management. The other, in Zaragoza, deals with data governance and how to comply with the FAIR principles, using a concept of climate islands that is also very interesting. Then there is another project, AD4GD (All Data for Green Deal), which has also carried out pilots on data interoperability. One is in the Berlin lake network: Berlin has about 300 lakes whose water quality, water quantity, etc. have to be monitored, and this has been done using sensors. Another deals with the management of biological corridors in Catalonia, with data on how species move and how these corridors need to be managed. They have also carried out some air quality initiatives with citizen science. These projects have already been completed, but there is a very interesting project at the European level that is going to launch this large data space of the European Green Deal: the SAGE (Sustainable Green Europe Data Space) project, which is developing ten use cases that cover the whole area of the European Green Deal.
One that is especially pertinent, because it is aligned with the natural parks and wetlands of the south of Alicante that Francisco directs, is the use case on trade-offs between nature and ecosystem services. That is, how nature must be protected and conserved while still allowing socio-economic activities in a sustainable way. This data space will integrate remote sensing, models based on artificial intelligence, data, etc.


Would you like to add any other projects at this local or regional level?

Francisco José Martínez: Yes, of course. The one we have carried out with Norberto, his team and several teams and departments of the Polytechnic University of Valencia and the University of Alicante: the digital twin. Research has been carried out to generate a digital twin of the Natural Park of Las Lagunas, here in Torrevieja. It has been applied research: a lot of data has been generated from sensors, and also from direct observations and from image and sound recorders. A good record has been built of noise, climate and meteorological data to support good management, and it is an invaluable help for those of us who have to make decisions day by day. This project has also collected data of a social nature: tourist use and people's feelings (whether they agree with what they see in the natural space or not). In other words, we have improved our knowledge of this natural space thanks to this digital twin, and that is information that neither our viewer nor the Biodiversity Data Bank can provide us.

José Norberto Mazón: Francisco was talking, for example, about knowing how many people visit certain areas of the natural park, and also what the people who visit it feel and think, which is complicated to find out other than through surveys, which are very cumbersome. To discover that knowledge we have put in place this digital twin, with a multitude of sensors and with data that are also interoperable and allow us to know the territory very well. Obviously, the fact that it is territorial does not mean that it is not scalable. What we are doing with the digital twin project, the ChanTwin project, is making sure it can be transferred or extrapolated to any other natural area, because the problems we have had are ones we are going to find in any natural area, such as connectivity problems, interoperability problems with data that come from sensors, etc. We have sensors of many types: visitor numbers, water quality, temperatures and climatic variables, pollution, etc., all with full guarantees of data privacy. I have to stress this, which is very important: we always try to ensure that this data collection guarantees people's privacy. We can know the concerns of the people who visit the park and also, for example, where those people come from. This is very interesting information for park management, because in this way, for example, Francisco can make more informed decisions to better manage the park. But the people who visit the park also come from a specific municipality, with a city council that has, for example, a Department of the Environment or a Department of Tourism. And this information can be very useful to them for highlighting certain aspects, for example, environmental, biodiversity or socio-economic activity.

Francisco José Martínez: Data are fundamental in the management of the natural environment of a wetland, a mountain, a forest, a pasture... in general, of all natural spaces. Only by following up and monitoring certain environmental parameters can we explain events that may happen, for example, a fish die-off. Without the history of dissolved oxygen and temperature data, it is very difficult to know whether it is because of that or because of a pollutant. Take water temperature, which is related to dissolved oxygen: the higher the temperature, the less dissolved oxygen. Whatever the ambient temperature is, it is transferred to the water, to the lagoons, to the wetlands, and without oxygen a disease appears in spring and summer, botulism; there have already been two years in which more than a thousand animals have died each year. The way to control it is to anticipate when temperatures are going to reach a specific value above which oxygen almost disappears from the water, which gives us time to plan the work teams that remove the carcasses, the fundamental action to prevent it. Another example is the monthly census of waterfowl, which are observed in person and recorded, and we also have recorders that capture sounds. With that we can know the dynamics of when species arrive on migration, and with that we can also manage water. Another example is the temperature of the lagoon here in La Mata, which we are monitoring with the digital twin, because we know that when it reaches almost thirty degrees, the main food of the birds, brine shrimp, disappears, because they cannot live at those extreme temperatures with that salinity. But we can bring in sea water, which, even though these last springs and summers have been very hot, is always cooler, and so we can cool the lagoon and extend the life of this species, whose cycle is precisely synchronized with the reproduction of the birds. So we can manage the water thanks to the monitoring and thanks to the data we have on water temperatures.

José Norberto Mazón: Look at the importance of these examples that Francisco mentioned, which are paradigmatic, and also at the importance of the use of data. I would simply add one point: the effort is to make all these data open and compliant with the FAIR principles, that is, interoperable, because, as Francisco has said, they are data from many sources, each with different characteristics, collected in different ways, etc. He is talking about sensor data, but also about data collected in other ways. They should also allow us to start co-creation processes for tools that use this data at various levels: of course, at the level of management of the natural park itself, to make informed decisions, but also at the level of citizens, and even of other types of professionals. As Francisco said, economic activities are carried out in the parks, in these wetlands, so being able to co-create tools with these actors or with university research staff is very interesting. And here it is always a matter of encouraging third parties, both natural and legal persons, for example, companies, startups, entrepreneurs, etc., to build applications and value-added services with that data: to design easy-to-use tools for decision-making, for example, or any other type of tool. This would be very interesting, because it would also give us an entrepreneurial ecosystem around that data. And it would also make society itself more involved, through open data and its reuse, in environmental care and environmental awareness.


An important aspect of this transition is that it must be "fair and leave no one behind". What role can data play in ensuring that equity?

Francisco José Martínez: In our case, we have been carrying out citizen science actions with the Environmental Education and Dissemination technicians. We are collecting data with people who sign up for these activities. We do two activities a month and, for example, we have carried out censuses of bats of different species - because one sees bats and does not distinguish the species, sometimes not even seeing them - on night routes, to detect and record them. We have also done photo trapping activities to detect mammals that are very difficult to see. With this we get children, families, people in general to know a fauna that they do not know exists when they are walking in the mountains. And I believe that we reach a lot of people and that we are disseminating it to as many people, as many sectors as possible.

José Norberto Mazón: And look at the amount of data that Francisco is talking about. Starting from that data, and building on the line that Francisco follows as director of the Natural Parks of the south of Alicante, what we ask ourselves is: can we go one step further using technology? We have made video games that help raise awareness among target groups that may otherwise be very difficult to reach. For example, teenagers, in whom that behavior, that sense of the importance of natural parks, must somehow be instilled. We think that video games can be a very interesting channel. And how have we done it? By basing these video games on data, on data that come from what Francisco has described and also from the digital twin itself. That is, data we have on the water surface, noise levels... We include all this data in the video games. They are dynamic video games that give players a better awareness of what the natural park is and of its environmental values and the conservation of biodiversity.


You've been talking to us for a while about all the data you use, which in the end comes from various sources. Can we summarize the type of data you use in your day-to-day life and what are the challenges you encounter when integrating it into specific projects?

Francisco José Martínez: The data are spatial: images with their metadata, censuses of birds, mammals and the different taxonomic groups, fauna, flora... We also carry out inventories of protected flora in danger of extinction. There are fundamental meteorological data which, by the way, are also very important for civil protection; look at all the disasters caused by cold drops or cut-off lows. There are very important data such as water quality, physical and chemical data, and the water level, which helps us to know evaporation and evaporation curves and thus manage water inputs. And of course, there are social data on public use, because public use is very important in natural spaces. It is a way of opening up to citizens, so that people can get to know their natural resources, value them and thus protect them. As for the difficulty, it is true that there is a series of data, especially from research, that we cannot access. They are in repositories that are difficult to access for technicians in the administration or even for consultants. I think Norberto can explain this better: how this could be integrated into platforms, by sectors, by groups...

José Norberto Mazón: In fact, it is a core issue for us. There is a lot of open data, as Francisco has explained during the short time we have been talking, but it is true that it is very dispersed, because it is generated to meet various objectives. The main objective of open data is that it is reused, that is, used for purposes other than those for which it was originally generated. But what we find is that many proposals are, as we would say, very top-down. Where the problem really lies is in the territory, from below, in all the actors involved in the territory, because a lot of data is generated in the territory itself. In other words, it is true that there is data, for example, satellite data from remote sensing, which is generated by the satellites themselves and then reused by us, but the data that comes from sensors or from citizen science, etc., is generated in the territory itself. And what often happens with that data is, for example, that when researchers do a job in a specific natural park, that research team publishes its articles and data openly (because under the Science Law they have to publish them openly in repositories). But of course, that is very research-oriented. So the other types of actors, for example, the management of the park, the managers of a local entity or even citizens themselves, are perhaps not aware that this data is available and do not even have mechanisms to consult it and obtain value from it. The greatest difficulty, in fact, is this: getting the data generated in the territory to be reused from the territory. It is very easy to reuse them from the territory to solve these problems as well.
And that difficulty is what we are trying to tackle with the projects we have underway, at the moment by creating a data lake, a data architecture that allows us to manage all that heterogeneity of the data, and to do it from the territory. But what we really have to do is try to do it in a federated way, with that open data philosophy at the federated level, and with something extra as well, because the range of cases within the territory is very large. There are a multitude of actors. We are talking about open data, but there may also be actors who say "I want to share certain data, but not certain other data yet, because I may lose a certain competitiveness, but I would not mind sharing it in three months' time". In other words, it is also necessary to have control over certain types of data, so that open data coexists with other data that can be shared, perhaps not so broadly, but still in a way that provides great value. We are exploring this possibility with a new project that we are setting up, Heleade: an environmental and biodiversity data space for these three natural parks in the south of the province of Alicante.

If you want to know more about these projects, we invite you to visit their websites:

Interview clips

1. How was the digital twin of the Lagunas de Torrevieja Natural Park conceived?

2. What projects are being promoted within the framework of the European Green Deal Data Space?

To achieve its environmental sustainability goals, Europe needs accurate, accessible and up-to-date information that enables evidence-based decision-making. The Green Deal Data Space (GDDS) will facilitate this transformation by integrating diverse data sources into a common, interoperable and open digital infrastructure.

In Europe, work is being done on its development through various projects, which have made it possible to obtain recommendations and good practices for its implementation. Discover them in this article!

What is the Green Deal Data Space?

The Green Deal Data Space (GDDS) is an initiative of the European Commission to create a digital ecosystem that brings together data from multiple sectors. It aims to support and accelerate the objectives of the Green Deal: the European Union's roadmap for a sustainable, climate-neutral and fair economy. The pillars of the Green Deal include:

- An energy transition that reduces emissions and improves efficiency.

- The promotion of the circular economy, encouraging the recycling, reuse and repair of products to minimise waste.

- The promotion of more sustainable agricultural practices.

- Restoring nature and biodiversity, protecting natural habitats and reducing air, water and soil pollution.

- The guarantee of social justice, through a transition that ensures no country or community is left behind.

Through this comprehensive strategy, the EU aims to become the world's first competitive and resource-efficient economy, achieving net-zero greenhouse gas emissions by 2050. The Green Deal Data Space is positioned as a key tool to achieve these objectives. Integrated into the European Data Strategy, data spaces are digital environments that enable the reliable exchange of data, while maintaining sovereignty and ensuring trust and security under a set of mutually agreed rules.

In this specific case, the GDDS will integrate valuable data on biodiversity, zero pollution, circular economy, climate change, forest services, smart mobility and environmental compliance. This data will be easy to locate, interoperable, accessible and reusable under the FAIR (Findability, Accessibility, Interoperability, Reusability) principles.

The GDDS will be implemented through the SAGE (Dataspace for a Green and Sustainable Europe) project and will be based on the results of the GREAT (Governance of Responsible Innovation) initiative.

A report with recommendations for the GDDS

As we saw in a previous article, four pioneering projects are laying the foundations for this ecosystem: AD4GD, B-Cubed, FAIRiCUBE and USAGE. These projects, funded under the HORIZON call, have spent several years analysing and documenting the requirements necessary to ensure that the GDDS follows the FAIR principles. The result of this work is the report "Policy Brief: Unlocking The Full Potential Of The Green Deal Data Space", a set of recommendations intended to guide the successful implementation of the Green Deal Data Space.

The report highlights five major areas in which the challenges of GDDS construction are concentrated:

1. Data harmonization

Environmental data is heterogeneous, as it comes from different sources: satellites, sensors, weather stations, biodiversity registers, private companies, research institutes, etc. Each provider uses its own formats, scales, and methodologies. This causes incompatibilities that make it difficult to compare and combine data. To fix this, it is essential to:

- Adopt existing international standards and vocabularies, such as INSPIRE, that span multiple subject areas.

- Avoid proprietary formats, prioritizing those that are open and well documented.

- Invest in tools that allow data to be easily transformed from one format to another.
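To make the harmonization problem concrete, here is a minimal sketch of a converter that maps records from two providers onto one common schema (Celsius, UTC timestamps in ISO 8601). The providers, their field names and the target schema are hypothetical and chosen only for illustration; a real pipeline would target an agreed standard such as an INSPIRE data model.

```python
from datetime import datetime, timezone


def harmonize(record: dict, source: str) -> dict:
    """Map a provider-specific record onto one common schema."""
    if source == "provider_a":
        # Hypothetical provider A: Celsius, ISO 8601 timestamp with UTC offset.
        temp_c = float(record["temp_c"])
        when = datetime.fromisoformat(record["timestamp"])
    elif source == "provider_b":
        # Hypothetical provider B: Fahrenheit, Unix epoch seconds.
        temp_c = (float(record["tempF"]) - 32.0) * 5.0 / 9.0
        when = datetime.fromtimestamp(record["epoch"], tz=timezone.utc)
    else:
        raise ValueError(f"unknown source: {source}")
    return {
        "station_id": str(record["station"]),
        "temperature_c": round(temp_c, 2),
        "observed_at": when.astimezone(timezone.utc).isoformat(),
    }


a = harmonize(
    {"station": 7, "temp_c": "30.0", "timestamp": "2024-06-01T14:00:00+02:00"},
    "provider_a",
)
b = harmonize({"station": "7", "tempF": 86.0, "epoch": 1717243200}, "provider_b")
print(a == b)  # both describe the same observation once harmonized
```

Once both records share units, time zone and field names, they can be compared or combined directly, which is the incompatibility the recommendations above aim to remove.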

2. Semantic interoperability

Ensuring semantic interoperability is crucial so that data can be understood and reused across different contexts and disciplines, especially when sharing data between communities as diverse as those participating in the Green Deal objectives. In addition, the Data Act requires participants in data spaces to provide machine-readable descriptions of datasets, so that they can be found, accessed and reused. It also requires that the vocabularies, taxonomies and code lists used be documented in a public and coherent manner. To achieve this, it is necessary to:

- Use linked data and metadata that offer clear and shared concepts, through vocabularies, ontologies and standards such as those developed by the OGC or ISO standards.

- Use existing standards to organize and describe data and only create new extensions when really necessary.

- Improve the already accepted international vocabularies, giving them more precision and taking advantage of the fact that they are already widely used by scientific communities.

3. Metadata and data curation

Data only reaches its maximum value if it is accompanied by clear metadata explaining its origin, quality, restrictions on use and access conditions. However, poor metadata management remains a major barrier. In many cases, metadata is non-existent, incomplete, or poorly structured, and is often lost when translated between non-interoperable standards. To improve this situation, it is necessary to:

- Extend existing metadata standards to include critical elements such as observations, measurements, source traceability, etc.

- Foster interoperability between metadata standards in use, through mapping and transformation tools that respond to both commercial and open data needs.

- Recognize and finance the creation and maintenance of metadata in European projects, incorporating the obligation to generate a standardized catalogue from the outset in data management plans.
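As an illustration of what clear, machine-readable metadata can look like, the sketch below checks a dataset record for the elements mentioned above (origin, restrictions on use, access conditions) and expresses it with the W3C DCAT vocabulary in JSON-LD. The list of required fields, the helper names and the example record are assumptions made for this example; they are not taken from the report.

```python
import json

# Assumed minimum set of fields for this sketch.
REQUIRED_FIELDS = ("title", "description", "license", "provenance", "access_url")


def missing_metadata(record: dict) -> list:
    """Return the required fields that are absent or empty."""
    return [field for field in REQUIRED_FIELDS if not record.get(field)]


def to_dcat_jsonld(record: dict) -> dict:
    """Express a complete record as a DCAT dataset description in JSON-LD."""
    gaps = missing_metadata(record)
    if gaps:
        raise ValueError(f"incomplete metadata: {gaps}")
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
            "rdfs": "http://www.w3.org/2000/01/rdf-schema#",
        },
        "@type": "dcat:Dataset",
        "dct:title": record["title"],
        "dct:description": record["description"],
        "dct:license": {"@id": record["license"]},
        "dct:provenance": {
            "@type": "dct:ProvenanceStatement",
            "rdfs:label": record["provenance"],
        },
        "dcat:distribution": {
            "@type": "dcat:Distribution",
            "dcat:accessURL": {"@id": record["access_url"]},
        },
    }


# Hypothetical dataset record.
example = {
    "title": "Lagoon water temperature",
    "description": "Hourly water temperature readings from in-situ sensors.",
    "license": "https://creativecommons.org/licenses/by/4.0/",
    "provenance": "Collected by park sensors; values validated weekly.",
    "access_url": "https://example.org/data/lagoon-temperature",
}
print(json.dumps(to_dcat_jsonld(example), indent=2))
```

Running a check like `missing_metadata` at publication time is one simple way to prevent datasets from entering a catalogue with non-existent or incomplete metadata.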

4. Data Exchange and Federated Provisioning

The GDDS does not seek to centralize all the information in a single repository, but rather to allow multiple actors to share data in a federated and secure way. It is therefore necessary to strike a balance between open access and the protection of rights and privacy. To do so, it is necessary to:

- Adopt and promote open and easy-to-use technologies that allow the integration between open and protected data, complying with the General Data Protection Regulation (GDPR).

- Ensure the integration of various APIs used by data providers and user communities, accompanied by clear demonstrators and guidelines. However, the use of standardized APIs needs to be promoted to facilitate a smoother implementation, such as OGC (Open Geospatial Consortium) APIs for geospatial assets.

- Offer clear specification and conversion tools to enable interoperability between APIs and data formats.
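To show why standardized APIs ease integration, the sketch below builds a request URL following the OGC API - Features convention, in which items are served at `/collections/{collectionId}/items` and can be filtered with the `bbox` and `limit` query parameters. The base URL and the collection name are hypothetical, and no network request is made.

```python
from urllib.parse import quote, urlencode


def features_items_url(base_url, collection_id, bbox, limit=100):
    """Build an OGC API - Features 'items' URL for a bounding box.

    bbox is (min_lon, min_lat, max_lon, max_lat) in degrees.
    """
    min_lon, min_lat, max_lon, max_lat = bbox
    query = urlencode({
        "bbox": f"{min_lon},{min_lat},{max_lon},{max_lat}",
        "limit": limit,
    })
    collection = quote(collection_id, safe="")
    return f"{base_url.rstrip('/')}/collections/{collection}/items?{query}"


# Hypothetical server and collection.
url = features_items_url(
    "https://example.org/ogcapi/",
    "water_quality",
    bbox=(-0.75, 37.95, -0.65, 38.05),
    limit=10,
)
print(url)
```

Because the path layout and parameters are defined by the standard, the same client code can work against any compliant provider by changing only the base URL and the collection identifier.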

In parallel to the development of the Eclipse Dataspace Connectors (an open-source technology to facilitate the creation of data spaces), it is proposed to explore alternatives such as blockchain catalogs or digital certificates, following examples such as FACTS (Federated Agile Collaborative Trusted System).

5. Inclusive and sustainable governance

The success of the GDDS will depend on establishing a robust governance framework that ensures transparency, participation, and long-term sustainability. It is not only about technical standards, but also about fair and representative rules. To make progress in this regard, it is key to:

- Use only European clouds to ensure data sovereignty, strengthen security and comply with EU regulations, something that is especially important in the face of today's global challenges.

- Integrate open platforms such as Copernicus, the European Data Portal and INSPIRE into the GDDS, which strengthens interoperability and facilitates access to public data. In this regard, it is necessary to design effective strategies to attract open data providers and prevent the GDDS from becoming a commercial or restricted environment.

- Mandate the publication of data in publicly funded academic journals to increase its visibility, and support standardization initiatives that strengthen that visibility and ensure long-term maintenance.

- Provide comprehensive training and promote cross-use of harmonization tools to prevent the creation of new data silos and improve cross-domain collaboration.

The following image summarizes the relationship between these blocks:

Conclusion

All these recommendations have an impact on a central idea: building a Green Deal Data Space that complies with the FAIR principles is not only a technical issue, but also a strategic and ethical one. It requires cross-sector collaboration, political commitment, investment in capacities, and inclusive governance that ensures equity and sustainability. If Europe succeeds in consolidating this digital ecosystem, it will be better prepared to meet environmental challenges with informed, transparent and common good-oriented decisions.

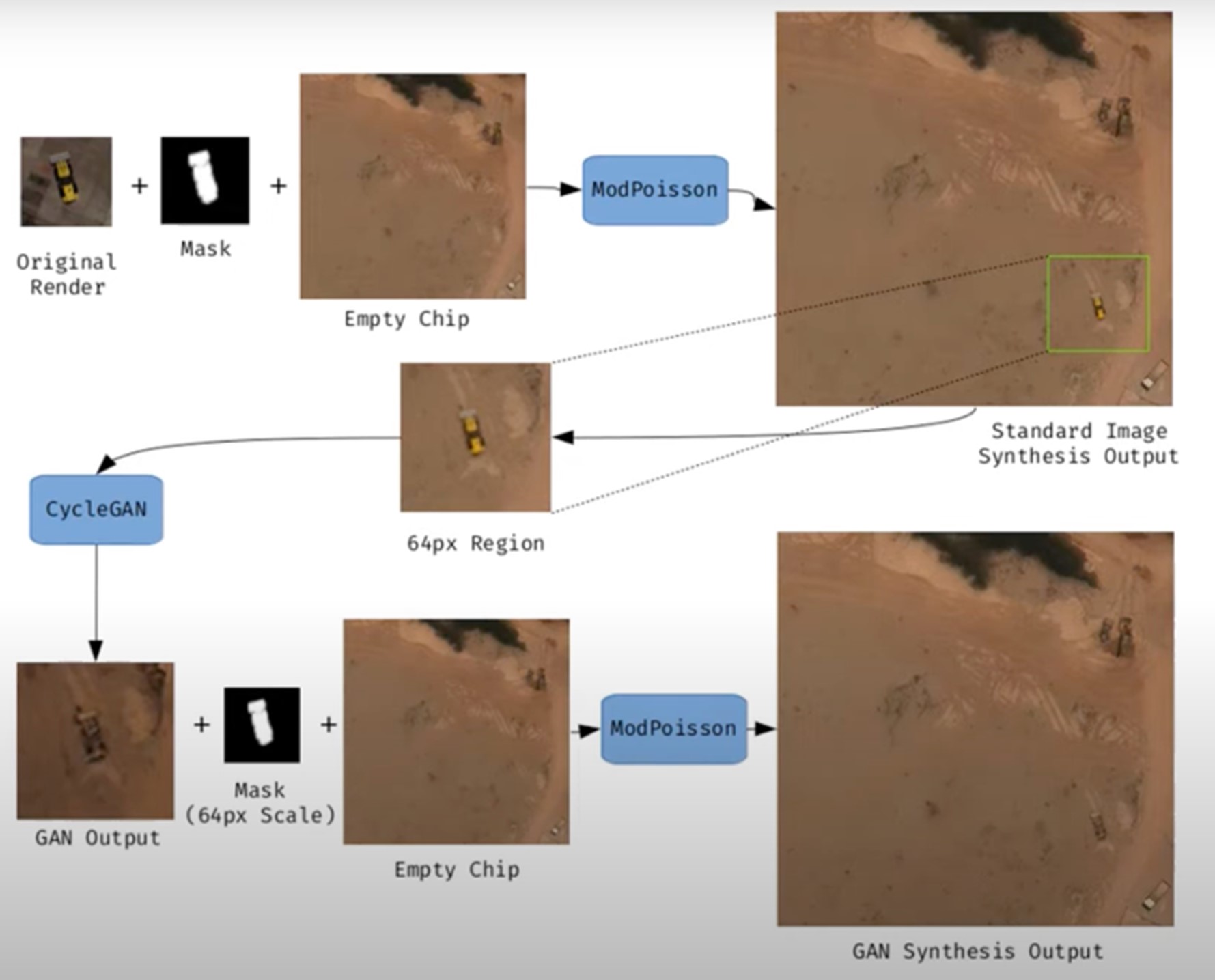

Synthetic images are visual representations artificially generated by algorithms and computational techniques, rather than being captured directly from reality with cameras or sensors. They are produced using different methods, including generative adversarial networks (GANs), diffusion models, and 3D rendering techniques. All of them make it possible to create realistic-looking images that in many cases are indistinguishable from an authentic photograph.

When this concept is transferred to the field of Earth observation, we are talking about synthetic satellite images. These are not obtained from a space sensor that captures real electromagnetic radiation, but are generated digitally to simulate what a satellite would see from orbit. In other words, instead of directly reflecting the physical state of the terrain or atmosphere at a particular time, they are computational constructs capable of mimicking the appearance of a real satellite image.

The development of this type of image responds to practical needs. Artificial intelligence systems that process remote sensing data require very large and varied sets of images. Synthetic images make it possible, for example, to recreate areas of the Earth that are rarely observed, to simulate natural disasters – such as forest fires, floods or droughts – or to generate specific conditions that are difficult or expensive to capture in practice. In this way, they constitute a valuable resource for training detection and prediction algorithms in agriculture, emergency management, urban planning or environmental monitoring.

Figure 1. Example of synthetic satellite image generation.

Its value is not limited to model training. Where high-resolution images do not exist – due to technical limitations, access restrictions or economic reasons – synthesis makes it possible to fill information gaps and facilitate preliminary studies. For example, researchers can work with approximate synthetic images to design risk models or simulations before actual data are available.

However, synthetic satellite imagery also poses significant risks. The possibility of generating very realistic scenes opens the door to manipulation and misinformation. In a geopolitical context, an image showing non-existent troops or destroyed infrastructure could influence strategic decisions or international public opinion. In the environmental field, manipulated images could be disseminated to exaggerate or minimize the impacts of phenomena such as deforestation or melting ice, with direct effects on policies and markets.

Therefore, it is useful to differentiate between two very different uses. The first is use as a support, when synthetic images complement real images to train models or perform simulations. The second is use as a fake, when they are deliberately presented as authentic images in order to deceive. While the former drives innovation, the latter threatens trust in satellite data and poses an urgent challenge of authenticity and governance.

Risks of synthetic satellite imagery applied to Earth observation

Synthetic satellite imagery poses significant risks when used in place of images captured by real sensors. Below are examples that demonstrate this.

A new front of disinformation: "deepfake geography"

The term deepfake geography has already been consolidated in the academic and popular literature to describe fictitious satellite images, manipulated with AI, that appear authentic, but do not reflect any existing reality. Research from the University of Washington, led by Bo Zhao, used algorithms such as CycleGAN to modify images of real cities—for example, altering the appearance of Seattle with non-existent buildings or transforming Beijing into green areas—highlighting the potential to generate convincing false landscapes.

An article on the OnGeo Intelligence platform stresses that these images are not a purely theoretical concern, but a real threat affecting national security, journalism and humanitarian work. For its part, the Open Geospatial Consortium (OGC) warns that fabricated satellite imagery, AI-generated urban models, and synthetic road networks have already been observed, and that they pose real challenges to public and operational trust.

Strategic and policy implications

Satellite images are considered "impartial eyes" on the planet, used by governments, media and organizations. When these images are faked, the consequences can be severe:

- National security and defense: if false infrastructures are presented or real ones are hidden, strategic analyses can be diverted or mistaken military decisions can be induced.

- Disinformation in conflicts or humanitarian crises: an altered image showing fake fires, floods, or troop movements can alter the international response, aid flows, or citizens' perceptions, especially if it is spread through social media or news outlets without verification.

- Manipulation of realistic images of specific places: it is not only general imagery that is at stake. Nguyen et al. (2024) showed that it is possible to generate highly realistic synthetic satellite images of very specific facilities such as nuclear plants.

Crisis of trust and erosion of truth

For decades, satellite imagery has been perceived as one of the most objective and reliable sources of information about our planet. It was the graphic evidence that made it possible to confirm environmental phenomena, follow armed conflicts or evaluate the impact of natural disasters. In many cases, these images were used as "unbiased evidence", difficult to manipulate and easy to validate. However, the emergence of synthetic images generated by artificial intelligence has begun to call into question that almost unshakable trust.

Today, when a satellite image can be falsified with great realism, a profound risk arises: the erosion of truth and the emergence of a crisis of confidence in spatial data.

The breakdown of public trust

When citizens can no longer distinguish between a real image and a fabricated one, trust in information sources is broken. The consequences are threefold:

- Distrust of institutions: if false images of a fire, a catastrophe or a military deployment circulate and then turn out to be synthetic, citizens may also begin to doubt the authentic images published by space agencies or the media. This "crying wolf" effect generates skepticism even in the face of legitimate evidence.

- Effect on journalism: traditional media, which have historically used satellite imagery as an unquestionable visual source, risk losing credibility if they publish doctored images without verification. At the same time, the abundance of fake images on social media erodes the ability to distinguish what is real and what is not.

- Deliberate confusion: in contexts of disinformation, the mere suspicion that an image may be false can already be enough to generate doubt and sow confusion, even if the original image is completely authentic.

The following is a summary of the possible cases of manipulation and risk in satellite images:

| Area | Type of manipulation | Main risk | Documented example |
|---|---|---|---|
| Armed conflicts | Insertion or elimination of military infrastructures. | Strategic disinformation; erroneous military decisions; loss of credibility in international observation. | Alterations demonstrated in deepfake geography studies where fake roads, bridges or buildings were added to satellite images. |
| Climate change and the environment | Alteration of glaciers, deforestation or emissions. | Manipulation of environmental policies; delay in measures against climate change; denialism. | Studies have shown the ability to generate modified landscapes (forests in urban areas, changes in ice) by means of GANs. |
| Emergency management | Creation of non-existent disasters (fires, floods). | Misuse of resources in emergencies; chaos in evacuations; loss of trust in agencies. | Research has shown the ease of inserting smoke, fire or water into satellite images. |
| Markets and insurance | Falsification of damage to infrastructure or crops. | Financial impact; massive fraud; complex legal litigation. | Potential use of fake images to exaggerate damage after disasters and claim compensation or insurance. |
| Human rights and international justice | Alteration of visual evidence of war crimes. | Delegitimization of international tribunals; manipulation of public opinion. | Risk identified in intelligence reports: doctored images could be used to accuse or exonerate actors in conflicts. |
| Geopolitics and diplomacy | Creation of fictitious cities or border changes. | Diplomatic tensions; treaty questioning; state propaganda. | Examples of deepfake maps that transform geographical features of cities such as Seattle or Tacoma. |

Figure 2. Table showing possible cases of manipulation and risk in satellite images

Impact on decision-making and public policies

The consequences of relying on doctored images go far beyond the media arena:

- Urbanism and planning: decisions about where to build infrastructure or how to plan urban areas could be made on manipulated images, generating costly errors that are difficult to reverse.

- Emergency management: If a flood or fire is depicted in fake images, emergency teams can allocate resources to the wrong places, while neglecting areas that are actually affected.

- Climate change and the environment: Doctored images of glaciers, deforestation or polluting emissions could manipulate political debates and delay the implementation of urgent measures.

- Markets and insurance: Insurers and financial companies that rely on satellite imagery to assess damage could be misled, with significant economic consequences.

In all these cases, what is at stake is not only the quality of the information, but also the effectiveness and legitimacy of public policies based on that data.

The technological cat and mouse game

The dynamics of counterfeit generation and detection are already known in other areas, such as video or audio deepfakes: every time a more realistic generation method emerges, a more advanced detection algorithm is developed, and vice versa. In the field of satellite images, this technological race has its own particularities:

- Increasingly sophisticated generators: today's diffusion models can create highly realistic scenes, integrating ground textures, shadows, and urban geometries that fool even human experts.

- Detection limitations: Although algorithms are developed to identify fakes (analyzing pixel patterns, inconsistencies in shadows, or metadata), these methods are not always reliable when faced with state-of-the-art generators.

- Cost of verification: independently verifying a satellite image requires access to alternative sources or different sensors, something that is not always available to journalists, NGOs or citizens.

- Double-edged swords: The same techniques used to detect fakes can be exploited by those who generate them, further refining synthetic images and making them more difficult to differentiate.

From visual evidence to questioned evidence

The deeper impact is cultural and epistemological: what was previously assumed to be objective evidence now becomes an element subject to doubt. If satellite imagery is no longer perceived as reliable evidence, it weakens fundamental narratives around scientific truth, international justice, and political accountability.

- In armed conflicts, a satellite image showing possible war crimes can be dismissed under the accusation of being a deepfake.

- In international courts, evidence based on satellite observation could lose weight in the face of suspicion of manipulation.

- In public debate, the relativism of "everything can be false" can be used as a rhetorical weapon to delegitimize even the strongest evidence.

Strategies to ensure authenticity

The crisis of confidence in satellite imagery is not an isolated problem in the geospatial sector, but is part of a broader phenomenon: digital disinformation in the age of artificial intelligence. Just as video deepfakes have called into question the validity of audiovisual evidence, the proliferation of synthetic satellite imagery threatens to weaken the last frontier of perceived objective data: the unbiased view from space.

Ensuring the authenticity of these images requires a combination of technical solutions and governance mechanisms, capable of strengthening traceability, transparency and accountability across the spatial data value chain. The main strategies under development are described below.

Robust metadata: Record origin and chain of custody

Metadata is the first line of defense against manipulation. For satellite imagery, it should include detailed information about:

- The sensor used (type, resolution, orbit).

- The exact time of acquisition (date and time, recorded with high temporal precision).

- The precise geographical location (official reference systems).

- The applied processing chain (atmospheric corrections, calibrations, reprojections).

Recording this metadata in secure repositories allows the chain of custody to be reconstructed, i.e. the history of who handled an image, how and when. Without this traceability, it is impossible to distinguish between authentic and counterfeit images.
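As an illustration only, the fields listed above could be gathered into a single record together with a digest of the image bytes and a log of handling steps. The sketch below is a minimal one using the Python standard library; the class names, field names and the choice of SHA-256 are assumptions made for this example, not part of any Copernicus or OGC specification.

```python
import hashlib
import json
from dataclasses import dataclass, field, asdict


@dataclass
class CustodyEvent:
    actor: str       # who handled the image
    action: str      # how it was handled, e.g. "atmospheric correction"
    timestamp: str   # when, as an ISO 8601 UTC string


@dataclass
class ImageRecord:
    sensor: str          # sensor type, resolution, orbit
    acquired_at: str     # acquisition time, ISO 8601 UTC
    crs: str             # reference system, e.g. an EPSG code
    bbox: tuple          # (min_lon, min_lat, max_lon, max_lat)
    sha256: str          # digest of the raw image bytes
    custody: list = field(default_factory=list)

    def log(self, actor, action, timestamp):
        """Append one handling step to the chain of custody."""
        self.custody.append(CustodyEvent(actor, action, timestamp))

    def to_json(self):
        """Serialize the record so it can be stored in a repository."""
        return json.dumps(asdict(self), sort_keys=True)


def make_record(image_bytes, sensor, acquired_at, crs, bbox):
    """Create a record bound to the given image bytes through their digest."""
    digest = hashlib.sha256(image_bytes).hexdigest()
    return ImageRecord(sensor, acquired_at, crs, bbox, digest)


def matches(record, image_bytes):
    """Return True if the bytes are the ones the record was created for."""
    return hashlib.sha256(image_bytes).hexdigest() == record.sha256
```

A record like this only helps if the repository that stores it is itself trustworthy, which is where the signature and ledger mechanisms discussed in this article come in.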

EXAMPLE: The European Union's Copernicus program already implements standardized and open metadata for all its Sentinel images, facilitating subsequent audits and confidence in the origin.

Digital signatures and blockchain: ensuring integrity

Digital signatures allow you to verify that an image has not been altered since it was captured. They function as a cryptographic seal that is applied at the time of acquisition and validated at each subsequent use.
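The idea of a cryptographic seal can be sketched with a keyed hash. Note the assumption: a real digital signature would use a public-key scheme so that anyone can verify without holding a secret, but the Python standard library does not include one, so this example substitutes HMAC-SHA256 with a shared key purely to show the seal-then-verify flow. The function names are hypothetical.

```python
import hashlib
import hmac


def seal(image_bytes: bytes, key: bytes) -> str:
    """Compute a keyed SHA-256 seal over the image at acquisition time."""
    return hmac.new(key, image_bytes, hashlib.sha256).hexdigest()


def verify(image_bytes: bytes, key: bytes, expected_seal: str) -> bool:
    """Recompute the seal and compare it in constant time."""
    return hmac.compare_digest(seal(image_bytes, key), expected_seal)
```

Changing a single byte of the image, or using a different key, makes `verify` return `False`.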

Blockchain technology offers an additional level of assurance: storing acquisition and modification records on an immutable chain of blocks. In this way, any changes in the image or its metadata would be recorded and easily detectable.
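The tamper-evidence property can be illustrated with a simple hash chain, in which each block stores the hash of the previous one. This is a single-process sketch under stated assumptions: it has no distribution, consensus or signatures, so it is not a blockchain in the full sense, and the block layout and function names are invented for the example.

```python
import hashlib
import json


def block_hash(index, payload, prev_hash):
    """Hash the block contents together with the previous block's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(body.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Add an acquisition or modification record to the end of the chain."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "payload": payload,
        "prev": prev_hash,
        "hash": block_hash(index, payload, prev_hash),
    })


def is_valid(chain):
    """Check every link; any edited block breaks the chain from that point on."""
    prev_hash = "0" * 64
    for i, block in enumerate(chain):
        if block["index"] != i or block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["payload"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True
```

Editing the payload of an early block changes its hash, so `is_valid` fails unless every later block is also rewritten, which is what a distributed ledger is designed to make impractical.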

EXAMPLE: The ESA – Trusted Data Framework project explores the use of blockchain to protect the integrity of Earth observation data and bolster trust in critical applications such as climate change and food security.

Invisible watermarks: hidden signs in the image

Digital watermarking involves embedding imperceptible signals in the satellite image itself, so that any subsequent alterations can be detected automatically.

- It can be done at the pixel level, slightly modifying color patterns or luminance.

- It is combined with cryptographic techniques to reinforce its validity.

- It allows you to validate images even if they have been cropped, compressed, or reprocessed.
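A pixel-level mark can be sketched by writing a key-derived bit pattern into the least significant bit of each pixel. This is a deliberately simple, fragile scheme chosen for illustration: unlike the robust watermarks described above, it does not survive cropping or compression, and it only notices edits that disturb the least significant bit. Pixels are assumed to be a flat list of 8-bit grayscale values, and all names are hypothetical.

```python
import hashlib


def keystream_bits(key: bytes, n: int):
    """Derive n pseudo-random bits from the key using SHA-256 with a counter."""
    bits = []
    counter = 0
    while len(bits) < n:
        block = hashlib.sha256(key + counter.to_bytes(8, "big")).digest()
        for byte in block:
            for shift in range(8):
                bits.append((byte >> shift) & 1)
        counter += 1
    return bits[:n]


def embed(pixels, key):
    """Return a copy of pixels whose least significant bits carry the mark."""
    bits = keystream_bits(key, len(pixels))
    return [(p & ~1) | b for p, b in zip(pixels, bits)]


def tampered_positions(pixels, key):
    """List the indices whose least significant bit no longer matches the mark."""
    bits = keystream_bits(key, len(pixels))
    return [i for i, (p, b) in enumerate(zip(pixels, bits)) if (p & 1) != b]
```

Each pixel changes by at most one intensity level, which is what keeps the mark imperceptible.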

EXAMPLE: In the audiovisual sector, watermarks have been used for years in the protection of digital content. Their adaptation to satellite images is in the experimental phase, but it could become a standard verification tool.

Open Standards (OGC, ISO): Trust through Interoperability

Standardization is key to ensuring that technical solutions are applied in a coordinated and global manner.

- OGC (Open Geospatial Consortium) works on standards for metadata management, geospatial data traceability, and interoperability between systems. Their work on geospatial APIs and FAIR (Findable, Accessible, Interoperable, Reusable) metadata is essential to establishing common trust practices.

- ISO develops standards on information management and authenticity of digital records that can also be applied to satellite imagery.

EXAMPLE: OGC Testbed-19 included specific experiments on geospatial data authenticity, testing approaches such as digital signatures and certificates of provenance.

Cross-check: combining multiple sources

A basic principle for detecting counterfeits is to contrast sources. In the case of satellite imagery, this involves:

- Compare images from different satellites (e.g. Sentinel-2 vs. Landsat-9).

- Use different types of sensors (optical, radar SAR, hyperspectral).

- Analyze time series to verify consistency over time.
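One simple way to contrast two sources is to measure how well they agree tile by tile. The sketch below assumes the two images are already co-registered, resampled to the same grid and split into tiles given as flat lists of pixel values; the 0.8 threshold is an arbitrary value chosen for the example. Low agreement is only a prompt for closer inspection, since clouds, seasonal change or sensor differences can also cause it.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equally sized lists of pixel values."""
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a constant tile carries no pattern to compare
    return cov / math.sqrt(var_a * var_b)


def suspicious_tiles(tiles_a, tiles_b, threshold=0.8):
    """Return the indices of tiles where the two sources disagree."""
    return [
        i for i, (ta, tb) in enumerate(zip(tiles_a, tiles_b))
        if pearson(ta, tb) < threshold
    ]
```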

EXAMPLE: Damage verification in Ukraine following the start of the Russian invasion in 2022 was done by comparing images from several vendors (Maxar, Planet, Sentinel), ensuring that the findings were not based on a single source.

AI vs. AI: Automatic Counterfeit Detection

The same artificial intelligence that allows synthetic images to be created can be used to detect them. Techniques include:

- Pixel forensics: identify patterns generated by GANs or diffusion models.

- Neural networks trained to distinguish between real and synthetic images based on textures or spectral distributions.

- Geometric inconsistency models: detect impossible shadows, topographic inconsistencies, or repetitive patterns.
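Of the checks listed above, looking for repetitive patterns is the easiest to sketch without a trained model. The example below groups identical non-overlapping blocks in a small grayscale image given as a list of rows. It is a toy heuristic rather than a detector used by any of the groups mentioned here: exact matching breaks after compression, and uniform areas such as open water repeat naturally in real imagery.

```python
from collections import defaultdict


def repeated_blocks(image, size):
    """Group the top-left corners of non-overlapping size x size blocks with identical pixels."""
    seen = defaultdict(list)
    rows, cols = len(image), len(image[0])
    for r in range(0, rows - size + 1, size):
        for c in range(0, cols - size + 1, size):
            block = tuple(tuple(image[r + i][c:c + size]) for i in range(size))
            seen[block].append((r, c))
    # Keep only the blocks that occur more than once
    return [corners for corners in seen.values() if len(corners) > 1]
```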

EXAMPLE: Researchers at the University of Washington and other groups have shown that specific algorithms can detect satellite fakes with greater than 90% accuracy under controlled conditions.

Current Experiences: Global Initiatives

Several international projects are already working on mechanisms to reinforce authenticity:

- Coalition for Content Provenance and Authenticity (C2PA): A partnership between Adobe, Microsoft, BBC, Intel, and other organizations to develop an open standard for provenance and authenticity of digital content, including images. Its model can be applied directly to the satellite sector.

- OGC work: the organization promotes the debate on trust in geospatial data and has highlighted the importance of ensuring the traceability of synthetic and real satellite images (OGC Blog).

- NGA (National Geospatial-Intelligence Agency) in the US has publicly acknowledged the threat of synthetic imagery in defence and is driving collaborations with academia and industry to develop detection systems.

Towards an ecosystem of trust

The strategies described should not be understood as alternatives, but as complementary layers in a trusted ecosystem:

| Id | Layers | Benefits |
|---|---|---|
| 1 | Robust metadata (source, sensor, chain of custody) | Traceability guaranteed |
| 2 | Digital signatures and blockchain (data integrity) | Ensuring integrity |
| 3 | Invisible watermarks (hidden signs) | Add a hidden level of protection |
| 4 | Cross-check (multiple satellites and sensors) | Validates independently |
| 5 | AI vs. AI (counterfeit detector) | Respond to emerging threats |
| 6 | International governance (accountability, legal frameworks) | Articulate clear rules of liability |

Figure 3. Layers to ensure confidence in synthetic satellite images

Success will depend on these mechanisms being integrated together, under open and collaborative frameworks, and with the active involvement of space agencies, governments, the private sector and the scientific community.

Conclusions

Synthetic images, far from being just a threat, represent a powerful tool that, when used well, can provide significant value in areas such as simulation, algorithm training or innovation in digital services. The problem arises when these images are presented as real without proper transparency, fueling misinformation or manipulating public perception.

The challenge, therefore, is twofold: to take advantage of the opportunities offered by the synthesis of visual data to advance science, technology and management, and to minimize the risks associated with the misuse of these capabilities, especially in the form of deepfakes or deliberate falsifications.

In the particular case of satellite imagery, trust takes on a strategic dimension. Critical decisions in national security, disaster response, environmental policy, and international justice depend on them. If the authenticity of these images is called into question, not only the reliability of the data is compromised, but also the legitimacy of decisions based on them.

The future of Earth observation will be shaped by our ability to ensure authenticity, transparency and traceability across the value chain: from data acquisition to dissemination and end use. Technical solutions (robust metadata, digital signatures, blockchain, watermarks, cross-verification, and AI for counterfeit detection), combined with governance frameworks and international cooperation, will be the key to building an ecosystem of trust.

In short, we must assume a simple but forceful guiding principle:

"If we can't trust what we see from space, we put our decisions on Earth at risk."

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of the author.

Sicma, a climate and environmental information system, is a platform that displays climate scenarios and various variables generated from them. This application is developed entirely with free software and allows users to consult current, past, and future climate conditions, with variable spatial resolution according to the needs of each case (100 by 100 meters in the case of the Canary Islands, and 200 by 200 meters in the case of Andalusia). This makes it possible to obtain local information on the point of intervention.

The information is generated for the scenarios, models, horizons and annual periods considered necessary in each case and with the most appropriate resolution and interpolations for each territory.

Sicma provides information on variables calculated from daily series. To quantify uncertainties, it offers projections generated from ten climate models based on the sixth report of the Intergovernmental Panel on Climate Change (IPCC), each under four future emissions scenarios, known as shared socio-economic pathways (SSPs). Therefore, a total of 40 projections are generated. These climate projections, detailed up to the year 2100, are a very useful tool for planning and managing water, agriculture and environmental conservation.
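The count of 40 follows from pairing every model with every scenario. A minimal sketch of that pairing is shown below; the model and scenario labels are placeholders invented for the example, since the text does not name the ten models or the four SSPs used.

```python
from itertools import product

# Hypothetical placeholders: the source does not list the actual models or SSPs
models = [f"model_{i:02d}" for i in range(1, 11)]
scenarios = ["SSP_a", "SSP_b", "SSP_c", "SSP_d"]

# One projection per (model, scenario) pair
projections = list(product(models, scenarios))
print(len(projections))  # 40
```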

Users can easily access information on climate scenarios through a viewer that provides representative data for different territorial areas. Some of the variables included in this viewer are: maximum temperature, average temperature, minimum temperature, precipitation, potential evapotranspiration, water balance, hot days (>40ºC) or tropical nights (>22ºC).

In addition to viewing, it is also possible to download data in alphanumeric formats in spreadsheets, graphs, or value maps.

There are currently two open Sicma environments:

- Sicma Andalucía: https://andalucia.sicma.red/

- Sicma Canarias: https://canarias.sicma.red/

Artificial intelligence (AI) has become a key technology in multiple sectors, from health and education to industry and environmental management, not to mention the number of citizens who create texts, images or videos with this technology for their own personal enjoyment. It is estimated that in Spain more than half of the adult population has used an AI tool at some point.

However, this boom poses challenges in terms of sustainability, both in terms of water and energy consumption and in terms of social and ethical impact. It is therefore necessary to seek solutions that help mitigate the negative effects, promoting efficient, responsible and accessible models for all. In this article we will address this challenge, as well as possible efforts to address it.

What is the environmental impact of AI?

In a landscape where artificial intelligence is all the rage, more and more users are wondering what price we should pay for being able to create memes in a matter of seconds.

To properly calculate the total impact of artificial intelligence, it is necessary to consider the hardware and software life cycles as a whole, as the United Nations Environment Programme (UNEP) indicates. That is, it is necessary to consider everything from raw material extraction, production, transport and construction of the data centre, through management, maintenance and disposal of e-waste, to data collection and preparation, modelling, training, validation, implementation, inference, maintenance and decommissioning. This generates direct, indirect and higher-order effects:

- The direct impacts include the consumption of energy, water and mineral resources, as well as the production of emissions and e-waste, which generates a considerable carbon footprint.

- The indirect effects derive from the use of AI, for example, those generated by the increased use of autonomous vehicles.

- Moreover, the widespread use of artificial intelligence also carries an ethical dimension, as it may exacerbate existing inequalities, especially affecting minorities and low-income people. Sometimes the training data used are biased or of poor quality (e.g. under-representing certain population groups). This situation can lead to responses and decisions that favour majority groups.

Some of the figures compiled in the UN document that can help us to get an idea of the impact generated by AI include:

- A single request for information to ChatGPT consumes ten times more electricity than a query on a search engine such as Google, according to data from the International Energy Agency (IEA).

- Training a single large language model (LLM) generates approximately 300,000 kg of carbon dioxide emissions, which is equivalent to 125 round-trip flights between New York and Beijing, according to the scientific paper "The carbon impact of artificial intelligence".

- Global AI water demand will be between 4.2 and 6.6 billion cubic metres by 2027, a figure that exceeds the total consumption of a country like Denmark, according to the study "Making AI Less 'Thirsty': Uncovering and Addressing the Secret Water Footprint of AI Models".
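As a quick sanity check on the flight comparison, the two quoted numbers imply a per-flight figure that can be worked out directly. The sketch below only divides the values given in the list above; it makes no claim about how the cited paper derived them.

```python
training_emissions_kg = 300_000  # CO2 from training one model, as quoted above
equivalent_flights = 125         # New York-Beijing round trips, as quoted above

# Emissions implied for a single round-trip flight
kg_per_round_trip = training_emissions_kg / equivalent_flights
print(kg_per_round_trip)  # 2400.0
```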

Solutions for sustainable AI

In view of this situation, the UN itself proposes several aspects to which attention needs to be paid, for example:

- Search for standardised methods and parameters to measure the environmental impact of AI, focusing on direct effects, which are easier to measure thanks to energy, water and resource consumption data. Knowing this information will make it easier to take action that will bring substantial benefit.

- Facilitate the awareness of society, through mechanisms that oblige companies to make this information public in a transparent and accessible manner. This could eventually promote behavioural changes towards a more sustainable use of AI.

- Prioritise research on optimising algorithms, for energy efficiency. For example, the energy required can be minimised by reducing computational complexity and data usage. Decentralised computing can also be boosted, as distributing processes over less demanding networks avoids overloading large servers.

- Encourage the use of renewable energies in data centres, such as solar and wind power. In addition, companies need to be encouraged to undertake carbon offsetting practices.

In addition to its environmental impact, and as seen above, AI must also be sustainable from a social and ethical perspective. This requires:

- Avoid algorithmic bias: ensure that the data used represent the diversity of the population, avoiding unintended discrimination.

- Transparency in models: make algorithms understandable and accessible, promoting trust and human oversight.

- Accessibility and equity: develop AI systems that are inclusive and benefit underprivileged communities.

While artificial intelligence poses challenges in terms of sustainability, it can also be a key partner in building a greener planet. Its ability to analyse large volumes of data allows optimising energy use, improving the management of natural resources and developing more efficient strategies in sectors such as agriculture, mobility and industry. From predicting climate change to designing models to reduce emissions, AI offers innovative solutions that can accelerate the transition to a more sustainable future.

National Green Algorithms Programme

In response to this reality, Spain has launched the National Programme for Green Algorithms (PNAV). This is an initiative that seeks to integrate sustainability in the design and application of AI, promoting more efficient and environmentally responsible models, while promoting its use to respond to different environmental challenges.

The main goal of the PNAV is to encourage the development of algorithms that minimise environmental impact from their conception. This approach, known as "Green by Design", implies that sustainability is not an afterthought, but a fundamental criterion in the creation of AI models. In addition, the programme seeks to promote research in sustainable AI, improve the energy efficiency of digital infrastructures and promote the integration of technologies such as green blockchain into the productive fabric.

This initiative is part of the Recovery, Transformation and Resilience Plan, the Spain Digital Agenda 2026 and the National Artificial Intelligence Strategy. Objectives include the development of a best practice guide, a catalogue of efficient algorithms and a catalogue of algorithms to address environmental problems, the generation of an impact calculator for self-assessment, as well as measures to support awareness-raising and training of AI developers.

Its website functions as a knowledge space on sustainable artificial intelligence, where you can keep up to date with the main news, events, interviews, etc. related to this field. They also organise competitions, such as hackathons, to promote solutions that help solve environmental challenges.

The Future of Sustainable AI

The path towards more responsible artificial intelligence depends on the joint efforts of governments, business and the scientific community. Investment in research, the development of appropriate regulations and awareness of ethical AI will be key to ensuring that this technology drives progress without compromising the planet or society.

Sustainable AI is not only a technological challenge, but an opportunity to transform innovation into a driver of global welfare. It is up to all of us to progress as a society without destroying the planet.

The value of open satellite data in Europe

Satellites have become essential tools for understanding the planet and managing resources efficiently. The European Union (EU) has developed an advanced space infrastructure with the aim of providing real-time data on the environment, navigation and meteorology.

This satellite network is driven by four key programmes:

- Copernicus: Earth observation, environmental monitoring and climate change.

- Galileo: high-precision satellite navigation, alternative to GPS.

- EGNOS: improved positioning accuracy, key to aviation and navigation.

- Meteosat: advanced meteorological prediction and atmospheric monitoring.

Through these programmes, Europe not only ensures its technological independence, but also obtains data that is made available to citizens to drive strategic applications in agriculture, security, disaster management and urban planning.

In this article we will explore each programme, its satellites and their impact on society, including Spain's role in each of them.

Copernicus: Europe's Earth observation network

Copernicus is the EU Earth observation programme, managed by the European Commission with the technical support of the European Space Agency (ESA) and the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT). It aims to provide free and open data about the planet to monitor climate change, manage natural resources and respond to emergencies.

The programme is structured into three main components:

- Space component: consists of a series of satellites called Sentinel, developed specifically for the needs of Copernicus. These satellites provide high quality data for various applications, such as land, sea and atmospheric monitoring.

- In situ component: includes data collected through ground, air and sea stations. These data are essential to calibrate and validate the information obtained by the satellites, ensuring its accuracy and reliability.

- Operational services: six thematic services that transform the collected data into useful information for users:

  - Atmosphere monitoring

  - Marine monitoring

  - Land monitoring

  - Climate change

  - Emergency management

  - Security

These services provide information in areas such as air quality, ocean status, land use, climate trends, disaster response and security, supporting informed decision-making in Europe.

Spain has played a key role in the manufacture of components for the Sentinel satellites. Spanish companies have developed critical structures and sensors, and have contributed to the development of data processing software. Spain is also leading projects such as the Atlantic Constellation, which will develop small satellites for climate and oceanic monitoring.

Sentinel satellites

| Satellite | Technical characteristics | Resolution | Coverage (capture frequency) | Uses |
|---|---|---|---|---|
| Sentinel-1 | C-band SAR, resolution up to 5 m | Up to 5 m | Every 6 days | Land and ocean monitoring, natural disasters |
| Sentinel-2 | Multispectral camera (13 bands), resolution up to 10 m | 10 m, 20 m, 60 m | Every 5 days | Agricultural management, forestry monitoring, water quality |
| Sentinel-3 | SLSTR radiometer, OLCI spectrometer, SRAL altimeter | 300 m (OLCI), 500 m (SLSTR) | Every 1-2 days | Oceanic, climatic and terrestrial observation |
| Sentinel-5P | TROPOMI spectrometer, resolution 7 x 3.5 km | 7 x 3.5 km | Daily global coverage | Air quality monitoring, trace gases |
| Sentinel-6 | Poseidon-4 altimeter, vertical resolution 1 cm | 1 cm | Every 10 days | Sea level measurement, climate change |

Figure 1. Sentinel satellites. Source: own elaboration
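As a rough illustration of what the capture frequencies in the table mean in practice, the sketch below counts how many acquisitions a fixed location could expect over a given period. The revisit figures are the nominal values from the table; the dictionary and function names are hypothetical, and real coverage also depends on latitude, cloud cover and each mission's acquisition plan.

```python
# Nominal revisit intervals in days, taken from the table above.
# For Sentinel-3 the table gives "every 1-2 days"; the upper bound is used here.
REVISIT_DAYS = {
    "Sentinel-1": 6,
    "Sentinel-2": 5,
    "Sentinel-3": 2,
    "Sentinel-5P": 1,
    "Sentinel-6": 10,
}


def expected_passes(satellite: str, period_days: int) -> int:
    """Return how many complete revisit cycles fit in the period."""
    return period_days // REVISIT_DAYS[satellite]


if __name__ == "__main__":
    for name in REVISIT_DAYS:
        print(f"{name}: about {expected_passes(name, 30)} passes in 30 days")
```

Under these assumptions, a farmer following a plot with Sentinel-2 would have roughly six images per month to work with, before discarding cloudy scenes.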

Galileo: the European GPS

Galileo is the global navigation satellite system developed by the European Union, managed by the European Space Agency (ESA) and operated by the European Union Space Programme Agency (EUSPA). It aims to provide a reliable and highly accurate global positioning service, independent of other systems such as the US GPS, China's BeiDou or Russia's GLONASS. Galileo is designed for civilian use and offers free and paid services for various sectors, including transport, telecommunications, energy and finance.

Spain has played a leading role in the Galileo programme. The European GNSS Service Centre (GSC), located in Torrejón de Ardoz, Madrid, acts as the main contact point for users of the Galileo system. In addition, Spanish industry has contributed to the development and manufacture of components for satellites and ground infrastructure, strengthening Spain's position in the European aerospace sector.

| Satellite | Technical characteristics | Resolution | Coverage (capture frequency) | Uses |
|---|---|---|---|---|
| Galileo FOC | Medium Earth Orbit (MEO), 24 operational satellites | N/A | Continuous | Precise positioning, land and maritime navigation |
| Galileo IOV | First test satellites of the Galileo system | N/A | Continuous | Initial testing of Galileo before FOC |

Figure 2. Galileo satellites. Source: own elaboration

EGNOS: improving the accuracy of GPS and Galileo

The European Geostationary Navigation Overlay Service (EGNOS) is the European satellite-based augmentation system (SBAS), designed to improve the accuracy and reliability of global navigation satellite systems (GNSS) such as GPS and, in the future, Galileo. EGNOS provides corrections and integrity data that allow users in Europe to determine their position with an accuracy of up to 1.5 metres, making it suitable for safety-critical applications such as aviation and maritime navigation.

Spain has played a leading role in the development and operation of EGNOS. Through ENAIRE, Spain hosts five RIMS Reference Stations located in Santiago, Palma, Malaga, Gran Canaria and La Palma. In addition, the Madrid Air Traffic Control Centre, located in Torrejón de Ardoz, hosts one of the EGNOS Mission Control Centres (MCC), operated by ENAIRE. The Spanish space industry has contributed significantly to the development of the system, with companies participating in studies for the next generation of EGNOS.

| Satellite | Technical characteristics | Resolution | Coverage (capture frequency) | Uses |
|---|---|---|---|---|
| EGNOS Geo | Geostationary GNSS correction satellites | N/A | Real-time GNSS correction | GNSS signal correction for aviation and transportation |

Figure 3. EGNOS satellites. Source: own elaboration

Meteosat: high precision weather forecasting

The Meteosat programme consists of a series of geostationary meteorological satellites initially developed by the European Space Agency (ESA) and currently operated by the European Organisation for the Exploitation of Meteorological Satellites (EUMETSAT). These satellites are positioned in geostationary orbit above the Earth's equator, allowing continuous monitoring of weather conditions over Europe, Africa and the Atlantic Ocean. Their main function is to provide images and data to facilitate weather prediction and climate monitoring.

Spain has been an active participant in the Meteosat programme since its inception. Through the Agencia Estatal de Meteorología (AEMET), Spain contributes financially to EUMETSAT and participates in the programme's decision-making and operations. In addition, the Spanish space industry has played a key role in the development of the Meteosat satellites. Spanish companies have been responsible for the design and supply of critical components for third-generation satellites, including scanning and calibration mechanisms.

| Satellite | Technical characteristics | Resolution | Coverage (capture frequency) | Uses |
|---|---|---|---|---|
| Meteosat First Generation | Initial weather satellites, low resolution | Low resolution | Every 30 min | Basic weather forecast, images every 30 min |
| Meteosat Second Generation | Higher spectral and temporal resolution, data every 15 min | High resolution | Every 15 min | Improved accuracy, early detection of weather events |
| Meteosat Third Generation | High-precision weather imaging, lightning detection | High resolution | High frequency | High-precision weather imaging, lightning detection |

Figure 4. Meteosat satellites. Source: own elaboration

Access to the data of each programme

Each programme has its own data access conditions and distribution platforms:

- Copernicus: provides free and open data through various platforms. Users can access satellite imagery and products through the Copernicus Data Space Ecosystem, which offers search, download and processing tools. Data can also be obtained through APIs for integration into automated systems.

- Galileo: its Open Service (OS) lets any user with a compatible receiver use the navigation signals free of charge. However, direct access to raw satellite data is not provided. Information on services and documentation is available through the European GNSS Service Centre (GSC):

  - Galileo Portal.

  - Registration for access to the High Accuracy Service (HAS).

- EGNOS: this system improves navigation accuracy with GNSS correction signals. Data on service availability and status can be found on the EGNOS User Support platform.

- Meteosat: Meteosat satellite data are available through the EUMETSAT platform. There are different levels of access, including some free data and some subject to registration or payment. Imagery and meteorological products can be accessed through the EUMETSAT Data Centre.

In terms of open access, Copernicus is the only programme that offers open and unrestricted data. In contrast, Galileo and EGNOS provide free services, but not access to raw satellite data, while Meteosat requires registration and in some cases payment for access to specific data.
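To make the API route mentioned for Copernicus more concrete, here is a minimal sketch that builds a search URL for the Copernicus Data Space Ecosystem catalogue. The endpoint, the `Collection/Name` and `ContentDate/Start` filter fields and the `build_query_url` helper are assumptions based on the OData interface as publicly documented at the time of writing, and should be checked against the current documentation before use. The code only constructs the URL; it does not send any request.

```python
from datetime import date
from urllib.parse import quote, urlencode

# Assumed endpoint of the Copernicus Data Space Ecosystem OData catalogue.
BASE_URL = "https://catalogue.dataspace.copernicus.eu/odata/v1/Products"


def build_query_url(collection: str, start: str, end: str, top: int = 10) -> str:
    """Build a catalogue search URL for products sensed between two dates.

    `start` and `end` are ISO dates (YYYY-MM-DD), both taken at midnight UTC.
    """
    # Validate the dates early so malformed input fails before any request.
    start_day = date.fromisoformat(start)
    end_day = date.fromisoformat(end)
    if end_day <= start_day:
        raise ValueError("end must be later than start")

    # OData filter expression (field names are assumptions, see lead-in).
    odata_filter = (
        f"Collection/Name eq '{collection}'"
        f" and ContentDate/Start gt {start_day.isoformat()}T00:00:00.000Z"
        f" and ContentDate/Start lt {end_day.isoformat()}T00:00:00.000Z"
    )
    params = {"$filter": odata_filter, "$top": str(top)}
    # Keep OData punctuation readable; spaces are encoded as %20.
    return BASE_URL + "?" + urlencode(params, quote_via=quote, safe="$/:'")


if __name__ == "__main__":
    print(build_query_url("SENTINEL-2", "2025-01-01", "2025-01-31", top=5))
```

The resulting URL can be passed to any HTTP client. Searching the catalogue and downloading the products themselves may have different requirements (for example, a registered account), so those steps are left out of this sketch.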

Conclusions

The Copernicus, Galileo, EGNOS and Meteosat programmes not only reinforce Europe's space sovereignty, but also ensure access to strategic data essential for the management of the planet. Through them, Europe can monitor climate change, optimise global navigation, improve the accuracy of its positioning systems and strengthen its weather prediction capabilities, ensuring more effective responses to environmental crises and emergencies.

Spain plays a fundamental role in this space infrastructure, not only with its aerospace industry, but also with its control centres and reference stations, consolidating itself as a key player in the development and operation of these systems.

Satellite imagery and data have evolved from scientific tools to become essential resources for security, environmental management and sustainable growth. In a world increasingly dependent on real-time information, access to this data is critical for climate resilience, spatial planning, sustainable agriculture and ecosystem protection.

The future of Earth observation and satellite navigation is constantly evolving, and Europe, with its advanced space programmes, is positioning itself as a leader in the exploration, analysis and management of the planet from space.

Access to this data allows researchers, businesses and governments to make more informed and effective decisions. With these systems, Europe and Spain guarantee their technological independence and strengthen their leadership in the space sector.

Ready to explore more? Access the links for each programme and discover how this data can transform our world.

| Programme | Link | Type of access |
|---|---|---|
| Copernicus | https://dataspace.copernicus.eu/ | Download centre |
| Meteosat | https://user.eumetsat.int/data-access/data-centre/ | Download centre |
| Galileo | https://www.gsc-europa.eu/galileo/services/galileo-high-accuracy-servic…/ | Download centre, after registration |
| EGNOS | https://egnos-user-support.essp-sas.eu/ | Project |

Figure 5. Source: own elaboration

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of the author.

March is approaching and with it a new edition of the Open Data Day. It is an annual worldwide celebration that has been organised for 12 years, promoted by the Open Knowledge Foundation through the Open Knowledge Network. It aims to promote the use of open data in all countries and cultures.

This year's central theme is "Open data to address the polycrisis". The term polycrisis refers to a situation where different risks exist in the same time period. This theme aims to focus on open data as a tool to address, through its reuse, global challenges such as poverty and multiple inequalities, violence and conflict, climate risks and natural disasters.

Whereas the activities used to be limited to a single day, since 2023 they have spanned a full week of conferences, seminars, workshops and other events centred on this theme. In 2025, Open Data Day activities will take place from 1 to 7 March.

Through its website you can see the various activities that will take place throughout the week all over the world. In this article we review some of those that you can follow from Spain, either because they take place in Spain or because they can be followed online.

Open Data Day 2025: Women Leading Open Data for Equality

Iniciativa Barcelona Open Data is organising a session on the afternoon of 6 March focusing on how open data can help address equality challenges. The event will bring together women experts in data technologies and open data, to share knowledge, experiences and best practices in both the publication and reuse of open data in this field.

The event will start at 17:30 with a welcome and introduction. This will be followed by two panel discussions and an interview:

- Round Table 1. Publishing institutions. Gender-sensitive data strategy to address the feminist agenda.

- DIALOGUE Data lab. Building feminist Tech Data practice.

- Round Table 2. Re-users. Projects based on the use of open data to address the feminist agenda.

The day will end at 19:40 with a cocktail reception, where attendees can continue discussing the topics covered and expand their professional network.

How can you follow the event? This is an in-person event, which will be held at Ca l'Alier, Carrer de Pere IV, 362 (Barcelona).

Open access scientific and scholarly publishing as a tool to face the 21st century polycrisis: the key role of publishers

Organised by a private individual, Professor Damián Molgaray, this conference looks at the key role of publishers in open access scientific and scholarly publishing. The idea is for participants to reflect on how open knowledge is positioned as a fundamental tool to face the challenges of the 21st century polycrisis, with a focus on Latin America.

The event will take place on 4 March at 11:00 in Argentina (15:00 in mainland Spain).

How can you follow the event? This is an online event through Google Meet.

WhoFundsThem

The organisation mySociety will show the results of its latest project. Over the last few months, a team of volunteers has collected data on the financial interests of the 650 MPs in the UK House of Commons, using sources such as the official Register of Interests, Companies House, MPs' attendance at debates etc. This data, checked and verified with MPs themselves through a 'right of reply' system, has been transformed into an easily accessible format, so that anyone can easily understand it, and will be published on the parliamentary tracking website TheyWorkForYou.

The project will be presented and its conclusions discussed at this event, which takes place on Tuesday 4 March at 14:00 London time (15:00 in mainland Spain).

How can you follow the event? The session can be followed online, but registration is required. The event will be in English.

Science on the 7th: A conversation on Open Data & Air Quality