The European Green Deal (Green Deal) is the European Union's (EU) sustainable growth strategy, designed to drive a green transition that transforms Europe into a just and prosperous society with a modern and competitive economy. Notable initiatives within this strategy include Fit for 55, which aims to reduce EU emissions by at least 55% by 2030, and the Nature Restoration Regulation, which sets binding targets to restore ecosystems, habitats and species.

The European Data Strategy positions the EU as a leader in data-driven economies, promoting fundamental values such as privacy and sustainability. This strategy envisages the creation of sectoral data spaces to encourage the availability and sharing of data, promoting its re-use for the benefit of society and various sectors, including the environment.

This article looks at how environmental data spaces, driven by the European Data Strategy, play a key role in achieving the goals of the European Green Deal by fostering the innovative and collaborative use of data.

Green Deal data space from the European Data Strategy

In this context, the EU is promoting the Green Deal Data Space, designed to support the objectives of the Green Deal through the use of data. This data space will allow sharing data and using its full potential to address key environmental challenges in several areas: preservation of biodiversity, sustainable water management, the fight against climate change and the efficient use of natural resources, among others.

In this regard, the European Data Strategy highlights two initiatives:

- On the one hand, the GreenData4All initiative, which updates the INSPIRE Directive to enable greater exchange of environmental geospatial data between the public and private sectors and their effective re-use, including open access for the general public.

- On the other hand, the Destination Earth project proposes the creation of a digital twin of the Earth, using, among others, satellite data, which will allow the simulation of scenarios related to climate change, the management of natural resources and the prevention of natural disasters.

Preparatory actions for the development of the Green Deal data space

As part of its strategy for funding preparatory actions for the development of data spaces, the EU is funding the GREAT project (The Green Deal Data Space Foundation and its Community of Practice). This project focuses on laying the foundations for the development of the Green Deal data space through three strategic use cases: climate change mitigation and adaptation, zero pollution and biodiversity. A key aspect of GREAT is the identification and definition of a prioritised set of high-value environmental data (minimum but scalable set). This approach directly connects the project to the concept of high-value data defined in the European Open Data Directive (i.e. data whose re-use generates not only a positive economic impact, but also social and environmental benefits). The high-value data defined in the Implementing Regulation include data related to Earth observation and the environment, including data obtained from satellites, ground sensors and in situ data. These datasets cover issues such as air quality, climate, emissions, biodiversity, noise, waste and water, all of which are related to the European Green Deal.

Differentiating aspects of the Green Deal data space

At this point, three differentiating aspects of the Green Deal data space can be highlighted.

- Firstly, its clearly multi-sectoral nature requires consideration of data from a wide variety of domains, each with their own specific regulatory frameworks and models.

- Secondly, its development is closely tied to the territory, which calls for a bottom-up approach that starts from concrete, local scenarios.

- Finally, it includes high-value data, which highlights the importance of active involvement of public administrations, as well as the collaboration of the private and third sectors to ensure its success and sustainability.

Therefore, the potential of environmental data will be significantly increased through European data spaces that are multi-sectoral, territorialised and with strong public sector involvement.

Development of environmental data spaces in the Horizon Europe programme

To develop environmental data spaces that take into account the above considerations, drawn from both the European Data Strategy and the preparatory actions, the EU is funding four projects under the Horizon Europe (HORIZON) programme:

- Urban Data Spaces for Green dEal (USAGE). This project develops solutions to ensure that environmental data at the local level is useful for mitigating the effects of climate change. This includes the development of mechanisms that enable cities to generate data that meets the FAIR principles (Findable, Accessible, Interoperable, Reusable), so that it can be used for environmentally informed decision-making.

- All Data for Green Deal (AD4GD). This project aims to propose a set of mechanisms to ensure that biodiversity, water quality and air quality data comply with the FAIR principles. It considers data from a variety of sources (satellite remote sensing, in situ observation networks, IoT-connected sensors, citizen science or socio-economic data).

- F.A.I.R. information cube (FAIRiCUBE). The purpose of this project is to create a platform that enables the reuse of biodiversity and climate data through the use of machine learning techniques. The aim is to enable public institutions that currently do not have easy access to these resources to improve their environmental policies and evidence-based decision-making (e.g. for the adaptation of cities to climate change).

- Biodiversity Building Blocks for Policy (B-Cubed). This project aims to transform biodiversity monitoring into an agile process that generates more interoperable data. It considers biodiversity data from different sources, such as citizen science, museums, herbaria or research, as well as their consumption through business intelligence models, such as OLAP cubes, to support informed decision-making and public policies that counteract the global biodiversity crisis.
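To make the "cube" idea mentioned for B-Cubed more concrete, here is a minimal sketch, assuming hypothetical occurrence records that carry a species name, a year and coordinates. It aggregates the records into cells indexed by species, year and a coarse latitude/longitude grid, which is the kind of structure an OLAP-style tool can then slice or roll up. The record fields, the helper names and the 1-degree grid are illustrative assumptions, not part of any project's actual software.

```python
from collections import Counter
from math import floor


def grid_cell(lat, lon, size_deg=1.0):
    """Return the (row, col) index of the grid cell that contains a point."""
    return (floor(lat / size_deg), floor(lon / size_deg))


def build_cube(records, size_deg=1.0):
    """Count occurrences per (species, year, grid cell)."""
    cube = Counter()
    for rec in records:
        cell = grid_cell(rec["lat"], rec["lon"], size_deg)
        cube[(rec["species"], rec["year"], cell)] += 1
    return cube


def yearly_totals(cube, species):
    """Roll the cube up over space: occurrences per year for one species."""
    totals = Counter()
    for (sp, year, _cell), count in cube.items():
        if sp == species:
            totals[year] += count
    return dict(totals)


# Hypothetical occurrence records, for illustration only.
records = [
    {"species": "Lynx pardinus", "year": 2022, "lat": 38.2, "lon": -4.1},
    {"species": "Lynx pardinus", "year": 2022, "lat": 38.7, "lon": -4.9},
    {"species": "Lynx pardinus", "year": 2023, "lat": 37.1, "lon": -6.4},
    {"species": "Aquila adalberti", "year": 2023, "lat": 39.5, "lon": -5.2},
]
cube = build_cube(records)
print(yearly_totals(cube, "Lynx pardinus"))
```

Counting per cell rather than storing individual points is what lets data from different sources be compared on a common grid.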

Environmental data spaces and research data

Finally, one source of data that can play a crucial role in achieving the objectives of the European Green Deal is scientific data emanating from research results. In this context, the European Union's European Open Science Cloud (EOSC) initiative is an essential tool. EOSC is an open, federated digital infrastructure designed to provide the European scientific community with access to high-quality scientific data and services, i.e. a true research data space. This initiative aims to facilitate interoperability and data exchange in all fields of research by promoting the adoption of FAIR principles, and its federation with the Green Deal data space is therefore essential.

Conclusions

Environmental data is key to meeting the objectives of the European Green Deal. To encourage the availability, sharing and re-use of this data, the EU is developing a series of environmental data space projects. Once in place, these data spaces will facilitate more efficient and sustainable management of natural resources, through active collaboration between all stakeholders (both public and private), driving Europe's ecological transition.

Jose Norberto Mazón, Professor of Computer Languages and Systems at the University of Alicante. The contents and views reflected in this publication are the sole responsibility of the author.

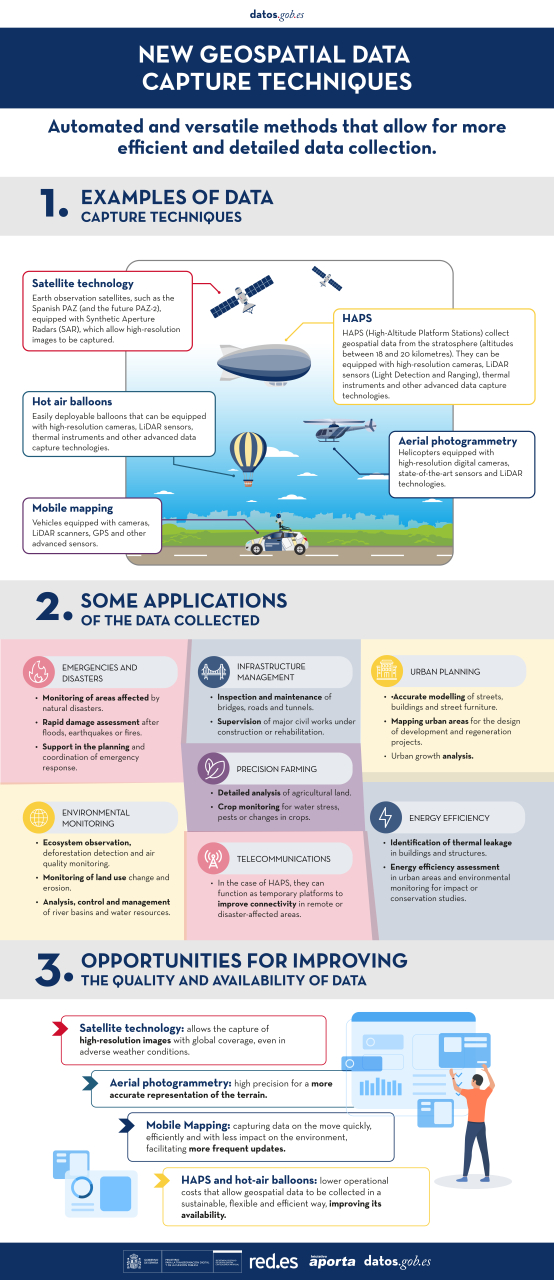

Geospatial data capture is essential for understanding our environment, making informed decisions and designing effective policies in areas such as urban planning, natural resource management or emergency response. In the past, this process was mainly manual and labour-intensive, based on ground measurements made with tools such as total stations and levels. Although these traditional techniques have evolved significantly and are still widely used, they have been complemented by automated and versatile methods that allow more efficient and detailed data collection.

The novelty in the current context lies not only in technological advances, which have improved the accuracy and efficiency of geospatial data collection, but also in the fact that they coincide with a widespread shift in mindset towards transparency and accessibility. This approach has encouraged the publication of the data obtained as open resources, facilitating their reuse in applications such as urban planning, energy management and environmental assessment. The combination of advanced technology and an increased awareness of the importance of information sharing marks a significant departure from traditional techniques.

In this article, we will explore some of the new methods of data capture, from photogrammetric flights with helicopters and drones, to ground-based systems such as mobile mapping, which use advanced sensors to generate highly accurate three-dimensional models and maps. In addition, we will learn how these technologies have empowered the generation of open data, democratising access to key geospatial information for innovation, sustainability and public-private collaboration.

Aerial photogrammetry: helicopters with advanced sensors

In the past, capturing geospatial data from the air involved long and complex processes. Analogue cameras mounted on aircraft generated aerial photographs that had to be processed manually to create two-dimensional maps. While this approach was innovative at the time, it also had limitations, such as lower resolution, long processing times and greater dependence on weather and daylight. However, technological advances have reduced these restrictions, even allowing operations at night or in adverse weather conditions.

Today, aerial photogrammetry has taken a qualitative leap forward thanks to the use of helicopters equipped with state-of-the-art sensors. The high-resolution digital cameras allow images to be captured at multiple angles, including oblique views that provide a more complete perspective of the terrain. In addition, the incorporation of thermal sensors and LiDAR (Light Detection and Ranging) technologies adds an unprecedented layer of detail and accuracy. These systems generate point clouds and three-dimensional models that can be integrated directly into geospatial analysis software, eliminating much of the manual processing.

| Features | Advantages | Disadvantages |
|---|---|---|
| Coverage and flexibility | Covers large areas and reaches complex terrain. | May be limited in areas with airspace restrictions. Cannot reach underground or hard-to-access areas such as tunnels. |
| Data type | Captures visual, thermal and topographic data in a single flight. | - |
| Precision | Generates point clouds and 3D models with high accuracy. | - |
| Efficiency in large projects | Covers large areas where drones do not have sufficient autonomy. | High operational cost compared to other technologies. |
| Environmental impact and noise | - | Generates noise and greater environmental impact, limiting its use in sensitive areas. |
| Weather conditions | - | Depends on the weather; adverse conditions such as wind or rain affect its operation. |
| Cost | - | High cost compared to drones or ground-based methods. |

Figure 1. Table with advantages and disadvantages of aerial photogrammetry with helicopters.

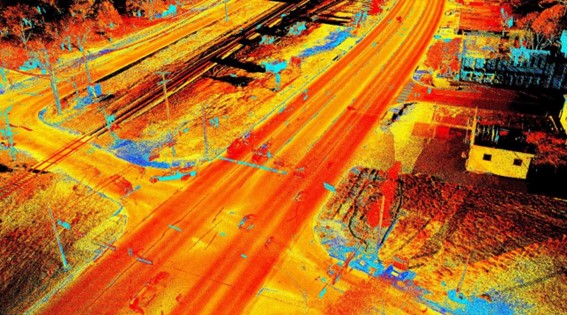

Mobile mapping: from backpacks to BIM integration

Mobile mapping is a geospatial data capture technique that uses vehicles equipped with cameras, LiDAR scanners, GPS and other advanced sensors. This technology allows detailed information to be collected as the vehicle moves, making it ideal for mapping urban areas, road networks and dynamic environments.

In the past, topographic surveys required stationary measurements, which meant traffic disruptions and considerable time to cover large areas. In contrast, mobile mapping has revolutionised this process, allowing data to be captured quickly, efficiently and with less impact on the environment. In addition, there are portable versions of this technology, such as backpacks with robotic scanners, which allow access to pedestrian or hard-to-reach areas.

Figure 2. Image captured with mobile mapping techniques.

| Features | Advantages | Disadvantages |
|---|---|---|
| Speed | Captures data while the vehicle is on the move, reducing operating times. | Lower accuracy in areas with poor visibility for sensors (e.g. tunnels). |
| Urban coverage | Ideal for urban environments and complex road networks. | Efficient only where vehicles can circulate; its reach is limited in rural or inaccessible terrain. |
| Flexibility of implementation | Available in portable (backpack) versions for pedestrian or hard-to-reach areas. | Portable equipment tends to have a shorter range than vehicular systems. |
| GIS and BIM integration | Facilitates the generation of digital models and their use in planning and analysis. | Requires advanced software to process large volumes of data. |
| Impact on the environment | Does not require traffic interruptions or exclusive access to work areas. | Depends on suitable environmental conditions, such as adequate light and weather. |
| Accessibility | Can reach underground or hard-to-reach areas such as tunnels. | - |

Figure 3. Table with advantages and disadvantages of mobile mapping.

Mobile mapping is a versatile and efficient solution for capturing geospatial data on the move, and it is becoming a key tool for modernising urban and territorial management systems.

HAPS and balloons: new heights for information capture

HAPS (High-Altitude Platform Stations) and hot-air balloons represent an innovative and efficient alternative for capturing geospatial data from high altitudes. These platforms, located in the stratosphere or at controlled altitudes, combine features of drones and satellites, offering an intermediate solution that stands out for its versatility and sustainability:

- HAPS, such as airships and similar aircraft, operate in the stratosphere, at altitudes between 18 and 20 kilometres, allowing a wide and detailed view of the terrain.

- Hot-air balloons, on the other hand, are ideal for local or temporary studies, thanks to their ease of deployment and operation at lower altitudes.

Both technologies can be equipped with high-resolution cameras, LiDAR sensors, thermal instruments and other advanced technologies for data capture.

| Features | Advantages | Disadvantages |
|---|---|---|
| Coverage | Large capture area, especially with HAPS in the stratosphere. | Limited coverage compared to satellites in orbit. |
| Sustainability | Lower environmental impact and energy footprint compared to helicopters or aeroplanes. | Dependence on weather conditions for deployment and stability. |
| Cost | Lower operating costs than traditional satellites. | Higher initial investment than drones or ground equipment. |
| Versatility | Ideal for temporary or emergency projects. | Limited range in hot-air balloons. |
| Duration of operation | HAPS can operate for long periods (days or weeks). | Hot-air balloons have a shorter operating time. |

Figure 4. Table with advantages and disadvantages of HAPS and balloons.

HAPS and balloons are presented as key tools to complement existing technologies such as drones and satellites, offering new possibilities in geospatial data collection in a sustainable, flexible and efficient way. As these technologies evolve, their adoption will expand access to crucial data for smarter land and resource management.

Satellite technology: PAZ satellite and its future with PAZ-2

Satellite technology is a fundamental tool for capturing geospatial data globally. Spain has taken significant steps in this field with the development and launch of the PAZ satellite. This satellite, initially designed for security and defence purposes, has shown enormous potential for civilian applications such as environmental monitoring, natural resource management and urban planning.

PAZ is an Earth observation satellite equipped with a synthetic aperture radar (SAR), which allows high-resolution imaging, regardless of weather or light conditions.

The upcoming launch of PAZ-2 (planned for 2030) promises to further expand Spain's observation capabilities. This new satellite, designed with technological improvements, aims to complement the functions of PAZ and increase the availability of data for civil and scientific applications. Planned improvements include:

- Higher image resolution.

- Ability to monitor larger areas in less time.

- Increased frequency of captures for more dynamic analysis.

| Feature | Advantages | Disadvantages |
|---|---|---|
| Global coverage | Ability to capture data from anywhere on the planet. | Limitations in resolution compared to more detailed terrestrial technologies. |
| Weather independence | SAR sensors allow captures even in adverse weather conditions. | - |
| Data frequency | PAZ-2 will improve the frequency of captures, ideal for continuous monitoring. | Limited satellite lifetime. |
| Access to open data | Encourages re-use in civil and scientific projects. | Requires advanced infrastructure to process large volumes of data. |

Figure 5. Table with advantages and disadvantages of PAZ and PAZ-2 satellite technology

With PAZ and the forthcoming PAZ-2, Spain strengthens its position in the field of satellite observation, opening up new opportunities for efficient land management, environmental analysis and the development of innovative solutions based on geospatial data. These satellites are not only a technological breakthrough, but also a strategic tool to promote sustainability and international cooperation in data access.

Conclusion: challenges and opportunities in data management

The evolution of geospatial data capture techniques offers a unique opportunity to improve the accuracy, accessibility and quality of data, and in the specific case of open data, it is essential to foster transparency and re-use of public information. However, this progress cannot be understood without analysing the role played by technological tools in this process.

Innovations such as LiDAR in helicopters, Mobile Mapping, SAM, HAPS and satellites such as PAZ and PAZ-2 not only optimise data collection, but also have a direct impact on data quality and availability.

In short, these technological tools generate high quality information that can be made available to citizens as open data, a situation that is being driven by the shift in mindset towards transparency and accessibility. This balance makes open data and technological tools complementary, essential to maximise the social, economic and environmental value of geospatial data.

You can see a summary of these techniques and their applications in the following infographic:

Content prepared by Mayte Toscano, Senior Consultant in Data Economy Technologies. The contents and points of view reflected in this publication are the sole responsibility of the author.

Promoting the data culture is a key objective at the national level that is also shared by the regional administrations. One of the ways to achieve this purpose is to award those solutions that have been developed with open datasets, an initiative that enhances their reuse and impact on society.

In pursuit of this mission, the Junta de Castilla y León and the Basque Government have been organising open data competitions for years, a subject we discussed in the first episode of the datos.gob.es podcast.

In this post, we take a look at the winning projects in the latest editions of the open data competitions in the Basque Country and Castilla y León.

Winners of the 8th Castilla y León Open Data Competition

In the eighth edition of this annual competition, which usually opens at the end of summer, 35 entries were submitted, from which 8 winners were chosen in different categories.

Ideas category: participants had to describe an idea to create studies, services, websites or applications for mobile devices. A first prize of €1,500 and a second prize of €500 were awarded.

- First prize: Green Guardians of Castilla y León presented by Sergio José Ruiz Sainz. This is a proposal to develop a mobile application to guide visitors to the natural parks of Castilla y León. Users can access information (such as interactive maps with points of interest) as well as contribute useful data from their visit, which enriches the application.

- Second prize: ParkNature: intelligent parking management system in natural spaces presented by Víctor Manuel Gutiérrez Martín. It consists of an idea to create an application that optimises the experience of visitors to the natural areas of Castilla y León, by integrating real-time data on parking and connecting with nearby cultural and tourist events.

Products and Services category: this category rewarded studies, services, websites or applications for mobile devices, which had to be accessible to all citizens on the web via a URL. First, second and third prizes of €2,500, €1,500 and €500 respectively were awarded, as well as a specific prize of €1,500 for students.

- First prize: AquaCyL by Pablo Varela Vázquez. It is an application that provides information about the bathing areas in the autonomous community.

- Second prize: ConquistaCyL presented by Markel Juaristi Mendarozketa and Maite del Corte Sanz. It is an interactive game designed for tourism in Castilla y León and learning through a gamified process.

- Third prize: All the sport of Castilla y León presented by Laura Folgado Galache. It is an app that presents all the information of interest associated with a sport according to the province.

- Student prize: Otto Wunderlich en Segovia by Jorge Martín Arévalo. It is a photographic repository sorted according to type of monuments and location of Otto Wunderlich's photographs.

Didactic Resource Category: consisted of the creation of new and innovative open didactic resources to support classroom teaching. These resources were to be published under Creative Commons licences. A single first prize of €1,500 was awarded in this category.

- First prize: StartUp CyL: Business creation through Artificial Intelligence and Open Data presented by José María Pérez Ramos. It is a chatbot that uses the ChatGPT API to assist in setting up a business using open data.

Data Journalism category: this category rewarded journalistic pieces that had been published or substantially updated, in both written and audiovisual media, with a prize of €1,500.

- First prize: Codorniz, perdiz y paloma torcaz son las especies más cazadas en Burgos, presented by Sara Sendino Cantera, which analyses data on hunting in Burgos.

Winners of the 5th edition of the Open Data Euskadi competition

As in previous editions, the Basque open data portal ran two competitions: an ideas competition and an applications competition, each of which was divided into several categories. On this occasion, 41 entries were submitted for the ideas competition and 30 for the applications competition.

Ideas competition: two prizes, of €3,000 and €1,500, were awarded in each category.

Health and Social Category

- First prize: Development of a Model for Predicting the Volume of Patients attending the Emergency Department of Osakidetza by Miren Bacete Martínez. It proposes the development of a predictive model using time series capable of anticipating both the volume of people attending the emergency department and the level of severity of cases.

- Second prize: Euskoeduca by Sandra García Arias. It is a proposed digital solution designed to provide personalised academic and career guidance to students, parents and guardians.

Environment and Sustainability Category

- First prize: Baratzapp by Leire Zubizarreta Barrenetxea. The idea is to develop software that assists in planning a vegetable garden for self-consumption, using algorithms that build on existing horticultural knowledge while integrating climatological, environmental and plot information, among other data, in a way that is personalised for the user.

- Second prize: Euskal Advice by Javier Carpintero Ordoñez. The aim of this proposal is to define a tourism recommender based on artificial intelligence.

General Category

- First prize: Lanbila by Hodei Gonçalves Barkaiztegi. It is a proposed app that uses generative AI and open data to semantically match CVs with job offers. It provides personalised recommendations, proactive employment and training alerts, and enables informed decisions through labour and territorial indicators.

- Second prize: Development of an LLM for the interactive consultation of Open Data of the Basque Government by Ibai Alberdi Martín. The proposal consists of developing a Large Language Model (LLM) similar to ChatGPT, specifically trained with open data, focused on providing a conversational and graphical interface that allows users to get accurate answers and dynamic visualisations.

Applications competition: one project was selected in the Web Services category, awarded €8,000, and two more in the General Category, which received a first prize of €8,000 and a second prize of €5,000.

Web Services Category

- First prize: Bizidata: Plataforma de visualización del uso de bicicletas en Vitoria-Gasteiz by Igor Díaz de Guereñu de los Ríos. It is a platform that visualises, analyses and downloads data on bicycle use in Vitoria-Gasteiz, and explores how external factors, such as the weather and traffic, influence bicycle use.

General Category

- First prize: Garbiñe AI by Beatriz Arenal Redondo. It is an intelligent assistant that combines Artificial Intelligence (AI) with open data from Open Data Euskadi to promote the circular economy and improve recycling rates in the Basque Country.

- Second prize: Vitoria-Gasteiz Businessmap by Zaira Gil Ozaeta. It is an interactive visualisation tool based on open data, designed to improve strategic decisions in the field of entrepreneurship and economic activity in Vitoria-Gasteiz.

All these award-winning solutions reuse open datasets from the regional portal of Castilla y León or Euskadi, as the case may be. We encourage you to take a look at the proposals that may inspire you to participate in the next edition of these competitions. Follow us on social media so you don't miss out on this year's calls!

Tupreciodeluz.com offers daily information on the price of electricity, showing the cheapest price of the day, as well as the average price of the last 24 hours and the most expensive time slot. The aim is to allow consumers adhering to the regulated market tariff (PVPC) to modulate their consumption in order to save on their electricity bill.
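The kind of daily summary described above can be sketched as follows, assuming a list of 24 hourly prices in €/kWh. The price values and the `summarise_prices` helper are hypothetical and only illustrate the calculation; the site's actual data source and method are not described here.

```python
from statistics import mean


def summarise_prices(hourly_prices):
    """Summarise 24 hourly prices, where the list index is the hour of day."""
    if len(hourly_prices) != 24:
        raise ValueError("expected 24 hourly prices")
    hours = range(24)
    cheapest_hour = min(hours, key=lambda h: hourly_prices[h])
    priciest_hour = max(hours, key=lambda h: hourly_prices[h])
    return {
        "cheapest_hour": cheapest_hour,
        "cheapest_price": hourly_prices[cheapest_hour],
        "most_expensive_hour": priciest_hour,
        "most_expensive_price": hourly_prices[priciest_hour],
        "average_price": round(mean(hourly_prices), 4),
    }


# Hypothetical prices in EUR/kWh, for illustration only.
prices = [0.10] * 24
prices[4] = 0.05   # a cheap early-morning hour
prices[21] = 0.25  # an expensive evening hour
summary = summarise_prices(prices)
print(summary)
```

A consumer on the PVPC tariff could use the cheapest hour from such a summary to schedule flexible loads, such as a washing machine.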

The website also features an artificial intelligence assistant for energy advice, and measures to promote efficiency and responsible energy consumption.

In addition, consumers can use a solar sizer to find out the feasibility of installing solar energy in their home or business.

The website also has a blog where the most relevant news for consumers is published in a summarised and entertaining way.

In this episode we will delve into the importance of three related categories of high-value datasets. These are Earth observation and environmental data, geospatial data and mobility data. To tell us about them, we have interviewed two experts in the field:

- Paloma Abad Power, Deputy Director of the National Centre for Geographic Information (CNIG).

- Rafael Martínez Cebolla, geographer of the Government of Aragón.

With them we have explored how these high-value datasets are transforming our environment, contributing to sustainable development and technological innovation.

Listen to the full podcast (only available in Spanish)

Summary of the interview

1. What are high-value datasets and why are they important?

Paloma Abad Power: According to the regulation, high-value datasets are those that offer the highest socio-economic potential and, for this, they must be easy to find, accessible, interoperable and usable. And what does this mean? It means that the datasets must have their descriptions, i.e. online metadata that report their statistics and properties, and that they can be easily downloaded or used.

In many cases, these data are often reference data, i.e. data that serve to generate other types of data, such as thematic data, or can generate added value.

Rafael Martínez Cebolla: They could be defined as those datasets that represent phenomena that are useful for decision making, for any public policy or for any action that a natural or legal person may undertake.

In this sense, there are already some directives, which are not so recent, such as the Water Framework Directive or the INSPIRE Directive, which motivated this need to provide shared data under standards that drive the sustainable development of our society.

2. These high-value data are defined by a European Directive and an Implementing Regulation, which established six categories of high-value datasets. On this occasion we will focus on three of them: Earth observation and environmental data, geospatial data and mobility data. What do these three categories of data have in common and what specific datasets do they cover?

Paloma Abad Power: In my opinion, these data have in common the geographical component, i.e. they are data located on the ground and therefore serve to solve problems of different nature and linked to society.

Thus, for example, we have, with national coverage, the National Aerial Orthophotography Plan (PNOA), which are the aerial images, the System of Land Occupation Information (SIOSE), cadastral parcels, boundary lines, geographical names, roads, postal addresses, protected sites - which can be both environmental and also castles, i.e. historical heritage- etc. And these categories cover almost all the themes defined by the annexes of the INSPIRE directive.

Rafael Martínez Cebolla: It is necessary to know what is pure geographic information, with a direct geographic reference, as opposed to other types of phenomena that have indirect geographic references. In today's world, 90% of information can be located, either directly or indirectly. Today more than ever, geographic tagging is mandatory for any corporation that wants to implement a certain activity, be it social, cultural, environmental or economic: the implementation of renewable energies, where I am going to eat today, etc. These high-value datasets enhance these geographical references, especially of an indirect nature, which help us to make a decision.

3. Which agencies publish these high-value datasets? In other words, where could a user locate datasets in these categories?

Paloma Abad Power: It is necessary to highlight the role of the National Cartographic System, which is an action model in which the organisations of the General State Administration and the autonomous communities participate. It coordinates the co-production of many unique products, funded by these organisations.

These products are published through interoperable web services. They are published, in this case, by the National Centre for Geographic Information (CNIG), which is also responsible for much of the metadata for these products.

They could be located through the Catalogues of the IDEE (Spatial Data Infrastructure of Spain) or the Official Catalogue of INSPIRE Data and Services, which is also included in datos.gob.es and the European Data Portal.

And who can publish? All bodies that have a legal mandate for a product classified under the Regulation. Examples: all the mapping bodies of the Autonomous Communities, the General Directorate of Cadastre, Historical Heritage, the National Statistics Institute, the Geological and Mining Institute (IGME), the Hydrographic Institute of the Navy, the Ministry of Agriculture, Fisheries and Food (MAPA), the Ministry for Ecological Transition and the Demographic Challenge, etc. There are a multitude of organisations and many of them, as I have mentioned, participate in the National Cartographic System, provide the data and generate a single service for the citizen.

Rafael Martínez Cebolla: The National Cartographic System defines very well the degree of competences assumed by the administrations. In other words, the public administration at all levels provides official data, assisted by private enterprise, sometimes through public procurement.

The General State Administration goes up to scales of 1:25,000 in the case of the National Geographic Institute (IGN) and then the distribution of competencies for the rest of the scales is for the autonomous or local administrations. In addition, there are a number of actors, such as hydrographic confederations, state departments or the Cadastre, which have under their competences the legal obligation to generate these datasets.

For me it is an example of how it should be distributed, although it is true that it is then necessary to coordinate very well, through collegiate bodies, so that the cartographic production is well integrated.

Paloma Abad Power: There are also collaborative projects, such as, for example, a citizen map, technically known as an X, Y, Z map, which consists of capturing the mapping of all organisations at national and local level. That is, from small scales 1:1,000,000 or 1:50,000,000 to very large scales, such as 1:1000, to provide the citizen with a single multi-scale map that can be served through interoperable and standardised web services.
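As an illustration of how an X, Y, Z map is usually addressed, the sketch below converts a longitude and latitude into tile indices at a given zoom level. It assumes the common Web Mercator tiling scheme, which the interview does not confirm for this product, and the tile URL template is purely hypothetical.

```python
import math


def latlon_to_tile(lat_deg: float, lon_deg: float, zoom: int) -> tuple[int, int]:
    """Return the (x, y) tile indices that contain a WGS84 point.

    Assumption: standard Web Mercator XYZ tiling, where zoom level z
    splits the world into 2**z by 2**z tiles and y grows southwards.
    """
    n = 2 ** zoom  # number of tiles along each axis at this zoom level
    lat_rad = math.radians(lat_deg)
    x = int((lon_deg + 180.0) / 360.0 * n)
    y = int((1.0 - math.asinh(math.tan(lat_rad)) / math.pi) / 2.0 * n)
    # Clamp so that points on the outer edges stay inside the valid range.
    return min(max(x, 0), n - 1), min(max(y, 0), n - 1)


def tile_url(x: int, y: int, zoom: int) -> str:
    """Build a tile URL from a hypothetical template (not a real service)."""
    return f"https://tiles.example.org/{zoom}/{x}/{y}.png"
```

Low zoom levels correspond to the small scales mentioned above (a few tiles cover the whole country), while high zoom levels correspond to the very large scales, which is what allows a single multi-scale map to be served tile by tile.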

4. Do you have any other examples of direct application of this type of data?

Rafael Martínez Cebolla: A clear example was seen with the pandemic, with the mobility data published by the National Institute of Statistics. These were very useful data for the administration, for decision making, and from which we have to learn much more for the management of future pandemics and crises, including economic crises. We need to learn and develop our early warning systems.

I believe that this is the line of work: data that is useful for the general public. That is why I say that mobility has been a clear example, because it was the citizen himself who was informing the administration about how he was moving.

Paloma Abad Power: I am going to contribute some data. For example, according to statistics from the National Cartographic System services, the most demanded data are aerial images and digital terrain models. In 2022 there were 8 million requests and in 2023 there were 19 million requests for orthoimages alone.

Rafael Martínez Cebolla: I would like to add that this increase is also because things are being done well. On the one hand, discovery systems are improved. My general feeling is that there are many successful example projects, both from the administration itself and from companies that need this basic information to generate their products.

There was an application that was generated very quickly with de-escalation - you went to a website and it told you how far you could walk through your municipality - because people wanted to get out and walk. This example arises from spatial data that have moved out of the public administration. I believe that this is the importance of successful examples, which come from people who see a compelling need.

5. And how do you incentivise such re-use?

Rafael Martínez Cebolla: I have countless examples. Incentivisation also involves promotion and marketing, something that has sometimes failed us in the public administration. You stick to certain competences and it seems that just putting it on a website is enough. And that is not all.

We are incentivising re-use in two ways. On the one hand, internally, within the administration itself, teaching them that geographic information is useful for planning and evaluating public policies. And I give you the example of the Public Health Atlas of the Government of Aragon, awarded by an Iberian society of epidemiology the year before the pandemic. It was useful for them to know what the health of the Aragonese was like and what preventive measures they had to take.

As for the external incentives, in the case of the Geographic Institute of Aragon, it was seen that the profile entering the geoportal was very technical. The formats used were also very technical, which meant that the general public was not reached. To solve this problem, we promoted portals such as the IDE didactica, a portal for teaching geography, which reaches any citizen who wants to learn about the territory of Aragon.

Paloma Abad Power: I would like to highlight the economic benefit of this, as was shown, for example, in the economic study carried out by the National Centre for Geographic Information with the University of Leuven to measure the economic benefit of the Spatial Data Infrastructure of Spain. It measures the benefit to private companies of using free and open services, rather than using, for example, Google Maps or other non-open sources.

Rafael Martínez Cebolla: For better and for worse, because sometimes we wish the quality of the official data were better. Both Paloma in the General State Administration and I in the regional administration know that there are official data in which more money needs to be invested so that their quality improves and they become reusable.

But it is true that these studies are key to know in which dimension high-value datasets move. That is to say, having studies that report on the real benefit of having a spatial data infrastructure at state or regional level is, for me, key for two things: for the citizen to understand its importance and, above all, for the politician who arrives every N years to understand the evolution of these platforms and the revolution in geospatial information that we have experienced in the last 20 years.

6. The Geographic Institute of Aragon has also produced a report on the advantages of reusing this type of data, is that right?

Rafael Martínez Cebolla: Yes, it was published earlier this year. We have been doing this report internally for three or four years, because we knew we were going to make the leap to a spatial knowledge infrastructure and we wanted to see the impact of implementing a knowledge graph within the data infrastructure. The Geographic Institute of Aragon has made an effort in recent years to analyse the economic benefit of having this infrastructure available for the citizens themselves, not for the administration. In other words, how much money Aragonese citizens save in their taxes by having this infrastructure. Today we know that having a geographic information platform saves approximately 2 million euros a year for the citizens of Aragon.

I would like to see the report for the next January or February, because I think the leap will be significant. The knowledge graph was implemented in April last year and this gap will be felt in the year ahead. We have noticed a significant increase in requests, both for viewing and downloading.

Basically from one year to the next, we have almost doubled both the number of accesses and downloads. This affects the technological component: you have to redesign it. More people are discovering you, more people are accessing your data and, therefore, you have to dedicate more investment to the technological component, because it is being the bottleneck.

7. What do you see as the challenges to be faced in the coming years?

Paloma Abad Power: In my opinion, the first challenge is to get to know the user in order to provide a better service. The technical user, the university students, the users on the street, etc. We are thinking of doing a survey when the user is going to use our geographic information. But of course, such surveys sometimes slow down the use of geographic information. That is the great challenge: to know the user in order to make services more user-friendly, applications, etc. and to know how to get to what they want and give it to them better.

There is also another technical challenge. When spatial data infrastructures began, the technical level required was very high: you had to know what a visualisation service was, what metadata was, what the parameters were, etc. This has to be eliminated, so that the user can simply say, for example, "I want to consult and visualise the length of the Ebro river", in a more user-friendly way. Or take the word LiDAR, which refers to the high-accuracy digital terrain model. All these terms need to be made much more user-friendly.

Rafael Martínez Cebolla: Above all, let them be discovered. My perception is that we must continue to promote the discovery of spatial data without having to explain to the untrained user, or even to some technicians, that there must be data, metadata, a service... No, no. Basically, generalist search engines should know how to find high-value datasets without the user knowing that there is such a thing as a spatial data infrastructure.

It is a matter of publishing the data under friendly standards, under accessible versions and, above all, publishing them in permanent URIs, which are not going to change. In other words, the data will improve in quality, but will never change.

And above all, from a technical point of view, both spatial data infrastructures and geoportals and knowledge infrastructures have to ensure that high-value information nodes are related to each other from a semantic and geographical point of view. I understand that knowledge networks will help in this regard. In other words, mobility has to be related to the observation of the territory, to public health data or to statistical data, which also have a geographical component. This geographical semantic relationship is key for me.

Subscribe to our Soundcloud profile to keep up to date with our podcasts

Interview clips

Clip 1. What are high-value datasets and why are they important?

Clip 2. Where can a user locate geographic data?

Clip 3. How is the reuse of data with a geographic component being encouraged?

A digital twin is a virtual, interactive representation of a real-world object, system or process. We are talking, for example, about a digital replica of a factory, a city or even a human body. These virtual models allow simulating, analysing and predicting the behaviour of the original element, which is key for optimisation and maintenance in real time.

Due to their functionalities, digital twins are being used in various sectors such as health, transport or agriculture. In this article, we review the benefits of their use and show two examples related to open data.

Advantages of digital twins

Digital twins use real data sources from the environment, obtained through sensors and open platforms, among others. As a result, the digital twins are updated in real time to reflect reality, which brings a number of advantages:

- Increased performance: one of the main differences with traditional simulations is that digital twins use real-time data for modelling, allowing better decisions to be made to optimise equipment and system performance according to the needs of the moment.

- Improved planning: using technologies based on artificial intelligence (AI) and machine learning, the digital twin can analyse performance issues or perform virtual "what-if" simulations. In this way, failures and problems can be predicted before they occur, enabling proactive maintenance.

- Cost reduction: improved data management thanks to a digital twin generates benefits equivalent to 25% of total infrastructure expenditure. In addition, by avoiding costly failures and optimizing processes, operating costs can be significantly reduced. They also enable remote monitoring and control of systems from anywhere, improving efficiency by centralizing operations.

- Customization and flexibility: by creating detailed virtual models of products or processes, organizations can quickly adapt their operations to meet changing environmental demands and individual customer/citizen preferences. For example, in manufacturing, digital twins enable customized mass production, adjusting production lines in real time to create unique products according to customer specifications. On the other hand, in healthcare, digital twins can model the human body to customize medical treatments, thereby improving efficacy and reducing side effects.

- Boosting experimentation and innovation: digital twins provide a safe and controlled environment for testing new ideas and solutions, without the risks and costs associated with physical experiments. Among other issues, they allow experimentation with large objects or projects that, due to their size, do not usually lend themselves to real-life experimentation.

- Improved sustainability: by enabling simulation and detailed analysis of processes and systems, organizations can identify areas of inefficiency and waste, thus optimizing the use of resources. For example, digital twins can model energy consumption and production in real time, enabling precise adjustments that reduce consumption and carbon emissions.

Examples of digital twins in Spain

The following two examples illustrate these advantages.

GeDIA project: artificial intelligence to predict changes in territories

GeDIA is a tool for strategic planning of smart cities, which allows scenario simulations. It uses artificial intelligence models based on existing data sources and tools in the territory.

The scope of the tool is very broad, but its creators highlight two use cases:

- Future infrastructure needs: the platform performs detailed analyses considering trends, thanks to artificial intelligence models. In this way, growth projections can be made and the needs for infrastructures and services, such as energy and water, can be planned in specific areas of a territory, guaranteeing their availability.

- Growth and tourism: GeDIA is also used to study and analyse urban and tourism growth in specific areas. The tool identifies patterns of gentrification and assesses their impact on the local population, using census data. In this way, demographic changes and their impact, such as housing needs, can be better understood and decisions can be made to facilitate equitable and sustainable growth.

This initiative has the participation of various companies and the University of Malaga (UMA), as well as the financial backing of Red.es and the European Union.

Digital twin of the Mar Menor: data to protect the environment

The Mar Menor, the salt lagoon of the Region of Murcia, has suffered serious ecological problems in recent years, influenced by agricultural pressure, tourism and urbanisation.

To better understand the causes and assess possible solutions, TRAGSATEC, a state-owned environmental protection agency, developed a digital twin. It mapped a surrounding area of more than 1,600 square kilometres, known as the Campo de Cartagena Region. In total, 51,000 nadir images, 200,000 oblique images and more than four terabytes of LiDAR data were obtained.

Thanks to this digital twin, TRAGSATEC has been able to simulate various flooding scenarios and the impact of installing containment elements or obstacles, such as a wall, to redirect the flow of water. They have also been able to study the distance between the soil and the groundwater, to determine the impact of fertiliser seepage, among other issues.

Challenges and the way forward

These are just two examples, but they highlight the potential of an increasingly popular technology. However, for it to be implemented more widely, some challenges need to be addressed, such as the initial costs of both technology and training, or security, since digital twins increase the attack surface. Another challenge is the interoperability problems that arise when different public administrations establish digital twins and local data spaces. To address this issue, the European Commission has published a guide that helps to identify the main organisational and cultural challenges to interoperability, offering good practices to overcome them.

In short, digital twins offer numerous advantages, such as improved performance or cost reduction. These benefits are driving their adoption in various industries and it is likely that, as current challenges are overcome, digital twins will become an essential tool for optimising processes and improving operational efficiency in an increasingly digitised world.

Many people use apps to get around in their daily lives. Apps such as Google Maps, Moovit or CityMapper provide the fastest and most efficient route to a destination. However, what many users are unaware of is that behind these platforms lies a valuable source of information: open data. By reusing public datasets, such as those related to air quality, traffic or public transport, these applications can provide a better service.

In this post, we will explore how the reuse of open data by these platforms empowers a smarter and more sustainable urban ecosystem.

Google Maps: aggregates air quality information and transport data into GTFS.

More than a billion people use Google Maps every month around the world. The tech giant offers a free, up-to-date world map that draws its data from a variety of sources, some of them open.

One of the functions provided by the app is information about the air quality in the user's location. The Air Quality Index (AQI) is a parameter that is determined by each country or region. The European benchmark can be consulted on this map which shows air quality by geolocated zones in real time.

To display the air quality of the user's location, Google Maps applies a model based on a multi-layered approach known as the "fusion approach". This method combines data from several input sources and weights the layers with a sophisticated procedure. The input layers are:

- Government reference monitoring stations

- Commercial sensor networks

- Global and regional dispersion models

- Dust and smoke fire models

- Satellite information

- Traffic data

- Ancillary information such as surface area

- Meteorology

In the case of Spain, this information is obtained from open data sources such as the Ministry of Ecological Transition and Demographic Challenge, the Regional Ministry of Environment, Territory and Housing of the Xunta de Galicia or the Community of Madrid. Open data sources used in other countries around the world can be found here.
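The weighting procedure that Google Maps actually applies is not described here, so the following is only a minimal sketch of the general idea of combining several input layers: a plain weighted average over whichever layers report a value. The layer names and weights are hypothetical and chosen purely for illustration.

```python
def fuse_estimates(estimates: dict[str, float], weights: dict[str, float]) -> float:
    """Combine per-layer air quality estimates into a single value.

    Assumption: a simple weighted average, not Google's real model.
    Layers that have no positive weight are ignored, and the remaining
    weights are renormalised so that missing layers do not bias the result.
    """
    usable = {name: value for name, value in estimates.items()
              if weights.get(name, 0.0) > 0.0}
    if not usable:
        raise ValueError("no input layer with a positive weight")
    total_weight = sum(weights[name] for name in usable)
    return sum(weights[name] * value for name, value in usable.items()) / total_weight


# Hypothetical weights: reference stations are trusted more than satellite data.
example_weights = {"reference_stations": 3.0, "satellite": 1.0}
example_estimates = {"reference_stations": 40.0, "satellite": 60.0}
fused_value = fuse_estimates(example_estimates, example_weights)
```

With these illustrative numbers the fused value sits closer to the station reading than to the satellite reading, which is the intuition behind weighting official monitoring stations more heavily.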

Another functionality offered by Google Maps to plan the best routes to reach a destination is the information on public transport. These data are provided on a voluntary basis by the public companies providing transport services in each city. In order to make this open data available to the user, it is first dumped into Google Transit and must comply with the open public transport standard GTFS (General Transit Feed Specification).
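To give an idea of what a GTFS feed looks like in practice, the sketch below reads a `stops.txt` file, one of the CSV files that make up a feed. The field names `stop_id`, `stop_name`, `stop_lat` and `stop_lon` come from the GTFS specification, while the sample stops are invented for the example.

```python
import csv
import io

# Invented sample content; a real feed ships this as a stops.txt file in a ZIP.
SAMPLE_STOPS_TXT = """stop_id,stop_name,stop_lat,stop_lon
S1,Example Central Station,40.4000,-3.7000
S2,Example North Stop,40.4500,-3.6900
"""


def read_stops(stops_txt: str) -> dict[str, tuple[str, float, float]]:
    """Parse GTFS stops.txt content into {stop_id: (name, lat, lon)}."""
    reader = csv.DictReader(io.StringIO(stops_txt))
    return {
        row["stop_id"]: (row["stop_name"], float(row["stop_lat"]), float(row["stop_lon"]))
        for row in reader
    }


stops = read_stops(SAMPLE_STOPS_TXT)
```

Because every operator publishes the same file names and columns, a route planner can load feeds from many cities with the same code, which is the main benefit of agreeing on an open standard.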

Moovit: reusing open data to deliver real-time information

Moovit is another of the urban mobility apps most used by Spaniards. It uses open and collaborative data to make it easier for users to plan their journeys by public transport.

Since its launch in 2012, the free-to-download app has offered real-time information on the different transport options. It suggests the best routes to reach the indicated destination, guides users during their journey (how long they have to wait, how many stops are left, when they have to get off, etc.) and provides constant updates in the event of any alteration in the service.

Like other mobility apps, it is also available in offline mode and allows you to save routes and frequent lines in "Favourites". It is also an inclusive solution as it integrates VoiceOver (iOS) or TalkBack (Android) for blind people.

The platform not only leverages open data provided by governments and local authorities, but also collects information from its users, allowing it to offer a dynamic and constantly updated service.

CityMapper: born as a reuser of open mobility data

The CityMapper development team recognises that the application was born with an open DNA that still remains. They reuse open datasets from, for example, OpenStreetMap at global level or RENFE and Cercanías Bilbao at national level. As the application becomes available in more cities, the list of open data reference sources from which it draws information grows.

The platform offers real-time information on public transport routes, including bus, train, metro and bike sharing. It also adds options for walking, cycling and ridesharing. It is designed to provide the most efficient and fastest route to a destination by integrating data from different modes of transport into a single interface.

As we published in the monographic report "Municipal Innovation through Open Data", CityMapper mainly uses open data from local transport authorities, typically using the GTFS (General Transit Feed Specification) standard. However, when this data is not sufficient or accurate enough, CityMapper combines it with datasets generated by the application's own users who voluntarily collaborate. It also uses data enhanced and managed by the work of the company's own local employees. All this data is combined with artificial intelligence algorithms developed to optimise routes and provide recommendations tailored to users' needs.

In conclusion, the use of open data in transport is driving a significant transformation in the mobility sector in cities. Through their contribution to applications, users can access up-to-date and accurate data, plan their journeys efficiently and make informed decisions. Governments, for their part, have taken on the role of facilitators by enabling the dissemination of data through open platforms, optimising resources and fostering collaboration across sectors. In addition, open data has created new opportunities for developers and the private sector, who have contributed with technological solutions such as Google Maps, Moovit or CityMapper. Ultimately, the potential of open data to transform the future of urban mobility is undeniable.

Citizen science is consolidating itself as one of the most relevant sources of reference in contemporary research. This is recognised by the Consejo Superior de Investigaciones Científicas (CSIC), which defines citizen science as a methodology and a means for the promotion of scientific culture in which science and citizen participation strategies converge.

We talked some time ago about the importance of citizen science in society. Today, citizen science projects have not only increased in number, diversity and complexity, but have also driven a significant process of reflection on how citizens can actively contribute to the generation of data and knowledge.

To reach this point, programmes such as Horizon 2020, which explicitly recognised citizen participation in science, have played a key role. More specifically, the chapter "Science with and for society" gave an important boost to this type of initiative in Europe and also in Spain. In fact, as a result of Spanish participation in this programme, as well as in parallel initiatives, Spanish projects have been increasing in size and in their connections with international initiatives.

This growing interest in citizen science also translates into concrete policies. An example of this is the current Spanish Strategy for Science, Technology and Innovation (EECTI), for the period 2021-2027, which includes "the social and economic responsibility of R&D&I through the incorporation of citizen science".

In short, as we commented some time ago, citizen science initiatives seek to encourage a more democratic science that responds to the interests of all citizens and generates information that can be reused for the benefit of society. Here are some examples of citizen science projects that help collect data whose reuse can have a positive impact on society:

AtmOOs Academic Project: Education and citizen science on air pollution and mobility.

In this programme, Thigis developed a citizen science pilot on mobility and the environment with pupils from a school in Barcelona's Eixample district. This project, which is already replicable in other schools, consists of collecting data on student mobility patterns in order to analyse issues related to sustainability.

On the AtmOOs Academic website you can visualise the results of all the editions that have been carried out annually since the 2017-2018 academic year. They show information on the vehicles used by students to go to class and the emissions generated according to school stage.

WildINTEL: Research project on wildlife monitoring in Huelva

The University of Huelva and the State Agency for Scientific Research (CSIC) are collaborating to build a wildlife monitoring system to obtain essential biodiversity variables. To do this, camera traps with remote data capture and artificial intelligence are used.

The wildINTEL project focuses on the development of a monitoring system that is scalable and replicable, thus facilitating the efficient collection and management of biodiversity data. This system will incorporate innovative technologies to provide accurate and objective demographic estimates of populations and communities.

This project, which started in December 2023 and will continue until December 2026, is expected to provide tools and products to improve the management of biodiversity not only in the province of Huelva but throughout Europe.

IncluScience-Me: Citizen science in the classroom to promote scientific culture and biodiversity conservation.

This citizen science project combining education and biodiversity arises from the need to address scientific research in schools. To do this, students take on the role of a researcher to tackle a real challenge: to track and identify the mammals that live in their immediate environment to help update a distribution map and, therefore, their conservation.

IncluScience-Me was born at the University of Cordoba and, specifically, in the Research Group on Education and Biodiversity Management (Gesbio), and has been made possible thanks to the participation of the University of Castilla-La Mancha and the Research Institute for Hunting Resources of Ciudad Real (IREC), with the collaboration of the Spanish Foundation for Science and Technology - Ministry of Science, Innovation and Universities.

The Memory of the Herd: Documentary corpus of pastoral life.

This citizen science project, which has been active since July 2023, aims to gather knowledge and experiences from shepherds and retired shepherds about herd management and livestock farming.

The entity responsible for the programme is the Institut Català de Paleoecologia Humana i Evolució Social, although the Museu Etnogràfic de Ripoll, Institució Milà i Fontanals-CSIC, Universitat Autònoma de Barcelona and Universitat Rovira i Virgili also collaborate.

The programme helps to interpret the archaeological record and contributes to the preservation of knowledge of pastoral practice. In addition, it values the experience and knowledge of older people, work that helps to end the negative connotation of "old age" in a society that gives priority to "youth", so that older people are no longer considered passive subjects but active social subjects.

Plastic Pirates Spain: Study of plastic pollution in European rivers.

This citizen science project, which has been carried out over the last year with young people between 12 and 18 years of age in the communities of Castilla y León and Catalonia, aims to contribute to generating scientific evidence and environmental awareness about plastic waste in rivers.

To this end, groups of young people from different educational centres, associations and youth groups have taken part in sampling campaigns to collect data on the presence of waste and rubbish, mainly plastics and microplastics in riverbanks and water.

In Spain, this project has been coordinated by the BETA Technology Centre of the University of Vic - Central University of Catalonia together with the University of Burgos and the Oxygen Foundation. You can access more information on their website.

These are just some examples of citizen science projects. You can find out more at the Observatory of Citizen Science in Spain, an initiative that brings together a wide range of educational resources, reports and other interesting information on citizen science and its impact in Spain. Do you know of any other projects? Send them to us at dinamizacion@datos.gob.es and we can publicise them through our dissemination channels.

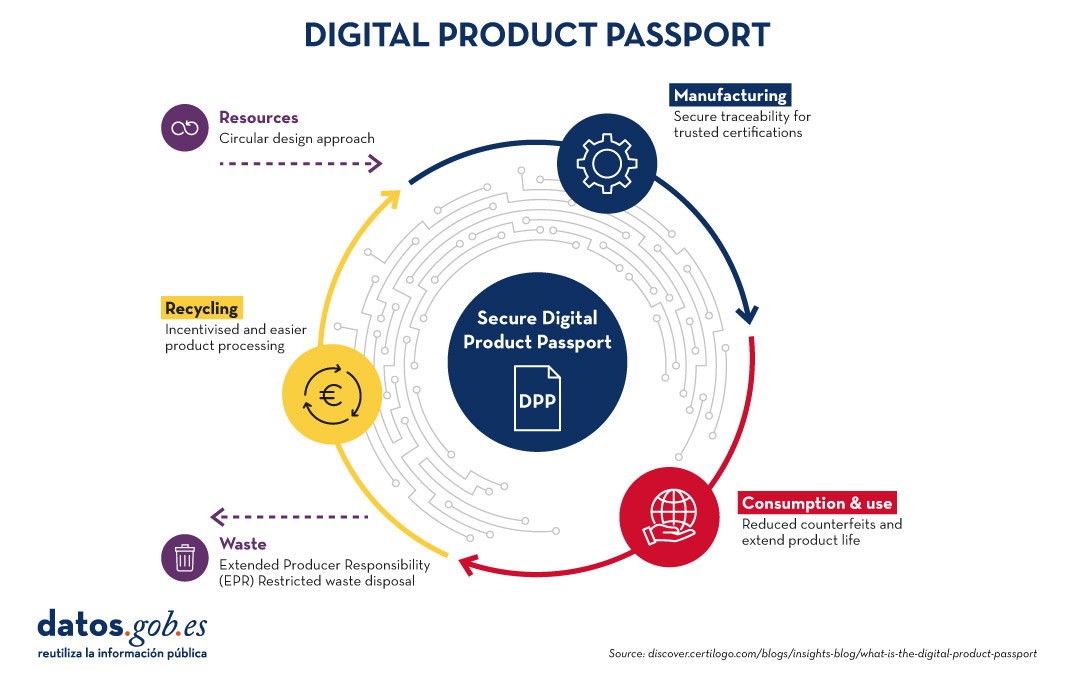

Digital transformation has reached almost every aspect and sector of our lives, and the world of products and services is no exception. In this context, the Digital Product Passport (DPP) concept is emerging as a revolutionary tool to foster sustainability and the circular economy. Accompanied by initiatives such as CIRPASS (Circular Product Information System for Sustainability), the DPP promises to change the way we interact with products throughout their life cycle. In this article, we will explore what DPP is, its origins, applications, risks and how it can affect our daily lives and the protection of our personal data.

What is the Digital Product Passport (DPP)? Origin and importance

The Digital Product Passport is a digital collection of key information about a product, from manufacturing to recycling. This passport allows products to be tracked and managed more efficiently, improving transparency and facilitating sustainable practices. The information contained in a DPP may include details on the materials used, the manufacturing process, the supply chain, instructions for use and how to recycle the product at the end of its life.
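As a rough illustration of what such a collection of information could look like in machine-readable form, the sketch below models a passport as a small record and serialises it to JSON. The field names and the sample garment are hypothetical; they mirror the categories listed above and do not follow any official DPP schema.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class DigitalProductPassport:
    """Hypothetical passport record; not an official DPP data model."""
    product_id: str
    materials: list[str]
    manufacturing_process: str
    supply_chain: list[str]
    instructions_for_use: str
    recycling_instructions: str

    def to_json(self) -> str:
        """Serialise the passport so that it can be linked from a QR code or NFC tag."""
        return json.dumps(asdict(self), ensure_ascii=False, indent=2)


# Invented example values for illustration only.
passport = DigitalProductPassport(
    product_id="EXAMPLE-0001",
    materials=["organic cotton", "recycled polyester"],
    manufacturing_process="cut and sewn in a single facility",
    supply_chain=["fibre supplier", "spinning mill", "garment factory"],
    instructions_for_use="machine wash at 30 degrees",
    recycling_instructions="return to a textile collection point",
)
passport_json = passport.to_json()
```

Keeping the record as structured data, rather than free text, is what would allow manufacturers, consumers and recyclers to read the same passport with different tools.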

The DPP has been developed in response to the growing need to promote the circular economy and reduce the environmental impact of products. The European Union (EU) has been a pioneer in promoting policies and regulations that support sustainability. Initiatives such as the EU's Circular Economy Action Plan have been instrumental in driving the DPP forward. The objectives of this plan are as follows:

- Greater Transparency: Consumers no longer have to guess about the origin of their products and how to dispose of them correctly. With a machine-readable DPP (e.g. QR code or NFC tag) attached to end products, consumers can make informed purchasing decisions and brands can eliminate greenwashing with confidence.

- Simplified Compliance: By creating an audit of events and transactions in a product's value chain, the DPP provides the brand and its suppliers with the necessary data to address compliance demands efficiently.

- Sustainable Production: By tracking and reporting the social and environmental impacts of a product from source to disposal, brands can make data-driven decisions to optimise sustainability in product development.

- Circular Economy: The DPP facilitates a circular economy by promoting eco-design and the responsible production of durable products that can be reused, remanufactured and disposed of correctly.

The following image summarises the main advantages of the digital passport at each stage of the digital product manufacturing process:

CIRPASS as a facilitator of DPP implementation

CIRPASS is a platform that supports the implementation of the DPP. This European initiative aims to standardise the collection and exchange of data on products, facilitating their traceability and management throughout their life cycle. CIRPASS plays a crucial role in creating an interoperable digital framework that connects manufacturers, consumers and recyclers.

DPP applications in various sectors

On 5 March 2024, CIRPASS, in collaboration with the European Commission, organised an event on the future development of the Digital Product Passport. The event brought together stakeholders from different industries and organisations who, with an eminently practical approach, presented and discussed the upcoming regulation and its requirements, possible solutions, examples of use cases, and the obstacles and opportunities for the affected industries and businesses.

The following are the applications of DPP in various sectors as explained at the event:

- Textile industry: It allows consumers to know the origin of garments, the materials used and the working conditions in the factories.

- Electronics: It facilitates the recycling and reuse of components, reducing electronic waste.

- Automotive: It assists in tracking parts and materials, promoting the repair and recycling of vehicles.

- Food: It provides information on food traceability, ensuring safety and sustainability in the supply chain.

The impact of the DPP on citizens' lives

But what impact will this new paradigm have on our daily lives, and how will it affect us as end users of products and services such as those mentioned above? We will focus on four basic cases: informed consumers in any field, ease of product repair, trust and transparency, and efficient recycling.

The DPP provides consumers with access to detailed information about the products they buy, such as their origin, materials and production practices. This allows consumers to make more informed choices and opt for products that are sustainable and ethical. For example, a consumer can choose a garment made from organic materials and produced under fair labour conditions, thus promoting responsible and conscious consumption.

Similarly, one of the great benefits of the DPP is the inclusion of repair guides within the digital passport. This means that consumers can easily access detailed instructions on how to repair a product instead of discarding it when it breaks down. For example, if an appliance stops working, the DPP can provide a step-by-step repair manual, allowing users to fix it themselves or take it to a technician with the necessary information. This not only extends the lifetime of products, but also reduces e-waste and promotes sustainability.

Also, access to detailed and transparent product information through the DPP can increase consumers' trust in brands. Companies that provide a complete and accurate DPP demonstrate their commitment to transparency and accountability, which can enhance their reputation and build customer loyalty. In addition, consumers who have access to this information are better able to make responsible purchasing decisions, thus encouraging more ethical and sustainable consumption habits.

Finally, the DPP facilitates effective recycling by providing clear information on how to break down and reuse the materials in a product. For example, a citizen who wishes to recycle an electronic device can consult the DPP to find out which parts can be recycled and how to separate them properly. This improves the efficiency of the recycling process and ensures that more materials are recovered and reused instead of ending up in landfill, contributing to a circular economy.

Risks and challenges of the DPP

As a novel technology, and as part of the digital transformation taking place across product sectors, the DPP also presents certain risks and challenges, such as:

- Data Protection: The collection and storage of large amounts of data can put consumers' privacy at risk if not properly managed.

- Security: Digital data is vulnerable to cyber-attacks, which requires robust security measures.

- Interoperability: Standardisation of data across different industries and countries can be complex, making it difficult to implement the DPP on a large scale.

- Costs: Creating and maintaining digital passports can be costly, especially for small and medium-sized enterprises.

Data protection implications

The implementation of the DPP and systems such as CIRPASS implies careful management of personal data. It is essential that companies and digital platforms comply with data protection regulations, such as the EU's General Data Protection Regulation (GDPR). Organisations must ensure that the data collected is used in a transparent manner and with the explicit consent of consumers. In addition, advanced security measures must be implemented to protect the integrity and confidentiality of the data.
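One simple way to picture this care in practice is to keep personal data out of the version of a passport that is shared publicly. The sketch below filters a record against a list of fields treated as personal. The field names and the list itself are hypothetical, and filtering of this kind is only an illustration of data minimisation; it does not by itself make a system GDPR-compliant.

```python
# Hypothetical set of fields treated as personal data in this sketch.
PERSONAL_FIELDS = {"owner_name", "owner_email", "purchase_location"}


def public_view(record: dict) -> dict:
    """Return a copy of the record without the fields listed as personal."""
    return {
        key: value
        for key, value in record.items()
        if key not in PERSONAL_FIELDS
    }


# Made-up record, for illustration only.
record = {
    "product_id": "EXAMPLE-0001",
    "materials": ["organic cotton"],
    "owner_email": "someone@example.com",
}

print(public_view(record))
```

The original record is left unchanged, so the full data can stay under stricter access controls while only the filtered copy is exchanged.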

Relationship with European Data Spaces

The European Data Spaces are an EU initiative to create a single market for data, promoting innovation and the digital economy. The DPP and CIRPASS are aligned with this vision, as they encourage the exchange of information between different actors in the economy. Data interoperability is essential for the success of the European Data Spaces, and the DPP can contribute significantly to this goal by providing structured and accessible product data.

Conclusion

In conclusion, the Digital Product Passport and the CIRPASS initiative represent a significant step towards a more circular and sustainable economy. Through the collection and exchange of detailed product data, these systems can improve transparency, encourage responsible consumption practices and reduce environmental impact. However, their implementation requires overcoming challenges related to data protection, security and interoperability. As we move towards a more digitised future, the DPP and CIRPASS have the potential to transform the way we interact with products and contribute to a more sustainable world.

Content prepared by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and the point of view reflected in this publication are the sole responsibility of its author.