Few abilities are as characteristic of human beings as language. According to the Aristotelian school, humans are rational animals who pursue knowledge for the mere sake of knowing. Without going into deep philosophical considerations that far exceed the purpose of this space for dissemination, we can say that the search for knowledge and its accumulation would not be possible without human language. Hence, in this 21st century - the century of the explosion of Artificial Intelligence (AI) - a large part of the effort is focused on supporting, complementing and increasing human capabilities related to language.

Introduction

In this space, we have repeatedly discussed the discipline of natural language processing (NLP). We have approached it from different points of view, always practical, technical and data-related, since texts are the real fuel of the technologies that support this discipline. We have produced monographs on the subject, introducing the basics of the technology and including practical examples. We have also commented on current events and analysed the latest technological trends and major achievements in natural language processing. In almost all publications on the subject, we have mentioned tools, libraries and technological products that, in one way or another, support the different processes and applications of natural language. From the creation of reasoned summaries of works and long texts to the artificial generation of publications or of code for programmers, all of these applications rely on libraries, packages or frameworks for developing artificial intelligence applications in the field of natural language processing.

On previous occasions we have described OpenAI's GPT-3 model and the Megatron-Turing NLG model from Microsoft and NVIDIA as some of the current leaders in analytical power and in the quality of the results they generate. However, most of the time we end up talking about algorithms or libraries that, given their complexity, are reserved for a small technical community of developers, academics and professionals within the NLP discipline. That is, if we want to undertake a new project related to natural language processing, we must start from low-level libraries and then add layers of functionality until our project or application is ready for use. Normally, NLP libraries focus on a small part of the path of a software project (mainly the coding task), and it is the developers or software teams who must plan and add the remaining functional and technical layers (testing, packaging, publishing, deployment to production, operations, etc.) to turn the output of that NLP library into a complete technological product or application.

The challenge of launching an application

Let's take an example. Suppose we want to build an application that takes a text, such as our electricity bill, and returns a simple summary of its content. Electricity bills and employee pay slips are not exactly easy documents for the general public to understand. The difficulty usually lies in the highly technical terminology and, why not say it, in the limited interest some organisations show in making basic information, such as what we pay for electricity or what we earn as employees, easier for citizens to understand.

Back to the topic at hand. To build a software application for this purpose, we need an algorithm that understands our bill. First of all, the NLP algorithm has to analyse the text and detect the keywords and their relationships (in technical terminology, detecting entities and recognising them in context). That is, it has to detect the key entities, such as the energy consumption, its units (kWh) and the relevant time periods (this month's consumption, last month's consumption, daily consumption, consumption history, etc.).

Once the relevant entities and their relationships have been detected (and the rest discarded), there is still a lot to do. In a software project in the field of NLP, named-entity recognition (NER) is only a small part of an application that is ready to be used by a person or a system. This is where we introduce you to the SpaCy software library.
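To make the entity-detection step on the bill more concrete, here is a minimal sketch, assuming SpaCy v3 is installed. It uses a blank English pipeline with a rule-based entity ruler, so no trained model is needed. The labels `ENERGY` and `PERIOD`, the patterns and the sample sentence are all hypothetical, chosen only for illustration; a real application would more likely rely on a trained NER model.

```python
import spacy

# Blank English pipeline: tokenizer only, so no model download is required.
nlp = spacy.blank("en")

# Rule-based entity ruler. Labels and patterns are hypothetical examples.
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    # A number followed by the unit "kWh", e.g. "312 kWh".
    {"label": "ENERGY", "pattern": [{"LIKE_NUM": True}, {"LOWER": "kwh"}]},
    # Very simple billing-period expressions: "this month", "last month".
    {"label": "PERIOD", "pattern": [{"LOWER": {"IN": ["this", "last"]}}, {"LOWER": "month"}]},
])

# Hypothetical sentence standing in for a line of an electricity bill.
bill_text = "You used 312 kWh this month, compared with 290 kWh last month."
doc = nlp(bill_text)

# Collect each detected entity with its label.
entities = [(ent.text, ent.label_) for ent in doc.ents]
print(entities)
```

Detecting the consumption figures and the periods is only the first step; summarising them in plain language for the user would still have to be built on top.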

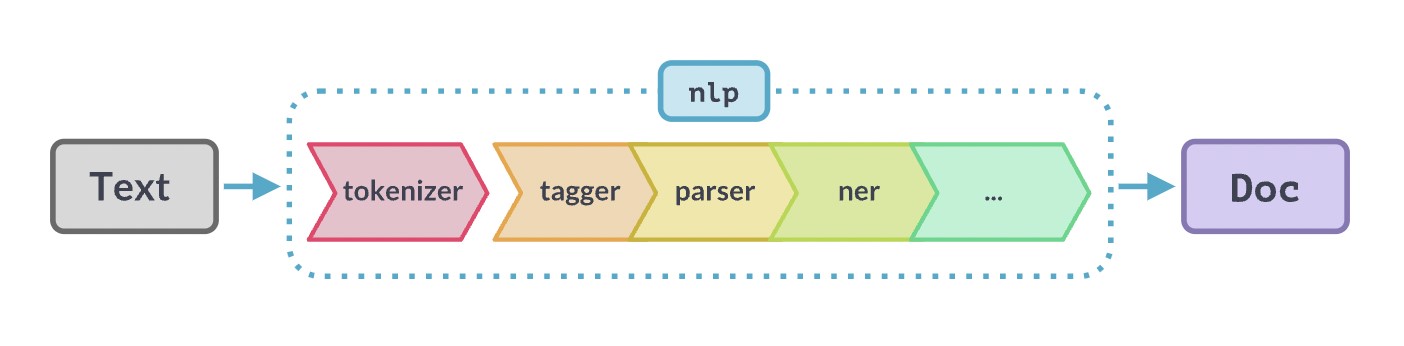

Example of NLP flow or pipeline from the moment we start from the original text we want to analyse until we obtain the final result, either a rich text or a web page with help or explanations to the user. Original source: https://SpaCy.io/

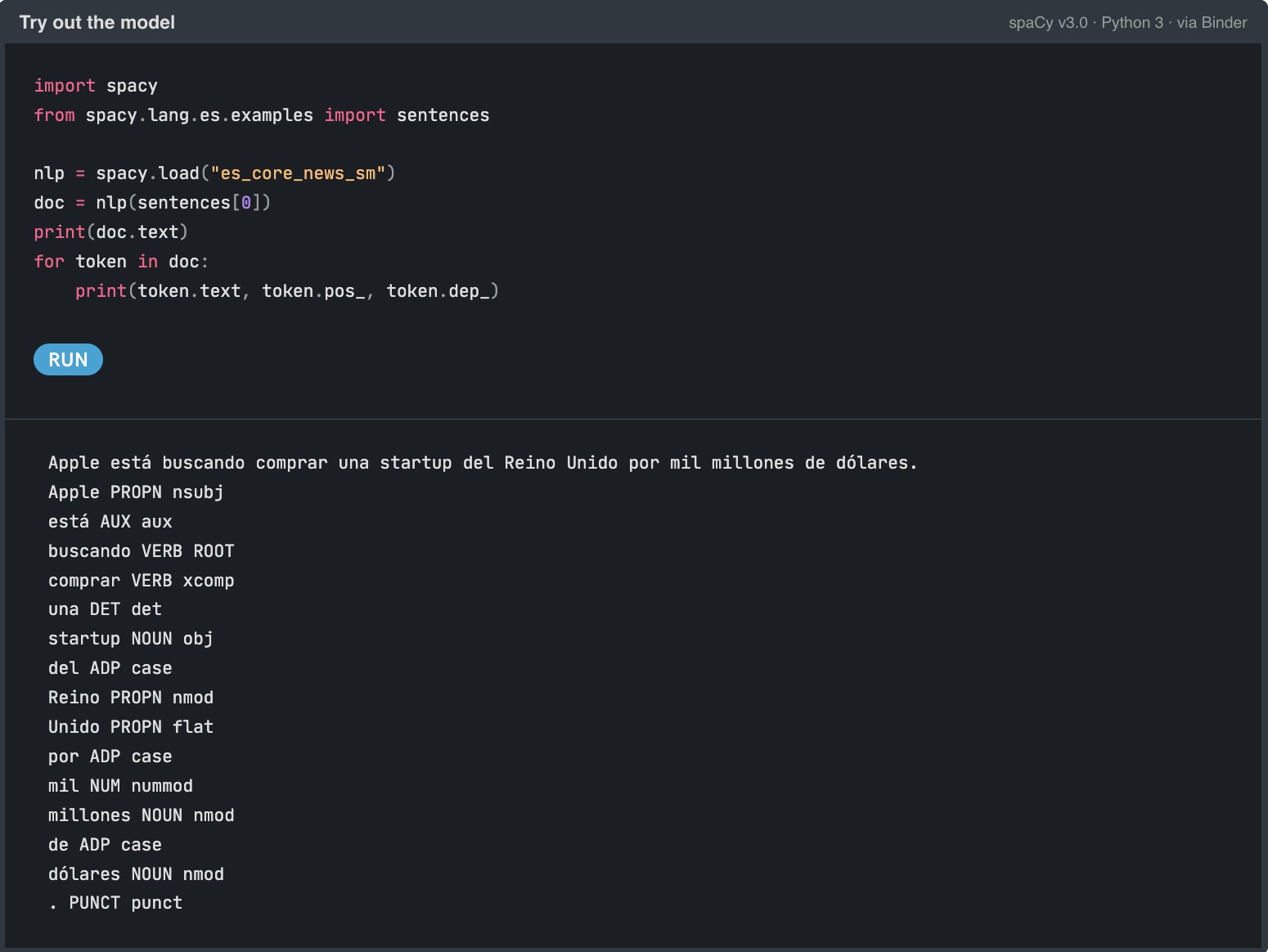

Example of the use of SpaCy's es_core_news_sm pipeline to identify entities in a Spanish sentence.
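The kind of call behind a figure like this can be sketched as follows. This assumes SpaCy v3 and that the es_core_news_sm pipeline has been downloaded beforehand; if it has not, the sketch falls back to a blank Spanish pipeline, which tokenizes the text but detects no entities. The sentence and the helper `extract_entities` are hypothetical and are not taken from the figure.

```python
import spacy


def extract_entities(nlp, text):
    """Return (text, label) pairs for every entity the pipeline finds."""
    doc = nlp(text)
    return [(ent.text, ent.label_) for ent in doc.ents]


try:
    # Requires a prior download: python -m spacy download es_core_news_sm
    nlp = spacy.load("es_core_news_sm")
except OSError:
    # Model not installed: a blank pipeline tokenizes but finds no entities.
    nlp = spacy.blank("es")

# Hypothetical example sentence.
sentence = "Alejandro trabaja en Madrid para una empresa de energía."
for ent_text, ent_label in extract_entities(nlp, sentence):
    print(ent_text, ent_label)
```

The labels that are printed depend on the pipeline version, so they are not listed here.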

What is SpaCy?

SpaCy aims to make it easier to release natural language software applications into production (that is, to make an application ready for use by the end consumer). It is an open source software library designed for advanced natural language processing tasks, written in Python and Cython (C language extensions for Python that allow very efficient low-level programming). It is distributed under the MIT license and the entire project is accessible through its GitHub repository.

The advantages of SpaCy

But what makes SpaCy different? SpaCy was created to make it easier to build real products. That is to say, it is not just a library for the most technical, lowest-level layers of a software application, which range from the innermost algorithms to the most visual interfaces. It also takes into account the practical aspects of a real software product, such as:

- The large data loads that need to be processed (imagine what is involved in loading, for example, all the product reviews of a large e-commerce site).

- The speed of execution, since when we have a real application, we need the experience to be as smooth as possible and we can't put up with long waiting times between algorithm executions.

- The packaging of NLP functionality (such as NER) so that it is ready to deploy on one or more production servers. SpaCy not only provides low-level code tools, but also supports the process from the moment we build a part of a software application (compile and build) until we integrate this algorithmic part with other parts of the application, such as databases or end-user interfaces.

- The optimisation of NLP models so that they can easily run on standard (CPU-based) servers without the need for graphics processors (GPUs).

- Integrated graphical visualisation tools to facilitate debugging or development of new functionality.

- Its fantastic documentation, from its most introductory website to its community on GitHub, which greatly facilitates rapid adoption among the development community.

- The large number of pre-trained models and pipelines (73 pipelines) in 22 different languages, in addition to support for more than 66 languages. Spanish is a particular case in point, since it is difficult to find models optimised for Spanish in other libraries and tools.
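The first two points in the list, data volume and speed, can be illustrated with a small sketch of batch processing, assuming SpaCy v3. The generator `review_stream` is a hypothetical stand-in for a real source of product reviews, and the blank pipeline is used only so that the example runs without downloading a model.

```python
import spacy

# Tokenizer-only pipeline; in practice a trained pipeline would be loaded here.
nlp = spacy.blank("en")


def review_stream(n=1000):
    """Hypothetical stand-in for a large stream of product reviews."""
    for i in range(n):
        yield f"Review {i}: the product arrived on time and works well."


# nlp.pipe consumes the iterable lazily and processes the texts in batches,
# which is usually faster than calling nlp(text) once per document.
token_counts = [len(doc) for doc in nlp.pipe(review_stream(), batch_size=100)]
print(len(token_counts), "reviews processed")
```

The `batch_size` value here is arbitrary. `nlp.pipe` also accepts an `n_process` argument to spread the work over several processes, which may help on multi-core CPU servers.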

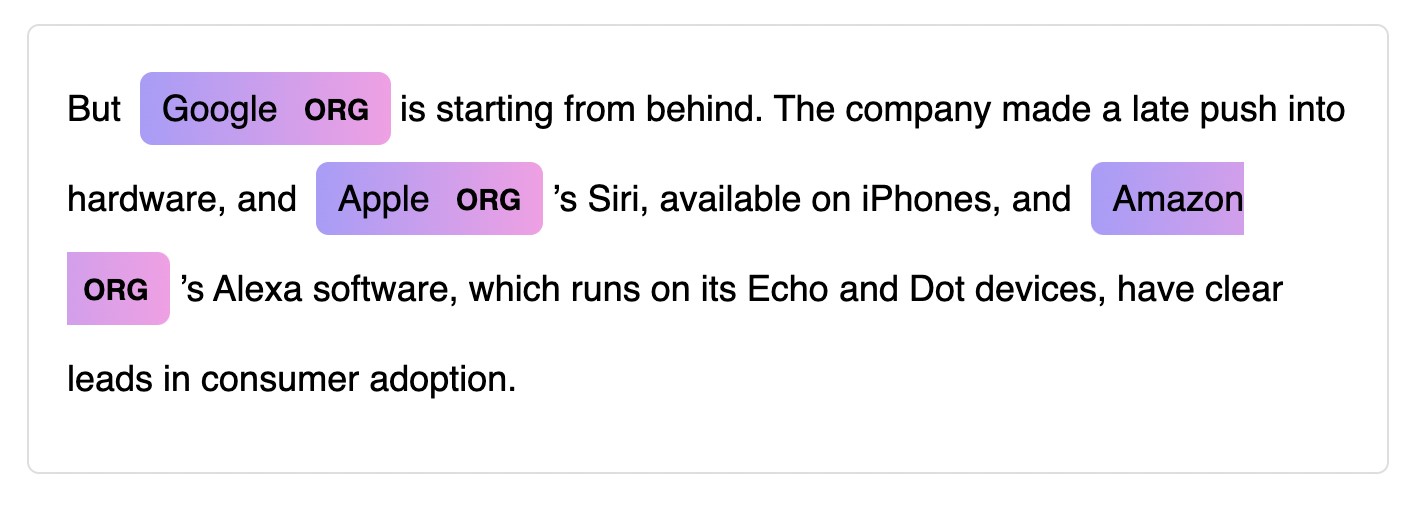

Example of a graphical entity viewer. Original source: https://SpaCy.io/
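An entity view of this kind can be produced with SpaCy's built-in `displacy` module. The following is a minimal sketch, assuming SpaCy v3; the sentence, the patterns and the labels are hypothetical, and a rule-based entity ruler replaces a trained model so that the example runs without any download.

```python
import spacy
from spacy import displacy

# Blank pipeline plus a rule-based entity ruler (hypothetical patterns).
nlp = spacy.blank("en")
ruler = nlp.add_pipe("entity_ruler")
ruler.add_patterns([
    {"label": "LOC", "pattern": "Madrid"},
    {"label": "LOC", "pattern": "Spain"},
])

doc = nlp("Madrid is the capital of Spain.")

# style="ent" highlights entities; jupyter=False makes render return the
# HTML markup as a string instead of displaying it in a notebook.
html = displacy.render(doc, style="ent", page=True, jupyter=False)

# The string can be written to a file and opened in a browser.
print(html[:80])
```

During development, `displacy.serve(doc, style="ent")` can be used instead to start a small local web server that shows the same view.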

To conclude this post: if you are a beginner just starting out in the world of NLP, SpaCy makes it easy to get started and comes with extensive documentation, including a 101 guide for beginners, a free interactive online course and a variety of video tutorials. If you are an experienced developer or part of an established software development team and want to build a final production application, SpaCy is designed specifically for production use and allows you to create and train powerful NLP pipelines and package them for easy deployment. Finally, if you are looking for alternatives to your existing NLP solution (new NLP models, more flexibility and agility in your production deployments, or performance improvements), SpaCy allows you to customise your solution, test different architectures and easily combine popular existing frameworks such as PyTorch or TensorFlow.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and views expressed in this publication are the sole responsibility of the author.

Hello, Alejandro:

SpaCy is a tremendously powerful library, as you rightly point out in the article, and for that very reason we may end up using a sledgehammer to crack a nut in cases where the need is much simpler, for example detecting "reasonable" similarities between company or personal names ("ALTEA INTERNATIONAL", "ALTEA INT" or "ALTA INTERNAT.") when running searches or processing the forms that reach us through the Registry.

For these "simple" situations there are alternatives that are easier to apply (e.g. https://github.com/seatgeek/thefuzz), which use metrics such as the Levenshtein distance instead of requiring the download of language models, and which offer more than acceptable results.

In any case, the most suitable tool has to be chosen for each problem.

Best regards, and congratulations on the article :-)

Hello Juan Andrés. First of all, thank you very much for your interest in the post and for your comment. What you say seems very apt to me, and it is true that part of the success of technological solutions lies in finding the most suitable technology (a library, in this case). I did not know the repo you reference, but thank you very much for the contribution. This is a path we all have to walk together.

Many thanks and warm regards.