To speak of the public domain is to speak of free access to knowledge, shared culture and open innovation. The concept has become a key piece in understanding how information circulates and how the common heritage of humanity is built.

In this post we will explore what the public domain means and show you examples of repositories where you can discover and enjoy works that already belong to everyone.

What is the public domain?

Surely at some point in your life you have seen the image of Mickey Mouse at the helm of a steamboat. It is a characteristic image of the Disney company that you can now use freely in your own works. This is because this first version of Mickey (Steamboat Willie, 1928) entered the public domain in January 2024. Be careful, though: only the version from that date is "free"; subsequent adaptations remain protected, as we will explain later.

When we talk about the public domain, we refer to the body of knowledge, information, works and creations (books, music, films, photos, software, etc.) that are not protected by copyright. Because of this, anyone can reproduce, copy, adapt and distribute them without having to ask permission or pay for licenses. However, the moral rights of the author, which are inalienable and do not expire, must always be respected. These rights include respect for the authorship and integrity of the work*.

The public domain, therefore, shapes the cultural space where works become the common heritage of society, which entails multiple benefits:

- Free access to culture and knowledge: any citizen can read, watch, listen to or download these works without paying for licenses or subscriptions. This favors education, research and universal access to culture.

- Preservation of memory and heritage: the public domain ensures that an important part of our history, science and art remains accessible to present and future generations, without being limited by legal restrictions.

- Encourages creativity and innovation: artists, developers, companies, etc. can reuse and mix works from the public domain to create new products (such as adaptations, new editions, video games, comics, etc.) without fear of infringing rights.

- Technological boost: archives, museums and libraries can freely digitise and disseminate their holdings in the public domain, creating opportunities for digital projects and the development of new tools. For example, these works can be used to train artificial intelligence models and natural language processing tools.

What works and elements belong to the public domain, according to Spanish law?

In the public domain we find both content whose copyright has expired and content that has never been protected. Let's see what Spanish legislation says about it:

Works whose copyright protection has expired.

To know whether a work belongs to the public domain, we must look at the date of its author's death. In Spain there is a turning point: 1987. From that year on, according to the Intellectual Property Law, artistic works enter the public domain 70 years after the death of their author. However, authors who died before that year are subject to the 1879 Law, under which the term was generally 80 years, with exceptions.
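As a rough illustration of the term rule described above, the following Python sketch estimates the first year in which an author's works would be free to use. It is a simplification under stated assumptions: the 1987 turning point is reduced to a plain year comparison, the exceptions mentioned for the 1879 Law are ignored, and the term is assumed to run from 1 January of the year after the author's death. The function and constant names are hypothetical.

```python
from datetime import date
from typing import Optional

# Assumption: the turning point is simplified to the year 1987, as in the text.
CUTOFF_YEAR = 1987
TERM_AFTER_CUTOFF = 70   # years after death under the current law
TERM_BEFORE_CUTOFF = 80  # general term under the 1879 Law (exceptions ignored)


def public_domain_year(death_year: int) -> int:
    """Return the first calendar year in which the works are in the public domain."""
    term = TERM_BEFORE_CUTOFF if death_year < CUTOFF_YEAR else TERM_AFTER_CUTOFF
    # Assumption: the term is counted from 1 January of the year after death,
    # so the works become free on 1 January of death_year + term + 1.
    return death_year + term + 1


def is_public_domain(death_year: int, today: Optional[date] = None) -> bool:
    """Check whether the works are already in the public domain on a given day."""
    today = today or date.today()
    return today.year >= public_domain_year(death_year)


if __name__ == "__main__":
    for year in (1936, 1939, 1990):
        print(year, "->", public_domain_year(year))
```

Under these assumptions, an author who died in 1939 yields 2020 and one who died in 1936 yields 2017, while an author who died in 1990 falls under the 70-year term and yields 2061.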

Only "original literary, artistic or scientific" creations that involve a sufficient level of creativity are protected, regardless of their medium (paper, digital, audiovisual, etc.). This ranges from books, musical compositions, theatrical, audiovisual or pictorial works and sculptures to graphics, maps and designs related to topography, geography and science, as well as computer programs, among others.

It should be noted that translations and adaptations, revisions, updates and annotations; compendiums, summaries and extracts; musical arrangements, collections of other people's works, such as anthologies or any transformations of a literary, artistic or scientific work, are also subject to intellectual property. Therefore, a recent adaptation of Don Quixote will have its own protection.

Works that are not eligible for copyright protection.

As we saw, not everything that is produced can be covered by copyright. Some examples are:

- Official documents: laws, decrees, judgments and other official texts are not subject to copyright. They are considered too relevant to public life to be restricted, and are therefore in the public domain from the moment of publication.

- Works voluntarily transferred: the rights holders themselves can decide to release their works before the legal term expires. For this there are tools such as the Creative Commons CC0 license, which makes it possible to renounce protection and make the work directly available to everyone.

- Facts and Information: Copyright does not cover facts or data. Information and events are common heritage and can be used freely by anyone.

Europeana and its defence of the public domain

Europeana is Europe's great digital library, a project promoted by the European Union that brings together millions of cultural resources from archives, museums and libraries throughout the territory. Its mission is to facilitate free and open access to European cultural heritage, and in that sense the public domain is at the heart of it. Europeana advocates that works that have lost their copyright protection should remain unrestricted, even when digitized, because they are part of the common heritage of humanity.

As a result of its commitment, it has recently updated its Public Domain Charter, which includes a series of essential principles and guidelines for a robust and vibrant public domain in the digital environment. Among other issues, it mentions how technological advances and regulatory changes have expanded the possibilities of access to cultural heritage, but have also generated risks for the availability and reuse of materials in the public domain. Therefore, it proposes eight measures to protect and strengthen the public domain:

- Advocate against extending the terms or scope of copyright, which limits citizens' access to shared culture.

- Oppose attempts to exert undue control over free materials, avoiding licenses, fees, or contractual restrictions that reconstitute rights.

- Ensure that digital reproductions do not generate new layers of protection, including photos or 3D models, unless they are original creations.

- Avoid contracts that restrict reuse: Financing digitalisation should not translate into legal barriers.

- Clearly and accurately label works in the public domain, providing data such as author and date to facilitate identification.

- Balance access with other legitimate interests, respecting laws, cultural values and the protection of vulnerable groups.

- Safeguard the availability of heritage, in the face of threats such as conflicts, climate change or the fragility of digital platforms, promoting sustainable preservation.

- Offer high-quality, reusable reproductions and metadata, in open, machine-readable formats, to enhance their creative and educational use.

Other platforms to access works in the public domain

In addition to Europeana, in Spain we have an ecosystem of projects that make cultural heritage in the public domain available to everyone:

- The National Library of Spain (BNE) plays a key role: every year it publishes the list of Spanish authors who enter the public domain and offers access to their digitized works through BNE Digital, a portal that allows you to consult manuscripts, books, engravings and other historical materials. Thus, we can find works by authors of the stature of Antonio Machado or Federico García Lorca. In addition, the BNE publishes its dataset with information on authors in the public domain as open data.

- The Virtual Library of Bibliographic Heritage (BVPB), promoted by the Ministry of Culture, brings together thousands of digitized ancient works, ensuring that fundamental texts and materials of our literary and scientific history can be preserved and reused without restrictions. It includes digital facsimile reproductions of manuscripts, printed books, historical photographs, cartographic materials, sheet music, maps, etc.

- Hispana acts as a large national aggregator by connecting digital collections from Spanish archives, libraries, and museums, offering unified access to materials that are part of the public domain. To do this, it collects and makes accessible the metadata of digital objects, allowing these objects to be viewed through links that lead to the pages of the owner institutions.

Together, all these initiatives reinforce the idea that the public domain is not an abstract concept, but a living and accessible resource that expands every year and that allows our culture to continue circulating, inspiring and generating new forms of knowledge.

Thanks to Europeana, BNE Digital, the BVPB, Hispana and many other projects of this type, today we have the possibility of accessing an immense cultural heritage that connects us with our past and propels us towards the future. Each work that enters the public domain expands opportunities for learning, innovation and collective enjoyment, reminding us that culture, when shared, multiplies.

*In accordance with the Intellectual Property Law, the integrity of the work refers to preventing any distortion, modification, alteration or attack against it that harms the author's legitimate interests or reputation.

Christmas returns every year as an opportunity to stop, breathe and reconnect with what inspires us. From datos.gob.es, we take advantage of these dates to share our traditional letter of recommendations: a selection of books that invite us to better understand the digital world, reflect on the impact of artificial intelligence, improve our professional skills and look at the ethical dilemmas that mark our time.

In this post, we compile works on technological ethics, digital society, AI engineering, quantum computing, machine learning, and data governance. A varied list, with titles in both Spanish and English, which combines academic rigour, dissemination and critical vision. If you are looking for a book that will make you grow, surprise a loved one or simply feed your curiosity, here you will find options for all tastes.

The ethics of artificial intelligence by Sara Degli-Esposti

- What is it about? This specialist in privacy and data analysis offers a clear and rigorous vision of the risks and opportunities of AI applied to areas such as health, safety, public policy or digital communication. Her proposal stands out for integrating legal, socio-technical and fundamental rights perspectives.

- Who is it for? Readers looking for a solid introduction to the international debate on AI ethics and regulation.

Available for free here: https://ciec.edu.co/wp-content/uploads/2024/12/LA-ETICA-DE-LA-INTELIGENCIA-ARTIFICIAL.pdf

Understand Technology by Nate Gentile

- What is it about? In this book, the popularizer and specialist in hardware and digital culture Nate Gentile debunks myths, explains technical concepts in an accessible way and offers a critical look at how the technology we use every day really works. From processor performance and system architectures to the business models of large platforms, the book combines technical rigor with a relatable style, accompanied by practical examples and anecdotes from the technology community.

- Who is it for? Curious readers who want to better understand the inner workings of devices, professionals who are looking for an informative but precise approach, and anyone who wants to lose their fear of technology and understand it more consciously.

Clicks Against Humanity by James Williams

- What is it about? This journalistic work analyzes how digital platforms shape our attention, our beliefs and our decisions. Through true stories, the book shows the impact of algorithmic design on politics, public opinion, and daily life. An incisive and timely read, especially at a time of growing concern about misinformation and the attention economy.

- Who is it for? Those who want to understand how platforms work and what effects they can have on society.

The Spring of Artificial Intelligence by Carmen Torrijos and José Carlos Sánchez

- What is it about? Based on everyday examples, the authors explain what language models really are, how technologies such as ChatGPT or computer vision work, what transformations they are generating in sectors such as education, creativity or communication, and what their current limits are. In addition, they provide a critical reflection on the ethical, social and labour challenges associated with this new wave of innovation.

- Who is it for? Readers who want to understand the current phenomenon of AI without the need for technical knowledge, teachers and professionals looking to contextualize its impact on their sector, and anyone interested in understanding what is behind the generative AI boom and how it can influence our immediate future.

Designing Machine Learning Systems by Chip Huyen

- What is it about? It's a modern, practical primer that explains, from start to finish, how to design, deploy, and maintain robust machine learning systems. Chip Huyen combines technical expertise with informative clarity, covering everything from data engineering to model monitoring and MLOps. A highly valued work in the industry.

- Who is it for? Technical professionals, teams implementing AI-based products, and students who want to understand how real systems are built in production.

Ethics in Artificial Intelligence and Information Technologies by Gabriela Arriagada-Bruneau, Claudia López and Marcelo Mendoza

- What is it about? This book addresses one of the great challenges of our time: how to develop AI systems that respect people and society. The authors analyze principles of equity, transparency, accountability, and governance, combining philosophical foundations with practical examples. Its Ibero-American approach is particularly valuable in understanding how these challenges affect this region.

- Who is it for? Students, public officials, technology professionals and anyone who wants to understand the ethical dilemmas behind the algorithms we use every day.

Generative Deep Learning: Teaching Machines to Paint, Write, Compose, and Play by David Foster

- What is it about? This work, which is already a classic in its field, introduces the most important generative architectures (GANs, VAEs, diffusion models) with examples and reproducible code. The second edition updates concepts and provides new practical cases related to text, sound and image.

- Who is it for? Those who want to learn how to create generative models from scratch or understand the technologies behind automatic content creation.

Quantum Supremacy by Michio Kaku

- What is it about? Quantum computing has ceased to be science fiction and has become a global strategic field. Michio Kaku, a renowned popularizer, explains clearly what a qubit is, how quantum computers work, what limits they present and what transformations they will promote in cryptography, chemical simulation or artificial intelligence.

- Who is it for? Ideal for science lovers, the technologically curious and readers who want to peek into the future of computing without the need for advanced mathematical knowledge.

Data Governance based on UNE specifications by Ismael Caballero, Fernando Gualo and Mario G. Piattini

- What is it about? This book is a complete guide to understanding and applying UNE standards relating to data governance in organisations. It clearly explains how to structure roles, processes, policies, and controls to manage data as a strategic asset. It includes case studies, maturity models, implementation examples and guidance to align governance with international standards and with the real needs of the public and private sectors. A particularly useful resource at a time when data quality, traceability and interoperability are critical to driving digital transformation.

- Who is it for? Chief data officers (CDOs), analytics and architecture teams, government professionals, consultants, and any organization that wants to implement a formal data governance model based on recognized standards.

While we'd love to include many more titles, this selection is already a great starting point for exploring the big themes that will shape the digital transformation of the coming years. If you feel like giving knowledge as a gift this holiday season, these works will be a sure hit: they combine current affairs, critical reflection, practical applications and a broad look at the technological future.

Remember that, although here we mention titles that you can find in online bookstores, we always encourage you to check your neighborhood bookstore first. It's a great way to support small businesses and help keep the cultural ecosystem alive and vibrant.

Would you recommend another title?

At datos.gob.es we love to discover new readings. If you know of a book on data, AI, technology or digital society that deserves to be in future editions, you can share it in the comments or write to us through this form. Happy holidays and happy reading!

Artificial intelligence (AI) assistants are already part of our daily lives: we ask them the time, how to get to a certain place or we ask them to play our favorite song. And although AI, in the future, may offer us infinite functionalities, we must not forget that linguistic diversity is still a pending issue.

In Spain, where Spanish coexists with co-official languages such as Basque, Catalan, Valencian and Galician, this issue is especially relevant. The survival and vitality of these languages in the digital age depends, to a large extent, on their ability to adapt and be present in emerging technologies. Currently, most virtual assistants, automatic translators or voice recognition systems do not understand all the co-official languages. However, did you know that there are collaborative projects to ensure linguistic diversity?

In this post we tell you about the approach and the greatest advances of some initiatives that are building the digital foundations necessary for the co-official languages in Spain to also thrive in the era of artificial intelligence.

ILENIA, the coordinator of multilingual resource initiatives in Spain

The models that we are going to see in this post share a focus because they are part of ILENIA, a state-level coordinator that connects the individual efforts of the autonomous communities. This initiative brings together the projects of BSC-CNS (AINA), CENID (VIVES), HiTZ (NEL-GAITU) and the University of Santiago de Compostela (NÓS), with the aim of generating digital resources that allow the development of multilingual applications in the different languages of Spain.

The success of these initiatives depends fundamentally on citizen participation. Through platforms such as Mozilla's Common Voice, any speaker can contribute to the construction of these linguistic resources through different forms of collaboration:

- Read speech: collects different ways of speaking through voice donations in which participants read a specific text aloud.

- Spontaneous speech: creates real and organic datasets from spoken answers to prompts.

- Text in the language: participants collaborate in the transcription of audio recordings or contribute textual content, suggesting new phrases or questions to enrich the corpora.

All resources are published under free licenses such as CC0, allowing them to be used free of charge by researchers, developers and companies.

The challenge of linguistic diversity in the digital age

Artificial intelligence systems learn from the data they receive during their training. To develop technologies that work correctly in a specific language, it is essential to have large volumes of data: audio recordings, text corpora and examples of real use of the language.

In other publications of datos.gob.es we have addressed the functioning of foundational models and initiatives in Spanish such as ALIA, trained with large text corpora such as those of the Royal Spanish Academy.

Both posts explain why language data collection is not a cheap or easy task. Technology companies have invested massively in compiling these resources for languages with large numbers of speakers, but Spanish co-official languages face a structural disadvantage. This has led to many models not working properly or not being available in Valencian, Catalan, Basque or Galician.

However, there are collaborative and open data initiatives that allow the creation of quality language resources. These are the projects that several autonomous communities have launched, marking the way towards a multilingual digital future.

On the one hand, the Nós project in Galicia creates oral and conversational resources in Galician with all the accents and dialectal variants to facilitate integration into tools such as GPS, voice assistants or ChatGPT. A similar purpose is shared by Aina in Catalonia, which also offers an academic platform and a laboratory for developers, and by Vives in the Valencian Community. In the Basque Country there is also the Euskorpus project, which aims to build a quality text corpus in Basque. Let's look at each of them.

Proyecto Nós, a collaborative approach to digital Galician

The project has already developed three operational tools: a multilingual neural translator, a speech recognition system that converts speech into text, and a speech synthesis application. These resources are published under open licenses, guaranteeing their free and open access for researchers, developers and companies. These are its main features:

- Promoted by: the Xunta de Galicia and the University of Santiago de Compostela.

- Main objective: to create oral and conversational resources in Galician that capture the dialectal and accent diversity of the language.

- How to participate: The project accepts voluntary contributions both by reading texts and by answering spontaneous questions.

- Donate your voice in Galician: https://doagalego.nos.gal

Aina, towards an AI that understands and speaks Catalan

With a similar approach to the Nós project, Aina seeks to facilitate the integration of Catalan into artificial intelligence language models.

It is structured in two complementary aspects that maximize its impact:

- Aina Tech focuses on facilitating technology transfer to the business sector, providing the necessary tools to automatically translate websites, services and online businesses into Catalan.

- Aina Lab promotes the creation of a community of developers through initiatives such as Aina Challenge, fostering collaborative innovation in Catalan language technologies. Through this call, 22 proposals have already been selected, with a total amount of 1 million to execute their projects.

The characteristics of the project are:

- Promoted by: the Generalitat de Catalunya in collaboration with the Barcelona Supercomputing Center (BSC-CNS).

- Main objective: beyond the creation of tools, it seeks to build an open, transparent and responsible AI infrastructure for Catalan.

- How to participate: You can add comments, improvements, and suggestions through the contact inbox: https://form.typeform.com/to/KcjhThot?typeform-source=langtech-bsc.gitbook.io.

Vives, the collaborative project for AI in Valencian

For its part, Vives collects recordings of people speaking Valencian to serve as training data for AI models.

- Promoted by: the Alicante Digital Intelligence Centre (CENID).

- Objective: It seeks to create massive corpora of text and voice, encourage citizen participation in data collection, and develop specialized linguistic models in sectors such as tourism and audiovisual, guaranteeing data privacy.

- How to participate: You can donate your voice through this link: https://vives.gplsi.es/instruccions/.

Gaitu: strategic investment in the digitalisation of the Basque language

For Basque, we can highlight Gaitu, which seeks to collect recordings of people speaking Basque in order to train AI models. Its characteristics are:

- Promoted by: HiTZ, the Basque language technology centre.

- Objective: to develop a corpus in Basque to train AI models.

- How to participate: You can donate your voice in Basque here: https://commonvoice.mozilla.org/eu/speak.

Benefits of Building and Preserving Multilingual Language Models

The digitization projects of the co-official languages transcend the purely technological field to become tools for digital equity and cultural preservation. Their impact is manifested in multiple dimensions:

- For citizens: these resources ensure that speakers of all ages and levels of digital competence can interact with technology in their mother tongue, removing barriers that could exclude certain groups from the digital ecosystem.

- For the business sector: the availability of open language resources makes it easier for companies and developers to create products and services in these languages without assuming the high costs traditionally associated with the development of language technologies.

- For the research community: these corpora constitute a fundamental basis for the advancement of research in natural language processing and speech technologies, and are especially relevant for languages with less presence in international digital resources.

The success of these initiatives shows that it is possible to build a digital future where linguistic diversity is not an obstacle but a strength, and where technological innovation is put at the service of the preservation and promotion of linguistic cultural heritage.

Between ice cream and longer days, summer is here. At this time of year, open information can become our best ally to plan getaways, check the opening hours of the bathing areas in our community or even find out the state of traffic on the roads that take us to our next destination.

Whether you're on the move or at home resting, you can find a wide variety of datasets and apps on the datos.gob.es portal that can transform the way you live and enjoy the summer. In addition, if you want to take advantage of the summer season to train, we also have resources for you.

Whether you are after training, rest or adventure, in this post we offer you some of the resources that can be useful this summer.

An opportunity to learn: courses and cultural applications

Are you thinking of making a change in your professional career? Or would you like to improve in a discipline? Data science is one of the most in-demand skills for companies and artificial intelligence offers new opportunities every day to apply it in our day-to-day lives.

To understand both disciplines well and be up to date with their development, you can take advantage of the summer to train in programming, data visualization or even generative AI. In this post, which we published at the beginning of summer, you have a list of proposals, and you are still in time to sign up for some!

If you already have some knowledge, we advise you to review our step-by-step exercises. In each of them you will find reproducible, fully documented code, so you can replicate it at your own pace. In this infographic we show you several examples, divided by theme and level of difficulty. A practical way to test your technical skills and learn about innovative tools and technologies.

If, instead of data science, you want to use the summer to broaden your cultural knowledge, we also have options for you. First of all, we recommend this dataset on the cultural agenda of events in the Basque Country to discover festivals, concerts and other cultural activities. Another interesting dataset is that of tourist information offices in Tenerife, where staff can tell you how to plan cultural itineraries. And this application will accompany you on a tour of Castilla y León through a gamified map to identify tourist places of interest.

Plan your perfect getaway: datasets for tourism and vacations

Some of the open datasets you can find on datos.gob.es are the basis for creating applications that can be very useful for travel. We are talking, for example, about the dataset of campsites in Navarre that provides updated data on active tourism camps, including information on services, location and capacity. In this same autonomous community, this dataset on restaurants and cafeterias may be useful to you.

On the other hand, this dataset on the supply of tourist accommodation in Aragon presents a complete catalogue of hotels, inns and hostels classified by category, allowing travellers to make informed decisions according to their budget and preferences.

Another interesting resource is this dataset on trips, overnight stays, average duration and expenditure per trip, published by the National Institute of Statistics and also federated in datos.gob.es. Thanks to this dataset, you can get an idea of how people travel and take it as a reference to plan your trip.

Enjoy the Water: Open Datasets for Water Activities

Access to information about beaches and bathing areas is essential for a safe and pleasant summer. The Bizkaia beach dataset provides detailed information on the characteristics of each beach, including available services, accessibility and water conditions. Similarly, this dataset of bathing areas in the Community of Madrid provides data on safe and controlled aquatic spaces in the region.

If you want a more general view, this application, developed with open data by the Ministry for the Ecological Transition and the Demographic Challenge (MITECO), offers a visualization of beaches at the national level. More recently, RTVE's data team has developed this Great Map of Spain's beaches that includes more than 3,500 destinations with specific information.

For lovers of water sports and sailing, tide prediction datasets for both Galicia and the Basque Country offer crucial information for planning activities at sea. This data allows boaters, surfers and fishermen to optimize their activities according to ocean conditions.

Smart mobility: datasets for hassle-free travel

It is no surprise that mobility during these months is even greater than in the rest of the year. Datasets on traffic conditions in Barcelona and on the roads in Navarre provide real-time information that helps travellers avoid congestion and plan efficient routes, which is especially valuable when summer traffic increases significantly.

The applications that provide information on the price of fuel at the different Spanish petrol stations are among the most consulted on our portal throughout the year, but in summer their popularity skyrockets even more. They are interesting because they allow you to locate the service stations with the most competitive prices, optimizing the travel budget. This information can also be found in regularly updated datasets and is especially useful for long trips and route planning.

The future of open data in tourism

The convergence of open data, mobile technology and artificial intelligence is creating new opportunities to personalize and enhance the tourism experience. The datasets and resources available in datos.gob.es not only provide current information, but also serve as a basis for the development of innovative solutions that can anticipate needs, optimize resources, and create more satisfying experiences for travelers.

From route planning to selecting accommodations or finding cultural activities, these datasets and apps empower citizens and are a useful resource to maximize the enjoyment of this time of year. This summer, before you pack your bags, it's worth exploring the possibilities offered by open data.

Artificial intelligence is no longer a thing of the future: it is here and can become an ally in our daily lives. From making tasks easier for us at work, such as writing emails or summarizing documents, to helping us organize a trip, learn a new language, or plan our weekly menus, AI adapts to our routines to make our lives easier. You don't have to be tech-savvy to take advantage of it; while today's tools are very accessible, understanding their capabilities and knowing how to ask the right questions will maximize their usefulness.

AI Passive and Active Subjects

Artificial intelligence is already transforming our daily lives and reaches into many of our routines. Virtual assistants, such as Siri or Alexa, are among the best-known tools that incorporate artificial intelligence, and are used to answer questions, schedule appointments, or control devices.

Many people use tools or applications with artificial intelligence on a daily basis, even if it operates imperceptibly to the user and does not require their intervention. Google Maps, for example, uses AI to optimize routes in real time, predict traffic conditions, suggest alternative routes or estimate the time of arrival. Spotify applies it to personalize playlists or suggest songs, and Netflix to make recommendations and tailor the content shown to each user.

But it is also possible to be an active user of artificial intelligence using tools that interact directly with the models. Thus, we can ask questions, generate texts, summarize documents or plan tasks. AI is no longer a hidden mechanism but a kind of digital co-pilot that assists us in our day-to-day lives. ChatGPT, Copilot or Gemini are tools that allow us to use AI without having to be experts. This makes it easier for us to automate daily tasks, freeing up time to spend on other activities.

AI in Home and Personal Life

Virtual assistants respond to voice commands: they tell us the time or the weather, or play the music we want to listen to. But their possibilities go much further, as they are able to learn from our habits to anticipate our needs. They can control different devices that we have in the home in a centralized way, such as heating, air conditioning, lights or security devices. It is also possible to configure custom actions that are triggered via a voice command. For example, a "good morning" routine that turns on the lights and informs us of the weather forecast and the traffic conditions.

When we have lost the manual for one of the appliances or electronic devices we have at home, artificial intelligence is a good ally. If we send it a photo of the device, it will help us interpret the instructions, set the device up, or troubleshoot basic issues.

If you want to go further, AI can do some everyday tasks for you. Through these tools we can plan our weekly menus, indicating needs or preferences, such as dishes suitable for celiacs or vegetarians, prepare the shopping list and obtain the recipes. It can also help us choose between the dishes on a restaurant's menu taking into account our preferences and dietary restrictions, such as allergies or intolerances. Through a simple photo of the menu, the AI will offer us personalized suggestions.

Physical exercise is another area of our personal lives in which these digital co-pilots are very valuable. We can ask them, for example, to create exercise routines adapted to different physical conditions, goals and available equipment.

Planning a vacation is another of the most interesting features of these digital assistants. If we provide them with a destination, a number of days, interests, and even a budget, we will have a complete plan for our next trip.

Applications of AI in studies

AI is profoundly transforming the way we study, offering tools that personalize learning. Helping the little ones in the house with their schoolwork, learning a language or acquiring new skills for our professional development are just some of the possibilities.

There are platforms that generate personalized content in just a few minutes and didactic material made from open data that can be used both in the classroom and at home to review. Among university students or high school students, some of the most popular options are applications that summarize or make outlines from longer texts. It is even possible to generate a podcast from a file, which can help us understand and become familiar with a topic while playing sports or cooking.

But we can also create our applications to study or even simulate exams. Without having programming knowledge, it is possible to generate an application to learn multiplication tables, irregular verbs in English or whatever we can think of.

How to Use AI in Work and Personal Finance

In the professional field, artificial intelligence offers tools that increase productivity. In fact, it is estimated that in Spain 78% of workers already use AI tools in the workplace. By automating processes, we save time to focus on higher-value tasks. These digital assistants summarize long documents, generate specialized reports in a field, compose emails, or take notes in meetings.

Some platforms already incorporate the transcription of meetings in real time, something that can be very useful if we do not master the language. Microsoft Teams, for example, offers useful options through Copilot from the "Summary" tab of the meeting itself, such as transcription, a summary or the possibility of adding notes.

The management of personal finances has also evolved thanks to applications that use AI, allowing you to control expenses and manage a budget. But we can also create our own personal financial advisor using an AI tool, such as ChatGPT. When we provide it with information on our income, fixed and variable expenses, and savings goals, it analyzes the data and creates personalized financial plans.
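The kind of arithmetic that sits behind such a plan can be sketched in a few lines of Python. This is only an illustration: the function name `monthly_plan` and all the figures are hypothetical, and it does not describe how ChatGPT or any other tool works internally.

```python
import math


def monthly_plan(income, fixed, variable, savings_goal):
    """Return the monthly surplus and the months needed to reach a savings goal."""
    surplus = income - fixed - variable
    if surplus <= 0:
        # Nothing is left over each month, so the goal cannot be reached as is.
        months = None
    else:
        months = math.ceil(savings_goal / surplus)
    return {"surplus": surplus, "months_to_goal": months}


# Hypothetical figures, in euros.
plan = monthly_plan(income=2000, fixed=900, variable=600, savings_goal=3000)
print(plan)  # {'surplus': 500, 'months_to_goal': 6}
```

Giving an AI tool the same four inputs, clearly labelled, is a reasonable starting point for asking it to propose a more detailed plan.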

Prompts and creation of useful applications for everyday life

We have seen the great possibilities that artificial intelligence offers us as a co-pilot in our day-to-day lives. But to make it a good digital assistant, we must know how to ask it and give it precise instructions.

A prompt is a basic instruction or request that is made to an AI model to guide it, with the aim of providing us with a coherent and quality response. Good prompting is the key to getting the most out of AI. It is essential to ask well and provide the necessary information.

To write effective prompts we have to be clear, specific, and avoid ambiguities. We must indicate what the objective is, that is, what we want the AI to do: summarize, translate, generate an image, etc. It is also key to provide it with context, explaining who the result is aimed at or why we need it, as well as what we expect the response to look like. This can include the tone of the message, the formatting, the sources used to generate it, etc.

Here are some tips for creating effective prompts:

- Use short, direct and concrete sentences. The clearer the request, the more accurate the answer. Avoid expressions such as "please" or "thank you", as they only add unnecessary noise and consume more resources. Instead, use words like "must," "do," "include," or "list." To reinforce the request, you can capitalize those words. These expressions are especially useful for fine-tuning a first response from the model that doesn't meet your expectations.

- Indicate the audience to which the answer is addressed. Specify whether it is aimed at an expert audience, an inexperienced audience, children, adolescents, adults, etc. When we want a simple answer, we can, for example, ask the AI to explain it to us as if we were ten years old.

- Use delimiters. Separate the instructions using a symbol, such as slashes (//) or quotation marks, to help the model understand the instruction better. For example, if you want it to do a translation, use delimiters to separate the command ("Translate into English") from the phrase it is supposed to translate.

- Indicate the function that the model should adopt. Specify the role that the model should assume to generate the response. Telling it whether it should act like an expert in finance or nutrition, for example, will help generate more specialized answers, as it will adapt both the content and the tone.

- Break down complex requests into simple requests. If you're going to make a complex request that requires an excessively long prompt, it's a good idea to break it down into simpler steps. If you need detailed explanations, use expressions like "Think step by step" to get a more structured answer.

- Use examples. Include examples of what you're looking for in the prompt to guide the model to the answer.

- Provide positive instructions. Instead of asking them not to do or include something, state the request in the affirmative. For example, instead of "Don't use long sentences," say, "Use short, concise sentences." Positive instructions avoid ambiguities and make it easier for the AI to understand what it needs to do. This happens because negative prompts put extra effort on the model, as it has to deduce what the opposite action is.

- Offer tips or penalties. This serves to reinforce desired behaviors and restrict inappropriate responses. For example, "If you use vague or ambiguous phrases, you will lose 100 euros."

- Ask the model to ask you for what it needs. If we instruct it to ask us for additional information, we reduce the possibility of hallucinations, as we are improving the context of our request.

- Request that it respond like a human. If the texts seem too artificial or mechanical, specify in the prompt that the response should be more natural or should read as if it had been written by a human.

- Provide the start of the answer. This simple trick is very useful in guiding the model towards the response we expect.

- Define the sources to use. If we narrow down the type of information the model should use to generate the answer, we will get more refined answers. Ask, for example, that it only use data from after a specific year.

- Request that it mimic a style. We can provide it with an example so that its response is consistent with the style of the reference, or ask it to follow the style of a famous author.
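Several of the tips above (role, audience, positive instructions, examples, delimiters and the start of the answer) can be combined in a reusable template. The following Python sketch is only one possible way to do it: the function `build_prompt` and its parameters are hypothetical names chosen for illustration, and the resulting text can be pasted into any conversational AI tool.

```python
def build_prompt(task, text, role=None, audience=None,
                 style_rules=(), examples=(), answer_start=None):
    """Assemble a prompt from the building blocks described in the tips."""
    parts = []
    if role:
        parts.append(f"Act as {role}.")
    if audience:
        parts.append(f"The answer is for {audience}.")
    parts.append(task)
    # Positive instructions, e.g. "Use short, concise sentences."
    parts.extend(style_rules)
    for example in examples:
        parts.append(f"Example of the expected output: {example}")
    # Delimiters separate the instruction from the material it applies to.
    parts.append(f"//\n{text}\n//")
    if answer_start:
        parts.append(answer_start)
    return "\n".join(parts)


prompt = build_prompt(
    task="Translate into English the text between the slashes.",
    text="Los datos abiertos impulsan la innovación.",
    role="a professional translator",
    audience="a general, non-expert audience",
    style_rules=["Use short, concise sentences."],
)
print(prompt)
```

Keeping each element in its own parameter makes it easy to change one thing at a time, such as the audience or the role, and compare the answers.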

While it is possible to generate functional code for simple tasks and applications without programming knowledge, it is important to note that developing more complex or robust solutions at a professional level still requires programming and software development expertise. To create, for example, an application that helps us manage our pending tasks, we ask AI tools to generate the code, explaining in detail what we want it to do, how we expect it to behave, and what it should look like. From these instructions, the tool will generate the code and guide us to test, modify and implement it. We can ask it how and where to run the code for free, and ask for help making improvements.
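To give an idea of scale, the core of a pending-tasks application can fit in a few lines. The Python sketch below is a hypothetical example of the kind of code an AI tool might return for such a request; the class and method names are illustrative, and a real answer would depend on the prompt and the tool used.

```python
from dataclasses import dataclass, field


@dataclass
class Task:
    title: str
    done: bool = False


@dataclass
class TaskList:
    tasks: list = field(default_factory=list)

    def add(self, title):
        """Register a new pending task."""
        self.tasks.append(Task(title))

    def complete(self, title):
        """Mark the first task with this title as done; return False if not found."""
        for task in self.tasks:
            if task.title == title:
                task.done = True
                return True
        return False

    def pending(self):
        """Return the titles of the tasks that are still open."""
        return [task.title for task in self.tasks if not task.done]


todo = TaskList()
todo.add("Buy groceries")
todo.add("Book train tickets")
todo.complete("Buy groceries")
print(todo.pending())  # ['Book train tickets']
```

From a starting point like this, follow-up prompts can ask for a visual interface, due dates or saving the list to a file.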

As we've seen, the potential of these digital assistants is enormous, but their true power lies in large part in how we communicate with them. Clear and well-structured prompts are the key to getting accurate answers without needing to be tech-savvy. AI not only helps us automate routine tasks, but also expands our capabilities, allowing us to do more in less time. These tools are redefining our day-to-day lives, making them more efficient and leaving us time for other things. And best of all: they are now within our reach.

It is possible that our ability to be surprised by new generative artificial intelligence (AI) tools is beginning to wane. The best example is GPT-o1, a new language model with the highest reasoning ability achieved so far, capable of verbalising - something similar to - its own logical processes, but which did not arouse as much enthusiasm at its launch as might have been expected. In contrast to the previous two years, in recent months we have had less of a sense of disruption and have reacted less massively to new developments.

One possible reflection is that we do not need, for now, more intelligence in the models, but to see with our own eyes a landing in concrete uses that make our lives easier: how do I use the power of a language model to consume content faster, to learn something new or to move information from one format to another? Beyond the big general-purpose applications, such as ChatGPT or Copilot, there are free and lesser-known tools that help us think better, and offer AI-based capabilities to discover, understand and share knowledge.

Generate podcasts from a file: NotebookLM

The NotebookLM automated podcasts first arrived in Spain in the summer of 2024 and did raise a significant stir, despite not even being available in Spanish. Following Google's style, the system is simple: simply upload a PDF file as a source to obtain different variations of the content provided by Gemini 2.0 (Google's AI system), such as a summary of the document, a study guide, a timeline or a list of frequently asked questions. In this case, we have used a report on artificial intelligence and democracy published by UNESCO in 2024 as an example.

Figure 1. Different summary options in NotebookLM.

While the study guide is an interesting output, offering a system of questions and answers to memorise and a glossary of terms, the star of NotebookLM is the so-called "audio summary": a completely natural conversational podcast between two synthetic interlocutors who comment in a pleasant way on the content of the PDF.

Figure 2. Audio summary in NotebookLM.

The quality of the content of this podcast still has room for improvement, but it can serve as a first approach to the content of the document, or help us to internalise it more easily from the audio while we take a break from screens, exercise or move around.

The trick: apparently, you can't generate the podcast in Spanish, only in English, but you can try with this prompt: "Make an audio summary of the document in Spanish". It almost always works.

Create visualisations from text: Napkin AI

Napkin offers us something very valuable: creating visualisations, infographics and mind maps from text content. In its free version, the system only asks you to log in with an email address. Once inside, it asks us how we want to enter the text from which we are going to create the visualisations. We can paste it or directly generate with AI an automatic text on any topic.

Figure 3. Starting points in Napkin.ai.

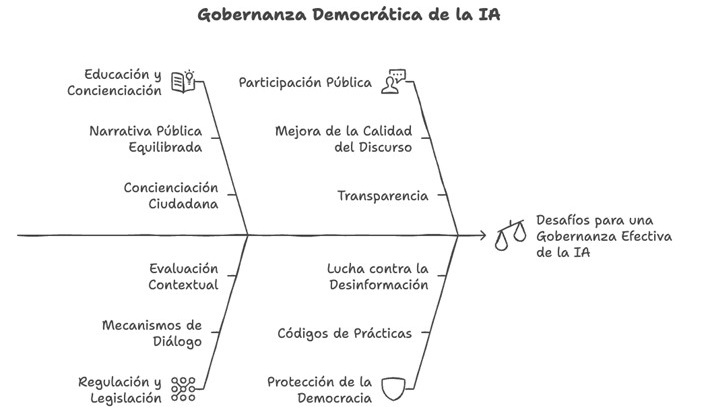

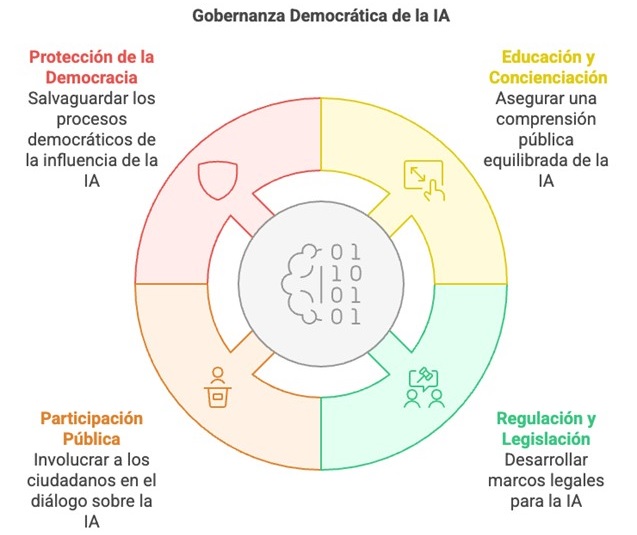

In this case, we will copy and paste an excerpt from the UNESCO report which contains several recommendations for the democratic governance of AI. From the text it receives, Napkin.ai provides illustrations and various types of diagrams. The proposals range from simpler ones, with text organised in keys and quadrants, to others illustrated with drawings and icons.

Figure 4. Proposed scheme in Napkin.ai.

Although they are far from the quality of professional infographics, these visualisations can be useful on a personal and learning level, to illustrate a post in networks, to explain concepts internally to our team or to enrich our own content in the educational field.

The trick: if you click on Styles in each scheme proposal, you will find more variations of the scheme with different colours and lines. You can also modify the texts by simply clicking on them once you select a visualisation.

Automatic presentations and slides: Gamma

Of all the content formats that AI is capable of generating, slideshows are probably the least successful. Sometimes the designs are not very elaborate, sometimes the template we want to use is not respected, and the texts are almost always too simple. What sets Gamma apart, and makes it more practical than other options such as Beautiful.ai, is that we can create a presentation directly from text content that we can paste, generate with AI or upload in a file.

Figure 5. Starting points for Gamma.

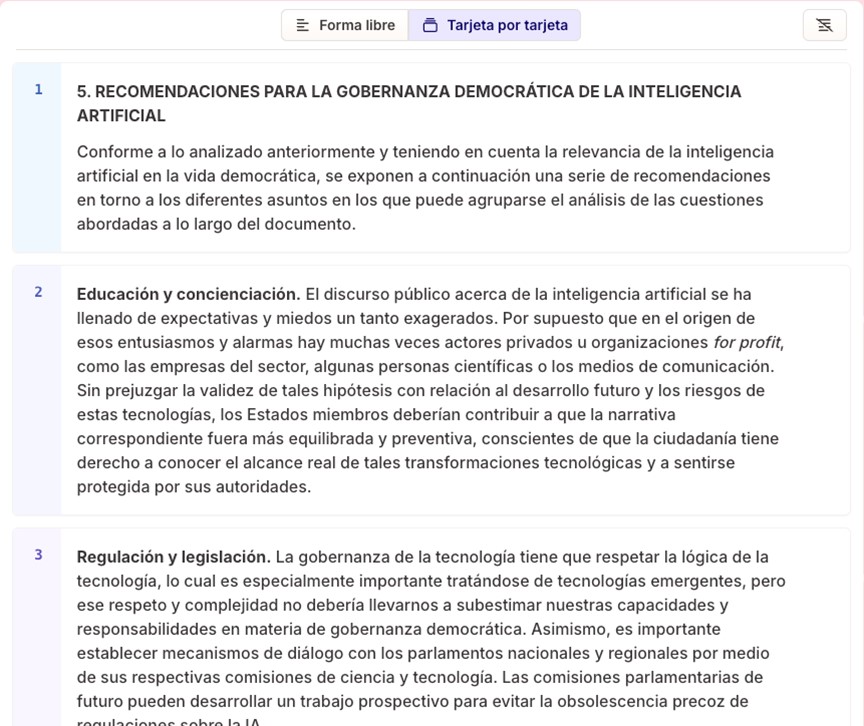

If we paste the same text as in the previous example, about UNESCO's recommendations for democratic governance of AI, in the next step Gamma gives us the choice between "free form" or "card by card". In the first option, the system's AI organises the content into slides while preserving the full meaning of each slide. In the second, it proposes that we divide the text to indicate the content we want on each slide.

Figure 6. Text automatically split into slides by Gamma.

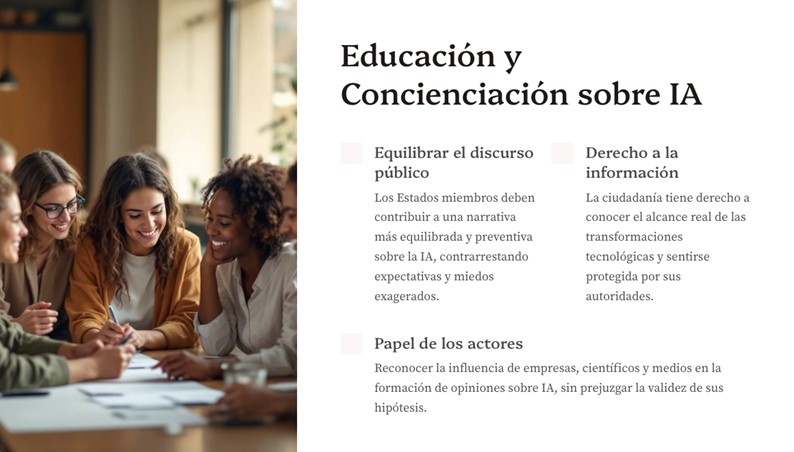

We select the second option, and the text is automatically divided into different blocks that will be our future slides. By clicking on "Continue", we are asked to select a base theme. Finally, by clicking on "Generate", the complete presentation is automatically created.

Figure 7. Example of a slide created with Gamma.

Gamma accompanies the slides with AI-created images that keep a certain coherence with the content, and gives us the option of modifying the texts or generating different images. Once the presentation is ready, we can export it directly to PowerPoint format.

A trick: in the "edit with AI" button on each slide we can ask it to automatically translate it into another language, correct the spelling or even convert the text into a timeline.

Summarise from any format: NoteGPT

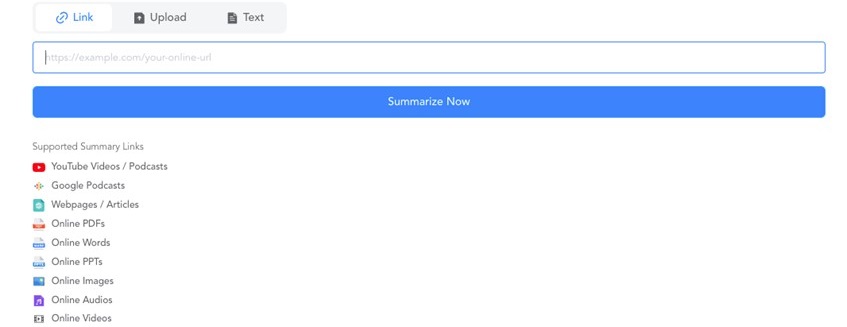

The aim of NoteGPT is very clear: to summarise content that we can import from many different sources. We can copy and paste a text, upload a file or an image, or directly extract the information from a link, something very useful and not so common in AI tools. Although the latter option does not always work well, it is one of the few tools that offer it.

Figure 8. Starting points for NoteGPT.

In this case, we introduce the link to a YouTube video containing an interview with Daniel Innerarity on the intersection between artificial intelligence and democratic processes. On the results screen, the first thing you get on the left is the full transcript of the interview, in good quality. We can locate the transcript of a specific fragment of the video, translate it into different languages, copy it or download it, even in an SRT file of time-mapped subtitles.

Figure 9. Example of transcription with minutes in NoteGPT
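For readers unfamiliar with the SRT format mentioned above: it is a plain-text file in which each subtitle has a sequence number, a time range written as `HH:MM:SS,mmm --> HH:MM:SS,mmm`, and the text, followed by a blank line. The Python sketch below shows how time-mapped segments could be written in that layout; the function names and the sample segments are hypothetical and are not taken from NoteGPT or from the interview.

```python
def srt_timestamp(seconds):
    """Format a time in seconds as an SRT timestamp (HH:MM:SS,mmm)."""
    total_ms = round(seconds * 1000)
    hours, rest = divmod(total_ms, 3_600_000)
    minutes, rest = divmod(rest, 60_000)
    secs, ms = divmod(rest, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d},{ms:03d}"


def to_srt(segments):
    """Turn (start, end, text) tuples into the text of an SRT file."""
    blocks = []
    for index, (start, end, text) in enumerate(segments, start=1):
        time_range = f"{srt_timestamp(start)} --> {srt_timestamp(end)}"
        blocks.append(f"{index}\n{time_range}\n{text}\n")
    # A blank line separates consecutive subtitle blocks.
    return "\n".join(blocks)


# Placeholder segments: start and end in seconds, then the subtitle text.
segments = [(0.0, 2.5, "First sentence."), (2.5, 61.2, "Second sentence.")]
print(to_srt(segments))
```

Because the timings travel with the text, a file like this can be loaded into most video players or editors to display the transcript in sync with the video.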

Meanwhile, on the right, we find the summary of the video with the most important points, sorted and illustrated with emojis. Also in the "AI Chat" button we can interact with a conversational assistant and ask questions about the content.

Figure 10. NoteGPT summary from a YouTube interview.

And although this is already very useful, the best thing we can find in NoteGPT are the flashcards, learning cards with questions and answers to internalise the concepts of the video.

Figure 11. NoteGPT learning card (question and answer).

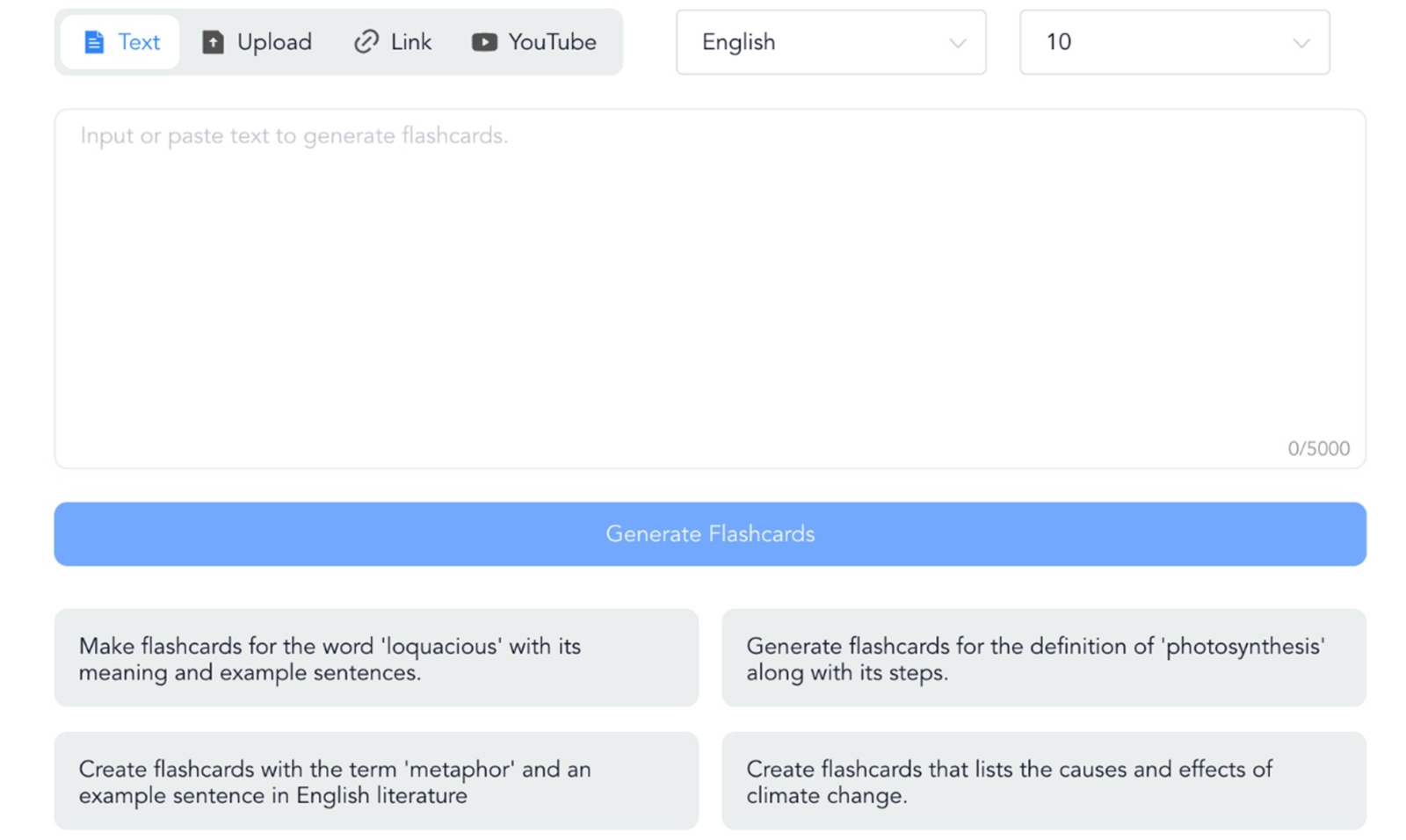

A trick: if the summary only appears in English, try changing the language in the three dots on the right, next to "Summarize", and click "Summarize" again. The summary will then appear below in the language you selected. In the case of flashcards, to generate them in another language, do not try from the home page, but from "AI flashcards". Under "Create" you can select the language.

Figure 12. Creation of flashcards in NoteGPT.

Create videos about anything: Lumen5

Lumen5 makes it easy to create videos with AI by creating the script and images automatically from text or voice content. The most interesting thing about Lumen5 is the starting point, which can be a text, a document, simply an idea or also an existing audio recording or video.

Figure 13. Lumen5 options.

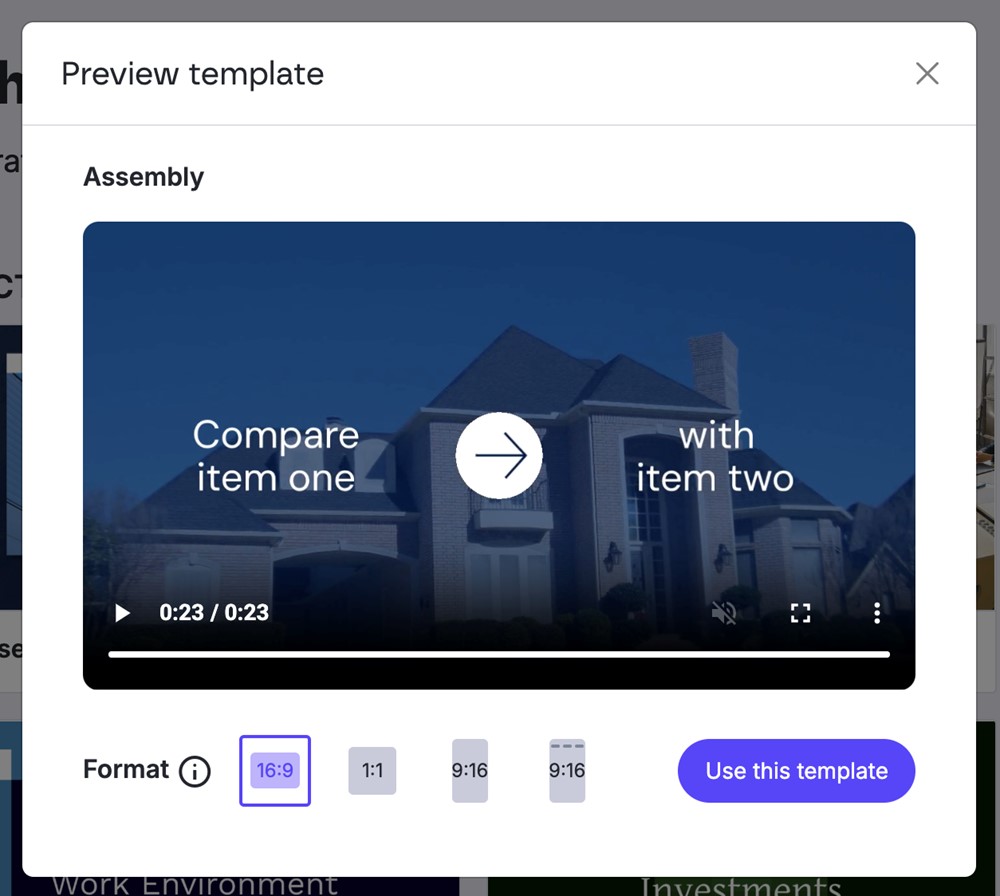

The system allows us, before creating the video and also once created, to change the format from 16:9 (horizontal) to 1:1 (square) or 9:16 (vertical), even with a special 9:16 option for Instagram stories.

Figure 14. Video preview and aspect ratio options.
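The three formats differ only in the proportion between width and height. As a rough illustration, the Python sketch below computes the frame size for each ratio; the helper `frame_size` and the 1920-pixel long side are assumptions made for the example and do not describe Lumen5's actual export settings.

```python
def frame_size(ratio_w, ratio_h, long_side=1920):
    """Return (width, height) in pixels for an aspect ratio and a given long side."""
    if ratio_w >= ratio_h:
        width = long_side
        height = round(long_side * ratio_h / ratio_w)
    else:
        height = long_side
        width = round(long_side * ratio_w / ratio_h)
    return width, height


for name, (w, h) in {"16:9": (16, 9), "1:1": (1, 1), "9:16": (9, 16)}.items():
    print(name, frame_size(w, h))
# 16:9 (1920, 1080)
# 1:1 (1920, 1920)
# 9:16 (1080, 1920)
```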

In this case, we will start from the same text as in previous tools: UNESCO's recommendations for democratic governance of AI. Select the starting option "Text on media", paste it directly into the box and click on "Compose script". The result is a very simple and schematic script, divided into blocks with the basic points of the text, and a very interesting indication: a prediction of the length of the video with that script, approximately 1 minute and 19 seconds.

An important note: the script is not a voice-over, but the text that will be written on the different screens. Once the video is finished, you can translate the whole video into any other language.

Figure 15. Script proposal in Lumen5.

Clicking on "Continue" will take you to the last opportunity to modify the script, where you can add new text blocks or delete existing ones. Once ready, click on "Convert to video" and you will find the story board ready to modify images, colours or the order of the screens. The video will have background music, which you can also change, and at this point you can record your voice over the music to voice the script. Without too much effort, this is the end result:

Figure 16. Final result of a video created with Lumen5.

From the wide range of AI-based digital products that have flourished in recent years, perhaps thousands of them, we have gone through just five examples that show us that individual and collaborative knowledge and learning are more accessible than ever before. The ease of converting content from one format to another and the automatic creation of study guides and materials should promote a more informed and agile society, not only through text or images but also through information condensed in files or databases.

It would be a great boost to collective progress if we understood that the value of AI-based systems lies not simply in writing or creating content for us, but in supporting our reasoning processes, objectifying our decision-making and enabling us to handle much more information in an efficient and useful way. Harnessing new AI capabilities together with open data initiatives may be key to the next step in the evolution of human thinking.

Content prepared by Carmen Torrijos, expert in AI applied to language and communication. The contents and points of view reflected in this publication are the sole responsibility of the author.

As we do every year, the datos.gob.es team wishes you happy holidays. If this Christmas you feel like giving or giving yourself a gift of knowledge, we bring you our traditional Christmas letter with ideas to ask Father Christmas or the Three Wise Men.

We have a selection of books on a variety of topics such as data protection, new developments in AI or the great scientific discoveries of the 20th century. All these recommendations, ranging from essays to novels, will be a sure hit to put under the tree.

Maniac by Benjamin Labatut.

- What is it about? Guided by the figure of John von Neumann, one of the great geniuses of the 20th century, the book covers topics such as the creation of atomic bombs, the Cold War, the birth of the digital universe and the rise of artificial intelligence. The story begins with the tragic suicide of Paul Ehrenfest and progresses through the life of von Neumann, who foreshadowed the arrival of a technological singularity. The book culminates in a confrontation between man and machine in an epic showdown in the game of Go, which serves as a warning about the future of humanity and its creations.

- Who is it aimed at? This science fiction novel is aimed at anyone interested in the history of science, technology and its philosophical and social implications. It is ideal for those who enjoy narratives that combine the thriller with deep reflections on the future of humanity and technological progress. It is also suitable for those looking for a literary work that delves into the limits of thought, reason and artificial intelligence.

Take control of your data, by Alicia Asin.

- What is it about? This book compiles resources to better understand the digital environment in which we live, using practical examples and clear definitions that make it easier for anyone to understand how technologies affect our personal and social lives. It also invites us to be more aware of the consequences of the indiscriminate use of our data, from the digital trail we leave behind or the management of our privacy on social networks, to trading on the dark web. It also warns about the legitimate but sometimes invasive use of our online behaviour by many companies.

- Who is it aimed at? The author of this book is the CEO of the data reuse company Libelium. She participated in one of our Encuentros Aporta and is a leading expert on privacy, appropriate use of data and data spaces, among others. In this book, the author offers a business perspective through a work aimed at the general public.

Governance, management and quality of artificial intelligence by Mario Gerardo Piattini.

- What is it about? Artificial intelligence is increasingly present in our daily lives and in the digital transformation of companies and public bodies, offering both benefits and potential risks. In order to benefit properly from the advantages of AI and avoid problems it is very important to have ethical, legal and responsible systems in place. This book provides an overview of the main standards and tools for managing and assuring the quality of intelligent systems. To this end, it provides clear examples of best available practices.

- Who is it aimed at? Although anyone can read it, the book provides tools to help companies meet the challenges of AI by creating systems that respect ethical principles and align with engineering best practices.

Nexus, by Yuval Noah Harari.

- What is it about? In this new installment, one of the most fashionable writers analyzes how information networks have shaped human history, from the Stone Age to the present era. This essay explores the relationship between information, truth, bureaucracy, mythology, wisdom and power, and how different societies have used information to impose order, with both positive and negative consequences. In this context, the author discusses the urgent decisions we must make in the face of current threats, such as the impact of non-human intelligence on our existence.

- Who is it aimed at? It is a mainstream work, i.e. anyone can read it and will most likely enjoy reading it. It is a particularly attractive option for readers seeking to reflect on the role of information in modern society and its implications for the future of humanity, in a context where emerging technologies such as artificial intelligence are challenging our way of life.

Generative Deep Learning: Teaching Machines to Paint, Write, Compose, and Play by David Foster (second edition 2024)

- What is it about? This practical book dives into the fascinating world of generative deep learning, exploring how machines can create art, music and text. Throughout, Foster guides us through the most innovative architectures such as VAEs, GANs and diffusion models, explaining how these technologies can transform photographs, generate music and even write text. The book starts with the basics of deep learning and progresses to cutting-edge applications, including image creation with Stable Diffusion, text generation with GPT and music composition with MuseGAN. It is a work that combines technical rigour with artistic creativity.

- Who is it aimed at? This technical manual is intended for machine learning engineers, data scientists and developers who want to enter the field of generative deep learning. It is ideal for those who already have a background in programming and machine learning, and wish to explore how machines can create original content. It will also be valuable for creative professionals interested in understanding how AI can amplify their artistic capabilities. The book strikes the perfect balance between mathematical theory and practical implementation, making complex concepts accessible through concrete examples and working code.

Information is beautiful, by David McCandless.

- What is it about? This visual guide in English helps us understand how the world works through striking infographics and data visualisations. This new edition has been completely revised, with more than 20 updates and 20 new visualisations. It presents information in a way that is easy to skim, but also invites further exploration.

- Who is it aimed at? This book is aimed at anyone interested in seeing and understanding information in a different way. It is perfect for those looking for an innovative and visually appealing way to understand the world around us. It is also ideal for those who enjoy exploring data, facts and their interrelationships in an entertaining and accessible way.

Collecting Field Data with QGIS and Mergin Maps, by Kurt Menke and Alexandra Bucha Rasova.

- What is it about? This book teaches you how to master the Mergin Maps platform for collecting, sharing and managing field data using QGIS. The book covers everything from the basics, such as setting up projects in QGIS and conducting field surveys, to advanced workflows for customising projects and managing collaborations. In addition, details on how to create maps, set up survey layers and work with smart forms for data collection are included.

- Who is it aimed at? Although it is a somewhat more technical option than the previous proposals, the book is aimed at new users of Mergin Maps and QGIS. It is also useful for those who are already familiar with these tools and are looking for more advanced workflows.

When We Cease to Understand the World (original title: Un verdor terrible) by Benjamin Labatut.

- What is it about? This book is a fascinating blend of science and literature, narrating scientific discoveries and their implications, both positive and negative. Through powerful stories, such as the creation of Prussian blue and its connection to chemical warfare, the mathematical explorations of Grothendieck and the struggle between scientists like Schrödinger and Heisenberg, the author, Benjamin Labatut, leads us to explore the limits of science, the follies of knowledge and the unintended consequences of scientific breakthroughs. The work turns science into literature, presenting scientists as complex and human characters.

- Who is it aimed at? The book is aimed at a general audience interested in science, the history of discoveries and the human stories behind them, with a focus on those seeking a literary and in-depth approach to scientific topics. It is ideal for those who enjoy works that explore the complexity of knowledge and its effects on the world.

Designing Better Maps: A Guide for GIS Users, by Cynthia A. Brewer.

- What is it about? It is a guide in English, written by an expert cartographer, that teaches how to create successful maps using any GIS or illustration tool. Through its 400 full-colour illustrations, the book covers the best cartographic design practices applied to both reference and statistical maps. Topics include map planning, using base maps, managing scale and time, explaining maps, publishing and sharing, using typography and labels, understanding and using colour, and customising symbols.

- Who is it aimed at? This book is intended for all geographic information systems (GIS) users, from beginners to advanced cartographers, who wish to improve their map design skills.

Although this post includes many purchase links, if you are interested in any of these options we encourage you to ask at your local bookshop, to support small businesses during the festive season. Do you know of any other interesting titles? Write them in the comments or send them to dinamizacion@datos.gob.es. We look forward to reading you!

Today, 23 April, is World Book Day, an occasion to highlight the importance of reading, writing and the dissemination of knowledge. Active reading promotes the acquisition of skills and critical thinking by bringing us closer to specialised and detailed information on any subject that interests us, including the world of data.

Therefore, we would like to take this opportunity to showcase some examples of books and manuals regarding data and related technologies that can be found on the web for free.

1. Fundamentals of Data Science with R, edited by Gema Fernandez-Avilés and José María Montero (2024)

Access the book here.

- What is it about? The book guides the reader from the problem statement to the completion of the report containing the solution to the problem. It explains some thirty data science techniques in the fields of modelling, qualitative data analysis, discrimination, supervised and unsupervised machine learning, etc. It includes more than a dozen use cases in sectors as diverse as medicine, journalism, fashion and climate change, among others. All this, with a strong emphasis on ethics and the promotion of reproducibility of analyses.

- Who is it aimed at? It is aimed at users who want to get started in data science. It starts with basic questions, such as what is data science, and includes short sections with simple explanations of probability, statistical inference or sampling, for those readers unfamiliar with these issues. It also includes replicable examples for practice.

- Language: Spanish.

2. Telling stories with data, Rohan Alexander (2023).

Access the book here.

- What is it about? The book explains a wide range of topics related to statistical communication and data modelling and analysis. It covers the various operations from data collection, cleaning and preparation to the use of statistical models to analyse the data, with particular emphasis on the need to draw conclusions and write about the results obtained. Like the previous book, it also focuses on ethics and reproducibility of results.

- Who is it aimed at? It is ideal for students and entry-level users, equipping them with the skills to effectively conduct and communicate a data science exercise. It includes extensive code examples for replication and activities to be carried out as evaluation.

- Language: English.

3. The Big Book of Small Python Projects, Al Sweigart (2021)

Access the book here.

- What is it about? It is a collection of simple Python projects to learn how to create digital art, games, animations, numerical tools, etc. through a hands-on approach. Each of its 81 chapters independently explains a simple step-by-step project - limited to a maximum of 256 lines of code. It includes a sample run of the output of each programme, source code and customisation suggestions.

- Who is it aimed at? The book is written for two groups of people. On the one hand, those who have already learned the basics of Python, but are still not sure how to write programs on their own. On the other hand, those who are new to programming, but are adventurous, enthusiastic and want to learn as they go along. However, the same author has other resources for beginners to learn basic concepts.

- Language: English.

4. Mathematics for Machine Learning, Marc Peter Deisenroth, A. Aldo Faisal, Cheng Soon Ong (2024)

Access the book here.

- What is it about? Most books on machine learning focus on algorithms and methodologies, and assume that the reader is proficient in mathematics and statistics. This book foregrounds the mathematical foundations of the basic concepts behind machine learning.

- Who is it aimed at? The authors assume that the reader has the mathematical knowledge commonly learned in high school mathematics and physics subjects, such as derivatives and integrals or geometric vectors. The remaining concepts are explained in detail, but in an academic style, in order to be precise.

- Language: English.

5. Dive into Deep Learning, Aston Zhang, Zack C. Lipton, Mu Li, Alex J. Smola (2021, continually updated)

Access the book here.

- What is it about? The authors are Amazon employees who use the MXNet library to teach deep learning. The book aims to make deep learning accessible, teaching basic concepts, context and code in a practical way through examples and exercises. It is divided into three parts: introductory concepts, deep learning techniques and advanced topics focusing on real systems and applications.

- Who is it aimed at? This book is aimed at students (undergraduate and postgraduate), engineers and researchers, who are looking for a solid grasp of the practical techniques of deep learning. Each concept is explained from scratch, so no prior knowledge of deep or machine learning is required. However, knowledge of basic mathematics and programming is necessary, including linear algebra, calculus, probability and Python programming.

- Language: English.

6. Artificial intelligence and the public sector: challenges, limits and means, Eduardo Gamero and Francisco L. Lopez (2024)

Access the book here.

- What is it about? This book focuses on analysing the challenges and opportunities presented by the use of artificial intelligence in the public sector, especially when used to support decision-making. It begins by explaining what artificial intelligence is and what its applications in the public sector are, and then moves on to its legal framework, the means available for its implementation and aspects linked to organisation and governance.

- Who is it aimed at? It is a useful book for all those interested in the subject, but especially for policy makers, public workers and legal practitioners involved in the application of AI in the public sector.

- Language: Spanish.

7. A Business Analyst’s Introduction to Business Analytics, Adam Fleischhacker (2024)

Access the book here.

- What is it about? The book covers a complete business analytics workflow, including data manipulation, data visualisation, modelling business problems, translating graphical models into code and presenting results to stakeholders. The aim is to learn how to drive change within an organisation through data-driven knowledge, interpretable models and persuasive visualisations.

- Who is it aimed at? According to the author, the content is accessible to everyone, including beginners in analytical work. The book does not assume any prior programming knowledge, but provides an introduction to R, RStudio and the "tidyverse", a series of open source packages for data science.

- Language: English.

We invite you to browse through this selection of books. We would also like to remind you that this list is only a sample of the materials you can find on the web. Do you know of any other books you would like to recommend? Let us know in the comments or email us at dinamizacion@datos.gob.es!

2023 was a year full of new developments in artificial intelligence, algorithms and data-related technologies. These Christmas holidays are therefore a good time to take advantage of the arrival of the Three Wise Men and ask them for a book to enjoy during the holidays, the well-deserved rest and the return to routine that follows.

Whether you are looking for reading that will improve your professional profile, want to learn about new technological developments and applications linked to the world of data and artificial intelligence, or want to give your loved ones an educational and interesting gift, at datos.gob.es we want to offer you some examples. To prepare the list we drew on the opinion of experts in the field.

Take paper and pencil because you still have time to include them in your letter to the Three Wise Men!

1. Inteligencia Artificial: Ficción, Realidad y... sueños, Nuria Oliver, Real Academia de Ingeniería GTT (2023)

What it’s about: The book has its origin in the author's acceptance speech to the Royal Academy of Engineering. In it, she explores the history of AI, its implications and development, describes its current impact and raises several perspectives.

Who should read it: It is designed for people interested in entering the world of Artificial Intelligence, its history and practical applications. It is also aimed at those who want to enter the world of ethical AI and learn how to use it for social good.

2. A Data-Driven Company. 21 Claves para crear valor a través de los datos y de la Inteligencia Artificial, Richard Benjamins, Lid Editorial (2022)

What it's about: A Data-Driven Company looks at 21 key decisions companies need to face in order to become a data-driven, AI-driven enterprise. It addresses the typical organizational, technological, business, personnel and ethical decisions that organizations must face to start making data-driven decisions, including how to fund their data strategy, organize teams, measure results, and scale.

Who should read it: It is suitable for professionals who are just starting to work with data, as well as for those who already have experience, but need to adapt to work with big data, analytics or artificial intelligence.

3. Digital Empires: The Global Battle to Regulate Technology, Anu Bradford, OUP USA (2023)

What it's about: In the face of technological advances around the world and the arrival of corporate giants spread across international powers, Bradford examines three competing regulatory approaches: the market-driven U.S. model, the state-driven Chinese model, and the rights-based European regulatory model. It examines how governments and technology companies navigate the inevitable conflicts that arise when these regulatory approaches clash internationally.

Who should read it: This is a book for those who want to learn more about the regulatory approach to technologies around the world and how it affects business. It is written in a clear and understandable way, despite the complexity of the subject. However, the reader will need to know English, because it has not yet been translated into Spanish.

4. El mito del algoritmo, Richard Benjamins e Idoia Salazar, Anaya Multimedia (2020)

What it's about: Artificial intelligence and its exponential use in multiple disciplines is causing an unprecedented social change. With it, philosophical questions as deep as the existence of the soul, and debates about whether machines could have feelings, are beginning to emerge. This is a book to learn about the challenges and opportunities of this technology.

Who should read it: It is aimed at people with an interest in the philosophy of technology and the development of technological advances. By using simple and enlightening language, it is a book within the reach of a general public.

5. ¿Cómo sobrevivir a la incertidumbre?, Anabel Forte Deltell, Next Door Publishers

What it is about: It explains, in a simple way and with examples, how present statistics and probability are in daily life. The book starts from the present day, in which data, numbers, percentages and graphs have taken over our daily lives and have become indispensable for making decisions or for understanding the world around us.

Who should read it: A general public that wants to understand how data analysis, statistics and probability are shaping a large part of political, economic and social decisions.

6. Análisis espacial con R: Usa R como un Sistema de Información Geográfica, Jean François Mas, European Scientific Institute

What it is about: This is a more technical book. It gives a brief introduction to the main concepts for handling the R programming language and environment (types of objects and basic operations) and then introduces the reader to the sf package for spatial data in vector format through its main functions for reading, writing and analysis. From a practical, applied perspective and in easy-to-understand language, the book covers the first steps for using R in spatial analysis applications; users need a basic knowledge of Geographic Information Systems.

Who should read it: A public with some knowledge of R and basic knowledge of GIS who wish to enter the world of spatial analysis applications.

This is just a small sample of the great variety of existing literature related to the world of data. We are sure we have left out some interesting books, so if you have any extra recommendations, do not hesitate to leave your favorite title in the comments. Those of us on the datos.gob.es team would be delighted to read them.