7 posts found

Data policies that foster innovation

The importance of data in today's society and economy is no longer in doubt. Data is now present in virtually every aspect of our lives. This is why more and more countries have been incorporating specific data-related regulations into their policies: whether they relate to personal, busin…

GeoParquet 1.0.0: new format for more efficient access to spatial data

Cloud data storage is currently one of the fastest growing segments of enterprise software, which is bringing a large number of new users into the field of analytics.

As we introduced in a previous post, a new format, Parquet, has among its…

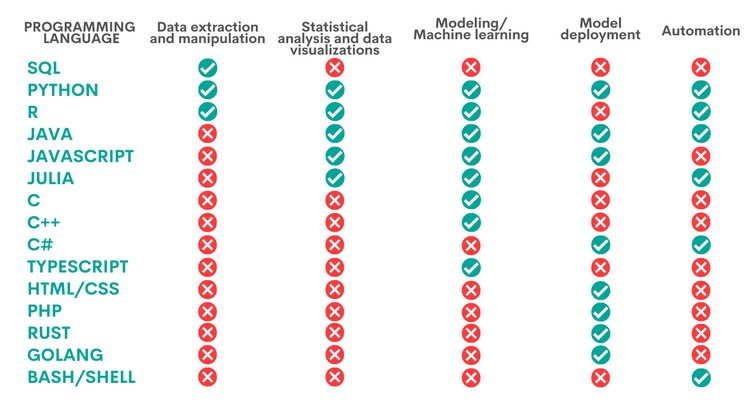

When to use each programming language in data science?

Python, R, SQL, JavaScript, C++, HTML... Nowadays we can find a multitude of programming languages that allow us to develop software programmes, applications, web pages, etc. Each one has unique characteristics that differentiate it from the rest and make it more appropriate for certain tasks. But h…

10 Popular natural language processing libraries

The advance of supercomputing and data analytics in fields as diverse as social networks and customer service is pushing one branch of artificial intelligence (AI) to focus on developing algorithms capable of processing and generating natural language.

To be able to carry out this task in the current…

10 Popular Data Analytics and Machine Learning Libraries

Programming libraries are sets of code files created to make software development simpler. Thanks to them, developers can avoid code duplication and minimize errors with greater agility and lower cost. There are many libraries, focused on different activities. A few weeks ag…

11 libraries for creating data visualisations

Programming libraries are sets of code files that are used to develop software. Their purpose is to facilitate programming by providing common functionalities that have already been solved by other programmers.

Libraries are an essential component for developers to be able to program in a simple way…

Why should you use Parquet files if you process a lot of data?

It's been a long time since we first heard about the Apache Hadoop ecosystem for distributed data processing. Things have changed a lot since then, and we now use higher-level tools to build solutions based on big data payloads. However, it is important to highlight some best practices related to ou…