Transforming data into knowledge has become one of the main objectives facing both public and private organizations today. To achieve this, however, the data being processed must first be governed and of adequate quality.

In this regard, the Spanish Association for Standardization (UNE) has recently published an article and a report that bring together different technical standards aimed at ensuring the correct management and governance of an organization's data. Both materials are gathered in this post, which includes an infographic summarizing the highlighted standards.

In the aforementioned reference articles, technical standards related to governance, management, quality, security and data privacy are mentioned. On this occasion we want to zoom in on those focused on data quality.

Quality management reference standards

As Lord Kelvin, a 19th-century British physicist and mathematician, said, “what is not measured cannot be improved, and what is not improved is always degraded”. But to measure the quality of data and be able to improve it, standards are needed that first help us homogenize that quality. The following technical standards can help us with this:

ISO 8000 standard

Among the standards published by ISO (International Organization for Standardization), ISO 8000 is the international standard for the quality of transaction data, product data and business master data. It is structured in four parts: general concepts of data quality (ISO 8000-1, ISO 8000-2 and ISO 8000-8), data quality management processes (ISO 8000-6x), aspects related to the exchange of master data between organizations (parts 100 to 150) and application of product data quality (ISO 8000-311).

Within the ISO 8000-6X family, focused on data quality management processes to create, store and transfer data that support business processes in a timely and cost-effective manner, we find:

- ISO 8000-60 provides an overview of data quality management processes subject to a cycle of continuous improvement.

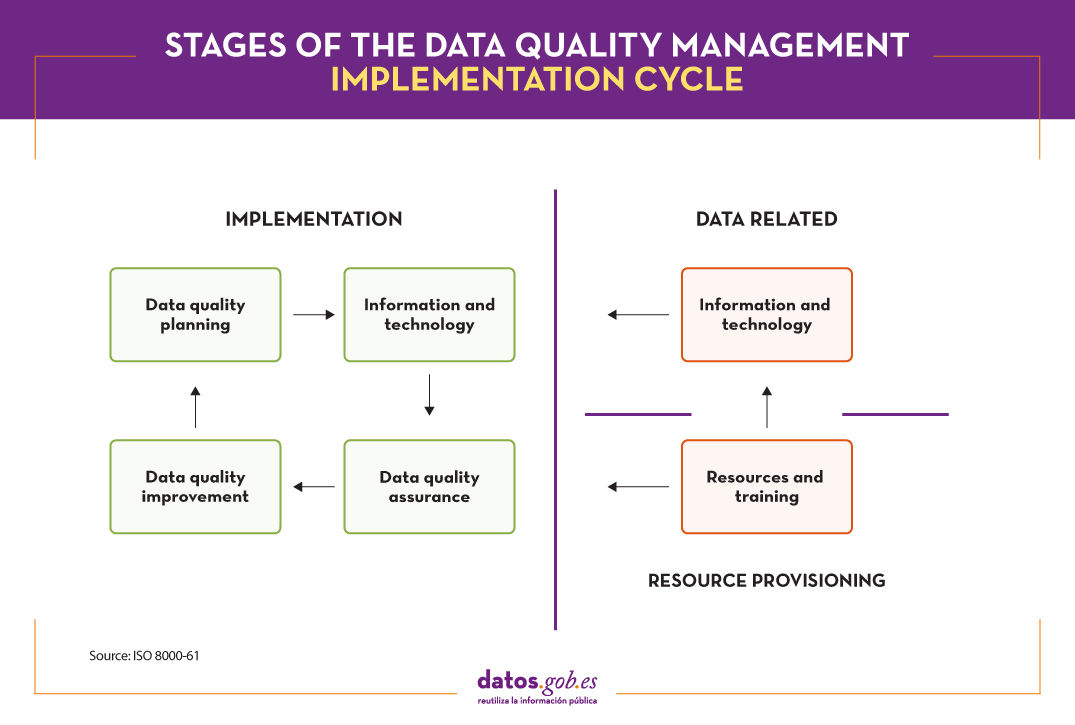

- ISO 8000-61 establishes a reference model for data quality management processes. The main characteristic is that, in order to achieve continuous improvement, the implementation process must be executed continuously following the Plan-Do-Check-Act cycle. In addition, implementation processes related to resource provisioning and data processing are included. As shown in the following image, the four stages of the implementation cycle must have input data, control information and support for continuous improvement, as well as the necessary resources to carry out the activities.

- For its part, ISO 8000-62, the last of the ISO 8000-6X family, focuses on the evaluation of organizational process maturity. It specifies a framework for assessing the organization's data quality management maturity, based on its ability to execute the activities related to the data quality management processes identified in ISO 8000-61. Depending on the capability of the evaluated process, one of the defined levels is assigned.

ISO 25012 standard

Another set of ISO standards that deals with data quality is the ISO 25000 family, which aims to create a common framework for evaluating the quality of the software product. Specifically, the ISO 25012 standard defines a general data quality model applicable to data stored in a structured way in an information system.

In addition, in the context of open data, it is considered a reference according to the set of good practices for the evaluation of the quality of open data developed by the pan-European network Share-PSI, conceived to serve as a guide for all public organizations when sharing information.

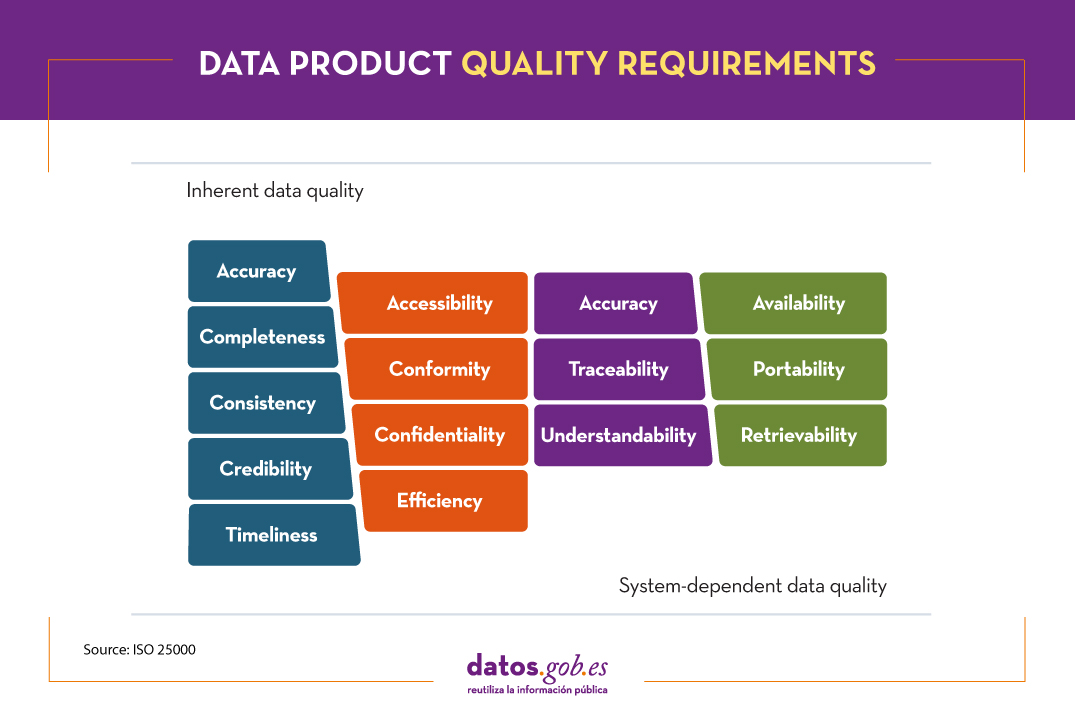

In this case, the quality of the data product is understood as the degree to which it satisfies the requirements previously defined in the data quality model through the following 15 characteristics.

These quality characteristics or dimensions are mainly classified into two categories.

Inherent data quality relates to the intrinsic potential of data to meet defined needs when used under specified conditions. It covers:

- Accuracy: degree to which the data represents the true value of the desired attribute in a specific context, such as the closeness of the data to a set of values defined in a certain domain.

- Completeness: degree to which the associated data has values for all defined attributes.

- Consistency: degree to which the data is coherent with other existing data and free of contradictions.

- Credibility: degree to which the data has attributes that are considered true and credible in its context, including the veracity of the data sources.

- Currentness (up-to-dateness): degree of validity of the data for its context of use.
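As a rough illustration of how two of these inherent characteristics might be turned into numbers, the following Python sketch computes a completeness ratio and a currentness ratio over a small list of records. The record layout, the field names, the formulas and the 365-day validity window are all assumptions made for this example; ISO 25012 defines the characteristics, not these particular calculations.

```python
from datetime import date, timedelta

# Hypothetical records invented for this example; None marks a missing value.
records = [
    {"id": 1, "name": "Ana", "email": "ana@example.org", "updated": date(2024, 5, 1)},
    {"id": 2, "name": "Luis", "email": None, "updated": date(2022, 1, 15)},
    {"id": 3, "name": None, "email": "eva@example.org", "updated": date(2024, 3, 20)},
    {"id": 4, "name": "Marta", "email": "marta@example.org", "updated": date(2023, 12, 31)},
]


def completeness(rows, attributes):
    """Share of attribute values that are present (not None)."""
    total = len(rows) * len(attributes)
    if total == 0:
        return 1.0
    filled = sum(
        1 for row in rows for attr in attributes if row.get(attr) is not None
    )
    return filled / total


def currentness(rows, date_field, today, max_age_days):
    """Share of rows last updated within max_age_days of the reference date."""
    if not rows:
        return 1.0
    limit = today - timedelta(days=max_age_days)
    fresh = sum(1 for row in rows if row[date_field] >= limit)
    return fresh / len(rows)


# The reference date is fixed so that the result does not change over time.
completeness_score = completeness(records, ["name", "email"])
currentness_score = currentness(records, "updated", date(2024, 6, 1), 365)

print(f"completeness: {completeness_score:.2f}")
print(f"currentness:  {currentness_score:.2f}")
```

Other characteristics, such as accuracy or credibility, usually need a trusted reference source to compare against, so they are harder to reduce to a simple ratio like these.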

On the other hand, system-dependent data quality is the degree to which quality is achieved and preserved by a computer system when the data is used under specific conditions. It covers:

- Availability: degree to which the data has attributes that allow it to be obtained by authorized users.

- Portability: ability of data to be installed, replaced or moved from one system to another while preserving the level of quality.

- Recoverability: degree to which data has attributes that allow quality to be maintained and preserved even in the event of failures.

Additionally, there are characteristics or dimensions that fall under both "inherent" and "system-dependent" data quality. These are:

- Accessibility: possibility of access to data in a specific context by certain roles.

- Compliance (conformity): degree to which the data has attributes that adhere to established standards, regulations or references.

- Confidentiality: degree of data security based on its nature, so that it can only be accessed by the configured roles.

- Efficiency: degree to which the data can be processed with the expected performance levels in specific situations.

- Precision: exactness of the data in a specific context of use.

- Traceability: ability to audit the entire life cycle of the data.

- Understandability: ability of the data to be interpreted by any user, including the use of certain symbols and languages for a specific context.

In addition to ISO standards, there are other reference frameworks that establish common guidelines for quality measurement. DAMA International, for example, after analyzing the similarities of all the models, establishes 8 basic quality dimensions common to any standard: accuracy, completeness, consistency, integrity, reasonableness, timeliness, uniqueness and validity.

The need for continuous improvement

Homogenizing data quality according to reference standards such as those described lays the foundations for continuous improvement of the information. By applying these standards, and taking the dimensions detailed above into account, it is possible to define quality indicators. Once these are implemented and executed, they yield results that must be reviewed by the different data owners, who establish tolerance thresholds and thereby identify quality incidents for every indicator that does not meet its defined threshold.

To do this, different parameters will be taken into account, such as the nature of the data or its impact on the business, since a descriptive field cannot be treated in the same way as a primary key, for example.
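A minimal sketch of this idea follows, assuming two simple indicators (completeness and uniqueness) and a stricter threshold for a key field than for a descriptive one. The column names, the sample values and the threshold figures are hypothetical and would in practice be set by the data owners.

```python
# Hypothetical column values invented for this example; None marks a missing value.
columns = {
    "customer_id": [101, 102, 103, 103, 105],  # acts as a primary key
    "comment": ["ok", None, None, "call back", "vip"],  # descriptive field
}

# Assumed tolerance thresholds: the key must be perfect, the comment much less so.
thresholds = {
    "customer_id": {"completeness": 1.0, "uniqueness": 1.0},
    "comment": {"completeness": 0.5},
}


def completeness(values):
    """Share of values that are present (not None)."""
    if not values:
        return 1.0
    return sum(v is not None for v in values) / len(values)


def uniqueness(values):
    """Share of distinct values among the values that are present."""
    present = [v for v in values if v is not None]
    if not present:
        return 1.0
    return len(set(present)) / len(present)


INDICATORS = {"completeness": completeness, "uniqueness": uniqueness}


def find_incidents(data, limits_by_field):
    """Return (field, indicator, score, minimum) for each score below its threshold."""
    found = []
    for field, limits in limits_by_field.items():
        for indicator, minimum in limits.items():
            score = INDICATORS[indicator](data[field])
            if score < minimum:
                found.append((field, indicator, score, minimum))
    return found


incidents = find_incidents(columns, thresholds)
for field, indicator, score, minimum in incidents:
    print(f"{field}: {indicator} {score:.2f} is below threshold {minimum:.2f}")
```

With this sample data, the duplicated `customer_id` value raises an incident, while the two missing comments do not, because the descriptive field tolerates a lower completeness.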

From there, it is common to launch an incident resolution circuit capable of detecting the root cause behind a data quality deficiency, so that it can be eliminated and continuous improvement guaranteed.

Thanks to this, numerous benefits are obtained, such as reduced risk, savings in time and resources, more agile decision-making, easier adaptation to new requirements and an improved reputation.

It should be noted that the technical standards addressed in this post allow quality to be homogenized. For data quality measurement tasks per se, we should turn to other standards such as ISO 25024:2015.

Content prepared by Juan Mañes, expert in Data Governance.

The contents and views expressed in this publication are the sole responsibility of the author.