Christmas returns every year as an opportunity to stop, breathe and reconnect with what inspires us. At datos.gob.es, we take advantage of these dates to share our traditional letter of recommendations: a selection of books that invite us to better understand the digital world, reflect on the impact of artificial intelligence, improve our professional skills and look at the ethical dilemmas that mark our time.

In this post, we compile works on technological ethics, digital society, AI engineering, quantum computing, machine learning, and data governance. It is a varied list, with titles in both Spanish and English, that combines academic rigour, popular science and a critical outlook. If you are looking for a book that will help you grow, surprise a loved one or simply feed your curiosity, here you will find options for all tastes.

The ethics of artificial intelligence by Sara Degli-Esposti

- What is it about? This specialist in privacy and data analysis offers a clear and rigorous vision of the risks and opportunities of AI applied to areas such as health, safety, public policy or digital communication. Her proposal stands out for integrating legal, socio-technical and fundamental rights perspectives.

- Who is it for? Readers looking for a solid introduction to the international debate on AI ethics and regulation.

Available for free here: https://ciec.edu.co/wp-content/uploads/2024/12/LA-ETICA-DE-LA-INTELIGENCIA-ARTIFICIAL.pdf

Understand Technology by Nate Gentile

- What is it about? In this book, the popularizer and specialist in hardware and digital culture Nate Gentile debunks myths, explains technical concepts in an accessible way and offers a critical look at how the technology we use every day really works. From processor performance and system architectures to the business models of large platforms, the book combines technical rigor with a relatable style, accompanied by practical examples and anecdotes from the technology community.

- Who is it for? Curious readers who want to better understand the inner workings of devices, professionals who are looking for an informative but precise approach, and anyone who wants to lose their fear of technology and understand it more consciously.

Clicks Against Humanity by James Williams

- What is it about? This journalistic work analyzes how digital platforms shape our attention, our beliefs and our decisions. Through true stories, the book shows the impact of algorithmic design on politics, public opinion, and daily life. An incisive and timely read, especially at a time of growing concern about misinformation and the attention economy.

- Who is it for? Those who want to understand how platforms work and what effects they can have on society.

The Spring of Artificial Intelligence by Carmen Torrijos and José Carlos Sánchez

- What is it about? Based on everyday examples, the authors explain what language models really are, how technologies such as ChatGPT or computer vision work, what transformations they are generating in sectors such as education, creativity or communication, and what their current limits are. In addition, they provide a critical reflection on the ethical, social and labour challenges associated with this new wave of innovation.

- Who is it for? Readers who want to understand the current phenomenon of AI without the need for technical knowledge, teachers and professionals looking to contextualize its impact on their sector, and anyone interested in understanding what is behind the generative AI boom and how it can influence our immediate future.

Designing Machine Learning Systems by Chip Huyen

- What is it about? It's a modern, practical primer that explains, from start to finish, how to design, deploy, and maintain robust machine learning systems. Chip Huyen combines technical expertise with informative clarity, covering everything from data engineering to model monitoring and MLOps. A highly valued work in the industry.

- Who is it for? Technical professionals, teams implementing AI-based products, and students who want to understand how real systems are built in production.

Ethics in Artificial Intelligence and Information Technologies by Gabriela Arriagada-Bruneau, Claudia López and Marcelo Mendoza

- What is it about? This book addresses one of the great challenges of our time: how to develop AI systems that respect people and society. The authors analyze principles of equity, transparency, accountability, and governance, combining philosophical foundations with practical examples. Its Ibero-American approach is particularly valuable in understanding how these challenges affect this region.

- Who is it for? Students, public officials, technology professionals and anyone who wants to understand the ethical dilemmas behind the algorithms we use every day.

Generative Deep Learning: Teaching Machines to Paint, Write, Compose, and Play by David Foster

- What is it about? This work, which is already a classic in its field, introduces the most important generative architectures (GANs, VAEs, diffusion models) with examples and reproducible code. The second edition updates concepts and provides new practical cases related to text, sound and image.

- Who is it for? Those who want to learn how to create generative models from scratch or understand the technologies behind automatic content creation.

Quantum Supremacy by Michio Kaku

- What is it about? Quantum computing has ceased to be science fiction and has become a global strategic field. Michio Kaku, a renowned popularizer, explains clearly what a qubit is, how quantum computers work, what limits they present and what transformations they will promote in cryptography, chemical simulation or artificial intelligence.

- Who is it for? Ideal for science lovers, the technologically curious and readers who want to peek into the future of computing without the need for advanced mathematical knowledge.

Data Governance based on UNE specifications by Ismael Caballero, Fernando Gualo and Mario G. Piattini

- What is it about? This book is a complete guide to understanding and applying UNE standards relating to data governance in organisations. It clearly explains how to structure roles, processes, policies, and controls to manage data as a strategic asset. It includes case studies, maturity models, implementation examples and guidance to align governance with international standards and with the real needs of the public and private sectors. A particularly useful resource at a time when data quality, traceability and interoperability are critical to driving digital transformation.

- Who is it for? Chief Data Officers (CDOs), analytics and architecture teams, government professionals, consultants, and any organization that wants to implement a formal data governance model based on recognized standards.

While we'd love to include many more titles, this selection is already a great starting point for exploring the big themes that will shape the digital transformation of the coming years. If you feel like giving knowledge as a gift this holiday season, these works will be a sure hit: they combine current affairs, critical reflection, practical applications and a broad look at the technological future.

Remember that, although here we mention titles that you can find in online bookstores, we always encourage you to check your neighborhood bookstore first. It's a great way to support small businesses and help keep the cultural ecosystem alive and vibrant.

Would you recommend another title?

At datos.gob.es we love to discover new readings. If you know of a book on data, AI, technology or digital society that deserves to be in future editions, you can share it in the comments or write to us through this form. Happy holidays and happy reading!

Data visualization is a fundamental practice to democratize access to public information. However, creating effective graphics goes far beyond choosing attractive colors or using the latest technological tools. As Alberto Cairo, an expert in data visualization and professor at the academy of the European Open Data Portal (data.europa.eu), points out, "every design decision must be deliberate: inevitably subjective, but never arbitrary." Through a series of three webinars that you can watch again here, the expert offered innovative tips to be at the forefront of data visualization.

When working with data visualization, especially in the context of public information, it is crucial to debunk some myths ingrained in our professional culture. Phrases like "data speaks for itself," "a picture is worth a thousand words," or "show, don't tell" sound good, but they hide an uncomfortable truth: charts do not always communicate what their authors intend.

The reality is more complex. A design professional may want to communicate something specific, but readers may interpret something completely different. How can you bridge the gap between intent and perception in data visualization? In this post, we share some key takeaways from the training series.

A structured framework for designing with purpose

Rather than following rigid "rules" or applying predefined templates, the course proposes a framework of thinking based on five interrelated components:

- Content: the nature, origin, and limitations of the data

- People: the audience we are targeting

- Intention: the purposes we define

- Constraints: the limitations we face

- Results: how the graph is received

This holistic approach forces us to constantly ask ourselves: what do our readers really need to know? For example, when communicating information about hurricane or health emergency risks, is it more important to show exact trajectories or communicate potential impacts? The correct answer depends on the context and, above all, on the information needs of citizens.

The danger of over-aggregation

Even without losing sight of the purpose, it is important not to over-aggregate the information or present only averages. Imagine, for example, a dataset on citizen security at the national level: an average may hide the fact that most localities are very safe, while a few with extremely high rates distort the national indicator.
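A minimal sketch of this effect, using invented rates for ten hypothetical localities (the figures are illustrative assumptions, not real statistics). It compares the mean with the median to show how two extreme values can pull a national average far from what most localities experience:

```python
from statistics import mean, median

# Hypothetical rates (incidents per 1,000 inhabitants) for ten localities.
# Invented for illustration only; they are not real statistics.
rates = [2, 3, 2, 4, 3, 2, 3, 2, 95, 84]

national_average = mean(rates)    # pulled upward by the two extreme localities
typical_locality = median(rates)  # closer to what most localities experience

# Localities whose rate exceeds the national average
above_average = [r for r in rates if r > national_average]

print(f"mean: {national_average:.1f}")
print(f"median: {typical_locality:.1f}")
print(f"localities above the mean: {len(above_average)} of {len(rates)}")
```

In this invented dataset only two of the ten localities sit above the average, so a chart showing the single national figure would misrepresent the other eight.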

As Claus O. Wilke explains in his book "Fundamentals of Data Visualization," this practice can hide crucial patterns, outliers, and paradoxes that are precisely the most relevant to decision-making. To avoid this risk, the training proposes to visualize a graph as a system of layers that we must carefully build from the base:

1. Encoding

- It's the foundation of everything: how we translate data into visual attributes. Research in visual perception shows us that not all "visual channels" are equally effective. The hierarchy would be:

- Most effective: position, length and height

- Moderately effective: angle, area and slope

- Less effective: color, saturation, and shape

How do we put this into practice? For example, for accurate comparisons, a bar chart will almost always be a better choice than a pie chart. However, as the training materials point out, "effective" does not always mean "appropriate". A pie chart can be perfect when we want to express the idea of a "whole and its parts", even if accurate comparisons are more difficult.

2. Arrangement

- The positioning, ordering, and grouping of elements profoundly affects perception. Do we want the reader to compare between categories within a group, or between groups? The answer will determine whether we organize our visualization with grouped or stacked bars, with multiple panels, or in a single integrated view.

3. Scaffolding

- Titles, introductions, annotations, scales and legends are fundamental. At datos.gob.es we've seen how interactive visualizations can condense complex information, but without proper scaffolding, interactivity can confuse rather than clarify.
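Returning to the first layer, encoding can be sketched in a few lines. The example below translates the same values into two visual attributes: a length (a text bar standing in for a bar chart) and an angle (the slice a pie chart would draw). The category names and shares are hypothetical, and the text bars are only a stand-in for a real charting library:

```python
# Hypothetical shares (percent) for five categories; invented for illustration.
shares = {"A": 23, "B": 25, "C": 27, "D": 13, "E": 12}

total = sum(shares.values())
max_value = max(shares.values())


def pie_angle(value, total):
    """Angle encoding: the slice angle in degrees for a pie chart."""
    return 360 * value / total


def bar_length(value, max_value, width=40):
    """Length encoding: number of characters for a text bar."""
    return round(width * value / max_value)


for name, value in shares.items():
    bar = "#" * bar_length(value, max_value)
    print(f"{name} {bar} {value}% (pie angle: {pie_angle(value, total):.1f} deg)")
```

Categories A, B and C differ by only two points each. The bars share a common baseline, so their ends can be compared directly, whereas the corresponding slices differ by only a few degrees of angle.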

The value of a correct scale

One of the most delicate technical aspects of a visualization, and often the most easily manipulated, is the choice of scale. A simple modification to the Y-axis can completely change the reader's interpretation: a mild trend may seem like a sudden crisis, or sustained growth may go unnoticed.

As mentioned in the second webinar in the series, scales are not a minor detail: they are a narrative component. Deciding where an axis begins, what intervals are used, or how time periods are represented involves making choices that directly affect one's perception of reality. For example, if an employment graph starts the Y-axis at 90% instead of 0%, the decline may seem dramatic, even if it's actually minimal.
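The employment example can be worked through numerically. The sketch below uses invented rates (95% falling to 94%) and compares the change in the data with the change in the height of the bars as drawn, for a Y-axis starting at 0 and at 90. The ratio between the two is what Edward Tufte calls the "lie factor"; the function names are illustrative assumptions, not part of the webinar materials:

```python
def drawn_height(value, axis_start):
    """Height of a bar as drawn when the Y-axis begins at axis_start."""
    return value - axis_start


def relative_change(before, after):
    """Relative change between two magnitudes (negative means a decline)."""
    return (after - before) / before


# Hypothetical employment rates (percent) for two consecutive periods.
before, after = 95.0, 94.0

change_in_data = relative_change(before, after)

for axis_start in (0.0, 90.0):
    change_as_drawn = relative_change(
        drawn_height(before, axis_start), drawn_height(after, axis_start)
    )
    # Ratio between the effect shown and the effect in the data;
    # a value of 1 means the drawing is faithful to the numbers.
    lie_factor = change_as_drawn / change_in_data
    print(
        f"axis starts at {axis_start:>4}: bar shrinks by "
        f"{abs(change_as_drawn):.1%}, lie factor {lie_factor:.1f}"
    )
```

With these figures, a decline of about 1.1% in the data is drawn as a 20% shrink of the bar once the axis starts at 90.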

Therefore, scales must be honest with the data. Being "honest" doesn't mean giving up on design decisions, but rather clearly showing what decisions were made and why. If there is a valid reason for starting the Y-axis at a non-zero value, it should be explicitly explained in the graph or in its footnote. Transparency must prevail over drama.

Visual integrity not only protects the reader from misleading interpretations, but also reinforces the credibility of the communicator. In the field of public data, this honesty is not optional: it is an ethical commitment to the truth and to citizen trust.

Accessibility: Visualize for everyone

Another aspect that is often forgotten is accessibility. About 8% of men and 0.5% of women have some form of color blindness. Tools like Color Oracle allow you to simulate what our visualizations look like for people with different types of color vision deficiency.

In addition, the webinar mentioned the Chartability project, a methodology to evaluate the accessibility of data visualizations. In the Spanish public sector, where web accessibility is a legal requirement, this is not optional: it is a democratic obligation. Under this premise, the Spanish Federation of Municipalities and Provinces published a Data Visualization Guide for Local Entities.

Visual Storytelling: When Data Tells Stories

Once the technical issues have been resolved, we can address the narrative aspect that is increasingly important to communicate correctly. In this sense, the course proposes a simple but powerful method:

- Write a long sentence that summarizes the points you want to communicate.

- Break that phrase down into components, taking advantage of natural pauses.

- Transform those components into sections of your infographic.

This narrative approach is especially effective for projects like those found on data.europa.eu, where visualizations are combined with contextual explanations to communicate the value of high-value datasets, or in the data science and visualization exercises on datos.gob.es.

The future of data visualization also includes more creative and user-centric approaches. Projects that incorporate personalized elements, that allow readers to place themselves at the center of information, or that use narrative techniques to generate empathy, are redefining what we understand by "data communication".

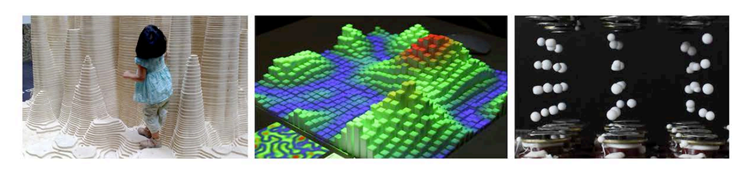

Alternative forms of "data sensification" are even emerging: physicalization (creating three-dimensional objects with data) and sonification (translating data into sound) open up new possibilities for making information more tangible and accessible. The Spanish company Tangible Data, which we echo in datos.gob.es because it reuses open datasets, is proof of this.

Figure 1. Examples of data sensification. Source: https://data.europa.eu/sites/default/files/course/webinar-data-visualisation-episode-3-slides.pdf

By way of conclusion, we can emphasize that integrity in design is not a luxury: it is an ethical requirement. Every graph we publish on official platforms influences how citizens perceive reality and make decisions. That is why mastering technical tools such as libraries and visualization APIs, which are discussed in other articles on the portal, is so relevant.

The next time you create a visualization with open data, don't just ask yourself "which tool do I use?" or "which graphic looks best?". Ask yourself: what does my audience really need to know? Does this visualization respect data integrity? Is it accessible to everyone? The answers to these questions are what transform a beautiful graphic into a truly effective communication tool.

Education has the power to transform lives. Recognized as a fundamental right by the international community, it is a key pillar for human and social development. However, according to UNESCO data, 272 million children and young people still do not have access to school, 70% of countries spend less than 4% of their GDP on education, and 69 million more teachers are still needed to achieve universal primary and secondary education by 2030. In the face of this global challenge, open educational resources and open access initiatives are presented as decisive tools to strengthen education systems, reduce inequalities and move towards inclusive, equitable and quality education.

Open educational resources (OER) offer three main benefits: they harness the potential of digital technologies to solve common educational challenges; they act as catalysts for pedagogical and social innovation by transforming the relationship between teachers, students and knowledge; and they contribute to improving equitable access to high-quality educational materials.

What are Open Educational Resources (OER)?

According to UNESCO, open educational resources are "learning, teaching and research materials in any format and medium that reside in the public domain or are under copyright that have been released under an open license". The concept, coined at the forum held in Paris in 2002, has as its fundamental characteristic that these resources "permit no-cost access, re-use, re-purpose, adaptation and redistribution by others".

OER encompasses a wide variety of formats, from full courses, textbooks, and curricula to maps, videos, podcasts, multimedia applications, assessment tools, mobile apps, databases, and even simulations.

Open educational resources are made up of three elements that work inseparably:

- Educational content: includes all kinds of material that can be used in the teaching-learning process, from formal objects to external and social resources. This is where open data would come in, which can be used to generate this type of resource.

- Technological tools: software that allows content to be developed, used, modified and distributed, including applications for content creation and platforms for learning communities.

- Open licenses: differentiating element that respects intellectual property while providing permissions for the use, adaptation and redistribution of materials.

Therefore, OER are mainly characterized by their universal accessibility, eliminating economic and geographical barriers that traditionally limit access to quality education.

Educational innovation and pedagogical transformation

Pedagogical transformation is one of the main impacts of open educational resources in the current educational landscape. OER are not simply free digital content, but catalysts for innovation that are redefining teaching-learning processes globally.

Combined with appropriate pedagogical methodologies and well-designed learning objectives, OER offer innovative new teaching options to enable both teachers and students to take a more active role in the educational process and even in the creation of content. They foster essential competencies such as critical thinking, autonomy and the ability to "learn to learn", overcoming traditional models based on memorization.

Educational innovation driven by OER is materialized through open technological tools that facilitate their creation, adaptation and distribution. Programs such as eXeLearning allow you to develop digital educational content in a simple way, while LibreOffice and Inkscape offer free alternatives for the production of materials.

The interoperability achieved through open standards, such as those of IMS Global or SCORM, ensures that these resources can be integrated into different platforms, which in turn supports accessibility for all users, including people with disabilities.
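As a small illustration of what such a standard means in practice: a SCORM content package is a ZIP archive with a manifest file named imsmanifest.xml at its root, which is what lets different platforms recognise and import the same resource. The sketch below checks only for that file. It does not validate the manifest, and the helper names and the demo archive are hypothetical:

```python
import io
import zipfile


def looks_like_scorm_package(data: bytes) -> bool:
    """Return True if the bytes are a ZIP archive with imsmanifest.xml at its root."""
    if not zipfile.is_zipfile(io.BytesIO(data)):
        return False
    with zipfile.ZipFile(io.BytesIO(data)) as archive:
        return "imsmanifest.xml" in archive.namelist()


def build_demo_package(include_manifest: bool) -> bytes:
    """Build a tiny in-memory ZIP standing in for an exported resource (hypothetical)."""
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w") as archive:
        archive.writestr("index.html", "<html><body>Lesson 1</body></html>")
        if include_manifest:
            # Placeholder content; a real manifest describes the package structure.
            archive.writestr("imsmanifest.xml", "<manifest/>")
    return buffer.getvalue()


print(looks_like_scorm_package(build_demo_package(include_manifest=True)))
print(looks_like_scorm_package(build_demo_package(include_manifest=False)))
```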

Another promising innovation for the future of OER is the combination of decentralized technologies like Nostr with authoring tools like LiaScript. This approach removes the dependency on central servers, allowing an entire course to be created and distributed over an open, censorship-resistant network. The result is a single, permanent link (a Nostr URI) that encapsulates all the material, giving creators full sovereignty over their content and ensuring its durability. In practice, this is a revolution for universal access to knowledge. Educators share their work with the assurance that the link will always be valid, while students access the material directly, without the need for platforms or intermediaries. This technological synergy is a fundamental step to materialize the promise of a truly open, resilient and global educational ecosystem, where knowledge flows without barriers.

The potential of Open Educational Resources is realized thanks to the communities and projects that develop and disseminate them. Institutional initiatives, collaborative repositories and programmes promoted by public bodies and teachers ensure that OER are accessible, reusable and sustainable.

Collaboration and open learning communities

The collaborative dimension represents one of the fundamental pillars that support the open educational resources movement. This approach transcends borders and connects education professionals globally.

The educational communities around OER have created spaces where teachers share experiences, agree on methodological aspects and resolve doubts about the practical application of these resources. Coordination between professionals usually occurs on social networks or through digital channels such as Telegram, in which both users and content creators participate. This "virtual staff room" facilitates the effective implementation of active methodologies in the classroom.

Beyond the spaces that have arisen at the initiative of the teachers themselves, different organizations and institutions have promoted collaborative projects and platforms that facilitate the creation, access and exchange of Open Educational Resources, thus expanding their reach and impact on the educational community.

OER projects and repositories in Spain

In the case of Spain, Open Educational Resources have a consolidated ecosystem of initiatives that reflect the collaboration between public administrations, educational centres, teaching communities and cultural entities. Platforms such as Procomún, content creation projects such as EDIA (Educational, Digital, Innovative and Open) or CREA (Creation of Open Educational Resources), and digital repositories such as Hispana show the diversity of approaches adopted to make educational and cultural resources available to citizens in open access. Here's a little more about them:

- The EDIA (Educational, Digital, Innovative and Open) Project, developed by the National Center for Curriculum Development in Non-Proprietary Systems (CEDEC), focuses on the creation of open educational resources designed to be integrated into environments that promote digital competences and that are adapted to active methodologies. The resources are created with eXeLearning, which facilitates editing, and include templates, guides, rubrics and all the necessary documents to bring the didactic proposal to the classroom.

- The Procomún network was born as a result of the Digital Culture in School Plan launched in 2012 by the Ministry of Education, Culture and Sport. This repository currently has more than 74,000 resources and 300 learning itineraries, along with a multimedia bank of 100,000 digital assets under Creative Commons licenses, which can therefore be reused to create new materials. It also has a mobile application. Procomún also uses eXeLearning and the LOM-ES standard, which ensures a homogeneous description of the resources and facilitates their search and classification. In addition, it is built on semantic web principles, which means that it can connect with existing communities through the Linked Open Data Cloud.

The autonomous communities have also promoted the creation of open educational resources. An example is CREA, a programme of the Junta de Extremadura aimed at the collaborative production of open educational resources. Its platform allows teachers to create, adapt and share structured teaching materials, integrating curricular content with active methodologies. The resources are generated in interoperable formats and are accompanied by metadata that facilitates their search, reuse and integration into different platforms.

There are similar initiatives, such as the REA-DUA project in Andalusia, which brings together more than 250 educational resources for primary, secondary and baccalaureate education, with attention to diversity. Galicia, for its part, launched cREAgal in the 2022-23 academic year; its portal currently has more than 100 primary and secondary education resources. This project emphasises inclusion and promotes the personal autonomy of students. In addition, some regional departments of education make open educational resources available, as is the case in the Canary Islands.

Hispana, the portal for access to Spanish cultural heritage

In addition to these initiatives aimed at the creation of educational resources, others have emerged that promote the collection of content that was not created for an educational purpose but that can be used in the classroom. This is the case of Hispana, a portal for aggregating digital collections from Spanish libraries, archives and museums.

To provide access to Spanish cultural and scientific heritage, Hispana collects and makes accessible the metadata of digital objects, allowing these objects to be viewed through links to the pages of the owner institutions. In addition to acting as a collector, Hispana also contributes the content of institutions that wish to participate to Europeana, the European digital library, which increases the visibility and reuse of resources.

Hispana is an OAI-PMH repository, which means that it uses the Open Archives Initiative Protocol for Metadata Harvesting, an international standard for the collection and exchange of metadata between digital repositories. Thus, Hispana collects the metadata of the Spanish archives, museums and libraries that expose their collections through this protocol and sends them to Europeana.
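To give an idea of how harvesting works under this protocol, here is a minimal sketch. OAI-PMH requests are plain HTTP queries with a `verb` argument (such as `ListRecords`) and a `metadataPrefix` (`oai_dc`, simple Dublin Core, is the format every repository must support). The endpoint URL and the sample response below are invented for illustration; this is not Hispana's actual address or data, and no network call is made:

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlencode

# Hypothetical endpoint: replace with the base URL a real repository publishes.
BASE_URL = "https://repository.example.org/oai"

# XML namespace used by Dublin Core elements inside OAI-PMH responses.
NS = {"dc": "http://purl.org/dc/elements/1.1/"}


def list_records_url(base_url, metadata_prefix="oai_dc", set_spec=None):
    """Build a ListRecords request URL (the verb used to harvest records)."""
    params = {"verb": "ListRecords", "metadataPrefix": metadata_prefix}
    if set_spec:
        params["set"] = set_spec
    return f"{base_url}?{urlencode(params)}"


def extract_titles(response_xml):
    """Return the Dublin Core titles found in a ListRecords response."""
    root = ET.fromstring(response_xml)
    return [title.text for title in root.iterfind(".//dc:title", NS)]


# Trimmed, invented response used instead of a live network call.
SAMPLE_RESPONSE = """<OAI-PMH xmlns="http://www.openarchives.org/OAI/2.0/">
  <ListRecords>
    <record>
      <header><identifier>oai:example:1</identifier></header>
      <metadata>
        <oai_dc:dc xmlns:oai_dc="http://www.openarchives.org/OAI/2.0/oai_dc/"
                   xmlns:dc="http://purl.org/dc/elements/1.1/">
          <dc:title>Sample digitised map</dc:title>
        </oai_dc:dc>
      </metadata>
    </record>
  </ListRecords>
</OAI-PMH>"""

print(list_records_url(BASE_URL))
print(extract_titles(SAMPLE_RESPONSE))
```

A real harvester would also follow the `resumptionToken` that repositories return when a result list is split across several responses; that step is omitted here for brevity.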

International initiatives and global cooperation

At the global level, it is important to highlight the role of UNESCO through the Dynamic Coalition on OER, which seeks to coordinate efforts to increase the availability, quality and sustainability of these assets.

In Europe, ENCORE+ (European Network for Catalysing Open Resources in Education) seeks to strengthen the European OER ecosystem. Among its objectives is to create a network that connects universities, companies and public bodies to promote the adoption, reuse and quality of OER in Europe. ENCORE+ also promotes interoperability between platforms, metadata standardization and cooperation to ensure the quality of resources.

Other interesting European initiatives include EPALE (Electronic Platform for Adult Learning in Europe), an initiative of the European Commission aimed at specialists in adult education. The platform contains studies, reports and training materials, many of them under open licenses, which contributes to the dissemination and use of OER.

In addition, there are numerous projects that generate and make available open educational resources around the world. In the United States, OER Commons functions as a global repository of educational materials of different levels and subjects. This project uses Open Author, an online editor that makes it easy for teachers without advanced technical knowledge to create and customize digital educational resources directly on the platform.

Another outstanding project is Plan Ceibal, a public program in Uruguay that represents a model of technological inclusion for equal opportunities. In addition to providing access to technology, it generates and distributes OER in interoperable formats that are compatible with standards such as SCORM and accompanied by structured metadata, which facilitates their search, integration into learning platforms and reuse by teachers.

Along with initiatives such as these, there are others that, although they do not directly produce open educational resources, do encourage their creation and use through collaboration between teachers and students from different countries. This is the case for projects such as eTwinning and Global Classroom.

The strength of OER lies in their contribution to the democratization of knowledge, their collaborative nature, and their ability to promote innovative methodologies. By breaking down geographical, economic, and social barriers, open educational resources bring the right to education one step closer to becoming a universal reality.

The open data sector is very active. To help you keep up to date with everything that happens, at datos.gob.es we publish a regular compilation of news, covering new technological applications, legislative advances and other related developments.

Six months ago, we published the last compilation of 2024. On this occasion, we summarize some innovations, improvements and achievements of the first half of 2025.

Regulatory framework: new regulations that transform the landscape

One of the most significant developments is the publication of the Regulation on the European Health Data Space by the European Parliament and the Council. This regulation establishes a common framework for the secure exchange of health data between member states, facilitating both medical research and the provision of cross-border health services. In addition, this milestone represents a paradigm shift in the management of sensitive data, demonstrating that it is possible to reconcile privacy and data protection with the need to share information for the common good. The implications for the Spanish healthcare system are considerable, as it will allow greater interoperability with other European countries and facilitate the development of collaborative research projects.

On the other hand, the entry into force of the European AI Act establishes clear rules for the development of this technology, guaranteeing security, transparency and respect for human rights. These types of regulations are especially relevant in the context of open data, where algorithmic transparency and the explainability of AI models become essential requirements.

In Spain, the commitment to transparency is materialised in initiatives such as the new Digital Rights Observatory, which has the participation of more than 150 entities and 360 experts. This platform is configured as a space for dialogue and monitoring of digital policies, helping to ensure that the digital transformation respects fundamental rights.

Technological innovations in Spain and abroad

One of the most prominent milestones in the technological field is the launch of ALIA, the public infrastructure for artificial intelligence resources. This initiative seeks to develop open and transparent language models that promote the use of Spanish and Spanish co-official languages in the field of AI.

ALIA is not only a response to the hegemony of Anglo-Saxon models, but also a strategic commitment to technological sovereignty and linguistic diversity. The first models already available have been trained in Spanish, Catalan, Galician, Valencian and Basque, setting an important precedent in the development of inclusive and culturally sensitive technologies.

In relation to this innovation, the practical applications of artificial intelligence are multiplying in various sectors. For example, in the financial field, the Tax Agency has adopted an ethical commitment in the design and use of artificial intelligence. Within this framework, the community has even developed a virtual chatbot trained with its own data that offers legal guidance on fiscal and tax issues.

In the healthcare sector, a group of Spanish radiologists is working on a project for the early detection of oncological lesions using AI, demonstrating how the combination of open data and advanced algorithms can have a direct impact on public health.

Projects related to environmental sustainability also combine AI with open data. One model developed in Spain combines AI and open weather data to predict solar energy production over the next 30 years, providing crucial information for national energy planning.

Another relevant sector in terms of technological innovation is that of smart cities. In recent months, Las Palmas de Gran Canaria has digitized its municipal markets by combining WiFi networks, IoT devices, a digital twin and open data platforms. This comprehensive initiative seeks to improve the user experience and optimize commercial management, demonstrating how technological convergence can transform traditional urban spaces.

Zaragoza, for its part, has developed a vulnerability map using artificial intelligence applied to open data, providing a valuable tool for urban planning and social policies.

Another relevant case is the project of the Open Data Barcelona Initiative, #iCuida, which stands out as an innovative example of reusing open data to improve the lives of caregivers and domestic workers. This application demonstrates how open data can target specific groups and generate direct social impact.

Last but not least, at a global level, this semester DeepSeek has launched DeepSeek-R1, a new family of generative models specialized in reasoning, publishing both the models and their complete training methodology in open source, contributing to the democratic advancement of AI.

New open data portals and improvement tools

In all this maelstrom of innovation and technology, the landscape of open data portals has been enriched with new sectoral initiatives. The Association of Commercial and Property Registrars of Spain has presented its open data platform, allowing immediate access to registry data without waiting for periodic reports. This initiative represents a significant change in the transparency of the registry sector.

In the field of health, the 'I+Health' portal of the Andalusian public health system collects and disseminates resources and data on research activities and results from a single site, facilitating access to relevant scientific information.

In addition to the availability of data, there is a treatment that makes them more accessible to the general public: data visualization. The University of Granada has developed 'UGR in figures', an open-access space with an open data section that facilitates the exploration of official statistics and stands as a fundamental piece in university transparency.

On the other hand, IDENA, the new tool of the Navarre Geoportal, incorporates advanced functionalities to search, navigate, incorporate maps, share data and download geographical information, being operational on any device.

Training for the future: events and conferences

The training ecosystem in this field is strengthened every year with events such as the Data Management Summit in Tenerife, which addresses interoperability in public administrations and artificial intelligence. Another benchmark open data event, also held in the Canary Islands, was the National Open Data Meeting.

Beyond these events, collaborative innovation has also been promoted through specialized hackathons, such as the one dedicated to generative AI solutions for biodiversity or the Merkle Datathon in Gijón. These events not only generate innovative solutions, but also create communities of practice and foster emerging talent.

Once again, the open data competitions of Castilla y León and the Basque Country have awarded projects that demonstrate the transformative potential of the reuse of open data, inspiring new initiatives and applications.

International perspective and global trends: the fourth wave of open data

The Open Data Policy Lab spoke at the EU Open Data Days about what is known as the "fourth wave" of open data, closely linked to generative AI. This evolution represents a quantum leap in the way public data is processed, analyzed, and used, where natural language models allow for more intuitive interactions and more sophisticated analysis.

Overall, the open data landscape in 2025 reveals a profound transformation of the ecosystem, where the convergence between artificial intelligence, advanced regulatory frameworks, and specialized applications is redefining the possibilities of transparency and public innovation.

Artificial intelligence is no longer a thing of the future: it is here and can become an ally in our daily lives. From making tasks easier for us at work, such as writing emails or summarizing documents, to helping us organize a trip, learn a new language, or plan our weekly menus, AI adapts to our routines to make our lives easier. You don't have to be tech-savvy to take advantage of it; while today's tools are very accessible, understanding their capabilities and knowing how to ask the right questions will maximize their usefulness.

AI Passive and Active Subjects

Artificial intelligence applications are transforming our daily lives and already cover many areas of our routines. Virtual assistants, such as Siri or Alexa, are among the best-known tools that incorporate artificial intelligence, and are used to answer questions, schedule appointments, or control devices.

Many people use tools or applications with artificial intelligence on a daily basis, even if it operates imperceptibly to the user and does not require their intervention. Google Maps, for example, uses AI to optimize routes in real time, predict traffic conditions, suggest alternative routes or estimate the time of arrival. Spotify applies it to personalize playlists or suggest songs, and Netflix to make recommendations and tailor the content shown to each user.

But it is also possible to be an active user of artificial intelligence using tools that interact directly with the models. Thus, we can ask questions, generate texts, summarize documents or plan tasks. AI is no longer a hidden mechanism but a kind of digital co-pilot that assists us in our day-to-day lives. ChatGPT, Copilot or Gemini are tools that allow us to use AI without having to be experts. This makes it easier for us to automate daily tasks, freeing up time to spend on other activities.

AI in Home and Personal Life

Virtual assistants respond to voice commands and inform us what time it is, the weather or play the music we want to listen to. But their possibilities go much further, as they are able to learn from our habits to anticipate our needs. They can control different devices that we have in the home in a centralized way, such as heating, air conditioning, lights or security devices. It is also possible to configure custom actions that are triggered via a voice command. For example, a "good morning" routine that turns on the lights, informs us of the weather forecast and the traffic conditions.

When we have lost the manual of one of the appliances or electronic devices we have at home, artificial intelligence is a good ally. If we send it a photo of the device, it will help us interpret the instructions, set it up, or troubleshoot basic issues.

If you want to go further, AI can do some everyday tasks for you. Through these tools we can plan our weekly menus, indicating needs or preferences, such as dishes suitable for celiacs or vegetarians, prepare the shopping list and obtain the recipes. It can also help us choose between the dishes on a restaurant's menu taking into account our preferences and dietary restrictions, such as allergies or intolerances. Through a simple photo of the menu, the AI will offer us personalized suggestions.

Physical exercise is another area of our personal lives in which these digital co-pilots are very valuable. We can ask them, for example, to create exercise routines adapted to different physical conditions, goals and available equipment.

Planning a vacation is another of the most interesting features of these digital assistants. If we provide them with a destination, a number of days, interests, and even a budget, we will have a complete plan for our next trip.

Applications of AI in studies

AI is profoundly transforming the way we study, offering tools that personalize learning. Helping the little ones in the house with their schoolwork, learning a language or acquiring new skills for our professional development are just some of the possibilities.

There are platforms that generate personalized content in just a few minutes and didactic material made from open data that can be used both in the classroom and at home to review. Among university students or high school students, some of the most popular options are applications that summarize or make outlines from longer texts. It is even possible to generate a podcast from a file, which can help us understand and become familiar with a topic while playing sports or cooking.

But we can also create our applications to study or even simulate exams. Without having programming knowledge, it is possible to generate an application to learn multiplication tables, irregular verbs in English or whatever we can think of.

How to Use AI in Work and Personal Finance

In the professional field, artificial intelligence offers tools that increase productivity. In fact, it is estimated that in Spain 78% of workers already use AI tools in the workplace. By automating processes, we save time to focus on higher-value tasks. These digital assistants summarize long documents, generate specialized reports in a field, compose emails, or take notes in meetings.

Some platforms already incorporate the transcription of meetings in real time, something that can be very useful if we do not master the language. Microsoft Teams, for example, offers useful options through Copilot from the "Summary" tab of the meeting itself, such as transcription, a summary or the possibility of adding notes.

The management of personal finances has also evolved thanks to applications that use AI, allowing you to control expenses and manage a budget. But we can also create our own personal financial advisor using an AI tool, such as ChatGPT. If we provide it with information about our income, fixed and variable expenses, and savings goals, it analyzes the data and creates personalized financial plans.

Prompts and creation of useful applications for everyday life

We have seen the great possibilities that artificial intelligence offers us as a co-pilot in our day-to-day lives. But to make it a good digital assistant, we must know how to ask it and give it precise instructions.

A prompt is a basic instruction or request that is made to an AI model to guide it, with the aim of providing us with a coherent and quality response. Good prompting is the key to getting the most out of AI. It is essential to ask well and provide the necessary information.

To write effective prompts we have to be clear, specific, and avoid ambiguities. We must indicate what the objective is, that is, what we want the AI to do: summarize, translate, generate an image, etc. It is also key to provide it with context, explaining who it is aimed at or why we need it, as well as how we expect the response to be. This can include the tone of the message, the formatting, the sources used to generate it, etc.

Here are some tips for creating effective prompts:

- Use short, direct and concrete sentences. The clearer the request, the more accurate the answer. Avoid expressions such as "please" or "thank you", as they only add unnecessary noise and consume more resources. Instead, use words like "must," "do," "include," or "list." To reinforce the request, you can capitalize those words. These expressions are especially useful for fine-tuning a first response from the model that doesn't meet your expectations.

- Indicate the audience to which it is addressed. Specify whether the answer is aimed at an expert audience, inexperienced audience, children, adolescents, adults, etc. When we want a simple answer, we can, for example, ask the AI to explain it to us as if we were ten years old.

- Use delimiters. Separate the instructions using a symbol, such as slashes (//) or quotation marks, to help the model understand the instruction better. For example, if you want it to do a translation, use delimiters to separate the command ("Translate into English") from the phrase it is supposed to translate.

- Indicate the role that the model should adopt. Specify the role that the model should assume to generate the response. Telling it whether it should act like an expert in finance or nutrition, for example, will help generate more specialized answers, as it will adapt both the content and the tone.

- Break down complex requests into simple requests. If you're going to make a complex request that requires an excessively long prompt, it's a good idea to break it down into simpler steps. If you need detailed explanations, use expressions like "Think step by step" to get a more structured answer.

- Use examples. Include examples of what you're looking for in the prompt to guide the model to the answer.

- Provide positive instructions. Instead of asking them not to do or include something, state the request in the affirmative. For example, instead of "Don't use long sentences," say, "Use short, concise sentences." Positive instructions avoid ambiguities and make it easier for the AI to understand what it needs to do. This happens because negative prompts put extra effort on the model, as it has to deduce what the opposite action is.

- Offer tips or penalties. This serves to reinforce desired behaviors and restrict inappropriate responses. For example, "If you use vague or ambiguous phrases, you will lose 100 euros."

- Ask it to ask you what it needs. If we instruct it to ask us for additional information, we reduce the possibility of hallucinations, as we are improving the context of our request.

- Request that it respond like a human. If the texts seem too artificial or mechanical, specify in the prompt that the response should be more natural or should read as if it were written by a human.

- Provide the start of the answer. This simple trick is very useful in guiding the model towards the response we expect.

- Define the sources to use. If we narrow down the type of information it should use to generate the answer, we will get more refined answers. Ask, for example, that it only use data after a specific year.

- Request that it mimic a style. We can provide it with an example to make its response consistent with the style of the reference, or ask it to follow the style of a famous author.
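Several of the tips above (role, audience, direct instructions, positive rules, step-by-step reasoning and delimiters) can be combined mechanically. The following Python sketch is only an illustration under stated assumptions: the function name `build_prompt` and its parameters are hypothetical, and the code simply assembles a text string that could be pasted into any chat tool. It does not call any AI service.

```python
def build_prompt(task, text, role=None, audience=None, rules=(), step_by_step=False):
    """Assemble a prompt string from the tips above (hypothetical helper)."""
    parts = []
    if role:
        # Tip: indicate the role the model should adopt.
        parts.append(f"Act as {role}.")
    if audience:
        # Tip: indicate the audience the answer is aimed at.
        parts.append(f"The answer is aimed at {audience}.")
    # Tip: state the objective with a short, direct instruction.
    parts.append(task)
    for rule in rules:
        # Tip: phrase each rule as a positive instruction.
        parts.append(rule)
    if step_by_step:
        # Tip: ask for structured reasoning on complex requests.
        parts.append("Think step by step.")
    # Tip: use delimiters to separate the instruction from the text it applies to.
    parts.append(f"//{text}//")
    return "\n".join(parts)


prompt = build_prompt(
    task="Summarize the text between the slashes in three bullet points.",
    text="Open data is data that anyone can access, use and share.",
    role="an expert in open data",
    audience="readers with no technical background",
    rules=["Use short, concise sentences."],
)
print(prompt)
```

Keeping each tip as a separate optional argument makes it easy to refine a first response: if the answer is too technical, only the `audience` value needs to change, and the rest of the prompt stays the same.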

While it is possible to generate functional code for simple tasks and applications without programming knowledge, it is important to note that developing more complex or robust solutions at a professional level still requires programming and software development expertise. To create, for example, an application that helps us manage our pending tasks, we ask AI tools to generate the code, explaining in detail what we want it to do, how we expect it to behave, and what it should look like. From these instructions, the tool will generate the code and guide us to test, modify and implement it. We can ask it how and where to run the code for free and ask for help making improvements.
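To give an idea of the kind of code such a request might produce, here is a minimal sketch of a pending-task manager in Python. It is an assumption about what a tool could generate, not the output of any particular assistant, and the class and method names (`TaskList`, `add`, `complete`, `pending`) are hypothetical.

```python
class TaskList:
    """Minimal in-memory list of pending tasks (illustrative sketch)."""

    def __init__(self):
        # Each task is a dict with a title and a "done" flag.
        self._tasks = []

    def add(self, title):
        """Register a new pending task."""
        self._tasks.append({"title": title, "done": False})

    def complete(self, title):
        """Mark the first matching pending task as done; return True if found."""
        for task in self._tasks:
            if task["title"] == title and not task["done"]:
                task["done"] = True
                return True
        return False

    def pending(self):
        """Return the titles of the tasks that are still pending."""
        return [task["title"] for task in self._tasks if not task["done"]]


tasks = TaskList()
tasks.add("Buy groceries")
tasks.add("Book train tickets")
tasks.complete("Buy groceries")
print(tasks.pending())
```

A real application would also need to save the tasks between sessions and offer some kind of interface, which are exactly the sorts of improvements we can then ask the AI tool to help with.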

As we've seen, the potential of these digital assistants is enormous, but their true power lies in large part in how we communicate with them. Clear and well-structured prompts are the key to getting accurate answers without needing to be tech-savvy. AI not only helps us automate routine tasks, but also expands our capabilities, allowing us to do more in less time. These tools are redefining our day-to-day lives, making them more efficient and leaving us time for other things. And best of all: they are now within our reach.

Data science is all the rage. Professions related to this field are among the most in-demand, according to the latest study ‘Posiciones y competencias más Demandadas 2024’, carried out by the Spanish Association of Human Resources Managers. In particular, there is a significant demand for roles related to data management and analysis, such as Data Analyst, Data Engineer and Data Scientist. The rise of artificial intelligence (AI) and the need to make data-driven decisions are driving the integration of this type of professionals in all sectors.

Universities are aware of this situation and therefore offer a large number of degrees, postgraduate courses and also summer courses, both for beginners and for those who want to broaden their knowledge and explore new technological trends. Here are just a few examples of some of them. These courses combine theory and practice, allowing you to discover the potential of data.

1. Data Analysis and Visualisation: Practical Statistics with R and Artificial Intelligence. National University of Distance Education (UNED).

This seminar offers comprehensive training in data analysis with a practical approach. Students will learn to use the R language and the RStudio environment, with a focus on visualisation, statistical inference and its use in artificial intelligence systems. It is aimed at students from related fields and professionals from various sectors (such as education, business, health, engineering or social sciences) who need to apply statistical and AI techniques, as well as researchers and academics who need to process and visualise data.

- Date and place: from 25 to 27 June 2025 in online and face-to-face mode (in Plasencia).

2. Big Data. Data analysis and machine learning with Python. Complutense University.

Thanks to this training, students will be able to acquire a deep understanding of how data is obtained, managed and analysed to generate valuable knowledge for decision making. Among other issues, the life cycle of a Big Data project will be shown, including a specific module on open data. In this case, the language chosen for the training will be Python. No previous knowledge is required to attend: it is open to university students, teachers, researchers and professionals from any sector with an interest in the subject.

- Date and place: 30 June to 18 July 2025 in Madrid.

3. Challenges in Data Science: Big Data, Biostatistics, Artificial Intelligence and Communications. University of Valencia.

This programme is designed to help participants understand the scope of the data-driven revolution. Integrated within the Erasmus mobility programmes, it combines lectures, group work and an experimental lab session, all in English. Among other topics, open data, open source tools, Big Data databases, cloud computing, privacy and security of institutional data, text mining and visualisation will be discussed.

- Date and place: From 30 June to 4 July at two venues in Valencia. Note: Places are currently full, but the waiting list is open.

4. Digital twins: from simulation to intelligent reality. University of Castilla-La Mancha.

Digital twins are a fundamental tool for driving data-driven decision-making. With this course, students will be able to understand the applications and challenges of this technology in various industrial and technological sectors. Artificial intelligence applied to digital twins, high performance computing (HPC) and digital model validation and verification, among others, will be discussed. It is aimed at professionals, researchers, academics and students interested in the subject.

- Date and place: 3 and 4 July in Albacete.

5. Health Geography and Geographic Information Systems: practical applications. University of Zaragoza.

The differential aspect of this course is that it is designed for those students who are looking for a practical approach to data science in a specific sector such as health. It aims to provide theoretical and practical knowledge about the relationship between geography and health. Students will learn how to use Geographic Information Systems (GIS) to analyse and represent disease prevalence data. It is open to different audiences (from students or people working in public institutions and health centres, to neighbourhood associations or non-profit organisations linked to health issues) and does not require a university degree.

- Date and place: 7-9 July 2025 in Zaragoza.

6. Deep into data science. University of Cantabria.

Aimed at scientists, university students (from second year onwards) in engineering, mathematics, physics and computer science, this intensive course aims to provide a complete and practical vision of the current digital revolution. Students will learn about Python programming tools, machine learning, artificial intelligence, neural networks or cloud computing, among other topics. All topics are introduced theoretically and then experimented with in laboratory practice.

- Date and place: from 7 to 11 July 2025 in Camargo.

7. Advanced Programming. Autonomous University of Barcelona.

Taught entirely in English, the aim of this course is to improve students' programming skills and knowledge through practice. To do so, two games will be developed in two different languages, Java and Python. Students will be able to structure an application and program complex algorithms. It is aimed at students of any degree (mathematics, physics, engineering, chemistry, etc.) who have already started programming and want to improve their knowledge and skills.

- Date and place: 14 July to 1 August 2025, at a location to be defined.

8. Data visualisation and analysis with R. Universidade de Santiago de Compostela.

This course is aimed at beginners in the subject. It will cover the basic functionalities of R with the aim that students acquire the necessary skills to develop descriptive and inferential statistical analysis (estimation, contrasts and predictions). Search and help tools will also be introduced so that students can learn how to use them independently.

- Date and place: from 14 to 24 July 2025 in Santiago de Compostela.

9. Fundamentals of artificial intelligence: generative models and advanced applications. International University of Andalusia.

This course offers a practical introduction to artificial intelligence and its main applications. It covers concepts related to machine learning, neural networks, natural language processing, generative AI and intelligent agents. The language used will be Python, and although the course is introductory, it will be best used if the student has a basic knowledge of programming. It is therefore aimed primarily at undergraduate and postgraduate students in technical areas such as engineering, computer science or mathematics, professionals seeking to acquire AI skills to apply in their industries, and teachers and researchers interested in updating their knowledge of the state of the art in AI.

- Date and place: 19-22 August 2025, in Baeza.

10. Generative AI to innovate in the company: real cases and tools for its implementation. University of the Basque Country.

This course, open to the general public, aims to help understand the impact of generative AI in different sectors and its role in digital transformation through the exploration of real cases of application in companies and technology centres in the Basque Country. This will combine talks, panel discussions and a practical session focused on the use of generative models and techniques such as Retrieval-Augmented Generation (RAG) and Fine-Tuning.

- Date and place: 10 September in San Sebastian.

Investing in technology training during the summer is not only an excellent way to strengthen skills, but also to connect with experts, share ideas and discover opportunities for innovation. This selection is just a small sample of what's on offer. If you know of any other courses you would like to share with us, please leave a comment or write to dinamizacion@datos.gob.es

Open knowledge is knowledge that can be reused, shared and improved by other users and researchers without noticeable restrictions. This includes data, academic publications, software and other available resources. To explore this topic in more depth, we have representatives from two institutions whose aim is to promote scientific production and make it available in open access for reuse:

- Mireia Alcalá Ponce de León, Information Resources Technician of the Learning, Research and Open Science Area of the Consortium of University Services of Catalonia (CSUC).

- Juan Corrales Corrillero, Manager of the data repository of the Madroño Consortium.

Listen to the full podcast (only available in Spanish)

Summary / Transcript of the interview

1. Can you briefly explain what the institutions you work for do?

Mireia Alcalá: The CSUC is the Consortium of University Services of Catalonia and is an organisation that aims to help universities and research centres located in Catalonia to improve their efficiency through collaborative projects. We are talking about some 12 universities and almost 50 research centres.

We offer services in many areas: scientific computing, e-government, repositories, cloud administration, etc. and we also offer library and open science services, which is what we are closest to. In the area of learning, research and open science, which is where I am working, what we do is try to facilitate the adoption of new methodologies by the university and research system, especially in open science, and we provide support for research data management.

Juan Corrales: The Consorcio Madroño is a consortium of university libraries of the Community of Madrid and the UNED (National University of Distance Education) for library cooperation. We seek to increase the scientific output of the universities that are part of the consortium and also to increase collaboration between the libraries in other areas. We are also, like CSUC, very involved in open science: in promoting open science, in providing infrastructures that facilitate it, not only for the members of the Madroño Consortium, but also globally. Apart from that, we also provide other library services and create structures for them.

2. What are the requirements for research to be considered open?

Juan Corrales: For research to be considered open there are many definitions, but perhaps one of the most important is given by the National Open Science Strategy, which has six pillars.

One of them is that research data, publications, protocols, methodologies and so on must all be put in open access. In other words, everything must be accessible and, in principle, without barriers for everyone, not only for scientists, not only for universities that can pay for access to these research data or publications. It is also important to use open source platforms that we can customise. Open source is software that anyone, in principle with knowledge, can modify, customise and redistribute, in contrast to the proprietary software of many companies, which does not allow all these things. Another important point, although this is still far from being achieved in most institutions, is allowing open peer review, because it allows us to know who has done a review, with what comments, etc. It can be said that it allows the peer review cycle to be redone and improved. A final point is citizen science: allowing ordinary citizens to be part of science, not only within universities or research institutes.

And another important point is adding new ways of measuring the quality of science.

Mireia Alcalá: I agree with what Juan says. I would also like to add that, for a research process to be considered open, we have to look at it globally. That is, include the entire data lifecycle. We cannot say that science is open if we only look at whether the data at the end is open. Already at the beginning of the whole data lifecycle, it is important to use platforms and work in a more open and collaborative way.

3. Why is it important for universities and research centres to make their studies and data available to the public?

Mireia Alcalá: I think it is key that universities and centres share their studies, because a large part of research, both here in Spain and at European and world level, is funded with public money. Therefore, if society is paying for the research, it is only logical that it should also benefit from its results. In addition, opening up the research process can help make it more transparent, more accountable, etc. Much of the research done to date has been found to be neither reusable nor reproducible. What does this mean? That for almost 80% of the studies that have been done, someone else cannot take the data and reuse it. Why? Because they don't follow the same standards, the same methods, and so on. So, I think we have to extend this practice everywhere, and a clear example is in times of pandemics. With COVID-19, researchers from all over the world worked together, sharing data and findings in real time, working in the same way, and science was seen to be much faster and more efficient.

Juan Corrales: The key points have already been touched upon by Mireia. Besides, it could be added that bringing science closer to society can make all citizens feel that science is something that belongs to us, not just to scientists or academics. It is something we can participate in and this can also help to perhaps stop hoaxes, fake news, to have a more exhaustive vision of the news that reaches us through social networks and to be able to filter out what may be real and what may be false.

4. What research should be published openly?

Juan Corrales: Right now, according to the law we have in Spain, the latest Law of science, all publications that are mainly financed by public funds or in which public institutions participate must be published in open access. This did not really have much repercussion until last year: although the law came out two years ago, the previous law also said so, and there is also a law of the Community of Madrid that says the same thing, it is only since last year that it has been taken into account in the evaluation that the ANECA (the Quality Evaluation Agency) does on researchers. Since then, almost all researchers have made it a priority to publish their data and research openly. Publishing data, above all, was something that had not been done until now.

Mireia Alcalá: At the state level it is as Juan says. We at the regional level also have a law from 2022, the Law of science, which basically says exactly the same as the Spanish law. But I also like people to know that we have to take into account not only the state legislation, but also the calls for proposals from where the money to fund the projects comes from. Basically in Europe, in framework programmes such as Horizon Europe, it is clearly stated that, if you receive funding from the European Commission, you will have to make a data management plan at the beginning of your research and publish the data following the FAIR principles.

5. Among other issues, both CSUC and Consorcio Madroño are in charge of supporting entities and researchers who want to make their data available to the public. How should a process of opening research data be? What are the most common challenges and how do you solve them?

Mireia Alcalá: In our repository, which is called RDR (from Repositori de Dades de Recerca), it is basically the participating institutions that are in charge of supporting the research staff. The researcher arrives at the repository when he/she is already in the final phase of the research and needs to publish the data yesterday, and then everything is much more complex and time consuming. It takes longer to verify this data and make it findable, accessible, interoperable and reusable.

In our particular case, we have a checklist that we require every dataset to comply with to ensure this minimum data quality, so that it can be reused. We are talking about having persistent identifiers such as ORCID for the researcher or ROR to identify the institutions, having documentation explaining how to reuse that data, having a licence, and so on. Because we have this checklist, researchers improve their processes as they deposit and start to work on the quality of the data from the beginning. It is a slow process. The main challenge, I think, is for researchers to recognise that what they have is data, because most of them don't know it. Most researchers think of data as numbers from a machine that measures air quality, and are unaware that data can be a photograph, a film from an archaeological excavation, a sound captured in a certain atmosphere, and so on. Therefore, the main challenge is for everyone to understand what data is and that their data can be valuable to others.

And how do we solve it? Trying to do a lot of training, a lot of awareness raising. In recent years, the Consortium has worked to train data curation staff, who are dedicated to helping researchers directly refine this data. We are also starting to raise awareness directly with researchers so that they use the tools and understand this new paradigm of data management.
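As a rough illustration of the kind of checklist described above, the following Python sketch tests whether a dataset record carries an ORCID, a ROR, reuse documentation and a licence. It is not the RDR checklist itself: the field names (`author_orcid`, `institution_ror`, `readme`, `licence`) are hypothetical, and the only real rule it encodes is the public ORCID check-digit algorithm (ISO 7064 MOD 11-2).

```python
import re

# ORCID iDs are written as four groups of four characters; the last
# character is a check digit that may be "X".
ORCID_PATTERN = re.compile(r"^\d{4}-\d{4}-\d{4}-\d{3}[\dX]$")


def orcid_is_valid(orcid: str) -> bool:
    """Check the format and the ISO 7064 MOD 11-2 check digit of an ORCID iD."""
    if not ORCID_PATTERN.match(orcid):
        return False
    digits = orcid.replace("-", "")
    total = 0
    for ch in digits[:-1]:
        total = (total + int(ch)) * 2
    result = (12 - total % 11) % 11
    expected = "X" if result == 10 else str(result)
    return digits[-1] == expected


def missing_checklist_items(dataset: dict) -> list[str]:
    """Return the (hypothetical) checklist fields that are absent or invalid."""
    problems = []
    if not orcid_is_valid(str(dataset.get("author_orcid", ""))):
        problems.append("author_orcid")
    # For the remaining fields this sketch only checks that something is present.
    for field in ("institution_ror", "readme", "licence"):
        if not str(dataset.get(field, "")).strip():
            problems.append(field)
    return problems
```

A curator could run `missing_checklist_items(record)` before review and send the resulting list back to the researcher; an empty list means the minimum items are present, not that the data is of good quality.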

Juan Corrales: In the Madroño Consortium, until November, the only way to open data was for researchers to submit a form with the data and its metadata to the librarians, and it was the librarians who uploaded it to ensure that it was FAIR. Since November, we also allow researchers to upload data directly to the repository, but it is not published until it has been reviewed by expert librarians, who verify that the data and metadata are of high quality. It is very important that the data is well described so that it can be easily found, identified and reused.

As for the challenges, there are all those mentioned by Mireia - that researchers often do not know they have data. In addition, although ANECA has helped a lot with the new obligations to publish research data, many researchers want to rush their data into the repositories without taking into account that it has to be quality data. It is not enough to put the data there; what matters is that it can be reused later.

6. What activities and tools do you or similar institutions provide to help organisations succeed in this task?

Juan Corrales: From Consorcio Madroño, the repository itself that we use, the tool where the research data is uploaded, makes it easy to make the data FAIR, because it already provides unique identifiers, fairly comprehensive metadata templates that can be customised, and so on. We also have another tool that helps create the data management plans for researchers, so that before they create their research data, they start planning how they're going to work with it. This is very important and has been promoted by European institutions for a long time, as well as by the Science Act and the National Open Science Strategy.

Then, more than the tools, the review by expert librarians is also very important. There are other tools that help assess the quality of a dataset, of research data, such as FAIR EVA or F-UJI. What we have found, though, is that in the end those tools mostly evaluate the quality of the repository, of the software being used, and of the requirements placed on researchers when they upload metadata, because all our datasets get a fairly high and quite similar score. So what those tools do help us with is improving both the requirements that we place on our datasets and the tools that we have, in this case the Dataverse software, which is the one we are using.

Mireia Alcalá: At the level of tools and activities we are on a par, because we have had a relationship with the Madroño Consortium for years, and just like them we have all these tools that help put the data in the best possible shape right from the start, for example, the tool for making data management plans. Here at CSUC we have also been working very intensively in recent years to close this gap in the data life cycle, covering issues of infrastructures, storage, cloud, etc. so that, when the data is analysed and managed, researchers also have a place to go. After the repository, we move on to all the channels and portals that make it possible to disseminate and make all this science visible, because it doesn't make sense for us to build repositories that sit in a silo; they have to be interconnected. For many years now, a lot of work has been done on interoperability protocols and on following the same standards. Therefore, data has to be available elsewhere, and both Consorcio Madroño and we are present wherever possible.

7. Can you tell us a bit more about these repositories you offer? In addition to helping researchers to make their data available to the public, you also offer a space, a digital repository where this data can be housed, so that it can be located by users.

Mireia Alcalá: If we are talking specifically about research data, as we and Consorcio Madroño have the same repository, we are going to let Juan explain the software and specifications, and I am going to focus on other repositories of scientific production that CSUC also offers. Here what we do is coordinate different cooperative repositories according to the type of resource they contain. So, we have TDX for theses, RECERCAT for research papers, RACO for scientific journals and MACO for open access monographs. Depending on the type of product, we have a specific repository, because not everything can be in the same place, as each research output has its own particularities. Apart from the repositories, which are cooperative, we also have other spaces that we build for specific institutions, either with a more standard solution or with some more customised functionalities. But basically it is this: for each type of research output, we have a specific repository that adapts to the particularities of that format.

Juan Corrales: In the case of Consorcio Madroño, our repository is called e-scienceData, but it is based on the same software as the CSUC repository, which is Dataverse. It is open source software, so it can be improved and customised. Although in principle the development is managed from Harvard University in the United States, institutions from all over the world are participating in its development - perhaps thirty-odd countries have already taken part.

Among other things, for example, the translation into Catalan has been done by CSUC, the translation into Spanish has been done by Consorcio Madroño and we have also participated in other small developments. The advantage of this software is that it makes it much easier for the data to be FAIR and compatible with other portals that have much more visibility. CSUC is much larger, but in the Madroño Consortium there are six universities, and it is rare that someone goes to look for a dataset directly in the Madroño Consortium, in e-scienceData. They usually search for it via Google or a European or international portal. With these facilities that Dataverse has, they can search from anywhere and end up finding the data that we have at Consorcio Madroño or at CSUC.

8. What other platforms with open research data, at Spanish or European level, do you recommend?

Juan Corrales: For example, at the Spanish level there is the FECYT, the Spanish Foundation for Science and Technology, which has a service that collects the research data of practically all Spanish institutions. All the publications of all the institutions appear there: Consorcio Madroño, CSUC and many more.

Then, specifically for research data, there is a lot of research that should be put in a thematic repository, because that's where researchers in that branch of science are going to look. We have a tool to help choose the thematic repository. At the European level there is Zenodo, which has a lot of visibility, but does not have the data quality support of CSUC or the Madroño Consortium. And that is something that is very noticeable in terms of reuse afterwards.

Mireia Alcalá: At the national level, apart from Consorcio Madroño's and our own initiatives, data repositories are not yet widespread. We are aware of some initiatives under development, but it is still too early to see their results. However, I do know of some universities that have adapted their institutional repositories so that they can also add data. And while this is a valid solution for those who have no other choice, it has been found that repository software that is not designed to handle the particularities of data - such as heterogeneity, format diversity, large size, etc. - falls a bit short. Then, as Juan said, at the European level, Zenodo is established as the multidisciplinary and multiformat repository, which was born as a result of a European project of the Commission. I agree with him that, as it is a self-archiving and self-publishing repository - that is, I, Mireia Alcalá, can go there in five minutes, put any document I have there, nobody has looked at it, I fill in the minimum metadata they ask me for and I publish it -, it is clear that the quality is very variable. There are some things that are really usable and perfect, but there are others that need a little more TLC. As Juan said, at the disciplinary level it is also important to highlight that, in all those areas that have a disciplinary repository, researchers have to go there, because that is where they will be able to use the most appropriate metadata, where everybody will work in the same way, and where everybody will know where to look for those data. For anyone who is interested there is a directory called re3data, which is basically a directory of all these multidisciplinary and disciplinary repositories. It is therefore a good resource for anyone who does not know what is available in their discipline.

9. What actions do you consider to be priorities for public institutions in order to promote open knowledge?

Mireia Alcalá: What I would basically say is that public institutions should focus on establishing clear policies on open science, because it is true that we have come a long way in recent years, but there are times when researchers are a bit bewildered. And apart from policies, it is above all about offering incentives to the entire research community, because there are many people who are making the effort to change their way of working to embrace open science, and sometimes they don't see how all that extra effort pays off. So I would say this: policies and incentives.

Juan Corrales: From my point of view, the policies that we already have on paper at the national level and at the regional level are usually quite correct, quite good. The problem is that often no attempt has been made to enforce them. From what we have seen, for example, until ANECA promoted the use of data repositories and research article repositories, they had not really started to be used on a massive scale. In other words, incentives are necessary, and not just obligations. As Mireia has also said, we have to convince researchers to see open publishing as their own, as it is something that benefits both them and society as a whole. What I think is most important is that: the awareness of researchers.

Subscribe to our Spotify profile

Interview clips

1. Why should universities and researchers share their studies in open formats?

2. What requirements must research meet in order to be considered open?

How can public administrations harness the value of data? This question is not a simple one to address; its answer is conditioned by several factors that have to do with the context of each administration, the data available to it and the specific objectives set.

However, there are reference guides that can help define a path to action. One of them is the Data Innovation Toolkit, published by the European Commission through the EU Publications Office, which emerges as a strategic compass to navigate this complex data innovation ecosystem.

This tool is more than a simple manual, as it includes templates that make the process easier to implement. Aimed at a variety of profiles, from novice analysts to experienced policy makers and technology innovators, the Data Innovation Toolkit is a useful resource that accompanies you through the process, step by step.

It aims to democratise data-driven innovation by providing a structured framework that goes beyond the mere collection of information. In this post, we will analyse the contents of the European guide, as well as the references it provides for good innovative use of data.

Structure covering the data lifecycle

The guide is organised in four main steps, which address the entire data lifecycle.

-

Planning

The first part of the guide focuses on establishing a strong foundation for any data-driven innovation project. Before embarking on any process, it is important to define objectives. To do so, the Data Innovation Toolkit suggests a deep reflection that requires aligning the specific needs of the project with the strategic objectives of the organisation. In this step, stakeholder mapping is also key. This implies a thorough understanding of the interests, expectations and possible contributions of each actor involved. This understanding enables the design of engagement strategies that maximise collaboration and minimise potential conflicts.