The Basque Government announces the fifth edition of the awards for the best open data reuse projects in the Basque Country. The initiative was created to reward the best ideas and applications/services built from the open data catalogue of the Basque Country (Open Data Euskadi), to show its potential and to promote open data culture.

As in previous editions, there are two types of prizes: an ideas competition and an applications competition. In the first, a total of €13,500 in prize money will be awarded. In the second, the prize money amounts to €21,000.

Below are the details of the call for proposals for each of the modalities:

Competition of ideas

Proposals for services, studies, visualisations and applications (web and mobile) that reuse open datasets from the Open Data Euskadi portal to provide value to society will be evaluated. Ideas may be of general utility or focus on one of two sectors: health and social or environment and sustainability.

- Who is it aimed at? To all those people or companies from inside and outside the Basque Country who wish to present ideas and projects for the reuse of open data from the Basque Country. This modality does not require technical knowledge of programming or computer development.

- How can you take part? It will be necessary to explain the idea in a text document and attach it when registering. Registration can be done either online or in person.

- What prizes are on offer? Two winning projects will be chosen in each category: the first receives a prize of 3,000 euros and the second a prize of 1,500 euros. In summary, the awards are:

- Health and social category

- First prize: €3,000

- Second prize: €1,500

- Environment and sustainability category

- First prize: €3,000

- Second prize: €1,500

- General category

- First prize: €3,000

- Second prize: €1,500

Here you can read the rules of the Open Data Euskadi ideas competition: https://www.euskadi.eus/servicios/1028505

Application competition

This modality does require some technical knowledge of programming or computer development, as already developed solutions using Open Data Euskadi open datasets must be presented. Applications may be submitted in the general category or in the specific category of web services.

- Who is it aimed at? To those people or companies capable of creating services, studies, visualisations, web applications or applications for mobile devices that use at least one set of open data from one of the Basque Country's open data catalogues.

- How can you take part? The project must be explained in a text document and the developed project (service, study, visualisation, web or mobile application) must be accessible via a URL. When registering, both the explanatory document and the URL of the project must be attached.

- What prizes are on offer? This category offers a single prize of 8,000 euros for the web services category and two prizes for the general category of 8,000 and 5,000 euros.

- Web services category

- Only one prize: €8,000

- General category

- First prize: €8,000

- Second prize: €5,000

Check here the rules of the competition in application development mode: https://www.euskadi.eus/servicios/1028605

Deadline for registration:

The competition has been accepting proposals since 31 July and closes on 10 October. Follow us on social media so you don't miss any news about open data reuse events and competitions: @datosgob

Take part!

The Junta de Castilla y León has just launched a new edition of its open data competition. In doing so, it seeks to recognise the implementation of projects using datasets from its Open Data Portal. The call for applications will be open until the end of September, so you can take advantage of the remaining weeks of summer to submit your application.

What does the competition consist of?

The objective of the 8th Open Data Competition is to recognise the implementation of projects using open data in four categories:

- "Ideas" category: participants will have to describe an idea to create studies, services, websites or applications for mobile devices.

- "Products and Services" category: studies, services, websites or applications for mobile devices, which must be accessible to all citizens via the web through a URL, will be awarded.

- "Didactic Resource" category: consists of the creation of new and innovative open didactic resources to support classroom teaching. These resources must be published under Creative Commons licences.

- "Data Journalism" category: journalistic pieces published or updated (in a relevant way) in both written and audiovisual media are sought.

All categories have one thing in common: the project must use at least one dataset from the Junta de Castilla y León's Open Data portal. These datasets can be combined, if the authors so wish, with other data sources, private or public, from any level of administration.

Who can participate?

The competition is open to any natural or legal person who has carried out a project and meets the requirements of each category. Neither public administrations nor those persons who have collaborated directly or indirectly in the preparation of the regulatory bases and the call for applications may participate.

You can participate as an individual or in a group. In addition, the same person may submit more than one application for the same or different categories. The same project can also be submitted in several categories, although it can only be awarded in one category.

What do the prizes consist of?

A jury will evaluate the proposals received on the basis of a series of criteria, including their usefulness, economic value, originality and quality. Once all the projects have been evaluated, the winners will be announced. They will receive a diploma, open data consultancy and the following prize money:

- Category Ideas.

- First prize: €1,500.

- Second prize: €500.

- Category Products and services. In this case, a special award for students has also been created, aimed at people enrolled in the 2023/2024 and 2024/2025 academic years, both in university and non-university education, provided that it is official.

- First prize: €2,500.

- Second prize: €1,500.

- Third prize: €500.

- Student Prize: €1,500.

- Category Didactic resource.

- First prize: €1,500.

- Category Data Journalism.

- First prize: €1,500.

- Second prize: €1,000.

In addition, the winning entries will be disseminated and promoted through the Open Data Portal of Castilla y León and other media of the Administration.

What are the deadlines?

The deadline for receiving applications opened on 23 July 2024, one day after the publication of the rules in the Official Gazette of Castilla y León. Participants will have until 23 September 2024 to submit their applications.

How can I participate?

Applications can be submitted in person or electronically.

- In person: at the General Registry of the Regional Ministry of the Presidency, the Registry Assistance Offices of the Regional Government of Castilla y León or at any of the places established in article 16.4 of Law 39/2015.

- Electronic: through the electronic office.

Applications should include information on:

- Author(s) of the project.

- Project title.

- Category or categories for which you are applying.

- Project report, with a maximum length of 1,000 words.

You can find all the detailed information on the website, which includes the competition rules.

With this new edition, the Castilla y León Data Portal reaffirms its commitment not only to the publication of open data, but also to the promotion of its reuse. Such actions are a showcase to promote examples of the use of open data in different fields. You can see last year's winning projects in this article.

Come and take part!

1. Introduction

Visualizations are graphical representations of data that allow for the simple and effective communication of information linked to them. The possibilities for visualization are very broad, from basic representations such as line graphs, bar charts or relevant metrics, to visualizations configured on interactive dashboards.

In this section, "Visualizations step by step", we periodically present practical exercises using open data available on datos.gob.es or other similar catalogs. They describe, in a simple way, the steps needed to obtain the data, perform the relevant transformations and analyses, and finally draw conclusions that summarize the information.

Each practical exercise uses documented code developments and free-to-use tools. All generated material is available for reuse in the GitHub repository of datos.gob.es.

In this specific exercise, we will explore tourist flows at a national level, creating visualizations of tourists moving between autonomous communities (CCAA) and provinces.

Access the data laboratory repository on Github.

Execute the data pre-processing code on Google Colab.

In this video, the author explains what you will find on both Github and Google Colab.

2. Context

Analyzing national tourist flows allows us to observe certain well-known movements, such as, for example, that the province of Alicante is a very popular summer tourism destination. In addition, this analysis is interesting for observing trends in the economic impact that tourism may have, year after year, in certain CCAA or provinces. The article on experiences for the management of visitor flows in tourist destinations illustrates the impact of data in the sector.

3. Objective

The main objective of the exercise is to create interactive visualizations in Python that present complex information in a comprehensible and attractive way. This objective will be met using an open dataset that contains information on national tourist flows, posing several questions about the data and answering them graphically. We will be able to answer questions such as those posed below:

- In which CCAA is there more tourism from the same CA?

- Which CA is the one that leaves its own CA the most?

- What differences are there between tourist flows throughout the year?

- Which Valencian province receives the most tourists?

The understanding of the proposed tools will provide the reader with the ability to modify the code contained in the notebook that accompanies this exercise to continue exploring the data on their own and detect more interesting behaviors from the dataset used.

In order to create interactive visualizations and answer questions about tourist flows, a data cleaning and reformatting process will be necessary, which is described in the notebook that accompanies this exercise.

4. Resources

Dataset

The open dataset used contains information on tourist flows in Spain at the CCAA and provincial level, also indicating the total values at the national level. The dataset has been published by the National Institute of Statistics, through various types of files. For this exercise we only use the .csv file separated by ";". The data dates from July 2019 to March 2024 (at the time of writing this exercise) and is updated monthly.

Number of tourists by CCAA and destination province disaggregated by PROVINCE of origin

The dataset is also available for download in this Github repository.

Analytical tools

The Python programming language has been used for data cleaning and visualization creation. The code created for this exercise is made available to the reader through a Google Colab notebook.

The Python libraries we will use to carry out the exercise are:

- pandas: a library used for data analysis and manipulation.

- holoviews: a library for creating interactive visualizations that combines the functionalities of other libraries such as Bokeh and Matplotlib.

5. Exercise development

To interactively visualize data on tourist flows, we will create two types of diagrams: chord diagrams and Sankey diagrams.

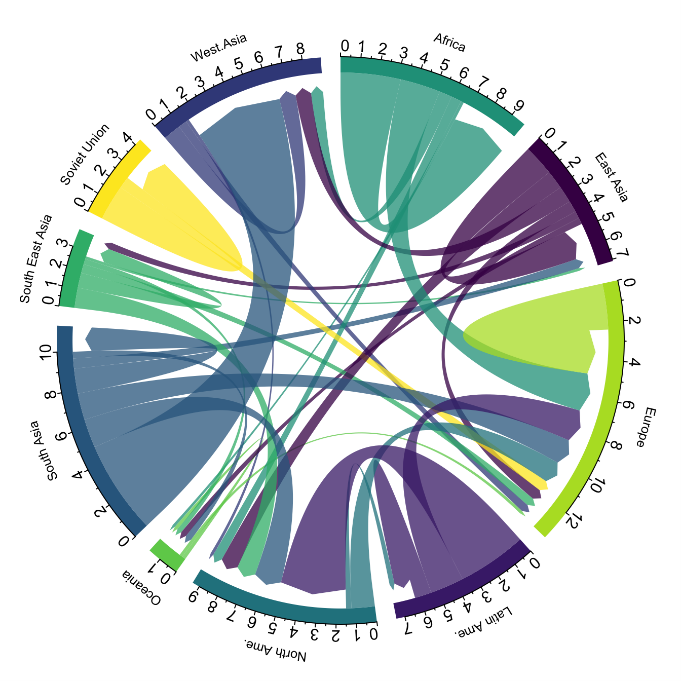

Chord diagrams are a type of diagram composed of nodes and edges, see Figure 1. The nodes are located in a circle and the edges symbolize the relationships between the nodes of the circle. These diagrams are usually used to show types of flows, for example, migratory or monetary flows. The different volume of the edges is visualized in a comprehensible way and reflects the importance of a flow or a node. Due to its circular shape, the chord diagram is a good option to visualize the relationships between all the nodes in our analysis (many-to-many type relationship).

Figure 1. Chord Diagram (Global Migration). Source.

Sankey diagrams, like chord diagrams, are a type of diagram composed of nodes and edges, see Figure 2. The nodes are represented at the margins of the visualization, with the edges between the margins. Due to this linear grouping of nodes, Sankey diagrams are better than chord diagrams for analyses in which we want to visualize the relationship between:

- several nodes and other nodes (many-to-many, or many-to-few, or vice versa)

- several nodes and a single node (many-to-one, or vice versa)

Figure 2. Sankey Diagram (UK Internal Migration). Source.

The exercise is divided into five parts, plus a part 0 ("initial configuration") that only sets up the programming environment. Below, we describe the five parts and the steps carried out.

5.1. Load data

This section can be found in point 1 of the notebook.

In this part, we load the dataset to process it in the notebook. We check the format of the loaded data and create a pandas.DataFrame that we will use for data processing in the following steps.
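As a rough illustration of this loading step, the sketch below reads a ";"-separated file with pandas. It is not the notebook's code: the two sample rows are modelled on Figure 3, the English column names are placeholders, and we assume that totals use "." as a thousands separator, as the figure suggests.

```python
import io

import pandas as pd

# Two rows modelled on Figure 3. The English column names are placeholders;
# the real file may use different (Spanish) headers.
SAMPLE_CSV = """Province of origin;Province of origin;CCAA and destination province;CCAA and destination province;CCAA and destination province;Tourist concept;Period;Total
National Total;;National Total;;;Tourists;2024M03;13.731.096
National Total;Ourense;National Total;Andalucía;Almería;Tourists;2024M03;373
"""

df = pd.read_csv(
    io.StringIO(SAMPLE_CSV),  # replace with the path or URL of the real file
    sep=";",                  # the file is separated by ";"
    thousands=".",            # assumption: "13.731.096" means 13731096
    decimal=",",              # keep the decimal marker distinct from the thousands marker
)

print(df.dtypes)
print(df.head())
```

Note that pandas renames repeated column headers by appending ".1", ".2" and so on, which is one reason to rename the columns during cleaning.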

5.2. Initial data exploration

This section can be found in point 2 of the notebook.

In this part, we perform an exploratory data analysis to understand the format of the dataset we have loaded and to have a clearer idea of the information it contains. Through this initial exploration, we can define the cleaning steps we need to carry out to create interactive visualizations.
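A first exploration of this kind might look like the following sketch. The small DataFrame and its column names are stand-ins for the loaded dataset, and the third row is invented for illustration.

```python
import pandas as pd

# Stand-in for the loaded dataset; column names are hypothetical
# and the last row is an invented value.
df = pd.DataFrame(
    {
        "origin_province": [None, "Ourense", "Ourense"],
        "dest_province": [None, "Almería", "Almería"],
        "Period": ["2024M03", "2024M03", "2023M08"],
        "Total": [13731096, 373, 410],
    }
)

# Column types, missing values per column and the periods covered.
print(df.dtypes)
missing_per_column = df.isna().sum()
periods = sorted(df["Period"].unique())

print(missing_per_column)
print(periods)
```

Checks like these help reveal which rows are aggregates (empty province cells) and which time periods are available.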

If you want to learn more about how to approach this task, you have at your disposal this introductory guide to exploratory data analysis.

5.3. Data format analysis

This section can be found in point 3 of the notebook.

In this part, we summarize the observations we have been able to make during the initial data exploration. We recapitulate the most important observations here:

| Province of origin | Province of origin | CCAA and destination province | CCAA and destination province | CCAA and destination province | Tourist concept | Period | Total |
|---|---|---|---|---|---|---|---|
| National Total | | National Total | | | Tourists | 2024M03 | 13.731.096 |
| National Total | Ourense | National Total | Andalucía | Almería | Tourists | 2024M03 | 373 |

Figure 3. Fragment of the original dataset.

We can observe in columns one to four that the origins of tourist flows are disaggregated by province, while for destinations, provinces are aggregated by CCAA. We will take advantage of the mapping of CCAA and their provinces that we can extract from the fourth and fifth columns to aggregate the origin provinces by CCAA.

We can also see that the information contained in the first column is sometimes superfluous, so we will combine it with the second column. In addition, we have found that the fifth and sixth columns do not add value to our analysis, so we will remove them. We will rename some columns to have a more comprehensible pandas.DataFrame.
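The mapping idea described above can be sketched as follows. This is an illustration under assumptions, not the notebook's implementation: the column names are hypothetical and all rows except the Ourense to Almería flow are invented.

```python
import pandas as pd

# Hypothetical column names; only the Ourense -> Almería row comes from Figure 3.
df = pd.DataFrame(
    {
        "origin_province": [None, "Ourense", "Almería", "Ourense"],
        "dest_ccaa": [None, "Andalucía", "Galicia", "Galicia"],
        "dest_province": [None, "Almería", "Ourense", "Ourense"],
        "total": [13731096, 373, 120, 5000],
    }
)

# Build a province -> CCAA lookup from the destination columns...
pairs = df[["dest_province", "dest_ccaa"]].dropna().drop_duplicates()
province_to_ccaa = dict(zip(pairs["dest_province"], pairs["dest_ccaa"]))

# ...and apply it to the origin provinces, which lack a CCAA column.
df["origin_ccaa"] = df["origin_province"].map(province_to_ccaa)

print(province_to_ccaa)
print(df[["origin_ccaa", "origin_province"]])
```

Rows without an origin province (the national totals) simply keep a missing value in the new column.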

5.4. Data cleaning

This section can be found in point 4 of the notebook.

In this part, we carry out the necessary steps to better format our data. For this, we take advantage of several functionalities that pandas offers us, for example, to rename the columns. We also define a reusable function that we need to concatenate the values of the first and second columns with the aim of not having a column that exclusively indicates "National Total" in all rows of the pandas.DataFrame. In addition, we will extract from the destination columns a mapping of CCAA to provinces that we will apply to the origin columns.
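A minimal sketch of these cleaning steps is shown below. The helper `coalesce` and all intermediate column names are hypothetical, and the origin CCAA is assumed to have been derived already from the destination mapping.

```python
import pandas as pd

# Hypothetical intermediate state of the data (two rows from Figure 3).
raw = pd.DataFrame(
    {
        "origin_total": ["National Total", "National Total"],
        "origin_province": [None, "Ourense"],
        "origin_ccaa": [None, "Galicia"],  # assumed to be mapped beforehand
        "concept": ["Tourists", "Tourists"],
        "period": ["2024M03", "2024M03"],
        "total": [13731096, 373],
    }
)


def coalesce(preferred: pd.Series, fallback: pd.Series) -> pd.Series:
    """Use the preferred value where present, otherwise the fallback."""
    return preferred.fillna(fallback)


clean = (
    raw.assign(Origin=coalesce(raw["origin_ccaa"], raw["origin_total"]))
    # Drop columns that carry no information for the analysis.
    .drop(columns=["origin_total", "origin_ccaa", "concept"])
    .rename(
        columns={
            "origin_province": "Province of origin",
            "period": "Period",
            "total": "Total",
        }
    )[["Origin", "Province of origin", "Period", "Total"]]
)

print(clean)
```

The same `coalesce` helper could be reused for the destination columns, which are omitted here to keep the example short.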

We want to obtain a more compressed version of the dataset with greater transparency of the column names and that does not contain information that we are not going to process. The final result of the data cleaning process is the following:

| Origin | Province of origin | Destination | Province of destination | Period | Total |
|---|---|---|---|---|---|
| National Total | | National Total | | 2024M03 | 13731096.0 |
| Galicia | Ourense | Andalucía | Almería | 2024M03 | 373.0 |

Figure 4. Fragment of the clean dataset.

5.5. Create visualizations

This section can be found in point 5 of the notebook.

In this part, we create our interactive visualizations using the Holoviews library. In order to draw chord or Sankey diagrams that visualize the flow of people between CCAA, or between CCAA and provinces, we have to structure our data as nodes and edges. In our case, the nodes are the names of the CCAA or provinces, and the edges, that is, the relationships between the nodes, carry the number of tourists. In the notebook we define a function to obtain the nodes and edges that we can reuse for the different diagrams we want to make, changing the time period according to the season of the year we are interested in analyzing.
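A function of this kind could be sketched as follows. The name `build_nodes_and_edges`, the column names and the sample totals are assumptions for illustration and may differ from the notebook.

```python
import pandas as pd

# Rows shaped like the clean dataset in Figure 4; the totals are invented.
clean = pd.DataFrame(
    [
        ("Madrid", "Valencian Community", "2023M08", 100),
        ("Madrid", "Valencian Community", "2023M08", 50),
        ("Andalucía", "Andalucía", "2023M08", 70),
        ("Madrid", "Andalucía", "2024M03", 30),
        ("National Total", "National Total", "2023M08", 999),
    ],
    columns=["Origin", "Destination", "Period", "Total"],
)


def build_nodes_and_edges(df, period):
    """Return (nodes, edges) for one period, aggregated by CCAA."""
    is_total = (df["Origin"] == "National Total") | (
        df["Destination"] == "National Total"
    )
    flows = df[(df["Period"] == period) & ~is_total]
    # One edge per (origin, destination) pair, weighted by tourists.
    edges = (
        flows.groupby(["Origin", "Destination"], as_index=False)["Total"]
        .sum()
        .rename(
            columns={"Origin": "source", "Destination": "target", "Total": "value"}
        )
    )
    names = sorted(set(edges["source"]) | set(edges["target"]))
    nodes = pd.DataFrame({"name": names})
    return nodes, edges


nodes, edges = build_nodes_and_edges(clean, "2023M08")
print(nodes)
print(edges)
```

Changing the `period` argument is enough to rebuild the nodes and edges for another month.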

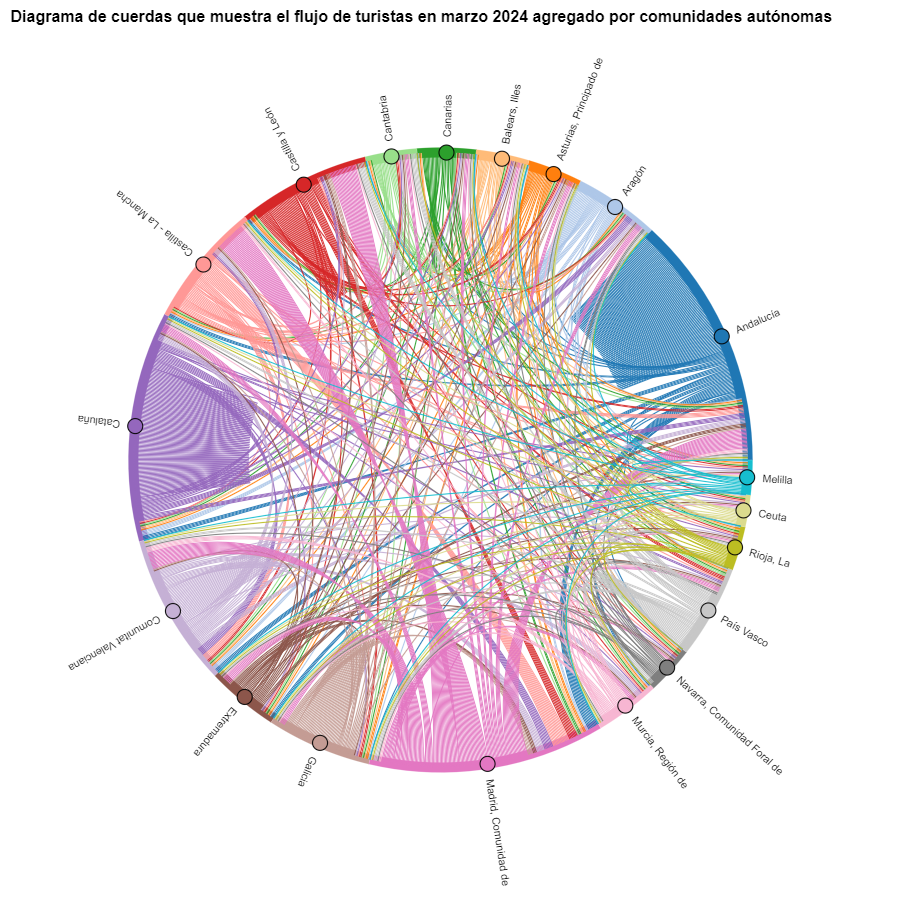

We will first create a chord diagram using exclusively data on tourist flows from March 2024. In the notebook, this chord diagram is dynamic. We encourage you to try its interactivity.

Figure 5. Chord diagram showing the flow of tourists in March 2024 aggregated by autonomous communities.

The chord diagram visualizes the flow of tourists between all CCAA. Each CA has a color and the movements made by tourists from this CA are symbolized with the same color. We can observe that tourists from Andalucía and Catalonia travel a lot within their own CCAA. On the other hand, tourists from Madrid leave their own CA a lot.
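A chord diagram like this one could be drawn roughly as sketched below. The edge values are invented, `draw_chord` is a hypothetical helper, and the styling options follow the pattern used in the HoloViews documentation rather than the notebook's exact settings. HoloViews is imported inside the function so that the data preparation can run without it.

```python
import pandas as pd

# Invented edge list in the source/target/value layout used for the diagrams.
edges = pd.DataFrame(
    {
        "source": ["Andalucía", "Andalucía", "Madrid", "Madrid"],
        "target": ["Andalucía", "Madrid", "Madrid", "Andalucía"],
        "value": [80, 20, 10, 90],
    }
)


def draw_chord(edges):
    """Build an interactive chord diagram (requires holoviews and bokeh)."""
    import holoviews as hv
    from holoviews import opts

    hv.extension("bokeh")
    chord = hv.Chord(edges)
    # Color each node, and the edges leaving it, by the node name.
    return chord.opts(
        opts.Chord(
            labels="index",
            node_color="index",
            edge_color="source",
            cmap="Category20",
            edge_cmap="Category20",
        )
    )


# In a notebook, the diagram renders when it is the last expression of a cell:
# draw_chord(edges)
```

Coloring edges by their source is what makes the movements of tourists from one CA share that CA's color.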

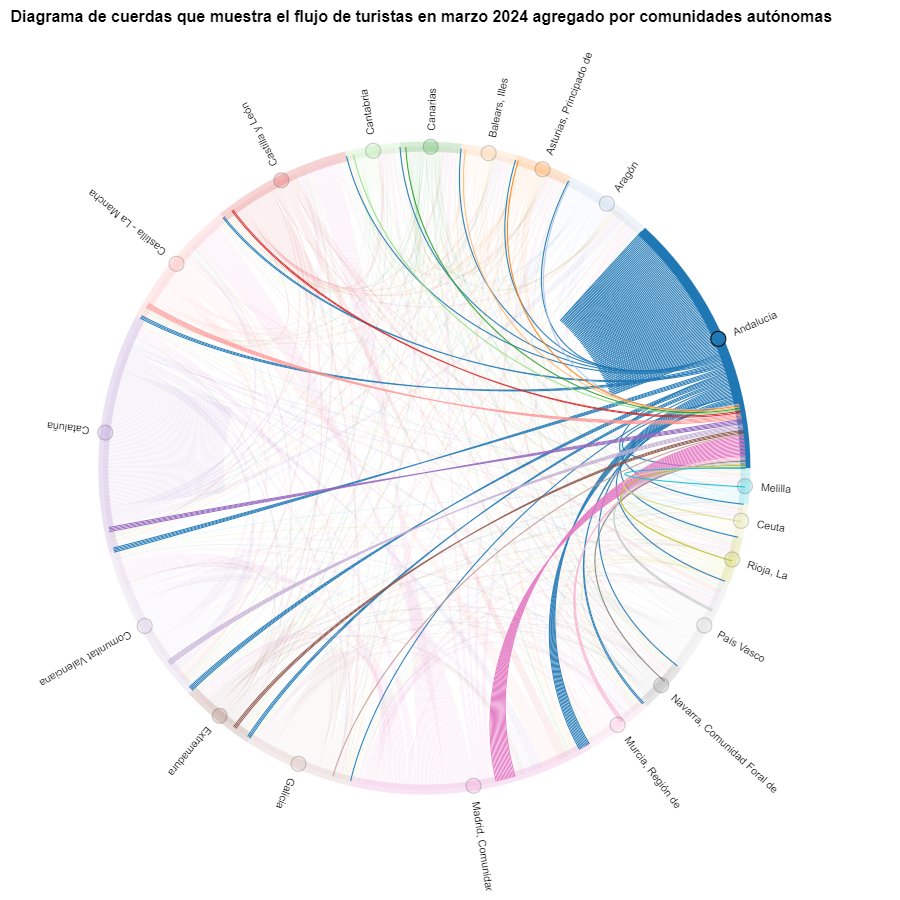

Figure 6. Chord diagram showing the flow of tourists entering and leaving Andalucía in March 2024 aggregated by autonomous communities.

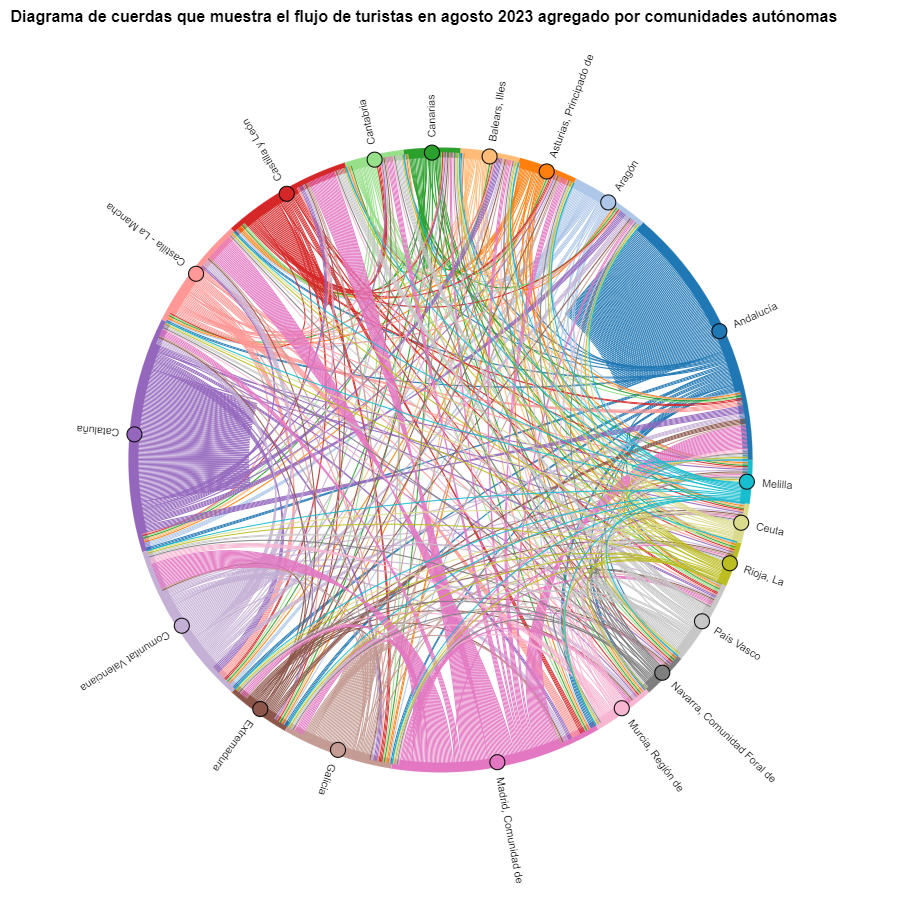

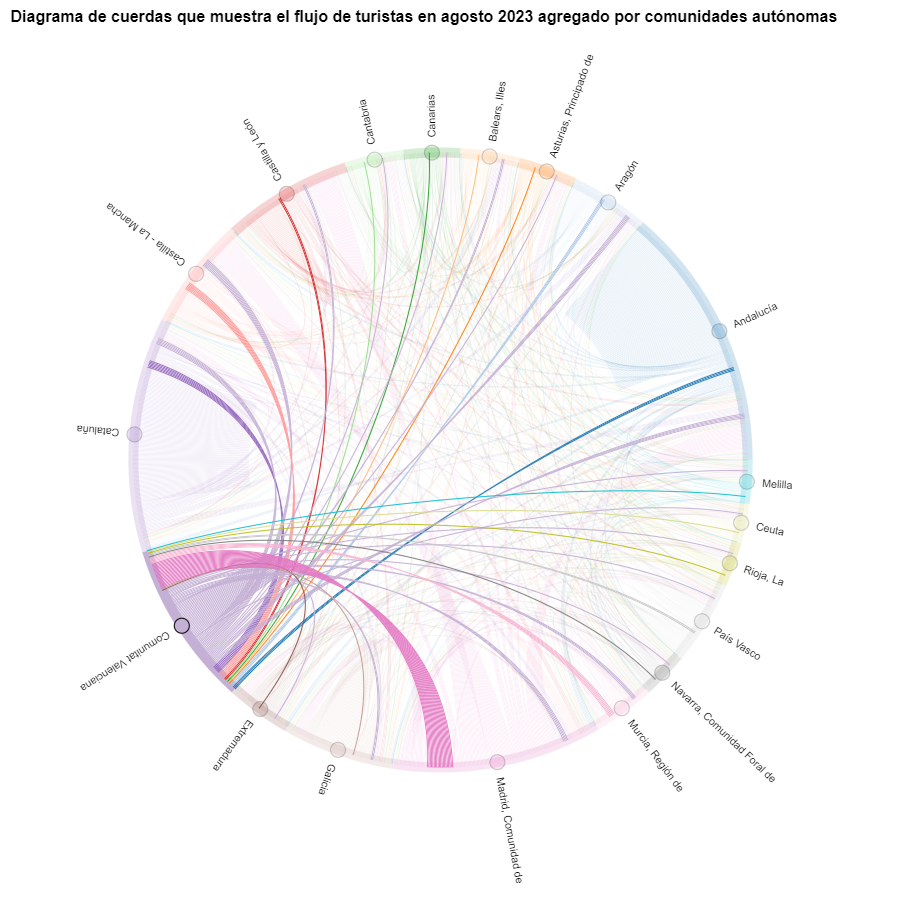

We create another chord diagram using the function we have created and visualize tourist flows in August 2023.

Figure 7. Chord diagram showing the flow of tourists in August 2023 aggregated by autonomous communities.

We can observe that, broadly speaking, tourist movements do not change, only that the movements we have already observed for March 2024 intensify.
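One way to check an observation like this numerically is to compare, for each period, the share of tourists from each CA who stay within their own CA. The sketch below uses invented values in which the August volumes are simply double the March ones; `internal_share` is a hypothetical helper.

```python
import pandas as pd

# Invented flows: August volumes are twice the March ones.
flows = pd.DataFrame(
    [
        ("Andalucía", "Andalucía", "2024M03", 80),
        ("Andalucía", "Madrid", "2024M03", 20),
        ("Madrid", "Madrid", "2024M03", 10),
        ("Madrid", "Andalucía", "2024M03", 90),
        ("Andalucía", "Andalucía", "2023M08", 160),
        ("Andalucía", "Madrid", "2023M08", 40),
        ("Madrid", "Madrid", "2023M08", 20),
        ("Madrid", "Andalucía", "2023M08", 180),
    ],
    columns=["source", "target", "period", "value"],
)


def internal_share(df):
    """Share of each origin's tourists that stay in their own CA, per period."""
    total = df.groupby(["period", "source"])["value"].sum()
    internal = (
        df[df["source"] == df["target"]]
        .groupby(["period", "source"])["value"]
        .sum()
    )
    # Origins without internal flows get a share of 0.
    return (internal / total).fillna(0).unstack("period")


shares = internal_share(flows)
print(shares)
```

If the shares stay similar while the totals grow, the pattern is stable and only its intensity changes.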

Figure 8. Chord diagram showing the flow of tourists entering and leaving the Valencian Community in August 2023 aggregated by autonomous communities.

The reader can create the same diagram for other time periods, for example, for the summer of 2020, in order to visualize the impact of the pandemic on summer tourism, reusing the function we have created.

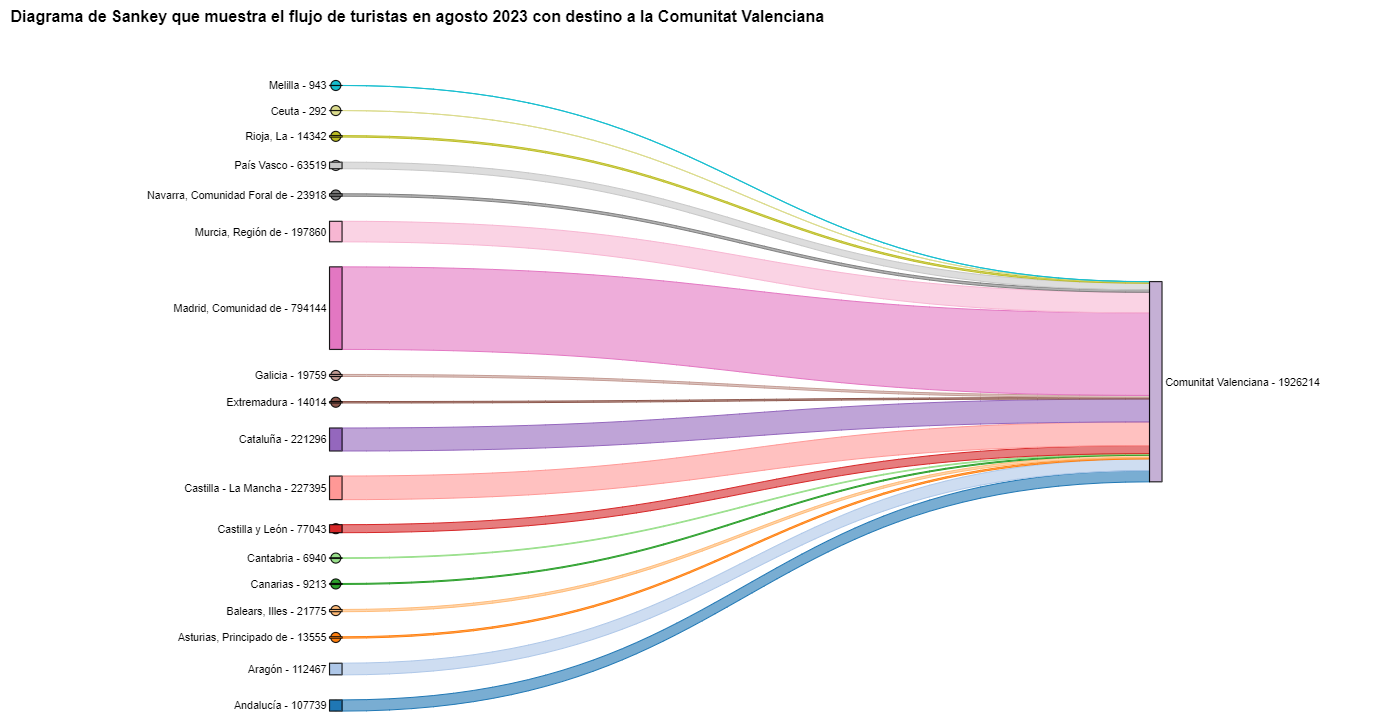

For the Sankey diagrams, we will focus on the Valencian Community, as it is a popular holiday destination. We filter the edges we created for the previous chord diagram so that they only contain flows that end in the Valencian Community. The same procedure could be applied to study any other CA or could be inverted to analyze where Valencians go on vacation. We visualize the Sankey diagram which, like the chord diagrams, is interactive within the notebook. The visual aspect would be like this:

Figure 9. Sankey diagram showing the flow of tourists in August 2023 destined for the Valencian Community.

As we could already intuit from the chord diagram above, see Figure 8, the largest group of tourists arriving in the Valencian Community comes from Madrid. We also see that there is a high number of tourists visiting the Valencian Community from neighboring CCAA such as Murcia, Andalucía, and Catalonia.
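The filtering step behind such a Sankey diagram could be sketched as follows. The values are invented and `edges_into` and `draw_sankey` are hypothetical helpers. As far as we know, `hv.Sankey` expects an acyclic graph, so the sketch relabels the destination to prevent Valencians travelling within their own CA from forming a self-loop; this is our assumption, not necessarily what the notebook does.

```python
import pandas as pd

# Invented edge list aggregated by CCAA.
edges = pd.DataFrame(
    {
        "source": ["Madrid", "Murcia", "Valencian Community", "Madrid"],
        "target": [
            "Valencian Community",
            "Valencian Community",
            "Valencian Community",
            "Andalucía",
        ],
        "value": [300, 90, 200, 120],
    }
)


def edges_into(edges, destination):
    """Keep only the flows that end in `destination`, largest first."""
    inbound = edges[edges["target"] == destination].copy()
    # Relabel the target so that origin and destination never share a name.
    inbound["target"] = inbound["target"] + " (destination)"
    return inbound.sort_values("value", ascending=False).reset_index(drop=True)


def draw_sankey(inbound):
    """Build an interactive Sankey diagram (requires holoviews and bokeh)."""
    import holoviews as hv

    hv.extension("bokeh")
    return hv.Sankey(inbound).opts(
        label_position="left", edge_color="source", node_color="index", cmap="tab20"
    )


inbound = edges_into(edges, "Valencian Community")
print(inbound)
# In a notebook: draw_sankey(inbound)
```

Filtering on `source` instead of `target` would invert the analysis and show where Valencians go on vacation.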

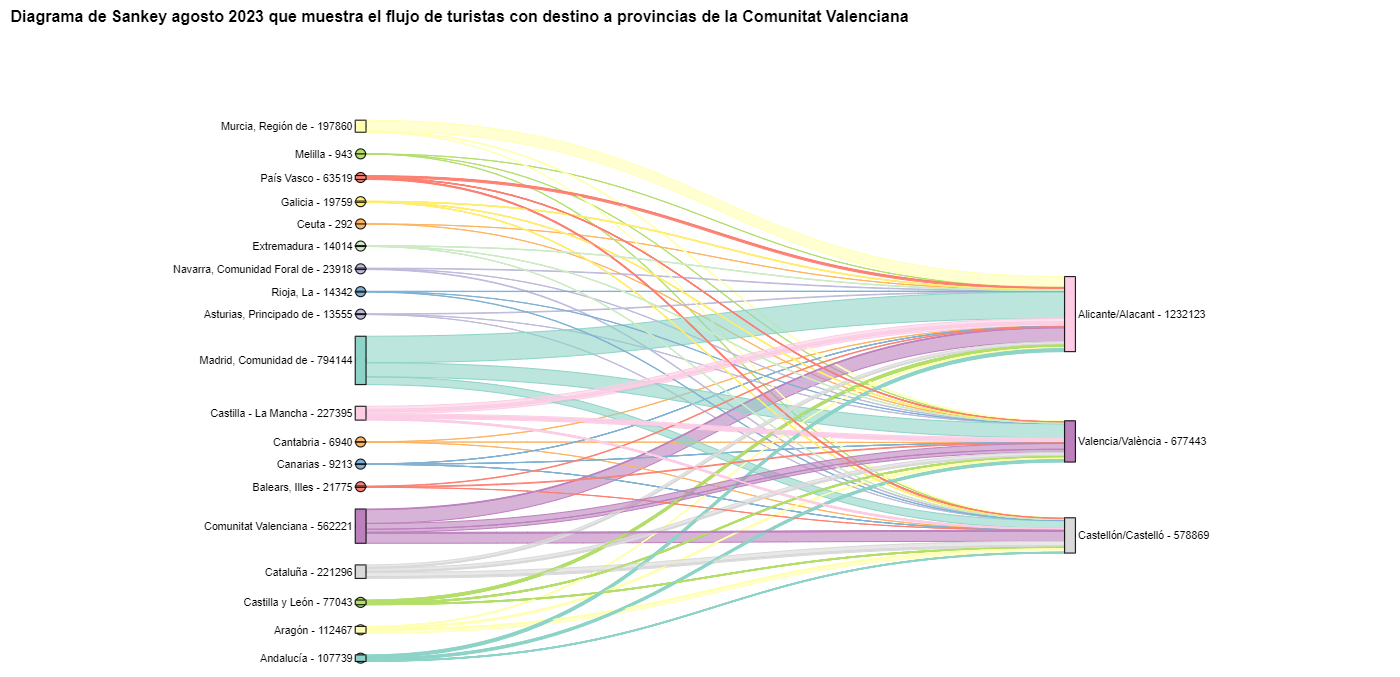

To verify that these trends occur in the three provinces of the Valencian Community, we are going to create a Sankey diagram that shows on the left margin all the CCAA and on the right margin the three provinces of the Valencian Community.

To create this Sankey diagram at the provincial level, we have to filter our initial pandas.DataFrame to extract the relevant information from it. The steps in the notebook can be adapted to perform this analysis at the provincial level for any other CA. Although we are not reusing the function we used previously, we can also change the analysis period.
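The provincial filter could look like the sketch below. The function `province_edges` is hypothetical, the column names follow Figure 4 and the totals are invented, chosen only to mirror the kind of pattern one might look for.

```python
import pandas as pd

# Invented rows in the layout of the clean dataset (Figure 4).
clean = pd.DataFrame(
    [
        ("Madrid", "Valencian Community", "Alicante", "2023M08", 300),
        ("Madrid", "Valencian Community", "Valencia", "2023M08", 200),
        ("Madrid", "Valencian Community", "Castellón", "2023M08", 50),
        ("Valencian Community", "Valencian Community", "Castellón", "2023M08", 80),
        ("Valencian Community", "Valencian Community", "Alicante", "2023M08", 100),
        ("Madrid", "Andalucía", "Almería", "2023M08", 40),
    ],
    columns=["Origin", "Destination", "Province of destination", "Period", "Total"],
)


def province_edges(df, destination_ccaa, period):
    """Edges from each origin CA to the provinces of one destination CA."""
    mask = (
        (df["Destination"] == destination_ccaa)
        & (df["Period"] == period)
        & df["Province of destination"].notna()
    )
    return (
        df[mask]
        .groupby(["Origin", "Province of destination"], as_index=False)["Total"]
        .sum()
        .rename(
            columns={
                "Origin": "source",
                "Province of destination": "target",
                "Total": "value",
            }
        )
    )


edges = province_edges(clean, "Valencian Community", "2023M08")

# Largest origin for each destination province.
top = edges.loc[edges.groupby("target")["value"].idxmax()]
top_origin = dict(zip(top["target"], top["source"]))
print(top_origin)
```

Because the targets are province names and the sources are CCAA names, the resulting edge list has no node that is both origin and destination, so it can be passed to a Sankey diagram directly.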

The Sankey diagram that visualizes the tourist flows that arrived in August 2023 to the three Valencian provinces would look like this:

Figure 10. Sankey diagram August 2023 showing the flow of tourists destined for provinces of the Valencian Community.

We can observe that, as we already assumed, the largest number of tourists arriving in the Valencian Community in August comes from the Community of Madrid. However, we can verify that this is not true for the province of Castellón, where in August 2023 the majority of tourists were Valencians who traveled within their own CA.

6. Conclusions of the exercise

Thanks to the visualization techniques used in this exercise, we have been able to observe the tourist flows that move within the national territory, focusing on making comparisons between different times of the year and trying to identify patterns. In both the chord diagrams and the Sankey diagrams that we have created, we have been able to observe the influx of Madrilenian tourists on the Valencian coasts in summer. We have also been able to identify the autonomous communities where tourists leave their own autonomous community the least, such as Catalonia and Andalucía.

7. Do you want to do the exercise?

We invite the reader to execute the code contained in the Google Colab notebook that accompanies this exercise to continue with the analysis of tourist flows. We leave here some ideas of possible questions and how they could be answered:

- The impact of the pandemic: we have already mentioned it briefly above, but an interesting question would be to measure the impact that the coronavirus pandemic has had on tourism. We can compare the data from previous years with 2020 and also analyze the following years to detect stabilization trends. Given that the function we have created allows easily changing the time period under analysis, we suggest you do this analysis on your own.

- Time intervals: it is also possible to modify the function we have been using in such a way that it not only allows selecting a specific time period, but also allows time intervals.

- Provincial level analysis: likewise, an advanced reader with Pandas can challenge themselves to create a Sankey diagram that visualizes which provinces the inhabitants of a certain region travel to, for example, Ourense. In order not to have too many destination provinces that could make the Sankey diagram illegible, only the 10 most visited could be visualized. To obtain the data to create this visualization, the reader would have to play with the filters they apply to the dataset and with the groupby method of pandas, being inspired by the already executed code.
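The last two ideas can be combined in a short sketch: selecting a time interval and keeping only the most visited destination provinces for one origin province. The function `top_destinations` and all values except the Ourense to Almería figure are invented. It relies on the assumption that period labels of the form `YYYYMmm` sort chronologically as plain strings.

```python
import pandas as pd

# Invented provincial flows; period labels follow the "YYYYMmm" format.
clean = pd.DataFrame(
    [
        ("Ourense", "Pontevedra", "2023M07", 500),
        ("Ourense", "Pontevedra", "2023M08", 700),
        ("Ourense", "Almería", "2023M08", 373),
        ("Ourense", "Madrid", "2023M09", 250),
        ("Ourense", "Madrid", "2024M03", 100),
    ],
    columns=["Province of origin", "Province of destination", "Period", "Total"],
)


def top_destinations(df, origin_province, start, end, n=10):
    """Most visited destination provinces for one origin in [start, end]."""
    # "YYYYMmm" labels compare correctly as strings, so between() is enough.
    in_range = df["Period"].between(start, end)
    from_origin = df["Province of origin"] == origin_province
    totals = (
        df[in_range & from_origin].groupby("Province of destination")["Total"].sum()
    )
    return totals.nlargest(n)


top = top_destinations(clean, "Ourense", "2023M07", "2023M09", n=2)
print(top)
```

The resulting series can be turned into Sankey edges by using the origin province as the source and the index as the targets.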

We hope that this practical exercise has provided you with sufficient knowledge to develop your own visualizations. If you have any data science topic that you would like us to cover soon, do not hesitate to propose your interest through our contact channels.

In addition, remember that you have more exercises available in the section "Data science exercises".

The digital revolution is transforming municipal services, driven by the increasing adoption of artificial intelligence (AI) technologies that also benefit from open data. These developments have the potential to redefine the way municipalities deliver services to their citizens, providing tools to improve efficiency, accessibility and sustainability. This report looks at success stories in the deployment of applications and platforms that seek to improve various aspects of life in municipalities, highlighting their capacity to unlock more of the vast untapped potential of open data and associated artificial intelligence technologies.

The applications and platforms described in this report have a high potential for replicability in different municipal contexts, as they address common problems. Replication of these solutions can take place through collaboration between municipalities, companies and developers, as well as through the release and standardisation of open data.

Despite the benefits, the adoption of open data for municipal innovation also presents significant challenges. The quality, updating and standardisation of data published by local authorities, as well as interoperability between different platforms and systems, must be ensured. In addition, the open data culture needs to be reinforced among all actors involved, including citizens, developers, businesses and public administrations themselves.

The use cases analysed are divided into four sections. Each of these sections is described below and some examples of the solutions included in the report are shown.

Transport and Mobility

One of the most significant challenges in urban areas is transport and mobility management. Applications using open data have proven to be effective in improving these services. For example, applications such as Park4Dis make it easy to locate parking spaces for people with reduced mobility, using data from multiple municipalities and contributions from volunteers. CityMapper, which has gone global, offers optimised public transport routes in real time, integrating data from various transport modes to provide the most efficient route. These applications not only improve mobility, but also contribute to sustainability by reducing congestion and carbon emissions.

Environment and Sustainability

Growing awareness of sustainability has spurred the development of applications that promote environmentally friendly practices. CleanSpot, for example, facilitates the location of recycling points and the management of municipal waste. The application encourages citizen participation in cleaning and recycling, contributing to the reduction of the ecological footprint. Liight gamifies sustainable behaviour by rewarding users for actions such as recycling or using public transport. These applications not only improve environmental management, but also educate and motivate citizens to adopt more sustainable habits.

Optimisation of Basic Public Services

Urban service management platforms, such as Gestdropper, use open data to monitor and control urban infrastructure in real time. These tools enable more efficient management of resources such as street lighting, water networks and street furniture, optimising maintenance, incident response and reducing operating costs. Moreover, the deployment of appointment management systems, such as CitaME, helps to reduce waiting times and improve efficiency in customer service.

Citizen Services Aggregators

Applications that centralise public information and services, such as Badajoz Es Más and AppValencia, improve accessibility and communication between administrations and citizens. These platforms provide real-time data on public transport, cultural events, tourism and administrative procedures, making life in the municipality easier for residents and tourists alike. For example, integrating multiple services into a single application improves efficiency and reduces the need for unnecessary travel. These tools also support local economies by promoting cultural events and commercial services.

Conclusions

The use of open data and artificial intelligence technologies is transforming municipal management, improving the efficiency, accessibility and sustainability of public services. The success stories presented in this report describe how these tools can benefit both citizens and public administrations by making cities smarter, more inclusive and sustainable environments, and more responsive to the needs and well-being of their inhabitants and visitors.

Listen to the podcast (only available in Spanish)

One of the objectives of datos.gob.es is to disseminate the data culture. To this end, we use different channels to disseminate content such as a specialised content blog, a fortnightly newsletter or profiles on social networks such as X (formerly Twitter) or LinkedIn. Social networks serve both as a channel for dissemination and as a space for contact with the open data reuse community. In our didactic mission to raise awareness of data culture, we will now also be present on Instagram.

This visual and dynamic platform will become a new meeting point where our followers can discover, explore and leverage the value of open data and related technologies.

On our Instagram account (@datosgob), we will offer a variety of content:

- Key concepts: definitions of concepts from the world of data and related technologies explained in a clear and concise way to create a glossary at your fingertips.

- Informative infographics: complex issues such as laws, use cases or application of innovative technologies explained graphically and in a simpler way.

- Impact stories: inspiring projects that use open data to make a positive impact on society.

- Tutorials and tips: to learn how to use our platform more effectively, data science exercises and step-by-step visualisations, among others.

- Events and news: important activities, launches of new datasets and the latest developments in the world of open data.

Varied formats of valuable content

In addition, all this information of interest will be presented in formats suitable for the platform, such as:

- Publications: short informative posts, infographics, monographs, interviews, audiovisual pieces and success stories that will help you learn how different digital tools and methodologies are your allies. You will be able to enjoy different types of publications (fixed, carousels, collaborations with other reference accounts, etc.), where you can share your opinions, questions and experiences, and connect with other professionals.

- Stories: announcements, polls or calendars so you can stay on top of what's happening in the data ecosystem and be part of it by sharing your impressions.

- Featured stories: at the top of our profile, we will leave selected and ordered the most relevant information on the different topics and initiatives of datos.gob.es, in three areas: training, events and news.

A participatory and collaborative platform

As we have been doing in the other social networks where we are present, we want our account to be a space for dialogue and collaboration. Therefore, we invite all citizens, researchers, journalists, developers and anyone interested in open data to join the datos.gob.es community. Here are some ways you can get involved:

- Comment and share: we want to hear your opinions, questions and suggestions. Interact with our publications and share our content with your network to help spread the word about the importance of open data.

- Tag us: if you are working on a project that uses open data, show us! Tag us in your posts and use the hashtag #datosgob so we can see and share your work with our community.

- Featured stories: do you have an interesting story to tell about how you have used open data? Send us a direct message and we may feature it on our account to inspire others.

Why Instagram?

In a world where visual information has become a powerful tool for communication and learning, we have decided to make the leap to Instagram. This platform will not only allow us to report on developments in the data ecosystem in a more engaging and understandable way, but will also help us to connect with a wider and more diverse audience. We want to make public information accessible and relevant to everyone, and we believe Instagram is the perfect place to do this.

In short, the launch of our Instagram account marks an important step in our mission to make open data more accessible and useful for all.

Follow us on Instagram at @datosgob and join a growing community of people interested in transparency, innovation and knowledge sharing. By following us, you will have immediate access to a constant source of information and resources to help you make the most of open data. Also, don't forget to follow us on our other social networks, X or LinkedIn.

ELISA: The Plan in figures is a tool launched by the Spanish government to visualise updated data on the implementation of the investments of the Recovery, Transformation and Resilience Plan (PRTR). Through intuitive visualisations, this tool provides information on the number of companies and households that have received funding, the size of the beneficiary companies and the investments made in the different levers of action defined in the Plan.

The tool also provides details of the funds managed and executed in each Autonomous Community. In this way, the territorial distribution of the projects can be seen. In addition, the tool is accompanied by territorial sheets, which show a more qualitative detail of the impact of the Recovery Plan in each Autonomous Community.

1. Introduction

In the information age, artificial intelligence has proven to be an invaluable tool for a variety of applications. One of the most incredible manifestations of this technology is GPT (Generative Pre-trained Transformer), developed by OpenAI. GPT is a natural language model that can understand and generate text, providing coherent and contextually relevant responses. With the recent introduction of Chat GPT-4, the capabilities of this model have been further expanded, allowing for greater customisation and adaptability to different themes.

In this post, we will show you how to set up and customise a specialised critical minerals assistant using GPT-4 and open data sources. As we have shown in previous publications, critical minerals are fundamental to numerous industries, including technology, energy and defence, due to their unique properties and strategic importance. However, information on these materials can be complex and scattered, making a specialised assistant particularly useful.

The aim of this post is to guide you step by step from the initial configuration to the implementation of a GPT assistant that can help you resolve doubts and provide valuable information about critical minerals in your day-to-day life. In addition, we will explore how to customise aspects of the assistant, such as the tone and style of responses, to suit your needs. At the end of this journey, you will have a powerful, customised tool that will transform the way you access and use open information on critical minerals.

Access the data lab repository on Github.

2. Context

The transition to a sustainable future involves not only changes in energy sources, but also in the material resources we use. The success of sectors such as energy storage batteries, wind turbines, solar panels, electrolysers, drones, robots, data transmission networks, electronic devices or space satellites depends heavily on access to the raw materials critical to their development. We understand that a mineral is critical when the following factors are met:

- Its global reserves are scarce

- There are no alternative materials that can perform its function (its properties are unique or nearly unique)

- It is indispensable for key economic sectors of the future, and/or its supply chain is high risk

You can learn more about critical minerals in the post mentioned above.

3. Objective

This exercise focuses on showing the reader how to customise a specialised GPT model for a specific use case. We will adopt a "learning-by-doing" approach, so that the reader can understand how to set up and adjust the model to solve a real and relevant problem, such as critical mineral expert advice. This hands-on approach not only improves understanding of language model customisation techniques, but also prepares readers to apply this knowledge to real-world problem solving, providing a rich learning experience directly applicable to their own projects.

The GPT assistant specialised in critical minerals will be designed to become an essential tool for professionals, researchers and students. Its main objective will be to facilitate access to accurate and up-to-date information on these materials, to support strategic decision-making and to promote education in this field. The following are the specific objectives we seek to achieve with this assistant:

- Provide accurate and up-to-date information:

- The assistant should provide detailed and accurate information on various critical minerals, including their composition, properties, industrial uses and availability.

- Keep up to date with the latest research and market trends in the field of critical minerals.

- Assist in decision-making:

- Provide data and analysis that can assist strategic decision-making in industry and critical minerals research.

- Provide comparisons and evaluations of different minerals in terms of performance, cost and availability.

- Promote education and awareness of the issue:

- Act as an educational tool for students, researchers and practitioners, helping to improve their knowledge of critical minerals.

- Raise awareness of the importance of these materials and the challenges related to their supply and sustainability.

4. Resources

To configure and customise our GPT assistant specialising in critical minerals, it is essential to have a number of resources to facilitate implementation and ensure the accuracy and relevance of the model's responses. In this section, we will detail the necessary resources that include both the technological tools and the sources of information that will be integrated into the assistant's knowledge base.

Tools and Technologies

The key tools and technologies to develop this exercise are:

- OpenAI account: required to access the platform and use the GPT-4 model. In this post, we will use ChatGPT's Plus subscription to show you how to create and publish a custom GPT. However, you can develop this exercise in a similar way by using a free OpenAI account and performing the same set of instructions through a standard ChatGPT conversation.

- Microsoft Excel: we have designed this exercise so that anyone without technical knowledge can work through it from start to finish. We will only use office tools such as Microsoft Excel to make some adjustments to the downloaded data.

In a complementary way, we will use another set of tools that will allow us to automate some actions without their use being strictly necessary:

- Google Colab: a Python notebook environment that runs in the cloud, allowing users to write and run Python code directly in the browser. Google Colab is particularly useful for machine learning, data analysis and experimentation with language models, offering free access to powerful computational resources and facilitating collaboration and project sharing.

- Markmap: a tool that visualises Markdown mind maps in real time. Users write ideas in Markdown and the tool renders them as an interactive mind map in the browser. Markmap is useful for project planning, note taking and organising complex information visually. It facilitates understanding and the exchange of ideas in teams and presentations.

Sources of information

- Raw Materials Information System (RMIS): raw materials information system maintained by the Joint Research Center of the European Union. It provides detailed and up-to-date data on the availability, production and consumption of raw materials in Europe.

- International Energy Agency (IEA) Catalogue of Reports and Data: the International Energy Agency (IEA) offers a comprehensive catalogue of energy-related reports and data, including statistics on production, consumption and reserves of energy and critical minerals.

- Mineral Database of the Spanish Geological and Mining Institute (BDMIN in its acronym in Spanish): contains detailed information on minerals and mineral deposits in Spain, useful to obtain specific data on the production and reserves of critical minerals in the country.

With these resources, you will be well equipped to develop a specialised GPT assistant that can provide accurate and relevant answers on critical minerals, facilitating informed decision-making in the field.

5. Development of the exercise

5.1. Building the knowledge base

For our specialised critical minerals GPT assistant to be truly useful and accurate, it is essential to build a solid and structured knowledge base. This knowledge base will be the set of data and information that the assistant will use to answer queries. The quality and relevance of this information will determine the effectiveness of the assistant in providing accurate and useful answers.

We start with the collection of information sources that will feed our knowledge base. Not all sources of information are equally reliable. It is essential to assess the quality of the sources identified, ensuring that:

- Information is up to date: the relevance of data can change rapidly, especially in dynamic fields such as critical minerals.

- The source is reliable and recognised: it is necessary to use sources from recognised and respected academic and professional institutions.

- Data is complete and accessible: it is crucial that data is detailed and accessible for integration into our assistant.

In our case, we carried out an online search across different platforms and information repositories, selecting information from different recognised entities:

- Research centres and universities:

- They publish detailed studies and reports on the research and development of critical minerals.

- Example: RMIS of the Joint Research Center of the European Union.

- Governmental institutions and international organisations:

- These entities usually provide comprehensive and up-to-date data on the availability and use of critical minerals.

- Example: International Energy Agency (IEA).

- Specialised databases:

- They contain technical and specific data on deposits and production of critical minerals.

- Example: Minerals Database of the Spanish Geological and Mining Institute (BDMIN).

Selection and preparation of information

We will now focus on the selection and preparation of existing information from these sources to ensure that our GPT assistant can access accurate and useful data.

RMIS of the Joint Research Center of the European Union:

- Selected information:

We selected the report "Supply chain analysis and material demand forecast in strategic technologies and sectors in the EU - A foresight study". This is an analysis of the supply chain and demand for minerals in strategic technologies and sectors in the EU. It presents a detailed study of the supply chains of critical raw materials and forecasts the demand for minerals up to 2050.

- Necessary preparation:

The format of the document, PDF, allows the direct ingestion of the information by our assistant. However, as can be seen in Figure 1, there is a particularly relevant table on pages 238-240 which analyses, for each mineral, its supply risk, typology (strategic, critical or non-critical) and the key technologies that employ it. We therefore decided to extract this table into a structured format (CSV), so that we have two pieces of information that will become part of our knowledge base.

Figure 1: Table of minerals contained in the JRC PDF

To programmatically extract the data contained in this table and transform it into a more easily processable format, such as CSV (comma-separated values), we will use a Python script that we can run on the Google Colab platform (Figure 2).

Figure 2: Python script for extracting data from the JRC PDF, developed on the Google Colab platform.

To summarise, this script:

- It is based on the open source library PyPDF2, which is capable of interpreting information contained in PDF files.

- First, it extracts in text format (string) the content of the pages of the PDF where the mineral table is located, removing all the content that does not correspond to the table itself.

- It then goes through the string line by line, converting the values into columns of a data table. We will know that a mineral is used in a key technology if in the corresponding column of that mineral we find a number 1 (otherwise it will contain a 0).

- Finally, it exports the table to a CSV file for further use.
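The steps above can be sketched roughly as follows. This is not the script shown in Figure 2, only a minimal illustration under stated assumptions: the row layout (mineral name, supply risk, typology, then one 0/1 flag per technology), the three technology columns and the sample text are all hypothetical, and the real table in the report will differ. The `extract_pages_text` helper shows how the page text could be obtained with PyPDF2, while the parsing itself works on plain text.

```python
import csv
import io

# Hypothetical subset of technology columns; the real table has its own set.
TECHNOLOGIES = ["batteries", "wind_turbines", "solar_pv"]


def extract_pages_text(pdf_path, first_page, last_page):
    """Return the text of pages first_page..last_page (1-based, inclusive)."""
    from PyPDF2 import PdfReader  # lazy import: only needed for a real PDF

    reader = PdfReader(pdf_path)
    return "\n".join(
        reader.pages[i].extract_text() or ""
        for i in range(first_page - 1, last_page)
    )


def parse_table_lines(text):
    """Turn lines like '<mineral> <risk> <typology> <0/1 flags>' into dicts."""
    n = len(TECHNOLOGIES)
    rows = []
    for line in text.splitlines():
        tokens = line.split()
        if len(tokens) < n + 3:
            continue  # too short to be a table row
        flags = tokens[-n:]
        if any(flag not in ("0", "1") for flag in flags):
            continue  # headers, footers and other non-table content
        try:
            supply_risk = float(tokens[-n - 2])
        except ValueError:
            continue  # the assumed supply-risk column is not numeric
        row = {
            "mineral": " ".join(tokens[:-n - 2]),
            "supply_risk": supply_risk,
            "typology": tokens[-n - 1],
        }
        # A 1 means the mineral is used in that technology, a 0 that it is not.
        row.update({tech: int(flag) for tech, flag in zip(TECHNOLOGIES, flags)})
        rows.append(row)
    return rows


def rows_to_csv(rows):
    """Serialise the parsed rows as CSV text."""
    buffer = io.StringIO()
    fieldnames = ["mineral", "supply_risk", "typology", *TECHNOLOGIES]
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# Made-up sample text standing in for the output of extract_pages_text().
sample = """Table header that should be skipped
Mineral A 4.1 critical 1 0 1
Mineral B 0.5 non-critical 0 0 0
238"""
print(rows_to_csv(parse_table_lines(sample)))
```

With a real file, the text for the pages mentioned above would come from a call such as `extract_pages_text("report.pdf", 238, 240)`, where the file name is a placeholder.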

International Energy Agency (IEA):

- Selected information:

We selected the report "Global Critical Minerals Outlook 2024". It provides an overview of industrial developments in 2023 and early 2024, and offers medium- and long-term prospects for the demand and supply of key minerals for the energy transition. It also assesses risks to the reliability, sustainability and diversity of critical mineral supply chains.

- Necessary preparation:

The format of the document, PDF, allows our virtual assistant to ingest the information directly. In this case, we will not make any adjustments to the selected information.

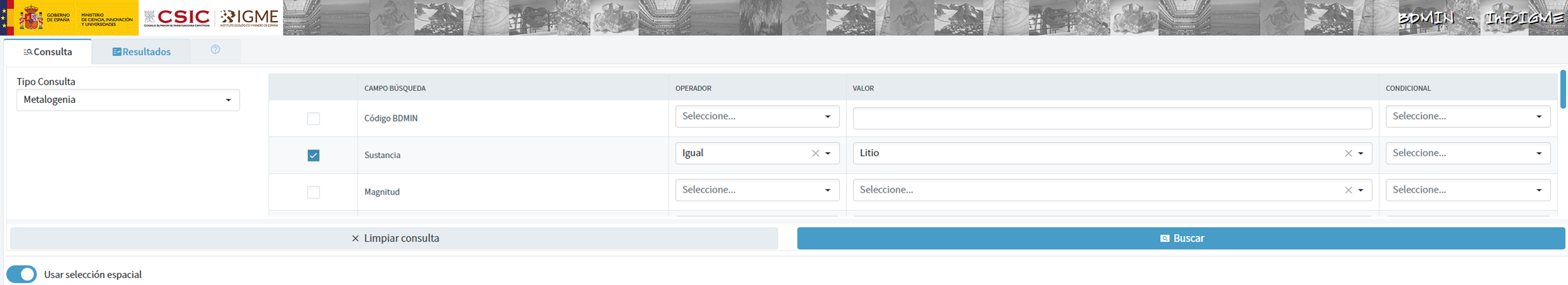

Spanish Geological and Mining Institute's Minerals Database (BDMIN)

- Selected information:

In this case, we use the form to select the existing data in this database for indications and deposits in the field of metallogeny, in particular those with lithium content.

Figure 3: Dataset selection in BDMIN.

- Necessary preparation:

We note how the web tool allows online visualisation and also the export of this data in various formats. Select all the data to be exported and click on this option to download an Excel file with the desired information.

Figure 4: Visualization and download tool in BDMIN

Figure 5: BDMIN Downloaded Data.

All the files that make up our knowledge base can be found on GitHub, so that the reader can skip the downloading and preparation phase.

5.2. GPT configuration and customisation for critical minerals

When we talk about "creating a GPT," we are actually referring to the configuration and customisation of a GPT (Generative Pre-trained Transformer) based language model to suit a specific use case. In this context, we are not creating the model from scratch, but adjusting how the pre-existing model (such as OpenAI's GPT-4) interacts and responds within a specific domain, in this case, on critical minerals.

First of all, we access the application through our browser and, if we do not have an account, we follow the registration and login process on the ChatGPT platform. As mentioned above, in order to create a GPT step-by-step, you will need to have a Plus account. However, readers who do not have such an account can work with a free account by interacting with ChatGPT through a standard conversation.

Figure 6: ChatGPT login and registration page.

Once logged in, select the "Explore GPT" option, and then click on "Create" to begin the process of creating your GPT.

Figure 7: Creation of new GPT.

The screen for creating a new GPT is split in two: on the left, we can talk to the system to indicate the characteristics that our GPT should have, while on the right we can interact with our GPT to validate that its behaviour is adequate as we go through the configuration process.

Figure 8: Screen of creating new GPT.

In the GitHub repository of this project, we can find all the prompts or instructions that we will use to configure and customise our GPT. We will have to enter them sequentially in the "Create" tab, located on the left-hand side of the screen, to complete the steps detailed below.

The steps we will follow for the creation of the GPT are as follows:

1. First, we will outline the purpose and basic considerations for our GPT so that you can understand how to use it.

Figure 9: Basic instructions for new GPT.

2. We will then create a name and an image to represent our GPT and make it easily identifiable. In our case, we will call it MateriaGuru.

Figure 10: Name selection for new GPT.

Figure 11: Image creation for GPT.

3. We will then build the knowledge base from the information previously selected and prepared to feed the knowledge of our GPT.

Figure 12: Uploading of information to the new GPT knowledge base.

4. Now, we can customise conversational aspects such as its tone, the level of technical complexity of its responses or whether we expect brief or elaborate answers.

5. Lastly, from the "Configure" tab, we can indicate the conversation starters desired so that users interacting with our GPT have some ideas to start the conversation in a predefined way.

Figure 13: Configure GPT tab.

In Figure 13 we can also see the final result of our training, where key elements such as its image, name, instructions, conversation starters or documents that are part of its knowledge base appear.

5.3. Validation and publication of GPT

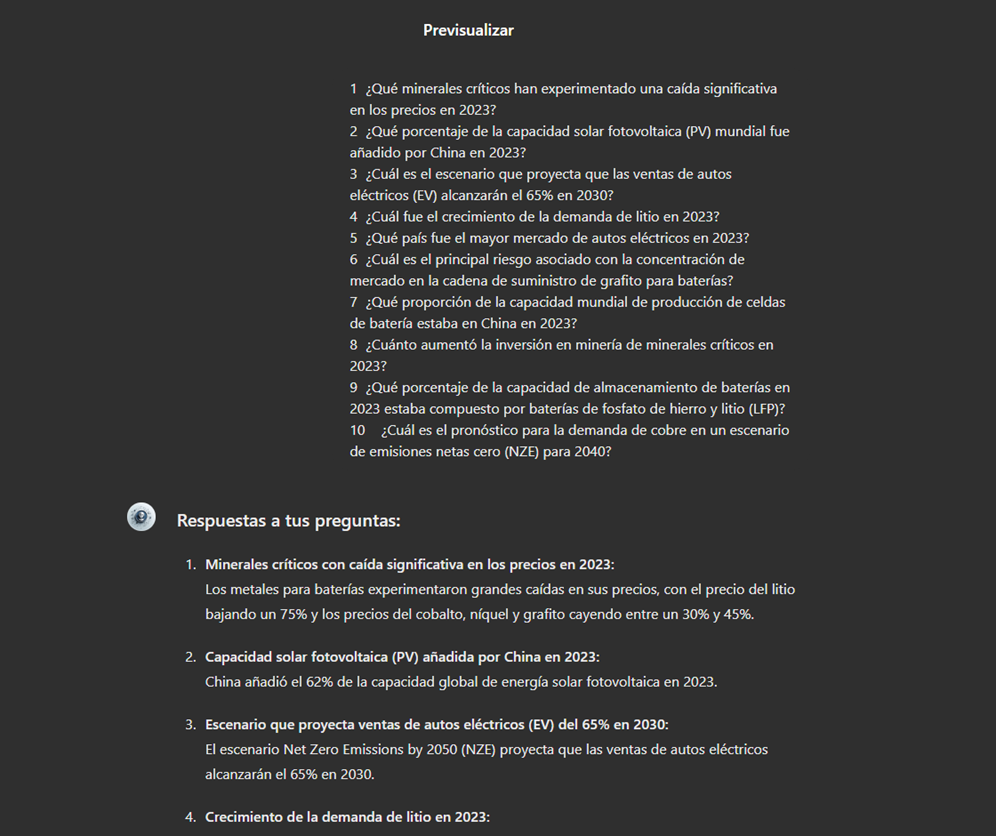

Before we sign off our new GPT-based assistant, we will proceed with a brief validation of its correct configuration and learning with respect to the subject matter around which we have trained it. For this purpose, we prepared a battery of questions that we will ask MateriaGuru to check that it responds appropriately to a real scenario of use.

| # | Question | Answer |
|---|---|---|
| 1 | Which critical minerals have experienced a significant drop in prices in 2023? | Battery mineral prices saw particularly large drops with lithium prices falling by 75% and cobalt, nickel and graphite prices falling by between 30% and 45%. |
| 2 | What percentage of global solar photovoltaic (PV) capacity was added by China in 2023? | China accounted for 62% of the increase in global solar PV capacity in 2023. |
| 3 | What is the scenario that projects electric car (EV) sales to reach 65% by 2030? | The Net Zero Emissions (NZE) scenario for 2050 projects that electric car sales will reach 65% by 2030. |
| 4 | What was the growth in lithium demand in 2023? | Lithium demand increased by 30% in 2023. |
| 5 | Which country was the largest electric car market in 2023? | China was the largest electric car market in 2023 with 8.1 million electric car sales representing 60% of the global total. |
| 6 | What is the main risk associated with market concentration in the battery graphite supply chain? | More than 90% of battery-grade graphite and 77% of refined rare earths in 2030 originate in China, posing a significant risk to market concentration. |
| 7 | What proportion of global battery cell production capacity was in China in 2023? | China owned 85% of battery cell production capacity in 2023. |
| 8 | How much did investment in critical minerals mining increase in 2023? | Investment in critical minerals mining grew by 10% in 2023. |
| 9 | What percentage of battery storage capacity in 2023 was composed of lithium iron phosphate (LFP) batteries? | By 2023, LFP batteries would constitute approximately 80% of the total battery storage market. |
| 10 | What is the forecast for copper demand in a net zero emissions (NZE) scenario for 2040? | In the net zero emissions (NZE) scenario for 2040, copper demand is expected to have the largest increase in terms of production volume. |

Figure 14: Table with battery of questions for the validation of our GPT.

Using the preview section on the right-hand side of our screens, we launch the battery of questions and validate that the answers correspond to those expected.

Figure 15: Validation of GPT responses.
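In this exercise the comparison is done by eye in the preview panel, but a first pass over the key figures could also be automated. The sketch below is only an illustration: the helper names are hypothetical, the expected answer is taken from row 4 of the table, and the two replies are invented examples, not real output from MateriaGuru. It checks only that every number in the expected answer also appears in the reply, so a human review of the wording is still needed.

```python
import re


def extract_figures(text):
    """Return the set of numeric figures (e.g. '75%', '8.1', '2023') in text."""
    return set(re.findall(r"\d+(?:\.\d+)?%?", text))


def answer_matches(expected, actual):
    """True when every figure in the expected answer also appears in the reply."""
    return extract_figures(expected) <= extract_figures(actual)


# Expected answer from the validation table (question 4).
expected = "Lithium demand increased by 30% in 2023."

# Invented replies, used only to show both outcomes of the check.
good_reply = "In 2023, lithium demand grew by 30%."
bad_reply = "Lithium demand rose by 20% in 2023."

print(answer_matches(expected, good_reply))
print(answer_matches(expected, bad_reply))
```

Comparing figures rather than full sentences is a deliberate choice here, since the assistant may phrase a correct answer differently each time.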

Finally, click on the "Create" button to finalise the process. We will be able to select between different alternatives to restrict its use by other users.

Figure 16: Publication of our GPT.

6. Scenarios of use

In this section we show several scenarios in which we can take advantage of MateriaGuru in our daily life. On the GitHub of the project you can find the prompts used to replicate each of them.

6.1. Consultation of critical minerals information

The most typical scenario for the use of this type of GPTs is assistance in resolving doubts related to the topic in question, in this case, critical minerals. As an example, we have prepared a set of questions that the reader can pose to the GPT created to understand in more detail the relevance and current status of a critical material such as graphite from the reports provided to our GPT.

Figure 17: Resolution of critical mineral queries.

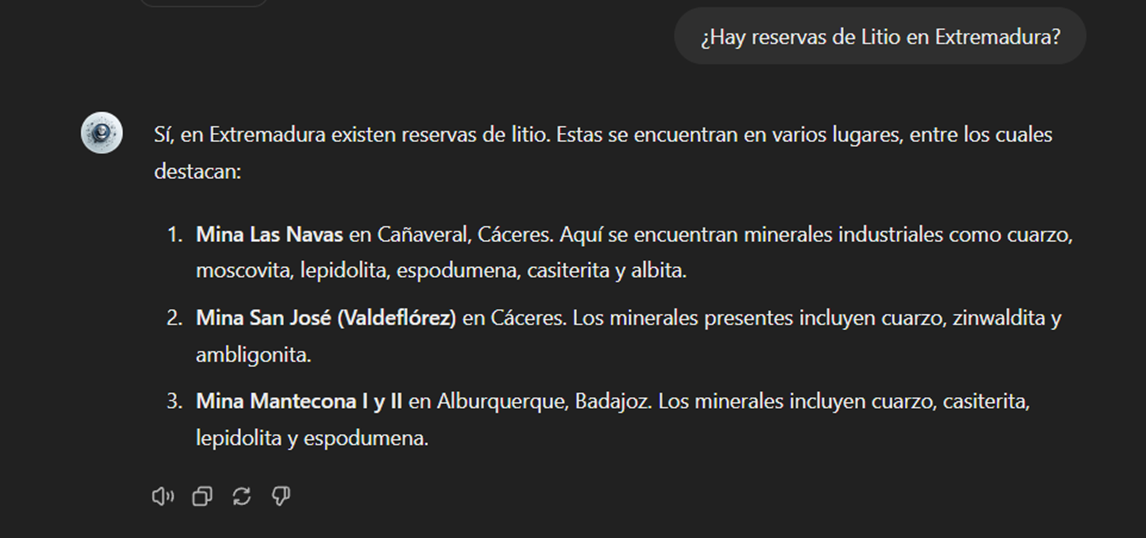

We can also ask it specific questions about the tabulated information provided on existing deposits and indications in Spanish territory.

Figure 18: Lithium reserves in Extremadura.

6.2. Representation of quantitative data visualisations

Another common scenario is the need to consult quantitative information and make visual representations for better understanding. In this scenario, we can see how MateriaGuru is able to generate an interactive visualisation of graphite production in tonnes for the main producing countries.

Figure 19: Interactive visualisation generation with our GPT.

6.3. Generating mind maps to facilitate understanding

Finally, in line with the search for alternatives for a better access and understanding of the existing knowledge in our GPT, we will propose to MateriaGuru the construction of a mind map that allows us to understand in a visual way key concepts of critical minerals. For this purpose, we use the open Markmap notation (Markdown Mindmap), which allows us to define mind maps using markdown notation.

Figure 20: Generation of mind maps from our GPT

We will need to copy the generated code and paste it into a Markmap viewer in order to generate the desired mind map. Here we provide a version of this code generated by MateriaGuru.

Figure 21: Visualisation of mind maps.
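To give an idea of what Markmap notation looks like, the following sketch builds a small Markdown outline from a nested Python structure. It is not the code generated by MateriaGuru: the `to_markmap` helper is hypothetical, and the example content is limited to the criticality criteria and sectors listed in the Context section of this post.

```python
def to_markmap(title, tree):
    """Render a nested dict/list structure as a Markdown outline for Markmap."""
    lines = [f"# {title}"]

    def walk(node, depth):
        indent = "  " * depth
        if isinstance(node, dict):
            # Each key becomes a branch; its value holds the sub-branches.
            for key, child in node.items():
                lines.append(f"{indent}- {key}")
                walk(child, depth + 1)
        else:
            # Any other iterable is treated as a list of leaf labels.
            for leaf in node:
                lines.append(f"{indent}- {leaf}")

    walk(tree, 0)
    return "\n".join(lines)


mind_map = {
    "Criticality criteria": [
        "Scarce global reserves",
        "No alternative materials",
        "Indispensable for key sectors or high-risk supply chain",
    ],
    "Dependent sectors": ["Batteries", "Wind turbines", "Solar panels"],
}
print(to_markmap("Critical minerals", mind_map))
```

The printed text can be pasted into a Markmap viewer in the same way as the code produced by the assistant.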

7. Results and conclusions

In the exercise of building an expert assistant using GPT-4, we have succeeded in creating a specialised model for critical minerals. This assistant provides detailed and up-to-date information on critical minerals, supporting strategic decision-making and promoting education in this field. We first gathered information from reliable sources such as the RMIS, the International Energy Agency (IEA), and the Spanish Geological and Mining Institute (BDMIN). We then processed and structured the data appropriately for integration into the model. Validations showed that the assistant accurately answers domain-relevant questions, facilitating access to this information.

In this way, the development of the specialised critical minerals assistant has proven to be an effective solution for centralising and facilitating access to complex and dispersed information.

The use of tools such as Google Colab and Markmap has enabled better organisation and visualisation of data, increasing efficiency in knowledge management. This approach not only improves the understanding and use of critical mineral information, but also prepares users to apply this knowledge in real-world contexts.

The practical experience gained in this exercise is directly applicable to other projects that require customisation of language models for specific use cases.

8. Do you want to do the exercise?

If you want to replicate this exercise, access this repository, where you will find more information (the prompts used, the code generated by MateriaGuru, etc.)

Also, remember that you have at your disposal more exercises in the section "Step-by-step visualisations".

Content elaborated by Juan Benavente, industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

1. Introduction

Visualisations are graphical representations of data that allow to communicate, in a simple and effective way, the information linked to the data. The visualisation possibilities are very wide ranging, from basic representations such as line graphs, bar charts or relevant metrics, to interactive dashboards.

In this section of "Step-by-Step Visualisations" we regularly present practical exercises making use of open data available at datos.gob.es or other similar catalogues. They address and describe in a simple way the steps necessary to obtain the data, carry out the relevant transformations and analyses, and finally draw conclusions, summarising the information.

Documented code developments and free-to-use tools are used in each practical exercise. All the material generated is available for reuse in the GitHub repository of datos.gob.es.

In this particular exercise, we will explore the current state of electric vehicle penetration in Spain and the future prospects for this disruptive technology in transport.

Access the data lab repository on Github.

Run the data pre-processing code on Google Colab.

In this video (available with English subtitles), the author explains what you will find both on Github and Google Colab.

2. Context: why is the electric vehicle important?

The transition towards more sustainable mobility has become a global priority, placing the electric vehicle (EV) at the centre of many discussions on the future of transport. In Spain, this trend towards the electrification of the car fleet not only responds to a growing consumer interest in cleaner and more efficient technologies, but also to a regulatory and incentive framework designed to accelerate the adoption of these vehicles. With a growing range of electric models available on the market, electric vehicles represent a key part of the country's strategy to reduce greenhouse gas emissions, improve urban air quality and foster technological innovation in the automotive sector.

However, the penetration of EVs in the Spanish market faces a number of challenges, from charging infrastructure to consumer perception and knowledge of EVs. Expansion of the charging network, together with supportive policies and fiscal incentives, is key to overcoming existing barriers and stimulating demand. As Spain moves towards its sustainability and energy transition goals, analysing the evolution of the electric vehicle market becomes an essential tool to understand the progress made and the obstacles that still need to be overcome.

3. Objective

This exercise focuses on showing the reader techniques for the processing, visualisation and advanced analysis of open data using Python. We will adopt a "learning-by-doing" approach so that the reader can understand the use of these tools in the context of solving a real and topical challenge such as the study of EV penetration in Spain. This hands-on approach not only enhances understanding of data science tools, but also prepares readers to apply this knowledge to solve real problems, providing a rich learning experience that is directly applicable to their own projects.

The questions we will try to answer through our analysis are:

- Which vehicle brands led the market in 2023?

- Which vehicle models were the best-selling in 2023?

- What market share did electric vehicles capture in 2023?

- Which electric vehicle models were the best-selling in 2023?

- How have vehicle registrations evolved over time?

- Are we seeing any trends in electric vehicle registrations?

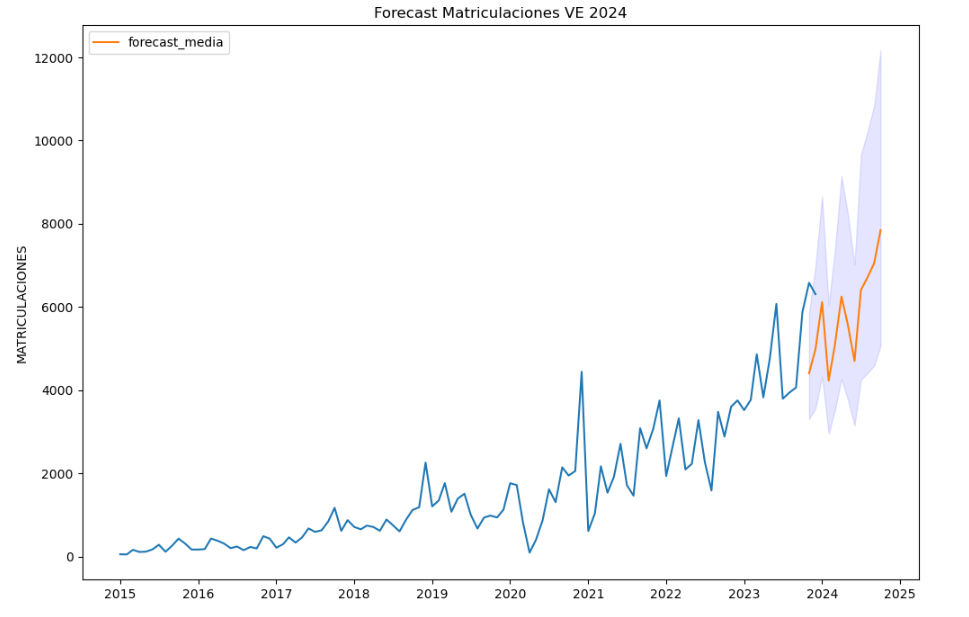

- How do we expect electric vehicle registrations to develop next year?

- How much CO2 emission reduction can we expect from the registrations achieved over the next year?

4. Resources

To complete the development of this exercise we will require the use of two categories of resources: Analytical Tools and Datasets.

4.1. Dataset

To complete this exercise we will use a dataset provided by the Dirección General de Tráfico (DGT) through its statistical portal, also available from the National Open Data catalogue (datos.gob.es). The DGT statistical portal is an online platform aimed at providing public access to a wide range of data and statistics related to traffic and road safety. This portal includes information on traffic accidents, offences, vehicle registrations, driving licences and other relevant data that can be useful for researchers, industry professionals and the general public.

In our case, we will use their dataset of vehicle registrations in Spain available via:

- Open Data Catalogue of the Spanish Government.

- Statistical portal of the DGT.

Although during the development of the exercise we will show the reader the necessary mechanisms for downloading and processing, we include pre-processed data in the associated GitHub repository, so that the reader can proceed directly to the analysis of the data if desired.

*The data used in this exercise were downloaded on 04 March 2024. The licence applicable to this dataset can be found at https://datos.gob.es/avisolegal.

4.2. Analytical tools

- Programming language: Python - a programming language widely used in data analysis due to its versatility and the wide range of libraries available. These tools allow users to clean, analyse and visualise large datasets efficiently, making Python a popular choice among data scientists and analysts.

- Platform: Jupyter Notebooks - a web application that allows you to create and share documents containing live code, equations, visualisations and narrative text. It is widely used for data science, data analytics, machine learning and interactive programming education.

- Main libraries and modules:

- Data manipulation: Pandas - an open source library that provides high-performance, easy-to-use data structures and data analysis tools.

- Data visualisation:

- Matplotlib: a library for creating static, animated and interactive visualisations in Python.

- Seaborn: a library based on Matplotlib. It provides a high-level interface for drawing attractive and informative statistical graphs.

- Statistics and algorithms:

- Statsmodels: a library that provides classes and functions for estimating many different statistical models, as well as for testing and exploring statistical data.

- Pmdarima: a library specialised in automatic time series modelling, facilitating the identification, fitting and validation of models for complex forecasts.

5. Exercise development

It is advisable to run the Notebook with the code at the same time as reading the post, as both didactic resources complement each other in the explanations that follow.

The proposed exercise is divided into three main phases.

5.1 Initial configuration

This section can be found in point 1 of the Notebook.

In this short first section, we will configure our Jupyter Notebook and our working environment to be able to work with the selected dataset. We will import the necessary Python libraries and create some directories where we will store the downloaded data.
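As a rough idea of what this directory set-up can look like, the sketch below creates a small folder structure with `pathlib`. The folder names (`raw`, `processed`) and the `create_workspace` helper are assumptions made for illustration and may not match the ones used in the Notebook. The library imports that the Notebook also performs at this point are omitted here.

```python
import tempfile
from pathlib import Path


def create_workspace(base_dir):
    """Create the data folders under base_dir and return their paths by name."""
    base = Path(base_dir)
    folders = {name: base / name for name in ("raw", "processed")}
    for path in folders.values():
        # exist_ok=True makes the cell safe to re-run in a notebook.
        path.mkdir(parents=True, exist_ok=True)
    return folders


# A temporary directory is used here so the example leaves nothing behind.
with tempfile.TemporaryDirectory() as tmp:
    workspace = create_workspace(Path(tmp) / "data")
    for name, path in workspace.items():
        print(name, path.is_dir())
```

In a real run, the base directory would simply be a folder next to the Notebook instead of a temporary one.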

5.2 Data preparation

This section can be found in point 2 of the Notebook.

All data analysis requires a phase of accessing and processing the data to obtain it in the desired format. In this phase, we will download the data from the statistical portal and transform it into the Apache Parquet format before proceeding with the analysis.

Users who want to go deeper into this task can read the Practical Introductory Guide to Exploratory Data Analysis.

5.3 Data analysis

This section can be found in point 3 of the Notebook.

5.3.1 Descriptive analysis

In this third phase, we will begin our data analysis. To do so, we will answer the first questions using data visualisation tools to familiarise ourselves with the data. Some examples of the analysis are shown below:

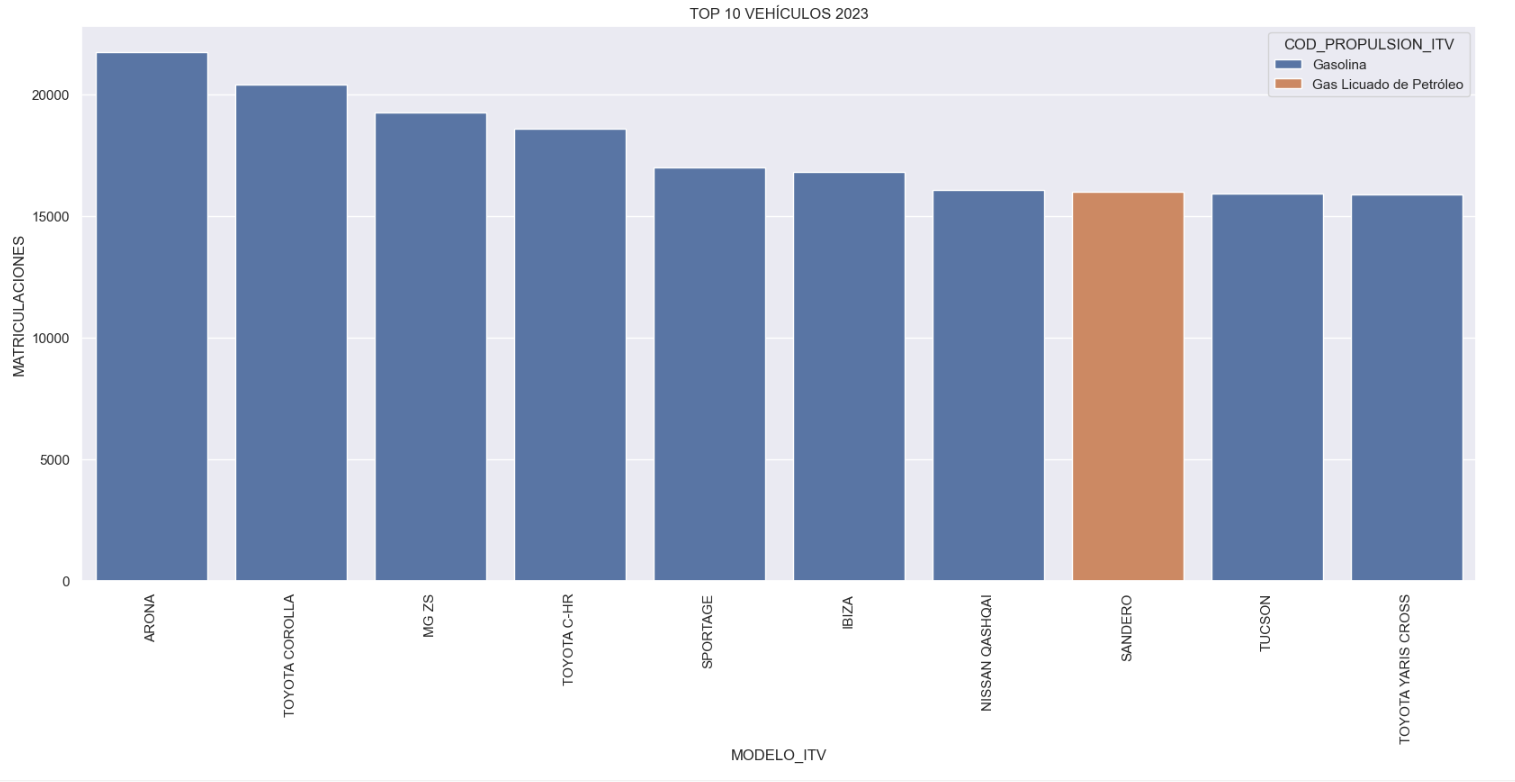

- Top 10 Vehicles registered in 2023: In this visualisation we show the ten vehicle models with the highest number of registrations in 2023, also indicating their combustion type. The main conclusions are:

- The only European-made vehicles in the Top 10 are the Arona and the Ibiza from Spanish brand SEAT. The rest are Asian.

- Nine of the ten vehicles are powered by gasoline.

- The only vehicle in the Top 10 with a different type of propulsion is the DACIA Sandero LPG (Liquefied Petroleum Gas).

Figure 1. Graph "Top 10 vehicles registered in 2023"
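A ranking like this one boils down to counting how many times each model appears in the registration records. The following sketch shows the idea with `collections.Counter`. The sample records and the `top_models` helper are invented for illustration and are not the Notebook's code or real registration data.

```python
from collections import Counter

# Made-up sample records (model, propulsion type); not real registration data.
registrations = [
    ("Model A", "petrol"),
    ("Model B", "LPG"),
    ("Model A", "petrol"),
    ("Model C", "petrol"),
    ("Model B", "LPG"),
    ("Model A", "petrol"),
]


def top_models(records, n=10):
    """Return the n most frequent (model, propulsion) pairs with their counts."""
    return Counter(records).most_common(n)


top = top_models(registrations, n=2)
for (model, propulsion), count in top:
    print(f"{model} ({propulsion}): {count}")
```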

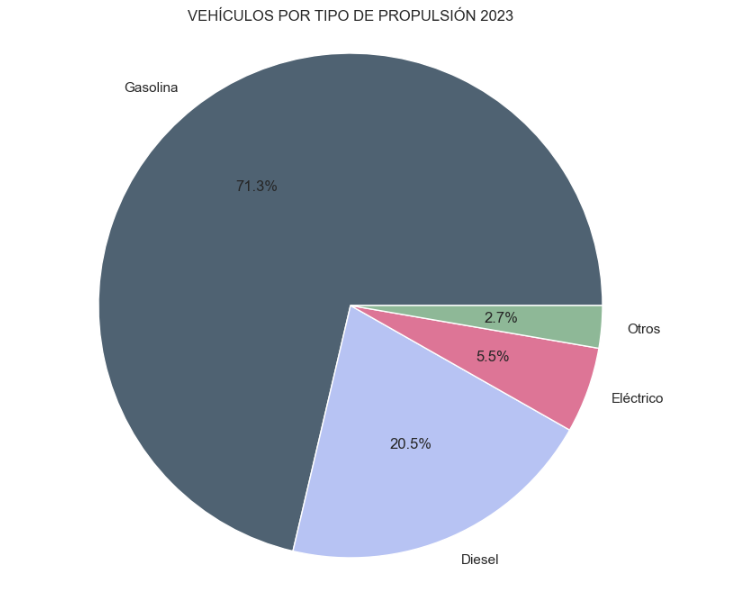

- Market share by propulsion type: In this visualisation we represent the percentage of vehicles registered by each type of propulsion (petrol, diesel, electric or other). We see how the vast majority of the market (>70%) was taken up by petrol vehicles, with diesel being the second choice, and how electric vehicles reached 5.5%.

Figure 2. Graph "Market share by propulsion type".
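The market share of each propulsion type is simply its number of registrations divided by the total, expressed as a percentage. A minimal sketch follows, assuming the counts per propulsion type have already been aggregated. The figures are invented for the example and do not come from the dataset.

```python
def market_share(counts):
    """Return the percentage share of each category, rounded to one decimal."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {name: round(100 * value / total, 1) for name, value in counts.items()}


# Made-up counts per propulsion type; not the real figures.
sample_counts = {"petrol": 600, "diesel": 250, "electric": 100, "other": 50}
shares = market_share(sample_counts)
print(shares)
```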

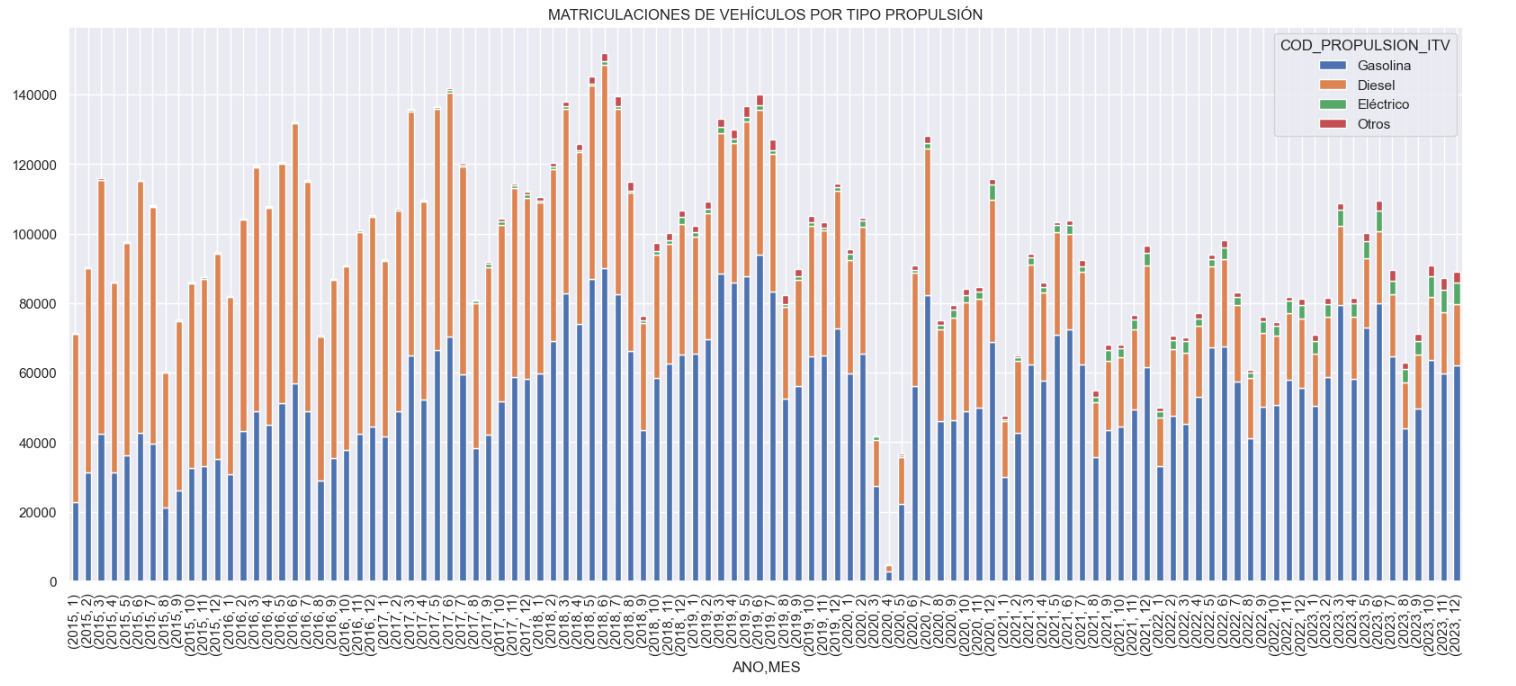

- Historical development of registrations: This visualisation represents the evolution of vehicle registrations over time. It shows the monthly number of registrations between January 2015 and December 2023, distinguishing between the propulsion types of the registered vehicles. Several aspects of this graph are worth noting:

- We observe an annual seasonal behaviour, i.e. patterns or variations that are repeated at regular time intervals. We see recurring high levels of registrations in June/July, while in August/September they decrease drastically. This is very relevant, as the analysis of time series with a seasonal factor has certain particularities.

- The huge drop in registrations during the first months of COVID is also very remarkable.

- We also see that post-COVID registration levels are lower than before.

- Finally, we can see how between 2015 and 2023 the registration of electric vehicles is gradually increasing.

Figure 3. Graph "Vehicle registrations by propulsion type".
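One simple way to check the seasonal behaviour described above is to average the registrations of each calendar month across all years: months that are systematically high or low stand out in the resulting profile. The sketch below assumes a series indexed by `(year, month)`. The data and the `monthly_profile` helper are hypothetical.

```python
from collections import defaultdict
from statistics import mean


def monthly_profile(series):
    """Average the values of each calendar month across all years.

    `series` maps (year, month) tuples to a number of registrations.
    """
    by_month = defaultdict(list)
    for (_year, month), value in series.items():
        by_month[month].append(value)
    return {month: mean(values) for month, values in sorted(by_month.items())}


# Made-up data for two months over two years; not real registrations.
sample = {
    (2021, 6): 120, (2021, 8): 60,
    (2022, 6): 140, (2022, 8): 80,
}
profile = monthly_profile(sample)
peak_month = max(profile, key=profile.get)
print(profile, peak_month)
```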

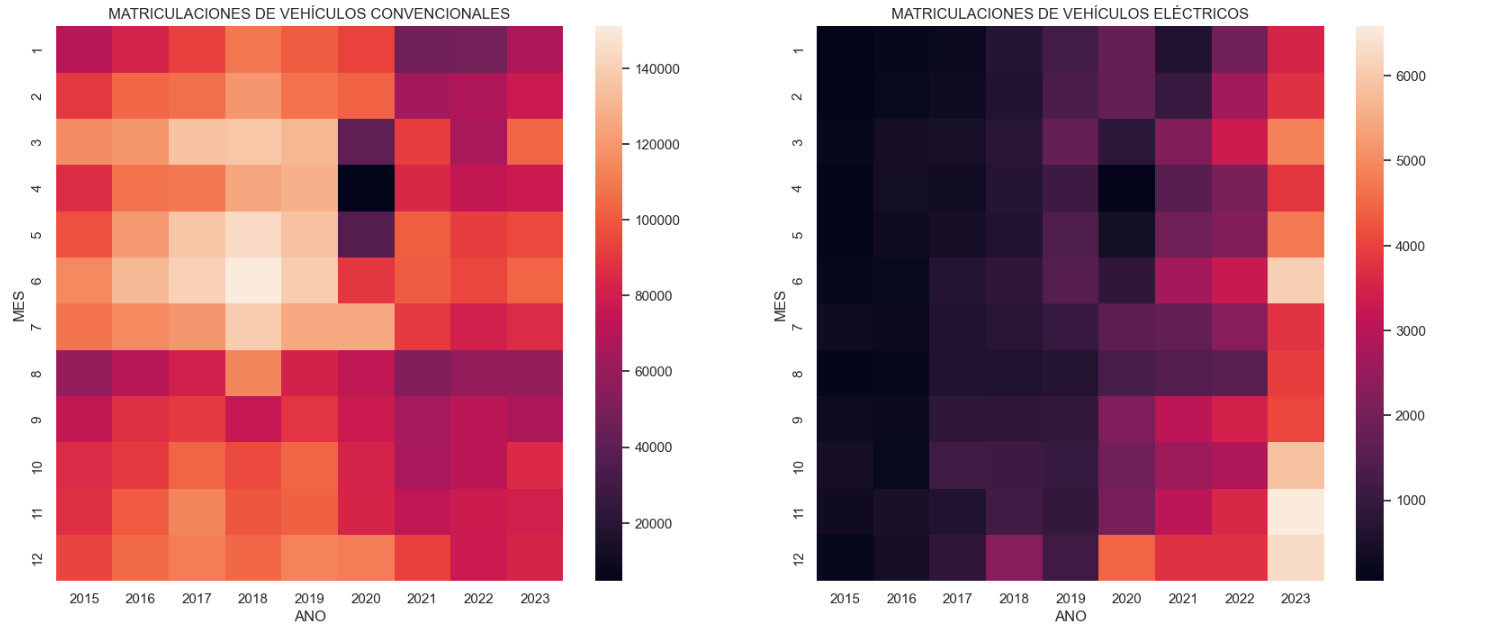

- Trend in the registration of electric vehicles: We now analyse the evolution of electric and non-electric vehicles separately using heat maps as a visual tool. We can observe very different behaviours between the two graphs. The electric vehicle shows a trend of increasing registrations year by year and, although COVID brought vehicle registrations to a halt, subsequent years have maintained the upward trend.

Figure 4. Graph "Trend in registration of conventional vs. electric vehicles".

5.3.2 Predictive analytics

To answer the last question objectively, we will use predictive models that allow us to make estimates regarding the evolution of electric vehicles in Spain. As we can see, the model constructed predicts that the expected growth in registrations will continue, with around 70,000 registrations over the year and values close to 8,000 registrations in the month of December 2024 alone.

Figure 5. Graph "Predicted electric vehicle registrations".
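To give an intuition of how a seasonal series can be projected forward, here is a deliberately simple baseline: a seasonal naive forecast scaled by the growth between the last two full seasons. This is not the model built in the Notebook, only a sketch of the general idea. The function name and the sample series are assumptions made for this example.

```python
def seasonal_naive_forecast(history, season_length=12):
    """Forecast one full season ahead.

    Each forecast value repeats the observation from one season earlier,
    scaled by the growth observed between the last two full seasons.
    """
    if len(history) < 2 * season_length:
        raise ValueError("need at least two full seasons of history")
    last = history[-season_length:]
    previous = history[-2 * season_length:-season_length]
    if sum(previous) == 0:
        raise ValueError("previous season sums to zero; growth is undefined")
    growth = sum(last) / sum(previous)
    return [value * growth for value in last]


# Made-up quarterly series (season_length=4) to keep the example short.
history = [10, 20, 30, 40, 20, 40, 60, 80]
forecast = seasonal_naive_forecast(history, season_length=4)
print(forecast)
```

A baseline of this kind is mainly useful as a reference point against which the forecasts of more elaborate statistical models can be compared.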

5. Conclusions

As a conclusion of the exercise, we can observe, thanks to the analysis techniques used, how the electric vehicle, a segment for now led by the manufacturer Tesla, is penetrating the Spanish vehicle fleet at an increasing speed, although it is still at a great distance from other alternatives such as diesel or petrol. We will see in the coming years whether the pace grows at the level needed to meet the sustainability targets set and whether Tesla remains the leader despite the strong entry of Asian competitors.

6. Do you want to do the exercise?

If you want to learn more about the electric vehicle and test your analytical skills, go to this code repository, where you can develop this exercise step by step.

Also, remember that more exercises are available in the "Step-by-step visualisations" section.

Content elaborated by Juan Benavente, industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

Data is a key part of Europe's digital economy. This is recognised in the Data Strategy, which aims to create a single market that allows free movement of data in order to foster digital transformation and technological innovation. However, achieving this goal involves overcoming a number of obstacles. One of the most salient is the distrust that citizens may feel about the process.

In response to this need comes the Data Governance Act (DGA), a horizontal instrument that seeks to regulate the re-use of data over which third-party rights concur, and to promote their exchange under the principles and values of the European Union. The objectives of the DGA include strengthening the confidence of citizens and businesses that their data is re-used under their control, in accordance with minimum legal standards.

Among other issues, the DGA elaborates on the concept of data intermediaries, for whom it establishes a notification and supervision framework.

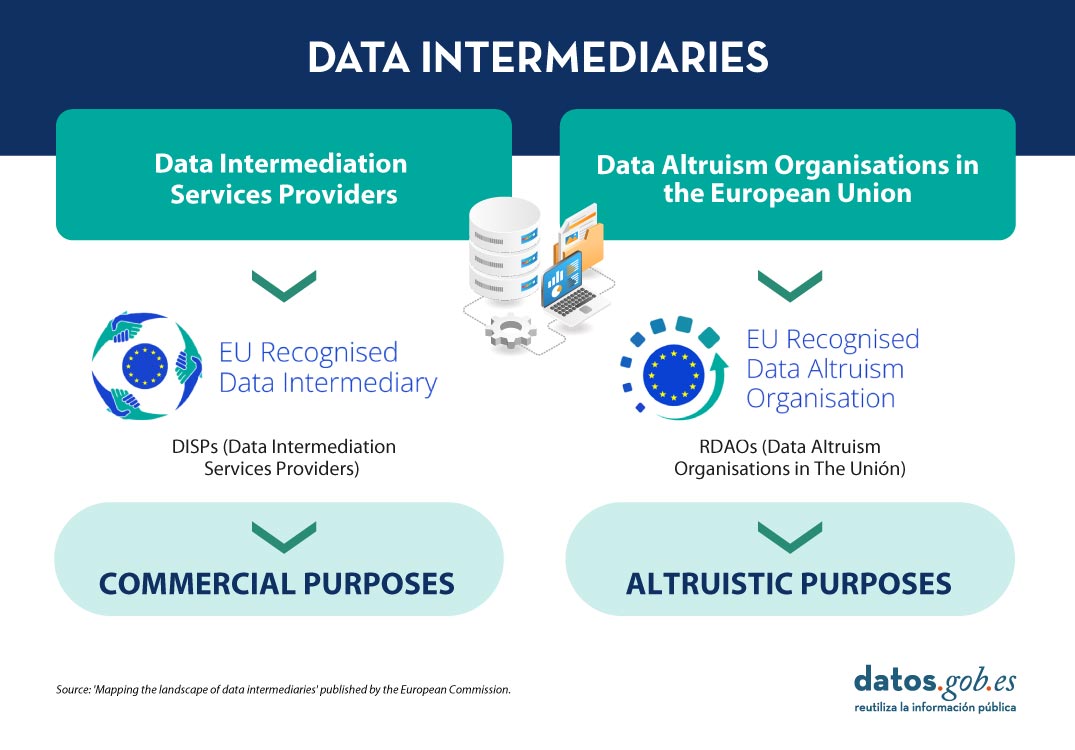

What are data intermediaries?

The concept of data intermediaries is relatively new in the data economy, so there are multiple definitions. In the context of the DGA, Data Intermediation Services Providers (DISPs) are those "whose purpose is to establish commercial relationships for the exchange of data between an undetermined number of data subjects and data holders on the one hand, and data users on the other hand".

The Data Governance Act also differentiates between Data Intermediation Services Providers and Data Altruism Organisations Recognised in the Union (RDAOs). The latter concept describes a data exchange relationship that is altruistic, i.e. one that does not seek a profit.

What types of data intermediation services exist according to the DGA?

Data intermediation services are another piece of the data sharing puzzle, as they make it easier for data subjects to share their data so that it can be reused. They can also provide technical infrastructure and expertise to support interoperability between datasets, or act as mediators negotiating exchange agreements between parties interested in sharing, accessing or pooling data.

Chapter III of the Data Governance Act explains three types of data intermediation services:

- Intermediation services between data holders and their potential users, including the provision of technical or other means to enable such services. They may include the bilateral or multilateral exchange of data, as well as the creation of platforms, databases or infrastructures enabling their exchange or common use.

- Intermediation services between natural persons wishing to make their personal and non-personal data available and potential users, including technical means. These services should make it possible for data subjects to exercise their rights as provided for in the General Data Protection Regulation (Regulation 2016/679).

- Data cooperatives. These are organisational structures made up of data subjects, sole proprietorships or SMEs. These entities assist cooperative members in exercising their rights over their data.

In summary, the first type of service can facilitate the exchange of industrial data, the second focuses mainly on the exchange of personal data and the third covers collective data exchange and related governance schemes.

Categories of data intermediaries in detail:

To explore these concepts further, the European Commission has published the report 'Mapping the landscape of data intermediaries', which examines in depth the types of data intermediation that exist. The report's findings highlight the fragmentation and heterogeneity of the field.

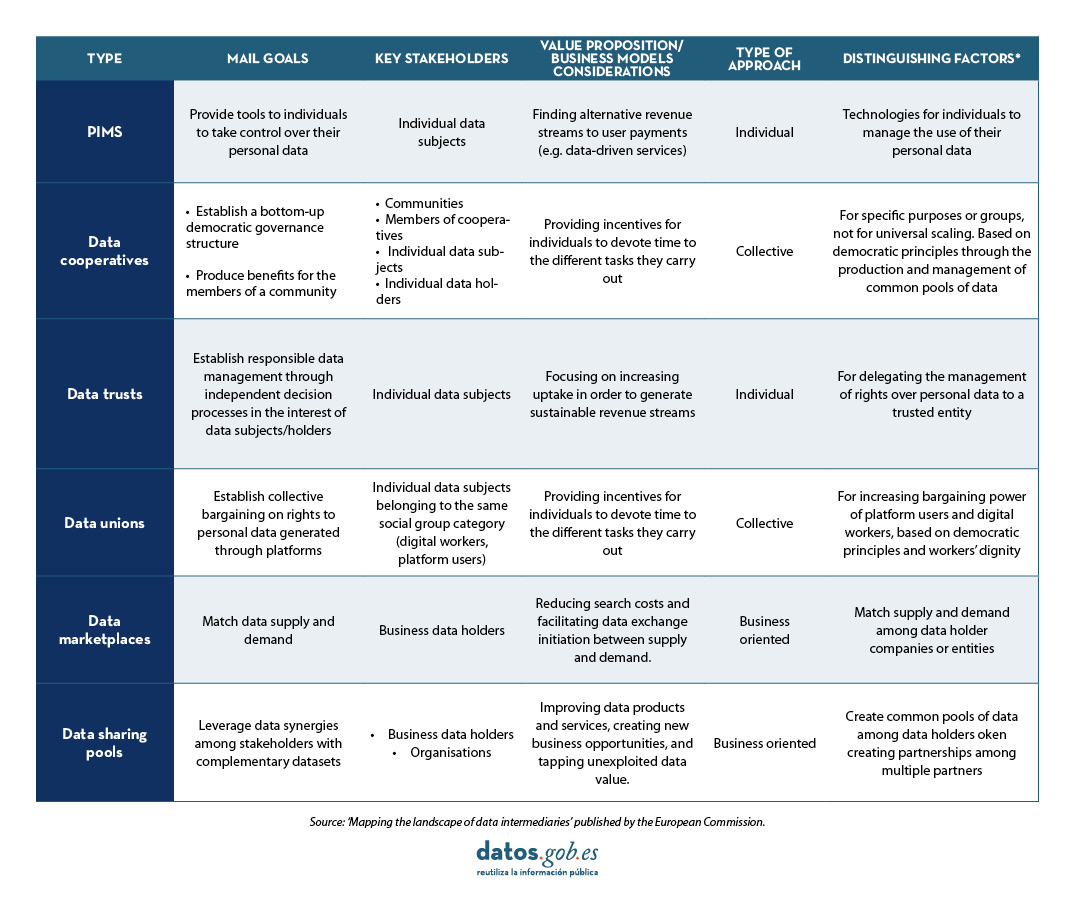

Types of data intermediaries range from individualistic and business-oriented to more collective and inclusive models that support greater participation in data governance by communities and individual data subjects. Taking into account the categories included in the DGA, six types of data intermediaries are described:

| Types of data intermediation services according to the DGA | Equivalence in the report 'Mapping the landscape of data intermediaries' |
|---|---|
| Intermediation services between data holders and potential data users (I) | |
| Intermediation services between data subjects or individuals and data users (II) | |
| Data cooperatives (III) | |

Source: Mapping the landscape of data intermediaries, published by the European Commission

Each of these is described below:

- Personal Information Management Systems (PIMS): provide tools for individuals to control and direct the processing of their data.

- Data cooperatives: foster democratic governance through agreements between members. Individuals manage their data for the benefit of the whole community.

- Data trusts: establish specific legal mechanisms to ensure responsible and independent management of data between two entities, an intermediary that manages the data and its rights, and a beneficiary and owner of the data.

- Data syndicates: these are sectoral or territorial unions between different data owners that manage and protect the rights over personal data generated through platforms by both users and workers.

- Data marketplaces: these are platforms that match supply and demand for data or data-based products/services.

- Data sharing pools: these are alliances between parties interested in sharing data to improve their assets (data products, processes and services) by taking advantage of the complementarity of the data pooled.

In order to consolidate data intermediaries, further research will be needed to better define the concept. This process will entail assessing the needs of developers and entrepreneurs on the economic, legal and technical issues that play a role in the establishment of data intermediaries, the incentives on both the supply and demand side, and the possible connections of data intermediaries with other EU data policy instruments.

The types of data intermediaries differ according to several parameters, but are complementary and may overlap in certain respects. For each type of data intermediary presented, the report provides information on how it works, its main features, selected examples and business model considerations.

Requirements for data intermediaries in the European Union

The DGA establishes rules of the game to ensure that data intermediation service providers perform their services under the principles and values of the European Union (EU). Providers are subject to the law of the Member State where their head office is located. A provider not established in the EU must appoint a legal representative in one of the Member States where its services are offered.

Any data intermediation service provider operating in the EU must notify the competent authority. This authority is designated by each Member State and ensures that the provider carries out its activity in compliance with the law. The notification must include the provider's name, legal nature (including information on structure and subsidiaries), address, website with information on its activities, contact person and estimated date of commencement of activity. In addition, it must include a description of the data intermediation service it performs, indicating the category detailed in the DGA to which it belongs, i.e. intermediation services between data holders and data users, intermediation services between data subjects or individuals and data users, or data cooperatives.

Furthermore, in its Article 12, the DGA lays down a number of conditions for the provision of data intermediation services. For example, providers may not use the data in connection with the provision of their services, but only make them available. They must also respect the original formats and may only make transformations to improve their interoperability. They should also provide for procedures to prevent fraudulent or abusive practices by users. This is to ensure that services are neutral, transparent and non-discriminatory.

Future scenarios for data intermediaries

According to the report "Mapping the landscape of data intermediaries", the scenario envisaged for data intermediaries involves overcoming a number of challenges:

- Identify appropriate business models that guarantee economic sustainability.

- Expand demand for data intermediation services.

- Understand the neutrality requirement set by the DGA and how it could be implemented.

- Align data intermediaries with other EU data policy instruments.

- Consider the needs of developers and entrepreneurs.

- Meet the demand of data intermediaries.