The "Stories of Use Cases" series, organized by the European Open Data portal (data.europa.eu), is a collection of online events focused on the use of open data to contribute to common European Union objectives such as consolidating democracy, boosting the economy, combating climate change, and driving digital transformation. The series comprises four events, and all recordings are available on the European Open Data portal's YouTube channel. The presentations used to showcase each case are also published.

In a previous post on datos.gob.es, we explained the applications presented in two of the series' events, specifically those related to the economy and democracy. Now, we focus on use cases related to climate and technology, as well as the open datasets used for their development.

Open data has enabled the development of applications offering diverse information and services. In the climate field, some applications trace waste management processes or visualize relevant data about organic agriculture. In the technological sphere, meanwhile, open data facilitates process management. Discover the examples highlighted by the European Open Data portal!

Open Data for Fulfilling the European Green Deal

The European Green Deal is a European Commission strategy that aims to achieve climate neutrality in Europe by 2050 and promote sustainable economic growth. To reach this objective, the European Commission is working on various actions, including reducing greenhouse gas emissions, transitioning to a circular economy, and improving energy efficiency. In pursuit of this common goal, three applications built on open datasets were presented in one of the webinars of the data.europa.eu use case series: Eviron Mate, Geofluxus, and MyBioEuBuddy.

- Eviron Mate: An educational project aimed at raising awareness among young people about climate change and related data. To achieve this goal, Eviron Mate uses open data from Eurostat, the Copernicus Program and data.europa.eu.

- Geofluxus: This initiative tracks waste from its origin to its final destination to promote material reuse and reduce waste volume. Its main objective is to extend material lifespan and provide businesses with tools for better waste management decisions. Geofluxus uses open data from Eurostat and various national open data portals.

- MyBioEuBuddy: A project offering information and visualizations about sustainable agriculture in Europe, using open data from Eurostat and various regional open data portals.

The Role of Open Data in Digital Transformation

In addition to contributing to the fight against climate change by monitoring environment-related processes, open data can yield interesting outcomes in other digitally operated domains. Combining open data with innovative technologies, such as natural language processing, artificial intelligence, or augmented reality, provides valuable results.

Another online seminar from the series, presented by the European Data Portal, delved into this theme: driving digital transformation in Europe through open data. During the event, three applications that combine cutting-edge technology and open data were presented: Big Data Test Infrastructure, Lobium, and 100 Europeans.

- Big Data Test Infrastructure (BDTI): A European Commission tool featuring a cloud platform to facilitate the analysis of open data for public sector administrations, offering a free and ready-to-use solution. BDTI provides open-source tools that promote the reuse of public sector data. Any public administration can request the free advisory service by filling out a form. BDTI has already helped some public sector entities optimize procurement processes, obtain mobility information for service redesign, and assist doctors in extracting knowledge from articles.

- Lobium: A website assisting public affairs managers in addressing the complexities of their tasks. Its aim is to provide tools for campaign management, internal reporting, KPI measurement, and government affairs dashboards. Ultimately, its solution leverages digital tools' advantages to enhance and optimize public management.

- 100 Europeans: An application that simplifies European statistics, dividing the European population into 100 individuals. Through scrolling navigation, it presents data visualizations with figures related to healthy habits and consumption in Europe.

These six applications are examples of how open data can be used to develop solutions of societal interest. Discover more use cases created with open data in this article published on datos.gob.es.

Learn more about these applications in their seminars -> Recordings here

The combination and integration of open data with artificial intelligence (AI) is an area of work with the potential to achieve significant advances in multiple fields and bring improvements to various aspects of our lives. The most frequently mentioned area of synergy is the use of open data as input for training the algorithms used by AI, since these systems require large amounts of data to fuel their operations. This makes open data an essential element for AI development, and using it as input brings additional advantages such as more equal access to technology and improved transparency regarding algorithmic functioning.

Today, we can find open data powering algorithms for AI applications in diverse areas such as crime prevention, public transportation development, gender equality, environmental protection, healthcare improvement, and the creation of more friendly and liveable cities. All of these objectives are more easily attainable through the appropriate combination of these technological trends.

However, as we will see next, when envisioning the joint future of open data and AI, the combined use of both concepts can also lead to many other improvements in how we currently work with open data throughout its entire lifecycle. Let's review step by step how artificial intelligence can enrich a project with open data.

Utilizing AI to Discover Sources and Prepare Data Sets

Artificial intelligence can assist right from the initial steps of our data projects by supporting the discovery and integration of various data sources, making it easier for organizations to find and use relevant open data for their applications. Furthermore, future trends may involve the development of common data standards, metadata frameworks, and APIs to facilitate the integration of open data with AI technologies, further expanding the possibilities of automating the combination of data from diverse sources.

In addition to automating the guided search for data sources, AI-driven automated processes can help, at least in part, with data cleaning and preparation. This can improve the quality of open data by identifying and correcting errors, filling gaps in the data, and enhancing its completeness. It would also free data scientists and analysts from certain basic and repetitive tasks, allowing them to focus on more strategic activities such as developing new ideas and making predictions.

Innovative Techniques for Data Analysis with AI

One characteristic of AI models is their ability to detect patterns and knowledge in large amounts of data. AI techniques such as machine learning, natural language processing, and computer vision can easily be used to extract new perspectives, patterns, and knowledge from open data. Moreover, as technological development continues to advance, we can expect the emergence of even more sophisticated AI techniques specifically tailored for open data analysis, enabling organizations to extract even more value from it.

Simultaneously, AI technologies can help us go a step further in data analysis by facilitating and assisting in collaborative data analysis. Through this process, multiple stakeholders can work together on complex problems and find answers through open data. This would also lead to increased collaboration among researchers, policymakers, and civil society communities in harnessing the full potential of open data to address social challenges. Additionally, this type of collaborative analysis would contribute to improving transparency and inclusivity in decision-making processes.

The Synergy of AI and Open Data

AI can also be used to automate many tasks involved in data presentation, such as creating interactive visualizations simply by providing instructions in natural language or a description of the desired visualization.

On the other hand, open data enables the development of applications that, combined with artificial intelligence, can provide innovative solutions. The development of new applications driven by open data and artificial intelligence can contribute to various sectors such as healthcare, finance, transportation, or education, among others. For example, AI already powers chatbots that provide customer service, algorithms that support investment decisions, and autonomous vehicles. By using open data as the primary data source for these services, we would achieve higher

Finally, AI can also be used to analyze large volumes of open data and identify new patterns and trends that would be difficult to detect through human intuition alone. This information can then be used to make better decisions, such as what policies to pursue in each area to bring about the desired changes.

These are just some of the possible future trends at the intersection of open data and artificial intelligence, a future full of opportunities but at the same time not without risks. As AI continues to develop, we can expect to see even more innovative and transformative applications of this technology. This will also require closer collaboration between artificial intelligence researchers and the open data community in opening up new datasets and developing new tools to exploit them. This collaboration is essential in order to shape the future of open data and AI together and ensure that the benefits of AI are available to all in a fair and equitable way.

Content prepared by Carlos Iglesias, Open Data Researcher and Consultant, World Wide Web Foundation.

The contents and views reflected in this publication are the sole responsibility of the author.

Open data is a highly valuable source of knowledge for our society. Thanks to it, applications can be created that contribute to social development and solutions that help shape Europe's digital future and achieve the Sustainable Development Goals (SDGs).

The European Open Data portal (data.europa.eu) organizes online events to showcase projects that have been carried out using open data sources and have helped address some of the challenges our society faces: from combating climate change and boosting the economy to strengthening European democracy and digital transformation.

In 2023, four seminars have been held to analyze the positive impact of open data on each of these themes. All the material presented at these events is published on the European data portal, and recordings are available on its YouTube channel, accessible to any interested user.

In this post, we take a first look at the showcased use cases related to boosting the economy and democracy, as well as the open data sets used for their development.

Solutions Driving the European Economy and Lifestyle

In a rapidly evolving world where economic challenges and aspirations for a prosperous lifestyle converge, the European Union has demonstrated an unparalleled ability to forge innovative solutions that not only drive its own economy but also elevate the standard of living for its citizens. In this context, open data has played a pivotal role in the development of applications that address current challenges and lay the groundwork for a prosperous and promising future. Two of these projects were presented in the second webinar of the series "Stories of Use Cases", an event focused on "Open Data to Foster the European Economy and Lifestyle": UNA Women and YouthPOP.

The first project focuses on tackling one of the most relevant challenges we must overcome to achieve a just society: gender inequality. Closing the gender gap is a complex social and economic issue. According to estimates from the World Economic Forum, it will take 132 years to achieve full gender parity in Europe. The UNA Women application aims to reduce that figure by providing guidance to young women so they can make better decisions regarding their education and early career steps. In this use case, the company ITER IDEA has used over 6 million lines of processed data from various sources, such as data.europa.eu, Eurostat, Censis, Istat (Italy's National Institute of Statistics), and NUMBEO.

The second presented use case also targets the young population. This is the YouthPOP application (Youth Public Open Procurement), a tool that encourages young people to participate in public procurement processes. For the development of this app, data from data.europa.eu, Eurostat, and ESCO, among others, have been used. YouthPOP aims to improve youth employment and contribute to the proper functioning of democracy in Europe.

Open Data for Boosting and Strengthening European Democracy

In this regard, the use of open data also contributes to strengthening and consolidating European democracy. Open data plays a crucial role in our democracies through the following avenues:

- Providing citizens with reliable information.

- Promoting transparency in governments and public institutions.

- Combating misinformation and fake news.

The theme of the third webinar organized by data.europa.eu on use cases was "Open Data and a New Impetus for European Democracy". This event presented two innovative solutions: EU Integrity Watch and the EU Institute for Freedom of Information.

Firstly, EU Integrity Watch is a platform that provides online tools for citizens, journalists, and civil society to monitor the integrity of decisions made by politicians in the European Union. This website offers visualizations to understand the information and provides access to collected and analyzed data. The analyzed data is used in scientific disclosures, journalistic investigations, and other areas, contributing to a more open and transparent government. This tool processes and offers data from the Transparency Register.

The second initiative presented in the webinar on open data and democracy is the EU Institute for Freedom of Information (IDFI), a Georgian non-governmental organization that focuses on monitoring and supervising government actions, revealing infractions, and keeping citizens informed.

The main activities of the IDFI include requesting public information from relevant bodies, creating rankings of public bodies, monitoring the websites of these bodies, and advocating for improved access to public information, legislative standards, and related practices. This project obtains, analyzes, and presents open data sets from national public institutions.

In conclusion, open data makes it possible to develop applications that reduce the gender wage gap, boost youth employment, or monitor government actions. These are just a few examples of the value that open data can offer to society.

Learn more about these applications in their seminars -> Recordings here.

1. Introduction

Visualizations are graphical representations of data that allow the information linked to them to be communicated in a simple and effective way. The visualization possibilities are very wide, from basic representations, such as line, bar or pie charts, to visualizations configured on interactive dashboards.

In this "Step-by-Step Visualizations" section we regularly present practical exercises on visualizing open data available on datos.gob.es or in similar catalogs. Each exercise describes, in a simple way, the stages needed to obtain the data, carry out the relevant transformations and analyses, and finally create interactive visualizations from which conclusions can be drawn. The exercises use simple, well-documented code and free-to-use tools. All generated material is available for reuse in the GitHub Data Lab repository.

As a complement to the explanation below, you can access the code used in this exercise, which is explained and developed in the following sections of this post.

Access the data lab repository on Github.

Run the data pre-processing code on top of Google Colab.

2. Objective

The main objective of this exercise is to show how to generate an interactive dashboard that presents relevant information on the food consumption of Spanish households, based on open data. To do this, we will pre-process the open data to obtain the tables that we will load into the visualization tool to create the interactive dashboard.

Dashboards, also known as scorecards, are tools that present information in a visual and easily understandable way. They are used to monitor, analyze and communicate data and indicators. Their content typically includes charts, tables, indicators, maps, and other visuals that represent relevant data and metrics. These visualizations help users quickly understand a situation, identify trends, spot patterns, and make informed decisions.

Once the data has been analyzed, through this visualization we will be able to answer questions such as those posed below:

- What has been the trend in recent years in per capita spending and consumption for the different foods that make up the basic basket?

- What foods are the most and least consumed in recent years?

- In which Autonomous Communities are food expenditure and consumption highest?

- Has the increase in the cost of certain foods in recent years meant a reduction in their consumption?

These and many other questions can be answered through the dashboard, which presents the information in an orderly and easy-to-interpret way.

3. Resources

3.1. Datasets

The open datasets used in this exercise contain information on per capita consumption and per capita expenditure for the main food groups, broken down by Autonomous Community. The datasets, which belong to the Ministry of Agriculture, Fisheries and Food (MAPA), are provided in annual series (we will use the annual series from 2010 to 2021).

Annual series data on household food consumption

These datasets are also available for download from the following Github repository.

3.2. Tools

The data preprocessing tasks have been carried out in the Python programming language, written in a Jupyter Notebook hosted on the Google Colab cloud service.

"Google Colab", also called Google Colaboratory, is a cloud service from Google Research that allows you to program, execute and share code written in Python or R in a Jupyter Notebook from your browser, so it does not require configuration. This service is free of charge.

The dashboard has been created with the Looker Studio tool.

"Looker Studio", formerly known as Google Data Studio, is an online tool that allows you to create interactive dashboards that can be embedded in websites or exported as files. This tool is simple to use and offers multiple customization options.

If you want to know more about tools that can help you process and visualize data, you can consult the report "Data processing and visualization tools".

4. Processing or preparation of data

The processes described below are commented in the following Notebook, which you can run from Google Colab.

Before building an effective visualization, we must first prepare the data, paying special attention to how it is obtained and to validating its content, making sure that it is in an appropriate and consistent format for processing and that it does not contain errors.

As a first step, once the initial datasets are loaded, it is necessary to perform an exploratory data analysis (EDA) to properly interpret the starting data and detect anomalies, missing data or errors that could affect the quality of subsequent processes and results. If you want to know more about this process, you can consult the Practical Guide of Introduction to Exploratory Data Analysis.
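As an illustration of the kind of checks an EDA involves, the following minimal sketch counts missing and negative values and computes basic statistics per numeric column. It uses only the Python standard library; the column names and figures are hypothetical and do not come from the MAPA files, and the notebook in the repository may well take a different approach.

```python
import csv
import io
from statistics import mean

# Hypothetical sample: column names and figures are invented for illustration
# and do not reproduce the real MAPA datasets.
RAW = """year,region,food_group,consumption_kg,expenditure_eur
2020,Asturias,Fresh fruit,101.2,160.5
2020,Andalusia,Fresh fruit,,120.3
2021,Asturias,Liquid milk,75.0,55.1
2021,Andalusia,Liquid milk,68.4,-1
"""

rows = list(csv.DictReader(io.StringIO(RAW)))


def summarize(rows, numeric_columns):
    """Per numeric column, count missing and negative values and compute min, max and mean."""
    report = {}
    for col in numeric_columns:
        # Empty strings are treated as missing values and skipped in the statistics.
        values = [float(r[col]) for r in rows if r[col] != ""]
        report[col] = {
            "missing": sum(1 for r in rows if r[col] == ""),
            "negative": sum(1 for v in values if v < 0),
            "min": min(values),
            "max": max(values),
            "mean": mean(values),
        }
    return report


report = summarize(rows, ["consumption_kg", "expenditure_eur"])
for col, stats in report.items():
    print(col, stats)
```

In this invented sample, the check flags one missing consumption value and one negative expenditure, which are the kinds of anomalies that should be resolved before building the visualization tables.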

The next step is to generate the pre-processed data table that we will use to feed the visualization tool (Looker Studio). To do this, we will modify, filter and join the data according to our needs.

The steps followed in this data preprocessing, explained in the following Google Colab Notebook, are as follows:

- Installation of libraries and loading of datasets

- Exploratory Data Analysis (EDA)

- Generating preprocessed tables
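The last step above, generating the preprocessed table by filtering and joining the data, could be sketched roughly as follows. This is a simplified illustration under stated assumptions, not the code from the notebook: the two input tables, their column names and their values are hypothetical, and only the 2010 to 2021 range is taken from the description of the datasets.

```python
# Hypothetical rows: real column names and values come from the MAPA files.
consumption = [
    {"year": 2009, "region": "Asturias", "food_group": "Meat", "consumption_kg": 55.0},
    {"year": 2020, "region": "Asturias", "food_group": "Meat", "consumption_kg": 50.0},
    {"year": 2021, "region": "Asturias", "food_group": "Meat", "consumption_kg": 48.0},
]
expenditure = [
    {"year": 2020, "region": "Asturias", "food_group": "Meat", "expenditure_eur": 350.0},
    {"year": 2021, "region": "Asturias", "food_group": "Meat", "expenditure_eur": 340.0},
]

# Columns that identify one observation in both tables (an assumption).
KEY = ("year", "region", "food_group")


def preprocess(consumption, expenditure, first_year=2010, last_year=2021):
    """Keep rows within the year range and inner-join both tables on KEY."""
    spend_by_key = {
        tuple(row[k] for k in KEY): row["expenditure_eur"]
        for row in expenditure
        if first_year <= row["year"] <= last_year
    }
    table = []
    for row in consumption:
        key = tuple(row[k] for k in KEY)
        if first_year <= row["year"] <= last_year and key in spend_by_key:
            # Merge the matching expenditure into a copy of the consumption row.
            table.append({**row, "expenditure_eur": spend_by_key[key]})
    return table


table = preprocess(consumption, expenditure)
for row in table:
    print(row)
```

The result is a single flat table with one row per year, territory and food group, which is the kind of structure a dashboard tool can consume directly.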

You can reproduce this analysis with the source code available in our GitHub account. The code is provided as a Jupyter Notebook that, once loaded into the development environment, you can easily run or modify. Given the informative nature of this post, and to help non-specialized readers follow it, the code is intended to be easy to understand rather than as efficient as possible, so you will probably think of many ways to optimize it to achieve similar purposes. We encourage you to do so!

5. Displaying the interactive dashboard

Once the data has been preprocessed, we move on to generating the dashboard. A dashboard, or scorecard, is a visual tool that provides a summary view of key data and metrics. It is useful for monitoring, decision-making and effective communication, as it provides a clear and concise view of relevant information.

The interactive visualizations that make up the dashboard have been created with the Looker Studio tool. As it is an online tool, no software needs to be installed to interact with or generate a visualization, but the data table we provide must be properly structured, which is why we carried out the preprocessing steps above. If you want to know more about how to use Looker Studio, the following link gives access to training on the tool.

Below is the dashboard, which can be opened in a new tab in the following link. In the following sections we will break down each of the components that make it up.

5.1. Filters

Filters in a dashboard are selection options that allow you to visualize and analyze specific data by applying various filtering criteria to the datasets presented in the dashboard. They help you focus on relevant information and get a more accurate view of your data.

The filters included in the generated dashboard allow you to choose the type of analysis to be displayed, the territory or Autonomous Community, the category of food and the years of the sample.

It also includes buttons to clear the chosen filters, download the dashboard as a PDF report and access the raw data with which the dashboard has been prepared.

5.2. Interactive visualizations

The dashboard is composed of various types of interactive visualizations, which are graphical representations of data that allow users to actively explore and manipulate information.

Unlike static visualizations, interactive visualizations let users interact with the data by clicking on elements, dragging them, zooming in or out, filtering data, changing parameters and viewing the results in real time.

This interaction is especially useful when working with large and complex data sets, as it makes it easier for users to examine different aspects of the data as well as discover patterns, trends and relationships in a more intuitive way.

The definition of each type of visualization is based on the data visualization guide for local entities presented by the Network of Local Entities for Transparency and Citizen Participation of the FEMP.

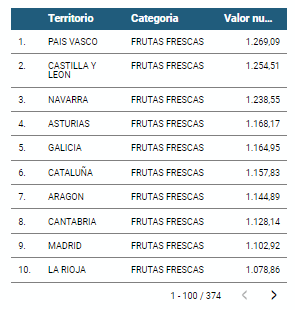

5.2.1 Data tables

Data tables present a large amount of data in an organized and clear way, conveying a lot of information in little space.

However, patterns and interpretations can be harder to see in a table than in more graphical visual objects.

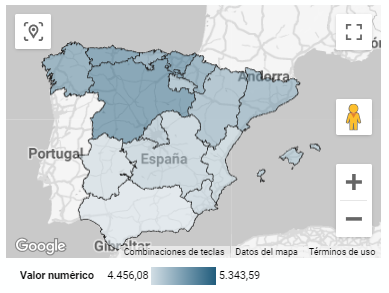

5.2.2 Choropleth map

It is a map in which numerical data are shown by territory, shading each area with a different colour intensity. To build it, you need a numerical measure, a categorical field identifying the territory and geographical data delimiting the area of each territory.

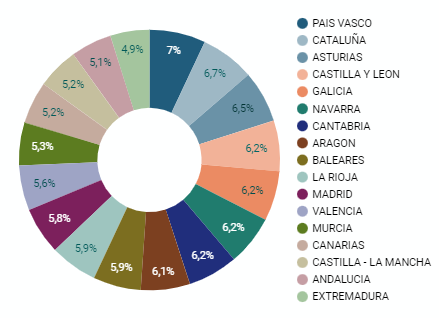

5.2.3 Pie chart

It is a graph that plots the data on polar axes, in which the angle of each sector marks the proportion of a category with respect to the total. Its purpose is to show the share of the total that each category represents.

Figure 4. Dashboard pie chart
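The relationship between each category's value and its sector angle can be made concrete with a short sketch. The function name and the figures are hypothetical and serve only to illustrate the proportion; visualization tools such as Looker Studio perform this calculation internally.

```python
def pie_sectors(values):
    """Map each category to (share of the total, sector angle in degrees)."""
    total = sum(values.values())
    return {name: (v / total, 360.0 * v / total) for name, v in values.items()}


# Hypothetical figures, for illustration only.
sectors = pie_sectors({"Fresh fruit": 50.0, "Liquid milk": 30.0, "Meat": 20.0})
for name, (share, angle) in sectors.items():
    print(f"{name}: {share:.0%} of the total, {angle:.0f} degrees")
```

Because every angle is the same fraction of 360 degrees as the category is of the total, the sectors always add up to a full circle.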

5.2.4 Line chart

It is a graph that shows the relationship between two or more measurements of a series of values on two Cartesian axes, with a time dimension on the X axis and a numerical measure on the Y axis. These charts are ideal for representing time series with a large number of data points or observations.

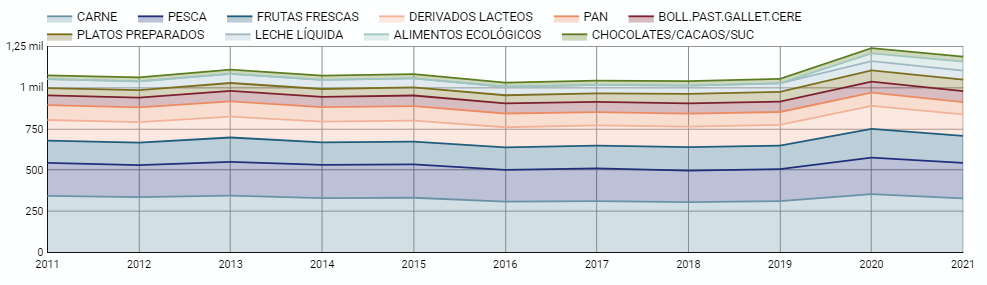

Figure 5. Dashboard line chart

5.2.5 Bar chart

It is one of the most widely used charts because it is clear and simple to prepare. Values are easy to read by comparing the lengths of the bars. The chart displays the data using one axis for the quantitative values and another for the qualitative data, such as categories or time.

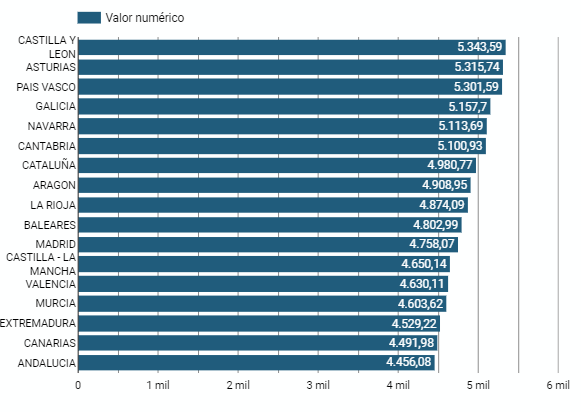

Figure 6. Dashboard bar chart

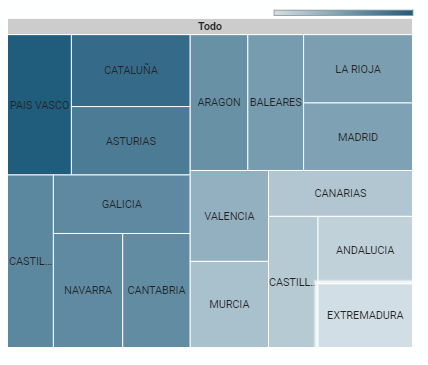

5.2.6 Hierarchy chart

It is a graph formed by different rectangles that represent categories, and that allows hierarchical groupings of the sectors of each category. The dimension of each rectangle and its placement varies depending on the value of the measurement of each of the categories shown with respect to the total value of the sample.

Figure 7. Dashboard Hierarchy chart

6. Conclusions

Dashboards are one of the most powerful mechanisms for exploiting and analyzing the meaning of data. They are particularly valuable for monitoring, analyzing and communicating data and indicators in a clear, simple and effective way.

As a result, we have been able to answer the questions originally posed:

- The trend in per capita consumption has been declining since 2013, when it peaked, with a small rebound in 2020 and 2021.

- Per capita expenditure remained stable from 2011 until 2020, when it rose by 17.7%, from an average annual expenditure of 1052 euros to 1239 euros. It then fell slightly, by 4.4%, between 2020 and 2021.

- The three most consumed foods during all the years analyzed are fresh fruits, liquid milk and meat (values in kg).

- The Autonomous Communities where per capita spending is highest are the Basque Country, Catalonia and Asturias, while Castilla la Mancha, Andalusia and Extremadura have the lowest spending.

- The Autonomous Communities with the highest per capita consumption are Castilla y León, Asturias and the Basque Country, while those with the lowest are Extremadura, the Canary Islands and Andalusia.
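The percentage changes quoted for per capita expenditure can be checked with a short calculation. The sketch below uses only the rounded euro amounts given in the text; the helper name is hypothetical, and the 2021 amount is derived here from the stated 4.4% decrease rather than taken from the data.

```python
def percent_change(old, new):
    """Relative change from old to new, expressed as a percentage."""
    return (new - old) / old * 100.0


# Average annual per capita expenditure in euros, as quoted in the text.
rise_2020 = percent_change(1052, 1239)
print(round(rise_2020, 1))

# 2021 amount implied by a 4.4% decrease from 2020 (derived, not from the dataset).
implied_2021 = 1239 * (1 - 4.4 / 100)
print(round(implied_2021))
```

With these rounded inputs the rise comes out at roughly 17.8%, close to the 17.7% reported; the small difference is presumably due to rounding of the euro amounts. The implied 2021 expenditure would be about 1184 euros.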

We have also been able to observe certain interesting patterns, such as a 17.33% increase in alcohol consumption (beers, wine and spirits) in the years 2019 and 2020.

You can use the different filters to find out and look for more trends or patterns in the data based on your interests and concerns.

We hope that this step-by-step visualization has been useful for learning some very common techniques in the treatment and representation of open data. We will be back to show you new reuses. See you soon!

Open solutions, including Open Educational Resources (OER), Open Access to Scientific Information (OA), Free and Open-Source Software (FOSS), and open data, encourage the free flow of information and knowledge, serving as a foundation for addressing global challenges, as reminded by UNESCO.

The United Nations Educational, Scientific and Cultural Organization (UNESCO) recognizes the value of open data in the educational field and believes that its use can contribute to measuring progress toward the Sustainable Development Goals, especially Goal 4 on Quality Education. Other international organizations also recognize the potential of open data in education. For example, the European Commission has classified the education sector as an area with high potential for open data.

Open data can be used as a tool for education and training in different ways. They can be used to develop new educational materials and to collect and analyze information about the state of the educational system, which can be used to drive improvement.

The global pandemic marked a milestone in the education field, as the use of new technologies became essential in the teaching and learning process, which became entirely virtual for months. Although the benefits of incorporating ICT and open solutions into education, a trend known as Edtech, had been talked about for years, COVID-19 accelerated this process.

Benefits of Using Open Data in the Classroom

In the following infographic, we summarize the benefits of utilizing open data in education and training, from the perspective of both students and educators, as well as administrators of the education system.

There are many datasets that can be used for developing educational solutions. At datos.gob.es, there are more than 6,700 datasets available, which can be supplemented by others used for educational purposes in different fields, such as literature, geography, history, etc.

Many solutions have been developed using open data for these purposes. We gather some of them based on their purpose: firstly, solutions that provide information on the education system to understand its situation and plan new measures, and secondly, those that offer educational material to use in the classroom.

In essence, open data is a key tool for the strengthening and progress of education, and we must not forget that education is a universal right and one of the main tools for the progress of humanity.

As more of our daily lives take place online, and as the importance and value of personal data increases in our society, standards protecting the universal and fundamental right to privacy and security - backed by frameworks such as the Universal Declaration of Human Rights or the European Declaration on Digital Rights - become increasingly important.

Today, we are also facing a number of new challenges in relation to our privacy and personal data. According to the latest Lloyd's Register Foundation report, at least three out of four internet users are concerned that their personal information could be stolen or otherwise used without their permission. It is therefore becoming increasingly urgent to ensure that people are in a position to know and control their personal data at all times.

Today, the balance is clearly tilted towards the large platforms that have the resources to collect, trade and make decisions based on our personal data - while individuals can only aspire to gain some control over what happens to their data, usually with a great deal of effort.

This is why initiatives such as MyData Global, a non-profit organisation that has been promoting a human-centred approach to personal data management for several years now and advocating for securing the right of individuals to actively participate in the data economy, are emerging. The aim is to redress the balance and move towards a people-centred view of data to build a more just, sustainable and prosperous digital society, the pillars of which would be:

- Establish relationships of trust and security between individuals and organisations.

- Achieve data empowerment, not only through legal protection, but also through measures to share and distribute the power of data.

- Maximise the collective benefits of personal data, sharing them equitably between organisations, individuals and society.

To bring about the changes that this new, more humane approach to personal data requires, the following principles have been developed:

1 - People-centred control of data

It is individuals who must have the power of decision in the management of everything that concerns their personal lives. They must have the practical means to understand and effectively control who has access to their data and how it is used and shared.

Privacy, security and minimal use of data should be standard practice in the design of applications, and the conditions of use of personal data should be fairly negotiated between individuals and organisations.

2 - People as the focal point of integration

The value of personal data grows exponentially with its diversity, while the potential threat to privacy grows at the same time. This apparent contradiction could be resolved if we place people at the centre of any data exchange, always focusing on their own needs above all other motivations.

Any use of personal data must revolve around the individual through deep personalisation of tools and services.

3 - Individual autonomy

In a data-driven society, individuals should not be seen solely as customers or users of services and applications. They should be seen as free and autonomous agents, able to set and pursue their own goals.

Individuals should be able to securely manage their personal data in the way they choose, with the necessary tools, skills and support.

4 - Portability, access and re-use

Enabling individuals to obtain and reuse their personal data for their own purposes and in different services is the key to moving from silos of isolated data to data as reusable resources.

Data portability should not merely be a legal right, but should be combined with practical means for individuals to effectively move data to other services or on their personal devices in a secure and simple way.

5 - Transparency and accountability

Organisations using an individual's data must be transparent about how they use it and for what purpose. At the same time, they must be accountable for their handling of that data, including any security incidents.

User-friendly and secure channels must be created so that individuals can know and control what happens to their data at all times, and thus also be able to challenge decisions based solely on algorithms.

6 - Interoperability

There is a need to minimise friction in the flow of data from the originating sources to the services that use it. This requires incorporating the positive effects of open and interoperable ecosystems, including protocols, applications and infrastructure. This will be achieved through the implementation of common norms and practices and technical standards.

The MyData community has been applying these principles for years in its work to spread a more human-centred vision of data management, processing and use, as it is currently doing for example through its role in the Data Spaces Support Centre, a reference project that is set to define the future responsible use and governance of data in the European Union.

And for those who want to delve deeper into people-centric data use, we will soon have a new edition of the MyData Conference, which this year will focus on showcasing case studies where the collection, processing and analysis of personal data primarily serves the needs and experiences of human beings.

Content prepared by Carlos Iglesias, Open data Researcher and consultant, World Wide Web Foundation.

The contents and views expressed in this publication are the sole responsibility of the author.

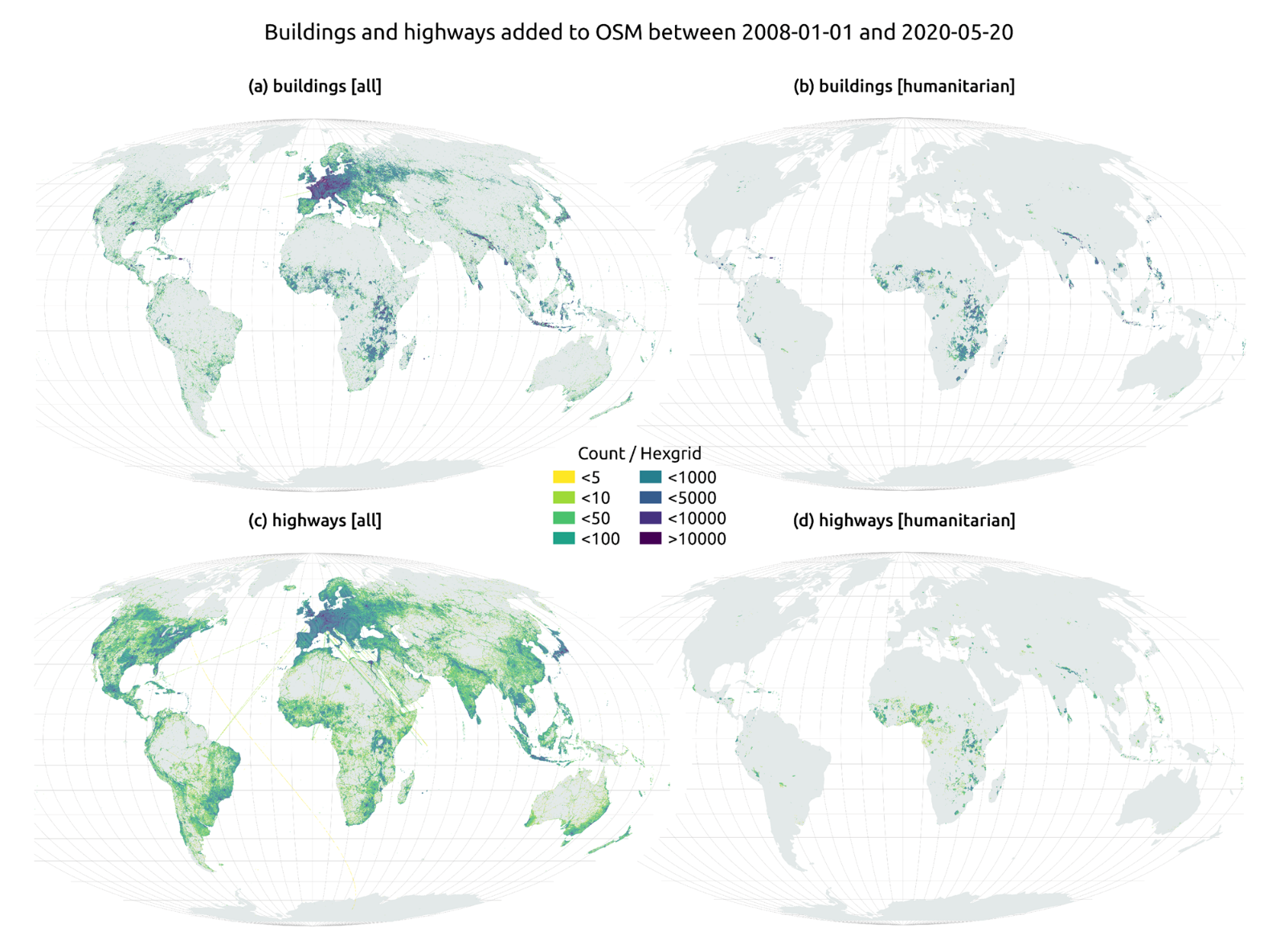

The humanitarian crisis following the earthquake in Haiti in 2010 was the starting point for a voluntary initiative to create maps to identify the level of damage and vulnerability by areas, and thus to coordinate emergency teams. Since then, the collaborative mapping project known as Hot OSM (OpenStreetMap) has played a key role in crisis situations and natural disasters.

Now, the organisation has evolved into a global network of volunteers who contribute their online mapping skills to help in crisis situations around the world. The initiative is an example of data-driven collaboration to solve societal problems, a theme we explore in this datos.gob.es report.

Hot OSM works to accelerate data-driven collaboration with humanitarian and governmental organisations, as well as local communities and volunteers around the world, to provide accurate and detailed maps of areas affected by natural disasters or humanitarian crises. These maps are used to help coordinate emergency response, identify needs and plan for recovery.

In its work, Hot OSM prioritises collaboration and empowerment of local communities. The organisation works to ensure that people living in affected areas have a voice and power in the mapping process. This means that Hot OSM works closely with local communities to ensure that areas important to them are mapped. In this way, the needs of communities are considered when planning emergency response and recovery.

Hot OSM's educational work

In addition to its work in crisis situations, Hot OSM is dedicated to promoting access to free and open geospatial data, and works in collaboration with other organisations to build tools and technologies that enable communities around the world to harness the power of collaborative mapping.

Through its online platform, Hot OSM provides free access to a wide range of tools and resources to help volunteers learn and participate in collaborative mapping. The organisation also offers training for those interested in contributing to its work.

One example of a HOT project is the work the organisation carried out in the context of Ebola in West Africa. In 2014, an Ebola outbreak affected several West African countries, including Sierra Leone, Liberia and Guinea. The lack of accurate and detailed maps in these areas made it difficult to coordinate the emergency response.

In response to this need, HOT initiated a collaborative mapping project involving more than 3,000 volunteers worldwide. Volunteers used online tools to map Ebola-affected areas, including roads, villages and treatment centres.

This mapping allowed humanitarian workers to better coordinate the emergency response, identify high-risk areas and prioritize resource allocation. In addition, the project also helped local communities to better understand the situation and participate in the emergency response.

This case in West Africa is just one example of HOT's work around the world to assist in humanitarian crisis situations. The organisation has worked in a variety of contexts, including earthquakes, floods and armed conflict, and has helped provide accurate and detailed maps for emergency response in each of these contexts.

On the other hand, the platform is also involved in areas where there is no map coverage, such as in many African countries. In these areas, humanitarian aid projects are often very challenging in the early stages, as it is very difficult to quantify what population is living in an area and where they are located. Having the location of these people and showing access routes "puts them on the map" and allows them to gain access to resources.

In the article "The evolution of humanitarian mapping within the OpenStreetMap community", published by Nature, we can see some of the platform's achievements presented graphically.

How to collaborate

It is easy to start collaborating with Hot OSM: just go to https://tasks.hotosm.org/explore and see the open projects that need collaboration.

This screen offers many options for searching for projects, which can be filtered by level of difficulty, organisation, location or interests, among others.

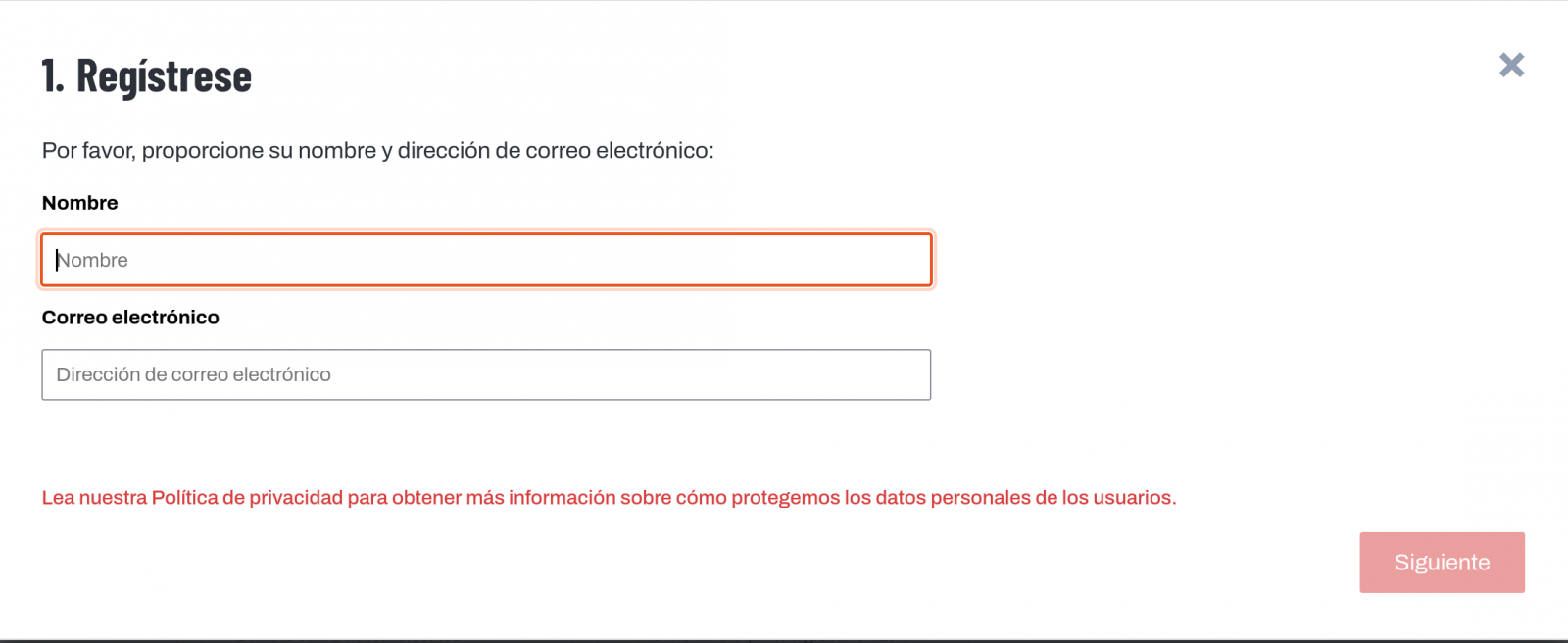

To participate, simply click on the Register button.

Enter a name and an e-mail address on the next screen.

It will ask us whether we already have an OpenStreetMap account or want to create one.

If we want to see the process in more detail, this website makes it very easy.

Once the user has been created, on the learning page we find help on how to participate in the project.

It is important to note that the contributions of the volunteers are reviewed and validated and there is a second level of volunteers, the validators, who validate the work of the beginners. During the development of the tool, the HOT team has taken great care to make it a user-friendly application so as not to limit its use to people with computer skills.

In addition, organisations such as the Red Cross and the United Nations regularly organise mapathons to bring together groups of people for specific projects or to teach new volunteers how to use the tool. These meetings serve, above all, to remove the new users' fear of "breaking something" and to allow them to see how their voluntary work serves concrete purposes and helps other people.

Another of the project's great strengths is that it is based on free software and allows for its reuse. In the MissingMaps project's Github repository we can find the code and if we want to create a community based on the software, the Missing Maps organisation facilitates the process and gives visibility to our group.

In short, Hot OSM is a citizen science and data altruism project that contributes to bringing benefits to society through the development of collaborative maps that are very useful in emergency situations. This type of initiative is aligned with the European concept of data governance that seeks to encourage altruism to voluntarily facilitate the use of data for the common good.

Content by Santiago Mota, senior data scientist.

The contents and views reflected in this publication are the sole responsibility of the author.

1. Introduction

Visualizations are graphical representations of data that allow the information linked to them to be communicated in a simple and effective way. The visualization possibilities are very wide, from basic representations, such as line, bar or pie charts, to visualizations configured on interactive dashboards.

In this "Step-by-Step Visualizations" section we regularly present practical exercises of open data visualizations available on datos.gob.es or similar catalogs. They address and describe in a simple way the stages necessary to obtain the data, perform the relevant transformations and analyses and, finally, create interactive visualizations from which we can extract information summarized in final conclusions. In each of these practical exercises, simple and well-documented code developments are used, as well as free-to-use tools. All generated material is available for reuse in the Data Lab repository on GitHub.

Below, you can access the material that we will use in the exercise and that we will explain and develop in the following sections of this post.

Access the data lab repository on Github.

Run the data pre-processing code on top of Google Colab.

2. Objective

The main objective of this exercise is to analyze the meteorological data collected at several stations in recent years. To perform this analysis, we will use different visualizations generated by the "ggplot2" library of the programming language "R".

Of all the Spanish weather stations, we have decided to analyze two: one in the coldest province of the country (Burgos) and another in the warmest (Córdoba), according to AEMET data. We will look for patterns and trends in the records between 1990 and 2020 to understand how the weather has evolved over this period.

Once the data has been analyzed, we can answer questions such as those shown below:

- What is the trend in the evolution of temperatures in recent years?

- What is the trend in the evolution of rainfall in recent years?

- Which weather station (Burgos or Córdoba) presents a greater variation of climatological data in recent years?

- What degree of correlation is there between the different climatological variables recorded?

These and many other questions can be answered using tools such as ggplot2, which facilitate the interpretation of data through visualizations.

3. Resources

3.1. Datasets

The datasets contain meteorological information of interest for the two stations in question, broken down by year. They can be downloaded from the AEMET download center, after requesting an API key, in the section "monthly / annual climatologies". From the existing weather stations, we have selected two from which to obtain the data: Burgos airport (2331) and Córdoba airport (5402).

It should be noted that, along with the datasets, we can also download their metadata, which are of special importance when identifying the different variables registered in the datasets.

These datasets are also available in the Github repository.

3.2. Tools

To carry out the data preprocessing tasks, we have used the R programming language in a Jupyter Notebook hosted on the Google Colab cloud service.

"Google Colab", also called Google Colaboratory, is a cloud service from Google Research that allows you to program, execute and share code written in Python or R on a Jupyter Notebook from your browser, so it does not require configuration. This service is free of charge.

For the creation of the visualizations, the ggplot2 library has been used.

"ggplot2" is a data visualization package for the R programming language. It focuses on the construction of graphics from layers of aesthetic, geometric and statistical elements. ggplot2 offers a wide range of high-quality statistical charts, including bar charts, line charts, scatter plots, box and whisker charts, and many others.

If you want to know more about tools that can help you in the treatment and visualization of data, you can use the report "Data processing and visualization tools".

4. Data processing or preparation

The processes described below are commented in the Notebook, which you can also run from Google Colab.

Before embarking on building an effective visualization, we must carry out a prior treatment of the data, paying special attention to obtaining them and validating their content, ensuring that they are in the appropriate and consistent format for processing and that they do not contain errors.

As a first step, once the necessary libraries have been imported and the datasets loaded, it is necessary to perform an exploratory data analysis (EDA) in order to properly interpret the starting data and detect anomalies, missing data or errors that could affect the quality of the subsequent processes and results. If you want to know more about this process, you can consult the Practical Guide of Introduction to Exploratory Data Analysis.

The next step is to generate the preprocessed data tables that we will use in the visualizations. To do this, we will filter the initial data sets and calculate the values that are necessary and of interest for the analysis carried out in this exercise.

Once the preprocessing is finished, we will obtain the data tables "datos_graficas_C" and "datos_graficas_B" which we will use in the next section of the Notebook to generate the visualizations.

The structure of the Notebook in which the steps previously described are carried out together with explanatory comments of each of them, is as follows:

- Installation and loading of libraries.

- Loading datasets

- Exploratory Data Analysis (EDA)

- Preparing the data tables

- Visualizations

- Saving graphics

You will be able to reproduce this analysis, as the source code is available in our GitHub account. The way to provide the code is through a document made on a Jupyter Notebook that once loaded into the development environment you can run or modify easily. Due to the informative nature of this post and in order to favor the understanding of non-specialized readers, the code is not intended to be the most efficient but to facilitate its understanding so you will possibly come up with many ways to optimize the proposed code to achieve similar purposes. We encourage you to do so!

5. Visualizations

Various types of visualizations and graphs have been made to extract information on the tables of preprocessed data and answer the initial questions posed in this exercise. As mentioned previously, the R "ggplot2" package has been used to perform the visualizations.

The "ggplot2" package is a data visualization library in the R programming language. It was developed by Hadley Wickham and is part of the "tidyverse" collection of packages. The "ggplot2" package is built around the concept of the "grammar of graphics", a theoretical framework for building graphs by combining basic elements of data visualization such as layers, scales, legends, annotations, and themes. This allows you to create complex, custom data visualizations with cleaner, more structured code.

If you want to have a summary view of the possibilities of visualizations with ggplot2, see the following "cheatsheet". You can also get more detailed information in the following "user manual".

5.1. Line charts

Line charts are a graphical representation of data that uses points connected by lines to show the evolution of a variable in a continuous dimension, such as time. The values of the variable are represented on the vertical axis and the continuous dimension on the horizontal axis. Line charts are useful for visualizing trends, comparing evolutions, and detecting patterns.

Next, we can visualize several line graphs with the temporal evolution of the values of average, minimum and maximum temperatures of the two meteorological stations analyzed (Córdoba and Burgos). On these graphs, we have introduced trend lines to be able to observe their evolution in a visual and simple way.

To compare the evolutions not only visually through the plotted trend lines but also numerically, we obtain the slope coefficient of each trend line, that is, the change in the response variable (tm_mes, tm_min, tm_max) for each unit of change in the predictor variable (year).

- Average temperature slope coefficient, Córdoba: 0.036

- Average temperature slope coefficient, Burgos: 0.025

- Minimum temperature slope coefficient, Córdoba: 0.020

- Minimum temperature slope coefficient, Burgos: 0.020

- Maximum temperature slope coefficient, Córdoba: 0.051

- Maximum temperature slope coefficient, Burgos: 0.030

The higher this value, the steeper the temperature rise over the period: a coefficient of 0.036, for example, corresponds to an average increase of 0.036 ºC per year.
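To make the slope coefficient concrete, the following minimal sketch fits an ordinary least-squares line to a short yearly series. It is written in Python for illustration rather than in the R used in the notebook. The function name `slope` and the sample temperatures are assumptions invented for this example, not AEMET records.

```python
def slope(xs, ys):
    """Least-squares slope of ys against xs (change in y per unit of x)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations divided by sum of squared x-deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


# Hypothetical yearly mean temperatures (ºC), invented for illustration.
years = [1990, 1991, 1992, 1993, 1994]
temps = [17.0, 17.1, 16.9, 17.2, 17.3]

print(round(slope(years, temps), 3))
```

Because the predictor is the year, the result reads directly as degrees per year, which is what makes coefficients from different stations comparable.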

Finally, we have created a line graph for each weather station, in which we jointly visualize the evolution of average, minimum and maximum temperatures over the years.

The main conclusions obtained from the visualizations of this section are:

- The average, minimum and maximum annual temperatures recorded in Córdoba and Burgos have an increasing trend.

- The most significant increase is observed in the evolution of the maximum temperatures of Córdoba (slope coefficient = 0.051)

- The smallest increase is observed in the evolution of the minimum temperatures, both in Córdoba and Burgos (slope coefficient = 0.020)

5.2. Bar charts

Bar charts are a graphical representation of data that uses rectangular bars to show the magnitude of a variable in different categories or groups. The height or length of the bars represents the amount or frequency of the variable, and the categories are represented on the horizontal axis. Bar charts are useful for comparing the magnitude of different categories and for visualizing differences between them.

We have generated two bar graphs with the data corresponding to the total accumulated precipitation per year for the different weather stations.

As in the previous section, we plot the trend line and calculate the slope coefficient.

- Slope coefficient for accumulated rainfall Córdoba: -2.97

- Slope coefficient for accumulated rainfall Burgos: -0.36

The main conclusions obtained from the visualizations of this section are:

- The annual accumulated rainfall has a decreasing trend for both Córdoba and Burgos.

- The downward trend is greater for Córdoba (coefficient = -2.97), being more moderate for Burgos (coefficient = -0.36)

5.3. Histograms

Histograms are a graphical representation of a frequency distribution of numeric data in a range of values. The horizontal axis represents the values of the data divided into intervals, called "bins", and the vertical axis represents the frequency or number of observations found in each bin. Histograms are useful for identifying patterns in data, such as distribution, dispersion, symmetry, or skew.

We have generated two histograms with the distributions of the total accumulated precipitation per year for the two meteorological stations, using intervals of 50 mm.
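As a rough illustration of how values are assigned to bins, the sketch below counts observations per 50-unit interval. It is written in Python rather than the notebook's R, and the helper name `bin_counts` and the rainfall figures are hypothetical, chosen only to show the mechanics.

```python
from collections import Counter


def bin_counts(values, width):
    """Count how many values fall in each [start, start + width) interval."""
    # Floor division maps every value to the lower edge of its bin.
    counts = Counter(int(v // width) * width for v in values)
    return dict(sorted(counts.items()))


# Hypothetical annual precipitation totals, invented for illustration.
rainfall = [412.0, 455.5, 530.2, 498.7, 601.3, 447.9, 520.0]

for start, count in bin_counts(rainfall, 50).items():
    print(f"{start}-{start + 50}: {count}")
```

Changing the bin width changes the shape of the histogram, so it is worth trying a few values before drawing conclusions about symmetry.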

The main conclusions obtained from the visualizations of this section are:

- The records of annual accumulated precipitation in Burgos present a distribution close to a normal and symmetrical distribution.

- The records of annual accumulated precipitation in Córdoba do not present a symmetrical distribution.

5.4. Box and whisker diagrams

Box and whisker diagrams are a graphical representation of the distribution of a set of numerical data. These graphs represent the median, the interquartile range and the spread of the remaining data. The box represents the interquartile range, that is, the range between the first and third quartiles of the data. Points beyond the whiskers, called outliers, can indicate extreme values or anomalous data. Box plots are useful for comparing distributions and detecting extreme values in your data.

We have generated a graph with the box diagrams corresponding to the accumulated rainfall data from the weather stations.

To understand the graph, the following points should be highlighted:

- The boundaries of the box indicate the first and third quartiles (Q1 and Q3), below which lie 25% and 75% of the data respectively.

- The horizontal line inside the box is the median (equivalent to the second quartile Q2), which leaves half of the data below.

- The whisker limits are the most extreme values that are not considered outliers. By default, ggplot2 extends each whisker to the furthest value lying within 1.5 times the interquartile range from the edge of the box.

- The points outside the whiskers are the outliers.
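The elements listed above can be computed by hand, as in this minimal sketch. It uses Python's standard `statistics.quantiles` with the "inclusive" method, which is an assumption of this example: ggplot2 computes its box edges as hinges, so values may differ slightly on real data. The function name `box_stats` and the sample values are hypothetical.

```python
import statistics


def box_stats(values):
    """Quartiles, whisker ends and outliers using the 1.5 * IQR rule."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence, high_fence = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    # Whiskers end at the most extreme values still inside the fences.
    inside = [v for v in values if low_fence <= v <= high_fence]
    outliers = sorted(v for v in values if v < low_fence or v > high_fence)
    return {
        "q1": q1,
        "median": median,
        "q3": q3,
        "whisker_low": min(inside),
        "whisker_high": max(inside),
        "outliers": outliers,
    }


# Hypothetical annual rainfall totals, invented for illustration.
rainfall = [380, 420, 450, 470, 500, 520, 560, 610, 1050]
print(box_stats(rainfall))
```

In this invented series the single very wet year falls above the upper fence, so it would be drawn as an isolated point rather than stretching the whisker.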

The main conclusions obtained from the visualization of this section are:

- Both distributions present 3 extreme values; those of Córdoba are notable, with values greater than 1000 mm.

- The records of Córdoba have a greater variability than those of Burgos, which are more stable

5.5. Pie charts

A pie chart is a type of circular chart that represents proportions or percentages of a whole. It consists of several sections or sectors, where each sector represents a proportion of the whole set. The size of the sector is determined based on the proportion it represents, and is expressed in the form of an angle or percentage. It is a useful tool for visualizing the relative distribution of the different parts of a set and facilitates the visual comparison of the proportions between the different groups.

We have generated two (polar) sector charts. The first shows the number of days on which temperatures exceed 30º in Córdoba, and the second the number of days on which they fall below 0º in Burgos.

For the realization of these graphs, we have grouped the sum of the number of days described above into six groups, corresponding to periods of 5 years from 1990 to 2020.

The main conclusions obtained from the visualizations of this section are:

- There is an increase of 31.9% in the total number of annual days with temperatures above 30º in Córdoba for the period between 2015-2020 compared to the period 1990-1995.

- There is an increase of 33.5% in the total number of annual days with temperatures above 30º in Burgos for the period between 2015-2020 compared to the period 1990-1995.

5.6. Scatter plots

Scatter plots are a data visualization tool that represent the relationship between two numerical variables by locating points on a Cartesian plane. Each dot represents a pair of values of the two variables and its position on the graph indicates how they relate to each other. Scatter plots are commonly used to identify patterns and trends in data, as well as to detect any possible correlation between variables. These charts can also help identify outliers or data that doesn't fit the overall trend.

We have generated two scatter plots comparing the average maximum and average minimum temperatures, looking for correlation trends between them at each weather station.

To analyze the correlations not only visually through graphs but also numerically, we obtain Pearson's correlation coefficient. This coefficient is a statistical measure of the degree of linear association between two quantitative variables. It ranges from -1 to +1: positive values indicate a positive linear relationship (both variables increase or decrease together), negative values indicate an inverse relationship (one variable increases as the other decreases), and values near 0 indicate no linear relationship. The closer the coefficient is to +1 or -1, the stronger the association.

- Pearson coefficient (Average temperature max VS min) Córdoba: 0.15

- Pearson coefficient (Average temperature max VS min) Burgos: 0.61

In the image we observe that while in Córdoba a greater dispersion is appreciated, in Burgos a greater correlation is observed.
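For readers who want to see what the coefficient measures, here is a minimal sketch of Pearson's formula: the sum of cross-deviations divided by the product of the root sums of squared deviations. It is written in Python rather than the notebook's R. The function name `pearson` and both temperature series are assumptions invented for this example.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length series."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return cov / (spread_x * spread_y)


# Hypothetical yearly average maximum and minimum temperatures (ºC).
t_max = [24.0, 24.5, 25.1, 24.8, 25.6]
t_min = [10.2, 10.1, 10.6, 10.4, 10.9]

print(round(pearson(t_max, t_min), 2))
```

The coefficient only captures linear association, so a low value such as the one reported for Córdoba does not rule out other kinds of relationship between the two variables.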

Next, we will modify the previous scatter plots so that they provide us with more information visually. To do this, we divide the space by colored sectors (red with higher temperature values / blue lower temperature values) and show in the different bubbles the label with the corresponding year. It should be noted that the color change limits of the quadrants correspond to the average values of each of the variables.

The main conclusions obtained from the visualizations of this section are:

- There is a positive linear relationship between the average maximum and minimum temperatures in both Córdoba and Burgos, and this correlation is stronger in the Burgos data (0.61 versus 0.15).

- The years with the highest values of maximum and minimum temperatures in Burgos are 2003, 2006 and 2020.

- The years with the highest values of maximum and minimum temperatures in Córdoba are 1995, 2006 and 2020.

5.7. Correlation matrix

The correlation matrix is a table that shows the correlations between all variables in a dataset. It is a square matrix that shows the correlation between each pair of variables on a scale ranging from -1 to 1. A value of -1 indicates a perfect negative correlation, a value of 0 indicates no correlation, and a value of 1 indicates a perfect positive correlation. The correlation matrix is commonly used to identify patterns and relationships between variables in a dataset, which can help to better understand the factors that influence a phenomenon or outcome.

We have generated two heat maps with the correlation matrix data for both weather stations.
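The matrix behind such a heat map can be sketched as a nested dictionary holding the Pearson coefficient for every pair of variables. This is an illustrative Python sketch, not the notebook's R code. The helper names `corr` and `correlation_matrix`, the column names and all the values are hypothetical.

```python
import statistics


def corr(xs, ys):
    """Pearson correlation from population covariance and deviations."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = statistics.fmean([(x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)])
    return cov / (statistics.pstdev(xs) * statistics.pstdev(ys))


def correlation_matrix(columns):
    """Square matrix (as nested dicts) of pairwise correlations."""
    names = list(columns)
    return {a: {b: corr(columns[a], columns[b]) for b in names} for a in names}


# Hypothetical yearly records, invented for illustration.
data = {
    "days_above_30": [100, 110, 105, 120, 125],
    "rainfall": [600, 540, 580, 500, 470],
    "mean_temp": [17.0, 17.4, 17.2, 17.8, 18.0],
}

matrix = correlation_matrix(data)
for name, row in matrix.items():
    print(name, {other: round(value, 2) for other, value in row.items()})
```

The diagonal is always 1 and the matrix is symmetric, so only the cells on one side of the diagonal carry information.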

The main conclusions obtained from the visualizations of this section are:

- There is a moderate negative correlation between the number of annual days with temperatures above 30º and accumulated rainfall: -0.42 for Córdoba and -0.45 for Burgos. This means that years with more days above 30º tend to have less precipitation.

6. Conclusions of the exercise

Data visualization is one of the most powerful mechanisms for exploiting and analyzing the implicit meaning of data. As we have seen in this exercise, "ggplot2" is a powerful library capable of representing a wide variety of graphics with a high degree of customization that allows you to adjust numerous characteristics of each graph.

After analyzing the previous visualizations, we can conclude that, for both the Burgos and Córdoba weather stations, temperatures (minimum, average and maximum) have increased considerably, the number of days with extreme heat (temperature > 30º) has also increased, and rainfall has decreased over the period analyzed, from 1990 to 2020.

We hope that this step-by-step visualization has been useful for learning some very common techniques in the treatment, representation and interpretation of open data. We will be back to show you new reuses. See you soon!

The public sector in Spain will have the duty to guarantee the openness of its data by design and by default, as well as its reuse. This is the result of the amendment of Law 37/2007 on the reuse of public sector information in application of European Directive 2019/1024.

This new wording of the regulation seeks to broaden the scope of application of the Law in order to bring the legal guarantees and obligations closer to the current technological, social and economic context. In this scenario, the current regulation takes into account that greater availability of public sector data can contribute to the development of cutting-edge technologies such as artificial intelligence and all its applications.

Moreover, this initiative is aligned with the European Union's Data Strategy aimed at creating a single data market in which information flows freely between states and the private sector in a mutually beneficial exchange.

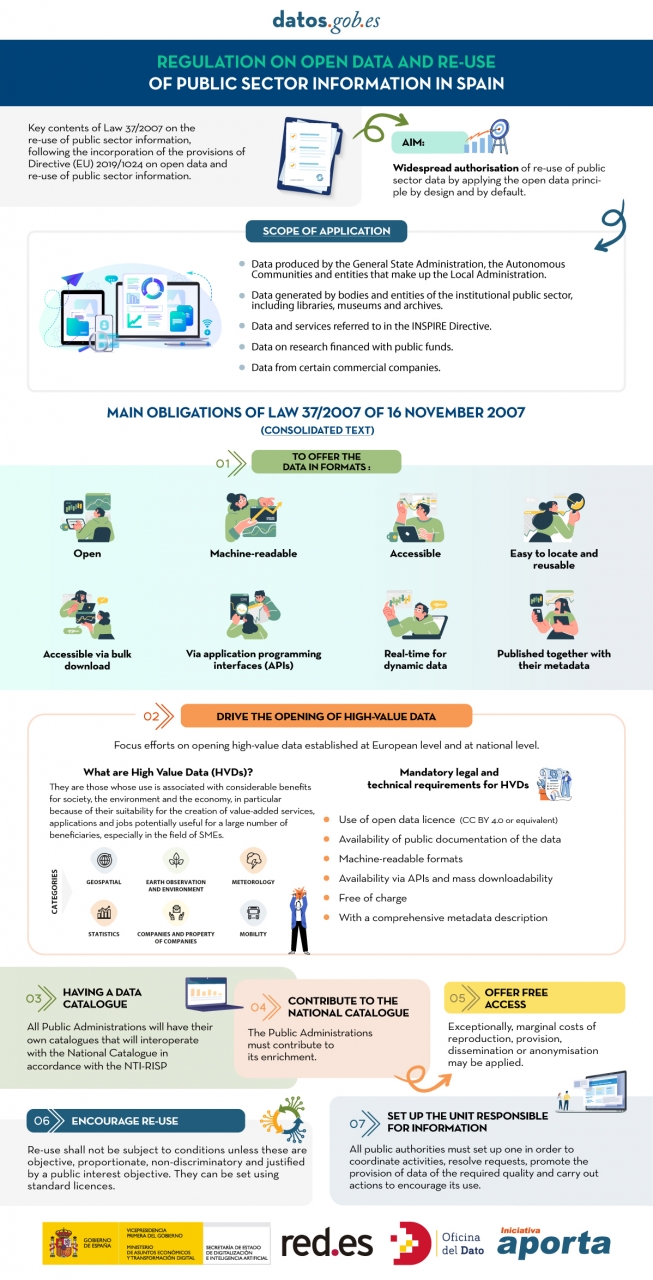

From high-value data to the responsible unit of information: obligations under Law 37/2007

In the following infographic, we highlight the main obligations contained in the consolidated text of the law. Emphasis is placed on duties such as promoting the opening of High Value Datasets (HVDS), i.e. datasets with a high potential to generate social, environmental and economic benefits. As required by law, HVDS must be published under an open data attribution licence (CC BY 4.0 or equivalent), in machine-readable format and accompanied by metadata describing the characteristics of the datasets. All of this will be publicly accessible and free of charge with the aim of encouraging technological, economic and social development, especially for SMEs.

In addition to the publication of high-value data, all public administrations will be obliged to have their own data catalogues that will interoperate with the National Catalogue following the NTI-RISP, with the aim of contributing to its enrichment. As with HVDS, access to public authorities' datasets should be free of charge, except where marginal costs resulting from data processing may apply.

To guarantee data governance, the law establishes the need to designate a unit responsible for information for each entity to coordinate the opening and re-use of data, and to be in charge of responding to citizens' requests and demands.

In short, Law 37/2007 has been modified with the aim of offering legal guarantees to the demands of competitiveness and innovation raised by technologies such as artificial intelligence or the internet of things, as well as to realities such as data spaces where open data is presented as a key element.

1. Introduction

Visualizations are graphical representations of the data allowing to transmit in a simple and effective way related information. The visualization capabilities are extensive, from basic representations, such as a line chart, bars or sectors, to visualizations configured on control panels or interactive dashboards.

In this "Step-by-Step Visualizations" section we periodically present practical exercises on visualizing open data available in datos.gob.es or other similar catalogs. They describe in a simple way the stages needed to obtain the data, perform the relevant transformations and analyses, and finally create interactive visualizations from which we can extract information summarized in final conclusions. Each of these practical exercises uses simple, well-documented code and open-source tools. All generated materials are available for reuse in the GitHub repository.

In this practical exercise, we have developed simple, well-documented code that relies on free-to-use tools.

Access the data lab repository on GitHub

Run the data pre-processing code on Google Colab

2. Objective

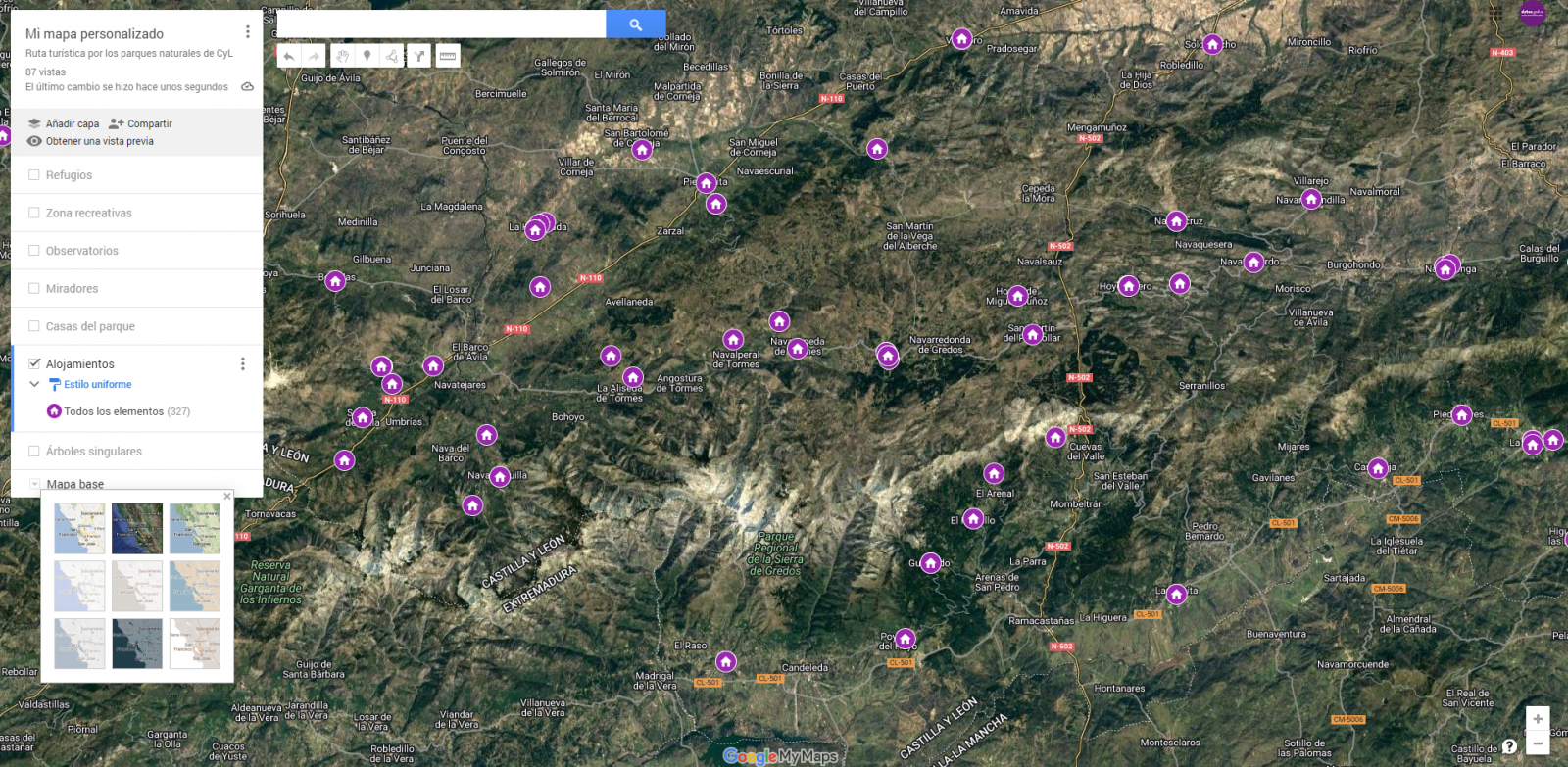

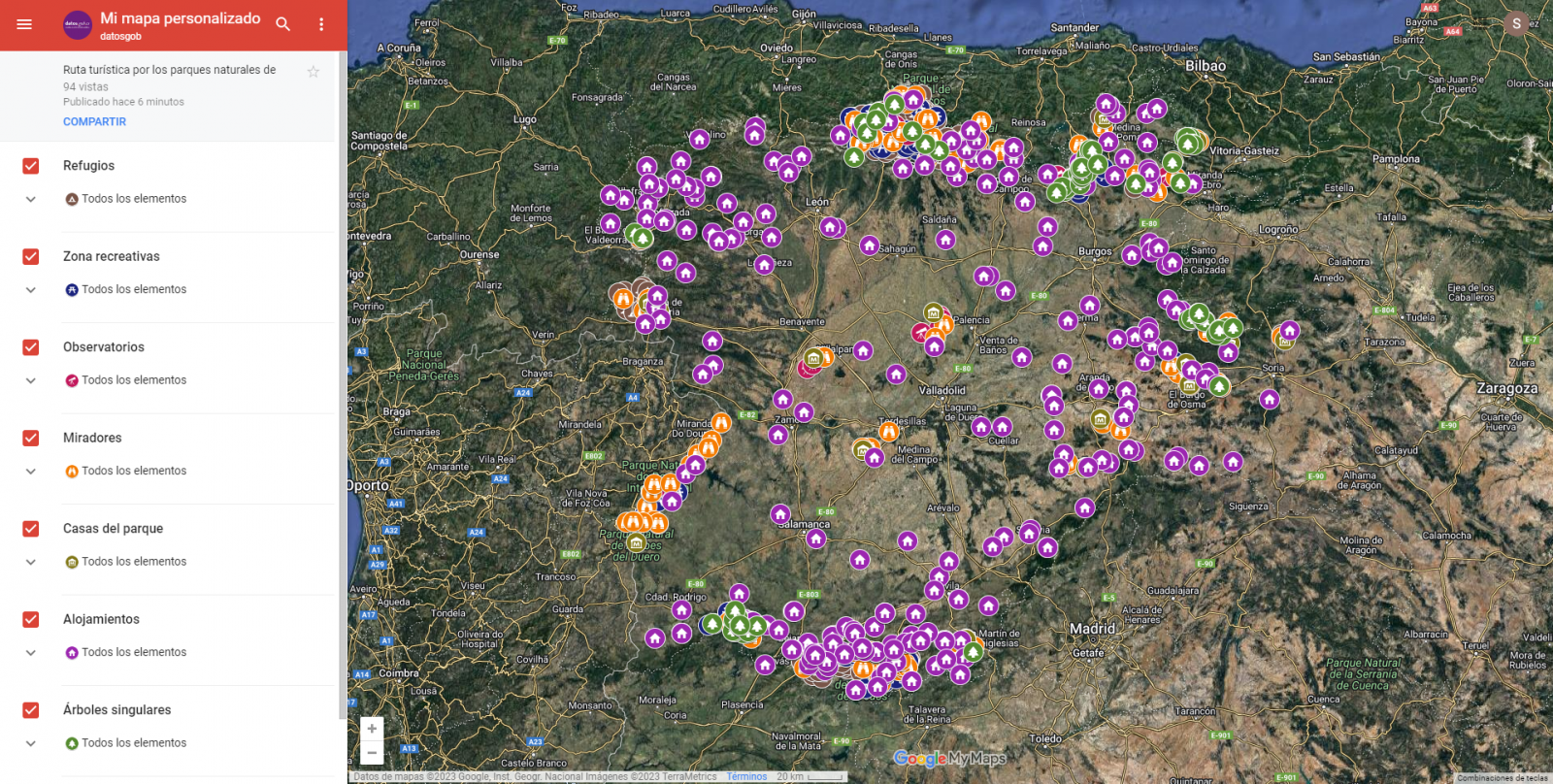

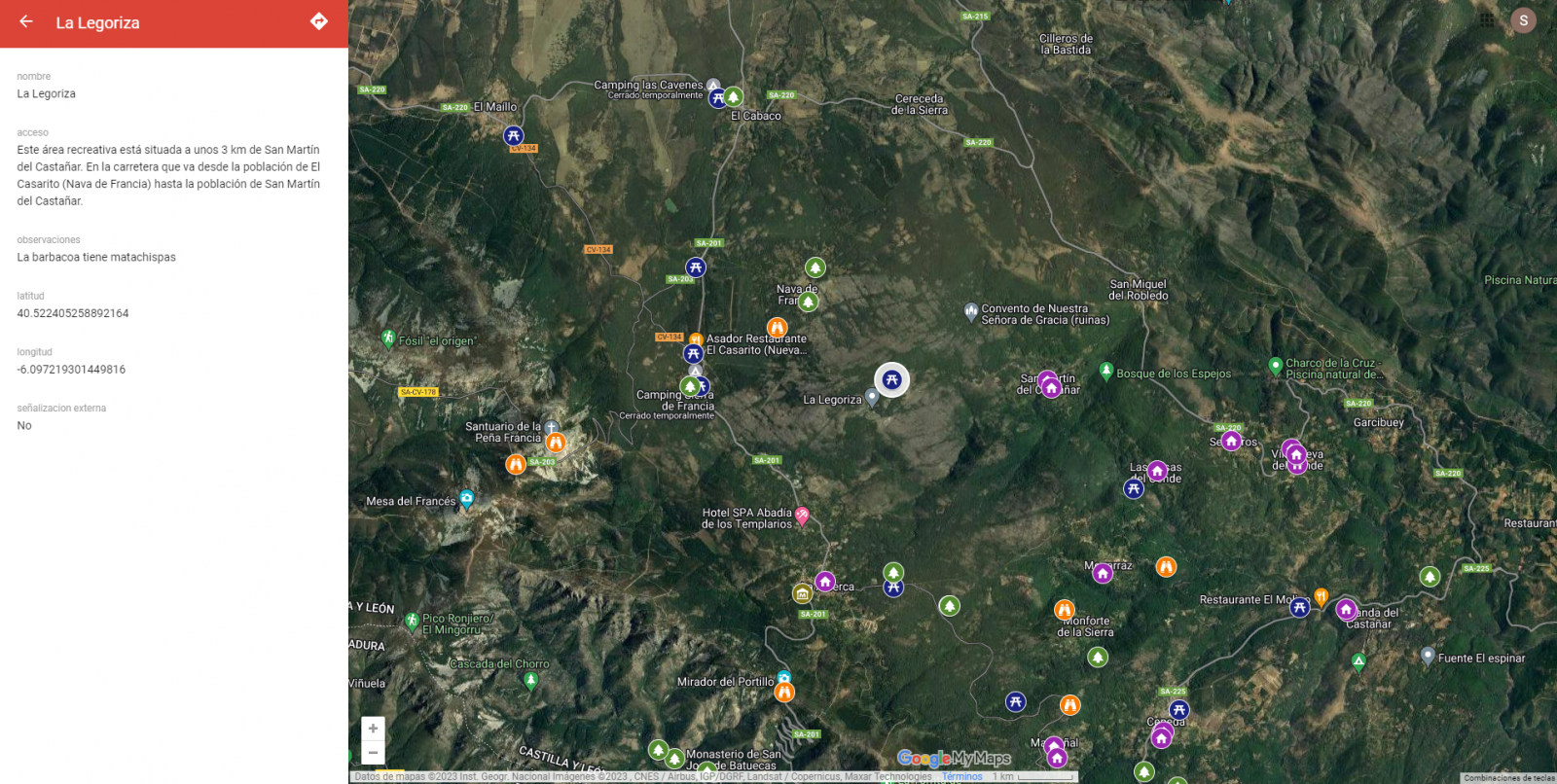

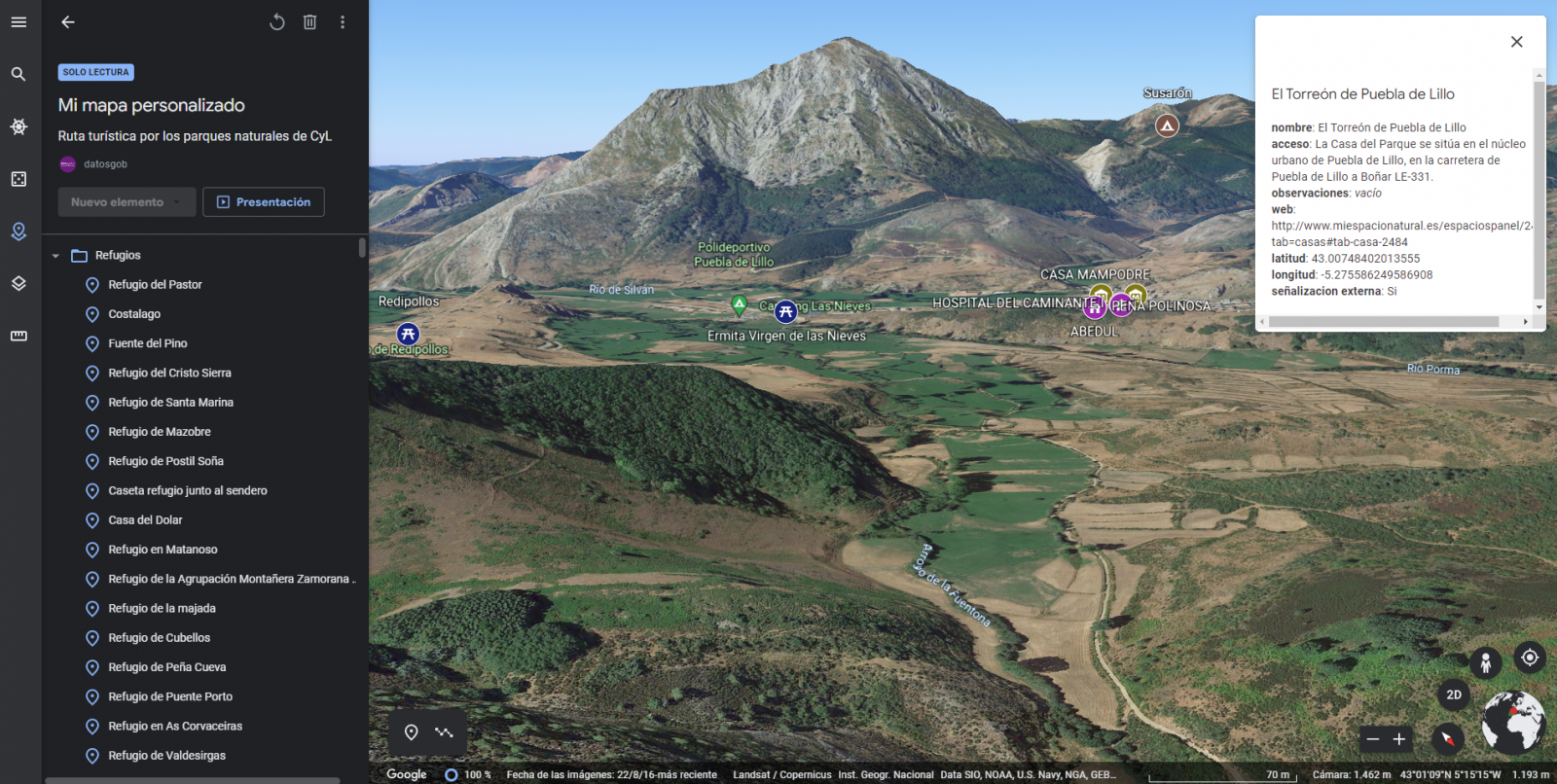

The main aim of this post is to show how to generate a custom Google Maps map from open data using the "My Maps" tool. These types of maps are highly popular on websites, blogs and applications in the tourism sector; however, the useful information they provide to the user is usually scarce.

In this exercise, we will use the potential of open data to automatically expand the information displayed on our map. We will also show how to enrich open data with context information that significantly improves the user experience.

From a functional point of view, the goal of the exercise is to create a personalized map for planning tourist routes through the natural areas of the autonomous community of Castile and León. For this, open data sets published by the Junta of Castile and León have been used, which we have pre-processed and adapted to our needs in order to generate a personalized map.

3. Resources

3.1. Datasets

The datasets contain different geolocated information of tourist interest. Within the open data catalog of the Junta of Castile and León, we can find the "dictionary of entities" (additional information section), a document of vital importance, since it defines the terminology used in the different datasets. The datasets used are the following:

- Viewpoints in natural areas

- Observatories in natural areas

- Shelters in natural areas

- Trees in natural areas

- Park houses in natural areas

- Recreational areas in natural areas

- Registration of hotel establishments

These datasets are also available in the GitHub repository.

3.2. Tools

To carry out the data preprocessing tasks, we used the Python programming language in a Jupyter Notebook hosted on the Google Colab cloud service.

"Google Colab", also called "Google Colaboratory", is a free cloud service from Google Research that allows you to write, run and share Python or R code from your browser, so it does not require installing or configuring any tool.

For the creation of the interactive visualization, the Google My Maps tool has been used.

"Google My Maps" is an online tool that allows you to create interactive maps that can be embedded in websites or exported as files. This tool is free, easy to use and allows multiple customization options.

If you want to know more about tools that can help you with data processing and visualization, you can go to the section "Data processing and visualization tools".

4. Data processing and preparation

The processes described below are commented in the Notebook, which you can run from Google Colab.

Before building an effective visualization, we must first process the data, paying special attention to how it is obtained and to validating its content, ensuring that it is in an appropriate and consistent format for processing and that it does not contain errors.

The first step is to perform an exploratory data analysis (EDA) in order to properly interpret the starting data and detect anomalies, missing data or errors that could affect the quality of the subsequent processes and results. If you want to know more about this process, you can go to the Practical Guide of Introduction to Exploratory Data Analysis.
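As a minimal illustration of the kind of check an EDA involves, the following sketch counts the empty cells in each column of a small CSV sample. The column names and rows are made up for the example and do not come from the Junta's datasets, and the Notebook may carry out this step differently.

```python
import csv
import io

# Made-up sample rows; the real files have different columns and values.
SAMPLE_CSV = """name,observations,latitude,longitude
Viewpoint A,Open all year,41.65,-4.72
Viewpoint B,,42.10,
Viewpoint C,,,-5.01
"""


def count_missing(csv_text):
    """Return {column: number of empty cells} for a CSV given as text."""
    reader = csv.DictReader(io.StringIO(csv_text))
    missing = {column: 0 for column in reader.fieldnames}
    for row in reader:
        for column, value in row.items():
            # Treat absent or whitespace-only cells as missing data.
            if value is None or value.strip() == "":
                missing[column] += 1
    return missing


print(count_missing(SAMPLE_CSV))
```

Columns with many missing values, such as "observations" in this sample, are candidates for review before they are shown on the map.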

The next step is to generate the tables of preprocessed data that will be used to feed the map. To do so, we will transform the coordinate systems and modify and filter the information according to our needs.

The steps required in this data preprocessing, explained in the Notebook, are as follows:

- Installation and loading of libraries

- Loading datasets

- Exploratory Data Analysis (EDA)

- Preprocessing of datasets

During the preprocessing of the data tables, the coordinate system must be changed, since the source datasets use ETRS89 (the standard system used in the European Union), while we need the coordinates in WGS84 (the system used by Google My Maps, among other geographical applications). The Notebook explains how to make this coordinate change. If you want to know more about coordinate types and systems, you can consult the "Spatial Data Guide".
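To give an idea of what this conversion involves, here is a minimal sketch in plain Python. It assumes that the source coordinates are projected UTM coordinates in zone 30 North on ETRS89, which this post does not state, and it treats ETRS89 and WGS84 latitude and longitude as interchangeable, since at map scale the difference is well below a metre. The function name utm_to_latlon is hypothetical, and the Notebook may rely on a dedicated library (for example pyproj) instead.

```python
import math

# GRS80 ellipsoid, used by ETRS89; it differs negligibly from the WGS84 ellipsoid.
SEMI_MAJOR_AXIS = 6378137.0
FLATTENING = 1 / 298.257222101
K0 = 0.9996                # UTM scale factor on the central meridian
FALSE_EASTING = 500000.0   # metres


def utm_to_latlon(easting, northing, zone=30):
    """Convert northern-hemisphere UTM coordinates to (lat, lon) in degrees.

    Uses the Krueger series truncated at third order.
    """
    n = FLATTENING / (2 - FLATTENING)
    radius = SEMI_MAJOR_AXIS / (1 + n) * (1 + n**2 / 4 + n**4 / 64)
    beta = (
        n / 2 - 2 * n**2 / 3 + 37 * n**3 / 96,
        n**2 / 48 + n**3 / 15,
        17 * n**3 / 480,
    )
    delta = (
        2 * n - 2 * n**2 / 3 - 2 * n**3,
        7 * n**2 / 3 - 8 * n**3 / 5,
        56 * n**3 / 15,
    )
    xi = northing / (K0 * radius)
    eta = (easting - FALSE_EASTING) / (K0 * radius)
    xi_p = xi - sum(
        b * math.sin(2 * j * xi) * math.cosh(2 * j * eta)
        for j, b in enumerate(beta, start=1)
    )
    eta_p = eta - sum(
        b * math.cos(2 * j * xi) * math.sinh(2 * j * eta)
        for j, b in enumerate(beta, start=1)
    )
    # Conformal latitude, then geodetic latitude.
    chi = math.asin(math.sin(xi_p) / math.cosh(eta_p))
    lat = chi + sum(d * math.sin(2 * j * chi) for j, d in enumerate(delta, start=1))
    # Zone 30 has its central meridian at 3 degrees West.
    central_meridian = math.radians(zone * 6 - 183)
    lon = central_meridian + math.atan2(math.sinh(eta_p), math.cos(xi_p))
    return math.degrees(lat), math.degrees(lon)


# Illustrative coordinates only (roughly the Valladolid area), not taken from the datasets.
latitude, longitude = utm_to_latlon(356000.0, 4612000.0)
print(round(latitude, 4), round(longitude, 4))
```

The resulting latitude and longitude columns are the ones that Google My Maps recognizes when the table is imported.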

Once the preprocessing is finished, we will obtain the data tables "recreational_natural_parks.csv", "rural_accommodations_2stars.csv", "natural_park_shelters.csv", "observatories_natural_parks.csv", "viewpoints_natural_parks.csv", "park_houses.csv" and "trees_natural_parks.csv", which include generic and common information fields such as name, observations and geolocation, together with specific information fields, which are defined in detail in section "6.2 Personalization of the information to be displayed on the map".
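The filtering that produces these tables can be pictured with the following sketch, which keeps only the fields to be shown on the map and writes them back as CSV. The sample row and the column names (including "internal_id") are hypothetical; the real field names are defined in the source datasets and in the Notebook.

```python
import csv
import io

# Hypothetical input: one made-up row with an extra column we do not want on the map.
SAMPLE_INPUT = (
    "name,internal_id,observations,latitude,longitude\n"
    "Shelter A,17,Open in summer,42.01,-5.10\n"
)
MAP_COLUMNS = ["name", "observations", "latitude", "longitude"]


def keep_columns(csv_text, columns):
    """Return CSV text containing only the requested columns, in that order."""
    reader = csv.DictReader(io.StringIO(csv_text))
    output = io.StringIO()
    writer = csv.DictWriter(
        output,
        fieldnames=columns,
        extrasaction="ignore",  # silently drop columns that are not requested
        lineterminator="\n",
    )
    writer.writeheader()
    for row in reader:
        writer.writerow(row)
    return output.getvalue()


print(keep_columns(SAMPLE_INPUT, MAP_COLUMNS))
```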

You will be able to reproduce this analysis, as the source code is available in our GitHub account. The code is provided as a Jupyter Notebook which, once loaded into the development environment, can be easily run or modified. Given the informative nature of this post, and to help non-specialized readers follow it, the code is not intended to be the most efficient but rather the easiest to understand, so you will probably come up with many ways to optimize it to achieve similar purposes. We encourage you to do so!

5. Data enrichment

To provide more related information, a data enrichment process, explained below, is carried out on the "hotel accommodation registration" dataset. This step lets us automatically add complementary information that was not initially included, improving the user experience of the map by providing context information related to each point of interest.

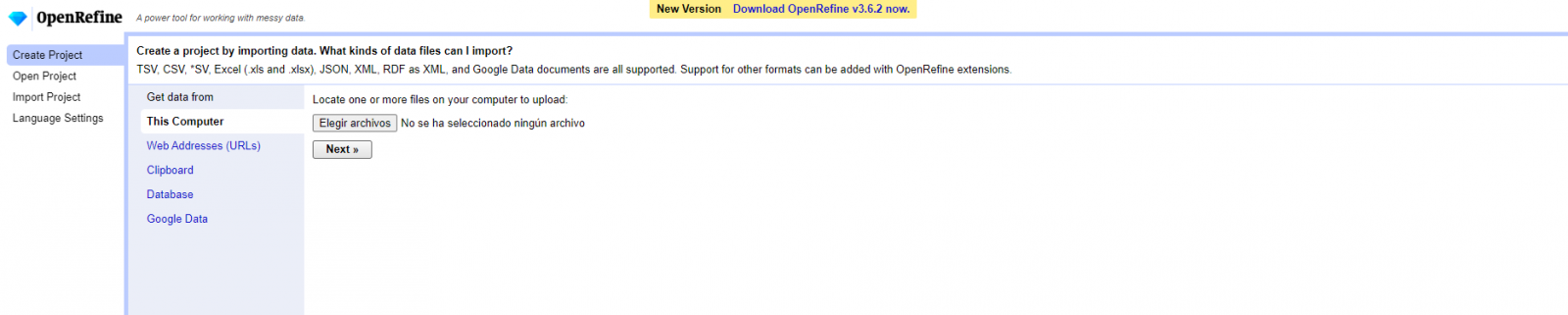

For this we will use a tool well suited to this kind of task: OpenRefine. This open-source tool supports many data preprocessing actions, although this time we will use it to enrich our data with context by automatically linking it to information that resides in the popular Wikidata knowledge repository.

Once the tool is installed on our computer and launched, a web application opens in the browser; if it does not open automatically, we open it manually.

Here are the steps to follow.

Step 1

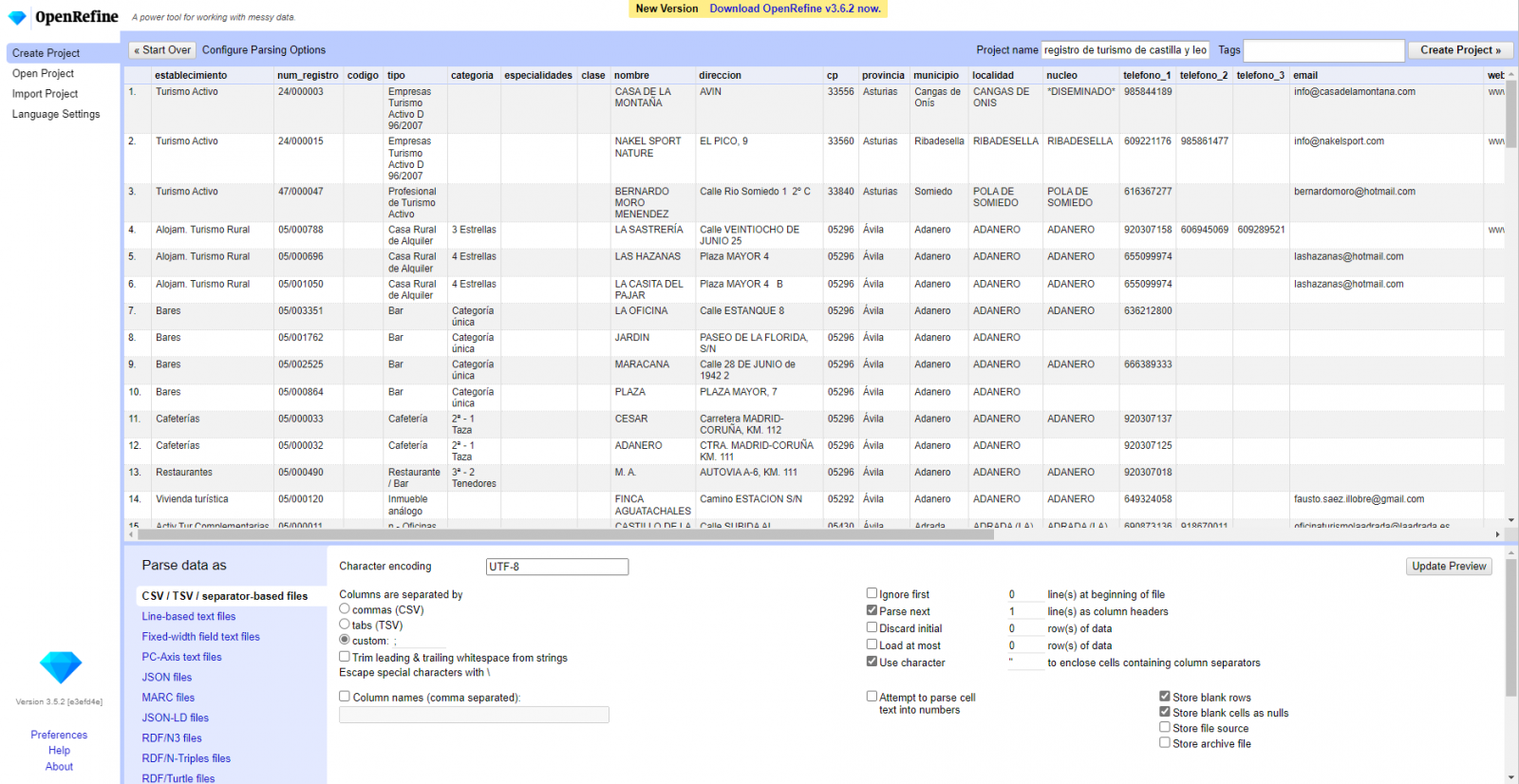

Loading the CSV into the system (Figure 1). In this case, the dataset "Hotel accommodation registration".

Figure 1. Uploading CSV file to OpenRefine

Step 2

Creation of the project from the uploaded CSV (Figure 2). OpenRefine is managed by projects (each uploaded CSV will be a project), which are saved on the computer where OpenRefine is running for possible later use. In this step we must assign a name to the project and some other data, such as the column separator, although the most common is that these last settings are filled automatically.

Figure 2. Creating a project in OpenRefine

Step 3

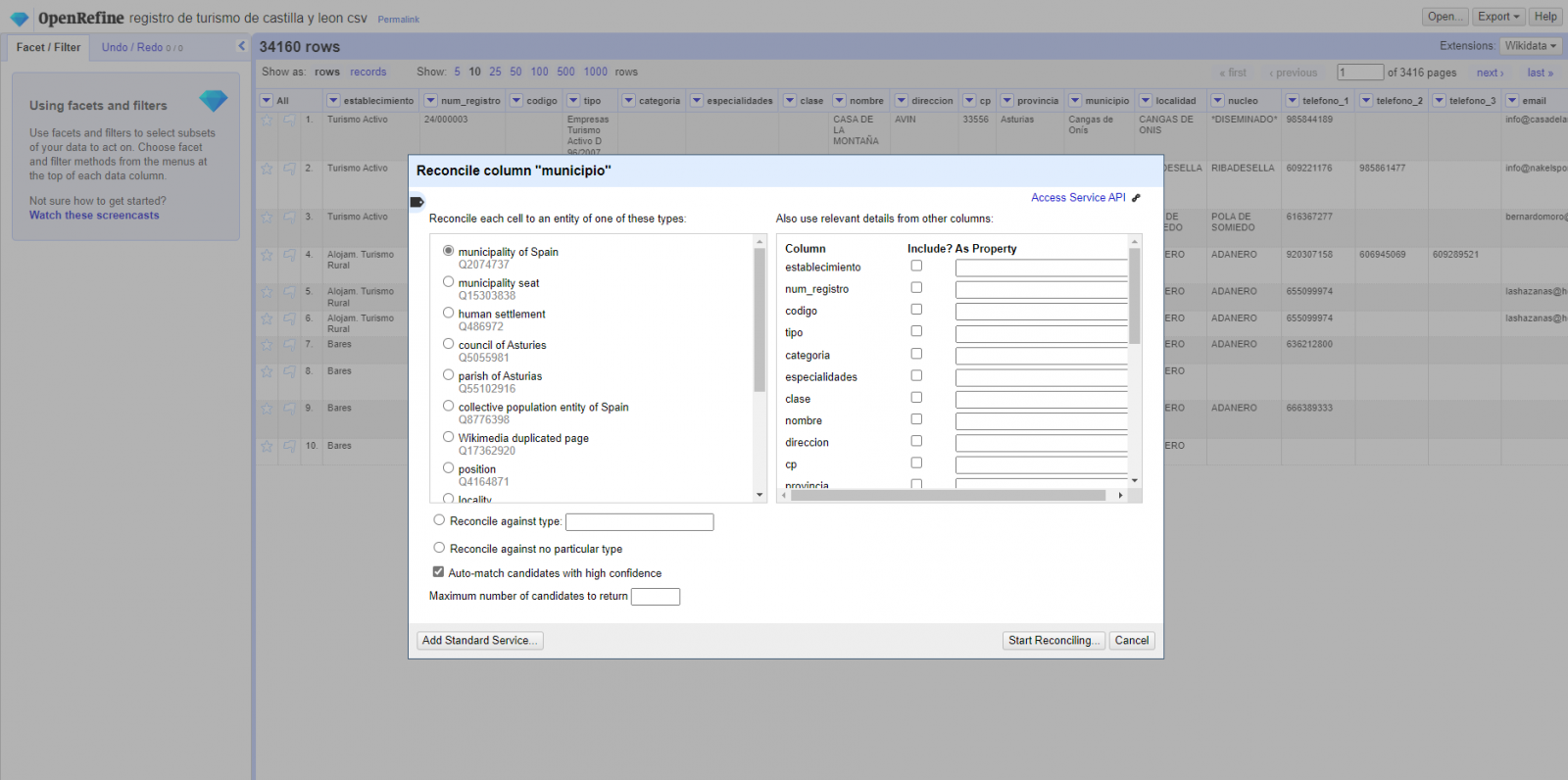

Linking (or reconciliation, in OpenRefine nomenclature) with external sources. OpenRefine allows us to link resources that we have in our CSV with external sources such as Wikidata. To do this, the following actions must be carried out:

- Identification of the columns to be linked. Usually, this step is based on the analyst experience and knowledge of the data that is represented in Wikidata. As a hint, generically you can reconcile or link columns that contain more global or general information such as country, streets, districts names etc., and you cannot link columns like geographical coordinates, numerical values or closed taxonomies (types of streets, for example). In this example, we have the column "municipalities" that contains the names of the Spanish municipalities.

- Beginning of reconciliation (Figure 3). We start the reconciliation and select the default source that will be available: Wikidata. After clicking Start Reconciling, it will automatically start searching for the most suitable Wikidata vocabulary class based on the values in our column.

- Obtaining the values of reconciliation. OpenRefine offers us an option of improving the reconciliation process by adding some features that allow us to conduct the enrichment of information with greater precision.

Figure 3. Selecting the class that best represents the values in the "municipality" column

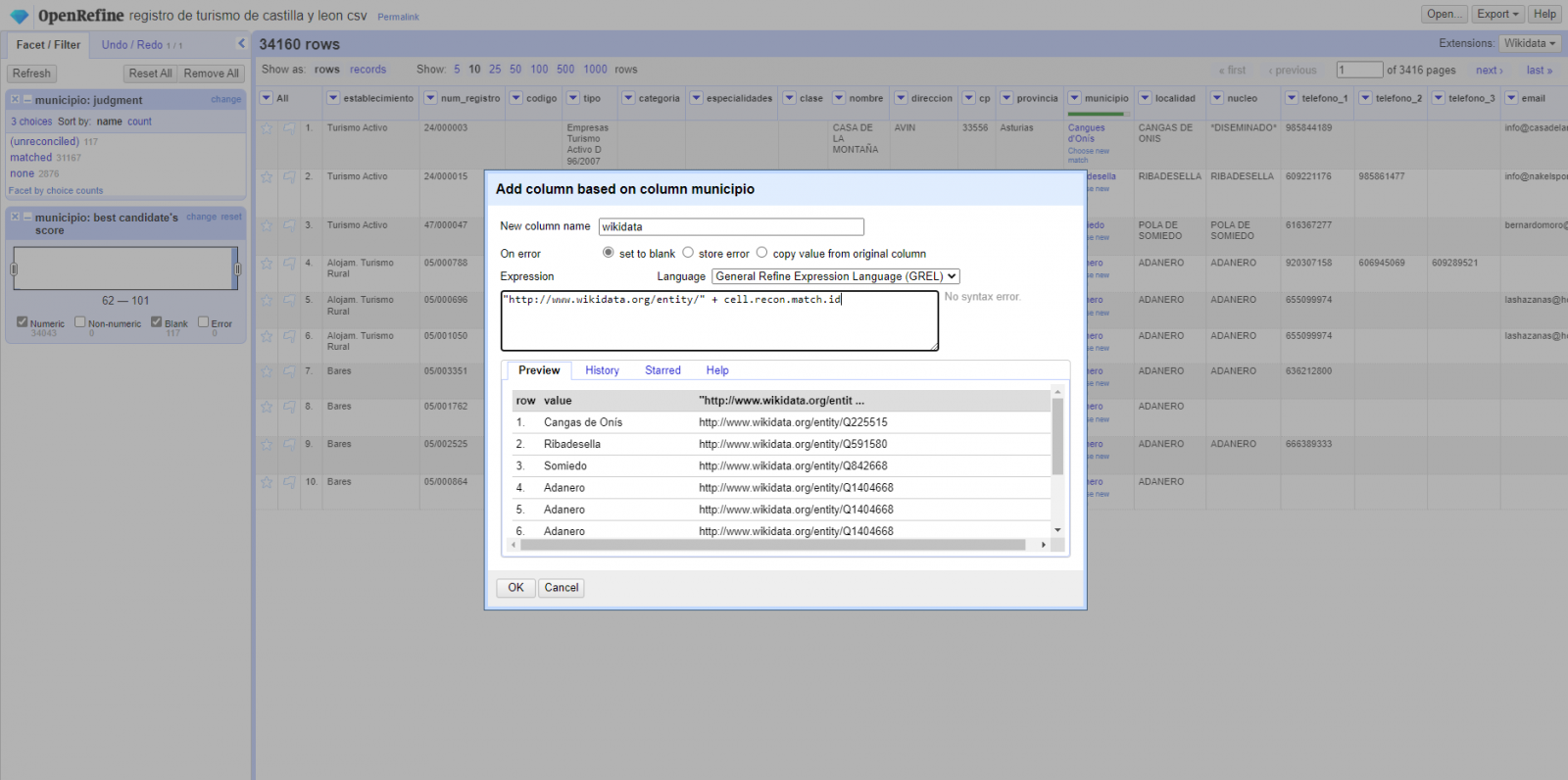

Step 4

Generate a new column with the reconciled or linked values (Figure 4). To do this, we click on the column "municipality" and go to "Edit column → Add column based on this column", which opens a dialog where we indicate the name of the new column (in this example it could be "wikidata"). In the expression box we must enter: "http://www.wikidata.org/entity/"+cell.recon.match.id and the values appear as previewed in the figure. "http://www.wikidata.org/entity/" is a fixed text string that represents Wikidata entities, while the reconciled value of each cell is obtained through the cell.recon.match.id expression; for example, for the cell "Adanero" it returns Q1404668.

Thanks to the abovementioned operation, a new column will be generated with those values. In order to verify that it has been executed correctly, we click on one of the cells in the new column which should redirect to the Wikidata webpage with reconciled value information.
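For readers more familiar with Python than with GREL, the expression used in this step is equivalent to the short sketch below. The function name is hypothetical, and returning None for cells without a match is an assumption about how unmatched values might be handled, not something OpenRefine does with the expression above.

```python
WIKIDATA_ENTITY_PREFIX = "http://www.wikidata.org/entity/"


def wikidata_entity_url(match_id):
    """Return the Wikidata entity URL for a reconciled ID, or None if there is no match."""
    if not match_id:
        return None
    # Same concatenation as the GREL expression: fixed prefix + reconciled ID.
    return WIKIDATA_ENTITY_PREFIX + match_id


# "Adanero" reconciles to Q1404668 in the example of this post.
print(wikidata_entity_url("Q1404668"))  # http://www.wikidata.org/entity/Q1404668
```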

Figure 4. Generating a new column with reconciled values

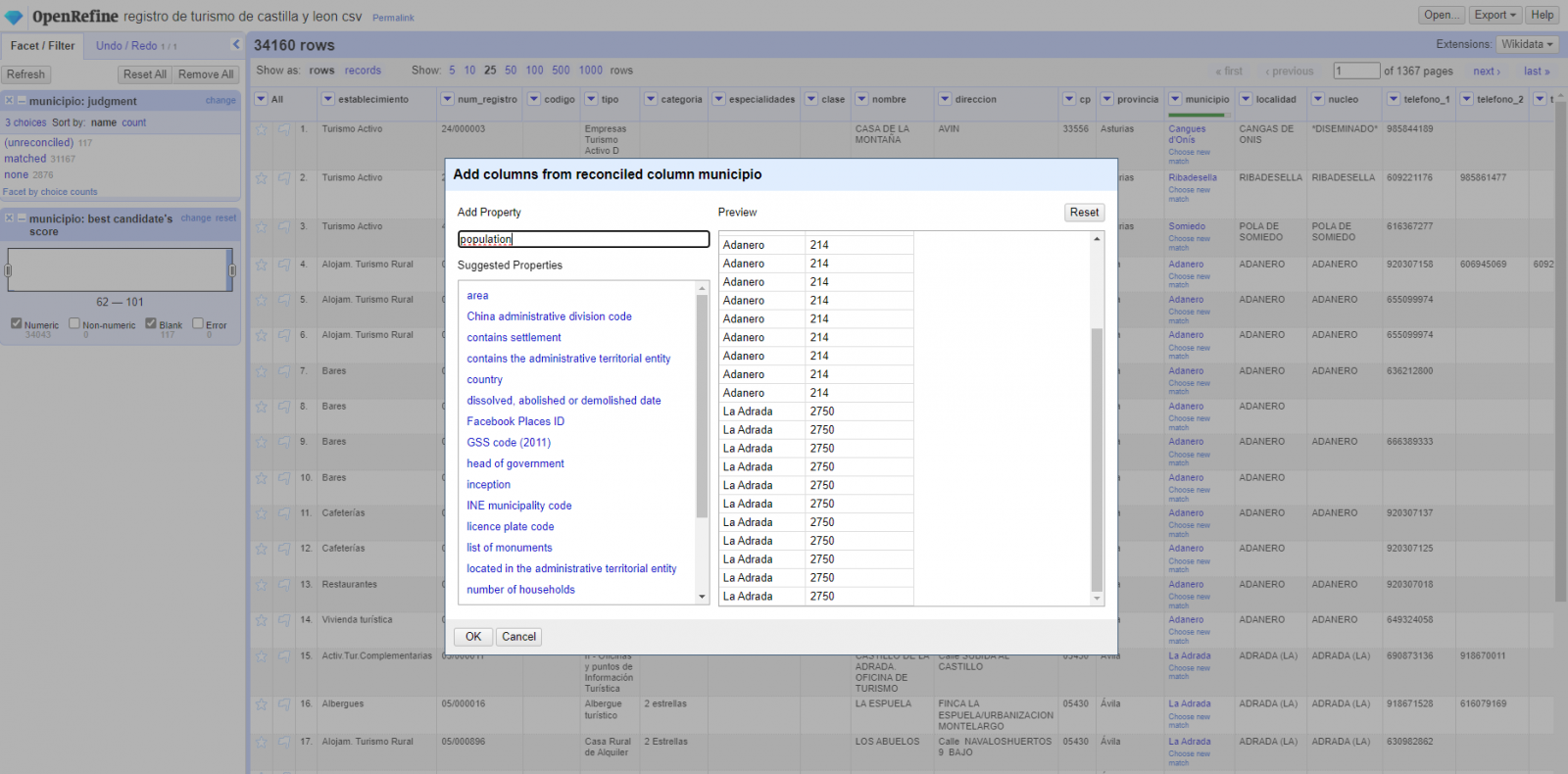

Step 5

We repeat the process, replacing the "Edit column → Add column based on this column" option of step 4 with "Add columns from reconciled values" (Figure 5). In this way, we can choose the property of the reconciled column.

In this exercise we have chosen the "image" property with identifier P18 and the "population" property with identifier P1082. Nevertheless, we could add any other properties that we consider useful, such as the list of monuments of interest. It should be mentioned that just as we enrich data with Wikidata, we can do so with other reconciliation services.

Figure 5. Choice of property for reconciliation

In the case of the "image" property, for display purposes we want the value of the cells to be in the form of a link, so we have made several adjustments: generating several columns from the reconciled values, adapting those columns with commands in the GREL language (OpenRefine's own language) and joining the values of the resulting columns. You can check these settings and more techniques to improve your handling of OpenRefine and adapt it to your needs in the following User Manual.
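The exact GREL commands are not reproduced in this post. As an illustration of the idea only, the following sketch turns an image file name into a clickable link. It assumes that the value obtained for P18 is a Wikimedia Commons file name and uses the Commons "Special:FilePath" page as the link prefix; both the function name and the sample file name are hypothetical.

```python
from urllib.parse import quote

# Assumed link prefix: Wikimedia Commons resolves file names through Special:FilePath.
COMMONS_FILE_PREFIX = "https://commons.wikimedia.org/wiki/Special:FilePath/"


def commons_image_link(file_name):
    """Join the fixed prefix and the percent-encoded file name into one link."""
    return COMMONS_FILE_PREFIX + quote(file_name)


# Hypothetical file name, used only to show the encoding of spaces.
print(commons_image_link("Example image.jpg"))
```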

6. Map visualization

6.1 Map generation with "Google My Maps"

To generate the custom map using the My Maps tool, we have to execute the following steps:

- We log in with a Google account and go to "Google My Maps", which is free to access and does not require downloading any software.

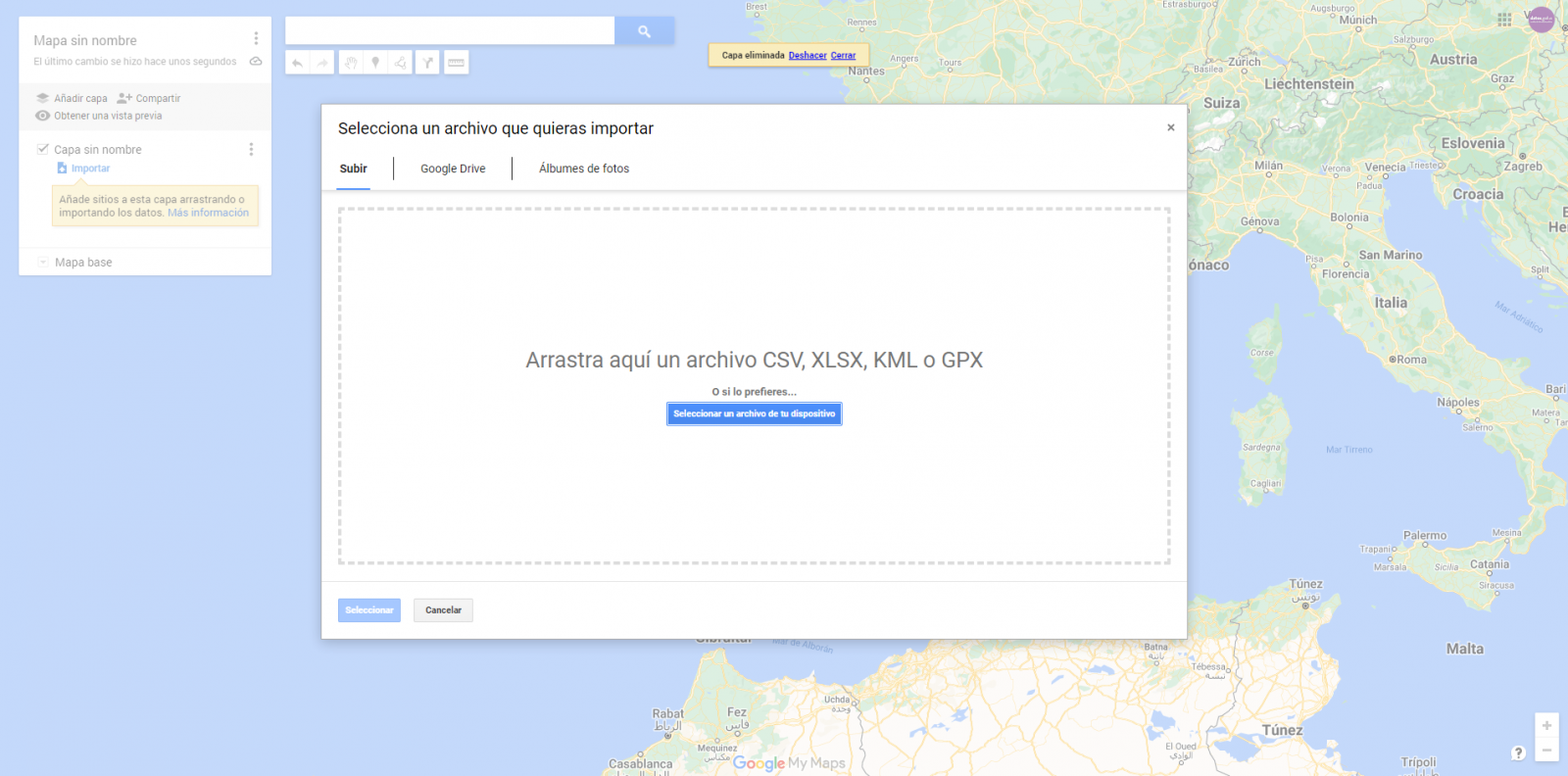

- We import the preprocessed data tables, one for each new layer we add to the map. Google My Maps allows you to import CSV, XLSX, KML and GPX files (Figure 6), which should include associated geographic information. To perform this step, you must first create a new layer from the side options menu.

Figure 6. Importing files into "Google My Maps"

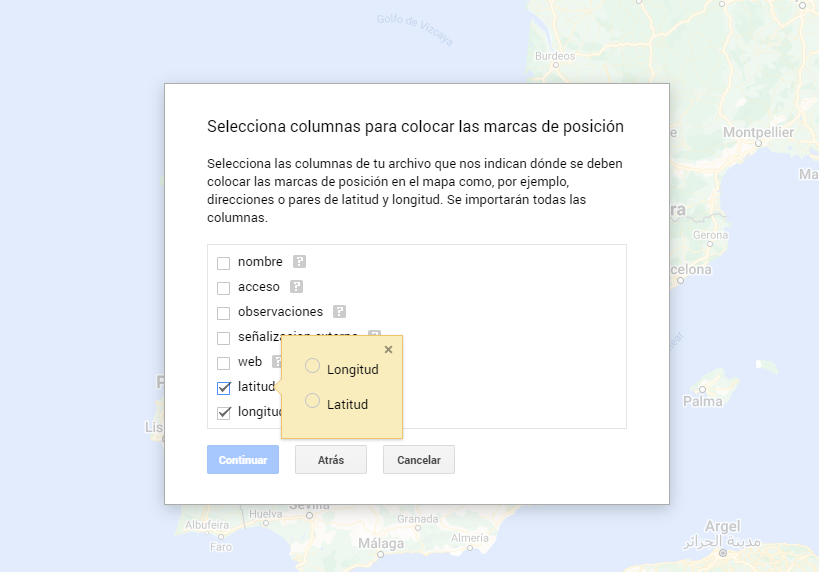

- In this case study, we'll import preprocessed data tables that contain one variable with the latitude and another with the longitude. This geographic information will be automatically recognized. My Maps also recognizes addresses, postal codes, countries, etc.

Figure 7. Select columns with placement values

- With the edit style option in the left side menu, in each of the layers, we can customize the pins, editing their color and shape.

Figure 8. Position pin editing

- Finally, at the bottom of the options sidebar, we can choose the basemap that we want to display.

Figure 9. Basemap selection

If you want to know more about the steps for generating maps with "Google My Maps", check out the following step-by-step tutorial.

6.2 Personalization of the information to be displayed on the map

During the preprocessing of the data tables, we have filtered the information according to the focus of the exercise, which is the generation of a map for planning tourist routes through the natural areas of Castile and León. The following describes the customization of the information that we have carried out for each of the datasets.

- In the dataset of singular trees in natural areas, the information displayed for each record is the name, observations, signage and position (latitude / longitude).

- In the dataset of park houses in natural areas, the information displayed for each record is the name, observations, signage, access, web and position (latitude / longitude).

- In the dataset of viewpoints in natural areas, the information displayed for each record is the name, observations, signage, access and position (latitude / longitude).