
AI Data Readiness: Preparing Data for Artificial Intelligence

Over the last few years we have seen spectacular advances in the use of artificial intelligence (AI) and, behind all these achievements, we will always find the same common ingredient: data. An illustrative example known to everyone is that of the language models used by OpenAI for its famous ChatGP…

Explainable artificial intelligence (XAI): how open data can help understand algorithms

The increasing adoption of artificial intelligence (AI) systems in critical areas such as public administration, financial services or healthcare has brought the need for algorithmic transparency to the forefront. The complexity of AI models used to make decisions such as granting credit or making a…

The role of open data in the evolution of SLM and LLM: efficiency vs. power

Language models are at the epicentre of the technological paradigm shift that has been taking place in generative artificial intelligence (AI) over the last two years. From the tools with which we interact in natural language to generate text, images or videos and which we use to create creativ…

SLM, LLM, RAG and Fine-tuning: Pillars of Modern Generative AI

In the fast-paced world of Generative Artificial Intelligence (AI), there are several concepts that have become fundamental to understanding and harnessing the potential of this technology. Today we focus on four: Small Language Models (SLM), Large Language Models (LLM), Retrieval Augmented Generation…

New Year's resolution: Apply the UNE data specifications in your organisation

As tradition dictates, the end of the year is a good time to reflect on our goals and objectives for the new phase that begins after the chimes. In data, the start of a new year also provides opportunities to chart an interoperable and digital future that will enable the development of a robust data…

Application of the UNE 0081:2023 Specification for data quality evaluation

The new UNE 0081 Data Quality Assessment specification, focused on data as a product (datasets or databases), complements the UNE 0079 Data Quality Management specification, which we analyse in this article and which focuses on data quality management processes. Both standards 0079 and 008…

UNE 0081 Specification - Data Quality Assessment Guide

Data quality plays a key role in today's world, where information is a valuable asset. Ensuring that data is accurate, complete and reliable has become essential to the success of organisations and underpins informed decision making.

Data quality has a direct impact not only…

FAIR principles: the secret of the data wizards

Books are an inexhaustible source of knowledge and experiences lived by others before us, which we can reuse to move forward in our lives. Libraries, therefore, are places where readers look for books, borrow them and, once they have used them and extracted what they need, return them.…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality is conditioned by many aspects. In this sense, the Aport…

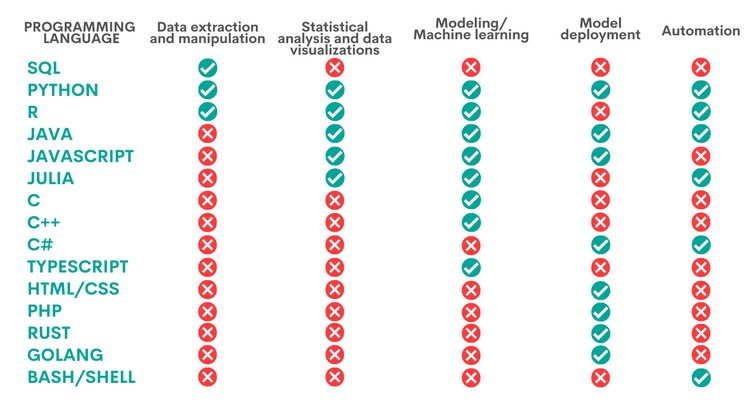

When to use each programming language in data science?

Python, R, SQL, JavaScript, C++, HTML... Nowadays we can find a multitude of programming languages that allow us to develop software programs, applications, web pages, etc. Each one has unique characteristics that differentiate it from the rest and make it more appropriate for certain tasks. But h…