The European Commission's 'European Data Strategy' states that the creation of a single market for shared data is key. In this strategy, the Commission has set as one of its main objectives the promotion of a data economy in line with European values of self-determination in data sharing (sovereignty), confidentiality, transparency, security and fair competition.

Common data spaces at European level are a fundamental resource in the data strategy because they act as enablers for driving the data economy. Indeed, pooling European data in key sectors, fostering data circulation and creating collective and interoperable data spaces are actions that contribute to the benefit of society.

Although data sharing environments have existed for a long time, creating data spaces that guarantee EU values and principles remains a challenge. Developing enabling legislative initiatives is not only a technological challenge, but also one of coordination among stakeholders, governance, adoption of standards and interoperability.

To address a challenge of this magnitude, the Commission plans to invest close to €8 billion by 2027 in the deployment of Europe's digital transformation. Part of the project includes the promotion of infrastructures, tools, architectures and data sharing mechanisms. For this strategy to succeed, a data space paradigm that is embedded in the industry needs to be developed, based on the fulfilment of European values. This data space paradigm will act as a de facto technology standard and will advance social awareness of the possibilities of data, which will enable the economic return on the investments required to create it.

In order to make the data space paradigm a reality, from the convergence of current initiatives, the European Commission has committed to the development of the Simpl project.

What exactly is Simpl?

Simpl is a €150 million project funded by the European Commission's Digital Europe programme with a three-year implementation period. Its objective is to provide society with middleware for building data ecosystems and cloud infrastructure services that support the European values of data sovereignty, privacy and fair markets.

The Simpl project consists of the delivery of 3 products:

- Simpl-Open: The middleware itself. This is a software solution to create ecosystems of data services (data and application sharing) and cloud infrastructure services (IaaS, PaaS, SaaS, etc.). This software must include agents enabling connection to the data space, operational services and brokerage services (catalogue, vocabulary, activity log, etc.). The result should be delivered under an open source licence and an attempt will be made to build an open source community to ensure its evolution.

- Simpl-Labs: Infrastructure for creating test bed environments so that interested users can test the latest version of the software in self-service mode. This environment is primarily intended for data space developers who want to do the appropriate technical testing prior to a deployment.

- Simpl-Live: Deployments of Simpl-Open in production environments that will correspond to the sectoral data spaces contemplated in the Digital Europe programme. In particular, the deployment of data spaces managed by the European Commission itself (Health, Procurement, Language) is envisaged.

The project is practically oriented and aims to deliver results as soon as possible. It is therefore intended that, in addition to supplying the software, the contractor will provide a laboratory service for user testing. The company developing Simpl will also have to adapt the software for the deployment of common European data spaces foreseen in the Digital Europe programme.

The Gaia-X partnership is considered to be the closest in its objectives to the Simpl project, so the outcome of the project should strive for the reuse of the components made available by Gaia-X.

For its part, the Data Space Support Center, which involves the main European initiatives for the creation of technological frameworks and standards for the construction of data spaces, will have to define the middleware requirements by means of specifications, architectural models and the selection of standards.

Simpl's preparatory work was completed in May 2022, setting out the scope and technical requirements of the project, which are detailed in the currently open procurement process. The tender was launched on 24 February 2023. All information is available on TED eTendering, including how to ask questions about the tendering process. The deadline for applications is 24 April 2023 at 17:00 (Brussels time).

Simpl expects to have a minimum viable platform published in early 2024. In parallel, and as soon as possible, the open test environment (Simpl-Labs) will be made available for interested parties to experiment. This will be followed by the progressive integration of different use cases, helping to tailor Simpl to specific needs, with priority being given to cases otherwise funded under the Digital Europe work programme.

In conclusion, Simpl is the European Commission's commitment to the deployment and interoperability of the different sectoral data space initiatives, ensuring alignment with the specifications and requirements emanating from the Data Space Support Center and, therefore, with the convergence process of the different European initiatives for the construction of data spaces (Gaia-X, IDSA, Fiware, BDVA).

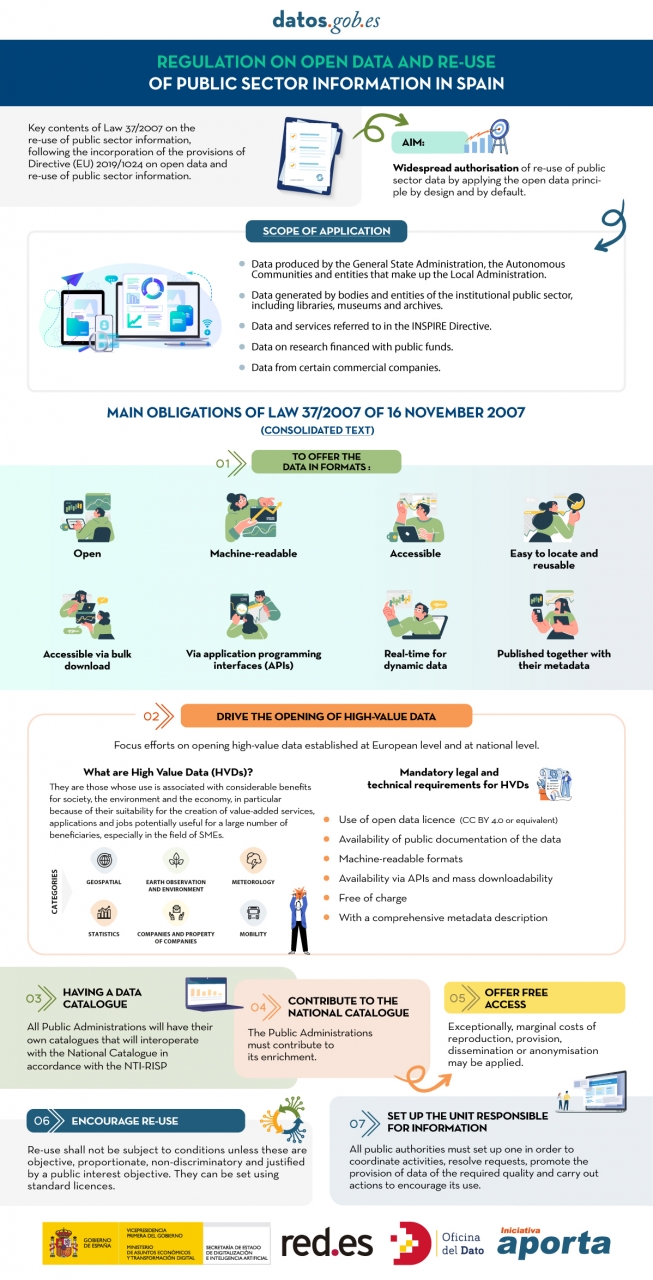

The public sector in Spain will have the duty to guarantee the openness of its data by design and by default, as well as its reuse. This is the result of the amendment of Law 37/2007 on the reuse of public sector information in application of European Directive 2019/1024.

This new wording of the regulation seeks to broaden the scope of application of the Law in order to bring the legal guarantees and obligations closer to the current technological, social and economic context. In this scenario, the current regulation takes into account that greater availability of public sector data can contribute to the development of cutting-edge technologies such as artificial intelligence and all its applications.

Moreover, this initiative is aligned with the European Union's Data Strategy aimed at creating a single data market in which information flows freely between states and the private sector in a mutually beneficial exchange.

From high-value data to the responsible unit of information: obligations under Law 37/2007

In the following infographic, we highlight the main obligations contained in the consolidated text of the law. Emphasis is placed on duties such as promoting the opening of High Value Datasets (HVDS), i.e. datasets with a high potential to generate social, environmental and economic benefits. As required by law, HVDS must be published under an open data attribution licence (CC BY 4.0 or equivalent), in machine-readable format and accompanied by metadata describing the characteristics of the datasets. All of this will be publicly accessible and free of charge with the aim of encouraging technological, economic and social development, especially for SMEs.
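As a rough illustration of the publication conditions just listed (open attribution licence, machine-readable format, descriptive metadata, free access), the following minimal Python sketch checks a dataset record against them. The `DatasetRecord` class, the `hvds_issues` function, the list of formats and the required metadata keys are all hypothetical choices made for this example; they are not taken from the law or from any catalogue specification.

```python
from dataclasses import dataclass, field

# Illustrative assumptions only: the law says "CC BY 4.0 or equivalent",
# so a real check would need an agreed list of equivalent licences.
OPEN_LICENCES = {"CC-BY-4.0"}
# Assumed examples of machine-readable formats, not an official list.
MACHINE_READABLE_FORMATS = {"csv", "json", "xml", "rdf"}
# Assumed minimal metadata keys, chosen for this sketch.
REQUIRED_METADATA = ("title", "description", "publisher")


@dataclass
class DatasetRecord:
    licence: str
    file_format: str
    is_free: bool
    metadata: dict = field(default_factory=dict)


def hvds_issues(record: DatasetRecord) -> list[str]:
    """Return the unmet publication conditions (empty list if none)."""
    issues = []
    if record.licence not in OPEN_LICENCES:
        issues.append("licence is not an open attribution licence")
    if record.file_format.lower() not in MACHINE_READABLE_FORMATS:
        issues.append("format is not machine-readable")
    if not record.is_free:
        issues.append("access is not free of charge")
    missing = [key for key in REQUIRED_METADATA if not record.metadata.get(key)]
    if missing:
        issues.append("missing metadata: " + ", ".join(missing))
    return issues
```

A publishing unit could run a check of this kind before adding a dataset to its catalogue, adapting the three constants to whatever its own guidelines specify.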

In addition to the publication of high-value data, all public administrations will be obliged to have their own data catalogues that will interoperate with the National Catalogue following the NTI-RISP, with the aim of contributing to its enrichment. As with HVDS, access to public authorities' datasets should be free of charge, except where marginal costs resulting from data processing may apply.

To guarantee data governance, the law establishes the need to designate a unit responsible for information for each entity to coordinate the opening and re-use of data, and to be in charge of responding to citizens' requests and demands.

In short, Law 37/2007 has been modified with the aim of offering legal guarantees to the demands of competitiveness and innovation raised by technologies such as artificial intelligence or the internet of things, as well as to realities such as data spaces where open data is presented as a key element.


On 21 February, the winners of the 6th edition of the Castilla y León Open Data Competition were presented with their prizes. This competition, organised by the Regional Ministry of the Presidency of the Regional Government of Castilla y León, recognises projects that provide ideas, studies, services, websites or mobile applications, using datasets from its Open Data Portal.

The event was attended, among others, by Jesús Julio Carnero García, Minister of the Presidency, and Rocío Lucas Navas, Minister of Education of the Junta de Castilla y León.

In his speech, the Minister Jesús Julio Carnero García emphasised that the Regional Government is going to launch the Data Government project, with which they intend to combine Transparency and Open Data, in order to improve the services offered to citizens.

In addition, the Data Government project has an approved allocation of almost 2.5 million euros from the Next Generation Funds, which includes two lines of work: both the design and implementation of the Data Government model, as well as the training for public employees.

This is an Open Government action which, as the Minister himself added, "is closely related to transparency, as we intend to make Open Data freely available to everyone, without copyright restrictions, patents or other control or registration mechanisms".

Nine prize-winners in the 6th edition of the Castilla y León Open Data Competition

It is precisely in this context that initiatives such as the Castilla y León Open Data Competition stand out. In its sixth edition, it has received a total of 26 proposals from León, Palencia, Salamanca, Zamora, Madrid and Barcelona.

The 12,000 euros in prize money allocated to the four categories defined in the rules have been shared among nine of these proposals. This is how the awards were distributed by category:

Products and Services Category: aimed at recognising projects that provide studies, services, websites or applications for mobile devices and that are accessible to all citizens via the web through a URL.

- First prize: 'Oferta de Formación profesional de Castilla y León. An attractive and accessible alternative with no-code tools'. Author: Laura Folgado Galache (Zamora). 2,500 euros.

- Second prize: 'Enjoycyl: collection and exploitation of assistance and evaluation of cultural activities'. Author: José María Tristán Martín (Palencia). 1,500 euros.

- Third prize: 'Aplicación del problema de la p-mediana a la Atención Primaria en Castilla y León'. Authors: Carlos Montero and Ernesto Ramos (Salamanca). 500 euros.

- Student prize: 'Play4CyL'. Authors: Carlos Montero and Daniel Heras (Salamanca). 1,500 euros.

Ideas category: seeks to reward projects that describe an idea for developing studies, services, websites or applications for mobile devices.

- First prize: 'Elige tu Universidad (Castilla y León)'. Authors: Maite Ugalde Enríquez and Miguel Balbi Klosinski (Barcelona). 1,500 euros.

- Second prize: 'Bots to interact with open data - Conversational interfaces to facilitate access to public data (BODI)'. Authors: Marcos Gómez Vázquez and Jordi Cabot Sagrera (Barcelona). 500 euros.

Data Journalism Category: awards journalistic pieces published or updated (in a relevant way) in any medium (written or audiovisual).

- First prize: '13-F elections in Castilla y León: there will be 186 fewer polling stations than in the 2019 regional elections'. Authors: Asociación Maldita contra la desinformación (Madrid). 1,500 euros.

- Second prize: 'More than 2,500 mayors received nothing from their city council in 2020 and another 1,000 have not reported their salary'. Authors: Asociación Maldita contra la desinformación (Madrid). 1,000 euros.

Didactic Resource Category: recognises the creation of new and innovative open didactic resources (published under Creative Commons licences) that support classroom teaching.

In short, and as the Regional Ministry of the Presidency itself points out, with this type of initiative and the Open Data Portal, two basic principles are fulfilled: firstly, that of transparency, by making available to society as a whole data generated by the Community Administration in the development of its functions, in open formats and with a free licence for its use; and secondly, that of collaboration, allowing the development of shared initiatives that contribute to social and economic improvements through joint work between citizens and public administrations.

16.5 billion euros. These are the revenues that artificial intelligence (AI) and data are expected to generate in Spanish industry by 2025, according to what was announced last February at the IndesIA forum, the association for the application of artificial intelligence in industry. AI is already part of our daily lives: either by making our work easier by performing routine and repetitive tasks, or by complementing human capabilities in various fields through machine learning models that facilitate, for example, image recognition, machine translation or the prediction of medical diagnoses. All of these activities help us to improve the efficiency of businesses and services, driving more accurate decision-making.

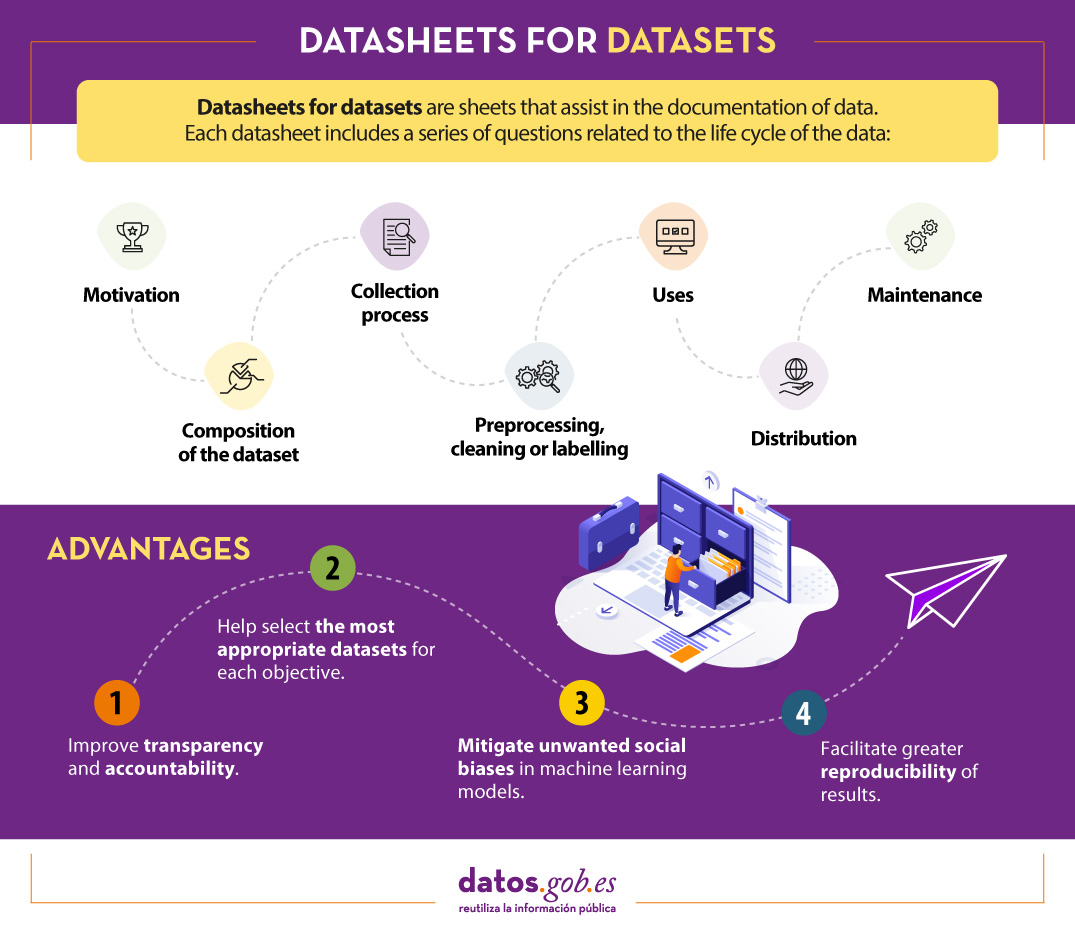

But for machine learning models to work properly, they need quality and well-documented data. Every machine learning model is trained and evaluated with data. The characteristics of these datasets condition the behaviour of the model. For example, if the training data reflects unwanted social biases, these are likely to be incorporated into the model as well, which can have serious consequences when used in high-profile areas such as criminal justice, recruitment or credit lending. Moreover, if we do not know the context of the data, our model may not work properly, as its construction process has not taken into account the intrinsic characteristics of the data on which it is based.

For these and other reasons, the World Economic Forum suggests that all entities should document the provenance, creation and use of machine learning datasets in order to avoid erroneous or discriminatory results.

What are datasheets for datasets?

One mechanism for documenting this information is known as Datasheets for datasets. This framework proposes that every dataset should be accompanied by a datasheet, which consists of a questionnaire that guides data documentation and reflection throughout the data lifecycle. Some of the benefits are:

- Improves collaboration, transparency and accountability within the machine learning community.

- Mitigates unwanted social biases in models.

- Helps researchers and developers select the most appropriate datasets to achieve their specific goals.

- Facilitates greater reproducibility of results.

Datasheets will vary depending on factors such as knowledge area, existing organisational infrastructure or workflows.

To assist in the creation of datasheets, a questionnaire has been designed with a series of questions, according to the stages of the data lifecycle:

- Motivation. Collects the reasons that led to the creation of the datasets. It also asks who created or funded the datasets.

- Composition. Provides users with the necessary information on the suitability of the dataset for their purposes. It includes, among other questions, what the units of observation in the dataset represent (documents, photos, persons, countries), what kind of information each unit provides and whether there are errors, sources of noise or redundancies in the dataset. It also prompts reflection on data that refer to individuals, to avoid possible social biases or privacy violations.

- Collection process. It is intended to help researchers and users think about how to create alternative datasets with similar characteristics. It details, for example, how the data were acquired, who was involved in the collection process, or what the ethical review process was like. It deals especially with the ethical aspects of processing data protected by the GDPR.

- Preprocessing, cleansing or tagging. These questions allow data users to determine whether data have been processed in ways that are compatible with their intended uses. They ask whether any preprocessing, cleansing or tagging of the data was performed, and whether the software used to preprocess, cleanse and tag the data is available.

- Uses. This section provides information on those tasks for which the data may or may not be used. It poses questions such as: Has the dataset already been used for any task? What other tasks can it be used for? Does the composition of the dataset or the way it was collected, preprocessed, cleaned and labelled affect other future uses?

- Distribution. This covers how the dataset will be disseminated. Questions focus on whether the data will be distributed to third parties and, if so, how, when, what are the restrictions on use and under what licences.

- Maintenance. The questionnaire ends with questions aimed at planning the maintenance of the data and communicating the plan to the users of the data. For example, answers are given to whether the dataset will be updated or who will provide support.

It is recommended that all questions are considered prior to data collection, so that data creators can be aware of potential problems. To illustrate how each of these questions could be answered in practice, the model developers have produced an appendix with an example for a given dataset.
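To make the structure of the questionnaire concrete, here is a minimal Python sketch of a datasheet organised by the seven lifecycle stages listed above. The `Datasheet` class, its method names and the section identifiers are hypothetical, chosen for this illustration; the framework itself defines a questionnaire, not a software interface.

```python
from dataclasses import dataclass, field

# The seven lifecycle stages covered by the questionnaire, in order.
SECTIONS = (
    "motivation",
    "composition",
    "collection_process",
    "preprocessing_cleansing_tagging",
    "uses",
    "distribution",
    "maintenance",
)


@dataclass
class Datasheet:
    dataset_name: str
    # Maps each section name to a {question: answer} dictionary.
    answers: dict = field(default_factory=lambda: {s: {} for s in SECTIONS})

    def answer(self, section: str, question: str, text: str) -> None:
        """Record the answer to one question in the given section."""
        if section not in SECTIONS:
            raise ValueError(f"unknown section: {section}")
        self.answers[section][question] = text

    def unanswered_sections(self) -> list[str]:
        """Sections with no answers yet, in questionnaire order."""
        return [s for s in SECTIONS if not self.answers[s]]
```

Calling `unanswered_sections()` before data collection starts gives a quick view of which stages still need to be thought through, in line with the recommendation to consider all questions early.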

Is Datasheets for datasets effective?

The Datasheets for datasets data documentation framework has initially received good reviews, but its implementation continues to face challenges, especially when working with dynamic data.

To find out whether the framework effectively addresses the documentation needs of data creators and users, in June 2022, Microsoft USA and the University of Michigan conducted a study on its implementation. To do so, they conducted a series of interviews and a follow-up on the implementation of the questionnaire by a number of machine learning professionals.

In summary, participants expressed the need for documentation frameworks to be adaptable to different contexts, to be integrated into existing tools and workflows, and to be as automated as possible, partly due to the length of the questions. However, they also highlighted its advantages, such as reducing the risk of information loss, promoting collaboration between all those involved in the data lifecycle, facilitating data discovery and fostering critical thinking, among others.

In short, this is a good starting point, but it will have to evolve, especially to adapt to the needs of dynamic data and documentation flows applied in different contexts.

Content prepared by the datos.gob.es team.

One of the key actions that we recently highlighted as necessary to build the future of open data in our country is the implementation of processes to improve data management and governance. It is no coincidence that proper data management in our organisations is becoming an increasingly complex and in-demand task. Data governance specialists, for example, are increasingly in demand - with more than 45,000 active job openings in the US for a role that was virtually non-existent not so long ago - and dozens of data management platforms now advertise themselves as data governance platforms.

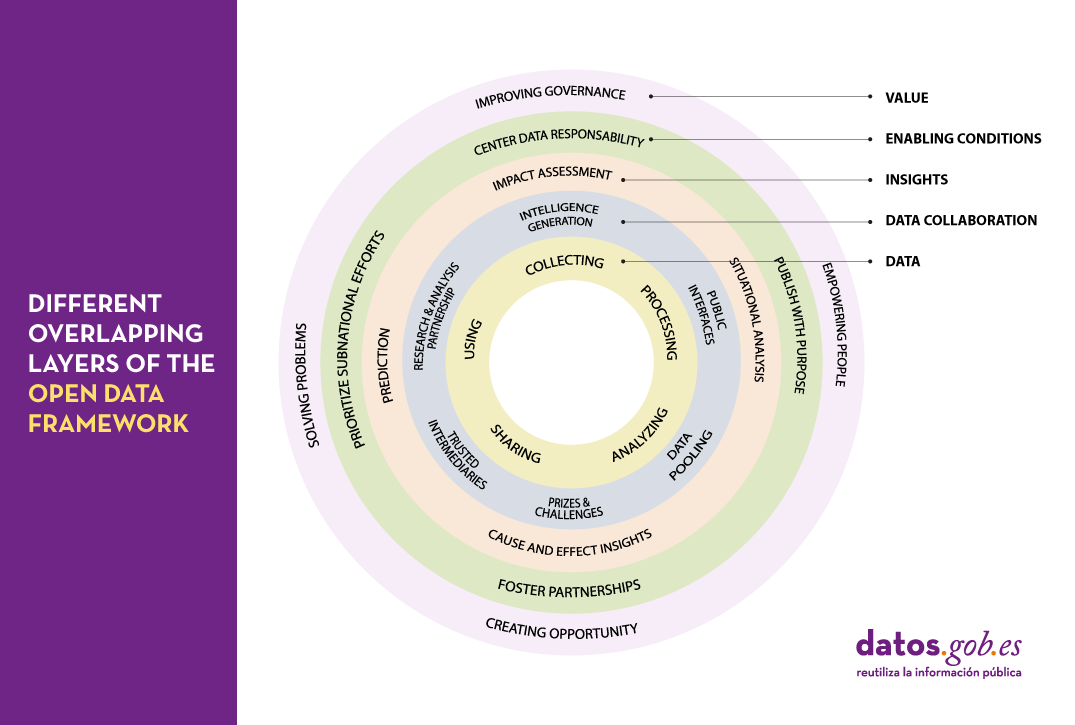

But what's really behind these buzzwords - what is it that we really mean by data governance? In reality, what we are talking about is a series of quite complex transformation processes that affect the whole organisation.

This complexity is perfectly reflected in the framework proposed by the Open Data Policy Lab, where we can clearly see the different overlapping layers of the model and what their main characteristics are - leading to a journey through the elaboration of data, collaboration with data as the main tool, knowledge generation, the establishment of the necessary enabling conditions and the creation of added value.

Let's now peel the onion and take a closer look at what we will find in each of these layers:

The data lifecycle

We should never consider data as isolated elements, but as part of a larger ecosystem, which is embedded in a continuous cycle with the following phases:

- Collection or collation of data from different sources.

- Processing and transformation of data to make it usable.

- Sharing and exchange of data between different members of the organisation.

- Analysis to extract the knowledge being sought.

- Using data according to the knowledge obtained.

Collaboration through data

It is not uncommon for the life cycle of data to take place solely within the organisation where it originates. However, we can increase the value of that data exponentially, simply by exposing it to collaboration with other organisations through a variety of mechanisms, thus adding a new layer of management:

- Public interfaces that provide selective access to data, enabling new uses and functions.

- Trusted intermediaries that function as independent data brokers. These brokers coordinate the use of data by third parties, ensuring its security and integrity at all times.

- Data pooling that provides a common, joint, complete and coherent view of data by aggregating portions from different sources.

- Research and analysis partnerships, granting access to certain data for the purpose of generating specific knowledge.

- Prizes and challenges that give access to specific data for a limited period of time to promote new innovative uses of data.

- Intelligence generation, whereby the knowledge acquired by the organisation through the data is also shared and not just the raw material.

Insight generation

Thanks to the collaborations established in the previous layer, it will be possible to carry out new studies of the data that will allow us both to analyse the past and to try to extrapolate the future using various techniques such as:

- Situational analysis, knowing what is happening in the data environment.

- Cause and effect insights, looking for an explanation of the origin of what is happening.

- Prediction, trying to infer what will happen next.

- Impact assessment, establishing what we expect should happen.

Enabling conditions

There are a number of procedures that when applied on top of an existing collaborative data ecosystem can lead to even more effective use of data through techniques such as:

- Publish with a purpose, with the aim of coordinating data supply and demand as efficiently as possible.

- Foster partnerships, including in our analysis those groups of people and organisations that can help us better understand real needs.

- Prioritize subnational efforts, strengthening alternative data sources by providing the necessary resources to create new data sources in untapped areas.

- Center data responsibility, establishing an accountability framework around data that takes into account the principles of fairness, engagement and transparency.

Value generation

Scaling up the ecosystem, and establishing the right conditions for that ecosystem to flourish, can lead to data economies of scale from which we can derive new benefits such as:

- Improving governance and operations of the organisation itself through the overall improvements in transparency and efficiency that accompany openness processes.

- Empowering people by providing them with the tools they need to perform their tasks in the most appropriate way and make the right decisions.

- Creating new opportunities for innovation, the creation of new business models and evidence-led policy making.

- Solving problems by optimising processes, services and interventions within the system in which we operate.

As we can see, the concept of data governance is actually much broader and more complex than one might initially expect. It encompasses a number of key actions and tasks that, in most organisations, will be practically impossible to centralise in a single role or a single tool. Therefore, when establishing a data governance system in an organisation, we should face the challenge as an integral transformation process or paradigm shift in which practically all members of the organisation are involved to a greater or lesser extent. A good way to face this challenge with greater ease and better guarantees is to adopt and implement some of the frameworks and reference standards that have been created for this purpose and that correspond to different parts of this model.

Content prepared by Carlos Iglesias, Open data Researcher and consultant, World Wide Web Foundation.

The contents and views expressed in this publication are the sole responsibility of the author.

Transforming data into knowledge has become one of the main objectives facing both public and private organizations today. But, in order to achieve this, it is necessary to start from the premise that the data processed is governed and of quality.

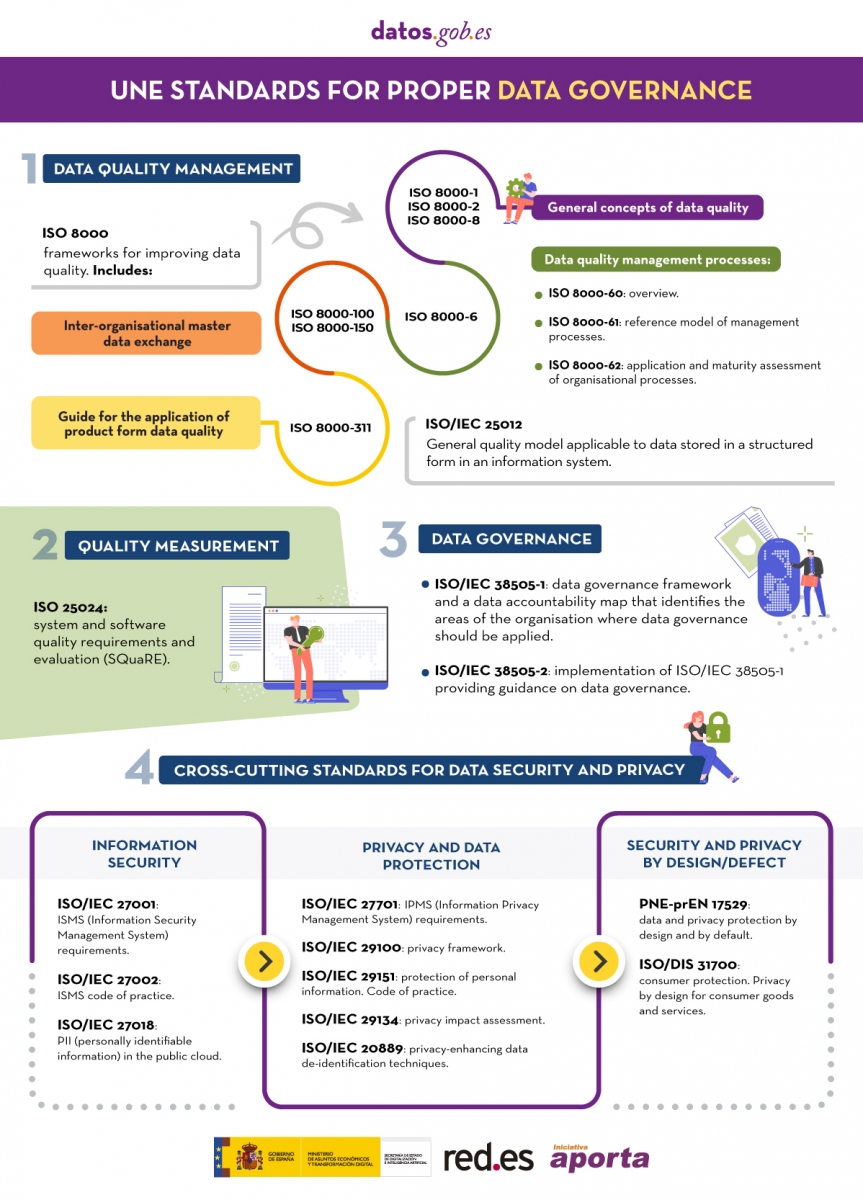

In this sense, the Spanish Association for Standardization (UNE) has recently published an article and a report that bring together different technical standards seeking to guarantee the correct management and governance of an organization's data. Both materials are collected in this post, including an infographic summary of the highlighted standards.

In the aforementioned reference articles, technical standards related to governance, management, quality, security and data privacy are mentioned. On this occasion we want to zoom in on those focused on data quality.

Quality management reference standards

As Lord Kelvin, a 19th-century British physicist and mathematician, said, “what is not measured cannot be improved, and what is not improved is always degraded”. But to measure the quality of the data and to be able to improve it, standards are needed to help us first homogenize that quality. The following technical standards can help us with this:

ISO 8000 standard

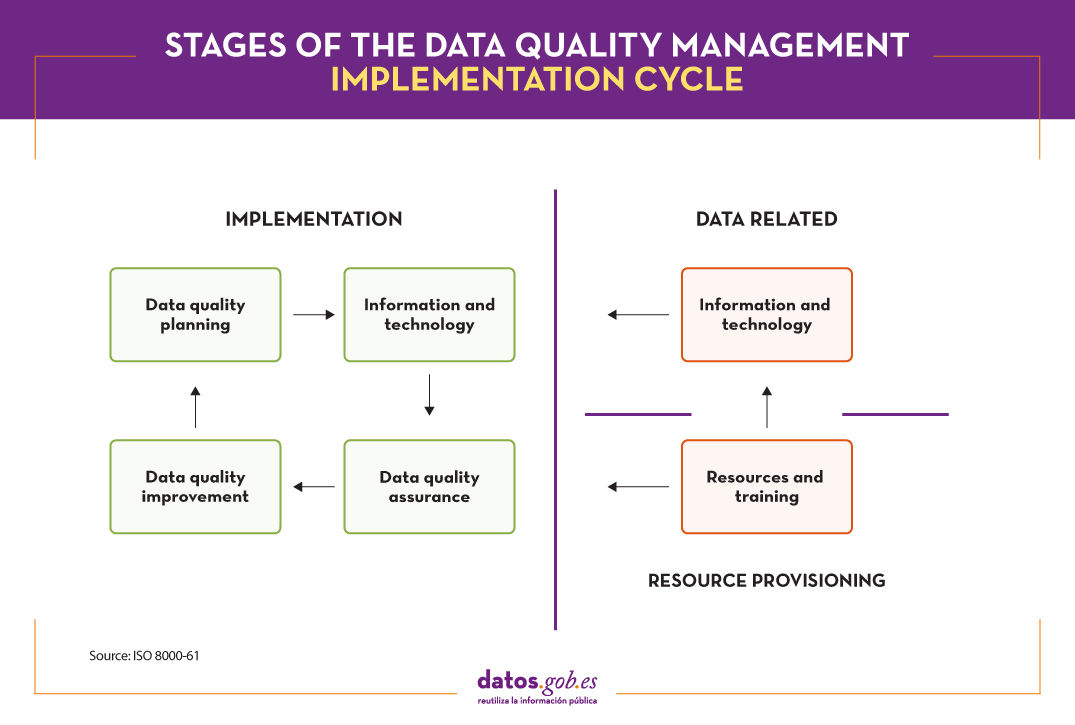

The ISO (International Organization for Standardization) has ISO 8000 as its international standard for the quality of transaction data, product data and business master data. This standard is structured in 4 parts: general concepts of data quality (ISO 8000-1, ISO 8000-2 and ISO 8000-8), data quality management processes (ISO 8000-6x), aspects related to the exchange of master data between organizations (parts 100 to 150) and application of product data quality (ISO 8000-311).

Within the ISO 8000-6x family, focused on data quality management processes to create, store and transfer data that support business processes in a timely and profitable manner, we find:

- ISO 8000-60 provides an overview of data quality management processes subject to a cycle of continuous improvement.

- ISO 8000-61 establishes a reference model for data quality management processes. The main characteristic is that, in order to achieve continuous improvement, the implementation process must be executed continuously following the Plan-Do-Check-Act cycle. In addition, implementation processes related to resource provisioning and data processing are included. As shown in the following image, the four stages of the implementation cycle must have input data, control information and support for continuous improvement, as well as the necessary resources to carry out the activities.

- For its part, ISO 8000-62, the last of the ISO 8000-6x family, focuses on the evaluation of organizational process maturity. It specifies a framework for assessing the organization's data quality management maturity, based on its ability to execute the activities related to the data quality management processes identified in ISO 8000-61. Depending on the capability of the evaluated process, one of the defined levels is assigned.

ISO 25012 standard

Another of the ISO standards that deals with data quality is the ISO 25000 family, which aims to create a common framework for evaluating the quality of the software product. Specifically, the ISO 25012 standard defines a general data quality model applicable to data stored in a structured way in an information system.

In addition, in the context of open data, it is considered a reference according to the set of good practices for the evaluation of the quality of open data developed by the pan-European network Share-PSI, conceived to serve as a guide for all public organizations when sharing information.

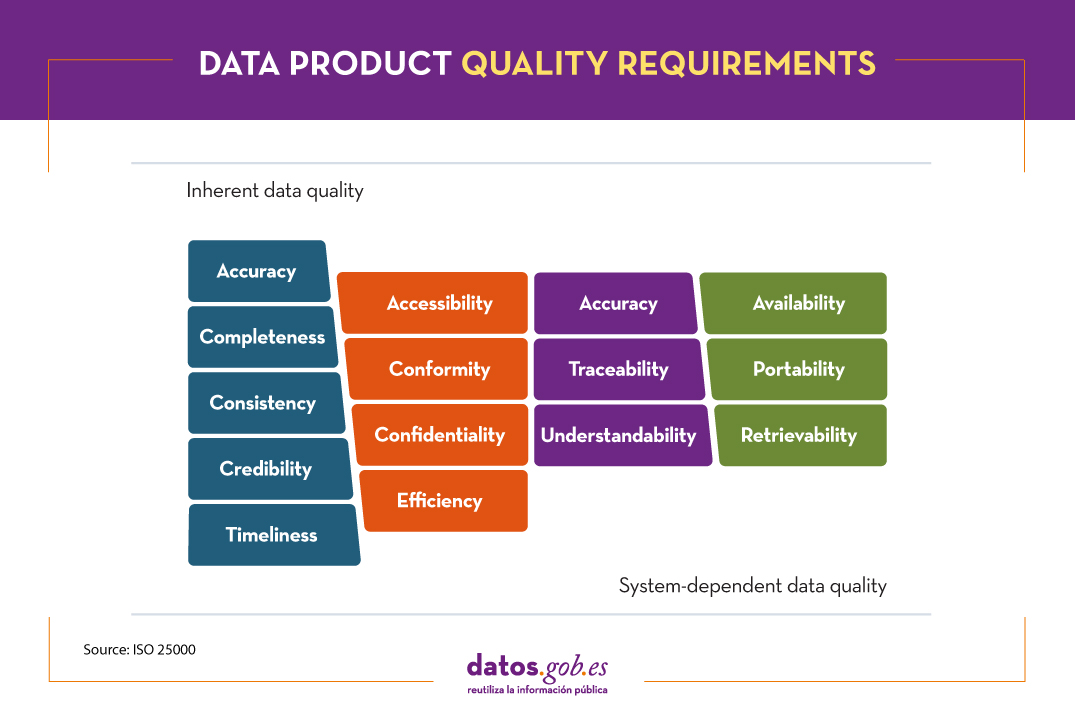

In this case, the quality of the data product is understood as the degree to which it satisfies the requirements previously defined in the data quality model through the following 15 characteristics.

These quality characteristics or dimensions are mainly classified into two categories.

Inherent data quality relates to the intrinsic potential of data to meet defined needs when used under specified conditions. It covers:

- Accuracy: degree to which the data represents the true value of the desired attribute in a specific context, such as the closeness of the data to a set of values defined in a certain domain.

- Completeness: degree to which the associated data has values for all defined attributes.

- Consistency: degree of coherence with other existing data, free of contradictions.

- Credibility: degree to which the data has attributes that are considered true and credible in its context, including the veracity of the data sources.

- Up-to-dateness: degree of validity of the data for its context of use.

On the other hand, system-dependent data quality is related to the degree to which quality is achieved and preserved through a computer system under specific conditions. It covers:

- Availability: degree to which the data has attributes that allow it to be obtained by authorized users.

- Portability: ability of data to be installed, replaced or moved from one system to another, preserving the level of quality.

- Recoverability: degree to which data has attributes that allow quality to be maintained and preserved even in the event of failures.

Additionally, there are characteristics or dimensions that fall within both "inherent" and "system-dependent" data quality. These are:

- Accessibility: possibility of access to data in a specific context by certain roles.

- Conformity: degree to which the data contains attributes based on established standards, regulations or references.

- Confidentiality: degree of data security based on its nature, so that it can only be accessed by the configured roles.

- Efficiency: possibilities offered by the data to be processed with expected performance levels in specific situations.

- Precision: degree of exactness of the data in a specific context of use.

- Traceability: ability to audit the entire life cycle of the data.

- Comprehensibility: ability of the data to be interpreted by any user, including the use of certain symbols and languages for a specific context.
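The grouping of the 15 characteristics can be written down as a small lookup table, which is handy when tagging quality rules by dimension. The Python sketch below is only an illustration: the `Category` enum and the `by_category` helper are hypothetical names, and the keys are lower-cased versions of the labels in the lists above, with "precision" used for the exactness characteristic, as ISO 25012 names it.

```python
from enum import Enum


class Category(Enum):
    INHERENT = "inherent"
    SYSTEM_DEPENDENT = "system-dependent"
    BOTH = "inherent and system-dependent"


# The 15 characteristics of the data quality model, grouped as in the text.
CHARACTERISTICS = {
    "accuracy": Category.INHERENT,
    "completeness": Category.INHERENT,
    "consistency": Category.INHERENT,
    "credibility": Category.INHERENT,
    "up-to-dateness": Category.INHERENT,
    "availability": Category.SYSTEM_DEPENDENT,
    "portability": Category.SYSTEM_DEPENDENT,
    "recoverability": Category.SYSTEM_DEPENDENT,
    "accessibility": Category.BOTH,
    "conformity": Category.BOTH,
    "confidentiality": Category.BOTH,
    "efficiency": Category.BOTH,
    "precision": Category.BOTH,
    "traceability": Category.BOTH,
    "comprehensibility": Category.BOTH,
}


def by_category(category: Category) -> list[str]:
    """Return the characteristic names in one category, alphabetically."""
    return sorted(name for name, cat in CHARACTERISTICS.items() if cat is category)
```

For example, `by_category(Category.SYSTEM_DEPENDENT)` lists the three characteristics that depend on the system in which the data is held.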

In addition to ISO standards, there are other reference frameworks that establish common guidelines for quality measurement. DAMA International, for example, after analyzing the similarities of all the models, establishes 8 basic quality dimensions common to any standard: accuracy, completeness, consistency, integrity, reasonableness, timeliness, uniqueness and validity.

The need for continuous improvement

Homogenizing data quality according to reference standards such as those described lays the foundations for continuous improvement of the information. From the application of these standards, and taking into account the dimensions detailed above, it is possible to define quality indicators. Once implemented and run, they yield results that must be reviewed by the different data owners, who establish tolerance thresholds and thereby identify quality incidents in every indicator that fails to meet its defined threshold.

To do this, different parameters will be taken into account, such as the nature of the data or its impact on the business, since a descriptive field cannot be treated in the same way as a primary key, for example.
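As a minimal sketch of this idea, the following Python example defines two quality indicators with different tolerance thresholds: a strict one for a primary key and a more lenient one for a descriptive field. The indicator names, the threshold values and the sample data are assumptions made for illustration; they are not taken from any standard. Here an incident is flagged when a score falls below its threshold.

```python
from dataclasses import dataclass


@dataclass
class Indicator:
    name: str         # hypothetical indicator name
    dimension: str    # quality dimension the indicator measures
    threshold: float  # minimum acceptable score, between 0 and 1


def completeness(values):
    """Share of values that are neither None nor an empty string."""
    if not values:
        return 0.0
    filled = sum(1 for v in values if v not in (None, ""))
    return filled / len(values)


def uniqueness(values):
    """Share of distinct values among the non-null ones."""
    present = [v for v in values if v is not None]
    if not present:
        return 0.0
    return len(set(present)) / len(present)


def find_incidents(results):
    """Return the names of indicators whose score is below the threshold."""
    return [ind.name for ind, score in results if score < ind.threshold]


# Illustrative data: a primary key with a duplicate and a descriptive
# field with one empty value.
ids = [1, 2, 3, 3]
descriptions = ["red", "", "blue", "green"]

results = [
    # A primary key tolerates no duplicates, so its threshold is 1.0.
    (Indicator("id_uniqueness", "uniqueness", 1.0), uniqueness(ids)),
    # A descriptive field is given a more lenient (assumed) threshold.
    (Indicator("description_completeness", "completeness", 0.7),
     completeness(descriptions)),
]

incidents = find_incidents(results)
print(incidents)
```

Both indicators score 0.75 on this sample, but only the primary key indicator is reported as an incident, because its threshold is stricter.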

From there, it is common to launch an incident resolution circuit that identifies the root cause of a data quality deficiency so that it can be eliminated, guaranteeing continuous improvement.

This brings numerous benefits, such as reduced risk, savings in time and resources, more agile decision-making, adaptation to new requirements and reputational improvement.

It should be noted that the technical standards addressed in this post allow quality to be homogenized. For data quality measurement tasks per se, we should turn to other standards such as ISO/IEC 25024:2015.

Content prepared by Juan Mañes, expert in Data Governance.

The contents and views expressed in this publication are the sole responsibility of the author.

Data is a key pillar of digital transformation. Reliable, quality data underpins everything from major strategic decisions to routine operational processes. It is fundamental to the development of data spaces and is the basis of disruptive solutions in fields such as artificial intelligence or Big Data.

In this sense, the correct management and governance of data has become a strategic activity for all types of organizations, public and private.

Data governance standardization is based on 4 principles:

- Governance

- Management

- Quality

- Security and data privacy

Those organizations that want to implement a solid governance framework based on these pillars have at their disposal a series of technical standards that provide guiding principles to ensure that an organization's data is properly managed and governed, both internally and by external contracts.

To help clarify doubts on this matter, the Spanish Association for Standardization (UNE) has published various support materials.

The first is an article on the different technical standards to consider when developing effective data governance. The standards covered in that article, together with some additional ones, are summarized in the following infographic:

(You can download the accessible version in word here)

In addition, UNE has published the report "Standards for the data economy", which can be downloaded at the end of this article. The report begins with an introduction that examines the European legislative context promoting the data economy and the recognition this legislation gives to technical standardization as a key tool for achieving its objectives. It then analyzes in more detail the technical standards included in the previous infographic.

Data has occupied a fundamental place in our society in recent years. New technologies have enabled a data-driven globalization in which everything that happens in the world is interconnected. Using simple techniques, it is possible to extract value from data that was unimaginable just a few years ago. However, making proper use of data requires good documentation, in the form of a data dictionary.

What is a data dictionary?

The term data dictionary is often confused with business glossary or data vocabulary. However, they are different concepts.

A business glossary, or data vocabulary, gives functional meaning to the indicators and concepts an organization handles so that everyone speaks the same language, abstracting from the technical world, as explained in this article. A data dictionary, by contrast, documents the metadata most closely linked to how the data is stored in the database. In other words, it covers technical aspects such as data type, format, length, the possible values a field can take and even the transformations it has undergone, along with the definition of each field. Documenting these transformations in turn provides the lineage of the data, understood as its traceability throughout its life cycle. This metadata helps users understand the data from a technical point of view so that they can use it properly. For this reason, each database should have its associated data dictionary.
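To make these elements concrete, the following Python sketch models a data dictionary entry with the attributes just mentioned (definition, data type, length, possible values and transformations) and uses it to check a record. The class, the field names and the code list are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class FieldEntry:
    name: str                              # technical field name
    definition: str                        # meaning of the field
    data_type: str                         # documented storage type
    max_length: Optional[int] = None       # maximum length, if any
    allowed_values: Optional[list] = None  # code list, if any
    transformations: list = field(default_factory=list)  # lineage notes


def validate(record, dictionary):
    """Return a list of problems found when checking one record."""
    problems = []
    for entry in dictionary:
        value = record.get(entry.name)
        if value is None:
            problems.append(f"{entry.name}: missing value")
            continue
        if entry.max_length is not None and len(str(value)) > entry.max_length:
            problems.append(
                f"{entry.name}: longer than {entry.max_length} characters")
        if entry.allowed_values is not None and value not in entry.allowed_values:
            problems.append(f"{entry.name}: value {value!r} not in code list")
    return problems


# Hypothetical dictionary for a two-column table.
dictionary = [
    FieldEntry("country_code", "Two-letter country code", "string",
               max_length=2, allowed_values=["ES", "FR", "PT"]),
    FieldEntry("population", "Resident population", "integer",
               transformations=["rounded to the nearest thousand"]),
]

problems = validate({"country_code": "ESP", "population": 47000000}, dictionary)
for problem in problems:
    print(problem)
```

Keeping the transformations alongside each field is one simple way of recording lineage; a real dictionary would usually hold more detail than this sketch.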

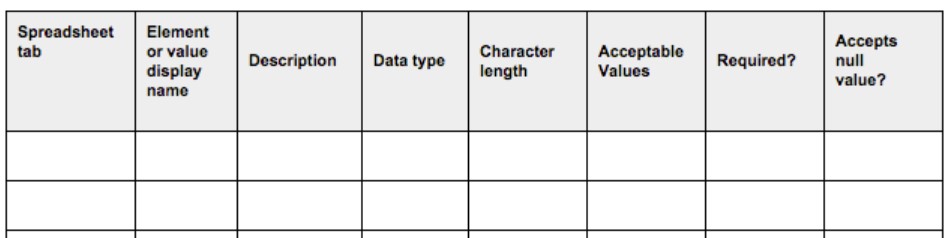

To help fill in the metadata that a data dictionary requires, there are pre-designed guides and templates, such as the following example provided by the U.S. Department of Agriculture.

In addition, in order to standardize its content, taxonomies and controlled vocabularies are often used to encode values according to code lists.

Finally, a data catalog acts as a directory to locate information and make it available to users, providing all users with a single point of reference for accessing it. This is made possible by bridging functional and technical terms through the lineage.

Open data applicability

When we talk about open data, data dictionaries become even more important, because the data is made available to third parties and is reused far more widely.

Each dataset should be published together with its data dictionary, describing the content of each column. Therefore, when publishing an open dataset, a URL to the document containing its data dictionary should also be published, regardless of its format. In cases where more than one data dictionary is required, due to the variety of the originating sources, as many as necessary should be added, generally one per database or table.

Unfortunately, however, it is easy to find datasets extracted directly from information systems without adequate preparation and without an associated data dictionary provided by the publishers. This may be due to several factors, such as a lack of knowledge of this type of tool that facilitates documentation, not knowing for sure how to create a dictionary, or simply assuming that the user knows the contents of the fields.

However, when data is published without proper documentation, users may be faced with unreadable acronyms or technical names, which makes the data impossible to process or leads to inappropriate use through ambiguity and misinterpretation of the contents.

To facilitate the creation of this type of documentation, there are standards and technical recommendations from some organizations. For example, the World Wide Web Consortium (W3C), the body that develops standards to ensure the long-term growth of the World Wide Web, has issued a model recommending how to publish tabular data such as CSV and metadata on the Web.
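As an illustration, the sketch below builds a metadata document using property names from the W3C "CSV on the Web" vocabulary (`@context`, `url`, `tableSchema`, `columns`, `name`, `titles`, `datatype`, `dc:description`). The file name, the columns and the helper function `build_csvw_metadata` are hypothetical; consult the W3C recommendation for the full model.

```python
import json


def build_csvw_metadata(csv_url, columns):
    """Build a metadata document describing the columns of a CSV file."""
    return {
        "@context": "http://www.w3.org/ns/csvw",
        "url": csv_url,
        "tableSchema": {
            "columns": [
                {
                    "name": col["name"],                    # name used in the file
                    "titles": col["title"],                 # readable column title
                    "datatype": col["datatype"],            # e.g. string, integer
                    "dc:description": col["description"],   # definition of the field
                }
                for col in columns
            ]
        },
    }


# Hypothetical dataset and columns, for illustration only.
metadata = build_csvw_metadata(
    "vehicles.csv",
    [
        {"name": "plate", "title": "Licence plate", "datatype": "string",
         "description": "Registration plate of the vehicle."},
        {"name": "year", "title": "Year of registration", "datatype": "integer",
         "description": "Calendar year in which the vehicle was registered."},
    ],
)

print(json.dumps(metadata, indent=2))
```

By convention, such a document is published next to the CSV file, for example as `vehicles.csv-metadata.json`, so that consumers can locate the description of each column.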

Interpreting published data

An example of good data publication can be found in this dataset published by the National Statistics Institute (INE) and available at datos.gob.es, which indicates "the number of persons between 18 and 64 years of age according to the most frequent mother tongue and non-mother tongue languages they may use, by parental characteristics". To support its interpretation, the INE provides, at a URL, all the details needed to understand it, such as the units of measurement, sources, period of validity, scope and the methodology followed to prepare these surveys. In addition, it provides self-explanatory functional names for each column so that any user outside the INE can understand their meaning. All of this lets users know with certainty what information they are downloading, avoiding misunderstandings. This information is shared in the "related resources" section, designed for this purpose, which corresponds to the dct:references metadata property.

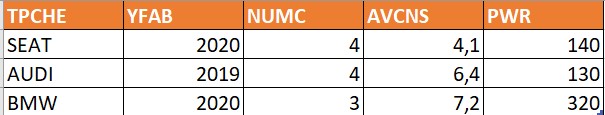

Although this example may seem logical, it is not uncommon to find the opposite case. For illustrative purposes, a fictitious example dataset is shown below:

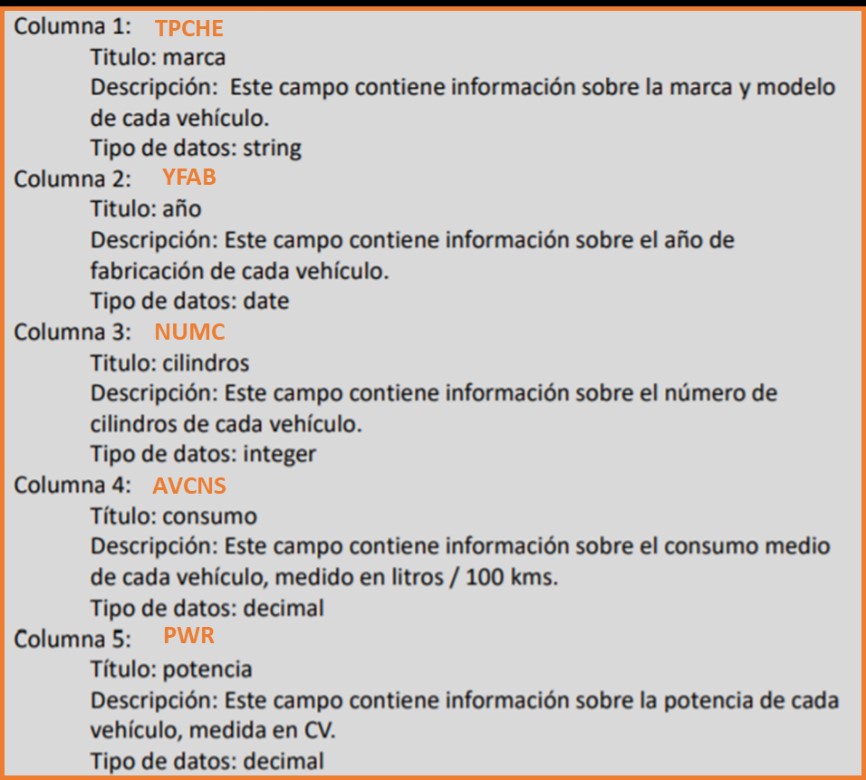

In this case, a user who does not know the database will not know how to correctly interpret the meaning of the fields "TPCHE", "YFAB", "NUMC" ... However, if this table is associated with a data dictionary, we can relate the metadata to the set, as shown in the following image:

In this case, we have chosen to publish the data dictionary by means of a text document describing the fields, although there are many ways of publishing the dictionaries. It can be done following recommendations and standards, such as the one mentioned above by the W3C, by means of text files, as in this case, or even by means of Excel templates customized by the publisher itself. As a general rule, there is no one way that is better than another, but it must be adapted to the nature and complexity of the dataset in order to ensure its comprehension, planning the level of detail required depending on the final objective, the audience receiving the data and the needs of consumers, as explained in this post.
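Once a dictionary exists, it can also be applied programmatically. The following Python sketch relabels the cryptic field names of the fictitious dataset with readable ones. The meanings assigned to "TPCHE", "YFAB" and "NUMC" here are invented for this sketch and are not the ones given in the original dictionary; the function `relabel` is likewise hypothetical.

```python
# Hypothetical data dictionary: the labels and definitions below are
# assumptions made for illustration, not the original field meanings.
DATA_DICTIONARY = {
    "TPCHE": {"label": "vehicle_type",
              "definition": "Category of the vehicle."},
    "YFAB": {"label": "year_of_manufacture",
             "definition": "Year in which the vehicle was built."},
    "NUMC": {"label": "number_of_cylinders",
             "definition": "Number of engine cylinders."},
}


def relabel(rows, dictionary):
    """Replace technical field names with readable labels.

    Fields that are not documented in the dictionary keep their name,
    which makes undocumented columns easy to spot.
    """
    return [
        {dictionary.get(key, {}).get("label", key): value
         for key, value in row.items()}
        for row in rows
    ]


rows = [{"TPCHE": "A", "YFAB": 2015, "NUMC": 4, "OTHER": "x"}]
readable = relabel(rows, DATA_DICTIONARY)
print(readable)
```

The undocumented field "OTHER" is left untouched, which signals to the publisher that the dictionary is incomplete.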

Open data was born with the aim of facilitating the reuse of information for everyone. For such access to be truly useful, however, publication cannot be limited to raw datasets: the data must be clearly documented so that it can be processed properly. Developing data dictionaries that include the technical details of the published datasets is essential for their correct reuse and for extracting value from them.

Content prepared by Juan Mañes, expert in Data Governance.

The contents and views expressed in this publication are the sole responsibility of the author.

In the current environment, organisations are trying to improve the exploitation of their data through the use of new technologies, providing the business with additional value and turning data into their main strategic asset.

However, we can only extract the real value of data if it is reliable. This is where the Data Governance function comes in, focused on the efficient management of information assets. Open data cannot be alien to these practices, given its characteristics, mainly availability and access.

To answer the question of how we should govern data, there are several international methodologies, such as DCAM, MAMD, DGPO or DAMA. In this post, we will base ourselves on the guidelines offered by the latter.

What is DAMA?

DAMA, short for Data Management Association, is an international association for data management professionals. It has had a chapter in Spain, DAMA Spain, since March 2019.

Its main mission is to promote and facilitate the development of the data management culture, becoming the reference for organisations and professionals in information management, providing resources, training and knowledge on the subject.

The association is made up of data management professionals from different sectors.

Data governance according to DAMA's reference framework

“A piece of data placed in a context gives rise to information. Add intelligence and you get knowledge that, combined with a good strategy, generates power”

Although it is just a phrase, it neatly sums up the strategy: the pursuit of power from data. To achieve this, it is necessary to exercise authority, control and shared decision-making (planning, monitoring and implementation) over the management of data assets or, in other words, to apply Data Governance.

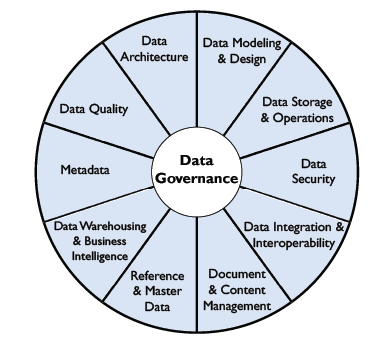

DAMA presents what it considers to be the best practices for guaranteeing control over information, regardless of the business sector. To this end, it positions Data Governance as the main activity around which all other activities are managed, such as architecture, interoperability, quality or metadata, as shown in the following figure:

Implementing Data Governance for open data

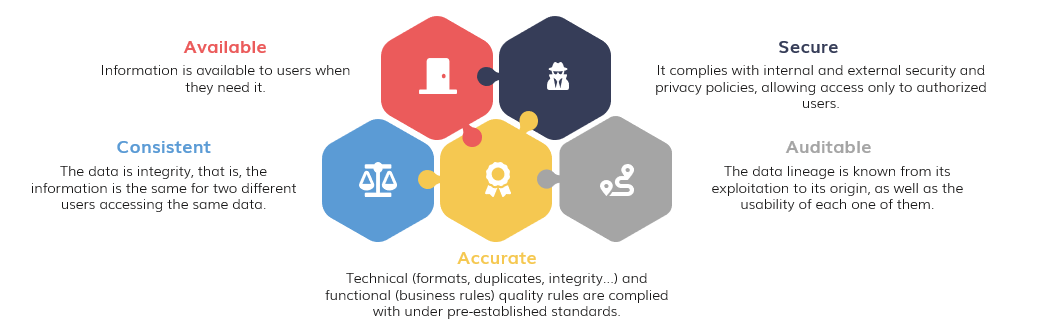

Based on the wheel outlined in the previous section, data governance, control, quality, management and knowledge are the key to success and, to this end, the following principles must be complied with:

To achieve data compliance with these principles, it will be necessary to establish a data governance strategy, through the implementation of a Data Office capable of defining the policies and procedures that dictate the guidelines for data management. These should include the definition of roles and responsibilities, the relationship model for all of them and how they will be enforced, as well as other data-related initiatives.

In addition to data governance, some of the recommended features of open data management include the following:

- An architecture capable of ensuring the availability of information on the portal. In this sense, CKAN has become one of the reference architectures for open data. CKAN is a free and open source platform, developed by the Open Knowledge Foundation, which serves to publish and catalogue data collections. This link provides a guide to learn more about how to publish open data with CKAN.

- The interoperability of data catalogues. Any user can make use of the information through direct download of the data they consider. This highlights the need for easy integration of information, regardless of which open data portal it was obtained from.

- Recognised standards should be used to promote the interoperability of data and metadata catalogues across Europe, such as the Data Catalogue Vocabulary (DCAT) defined by the W3C and its application profile DCAT-AP. In Spain, we have the Technical Interoperability Standard (NTI), based on this vocabulary. You can read more about it in this report.

- Metadata, understood as data about data, is one of the fundamental pillars for categorising and labelling information, which later translates into agile and simple navigation of the portal for any user. Some of the metadata to be included are the title, the format or the frequency of updating, as shown in the aforementioned NTI.

- As this information is offered by public administrations for reuse, it is not necessary to comply with strict privacy measures for its exploitation, as it has been previously anonymised. However, there must be activities to ensure the security of the data. For example, improper or fraudulent use can be prevented by monitoring access and tracking user activity.

- Furthermore, the information available on the portal will meet the technical and functional quality criteria required by users, guaranteed by the application of quality indicators.

- Finally, although it is not one of the characteristics of the reference framework as such, DAMA treats data ethics as a concern that cuts across all of them, understood as social responsibility with respect to data processing. There is certain sensitive information whose improper use could have an impact on individuals.
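The metadata mentioned in the list above (title, format and update frequency) can be sketched as a catalogue record that uses DCAT and Dublin Core property names. The example below is a minimal illustration in Python: the helper functions, the example URLs and the set of required properties are assumptions, and it does not claim to reproduce the exact requirements of DCAT-AP or the NTI.

```python
import json

# Properties this sketch treats as mandatory (an assumption, not the NTI list).
REQUIRED = ("dct:title", "dct:accrualPeriodicity", "dcat:distribution")


def describe_dataset(title, frequency_uri, download_url, file_format,
                     dictionary_url):
    """Build a JSON-LD style record describing one dataset."""
    return {
        "@context": {
            "dcat": "http://www.w3.org/ns/dcat#",
            "dct": "http://purl.org/dc/terms/",
        },
        "@type": "dcat:Dataset",
        "dct:title": title,
        "dct:accrualPeriodicity": {"@id": frequency_uri},  # update frequency
        "dct:references": {"@id": dictionary_url},         # e.g. data dictionary
        "dcat:distribution": [
            {
                "@type": "dcat:Distribution",
                "dcat:accessURL": {"@id": download_url},
                "dct:format": file_format,
            }
        ],
    }


def missing_metadata(record):
    """Return the required properties that are absent or empty."""
    return [key for key in REQUIRED if not record.get(key)]


# Hypothetical dataset; the example.org URLs are placeholders.
record = describe_dataset(
    title="Registered vehicles",
    frequency_uri="http://publications.europa.eu/resource/authority/frequency/MONTHLY",
    download_url="https://example.org/vehicles.csv",
    file_format="text/csv",
    dictionary_url="https://example.org/vehicles-dictionary.txt",
)

print(json.dumps(record, indent=2))
print(missing_metadata(record))
```

A check such as `missing_metadata` could run before publication so that datasets lacking basic descriptive metadata never reach the portal.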

The evolution of Data Governance

Following the 2008 financial crisis, the focus was placed on information management in financial institutions: what information is held, how it is exploited... For this reason, it is currently one of the most regulated sectors, which also makes it one of the most advanced with regard to the applicability of these practices.

However, the rise of new data processing technologies began to change how these management activities were perceived. They were no longer seen as mere control of information: treating data as a strategic asset led to major advances in the business.

Thanks to this new concept, private organisations of all kinds have taken an interest in this area and, even in some public bodies, it is not unusual to see how data governance is beginning to be professionalised through initiatives focused on offering citizens a more personalised and efficient service based on data. For example, the city of Edmonton uses this methodology and has been recognised for it.

In this webinar you can learn more about data management in the DAMA framework. You can also watch the video of their annual event where different use cases are explained or follow their blog.

The road to data culture

We are immersed in a globalised digital world that is constantly evolving, and data is no stranger to this. New data initiatives are constantly emerging, and efficient data governance capable of responding to these changes is necessary.

Therefore, the path towards a data culture is one that all organisations and public bodies must take in the short term. The use of a data governance methodology, such as DAMA's, will undoubtedly be a great support along the way.

Content prepared by David Puig, Graduate in Information and Documentation and head of the Master and Reference Data working group at DAMA SPAIN, and Juan Mañes, expert in Data Governance.

The contents and points of view reflected in this publication are the sole responsibility of its authors.