
AI tools for research and a new way to use language models

AI systems designed to assist us from our first dive into a topic to the final bibliography.

One of the missions of contemporary artificial intelligence is to help us find, sort and digest information, especially with the help of large language models. These systems have come at a time when we most need to mana…

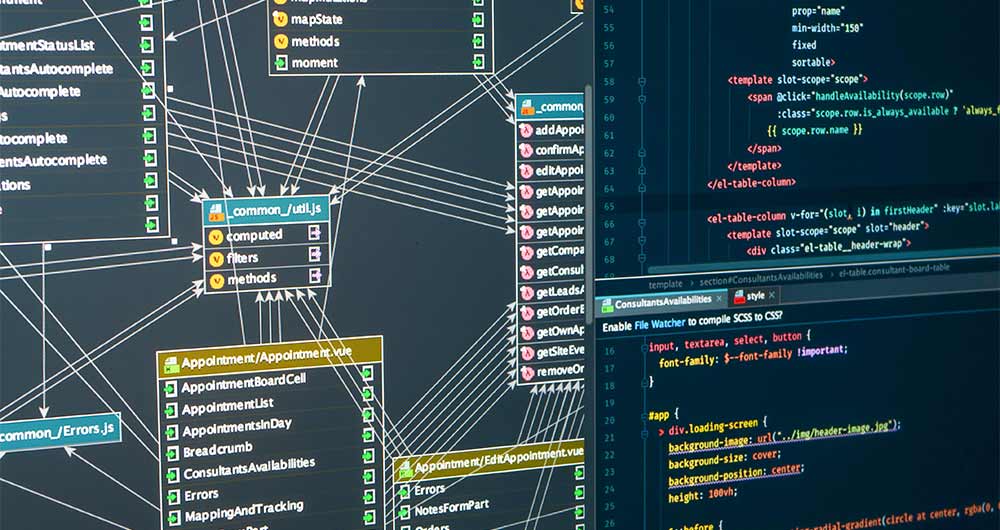

Open source auto machine learning tools

The increasing complexity of machine learning models and the need to optimise their performance has been driving the development of AutoML (Automated Machine Learning) for years. This discipline seeks to automate key tasks in the model development lifecycle, such as algorithm selection, data process…

Re3gistry: facilitating the semantic interoperability of data

The INSPIRE (Infrastructure for Spatial Information in Europe) Directive sets out the general rules for the establishment of an Infrastructure for Spatial Information in the European Community based on the Infrastructures of the Member States. Adopted by the European Parliament a…

Vinalod: The tool to make open datasets more accessible

Public administrations are working to ensure access to open data in order to empower citizens in their right to information. In line with this objective, the European open data portal (data.europa.eu) references a large volume of data on a variety of topics.

However, although the data belong to di…

Artificial Intelligence applied to the identification and classification of diseases detected by radiodiagnosis

In this post we describe, step by step, a data science exercise in which we train a deep learning model to automatically classify medical images of healthy and sick people.

Diagnostic imaging has been around for many years in the hospitals of develo…

Free tools to work on data quality issues

Ensuring data quality is an essential task for any open data initiative. Before publication, datasets need to be validated to check that they are free of errors, duplication, etc. In this way, their potential for re-use will grow.

Data quality is conditioned by many aspects. In this sense, the Aport…

Hackathons, a new way of attracting talent

Technology is now an essential component of our daily lives. It is no secret that many companies worldwide have been investing heavily to digitize their processes, products or services and so make them more innovative.

All this has led to an increase in th…

What are the advantages of participating in a hackathon or data-related competition like the Aporta Challenge?

Hackathons, contests or challenges related to data are a different way to test your ideas and/or knowledge, while acquiring new skills. Through this type of competition, solutions to real problems are sought, often in multidisciplinary teams that share diverse knowledge and points of view. In additi…

Low coding tools for data analysis

The democratisation of technology in all areas is an unstoppable trend. With the spread of smartphones and Internet access, an increasing number of people can access high-tech products and services without having to resort to advanced knowledge or specialists. The world of data is no stranger to thi…

API Friendliness Checker. A much needed tool in the age of data products.

Many people don't know it, but we are surrounded by APIs. APIs are the mechanism by which services communicate on the Internet. They are what make it possible for us to log into our email or make a purchase online.

API stands for Application Programming Interface, which for most Internet users means no…