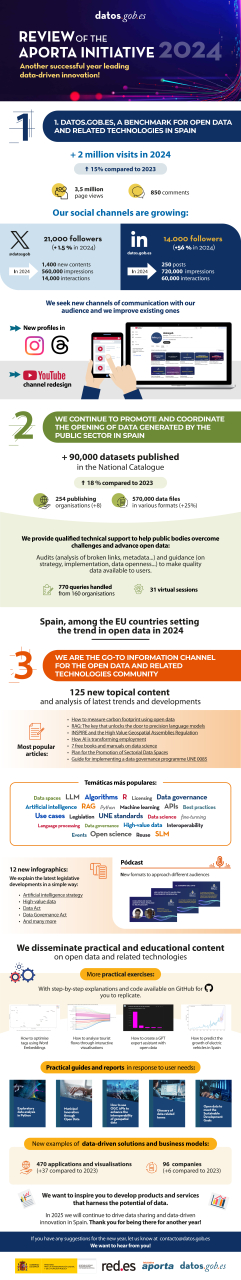

As the year comes to an end, it is the perfect time to pause and reflect on all that we have experienced and shared at the Aporta Initiative. This year has been full of challenges, learning and achievements that deserve to be celebrated.

One of the milestones we want to share is that we have reached almost two million visits on the platform, 15% more than in 2023. The interest in data and related technologies has also been evident on social networks: we have exceeded 14,000 followers on LinkedIn (+56%) and 21,000 on X, formerly Twitter (+1.5%). In addition, we wanted to reach out to new audiences with the launch of our Instagram and Threads profiles, and the redesign of the YouTube channel.

One of our objectives is to promote the openness of data generated by the public sector so that it can be reused by businesses and citizens. The Aporta Initiative provides qualified technical support to help public bodies overcome their challenges and make quality data available to users, through audits, training sessions and advice. This work has borne fruit with over 90,000 datasets published in the National Catalogue, 18% more than in 2023. These datasets are federated with the European Open Data Portal, data.europa.eu.

But it is not only about publishing data; it is also about promoting its use. To spread knowledge about open data and stimulate a market linked to the reuse of public sector information, the Aporta Initiative has published more than 120 articles, 1,400 tweets and 250 LinkedIn posts with news, events and analysis of the sector. In doing so, we have tried to cover the latest trends in data-related topics such as artificial intelligence, data spaces and open science. In addition:

- Spain is among the EU countries setting open data trends in 2024.

- We have launched a new content format: the datos.gob.es podcasts. The aim is to give you the opportunity to learn more about different topics through audio programmes that you can listen to anytime, anywhere.

- We have strengthened the infographics section, with new content summarising complex data-related issues, such as legislation or strategic documents. Each infographic presents detailed information in a visually appealing way, making it easy to grasp important concepts and allowing you to quickly access key points.

- We have created new data science exercises, designed to guide you step-by-step through key concepts and various analysis techniques so you can learn effectively and practically. In addition, each exercise includes the full code available on GitHub, allowing you to replicate and experiment on your own.

- We have published new guides and reports focusing on how to harness the potential of open data to drive innovation and transparency. Each document includes clear explanations and practical examples to keep you up to date with best practices and tools, ensuring that you are always at the forefront in the use of emerging data-related technologies.

- We have expanded the list of examples of applications and companies that reuse open data. We have already reached 470 applications (37 more than in 2023) and 96 companies (6 more than in 2023).

Thank you for a good year! In 2025 we will continue to work to drive the data culture in public bodies, businesses and citizens.

You can see more about our activity in the following infographic:

Link to the infographic

The ability to collect, analyse and share data plays a crucial role in the context of the global challenges we face as a society today. These range from pollution and climate change, through poverty and pandemics, to sustainable mobility and lack of access to basic services. Global problems require solutions that can be adapted on a large scale. This is where open data can play a key role, as it allows governments, organisations and citizens to work together in a transparent way, and makes it easier to arrive at effective, innovative, adaptable and sustainable solutions.

The World Bank as a pioneer in the comprehensive use of open data

One of the most relevant examples of good practice in harnessing open data to tackle major global challenges is undoubtedly the World Bank, which for more than a decade has been a benchmark in the use of open data as a fundamental tool for sustainable development.

Since the launch of its open data portal in 2010, the institution has undergone a complete transformation in terms of data access and use. This portal, totally innovative at the time, quickly became a reference model by offering free and open access to a wide range of data and indicators covering more than 250 economies. Moreover, the platform is constantly updated and now bears little resemblance to its initial version: it keeps adding new datasets and complementary, specialised tools so that data remain accessible and useful for decision-making. Examples of such tools include:

- The Poverty and Inequality Platform (PIP): designed to monitor and analyse global poverty and inequality. With data from more than 140 countries, this platform allows users to access up-to-date statistics and better understand the dynamics of collective well-being. It also facilitates data visualisation through interactive graphs and maps, helping users to gain a clear and quick understanding of the situation in different regions and over time.

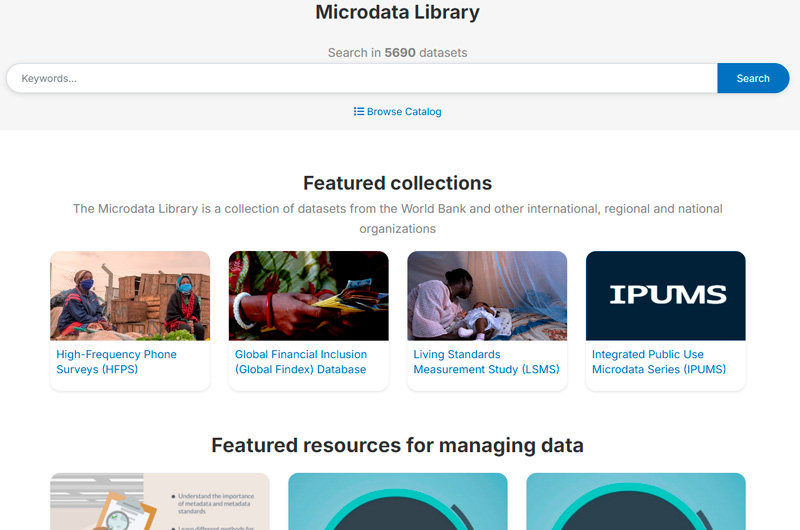

- The Microdata Library: provides access to household and enterprise level survey and census data in several countries. The library has more than 3,000 datasets from studies and surveys conducted by the Bank itself, as well as by other international organisations and national statistical agencies. The data is freely available and fully accessible for downloading and analysis.

- The World Development Indicators (WDI): an essential tool for tracking progress on the global development agenda. This database contains a vast collection of economic, social and environmental development indicators, covering more than 200 countries and territories. It includes data on areas such as poverty, education, health, environmental sustainability, infrastructure and trade. The WDI provide a reliable frame of reference for analysing global and regional development trends.

Figure 1. Screenshots of the web portals Poverty and Inequality Platform (PIP), Microdata Library and World Development Indicators (WDI).
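As an illustration of how indicators such as the WDI can be reused programmatically, the sketch below builds a request URL for the World Bank Indicators API (v2) and flattens a response into (year, value) pairs. The indicator code `SP.POP.TOTL` (total population), the country code and the helper names are choices made for this example, not part of the original article. The sample payload is hand-written to mirror the API's two-element JSON layout and contains made-up values.

```python
import json
from urllib.parse import urlencode

API_ROOT = "https://api.worldbank.org/v2"


def build_indicator_url(country: str, indicator: str, per_page: int = 100) -> str:
    """Return the URL for one indicator and one country, asking for JSON."""
    query = urlencode({"format": "json", "per_page": per_page})
    return f"{API_ROOT}/country/{country}/indicator/{indicator}?{query}"


def parse_indicator_response(payload: str) -> list[tuple[int, float]]:
    """Turn the [metadata, observations] layout into sorted (year, value) pairs.

    Observations whose value is null are skipped.
    """
    _metadata, observations = json.loads(payload)
    pairs = [
        (int(obs["date"]), float(obs["value"]))
        for obs in observations or []
        if obs["value"] is not None
    ]
    return sorted(pairs)


# Hand-written sample shaped like an API response; the numbers are illustrative only.
SAMPLE = json.dumps([
    {"page": 1, "pages": 1, "per_page": 100, "total": 3},
    [
        {"date": "2022", "value": 300.0},
        {"date": "2021", "value": None},
        {"date": "2020", "value": 100.0},
    ],
])

if __name__ == "__main__":
    print(build_indicator_url("ES", "SP.POP.TOTL"))
    print(parse_indicator_response(SAMPLE))
```

Passing the URL to `urllib.request.urlopen` and feeding the decoded body to `parse_indicator_response` would retrieve live data; that step is left out here so the example runs offline.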

Data as a transformative element for change

A major milestone in the World Bank's use of data was the publication of the World Development Report 2021, entitled "Data for Better Lives". This report has become a flagship publication that explores the transformative potential of data to address humanity's grand challenges, improve the results of development efforts and promote inclusive and equitable growth. Through the report, the institution advocates a new social agenda for data, including robust, ethical and responsible data governance that maximises the value of data in order to generate significant economic and social benefit.

The report examines how data can be integrated into public policy and development programmes to address global challenges in areas such as education, health, infrastructure and climate change. It also marked a turning point in reinforcing the World Bank's commitment to data as a driver of change in tackling major challenges: the institution has since adopted a new roadmap with a more innovative, transformative and action-oriented approach to data use. Since then, it has been moving from theory to practice through its own projects, where data becomes a fundamental tool throughout the strategic cycle, as in the following examples:

- Open Data and Disaster Risk Reduction: the report "Digital Public Goods for Disaster Risk Reduction in a Changing Climate" highlights how open access to geospatial and meteorological data facilitates more effective decision-making and strategic planning. Reference is also made to tools such as OpenStreetMap that allow communities to map vulnerable areas in real time. This democratisation of data strengthens emergency response and builds the resilience of communities at risk from floods, droughts and hurricanes.

- Open data in the face of agri-food challenges: the report "What's cooking?" shows how open data is revolutionising global agri-food systems, making them more inclusive, efficient and sustainable. In agriculture, access to open data on weather patterns, soil quality and market prices empowers smallholder farmers to make informed decisions. In addition, platforms that provide open geospatial data serve to promote precision agriculture, enabling the optimisation of key resources such as water and fertilisers, while reducing costs and minimising environmental impact.

- Optimising urban transport systems: in Tanzania, the World Bank has supported a project that uses open data to improve the public transport system. The rapid urbanisation of Dar es Salaam has led to considerable traffic congestion in several areas, affecting both urban mobility and air quality. This initiative addresses traffic congestion through a real-time information system that improves mobility and reduces environmental impact. This approach, based on open data, not only increases transport efficiency, but also contributes to a better quality of life for city dwellers.

Leading by example

Finally, within this same comprehensive vision, it is worth noting how this international organisation closes the circle of open data by using it as a tool for transparency and communication about its own activities. Its catalogue therefore includes data tools such as:

- Its project and operations portal: a tool that provides detailed access to the development projects that the institution funds and implements around the world. This portal acts as a window into all its global initiatives, providing information on objectives, funding, expected results and progress for the Bank's thousands of projects.

- The Finances One platform: centralises all the Bank's financial data of public interest, together with the data on the project portfolio of all the group's entities. It aims to simplify the presentation of financial information, making it easier for customers and partners to analyse and share.

The future impact of open data on major global challenges

As we have seen above, opening up data offers immense potential to advance the sustainable development agenda and thus address global challenges more effectively. The World Bank has been demonstrating how this practice can evolve and adapt to current challenges. Its leadership in this area has served as a model for other institutions, showing the positive impact that open data can have on sustainable development and in tackling the major challenges affecting the lives of millions of people around the world.

However, there is still a long way to go, as transparency and access to information policies need to be further improved so that data can benefit society as a whole in a more equitable way. Another key challenge is to strengthen the capacities needed to maximise the use and impact of this data, particularly in developing countries. This means going beyond facilitating access: it also requires working on data literacy and supporting the creation of the right tools to enable information to be used effectively.

The use of open data is enabling more and more actors to participate in the creation of innovative solutions and bring about real change. All this gives rise to a new and expanding area of work that, in the right hands and with the right support, can play a crucial role in creating a safer, fairer and more sustainable future for all. We hope that many organisations will follow the World Bank's example and also adopt a holistic approach to using data to address humanity's grand challenges.

Content prepared by Carlos Iglesias, Open data Researcher and consultant, World Wide Web Foundation. The contents and views reflected in this publication are the sole responsibility of the author.

Tourism is one of Spain's economic engines. In 2022, it accounted for 11.6% of Gross Domestic Product (GDP), exceeding €155 billion, according to the Instituto Nacional de Estadística (INE). This figure grew to €188 billion and 12.8% of GDP in 2023, according to Exceltur, an association of companies in the sector. In addition, Spain is a very popular destination for foreigners, ranking second in the world and growing: in 2024 it is expected to reach a record 95 million international visitors.

In this context, the Secretariat of State for Tourism (SETUR), in line with European policies, is developing actions aimed at creating new technological tools for the Network of Smart Tourist Destinations, through SEGITTUR (Sociedad Mercantil Estatal para la Gestión de la Innovación y las Tecnologías Turísticas), the body in charge of promoting innovation (R&D&I) in this industry. It does this by working with both the public and private sectors, promoting:

- Sustainable and more competitive management models.

- The management and creation of smart destinations.

- The export of Spanish technology to the rest of the world.

These are all activities where data - and the knowledge that can be extracted from it - play a major role. In this post, we will review some of the actions SEGITTUR is carrying out to promote data sharing and openness, as well as its reuse. The aim is to assist not only in decision-making, but also in the development of innovative products and services that will continue to position our country at the forefront of world tourism.

Dataestur, an open data portal

Dataestur is a web space that gathers open data on national tourism in a single environment. Users can find figures from a variety of public and private information sources.

The data are structured in six categories:

- General: international tourist arrivals, tourism expenditure, resident tourism survey, world tourism barometer, broadband coverage data, etc.

- Economy: tourism revenues, contribution to GDP, tourism employment (job seekers, unemployment and contracts), etc.

- Transport: air passengers, scheduled air capacity, passenger traffic by ports, rail and road, etc.

- Accommodation: hotel occupancy, accommodation prices and profitability indicators for the hotel sector, etc.

- Sustainability: air quality, nature protection, climate values, water quality in bathing areas, etc.

- Knowledge: active listening reports, visitor behaviour and perception, scientific tourism journals, etc.

The data is available for download via API.

Dataestur is part of a more ambitious project in which data analysis is the basis for improving tourist knowledge, through actions with a wide scope, such as those we will see below.

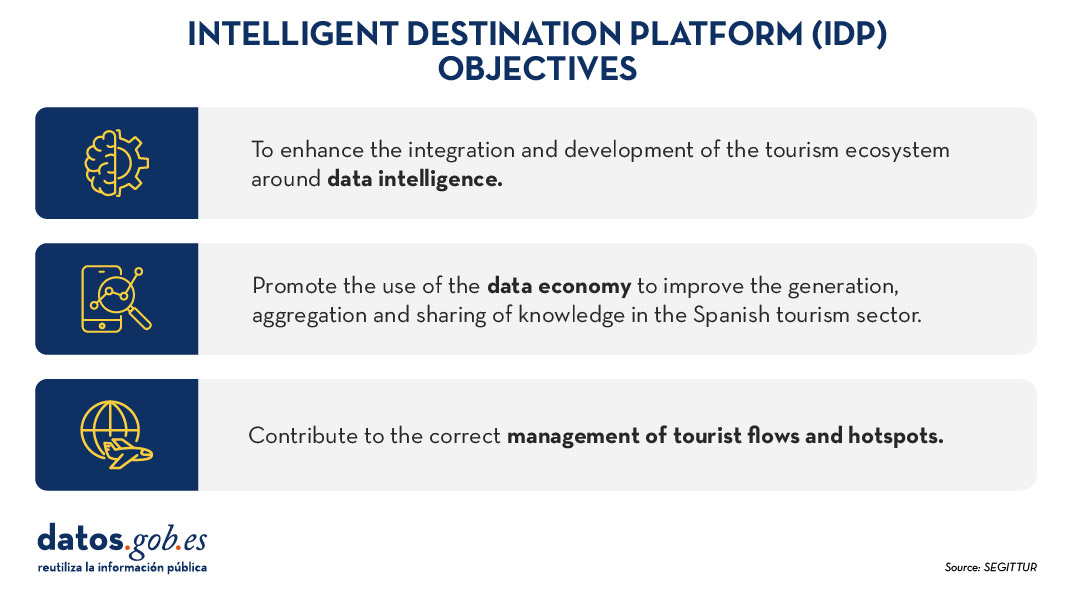

Developing an Intelligent Destination Platform (IDP)

As part of the milestones set by the Next Generation funds, and within the Digital Transformation Plan for Tourist Destinations, the Secretariat of State for Tourism, through SEGITTUR, is developing an Intelligent Destination Platform (IDP; PID in its Spanish acronym). It is a platform-node that brings together the supply of tourism services and facilitates interoperability between public and private operators. The platform will make it possible to offer services that integrate and link data from both public and private sources.

Some of the challenges of the Spanish tourism ecosystem to which the IDP responds are:

- Encourage the integration and development of the tourism ecosystem (academia, entrepreneurs, business, etc.) around data intelligence and ensure technological alignment, interoperability and common language.

- Promote the use of the data economy to improve the generation, aggregation and sharing of knowledge in the Spanish tourism sector, driving its digital transformation.

- Contribute to the correct management of tourist flows and tourist hotspots in the citizen space, improving the response to citizens' problems and offering real-time information for tourist management.

- Generate a notable impact on tourists, residents and companies, as well as other agents, enhancing the brand "sustainable tourism country" throughout the travel cycle (before, during and after).

- Establish a reference framework to agree on targets and metrics to drive sustainability and carbon footprint reduction in the tourism industry, promoting sustainable practices and the integration of clean technologies.

Figure 1. Objectives of the Intelligent Destination Platform (IDP).

New use cases and methodologies to implement them

To further harmonise data management, up to 25 use cases have been defined that enable different industry verticals to work in a coordinated manner. These verticals include areas such as wine tourism, thermal tourism, beach management, data provider hotels, impact indicators, cruises, sports tourism, etc.

To implement these use cases, a 5-step methodology is followed that seeks to align industry practices with a more structured approach to data:

- Identify the public problems to be solved.

- Identify what data are needed to solve them.

- Model these data to define a common nomenclature, definitions and relationships.

- Define what technology needs to be deployed to capture or generate such data.

- Analyse what intervention capacities, both public and private, are needed to solve the problem.

Boosting interoperability through a common ontology and data space

As a result of defining these 25 use cases, an ontology of tourism has been created, which SEGITTUR hopes will serve as a global reference. The ontology is intended to have a significant impact on the tourism sector, offering a series of benefits:

- Interoperability: The ontology is essential to establish a homogeneous data structure and enable global interoperability, facilitating information integration and data exchange between platforms and countries. By providing a common language, definitions and a unified conceptual structure, data can be comparable and usable anywhere in the world. Tourism destinations and the business community can communicate more effectively and agilely, fostering closer collaboration.

- Digital transformation: By fostering the development of advanced technologies, such as artificial intelligence, tourism companies, the innovation ecosystem or academia can analyse large volumes of data more efficiently. This is mainly due to the quality of the information available and the systems' better understanding of the context in which they operate.

- Tourism competitiveness: In line with the previous point, the implementation of this ontology helps to eliminate inequalities in the use and application of technology within the sector. By facilitating access to advanced digital tools, both public institutions and private companies can make more informed and strategic decisions. This not only raises the quality of the services offered, but also boosts the productivity and competitiveness of the Spanish tourism sector in an increasingly demanding global market.

- Tourist experience: Thanks to the ontology, it is possible to offer recommendations tailored to the individual preferences of each traveller. This is achieved through more accurate profiling based on demographic and behavioural characteristics as well as specific motivations related to different types of tourism. By personalising offers and services, customer satisfaction before, during and after the trip is improved, and greater loyalty to tourist destinations is fostered.

- Governance: The ontology model is designed to evolve and adapt as new use cases emerge in response to changing market demands. SEGITTUR is actively working to establish a governance model that promotes effective collaboration between public and private institutions, as well as with the technology sector.

In addition, to solve complex problems that require the sharing of data from different sources, the Open Innovation Platform (PIA) has been created, a data space that facilitates collaboration between the different actors in the tourism ecosystem, both public and private. This platform enables secure and efficient data sharing, empowering data-driven decision making. The PIA promotes a collaborative environment where open and private data is shared to create joint solutions to address specific industry challenges, such as sustainability, personalisation of the tourism experience or environmental impact management.

Building consensus

SEGITTUR is also carrying out various initiatives to achieve the necessary consensus in the collection, management and analysis of tourism-related data, through collaboration between public and private actors. To this end, the Ente Promotor de la Plataforma Inteligente de Destinos was created in 2021. It plays a fundamental role in bringing together different actors to coordinate efforts and agree on broad lines and guidelines in the field of tourism data.

In summary, Spain is making progress in the collection, management and analysis of tourism data through coordination between public and private actors, using advanced methodologies and tools such as the creation of ontologies, use cases and collaborative platforms such as PIA that ensure efficient and consensual management of the sector.

All this is not only modernising the Spanish tourism sector, but also laying the foundations for a smarter, more connected and more efficient future. With its focus on interoperability, digital transformation and personalisation of experiences, Spain is positioned as a leader in tourism innovation, ready to face the technological challenges of tomorrow.

Today's climate crisis and environmental challenges demand innovative and effective responses. In this context, the European Commission's Destination Earth (DestinE) initiative is a pioneering project that aims to develop a highly accurate digital model of our planet.

Through this digital twin of the Earth it will be possible to monitor and prevent potential natural disasters, adapt sustainability strategies and coordinate humanitarian efforts, among other functions. In this post, we analyse what the project consists of and its current state of development.

Features and components of Destination Earth

Aligned with the European Green Deal and the EU's Digital Strategy, Destination Earth integrates digital modelling and climate science to provide a useful tool for addressing environmental challenges. To this end, it focuses on accuracy, local detail and speed of access to information.

In general, the tool makes it possible to:

- Monitor and simulate Earth system developments, including land, sea, atmosphere and biosphere, as well as human interventions.

- Anticipate environmental disasters and socio-economic crises, thus helping to safeguard lives and prevent significant economic downturns.

- Generate and test scenarios that promote more sustainable development in the future.

To do this, DestinE is subdivided into three main components:

- Data lake:

  - What is it? A centralised repository to store data from a variety of sources, such as the European Space Agency (ESA), EUMETSAT and Copernicus, as well as from the new digital twins.

  - What does it provide? This infrastructure enables data discovery and access, as well as the processing of large volumes of information in the cloud.

- The DestinE Platform:

  - What is it? A digital ecosystem that integrates services, data-driven decision-making tools and an open, flexible and secure cloud computing infrastructure.

  - What does it provide? Users have access to thematic information, models, simulations, forecasts and visualisations that will facilitate a deeper understanding of the Earth system.

- Digital twins and engineering:

  - What are they? Several digital replicas covering different aspects of the Earth system. The first two are already developed, one on climate change adaptation and the other on extreme weather events.

  - What do they provide? These twins offer multi-decadal simulations (temperature variation) and high-resolution forecasts.

Discover the services and contribute to improve DestinE

The DestinE platform offers a collection of applications and use cases developed within the framework of the initiative, for example:

- Digital twin of tourism (Beta): makes it possible to review and anticipate the viability of tourism activities according to the environmental and meteorological conditions of a territory.

- VizLab: offers an intuitive graphical user interface and advanced 3D rendering technologies to provide a storytelling experience, making complex datasets accessible and understandable to a wide audience.

- miniDEA: an interactive and easy-to-use DEA-based web visualisation app for previewing DestinE data.

- GeoAI: a geospatial AI platform for Earth observation use cases.

- Global Fish Tracking System (GFTS): a project to help obtain accurate information on fish stocks in order to develop evidence-based conservation policies.

- More resilient urban planning: a solution that provides a heat stress index, allowing urban planners to understand best practices for adapting to extreme temperatures in urban environments.

- Danube Delta Water Reserve Monitoring: a comprehensive and accurate analysis based on the DestinE data lake to inform conservation efforts in the Danube Delta, one of the most biodiverse regions in Europe.

Since October this year, the DestinE platform has been accepting registrations, a possibility that allows you to explore the full potential of the tool and access exclusive resources. This option serves to record feedback and improve the project system.

To become a user and be able to generate services, you must follow these steps.

Project roadmap

The European Union sets out a series of time-bound milestones that will mark the development of the initiative:

- 2022 - Official launch of the project.

- 2023 - Start of development of the main components.

- 2024 - Development of all system components. Implementation of the DestinE platform and data lake. Demonstration.

- 2026 - Enhancement of the DestinE system, integration of additional digital twins and related services.

- 2030 - Full digital replica of the Earth.

Destination Earth not only represents a technological breakthrough, but is also a powerful tool for sustainability and resilience in the face of climate challenges. By providing accurate and accessible data, DestinE enables data-driven decision-making and the creation of effective adaptation and mitigation strategies.

As part of European Cybersecurity Awareness Month, the European data portal, data.europa.eu, organised a webinar focused on the protection of open data. This event comes at a critical time when organisations, especially in the public sector, face the challenge of balancing data transparency and accessibility with the need to protect against cyber threats.

The online seminar was attended by experts in the field of cybersecurity and data protection, both from the private and public sector.

The expert panel addressed the importance of open data for government transparency and innovation, as well as emerging risks related to data breaches, privacy issues and other cybersecurity threats. Data providers, particularly in the public sector, must manage this paradox of making data accessible while ensuring its protection against malicious use.

During the event, a number of malicious tactics used by some actors to compromise the security of open data were identified. These tactics can occur both before and after publication. Knowing about them is the first step in preventing and counteracting them.

Pre-publication threats

Before data is made publicly available, it may be subject to the following threats:

- Supply chain attacks: attackers can sneak malicious code into open data projects, for example through commonly used libraries (pandas, NumPy or visualisation modules), by exploiting the trust placed in these resources. This technique allows attackers to compromise larger systems and collect sensitive information gradually and in a way that is difficult to detect.

- Manipulation of information: data may be deliberately altered to present a false or misleading picture. This may include altering numerical values, distorting trends or creating false narratives. These actions undermine the credibility of open data sources and can have significant consequences, especially in contexts where data is used to make important decisions.

- Data poisoning: attackers can inject misleading or incorrect data into datasets, especially those used for training AI models. This can result in models that produce inaccurate or biased results, leading to operational failures or poor business decisions.

Post-publication threats

Once data has been published, it remains vulnerable to a variety of attacks:

- Compromising data integrity: attackers can modify published data, altering files, databases or even data in transit. These actions can lead to erroneous conclusions and decisions based on false information.

- Re-identification and breach of privacy: data sets, even if anonymised, can be combined with other sources of information to reveal the identity of individuals. This practice, known as 're-identification', allows attackers to reconstruct detailed profiles of individuals from seemingly anonymous data. This represents a serious violation of privacy and may expose individuals to risks such as fraud or discrimination.

- Sensitive data leakage: open data initiatives may accidentally expose sensitive information such as medical records, personally identifiable information (emails, names, locations) or employment data. This information can be sold on illicit markets such as the dark web, or used to commit identity fraud or discrimination.

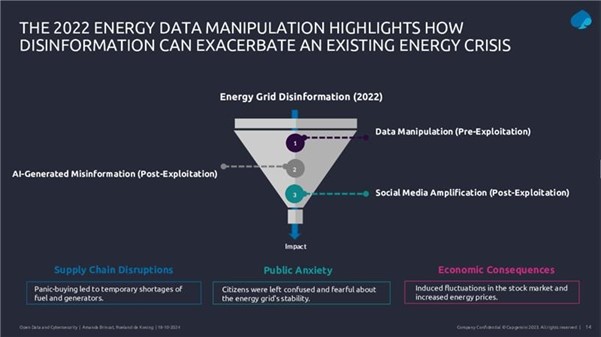

Following on from these threats, the webinar presented a case study on how cyber disinformation exploited open data during the energy and political crisis associated with the Ukraine war in 2022. Attackers manipulated data, generated false content with artificial intelligence and amplified misinformation on social media to create confusion and destabilise markets.

Figure 1. Slide from the webinar presentation "Safeguarding open data: cybersecurity essentials and skills for data providers".

Data protection and data governance strategies

In this context, the implementation of a robust governance structure emerges as a fundamental element for the protection of open data. This framework should incorporate rigorous quality management to ensure accuracy and consistency of data, together with effective updating and correction procedures. Security controls should be comprehensive, including:

- Technical protection measures.

- Integrity check procedures.

- Access and modification monitoring systems.
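One common way to implement integrity checks like those listed above is to publish a cryptographic checksum alongside each file, so that reusers can detect tampering. The following is a minimal sketch using SHA-256 from Python's standard library. The function names and the toy dataset are illustrative assumptions; the webinar did not prescribe this specific method.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Return the hex-encoded SHA-256 digest of a dataset's bytes."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, published_digest: str) -> bool:
    """Check downloaded bytes against the digest the publisher announced."""
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(sha256_digest(data), published_digest)


if __name__ == "__main__":
    original = b"station,no2\nA,21\nB,34\n"      # toy dataset, made up
    published = sha256_digest(original)          # computed on the publisher side
    tampered = original.replace(b"34", b"12")    # a silently altered copy

    print(is_unmodified(original, published))
    print(is_unmodified(tampered, published))
```

A checksum only helps if the digest itself reaches users through a channel the attacker cannot alter; digital signatures are a stronger option when that cannot be assumed.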

Risk assessment and risk management require a systematic approach, starting with a thorough identification of sensitive and critical data. This involves not only cataloguing critical information, but also assessing in detail its sensitivity and strategic value. A crucial aspect is the identification and exclusion of personal data that could allow individuals to be identified, implementing robust anonymisation techniques where necessary.
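One widely used heuristic for this kind of assessment is k-anonymity: every combination of quasi-identifiers (attributes that are not names but can narrow down a person) should be shared by at least k records. The sketch below is an illustration only. The column names, the toy records and the threshold are assumptions, and k-anonymity on its own is not a guarantee of anonymity.

```python
from collections import Counter


def smallest_group(records, quasi_identifiers):
    """Size of the rarest quasi-identifier combination (the dataset's k)."""
    counts = Counter(
        tuple(record[col] for col in quasi_identifiers) for record in records
    )
    return min(counts.values()) if counts else 0


def risky_records(records, quasi_identifiers, k):
    """Records whose quasi-identifier combination appears fewer than k times."""
    counts = Counter(
        tuple(record[col] for col in quasi_identifiers) for record in records
    )
    return [
        r for r in records
        if counts[tuple(r[col] for col in quasi_identifiers)] < k
    ]


# Toy records, invented for this example.
RECORDS = [
    {"postcode": "28001", "birth_year": 1980, "diagnosis": "asthma"},
    {"postcode": "28001", "birth_year": 1980, "diagnosis": "diabetes"},
    {"postcode": "28002", "birth_year": 1975, "diagnosis": "asthma"},
]

if __name__ == "__main__":
    columns = ["postcode", "birth_year"]
    print(smallest_group(RECORDS, columns))
    print(risky_records(RECORDS, columns, k=2))
```

Records flagged as risky would typically be generalised (for example, by truncating the postcode) or suppressed before publication.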

For effective protection, organisations must conduct comprehensive risk analyses to identify potential vulnerabilities in their data management systems and processes. These analyses should lead to the implementation of robust security controls tailored to the specific needs of each dataset. In this regard, the implementation of data sharing agreements establishes clear and specific terms for the exchange of information with other organisations, ensuring that all parties understand their data protection responsibilities.

Experts stressed that data governance must be structured through well-defined policies and procedures that ensure effective and secure information management. This includes the establishment of clear roles and responsibilities, transparent decision-making processes and monitoring and control mechanisms. Mitigation procedures must be equally robust, including well-defined response protocols, effective preventive measures and continuous updating of protection strategies.

In addition, it is essential to maintain a proactive approach to security management: a strategy that anticipates potential threats and adapts protection measures as the risk landscape evolves. Ongoing staff training and regular updating of policies and procedures are key elements in maintaining the effectiveness of these protection strategies. All this must be done while maintaining a balance between the need for protection and the fundamental purpose of open data: its accessibility and usefulness to the public.

Legal aspects and compliance

In addition, the webinar explained the legal and regulatory framework surrounding open data. A crucial point was the distinction between anonymisation and pseudonymisation in the context of the GDPR (General Data Protection Regulation).

On the one hand, anonymised data are not considered personal data under the GDPR, because it is impossible to identify individuals. Pseudonymisation, on the other hand, retains the possibility of re-identification if the data are combined with additional information. This distinction is crucial for organisations handling open data, as it determines which data can be freely published and which require additional protections.
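To make the distinction more concrete, the sketch below pseudonymises an identifier with a keyed hash (HMAC-SHA256) and then shows that whoever holds the key can link the records again. This is only one possible pseudonymisation technique, chosen here for illustration; the key, column names and records are all invented.

```python
import hashlib
import hmac

import pandas as pd

# Hypothetical secret key; in practice it would be stored separately from the data
SECRET_KEY = b"example-key-kept-apart-from-the-data"


def pseudonymise(value: str) -> str:
    """Replace an identifier with a keyed hash (HMAC-SHA256)."""
    return hmac.new(SECRET_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()


# Toy records invented for this example
df = pd.DataFrame({
    "citizen_id": ["12345678A", "87654321B", "12345678A"],
    "service": ["library", "sports centre", "health centre"],
})

# Pseudonymised copy: the direct identifier is replaced by a token
pseudo = df.assign(citizen_id=df["citizen_id"].map(pseudonymise))

# With the key, the token for a known identifier can be recomputed
# and the records linked back to that person
token = pseudonymise("12345678A")
relinked = pseudo[pseudo["citizen_id"] == token]
```

Because the same input always yields the same token, the records remain linkable to a person once the additional information (here, the key) is available, which is why pseudonymised data are still treated as personal data.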

To illustrate the risks of inadequate anonymisation, the webinar presented the Netflix case in 2006, when the company published a supposedly anonymised dataset to improve its recommendation algorithm. However, researchers were able to "re-identify" specific users by combining this data with publicly available information on IMDb. This case demonstrates how the combination of different datasets can compromise privacy even when anonymisation measures have been taken.
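The mechanism behind this kind of re-identification can be sketched with a toy linkage between two tables that share attributes. The example is deliberately simplified and is not a reconstruction of the researchers' method; all names and values are invented.

```python
import pandas as pd

# Toy "anonymised" release: names removed, ratings and dates kept
released = pd.DataFrame({
    "user_token": ["u1", "u2", "u3"],
    "film": ["Film A", "Film B", "Film C"],
    "rating": [5, 2, 4],
    "rating_date": ["2005-03-01", "2005-03-02", "2005-03-05"],
})

# Toy public reviews that carry a visible user name
public_reviews = pd.DataFrame({
    "username": ["alice", "bob"],
    "film": ["Film A", "Film C"],
    "rating": [5, 4],
    "rating_date": ["2005-03-01", "2005-03-05"],
})

# Joining on the shared attributes links tokens to names
linked = released.merge(
    public_reviews, on=["film", "rating", "rating_date"], how="inner"
)
reidentified = dict(zip(linked["user_token"], linked["username"]))
```

Attributes such as a rating and its date are not identifiers on their own, but their combination can be distinctive enough to single out one person.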

In general terms, the role of the Data Governance Act in providing a horizontal governance framework for data spaces was highlighted, establishing the need to share information in a controlled manner and in accordance with applicable policies and laws. The Data Governance Regulation is particularly relevant to ensure that data protection, cybersecurity and intellectual property rights are respected in the context of open data.

The role of AI and cybersecurity in data security

The conclusions of the webinar focused on several key issues for the future of open data. A key element was the discussion on the role of artificial intelligence and its impact on data security. It highlighted how AI can act as a cyber threat multiplier, facilitating the creation of misinformation and the misuse of open data.

On the other hand, the importance of implementing Privacy Enhancing Technologies (PETs) as fundamental tools to protect data was emphasised. These include anonymisation and pseudonymisation techniques, data masking, privacy-preserving computing and various encryption mechanisms. However, it was stressed that it is not enough to implement these technologies in isolation, but that they require a comprehensive engineering approach that considers their correct implementation, configuration and maintenance.

The importance of training

The webinar also emphasised the critical importance of developing specific cybersecurity skills. ENISA's cyber skills framework, presented during the session, identifies twelve key professional profiles, including the Cybersecurity Policy and Legal Compliance Officer, the Cybersecurity Implementer and the Cybersecurity Risk Manager. These profiles are essential to address today's challenges in open data protection.

Figure 2. Slide from the webinar presentation "Safeguarding open data: cybersecurity essentials and skills for data providers".

In summary, a key recommendation that emerged from the webinar was the need for organisations to take a more proactive approach to open data management. This includes the implementation of regular impact assessments, the development of specific technical competencies and the continuous updating of security protocols. The importance of maintaining transparency and public confidence while implementing these security measures was also emphasised.

There is no doubt that data has become a strategic asset for organisations. Today, it is essential to ensure that decisions are based on quality data, whatever the purpose: data analytics, artificial intelligence or reporting. However, ensuring data repositories with high levels of quality is not an easy task, given that in many cases data come from heterogeneous sources where data quality principles have not been taken into account and no context about the domain is available.

To help address this situation, this article explores one of the most widely used libraries in data analysis: Pandas. We will see how this Python library can be an effective tool to improve data quality. We will also review how some of its functions relate to the data quality dimensions and properties included in the UNE 0081 data quality specification, and look at some concrete examples of its application in data repositories with the aim of improving data quality.

Using Pandas for data profiling

Although data profiling and data quality assessment are closely related, their approaches are different:

- Data Profiling: is the process of exploratory analysis performed to understand the fundamental characteristics of the data, such as its structure, data types, distribution of values, and the presence of missing or duplicate values. The aim is to get a clear picture of what the data looks like, without necessarily making judgements about its quality.

- Data quality assessment: involves the application of predefined rules and standards to determine whether data meets certain quality requirements, such as accuracy, completeness, consistency, credibility or timeliness. In this process, errors are identified and actions to correct them are determined. A useful guide for data quality assessment is the UNE 0081 specification.

Data profiling therefore consists of exploring and analysing a dataset to gain a basic understanding of its structure, content and characteristics, before conducting a more in-depth analysis or assessment of the quality of the data. The main objective is to obtain an overview of the data by analysing the distribution, types of data, missing values, relationships between columns and detection of possible anomalies. Pandas has several functions to perform this data profiling.
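As a minimal sketch of such a profile, the following collects a few basic characteristics of a DataFrame with standard Pandas calls. The dataset and the structure of the `profile` dictionary are assumptions made for this example.

```python
import pandas as pd

# Toy dataset invented for this example
df = pd.DataFrame({
    "id": [1, 2, 2, 4],
    "city": ["Madrid", "Bilbao", "Bilbao", None],
    "income": [21000.0, 18500.0, 18500.0, None],
})

profile = {
    "shape": df.shape,                          # (rows, columns)
    "dtypes": df.dtypes.astype(str).to_dict(),  # data type per column
    "missing": df.isna().sum().to_dict(),       # missing values per column
    "unique": df.nunique().to_dict(),           # distinct non-null values per column
    "duplicate_rows": int(df.duplicated().sum()),
}

# Distribution of values for numeric and non-numeric columns
summary = df.describe(include="all")
```

None of these calls judges the data; they only describe it, which is what separates profiling from the quality assessment that follows.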

In short, data profiling is an initial exploratory step that helps prepare the ground for a deeper assessment of data quality, providing essential information to identify problem areas and define the appropriate quality rules for the subsequent assessment.

What is Pandas and how does it help ensure data quality?

Pandas is one of the most popular Python libraries for data manipulation and analysis. Its ability to handle large volumes of structured information makes it a powerful tool in detecting and correcting errors in data repositories. With Pandas, complex operations can be performed efficiently, from data cleansing to data validation, all of which are essential to maintain quality standards. The following are some examples of how to improve data quality in repositories with Pandas:

1. Detection of missing or inconsistent values: One of the most common data errors is missing or inconsistent values. Pandas allows these values to be easily identified with functions such as isnull(), and removed with dropna(). This is key for the completeness property of the records and the data consistency dimension, as missing values in critical fields can distort the results of the analyses.


# Identify null values in a dataframe.

df.isnull().sum()

2. Data standardisation and normalisation: Errors in naming or coding consistency are common in large repositories. For example, in a dataset containing product codes, some may be misspelled or may not follow a standard convention. Pandas provides functions like merge() to perform a comparison with a reference database and correct these values. This option is key to maintaining the consistency dimension and the semantic consistency property of the data.

# Substitution of incorrect values using a reference table

df = df.merge(product_codes, left_on='product_code', right_on='ref_code', how='left')
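The merge() call above joins each record with the reference table; to see which codes failed to match, one option is the `indicator` parameter of merge(). The sketch below reuses the column names from the snippet, with invented values.

```python
import pandas as pd

# Toy data; the column names mirror the snippet above, the values are invented
df = pd.DataFrame({"product_code": ["A-001", "a001", "B-002"]})
product_codes = pd.DataFrame({
    "ref_code": ["A-001", "B-002"],
    "product_name": ["Widget", "Gadget"],
})

checked = df.merge(
    product_codes,
    left_on="product_code",
    right_on="ref_code",
    how="left",
    indicator=True,  # adds a "_merge" column: "both" or "left_only"
)

# Codes with no match in the reference table need review or correction
unmatched = checked.loc[checked["_merge"] == "left_only", "product_code"].tolist()
```

Here `unmatched` contains the misspelled code, which can then be corrected manually or with a mapping table.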

3. Validation of data requirements: Pandas allows the creation of customised rules to validate the compliance of data with certain standards. For example, if an age field should only contain positive integer values, we can apply a function to identify and correct values that do not comply with this rule. In this way, any business rule of any of the data quality dimensions and properties can be validated.

# Identify records with invalid age values (negative or decimals)

age_errors = df[(df['age'] < 0) | (df['age'] % 1 != 0)]

4. Exploratory analysis to identify anomalous patterns: Functions such as describe() or groupby() in Pandas allow you to explore the general behaviour of your data. This type of analysis is essential for detecting anomalous or out-of-range patterns in any data set, such as unusually high or low values in columns that should follow certain ranges.

# Statistical summary of the data

df.describe()

# Group by a column and count the records in each group ('category' is a hypothetical column name)

df.groupby('category').size()
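To turn this exploration into a concrete check, one common heuristic (not prescribed by the article) is the interquartile range rule, which flags values lying more than 1.5 times the IQR beyond the quartiles. A minimal sketch with invented data:

```python
import pandas as pd

# Toy data invented for this example
df = pd.DataFrame({
    "category": ["a", "a", "a", "b", "b", "b", "b"],
    "value": [10, 12, 11, 9, 10, 11, 95],
})

# Interquartile range (IQR) rule: flag values far outside the central 50 %
q1 = df["value"].quantile(0.25)
q3 = df["value"].quantile(0.75)
iqr = q3 - q1
outliers = df[(df["value"] < q1 - 1.5 * iqr) | (df["value"] > q3 + 1.5 * iqr)]

# Per-group summary to see where the anomalous value sits
per_category = df.groupby("category")["value"].agg(["count", "mean", "max"])
```

The 1.5 multiplier is a convention; a flagged value is a candidate for review, not automatically an error.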

5. Duplication removal: Duplicate data is a common problem in data repositories. Pandas provides methods such as drop_duplicates() to identify and remove these records, ensuring that there is no redundancy in the dataset. This capacity would be related to the dimension of completeness and consistency.

# Remove duplicate rows

df = df.drop_duplicates()
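Before removing duplicates it is often useful to inspect them. The sketch below, with invented data, uses duplicated() to list the affected rows and shows that duplicates can also be defined on a subset of columns.

```python
import pandas as pd

# Toy data invented for this example
df = pd.DataFrame({
    "id": [1, 2, 2, 3],
    "name": ["Ana", "Luis", "Luis", "Eva"],
})

# keep=False marks every member of a duplicated group, not only the repeats
to_review = df[df.duplicated(keep=False)]

# Remove exact duplicates, keeping the first occurrence
deduplicated = df.drop_duplicates(keep="first").reset_index(drop=True)

# Duplicates can also be defined on a subset of columns, e.g. the identifier
duplicate_ids = df[df.duplicated(subset=["id"])]["id"].tolist()
```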

Practical example of the application of Pandas

Having presented the functions above, which help improve the quality of data repositories, we now put the process into practice with a case. Suppose we are managing a repository of citizens' data and we want to ensure that:

- Age data do not contain invalid values (such as negatives or decimals).

- Nationality codes are standardised.

- Unique identifiers follow a correct format.

- The place of residence is consistent.

With Pandas, we could perform the following actions:

1. Age validation without incorrect values:

# Identify records with ages outside the allowed ranges (e.g. less than 0 or non-integers)

age_errors = df[(df['age'] < 0) | (df['age'] % 1 != 0)]

2. Correction of nationality codes:

# Use of an official dataset of nationality codes to correct incorrect entries

df_corregida = df.merge(nacionalidades_ref, left_on='nacionalidad', right_on='codigo_ref', how='left')

3. Validation of unique identifiers:

# Check if the format of the identification number follows a correct pattern

df['valid_id'] = df['identificacion'].str.match(r'^[A-Z0-9]{8}$')

errores_id = df[df['valid_id'] == False]

4. Verification of consistency in place of residence:

# Detect possible inconsistencies in residency (e.g. the same citizen residing in two places at the same time).

duplicados_residencia = df.groupby(['id_ciudadano', 'fecha_residencia'])['lugar_residencia'].nunique()

inconsistencias_residencia = duplicados_residencia[duplicados_residencia > 1]
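The four checks above can be run together on a small table to see what each one returns. This is a sketch under stated assumptions: the records and the reference table are invented, and the column names simply follow the snippets above.

```python
import pandas as pd

# Toy records invented for this example; column names follow the snippets above
df = pd.DataFrame({
    "id_ciudadano": [1, 2, 3, 3],
    "age": [34, -2, 41.5, 41.5],
    "nacionalidad": ["ES", "FR", "XX", "XX"],
    "identificacion": ["AB123456", "12345678", "abc", "abc"],
    "fecha_residencia": ["2024-01-01"] * 4,
    "lugar_residencia": ["Madrid", "Bilbao", "Sevilla", "Vigo"],
})
# Hypothetical reference table of valid nationality codes
nacionalidades_ref = pd.DataFrame({"codigo_ref": ["ES", "FR", "PT"]})

# 1. Ages that are negative or not whole numbers
age_errors = df[(df["age"] < 0) | (df["age"] % 1 != 0)]

# 2. Nationality codes missing from the reference table
df_corregida = df.merge(
    nacionalidades_ref, left_on="nacionalidad", right_on="codigo_ref", how="left"
)
nationality_errors = df_corregida[df_corregida["codigo_ref"].isna()]

# 3. Identifiers that do not match the expected pattern
valid_id = df["identificacion"].str.match(r"^[A-Z0-9]{8}$")
errores_id = df[~valid_id]

# 4. Citizens with more than one place of residence on the same date
duplicados_residencia = (
    df.groupby(["id_ciudadano", "fecha_residencia"])["lugar_residencia"].nunique()
)
inconsistencias_residencia = duplicados_residencia[duplicados_residencia > 1]
```

Each result is a subset of records to review, so the checks detect problems; deciding how to correct them remains a business decision.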

Integration with a variety of technologies

Pandas is an extremely flexible and versatile library that integrates easily with many technologies and tools in the data ecosystem. Some of the main technologies with which Pandas is integrated or can be used are:

- SQL databases:

Pandas integrates very well with relational databases such as MySQL, PostgreSQL, SQLite, and others that use SQL. The SQLAlchemy library or directly the database-specific libraries (such as psycopg2 for PostgreSQL or sqlite3) allow you to connect Pandas to these databases, perform queries and read/write data between the database and Pandas.

- Common function: pd.read_sql() to read a SQL query into a DataFrame, and to_sql() to export the data from Pandas to a SQL table.
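A minimal round trip between Pandas and a SQL database can be sketched with Python's built-in sqlite3 module and an in-memory database. The table name, column names and values are invented for the example.

```python
import sqlite3

import pandas as pd

# In-memory SQLite database, used only for illustration
conn = sqlite3.connect(":memory:")

# Toy data; table and column names are hypothetical
df = pd.DataFrame({"city": ["A", "B", "C"], "population": [100, 50, 80]})

# Export the DataFrame to a SQL table
df.to_sql("cities", conn, index=False, if_exists="replace")

# Read the result of a SQL query back into a DataFrame
large_cities = pd.read_sql(
    "SELECT city, population FROM cities WHERE population > 60 ORDER BY city",
    conn,
)
conn.close()
```

For other database engines, the connection would typically be created through SQLAlchemy instead of sqlite3.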

- REST and HTTP-based APIs:

Pandas can be used to process data obtained from APIs using HTTP requests. Libraries such as requests allow you to get data from APIs and then transform that data into Pandas DataFrames for analysis.
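The transformation step can be sketched as follows. To keep the example runnable offline, the response body is hard-coded and its structure is invented; in practice it would come from an HTTP call to whatever API is being consumed, for instance with the requests library.

```python
import json

import pandas as pd

# Hypothetical API response body, hard-coded so the example runs offline
response_text = """
{"results": [
  {"id": 1, "title": "Air quality", "publisher": {"name": "City Council"}},
  {"id": 2, "title": "Bus stops", "publisher": {"name": "Transport Agency"}}
]}
"""

payload = json.loads(response_text)

# json_normalize flattens nested objects into dotted column names
df = pd.json_normalize(payload["results"])
```

The nested `publisher` object becomes a `publisher.name` column, so the result is a flat table ready for the quality checks described earlier.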

- Big Data (Apache Spark):

Pandas can be used in combination with PySpark, an API for Apache Spark in Python. Although Pandas is primarily designed to work with in-memory data, Koalas, a library based on Pandas and Spark, allows you to work with Spark distributed structures using a Pandas-like interface. Tools like Koalas help Pandas users scale their scripts to distributed data environments without having to learn all the PySpark syntax.

- Hadoop and HDFS:

Pandas can be used in conjunction with Hadoop technologies, especially the HDFS distributed file system. Although Pandas is not designed to handle large volumes of distributed data, it can be used in conjunction with libraries such as pyarrow or dask to read or write data to and from HDFS on distributed systems. For example, pyarrow can be used to read or write Parquet files in HDFS.

- Popular file formats:

Pandas is commonly used to read and write data in different file formats, such as:

- CSV: pd.read_csv()

- Excel: pd.read_excel() and to_excel().

- JSON: pd.read_json()

- Parquet: pd.read_parquet() for working with space and time efficient files.

- Feather: a fast file format for interchange between languages such as Python and R (pd.read_feather()).
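As a small illustration of these readers and writers, the sketch below round-trips a DataFrame through CSV and JSON using in-memory buffers, so no files are created. The data are invented. Parquet and Feather work in the same way but need an additional engine such as pyarrow, and Excel files need a library such as openpyxl.

```python
import io

import pandas as pd

# Toy data invented for this example
df = pd.DataFrame({"code": ["A1", "B2"], "value": [1.5, 2.0]})

# CSV round trip through an in-memory text buffer
csv_text = df.to_csv(index=False)
from_csv = pd.read_csv(io.StringIO(csv_text))

# JSON round trip (one object per record)
json_text = df.to_json(orient="records")
from_json = pd.read_json(io.StringIO(json_text))
```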

- Data visualisation tools:

Pandas can be easily integrated with visualisation tools such as Matplotlib, Seaborn, and Plotly. These libraries allow you to generate graphs directly from Pandas DataFrames.

- Pandas includes its own lightweight integration with Matplotlib to generate fast plots using df.plot().

- For more sophisticated visualisations, it is common to use Pandas together with Seaborn or Plotly for interactive graphics.

- Machine learning libraries:

Pandas is widely used in pre-processing data before applying machine learning models. Some popular libraries with which Pandas integrates are:

- Scikit-learn: most machine learning pipelines start with data preparation in Pandas before the data are passed to Scikit-learn models.

- TensorFlow and PyTorch: although these frameworks are more oriented towards handling numerical arrays (NumPy), Pandas is frequently used to load and clean data before training deep learning models.

- XGBoost, LightGBM, CatBoost: these high-performance machine learning libraries accept Pandas DataFrames as input to train models.

- Jupyter Notebooks:

Pandas is central to interactive data analysis within Jupyter Notebooks, which allow you to run Python code and visualise the results immediately, making it easy to explore data and visualise it in conjunction with other tools.

- Cloud Storage (AWS, GCP, Azure):

Pandas can be used to read and write data directly from cloud storage services such as Amazon S3, Google Cloud Storage and Azure Blob Storage. Additional libraries such as boto3 (for AWS S3) or google-cloud-storage facilitate integration with these services. Below is an example for reading data from Amazon S3.

import pandas as pd

import boto3

# Create an S3 client

s3 = boto3.client('s3')

# Get an object from the bucket (bucket name and key are placeholders)

obj = s3.get_object(Bucket='mi-bucket', Key='datos.csv')

# Read the CSV content of the object into a DataFrame

df = pd.read_csv(obj['Body'])

- Docker and containers:

Pandas can be used in container environments using Docker. Containers are widely used to create isolated environments that ensure the replicability of data analysis pipelines.

In conclusion, the use of Pandas is an effective solution to improve data quality in complex and heterogeneous repositories. Through clean-up, normalisation, business rule validation, and exploratory analysis functions, Pandas facilitates the detection and correction of common errors, such as null, duplicate or inconsistent values. In addition, its integration with various technologies, databases, big data environments, and cloud storage makes Pandas an extremely versatile tool for ensuring data accuracy, consistency and completeness.

Content prepared by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and point of view reflected in this publication is the sole responsibility of its author.

From October 28 to November 24, registration will be open for submitting proposals to the challenge organized by the Diputación de Bizkaia. The goal of the competition is to identify initiatives that combine the reuse of available data from the Open Data Bizkaia portal with the use of artificial intelligence. The complete guidelines are available at this link, but in this post, we will cover everything you need to know about this contest, which offers cash prizes for the five best projects.

Participants must use at least one dataset from the Diputación Foral de Bizkaia or from the municipalities in the territory, which can be found in the catalog, to address one of the five proposed use cases:

- Promotional content about tourist attractions in Bizkaia: Written promotional content, such as generated images, flyers, etc., using datasets like:

- Beaches of Bizkaia by municipality

- Cultural agenda – BizkaiKOA

- Cultural agenda of Bizkaia

- Bizkaibus

- Trails

- Recreation areas

- Hotels in Euskadi – Open Data Euskadi

- Temperature predictions in Bizkaia – Weather API data

- Boosting tourism through sentiment analysis: Text files with recommendations for improving tourist resources, such as Excel and PowerPoint reports, using datasets like:

- Beaches of Bizkaia by municipality

- Cultural agenda – BizkaiKOA

- Cultural agenda of Bizkaia

- Bizkaibus

- Trails

- Recreation areas

- Hotels in Euskadi – Open Data Euskadi

- Google reviews API – this resource is paid with a possible free tier

- Personalized tourism guides: Chatbot or document with personalized recommendations using datasets like:

- Tide table 2024

- Beaches of Bizkaia by municipality

- Cultural agenda – BizkaiKOA

- Cultural agenda of Bizkaia

- Bizkaibus

- Trails

- Hotels in Euskadi – Open Data Euskadi

- Temperature predictions in Bizkaia – Weather API data, resource with a free tier

- Personalized cultural event recommendations: Chatbot or document with personalized recommendations using datasets like:

- Cultural agenda – BizkaiKOA

- Cultural agenda of Bizkaia

- Waste management optimization: Excel, PowerPoint, and Word reports containing recommendations and strategies using datasets like:

- Urban waste

- Containers by municipality

How to participate?

Participants can register individually or in teams via this form available on the website. The registration period is from October 28 to November 24, 2024. Once registration closes, teams must submit their solutions on SharePoint. A jury will pre-select five finalists, who will have the opportunity to present their project at the final event on December 12, where the prizes will be awarded. The organization recommends attending in person, but online attendance will also be allowed if necessary.

The competition is open to anyone over 16 years old with a valid ID or passport, who is not affiliated with the organizing entities. Additionally, multiple proposals can be submitted.

What are the prizes?

The jury members will select five winning projects based on the following evaluation criteria:

- Suitability of the proposed solution to the selected challenge.

- Creativity and innovation.

- Quality and coherence of the solution.

- Suitability of the Open Data Bizkaia datasets used.

The winning candidates will receive a cash prize, as well as the commitment to open the datasets associated with the project, to the extent possible.

- First prize: €2,000.

- Second prize: €1,000.

- Three prizes for the remaining finalists of €500 each.

One of the objectives of this challenge, as explained by the Diputación Foral de Bizkaia, is to understand whether the current dataset offerings meet demand. Therefore, if any participant requires a dataset from Bizkaia or its municipalities that is not available, they can propose that the institution make it publicly available, as long as the information falls within the competencies of the Diputación Foral de Bizkaia or the municipalities.

This is a unique event that will not only allow you to showcase your skills in artificial intelligence and open data but also contribute to the development and improvement of Bizkaia. Don’t miss the chance to be part of this exciting challenge. Sign up and start creating innovative solutions!

Open data can transform how we interact with our cities, offering opportunities to improve quality of life. When made publicly available, they enable the development of innovative applications and tools that address urban challenges, from accessibility to road safety and participation.

Real-time information can have positive impacts on citizens. For example, applications that use open data can suggest the most efficient routes, considering factors such as traffic and ongoing construction; information on the accessibility of public spaces can improve mobility for people with disabilities; data on cycling or pedestrian routes encourages greener and healthier modes of transport; and access to urban data can empower citizens to participate in decision-making about their city. In other words, citizen use of open data not only improves the efficiency of the city and its services, but also promotes a more inclusive, sustainable and participatory city.

To illustrate these ideas, this article discusses maps for "navigating" cities, made with open data. In other words, it presents initiatives that improve the relationship between citizens and their urban environment from different angles, such as accessibility, school safety and citizen participation. The first project is Mapcesible, which allows users to map and assess the accessibility of different locations in Spain. The second is Eskola BideAPP, a mobile application designed to support safe school routes. And finally, there are maps that promote transparency and citizen participation in urban management: the first identifies noise pollution, the second locates the services available within 15 minutes in various areas, and the third shows the location of benches in the city. These maps use a variety of public data sources to provide a detailed overview of different aspects of urban life.

The first initiative is a project of a large foundation, the second a collaborative and local proposal, and the third a personal project. Although they are based on very different approaches, all three have in common the use of public and open data and the vocation to help people understand and experience the city. The variety of origins of these projects indicates that the use of public and open data is not limited to large organisations.

Below is a summary of each project, followed by a comparison and reflection on the use of public and open data in urban environments.

Mapcesible, map for people with reduced mobility

Mapcesible was launched in 2019 to assess the accessibility of various spaces such as shops, public toilets, car parks, accommodation, restaurants, cultural spaces and natural environments.

Figure 1. Mapcesible. Source: https://mapcesible.fundaciontelefonica.com/intro

This project is supported by organizations such as the NGO Spanish Confederation of People with Physical and Organic Disabilities (COCEMFE) and the company ILUNION. It currently has more than 40,000 evaluated accessible spaces and thousands of users.

Figure 2. Mapcesible. Source: https://mapcesible.fundaciontelefonica.com/filters

Mapcesible uses open data as part of its operation. Specifically, the application incorporates fourteen datasets from official bodies, including the Ministry of Agriculture and Environment, city councils of different cities (including Madrid and Barcelona) and regional governments. This open data is combined with information provided by the users of the application, who can map and evaluate the accessibility of the places they visit. This combination of official data and citizen collaboration allows Mapcesible to provide up-to-date and detailed information on the accessibility of various spaces throughout Spain, thus benefiting people with reduced mobility.

Eskola BideAPP, application to define safe school routes

Eskola BideAPP is an application developed by Montera34 - a team dedicated to data visualisation and the development of collaborative projects - in alliance with the Solasgune Association to support school pathways. Eskola BideAPP has served to ensure that boys and girls can access their schools safely and efficiently. The project mainly uses public data from OpenStreetMap, e.g. geographical and cartographic data on streets, pavements and crossings, as well as data collected during the process of creating safe routes for children to walk to school in order to promote their autonomy and sustainable mobility.

The application offers an interactive dashboard to visualise the collected data, the generation of paper maps for sessions with students, and the creation of reports for municipal technicians. It uses technologies such as QGIS (a free and open source geographic information system) and a development environment for the R programming language, dedicated to statistical computing and graphics.

The project is divided into three main stages:

- Data collection through questionnaires in classrooms.

- Analysis and discussion of results with the children to co-design personalised routes.

- Testing of the designed routes.

Figure 3. Eskola BideAPP. Photo by Julián Maguna (Solasgune). Source: https://montera34.com/project/eskola-bideapp/

Pablo Rey, one of the promoters of Montera34 together with Alfonso Sánchez, reports for this article that Eskola BideAPP, since 2019, has been used in eight municipalities, including Derio, Erandio, Galdakao, Gatika, Plentzia, Leioa, Sopela and Bilbao. However, it is currently only operational in the latter two. "The idea is to implement it in Portugalete at the beginning of 2025," he adds.

It's worth noting the maps from Montera34 that illustrated the effect of Airbnb in San Sebastián and other cities, as well as the data analyses and maps published during the COVID-19 pandemic, which also visualised public data. In addition, Montera34 has used public data to analyse abstention, school segregation and minor contracts, and to make open data available to the public. For this last project, Montera34 has started with the ordinances of the Bilbao City Council and the minutes of its plenary sessions, so that they are not only available in a PDF document but also in the form of open and accessible data.

Maps of Madrid on noise pollution, services and location of benches

Abel Vázquez Montoro has made several maps with open data that are very interesting, for example, the one made with data from the Strategic Noise Map (MER) offered by the Madrid City Council and land registry data. The map shows the noise affecting each building, facade and floor in Madrid.

Figure 4. Noise maps in Madrid. Source: https://madb.netlify.app/.

This map is organised as a dashboard with three sections: general data of the area visible on the map, dynamic 2D and 3D map with configurable options and detailed information on specific buildings. It is an open, free, non-commercial platform that uses free and open source software such as GitLab - a web-based Git repository management platform - and QGIS. The map allows the assessment of compliance with noise regulations and the impact on quality of life, as it also calculates the health risk associated with noise levels, using the attributable risk ratio (AR%).

15-minCity is another interactive map that visualises the concept of the "15-minute city" applied to different urban areas, i.e. it calculates how accessible different services are within a 15-minute walking or cycling radius from any point in the selected city.

Figure 5. 15-minCity. Source: https://whatif.sonycsl.it/15mincity/15min.php?idcity=9166

Finally, "Where to sit in Madrid" is another interactive map that shows the location of benches and other places to sit in public spaces in Madrid, highlighting the differences between rich (generally with more public seating) and poor (with less) neighbourhoods. This map uses the map-making tool, Felt, to visualise and share geospatial information in an accessible way. The map presents different types of seating, including traditional benches, individual seats, bleachers and other types of seating structures.

Figure 6. Where to sit in Madrid. Source: https://felt.com/map/Donde-sentarse-en-Madrid-TJx8NGCpRICRuiAR3R1WKC?loc=40.39689,-3.66392,13.97z

Vázquez Montoro's maps visualise public data on demographic information (e.g. population data by age, gender and nationality), urban information on land use, buildings and public spaces, socio-economic data (e.g. income, employment and other economic indicators for different districts and neighbourhoods), environmental data, including air quality, green spaces and other related aspects, and mobility data.

What do they have in common?

| Name | Promoter | Type of data used | Profit motive | Users | Characteristics |
|---|---|---|---|---|---|
| Mapcesible | Telefónica Foundation | Combines user-generated and public data (14 open datasets from government agencies) | Non-profit | More than 5,000 | Collaborative app, available on iOS and Android, more than 40,000 mapped accessible points. |
| Eskola BideAPP | Montera34 and Solasgune Association | Combines user-generated data (classroom questionnaires) and some public data | Non-profit | 4,185 | Focus on safe school routes; uses QGIS and R for data processing. |
| Strategic Noise Map (MER) | Madrid City Council | 2D and 3D geographic and visible area data | Non-profit | No data | It allows the assessment of compliance with noise regulations and the impact on quality of life, as it also calculates the associated health risk. |
| 15-minCity | Sony CSL | Geographic data and services | Non-profit | No data | Interactive map visualising the concept of the "15-minute city" applied to different urban areas. |
| MAdB "Dónde sentarse en Madrid" | Private | Public data (demographic, electoral, urban, socio-economic, etc.) | Non-profit | No data | Interactive maps of Madrid |

Figure 7. Comparative table of solutions

These projects share the approach of using open data to improve access to urban services, although they differ in their specific objectives and in the way information is collected and presented. Mapcesible, Eskola BideAPP, MAdB and "Where to sit in Madrid" are of great value.

On the one hand, Mapcesible offers unified and updated information that allows people with disabilities to move around the city and access services. Eskola BideAPP involves the community in the design and testing of safe routes for walking to school; this not only improves road safety, but also empowers young people to be active agents in urban planning. Meanwhile, 15-minCity, MER and the maps developed by Vázquez Montoro visualise complex data about Madrid so that citizens can better understand how their city works and how decisions that affect them are made.

Overall, the value of these projects lies in their ability to create a data culture, teaching how to value, interpret and use information to improve communities.

Content created by Miren Gutiérrez, PhD and researcher at the University of Deusto, expert in data activism, data justice, data literacy, and gender disinformation. The contents and viewpoints reflected in this publication are the sole responsibility of the author.

On 11, 12 and 13 November, a new edition of DATAforum Justice will be held in Granada. The event will bring together more than 100 speakers to discuss issues related to digital justice systems, artificial intelligence (AI) and the use of data in the judicial ecosystem. The event is organised by the Ministry of the Presidency, Justice and Relations with the Cortes, with the collaboration of the University of Granada, the Andalusian Regional Government, the Granada City Council and the Granada Training and Management entity.

The following is a summary of some of the most important aspects of the conference.

An event aimed at a wide audience

This annual forum is aimed at both public and private sector professionals, without neglecting the general public, who want to know more about the digital transformation of justice in our country.

The DATAforum Justice 2024 also includes a specific itinerary for students, designed to provide young people with valuable tools and knowledge in the field of justice and technology. To this end, specific presentations will be given and a DATAthon will be set up. These activities are particularly aimed at students of law, social sciences in general, computer engineering or subjects related to digital transformation. Attendees can obtain up to 2 ECTS credits (European Credit Transfer and Accumulation System): one for attending the conference and one for participating in the DATAthon.

Data at the top of the agenda

The Paraninfo of the University of Granada will host experts from the administration, institutions and private companies, who will share their experience with an emphasis on new trends in the sector, the challenges ahead and the opportunities for improvement.

The conference will begin on Monday 11 November at 9:00 a.m., with a welcome to the students and a presentation of the DATAthon. The official inauguration, open to all audiences, will be at 11:35 a.m. and will be given by Manuel Olmedo Palacios, Secretary of State for Justice, and Pedro Mercado Pacheco, Rector of the University of Granada.

From then on, various talks, debates, interviews, round tables and conferences will take place, covering a large number of data-related topics. Among other issues, data management, both in administrations and in companies, will be discussed in depth. The programme will also address the use of open data to prevent everything from hoaxes to suicide and sexual violence.

Another major theme will be the possibilities of artificial intelligence for optimising the sector, touching on aspects such as the automation of justice and the making of predictions. It will include presentations of specific use cases, such as the use of AI for the identification of deceased persons, without neglecting issues such as the governance of algorithms.

The event will end on Wednesday 13 November at 5:00 p.m. with the official closing ceremony. On this occasion, Félix Bolaños, Minister of the Presidency, Justice and Relations with the Cortes, will accompany the Rector of the University of Granada.

A DATAthon to solve industry challenges through data

In parallel to this agenda, a DATAthon will be held in which participants will present innovative ideas and projects to improve justice in our society. It is a contest aimed at students, legal and IT professionals, research groups and startups.

Participants will be divided into multidisciplinary teams to propose solutions to a series of challenges, posed by the organisation, using data science technologies. During the first two days, participants will have time to research and develop their original solution. On the third day, they will have to present a proposal to a qualified jury. The prizes will be awarded on the last day, before the closing ceremony and the wine reception and concert that will bring the 2024 edition of DATAforum Justice to a close.

In the 2023 edition, 35 people participated, divided into 6 teams that solved two case studies with public data, and two prizes of 1,000 euros were awarded.

How to register

The registration period for the DATAforum Justice 2024 is now open. Registration must be completed through the event website, indicating whether you are attending as a member of the general public, public administration staff, a private sector professional or the media.

To participate in the DATAthon, you must also register on the contest site.

Last year's edition, focusing on proposals to increase efficiency and transparency in judicial systems, was a great success, with over 800 registrants. This year again, a large number of people are expected, so we encourage you to book your place as soon as possible. This is a great opportunity to learn first-hand about successful experiences and to exchange views with experts in the sector.

In an increasingly information-driven world, open data is transforming the way we understand and shape our societies. This data is a valuable source of knowledge that also helps to drive research, promote technological advances and improve policy decision-making.

In this context, the Publications Office of the European Union organises the annual EU Open Data Days to highlight the role of open data in European society and all the new developments. The next edition will take place on 19-20 March 2025 at the European Conference Centre Luxembourg (ECCL) and online.

This event, organised by data.europa.eu, Europe's open data portal, will bring together data providers, enthusiasts and users from all over the world, and will be a unique opportunity to explore the potential of open data in various sectors. From success stories to new initiatives, this event is a must for anyone interested in the future of open data.

What are EU Open Data Days?

EU Open Data Days are an opportunity to exchange ideas and network with others interested in the world of open data and related technologies. This event is particularly aimed at professionals involved in data publishing and reuse, analysis, policy making or academic research. However, it is also open to the general public. After all, these are two days of sharing, learning and contributing to the future of open data in Europe.

What can you expect from EU Open Data Days 2025?

The event programme is designed to cover a wide range of topics that are key to the open data ecosystem, such as:

- Success stories and best practices: real experiences from those at the forefront of data policy in Europe, to learn how open data is being used in different business models and to address the emerging frontiers of artificial intelligence.

- Challenges and solutions: an overview of the challenges of using open data, from the perspective of publishers and users, addressing technical, ethical and legal issues.

- Visualising impact: analysis of how data visualisation is changing the way we communicate complex information and how it can facilitate better decision-making and encourage citizen participation.

- Data literacy: training to acquire new skills to maximise the potential of open data in each area of work or interest of the attendees.

An event open to all sectors

The EU Open Data Days are aimed at a wide audience: the private sector, the public sector, academia and the media.

- Private sector: data analytics specialists, developers and technology solution providers will be able to learn new techniques and trends, and connect with other professionals in the sector.

- Public sector: policy makers and government officials will discover how open data can be used to improve decision-making, increase transparency and foster innovation in policy design.

- Academia and education: researchers, teachers and students will be able to engage in discussions on how open data is fuelling new research and advances in areas as diverse as social sciences, emerging technologies and economics.

- Journalism and media: data journalists and communicators will learn how to use data visualisation to tell more powerful and accurate stories, fostering better public understanding of complex issues.

Submit your proposal before 22 October

Would you like to present a paper at the EU Open Data Days 2025? You have until Tuesday 22 October to send your proposal on one of the above-mentioned themes. Papers are sought that address open data or related areas, such as data visualisation or the use of artificial intelligence in conjunction with open data.

The European data portal is looking for inspiring cases that demonstrate the impact of open data use in Europe and beyond. The call is open to participants from all over the world and from all sectors: from international, national and EU public organisations, to academics, journalists and data visualisation experts. Selected projects will be part of the conference programme, and presentations must be made in English.

Proposed presentations should last between 20 and 35 minutes, including time for questions and answers. If your proposal is selected, travel and accommodation expenses (one night) will be reimbursed for participants from the academic sector, the public sector and NGOs.

For further details and clarifications, please contact the organising team by email: EU-Open-Data-Days@ec.europa.eu.

- Deadline for submission of proposals: 22 October 2024.

- Notification to selected participants: November 2024.

- Delivery of the draft presentation: 15 January 2025.

- Delivery of the final presentation: 18 February 2025.

- Conference dates: 19-20 March 2025.

The future of open data is now. The EU Open Data Days 2025 will not only be an opportunity to learn about the latest trends and practices in data use, but also to build a stronger and more collaborative community around open data. Registration for the event will open in late autumn 2024, and we will announce it through our social media channels on Twitter, LinkedIn and Instagram.