Can you imagine an AI capable of writing songs, novels, press releases, interviews, essays, technical manuals and programming code, prescribing medication, and much more that we don't know about yet? Watching GPT-3 in action, it seems we may not be very far away.

In our latest report on natural language processing (NLP), we mentioned the GPT-2 algorithm developed by OpenAI (the company co-founded by well-known names such as Elon Musk) as a prime example of the ability to generate synthetic text whose quality is indistinguishable from human-written text. The surprising results of GPT-2 led the company not to publish the full version of the algorithm at first, because of its potential for misuse in generating deepfakes or fake news.

Recently (May 2020), a new version of the algorithm was released. Called GPT-3, it includes functional innovations and improvements in performance and in its capacity to analyse and generate natural language text.

In this post we try to summarize the main new features of GPT-3 in a simple and accessible way. Ready to discover them?

Let's get straight to the point. What does GPT-3 bring with it? (Adapted from the original post by Mayor Mundada.)

- It is much bigger (and more complex) than anything we had before. Deep learning models based on neural networks are usually classified by their number of parameters: the more parameters, the greater the capacity (and, usually, the size and depth) of the network, and therefore its complexity. The full version of GPT-2 had 1.5 billion parameters; GPT-3 has 175 billion. GPT-3 was also trained on 570 GB of text, compared with 40 GB for GPT-2.

- For the first time, it can be used as a product or service: OpenAI has announced a public API so that users can experiment with the algorithm. At the time of writing, access to the API is restricted (what is known as a private preview) and must be requested.

- The most important thing: the results. Although the API is available by invitation only, many users with access have published articles on its results in different fields.
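To give a sense of where parameter counts like these come from, here is a minimal sketch using a common rule of thumb: a GPT-style (decoder-only) Transformer has roughly 12 × n_layers × d_model² weights in its attention and feed-forward blocks, plus token and position embeddings. The configurations used (48 layers of width 1,600 for the largest GPT-2; 96 layers of width 12,288 for GPT-3) are the published ones; the formula is an approximation that ignores biases and layer-norm weights, and the function name is hypothetical.

```python
def approx_transformer_params(n_layers: int, d_model: int,
                              vocab_size: int, context_len: int) -> int:
    """Rough parameter count of a GPT-style (decoder-only) Transformer.

    Each layer holds ~4*d^2 attention weights (query, key, value and output
    projections) plus ~8*d^2 feed-forward weights (two d x 4d matrices),
    i.e. ~12*d^2 in total. Biases and layer-norm weights are ignored.
    """
    per_layer = 12 * d_model ** 2
    embeddings = (vocab_size + context_len) * d_model  # token + position embeddings
    return n_layers * per_layer + embeddings


# Published configurations of the largest GPT-2 and of GPT-3.
gpt2 = approx_transformer_params(n_layers=48, d_model=1600, vocab_size=50257, context_len=1024)
gpt3 = approx_transformer_params(n_layers=96, d_model=12288, vocab_size=50257, context_len=2048)

print(f"GPT-2 (largest): ~{gpt2 / 1e9:.2f} billion parameters")
print(f"GPT-3:           ~{gpt3 / 1e9:.1f} billion parameters")
print(f"Parameter ratio: ~{gpt3 / gpt2:.0f}x")
print(f"Training text ratio: {570 / 40:.2f}x (570 GB vs 40 GB)")
```

The estimates come out at about 1.56 and 174.6 billion, close to the official 1.5 and 175 billion. Because the count grows with the square of the layer width, most of GPT-3's growth comes from its much wider layers (12,288 vs 1,600) rather than from depth alone (96 vs 48 layers).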

What role do open data play?

Rarely are the power and benefits of open data as visible as in this type of project. As mentioned above, GPT-3 has been trained on 570 GB of text. It turns out that 60% of the algorithm's training data comes from https://commoncrawl.org. Common Crawl is an open, collaborative project that provides a corpus for research, analysis, education, etc. As stated on the Common Crawl website, the data it provides is open and hosted under the AWS open data initiative. Much of the rest of the training data is also open, including sources such as Wikipedia.

Use cases

Below are some of the examples and use cases that have had an impact.

Generation of synthetic text

This entry (no spoilers ;) ) from Manuel Araoz's blog shows the power of the algorithm to generate a 100% synthetic article on Artificial Intelligence. Manuel ran the following experiment: he gave GPT-3 a short bio taken from his blog and a small fragment of his latest post, 117 words in total. After 10 runs of GPT-3 generating related text, Manuel was able to copy and paste the output, add a cover image and have a new post ready for his blog. Honestly, the synthetic post is indistinguishable from an original one, apart from possible errors in names, dates, etc.

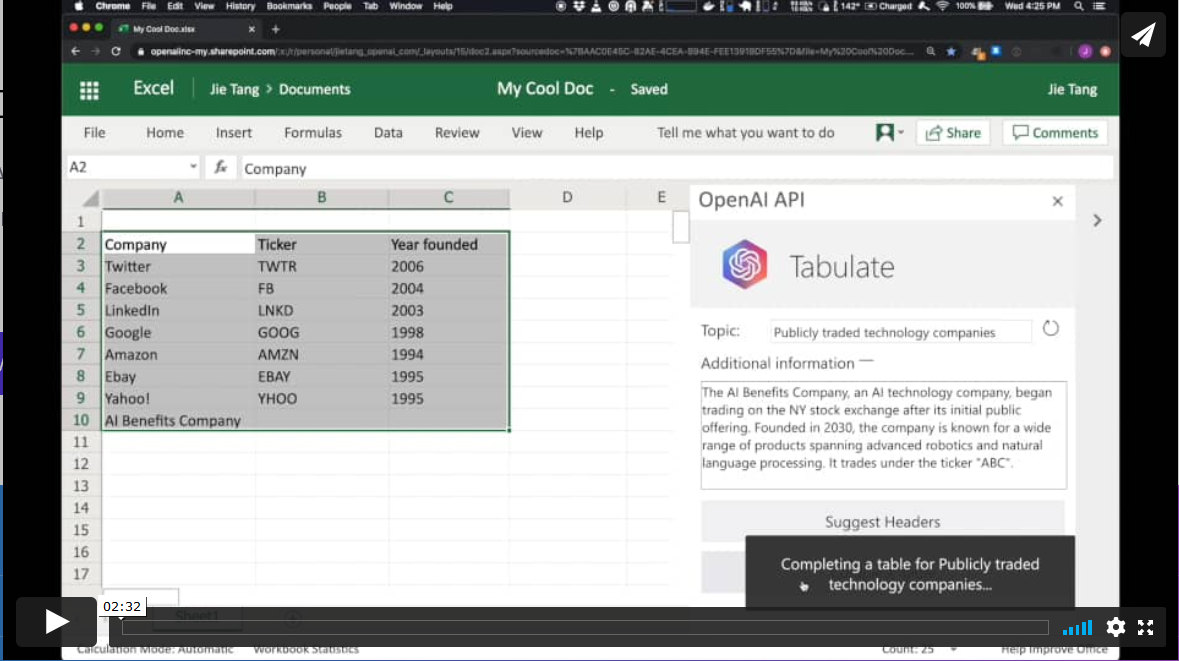

Productivity: automatic generation of data tables

GPT-3 also has applications in the field of productivity. In this example, GPT-3 is able to create an MS Excel table on a given topic. For instance, if we want a table listing the most representative technology companies and the year each was founded, we simply give GPT-3 the desired pattern and ask it to complete it. The starting pattern can be a table with a header and a few example rows (in a real example, the input data would be in English); GPT-3 then completes the remaining rows with actual data. Moreover, if in addition to the input pattern we give the algorithm a plausible description of a fictitious technology company and ask it again to complete the table, the algorithm will include data on this new fictitious company.
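The "pattern" idea can be illustrated with a minimal sketch that only builds the text prompt; it does not call any API. The function name `build_table_prompt`, the pipe-separated layout and the example rows are hypothetical choices for illustration, not OpenAI's format.

```python
def build_table_prompt(header: list[str], example_rows: list[list[str]],
                       extra_context: str = "") -> str:
    """Build a few-shot, table-completion prompt (hypothetical format).

    A language model would be expected to continue the text after the last
    line, adding new rows that follow the same pattern.
    """
    lines = []
    if extra_context:
        # Optional free-text description (e.g. of a fictitious company)
        # that the model may draw on when completing the table.
        lines.append(extra_context.strip())
        lines.append("")
    lines.append(" | ".join(header))
    for row in example_rows:
        lines.append(" | ".join(row))
    # End with a newline so the model continues with a new row.
    lines.append("")
    return "\n".join(lines)


prompt = build_table_prompt(
    header=["Company", "Year founded"],
    example_rows=[["Microsoft", "1975"], ["Apple", "1976"]],
)
print(prompt)
```

Passing a short description of a made-up company as `extra_context` mirrors the second experiment described above: the description is simply placed before the table so the model can use it when it writes new rows.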

These examples are just a sample of what GPT-3 is capable of doing. Among its functionalities or applications are:

- semantic search (different from keyword search)

- chatbots

- a revolution in customer service (call centers)

- multi-purpose text generation (poems, novels, music, fake news, opinion articles, etc.)

- productivity tools. We have seen an example of how to create data tables, but there is a lot of talk about the possibility of creating simple software, such as web pages and small applications, without coding, just by asking GPT-3 and the successors that are on their way.

- online translation tools

- understanding and summarizing texts.

...and many other things we haven't discovered yet. We will keep you informed about upcoming developments in NLP, and in particular about GPT-3, a game-changer that promises to revolutionize everything we know so far.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

The promotion of digitalisation in industrial activity is one of the main axes of the transformation that Spain's Digital Agenda 2025 aims to promote. Public institutions have already launched several initiatives in this respect, including the Connected Industry 4.0 programme, which aims to promote a framework for joint and coordinated action by the public and private sectors in this field.

Apart from the debates on what Industry 4.0 means and the challenges it poses, one of the main requirements identified for assessing the maturity and viability of this type of project is the existence of "a strategy for collecting, analysing and using relevant data, promoting the implementation of technologies that facilitate this, aimed at decision-making and customer satisfaction", as well as the use of technologies that "allow predictive and prescriptive models, for example, Big Data and Artificial Intelligence". Indeed, the Spanish R&D&I Strategy in Artificial Intelligence gives particular importance to the large-scale use of data, which ultimately requires data to be available under adequate conditions, both quantitatively and qualitatively. In Industry 4.0 this requirement becomes strategic, especially considering that almost half of companies' technology spending is linked to data management.

Although much of the data will be generated by companies in the course of their own activity, the re-use of third-party data is particularly important because of the added value it provides, especially information from public sector entities. In any case, the specific sector in which industrial activity takes place will determine which types of data are particularly useful. For example, the food industry may be interested in knowing as accurately as possible not only weather forecasts but also historical climate data, so that it can properly plan its production, the management of its staff, its logistics and even future investments. To take another example, the pharmaceutical industry and the industry supplying health material could make more effective and efficient decisions if they could access up-to-date information from the regional (autonomous community) health systems under suitable conditions, which would ultimately benefit not only these companies but also the public interests that those health systems serve.

Beyond the particularities of each sector, public sector entities generally hold valuable data banks whose effective opening for automated reuse would greatly facilitate the digital transformation of industrial activity. Specifically, socio-economic information can provide undeniable added value, so that companies can base decisions about their activity on data produced by public statistics services, on parameters relevant to economic activity (for example, taxes or income levels) or even on the planned activity of public bodies with economic implications, as in the case of subsidies or public procurement. There are also many public registers with structured information whose opening would provide important added value from an industrial perspective, such as those containing information on the population or on the opening of establishments whose economic activities directly or indirectly affect the conditions of industrial activity, whether in terms of production conditions or of the market in which products are distributed. In addition, access to environmental, town planning and, in general, territorial planning information would be an undeniable asset for the digital transformation of industrial activity, as it would allow essential variables to be integrated into the data processing these companies require.

However, the availability of third-party data in Industry 4.0 projects cannot be limited to the public sector alone: it is particularly important to be able to rely on data held by private parties. In certain sectors, such as telecommunications, energy or financial services, among others, making data accessible for reuse by industrial companies would be especially relevant. Yet, unlike data generated in the public sector, there is no regulation obliging private parties to offer the information they generate in the course of their own activity to third parties in open, reusable formats.

Moreover, such entities may sometimes have a legitimate interest in preventing others from accessing the data they hold: for example, if intellectual or industrial property rights may be affected, if there are contractual obligations to fulfil, or simply if, for commercial reasons, it is advisable to keep relevant information away from competitors. Apart from the timid European regulation aimed at facilitating the free flow of non-personal data, there is no specific regulatory framework applicable to the private sector, so in the end the possibility of re-using relevant information for Industry 4.0 projects is limited to agreements reached on a voluntary basis.

Therefore, decisively promoting Industry 4.0 requires an adequate ecosystem for accessing data generated by other entities, one that cannot be limited solely and exclusively to the public sector. It is not simply a question of increasing cost efficiency but, rather, of optimising all processes, which also affects social aspects of growing importance such as energy efficiency, respect for the environment and better working conditions. And it is precisely in relation to these challenges that the role of the Public Administration is crucial: not only offering relevant data for reuse by industrial companies but, above all, promoting the consolidation of a technologically and socially advanced production model based on the parameters of Industry 4.0, which also requires fostering adequate legal conditions to guarantee access to information generated by private entities in certain strategic sectors.

Content prepared by Julián Valero, professor at the University of Murcia and Coordinator of the Research Group "Innovation, Law and Technology" (iDerTec).

Machine learning is a branch of Artificial Intelligence that gives systems the ability to learn and improve automatically from experience. These systems transform data into information and, with this information, they can make decisions. To make robust predictions, a model needs to be fed with data: the more, the better. Fortunately, today's internet is full of data sources. In many cases, data is collected by private companies for their own benefit, but there are also other initiatives, such as open data portals.

Once we have the data, we are ready to start the learning process. This process, carried out by an algorithm, analyzes and explores the data in search of hidden patterns. Sometimes, the result of this learning is nothing more than a function that operates on the data to calculate a certain prediction.

In this article we review the types of machine learning that exist, with some examples.

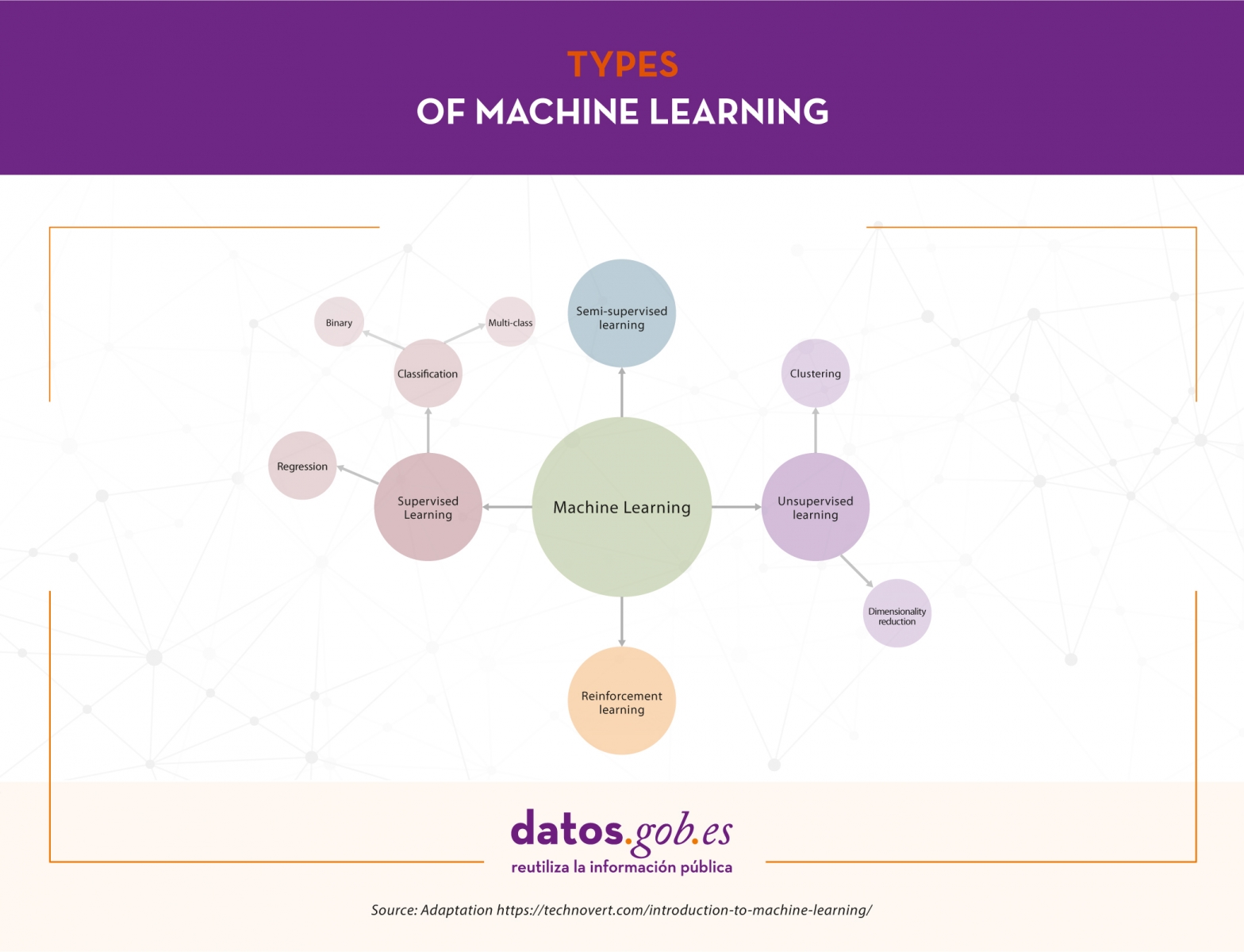

Types of machine learning

Depending on the data available and the task we want to tackle, we can choose between different types of learning. These are: supervised learning, unsupervised learning, semi-supervised learning and reinforcement learning.

Supervised learning

Supervised learning needs labeled datasets; that is, we tell the model what we want it to learn. Suppose we have an ice cream shop and for the last few years we have been recording daily data on weather, temperature, month, day of the week, etc., together with the number of ice creams sold each day. In this case, we would surely be interested in training a model that, based on the weather, temperature, etc. of a specific day (the model's features), tells us how many ice creams are going to be sold (the label to be predicted).
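The ice cream example can be sketched as a tiny supervised regression problem. This is a minimal sketch, assuming NumPy, ordinary least squares and a single feature (temperature); the numbers are made up for illustration, and a real model would use all the recorded features. Note that what the training produces is simply a function (a slope and an intercept) that maps a day's features to a prediction.

```python
import numpy as np

# Hypothetical historical records: daily temperature (°C) -> ice creams sold.
temperature = np.array([15.0, 20.0, 25.0, 30.0, 35.0])            # feature
ice_creams_sold = np.array([100.0, 150.0, 200.0, 250.0, 300.0])  # label

# Design matrix with a column of ones for the intercept term.
X = np.column_stack([temperature, np.ones_like(temperature)])

# Ordinary least squares: find w minimising ||X w - y||^2.
weights, *_ = np.linalg.lstsq(X, ice_creams_sold, rcond=None)
slope, intercept = weights


def predict_sales(temp_c: float) -> float:
    """Predicted number of ice creams for a day with the given temperature."""
    return slope * temp_c + intercept


print(f"sales = {slope:.1f} * temperature + {intercept:.1f}")
print(f"Forecast for 28 °C: {predict_sales(28.0):.0f} ice creams")
```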

Depending on the type of label, within the supervised learning there are two types of models:

- Classification models, which produce a discrete label as output, that is, a label within a finite set of possible labels. Classification models can in turn be binary, if we have to predict between two classes or labels (disease or no disease, classifying emails as "spam" or "not spam"), or multiclass, when there are more than two classes (classification of animal images, sentiment analysis, etc.).

- Regression models, which produce a real value as output, as in the ice cream example.
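By contrast with regression, a classification model returns one label from a finite set. A minimal sketch of a binary spam classifier, assuming NumPy, a nearest-centroid rule (one simple technique among many, not one the text prescribes) and two made-up numeric features per email: the number of links and the number of exclamation marks.

```python
import numpy as np

# Hypothetical labelled emails: [number of links, number of exclamation marks]
X_train = np.array([[8, 6], [10, 9], [7, 5],   # spam
                    [0, 1], [1, 0], [2, 1]])   # not spam
y_train = np.array(["spam", "spam", "spam", "not spam", "not spam", "not spam"])

# "Training": compute the mean feature vector (centroid) of each class.
classes = np.unique(y_train)
centroids = {c: X_train[y_train == c].mean(axis=0) for c in classes}


def classify(email_features) -> str:
    """Return the label whose centroid is closest (Euclidean distance)."""
    x = np.asarray(email_features, dtype=float)
    return min(classes, key=lambda c: np.linalg.norm(x - centroids[c]))


print(classify([9, 7]))
print(classify([0, 0]))
```

Whatever the input, the output is always one of the two labels seen in training, which is what makes this a (binary) classification model rather than a regression model.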

Unsupervised learning

Unsupervised learning, on the other hand, works with data that has not been labeled. We do not have a label to predict. These algorithms are mainly used in tasks where it is necessary to analyze the data to extract new knowledge or group entities by affinity.

This type of learning also has applications in reducing dimensionality or simplifying datasets. When grouping data by affinity, the algorithm must define a similarity or distance metric for comparing data points with each other. Clustering algorithms are an example of unsupervised learning: they could be applied to find customers with similar characteristics in order to offer them certain products or to target a marketing campaign, to discover topics or to detect anomalies, among other uses. On the other hand, some datasets, such as those containing genomic information, have a large number of features, and for various reasons (reducing the training time of the algorithms, improving model performance or making the data easier to visualize) we need to reduce their dimensionality, that is, the number of columns in the dataset. Dimensionality reduction algorithms use mathematical and statistical techniques to convert the original dataset into a new one with fewer dimensions, at the cost of losing some information. Examples of dimensionality reduction algorithms are PCA, t-SNE and ICA.
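Both ideas in this paragraph can be illustrated in a few lines. This is a minimal sketch, assuming NumPy: a bare-bones k-means (with Euclidean distance as the similarity metric) groups hypothetical customers, and PCA computed via singular value decomposition reduces a dataset to fewer columns. Library implementations (for example, in scikit-learn) add smarter initialisation and many options omitted here; the customer data is made up.

```python
import numpy as np


def kmeans(X: np.ndarray, k: int, n_iter: int = 20, seed: int = 0) -> np.ndarray:
    """Bare-bones k-means; returns the cluster index of each row of X."""
    rng = np.random.default_rng(seed)
    centers = X[rng.choice(len(X), size=k, replace=False)]
    for _ in range(n_iter):
        # Distance from every point to every center (the similarity metric).
        dists = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
        labels = dists.argmin(axis=1)
        # Move each center to the mean of its points (keep it if the cluster is empty).
        centers = np.array([X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                            for j in range(k)])
    return labels


def pca(X: np.ndarray, n_components: int) -> np.ndarray:
    """Project X onto its first principal components (computed via SVD)."""
    X_centered = X - X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X_centered, full_matrices=False)
    return X_centered @ Vt[:n_components].T


# Hypothetical customers: [annual spend (thousands of euros), visits per month]
customers = np.array([[1.0, 2.0], [1.2, 1.8], [0.9, 2.2],
                      [8.0, 9.0], [8.5, 8.7], [7.8, 9.3]])
segments = kmeans(customers, k=2)
print(segments)

# Hypothetical dataset with 5 columns reduced to 2.
data = np.random.default_rng(1).normal(size=(100, 5))
reduced = pca(data, n_components=2)
print(reduced.shape)
```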

Semi-supervised learning

Sometimes it is very difficult to obtain a fully labeled dataset. Imagine that we own a dairy products company and want to study our brand image through the comments users have posted on social networks. The idea is to create a model that classifies each comment as positive, negative or neutral and then carry out the study. First, we dive into social networks and collect sixteen thousand messages mentioning our company. The problem now is that the data has no labels: we don't know the sentiment of each comment. This is where semi-supervised learning comes into play; it combines a little of each of the two previous types. With this approach, we start by manually labeling some of the comments. Once we have a small portion of labeled comments, we train one or more supervised learning algorithms on it and use the resulting models to label the remaining comments. Finally, we train a supervised learning algorithm using as labels both the manual ones and those generated by the previous models.
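The procedure just described (label a few examples by hand, train, label the rest automatically, retrain on everything) is often called self-training or pseudo-labelling. Below is a minimal sketch, assuming a tiny hand-written multinomial Naive Bayes classifier as the supervised algorithm (our choice for illustration; the text does not name one) and a handful of made-up comments in place of the sixteen thousand.

```python
import math
from collections import Counter, defaultdict


def tokenize(text: str) -> list[str]:
    """Very naive tokenizer: lowercase and split on whitespace."""
    return text.lower().split()


class NaiveBayes:
    """Tiny multinomial Naive Bayes text classifier with Laplace smoothing."""

    def fit(self, texts: list[str], labels: list[str]) -> "NaiveBayes":
        self.class_counts = Counter(labels)
        self.word_counts = defaultdict(Counter)
        self.vocab = set()
        for text, label in zip(texts, labels):
            words = tokenize(text)
            self.word_counts[label].update(words)
            self.vocab.update(words)
        return self

    def predict(self, text: str) -> str:
        total_docs = sum(self.class_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.class_counts.items():
            n_words = sum(self.word_counts[label].values())
            score = math.log(n_docs / total_docs)  # log prior of the class
            for w in tokenize(text):
                if w not in self.vocab:
                    continue  # ignore words never seen in training
                count = self.word_counts[label][w]
                score += math.log((count + 1) / (n_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Step 1: a small set of comments labelled by hand (made-up examples).
labelled_texts = ["love this yogurt", "great taste love it",
                  "terrible milk went sour", "awful taste never again",
                  "the store opens at nine", "new pack size announced"]
labels = ["positive", "positive", "negative", "negative", "neutral", "neutral"]

# The (in practice much larger) pool of unlabelled comments.
unlabelled_texts = ["love the great taste", "sour and awful", "pack size announced today"]

# Step 2: train on the hand-labelled data and pseudo-label the rest.
initial_model = NaiveBayes().fit(labelled_texts, labels)
pseudo_labels = [initial_model.predict(t) for t in unlabelled_texts]

# Step 3: retrain on manual labels plus pseudo-labels.
final_model = NaiveBayes().fit(labelled_texts + unlabelled_texts, labels + pseudo_labels)
print(pseudo_labels)
print(final_model.predict("love it"))
```

In practice, one would usually keep only the pseudo-labels the first model is most confident about, since wrong pseudo-labels are fed back into the final training set.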

Reinforcement learning

Finally, reinforcement learning is a machine learning method based on rewarding desired behaviors and penalizing unwanted ones. Using this method, an agent is able to perceive and interpret its environment, execute actions and learn through trial and error. This type of learning pursues long-term objectives, seeking the maximum overall reward in order to reach an optimal solution. Games are one of the most common fields for testing reinforcement learning: Go (with AlphaGo) and Pac-Man are examples where this technique has been applied. In these cases, the agent receives information about the rules of the game and learns to play by itself. At first it obviously behaves randomly, but over time it starts to learn more sophisticated moves. This type of learning is also applied in other areas such as robotics, resource optimization and control systems.
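The reward-driven, trial-and-error loop can be sketched with tabular Q-learning, one classic reinforcement learning algorithm (not the method behind AlphaGo). The environment is a hypothetical toy: a corridor of five cells where the agent starts at the left end and receives a reward of +1 only when it reaches the right end. Because all values start at zero, the agent initially moves at random, just as described above.

```python
import random

N_STATES = 5                            # cells 0..4; cell 4 is the goal
ACTIONS = [-1, +1]                      # move left or move right
ALPHA, GAMMA, EPSILON = 0.5, 0.9, 0.2   # learning rate, discount, exploration rate


def step(state: int, action: int) -> tuple[int, float, bool]:
    """Hypothetical environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    if next_state == N_STATES - 1:
        return next_state, 1.0, True   # reward for the desired behaviour
    return next_state, 0.0, False      # no reward otherwise


def train(episodes: int = 200, seed: int = 0) -> list[list[float]]:
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action index]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            # Epsilon-greedy: explore (or break ties) at random, otherwise exploit.
            if rng.random() < EPSILON or q[state][0] == q[state][1]:
                a = rng.randrange(2)
            else:
                a = 0 if q[state][0] > q[state][1] else 1
            next_state, reward, done = step(state, ACTIONS[a])
            # Q-learning update: move Q towards reward + discounted future value.
            target = reward if done else reward + GAMMA * max(q[next_state])
            q[state][a] += ALPHA * (target - q[state][a])
            state = next_state
    return q


q_table = train()
policy = ["left" if q[0] > q[1] else "right" for q in q_table[:-1]]
print(policy)
```

The discount factor `GAMMA` is what makes the agent value the long-term reward: moving right from the first cell earns nothing immediately, but it leads towards the goal.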

Machine learning is a very powerful tool that converts data into information and facilitates decision-making. The key is to define the objective of the learning clearly and concisely so that, depending on the characteristics of the available dataset, we can select the type of learning that best fits and provides a solution that meets our needs.

Content prepared by Jose Antonio Sanchez, expert in Data Science and Artificial Intelligence enthusiast.

El Bosco, Tiziano, El Greco, Rubens, Velázquez, Goya ... The Prado National Museum has more than 35,000 works of unquestionable value, which make it one of the most important art galleries in the world.

In the year of its bicentennial, we visited the Prado to talk with Javier Pantoja, Head of the Digital Development Area, about the innovative projects that have been implemented to enrich the visitor experience.

- In recent years, the Prado Museum has launched a series of technological projects aimed at bringing its collections closer to citizens. How did this idea come about? What is the digital strategy of the Prado Museum?

The Prado Museum team does not only seek to develop a digital strategy; we want “the digital” to be part of the museum's overall strategy. That is why the last three strategic plans have included a specific line of action for the digital area.

When we talk about digital strategy at the Prado, we refer to two aspects. On the one hand, we mean processes, that is, the improvement of internal management. On the other hand, we also use digital tools to attract a larger audience to the Prado.

Our intention is to consider technology as a tool, not as an end. We have a strong commitment to technologies such as linked data or artificial intelligence, but always without losing sight of the objective of spreading the

- What kind of technologies are you implementing and how are you doing it? Can you tell us some concrete projects?

The most important point of the project was the launch of the current Prado website at the end of 2015. We wanted to create a semantic web, conceiving the collections and related information in a different way, which would allow us to go further and generate projects such as augmented reading or the timeline that we have recently launched using Artificial Intelligence.

It has been a process of many years. The first task was to digitize and document the collection. For that, we needed internal applications that allowed all areas of the museum to feed the databases with their knowledge in a homogeneous way.

Then, we had to make that information available to the public using semantic web models. That is, we needed to define each element semantically: what a “technique”, a “support”, a “painter”, a “sculptor”, etc. is. The aim was to create relationships among all the data, resulting in knowledge graphs. For example, we want to link Goya to the books that talk about him, the kings who reigned in his time, the techniques he used, etc. The work was tedious, but necessary to obtain good results.
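The idea of linking Goya to works, kings and techniques can be sketched as a set of subject-predicate-object triples, the basic data model behind semantic web standards such as RDF. This is a minimal sketch in plain Python; the predicate names and the handful of facts below are illustrative assumptions, not the Prado's actual ontology or data.

```python
# Illustrative triples (subject, predicate, object); not the Prado's real data model.
TRIPLES = [
    ("Francisco de Goya", "painted", "The Family of Charles IV"),
    ("Francisco de Goya", "painted", "The Third of May 1808"),
    ("The Family of Charles IV", "depicts", "Charles IV"),
    ("The Family of Charles IV", "technique", "Oil on canvas"),
    ("The Third of May 1808", "technique", "Oil on canvas"),
    ("Charles IV", "was king of", "Spain"),
]


def objects(subject: str, predicate: str) -> list[str]:
    """All objects linked to `subject` through `predicate`."""
    return [o for s, p, o in TRIPLES if s == subject and p == predicate]


def subjects(predicate: str, obj: str) -> list[str]:
    """All subjects linked to `obj` through `predicate` (reverse lookup)."""
    return [s for s, p, o in TRIPLES if p == predicate and o == obj]


# Two-hop query: who is depicted in works painted by Goya?
depicted = [person
            for work in objects("Francisco de Goya", "painted")
            for person in objects(work, "depicts")]
print(depicted)

# Reverse lookup: which works share the same technique?
print(subjects("technique", "Oil on canvas"))
```

In a production system such a graph would typically be stored as RDF and queried with SPARQL; the list comprehension here only mimics a two-hop graph query.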

This allowed us to create a faceted search engine, which helped bring the collection closer to many more users. With a Boolean search engine you have to know what you are looking for, whereas a faceted one makes it easier to find relationships.
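The difference can be sketched in a few lines. This is a minimal sketch, assuming a hypothetical in-memory catalogue with two fields per artwork: a faceted engine counts the values of each field across the current results, so users can browse by narrowing down instead of having to type the right terms up front, as in a Boolean query. The records and field names are illustrative only.

```python
from collections import Counter

# Hypothetical catalogue records (fields and values are illustrative).
ARTWORKS = [
    {"title": "The Third of May 1808", "author": "Goya", "technique": "Oil"},
    {"title": "The Family of Charles IV", "author": "Goya", "technique": "Oil"},
    {"title": "Las Meninas", "author": "Velázquez", "technique": "Oil"},
    {"title": "The Annunciation", "author": "Fra Angelico", "technique": "Tempera"},
]


def facet_counts(records: list[dict], fields: list[str]) -> dict[str, Counter]:
    """Count each value of each facet field among the given records."""
    return {f: Counter(r[f] for r in records) for f in fields}


def apply_filters(records: list[dict], **filters: str) -> list[dict]:
    """Keep the records that match every selected facet value."""
    return [r for r in records if all(r[f] == v for f, v in filters.items())]


# The user starts without any query and sees what is available...
counts = facet_counts(ARTWORKS, ["author", "technique"])
print(counts)
# ...then narrows down by clicking facet values.
oil_by_goya = apply_filters(ARTWORKS, author="Goya", technique="Oil")
print([r["title"] for r in oil_by_goya])
```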

All this work of updating, reviewing and publishing information opened the way for the second part of the project: making the information attractive through an aesthetically pleasing, beautiful and highly usable website. Do not forget that our goal was to "position the Prado on the Internet." We had to create an easy-to-use data website. In the end, the Prado is a museum full of works of art, and we seek to bring that art closer to citizens.

The result was a website that has won several awards and recognitions.

- And how did the augmented reading projects and the timeline come about?

They came about from a very simple issue. Let me give an example. A user opens the worksheet for La Anunciación by Fra Angelico. This sheet mentions terms such as Fiésole or Taddeo Gaddi. Most users probably do not know what or who they are. That is, the information was very well written and documented, but it did not reach the entire audience.

Here, we had two options. On the one hand, we could write a basic-level worksheet, but creating a text suitable for everyone, regardless of age, nationality, etc., is very complex. That is why we chose another solution. What does a user do to find out about something he or she doesn't know? Users search on Google and click on Wikipedia.

As the Prado Museum cannot be an encyclopedia, we took advantage of the knowledge of Wikipedia and DBpedia. Mainly for 3 reasons:

- It has the right knowledge structure

- It is an encyclopedia that provides context

- It is an open source of information

- What challenges and barriers have you found when reusing this data?

At first, this would have meant annotating all the entities (cities, kings…) one by one, which was impossible, since there are currently 16,000 worksheets published on the website. In addition, the Prado is constantly studying and reviewing its publications.

That is why we used a natural language processing engine: the machine reads the text and the Artificial Intelligence interprets it as a human would, extracts entities and disambiguates terms. The machine processes the language and understands it based on context. In this task, we use the knowledge graph we already had and the relationships between the different entities through DBpedia.

The work is carried out together with Telefónica (the sponsoring company) and GNOSS (the developing company), and the degree of reliability was very high, between 80% and 90%. Even so, there were complex cases. For example, when the text mentions “the Annunciation of the Virgin”, we do not know whether it refers to the concept, to the church in Milan, or to one of the paintings on this subject... To ensure that everything was correct, the documentation service reviewed the information.
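The kind of context-based disambiguation described above can be illustrated with a deliberately simplified, Lesk-style word-overlap heuristic: pick the candidate meaning whose description shares the most words with the surrounding text. This is only a sketch for illustration, not the engine used by the Prado, Telefónica or GNOSS; the candidate names and descriptions are hypothetical stand-ins for entries a knowledge base such as DBpedia might provide.

```python
import re

# Hypothetical candidate meanings for an ambiguous mention, each with a short description.
CANDIDATES = {
    "Annunciation (biblical event)":
        "the angel gabriel announces to the virgin mary that she will conceive",
    "The Annunciation (painting by Fra Angelico)":
        "painting by fra angelico tempera on panel prado museum",
}


def words(text: str) -> set[str]:
    """Lowercase set of words; punctuation is ignored."""
    return set(re.findall(r"[a-záéíóúñ]+", text.lower()))


def disambiguate(context: str, candidates: dict[str, str]) -> str:
    """Pick the candidate whose description shares most words with the context."""
    ctx = words(context)
    return max(candidates, key=lambda name: len(ctx & words(candidates[name])))


print(disambiguate("The panel painted by Fra Angelico shows", CANDIDATES))
print(disambiguate("The angel Gabriel appears to Mary", CANDIDATES))
```

Real systems combine many more signals (entity popularity, links in the knowledge graph, trained language models), which is why human review by a documentation service remains useful for the hard cases.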

- What was the next step?

We already had augmented reading. At this point, we asked ourselves: why not make these relationships visible? And so the Timeline emerged: a cluster of new knowledge graphs that lets users see the relationships between concepts more simply, the result of exploiting the linked data web.

Timelines tend to have just one layer, but we wanted to go further and create a multilayer structure that would help us understand and explore the concepts in depth: a layer of history, others of literature, architecture, philosophy, performing arts... In this way we can easily see, for example, which works were created during the Hundred Years' War.

For this, we had to review the datasets and select concepts according to the interest they generate and their context within the Prado collection.

- These types of projects have great potential to be used in the educational field ...

Yes, our tool has a clear dissemination purpose and great potential for use in educational settings. We have tried to make it easy for any teacher or communicator to see the entire context of a work at a glance.

It could also be used to learn history. For example, you can teach the Spanish War of Independence using “The Second of May” and “The Third of May”, paintings by Goya. In this way, more active learning could be achieved, based on the relationships between concepts.

The tool has an appropriate approach for secondary and high school students, but could also be used at other stages.

- What profiles are necessary to carry out such a project?

We create multidisciplinary teams of designers, computer scientists, documentalists, etc. to carry out the entire project.

There are very good specialists working on semantics, linked data, artificial intelligence, etc. But you also need people with the ideas to put the pieces of the puzzle together into something usable and useful for the user; that is, to bring together wills and knowledge around a shared idea and shared objectives. Technological advances are very good, and you have to know them to take advantage of their benefits, but always with a clear objective.

- Could you tell us what are the next steps to be followed in terms of technological innovation?

We would like to reach agreements with other museums and entities so that all knowledge is integrated. We would like to enrich the information and link it with data from international and national cultural and museum institutions.

We already have some alliances, for example, an agreement with the National Film Library and the RTVE visual archive, and we would like to continue working on that line.

In addition, we will keep working with our data to bring the Prado collection closer to all citizens. The Museum has to be the first reuser of its data sources, because it knows them best and can get the best results from them.

Data science is an interdisciplinary field that seeks to extract actionable knowledge from datasets, whether structured in databases or unstructured, such as text, audio or video. Thanks to the application of new techniques, data science makes it possible to answer questions that are hard to solve through other methods. The ultimate goal is to design improvement or correction actions based on the new knowledge.

The key concept in data science is SCIENCE, not really data: experts have even begun to speak of a fourth paradigm of science, a data-based approach that joins the traditional theoretical, empirical and computational approaches.

Data science combines methods and technologies from mathematics, statistics and computer science. It includes exploratory analysis, machine learning, deep learning, natural language processing, data visualization and experimental design, among others.

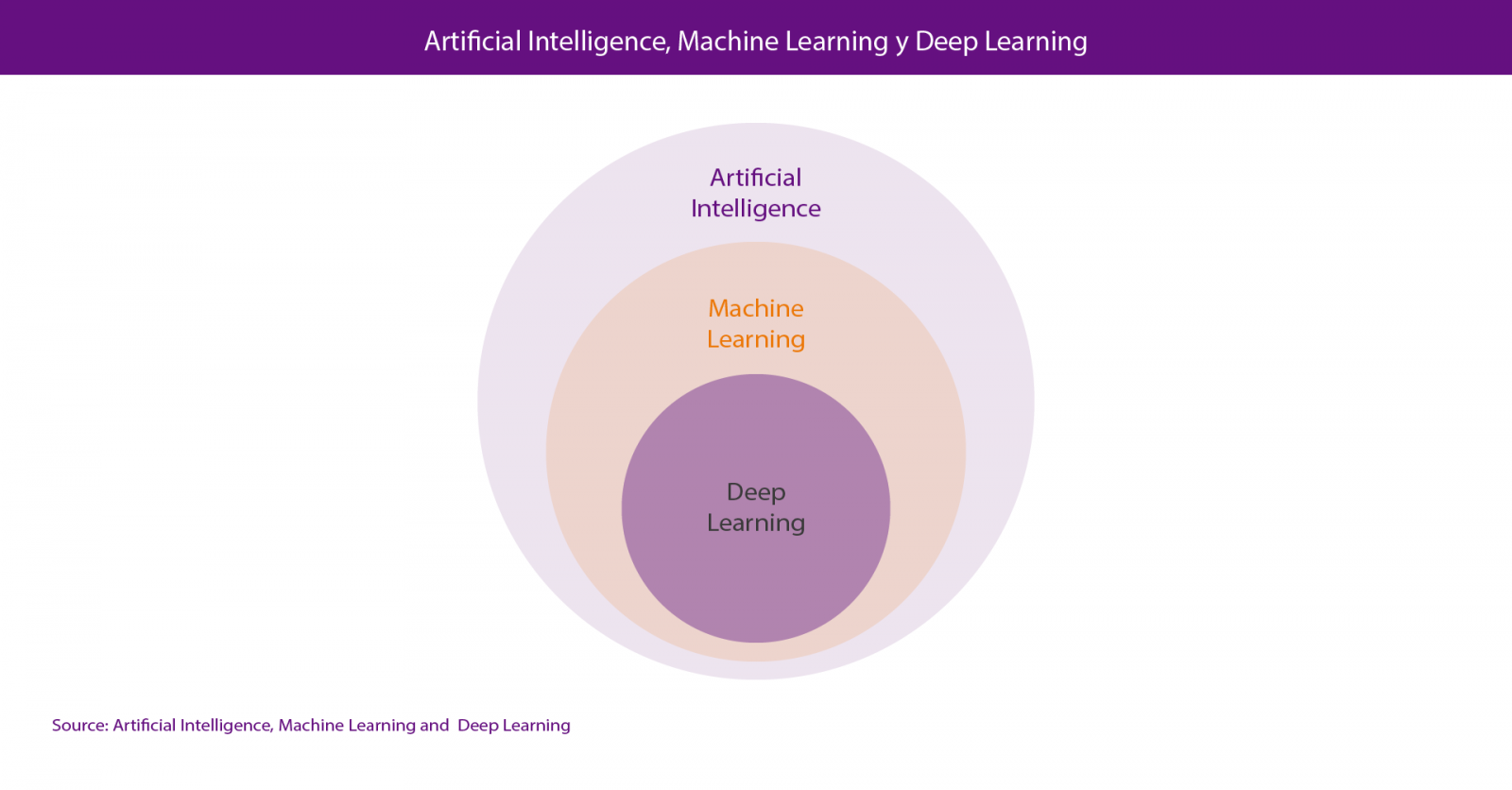

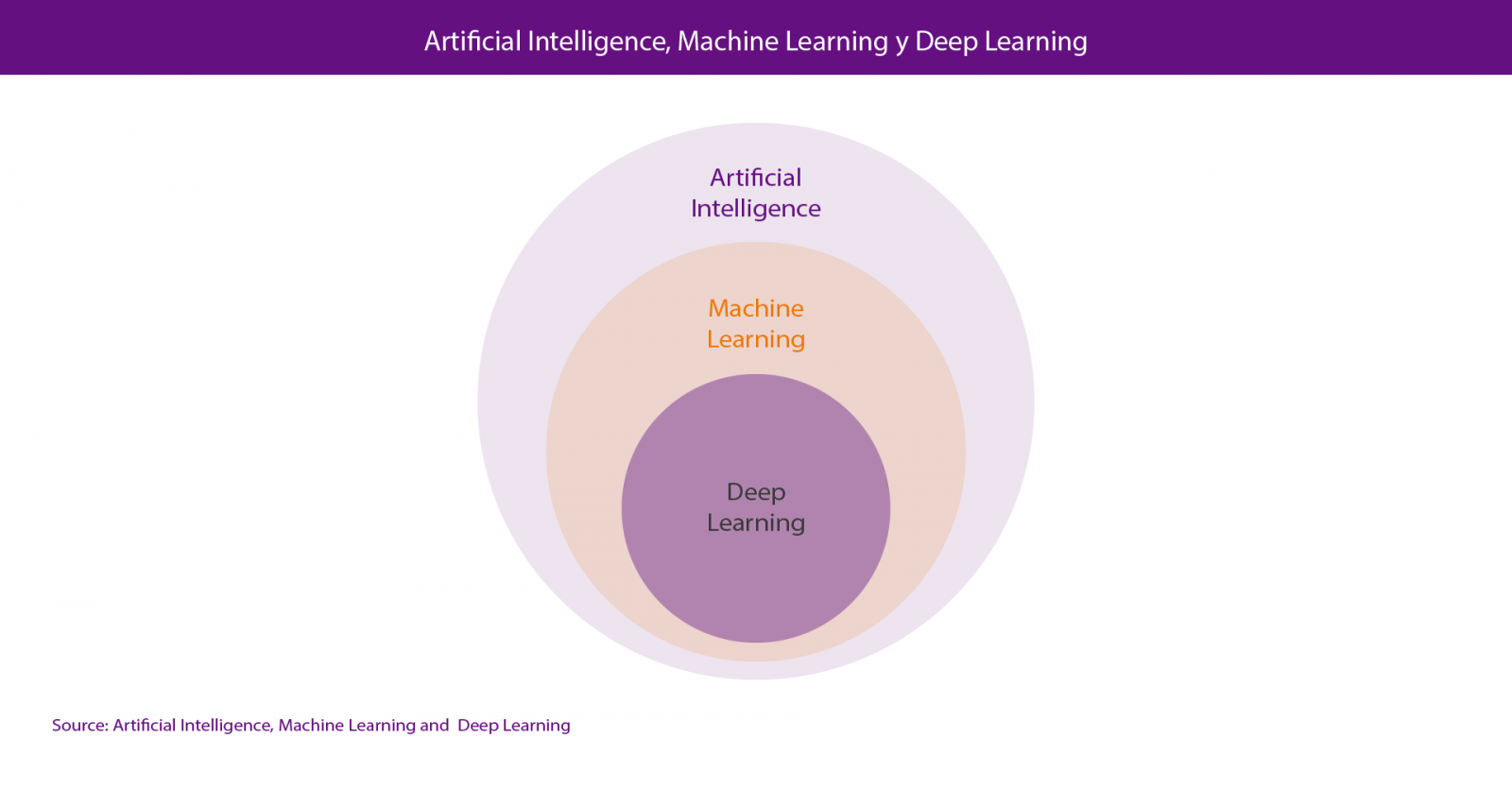

Within Data Science, the two most talked-about technologies are Machine Learning and Deep Learning, both part of the field of artificial intelligence. In both cases, the objective is to build systems capable of learning to solve problems without human intervention, ranging from spell-checking or machine translation systems to autonomous cars or computer vision systems applied to use cases as spectacular as the Amazon Go stores.

In both cases, the systems learn to solve problems from the datasets “we teach them”, either in a supervised way (the training datasets have previously been labeled by humans) or in an unsupervised way (the datasets are not labeled).

Strictly speaking, deep learning should be considered a part of machine learning, so if we look for an attribute that differentiates them, we could consider their learning methods, which are completely different. Classical machine learning relies on algorithms (Bayesian networks, support vector machines, cluster analysis, etc.) that are able to discover patterns from the observations in a dataset. Deep learning, by contrast, is basically inspired by the functioning of the neurons of the human brain and their connections, and there are numerous architectures for different problems, such as convolutional neural networks for image recognition or recurrent neural networks for natural language processing.

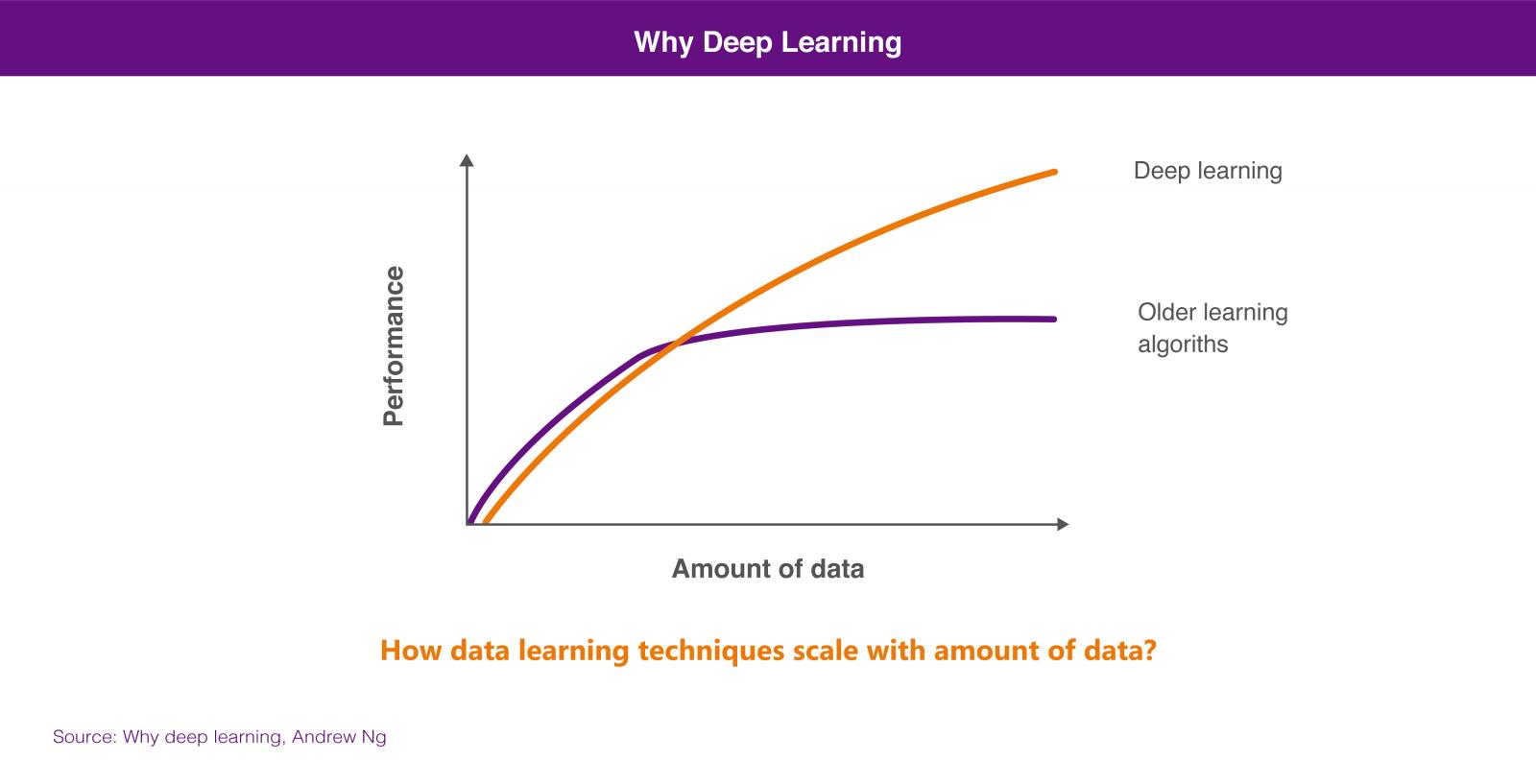

The suitability of one approach or the other depends on the amount of data available to train our artificial intelligence system. In general, with a small amount of training data, the neural network-based approach does not outperform the algorithm-based approach. The algorithm-based approach, however, usually plateaus as the amount of data grows, unable to offer greater precision even when we provide more training cases. Deep learning, on the other hand, can make better use of this larger amount of data: the more training cases are available, the more precisely the system is usually able to solve the problem.

None of these technologies is new: they have decades of theoretical development behind them. However, in recent years new advances have greatly lowered the barriers to using them: open programming tools that allow high-level work with very complex concepts, open source software packages for running data management infrastructures, cloud tools that provide access to almost unlimited computing power without the need to manage infrastructure, and even free training given by some of the best specialists in the world.

All these factors make it possible to capture data on an unprecedented scale and to store and process it at acceptable costs, allowing us to solve old problems with new approaches. Artificial intelligence is also available to many more people, whose collaboration in an increasingly connected world is giving rise to innovation that advances ever faster in all areas: transport, medicine, services, manufacturing, etc.

Not without reason, data scientist has been called the sexiest job of the 21st century.

Content prepared by Jose Luis Marín, Head of Corporate Technology Strategy at MADISON MK and CEO of Euroalert.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

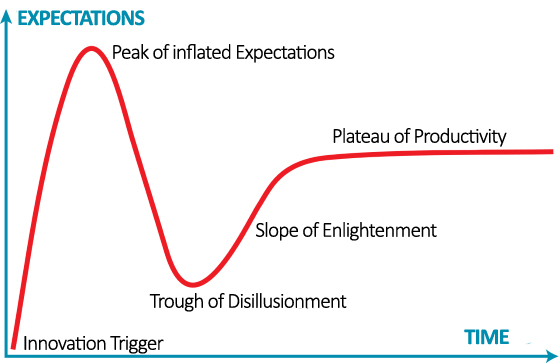

Not all press headlines on Artificial Intelligence are true, but do not make the mistake of underestimating the changes that the development of AI will bring in the coming years.

Artificial intelligence has not yet changed our lives, but we are living through a cycle of overexposure regarding its short-term results and applications. The non-specialized media and the marketing departments of companies and other organizations foster a climate of excessive optimism about what AI has actually achieved so far.

Undoubtedly, we can all ask our smartphone some simple questions and get reasonable answers. When we shop online (for example on Amazon.com), we receive personalized product recommendations based on our profile as buyers. We can search for events in our digital photo library hosted on an online service such as Google Photos: just by typing "mum's birthday", we get a list of photos of that day, with relatively high precision, in seconds. However, the time has not yet come for AI to transform our daily experience when we consume digital or physical products and services. So far, our doctor does not use AI to support a diagnosis, our lawyer does not use it to prepare an appeal, and our financial advisor does not use it to invest our money better.

The news floods us with photorealistic infographics of a world full of autonomous cars and virtual assistants we talk to as if they were people. The risk of such inflated short-term expectations is that, in the development of any disruptive and exponential technology, the technology inevitably fails to meet some of them. This leads to disappointment, which in turn reduces investment by companies and governments and slows down the development of the technology. AI has not been immune to this process and has already gone through two such slowdowns, known historically as the "winters of artificial intelligence". The first AI winter took place in the early 1970s. AI as we know it today was born in the 1950s, and by the end of the 1960s optimism was running high: in 1967 Marvin Minsky went as far as to assert that "... in the course of a generation... the problem of creating an artificial intelligence will be practically solved...". Only three years later, Minsky himself said: "... in three to eight years we will have achieved a machine with an artificial intelligence superior to human beings ..."

Today, no expert would dare to say when (if ever) this could happen. The second AI winter arrived in the early 1990s, after a period of hype around what were then known as "expert systems". Expert systems are computer programs containing logical rules that encode and parameterize the operation of simple systems. For example, a program that encodes the rules of chess belongs to the type of programs we know as expert systems. Encoding fixed logical rules to simulate the behavior of a system is what is known as symbolic artificial intelligence, and it was the first type of artificial intelligence to be developed.

Between 1980 and 1985, companies invested more than one billion US dollars each year in the development of these systems. After this period, these systems proved to be extremely expensive to maintain, did not scale, and delivered poor results for the investment they required. The history of AI development from its beginnings in the 1950s to the present makes for highly recommended and exciting reading.
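To make the idea of symbolic AI more concrete, here is a minimal sketch of how an expert system applies hand-written rules. It assumes a simple forward-chaining strategy (repeatedly firing every IF-THEN rule whose conditions hold) and uses invented, hypothetical rules and fact names; it is not modelled on any particular historical system. Every piece of knowledge has to be written and maintained by a person, which hints at why these systems were so expensive to maintain and hard to scale.

```python
# Minimal sketch of a rule-based ("expert") system using forward chaining.
# The rules and fact names below are hypothetical examples, not from a real system.

Rule = tuple[frozenset[str], str]  # (conditions that must all hold, conclusion)

RULES: list[Rule] = [
    (frozenset({"vibration_high", "temperature_high"}), "bearing_wear"),
    (frozenset({"bearing_wear", "over_hours_limit"}), "schedule_maintenance"),
]


def forward_chain(facts: set[str], rules: list[Rule]) -> set[str]:
    """Fire every rule whose conditions are satisfied until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires only if all of its conditions are already known facts.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


if __name__ == "__main__":
    derived = forward_chain({"vibration_high", "temperature_high", "over_hours_limit"}, RULES)
    print(sorted(derived))
```

Adding a new situation means writing and testing a new rule by hand, which is exactly the maintenance burden described above.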

What has artificial intelligence achieved so far?

Although we have said that we must be cautious about expectations for short-term AI applications, we should point out the main achievements of artificial intelligence from a strictly rigorous point of view. To do so, we rely on the book Deep Learning with R (Manning, Shelter Island, 2018) by François Chollet with J. J. Allaire.

- Classification of images at an almost-human level.

- Recognition of spoken language at an almost-human level.

- Transcription of handwritten text at an almost-human level.

- Substantial improvement in text-to-speech conversion.

- Substantial improvement in machine translation.

- Autonomous driving at an almost-human level.

- Ability to answer questions in natural language.

- Game-playing systems (chess or Go) that far exceed human capabilities.

After this brief introduction to the current historical moment of AI, we are in a position to define, somewhat more formally, what artificial intelligence is and which fields of science and technology are directly affected by its development.

Artificial intelligence could be defined as the field of science that studies the possibility of automating intellectual tasks normally performed by humans. Within the scientific domain of AI, two sub-fields of computer science and mathematics stand out: Machine Learning and, within it, Deep Learning. This relationship is represented in Figure 1.

Figure 1. Artificial Intelligence and its sub-fields Machine Learning and Deep Learning.

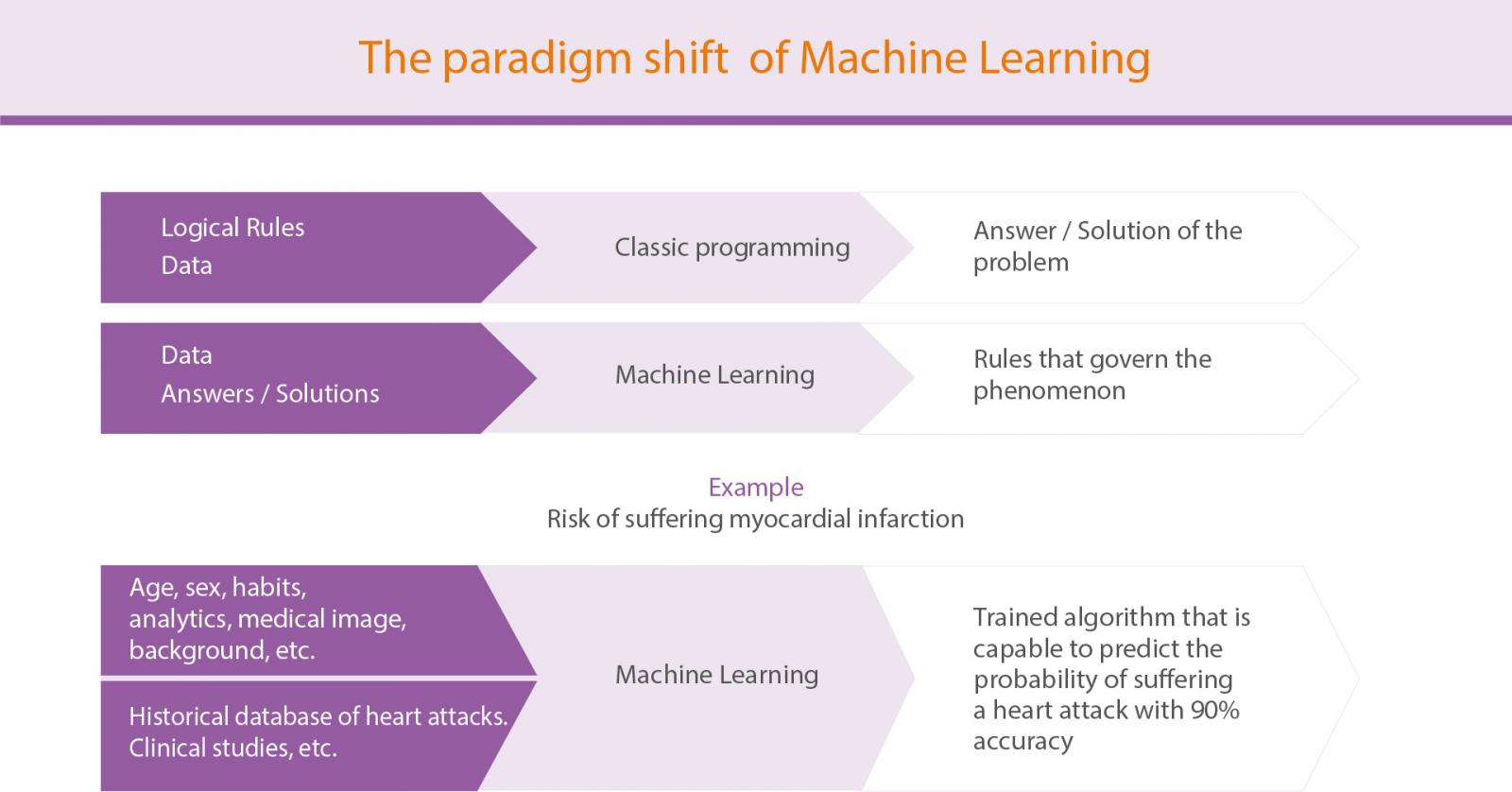

We will not go into the technical differences between machine learning and deep learning here (you can learn more about these concepts in this post). Perhaps the most interesting thing is to understand the paradigm shift from the early development of AI, based on logical rules, to today's approach, in which machines discover the rules that govern a certain process (for example, the risk of suffering a myocardial infarction) from input data and previous experiences that have been recorded and analysed by an algorithm. Figure 2 represents this paradigm shift between the classical programming of logical rules and the machine learning approach.

Figure 2. The paradigm shift to Machine Learning from classical programming.
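The paradigm shift in Figure 2 can be illustrated with a deliberately tiny, hypothetical sketch. In classical programming a person writes the rule (here, a hand-picked threshold on a single measurement); in machine learning the program receives examples together with their answers and derives the rule itself. The learner below is a one-feature "decision stump" that tries each observed value as a threshold and keeps the one that classifies the most examples correctly. The feature, the threshold of 50 and the data are invented for illustration and do not represent a real clinical model.

```python
# Classical programming vs. machine learning, in miniature.
# The single numeric feature, the hand-picked threshold and the data are invented.


def classic_rule(value: float) -> bool:
    """Classical programming: a person writes the rule by hand."""
    return value > 50.0


def learn_threshold(values: list[float], labels: list[bool]) -> float:
    """Machine learning in its simplest form: given examples *and* their answers,
    pick the threshold (among the observed values) that classifies the most
    examples correctly. This is a one-feature decision stump."""
    best_threshold, best_correct = values[0], -1
    for candidate in sorted(set(values)):
        correct = sum((v > candidate) == y for v, y in zip(values, labels))
        if correct > best_correct:
            best_threshold, best_correct = candidate, correct
    return best_threshold


if __name__ == "__main__":
    # Hypothetical recorded cases: a measurement and whether the event occurred.
    values = [20.0, 35.0, 42.0, 58.0, 63.0, 71.0]
    labels = [False, False, False, True, True, True]
    learned = learn_threshold(values, labels)
    print(f"hand-written threshold: 50.0, learned threshold: {learned}")
    print(classic_rule(45.0), 45.0 > learned)  # the two rules can disagree
```

Real models learn many parameters from many features at once, but the division of labour is the same: humans supply data and answers, and the algorithm supplies the rule.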

The rapid development of AI today is the result of multiple factors. However, experts broadly agree that two of them have been, and remain, key drivers of this third wave of artificial intelligence development: the reduction in computing costs and the explosion of available data thanks to the Internet and connected devices.

Just as the Internet has had, and will continue to have, an impact on all areas of our lives (from the end consumer to the processes and operating models of the most traditional companies and industries), AI will radically change the way humans use our capacities for the development of our species. There is a lot of news about the negative impact that AI will have on jobs over the next 10-20 years. While there is no doubt that the automation of certain processes will eliminate some jobs that still exist today, it is equally true that the first jobs to disappear will be precisely those that make us "less human". Jobs that employ people as machines, repeating the same actions one after another, will be taken over by agents equipped with AI, either in the form of software or in the form of robots.

Iterative and predictable tasks will fall within the exclusive domain of machines. Conversely, tasks that require leadership, empathy, creativity and value judgments will continue to belong to humans alone. So, is that all? Is there nothing left for us to do but wait for machines to do their part of the work while we do ours? The answer is no. In reality, there is a large space between these two extremes.

This is the space in which humans are amplified by AI and, in return, AI is trained by humans, feeding back this intermediate cycle. Paul R. Daugherty and H. James Wilson call this space "the missing middle" in their book Human + Machine. In this intermediate space, AI-enabled machines increase human capabilities: they make us stronger with exoskeletons capable of lifting heavy loads or restore our ability to walk after paralysis; they make us less vulnerable by working in potentially harmful environments such as space, the bottom of the sea or a pipeline filled with deadly gases; and they increase our sensory capabilities in real time with glasses that carry cameras and sensors, complementing our field of vision with overlaid textual information.

With the help of machines equipped with improved AI, it is not difficult to imagine how processes and maintenance tasks will change in a factory, how our fields will be cultivated differently, how the warehouses of the future will work, and how efficient our self-managed homes will be.

In conclusion, let's stop seeing machines and AI as rivals competing for our jobs, and start thinking about how we will combine our most human skills with the superior resistance, speed and precision of machines.

Let's do different things now to do different things in the future.

Content prepared by Alejandro Alija, expert in Digital Transformation and innovation.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

The commercial adoption of any new technology and, therefore, its incorporation into the business value chain follows a cycle that can be modelled in different ways. One of the best known models is the Gartner hype cycle. With regard to artificial intelligence and data science, the current discussion focuses on whether the peak of inflated expectations has already been reached or whether, on the contrary, the promises of new and revolutionary innovations will keep growing.

As we move along this cycle, it is common to see new technological advances (new algorithms, in the case of Artificial Intelligence) or a better understanding of their potential commercial applications (new products, or products with better prices or features). And, of course, the more industries and sectors are affected, the higher the expectations.

However, new discoveries do not remain only at the technological level; they usually also deepen the study and understanding of the economic, social, legal or ethical impact of the innovations reaching the market. For any business, it is essential to detect and understand as early as possible the impact that a new technology will have on its value chain. This way, the company can incorporate the technology into its capabilities before its competitors and generate competitive advantages.

One of the most interesting theses recently published to model and understand the economic impact of Artificial Intelligence is the one proposed by Professor Ajay Agrawal with Joshua Gans and Avi Goldfarb in their book "Prediction Machines: The Simple Economics of Artificial Intelligence". The premise is very simple: from the outset, it establishes that the purpose of artificial intelligence, from a purely economic point of view, is to reduce the cost of predictions.

When the cost of a raw material or technology falls, industry usually increases its use, first applying the technology to the products or services it was designed for and later to other products or services that used to be produced in other ways. Sometimes it even affects the value of substitute products (which falls) and complementary products (which rises), or other elements of the value chain.

Although these technologies are very complex, the authors were able to establish a surprisingly simple economic framework for understanding AI. Let's look at a concrete case, familiar to all of us, in which increasing the accuracy of predictions, taken to the extreme, could mean not only automating a series of tasks but also completely changing the rules of a business.

As we all know, Amazon uses Artificial Intelligence in its purchase recommendation system, which suggests new products. As the authors mention in their book, the accuracy of this system is around 5%. This means that users buy 1 out of every 20 products that Amazon suggests, which is not bad.

If Amazon were able to increase the accuracy of these predictions to, say, 20%, that is, if users bought 1 out of every 5 suggested products, its profits would increase enormously and the value of the company would skyrocket even further. But if we imagine a system with 90% precision in its purchase predictions, Amazon could consider radically changing its business model and sending us products before we decide to buy them, because we would only return 1 out of every 10. AI would not just automate tasks or improve our shopping experience; it would also radically change the way we understand the retail industry.
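The arithmetic behind these figures is the reciprocal of a rate: an outcome with probability p occurs, on average, once every 1/p cases. The short sketch below checks the three scenarios from the text using exact fractions. The 20% and 90% values are the hypothetical scenarios described above, and treating the 10% of wrong predictions as returns assumes that every unwanted product is sent back.

```python
from fractions import Fraction


def one_in_every(rate: Fraction) -> Fraction:
    """An outcome with probability `rate` happens on average once every 1/rate cases."""
    if rate <= 0:
        raise ValueError("rate must be positive")
    return 1 / rate


if __name__ == "__main__":
    print(one_in_every(Fraction(5, 100)))       # 20: one purchase per 20 suggestions
    print(one_in_every(Fraction(20, 100)))      # 5: one purchase per 5 suggestions
    print(one_in_every(1 - Fraction(90, 100)))  # 10: one return per 10 products sent
```

Using `Fraction` rather than floats keeps the results exact, so 5% maps to exactly 20 rather than a value like 19.999999999999996.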

Given that the main substitute for AI predictions are human predictions, it seems clear that our value as a predictive tool will continue decreasing. The advance of the wave of automations based on artificial intelligence and data science already allows us to see the beginning of this trend.

Conversely, company data would become an increasingly valuable asset, since they are the main complementary product needed to generate accurate predictions. Likewise, the public data needed to enrich company data, and thus enable new use cases, would also increase in value.

Following this line of reasoning, we could even try to establish metrics to measure the value of public data wherever they are used. We would only have to answer this question: how much does the accuracy of a given prediction improve if we enrich the training data with a specific open dataset? These improvements would have a concrete value that could give us an idea of the economic value of a public dataset in a specific scenario.
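One possible way to put this question into practice is sketched below, assuming we already have predictions from two versions of the same model evaluated on the same held-out cases: one trained only on company data and one also trained with an open dataset. The accuracy lift is the difference in accuracy between them. Translating that lift into money requires a business-specific figure, represented here by a hypothetical `value_per_point` parameter; the example data are invented.

```python
def accuracy(predictions: list[bool], actual: list[bool]) -> float:
    """Fraction of cases where the prediction matches the observed outcome."""
    if not actual or len(predictions) != len(actual):
        raise ValueError("predictions and actual must be non-empty and the same length")
    return sum(p == a for p, a in zip(predictions, actual)) / len(actual)


def accuracy_lift(baseline: list[bool], enriched: list[bool], actual: list[bool]) -> float:
    """Improvement in accuracy attributable to enriching the training data."""
    return accuracy(enriched, actual) - accuracy(baseline, actual)


def estimated_value(lift: float, value_per_point: float) -> float:
    """Hypothetical conversion: value_per_point is what one percentage point
    of accuracy is worth to the business in this specific scenario."""
    return lift * 100 * value_per_point


if __name__ == "__main__":
    # Invented held-out outcomes and predictions from the two model versions.
    actual = [True, False, True, True, False]
    baseline = [True, True, False, True, False]  # company data only
    enriched = [True, False, True, True, True]   # company data + open dataset
    lift = accuracy_lift(baseline, enriched, actual)
    print(f"lift: {lift:.0%}")
    print(f"estimated value: {estimated_value(lift, value_per_point=1000.0):.2f}")
```

In a real evaluation both models would be compared on a large, representative test set, since a lift measured on a handful of cases says little about the value of the dataset.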

The repeated mantra "data is the new oil" is changing from a political or marketing statement into one supported by economic science, because data are the necessary and indispensable raw material for making good and valuable predictions. And it seems clear that, as the cost of predictions continues to fall, the value of data should increase. Simple economics.

Content prepared by Jose Luis Marín, Head of Corporate Technology Strategy at MADISON MK and Euroalert CEO.

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

New technologies are changing the world we live in. Society changes, the economy changes and, with them, jobs change. The implementation of technologies such as Artificial Intelligence, Big Data or the Internet of Things is driving demand for new professional profiles that we could not even conceive of a decade ago. In addition, the possibility of automating tasks currently performed by humans, executing them more quickly and efficiently, leads some professionals to fear that their job could be in danger. Responding to this situation is one of the big challenges we have to overcome.

According to the report It's Learning. Just Not As We Know It: How to accelerate skills acquisition in the age of intelligent technologies, produced by the G20 Young Entrepreneurs' Alliance and Accenture, if skill-building doesn't keep up with the rate of technological progress, the G20 economies could lose up to US$11.5 trillion in cumulative GDP growth over the next ten years.

But this change is not simple. It is not correct to assume merely that intelligent technologies will eliminate some jobs and create new ones. In fact, the biggest effect will be the evolution of traditional roles. According to the study, 90% of each worker's time will be affected by new technologies. Averaged across all sectors, 38% of worker time is currently devoted to tasks that will be automated, while 51% goes to activities that can be improved (or augmented) using new technologies that help increase our skills. In short, the solution is not just to train more engineers or data analysts, since even these profiles will have to evolve to adapt to a future that is closer than it seems.

To understand how this change will affect different professional profiles, the report analyses the tasks and skills required for current jobs and determines how they will evolve. To facilitate the analysis, occupations have been grouped into 10 role clusters. The following table shows the results of the study:

| Role cluster | Typical activities | Illustrative occupations | Illustrative task evolution |
|---|---|---|---|
| Management & Leadership | Supervises and takes decisions | Corporate managers and education administrators | Marketing managers handle data and take decisions based on social media and web metrics |
| Empathy & Support | Provides expert support and guidance | Psychiatrists and nurses | Nurses can focus more on patient care rather than administration and form filling |
| Science & Engineering | Conducts deep technical analyses | Chemical engineers and computer programmers | Researchers focus on sharing, explaining and applying their work, rather than being trapped in labs |
| Process & Analysis | Processes and analyzes information | Auditors and clerks | Accountants can ensure quality control rather than crunch data |
| Analytical Subject-Matter Expertise | Examines and applies experience of complex systems | Air traffic controllers and forensic science technicians | Information security analysts can widen and deepen searches, supported by AI-powered simulations |
| Relational Subject-Matter Expertise | Applies expertise in environments that demand human interaction | Medical team workers and interpreters | Ambulance dispatchers can focus on accurate assessment and support, rather than logistical details |
| Technical Equipment Maintenance | Installs and maintains equipment and machinery | Mechanics and maintenance workers | Machinery mechanics work with data to predict failure and perform preventative repairs |
| Machine Operation & Manoeuvring | Operates machinery and drives vehicles | Truck drivers and crane operators | Tractor operators can ensure data-guided, accurate and tailored treatment of crops, whilst “driving” |
| Physical Manual Labor | Performs strenuous physical tasks in specific environments | Construction and landscaping workers | Construction workers reduce re-work as technology predicts the location and nature of physical obstacles |
| Physical Services | Performs services that demand physical activity | Hairdressers and cooks | Transport attendants can focus on customer needs and service rather than technical tasks |

The results show how some skills, such as administrative management, will decline in importance. However, for almost every single role described in the previous table, a combination of complex reasoning, creativity, socio-emotional intelligence and sensory perception skills will be necessary.

The problem is that these types of skills are acquired through experience. Current education and learning systems, both formal and corporate, are not designed to address this revolution, so they will also need to evolve. To facilitate this transition, the report provides a series of recommendations:

- Speed up experiential learning: Teaching has traditionally been based on a passive model, in which knowledge is absorbed by listening or reading. However, experiential learning, that is, learning through the practical application of knowledge, is becoming increasingly powerful. Airplane pilots, for example, learn through flight simulation programs. New technologies, such as augmented reality or artificial intelligence, help make these experience-based solutions more personalized and accessible, covering a greater number of sectors and job positions.

- Shift focus from institutions to individuals: Within a work team it is common to find workers with different capacities and abilities who complement each other but, as we have seen, it is also necessary to put more emphasis on broadening the range of skills of each individual worker, including new skills such as creativity and socio-emotional intelligence. The current system does not encourage learning in these areas, so metrics and incentives must be designed to promote a mix of skills in each person.

- Empower vulnerable learners: Learning must be accessible to all employees in order to close the current skills gap. According to the study, the workers most vulnerable to technological change are generally the least qualified, because their jobs tend to be easier to automate. However, they also tend to receive the least training from their companies, something that must change. Other groups that need attention are older workers and those in small companies with fewer resources. An increasing number of companies are using modular, free MOOC courses to give the entire workforce equal access to new skills. In addition, some governments, such as those of France and Singapore, are providing financial support for training.

In short, we are at a moment of change. It is necessary to stop and reflect on how our work environment will change in order to adapt to it, acquiring new skills that provide us with competitive advantages in our professional future.

In the policies promoted by the European Union, an intimate connection between artificial intelligence and open data has been recognized. In this regard, as we have highlighted, open data is essential for the proper functioning of artificial intelligence, since algorithms must be fed with data whose quality and availability are essential for their continuous improvement, as well as for auditing their correct operation.

Artificial intelligence entails an increase in the sophistication of data processing, since it requires greater precision, up-to-dateness and quality, which, in turn, must be obtained from very diverse sources to improve the quality of the algorithms' results. An added difficulty is that processing is carried out automatically and must deliver precise answers immediately to cope with changing circumstances. A dynamic perspective is therefore needed, one that justifies the need for data to be offered not only in open and machine-readable formats, but also with the highest levels of precision and disaggregation.

This requirement is especially important with regard to the accessibility of data generated by the public sector, undoubtedly one of the main sources for algorithms, owing both to the large number of available datasets and to the special interest of their subject matter, especially public services. In this regard, apart from the need to overcome the shortcomings of the current legal framework regarding the limited scope of the obligations imposed on public entities, it is worth assessing to what extent the legal conditions under which data are offered serve to streamline the development of applications based on artificial intelligence.

Thus, first of all, article 5.3 of the Law states categorically that "public sector administrations and organizations may not be required to maintain the production and storage of a certain type of document focused on its reuse". Given this legal provision, these entities can rely on the absence of any obligation to guarantee the supply of data indefinitely. They can also rely on the limitation of liability contemplated by some provisions, which state that the data are used under the responsibility and at the risk of users or reuse agents, or even exempt the entities from liability for any error or omission caused by inaccuracies in the data themselves. However, the effective scope of this interpretation in each specific case must be weighed against the demanding European regulation on the scope of the obligations and the protection channels, in particular after the reform that took place in 2013.

Beyond an approach based on strict regulatory compliance and a restrictive interpretation, the truth is that meeting the unique demands of artificial intelligence requires a proactive approach to public sector open data policies. In this sense, the interaction between public and private parties in contexts of systematic data measurement and collection, continuously updated through generalized connections (as is the case with smart city initiatives), confronts us with a technological scenario in which active contract management policies become especially important for overcoming the legal barriers and difficulties to opening data. In fact, municipal public services are often provided by private parties that fall outside the reuse regulations and, in addition, data are not always obtained from services or objects managed by public entities, despite the general interest underlying areas such as electricity supply, telephony and electronic communications services, or even financial services.

For this reason, the initiative launched by the European Union in 2017 is particularly important from the perspective of artificial intelligence, since it aims to overcome a large part of the legal restrictions currently in place on data opening. Along the same lines, the Spanish R&D Strategy in Artificial Intelligence, recently presented by the Ministry of Science, Innovation and Universities, considers as one of its priorities the development of a digital data ecosystem, whose measures include guaranteeing optimal use of open data and creating a National Data Institute in charge of governing the data coming from the different levels of Public Administration. Likewise, in line with the European initiative mentioned above, among other measures it points to the need to extend data-sharing obligations to certain private entities and to scientific data, which would undoubtedly have a relevant impact on the better functioning of algorithms.

The technological singularity that Artificial Intelligence represents undoubtedly requires an adequate ethical and legal framework to face the challenges it entails. The new Directive on open data and the reuse of public sector information, recently approved by the European Parliament, will give a strong impulse to artificial intelligence, as it will expand both the obligated parties and the types of data that must be made available. This is undoubtedly a highly relevant measure, which will be followed by many others within the framework of the European Union's strategy on Artificial Intelligence, one of whose main premises is to ensure an adequate regulatory framework that facilitates technological innovation based on respect for fundamental rights and ethical principles.

Content prepared by Julián Valero, professor at the University of Murcia and Coordinator of the Research Group "Innovation, Law and Technology" (iDerTec).

Contents and points of view expressed in this publication are the exclusive responsibility of its author.

Virtual assistants, purchase prediction algorithms or fraud detection systems. We all interact every day with Artificial Intelligence technologies.

Although there is still a lot of development ahead, the current impact of Artificial Intelligence on our lives cannot be denied. When we talk about Artificial Intelligence (or AI) we do not mean humanoid-looking robots that think like us, but rather a succession of algorithms that help us extract value from large volumes of data in an agile and efficient way, facilitating automatic decision making. These algorithms need to be trained with quality data so that their behaviour adapts to the rules of our social context.

Currently, Artificial Intelligence has a high impact on the business value chain, and affects many of the decisions taken not only by companies but also by individuals. Therefore, it is essential that the data they use are not biased and respect human rights and democratic values.

The European Union and the governments of different countries are promoting policies in this regard. To help them in this process, the OECD has developed a series of minimum principles that AI systems should comply with. These principles are practical and flexible standards designed to stand the test of time in a constantly evolving field. They are not legally binding, but they seek to influence international standards and to serve as a basis for national laws.

The OECD principles on Artificial Intelligence are based on recommendations developed by a working group of 50 AI experts, including representatives of governments and the business community, as well as civil society, academia and the scientific community. These recommendations were adopted on May 22, 2019 by OECD member countries.

These recommendations identify five complementary values-based principles for the responsible stewardship of Artificial Intelligence:

- AI should benefit people and the planet by driving inclusive growth, sustainable development and well-being.

- AI systems should be designed in a way that respects the rule of law, human rights, democratic values and diversity, and they should include appropriate safeguards – for example, enabling human intervention where necessary – to ensure a fair and just society.

- There should be transparency and responsible disclosure around AI systems to ensure that people understand AI-based outcomes and can challenge them.

- AI systems must function in a robust, secure and safe way throughout their life cycles and potential risks should be continually assessed and managed.

- Organisations and individuals developing, deploying or operating AI systems should be held accountable for their proper functioning in line with the above principles.

Consistent with these principles, the OECD also provides five recommendations to governments:

- Facilitate public and private investment in research & development to spur innovation in trustworthy AI.

- Foster accessible AI ecosystems with digital infrastructure and technologies and mechanisms to share data and knowledge.

- Ensure a policy environment that will open the way to deployment of trustworthy AI systems.

- Empower people with the skills for AI and support workers for a fair transition.

- Co-operate across borders and sectors to progress on responsible stewardship of trustworthy AI.

These recommendations are a first step towards responsible Artificial Intelligence. Among its next steps, the OECD plans to develop the AI Policy Observatory, which will provide guidance on metrics, policies and good practices to help implement the principles above, something fundamental if we want to move from theory to practice.

Governments can take these recommendations as a basis for their own policies, which will promote consistency among Artificial Intelligence systems and help ensure that their behaviour respects the basic principles of coexistence.