The transfer of human knowledge to machine learning models is the basis of all current artificial intelligence. If we want AI models to be able to solve tasks, we first have to encode and transmit solved tasks to them in a formal language that they can process. By a solved task we mean information encoded in different formats, such as text, image, audio or video. In the case of language processing, to achieve systems with high linguistic competence that can communicate with us fluently, we need to transfer to these systems as many human text productions as possible. We call these datasets the corpus.

Corpus: text datasets

When we talk about corpora (its Latin plural) or datasets that have been used to train Large Language Models (LLMs) such as GPT-4, we are talking about books of all kinds, content written on websites, large repositories of text and information in the world such as Wikipedia, but also less formal linguistic productions such as those we write on social networks, in public reviews of products or services, or even in emails. This variety allows these language models to process and handle text in different languages, registers and styles.

For people working in Natural Language Processing (NLP), data science and data engineering, there are great enablers like Kaggle or repositories like Awesome Public Datasets on GitHub, which provide direct access to download public datasets. Some of these data files have been prepared for processing and are ready for analysis, while others are unstructured and require cleaning and sorting before they can be worked with. Although they also contain quantitative numerical data, many of these sources offer textual data that can be used to train language models.

The problem of legitimacy

One of the complications we have encountered in creating these models is that text data that is published on the internet and has been collected via API (direct connections that allow mass downloading from a website or repository) or other techniques is not always in the public domain. In many cases, it is copyrighted: writers, translators, journalists, content creators, scriptwriters, illustrators, designers and also musicians claim licensing fees from the big tech companies for the use of their text and image content to train models. The media, in particular, are greatly affected by this situation, although their position varies according to their circumstances and business decisions. There is therefore a need for open corpora that can be used for these training tasks without prejudice to intellectual property.

Characteristics suitable for a training corpus

Most of the characteristics that have traditionally defined a good corpus in linguistic research have not changed now that these text datasets are used to train language models.

- It is still beneficial to use whole texts rather than fragments to ensure coherence.

- Texts must be authentic, from linguistic reality and natural language situations, retrievable and verifiable.

- It is important to ensure a wide diversity in the provenance of texts in terms of sectors of society, publications, local varieties of languages and issuers or speakers.

- In addition to general language, a wide variety of specialised language, technical terms and texts specific to different areas of knowledge should be included.

- Register is fundamental in a language, so we must cover both formal and informal register, in its extremes and intermediate regions.

- Language must be well-formed to avoid interference in learning, so it is desirable to remove code marks, numbers or symbols that correspond to digital metadata and not to the natural formation of the language.

As for specific recommendations on the formats of the files that are to form part of these corpora, text corpora with annotations should be stored in UTF-8 encoding and in JSON or CSV format, not in PDF. The preferred format for sound corpora is WAV, 16 bit, at 16 kHz (for voice) or 44.1 kHz (for music and audio). Video corpora should be compiled in MPEG-4 (MP4) format, and translation memories in TMX or CSV.
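As an illustration of the last two recommendations (removing marks that are not natural language, and storing annotated text as UTF-8 JSON), the following is a minimal Python sketch. The record fields (id, text, register, source), the function names and the simple tag-stripping pattern are assumptions made for this example, not a prescribed corpus schema or cleaning method.

```python
import json
import re

# Assumed, deliberately simple patterns: HTML-like tags and runs of whitespace.
TAG_PATTERN = re.compile(r"<[^>]+>")
WHITESPACE_PATTERN = re.compile(r"\s+")


def clean_text(raw: str) -> str:
    """Replace markup tags with spaces and collapse runs of whitespace."""
    without_tags = TAG_PATTERN.sub(" ", raw)
    return WHITESPACE_PATTERN.sub(" ", without_tags).strip()


def to_record(doc_id: str, raw: str, register: str, source: str) -> dict:
    """Build one annotated corpus record (field names are illustrative)."""
    return {
        "id": doc_id,
        "text": clean_text(raw),
        "register": register,
        "source": source,
    }


def write_corpus(records: list[dict], path: str) -> None:
    """Write the records as a single JSON document encoded in UTF-8."""
    with open(path, "w", encoding="utf-8") as handle:
        # ensure_ascii=False keeps accented characters readable in the file.
        json.dump(records, handle, ensure_ascii=False, indent=2)
```

A real pipeline would need more careful cleaning (for example, a proper HTML parser), but the storage choice is the point here: plain UTF-8 JSON can be read by any downstream tool without the extraction problems of PDF.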

The text as a collective heritage

National libraries in Europe are actively digitising their rich repositories of history and culture, ensuring public access and preservation. Institutions such as the National Library of France or the British Library are leading the way with initiatives that digitise everything from ancient manuscripts to current web publications. This digital collection effort not only protects heritage from physical deterioration, but also democratises access for researchers and the public and, for some years now, has also allowed training corpora to be compiled for artificial intelligence models.

The corpora provided officially by national libraries allow text collections to be used to create public technology available to all: a collective cultural heritage that generates a new collective heritage, this time a technological one. The gain is greatest when these institutional corpora focus on complying with intellectual property laws, providing only open data and texts free of copyright restrictions, with expired or licensed rights. This, coupled with the encouraging fact that the amount of real data needed to train language models is decreasing as technology advances, for example with the generation of synthetic data or the optimisation of certain parameters, indicates that it is possible to train large text models without infringing the intellectual property laws operating in Europe.

In particular, the Biblioteca Nacional de España is making a major digitisation effort to make its valuable text repositories available for research, and in particular for language technologies. Since the first major mass digitisation of physical collections in 2008, the BNE has opened up access to millions of documents with the sole aim of sharing and universalising knowledge. In 2023, thanks to investment from the European Union's Recovery, Transformation and Resilience funds, the BNE is promoting a new digital preservation project in its Strategic Plan 2023-2025. The plan focuses on four axes:

- the massive and systematic digitisation of collections,

- BNELab as a catalyst for innovation and data reuse in digital ecosystems,

- partnerships and new cooperation environments,

- and technological integration and sustainability.

The alignment of these four axes with new artificial intelligence and natural language processing technologies is more than obvious, as one of the main data reuses is the training of large language models. Both the digitised bibliographic records and the Library's cataloguing indexes are valuable materials for knowledge technology.

Spanish language models

In 2020, as a pioneering and relatively early initiative, Spain introduced MarIA, a language model promoted by the Secretary of State for Digitalisation and Artificial Intelligence and developed by the National Supercomputing Centre (BSC-CNS), based on the archives of the National Library of Spain. In this case, the corpus was composed of texts from web pages, which had been collected by the BNE since 2009 and which served to feed a model originally based on GPT-2.

A lot has happened between the creation of MarIA and the announcement at the 2024 Mobile World Congress of the construction of a large foundational language model, specifically trained in Spanish and the co-official languages. This system will be open source and transparent, and will only use royalty-free content in its training. This project is a pioneer at European level, as it seeks to provide an open, public and accessible language infrastructure for companies. Like MarIA, the model will be developed at the BSC-CNS, working together with the Biblioteca Nacional de España and other actors such as the Academia Española de la Lengua and the Asociación de Academias de la Lengua Española.

In addition to the institutions that can provide linguistic or bibliographic collections, there are many more institutions in Spain that can provide quality corpora that can also be used for training models in Spanish. The Study on reusable data as a language resource, published in 2019 within the framework of the Language Technologies Plan, already pointed to different sources: the patents and technical reports of the Spanish and European Patent and Trademark Office, the terminology dictionaries of the Terminology Centre, or data as elementary as the census of the National Statistics Institute, or the place names of the National Geographic Institute. When it comes to audiovisual content, which can be transcribed for reuse, we have the video archive of RTVE A la carta, the Audiovisual Archive of the Congress of Deputies or the archives of the different regional television stations. The Boletín Oficial del Estado itself and its associated materials are an important source of textual information containing extensive knowledge about our society and its functioning. Finally, in specific areas such as health or justice, we have the publications of the Spanish Agency of Medicines and Health Products, the jurisprudence texts of the CENDOJ or the recordings of court hearings of the General Council of the Judiciary.

European initiatives

In the rest of Europe there does not seem to be as clear a precedent as MarIA or the upcoming GPT-based model in Spanish: state-driven projects trained with heritage data from national libraries or official bodies.

However, in Europe there is good previous work on the availability of documentation that could now be used to train European-founded AI systems. A good example is the Europeana project, which seeks to digitise and make accessible the cultural and artistic heritage of Europe as a whole. It is a collaborative initiative that brings together contributions from thousands of museums, libraries, archives and galleries, providing free access to millions of works of art, photographs, books, music pieces and videos. Europeana has almost 25 million documents in text, which could be the basis for creating multilingual foundational models that are competent in the different European languages.

There are also non-governmental initiatives with a global impact, such as Common Corpus, which is the ultimate proof that it is possible to train language models with open data and without infringing copyright laws. Common Corpus was released in March 2024, and is the largest dataset created for training large language models, with 500 billion words from various cultural heritage initiatives. This corpus is multilingual and is the largest to date in English, French, Dutch, Spanish, German and Italian.

And finally, beyond text, it is possible to find initiatives in other formats such as audio, which can also be used to train AI models. In 2022, the National Library of Sweden provided a sound corpus of more than two million hours of recordings from local public radio, podcasts and audiobooks. The aim of the project was to generate an AI-based model of language-competent audio-to-text transcription that maximises the number of speakers to achieve a diverse and democratic dataset available to all.

Until now, the sense of collectivity and heritage has been sufficient in collecting and making data in text form available to society. With language models, this openness achieves a greater benefit: that of creating and maintaining technology that brings value to people and businesses, fed and enhanced by our own linguistic productions.

Content prepared by Carmen Torrijos, expert in AI applied to language and communication. The contents and points of view reflected in this publication are the sole responsibility of the author.

Today, 23 April, is World Book Day, an occasion to highlight the importance of reading, writing and the dissemination of knowledge. Active reading promotes the acquisition of skills and critical thinking by bringing us closer to specialised and detailed information on any subject that interests us, including the world of data.

Therefore, we would like to take this opportunity to showcase some examples of books and manuals regarding data and related technologies that can be found on the web for free.

1. Fundamentals of Data Science with R, edited by Gema Fernandez-Avilés and José María Montero (2024)

Access the book here.

- What is it about? The book guides the reader from the problem statement to the completion of the report containing the solution to the problem. It explains some thirty data science techniques in the fields of modelling, qualitative data analysis, discrimination, supervised and unsupervised machine learning, etc. It includes more than a dozen use cases in sectors as diverse as medicine, journalism, fashion and climate change, among others. All this, with a strong emphasis on ethics and the promotion of reproducibility of analyses.

- Who is it aimed at? It is aimed at users who want to get started in data science. It starts with basic questions, such as what is data science, and includes short sections with simple explanations of probability, statistical inference or sampling, for those readers unfamiliar with these issues. It also includes replicable examples for practice.

- Language: Spanish.

2. Telling Stories with Data, Rohan Alexander (2023)

Access the book here.

- What is it about? The book explains a wide range of topics related to statistical communication and data modelling and analysis. It covers the various operations from data collection, cleaning and preparation to the use of statistical models to analyse the data, with particular emphasis on the need to draw conclusions and write about the results obtained. Like the previous book, it also focuses on ethics and reproducibility of results.

- Who is it aimed at? It is ideal for students and entry-level users, equipping them with the skills to effectively conduct and communicate a data science exercise. It includes extensive code examples for replication and activities to be carried out as evaluation.

- Language: English.

3. The Big Book of Small Python Projects, Al Sweigart (2021)

Access the book here.

- What is it about? It is a collection of simple Python projects to learn how to create digital art, games, animations, numerical tools, etc. through a hands-on approach. Each of its 81 chapters independently explains a simple step-by-step project - limited to a maximum of 256 lines of code. It includes a sample run of the output of each programme, source code and customisation suggestions.

- Who is it aimed at? The book is written for two groups of people. On the one hand, those who have already learned the basics of Python, but are still not sure how to write programs on their own. On the other hand, those who are new to programming, but are adventurous, enthusiastic and want to learn as they go along. However, the same author has other resources for beginners to learn basic concepts.

- Language: English.

4. Mathematics for Machine Learning, Marc Peter Deisenroth, A. Aldo Faisal and Cheng Soon Ong (2024)

Access the book here.

- What is it about? Most books on machine learning focus on machine learning algorithms and methodologies, and assume that the reader is proficient in mathematics and statistics. This book foregrounds the mathematical foundations of the basic concepts behind machine learning.

- Who is it aimed at? The authors assume that the reader has the mathematical knowledge commonly learned in high school mathematics and physics subjects, such as derivatives and integrals or geometric vectors. Beyond that, the remaining concepts are explained in detail, but in an academic style, in order to be precise.

- Language: English.

5. Dive into Deep Learning, Aston Zhang, Zack C. Lipton, Mu Li, Alex J. Smola (2021, continually updated)

Access the book here.

- What is it about? The authors are Amazon employees who use the MXNet library to teach deep learning. The book aims to make deep learning accessible, teaching basic concepts, context and code in a practical way through examples and exercises. It is divided into three parts: introductory concepts, deep learning techniques and advanced topics focusing on real systems and applications.

- Who is it aimed at? This book is aimed at students (undergraduate and postgraduate), engineers and researchers, who are looking for a solid grasp of the practical techniques of deep learning. Each concept is explained from scratch, so no prior knowledge of deep or machine learning is required. However, knowledge of basic mathematics and programming is necessary, including linear algebra, calculus, probability and Python programming.

- Language: English.

6. Artificial intelligence and the public sector: challenges, limits and means, Eduardo Gamero and Francisco L. Lopez (2024)

Access the book here.

- What is it about? This book focuses on analysing the challenges and opportunities presented by the use of artificial intelligence in the public sector, especially when used to support decision-making. It begins by explaining what artificial intelligence is and what its applications in the public sector are, and then moves on to its legal framework, the means available for its implementation and aspects linked to organisation and governance.

- Who is it aimed at? It is a useful book for all those interested in the subject, but especially for policy makers, public workers and legal practitioners involved in the application of AI in the public sector.

- Language: Spanish.

7. A Business Analyst’s Introduction to Business Analytics, Adam Fleischhacker (2024)

Access the book here.

- What is it about? The book covers a complete business analytics workflow, including data manipulation, data visualisation, modelling business problems, translating graphical models into code and presenting results to stakeholders. The aim is to learn how to drive change within an organisation through data-driven knowledge, interpretable models and persuasive visualisations.

- Who is it aimed at? According to the author, the content is accessible to everyone, including beginners in analytical work. The book does not assume any knowledge of the programming language, but provides an introduction to R, RStudio and the "tidyverse", a series of open source packages for data science.

- Language: English.

We invite you to browse through this selection of books. We would also like to remind you that this is only a sample of the materials that you can find on the web. Do you know of any other books you would like to recommend? Let us know in the comments or email us at dinamizacion@datos.gob.es!

The era of digitalisation in which we find ourselves has filled our daily lives with data products or data-driven products. In this post we discover what they are and show you one of the key data technologies for designing and building this kind of product: GraphQL.

Introduction

Let's start at the beginning: what is a data product? A data product is a digital container (a piece of software) that includes data, metadata and certain functional logic (what is done with the data and how). The aim of such products is to facilitate users' interaction with a set of data. Some examples are:

- Sales scorecard: Online businesses have tools to track their sales performance, with graphs showing trends and rankings, to assist in decision making.

- Apps for recommendations: Streaming TV services have functionalities that show content recommendations based on the user's historical tastes.

- Mobility apps: The mobile apps of new mobility services (such as Cabify, Uber, Bolt, etc.) combine user and driver data and metadata with predictive algorithms, such as dynamic fare calculation or optimal driver assignment, in order to offer a unique user experience.

- Health apps: These applications make massive use of data captured by technological gadgets (such as the device itself, smart watches, etc.) that can be integrated with other external data such as clinical records and diagnostic tests.

- Environmental monitoring: There are apps that capture and combine data from weather forecasting services, air quality systems, real-time traffic information, etc. to issue personalised recommendations to users (e.g. the best time to schedule a training session, enjoy the outdoors or travel by car).

As we can see, data products accompany us on a daily basis, without many users even realising it. But how do you capture this vast amount of heterogeneous information from different technological systems and combine it to provide interfaces and interaction paths to the end user? This is where GraphQL positions itself as a key technology to accelerate the creation of data products, while greatly improving their flexibility and adaptability to new functionalities desired by users.

What is GraphQL?

GraphQL saw the light of day at Facebook in 2012 and was released as open source in 2015. It can be defined as a language and an interpreter of that language, so that a developer of data products can describe their product based on a model (a data structure) that makes use of the data available through APIs.

Before the advent of GraphQL, we had (and still have) REST, a technology that uses the HTTP(S) protocol to ask questions and get answers based on the data. In 2021, we published a post where we presented this technology and built a small demonstrative example of how it works. In it, we explained the REST API as the standard technology that supports access to data by computer programs. We also highlighted how REST is a technology fundamentally designed to integrate services (such as an authentication or login service).

In a simple way, we can use the following analogy. It is as if REST is the mechanism that gives us access to a complete dictionary. That is, if we need to look up any word, we have a method of accessing the dictionary, which is alphabetical search. It is a general mechanism for finding any available word in the dictionary. However, GraphQL allows us, beforehand, to create a dictionary model for our use case (known as a "data model"). So, for example, if our final application is a recipe book, what we do is select a subset of words from the dictionary that are related to recipes.

To use GraphQL, data must always be available via an API. GraphQL provides a complete and understandable description of the API data, giving clients (human or application) the possibility to request exactly what they need. As quoted in this post, GraphQL is like an API to which we add a SQL-style "Where" statement.
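To make the idea of requesting exactly what is needed more concrete, here is a minimal Python sketch that follows the recipe-book analogy. It only builds the JSON body that GraphQL servers conventionally accept over HTTP POST (a "query" string plus optional "variables"); it does not send anything. The schema behind the query (recipes, name, ingredients, maxMinutes) and the function name are hypothetical, invented for this illustration.

```python
import json

# Hypothetical query for the recipe-book analogy: the type and field names
# (recipes, name, ingredients, maxMinutes) are assumptions, not a real schema.
RECIPE_QUERY = """
query QuickRecipes($maxMinutes: Int!) {
  recipes(maxMinutes: $maxMinutes) {
    name
    ingredients
  }
}
"""


def build_graphql_request(query: str, variables: dict) -> bytes:
    """Serialise a query and its variables as a JSON body for an HTTP POST."""
    payload = {"query": query, "variables": variables}
    return json.dumps(payload).encode("utf-8")


# The client names the fields it wants (name, ingredients) and the filter
# to apply (at most 30 minutes); nothing else would be returned.
body = build_graphql_request(RECIPE_QUERY, {"maxMinutes": 30})
```

The argument on the recipes field plays the role of the SQL-style "Where" mentioned above, while the list of fields inside the braces limits the answer to the name and ingredients of each recipe.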

Below, we take a closer look at GraphQL's strengths when the focus is on the development of data products.

Benefits of using GraphQL in data products:

- With GraphQL, the amount of data and the number of queries on the APIs are considerably optimised. APIs for accessing certain data are not intended for a specific product (or use case) but serve as a general access specification (see the dictionary example above). This means that, on many occasions, in order to access a subset of the data available in an API, we have to perform several chained queries, discarding most of the information along the way. GraphQL optimises this process, as it defines a consumption model (adaptable in the future) on top of a technical API. Reducing the amount of data requested makes better use of computing resources, such as bandwidth or caches, and improves system response times.

- This has an immediate effect on the standardisation of data access. The model defined with GraphQL creates a data consumption standard for a family of use cases. For example, in the context of a social network, if we want to identify connections between people, we are not interested in a general mechanism for accessing everyone in the network, but in one that lets us pick out the people with whom we have some kind of connection. This kind of data access filter can be pre-configured with GraphQL.

- Improved security and performance: By precisely defining queries and limiting access to sensitive data, GraphQL can contribute to a more secure and better performing application.
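The effect described in these three points can be imitated in a few lines of plain Python. The sketch below is not GraphQL itself nor any library's API: it is a hypothetical helper, select_fields, that trims a full record down to the shape a consumer asks for, using the social network example. The record, its field names and the selection format are all assumptions made for illustration.

```python
def select_fields(record: dict, selection: dict) -> dict:
    """Return only the parts of `record` named in `selection`.

    `selection` maps a field name to True (keep the value as is) or to a
    nested selection dict (recurse into a nested record or list of records).
    """
    result = {}
    for field, sub_selection in selection.items():
        value = record[field]
        if sub_selection is True:
            result[field] = value
        elif isinstance(value, list):
            result[field] = [select_fields(item, sub_selection) for item in value]
        else:
            result[field] = select_fields(value, sub_selection)
    return result


# Hypothetical full record, as a general-purpose API might return it.
person = {
    "id": 1,
    "name": "Ana",
    "email": "ana@example.org",
    "connections": [
        {"id": 2, "name": "Luis", "email": "luis@example.org"},
        {"id": 3, "name": "Marta", "email": "marta@example.org"},
    ],
}

# The consumer asks only for the names of the person and their connections.
wanted = {"name": True, "connections": {"name": True}}
trimmed = select_fields(person, wanted)
```

Here trimmed contains the names only: less data travels to the client, every consumer of this use case sees the same shape, and the email addresses never leave the server side of the sketch.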

Thanks to these advantages, the use of this language represents a significant evolution in the way of interacting with data in web and mobile applications, offering clear advantages over more traditional approaches such as REST.

Generative Artificial Intelligence. A new superhero in town.

If the use of GraphQL language to access data in a much more efficient and standard way is a significant evolution for data products, what will happen if we can interact with our product in natural language? This is now possible thanks to the explosive evolution in the last 24 months of LLMs (Large Language Models) and generative AI.

The following image shows the conceptual scheme of a data product integrated with LLMs: a digital container that includes data, metadata and logical functions that are expressed as functionalities for the user, together with the latest technologies to expose information in a flexible way, such as GraphQL and conversational interfaces built on top of Large Language Models (LLMs).

How can data products benefit from the combination of GraphQL and the use of LLMs?

- Improved user experience. By integrating LLMs, people can ask questions to data products using natural language. This represents a significant change in how we interact with data, making the process more accessible and less technical. In practical terms, we will replace clicks with phrases when ordering a taxi.

- Security improvements along the interaction chain in the use of a data product. For this interaction to be possible, a mechanism is needed that effectively connects the backend (where the data resides) with the frontend (where the questions are asked). GraphQL is presented as the ideal solution due to its flexibility and ability to adapt to the changing needs of users, offering a direct and secure link between data and questions asked in natural language. That is, GraphQL can pre-select the data to be displayed in a query, thus preventing a general query from exposing private or unnecessary data to a particular application.

- Empowering queries with artificial intelligence: Artificial intelligence does not only play a role in natural language interaction with the user. One can think of scenarios where the model defined with GraphQL is itself built with the help of artificial intelligence. This would enrich interactions with data products, allowing a deeper understanding and richer exploration of the information available. For example, we can ask a generative AI (such as ChatGPT) to take catalogue data that is exposed as an API and create a GraphQL model and endpoint for us.

In short, the combination of GraphQL and LLMs represents a real evolution in the way we access data. GraphQL's integration with LLMs points to a future where access to data can be both accurate and intuitive, marking a move towards more integrated information systems that are accessible to all and highly reconfigurable for different use cases. This approach opens the door to a more human and natural interaction with information technologies, aligning artificial intelligence with our everyday experience of using data products.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and points of view reflected in this publication are the sole responsibility of its author.

The process of technological modernisation in the Administration of Justice in Spain began, to a large extent, in 2011. That year, the first regulation specifically aimed at promoting the use of information and communication technologies was approved. The aim of this regulation was to establish the conditions for recognising the validity of the use of electronic means in judicial proceedings and, above all, to provide legal certainty for procedural processing and acts of communication, including the filing of pleadings and the receipt of notifications of decisions. In this sense, the legislation established a basic legal status for those dealing with the administration of justice, especially for professionals. Likewise, the Internet presence of the Administration of Justice was given legal status, mainly with the appearance of electronic offices and access points, expressly admitting the possibility that the proceedings could be carried out in an automated manner.

However, as with the 2015 legal regulation of the common administrative procedure and the legal regime of the public sector, the management model it was inspired by was substantially oriented towards the generation, preservation and archiving of documents and records. Although a timid consideration of data was already apparent, it was largely too general in the scope of the regulation, as it was limited to recognising and ensuring security, interoperability and confidentiality.

In this context, the approval of Royal Decree-Law 6/2023 of 19 December has been a very important milestone in this process, as it incorporates important measures that aim to go beyond mere technological modernisation. Among other issues, it seeks to lay the foundations for an effective digital transformation in this area.

Towards a data-driven management orientation

Although this new regulatory framework largely consolidates and updates the previous regulation, it is an important step forward in facilitating the digital transformation as it establishes some essential premises without which it would be impossible to achieve this objective. Specifically, as stated in the Explanatory Memorandum:

From the understanding of the capital importance of data in a contemporary digital society, a clear and decisive commitment is made to its rational use in order to achieve evidence and certainty at the service of the planning and elaboration of strategies that contribute to a better and more effective public policy of Justice. [...] These data will not only benefit the Administration itself, but all citizens through the incorporation of the concept of "open data" in the Administration of Justice. This same data orientation will facilitate so-called automated, assisted and proactive actions.

In this sense, a general principle of data orientation is expressly recognised, thus overcoming the restrictions of a document- and file-based electronic management model as it has existed until now. This is intended not only to achieve objectives of improving procedural processing but also to facilitate its use for other purposes such as the development of dashboards, the generation of automated, assisted and proactive actions, the use of artificial intelligence systems and its publication in open data portals.

How has this principle been put into practice?

The main novelties of this regulatory framework from the perspective of the data orientation principle are the following:

- As a general rule, IT and communication systems shall allow for the exchange of information in structured data format, facilitating their automation and integration into the judicial file. To this end, the implementation of a data interoperability platform is envisaged, which will have to be compatible with the Data Intermediation Platform of the General State Administration.

- Data interoperability between judicial and prosecutorial bodies and data portals are set up as e-services of the administration of justice. The specific technical conditions for the provision of such services are to be defined through the State Technical Committee for e-Judicial Administration (CTEAJE).

- In order, among other objectives, to facilitate the promotion of artificial intelligence, the implementation of automated, assisted and proactive activities, as well as the publication of information in open data portals, a requirement is established for all information and communication systems to ensure that the management of information incorporates metadata and is based on common and interoperable data models. With regard to communications in particular, data orientation is also reflected in the electronic channels used for communications.

- In contrast to the common administrative procedure, the legal definition of the court file incorporates an explicit reference to data as one of its basic units.

- A specific regulation is included for the so-called Justice Administration Data Portal, so that the current data access tool in this area is legally enshrined for the first time. Specifically, in addition to establishing certain minimum contents and assigning competences to various bodies, it envisages the creation of a specific section on open data, as well as a mandate to the competent administrations to make them automatically processable and interoperable with the state open data portal. In this respect, the general regulations already existing for the rest of the public sector are declared applicable, without prejudice to the singularities that may be specifically contemplated in the procedural regulations.

In short, the new regulation is an important step in articulating the process of digital transformation of the Administration of Justice based on a data-driven management model. However, the unique competencies and organisational characteristics of this area require a unique governance model. For this reason, a specific institutional framework for cooperation has been envisaged, the effective functioning of which is essential for the implementation of the legal provisions and, ultimately, for addressing the challenges, difficulties and opportunities posed by open data and the re-use of public sector information in the judicial area. These are challenges that need to be tackled decisively so that the technological modernisation of the Justice Administration facilitates its effective digital transformation.

Content prepared by Julián Valero, Professor at the University of Murcia and Coordinator of the Research Group "Innovation, Law and Technology" (iDerTec). The contents and points of view reflected in this publication are the sole responsibility of its author.

Standardisation is essential to improve efficiency and interoperability in governance and data management. The adoption of standards provides a common framework for organising, exchanging and interpreting data, facilitating collaboration and ensuring data consistency and quality. The ISO standards, developed at international level, and the UNE norms, developed specifically for the Spanish market, are widely recognised in this field. Both catalogues of good practices, while sharing similar objectives, differ in their geographical scope and development approach, allowing organisations to select the most appropriate standards for their specific needs and context.

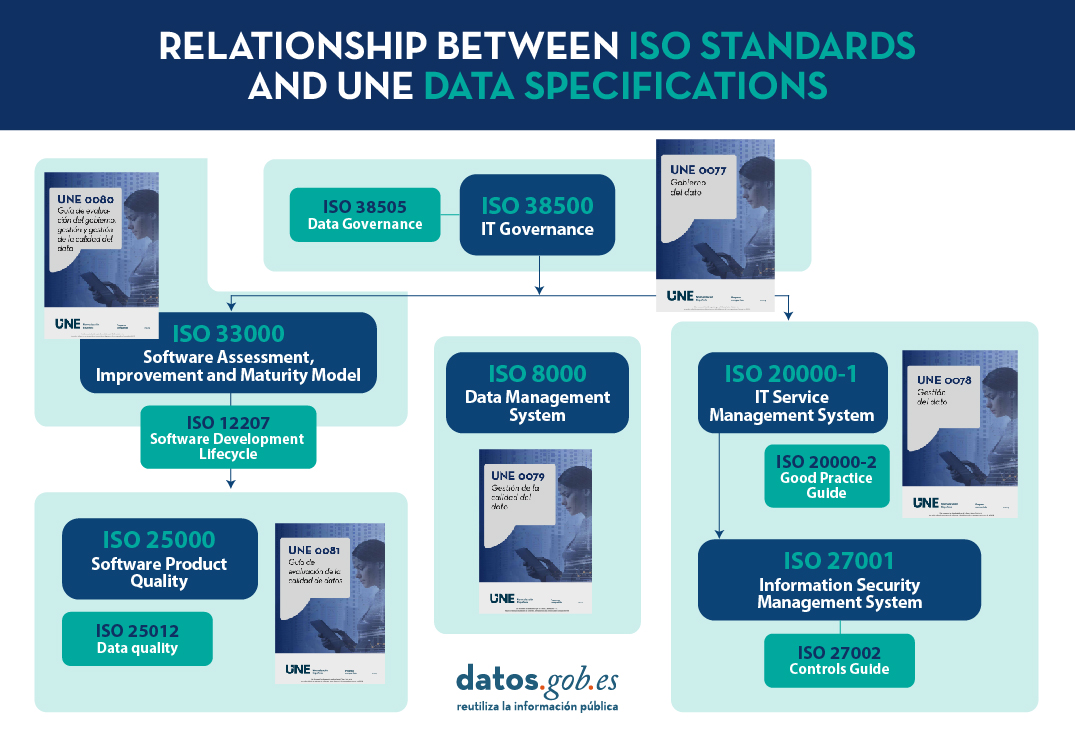

With the publication, a few months ago, of the UNE 0077, 0078, 0079, 0080, and 0081 specifications on data governance, management, quality, maturity, and quality assessment, users may have questions about how these relate to the ISO standards they already have in place in their organisation. This post aims to help alleviate these doubts. To this end, an overview of the main ICT-related standards is presented, with a focus on two of them: ISO 20000 on service management and ISO 27000 on information security and privacy, and the relationship between these and the UNE specifications is established.

Most common ISO standards related to data

ISO standards have the great advantage of being open, dynamic and agnostic to the underlying technologies. They are also responsible for bringing together the best practices agreed and decided upon by different groups of professionals and researchers in each of the fields of action. If we focus on ICT-related standards, there is already a framework of standards on governance, management and quality of information systems where, among others, the following stand out:

At governance level:

- ISO 38500 for corporate governance of information technology.

At management level:

- ISO 8000 for data management systems and master data.

- ISO 20000 for service management.

- ISO 25000 for the quality of the generated product (both software and data).

- ISO 27000 and ISO 27701 for information security and privacy management.

- ISO 33000 for process evaluation.

In addition to these standards, there are others that are also commonly used in companies, such as:

- ISO 9000-based quality management system

- Environmental management system proposed in ISO 14000

These standards have been used for ICT governance and management for many years and have the great advantage that, as they are based on the same principles, they can be used perfectly well together. For example, it is very useful to mutually reinforce the security of information systems based on the ISO/IEC 27000 family of standards with the management of services based on the ISO/IEC 20000 family of standards.

The relationship between ISO standards and UNE data specifications

The UNE 0077, 0078, 0079, 0080 and 0081 specifications complement the existing ISO standards on data governance, management and quality by providing specific and detailed guidelines that focus on the particular aspects of the Spanish environment and the needs of the national market.

When the UNE 0077, 0078, 0079, 0080 and 0081 specifications were developed, they were based on the main ISO standards so that they could be easily integrated into the management systems already available in organisations (mentioned above), as can be seen in the following figure:

Figure 1. Relation of the UNE specifications with the different ISO standards for ICT.

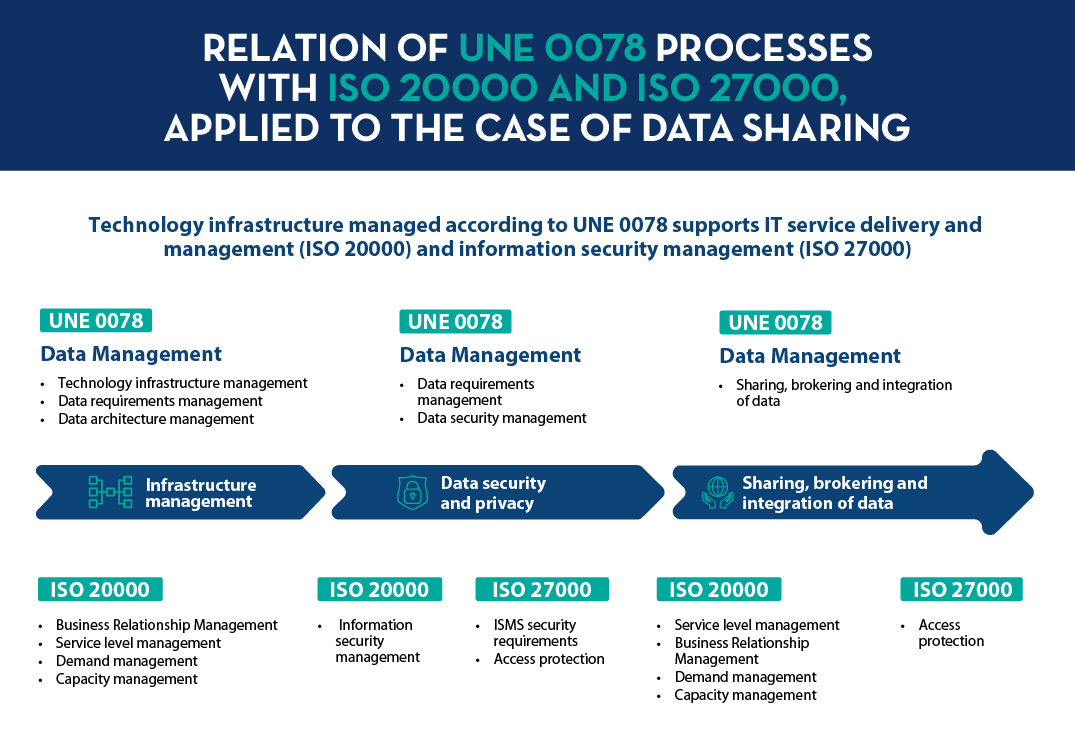

Example of application of standard UNE 0078

The following example shows how the UNE specifications can be integrated with the ISO standards that many organisations have had in place for years, taking UNE 0078 as a reference. Although all UNE data specifications are intertwined with most ISO standards on IT governance, management and quality, the UNE 0078 data management specification is more closely related to information security management systems (ISO 27000) and IT service management (ISO 20000). Table 1 shows the relationship of each process to each ISO standard.

| Process UNE 0078: Data Management | Related to ISO 20000 | Related to ISO 27000 |
|---|---|---|
| (ProcDat) Data processing | | |
| (InfrTec) Technology infrastructure management | X | X |
| (ReqDat) Data Requirements Management | X | X |
| (ConfDat) Data Configuration Management | | |
| (DatHist) Historical data management | X | |
| (SegDat) Data security management | X | X |
| (Metdat) Metadata management | X | |
| (ArqDat) Data architecture and design management | X | |
| (CIIDat) Sharing, brokering and integration of data | X | |
| (MDM) Master Data Management | | |
| (HR) Human resources management | | |
| (CVidDat) Data lifecycle management | X | |
| (AnaDat) Data analysis | | |

Figure 2. Relationship of UNE 0078 processes with ISO 27000 and ISO 20000.
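As a minimal sketch, the relationships in the table above can be held in a small lookup structure so that they can be queried programmatically. The dictionary name `UNE_0078_RELATIONS` and the helper `processes_related_to` are hypothetical names chosen for illustration; only the process codes and the marks are taken from the table.

```python
# Mapping transcribed from the table: for each UNE 0078 process code,
# the set of ISO families it is marked as related to.
UNE_0078_RELATIONS = {
    "ProcDat": set(),
    "InfrTec": {"ISO 20000", "ISO 27000"},
    "ReqDat": {"ISO 20000", "ISO 27000"},
    "ConfDat": set(),
    "DatHist": {"ISO 20000"},
    "SegDat": {"ISO 20000", "ISO 27000"},
    "Metdat": {"ISO 20000"},
    "ArqDat": {"ISO 20000"},
    "CIIDat": {"ISO 20000"},
    "MDM": set(),
    "HR": set(),
    "CVidDat": {"ISO 20000"},
    "AnaDat": set(),
}


def processes_related_to(*standards):
    """Return the process codes marked as related to every given standard."""
    wanted = set(standards)
    return [code for code, related in UNE_0078_RELATIONS.items() if wanted <= related]


# Processes that touch both service management and information security.
print(processes_related_to("ISO 20000", "ISO 27000"))
# ['InfrTec', 'ReqDat', 'SegDat']
```

An organisation that already runs both management systems could use a lookup of this kind to decide which data management processes to align first.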

Relationship of the UNE 0078 standard with ISO 20000

Regarding the interrelation between ISO 20000-1 and the UNE 0078 specification, consider a use case in which an organisation wants to make relevant data available for consumption throughout the organisation through different services. The integrated implementation of UNE 0078 and ISO 20000-1 enables organisations to:

- Ensure that business-critical data is properly managed and protected.

- Improve the efficiency and effectiveness of IT services, ensuring that the technology infrastructure supports the needs of the business and end users.

- Align data management and IT service management with the organisation's strategic objectives, improving decision making and market competitiveness.

The relationship between the two is manifested in how the technology infrastructure managed according to UNE 0078 supports the delivery and management of IT services according to ISO 20000-1.

This requires at least the following:

- Firstly, in the case of making data available as a service, a well-managed and secure IT infrastructure is necessary. This is essential, on the one hand, for the effective implementation of IT service management processes, such as incident and problem management, and on the other hand, to ensure business continuity and availability of IT services.

- Secondly, once the infrastructure is in place, and it is known that the data will be made available for consumption at some point in time, the principles of sharing and brokering of that data need to be managed. For this purpose, the UNE 0078 specification includes the process of data sharing, intermediation and integration. Its main objective is to enable the acquisition and/or delivery of data for consumption or sharing, including the deployment of intermediation mechanisms where necessary, as well as its integration. This UNE 0078 process would be related to several of the processes in ISO 20000-1, such as the Business Relationship Management process, service level management, demand management and the management of the capacity of the data being made available.

Relationship of the UNE 0078 standard with ISO 27000

Likewise, the technological infrastructure created and managed for a specific objective must ensure minimum data security and privacy standards. The good practices included in ISO 27000 and ISO 27701 will therefore be necessary to manage the infrastructure from the perspective of information security and privacy. This is a clear example of the interrelation between the three management systems: services, information security and privacy, and data.

Not only is it essential that the data is made available to organisations and citizens in an optimal way, but it is also necessary to pay special attention to the security of the data throughout its entire lifecycle once it is in service. This is where the ISO 27000 standard brings its full value. The ISO 27000 family, and ISO 27001 in particular, fulfils the following objectives:

- It specifies the requirements for an information security management system (ISMS).

- It focuses on the protection of information against unauthorised access, data integrity and confidentiality.

- It helps organisations to identify, assess and manage information security risks.

Along these lines, its interrelation with the UNE 0078 Data Management specification is marked through the Data Security Management process. Through the application of the different security mechanisms, it is verified that the information handled in the systems is not subject to unauthorised access, maintaining its integrity and confidentiality throughout the data's life cycle. Similarly, a triad can be built in this relationship with the data security management process of the UNE 0078 specification and with the UNE 20000-1 process of SGSTI Operation - Information Security Management.

The following figure presents how the UNE 0078 specification complements the current ISO 20000 and ISO 27000 as applied to the example discussed above.

Figure 3. Relation of UNE 0078 processes with ISO 20000 and ISO 27000 applied to the case of data sharing.

Through the above cases, it can be seen that the great advantage of the UNE 0078 specification is that it integrates seamlessly with existing security management and service management systems in organisations. The same applies to the rest of the UNE standards 0077, 0079, 0080, and 0081. Therefore, if an organisation that already has ISO 20000 or ISO 27000 in place wants to implement data governance, management and quality initiatives, alignment between the different management systems with the UNE specifications is recommended, as they are mutually reinforcing from a security, service and data point of view.

Content prepared by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The contents and points of view reflected in this publication are the sole responsibility of its author.

After months of new developments, the pace of advances in artificial intelligence does not seem to be slowing down - quite the contrary. A few weeks ago, when reviewing the latest developments in this field at the close of 2023, video generation from text instructions was considered to be still in its infancy. However, just a few weeks later, we have seen the announcement of SORA. With this tool, it seems that the ability to generate realistic videos, up to one minute long, from textual descriptions is here.

Every day, the tools we have access to become more sophisticated and we are constantly amazed by their ability to perform tasks that once seemed exclusive to the human mind. We have quickly become accustomed to generating text and images from written instructions and have incorporated these tools into our daily lives to enhance and improve the way we do our jobs. With each new development, pushing the boundaries a little further than we imagined, the possibilities seem endless.

Advances in Artificial Intelligence, powered by open data and other technologies such as those associated with the Web3, are helping to rethink the future of virtually every field of our activity: from solutions to address the challenges of climate change, to artistic creation, be it music, literature or painting, to medical diagnosis, agriculture or the generation of trust to drive the creation of social and economic value.

In this article we will review the developments that impact on a field where, in the coming years, interesting advances are likely to be made thanks to the combination of artificial intelligence and open data. We are talking about the design and planning of smarter, more sustainable and liveable cities for all their inhabitants.

Urban Planning and Management

Urban planning and management is complicated because countless complex interactions need to be anticipated, analysed and resolved. Therefore, it is reasonable to expect major breakthroughs from the analysis of the data that cities increasingly open up on mobility, energy consumption, climatology and pollution, planning and land use, etc. New techniques and tools provided by generative artificial intelligence combined, for example, with intelligent agents will allow a deeper interpretation and simulation of urban dynamics.

In this sense, this new combination of technologies could be used for example to design more efficient, sustainable and liveable cities, anticipating the future needs of the population and dynamically adapting to changes in real time. Thus, new smart urban models would be used to optimise everything from traffic flow to resource allocation by simulating behaviour through intelligent agents.

Urbanist.ai is one of the first examples of an advanced urban analytics platform, based on generative artificial intelligence, that aims to transform the way urban planning tasks are currently conceived. The services it currently provides already allow the participatory transformation of urban spaces from images, but its ambition goes further and it plans to incorporate new techniques that redefine the way cities are planned. There is even a version of UrbanistAI designed to introduce children to the world of urban planning.

Going one step further, the generation of 3D city models is something that tools such as InfiniCity have already made available to users. Although there are still many challenges to be overcome, the results are promising. These technologies could make it substantially cheaper to generate digital twins on which to run simulations that anticipate problems before they are built.

Available data

However, as with other developments based on Generative AI, these advances would not be possible without data, and especially not without open data. All new developments in AI use a combination of private and public data in their training, but in few cases is the training dataset known with certainty, as it is not made public. Data can come from a wide variety of sources, such as IoT sensors, government records or public transport systems, and is the basis for providing a holistic view of how cities function and how their inhabitants interact with the urban environment.

The growing importance of open data in training these models is reflected in initiatives such as the Task Force on AI Data Assets and Open Government, launched by the US Department of Commerce, which will be tasked with preparing open public data for Artificial Intelligence. This means not only machine-readable formats, but also machine-understandable metadata. With open data enriched by metadata and organised in interpretable formats, artificial intelligence models could yield much more accurate results.

A long-established and basic data source is OpenStreetMap (OSM), a collaborative project that makes a free and editable map of open global geographic data available to the community. It includes detailed information on streets, squares, parks, buildings, etc. which is crucial as a basis for urban mobility analysis, transport planning or infrastructure management. The immense cost of developing such a resource is only within the reach of large technology companies, making it invaluable to all initiatives that use it as a basis.

More specific datasets such as HoliCity, a 3D data asset with rich structural information, including 6,300 real-world views, are proving valuable. For example, recent scientific work based on this dataset has shown that it is possible for a model fed with millions of street images to predict neighbourhood characteristics, such as home values or crime rates.

Along these lines, Microsoft has released an extensive collection of building contours automatically generated from satellite imagery, covering a large number of countries and regions.

Figure 3: Urban Atlas Images (OSM)

Microsoft Building Footprints provide a detailed basis for 3D city modelling, urban density analysis, infrastructure planning and natural hazard management, giving an accurate picture of the physical structure of cities.

We also have Urban Atlas, an initiative that provides free and open access to detailed land use and land cover information for more than 788 Functional Urban Areas in Europe. It is part of the Copernicus Land Monitoring Service programme, and provides valuable insights into the spatial distribution of urban features, including residential, commercial, industrial, green areas and water bodies, street tree maps, building block height measurements, and even population estimates.

Risks and ethical considerations

However, we must not lose sight of the risks posed, as in other domains, by the incorporation of artificial intelligence into the planning and management of cities, as discussed in the UN report on "Risks, Applications and Governance of AI for Cities". These include, for example, concerns about the privacy and security of personal information raised by mass data collection, and the risk of algorithmic biases that may deepen existing inequalities. It is therefore essential to ensure that data collection and use is conducted in an ethical and transparent manner, with a focus on equity and inclusion.

This is why, as city design moves towards the adoption of artificial intelligence, dialogue and collaboration between technologists, urban planners, policy makers and society at large will be key to ensuring that smart city development aligns with the values of sustainability, equity and inclusion. Only in this way can we ensure that the cities of the future are not only more efficient and technologically advanced, but also more humane and welcoming for all their inhabitants.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and views reflected in this publication are the sole responsibility of the author.

The European Union has placed the digital transformation of the public sector at the heart of its policy agenda. Through various initiatives under the Digital Decade policy programme, the EU aims to boost the efficiency of public services and provide a better experience for citizens. Achieving this goal requires the agile exchange of data and information between institutions and countries.

This is where interoperability and the search for new solutions to promote it become important. Emerging technologies such as artificial intelligence (AI) offer great opportunities in this field, thanks to their ability to analyse and process huge amounts of data.

A report to analyse the state of play

Against this background, the European Commission has published an extensive and comprehensive report entitled "Artificial Intelligence for Interoperability in the European Public Sector", which provides an analysis of how AI is already improving interoperability in the European public sector. The report is divided into three parts:

- A literature and policy review on the synergies between AI and interoperability. It highlights the legislative work carried out by the EU, in particular the Interoperable Europe Act, which seeks to establish a governance structure and to foster an ecosystem of reusable and interoperable solutions for public administration. Mention is also made of the Artificial Intelligence Act, designed to ensure that AI systems used in the EU are safe, transparent, traceable, non-discriminatory and environmentally friendly.

- The report continues with a quantitative analysis of 189 use cases. These cases were selected on the basis of the inventory carried out in the report "AI Watch. European overview of the use of Artificial Intelligence by the public sector" which includes 686 examples, recently updated to 720.

- A qualitative study that elaborates on some of the above cases. Specifically, seven use cases have been characterised (two of them Spanish), with an exploratory objective. In other words, it seeks to extract knowledge about the challenges of interoperability and how AI-based solutions can help.

Conclusions of the study

AI is becoming an essential tool for structuring, preserving, standardising and processing public administration data, improving interoperability within and outside public administration. This is a task that many organisations are already doing.

Of all the AI use cases in the public sector analysed in the study, 26% were related to interoperability. These tools are used to improve interoperability by operating at different levels: technical, semantic, legal and organisational. The same AI system can operate at different layers.

- The semantic layer of interoperability is the most relevant (91% of cases). The use of ontologies and taxonomies to create a common language, combined with AI, can help establish semantic interoperability between different systems. One example is the EPISA60 project, which is based on natural language processing, using entity recognition and machine learning to explore digital documents.

- In second place is the organisational layer, with 35% of cases. It highlights the use of AI for policy harmonisation, governance models and mutual data recognition, among others. In this regard, the Austrian Ministry of Justice launched the JustizOnline project which integrates various systems and processes related to the delivery of justice.

- In third place is the legal layer, with 33% of cases. In this case, the aim is to ensure that the exchange of data takes place in compliance with legal requirements on data protection and privacy. The European Commission is preparing a study to explore how AI can be used to verify the transposition of EU legislation by Member States. For this purpose, different articles of the laws are compared with the help of an AI.

- Lastly, there is the technical layer, with 21% of cases. In this field, AI can help the exchange of data in a seamless and secure way. One example is the work carried out at the Belgian research centre VITO, based on AI data encoding/decoding and transport techniques.
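The shares reported for the four layers (91%, 35%, 33% and 21%) add up to 180%, which is consistent with the statement that the same AI system can operate at several layers. The short sketch below illustrates the arithmetic with toy data invented for this example; the four use cases and their layers are not taken from the report.

```python
from collections import Counter

# Toy data, invented for illustration: each use case lists the layers it touches.
use_cases = [
    {"semantic"},
    {"semantic", "organisational"},
    {"semantic", "legal"},
    {"technical"},
]

# Count every layer a use case touches, so one case can count several times.
counts = Counter(layer for case in use_cases for layer in case)
shares = {layer: 100 * n / len(use_cases) for layer, n in counts.items()}

print(shares)
print(sum(shares.values()))  # 150.0, above 100 because layers overlap
```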

Specifically, the three most common actions that AI-based systems take to drive data interoperability are: detecting information (42%), structuring it (22%) and classifying it (16%). The following table, extracted from the report, shows all the detailed activities:


The report also analyses the use of AI in specific areas. Its use in "general public services" stands out (41%), followed by "public order and security" (17%) and "economic affairs" (16%). In terms of benefits, administrative simplification stands out (59%), followed by the evaluation of effectiveness and efficiency (35%) and the preservation of information (27%).

AI use cases in Spain

The third part of the report looks in detail at concrete use cases of AI-based solutions that have helped to improve public sector interoperability. Of the seven solutions characterised, two are from Spain:

- Energy vulnerability - automated assessment of the fuel poverty report. When energy service providers detect non-payments, they must consult with the municipality to determine whether the user is in a situation of social vulnerability before cutting off the service, in which case supplies cannot be cut off. Municipalities receive monthly listings from companies in different formats and have to go through a costly manual bureaucratic process to validate whether a citizen is at social or economic risk. To solve this challenge, the Administració Oberta de Catalunya (AOC) has developed a tool that automates the data verification process, improving interoperability between companies, municipalities and other administrations.

- Automated transcripts to speed up court proceedings. In the Basque Country, trial transcripts are produced by the administration by manually reviewing the videos of all sessions. As a result, it is not possible to search easily for words, phrases, etc. This solution converts voice data into text automatically, which makes the content searchable and saves time.

Recommendations

The report concludes with a series of recommendations on what public administrations should do:

- Raise internal awareness of the possibilities of AI to improve interoperability. Through experimentation, they will be able to discover the benefits and potential of this technology.

- Approach the adoption of an AI solution as a complex project with not only technical, but also organisational, legal, ethical, etc. implications.

- Create optimal conditions for effective collaboration between public agencies. This requires a common understanding of the challenges to be addressed in order to facilitate data exchange and the integration of different systems and services.

- Promote the use of uniform and standardised ontologies and taxonomies to create a common language and shared understanding of data to help establish semantic interoperability between systems.

- Evaluate current legislation, both in the early stages of experimentation and during the implementation of an AI solution, on a regular basis. Collaboration with external actors to assess the adequacy of the legal framework should also be considered. In this regard, the report also includes recommendations for the next EU policy updates.

- Support the upgrading of the skills of AI and interoperability specialists within the public administration. Critical tasks of monitoring AI systems are to be kept within the organisation.

Interoperability is one of the key drivers of digital government, as it enables the seamless exchange of data and processes, fostering effective collaboration. AI can help automate tasks and processes, reduce costs and improve efficiency. It is therefore advisable to encourage its adoption by public bodies at all levels.

The alignment of artificial intelligence is a term established since the 1960s, according to which we orient the goals of intelligent systems in the exact direction of human values. The advent of generative models has brought this concept of alignment back into fashion, which becomes more urgent the more intelligence and autonomy systems show. However, no alignment is possible without a prior, consensual and precise definition of these values. The challenge today is to find enriching objectives where the application of AI has a positive and transformative effect on knowledge, social organisation and coexistence.

The right to understand

In this context, language processing, one of the main pillars of today's AI, has been making valuable contributions to clear communication and, in particular, to clear language for years. Let us look at what these concepts are:

- Clear communication, as a discipline, aims to make information accessible and understandable for all people, using writing resources, but also visual, design, infographics, user experience and accessibility.

- Clear language focuses on the composition of texts, with techniques for presenting ideas directly and concisely, without stylistic excesses or omissions of key information.

Both concepts are closely linked to people's right to understand.

Before ChatGPT: analytical approaches

Before the advent of generative AI and the popularisation of GPT capabilities, artificial intelligence was applied to plain language from an analytical point of view, with different classification and pattern search techniques. The main need then was for a system that could assess whether or not a text was understandable, but there was not yet the expectation that the same system could rewrite our text in a clearer way. Let's look at a couple of examples:

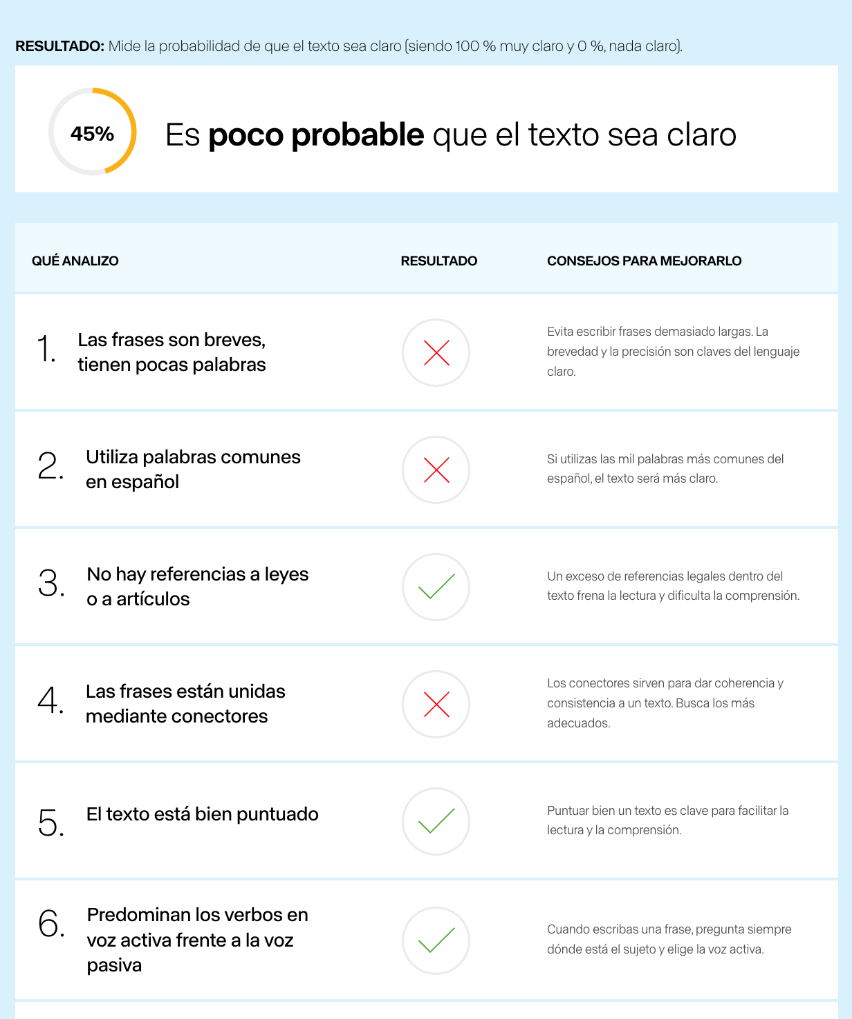

This is the case of Clara, an analytical AI system that is openly available in beta. Clara is a mixed system: on the one hand, it has learned which patterns characterise clear and unclear texts from the observation of a corpus of paired texts prepared by specialists in Clear Communication. On the other hand, it handles nine metrics designed by computational linguists to decide whether or not a text meets the minimum requirements for clarity, for example, the average number of words per sentence, the technicalities used or the frequency of connectors. Finally, Clara returns a percentage score to indicate how close the written text is to being clear text. This allows the user to correct the text according to Clara's indications and submit it for re-evaluation.
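To make the idea of clarity metrics more concrete, here is a minimal sketch of two of the metrics mentioned above: average words per sentence and frequency of connectors. It is not Clara's implementation. The connector list, the naive sentence splitter and the function names are assumptions made for illustration only.

```python
import re

# Hypothetical connector list for illustration; Clara's real list is not given here.
CONNECTORS = {"however", "therefore", "because", "although", "moreover", "thus"}


def split_sentences(text):
    """Naive splitter: break after '.', '!' or '?' followed by whitespace."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]


def tokenize(text):
    """Lowercase the text and return runs of letters as word tokens."""
    return re.findall(r"[^\W\d_]+", text.lower())


def clarity_metrics(text):
    """Compute average words per sentence and the share of connector words."""
    sentences = split_sentences(text)
    tokens = tokenize(text)
    if not sentences or not tokens:
        return {"avg_words_per_sentence": 0.0, "connector_ratio": 0.0}
    connectors = sum(1 for t in tokens if t in CONNECTORS)
    return {
        "avg_words_per_sentence": len(tokens) / len(sentences),
        "connector_ratio": connectors / len(tokens),
    }


sample = (
    "The payment is due on Friday. "
    "However, you may ask for more time because the rules allow it."
)
metrics = clarity_metrics(sample)
print(metrics)
```

A real evaluator would combine several such metrics with learned patterns before producing a single score; this sketch only shows how individual measurements could be taken.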

However, other analytical systems, such as Artext, have taken a different approach. Artext is more like a traditional text editor, where we can write our text and activate a series of revisions, such as participles, verb nominalisations or the use of negation. Artext highlights in colour words or expressions in our text and advises us in a side menu what we have to take into account when using them. The user can rewrite the text until, in the different revisions, the words and expressions marked in colour disappear.

Both Clara and Artext specialise in administrative and financial texts, with the aim of being of use mainly to public administration, financial institutions and other sources of difficult-to-understand texts that have an impact on citizens.

The generative revolution

Analytical AI tools are useful and very valuable if we want to evaluate a text over which we need to have more control. However, since the arrival of ChatGPT in November 2022, users' expectations have risen even further. We no longer need only an evaluator: we expect a translator, an automatic transformer of our text into a clearer version. We insert the original version of the text in the chat and, through a direct instruction called a prompt, we ask it to transform it into a clearer and simpler text, understandable by anyone.

If we need more clarity, we only have to repeat the instruction and the text becomes simpler again before our eyes.

By using generative AI we are reducing cognitive effort, but we are also losing much of the control over the text. Most importantly, we will not know what modifications are being made and why, and we may incur the loss or alteration of information. If we want to increase control and keep track of the changes, deletions and additions that ChatGPT makes to the text, we can use a plug-in such as EditGPT, available as an extension for Google Chrome, which lets us track changes in our interactions with the chat, much as Word does. However, we would not be able to understand the rationale for the changes made, as we would with tools such as Clara or Artext, which are designed by language professionals. One limited option is to ask the chat to justify each of these changes, but the interaction would become cumbersome, complex and inefficient, not to mention the excessive enthusiasm with which the model would try to justify its corrections.

Examples of generative clarification

Beyond the speed of transformation, generative AI has other advantages over analytical AI, such as certain elements that can only be identified with GPT capabilities. One example is detecting whether an abbreviation or acronym has been expanded earlier in a text, or whether a technical term is explained immediately after it first appears. This requires very complex semantic analysis for analytical AI or rule-based models. In contrast, a large language model is able to establish an intelligent relationship between the acronym and its expansion, or between the technical term and its meaning, to recognise whether this explanation exists somewhere in the text, and to add it where relevant.
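To see why this is hard for rule-based approaches, consider the following deliberately naive Python sketch. It flags acronyms that never appear in the pattern "Full Expansion (ACRONYM)". The function name and the matching rule are assumptions made for illustration and do not correspond to any of the tools mentioned.

```python
import re


def unexpanded_acronyms(text):
    """Return acronyms that never appear as 'Full Expansion (ACRONYM)'.

    Naive rule: an acronym of N letters counts as expanded only if the
    N words right before '(ACRONYM)' have initials that spell it.
    """
    acronyms = set(re.findall(r"\b[A-Z]{2,}\b", text))
    missing = []
    for acronym in sorted(acronyms):
        # Capture exactly len(acronym) words immediately before "(ACRONYM)".
        pattern = r"\b((?:\w+\s+){%d})\(%s\)" % (len(acronym), re.escape(acronym))
        expanded = False
        for match in re.finditer(pattern, text):
            initials = "".join(w[0].upper() for w in match.group(1).split())
            if initials == acronym:
                expanded = True
                break
        if not expanded:
            missing.append(acronym)
    return missing
```

The rule breaks as soon as the wording varies: an expansion written with a hyphen, placed after the acronym, containing small words that the acronym skips, or given only as a paraphrase would go unnoticed. These are the cases where the text above argues that a language model has the advantage.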

Open data to inform clarification

Universal access to open data, especially when it is ready for computational processing, makes it indispensable for training large language models. Huge sources of unstructured information such as Wikipedia, the Common Crawl project or Project Gutenberg allow systems to learn how language works. On this generalist basis, it is then possible to fine-tune models with specialised datasets to make them more accurate at the task of clarifying text.

In the application of generative artificial intelligence to plain language we have the perfect example of a valuable purpose, useful to citizens and positive for social development. Beyond the fascination it has aroused, we have the opportunity to use its potential in a use case that favours equality and inclusiveness. The technology exists, we just need to go down the difficult road of integration.

Content prepared by Carmen Torrijos, expert in AI applied to language and communication.

The contents and points of view reflected in this publication are the sole responsibility of the author.

Teaching computers to understand how humans speak and write is a long-standing challenge in the field of artificial intelligence, known as natural language processing (NLP). However, in the last two years or so, we have seen the fall of this old stronghold with the advent of large language models (LLMs) and conversational interfaces. In this post, we will try to explain one of the key techniques that makes it possible for these systems to respond relatively accurately to the questions we ask them.

Introduction

In 2020, Patrick Lewis, a young PhD in the field of language modelling who worked at the former Facebook AI Research (now Meta AI Research), published a paper with Ethan Perez of New York University entitled Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks, in which they explained a technique to make current language models more precise and concrete. The article is complex for the general public. However, in their blog, several of its authors explain how the RAG technique works in a more accessible way. In this post we will try to explain it as simply as possible.

Large Language Models are artificial intelligence models that are trained using Deep Learning algorithms on huge sets of human-generated information. Once trained, they have learned the way we humans use the spoken and written word, so they are able to give general, human-sounding answers to the questions we ask them. However, if we are looking for precise answers in a given context, LLMs alone will either fail to provide specific answers or, with high probability, hallucinate and completely make up the answer. For an LLM, to hallucinate means to generate inaccurate, meaningless or disconnected text. This effect poses potential risks and challenges for organisations using these models outside the domestic or everyday environment of personal LLM use. The prevalence of hallucination in LLMs, estimated at 15% to 20% for ChatGPT, may have profound implications for the reputation of companies and the reliability of AI systems.

What is a RAG?

RAG techniques have been developed precisely to improve the quality of responses in specific contexts, for example in a particular discipline or based on private knowledge repositories such as company databases.

RAG is an extra technique within artificial intelligence frameworks, which aims to retrieve facts from an external knowledge base to ensure that language models return accurate and up-to-date information. A typical RAG system (see image) includes an LLM, a vector database (to conveniently store external data) and a series of commands or queries. In other words, in a simplified form, when we ask a natural language question to an assistant such as ChatGPT, what happens between the question and the answer is something like this:

- The user makes the query, also technically known as a prompt.

- The RAG enriches the prompt or question with data and facts obtained from an external database containing information relevant to the user's question. This stage is called retrieval.

- The RAG sends the user's enriched, or augmented, prompt to the LLM, which is responsible for generating a natural language response. To do so, it draws on the full power of the human language it has learned from its generic training data, but also on the specific data provided in the retrieval stage.
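The three steps above can be sketched in a few lines of Python. This is a toy illustration under several assumptions, not a production design: an in-memory list stands in for the vector database, a bag-of-words count stands in for a real embedding model, and the LLM is any function that takes a prompt and returns text. All function names are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two count vectors; 0.0 if either is empty."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, k=1):
    """Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(documents, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def augment(query, facts):
    """Augmentation: prepend the retrieved facts to the user's prompt."""
    context = "\n".join("- " + f for f in facts)
    return "Answer using only these facts:\n" + context + "\n\nQuestion: " + query


def answer(query, documents, llm, k=1):
    """Generation: send the augmented prompt to an LLM (any callable str -> str)."""
    return llm(augment(query, retrieve(query, documents, k)))
```

Passing a function that simply echoes its input as `llm` makes it easy to inspect the augmented prompt before connecting a real model. In a real system, `embed` would call an embedding model and `retrieve` would query a vector database instead of sorting a list.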

Understanding RAG with examples

Let us take a concrete example. Imagine you are trying to answer a question about dinosaurs. A generalist LLM can invent a perfectly plausible answer, so that a non-expert cannot distinguish it from a scientifically based answer. In contrast, using RAG, the LLM would search a database of dinosaur information and retrieve the most relevant facts to generate a complete answer.

The same would be true if we searched for a particular piece of information in a private database. For example, think of a human resources manager in a company who wants to retrieve summarised and aggregated information about one or more employees whose records are in different company databases. We may be trying to obtain information from salary scales, satisfaction surveys, employment records, etc. An LLM is very useful for generating a response with a human pattern. However, it cannot provide consistent and accurate data, as it has never been trained on such information due to its private nature. In this case, RAG assists the LLM by providing specific data and context so that it can return the appropriate response.

Similarly, an LLM complemented by RAG on medical records could be a great assistant in the clinical setting. Financial analysts would also benefit from an assistant linked to up-to-date stock market data. Virtually any use case benefits from RAG techniques to enrich LLM capabilities with context-specific data.

Additional resources to better understand RAG

As you can imagine, as soon as we look for a moment at the more technical side of LLMs or RAG, things get very complicated. In this post we have tried to explain, in simple words and with everyday examples, how the RAG technique works to get more accurate and contextualised answers to the questions we ask a conversational assistant such as ChatGPT, Bard or any other. However, for those of you who have the desire and strength to delve deeper into the subject, here are a number of web resources that can help you understand a little more about how LLMs combine with RAG and other techniques, such as prompt engineering, to deliver the best possible generative AI apps.

LLMs and RAG content articles for beginners

- DEV - LLM for dummies

- Digital Native - LLMs for Dummies

- Hopsworks.ai - Retrieval Augmented Generation (RAG) for LLMs

- Datalytyx - RAG For Dummies

Do you want to go to the next level? Some tools to try out:

- LangChain. LangChain is a development framework that facilitates the construction of applications using LLMs, such as GPT-3 and GPT-4. LangChain is for software developers and allows you to integrate and manage multiple LLMs, creating applications such as chatbots and virtual agents. Its main advantage is to simplify the interaction and orchestration of LLMs for a wide range of applications, from text analysis to virtual assistance.

- Hugging Face. Hugging Face is a platform with more than 350,000 models, 75,000 datasets and 150,000 demo applications, all open source and publicly available online where people can easily collaborate and build artificial intelligence models.

- OpenAI. OpenAI is the best known platform for LLM models and conversational interfaces. The creators of ChatGPT provide the developer community with a set of libraries to use the OpenAI API to create their own applications using the GPT-3.5 and GPT-4 models. As an example, we suggest you visit the Python library documentation to understand how, with very few lines of code, we can use an LLM in our own application. Although OpenAI conversational interfaces, such as ChatGPT, use their own RAG system, we can also combine GPT models with our own RAG, for example, as proposed in this article.

Content elaborated by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and views expressed in this publication are the sole responsibility of the author.

We are currently in the midst of an unprecedented race to master innovations in Artificial Intelligence. Over the past year, the star of the show has been Generative Artificial Intelligence (GenAI), i.e., AI that is capable of generating original and creative content such as images, text or music. But advances keep coming, and lately news is beginning to arrive suggesting that the utopia of Artificial General Intelligence (AGI) may not be as far away as we thought. We are talking about machines capable of understanding, learning and performing intellectual tasks with results similar to those of the human brain.

Whether this is true or simply a very optimistic prediction, a consequence of the amazing advances achieved in a very short space of time, what is certain is that Artificial Intelligence already seems capable of revolutionizing practically all facets of our society based on the ever-increasing amount of data used to train it.

And the fact is that if, as Andrew Ng argued back in 2017, artificial intelligence is the new electricity, open data would be the fuel that powers its engine, at least in a good number of applications whose main and most valuable source is public information that is accessible for reuse. In this article we will review a field in which we are likely to see great advances in the coming years thanks to the combination of artificial intelligence and open data: artistic creation.

Generative Creation Based on Open Cultural Data

The ability of artificial intelligence to generate new content could lead us to a new revolution in artistic creation, driven by access to open cultural data and a new generation of artists capable of harnessing these advances to create new forms of painting, music or literature, transcending cultural and temporal barriers.

Music

The world of music, with its diversity of styles and traditions, represents a field full of possibilities for the application of generative artificial intelligence. Open datasets in this field include recordings of folk, classical, modern and experimental music from all over the world and from all eras, digitized scores, and even information on documented music theories. Resources that underpin progress in this area range from the well-known MusicBrainz, the open music encyclopedia, to datasets opened by streaming industry leaders such as Spotify and projects such as Open Music Europe. By analysing all this data, artificial intelligence models can identify unique patterns and styles from different cultures and eras and fuse them to create original musical compositions with tools and models such as OpenAI's MuseNet or Google's MusicLM.

Literature and painting