The last few months of the year are always accompanied by numerous innovations in the open data ecosystem. It is the time chosen by many organisations to stage conferences and events to show the latest trends in the field and to demonstrate their progress.

New functionalities and partnerships

Public bodies have continued to make progress in their open data strategies, incorporating new functionalities and data sets at their open data platforms. Examples include:

- On 11 November, the Ministry for the Ecological Transition and the Demographic Challenge and The Information Lab Spain presented the SIDAMUN platform (Integrated Municipal Data System). It is a data visualisation tool with interactive dashboards which show detailed information about the current status of the territory.

- The Ministry of Agriculture, Food and Fisheries has published four interactive reports to exploit more than 500 million data elements and thus provide information in a simple way about the status and evolution of the Spanish primary sector.

- The Open Data Portal of the Regional Government of Andalusia has been updated in order to promote the reuse of information, expanding the possibilities of access through APIs in a more efficient, automated way.

- The National Geographic Institute has updated the information on green routes (reconditioned railway lines), which is now available for download in KML, GPX and SHP.

- The Institute for Statistics and Cartography of Andalusia has published data on the Natural Movement of the Population for 2021, which provides information on births, marriages and deaths.

We have also seen advances made from a strategic perspective and in terms of partnerships. The Regional Ministry of Participation and Transparency of the Valencian Regional Government set in motion a participatory process to design the first action plan of the 'OGP Local' programme of the Open Government Partnership. In turn, the Government of the Canary Islands has applied for admission to the International Open Government Partnership and it will strengthen collaboration with the local entities of the islands, thereby mainstreaming the Open Government policies.

In addition, various organisations have announced news for the coming months. Cordoba City Council is set to launch a new open data portal in the near future, and Torrejon City Council has included in its local action plan the creation of an open data portal, as well as the promotion of the use of big data in institutions.

Open data competitions, a showcase for finding talent and new use cases

During the autumn, the winners of various competitions that sought to promote the reuse of open data were announced. Thanks to these competitions, we have also learned of numerous cases of reuse which demonstrate open data's capacity to generate social and economic benefits.

- At the end of October we met the winners of our “Aporta” Challenge. First prize went to HelpVoice!, a service that seeks to help the elderly using speech recognition techniques based on machine learning. A web environment to facilitate the analysis and interactive visualisation of microdata from the Hospital Morbidity Survey and an app to promote healthy habits won second and third prizes, respectively.

- The winners of the Open Data Euskadi ideas and applications competition were also announced. They include a smart assistant for energy saving and an app to locate free parking spaces.

- Aragon Open Data, the open data portal of the Government of Aragon, celebrated its tenth anniversary with a face-to-face datathon to prototype services that help people through portal data. The award for the most innovative solution with the greatest impact went to Certifica-Tec, a website that allows you to geographically view the status of energy efficiency certificates.

- The Biscay Open Data Datathon set out to transform Biscay based on its open data. At the end of November, the final event of the Datathon was held. The winner was Argilum, followed by Datoston.

- UniversiData launched its first datathon, whose winning projects have just been announced.

In addition, in the last few months other initiatives related to the reuse of data have been announced, such as:

- Researchers from the Technical University of Madrid have carried out a study in which they use artificial intelligence algorithms to analyse clinical data on lung cancer patients, scientific publications and open data. The aim is to obtain statistical patterns that allow the treatments to be improved.

- The Research Report 2021 that the University of Extremadura has just published was generated automatically from the open data portal. It is a document containing more than 1,200 pages which includes the investigations of all the departments of the centre.

- F4map is a 3D map that has been produced thanks to the open data of the OpenStreetMap collaborative community. Alternating between 2D and 3D views, it offers a detailed look at different cities, buildings and monuments from all around the world.

Dissemination of open data and their use cases through events

Autumn has stood out for the staging of events focused on the world of data, many of which were recorded and can be viewed again online. Examples include:

- The Ministry of Justice and the University of Salamanca organised the symposium “Justice and Law in Data: The role of Data as an enabler and engine for change for the transformation of Justice and Law”. During the event, participants reflected on data as a public asset. All the presentations are available on the University's YouTube channel.

- In October, Madrid hosted a new edition of the Data Management Summit Spain. The day before, a preliminary session was held, organised in collaboration with DAMA España and the Data Office, aimed exclusively at representatives of the public administration and focused on open data and the exchange of information between administrations. This can also be seen on YouTube.

- The Barcelona Provincial Council, the Castellon Provincial Council and the Government of Aragon organised the National Open Data Meeting, with the aim of highlighting the importance of open data in territorial cohesion.

- The Iberian Conference on Spatial Data Infrastructure was held in Seville, where geographic information trends were discussed.

- A recording of the Associationism Seminars 2030, organised by the Government of the Canary Islands, can also be viewed. Among the presentations, we would highlight the one on the 'Map of Associationism in the Canary Islands', which makes this type of data visible in an interactive way.

- ASEDIE organised the 14th edition of its International Conference on the Reuse of Public Sector Information, which featured various round tables, including one on 'The Data Economy: rights, obligations, opportunities and barriers'.

Guides and courses

During these months, guides have also been published which seek to help publishers and reusers in their work with open data. At datos.gob.es we have published How to prepare a Plan of measures to promote the opening and reuse of open data, the guide Introduction to data anonymisation: Techniques and practical cases and the Practical guide for improving the quality of open data. Other organisations have also published help documents, such as:

- The Regional Government of Valencia has published a guide that compiles transparency obligations established by the Valencian law for public sector entities.

- The Spanish Data Protection Agency (AEPD) has translated the Singapore Data Protection Authority’s Guide to Basic Anonymisation, in view of its educational value and special interest to data protection officers. The guide is complemented by a free data anonymisation tool, which the AEPD makes available to organisations.

- The Network of Local Entities for Transparency and Citizen Participation of the FEMP has just presented the Data visualisation guide for Local Entities, a document with good practices and recommendations. The document refers to a previous work of the City Council of L'Hospitalet.

International news

During this period, we have also seen developments at European level. Some of the highlights are:

- In October, the final of the EU Datathon 2022 was held. The finalist teams had previously been selected from a total of 156 initial proposals.

- The European Data Portal has launched the initiative Use Case Observatory to measure the impact of open data by monitoring 30 use cases over 3 years.

- A group of scientists from the Dutch Institute for Fundamental Energy Research has created a database of 31,618 molecules thanks to algorithms trained with artificial intelligence.

- The World Bank has developed a new food and nutrition security dashboard which offers the latest global and national data.

These are just a few examples of what the open data ecosystem has produced in recent months. If you would like to share with us any other news, leave us a comment or send us an e-mail to dinamizacion@datos.gob.es

Measuring the impact of open data is one of the challenges facing open data initiatives. There are a variety of methods, most of which combine quantitative and qualitative analysis in order to understand the value of specific datasets.

In this context, data.europa.eu, the European Open Data Portal, has launched a Use Case Observatory. This is a research project on the economic, governmental, social and environmental impact of open data.

What is the Use Case Observatory?

For three years, from 2022 to 2025, the European Data Portal will monitor 30 cases of re-use of open data. The aim is to:

- Assess how the impact of open data is created.

- Share the challenges and achievements of the analysed re-use cases.

- Contribute to the debate on the methodology to be used to measure such impact.

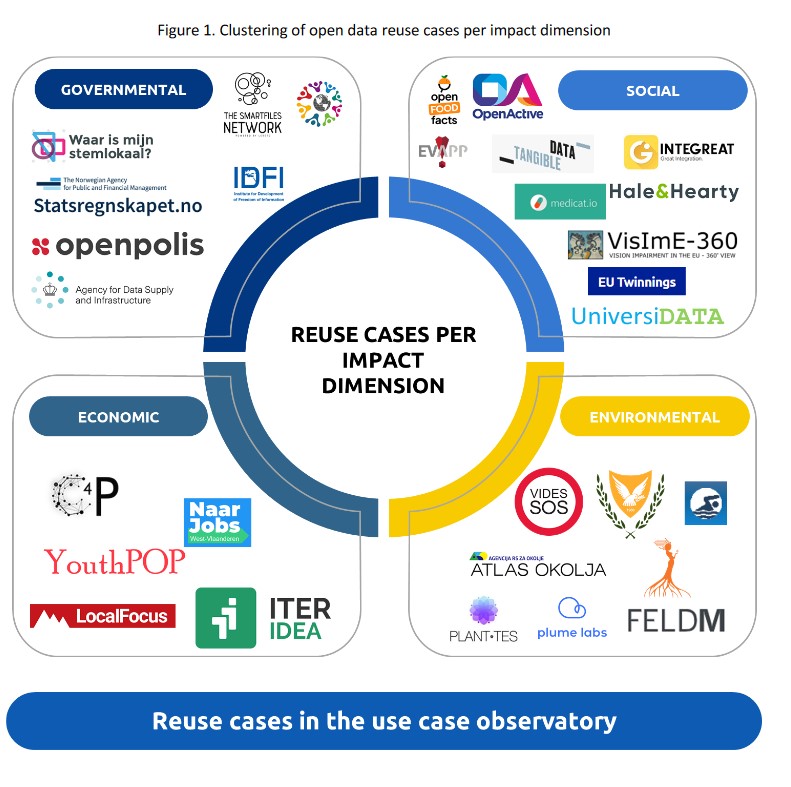

The analysed use cases refer to four areas of impact:

- Economic impact: includes reuse cases related to business creation and (re)training of workers, among others. For example, solutions that help identify public tenders or apply for jobs are included.

- Governmental impact: This refers to reuse cases that drive e-government, transparency and accountability.

- Social impact: includes cases of re-use in the fields of healthcare, welfare and tackling inequality.

- Environmental impact: This is limited to cases of re-use that promote sustainability and energy reduction, including solutions related to air quality control or forest preservation.

To select the use cases, an inventory was made based on three sources: the examples collected in the maturity studies carried out each year by the European portal, the solutions participating in the EU Datathon and the examples of reuse available in the repository of use cases on data.europa.eu. Only projects developed in Europe were taken into account, trying to maintain a balance between the different countries. In addition, projects that had won an award or were aligned with the European Commission's priorities for 2019 to 2024 were highlighted. To finalise the selection process, data.europa.eu conducted interviews with representatives of the use cases that met the requirements and were interested in participating in the project.

Three Spanish projects among the use cases analysed

The selected use cases are shown in the following image:

Among them, three are Spanish:

- In the Social Impact category is UniversiDATA-Lab, a public portal for the advanced and automatic analysis of datasets published by universities. This project, which won the first prize in the III Desafío Aporta, was conceived by the team that created UniversiData, a collaborative initiative oriented and driven by public universities with the aim of promoting open data in the higher education sector in Spain in a harmonised way. You can learn more about these projects in this interview.

- In the same category we also find Tangible data, a project focused on the creation of sculptures based on data, to bring them closer to non-technical people. Among other data sources, it uses datasets from NASA or Our World in Data.

- In the environment category is Planttes. This is a citizen science project designed to report on the presence of allergenic plants in our environment and the level of allergy risk depending on their condition. This project is promoted by the Aerobiological Information Point (PIA) of the Institute of Environmental Science and Technology (ICTA-UAB) and the Department of Animal Biology, Plant Biology and Ecology (BABVE), in collaboration with the Computer Vision Centre (CVC) and the Library Living Lab, all of them at the Autonomous University of Barcelona (UAB).

First report now available

As a result of the analysis carried out, three reports will be developed. The first report, which has just been published, presents the methodology and the 30 selected cases of re-use. It includes information on the services they offer, the (open) data they use and their impact at the time of writing. The report ends with a summary of the general conclusions and lessons learned from this first part of the research project, giving an overview of the next steps of the observatory.

The second and third reports, to be released in 2024 and 2025, will assess the progress of the same use cases and expand on the findings of this first volume. The reports will focus on identifying achievements and challenges over a three-year period, allowing concrete ideas to be extrapolated to improve methodologies for assessing the impact of open data.

The project was presented in a webinar on 7 October, a recording of which is available, together with the presentation used. Representatives from 4 of the use cases were invited to participate in the webinar: Openpolis, Integreat, ANP, and OpenFoodFacts.

Data science has a key role to play in building a more equitable, fair and inclusive world. Open data related to justice and society can serve as the basis for the development of technological solutions that drive a legal system that is not only more transparent, but also more efficient, helping lawyers to do their work in a more agile and accurate way. This is what is known as LegalTech, and includes tools that make it possible to locate information in large volumes of legal texts, perform predictive analyses or resolve legal disputes easily, among other things.

In addition, this type of data drives the development of solutions aimed at responding to the great social challenges facing humanity, helping to promote the common good, such as the inclusion of certain groups, aid for refugees and people in conflict zones or the fight against gender-based violence.

When we talk about open data related to justice and society, we refer both to legal data and to other data that can have an impact on universalising access to basic services, achieving equity, ensuring that all people have the same opportunities for development and promoting collaboration between different social agents.

What types of data on justice and society can I find in datos.gob.es?

On our portal you can access a wide catalogue of data that is classified by different sectors. The Legislation and Justice category currently has more than 5,000 datasets of different types, including information related to criminal offences, appeals or victims of certain crimes, among others. For its part, the Society and Welfare category has more than 8,000 datasets, including, for example, lists of aid, associations or information on unemployment.

Of all these datasets, here are some examples of the most outstanding ones, together with the format in which you can consult them:

At state level

- Spanish Statistical Office (INE). Offences according to sex by Autonomous Communities and cities. CSV, XLSX, XLS, JSON, PC-Axis, HTML (landing page for data download)

- Spanish Statistical Office (INE). 2030 Agenda SDG - Population at risk of poverty or social exclusion: AROPE indicator. CSV, XLS, XLSX, HTML (landing page for data download)

- Spanish Statistical Office (INE). Internet use by demographic characteristics and frequency of use. CSV, XLSX, XLS, JSON, PC-Axis, HTML (landing page for data download)

- Spanish Statistical Office (INE). Average expenditure according to size of the municipality of residence. CSV, XLSX, XLS, JSON, PC-Axis, HTML (landing page for data download)

- Spanish Statistical Office (INE). Retirement age in access to Benefit. CSV, XLSX

- Ministry of Justice. Judicial Census. XLSX, PDF, HTML (landing page for data download)

At Autonomous Community level

- Cantabrian Institute of Statistics. Statistics on annulments, separations and divorces. RDF-XML, XLS, JSON, ZIP, PC-Axis, HTML (landing page for data download).

- Basque Government. Standards and laws in force applicable in the Basque Country. JSON, JSON-P, XML, XLSX.

- Basque Government. Locating mass graves from the Civil War and Francoism. CSV, XLS, XML.

- Government of Catalonia. Ministry of Justice resources statistics. XLSX, HTML (landing page for data download).

- Government of Catalonia. Youth justice statistics. XLSX, HTML (landing page for data download).

- Autonomous Community of Navarre. Statistics on Transfer of Property Rights. XLSX, HTML (landing page for data download).

- Principality of Asturias. Sustainable Development Goals indicators in Asturias. HTML, XLSX, ZIP.

- Principality of Asturias. Justice in Asturias: staffing levels of the judicial bodies of the Principality of Asturias according to type. HTML (landing page for data download).

- Cantabrian Institute of Statistics. Judges and magistrates active in the Canary Islands. HTML, JSON, PC-Axis.

At the local level

- Santa Cruz de Tenerife City Council. Parking spaces for people with reduced mobility. SHP, KML, KMZ, RDF-XML, CSV, JSON, XLS

- Madrid City Council. Justice Administration Offices in the city of Madrid. CSV, XML, RSS, RDF-XML, JSON, HTML (landing page for data download)

- Gijón City Council. Security forces. JSON, CSV, XLS, PDF, HTML, TSV, text, XML, HTML (landing page for data download)

- Madrid City Council. Child and Family Care Centres. CSV, JSON, RDF-XML, XML, RSS, HTML (landing page for data download).

- Zaragoza City Council. List of police stations. CSV, JSON.

Some examples of re-use of justice and social good related data

In the companies and applications section of datos.gob.es you can find some examples of solutions developed with open data related to justice and social good. One example is Papelea, a company that provides answers to users' legal and administrative questions. To this end, it draws on public information such as administrative procedures of the main administrations, legal regulations, jurisprudence, etc. Another example is the ISEAK Foundation, which specialises in the evaluation of public policies on employment, inequality, inclusion and gender, using public data sources such as the National Institute of Statistics, Social Security, Eurostat and Opendata Euskadi.

Internationally, there are also examples of initiatives created to monitor procedural cases or improve the transparency of police services. In Europe, there is a boom in the creation of companies focused on legal technology that seek to improve the daily life of citizens, as well as initiatives that seek to use data for equity. Concrete examples of solutions in this area are miHub for asylum seekers and refugees in Cyprus, or Surviving in Brussels, a website for the homeless and people in need of access to services such as medical help, housing, job offers, legal help or financial advice.

Do you know of a company that uses this kind of data or an application that relies on it to contribute to the advancement of society? Then do not hesitate to leave us a comment with all the information or send us an email to dinamizacion@datos.gob.es.

The demand for professionals with skills related to data analytics continues to grow, and it is already estimated that industry in Spain alone would need more than 90,000 data and artificial intelligence professionals to boost the economy. Training professionals who can fill this gap is a major challenge. Even large technology companies such as Google, Amazon or Microsoft are offering specialised training programmes in parallel to those offered by the formal education system. In this context, open data plays a very relevant role in the practical training of these professionals, as it is often the only way to carry out real exercises rather than simulated ones.

Moreover, although there is not yet a solid body of research on the subject, some studies already suggest positive effects derived from the use of open data as a tool in the teaching-learning process of any subject, not only those related to data analytics. Some European countries have already recognised this potential and have developed pilot projects to determine how best to introduce open data into the school curriculum.

In this sense, open data can be used as a tool for education and training in several ways. For example, open data can be used to develop new teaching and learning materials, to create real-world data-based projects for students or to support research on effective pedagogical approaches. In addition, open data can be used to create opportunities for collaboration between educators, students and researchers to share best practices and collaborate on solutions to common challenges.

Projects based on real-world data

A key contribution of open data is its authenticity, as it is a representation of the enormous complexity and even flaws of the real world as opposed to artificial constructs or textbook examples that are based on much simpler assumptions.

An interesting example in this regard is documented by Simon Fraser University in Canada in its Master's in Publishing, where most students come from non-STEM university programmes and therefore have limited data handling skills. The project is available as an open educational resource on the OER Commons platform and aims to help students understand that metrics and measurement are important strategic tools for understanding the world around us.

By working with real-world data, students can develop story-building and research skills, and can apply analytical and collaborative skills in using data to solve real-world problems. The case study conducted with the first edition of this open data-based OER is documented in the book "Open Data as Open Educational Resources - Case studies of emerging practice". It shows that the opportunity to work with data pertaining to their field of study was essential to keep students engaged in the project. However, it was dealing with the messiness of 'real world' data that allowed them to gain valuable learning and new practical skills.

Development of new learning materials

Open datasets have great potential for use in the development of open educational resources (OER). According to UNESCO's definition, these are free digital teaching, learning and research materials published under an open licence (Creative Commons) that allows their use, adaptation and redistribution for non-commercial uses.

In this context, although open data are not always OER, we can say that they become OER when they are used in pedagogical contexts. Open data used as an educational resource enables students to learn and experiment by working with the same datasets used by researchers, governments and civil society. It is a key component for students to develop analytical, statistical, scientific and critical thinking skills.

It is difficult to estimate the current presence of open data as part of OER but it is not difficult to find interesting examples within the main open educational resource platforms. On the Procomún platform we can find interesting examples such as Learning Geography through the evolution of agrarian landscapes in Spain, which builds a Webmap for learning about agrarian landscapes in Spain on the ArcGIS Online platform of the Complutense University of Madrid. The educational resource uses specific examples from different autonomous communities using photographs or geolocated still images and its own data integrated with open data. In this way, students work on the concepts not through a mere text description but with interactive resources that also favour the improvement of their digital and spatial competences.

On the OER Commons platform, for example, we find the resource "From open data to civic engagement", which is aimed at audiences from secondary school upwards, with the objective of teaching them to interpret how public money is spent in a given region, local area or neighbourhood. It is based on the well-known "Where do my taxes go?" projects for analysing public budgets, available in many parts of the world as a result of the transparency policies of public authorities. This resource could be easily ported to Spain, as there are numerous "Where do my taxes go?" projects, such as the one maintained by Fundación Civio.

Data-related skills

When we refer to training and education in data-related skills, we are actually referring to a very broad area that is also very difficult to master in all its facets. In fact, it is common for data-related projects to be tackled in teams where each member has a specialised role in one of these areas. For example, it is common to distinguish at least data cleaning and preparation, data modelling and data visualisation as the main activities performed in a data science and artificial intelligence project.

In all cases, open data is widely adopted as a central resource in the projects proposed for the acquisition of any of these skills. The well-known data science community Kaggle organises competitions based on open datasets contributed to the community, which are an essential resource for real project-based learning for those who want to acquire data-related skills. There are also subscription-based offerings such as Dataquest or ProjectPro, but in all cases they use real datasets from general open data repositories or repositories specific to a knowledge area.

Open data, as in other areas, has not yet developed its full potential as a tool for education and training. However, as can be seen in the programme of the latest edition of the OER Conference 2022, there are an increasing number of examples of open data playing a central role in teaching, new educational practices and the creation of new educational resources for all kinds of subjects, concepts and skills.

Content written by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and views reflected in this publication are the sole responsibility of the author.

On 20 October, the EU's open data competition concluded after running for several months. The final of this sixth edition of the EU Datathon was held in Brussels in the framework of the European Year of Youth and was streamed worldwide.

It is a competition that gives open data enthusiasts and application developers from around the world the opportunity to demonstrate the potential of open data, while their innovative ideas gain international visibility and compete for a portion of the total prize money of €200,000.

The finalist teams were pre-selected from a total of 156 initial submissions. They came from 38 different countries, the largest participation in the history of the competition, to compete in four different categories related to the challenges facing Europe today.

Before the final, the selected participants had the opportunity to present, in video format, the proposals they had been developing based on the open data from the European catalogues.

Here is a breakdown of the winning teams in each challenge, the content of the proposal and the amount of the prize.

Winners of the “European Green Deal” Challenge

The European Green Deal is the blueprint for a modern, sustainable and competitive European economy. Participants who took up the challenge had to develop applications or services aimed at creating a green Europe, capable of driving resource efficiency.

1st prize: CROZ RenEUwable (Croatia)

The application developed by this Croatian team, "renEUwable", combines the analysis of environmental, social and economic data to provide specific and personal recommendations on sustainable energy use.

- Prize: €25,000

2nd prize: MyBioEUBuddy (France, Montenegro)

This project was created to help farm workers and local governments find regions that grow organic produce and can serve as an example to build a more sustainable agricultural network.

- Prize: €15,000

3rd prize: Green Land Dashboard for Cities (Italy)

The bronze in this category went to an Italian project that aims to analyse and visualise the evolution of green spaces in order to help cities, regional governments and non-governmental organisations to make them more liveable and sustainable.

- Prize: €7,000

Winners of the “Transparency in Public Procurement” Challenge

Transparency in public procurement helps to track how money is spent, combat fraud and analyse economic and market trends. Participants who chose this challenge had to explore the information available to develop an application to improve transparency.

1st prize: Free Software Foundation Europe e.V. (Germany)

This team of developers aims to make the links between the private sector, public administrations, users and tenders accessible.

- Prize: €25,000

2nd prize: The AI-Team (Germany)

This is a project that proposes to visualise data from TED, the European public procurement journal, in a graphical database and combine them with ownership information and a list of sanctioned entities. This will allow public officials and competitors to trace the amounts and values of contracts awarded back to the owners of the companies.

- Prize: €15,000

3rd prize: EMMA (France)

This fraud prevention and early detection tool allows public institutions, journalists and civil society to automatically monitor how the relationship between companies and administration is established at the beginning of a public procurement process.

- Prize: €7,000

Winners of the “Public Procurement Opportunities for Young People” Challenge

Public procurement is often perceived as a complex field, where only specialists feel comfortable finding the information they need. Thus, the developers who participated in this challenge had to design, for example, apps aimed at helping young people find the information they need to apply for public procurement positions.

1st prize: Hermix (Belgium, Romania)

It is a tool that develops a strategic marketing methodology aimed at the B2G (business to government) sector so that it is possible to automate the creation and monitoring of strategies for this sector.

- Prize: €25,000

2nd prize: YouthPOP (France)

YouthPOP is a tool designed to democratise employment and public procurement opportunities to bring them closer to young workers and entrepreneurs. It does this by combining historical data with machine learning technology.

- Prize: €15,000

3rd prize: HasPopEU (Romania)

This proposal takes advantage of open EU public procurement data and machine learning techniques to improve the communication of the skills required to access this type of job vacancy. The application focuses on young people, immigrants and SMEs.

- Prize: €7,000

Winners of the “A Europe Fit for the Digital Age” Challenge

The EU aims for a digital transformation that works for people and businesses. Therefore, participants in this challenge developed applications and services aimed at improving data skills, connectivity or data dissemination, always based on the European Data Strategy.

1st prize: Lobium/Gavagai (Netherlands, Sweden, United Kingdom)

This application, developed using natural language processing techniques, was created with the aim of facilitating the work of investigative journalists, promoting transparency and rapid access to certain information.

- Prize: €25,000

2nd prize: 100 Europeans (France)

It is an interactive app that uses open data to raise awareness of the great challenges of our time. In this way, and aware of how difficult it is to communicate the impact that these challenges have on society, '100 Europeans' changes the way of conveying the message and personalises the effects of climate change, pollution or overweight in a total of one hundred people. The aim of this project is to make society more aware of these challenges by telling them through the stories of people close to them.

- Prize: €15,000

3rd prize: UNIOR NLP (Italy)

Leveraging European natural language processing techniques and data collection, the Computational Linguistics and Automatic Natural Language Processing research group at the University of Naples L'Orientale has developed a personal assistant called Maggie that guides users to explore cultural content across Europe, answering their questions and offering personalised suggestions.

- Prize: €7,000

Finally, the Audience Award of this 2022 edition also went to CROZ RenEUwable, the same team that won the first prize in the challenge dedicated to fostering commitment to the European Green Deal.

As in previous editions, the EU Datathon is a competition organised by the Publications Office of the European Union in collaboration with the European Data Strategy. Thus, the recently closed 2022 edition has managed to activate the support of some twenty partners representing open data stakeholders inside and outside the European institutions.

The IV edition of the Aporta Challenge, whose motto has revolved around 'The value of data for health and well-being of citizens', has already announced its three winners. The competition, promoted by Red.es in collaboration with the Secretary of State for Digitalisation and Artificial Intelligence, launched in November 2021 with an ideas competition and continued earlier this summer with a selection of ten finalist proposals.

As in the three previous editions, the selected candidates had a three-month period to transform their ideas into a prototype, which they presented in person at the final gala.

In a post-pandemic context, where health plays an increasingly important role, the theme of the competition sought to identify, recognise and reward ideas aimed at improving the efficiency of this sector with solutions based on the use of open data.

On 18 October, the ten finalists came to the Red.es headquarters to present their proposals to a jury made up of representatives from public administrations, organisations linked to the digital economy, universities and data communities. In just twelve minutes, they had to summarise the purpose of the proposed project or service, explain how the development process had been carried out, what data they had used, and dwell on aspects such as the economic viability or traceability of the project or service.

Ten innovative projects to improve the health sector

The ten proposals presented to the jury showed a high level of innovation, creativity, rigour and public vocation. They were also able to demonstrate that it is possible to improve the quality of life of citizens by creating initiatives that monitor air quality, build solutions to climate change or provide a quicker response to a sudden health problem, among other examples.

For all these reasons, it is not surprising that the jury had a difficult time choosing the three winners of this fourth edition. In the end, the HelpVoice! initiative won the first prize of €5,000, the Hospital Morbidity Survey won the €4,000 linked to second place and RIAN, the Intelligent Activity and Nutrition Recommender, closed the ranking with third place and an award of €3,000.

First prize: HelpVoice!

- Team: Data Express, composed of Sandra García, Antonio Ríos and Alberto Berenguer.

HelpVoice! is a service that helps elderly people through voice recognition techniques based on machine learning. In an emergency situation, the user only needs to press a device, which can be an emergency button, a mobile phone or a home automation tool, and describe their symptoms. The system will send a report with the transcript and predictions to the nearest hospital, speeding up the response of the healthcare workers.

In parallel, HelpVoice! will also recommend to the patient what to do while waiting for the emergency services. Regarding the use of data, the Data Express team has used open information such as the map of hospitals in Spain and uses speech and sentiment recognition data in text.

Second prize: The Hospital Morbidity Survey

- Team: Marc Coca Moreno

This is a web environment based on MERN, Python and Pentaho tools for the analysis and interactive visualisation of the Hospital Morbidity Survey microdata. The entire project has been developed with open source and free tools and both the code and the final product will be openly accessible.

To be precise, it offers 3 main analyses with the aim of improving health planning:

- Descriptive: hospital discharge counts and time series.

- KPIs: standardised rates and indicators for comparison and benchmarking of provinces and communities.

- Flows: count and analysis of discharges from a hospital region and patient origin.

All data can be filtered according to the variables of the dataset (age, sex, diagnoses, circumstance of admission and discharge, etc.).
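As an illustration of the kind of indicator listed under KPIs, the sketch below computes a directly age-standardised discharge rate per 100,000 inhabitants. Direct standardisation is assumed here as one common approach; the source does not say which method the project uses, and the age groups, reference weights and all figures are invented for the example.

```python
def standardised_rate(discharges, population, reference_weights, per=100_000):
    """Directly age-standardised rate.

    discharges, population: dicts keyed by age group for one territory.
    reference_weights: share of each age group in a reference population
    (the shares must sum to 1).
    """
    if abs(sum(reference_weights.values()) - 1.0) > 1e-9:
        raise ValueError("reference weights must sum to 1")
    rate = 0.0
    for group, weight in reference_weights.items():
        age_specific_rate = discharges[group] / population[group]
        rate += weight * age_specific_rate
    return rate * per


def crude_rate(discharges, population, per=100_000):
    """Total discharges over total population, ignoring age structure."""
    return sum(discharges.values()) / sum(population.values()) * per


# Hypothetical reference population shares.
weights = {"0-14": 0.15, "15-64": 0.65, "65+": 0.20}

# Two hypothetical provinces with identical age-specific rates
# but different age structures (province B is older).
province_a = {
    "discharges": {"0-14": 300, "15-64": 2_600, "65+": 3_000},
    "population": {"0-14": 15_000, "15-64": 65_000, "65+": 20_000},
}
province_b = {
    "discharges": {"0-14": 200, "15-64": 2_000, "65+": 6_000},
    "population": {"0-14": 10_000, "15-64": 50_000, "65+": 40_000},
}

for name, p in (("A", province_a), ("B", province_b)):
    print(
        name,
        round(crude_rate(p["discharges"], p["population"])),
        round(standardised_rate(p["discharges"], p["population"], weights)),
    )
```

In this toy data the crude rates differ only because province B has an older population; after standardisation both provinces show the same rate, which is what makes such indicators useful for benchmarking.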

In addition to the microdata from the INE Hospital Morbidity Survey, the project integrates statistics from the Continuous Register (also from the INE), data from the ICD10 diagnosis catalogues of the Ministry of Health, catalogues and indicators from the Agency for Healthcare Research and Quality (AHRQ), and catalogues and stratification tools from Autonomous Communities such as Catalonia.

You can see the result of this work here.

Third prize: RIAN - Intelligent Activity and Nutrition Recommender

- Team: RIAN Open Data Team, composed of Jesús Noguera and Raúl Micharet.

This project was created to promote healthy habits and combat overweight, obesity, sedentary lifestyles and poor nutrition among children and adolescents. It is an application designed for mobile devices that uses gamification techniques, as well as augmented reality and artificial intelligence algorithms to make recommendations.

Users have to solve personalised challenges, individually or collectively, linked to nutritional aspects and physical activities, such as gymkhanas or games in public green spaces.

In relation to the use of open data, the pilot uses data related to green areas, points of interest, greenways, activities and events belonging to the cities of Malaga, Madrid, Zaragoza and Barcelona. In addition, these data are combined with nutritional recommendations (food data and nutritional values and branded food products) and data for food recognition by images from Tensorflow or Kaggle, among others.

Alberto Martínez Lacambra, Director General of Red.es presents the awards and announces a new edition

The three winners were announced by Alberto Martínez Lacambra, Director General of Red.es, at a ceremony held at Red.es headquarters on 27 October. The event was attended by several members of the jury, who were able to talk to the three winning teams.

Martínez Lacambra also announced that Red.es is already working to shape the V Aporta Challenge, which will focus on the value of data for the improvement of the common good, justice, equality and equity.

Once again this year, the Aporta Initiative would like to congratulate the three winners, as well as to thank the work and talent of all the participants who decided to invest their time and knowledge in thinking and developing proposals for the fourth edition of the Aporta Challenge.

On 24 February Europe entered a scenario that not even the data could have predicted: Russia invaded Ukraine, unleashing the first war on European soil so far in the 21st century.

Almost five months later, on 26 September, the United Nations (UN) published its official figures: 4,889 dead and 6,263 wounded. According to the official UN data, month after month, the reality of the Ukrainian victims was as follows:

| Date | Deceased | Injured |
|---|---|---|
| 24-28 February | 336 | 461 |
| March | 3,028 | 2,384 |
| April | 660 | 1,253 |
| May | 453 | 1,012 |
| June | 361 | 1,029 |
| 1-3 July | 51 | 124 |
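The monthly rows can be checked against the overall totals quoted before the table (4,889 dead and 6,263 wounded). A minimal sketch in Python, with the figures transcribed from the table:

```python
# Monthly civilian casualty figures transcribed from the table:
# (period, deceased, injured)
monthly = [
    ("24-28 February", 336, 461),
    ("March", 3028, 2384),
    ("April", 660, 1253),
    ("May", 453, 1012),
    ("June", 361, 1029),
    ("1-3 July", 51, 124),
]

total_deceased = sum(deceased for _, deceased, _ in monthly)
total_injured = sum(injured for _, _, injured in monthly)

print(total_deceased, total_injured)  # 4889 6263
```

The sums match the reported totals, so the table covers the whole period behind those figures.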

According to data extracted by the mission that the UN High Commissioner for Human Rights has been carrying out in Ukraine since Russia invaded Crimea in 2014, the total number of civilians displaced as a result of the conflict is more than 7 million people.

However, as in other areas, the data serve not only to develop solutions, but also to gain an in-depth understanding of aspects of reality that would otherwise not be possible. In the case of the war in Ukraine, the collection, monitoring and analysis of data on the territory allows organisations such as the United Nations to draw their own conclusions.

With the aim of making visible how data can be used to achieve peace, we will now analyse the role of data in relation to the following tasks:

Prediction

In this area, data are used to try to anticipate situations and plan an appropriate response to the anticipated risk. Whereas before the outbreak of war, data was used to assess the risk of future conflict, it is now being used to establish control and anticipate escalation.

For example, satellite images provided by applications such as Google Maps have made it possible to monitor the advance of Russian troops. Similarly, visualisers such as Subnational Surge Tracker identify peaks of violence at different administrative levels: states, provinces or municipalities.

Information

It is just as important to know the facts in order to prevent violence as it is to use them to limit misinformation and communicate the facts objectively, truthfully and in line with official figures. To achieve this, fact-checking applications have begun to be used, capable of responding to fake news with official data.

Among them is NewsGuard, a verification entity that has developed a tracker that gathers all the websites that share disinformation about the conflict, placing special emphasis on the most popular false narratives circulating on the web. It even catalogues this type of content according to the language in which it is promoted.

Material damage

The data can also be used to locate material damage and track the occurrence of new damage. Over the past months, the Russian offensive has damaged the Ukrainian public infrastructure network, rendering roads, bridges, water and electricity supplies, and even hospitals unusable.

Data on this reality is very useful for organising a response aimed at reconstructing these areas and sending humanitarian assistance to civilians who have been left without services.

In this sense, we highlight the following use cases:

- The United Nations Development Programme's (UNDP) machine learning algorithm has been developed and improved to identify and classify war-damaged infrastructure.

- In parallel, the HALO Trust uses mining techniques capable of capturing information from social media, satellite imagery and even geographic data to help identify areas with "explosive remnants". Thanks to these findings, organisations deployed across the Ukrainian terrain can move more safely to organise a coordinated humanitarian response.

- The light information captured by NASA satellites is also being used to build a database to help identify areas of active conflict in Ukraine. As in the previous examples, this data can be used to track and send aid to where it is most needed.

Human rights violations and abuses

Unfortunately, in such conflicts, violations of the human rights of the civilian population are the order of the day. In fact, according to experience on the ground and information gathered by the UN High Commissioner for Human Rights, such violations have been documented throughout the entire period of war in Ukraine.

In order to understand what is happening to Ukrainian civilians, monitoring and human rights officers collect data, public information and first-person accounts of the war in Ukraine. From this, they develop a mosaic map that facilitates decision-making and the search for just solutions for the population.

Another very interesting work developed with open data is carried out by Conflict Observatory. Thanks to the collaboration of analysts and developers, and the use of geospatial information and artificial intelligence, it has been possible to discover and map war crimes that might otherwise remain invisible.

Migratory movements

Since the outbreak of war last February, more than 7 million Ukrainians have fled the war and thus their own country. As in previous cases, data on migration flows can be used to bolster humanitarian efforts for refugees and internally displaced persons (IDPs).

Some of the initiatives where open data contributes include the following:

The Displacement Tracking Matrix is a project developed by the International Organization for Migration and aimed at obtaining data on migration flows within Ukraine. Based on the information provided by approximately 2,000 respondents through telephone interviews, a database was created and used to ensure the effective distribution of humanitarian actions according to the needs of each area of the country.

Humanitarian response

Similar to the analysis carried out to monitor migratory movements, the data collected on the conflict also serves to design humanitarian response actions and track the aid provided.

In this line, one of the most active actors in recent months has been the United Nations Population Fund (UNFPA), which created a dataset containing updated projections by gender, age and Ukrainian region. In other words, thanks to this updated mapping of the Ukrainian population, it is much easier to think about what needs each area has in terms of medical supplies, food or even mental health support.

Another initiative that is also providing support in this area is the Ukraine Data Explorer, an open source project developed on the Humanitarian Data Exchange (HDX) platform that provides collaboratively collected information on refugees, victims and funding needs for humanitarian efforts.

Finally, the data collected and subsequently analysed by Premise provides visibility on areas with food and fuel shortages. Monitoring this information is really useful for locating the areas of the country with the least resources for people who have migrated internally and, in turn, for signalling to humanitarian organisations which areas are most in need of assistance.

Innovation and the development of tools capable of collecting data and drawing conclusions from it is undoubtedly a major step towards reducing the impact of armed conflict. Thanks to this type of forecasting and data analysis, it is possible to respond quickly and in a coordinated manner to the needs of civil society in the most affected areas, without neglecting the refugees who are displaced thousands of kilometres from their homes.

We are facing a humanitarian crisis that has generated more than 12.6 million cross-border movements. Specifically, our country has attended to more than 145,600 people since the beginning of the invasion and more than 142,190 applications for temporary protection have been granted, 35% of them to minors. These figures make Spain the fifth Member State with the highest number of favourable temporary protection decisions. Likewise, more than 63,500 displaced persons have been registered in the National Health System and, with the start of the academic year, there are 30,919 displaced Ukrainian students enrolled in school, of whom 28,060 are minors.

Content prepared by the datos.gob.es team.

Do you accept the challenge of transforming Bizkaia from its open data? This is the "Datathon Open Data Bizkaia", a collaborative development competition organised by Lantik and the Provincial Council of Bizkaia.

Participants will have to create the mockup of an application that helps to solve problems affecting the citizens of Bizkaia. To do so, they will have to use at least one dataset from among all those available on the Open Data Bizkaia portal. These datasets may be combined with data from other sources.

How does the competition unfold?

The competition will be held in two phases:

- First phase. Participating teams must submit a proposal document in PDF format. Among other information, the proposal shall include a brief description of the solution, its functionalities and the datasets used.

- Second phase. A jury will evaluate all applications that are received on time and in the correct form. Seven finalist proposals will then be selected. The shortlisted teams will have to produce a mockup and a promotional video of no more than 2 minutes, presenting the team members and describing the most outstanding features of the solution.

These phases will be carried out according to the following timetable:

- From 19 September to 19 October. Registration period open to submit proposals in PDF format.

- 26 October. Announcement of shortlisted teams.

- 14 November. Deadline for submitting the mockup and video.

- 18 November. The final will be held in Bilbao, although it will also be possible to attend online. The videos will be presented and the winning teams will be selected.

Who can participate?

The competition is open to anyone over 16 years of age, regardless of nationality, as long as they have a valid DNI/NIF/NIE, passport or other public document that proves the identity and age of the participant.

You can participate as an individual or in teams of a maximum of six people.

What do the prizes consist of?

Two winners will be chosen from the 7 finalists, who will receive the following prize money:

- First prize: €2,500.

- Second prize: €1,500.

In addition, the other finalist teams will receive €500.

In order to carry out the assessment, the jury will take as a reference a series of criteria detailed in the competition rules: relevance, reuse of open data and fitness for purpose.

How can I participate?

Participants must upload their proposal to a SharePoint site set up for this purpose. A model document that can be used as a reference can be found on the website.

Beforehand, it is necessary to register using the form on the website. After registration, the team will receive an e-mail with instructions on how to submit the proposal.

The proposal must be submitted before 12:00 on 19 October 2022.

Find out more about Open Data Bizkaia

Open Data Bizkaia provides citizens and reusing agents with access to the public information managed by the Provincial Council of Bizkaia. There are currently more than 900 datasets available.

Its website also offers resources for reusers, an API, good practices and examples of applications created with datasets from the portal that can serve to inspire the participants in this competition.

Find out more about Bizkaia's Open Data strategy in this article.

After several months of tests and different types of training, the first massive Artificial Intelligence system in the Spanish language is capable of generating its own texts and summarising existing ones. MarIA is a project that has been promoted by the Secretary of State for Digitalisation and Artificial Intelligence and developed by the National Supercomputing Centre, based on the web archives of the National Library of Spain (BNE).

This is a very important step forward in this field, as it is the first artificial intelligence system expert in understanding and writing in Spanish. As part of the Language Technology Plan, this tool aims to contribute to the development of a digital economy in Spanish, thanks to the potential that developers can find in it.

The challenge of creating the language assistants of the future

MarIA-style language models are the cornerstone of the development of the natural language processing, machine translation and conversational systems that are so necessary to understand and automatically replicate language. MarIA is an artificial intelligence system made up of deep neural networks that have been trained to acquire an understanding of the language, its lexicon and its mechanisms for expressing meaning and writing at an expert level.

Thanks to this groundwork, developers can create language-related tools capable of classifying documents, making corrections or developing translation tools.

The first version of MarIA was developed with RoBERTa, a technology that creates language models of the "encoder" type, capable of generating an interpretation that can be used to categorise documents, find semantic similarities in different texts or detect the sentiments expressed in them.

The latest version of MarIA, in turn, has been developed with GPT-2, a more advanced technology that creates generative decoder models and adds features to the system. Thanks to these decoder models, the latest version of MarIA is able to generate new text from a previous example, which is very useful for summarising, simplifying large amounts of information, generating questions and answers and even holding a dialogue.

Advances such as the above make MarIA a tool that, with training adapted to specific tasks, can be of great use to developers, companies and public administrations. Along these lines, similar models that have been developed in English are used to generate text suggestions in writing applications, summarise contracts or search for specific information in large text databases in order to subsequently relate it to other relevant information.

In other words, in addition to writing texts from headlines or words, MarIA can understand not only abstract concepts, but also their context.

More than 135 billion words at the service of artificial intelligence

To be precise, MarIA has been trained with 135,733,450,668 words from millions of web pages collected by the National Library, which occupy a total of 570 Gigabytes of information. The MareNostrum supercomputer at the National Supercomputing Centre in Barcelona was used for the training, and a computing power of 9.7 trillion operations (969 exaflops) was required.

Bearing in mind that one of the first steps in designing a language model is to build a corpus of words and phrases that serves as a database to train the system itself, in the case of MarIA, it was necessary to carry out a screening to eliminate all the fragments of text that were not "well-formed language" (numerical elements, graphics, sentences that do not end, erroneous encodings, etc.) and thus train the AI correctly.
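The kind of screening described above can be sketched with a few simple heuristics. The rules and thresholds below are illustrative assumptions, not the filters actually used to build the MarIA corpus.

```python
import re

# Characters that may legitimately close a sentence (assumed list).
SENTENCE_ENDINGS = (".", "!", "?", "…", "»", '"')

# U+00C3 followed by U+0080-U+00BF is the usual trace of UTF-8 text
# that was wrongly decoded as Latin-1 (an "erroneous encoding").
MOJIBAKE = re.compile("\u00c3[\u0080-\u00bf]")


def is_well_formed(fragment: str, min_alpha_ratio: float = 0.7) -> bool:
    """Heuristic check that a fragment looks like well-formed language."""
    text = fragment.strip()
    if not text:
        return False
    # U+FFFD marks bytes that could not be decoded at all.
    if "\ufffd" in text or MOJIBAKE.search(text):
        return False
    # Discard sentences that do not end.
    if not text.endswith(SENTENCE_ENDINGS):
        return False
    # Discard fragments dominated by digits or symbols (tables, figures).
    alpha = sum(ch.isalpha() or ch.isspace() for ch in text)
    return alpha / len(text) >= min_alpha_ratio


# Simulate a badly decoded sentence: UTF-8 bytes read as Latin-1.
broken = "La señal llegó tarde.".encode("utf-8").decode("latin-1")

fragments = [
    "La biblioteca conserva millones de documentos.",
    "Esta frase no termina",
    "2021 34,5 12.700 8.",
    broken,
]

clean_corpus = [f for f in fragments if is_well_formed(f)]
print(clean_corpus)
```

Only the first fragment survives: the second has no sentence ending, the third is almost entirely numeric and the fourth carries encoding errors.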

Thanks to the volume of information it handles, MarIA makes Spanish the language with the third largest number of massive open-access models for understanding and writing text. Only English and Mandarin are ahead of it. This has been possible mainly for two reasons: on the one hand, the high level of digitisation of the National Library's heritage and, on the other, the existence of a National Supercomputing Centre with supercomputers such as the MareNostrum 4.

The role of BNE datasets

Since it launched its own open data portal (datos.bne.es) in 2014, the BNE has been committed to making the data it holds and has in its custody more accessible: data on the works it preserves, but also on authors, controlled vocabularies of subjects and geographical terms, among others.

In recent years, the educational platform BNEscolar has also been developed, which seeks to offer digital content from the Hispánica Digital Library's documentary collection that may be of interest to the educational community.

Likewise, and in order to comply with international standards of description and interoperability, the BNE data are identified by means of URIs and linked conceptual models, through semantic technologies and offered in open and reusable formats. In addition, they have a high level of standardisation.

Next steps

Thus, and with the aim of perfecting and expanding the possibilities of use of MarIA, it is intended that the current version will give way to others specialised in more specific areas of knowledge. Given that it is an artificial intelligence system dedicated to understanding and generating text, it is essential for it to be able to cope with lexicons and specialised sets of information.

To this end, the PlanTL will continue to expand MarIA to adapt to new technological developments in natural language processing (more complex models than the GPT-2 now implemented, trained with larger amounts of data) and will seek ways to create workspaces to facilitate the use of MarIA by companies and research groups.

Content prepared by the datos.gob.es team.

Open data portals are experiencing significant growth in the number of datasets being published in the transport and mobility category. For example, the EU's open data portal already has almost 48,000 datasets in the transport category, while Spain's own portal, datos.gob.es, has around 2,000 if we include those in the public sector category. One of the main reasons for the growth in the publication of transport-related data is the existence of three directives that aim to maximise the re-use of datasets in the area. The PSI directive on the re-use of public sector information, in combination with the INSPIRE directive on spatial information infrastructure and the ITS directive on the implementation of intelligent transport systems, together with other legislative developments, make it increasingly difficult to justify keeping transport and mobility data closed.

In this sense, in Spain, Law 37/2007, as amended in November 2021, adds the obligation to publish open data to commercial companies belonging to the institutional public sector that act as airlines. This goes a step further than the more frequent obligations with regard to data on public passenger transport services by rail and road.

In addition, open data is at the heart of smart, connected and environmentally friendly mobility strategies, both in the case of the Spanish "es.movilidad" strategy and in the case of the sustainable mobility strategy proposed by the European Commission. In both cases, open data has been introduced as one of the key innovation vectors in the digital transformation of the sector to contribute to the achievement of the objectives of improving the quality of life of citizens and protecting the environment.

However, much less is said about the importance and necessity of open data during the research phase, which then leads to the innovations we all enjoy. And without this stage in which researchers work to acquire a better understanding of the functioning of the transport and mobility dynamics of which we are all a part, and in which open data plays a fundamental role, it would not be possible to obtain relevant innovations or well-informed public policies. In this sense, we are going to review two very relevant initiatives in which coordinated multi-national efforts are being made in the field of mobility and transport research.

The information and monitoring system for transport research and innovation

At the European level, the EU also strongly supports research and innovation in transport, aware that it needs to adapt to global realities such as climate change and digitalisation. The Strategic Transport Research and Innovation Agenda (STRIA) describes what the EU is doing to accelerate the research and innovation needed to radically change transport by supporting priorities such as electrification, connected and automated transport or smart mobility.

In this sense, the Transport Research and Innovation Monitoring and Information System (TRIMIS) is the tool maintained by the European Commission to provide open access information on research and innovation (R&I) in transport and was launched with the mission to support the formulation of public policies in the field of transport and mobility.

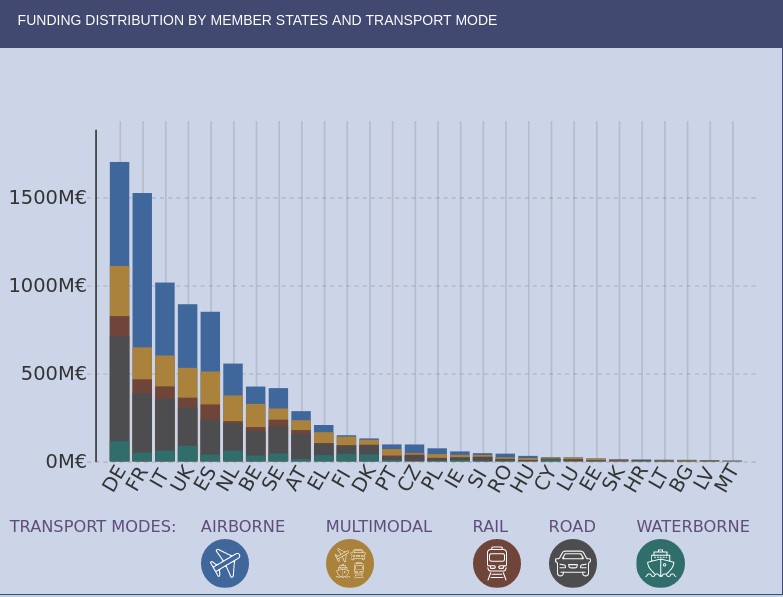

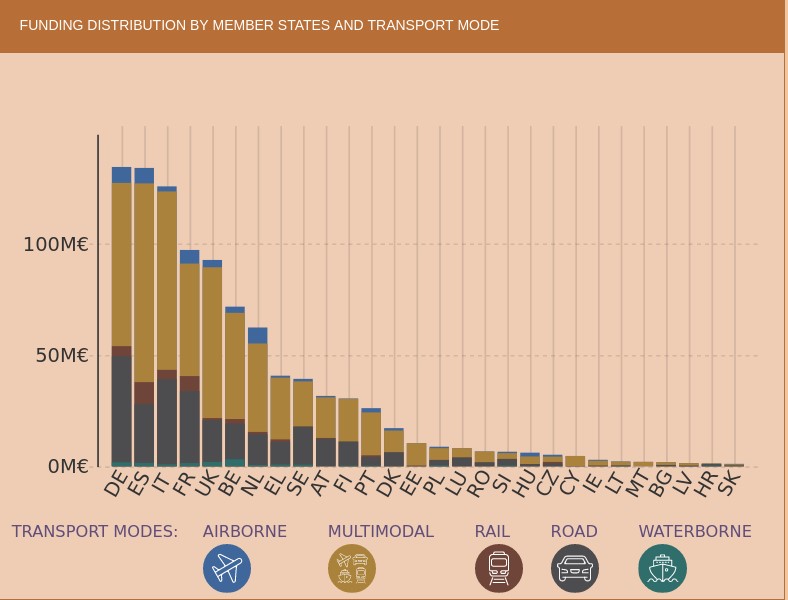

TRIMIS maintains an up-to-date dashboard to visualise data on transport research and innovation and provides an overview and detailed data on the funding and organisations involved in this research. The information can be filtered by the seven STRIA priorities and also includes data on the innovation capacity of the transport sector.

If we look at the geographical distribution of research funds provided by TRIMIS, we see that Spain appears in fifth place, far behind Germany and France. The transport systems in which the greatest effort is being made are road and air transport, beneficiaries of more than half of the total effort.

However, we find that in the strategic area of Smart Mobility and Services (SMO), which are evaluated in terms of their contribution to the overall sustainability of the energy and transport system, Spain is leading the research effort at the same level as Germany. It should also be noted that the effort being made in Spain in terms of multimodal transport is higher than in other countries.

As an example of the research effort being carried out in Spain, we have the pilot dataset to implement semantic capabilities on traffic incident information related to safety on the Spanish state road network, except for the Basque Country and Catalonia, which is published by the General Directorate of Traffic and which uses an ontology to represent traffic incidents developed by the University of Valencia.

The area of intelligent mobility systems and services aims to contribute to the decarbonisation of the European transport sector and its main priorities include the development of systems that connect urban and rural mobility services and promote modal shift, sustainable land use, travel demand sufficiency and active and light travel modes; the development of mobility data management solutions and public digital infrastructure with fair access or the implementation of intermodality, interoperability and sectoral coupling.

The 100 mobility questions initiative

The 100 Questions Initiative, launched by The GovLab in collaboration with Schmidt Futures, aims to identify the world's 100 most important questions in a number of domains critical to the future of humanity, such as gender, migration or air quality.

One of these domains is dedicated precisely to transport and urban mobility and aims to identify questions where data and data science have great potential to provide answers that will help drive major advances in knowledge and innovation on the most important public dilemmas and the most serious problems that need to be solved.

In accordance with the methodology used, the initiative completed the fourth stage on 28 July, in which the general public voted to decide on the final 10 questions to be addressed. The initial 48 questions were proposed by a group of mobility experts and data scientists and are designed to be data-driven and planned to have a transformative impact on urban mobility policies if they can be solved.

In the next stage, the GovLab working group will identify which datasets could provide answers to the selected questions, some as complex as "where do commuters want to go but really can't and what are the reasons why they can't reach their destination easily?" or "how can we incentivise people to make trips by sustainable modes, such as walking, cycling and/or public transport, rather than personal motor vehicles?"

Other questions relate to the difficulties encountered by reusers and have been frequently highlighted in research articles such as "Open Transport Data for maximising reuse in multimodal route": "How can transport/mobility data collected with devices such as smartphones be shared and made available to researchers, urban planners and policy makers?"

In some cases it is foreseeable that the datasets needed to answer the questions may not be available or may belong to private companies, so an attempt will also be made to define what new datasets should be generated to help fill the gaps identified. The ultimate goal is to provide a clear definition of the data requirements to answer the questions and to facilitate the formation of data collaborations that will contribute to progress towards these answers.

Ultimately, changes in the way we use transport and lifestyles, such as the use of smartphones, mobile web applications and social media, together with the trend towards renting rather than owning a particular mode of transport, have opened up new avenues towards sustainable mobility and enormous possibilities in the analysis and research of the data captured by these applications.

Global initiatives to coordinate research efforts are therefore essential as cities need solid knowledge bases to draw on for effective policy decisions on urban development, clean transport, equal access to economic opportunities and quality of life in urban centres. We must not forget that all this knowledge is also key to proper prioritisation so that we can make the best use of the scarce public resources that are usually available to meet the challenges.

Content written by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and views reflected in this publication are the sole responsibility of the author.