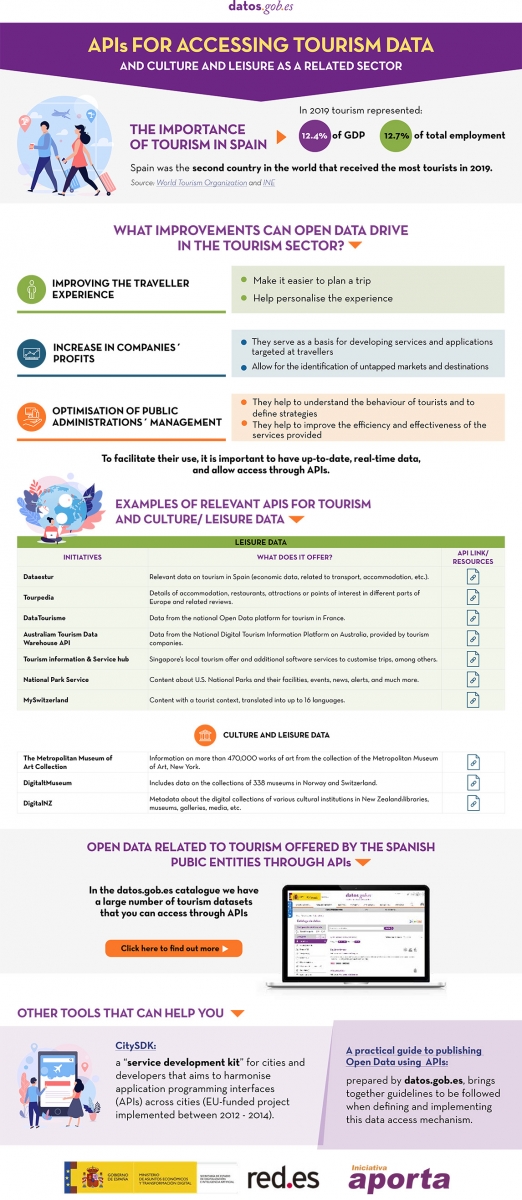

Spain was the second most visited country in the world in 2019, with 83.8 million visitors. That year, tourism accounted for 12.4% of GDP and employed more than 2.2 million people (12.7% of total employment). It is therefore a fundamental sector for our economy.

These figures fell because of the pandemic, but the sector is expected to recover in the coming months, and open data can help. Up-to-date information can benefit all the actors involved in this industry:

- Tourists: Open data helps tourists plan their trips, giving them the information they need to choose where to stay or what to do. The up-to-date information that open data provides is particularly important during the COVID-19 pandemic. Several portals collect information and visualisations on travel restrictions, such as the UN's Humanitarian Data Exchange, which hosts a daily updated interactive map of travel restrictions by country and airline.

- Businesses: Businesses can develop applications that give travellers useful information. In addition, by analysing data, tourism establishments can detect untapped markets and destinations. They can also personalise their offers and even build recommendation systems that promote different activities, improving the travellers' experience.

- Public administrations: More and more governments are implementing solutions that capture and analyse data from different sources in real time to better understand the behaviour of their visitors. Examples include Segovia, Mallorca and Gran Canaria. These tools allow them to define strategies and make informed decisions, for example to avoid overcrowding. In this regard, tools such as Affluences report the real-time occupancy of museums, swimming pools and shops, and provide predictions for upcoming time slots.

The benefits of having quality tourism-related data are such that it is not surprising that the Spanish Government has chosen this sector as a priority when it comes to creating data spaces that allow voluntary data sharing between organisations. In this way, data from different sources can be cross-referenced, enriching the various use cases.

The data used in this field are very diverse: data on consumption, transport, cultural activities, economic trends or even weather forecasts. But in order to make good use of this highly dynamic data, it needs to be available to users in appropriate, up-to-date formats and access needs to be automated through application programming interfaces (APIs).
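As a rough illustration of what automated access through an API can look like, the sketch below builds a query URL and parses a JSON response using only the Python standard library. The endpoint (`https://example.org/api/tourism/occupancy`), the query parameters (`municipality`, `date`) and the response shape (`{"items": [...]}`) are hypothetical placeholders; each real portal documents its own URLs and formats.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint: replace with the documented API URL of a real portal.
BASE_URL = "https://example.org/api/tourism/occupancy"


def build_query_url(base_url: str, **params: str) -> str:
    """Append query parameters (hypothetical names such as municipality, date)."""
    return f"{base_url}?{urllib.parse.urlencode(params)}"


def parse_records(raw: bytes) -> list[dict]:
    """Decode a JSON body assumed (hypothetically) to look like {"items": [...]}."""
    payload = json.loads(raw.decode("utf-8"))
    return payload.get("items", [])


def fetch_records(url: str, timeout: float = 10.0) -> list[dict]:
    """Download and parse one response; this step needs network access."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_records(response.read())


if __name__ == "__main__":
    url = build_query_url(BASE_URL, municipality="Segovia", date="2022-03-01")
    print(url)
    # records = fetch_records(url)  # run only against a real, documented endpoint
```

Scheduling a script like this (for example, once an hour) is what turns a static download into the kind of continuously updated feed that dynamic tourism data requires.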

Many organisations already offer data through APIs. In this infographic you can see several examples linked to our country at national, regional and local level. But in addition to general data portals, we can also find APIs in open data platforms linked exclusively to the tourism sector. In the following infographic you can see several examples:

Do you know more examples of APIs or other resources that facilitate access to tourism-related open data? Leave us a comment or write to datos.gob.es!

Content prepared by the datos.gob.es team.

The end of winter is approaching and, with the change of season, comes the time to compile the main developments of the last three months.

Autumn ended with the approval of Royal Decree-Law 24/2021, which transposes the European Directive on open data and the re-use of public sector information. Winter now closes with another regulatory advance, this time at European level: the publication of the proposal for a Regulation on harmonised rules on fair access to and use of data (Data Act). Applicable to all economic sectors, it proposes new rules on who can access and use data generated in the EU, and under what conditions.

These regulatory developments have been joined by many others in the area of openness and re-use of data. In this article we highlight some examples.

Public data and disruptive technologies

The relationship between open data and new technologies is increasingly evident through various projects that aim to generate improvements in society. Universities are a major player in this field, with innovative projects such as:

- The Universitat Oberta de Catalunya (UOC) has launched the project "Bots for interacting with open data - Conversational interfaces to facilitate access to public data". Its aim is to help citizens improve their decision-making through access to data, as well as to optimise the return on investment of open data projects.

- The UOC has also launched, together with the Universitat Politècnica de València (UPV) and the Universitat Politècnica de Catalunya (UPC), OptimalSharing@SmartCities, which optimises the use of car sharing in cities through intelligent algorithms capable of processing large amounts of data. They are currently working with data from the Open Data BCN initiative.

- Researchers from the University of Cantabria are participating in the SALTED project, aimed at reusing open data from IoT devices, the web and social networks. The aim is to transform them into "useful" information in fields such as urban management or agriculture.

Public bodies are also increasingly harnessing the potential of open data to implement solutions that optimise resources, boost efficiency and improve the citizen experience. Many of these projects are linked to smart cities.

- The Cordoba Provincial Council's 'Enlaza, Cordoba Smart Municipalities' project seeks to manage municipal electricity supplies intelligently. Proprietary software has been developed and sensors have been installed in various municipal facilities to obtain data that facilitates decision-making. Among other things, the province's infrastructure will be used to incorporate a platform that promotes the use of open data.

- The eCitySevilla and eCityMálaga projects have brought together 90 public and private entities to promote a smart city model at the forefront of innovation and sustainability. Among other things, they will integrate open data, renewable energies, sustainable transport, efficient buildings and digital infrastructures.

- One area where data-driven solutions have a major impact is tourism. In this regard, the Segovia Provincial Council has created a digital platform that collects tourism data so that it can adapt its offer to visitors' demands. The platform will display updated data in real time, providing deeper insight into visitors' tourism behaviour.

- For its part, the Consell de Mallorca has set up a Sustainable Tourism Observatory that will provide permanently updated information to define strategies and make decisions based on real data.

To boost the use of data analytics in public bodies, the Andalusian Digital Agency has announced the creation of a Big Data unit, which will provide data analytics services to different Andalusian government agencies.

Other examples of open data re-use

Open data is also increasingly in demand by journalists for so-called data journalism. This is especially noticeable in election periods, such as the recent elections to the Castilla y León parliament. Re-users such as Maldita or EPData have taken advantage of the open data offered by the Junta to create informative pieces and interactive maps to bring information closer to the citizens.

Public bodies themselves also use visualisations to bring data to the public in a simple way. One example is the National Library of Spain's map of Spanish authors who died in 1941, whose works entered the public domain in 2022 and can therefore be edited, reproduced or publicly disseminated.

Another example of open data reuse can be found in the Fallas of Valencia. In addition to the classic ninots, the festival also has immaterial fallas that combine tradition, technology and science outreach. This year, one of them is an interactive game that uses open data from the city to pose a range of questions.

Open data platforms are constantly being upgraded

During this season we have also seen many public bodies launching new portals and tools to facilitate access to data, and thus its re-use. Likewise, catalogues have been constantly updated and the information offered has been expanded. The following are some examples:

- Sant Boi Town Council has recently launched its digital platform Open Data. It is a space that allows users to explore and download open data from the City Council easily, free of charge and without restrictions.

- As part of its Smart City project, Alcoi City Council has set up a website with open data on traffic and environmental indicators. Here you can consult data on air quality, sound pressure, temperature and humidity in different parts of the city.

- The Castellón Provincial Council has developed an intuitive and easy-to-use tool to facilitate and accompany citizens' requests for access to public information. It has also updated the information on infrastructures and equipment of the municipalities of Castellón on the provincial institution's data portal in geo-referenced formats, which facilitates its reuse.

- The Institute of Statistics and Cartography of Andalusia (IECA) has updated the data tables offered through its BADEA databank. Users can now sort and filter all the information with a single click. In addition, a new section has been created on the website aimed at reusers of statistical information. Its aim is to make the data more accessible and interoperable.

- IDECanarias has published an orthophoto of La Palma after the volcanic eruption. It can be viewed through the GRAFCAN viewer. It should be noted that open data has been of great importance in analysing the damage caused by the lava on the island.

- GRAFCAN has also updated the Territorial Information System of the Canary Islands (SITCAN), incorporating 25 new points of interest. This update facilitates the location of 36,753 points of interest in the archipelago through the portal of the Spatial Data Infrastructure of the Canary Islands (IDECanarias).

- Barcelona Provincial Council offers a new service for downloading open geographic data, with free and open access, through the IDEBarcelona geoportal. The service was presented in a session (in Catalan), which was recorded and is available on YouTube.

- The municipal GIS of Cáceres City Council has made the updated cartography of this Extremaduran city available for free download in formats such as DGN, DWG, SHP and KMZ.

New reports, guides, courses and challenges

These three months have also seen the publication and launch of resources and activities aimed at promoting open data:

- The Junta de Castilla y León has published the guide "Smart governance: the challenge of public service management in local government", which includes tools and use cases to boost efficiency and effectiveness through participation and open data.

- The Spanish National Research Council (CSIC) has updated the ranking of open access portals and repositories worldwide in support of Open Science initiatives. This ranking is based on the number of papers indexed in the Google Scholar database.

- The commitment of local councils to open data is also evident in training initiatives and the internal promotion of open data. In this regard, L'Hospitalet City Council has launched two new internal tools to promote the use and dissemination of data among municipal employees: a data visualisation guide and a guide to graphic guidelines and data visualisation style.

- Along the same lines, the Spanish Federation of Municipalities and Provinces (FEMP) has launched the Course on Open Data Treatment and Management in Local Entities, to be held at the end of March (on 22, 24, 29 and 31 March 2022). The course is aimed at technical staff of Local Entities with no prior knowledge of the subject.

Multiple competitions have also been launched to boost the use of data, especially in education. One example is POLIS, a Generalitat Valenciana project in which students from three secondary schools will learn the importance of understanding and analysing public policies using open data from public bodies.

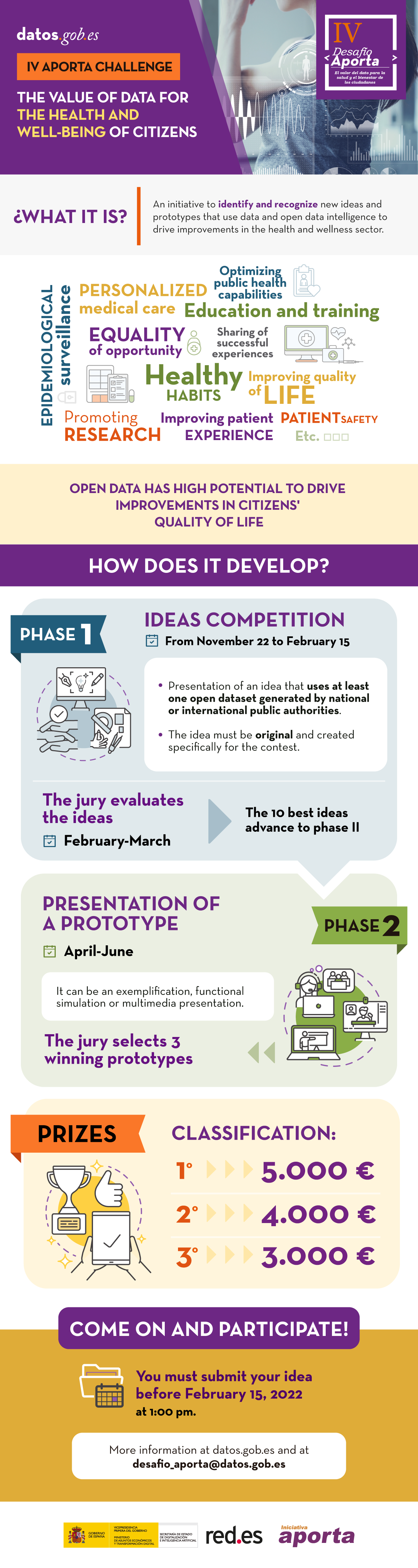

In addition, the registration period for the IV Aporta Challenge, focused on Health and Wellbeing, ended this winter. The proposals received include predictive models that forecast how diseases will evolve and algorithms that cross-reference data to identify healthy habits.

Other news of interest in Europe

In addition to the publication of the Data Act, we have also seen other developments in Europe:

- Joinup has published DCAT-AP 2.1, the latest version of the metadata application profile specification for open data portals in Europe.

- In line with the European Data Strategy, the European Commission has published a working document with its overview of common European data spaces.

- The Publications Office of the European Union has launched the sixth edition of the EU Datathon. In this competition, participants have to use open data to address one of four proposed challenges.

- The EU Science Hub has published a report presenting examples of use cases linked to data sharing. They explore emerging technologies and tools for data-driven innovation.

These are just some examples of the latest developments in the open data ecosystem in Spain and Europe. If you want to share a project or news with us, leave us a comment or write to dinamizacion@datos.gob.es.

The deadline for applications to the IV Aporta Challenge closed on 15 February. In total, 38 valid proposals were received in due time and form, all of high quality, aiming to improve citizens' health and well-being through the reuse of data published by public administrations.

Disruptive technologies, key to extracting maximum value from data

According to the competition rules, in this first phase, participants had to present ideas that identified new opportunities to capture, analyse and use data intelligence in the development of solutions of all kinds: studies, mobile applications, services or websites.

All the ideas seek to address various challenges related to health and wellbeing, many of which have a direct impact on our healthcare system, such as improving the efficiency of services, optimising resources or boosting transparency. Some of the areas addressed by participants include pressure on the health system, diagnosis of diseases, mental health, healthy lifestyles, air quality and the impact of climate change.

Many of the participants have chosen disruptive technologies to address these challenges. The proposals include solutions that harness algorithms to cross-reference data and identify healthy habits, and predictive models that forecast how diseases or the health system will evolve. Some even use gamification techniques. A large number of solutions also aim to bring useful information to citizens through maps or visualisations.

Likewise, the specific groups at which the solutions are aimed are diverse: we find tools aimed at improving the quality of life of people with disabilities, the elderly, children, individuals who live alone or who need home care, etc.

Proposals from all over Spain and with a greater presence of women

Teams and individuals from all over Spain took part in the Challenge, with representatives from 13 Autonomous Communities: Madrid, Catalonia, the Basque Country, Andalusia, Valencia, the Canary Islands, Galicia, Aragon, Extremadura, Castile and Leon, Castile-La Mancha, La Rioja and Asturias.

25% of the proposals were submitted by individuals and 75% by multidisciplinary teams. A 75/25 split also appears, in the other direction, between natural persons (75%) and legal entities (25%). Among the legal entities are teams from universities, organisations linked to the Public Administration and various companies.

It is worth noting that in this edition the number of women participants has increased, demonstrating the progress of our society in the field of equality. Two editions ago, 38% of the proposals were submitted by women or by teams with women members. Now that number has risen to 47.5%. While this is a significant improvement, there is still work to be done in promoting STEM subjects among women and girls in our country.

Jury deliberation begins

Now that the proposals have been accepted, it is time for them to be assessed by the jury, made up of experts in innovation, data and health. The assessment will follow a series of criteria detailed in the rules, such as the overall quality and clarity of the idea, the data sources used and the expected impact on improving citizens' health and well-being.

The 10 proposals with the best evaluation will move on to phase II, and will have a minimum of two months to develop the prototype resulting from their idea. The proposals will be presented to the same jury, which will score each project individually. The three prototypes with the highest scores will be the winners and will receive a prize of 5,000, 4,000 and 3,000 euros, respectively.

Good luck to all participants!

Today, no one can deny that open data holds great economic power. The European Commission itself estimates that the turnover of open data in the EU27 could reach €334.2 billion in 2025, driven by its use in areas linked to disruptive technologies such as artificial intelligence, machine learning and language technologies.

But in addition to its economic impact, open data also has an important value for society: it provides information that makes social reality visible, driving informed decision-making for the common good.

There are thousands of areas where open data is essential, from refugee crises to the inclusion of people with disabilities, but in this article we will focus on the scourge of gender violence.

Where can I obtain data on the subject?

Globally, agencies such as the UN, the World Health Organization and the World Bank offer resources and statistics related to violence against women.

In our country, local, autonomous and state agencies publish related datasets. To facilitate unified access to them, the Government Delegation against Gender Violence has a statistical portal that includes in a single space data from various sources such as the Ministry of Finance and Public Administration, the General Council of the Judiciary or the Public Employment Service of the Ministry of Employment and Social Security. The user can cross-reference variables and create tables and graphs to facilitate the visualization of the information, as well as export the data sets in CSV or Excel format.

Projects to raise awareness and visibility

But data alone can be complicated to understand. Data need a context that gives them meaning and transforms them into information and knowledge. This is where different projects arise that seek to bring data to the public in a simple way.

There are many associations and organizations that take advantage of published data to create visualizations and stories with data that help to raise awareness about gender violence. As an example, the Barcelona Open Data Initiative is developing the "DatosXViolenciaXMujeres" project. It is a visual and interactive tour on the impact of gender violence in Spain and by Autonomous Communities during the period 2008-2020, although it is updated periodically. Using data storytelling techniques, it shows the evolution of gender violence within the couple, the judicial response (orders issued and final convictions), the public resources allocated, the impact of COVID-19 in this area and crimes of sexual violence. Each graph includes links to the original source and to places where the data can be downloaded so that they can be reused in other projects.

Another example is "Datos contra el ruido" (Data against noise), developed within the framework of GenderDataLab, a collaborative platform for the digital common good supported by various associations, such as Pyladies or Canodron, and Barcelona City Council, among others. This association promotes the inclusion of the gender perspective in open data collection through various projects, including the aforementioned "Datos contra el ruido", which makes the information published by the judicial system and the police on gender violence visible and understandable. Through data and visualizations, it provides information on the types of crimes and their geographical distribution across the country, among other issues. As with "DatosXViolenciaXMujeres", links to the original data sources and download spaces are included.

Tools and solutions to support victims

Besides providing visibility, open data can also tell us about the resources dedicated to helping victims, as some of the previous projects showed. Making this information available to victims quickly and easily is essential. Maps showing the location of help centers are very useful, such as this one from the SOL.NET project, with information on organizations that offer support and care services for victims of gender-based violence in Spain, or this one, prepared by the Valencian Community's public institution itself, showing centers and social services for disadvantaged groups, including victims of gender violence.

This information is also incorporated in applications aimed at victims, such as Anticípate. This app not only provides information and resources to women in vulnerable situations, but also has an emergency call button and allows access to legal, psychological or even self-defense advice, facilitating access to a social criminologist.

In short, this is a particularly sensitive issue, and we must keep raising awareness and fighting to put an end to it. Open data can make a significant contribution to this task.

If you know of any other example that shows the power of open data in this field, we encourage you to share it in the comments section or send us an email to dinamizacion@datos.gob.es.

Content prepared by the datos.gob.es team.

Today, Artificial Intelligence (AI) applications are present in many areas of everyday life, from smart TVs and speakers that are able to understand what we ask them to do, to recommendation systems that offer us services and products adapted to our preferences.

These AIs "learn" through various techniques, including supervised, unsupervised and reinforcement learning. This article focuses on reinforcement learning, which is based mainly on trial and error, similar to how humans and animals in general learn.

The key to this type of system is to set long-term goals correctly in order to find a globally optimal solution, without focusing too much on immediate rewards, which would prevent an adequate exploration of the set of possible solutions.
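A common way to formalise this balance (the article itself does not specify one) is the discounted return: the reward received at time step t is weighted by γ^t, where the discount factor γ, between 0 and 1, sets how much the agent values the long term. The minimal sketch below compares two invented reward sequences: a small immediate reward versus a larger delayed one.

```python
def discounted_return(rewards: list[float], gamma: float) -> float:
    """Sum of rewards where the reward at time step t is weighted by gamma**t."""
    return sum((gamma ** t) * r for t, r in enumerate(rewards))


# Illustrative reward sequences, invented for this example:
greedy = [1.0, 0.0, 0.0, 0.0]    # small reward now, nothing afterwards
patient = [0.0, 0.0, 0.0, 10.0]  # nothing now, a larger reward three steps later

for gamma in (0.1, 0.9):
    print(gamma, discounted_return(greedy, gamma), discounted_return(patient, gamma))
```

With γ = 0.9 the delayed reward is worth about 7.29 against 1.0, so the patient strategy wins; with γ = 0.1 it is worth only about 0.01, so an agent tuned this way would settle for the immediate reward.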

Simulation environments as a complement to open data sets

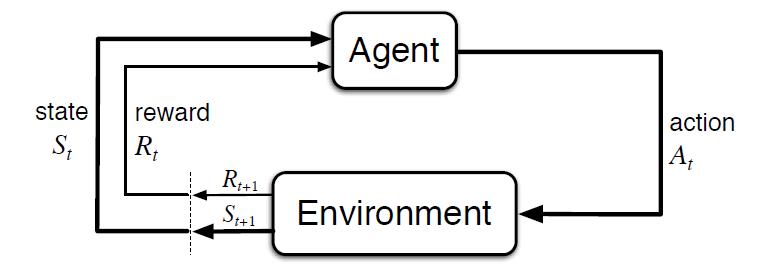

Unlike other types of learning, which are usually based on historical datasets, this technique requires simulation environments in which a virtual agent is trained by interacting with an environment, receiving rewards or penalties depending on its state and the actions it performs. This cycle between agent and environment can be seen in the following diagram:

Figure 1 - Scheme of learning by reinforcement [Sutton & Barto, 2015]

That is, starting from a simulated environment in an initial state, the agent performs an action that generates a new state and a possible reward or penalty, which depends on the previous states and the action performed. The agent learns the best strategy for this simulated environment from experience, by exploring the set of states, and, if configured appropriately, can then recommend the best action policy.
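To make this agent-environment loop concrete, here is a minimal sketch of tabular Q-learning, one classic reinforcement learning algorithm (the article does not name a specific one), on a hypothetical five-cell corridor simulator. The `Corridor` class and its `reset()`/`step()` methods are invented for this example and only loosely mirror the Gym-style interface; the agent receives a reward of 1 only when it reaches the last cell.

```python
import random


class Corridor:
    """Hypothetical toy simulator: states 0..n-1, the goal is the last state.

    reset()/step() loosely mirror a Gym-style interface; not part of any library.
    """

    def __init__(self, n_states: int = 5):
        self.n_states = n_states
        self.state = 0

    def reset(self) -> int:
        self.state = 0
        return self.state

    def step(self, action: int) -> tuple[int, float, bool]:
        # action 0 = move left, action 1 = move right (walls at both ends)
        if action == 0:
            self.state = max(0, self.state - 1)
        else:
            self.state = min(self.n_states - 1, self.state + 1)
        done = self.state == self.n_states - 1
        reward = 1.0 if done else 0.0  # reward only when the goal is reached
        return self.state, reward, done


def q_learning(env, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.1,
               seed=0, max_steps=100):
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(env.n_states)]  # Q[state][action]

    def greedy(s):
        best = max(q[s])
        # break ties randomly so early episodes are not biased to one action
        return rng.choice([a for a in (0, 1) if q[s][a] == best])

    for _ in range(episodes):
        state = env.reset()
        for _ in range(max_steps):
            # epsilon-greedy: explore with probability epsilon, else exploit
            action = rng.randrange(2) if rng.random() < epsilon else greedy(state)
            next_state, reward, done = env.step(action)
            # move Q towards reward + discounted value of the best next action
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
            if done:
                break
    return q


if __name__ == "__main__":
    q_table = q_learning(Corridor())
    policy = [0 if q[0] > q[1] else 1 for q in q_table[:-1]]
    print(policy)  # learned action per non-goal state (1 = move right)
```

Epsilon-greedy exploration is what lets the agent keep trying actions it does not yet rate highly. With a discount factor of 0.9, the learned values shrink the further a state is from the goal, so the resulting policy prefers the shortest route.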

The best-known example worldwide was the success of AlphaGo, which beat 18-time world champion Lee Sedol in 2016. Go is an ancient game, considered one of the four essential arts in Chinese culture, along with music, painting and calligraphy. Unlike chess, Go has more possible board positions than there are atoms in the observable universe, which puts it beyond the reach of traditional exhaustive-search algorithms.

Curiously, the technological breakthrough that AlphaGo achieved by solving a problem thought to be beyond the reach of AI was eclipsed a year later by its successor, AlphaGo Zero. For this version, its creators chose not to use historical data or heuristic rules: AlphaGo Zero only uses the board positions and learns by trial and error, playing against itself.

Following this innovative learning strategy, it beat AlphaGo after 3 days of training and, after 40 days, had become the strongest Go player, acquiring in a matter of days knowledge that took humans thousands of years to accumulate, and even discovering previously unknown strategies.

The impact of this technological milestone reaches countless areas, since AI solutions that learn from experience can be applied to complex problems such as resource management, strategic planning, or the calibration and optimization of dynamic systems.

The development of solutions in this area is especially limited by the need for appropriate simulation environments, which are the most complex component to build. However, there are multiple repositories that offer open simulation environments for testing this type of solution.

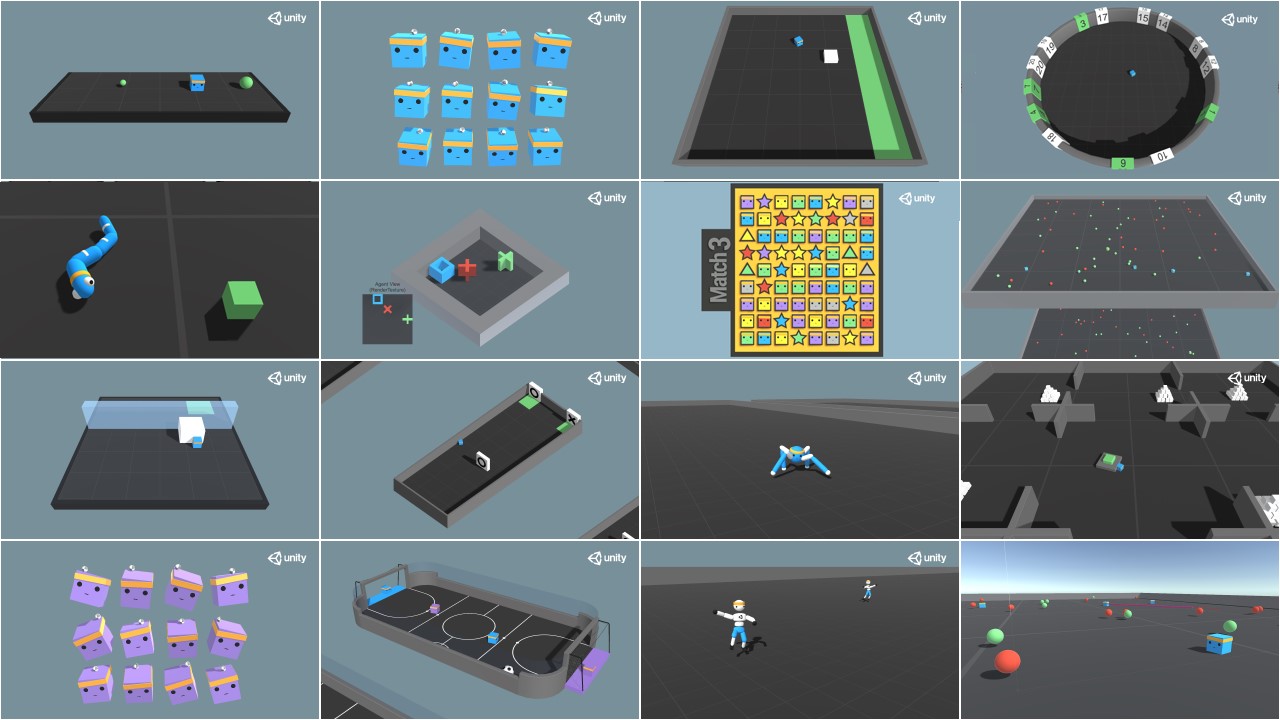

The best-known reference is OpenAI Gym, which includes an extensive set of libraries and open simulation environments for developing and validating reinforcement learning algorithms. Among others, it includes simulators for the basic control of mechanical elements, robotics applications and physics simulations, two-dimensional Atari video games, and even the landing of a lunar module. It also makes it possible to integrate and publish new open simulators, so that we can develop our own simulators adapted to our needs and share them with the community:

Figure 2 - Examples of visual simulation environments offered by OpenAI Gym

Another interesting reference is Unity ML-Agents, which also offers multiple libraries and several simulation environments, as well as the possibility of integrating our own simulator:

Figure 3 - Examples of visual simulation environments offered by Unity ML-Agents

Potential applications of reinforcement learning in public administrations

This type of learning is used especially in areas such as robotics, resource optimization or control systems, allowing the definition of optimal policies or strategies for action in specific environments.

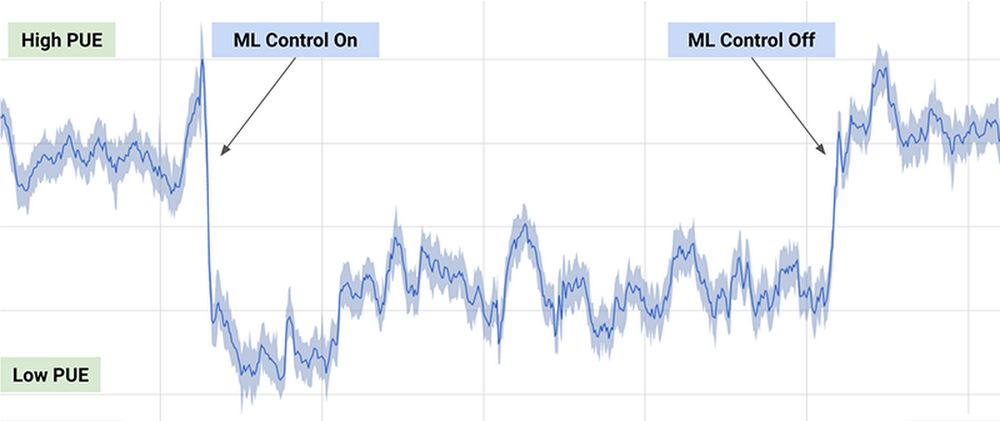

One of the best-known practical examples is the DeepMind algorithm that Google used in 2016 to reduce the energy needed to cool its data centers by 40%, a significant saving in energy consumption, as can be seen in the following graph (taken from the source article):

Figure 4 - Results of the DeepMind algorithm on the energy consumption of Google's data centers.

The algorithm combines deep learning and reinforcement learning techniques with a general-purpose simulator for understanding complex dynamic systems, an approach that could be applied in many other settings, such as conversion between energy types, water consumption or resource optimization in general.

Other possible applications in the public sector include searching for and recommending open datasets through chatbots, or optimizing public policies, as in the European project Policy Cloud, which has been applied, for example, to analyze future strategies for the different wine designations of origin in Aragon.

In general, these techniques could optimize the use of public resources by planning action policies that lead to more sustainable consumption, reducing pollution, waste and public spending.

Content prepared by Jose Barranquero, expert in Data Science and Quantum Computing.

The contents and views expressed in this publication are the sole responsibility of the author.

Autumn is coming to an end and, as every time we change season, we would like to summarize some of the main news and developments of the last three months.

One of the main advances for open data in our country has come in the legislative field, with the approval of the transposition of the European Directive on open data and the reuse of public sector information. It is included in Royal Decree-Law 24/2021, ratified by the Congress of Deputies on 2 December. You can read about the new features here.

Under this regulation, the Spanish open data ecosystem is expected to keep growing, as the developments of recent months suggest. In the case of datos.gob.es, this fall the number of public administrations publishing data reached 160, and the National Catalog now offers more than 50,000 datasets. In addition, many organizations and reusers have launched new projects linked to open data, as we will see below.

La Palma volcano, an example of the value of public data

This autumn will be remembered in our country for the eruption of the volcano on the island of La Palma, a situation that has highlighted the importance of publishing and using open data to manage natural emergencies.

The open data portal of La Palma has offered, and continues to offer, updated information on the Cumbre Vieja eruption. Data on perimeters, photogrammetry, thermography and terrain models can be consulted and downloaded from its website. In addition, the Cabildo Insular has created a single point that gathers all the relevant information in real time in a simple way. The data and tools made available to the public by the National Geographic Institute are also important, and the Copernicus Earth Observation Program offers data and maps of interest, as well as management support.

All these data have made it possible to develop 3D viewers and tools for comparing the situation before the eruption with the current one, which are very useful for understanding the magnitude of the event and making decisions accordingly. The media and reusers have also used the data to create visuals that help convey information to the public, such as this visual tour of the lava flow, from the recording of seismic activity to its arrival at the sea, or this animation that shows, in just 30 seconds, the 5,000 seismic movements recorded on La Palma to date.

Growing use of open data and new technologies

In addition to emergency management, open data is also increasingly being used by public agencies to improve the efficiency and effectiveness of their activities, often in conjunction with disruptive technologies such as artificial intelligence. Some examples are shown below:

- The Murcia City Council has announced that it is developing new sustainable mobility management models using data from the Copernicus Earth Observation Program. The information obtained will make it possible to offer new intelligent mobility services oriented to citizens, companies and public administrations of the municipality.

- Las Palmas de Gran Canaria has presented a Sustainable Tourism Intelligence System. It is a digital tool that provides updated data from multiple sources for decision-making and improving competitiveness, both for companies and for the tourist destination itself.

- The Vigo City Council plans to create a 3D model of the entire city by combining open data with geographic data. The model will be used to produce elements such as noise, pollution and traffic maps, among others.

- The Junta de Castilla y León is working on the Bision Project, a Business Intelligence system for better decision-making in the field of health. The new system would make it possible to automatically build tools for evaluating the quality of the healthcare system. It is worth mentioning that the Junta de Castilla y León has received an award for the quality and innovation of its transparency portal during the pandemic, thanks to its open data platform.

- MarIA, the massive artificial intelligence system for the Spanish language promoted by the State Secretariat for Digitalization and Artificial Intelligence, continues to make progress. Based on the web archives of the Spanish National Library, a new version has been created that can summarize existing texts and generate new ones from headlines or words.

What's new in open data platforms

In order to continue developing this type of project, it is essential to keep promoting access to quality data and to tools that facilitate their exploitation. Some of the new developments in this regard are:

- The Government of Navarra has presented a new portal in a conference at the University of Navarra, with the participation of the Aporta initiative. Cordoba, which has also approved the implementation of a new open data and transparency portal, will soon follow.

- Aragon's open data portal has released a new version of its API, the GA_OD_Core service. Its aim is to let citizens and developers access the data offered on the portal and integrate them into different apps and services through a REST architecture. In addition, Aragón has presented a new virtual assistant that makes it easier to find and access data: a chatbot that answers questions based on the data it contains, in conversational language that users can easily understand.

- The Ministry of Transport, Mobility and Urban Agenda has published a viewer for consulting the transport infrastructure in Spain that belongs to the Trans-European Transport Network (TEN-T). The viewer allows users to download open data on the network, as well as on projects co-financed with CEF funds.

- The Madrid City Council has presented Cibeles+, an artificial intelligence project to facilitate access to urban planning information. Using natural language processing techniques and machine learning, the system responds to complex urban planning questions and issues through Alexa and Twitter.

- The geoportal of the IDE Barcelona has launched a new open geographic data download service with free and open access. Among the information published in vector format, the topographic cartography (scale 1:1000) stands out. It includes urban and developable areas and sectors of interest in undeveloped land.

- The Valencia City Council has developed a data inventory to measure efficiency in the implementation of public policies. This tool will also allow citizens to access public information more quickly.

It should also be noted that the Pinto City Council has confirmed its adherence to the principles of the international Open Data Charter (ODC) with the commitment to improve open data policies and governance.

Boosting reuse and data-related capabilities

Public bodies have also launched various initiatives to promote the use of data. Among them, at the end of November datos.gob.es launched the fourth edition of the Aporta Challenge, focused on health and wellness. It seeks to identify and recognize new ideas and prototypes that drive improvements in this field using open data from public bodies.

This season also saw the announcement of the winners of the V Open Data Contest organized by the Junta de Castilla y León. Of the 37 applications received, a jury of experts in the field chose 8 winning projects across the various categories.

Courses and seminars aimed at building data-related knowledge are also increasingly popular. Here are two examples:

- The City Council of L'Hospitalet de Llobregat has launched a training program on the use of data for municipal workers. This plan is structured in 22 different courses that will be taught until May next year.

- The Open Cities project has delivered a series of workshops on open data in smart cities. The complete video is available at this link.

Other news of interest in Europe

At the European level, autumn ended with two major actions: the publication of the Open Data Maturity Index 2021, prepared by the European Data Portal, and the EU Open Data Days. In the former, Spain ranks third and is once again among the leading countries in open data in Europe. The EU Open Data Days, for their part, comprised the EU DataViz 2021 conference and the final of the EU Datathon 2021, where the Spanish company CleanSpot came second in its category. Its app raises awareness and encourages the recycling and reuse of products through gamification.

The European portal has also launched the Open Data Academy, with all available courses structured around four themes: policy, impact, technology and quality (the same as those assessed by the aforementioned maturity index). The curriculum is constantly updated with new materials.

Other new features include:

- The DCAT Application Profile for Data Portals in Europe (DCAT-AP) has been updated. A preliminary version of DCAT-AP 2.1.0 was open for public review between October and November 2021.

- Asedie has been selected by the Global Data Barometer (GDB) and Access Info Europe as "Country Researcher" for the first edition of the Global Data Barometer 20-21, a research project that analyses how data is managed, shared and used for the common good.

- The OpenCharts map catalog of the European Centre for Medium-Range Weather Forecasts, which offers hundreds of open charts, has been updated. This RAM magazine article explains what's new.

- The UK government has launched one of the world's first national standards for algorithmic transparency. This follows commitments made in its National Artificial Intelligence Strategy and its National Data Strategy.

Do you know of other examples of projects related to open data? Leave us a comment or write to dinamizacion@datos.gob.es.

1. Introduction

Visualizations are graphical representations that allow us to understand, in a simple way, the information contained in data. Through visual elements such as graphs, maps or word clouds, visualizations also help to explain the trends, patterns or outliers that data may present.

Visualizations can also be generated from data of a different nature, such as the words that make up a news article, a book or a song. To create visualizations from this kind of data, machines, through software programs, must be able to understand, interpret and recognize the words that form human language (whether written or spoken) in multiple languages. The field of study focused on this kind of data processing is called Natural Language Processing (NLP). It is an interdisciplinary field that combines artificial intelligence, computational linguistics and computer science. NLP-based systems have enabled great innovations such as Google's search engine, Amazon's voice assistant, automatic translators, sentiment analysis on social networks and even spam detection in email accounts.

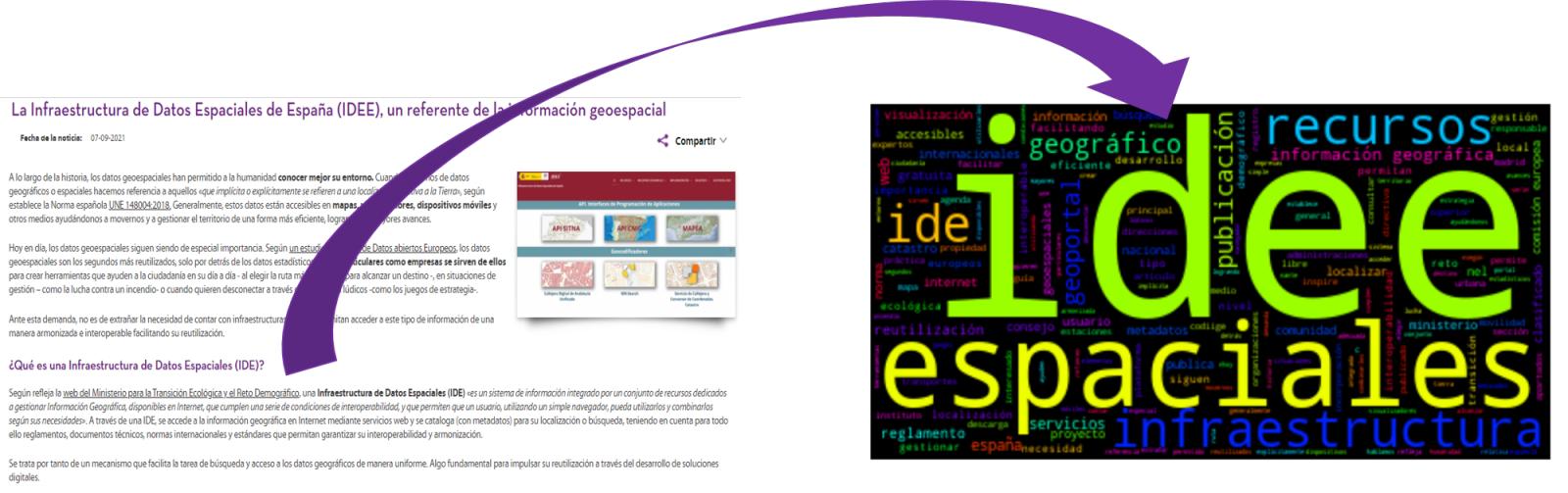

In this practical exercise, we will use NLP techniques to extract keywords from various texts and represent them graphically. Specifically, we will create word clouds that summarize the most recurring terms in several posts on the portal.

This visualization is part of a series of practical exercises that use open data available on the datos.gob.es portal. These exercises describe, in a simple way, the steps needed to obtain the data and to perform the transformations and analyses relevant to creating the visualization, extracting as much information as possible. Each practical exercise uses simple, conveniently documented code, as well as free, open tools. All the generated material will be available in the Data Lab repository on GitHub.

2. Objectives

The main objective of this post is to learn how to create a visualization made of images generated from sets of words representative of various texts, popularly known as "word clouds". For this practical exercise we have chosen 6 posts published in the blog section of the datos.gob.es portal. Using NLP techniques, we will generate a word cloud for each text, which will allow us to detect, simply and visually, the frequency and importance of each word, making it easier to identify the keywords and the main topic of each post.

From a text we build a cloud of words applying Natural Language Processing (NLP) techniques

3. Resources

3.1. Tools

To pre-process the data (work environment, programming and editing) and to create the visualization itself, we use Python (version 3.7) and Jupyter Notebook (version 6.1). Both tools come integrated, along with many others, in Anaconda, one of the most popular platforms for installing, updating and managing data science software. For the Natural Language Processing tasks, we use two libraries, Scikit-Learn (sklearn) and wordcloud. All these tools are open source and available for free.

Scikit-Learn is a very popular and extensive machine learning library. Among others, it includes algorithms for classification, regression, clustering and dimensionality reduction tasks. In addition, its text feature-extraction tools are useful for turning textual data into feature matrices, on which tasks such as calculating similarities, classifying text and clustering can be performed. In Python, these tasks can also be carried out with other equally popular libraries such as NLTK or spaCy, among others.

wordcloud is a library specialized in creating word clouds using a simple algorithm that can be easily modified.

To make it easier for readers who are not programming specialists to follow along, the Python code included below is designed to be easy to understand rather than to maximize efficiency, so readers more advanced in this language may find more efficient alternative ways of coding some functionalities. Readers can reproduce this analysis if they wish, as the source code is available on datos.gob.es's GitHub account. The code is provided as a Jupyter Notebook which, once loaded into the development environment, can be easily run or modified.

3.2. Datasets

For this analysis, 6 posts recently published in the blog section of the open data portal datos.gob.es have been selected. They cover different open data topics:

- The latest news in natural language processing: summaries of classic works in just a few hundred words.

- The importance of anonymization and data privacy.

- The value of real-time data through a practical example.

- New initiatives to open and harness data for health research.

- Kaggle and other alternative platforms for learning data science.

- The Spatial Data Infrastructure of Spain (IDEE), a benchmark for geospatial information.

4. Data processing

Before creating an effective visualization, we must carry out a preliminary treatment, or pre-processing, of the data, paying attention to how they are obtained, ensuring that they contain no errors and that they are in a suitable format for processing. Data pre-processing is essential for building any effective and consistent visual representation.

In NLP, data pre-processing mainly consists of a series of transformations carried out on the input data (in our case, several posts in TXT format). The aim is to obtain standardized data, free of elements that may affect the quality of the results, in order to facilitate subsequent tasks such as generating a word cloud, performing opinion/sentiment mining or generating automated summaries of the input texts. In general, the workflow for pre-processing words includes the following steps:

- Cleaning: removal of special characters and symbols that distort the results, such as punctuation marks.

- Tokenization: the process of separating a text into smaller units called tokens. Tokens can be sentences, words, or even characters.

- Stemming and lemmatization: this step transforms words into their base form, that is, their canonical form or lemma, removing plurals, verb tenses or genders. It is sometimes unnecessary, since subsequent processing does not always need to know the semantic similarity between the different words of the text.

- Elimination of stop words: stop words, or empty words, are commonly used words that do not contribute significantly to the meaning of the text. They should be removed before processing, as they do not provide any unique information that can be used to classify or group the text; examples are articles such as 'a', 'an' and 'the'.

- Vectorization: in this step we transform the tokens obtained in the previous steps into vectors of real numbers, generated from the frequency with which each word appears in the text. Vectorization allows machines to process text and to apply, among others, machine learning techniques.
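As a rough illustration of these steps, the following standard-library sketch cleans, tokenizes, filters and counts the words of a short sentence. It skips stemming and lemmatization, which this exercise does not apply. The function names `preprocesar` and `vectorizar` and the tiny stop-word set are hypothetical and chosen only for the example. The exercise itself uses the 608-word Spanish list described below.

```python
import re
import string
from collections import Counter

# Hypothetical, very small stop-word set used only for this illustration.
STOP_WORDS = {"el", "la", "los", "las", "de", "y", "en", "que", "qué", "son"}


def preprocesar(texto):
    """Clean a text, split it into tokens and drop stop words."""
    # Cleaning: lowercase and replace punctuation (including ¿ and ¡) with spaces.
    texto = texto.lower()
    texto = re.sub("[%s]" % re.escape(string.punctuation + "¿¡"), " ", texto)
    # Tokenization: split() without arguments splits on any run of whitespace.
    tokens = texto.split()
    # Stop-word removal.
    return [token for token in tokens if token not in STOP_WORDS]


def vectorizar(tokens):
    """Bag-of-words: count how many times each token appears."""
    return Counter(tokens)


tokens = preprocesar("¿Qué son los datos abiertos? Los datos abiertos son datos públicos.")
print(tokens)
print(vectorizar(tokens).most_common())
```

In the exercise, the counting step is delegated to scikit-learn rather than to `Counter`.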

4.1. Installation and loading of libraries

Before starting the data pre-processing, we need to import the libraries we will work with. Python provides a vast number of libraries for many tasks, such as data visualization, machine learning, deep learning or Natural Language Processing, among many others. The libraries we will use for this analysis and visualization are the following:

- os, which allows access to operating system-dependent functionality, such as manipulating the directory structure.

- re, provides functions for processing regular expressions.

- pandas, is a very popular and essential library for processing data tables.

- string, provides a series of very useful functions for handling strings.

- matplotlib.pyplot, contains a collection of functions that will allow us to generate the graphical representations of the word clouds.

- sklearn.feature_extraction.text (Scikit-Learn library), which provides tools for converting a collection of text documents into a matrix of numerical features. We will use some of its classes, which we will discuss later.

- wordcloud, library with which we can generate the word cloud.

```python
# Import the libraries needed for this analysis and visualization.
import os
import re
import pandas as pd
import string
import matplotlib.pyplot as plt
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.feature_extraction.text import TfidfTransformer
from wordcloud import WordCloud
```

4.2. Data loading

Once the libraries are loaded, we prepare the data we are going to work with. Before loading the data, the working directory must contain: (a) a folder called "post" with all the TXT files we are going to work with, which are available in this project's repository on the datos.gob.es GitHub account; (b) a file called "stop_words_spanish.txt" containing the list of Spanish stop words, also available in that repository; and (c) a folder called "imagenes" (images), where we will save the word-cloud images in PNG format and which we create below.

```python
# Create the "imagenes" folder, where the word-cloud images will be saved.
nueva_carpeta = "imagenes/"
try:
    os.mkdir(nueva_carpeta)
except OSError:
    print("A folder named %s already exists" % nueva_carpeta)
else:
    print("Folder created: %s" % nueva_carpeta)
```

Next, we load the data. As already mentioned, the input data are TXT files, each containing one post. Since we want to analyze and visualize several posts at the same time, we load all the texts of interest into our development environment and then insert them into a single table, or dataframe.

```python
# Build a list of all the files to read, indicating the folder where they are located
# (sorted so that the order of the posts is reproducible).
filePath = []
for file in sorted(os.listdir("./post/")):
    filePath.append(os.path.join("./post/", file))

# Read each post; each text will become one row of the dataframe.
textos = []
for file in filePath:
    with open(file, "rb") as readFile:
        textos.append(readFile.read().decode("utf8"))

# Build the dataframe and name the column that contains the texts.
post_df = pd.DataFrame(textos)
post_df.columns = ["texto"]
```

4.3. Data pre-processing

To achieve our objective, generating a word cloud for each post, we will perform the following pre-processing tasks.

a) Data cleansing

Once a table containing the texts with which we are going to work has been generated, we must eliminate the noise beyond the text that interests us: special characters, punctuation marks and carriage returns.

First, we convert all characters to lowercase with the lower() method, to avoid errors in case-sensitive processes.

Then we remove punctuation marks, such as periods, commas, exclamation and question marks, among many others. To do so, we use the predefined constant string.punctuation from the string library, which contains the characters considered punctuation marks. In addition, we use regular expressions to remove tabs, line breaks and extra spaces, which provide no information for this analysis.

It is essential to apply all these steps in a single function so that they are processed sequentially, because all processes are highly related.
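Before the full function, it may help to check two details of these tools. The following sketch uses a made-up sample text. It shows that `string.punctuation` contains only the 32 ASCII punctuation characters, so the Spanish opening marks ¿ and ¡ survive unless they are added explicitly. It also shows how a single regular expression collapses tabs, line breaks and repeated spaces.

```python
import re
import string

# string.punctuation contains only the 32 ASCII punctuation characters.
print(len(string.punctuation), "¿" in string.punctuation, "¡" in string.punctuation)

texto = "¿Datos\tabiertos?\n\n¡Sí!"

# Removing string.punctuation alone leaves the Spanish opening marks behind.
solo_ascii = re.sub("[%s]" % re.escape(string.punctuation), " ", texto)

# Adding ¿ and ¡ to the character class removes them too.
sin_puntuacion = re.sub("[%s]" % re.escape(string.punctuation + "¿¡"), " ", texto)

# \s+ matches any run of spaces, tabs or line breaks; replace it with one space.
normalizado = re.sub(r"\s+", " ", sin_puntuacion).strip()

print(repr(solo_ascii))
print(repr(normalizado))
```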

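```python
# Remove punctuation marks, carriage returns/tabs and extra whitespace.
# To do so, we define a function with all the changes we want to apply to the text.
def limpiar_texto(texto):
    # Convert the text to lowercase.
    texto = texto.lower()
    # Remove any text enclosed in square brackets.
    texto = re.sub(r"\[.*?\]", " ", texto)
    # Replace punctuation marks with spaces; ¿ and ¡ are added because
    # string.punctuation only covers ASCII symbols.
    texto = re.sub("[%s]" % re.escape(string.punctuation + "¿¡"), " ", texto)
    # Remove words that contain digits.
    texto = re.sub(r"\w*\d\w*", " ", texto)
    # Collapse tabs, line breaks and repeated spaces into a single space.
    texto = re.sub(r"\s+", " ", texto).strip()
    return texto

# Apply the changes to every text and store the result in a new table.
post_clean = pd.DataFrame(post_df["texto"].apply(limpiar_texto))
```

b) Tokenization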

Once we have eliminated the noise in the texts with which we are going to work, we will tokenize each of the texts in words. For this we will use the split() function, using space as a separator between words. This will allow separating the words independently (tokens) for future analysis.
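The choice of separator matters here. The following small sketch uses a made-up string. `split(sep=" ")` splits only on single spaces, so repeated spaces produce empty-string tokens and line breaks are not treated as separators. `split()` with no argument splits on any run of whitespace. If whitespace has not been fully normalized during cleaning, `split()` is the safer option.

```python
texto = "datos  abiertos\ny  reutilización"

# Splitting on a single space: repeated spaces yield empty tokens and the
# line break stays inside a token.
con_separador = texto.split(sep=" ")

# Splitting on any run of whitespace (spaces, tabs, line breaks).
sin_separador = texto.split()

print(con_separador)
print(sin_separador)
```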

```python
# Tokenize the texts. A new column is added to the table with the tokenized text.
def tokenizar(texto):
    return texto.split(sep=" ")

post_clean["texto_tokenizado"] = post_clean["texto"].apply(tokenizar)
```

c) Removal of "stop words"

After removing punctuation marks and other elements that can distort the target visualization, we will remove the "stop words". For this step we use a list of Spanish stop words, since each language has its own list. This list contains a total of 608 words, including articles, prepositions, linking verbs and adverbs, among others, and has been recently updated. It can be downloaded in TXT format from the datos.gob.es GitHub account and must be placed in the working directory.

```python
# Read the file that contains the Spanish stop words.
with open("stop_words_spanish.txt", encoding="UTF8") as f:
    stop_words = f.read().splitlines()
```

We will add to this list new words that do not contribute relevant information to our texts or that appear recurrently because of their context. In this case, several words should be removed because they appear repeatedly in all the posts. All the posts deal with open data, so these words would very likely be the most prominent in every cloud. Some of these words are "post", "datos" (data), "abiertos" (open) and "caso" (case), among others. Removing them gives a more representative graphical representation of the content of each post.

On the other hand, a visual inspection of the results reveals words or characters derived from errors in the texts. They obviously have no meaning and were not removed in the previous steps. These should be removed from the analysis so that they do not distort the subsequent results. Examples are "nen", "nun" and "nla".
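Each row of the tokenized column holds a list of tokens, so stop words have to be filtered inside each list rather than row by row. Below is a minimal sketch in plain Python. The stop-word excerpt, the token lists and the helper name `quitar_stop_words` are hypothetical. Converting the list to a set makes each membership check fast.

```python
# Hypothetical excerpt of the Spanish stop-word list read from the TXT file.
stop_words = ["de", "la", "el", "en", "y"]
# Context-specific words and extraction debris, as described above.
stop_words.extend(("datos", "abiertos", "post", "nen", "nun", "nla"))

# A set makes each membership check fast when filtering many tokens.
stop_set = set(stop_words)


def quitar_stop_words(tokens):
    """Return the tokens that are not stop words, keeping their order."""
    return [token for token in tokens if token not in stop_set]


# Made-up token lists, one per post.
posts_tokenizados = [
    ["la", "anonimización", "de", "datos", "nen", "privacidad"],
    ["datos", "abiertos", "en", "tiempo", "real"],
]
filtrados = [quitar_stop_words(tokens) for tokens in posts_tokenizados]
print(filtrados)
```

In pandas, the same helper could be applied to every row of a list-valued column with `Series.apply`.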

```python
# Update our list of stop words.
stop_words.extend(("caso", "forma", "unido", "abiertos", "post", "espera", "datos",
                   "dato", "servicio", "nun", "día", "nen", "data", "conjuntos",
                   "importantes", "unido", "unión", "nla", "r", "n"))
# Remove the stop words from the token list of each post.
stop_set = set(stop_words)
post_clean["texto_tokenizado"] = post_clean["texto_tokenizado"].apply(
    lambda tokens: [token for token in tokens if token not in stop_set]
)
```

d) Vectorization

Machines cannot understand words and sentences as such, so they must be transformed into some numerical structure. The method consists of generating vectors from the tokens. In this post we use a simple technique known as bag-of-words (BoW), which assigns each token a weight proportional to the frequency with which that token appears in the text. To do this, we work with a matrix in which each row represents a text and each column a token. To perform the vectorization we use the CountVectorizer() and TfidfTransformer() classes of the scikit-learn library.

CountVectorizer() transforms texts into vectors of word frequencies or counts. In this case we will obtain 6 vectors, one for each post. Each has as many dimensions as there are distinct tokens across all the posts. These vectors are combined into a single matrix in which the columns are the tokens or words and the rows are the posts.
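To make the structure of this matrix concrete, here is a plain-Python sketch of a bag-of-words document-term matrix built from two made-up token lists. It is not scikit-learn's implementation, and the function name `matriz_bow` is hypothetical. Like CountVectorizer, it sorts the vocabulary alphabetically, so every row has one column per distinct token across all documents.

```python
from collections import Counter


def matriz_bow(documentos):
    """Build a bag-of-words document-term matrix from lists of tokens.

    Rows are documents; columns are the distinct tokens of all documents,
    sorted alphabetically.
    """
    vocabulario = sorted({token for tokens in documentos for token in tokens})
    conteos = [Counter(tokens) for tokens in documentos]
    # Counter returns 0 for tokens that do not appear in a document.
    matriz = [[conteo[token] for token in vocabulario] for conteo in conteos]
    return vocabulario, matriz


vocabulario, matriz = matriz_bow([["salud", "datos", "salud"], ["datos", "educación"]])
print(vocabulario)
print(matriz)
```

Note that CountVectorizer's default tokenizer also lowercases the text and ignores single-character tokens. Its vocabulary may therefore differ slightly from a plain whitespace split.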

```python
# Compute the word-frequency matrix of the texts.
# CountVectorizer expects one string per document, so the tokens are joined back with spaces.
vectorizador = CountVectorizer()
post_vec = vectorizador.fit_transform(post_clean["texto_tokenizado"].apply(" ".join))
```

Once the word-frequency matrix is generated, we convert it into a normalized vector form. This reduces the weight of tokens that appear very frequently across the set of texts and are therefore less informative. To do this we use TfidfTransformer().

```python
# Convert the word-frequency matrix into a normalized (TF-IDF) vector form.
transformer = TfidfTransformer()
post_trans = transformer.fit_transform(post_vec).toarray()
```

If you want to know more about the importance of applying this technique, you will find numerous articles on the Internet that discuss it and its relevance, among other things, for search engine optimization (SEO).
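For readers who want to see what TfidfTransformer computes, the following sketch applies, in plain Python, the formula that scikit-learn documents for its default settings. Raw counts serve as term frequency. The inverse document frequency is smoothed as idf(t) = ln((1 + n) / (1 + df(t))) + 1, where n is the number of documents and df(t) is the number of documents containing t. Each row is then L2-normalized. The function name `tfidf` and the small count matrix are made up for illustration.

```python
import math


def tfidf(matriz):
    """TF-IDF weighting with smoothed idf and L2 row normalization."""
    n = len(matriz)
    n_terminos = len(matriz[0])
    # Document frequency: number of documents in which each term appears.
    df = [sum(1 for fila in matriz if fila[j] > 0) for j in range(n_terminos)]
    # Smoothed inverse document frequency.
    idf = [math.log((1 + n) / (1 + df_j)) + 1 for df_j in df]
    resultado = []
    for fila in matriz:
        # Raw count times idf, then scale the row to unit Euclidean length.
        pesos = [tf * idf_j for tf, idf_j in zip(fila, idf)]
        norma = math.sqrt(sum(p * p for p in pesos))
        resultado.append([p / norma if norma else 0.0 for p in pesos])
    return resultado


# Made-up counts; columns could stand for "datos", "educación" and "salud".
pesos = tfidf([[1, 0, 2], [1, 1, 0]])
for fila in pesos:
    print([round(p, 3) for p in fila])
```

The first column appears in both documents, so it gets the smallest idf (exactly 1). In the second row it therefore ends up with a lower weight than the second column, even though both occur once.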

5. Creation of the word cloud

Once the pre-processing of the text is complete, as we indicated at the beginning of the post, it is possible to perform NLP tasks. In this exercise we will create a word cloud, or "WordCloud", for each of the analyzed texts.

A word cloud is a visual representation of the words that occur most often in a text. It allows us to easily detect the frequency and importance of each word. This makes it easier to identify the keywords and to discover at a glance the main topic of the text.

For this we use the "wordcloud" library, which includes the functions needed to build each representation. First, we specify the characteristics that each word cloud should have: the background color (background_color parameter), the color map used for the words (colormap parameter), and the maximum font size (max_font_size parameter). We also set a seed so that the generated word cloud is always the same in future runs (random_state parameter). These and many other parameters can be used to customize each word cloud.
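The idea of sizing words by frequency can be sketched as follows. This is a deliberately simplified, hypothetical mapping in which a word's font size grows linearly with its count relative to the most frequent word. The wordcloud library's actual layout and scaling (for example, its relative_scaling parameter) are more elaborate. The maximum size of 150 mirrors the max_font_size value used in this exercise, and `max_palabras` plays a role similar to the library's max_words. The function name and sample tokens are illustrative only.

```python
from collections import Counter


def tamanos_de_fuente(tokens, max_palabras=5, tam_min=10, tam_max=150):
    """Map the most frequent words to font sizes proportional to their counts.

    Simplified, hypothetical scaling: size = tam_max * count / highest count,
    never below tam_min. Not the wordcloud library's actual algorithm.
    """
    frecuencias = Counter(tokens).most_common(max_palabras)
    if not frecuencias:
        return {}
    maxima = frecuencias[0][1]
    return {
        palabra: max(tam_min, round(tam_max * cuenta / maxima))
        for palabra, cuenta in frecuencias
    }


tokens = ["salud"] * 6 + ["pacientes"] * 3 + ["investigación"]
print(tamanos_de_fuente(tokens))
```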

```python
# Set the characteristics that each word cloud will have.
wc = WordCloud(stopwords=stop_words, background_color="black", colormap="hsv",
               max_font_size=150, random_state=123)
```

Once we have specified the characteristics that we want each word cloud to have, we create the clouds and save them as an image in PNG format. To generate them, we use a loop with several functions of the matplotlib library (represented by the plt prefix). These draw each word cloud according to the specification defined in the previous step. With plt.subplot() we give each row of the table, that is, each text, its own panel in the figure. With plt.imshow() we display the word cloud as a 2D image. If we do not want the axes to be displayed, we indicate it with plt.axis("off"). Finally, with plt.savefig() we save the generated visualization.

```python
# Generate a word cloud for each of the posts.
for index, tokens in enumerate(post_clean["texto_tokenizado"]):
    # WordCloud.generate() expects a single string, so the tokens are joined with spaces.
    wc.generate(" ".join(tokens))
    # Place each word cloud in its own panel of a 3 x 2 grid.
    plt.subplot(3, 2, index + 1)
    plt.imshow(wc, interpolation="bilinear")
    plt.axis("off")

# Save the figure with all the word clouds in the "imagenes" folder.
plt.savefig("imagenes/.png")
# Show the resulting word clouds.
plt.show()
```

The visualization obtained is:

Visualization of the word clouds obtained from the texts of different posts of the blog section of datos.gob.es

6. Conclusions

Data visualization is one of the most powerful mechanisms for exploiting and analyzing the implicit meaning of data, regardless of the data type and the degree of technological knowledge of the user. Visualizations allow us to extract meaning out of the data and create narratives based on graphical representation.

Word clouds are a tool that allows us to speed up the analysis of textual data, since through them we can quickly and easily identify and interpret the words with the greatest relevance in the analyzed text, which gives us an idea of the subject.

If you want to learn more about Natural Language Processing, you can consult the guide "Emerging Technologies and Open Data: Natural Language Processing" and the posts "Natural Language Processing" and "The latest news in natural language processing: summaries of classic works in just a few hundred words".

Hopefully this step-by-step visualization has taught you a few things about the ins and outs of Natural Language Processing and word cloud creation. We will return to show you new data reuses. See you soon!

Red.es, in collaboration with the Secretary of State for Digitalization and Artificial Intelligence, is organizing the fourth edition of the Aporta Challenge. As in previous years, it seeks to identify and recognize new ideas and prototypes that drive improvements in a specific sector through the use of open data. This year the focus will be on health and wellness.

The objective is to encourage the talent, technical competence and creativity of the participants while promoting the direct reuse of data published by various public bodies.

Why is it important to promote the use of open data in the health and wellness sector?

If there is one sector in which demand for data has grown over the last year, it is healthcare, due in part to the global COVID-19 pandemic. However, open data related to health and well-being does more than inform citizens as an exercise in transparency. It is also essential as a basis for solutions that drive improvements in health care, the patient experience and the services offered to ensure a better quality of life. Open data can make essential public services more efficient, effective and inclusive, which in turn helps to reduce inequality.

Aware of this, the European Commission has among its priorities the creation of a European data space in the health sector. For its part, the Spanish Government has begun actions to establish a health data lake, which will make a large amount of raw data available to researchers so they can analyze it, make predictions or spot trends. The use of these data, anonymized or in aggregated form, can generate great improvements. Some autonomous communities are already taking advantage of them, such as Andalusia, which, with the collaboration of Red.es, is implementing an advanced analytics system based on Big Data technologies to improve the quality of life of chronic patients.

Under the slogan "The value of data for the health and well-being of citizens", an open competition will be held which, as in previous years, will consist of two phases:

Phase I: Ideas competition. From 22/11/2021 to 15/02/2022

Participants must present an idea that responds to the proposed challenge: it is about identifying new opportunities to capture, analyze and use data intelligence to drive improvements related to health and well-being.

Proposals must be based on the use of at least one set of open data generated by Public Administrations, whether national or international. These data may be combined with others of a public or private nature.

The idea presented must be original and must not have received an award previously. Pre-existing solutions are not valid; the solution must be developed by the participant within the framework of the Challenge.

A jury made up of experts in the field will assess the ideas presented and will choose 10, which go on to phase II. The criteria that will be taken into account for the evaluation are:

- Relevance

- Quality and overall clarity of the proposed idea

- Impact of the proposed idea on the improvement of the educational system

- Data sources used

- Promotion of the quality of life of vulnerable groups

Phase II: Prototype development and face-to-face exhibition. April-June.

The participants whose ideas have been selected in the previous phase will develop the associated prototype and present it to the members of the Jury. For this they will have a period of 4 months.

The prototypes may be a functional solution, a visualization, a dynamic graphic element, a multimedia solution that simulates the service, or a functional demonstration that extrapolates an existing solution, for example from another sector, country or area.

In this case, the evaluation criteria will be:

- Ease of use

- Technical quality of the prototype

- Viability

- Quality of the presentation

The three proposals with the highest score will be the winners and will receive the following financial award:

- First classified: € 5,000

- Second classified: € 4,000

- Third classified: € 3,000

Examples of challenges to solve

Some examples of challenges that the submitted solutions could address are:

- Promote the acquisition and consolidation of healthy habits among citizens

- Achieve more effective medical care and improve the patient experience

- Increase public health and epidemiological surveillance capacities

- Obtain better health and wellness outcomes for the dependent, chronically ill, or elderly

- Ensure that all people have the maximum opportunity to preserve their health and well-being

- Optimize the training and development of healthcare professionals

- Promote research to help discover healthy patterns and new treatments

- Encourage the sharing of successful experiences

- Leverage data to improve patient and service user safety

Who can participate?

Registration is open to any person, team or company domiciled in the European Union, who wants to contribute to economic and social development in the field of health and well-being.

How can I sign up?

You can register through the red.es electronic area. To do so, you will need your password or electronic certificate. The deadline is February 15, 2022 at 1:00 p.m.

You must include a detailed description of your idea and its value to society, as well as a descriptive video of the idea in Spanish, between 120 and 180 seconds long.

Full information is available on red.es and in the rules of the Challenge.

Get inspired by the finalist works from previous editions!

For inspiration, take a look at the finalist projects from previous years:

- III Challenge Aporta: "The value of data in digital education"

- II Challenge Aporta: "The value of data for the agri-food, forestry and rural sectors"

- I Challenge Aporta: "The value of data for the Administration"

Go ahead and participate!

If you would like to help spread this information to others, we offer materials (in Spanish) that will make it easier for you:

On November 2, the awards ceremony took place for the winners of the 5th edition of the Castilla y León Open Data Contest. This competition, organized by the Ministry of Transparency, Spatial Planning and Foreign Action of the Junta de Castilla y León, recognizes projects that provide ideas, studies, services, websites or mobile applications built with datasets from its Open Data Portal.

The event was attended by Francisco Igea, Vice President of the Junta de Castilla y León, and Joaquín Meseguer, Director General of Transparency and Good Governance, who presented the awards to the winners.

From the 37 applications received, a jury of experts in the field selected 8 winning projects across the various categories.

Ideas Category

In this category, proposals to develop studies, services, websites or applications for mobile devices are awarded.

- The first prize, € 1,500, went to APP SOLAR-CYL, a web tool for optimal sizing of photovoltaic solar self-consumption installations. Aimed at both citizens and energy managers of the Public Administration, the solution seeks to support the analysis of the technical and economic viability of this type of system. The idea was presented by professors from the Electrical Engineering Area, members of the ERESMA (Energy Resources' Smart Management) research group at the University of León: Miguel de Simón Martín, Ana María Díez Suárez, Alberto González Martínez, Álvaro de la Puente Gil and Jorge Blanes Peiró.

- The second prize, € 500, went to Dónde te esperan: Recommender for municipalities in Spain, by Mario Varona Bueno. With this tool, users can find the best place to live based on their preferences, or even at random.

Products and Services Category

This category differs from the previous one in that it rewards not ideas but finished projects that are accessible to all citizens on the web through a URL.

- Repuéblame won the first prize, which this time was € 2,500. Presented by Guido García Bernardo and Javier García Bernardo, it is a website for rediscovering the best places to live or telework. The application catalogs the municipalities of Castilla y León using a series of numerical indicators of its own creation related to quality of life.

- The second prize, € 1,500, went to Plagrícola: Avisos de Plagas Agrícolas CyL by José María Pérez Ramos. It is a mobile app that informs farmers of the pest alerts published by the Instituto Tecnológico Agrario de Castilla y León (Itacyl), so that they can carry out the necessary preventive and curative measures.

- disCAPACIDAD.es completes the podium, winning the third prize of € 500. Its creator, Luis Hernández Fuentevilla, has developed a website that centralizes employment-related offers and aid for people with disabilities in Castilla y León.

- This category also included a €1,500 prize for students. The winner was Ruta x Ruta x Castilla y León, presented by Adrián Arroyo Calle. This web application lets users look up routes of all kinds, as well as nearby points of interest such as monuments, restaurants or events. It also allows users to share their tracks (GPS recordings).

In addition, honorable mentions were given to two student entries: "APP BOCYL Boletín Oficial Castilla y León", by Nicolás Silva Brenes, and "COVID CyL", by Silvia Pedrón Hermosa. These mentions aim to encourage students to work with data and to submit their projects to future calls.

Data Journalism Category

This category is designed to reward relevant journalistic pieces published or updated in any medium, whether written or audiovisual.

- MAPA COVID-19: check how many cases of coronavirus there are and how occupied your hospital is, by the Maldita association against misinformation, won the first prize of € 1,500. After compiling all the available data that regional governments publish about their hospitals, Maldita created a map showing how many coronavirus cases there are and how occupied the different hospitals are. ICU occupancy information is also included for some hospitals.

- The second prize, € 1,000, went to De cero a 48.000 euros: who earns how much in the mayors' offices of Castilla y León. Annual Compensation Explorer. Through various visualizations, its creator, Laura Navarro Soler, presents information available in the Información Salarial Puestos de la Administración (ISPA). This information is cross-referenced with other data, such as the number of inhabitants in each region or the level of dedication (exclusive, partial or none).

The "Didactic Resource" category was declared void, as the jury considered that the entries submitted did not meet the criteria set out in the rules.

In total, the 8 awarded projects have received 12,000 euros. They will also have the option of participating in a business development consultancy.

Congratulations to all the winners!

These infographics show examples of the use of open data in certain sectors, as well as data from studies on its impact. New content will be published periodically.

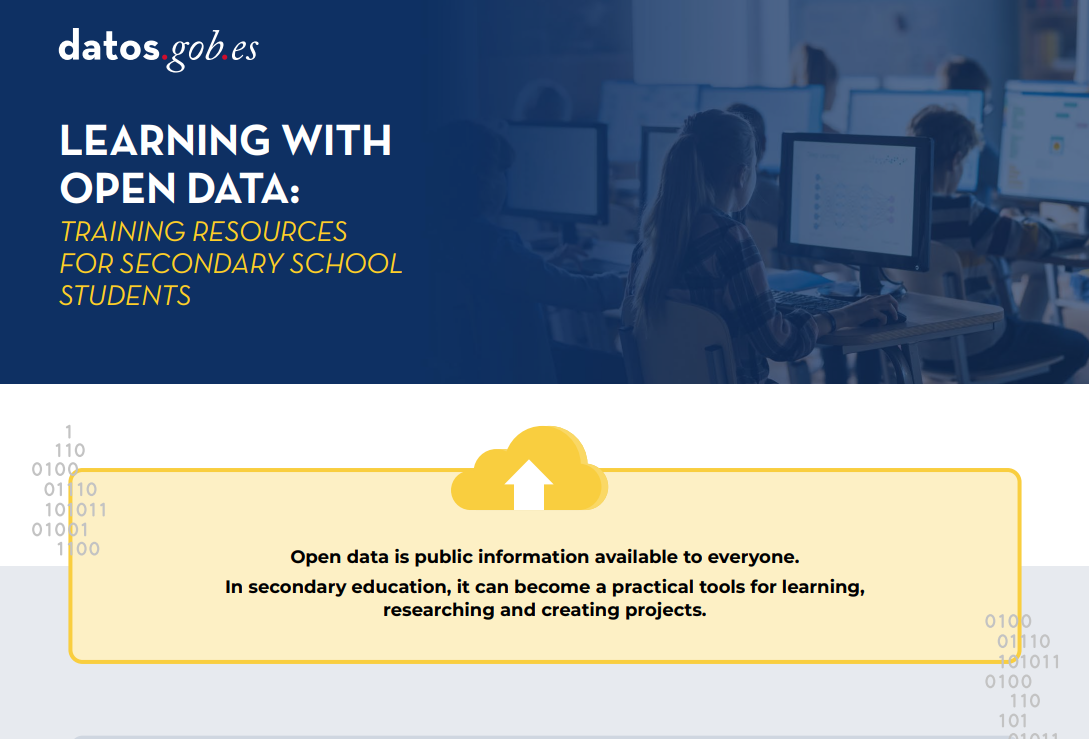

1. Learning with open data: training resources for secondary school students

Published: November 2025

This compilation of educational resources serves as a guide to learning more about open data and related technologies. Specially designed for students, it compiles tips for harnessing the potential of data sets, videos, and much more.

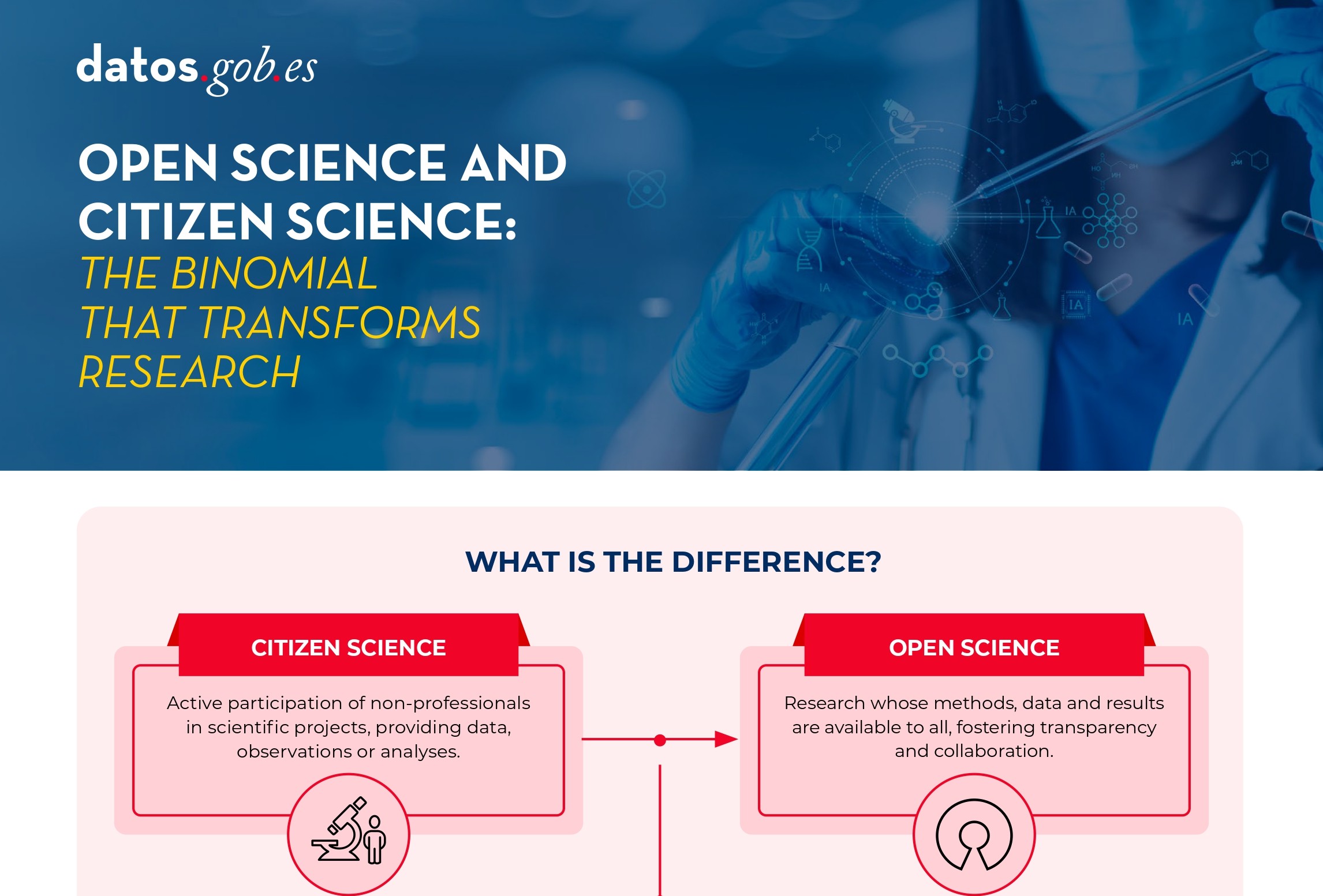

2. Open science and citizen science: the combination that transforms research

Published: July 2025

Planning the publication of open data from the outset of a citizen science project is key to ensuring the quality and interoperability of the data generated, facilitating its reuse, and maximizing the scientific and social impact of the project.

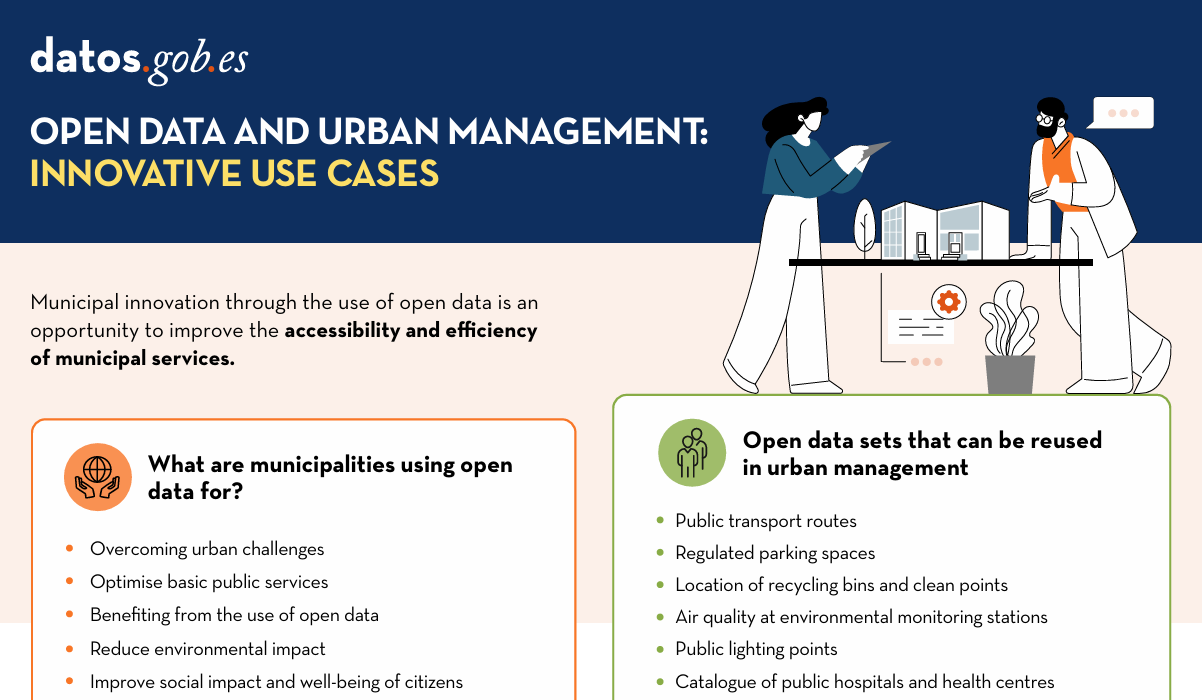

3. Open Data and Urban Management: Innovative Use Cases

Published: July 2024

Municipal innovation through the use of open data presents a significant opportunity to improve the accessibility and efficiency of municipal services. In this infographic, we collect examples of applications that contribute to the improvement of urban sectors such as transport and mobility, organisation of basic public services, environment and sustainability, and citizen services.

4. Open data for Sustainable City Development

Published: August 2023

In this infographic, we have gathered use cases that use open datasets to monitor and/or improve energy efficiency, transportation and urban mobility, air quality and noise levels, all issues that contribute to the proper functioning of urban centers.

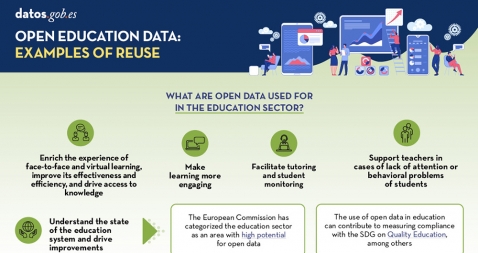

5. LegalTech: Transformative potential of legal services

Published: May 2023

Open data is key to strengthening and advancing education, and we must not forget that education is a universal right and one of the main tools for the progress of humanity. In this infographic we summarize the benefits of using open data in education.

6. LegalTech: Transformative potential of legal services

Published: August 2022

The LegalTech concept refers to the use of new technological processes and tools to offer more efficient legal services. For all these tools to work properly, valuable data is needed, and open data is a great opportunity in this respect. Find out more in this infographic.

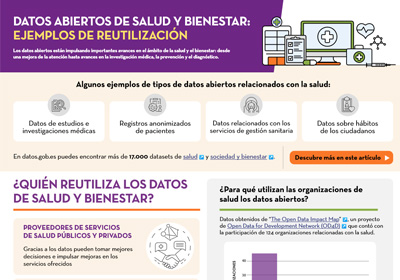

7. How is open data used in the health and welfare sector?

Published: September 2021

Open health data is essential for management and decision making by our governments, but it is also fundamental as a basis for solutions that help both patients and doctors. This infographic shows several examples, both of applications that collect health services and of tools for forecasting and diagnosing diseases, among others.

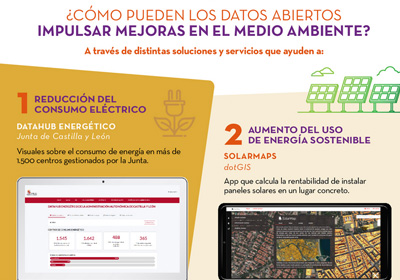

8. Open data use cases to care for the environment and fight climate change

Published: November 2020

This interactive infographic shows the strategic, regulatory and political situation affecting the world of open data in Spain and Europe. It includes the main points of the European Data Strategy, the Regulation on Data Governance in Europe and the Spain Digital 2025 plan, among others.

9. Public administrations faced with the reuse of public information

Published: August 2020

Public administrations play an important role in the open data ecosystem, both as information providers and consumers. This infographic contains a series of examples of success stories and best practices, compiled in the report "Las Administraciones Públicas ante la reutilización de la información pública" by the National Observatory of Telecommunications and the Information Society (ONTSI).

10. The importance of opening cultural data

Published: June 2020

Did you know that 90% of the world's cultural heritage has not yet been digitized? Discover in this infographic the benefits of open cultural data, as well as examples of the products that can be created through its reuse, and success stories of museums that share open collections.

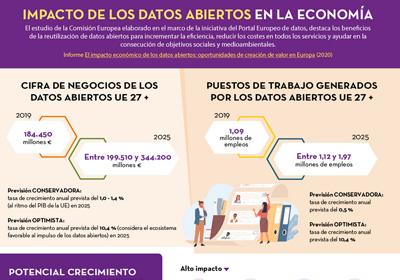

11. Open data, especially valuable for small and medium-sized companies

Published: March 2020

This infographic shows the results of the study "The Impact of open data: opportunities for value creation in Europe", conducted by the European Data Portal. Find out the expected annual growth rate, both in terms of turnover and jobs (only available in Spanish).