In recent months, the Canary Islands data initiative has pursued a strategy of centralization, aiming to give citizens access to public information through a single access point. With this aim, it launched a new version of its open data portal, data.canarias.es, and has continued to develop projects that present data in a simple, unified way. One example is the Government Organizational Chart, which was published recently.

Interesting data on the organizational structure and policy makers in a single portal

The Organizational Chart of the Government of the Canary Islands is a web portal that openly offers information on both the organic structure and the political representatives of the Government of the Canary Islands. It was launched by the General Directorate of Transparency and Citizen Participation, which reports to the Ministry of Public Administrations, Justice and Security of the Government of the Canary Islands.

From the outset, the Organization Chart has been designed with open data by default, accessibility and usability in mind. Through the API of the Canary Islands open data portal, the tool consumes data published there, such as the salaries of public officials and temporary staff. It also includes numerous sections with information on autonomous bodies, public entities, public business entities, commercial companies, public foundations, consortia and collegiate bodies, automatically extracted from the corporate tool Directory of Administrative Units and Registry and Citizen Services Offices (DIRCAC).

In turn, all the content of the Organization Chart is published automatically and periodically on the Canary Islands open data portal: the chart data itself, as well as the remuneration, the organic structure and the registry and citizen service offices. These datasets are updated automatically once a month by taking the data from the information systems and publishing it on the open data portal. This configuration is fully parameterizable and can be adapted to whatever frequency is considered necessary.

From the Organization Chart itself, all data can be downloaded both in an open, editable format (ODT) and in a format that can be viewed on any device (PDF).

What information is available in the Organization Chart?

Thanks to the Organizational Chart, citizens can find out who is part of the regional government. The information is divided into eleven main areas: the Presidency and the ten ministries.

The Organizational Chart provides the résumés, salaries and asset declarations of all the senior officials who make up the Government, as well as information on the temporary staff who work with them. It also displays the email addresses, office addresses and web pages of each area. All this information is constantly updated to reflect any changes that occur.

As for the agendas for transparency of public activity, which can be accessed from each public official's profile in the Organizational Chart, this work has made it possible to:

- Update the application so that agendas can be managed from any device (mobile, tablet, PC, etc.), making it easier for the people responsible to use them.

- Categorize events and highlight each category visually with a color code, making them easier for the public to find.

- Publish all changes made to the agendas in the Organization Chart immediately and automatically.

- Incorporate more information, such as the location of the events or details of the attendees.

- Download calendar data in open formats such as CSV or JSON, or in iCal format, which allows these events to be added to other calendars.

- Publish all the agenda information on the Canary Islands open data portal, including an API for direct consumption.
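The iCal export mentioned in the list relies on the iCalendar standard (RFC 5545). As an illustration only, here is a minimal R sketch that turns a table of agenda entries into iCalendar text. The column names (title, location, start, end), the UID domain and the sample entries are hypothetical, and this is not the implementation used by the Organization Chart.

```r
# Minimal sketch (not the portal's implementation): export agenda entries as
# iCalendar text following RFC 5545. Column names and the UID domain are hypothetical.

# Escape characters that must not appear unescaped in RFC 5545 TEXT values
ical_escape <- function(x) {
  x <- gsub("\\\\", "\\\\\\\\", x)   # backslash -> \\
  x <- gsub("([,;])", "\\\\\\1", x)  # comma or semicolon -> \, or \;
  gsub("\n", "\\\\n", x)             # line break -> \n
}

# Format a POSIXct timestamp as a UTC date-time such as 20211004T090000Z
ical_time <- function(t) format(t, "%Y%m%dT%H%M%SZ", tz = "UTC")

# Build one VEVENT per row and wrap them in a VCALENDAR
agenda_to_ical <- function(agenda) {
  events <- unlist(lapply(seq_len(nrow(agenda)), function(i) {
    c("BEGIN:VEVENT",
      paste0("UID:agenda-", i, "@example.org"),
      paste0("DTSTAMP:", ical_time(Sys.time())),
      paste0("DTSTART:", ical_time(agenda$start[i])),
      paste0("DTEND:", ical_time(agenda$end[i])),
      paste0("SUMMARY:", ical_escape(agenda$title[i])),
      paste0("LOCATION:", ical_escape(agenda$location[i])),
      "END:VEVENT")
  }))
  lines <- c("BEGIN:VCALENDAR", "VERSION:2.0",
             "PRODID:-//example//agenda-sketch//EN",
             events, "END:VCALENDAR")
  # RFC 5545 lines end with CRLF; folding of lines over 75 octets is not handled
  paste0(paste(lines, collapse = "\r\n"), "\r\n")
}

# Usage with two made-up agenda entries
agenda <- data.frame(
  title    = c("Governing Council meeting", "Press conference"),
  location = c("Sala A, planta 2", "Press room"),
  start    = as.POSIXct(c("2021-10-04 09:00", "2021-10-05 12:00"), tz = "UTC"),
  end      = as.POSIXct(c("2021-10-04 11:00", "2021-10-05 12:30"), tz = "UTC"),
  stringsAsFactors = FALSE
)
ics <- agenda_to_ical(agenda)
cat(ics)
```

Escaping matters here because a location such as "Sala A, planta 2" contains a comma, which RFC 5545 treats as a value separator unless it is escaped.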

At the moment, the agendas of the members of the Governing Council and of the vice-counselors have been published, and the agendas of the remaining public positions in the Government of the Canary Islands are planned to be incorporated progressively.

The Organization Chart was presented at the International Congress on Transparency, held last September in Alicante, as an example not only of data openness and reuse but also of transparency and accountability. It has been developed by a team of people committed to transparency, accountability and open data, all of them principles of Open Government, with the aim of offering better services to citizens.

1. Introduction

Data visualization is a task linked to data analysis that aims to represent the information underlying the data graphically. Visualizations play a fundamental role in communicating data, since they allow conclusions to be drawn in a visual and understandable way and also help detect patterns, trends and anomalous data or project predictions, among other functions. This makes them applicable across any process in which data is involved. The possibilities are numerous, from basic representations such as line graphs, bar charts or pie charts to complex visualizations built as interactive dashboards.

Before building an effective visualization, we must pre-process the data: paying attention to how they are obtained and validating their content, making sure they contain no errors and are in an adequate, consistent format for processing. Data pre-processing is an essential first step in any data analysis task that aims to produce effective visualizations.

A series of practical data visualization exercises based on open data available on the datos.gob.es portal or other similar catalogues will be presented periodically. They will describe, in a simple way, the stages needed to obtain the data and to perform the transformations and analyses relevant to creating interactive visualizations, from which we can extract as much information as possible in the final conclusions. Each exercise will use simple, properly documented code as well as free, open tools. All the material generated will be available for reuse in the Data Lab repository on GitHub.

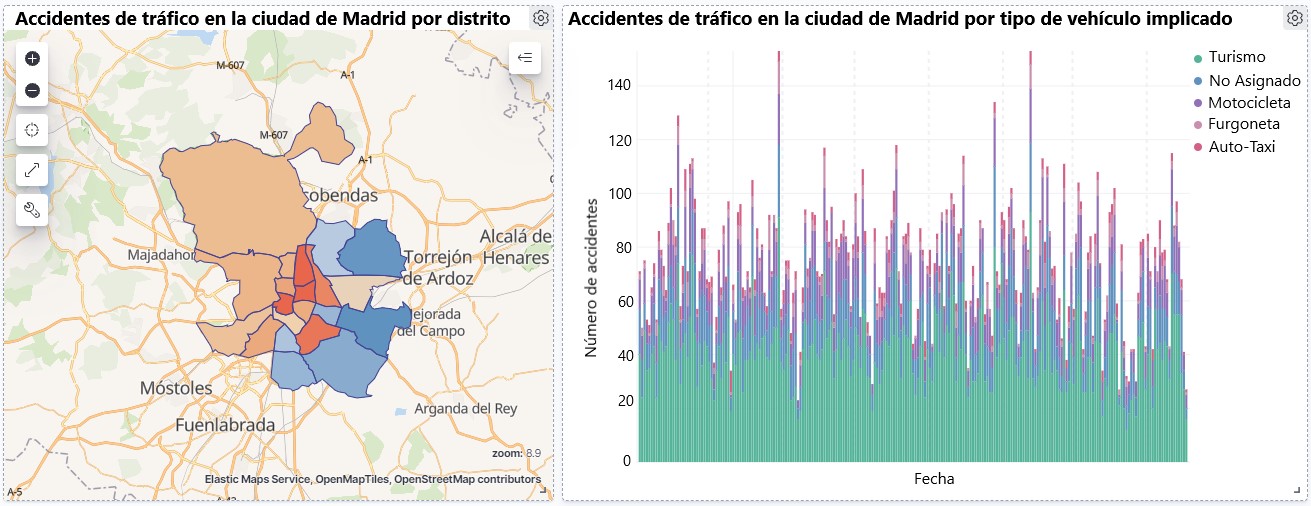

Visualization of traffic accidents occurring in the city of Madrid, by district and type of vehicle

2. Objectives

The main objective of this post is to learn how to build an interactive visualization from open data available on this portal. For this exercise, we have chosen a dataset that covers a long period and contains relevant information on the traffic accidents recorded in the city of Madrid. From these data, we will identify the most common types of accident in Madrid and the influence of variables such as age, vehicle type or the severity of the injuries caused.

3. Resources

3.1. Datasets

For this analysis, we have selected a dataset available on datos.gob.es on traffic accidents in the city of Madrid, published by the City Council. It contains a time series covering the period 2010 to 2021, with different subcategories that make it easier to analyze the characteristics of the accidents, such as the environmental conditions in which each accident occurred or the type of accident. Information on the structure of the data files is available in separate documents for 2010-2018 and for 2019 onwards. Note that the data before and after 2019 are inconsistent because the data structure changed. This is a common situation that data analysts must face when pre-processing data, and it stems from the lack of a homogeneous data structure over time: for example, changes in the number of variables, in the type of the variables or in the units of measurement. This is a compelling reason to accompany every open dataset with complete documentation explaining its structure.
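One way to cope with structural changes like these is to map every known spelling of a column to a single harmonized name before stacking the yearly files. The base-R sketch below illustrates that idea on two tiny made-up tables. The column names and the mapping are hypothetical. The processing in this post takes a different route and merges the differently named columns after binding the files.

```r
# Minimal sketch: harmonize column names from files with different structures
# before stacking them. Tables, column names and mapping are all hypothetical.
old_file <- data.frame(FECHA = "01/01/2018", TRAMO_EDAD = "25-29",
                       stringsAsFactors = FALSE)
new_file <- data.frame(fecha = "01/01/2019", rango_edad = "25-29",
                       stringsAsFactors = FALSE)

# Every known spelling of a column mapped to one harmonized name
name_map <- c(FECHA = "FECHA", fecha = "FECHA",
              TRAMO_EDAD = "RANGO_EDAD", rango_edad = "RANGO_EDAD")

# Rename the columns found in the mapping and leave the rest untouched
harmonize <- function(df, map) {
  known <- names(df) %in% names(map)
  names(df)[known] <- unname(map[names(df)[known]])
  df
}

# rbind() on data frames matches columns by name, so the files now stack cleanly
combined <- rbind(harmonize(old_file, name_map), harmonize(new_file, name_map))
print(combined)
```

Keeping the mapping in a single named vector also documents the structural changes, which can be updated whenever a new file format appears.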

3.2. Tools

R (version 4.0.3) and RStudio with the R Markdown add-on have been used to pre-process the data (setting up the working environment, programming and writing).

R is an interpreted, object-oriented, open-source programming language, initially created for statistical computing and graphical representation. Today it is a very powerful, continuously updated tool for all types of data processing and manipulation. It can be used through a dedicated development environment, RStudio, which is also open source.

The Kibana tool has been used for the creation of the interactive visualization.

Kibana is an open-source tool that belongs to the Elastic Stack product suite (Elasticsearch, Beats, Logstash and Kibana) and enables the creation of visualizations and the exploration of data indexed in the Elasticsearch analytics engine.

If you want to know more about these tools or about any other tools that can help you process data and create interactive visualizations, you can consult the report "Data processing and visualization tools".

4. Data processing

To carry out the subsequent analyses and visualizations, the data must be prepared adequately so that the results are consistent and effective. We must perform an exploratory data analysis (EDA) to get to know and understand the data we want to work with. The main objective of this pre-processing is to detect possible anomalies or errors that could affect the quality of the subsequent results and to identify the patterns of information contained in the data.

To make it easier for readers who are not programming specialists to follow, the R code included below is designed to be easy to understand rather than to maximize efficiency, so more advanced readers may find more efficient ways of coding some functionalities. Readers can reproduce this analysis if they wish, as the source code is available on datos.gob.es's GitHub account. The code is provided as a plain-text document that, once loaded into the development environment, can easily be run or modified.

4.1. Installation and loading of libraries

For this analysis, we need to install a series of R packages in addition to the base distribution and load the functions and objects they define into the working environment. There are many packages available in R, but the most suitable for working with this dataset are tidyverse, lubridate and data.table. Tidyverse is a collection of R packages (it includes dplyr, ggplot2 and readr, among others) designed specifically for data science, which facilitates loading and processing data, creating graphical representations and other essential data analysis tasks. Getting the most out of the packages it bundles requires building up knowledge progressively. The lubridate package will be used to handle date variables. Finally, the data.table package allows large datasets to be managed more efficiently. These packages need to be downloaded and installed in the development environment.

```r
# List of libraries we want to install and load in our development environment
librerias <- c("tidyverse", "lubridate", "data.table")

# Install any library that is missing, then load it into the working environment
package.check <- lapply(librerias, FUN = function(x) {
  if (!require(x, character.only = TRUE)) {
    install.packages(x, dependencies = TRUE)
    library(x, character.only = TRUE)
  }
})
```

4.2. Loading and cleaning data

a. Loading datasets

The data we are going to use in the visualization are split into one CSV file per year. Since we want to analyze several years, we must download all the datasets of interest and load them into our development environment.

To do this, we create the working directory "datasets", into which we will download all the datasets. We use two lists, one with all the URLs where the datasets are located and another with the names we assign to each file saved on our machine, which makes it easier to refer to these files later.

```r
# Create a folder in the working directory to store the downloaded datasets
if (!dir.exists("datasets")) {
  dir.create("datasets")
}
# Move into that folder
setwd("datasets")

# List of the datasets we want to download
datasets <- c("https://datos.madrid.es/egob/catalogo/300228-10-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-11-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-12-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-13-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-14-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-15-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-16-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-17-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-18-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-19-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-21-accidentes-trafico-detalle.csv",
              "https://datos.madrid.es/egob/catalogo/300228-22-accidentes-trafico-detalle.csv")

# Names assigned to each file saved on our machine (one per year)
files <- c("Accidentalidad2010", "Accidentalidad2011", "Accidentalidad2012",
           "Accidentalidad2013", "Accidentalidad2014", "Accidentalidad2015",
           "Accidentalidad2016", "Accidentalidad2017", "Accidentalidad2018",
           "Accidentalidad2019", "Accidentalidad2020", "Accidentalidad2021")

# Download each dataset and read it into a list of tables
dt <- list()
for (i in seq_along(datasets)) {
  download.file(datasets[i], files[i])
  print(i)  # show progress
  dt[[i]] <- read_delim(files[i], delim = ";", escape_double = FALSE,
                        locale = locale(encoding = "WINDOWS-1252"), trim_ws = TRUE)
}
```

b. Creating the worktable

Once we have all the datasets loaded into our development environment, we create a single worktable that integrates all the years of the time series.

```r
# Stack all the yearly tables into a single worktable, matching columns by name
# and filling the columns missing from some years with NA
Accidentalidad <- rbindlist(dt, use.names = TRUE, fill = TRUE)
```

Once the worktable has been generated, we must solve one of the most common problems in data pre-processing: inconsistent variable names across the files that make up the time series. This anomaly produces variables with different names that we know represent the same information. Here, the correspondence is explained in the data dictionary included in the file documentation; if it were not, we would have to observe and explore the files descriptively. For example, the age-range variable is recorded under one column name for 2010 to 2018 and under a different one for 2019 to 2021, although both contain the same information. To solve this problem, we must merge the variables that present this anomaly into a single variable.

```r
# unite() merges several columns into one. We pass the table, the name of the new
# variable and the positions of the columns to merge; na.rm = TRUE drops the NAs,
# so each row keeps the value from whichever year's column is filled in.
# Note: unite() places the new column where the first selected column was and drops
# the others, so column positions shift after each call; check names(Accidentalidad)
# before choosing the positions for the next one.
Accidentalidad <- unite(Accidentalidad, LESIVIDAD, c(25, 44), remove = TRUE, na.rm = TRUE)
Accidentalidad <- unite(Accidentalidad, NUMERO_VICTIMAS, c(20, 27), remove = TRUE, na.rm = TRUE)
Accidentalidad <- unite(Accidentalidad, RANGO_EDAD, c(26, 35, 42), remove = TRUE, na.rm = TRUE)
Accidentalidad <- unite(Accidentalidad, TIPO_VEHICULO, c(20, 27), remove = TRUE, na.rm = TRUE)
```

Once we have the table with the complete time series, we create a new table containing only the variables that are relevant to the interactive visualization we want to build.

```r
# Keep only the variables needed for the visualization
Accidentalidad <- Accidentalidad %>%
  select(c("FECHA", "DISTRITO", "LUGAR ACCIDENTE", "TIPO_VEHICULO", "TIPO_PERSONA",
           "TIPO ACCIDENTE", "SEXO", "LESIVIDAD", "RANGO_EDAD", "NUMERO_VICTIMAS"))
```

c. Variable transformation

Next, we examine the types and values of the variables and convert those that need it, so that we can later perform aggregations, graphs or other statistical analyses.

```r
# Convert the date variable to Date (dmy() parses day-month-year dates)
Accidentalidad$FECHA <- dmy(Accidentalidad$FECHA)

# Convert the remaining categorical variables (as named after select()) to factors
Accidentalidad$`TIPO ACCIDENTE` <- as.factor(Accidentalidad$`TIPO ACCIDENTE`)
Accidentalidad$TIPO_VEHICULO <- as.factor(Accidentalidad$TIPO_VEHICULO)
Accidentalidad$TIPO_PERSONA <- as.factor(Accidentalidad$TIPO_PERSONA)
Accidentalidad$RANGO_EDAD <- as.factor(Accidentalidad$RANGO_EDAD)
Accidentalidad$SEXO <- as.factor(Accidentalidad$SEXO)
Accidentalidad$LESIVIDAD <- as.factor(Accidentalidad$LESIVIDAD)
Accidentalidad$DISTRITO <- as.factor(Accidentalidad$DISTRITO)
```

d. Creation of new variables

Let's split the variable "FECHA" into a hierarchy of date variables: "Año" (year), "Mes" (month) and "Dia" (day of the week). This is very common in data analytics, since it is often useful to analyze other time ranges (years, months, weeks or any other unit of time) or to generate aggregations by day of the week.
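The idea can be checked with base R alone on two known dates. In this sketch, the dates are arbitrary examples and the Spanish weekday labels mirror the ones used in this post. The day of the week comes from `POSIXlt$wday`, which counts from 0 = Sunday. The weekday has to be derived from the day of the week, not from the month. lubridate's `wday()` also returns 1 for Sunday by default, which matches a list of labels that starts with "Domingo".

```r
# Minimal base-R sketch: derive year, month and day of the week from dates.
# The two dates are arbitrary examples (1 January 2010 was a Friday,
# 30 June 2021 a Wednesday).
fechas <- as.Date(c("01/01/2010", "30/06/2021"), format = "%d/%m/%Y")

lt  <- as.POSIXlt(fechas)
yr  <- lt$year + 1900   # POSIXlt counts years from 1900
mon <- lt$mon + 1       # POSIXlt months are 0-based (0 = January)
wd  <- lt$wday          # day of the week: 0 = Sunday, ..., 6 = Saturday

# Label the weekdays starting from Sunday, as POSIXlt does
dias <- c("Domingo", "Lunes", "Martes", "Miercoles", "Jueves", "Viernes", "Sabado")
weekday <- factor(dias[wd + 1], levels = dias)
print(data.frame(fechas, yr, mon, weekday))
```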

```r
# Year variable
Accidentalidad$Año <- year(Accidentalidad$FECHA)
Accidentalidad$Año <- as.factor(Accidentalidad$Año)

# Month variable, labeled with the month names
Accidentalidad$Mes <- month(Accidentalidad$FECHA)
Accidentalidad$Mes <- as.factor(Accidentalidad$Mes)
levels(Accidentalidad$Mes) <- c("Enero", "Febrero", "Marzo", "Abril", "Mayo", "Junio", "Julio",
                                "Agosto", "Septiembre", "Octubre", "Noviembre", "Diciembre")

# Day-of-week variable: wday() returns 1 = Sunday, ..., 7 = Saturday by default
Accidentalidad$Dia <- wday(Accidentalidad$FECHA)
Accidentalidad$Dia <- as.factor(Accidentalidad$Dia)
levels(Accidentalidad$Dia) <- c("Domingo", "Lunes", "Martes", "Miercoles", "Jueves", "Viernes", "Sabado")
```

e. Detection and processing of missing data

Detecting and handling missing data (NAs) is essential for processing the variables in the table, since missing values can cause problems when performing aggregations, graphs or statistical analyses.
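To see what such checks return, here is a base-R sketch on a small made-up table. `sum(is.na())` counts all missing cells, and `colMeans(is.na())` gives the share of missing values per column, multiplied by 100 to obtain a percentage. The sketch also shows a common pitfall when filling NAs in a factor: a factor only accepts values that are already among its levels, so the new level has to be added first.

```r
# Minimal sketch on a made-up table with some missing values
toy <- data.frame(
  DISTRITO  = c("CENTRO", NA, "SALAMANCA", NA),
  SEXO      = c("Hombre", "Mujer", NA, "Hombre"),
  LESIVIDAD = c(NA, NA, NA, "Herido leve"),
  stringsAsFactors = FALSE
)

total_na <- sum(is.na(toy))             # total number of missing cells
pct_na   <- colMeans(is.na(toy)) * 100  # percentage of missing values per column
print(total_na)
print(pct_na)

# Pitfall when filling NAs in a factor: add the new level before assigning it,
# otherwise the assignment produces NA with an "invalid factor level" warning
f <- factor(c("CENTRO", NA))
levels(f) <- union(levels(f), "No asignado")
f[is.na(f)] <- "No asignado"
print(f)
```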

Next, we check the table for missing data (NAs):

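```r
# Total number of NAs in the dataset
sum(is.na(Accidentalidad))
# Percentage of NAs in each variable
colMeans(is.na(Accidentalidad)) * 100
```

Once the NAs in the dataset have been detected, we must treat them. In this case, since all the variables of interest are categorical, we will fill in the missing values with a new category, "No asignado" (unassigned), so that we lose neither sample size nor relevant information.

```r
# Replace the NAs with the value "No asignado" in the factor and text columns.
# A factor only accepts values that are already among its levels, so the new level
# is added first; dates and numeric columns are left untouched.
for (col in names(Accidentalidad)) {
  x <- Accidentalidad[[col]]
  if (is.factor(x)) {
    levels(x) <- union(levels(x), "No asignado")
  } else if (!is.character(x)) {
    next
  }
  x[is.na(x)] <- "No asignado"
  Accidentalidad[[col]] <- x
}
```

f. Reassigning the levels of the variables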

Once the table contains the variables of interest, we can analyze the data and the categories of each variable in more depth. Examining each variable separately, we can see that some have repeated categories that differ only in accents, special characters or capitalization. We will reassign the levels of the variables that require it so that future visualizations and statistical analyses are built efficiently and without errors.
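A simple way to merge such duplicates is to normalize the labels before converting them to factors. The sketch below works on made-up labels. It removes the accents from Spanish vowels with `chartr()`, trims surrounding spaces and converts everything to upper case. It is only an illustration: other special characters are not handled, so it is not a general-purpose normalizer.

```r
# Minimal sketch: merge categories that differ only in accents, spaces or case.
# Only the accented Spanish vowels are mapped; other characters are left as-is.
normalize_label <- function(x) {
  x <- chartr("\u00e1\u00e9\u00ed\u00f3\u00fa\u00c1\u00c9\u00cd\u00d3\u00da",
              "aeiouAEIOU", x)
  toupper(trimws(x))
}

# Made-up raw labels; "Mi\u00e9rcoles" is Miercoles written with an accented e
raw_labels <- c("Mi\u00e9rcoles", "MIERCOLES", "miercoles ", "Jueves")
clean <- factor(normalize_label(raw_labels))
print(levels(clean))
```

The four raw labels collapse into two categories, which is what avoids counting the same weekday twice in later aggregations.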

For reasons of space, this post only shows one example: the variable "LESIVIDAD" (injury severity). Until 2018, this variable was coded with a series of categories (IL, HL, HG, MT), while from 2019 onwards other categories were used (values from 0 to 14). Fortunately, this task is easy to approach, since it is documented in the structure information that accompanies each dataset. As noted earlier, such documentation is not always available, and without it this kind of transformation becomes much harder.
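The recoding relies on assigning a new vector to `levels()`, and two details matter. First, `levels()` returns the existing categories in sorted order, so the new labels must follow that same order, which is why the levels are checked before and after the assignment. Second, repeating a label merges the corresponding categories into one. The small sketch below uses hypothetical codes and a hypothetical mapping.

```r
# Minimal sketch of recoding with levels<- (codes and mapping are hypothetical)
lesividad <- factor(c("HL", "02", "HG", "01", "HL"))

# levels() lists the categories in sorted order: "01" "02" "HG" "HL"
print(levels(lesividad))

# One new label per existing level, in that same order;
# repeated labels merge the corresponding levels into one
levels(lesividad) <- c("Herido leve", "Herido grave", "Herido grave", "Herido leve")
print(table(lesividad))
```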

```r
# Check the categories of the variable "LESIVIDAD"
levels(Accidentalidad$LESIVIDAD)
# Assign the new categories: one label per existing level, in the same order;
# repeated labels merge the corresponding levels
levels(Accidentalidad$LESIVIDAD) <- c("Sin asistencia sanitaria", "Herido leve", "Herido leve",
                                      "Herido grave", "Fallecido", "Herido leve", "Herido leve",
                                      "Herido leve", "Ileso", "Herido grave", "Herido leve",
                                      "Ileso", "Fallecido", "No asignado")
# Check the categories of the variable again
levels(Accidentalidad$LESIVIDAD)
```

4.3. Dataset summary

Let's see what variables and structure the new dataset presents after the transformations made:

```r
str(Accidentalidad)
summary(Accidentalidad)
```

The output of these commands is omitted for readability. The main characteristics of the dataset are:

- It is composed of 14 variables: 1 date variable and 13 categorical variables.

- The time range covers 01-01-2010 to 30-06-2021 (the end date may vary, since the 2021 dataset is updated periodically).

- For space reasons in this post, not all available variables have been considered for analysis and visualization.

4.4. Save the generated dataset

Once the dataset has the structure and variables we need for the visualization, we will save it as a CSV file so that we can later perform other statistical analyses or use it in other data processing or visualization tools, such as the one we address below. It is important to save it with UTF-8 (Unicode Transformation Format) encoding so that special characters are correctly identified by any software.
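To check what saving in UTF-8 actually means, the sketch below uses a made-up one-column table and assumes an R session with a UTF-8 locale. It writes a CSV with `fileEncoding = "UTF-8"`, inspects the raw bytes on disk, where the accented "í" of "Chamartín" is stored as the two bytes C3 AD, and reads the file back with the same declared encoding.

```r
# Minimal sketch: write a small made-up table as UTF-8 CSV and inspect the bytes.
# "\u00ed" is the accented i in "Chamartin"; a UTF-8 locale is assumed.
toy <- data.frame(DISTRITO = c("Chamart\u00edn", "Centro"), stringsAsFactors = FALSE)

path <- tempfile(fileext = ".csv")
write.csv(toy, file = path, fileEncoding = "UTF-8", row.names = FALSE)

# In UTF-8, the character U+00ED is stored as the two bytes C3 AD
bytes <- readBin(path, what = "raw", n = file.size(path))
has_utf8_i <- any(bytes[-length(bytes)] == as.raw(0xc3) & bytes[-1] == as.raw(0xad))
print(has_utf8_i)

# Reading the file back with the same declared encoding recovers the original text
back <- read.csv(path, fileEncoding = "UTF-8", stringsAsFactors = FALSE)
print(all(back$DISTRITO == toy$DISTRITO))
```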

```r
# Save the dataset as a UTF-8 encoded CSV file
write.csv(Accidentalidad, file = "Accidentalidad.csv", fileEncoding = "UTF-8")
```

5. Creating the visualization of traffic accidents in the city of Madrid with Kibana

To create this interactive visualization, we used the Kibana tool (in its free version) in our local environment. Before building the visualization, the software must be installed. To do so, we followed the download and installation tutorial provided by Elastic.

Once the Kibana software is installed, we proceed to develop the interactive visualization. Below there are two video tutorials, which show the process of creating the visualization and interacting with it.

This first video tutorial shows the visualization development process by performing the following steps:

- Loading the data into Elasticsearch and generating an index in Kibana, which lets us interact with the data and its variables practically in real time.

- Generation of the following graphical representations:

- Line graph representing the time series of traffic accidents in the city of Madrid.

- Horizontal bar chart showing the most common accident types.

- Thematic map showing the number of accidents in each district of the city of Madrid. To create this visual, you need to download the "dataset containing the georeferenced districts in GeoJSON format".

- Construction of the dashboard integrating the visuals generated in the previous step.
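Data can also be loaded into Elasticsearch programmatically through its `_bulk` endpoint, which expects newline-delimited JSON with one action line followed by one document line per record. The R sketch below builds such a body from a data frame. The index name is hypothetical, all values are sent as strings, and NA values are dropped. `encodeString()` serves as a lightweight JSON escaper, so a dedicated JSON library would be preferable for production use. This is an alternative sketch, not the procedure shown in the videos.

```r
# Minimal sketch: build the newline-delimited JSON body expected by the
# Elasticsearch _bulk endpoint (an action line followed by a document line per row).
# Assumptions: all values are sent as JSON strings and NA values are omitted;
# encodeString() escapes quotes, backslashes and line breaks.
to_bulk_ndjson <- function(df, index) {
  action <- sprintf('{"index":{"_index":%s}}', encodeString(index, quote = '"'))
  docs <- vapply(seq_len(nrow(df)), function(i) {
    vals <- vapply(df[i, , drop = FALSE], as.character, character(1))
    vals <- vals[!is.na(vals)]
    fields <- paste0(encodeString(names(vals), quote = '"'), ":",
                     encodeString(unname(vals), quote = '"'))
    paste0("{", paste(fields, collapse = ","), "}")
  }, character(1))
  # Interleave action and document lines; a bulk body must end with a newline
  paste0(paste(rbind(action, docs), collapse = "\n"), "\n")
}

# Usage with a made-up two-row table and a hypothetical index name
toy <- data.frame(DISTRITO = c("CENTRO", "SALAMANCA"), SEXO = c("Hombre", NA),
                  stringsAsFactors = FALSE)
body <- to_bulk_ndjson(toy, "accidentes")
cat(body)
```

The resulting body would be sent in a POST request to the cluster's `_bulk` endpoint with the `Content-Type: application/x-ndjson` header.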

In this second video tutorial we will show the interaction with the visualization that we have just created:

6. Conclusions

Observing the visualization of the data on traffic accidents that occurred in the city of Madrid from 2010 to June 2021, the following conclusions can be drawn:

- The number of accidents in the city of Madrid is stable over the years, except in 2019, when a sharp increase is observed, and in the second quarter of 2020, when a significant decrease coincides with the first state of alarm due to the COVID-19 pandemic.

- Every year, the number of accidents drops in August.

- Men tend to have a significantly higher number of accidents than women.

- The most common type of accident is the double collision, followed by the collision of an animal and the multiple collision.

- About 50% of accidents do not cause harm to the people involved.

- The districts with the highest concentration of accidents are: the district of Salamanca, the district of Chamartín and the Centro district.

Data visualization is one of the most powerful mechanisms for autonomously exploiting and analyzing the implicit meaning of data, regardless of the degree of the user's technological knowledge. Visualizations allow us to build meaning on top of data and create narratives based on graphical representation.

If you want to learn how to predict future traffic accident rates from these data using Artificial Intelligence techniques, see the post "Emerging technologies and open data: Predictive Analytics".

We hope you liked this post and we will return to show you new data reuses. See you soon!

Life happens in real time and much of our life, today, takes place in the digital world. Data, our data, is the representation of how we live hybrid experiences between the physical and the virtual. If we want to know what is happening around us, we must analyze the data in real time. In this post, we explain how.

Introduction

Let's imagine the following situation: we visit our favorite online store, search for a product we want and get a message on screen saying that the price shown is from a week ago and that there is no information about the current price. Someone in charge of the store's data processes might say that this is the expected behavior, since prices are uploaded from the central system to the e-commerce site once a week. Fortunately, this experience is unthinkable in e-commerce today, but, contrary to what you might think, it is still common in many other processes of companies and organizations. Most of us have been registered in a business's customer database only to find, when we visit a store other than our usual one, that, oops, we are not listed as customers. Again, this is because the data processing (in this case, the customer database) is centralized and the data is loaded into peripheral systems (after-sales service, distributors, sales channels) in batch mode. In practice, this means that data updates can take days or even weeks.

As these examples show, thinking about data in batch mode can unwittingly ruin the experience of a customer or user, with serious consequences such as losing a customer, damaging the brand image or losing the best employees.

Benefits of using real-time data

There are situations in which data is simply either real-time or not. A very recognizable example is transactions, banking or otherwise. We cannot imagine a payment in a store not happening in real time (although payment terminals sometimes lose coverage, which causes annoying situations in physical stores). Nor can (or should) it happen that, when we pass through a highway toll booth, the barrier does not open in time (although we have probably all experienced some bizarre situation in this context).

However, in many processes and situations it is debatable whether to implement a real-time data strategy or simply follow conventional approaches while keeping the lag in data analysis and response times as low as possible. Below, we list some of the most important benefits of implementing real-time data strategies:

- Immediate reaction to errors. Errors happen, and data is no exception. With a real-time monitoring and alerting system, we can react to an error before it is too late.

- Drastic improvement in the quality of service. As we have mentioned, not having the right information when it is needed can ruin the experience of our service and, with it, cost us customers or potential customers. If our service fails, we must know about it immediately so that we can fix it. This is what separates organizations that have adapted to digital transformation from those that have not.

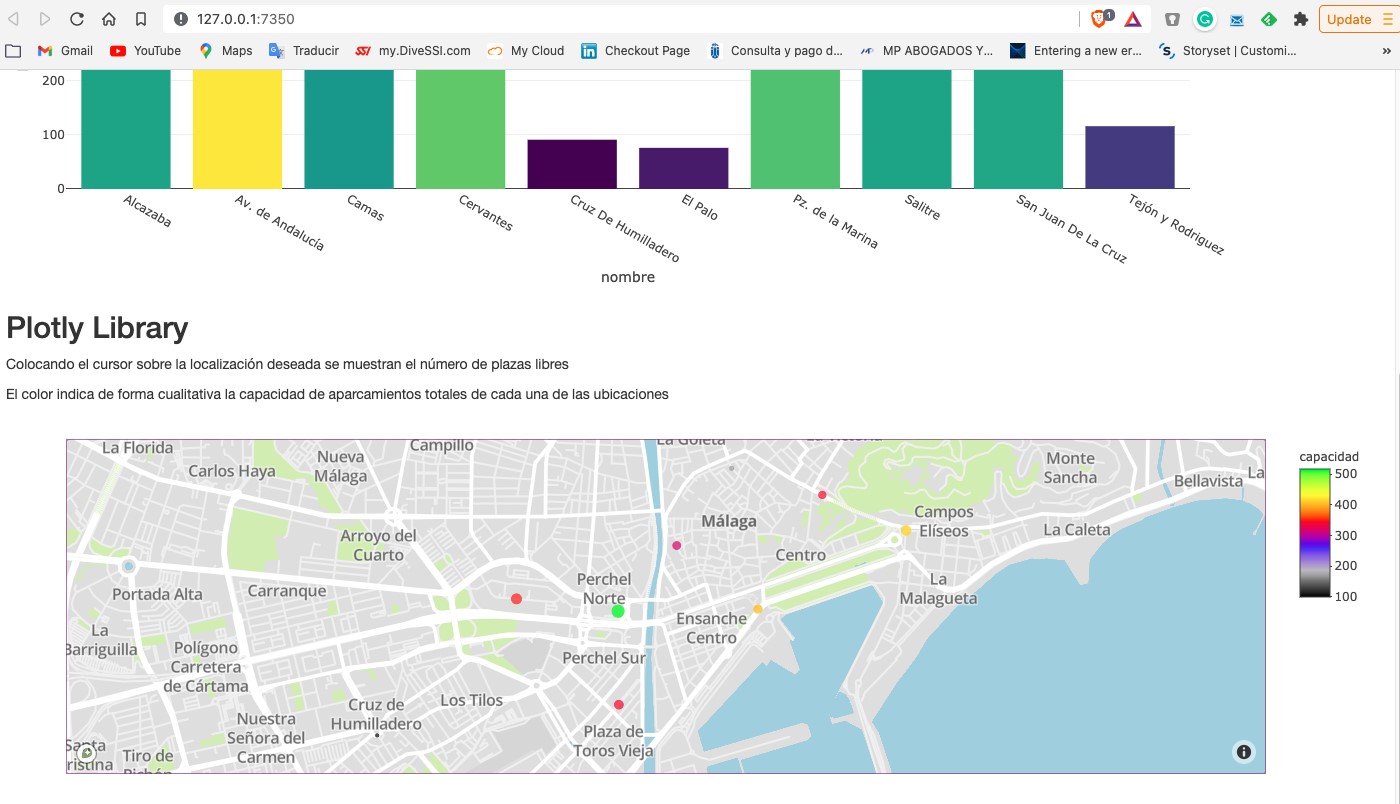

- Increased sales. Not having data in real time can cost a lot of money and profitability. Consider the following example, which we will look at in more detail in the practical section: if the service we provide depends on a limited capacity (a chain of restaurants, hotels or a parking lot, for example), it is in our interest to have our occupancy data in real time, since this lets us sell our available capacity more dynamically.

The technological part of real time

For years, data analysis was conceived in batch mode: historical data was loaded every so often, in processes executed only under certain conditions. The reason is that capturing and consuming data at the very moment it is generated involves a certain technological complexity. Traditional data warehouses and (relational) databases, for example, have certain limitations when it comes to handling fast transactions and executing operations on data in real time. There is a huge amount of documentation on this subject and on how technological solutions have evolved to overcome these barriers. It is not the purpose of this post to go into the technical details of capturing and analyzing data in real time. However, it is worth noting that there are two clear paradigms for building real-time solutions, and they need not be mutually exclusive.

- Solutions based on classic mechanisms and flows of data capture, storage (persistence) and exposure to specific consumption channels (such as a web page or an API).

- Solutions based on event-driven availability mechanisms, in which data is generated and published regardless of who and how it will be consumed.
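To make the second paradigm more concrete, here is a toy, in-process R sketch of the publish/subscribe pattern behind event-driven designs. Producers publish events to a topic without knowing who will consume them, and any number of consumers can subscribe to that topic. It is only an illustration: real event brokers add networking, persistence and delivery guarantees, and the topic name and events used here are hypothetical.

```r
# Toy in-process publish/subscribe broker (illustration only: no network,
# no persistence, no delivery guarantees). Topic names and events are hypothetical.
new_broker <- function() {
  subscribers <- list()   # topic -> list of callback functions
  list(
    subscribe = function(topic, callback) {
      subscribers[[topic]] <<- c(subscribers[[topic]], list(callback))
      invisible(NULL)
    },
    publish = function(topic, event) {
      # The producer does not know who (if anyone) consumes the event
      for (cb in subscribers[[topic]]) cb(event)
      invisible(length(subscribers[[topic]]))   # number of consumers notified
    }
  )
}

# Usage: two independent consumers of the same (made-up) occupancy events
broker <- new_broker()
received <- character(0)
broker$subscribe("parking/occupancy", function(e) {
  received <<- c(received, sprintf("%s: %d free", e$parking, e$free))
})
broker$subscribe("parking/occupancy", function(e) {
  if (e$free == 0) message("Alert: ", e$parking, " is full")
})
broker$publish("parking/occupancy", list(parking = "P1", free = 12L))
broker$publish("parking/occupancy", list(parking = "P2", free = 0L))
print(received)
```

Adding a new consumer only requires another `subscribe()` call, and the producer code does not change. This decoupling is the property that distinguishes event-driven designs from exposing data through a specific consumption channel.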

A practical example

As we usually do in this type of post, we illustrate the topic with a practical example that the reader can interact with. In this case, we are going to use an open dataset from the datos.gob.es catalog: specifically, a dataset containing information on the occupancy of public parking spaces in the city center of Malaga. The dataset is available at this link and can be explored in depth through this link. The data is accessible through this API. According to the dataset description, the update frequency is every 2 minutes. As mentioned above, this is a good example in which having the data available in real time[1] brings important advantages for both the service provider and the users of the service. Not many years ago it was hard to imagine having this information in real time, and we were satisfied with aggregated information at the end of the week or month on how the occupancy of parking spaces had evolved.

From this dataset, we have built an interactive app in which the user can follow the occupancy level in real time through graphic displays. The code of the example is available so that readers can reproduce it at any time.

In this example, we have seen how, from the moment the occupancy sensors report their status (free or occupied) until we consume the data in a web application, the same data passes through several systems and even has to be converted into a text file to be exposed to the public. A much more efficient system would publish the data to an event broker that can be subscribed to with real-time technologies. In any case, through this API we can capture the data in real time and display it in a web application ready for consumption, all with fewer than 150 lines of code. Would you like to try it?
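Capturing data through an API like this amounts to polling: querying the source at a fixed interval and processing each snapshot. For this dataset, every 2 minutes would match the documented update frequency. The sketch below is not the code of the example app. `fetch_fn` stands in for the real API call, and the fields name, capacity and free are assumptions, so the sketch is exercised here with a fake data source.

```r
# Minimal sketch of polling (not the code of the example app).
# fetch_fn is a hypothetical stand-in for the real API call; it is assumed to
# return a data frame with the columns name, capacity and free.
occupancy_pct <- function(capacity, free) round(100 * (capacity - free) / capacity, 1)

poll <- function(fetch_fn, interval_s = 120, n_times = 3) {
  history <- vector("list", n_times)
  for (i in seq_len(n_times)) {
    snapshot <- fetch_fn()
    snapshot$occupancy <- occupancy_pct(snapshot$capacity, snapshot$free)
    snapshot$polled_at <- Sys.time()
    history[[i]] <- snapshot
    if (i < n_times) Sys.sleep(interval_s)   # wait until the next expected update
  }
  do.call(rbind, history)
}

# Usage with a fake data source and no waiting, instead of the real API
fake_fetch <- function() {
  data.frame(name = c("P1", "P2"), capacity = c(200, 400), free = c(50, 0),
             stringsAsFactors = FALSE)
}
result <- poll(fake_fetch, interval_s = 0, n_times = 2)
print(result[, c("name", "occupancy")])
```

Injecting the fetch function keeps the polling logic testable without network access. It also shows the limitation the text points out: the consumer must keep asking for updates instead of being notified when the data changes.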

In conclusion, real-time data is now fundamental to most processes, not just space management or online commerce. As the volume of real-time data grows, we need to shift our thinking from a batch perspective to a real-time-first mindset: data should be available for real-time consumption from the moment it is generated, minimizing the number of operations performed on it before it can be consumed.

[1] The term real time can be ambiguous. In the context of this post, real time means the data update interval that is relevant to the particular domain we are working in. For example, in this use case an update interval of 2 minutes is sufficient and can be considered real time, whereas for stock quotes real time would be on the order of seconds.

Content prepared by Alejandro Alija, expert in Digital Transformation and Innovation.

The contents and views expressed in this publication are the sole responsibility of the author.

1. Introduction

Data visualization is a task linked to data analysis that aims to graphically represent the information underlying the data. Visualizations play a fundamental role in the communicative function of data, since they allow conclusions to be drawn in a visual and understandable way and also make it possible to detect patterns, trends and anomalous data or to project predictions, among other functions. This makes visualization applicable to any process in which data is involved. The possibilities are very numerous, from basic representations such as line, bar or pie charts to complex visualizations built on interactive dashboards.

Before we start to build an effective visualization, we must pre-process the data, paying attention to how it is obtained and validating its content, ensuring that it contains no errors and is in an adequate and consistent format for processing. Data pre-processing is essential for any data analysis task that aims to produce effective visualizations.

A series of practical data visualization exercises based on open data available on the datos.gob.es portal or other similar catalogues will be presented periodically. They will describe, in a simple way, the steps needed to obtain the data and to perform the transformations and analyses relevant to building interactive visualizations, from which we will summarize as much information as possible in the final conclusions. Each exercise will use simple, adequately documented code together with free and open tools. All the material generated will be available for reuse in the Data Lab repository on GitHub.

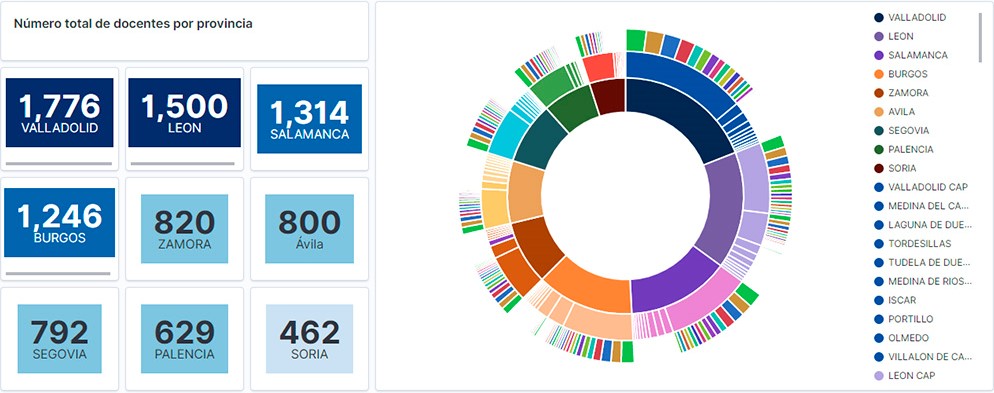

Visualization of the teaching staff of Castilla y León classified by Province, Locality and Teaching Specialty

2. Objectives

The main objective of this post is to learn how to process a dataset from its download to the creation of one or more interactive graphs. For this purpose, we have used datasets containing relevant information on teachers and students enrolled in public schools in Castilla y León during the 2019-2020 academic year. Based on these data, we analyse several indicators that relate teachers, specialties and students enrolled in the schools of each province or locality of the autonomous community.

3. Resources

3.1. Datasets

For this study, datasets on Education published by the Junta de Castilla y León have been selected, available on the open data portal datos.gob.es. Specifically:

- Dataset of the official staffing (plantillas jurídicas) of public schools in Castilla y León for all teaching bodies except primary school teachers (maestros), for the 2019-2020 academic year. This dataset is disaggregated by teacher specialty, school, town and province.

- Dataset of student enrolments in schools during the 2019-2020 academic year. This dataset is obtained through a query that supports different configuration parameters. Instructions for doing this are available at the dataset download point. The dataset is disaggregated by educational center, town and province.

3.2. Tools

To carry out this analysis (setting up the work environment, programming and writing), we have used the Python programming language (version 3.7) and JupyterLab (version 2.2). Both tools come integrated in Anaconda, one of the most popular platforms for installing, updating and managing data science software. All these tools are open and freely available.

JupyterLab is a web-based user interface that provides an interactive development environment where the user works with so-called Jupyter notebooks, in which text, source code and data can easily be integrated and shared.

To create the interactive visualization, the Kibana tool (version 7.10) has been used.

Kibana is an open source application that is part of the Elastic Stack product suite (Elasticsearch, Logstash, Beats and Kibana) and provides visualization and exploration capabilities for data indexed in the Elasticsearch analytics engine.

If you want to know more about these tools or others that can help you process and visualize data, see the recently updated report "Data Processing and Visualization Tools".

4. Data processing

As a first step of the process, it is necessary to perform an exploratory data analysis (EDA) to properly interpret the starting data, detect anomalies, missing data or errors that could affect the quality of subsequent processes and results. Pre-processing of data is essential to ensure that analyses or visualizations subsequently created from it are consistent and reliable.

Given the informative nature of this post, and to help non-specialized readers, the code is not intended to be the most efficient but the easiest to understand, so you will probably come up with many ways to optimize it and obtain similar results. We encourage you to do so! You can reproduce this analysis, since the source code is available in our GitHub account. The code is provided as a JupyterLab notebook that, once loaded into the development environment, you can easily run or modify.

4.1. Installation and loading of libraries

The first thing we must do is import the libraries needed to pre-process the data. Many libraries are available in Python, but one of the most popular and suitable for working with these datasets is Pandas, a library for manipulating and analyzing datasets.

```python
import pandas as pd
```

4.2. Loading datasets

First, we download the datasets from the open data catalog datos.gob.es and load them into our development environment as tables, so that we can explore them and carry out some basic cleaning and processing tasks. To load the data we use the read_csv() function, passing the download URL of the dataset, the delimiter (";" in this case) and the "encoding" parameter set to "latin-1", so that special characters in the text strings of the dataset, such as accented letters or "ñ", are interpreted correctly. The enrolments file, obtained through the query described in section 3.1 and saved locally as "matriculaciones.csv", uses "," as its delimiter instead.
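To see why the encoding parameter matters, the following sketch reads a tiny, made-up Latin-1 file from memory. Here `io.BytesIO` stands in for the download, and the column names and values are illustrative only, not those of the JCyL dataset.

```python
import io

import pandas as pd

# Toy semicolon-separated file encoded in Latin-1 (illustrative columns and values).
raw = "Provincia;Localidad;Plantilla\nLeón;La Bañeza;12\nSoria;Soria;30\n".encode("latin-1")

# delimiter=";" splits the columns; encoding="latin-1" decodes "ó" and "ñ" correctly.
ok = pd.read_csv(io.BytesIO(raw), delimiter=";", header=0, encoding="latin-1")
print(ok.loc[0, "Localidad"])

# Decoding the same bytes as UTF-8 fails, because Latin-1 "ó" and "ñ" are not valid UTF-8.
try:
    pd.read_csv(io.BytesIO(raw), delimiter=";", encoding="utf-8")
except UnicodeDecodeError as exc:
    print("UTF-8 decoding failed:", exc.reason)
```

If a file's encoding is unknown, trying to read it with `encoding="utf-8"` first and falling back to `"latin-1"` on a `UnicodeDecodeError` is a common, if rough, heuristic.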

```python
# Load the dataset of the official staffing of public schools in Castilla y León
# for all teaching bodies except primary school teachers (maestros)
url = "https://datosabiertos.jcyl.es/web/jcyl/risp/es/educacion/plantillas-centros-educativos/1284922684978.csv"
docentes = pd.read_csv(url, delimiter=";", header=0, encoding="latin-1")
docentes.head(3)

# Load the dataset of students enrolled in public schools in Castilla y León
alumnos = pd.read_csv("matriculaciones.csv", delimiter=",", names=["Municipio", "Matriculaciones"], encoding="latin-1")
alumnos.head(3)
```

The "Municipio" column of the "alumnos" table contains both the municipality code and its name. We must split this column in two so that the municipality name can be used on its own, in particular to join this table with the teachers table.
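The key detail of the split is `n=1`: it splits only at the first space, so municipality names that themselves contain spaces stay whole. The sketch below tries it on a toy table whose codes and names are placeholders, not real INE codes.

```python
import pandas as pd

# Illustrative values only, in the "<code> <name>" form of the "Municipio" column.
alumnos_demo = pd.DataFrame({
    "Municipio": ["00001 La Bañeza", "00002 Soria"],
    "Matriculaciones": [1500, 4200],
})

# n=1: split only at the first space -> column 0 = code, column 1 = full name.
partes = alumnos_demo["Municipio"].str.split(" ", n=1, expand=True)
alumnos_demo["Codigo_Municipio"] = partes[0]
alumnos_demo["Nombre_Municipio"] = partes[1]
print(alumnos_demo[["Codigo_Municipio", "Nombre_Municipio"]])
```

Without `n=1`, "00001 La Bañeza" would be split into three pieces and the name "La Bañeza" would be broken across two columns.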

```python
# Split "Municipio" at the first space: code on the left, name (which may contain spaces) on the right
columnas_Municipios = alumnos["Municipio"].str.split(" ", n=1, expand=True)
alumnos["Codigo_Municipio"] = columnas_Municipios[0]
alumnos["Nombre_Municipio"] = columnas_Municipios[1]
alumnos.head(3)
```

4.3. Creating a new table

Once we have both tables with the variables of interest, we create a new table by joining them. The join keys are "Localidad" in the "docentes" table and "Nombre_Municipio" in the "alumnos" table.
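Because this join matches locality names as text, any spelling difference between the two sources (case, accents, articles) silently drops rows in the default inner join. The post does not report whether this happens with the real data; the sketch below, with made-up toy tables, shows one way to check it using a left join and pandas' `indicator=True` option.

```python
import pandas as pd

# Toy tables (illustrative values): note the mismatch "Ponferrada" vs "PONFERRADA".
docentes_demo = pd.DataFrame({
    "Localidad": ["Soria", "La Bañeza", "Ponferrada"],
    "Plantilla": [30, 12, 25],
})
alumnos_demo = pd.DataFrame({
    "Nombre_Municipio": ["Soria", "La Bañeza", "PONFERRADA"],
    "Matriculaciones": [4200, 1500, 6000],
})

# The default merge is an inner join: unmatched localities simply disappear.
inner = pd.merge(docentes_demo, alumnos_demo, left_on="Localidad", right_on="Nombre_Municipio")

# A left join with indicator=True adds a "_merge" column flagging rows without a match.
check = pd.merge(docentes_demo, alumnos_demo, left_on="Localidad",
                 right_on="Nombre_Municipio", how="left", indicator=True)
print(check.loc[check["_merge"] == "left_only", "Localidad"].tolist())  # ['Ponferrada']
```

Running the same check on the real tables would list the localities whose names need normalizing before the join.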

```python
# Inner join (the pd.merge default): only localities present in both tables are kept
docentes_alumnos = pd.merge(docentes, alumnos, left_on="Localidad", right_on="Nombre_Municipio")
docentes_alumnos.head(3)
```

4.4. Exploring the dataset

Once we have the table we are interested in, we should spend some time exploring the data and interpreting each variable. In these cases it is very useful to have the data dictionary that should accompany every downloaded dataset, describing all its details, but this time we do not have this essential tool. By observing the table, in addition to interpreting its variables (data types, units, ranges of values), we can detect possible errors such as incorrectly typed variables or missing values (NAs) that can reduce our analysis capacity.

```python
docentes_alumnos.info()
```

In the output of this code, we can see the main characteristics of the table:

- It contains a total of 4,512 records.

- It is composed of 13 variables: 5 numerical (integer type) and 8 categorical ("object" type).

- There are no missing values.
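The non-null counts printed by `info()` are one way to spot missing values; a more direct check counts them per column with `isna().sum()`. The sketch below uses a small illustrative table with one deliberately missing value in each column.

```python
import pandas as pd

# Illustrative table with one missing value per column
tabla_demo = pd.DataFrame({
    "Localidad": ["Soria", "La Bañeza", None],
    "Plantilla": [30, None, 25],
})

# Count missing values per column; all zeros would confirm "no missing values".
faltantes = tabla_demo.isna().sum()
print(faltantes)
```

Applied to the real table, `docentes_alumnos.isna().sum()` should return zero for every column, consistent with the output described above.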

Once we know the structure and content of the table, we must correct the errors found, such as variables that are not properly typed; for example, the variable holding the school code ("Codigo_centro").
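The reason for retyping code columns is that identifiers stored as integers are treated as quantities, so `describe()` would happily compute a mean of school codes. The sketch below shows this on an illustrative two-row table (the code values are made up).

```python
import pandas as pd

# Illustrative table: a code column read as integers, like the real code columns.
tabla_demo = pd.DataFrame({
    "Codigo_centro": [24000011, 42000021],
    "Plantilla": [30, 12],
})

# Before: the code is summarised as if it were a measurement.
antes = tabla_demo.describe().columns.tolist()

# After casting to "object", describe() (numeric columns only by default) skips it.
tabla_demo["Codigo_centro"] = tabla_demo["Codigo_centro"].astype("object")
despues = tabla_demo.describe().columns.tolist()

print(antes, despues)
```

If some codes had leading zeros, reading them as integers would also lose those zeros; passing `dtype={"Codigo_centro": str}` to `read_csv` avoids that at load time.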

```python
# Codes are identifiers, not quantities: store them as "object" so they are not treated as numbers
docentes_alumnos["Codigo_centro"] = docentes_alumnos["Codigo_centro"].astype("object")
docentes_alumnos["Codigo_cuerpo"] = docentes_alumnos["Codigo_cuerpo"].astype("object")
docentes_alumnos["Codigo_especialidad"] = docentes_alumnos["Codigo_especialidad"].astype("object")
```

Once the table is free of errors, we obtain a description of the numerical variables, "Plantilla" and "Matriculaciones", which reveals important details. In the output of the code below we can see the mean, the standard deviation, the minimum and the maximum, among other descriptive statistics.

```python
docentes_alumnos.describe()
```

4.5. Saving the dataset

Once we have the table free of errors and with the variables that we are interested in graphing, we will save it in a folder of our choice to use it later in other analysis or visualization tools. We will save it in CSV format encoded as UTF-8 (Unicode Transformation Format) so that special characters are correctly identified by any tool we might use later.
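A quick round trip confirms that a file written this way keeps characters such as "ñ" intact. The sketch writes an illustrative table to a temporary folder (the file name is hypothetical), inspects the raw bytes and reads it back.

```python
import os
import tempfile

import pandas as pd

tabla_demo = pd.DataFrame({"Localidad": ["La Bañeza", "León"], "Plantilla": [12, 40]})

with tempfile.TemporaryDirectory() as carpeta:
    ruta = os.path.join(carpeta, "docentes_alumnos_demo.csv")
    # index=False avoids writing the row index as an extra unnamed column
    tabla_demo.to_csv(ruta, index=False, encoding="utf-8")
    with open(ruta, "rb") as f:
        contenido = f.read()  # raw bytes: "ñ" is stored as the UTF-8 pair 0xC3 0xB1
    releida = pd.read_csv(ruta, encoding="utf-8")

print(contenido.decode("utf-8"))
```

Some spreadsheet programs, notably Excel, only detect UTF-8 reliably when the file starts with a byte order mark; `encoding="utf-8-sig"` writes one if the CSV is also meant to be opened there.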

```python
df = pd.DataFrame(docentes_alumnos)
filename = "docentes_alumnos.csv"
df.to_csv(filename, index=False, encoding="utf-8")
```

5. Creating the visualization of teachers in public schools in Castilla y León with Kibana

For this visualization, we have used Kibana in our local environment. To do so, Elasticsearch and Kibana must be installed and running. Elastic provides all the information about downloading and installing them in this tutorial.

Below are two video tutorials showing the process of creating the visualization and the interaction with the resulting dashboard.

In this first video, you can see the creation of the dashboard by generating different graphic representations, following these steps:

- We load the previously processed table into Elasticsearch and generate an index that lets us interact with the data from Kibana. This index enables searching and managing the data practically in real time.

- Generation of the following graphical representations:

- Pie chart showing the teaching staff by province, locality and specialty.

- Metrics of the number of teachers by province.

- Bar chart showing the number of enrolments by province.

- Filter by province, locality and teaching specialty.

- Construction of the dashboard.
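The post does not detail how the table is loaded into Elasticsearch. One alternative to a UI upload is Elasticsearch's `_bulk` API, which expects newline-delimited JSON: an action line followed by the document, one pair per row, ending with a newline. The sketch below only builds that request body from a DataFrame; the index name is hypothetical, and the body would then be sent with an HTTP POST to `<host>/_bulk` using the header `Content-Type: application/x-ndjson`.

```python
import json

import pandas as pd


def to_bulk_ndjson(df: pd.DataFrame, index: str) -> str:
    """Build a body for Elasticsearch's _bulk endpoint: an action line followed by
    the document itself, one pair per row, newline-delimited."""
    action = json.dumps({"index": {"_index": index}})
    docs = df.to_json(orient="records", lines=True, force_ascii=False).splitlines()
    lines = []
    for doc in docs:
        if doc.strip():  # guard against a trailing empty line
            lines.append(action)
            lines.append(doc)
    return "\n".join(lines) + "\n"  # the bulk body must end with a newline


tabla_demo = pd.DataFrame({"Provincia": ["León", "Soria"], "Plantilla": [40, 30]})
body = to_bulk_ndjson(tabla_demo, index="docentes_alumnos")  # hypothetical index name
print(body)
```

For large tables the body would normally be sent in batches rather than in a single request.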

In this second video, you will be able to observe the interaction with the dashboard generated previously.

6. Conclusions

Observing the visualization of the number of teachers in public schools in Castilla y León in the 2019-2020 academic year, the following conclusions can be drawn, among others:

- Valladolid is the province with both the largest number of teachers and the largest number of students enrolled, while Soria has the lowest number of both.

- As expected, the localities with the highest number of teachers are the provincial capitals.

- In all provinces, the specialty with the highest number of teachers is English, followed by Spanish Language and Literature and Mathematics.

- It is striking that the province of Zamora, although it has a low number of enrolled students, is in fifth position in the number of teachers.

This simple visualization has helped us to synthesize a large amount of information and to obtain a series of conclusions at a glance, and if necessary, make decisions based on the results obtained. We hope you have found this new post useful and we will return to show you new reuses of open data. See you soon!

In recent months there have been a number of important announcements related to the construction and exploitation of infrastructures and datasets related to health research. These initiatives aim to make data a vital part of a health system that is currently not extracting the maximum possible value from it.

The health data policy landscape, globally and in Europe in particular, is highly fragmented, and regulatory inconsistency hampers innovation. While Europe at least has adequate safeguards to protect sensitive data, trust in the data ecosystem as a whole is still generally weak. As a result, willingness to share data at all levels is often low. But this situation seems to be changing rapidly, as the many initiatives born or developed in 2021 suggest.

UK aims to position itself as a world leader

For example, mindful of the limitations we have described in the data ecosystem, the UK government published in June 2021 the draft of its new strategy "Data saves lives: reshaping health and social care with data", which aims to build on the work done during the pandemic to improve health and care services.

Although it is still in draft form - and has been criticised by privacy experts and patients' rights groups for not clarifying who will have access to the data - it makes no secret of the ambition to make the UK a world leader in health innovation through the use of data. The strategy aims to put people in control of their own data, while supporting the NHS in creating a modernised system fit for the 21st century that is able to open up and harness its vast data assets.

Another interesting initiative is the work being undertaken in 2021 by the Open Data Institute (ODI) as part of a wider research project commissioned by the pharmaceutical company Roche. ODI is mapping the use of health data standards across the European region in order to design a "Data governance playbook for data-driven healthcare projects", which can be used and shared globally.

July 2021 also saw the commissioning of what has become the UK's most powerful supercomputer (41st in the world rankings), Cambridge-1. It will be dedicated to the health sciences and to helping solve problems related to medical care. With a $100 million investment from the US company Nvidia, its creators hope it will make disease prevention, diagnosis and treatment better, faster and cheaper.

It is known, for example, that the pharmaceutical company GSK is already working with Nvidia to put its massive datasets to work to accelerate research into new drugs and vaccines. GSK will use Cambridge-1 to help discover new therapies faster by combining genetic and clinical data.

The use of data and artificial intelligence in healthcare is enjoying a period of huge growth in the UK that will undoubtedly be accelerated by these new initiatives. Some of these massive patient datasets such as the UK Biobank are not new, having been founded in 2012, but they are taking on renewed prominence in this context. The UK Biobank, available to researchers worldwide, includes anonymised medical and lifestyle records of half a million middle-aged UK patients and is regularly augmented with additional data.

The United States relies on private sector innovations

The United States has strong private-sector innovation in open data, which is also evident when it comes to health data. For example, the well-known Data for Good project, launched by Facebook in 2017, has placed a strong emphasis on pandemic response. Through maps of population movement, for instance, it has contributed to a better understanding of the coronavirus crisis, always with an approach aimed at preserving people's privacy. In projects of this type, where the input data is highly sensitive, properly implementing privacy-enhancing technologies is of great importance.

The European Union announces important steps

The European Union, for its part, is taking important steps, such as progress on the European Health Data Space, one of the Commission's priorities for the 2019-2025 period. As a reminder, the common European health data space will promote better exchange of and access to different types of health data (electronic health records, genomic data, patient registry data, etc.), not only to support healthcare delivery but also for health research and health policy making.

The public consultation aimed at ensuring that all possible views are considered in the design of a new legal framework for a European health data space - and at ensuring transparency and accountability - closed just a few weeks ago, and the results, as well as the proposed EU legislation, are expected to be published in the last quarter of the year. This new legislation is expected to give a decisive impetus within the European Union to the publication of new health datasets.

For the time being, the proposal for a Regulation on European data governance (Data Governance Act) is available, which addresses such relevant and sensitive issues as the transfer of data from the public sector for re-use, the exchange of data between companies for remuneration or the transfer of data for altruistic purposes. Clarifying the regulatory framework for all these issues will undoubtedly contribute to fostering innovation in the field of health.

Spain also joins in boosting the use of health data

In Spain, although with some delay, important initiatives are also beginning to move, the fruits of which we will see in the coming years. Among the investments that the Spanish government will make thanks to the Recovery, Transformation and Resilience Plan, for example, the creation of a healthcare data lake has recently been announced with the aim of facilitating the development and implementation of massive data processing projects.

In some cases, regional health services, such as Andalusia, are already working on the implementation of innovative advanced analytics techniques for real use cases. Thus, in the project to implement a corporate advanced analytics solution, the Andalusian Health System plans to deploy, among others, recommendation engines to optimise waiting lists, computer vision techniques to assist in breast cancer screening or segmentation techniques for chronic patients.

One of the positive effects of the global pandemic caused by Covid-19 is that awareness of the need for an open and trusted data ecosystem that benefits the health of all has been amplified. The convergence of medical knowledge, technology and data science has the potential to revolutionise patient care, and the pandemic may provide a definitive boost to open health data. For the moment, as reported in the study "Analysis of the current state of health data openness at regional level through open data portals" and despite the remaining challenges, the progress made in health data openness, especially in the most advanced regions, is promising.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation and Digitalization.

The contents and views expressed in this publication are the sole responsibility of the author.

1. Introduction

Data visualization is a task linked to data analysis that aims to graphically represent the underlying information. Visualizations play a fundamental role in data communication, since they allow conclusions to be drawn in a visual and understandable way and also make it possible to detect patterns, trends and anomalous data or to project predictions, among many other functions. This makes visualization applicable to any process that involves data. The visualization possibilities are very broad, from basic representations such as line, bar or pie charts to complex visualizations configured on interactive dashboards.

Before starting to build an effective visualization, the data must be prepared, paying attention to how it is collected and validating its content, ensuring that it is free of errors and in an adequate and consistent format for processing. This preparation is essential for any data analysis task and for producing effective visualizations.

We will periodically present a series of practical exercises on open data visualization based on data available on the datos.gob.es portal and other similar catalogues. In them, we describe in a simple way the steps needed to obtain the data and to perform the transformations and analyses relevant to creating interactive visualizations, from which we can extract as much information as possible, summarised in the final conclusions. Each exercise uses simple, conveniently documented code and relies on free tools. The material created will be available for reuse in Data Lab on GitHub.

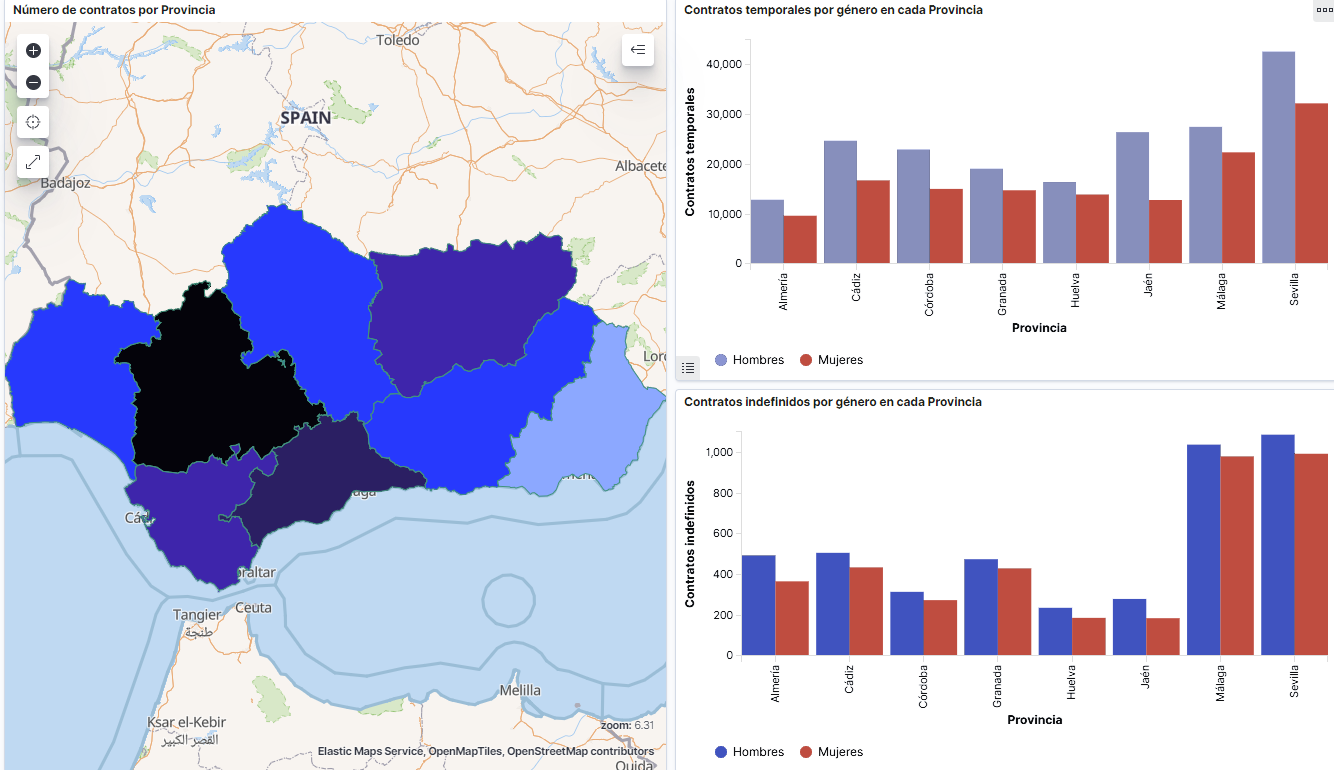

Screenshot of the video, available at the end of this article, showing the interaction with the dashboard that characterizes employment demand and registered hiring in Spain

2. Objectives

The main objective of this post is to create an interactive visualization using open data. For this purpose, we have used datasets containing relevant information on the evolution of employment demand in Spain over recent years. Based on these data, we have drawn a profile of employment demand in our country, specifically investigating how the gender gap affects this group and the impact of variables such as age, unemployment benefits or region.

3. Resources

3.1. Datasets

For this analysis we have selected datasets published by the Public State Employment Service (SEPE), coordinated by the Ministry of Labour and Social Economy, which provide time series with different breakdowns that facilitate the analysis of job seekers' characteristics. These data are available on datos.gob.es, with the following characteristics:

- Demandantes de empleo por municipio (job seekers by municipality): contains the number of job seekers broken down by municipality, age and gender between 2006 and 2020.

- Gasto de prestaciones por desempleo por Provincia (unemployment benefit expenditure by province): time series for 2010-2020 of unemployment benefit expenditure, broken down by province and type of benefit.

- Contratos registrados por el Servicio Público de Empleo Estatal (SEPE) por municipio (contracts registered by SEPE by municipality): these datasets contain the number of contracts registered for both job seekers and non-job seekers, broken down by municipality, gender and contract type, between 2006 and 2020.

3.2. Tools

R (version 4.0.3) and RStudio with the RMarkdown add-on have been used to carry out this analysis (working environment, programming and drafting).

RStudio is an open source integrated development environment for the R programming language, dedicated to statistical analysis and the creation of graphs.

RMarkdown allows creation of reports integrating text, code and dynamic results into a single document.

To create the interactive graphs, we have used the Kibana tool.

Kibana is an open source application that forms part of the Elastic Stack (Elasticsearch, Beats, Logstash and Kibana) and provides visualization and exploration capabilities for the data indexed in the Elasticsearch analytics engine. The main advantages of this tool are:

- It presents visual information through interactive, customisable dashboards using time intervals, range-faceted filters and geospatial coverage, among others.

- It includes a catalogue of development tools (Dev Tools) to interact with the data stored in Elasticsearch.

- It has a free version ready to use on your own computer and an enterprise version deployed in the Elastic cloud and other cloud infrastructures, such as Amazon Web Services (AWS).

On the Elastic website you can find user manuals for downloading and installing the tool, as well as for creating graphs, dashboards, etc. Elastic also offers short videos on its YouTube channel and organizes webinars explaining different aspects of the Elastic Stack.

If you want to learn more about these and other tools which may help you with data processing, see the report “Data processing and visualization tools” that has been recently updated.

4. Data processing

To create a visualization, the data must be prepared properly by performing a series of tasks that include pre-processing and exploratory data analysis (EDA), in order to better understand the data we are dealing with. The objective is to identify the characteristics of the data and detect possible anomalies or errors that could affect the quality of the results. Data pre-processing is essential to ensure the consistency and effectiveness of the analyses or visualizations created afterwards.

To support readers who are not specialised in programming, the R code included below is designed to be easy to understand rather than efficient. Readers more advanced in this language may therefore prefer to code some of the functionality differently. Readers can reproduce this analysis if they wish, as the source code is available on the datos.gob.es GitHub account. The code is provided as an RMarkdown document which, once loaded into the development environment, can easily be run or modified.

4.1. Installation and import of libraries

The R base package, which is always available when the RStudio console is open, includes a wide set of functions to import data from external sources, carry out statistical analyses and obtain graphical representations. However, many tasks require additional packages, whose functions and objects must be loaded into the working environment. Some of them are already available in the system, while others must be downloaded and installed.

```r
# Package installation
# The dplyr package provides a collection of functions for easy data manipulation
if (!requireNamespace("dplyr", quietly = TRUE)) {install.packages("dplyr")}
# The lubridate package handles date variables
if (!requireNamespace("lubridate", quietly = TRUE)) {install.packages("lubridate")}

# Load the packages into the development environment
library(dplyr)
library(lubridate)
```

4.2. Data import and cleansing

a. Import of datasets

The data used for the visualization is split into yearly .CSV and .XLS files. All the files of interest must be imported into the development environment. To keep this post easy to follow, the following code shows how to load a single .CSV file into a data table.

To speed up loading in the development environment, download the datasets required for this visualization to the working directory. The datasets are available on the datos.gob.es GitHub account.

```r
# Load the 2020 dataset of job seekers by municipality
Demandantes_empleo_2020 <-
  read.csv("Conjuntos de datos/Demandantes de empleo por Municipio/Dtes_empleo_por_municipios_2020_csv.csv",
           sep = ";", skip = 1, header = TRUE)
```

Once all the datasets are loaded as data tables in the development environment, they need to be combined into a single dataset covering all the years of the time series for each of the job-seeker characteristics to be analysed: number of job seekers, unemployment benefit expenditure and new contracts registered by SEPE.
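For readers following the Python exercise earlier in this document, the year-by-year stacking that `rbind()` performs below can be sketched in pandas with `pd.concat`. The yearly tables here are small illustrative stand-ins with made-up values, not the SEPE data.

```python
import pandas as pd

# Illustrative yearly tables standing in for Demandantes_empleo_2006 ... _2020
anuales_demo = {
    2019: pd.DataFrame({"Provincia": ["Provincia A"], "total.Dtes.Empleo": [5000]}),
    2020: pd.DataFrame({"Provincia": ["Provincia A"], "total.Dtes.Empleo": [5600]}),
}

# Equivalent of rbind(): stack the yearly tables in year order with a fresh 0..n-1 index
datos_desempleo_demo = pd.concat(
    [anuales_demo[anio] for anio in sorted(anuales_demo)], ignore_index=True
)
print(datos_desempleo_demo)
```

With the real files on disk, the list could instead be built as `[pd.read_csv(p, sep=";", skiprows=1) for p in sorted(glob.glob(pattern))]`, mirroring `read.csv(..., sep = ";", skip = 1)`; the glob `pattern` depends on where the files were saved.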

```r
# Job seekers dataset
Datos_desempleo <- rbind(Demandantes_empleo_2006, Demandantes_empleo_2007, Demandantes_empleo_2008, Demandantes_empleo_2009,
                         Demandantes_empleo_2010, Demandantes_empleo_2011, Demandantes_empleo_2012, Demandantes_empleo_2013,
                         Demandantes_empleo_2014, Demandantes_empleo_2015, Demandantes_empleo_2016, Demandantes_empleo_2017,
                         Demandantes_empleo_2018, Demandantes_empleo_2019, Demandantes_empleo_2020)
# Unemployment benefit expenditure dataset
gasto_desempleo <- rbind(gasto_2010, gasto_2011, gasto_2012, gasto_2013, gasto_2014, gasto_2015,
                         gasto_2016, gasto_2017, gasto_2018, gasto_2019, gasto_2020)
# Dataset of new contracts for job seekers
Contratos <- rbind(Contratos_2006, Contratos_2007, Contratos_2008, Contratos_2009, Contratos_2010, Contratos_2011, Contratos_2012, Contratos_2013,
                   Contratos_2014, Contratos_2015, Contratos_2016, Contratos_2017, Contratos_2018, Contratos_2019, Contratos_2020)
```

b. Selection of variables

Once the three time-series tables are obtained (number of job seekers, unemployment benefit expenditure and new registered contracts), the variables of interest will be extracted and included in a new table.

First, the tables of job seekers ("Datos_desempleo") and new registered contracts ("Contratos") must be aggregated by province to facilitate the visualization, so that they match the provincial breakdown of the unemployment benefit expenditure table ("gasto_desempleo"). In this step, only the variables of interest are selected from the three datasets.
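For readers following the earlier Python exercise, the provincial aggregation (`group_by()` plus `summarise(sum(...))`) and the merge on shared columns that follows it can be sketched in pandas. The tables, values and reduced column set below are illustrative only.

```python
from functools import reduce

import pandas as pd

# Illustrative municipal rows (hypothetical values, reduced set of columns)
datos_demo = pd.DataFrame({
    "Código.mes": [202001, 202001, 202001],
    "Comunidad.Autónoma": ["CA 1", "CA 1", "CA 1"],
    "Provincia": ["Provincia A", "Provincia A", "Provincia B"],
    "total.Dtes.Empleo": [100, 50, 70],
})

# Equivalent of group_by() %>% summarise(sum(...)): one row per month and province
por_provincia = (
    datos_demo
    .groupby(["Código.mes", "Comunidad.Autónoma", "Provincia"], as_index=False)
    .agg(total_Dtes_Empleo=("total.Dtes.Empleo", "sum"))
)

gasto_demo = pd.DataFrame({
    "Código.mes": [202001, 202001],
    "Comunidad.Autónoma": ["CA 1", "CA 1"],
    "Provincia": ["Provincia A", "Provincia B"],
    "Gasto.Total.Prestación": [1000.0, 800.0],
})

# Equivalent of Reduce(merge, list(...)): DataFrame.merge without "on" joins on all shared columns
tabla_final = reduce(lambda izq, der: izq.merge(der), [por_provincia, gasto_demo])
print(tabla_final)
```

Like R's `merge()`, pandas' `merge()` performs an inner join by default, so provinces missing from any of the tables would drop out of the result.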

```r
# Group the "Datos_desempleo" dataset: sum the numeric variables of interest by month, autonomous community and province
Dtes_empleo_provincia <- Datos_desempleo %>%
  group_by(Código.mes, Comunidad.Autónoma, Provincia) %>%
  summarise(total.Dtes.Empleo = sum(total.Dtes.Empleo),
            Dtes.hombre.25 = sum(Dtes.Empleo.hombre.edad...25),
            Dtes.hombre.25.45 = sum(Dtes.Empleo.hombre.edad.25..45),
            Dtes.hombre.45 = sum(Dtes.Empleo.hombre.edad...45),
            Dtes.mujer.25 = sum(Dtes.Empleo.mujer.edad...25),
            Dtes.mujer.25.45 = sum(Dtes.Empleo.mujer.edad.25..45),
            Dtes.mujer.45 = sum(Dtes.Empleo.mujer.edad...45))

# Group the "Contratos" dataset: sum the numeric variables of interest by the same categorical variables
Contratos_provincia <- Contratos %>%
  group_by(Código.mes, Comunidad.Autónoma, Provincia) %>%
  summarise(Total.Contratos = sum(Total.Contratos),
            Contratos.iniciales.indefinidos.hombres = sum(Contratos.iniciales.indefinidos.hombres),
            Contratos.iniciales.temporales.hombres = sum(Contratos.iniciales.temporales.hombres),
            Contratos.iniciales.indefinidos.mujeres = sum(Contratos.iniciales.indefinidos.mujeres),
            Contratos.iniciales.temporales.mujeres = sum(Contratos.iniciales.temporales.mujeres))

# Select the variables of interest from the "gasto_desempleo" dataset
gasto_desempleo_nuevo <- gasto_desempleo %>%
  select(Código.mes, Comunidad.Autónoma, Provincia, Gasto.Total.Prestación, Gasto.Prestación.Contributiva)
```

Second, the three tables are merged into a single one that we will work with from this point onwards.

Caract_Dtes_empleo <- Reduce(merge, list(Dtes_empleo_provincia, gasto_desempleo_nuevo, Contratos_provincia))
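The aggregation and merge can be tried out on a tiny, made-up example before running them on the real files. The sketch below uses base R's aggregate() as a stand-in for dplyr's group_by() + summarise(), with hypothetical ASCII column names (mes, provincia, ...) instead of the real ones and invented values. It shows that merge() joins on the columns the tables share and, by default, keeps only rows present in every table.

```r
# Hypothetical toy tables; values are invented and column names simplified
seekers_df <- data.frame(
  mes           = c(202001, 202001, 202001, 202001),
  provincia     = c("Madrid", "Madrid", "Soria", "Soria"),
  total_seekers = c(100, 50, 10, 5)
)
contracts_df <- data.frame(
  mes             = c(202001, 202001, 202001),
  provincia       = c("Madrid", "Soria", "Soria"),
  total_contracts = c(30, 2, 1)
)
expenditure_df <- data.frame(
  mes         = c(202001, 202001),
  provincia   = c("Madrid", "Soria"),
  gasto_total = c(900, 40)
)

# Sum by month and province (base R counterpart of group_by() + summarise())
seekers_prov   <- aggregate(total_seekers ~ mes + provincia, data = seekers_df, FUN = sum)
contracts_prov <- aggregate(total_contracts ~ mes + provincia, data = contracts_df, FUN = sum)

# merge() joins on all shared column names (mes, provincia) and keeps only
# matching rows; Reduce() applies it pairwise: merge(merge(a, b), c)
merged <- Reduce(merge, list(seekers_prov, expenditure_df, contracts_prov))
print(merged)
```

Each province ends up as one row per month carrying job seekers, expenditure and contracts side by side, which is the shape the rest of the analysis relies on.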

c. Transformation of variables

Once the table with the variables of interest has been created, some of its variables should be converted to types better suited to later aggregations, analysis and visualization.
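The two conversions most likely to go wrong can be checked in isolation. The following sketch, with invented values, parses YYYYMM month codes in base R (an alternative to lubridate's parse_date_time() with orders = "ym", not the article's exact call). It also shows why a numeric column that was read as a factor should go through as.character() before as.numeric().

```r
# Invented month codes in the YYYYMM form used by the source data
codes <- c(200601L, 200602L, 202012L)

# Append day "01" and parse with an explicit format
fechas <- as.Date(paste0(codes, "01"), format = "%Y%m%d")
print(fechas)

# Factor pitfall: as.numeric() on a factor returns the internal level codes
gasto_factor <- factor(c("1500.5", "200", "1500.5"))
print(as.numeric(gasto_factor))                # level codes: 1 2 1
print(as.numeric(as.character(gasto_factor)))  # actual values: 1500.5 200.0 1500.5
```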

```r
library(lubridate)

# Convert the month code (YYYYMM, e.g. 200601) into a date
Caract_Dtes_empleo$Código.mes <- parse_date_time(as.character(Caract_Dtes_empleo$Código.mes),
                                                 orders = "ym")

# Convert the expenditure variables to numeric; as.character() first avoids
# getting factor level codes if these columns were read as factors
Caract_Dtes_empleo$Gasto.Total.Prestación <- as.numeric(as.character(Caract_Dtes_empleo$Gasto.Total.Prestación))
Caract_Dtes_empleo$Gasto.Prestación.Contributiva <- as.numeric(as.character(Caract_Dtes_empleo$Gasto.Prestación.Contributiva))

# Convert the categorical variables to factors
Caract_Dtes_empleo$Provincia <- as.factor(Caract_Dtes_empleo$Provincia)
Caract_Dtes_empleo$Comunidad.Autónoma <- as.factor(Caract_Dtes_empleo$Comunidad.Autónoma)
```

d. Exploratory analysis

Let's see what variables and structure the new dataset has.

```r
# Inspect the structure and a statistical summary of the merged dataset
str(Caract_Dtes_empleo)
summary(Caract_Dtes_empleo)
```

The output of this code is omitted for readability. The main characteristics of the dataset are as follows:

- The time range covers January 2006 to December 2020.

- The dataset has 17 columns (variables).

- It contains two categorical variables (“Provincia” and “Comunidad.Autónoma”), one date variable (“Código.mes”) and 14 numeric variables.
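A quick way to confirm this split of variable types is to count columns by type, sketched here on a hypothetical two-row table with simplified ASCII column names. In the article's table the date column would be a POSIXct date-time, because parse_date_time() returns date-times, so the check accepts both Date and POSIXct.

```r
# Hypothetical two-row version of the merged table (simplified ASCII names)
df <- data.frame(
  mes           = as.Date(c("2020-01-01", "2020-02-01")),
  comunidad     = factor(c("Aragon", "Aragon")),
  provincia     = factor(c("Teruel", "Teruel")),
  total_seekers = c(1200L, 1150L),
  gasto_total   = c(950.2, 910.7)
)

# Count columns by type; is.numeric() is FALSE for factors and dates
n_categorical <- sum(vapply(df, is.factor, logical(1)))
n_date        <- sum(vapply(df, function(x) inherits(x, c("Date", "POSIXct")), logical(1)))
n_numeric     <- sum(vapply(df, is.numeric, logical(1)))

cat("categorical:", n_categorical, " date:", n_date, " numeric:", n_numeric, "\n")
```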

e. Detection and processing of missing data

Next, we check whether the dataset contains missing values (NAs). Missing values must be treated or removed; otherwise the numeric variables cannot be processed properly, since functions such as sum() or mean() return NA when any input is NA.
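Because na.omit() drops every row that has at least one NA, it can be worth seeing first which columns the missing values come from and how many rows would be lost. A minimal sketch on an invented table:

```r
# Invented table with two missing values in different rows
df <- data.frame(
  provincia     = c("Soria", "Avila", "Teruel", "Cuenca"),
  total_seekers = c(3000, NA, 4100, 5200),
  gasto_total   = c(2100.5, 2500.0, NA, 3900.1)
)

# Number of NAs per column
print(colSums(is.na(df)))

# Rows that na.omit() would drop (any row with at least one NA)
print(df[!complete.cases(df), ])

clean <- na.omit(df)
cat("rows before:", nrow(df), " rows after:", nrow(clean), "\n")
```

Here two NAs in different columns cost half of the rows, which is the kind of trade-off worth knowing about before choosing removal over imputation.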

```r
# Check whether there are any missing values
any(is.na(Caract_Dtes_empleo))

# The result is TRUE, so we remove the rows with missing values, since we do
# not know why those values are missing
Caract_Dtes_empleo <- na.omit(Caract_Dtes_empleo)

# Confirm that no missing values remain (should now return FALSE)
any(is.na(Caract_Dtes_empleo))
```

4.3. Creation of new variables

To build the visualization, we create a new variable from two variables already in the table. This is a very common operation in data analysis, since it is often more useful to work with calculated values (e.g., the sum or average of several variables) than with the source data. In this case, we calculate the average unemployment expenditure per job seeker, using the total benefit expenditure (“Gasto.Total.Prestación”) and the total number of job seekers (“total.Dtes.Empleo”).
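As a worked example with invented figures, the formula can be applied to two provinces. The code mirrors the article's calculation (1000 * expenditure / job seekers). We assume, without the source confirming it, that the factor of 1000 converts expenditure recorded in thousands of euros into euros, so the result would read as euros per job seeker.

```r
# Invented figures for two provinces in a single month
gasto_total   <- c(Madrid = 120000, Soria = 2500)   # benefit expenditure (assumed thousands of euros)
total_seekers <- c(Madrid = 400000, Soria = 3000)   # job seekers

# Same formula as the article: 1000 * expenditure / job seekers
gasto_desempleado <- 1000 * (gasto_total / total_seekers)
print(round(gasto_desempleado, 2))   # Madrid 300.00, Soria 833.33

# A province-month with 0 job seekers would give Inf (or NaN if expenditure is also 0)
print(1000 * (c(10, 0) / c(0, 0)))   # Inf NaN
```

Removing NAs beforehand does not protect against zero denominators, so it may be worth checking for Inf or NaN in the new column before loading it into Kibana.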

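```r
# Average unemployment expenditure per job seeker: total benefit expenditure
# divided by the total number of job seekers, multiplied by 1000
Caract_Dtes_empleo$gasto_desempleado <-
  1000 * (Caract_Dtes_empleo$Gasto.Total.Prestación /
          Caract_Dtes_empleo$total.Dtes.Empleo)
```

4.4. Save the dataset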

Once we have the table with the variables of interest for analysis and visualization, we save it as a CSV file so that it can later be used for further statistical analysis or loaded into other data processing or visualization tools. It is important to use UTF-8 encoding (Unicode Transformation Format) so that special characters, such as the accented letters in province names, are read correctly by other tools.
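To check that accented characters survive the round trip, the sketch below writes a small invented table with province names such as Ávila and A Coruña to a temporary file and reads it back with the same encoding. Passing row.names = FALSE is our choice here, so that write.csv() does not add an unnamed index column.

```r
# Invented table; accented names are written as \u escapes so the script stays ASCII
df <- data.frame(
  Provincia = c("\u00c1vila", "A Coru\u00f1a"),   # "Ávila", "A Coruña"
  total     = c(10L, 20L),
  stringsAsFactors = FALSE
)

# Write to a temporary CSV file as UTF-8, without row names
ruta <- tempfile(fileext = ".csv")
write.csv(df, file = ruta, fileEncoding = "UTF-8", row.names = FALSE)

# Read it back declaring the same encoding
leido <- read.csv(ruta, fileEncoding = "UTF-8", stringsAsFactors = FALSE)
print(leido)
```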

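```r
# Save the final table as a UTF-8 encoded CSV file
# (row.names = FALSE avoids writing an extra unnamed index column)
write.csv(Caract_Dtes_empleo,
          file = "Caract_Dtes_empleo_UTF8.csv",
          fileEncoding = "UTF-8",
          row.names = FALSE)
```

5. Creation of a visualization on the characteristics of employment demand in Spain using Kibana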

This interactive visualization was built with Kibana running in a local environment. To download and install the software, we followed the tutorial published by Elastic, the company that develops it.

The tutorial video below walks through the whole process of creating the visualization, including building a dashboard with several interactive indicators based on different types of charts. The steps to build the dashboard are as follows:

- Load the data into Elasticsearch and create an index so that the data can be explored from Kibana. The index makes it possible to search and manage the data in the loaded files in near real time.

- Generate the following charts:

  - Line chart showing the time series of job seekers in Spain between 2006 and 2020.

  - Pie chart of job seekers broken down by province and Autonomous Community.

  - Thematic map showing the number of new contracts registered in each province. To create this map, you need to download a dataset with the georeferenced provinces, published on the Open Data Soft open data portal.

- Build a dashboard.

The following tutorial video shows how to interact with the visualization we have just created:

6. Conclusions

Looking at the visualization of the data related to the profile of job seekers in Spain during the years 2010-2020, the following conclusions may be drawn, among others:

- There are two significant increases in the number of job seekers. The first, around 2010, coincides with the economic crisis; the second, much more pronounced, in 2020, coincides with the pandemic.

- A gender gap may be observed in the group of job seekers: the number of female job seekers is higher throughout the time series, mainly in the age groups above 25.

- At the regional level, Andalusia, followed by Catalonia and Valencia, has the highest number of job seekers among the Autonomous Communities. However, while Andalusia is among the Autonomous Communities with the lowest unemployment expenditure, Catalonia has the highest.

- Temporary contracts predominate. Madrid and Barcelona are the provinces that generate the most contracts, which matches their larger populations, while Soria, Ávila, Teruel and Cuenca register the fewest, which matches the most depopulated areas of Spain.

This visualization has helped us synthesise a large amount of information and make sense of it, allowing us to draw conclusions and, if necessary, make decisions based on the results. We hope you liked this new post; we will be back to present new reuses of open data. See you soon!

In the last year, we have seen how decisions on health matters have marked the political, social and economic agenda of our country, due to the global pandemic situation resulting from COVID-19. Decisions taken on the basis of public data on cumulative incidence, hospital bed occupancy or vaccination rates have marked our daily lives.

This fact highlights the importance of open health data for the management and decision-making of our governments, but it is also fundamental as a basis for solutions that help both patients and doctors.

The types of data used in the field of health and wellbeing are numerous: results of medical studies and research, anonymised patient records, data on patients' habits (such as how much exercise we do or how much sleep we get) and data linked to health services and management. All these data are highly valuable and can be exploited by healthcare professionals, providers and citizens alike.

How have health services been using open data?

According to the study "The Open Data Impact Map", a project of the Open Data for Development Network (OD4D), health-related organisations use open data mainly to optimise their management and organisation of resources. Of the 124 organisations interviewed in 2018, only 19 said they use open data to develop health products and services, and only 13 for research. The same study indicates that the most widely used open data are those directly related to health, and that very few organisations combine them with datasets on other topics (mainly geospatial data or demographic and social indicators) to generate deeper, more detailed knowledge.

However, the opportunities in this field are vast, as shown below.

Click here to see the infographic in full size and in its accessible version

Examples of services based on open health data

The situation seems to be changing and there is increasing momentum for the implementation of applications, services or projects based on data in this field. Europe is committed to the creation of data spaces focused on the field of health, as part of its strategy to build a European cloud, while the Spanish government has included the promotion of Digital Health solutions in its Digital Spain 2025 strategy. Among the actions envisaged by our country is the streamlining of information systems to enable better data sharing and interoperability.

Applications that collect health services

When it comes to health apps, the most common are those that help citizens find local healthcare providers that meet their needs. Examples include 24-hour pharmacies in Tudela and la Ribera and the search engine for health centres in the Community of Madrid. These allow patients to locate centres and find useful information, such as opening hours. Some applications include additional services, such as Salud Responde, from the Junta de Andalucía, which allows users to request and modify medical appointments, improving the efficiency of the system.

But such services can also provide important information for more efficient resource management, especially when cross-referenced with other datasets. For example, Pharmacies, Health Centres and Health Areas of the Government of Cantabria, developed by Esri, includes information on the territorial organisation of health resources according to geographical, demographic, epidemiological, socio-economic, labour, cultural, climatological and transport factors. Its main objective is not only to facilitate citizens' access to this information, but also to ensure that "the provision of health services is carried out in the best conditions of accessibility, efficiency and quality".

The Health, environmental and socio-economic atlas of the Basque Country by small areas shows a series of maps with the aim of "monitoring geographical inequalities in health, socioeconomic and environmental indicators in the Basque Country, taking into account the gender perspective". This information is very useful for service managers in trying to promote greater equity in access to healthcare.

Disease prevention tools

There are also applications on the market aimed at disease prevention, such as ZaraHealth, a web application that displays real-time data on water quality, air quality and pollen levels in the city of Zaragoza. The user can set a series of thresholds for pollen and pollution levels, so that a warning is issued when they are reached. In this way, they can avoid going outdoors or exercising in areas that do not meet their needs. APCYL: Allergy to pollen CyL has the same goal.

Another important aspect of our health is our diet, a key factor in the prevention of various pathologies such as cardiovascular diseases or diabetes. Websites such as Mils, which offers detailed nutritional information on food, can help us to eat more healthily.

Services for the diagnosis and treatment of diseases

Open data can help assess health outcomes, develop more effective treatments and predict disease outbreaks.

In the field of mental health, for example, we find Mentalcheck, an app that enables psychological assessments and self-reporting via mobile devices. It aims to improve Ecological Momentary Assessment and Intervention (EMA and EMI). The application incorporates open data on medications and mental health services from the US Food and Drug Administration (FDA). It also allows the integration of psychological and physiological data to generate correlations.

Another example is Qmenta, a company focused on analysing brain data, using MRI and related clinical data. In recent months they have also incorporated open data related to COVID-19 in some of their work. Through medical image processing algorithms, they seek to accelerate the development of new therapies for neurological diseases.

Up-to-date information on diseases or system needs

Another area where open data can drive improvements is reporting on specific situations. This has become especially important during the global pandemic, when citizens demand constant, up-to-date information. Examples include the Ministry of Health's national dashboard and various regional initiatives, such as Curve in Aragon: Evolution of Coronavirus in Aragon, or Evolution of the coronavirus in Castilla y León. These are just a few examples; as the Ministry of Health reports on its website, there are numerous efforts in this area.

It is also important to make information on medicines transparent, both for doctors and patients, by facilitating comparisons. In this regard, the Nomenclature of Medicines shows more than 20,000 medicines marketed in Spain with Social Security coverage, offering information on price, presentation, links to the package leaflet, safety notes and active ingredients, among others.

Finally, it is also important to provide information on resource needs, for example, doctor vacancies or the state of blood reserves.

Data in general has driven important advances in improving health outcomes, from increased access to care to medical research and diagnosis. Open data is a key ingredient that can further enrich these solutions with new variables. It is therefore essential that more and more health and wellness data be opened, following guidelines and standards that ensure patient privacy and security. In this regard, the report "Open data and health: technological context, stakeholders and legal framework" describes which types of data can be opened and what the legal framework says about it.