There are only a few days left until the end of summer and, as with every change of season, it is time to review what the last three months have brought in the Spanish open data ecosystem.

In July we learned of the latest edition of the European Commission's DESI (Digital Economy and Society Index) report, which places Spain ahead of the EU average in digital matters. Our country ranks seventh, two places higher than in 2021. One of the areas where it performs best is open data, where it ranks third. These good results reflect the fact that an increasing number of organisations are committed to opening up the information they hold, and that more reusers are taking advantage of this data to create valuable products and services, as we will see below.

Advances in strategy and agreements to promote open data

Open data is gaining ground in political strategies at national, regional and local level.

In this regard, in July the Council of Ministers approved the draft Act on the Digital Efficiency of the Public Justice Service, an initiative that seeks to build a more accessible Justice Administration by making its systems data-oriented. Among other issues, this act introduces the concept of "open data" into the Administration of Justice.

Another example, this time at the regional level, comes from the Generalitat de Valencia, which launched a new Open Data Strategy at the beginning of the summer with the aim of offering quality public information, by design and by default.

We have also witnessed the signing of collaboration agreements to boost the open data ecosystem, for example:

- The Ajuntament de L'Hospitalet and the Universitat Politècnica de Catalunya have signed an agreement to offer training to undergraduate and master's degree students in Big Data and Artificial Intelligence, based on open data work.

- The University of Castilla-La Mancha has agreed with the regional government to launch the 'Open Government' chair in order to promote higher education and research in areas such as transparency, open data and access to public information.

- The National Centre for Geographic Information (CNIG) and Asedie have signed a new protocol to improve access to geographic information, in order to promote openness, access and reuse of public sector information.

Examples of data reuse

The summer of 2022 will be remembered for the heat waves and fires that ravaged different corners of the country. In this context, open data has demonstrated its power to report on the situation and to help extinguish fires. Data from Copernicus or the State Meteorological Agency (AEMET) have been used to monitor the situation and make decisions. These data sources, together with others, are also being used to understand the consequences of low rainfall and high temperatures for European reservoirs. In addition, the media have used these data to keep the public informed about how the fires were evolving.

Firefighting based on open data has also been developed at the regional level. For example, the Government of Navarre has launched Agronic, a tool that works with Spatial Data Infrastructures of Navarre to prevent fires caused by harvesters. For its part, the Barcelona Provincial Council's open data portal has published datasets with "essential information" for the prevention of forest fires. These include the network of water points, low combustibility strips and forest management actions, used by public bodies to draw up plans to deal with fire.

Other examples of the use of open data that we have seen during this period are:

- The Environmental Radiological Surveillance Network of the Generalitat de Catalunya has developed, from open data, a system to monitor the radiation present in the environment of the nuclear power plants (Vandellòs and Ascó) and the rest of the Catalan territory.

- Thanks to the open data shared by Aragón Open Data, a new scientific article on COVID-19 has been written with the aim of identifying spatio-temporal patterns in the incidence of the virus and in the organisation of health resources.

- The Barcelona Open Data initiative has launched #DataBretxaWomen, a project that seeks to raise public awareness of the existing inequality between men and women in different sectors.

- Maldito dato has used open data from the statistics developed by the National Statistics Institute (INE) based on mobile positioning data to show how the population density of different Spanish municipalities changes during July and August.

- Within its Data Analytics for Research and Innovation in Health Programme, Catalonia has prioritised 8 proposals for research based on data analysis. These include studies on migraines, psychosis and heart disease.

Developments in open data platforms

Summer has also been the time chosen by different organisations to launch or update their open data platforms. Examples include:

- The Statistical Institute of Navarre launched a new web portal with more dynamic and attractive visualisations. While developing it, they managed to automate statistical production and integrate all the data in a single environment.

- Zaragoza City Council has also just published a new open data portal that offers all municipal information in a clearer and more concise way. Its design has been agreed with other city councils as part of the 'Open Cities' project.

- Another city that now has an open data portal is Cadiz. Its City Council has launched a platform that will allow the people of Cadiz to know, access, reuse and redistribute the city's open data.

- The Valencian Institute of Business Competitiveness (IVACE) presented an open data portal with all the energy certification records of buildings in the Valencian Community since 2011. Among other uses, this will make it possible to analyse consumption and define rehabilitation strategies.

- Aragón Open Data has included a new functionality in its API that allows users to obtain geographic data in GeoJSON format.

- The National Geographic Institute announced a new version of the earthquake app, with new features, educational content and information.

- The Ministry for Ecological Transition and the Demographic Challenge presented SIDAMUN, a platform that facilitates access to territorial statistical information based on municipal data.

- The open data portal of the Government of the Canary Islands launched a new search engine that locates the pages of the portal using metadata and allows results to be exported in CSV, ODS or PDF.

Some organisations have taken advantage of the summer to announce new developments that will see the light of day in the coming months. The Xunta de Galicia is making progress on a Public Health Observatory built on an open data platform. Burgos City Council will launch an open data portal, and the Pontevedra Provincial Council will soon launch a real-time budget viewer.

Actions to promote open data

In June we met the finalists of the IV Aporta Challenge: "The value of data for the health and well-being of citizens", the final of which will be held in October. In addition, some competitions have been launched in recent months to promote the reuse of open data, for which the registration period is still open, such as the Castilla y León competition or the first UniversiData Datathon. The Euskadi open data competition was also launched and is currently in the evaluation phase.

With regard to events, the summer started with the celebration of the Open Government Week, which brought together various activities, some of them focused on data. If you missed it, some organisations have made materials available to citizens. For example, you can watch the video of the colloquium "Open data with a gender perspective: yes or yes" promoted by the Government of the Canary Islands or access the webinar presentations to learn about the Data Office and the Aporta Initiative.

Other events held with the participation of the Data Office, whose videos are publicly available, are the National Congress on Archives and Electronic Documents and "Data Spaces as ecosystems for entities to reach further".

Finally, in the field of training, some examples of courses that have been launched these months are:

- The National Geographic Institute has launched an Inter-administrative Training Plan, with the aim of generating a common culture among all the experts in Geographic Information of the public bodies.

- Andalucía Vuela has launched a series of free training courses aimed at citizens interested in data or artificial intelligence.

International News

The summer has also brought many new developments at the international level. Some examples are:

- The beginning of the meteorological summer saw the publication of the results of the first edition of the Global Data Barometer, which measures the state of data in relation to societal issues such as COVID-19 or climate.

- The 12 finalists of the EU Datathon 2022 were also announced.

- An interactive edition of Eurostat's regional yearbook 2021 was published.

- England has developed a strategy to harness the potential of data in health and healthcare in a secure, reliable and transparent way.

This is just a selection of news among all the developments in the open data ecosystem over the last three months. If you would like to make a contribution, feel free to leave us a message in the comments or write to dinamizacion@datos.gob.es.

For another year, as it has done since 2016, the Junta de Castilla y León has opened the call for the most innovative proposals in the field of open data. The sixth edition of the competition of the same name aims to "recognise the development of projects that provide any type of idea, study, service, website or application for mobile devices, using datasets from the Open Data Portal of the Junta de Castilla y León".

With this type of initiative, Castilla y León seeks to showcase the digital talent present in the autonomous community, while promoting the use of open data and the role of reusing companies in Castilla y León.

The application period opened on 5 August and will close on 4 October. When submitting projects, participants can choose between in-person and electronic submission. Electronic submission is made through the Electronic Headquarters of Castilla y León and is available to both individuals and legal entities.

4 different categories

As in previous editions, the projects and associated prizes are divided into four different categories:

- "Ideas" category: This category includes projects that describe an idea that can be used to create studies, services, websites or applications for mobile devices. The main requirement is to use datasets from the Junta de Castilla y León's Open Data portal.

- "Products and Services" category: This category includes studies, services, websites or applications for mobile devices that use datasets from the Junta de Castilla y León's Open Data portal. They must be accessible to all citizens via the web through a URL.

- "Didactic Resource" category: This section includes the creation of new and innovative open didactic resources (published under Creative Commons licences) that use datasets from the Junta de Castilla y León's Open Data portal and serve to support teaching in the classroom.

- "Data Journalism" category: Finally, this category includes journalistic pieces, published or substantially updated in any medium (written or audiovisual), that use datasets from the Junta de Castilla y León's Open Data portal.

Regarding the awards of this sixth edition, the prizes amount to €12,000 and are distributed according to the category awarded and the position achieved.

Ideas category

- First prize €1,500

- Second prize €500

Products and Services category

- First prize €2,500

- Second prize €1,500

- Third prize €500

- Student Prize €1,500

Didactic Resource category

- First prize €1,500

Data Journalism category

- First prize €1,500

- Second prize €1,000

As in previous editions, the final verdict will be issued by a jury made up of members with proven experience in the field of open data, information analysis or the digital economy. The jury's decisions will be taken by majority vote and, in the event of a tie, the president of the jury will have the casting vote.

Finally, the winners will have 5 working days to accept the award. If they do not accept it within that period, they will be deemed to have renounced it. If you want to consult the conditions and legal bases of the competition in detail, you can access them through this link.

Winners of the 2021 edition

The 5th edition of the Castilla y León Data Competition received a total of 37 proposals, of which only eight won an award. If you are thinking of taking part in the current edition, it may be useful to know which projects caught the jury's attention in 2021.

Ideas Category

The first prize of €1,500 went to APP SOLAR-CYL, a web tool for optimal sizing of photovoltaic solar self-consumption installations. Aimed at both citizens and public administration energy managers, the solution aims to support the analysis of the technical and economic viability of this type of system.

Products and Services Category

Repuéblame is a website aimed at rediscovering the best places to live or telework. To this end, it catalogues the municipalities of Castilla y León using a series of in-house numerical indicators related to quality of life. Winning the first prize in this category earned it €2,500.

Data Journalism Category

Asociación Maldita contra la desinformación won the first prize of €1,500 for its project MAPA COVID-19: see how many cases of coronavirus there are and how busy your hospital is.

Finally, because the jury decided that the entries submitted did not meet the criteria set out in the rules, the "Didactic Resource" category was declared void and no prize was awarded in it.

If you have any questions or queries about the competition, you can write an email to: datosabiertos@jcyl.es.

1. Introduction

Visualizations are graphical representations of data that communicate the information linked to them simply and effectively. The visualization possibilities are very broad, ranging from basic representations such as line, bar or pie charts to visualizations configured on control panels or interactive dashboards. Visualizations play a fundamental role in drawing conclusions from visual information. Among many other functions, they allow the detection of patterns, trends and anomalous data, and the projection of predictions.

Before building an effective visualization, the data must first be treated. Special attention should be paid to how they are collected and to validating their content, so that they are in a proper, consistent format for processing and free of errors. This prior data treatment is essential for any data analysis task and for producing effective visualizations.

In the "Visualizations step-by-step" section we periodically present practical exercises on open data visualizations, using data available in the datos.gob.es catalogue and other similar catalogues. In each exercise, we describe in a simple way the steps needed to obtain the data and perform the transformations and analyses required to create interactive visualizations. From these visualizations we can then draw final conclusions.

In this practical exercise we have written simple, well-documented code using free tools.

Access the Data Lab repository on GitHub.

Run the data pre-processing code on Google Colab.

2. Objectives

The main objective of this post is to learn how to build an interactive visualization using open data. For this practical exercise we have chosen datasets containing relevant information on Spain's reservoirs. Based on them, we will analyse the state of the reservoirs and how it has evolved over recent years.

3. Resources

3.1. Datasets

For this case study we have selected datasets published by the Ministry for the Ecological Transition and the Demographic Challenge. Its hydrological bulletin collects time series on the volume of water stored in recent years in all Spanish reservoirs with a capacity greater than 5 hm3. Historical data on the volume of stored water are available at:

We have also selected a geospatial dataset. During the search, two possible input files were found. One contains the geographical areas corresponding to the reservoirs in Spain, and the other contains dams, including their position as a geographic point. Although they are not the same thing, reservoirs and dams are related. To simplify this practical exercise, we chose the file containing the list of dams in Spain. The inventory of dams is available at: https://www.mapama.gob.es/ide/metadatos/index.html?srv=metadata.show&uuid=4f218701-1004-4b15-93b1-298551ae9446

This dataset contains the geolocation (latitude, longitude) of dams throughout Spain, regardless of their ownership. A dam is defined as an artificial structure that fully or partially closes off a basin in the terrain and is intended to store water in it.

To generate the geographic points of interest, the data were processed with the QGIS tool. The steps are as follows: download the ZIP file, load it into QGIS and save it as CSV. The geometry of each element is included as two fields that give its position as a geographic point (latitude, longitude).

The data were also filtered to keep only the dams of reservoirs with a capacity greater than 5 hm3.
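The capacity filter can be sketched in a few lines of Python. This is a minimal standard-library sketch that assumes the CSV exported from QGIS has a capacity column expressed in hm3. The column name `CAPACIDAD_HM3` and the sample rows are hypothetical, so adjust them to the real export.

```python
import csv
import io

# Hypothetical column name: check the header of the CSV exported from QGIS.
CAPACITY_FIELD = "CAPACIDAD_HM3"
MIN_CAPACITY_HM3 = 5.0


def filter_large_dams(csv_text, min_capacity=MIN_CAPACITY_HM3, delimiter=","):
    """Return the rows whose reservoir capacity is strictly greater than min_capacity (hm3)."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    kept = []
    for row in reader:
        # Accept both "12.5" and "12,5" as decimal notation.
        raw = (row.get(CAPACITY_FIELD) or "").strip().replace(",", ".")
        if not raw:
            continue  # capacity not recorded: the row cannot be classified, so it is skipped
        if float(raw) > min_capacity:
            kept.append(row)
    return kept


# Illustrative rows only, not taken from the real inventory.
SAMPLE = """NAME,LATITUDE,LONGITUDE,CAPACIDAD_HM3
RESERVOIR_A,40.1,-3.5,12.0
RESERVOIR_B,41.2,-4.1,3.2
RESERVOIR_C,39.9,-2.8,
"""

if __name__ == "__main__":
    for dam in filter_large_dams(SAMPLE):
        print(dam["NAME"], dam["LATITUDE"], dam["LONGITUDE"])
```

The strict `>` comparison matches "greater than 5 hm3" in the text. Rows without a capacity are dropped rather than guessed.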

3.2. Tools

To perform the data pre-processing, we used the Python programming language in the Google Colab cloud service, which can run Jupyter Notebooks.

Google Colab, also called Google Colaboratory, is a free service in the Google Research cloud. It allows you to write, run and share Python or R code through the browser without installing or configuring any tool.

The Google Data Studio tool was used to create the interactive visualization.

Google Data Studio is an online tool for creating charts, maps or tables that can be embedded in websites or exported as files. This tool is easy to use and offers multiple customization options.

If you want to know more about tools that can help you with data treatment and visualization, see the report “Data processing and visualization tools”.

4. Data enrichment

In order to provide more information about each of the dams in the geospatial dataset, a process of data enrichment is carried out, as explained below.

To do this, we will use OpenRefine, a useful tool for this type of task. This open source tool can perform many data pre-processing actions. Here, however, we will use it to enrich our data with context by automatically linking it to information held in a popular knowledge repository, Wikidata.

Once the tool is installed and launched on the computer, a web application will open in the browser. If this doesn't happen, the application can be accessed by typing http://localhost:3333 in the browser's address bar.

Steps to follow:

- Step 1: Upload of CSV to the system (Figure 1).

Figure 1 – Upload of a CSV file to OpenRefine

- Step 2: Creation of a project from the uploaded CSV (Figure 2). OpenRefine is organised into projects, and each uploaded CSV becomes a project. Projects are saved for later use on the computer where OpenRefine is running. At this stage you need to name the project and provide some other details, such as the column separator, although the latter are usually filled in automatically.

Figure 2 – Creation of a project in OpenRefine

- Step 3: Linkage (or reconciliation, in OpenRefine terminology) with external sources. OpenRefine can link CSV resources to external sources such as Wikidata. To do so, take the following actions (steps 3.1 to 3.3):

- Step 3.1: Identification of the columns to be linked. This step is usually based on the analyst's experience and knowledge of the data present in Wikidata. A tip: columns containing information of a global or general nature, such as names of countries, streets or districts, can usually be reconciled or linked. Columns with geographic coordinates, numerical values or closed taxonomies (e.g. street types) cannot. In this example, we have found a NAME column containing the name of each reservoir. It can serve as a unique identifier for each item and is a good candidate for linking.

- Step 3.2: Start of reconciliation. As shown in Figure 3, start the reconciliation and select the only available source: Wikidata(en). After you click Start Reconciling, the tool will automatically search for the most suitable Wikidata class based on the values in the selected column.

Figure 3 – Start of the reconciliation process for the NAME column in OpenRefine

- Step 3.3: Selection of the Wikidata class. In this step the reconciliation values are obtained. In this case, select the most probable value, the type "reservoir". Its description can be found at https://www.wikidata.org/wiki/Q131681 and corresponds to an "artificial lake to accumulate water". Then click Start Reconciling again.

OpenRefine can improve the reconciliation process by adding properties that target the enrichment more precisely. For that purpose, set property P4568, whose description corresponds to the identifier of a reservoir in Spain within SNCZI-IPE, as shown in Figure 4.

Figure 4 – Selection of a Wikidata class that best represents the values on NAME column

- Step 4: Generation of a column with reconciled or linked values. To do this, click on the NAME column and go to "Edit column → Add column based on this column". A window will open in which the name of the new column must be specified (in this case, WIKIDATA_RESERVOIR). In the expression box, enter "http://www.wikidata.org/entity/"+cell.recon.match.id, so that the values are displayed as previewed in Figure 5. "http://www.wikidata.org/entity/" is a fixed text string that represents Wikidata entities, while cell.recon.match.id returns the reconciled identifier of each value. For example, for ALMODOVAR it returns Q5369429.

Running this operation generates a new column with those values. To check that it is correct, click on one of the cells of the new column. It should redirect to the Wikidata page for the reconciled value.

Repeat the process to add other kinds of enriched information, such as references to Google and OpenStreetMap.

Figure 5 – Generation of Wikidata entities through a reconciliation within a new column.

- Step 5: Download of the enriched CSV. Go to Export → Custom tabular exporter in the upper right part of the screen and select the options indicated in Figure 6.

Figure 6 – Options of CSV file download via OpenRefine
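If you prefer scripting, the GREL expression from Step 4 has a direct Python equivalent. The P4568 property used in Step 3.3 can also be read back from the Wikidata Query Service to cross-check a match. This is a hedged sketch with hypothetical helper names. It only builds the request URL and makes no network call.

```python
from urllib.parse import urlencode

WIKIDATA_ENTITY_PREFIX = "http://www.wikidata.org/entity/"
SPARQL_ENDPOINT = "https://query.wikidata.org/sparql"


def entity_uri(qid):
    """Same result as the GREL expression "http://www.wikidata.org/entity/"+cell.recon.match.id."""
    if not qid:
        return ""  # cell without a reconciliation match: leave the new column empty
    return WIKIDATA_ENTITY_PREFIX + qid


def sncziipe_id_query_url(qid):
    """Build a SPARQL request URL asking for the P4568 value (SNCZI-IPE reservoir identifier) of an item."""
    query = f"SELECT ?snczi WHERE {{ wd:{qid} wdt:P4568 ?snczi . }}"
    return SPARQL_ENDPOINT + "?" + urlencode({"query": query, "format": "json"})


if __name__ == "__main__":
    print(entity_uri("Q5369429"))
    print(sncziipe_id_query_url("Q5369429"))
```

You can open the printed query URL in a browser or fetch it with any HTTP client to get the results as JSON. An empty result simply means the item has no P4568 value.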

5. Data pre-processing

During pre-processing, an exploratory data analysis (EDA) must be performed in order to interpret the input data properly. The EDA also detects anomalies, missing data and errors that could affect the quality of subsequent processes and results. Pre-processing then carries out the transformation tasks and prepares the necessary variables. It is essential to ensure the reliability and consistency of the analyses or visualizations created afterwards. To learn more about this process, see A Practical Introductory Guide to Exploratory Data Analysis.
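A first EDA pass often starts by counting missing values per column. The sketch below does this with the standard library only. The NA markers it recognises and the column names in the sample (`FECHA`, `EMBALSE_NOMBRE`, `AGUA_ACTUAL`) are assumptions for illustration, not the exact layout of the bulletin file.

```python
import csv
import io

# Tokens treated as "missing"; extend this set to match the real file.
NA_TOKENS = {"", "na", "nan", "null"}


def missing_value_report(csv_text, delimiter=","):
    """Count missing cells per column of a CSV given as text."""
    reader = csv.DictReader(io.StringIO(csv_text), delimiter=delimiter)
    columns = reader.fieldnames or []
    counts = {column: 0 for column in columns}
    for row in reader:
        for column in columns:
            value = row.get(column)
            # Short rows yield None for the trailing columns; count them as missing too.
            if value is None or value.strip().lower() in NA_TOKENS:
                counts[column] += 1
    return counts


# Illustrative rows only.
SAMPLE = """FECHA,EMBALSE_NOMBRE,AGUA_ACTUAL
2022-01-04,RESERVOIR_A,10.5
2022-01-11,RESERVOIR_A,
2022-01-04,RESERVOIR_B,NA
"""

if __name__ == "__main__":
    print(missing_value_report(SAMPLE))
```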

The steps involved in this pre-processing phase are the following:

- Installation and import of libraries

- Import of source data files

- Modification and adjustment of variables

- Prevention and treatment of missing data (NAs)

- Generation of new variables

- Creation of a table for visualization “Historical evolution of water reserve between the years 2012-2022”

- Creation of a table for visualization “Water reserve (hm3) between the years 2012-2022”

- Creation of a table for visualization “Water reserve (%) between the years 2012-2022”

- Creation of a table for visualization “Monthly evolution of water reserve (hm3) for different time series”

- Saving the tables with pre-processed data
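Several of the steps listed above (adjusting variable types, treating NAs and generating new variables) can be sketched as follows. This is not the notebook's code but a standard-library illustration. It assumes each bulletin row carries a date, a reservoir name, the current reserve and the total capacity in hm3, under the hypothetical keys `FECHA`, `EMBALSE_NOMBRE`, `AGUA_ACTUAL` and `AGUA_TOTAL`. Dropping incomplete rows is only one possible NA strategy.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Observation:
    reservoir: str
    day: date
    reserve_hm3: float
    capacity_hm3: float

    # New variables derived from the date and the two volumes.
    @property
    def year(self):
        return self.day.year

    @property
    def month(self):
        return self.day.month

    @property
    def percentage(self):
        """Filling percentage of the reservoir."""
        return 100 * self.reserve_hm3 / self.capacity_hm3


def to_number(text):
    """Convert '12,5' or '12.5' to a float; return None for empty cells."""
    text = (text or "").strip().replace(",", ".")
    return float(text) if text else None


def parse_rows(rows):
    """Type conversion plus NA treatment: rows with a missing reserve or a missing/zero capacity are discarded."""
    observations = []
    for row in rows:
        reserve = to_number(row.get("AGUA_ACTUAL"))
        capacity = to_number(row.get("AGUA_TOTAL"))
        if reserve is None or not capacity:
            continue
        observations.append(
            Observation(row["EMBALSE_NOMBRE"], date.fromisoformat(row["FECHA"]), reserve, capacity)
        )
    return observations


ROWS = [  # illustrative values only
    {"FECHA": "2022-01-04", "EMBALSE_NOMBRE": "RESERVOIR_A", "AGUA_ACTUAL": "50", "AGUA_TOTAL": "200"},
    {"FECHA": "2022-01-04", "EMBALSE_NOMBRE": "RESERVOIR_B", "AGUA_ACTUAL": "", "AGUA_TOTAL": "100"},
]

if __name__ == "__main__":
    for obs in parse_rows(ROWS):
        print(obs.reservoir, obs.year, obs.month, obs.percentage)
```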

You can reproduce this analysis, as the source code is available in the GitHub repository. The code is provided as a Jupyter Notebook which, once loaded into the development environment, can be easily run or modified. This post is informative and aims to support non-specialist readers, so the code is meant to be understandable rather than as efficient as possible. You will probably think of many ways to optimise the proposed code to achieve a similar purpose. We encourage you to do so!

You may follow the steps and run the source code on this notebook in Google Colab.

6. Data visualization

Once the data pre-processing is done, we can move on to the interactive visualizations. For this purpose, we have used Google Data Studio. As it is an online tool, there is no need to install software to interact with or generate a visualization, but the data tables supplied to it must be properly structured.

To design the set of visual representations of the data, the first step is to raise the questions that we want to answer. We suggest the following:

- Where are the reservoirs located within the national territory?

- Which reservoirs have the largest and the smallest volume of water stored (water reserve in hm3) in the whole country?

- Which reservoirs have the highest and the lowest filling percentage (water reserve in %)?

- What has been the trend in the water reserve over recent years?

Let's find the answers by looking at the data!

6.1. Geographic location and main information on each reservoir

This visual representation shows the geographic location of the reservoirs and the information associated with each of them. For this task, a table "geo.csv" was generated during the data pre-processing.

The location of the reservoirs in the national territory is shown on a map of geographic points.

On the map, you can click on a reservoir to see additional information about it in the table below. The drop-down tabs also allow filtering by hydrographic demarcation and by reservoir.

View the visualization in full screen

6.2. Water reserve between the years 2012-2022

This visual representation shows the water reserve (hm3) per reservoir between 2012 (inclusive) and 2022. For this purpose, a table "volumen.csv" was created during the data pre-processing.

A treemap (rectangular hierarchy chart) intuitively displays each reservoir's share of the national total volume stored for the period indicated above.

Once the chart is displayed, the drop-down tabs allow filtering by hydrographic demarcation and by reservoir.

View the visualization in full screen
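The treemap needs one value per reservoir. One plausible way to derive it is to average each reservoir's reserve values over 2012-2022 and express the result as a share of the national total. This aggregation is an assumption, not a rule taken from the notebook.

```python
from collections import defaultdict


def average_reserve_by_reservoir(observations):
    """observations: iterable of (reservoir, reserve_hm3) pairs; returns the mean reserve per reservoir."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for reservoir, reserve_hm3 in observations:
        sums[reservoir] += reserve_hm3
        counts[reservoir] += 1
    return {reservoir: sums[reservoir] / counts[reservoir] for reservoir in sums}


def shares_of_total(values):
    """Percentage of the overall total contributed by each key."""
    total = sum(values.values())
    return {key: 100 * value / total for key, value in values.items()}


if __name__ == "__main__":
    data = [("RESERVOIR_A", 10.0), ("RESERVOIR_A", 30.0), ("RESERVOIR_B", 60.0)]  # illustrative
    averages = average_reserve_by_reservoir(data)
    print(averages)  # {'RESERVOIR_A': 20.0, 'RESERVOIR_B': 60.0}
    print(shares_of_total(averages))  # {'RESERVOIR_A': 25.0, 'RESERVOIR_B': 75.0}
```

A treemap can size its rectangles with either the averages or the shares. The shares make the "weight within the national total" explicit.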

6.3. Water reserve (%) between the years 2012-2022

This visual representation shows the water reserve (%) per reservoir between 2012 (inclusive) and 2022. For this task, a table "porcentaje.csv" was generated during the data pre-processing.

A bar chart intuitively displays the filling percentage of each reservoir for the period indicated above.

Once the chart is displayed, the drop-down tabs allow filtering by hydrographic demarcation and by reservoir.

View the visualization in full screen

6.4. Historical evolution of water reserve between the years 2012-2022

This visual representation shows the historical water reserve data (hm3 and %) per reservoir between 2012 (inclusive) and 2022. For this purpose, a table "lineas.csv" was created during the data pre-processing.

Line charts and their trend lines show how the water reserve (hm3 and %) has evolved over time.

Once the chart is displayed, the drop-down tabs allow you to change the time series and to filter by hydrographic demarcation and by reservoir.

View the visualization in full screen
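The trend lines can be reproduced outside Data Studio, assuming a linear least-squares trend. This post does not describe the exact trend-line settings used in the charts. The sketch sums the reserves of all reservoirs for each date and fits a straight line to the national series. The slope is expressed in hm3 per day, and a negative value indicates a decreasing reserve.

```python
from collections import defaultdict
from datetime import date


def national_totals(observations):
    """observations: iterable of (date, reserve_hm3) pairs from all reservoirs; returns sorted (date, total)."""
    totals = defaultdict(float)
    for day, reserve_hm3 in observations:
        totals[day] += reserve_hm3
    return sorted(totals.items())


def linear_trend(series):
    """Ordinary least-squares fit y = slope * x + intercept, with x in days since the first date."""
    if len(series) < 2:
        raise ValueError("at least two distinct dates are needed to fit a trend")
    start = series[0][0]
    xs = [(day - start).days for day, _ in series]
    ys = [value for _, value in series]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


if __name__ == "__main__":
    obs = [  # illustrative values only
        (date(2022, 1, 1), 60.0), (date(2022, 1, 1), 40.0),
        (date(2022, 1, 8), 98.0),
        (date(2022, 1, 15), 96.0),
    ]
    slope, intercept = linear_trend(national_totals(obs))
    print(f"trend: {slope:.3f} hm3/day, starting at {intercept:.1f} hm3")
```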

6.5. Monthly evolution of water reserve (hm3) for different time series

This visual representation shows the water reserve (hm3) of the different reservoirs, broken down by month for different time series (each year from 2012 to 2022). For this purpose, a table "lineas_mensual.csv" was created during the data pre-processing.

A line chart shows the water reserve month by month for each time series.

Once the chart is displayed, the drop-down tabs allow filtering by hydrographic demarcation and by reservoir. You can also use the icon in the top right part of the chart to choose which time series (each year from 2012 to 2022) to visualize.

View the visualization in full screen
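A table like "lineas_mensual.csv" can be thought of as one row per month and one column per year. Here is a minimal sketch, assuming the input is a series of (date, reserve in hm3) pairs and that several readings in the same month are averaged. The averaging rule is an assumption, not the notebook's documented method.

```python
import csv
import io
from collections import defaultdict
from datetime import date


def monthly_means_by_year(series):
    """Average the readings of each (year, month); returns {year: {month: mean}}."""
    buckets = defaultdict(list)
    for day, value in series:
        buckets[(day.year, day.month)].append(value)
    table = defaultdict(dict)
    for (year, month), values in sorted(buckets.items()):
        table[year][month] = sum(values) / len(values)
    return dict(table)


def to_wide_csv(table):
    """Write one row per month (1-12) and one column per year; months without data are left empty."""
    years = sorted(table)
    out = io.StringIO()
    writer = csv.writer(out)
    writer.writerow(["month"] + years)
    for month in range(1, 13):
        writer.writerow([month] + [table[year].get(month, "") for year in years])
    return out.getvalue()


if __name__ == "__main__":
    series = [(date(2012, 1, 3), 100.0), (date(2012, 1, 10), 200.0), (date(2013, 1, 1), 50.0)]  # illustrative
    print(to_wide_csv(monthly_means_by_year(series)))
```

The wide layout (one column per year) lets each year be plotted as its own line over the twelve months.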

7. Conclusions

Data visualization is one of the most powerful mechanisms for exploiting and analysing the implicit meaning of data, regardless of the data type and the user's level of technological knowledge. Visualizations make it possible to build meaning and narratives from data through graphical representation. The set of graphical representations implemented shows the following:

- A significant downward trend in the volume of water stored in reservoirs throughout the country between 2012 and 2022.

- 2017 is the year with the lowest total reservoir filling percentages, falling below 45% at certain times of the year.

- 2013 is the year with the highest total reservoir filling percentages, exceeding 80% at certain times of the year.
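Conclusions like the last two can be checked programmatically once the national filling percentage series is available. The sketch below assumes a list of (date, percentage) pairs. The sample values are invented for illustration and are not the bulletin's figures.

```python
from datetime import date


def yearly_extremes(series):
    """Return {year: (min_pct, max_pct)} for a series of (date, filling percentage) pairs."""
    extremes = {}
    for day, pct in series:
        low, high = extremes.get(day.year, (pct, pct))
        extremes[day.year] = (min(low, pct), max(high, pct))
    return extremes


def lowest_and_highest_years(series):
    """Years holding the lowest and the highest single filling percentage in the series."""
    lowest = min(series, key=lambda point: point[1])
    highest = max(series, key=lambda point: point[1])
    return lowest[0].year, highest[0].year


if __name__ == "__main__":
    series = [  # invented values, for illustration only
        (date(2020, 4, 1), 81.0), (date(2020, 10, 1), 60.0),
        (date(2021, 10, 1), 44.0), (date(2021, 4, 1), 58.0),
    ]
    print(yearly_extremes(series))
    print(lowest_and_highest_years(series))
```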

Note that the visualizations can be filtered by hydrographic demarcation and by reservoir. We encourage you to use these filters to draw more specific conclusions about the hydrographic demarcations and reservoirs that interest you.

Hopefully, this step-by-step visualization has been useful for the learning of some common techniques of open data processing and presentation. We will be back to present you new reuses. See you soon!

Last November, Red.es, in collaboration with the Secretary of State for Digitalisation and Artificial Intelligence, launched the 4th edition of the Aporta Challenge. Under the slogan "The value of data for the health and well-being of citizens", the competition seeks to identify new open data-based services and solutions that drive improvements in this field.

The challenge is divided into two phases: an ideas competition, followed by a second phase in which the finalists have to develop and present a prototype. We are now at the halfway point of the competition. Phase I has come to an end, and it is time to find out who the 10 finalists moving on to phase II are.

After analysing the diverse and high-quality proposals submitted, the jury has selected a series of finalists, as reflected in the resolution published on the Red.es website.

Let us look at each candidacy in detail:

Getting closer to the patient

- Team:

SialSIG aporta, composed of Laura García and María del Mar Gimeno.

- What does it consist of?

A platform will be built to reduce rescue times and optimise medical care in emergencies. Parameters will be analysed to categorise areas according to their mortality risk and to identify the best places for aerial rescue vehicles to land. This information will also reveal which areas are most isolated and vulnerable to medical emergencies, which is highly valuable for defining action strategies that improve management and the allocation of resources.

- Data

The platform seeks to integrate information from all the autonomous communities, including population data (census, age, sex, etc.), hospital and heliport data, land use and crop data, etc. Specifically, data will be obtained from the municipal census of the National Statistics Institute (INE), the boundaries of provinces and municipalities, the land use classification of the National Geographic Institute (IGN) and data from the SIGPAC (MAPA), among others.

Hospital pressure monitoring

- Team:

DSLAB, data science research group at Rey Juan Carlos University, composed of Isaac Martín, Alberto Fernández, Marina Cuesta and María del Carmen Lancho.

- What does it consist of?

With the aim of improving hospital management, the DSLAB proposes an interactive and user-friendly dashboard that allows users to:

- Monitor hospital pressure

- Evaluate the actual load and saturation of healthcare centres

- Forecast the evolution of this pressure

This will enable better resource planning, earlier decision-making and the prevention of possible collapses.

- Data

To demonstrate the tool's potential, the prototype will be built with open data on COVID in the Autonomous Community of Castilla y León, such as bed occupancy or the epidemiological situation by hospital and province. However, the solution is scalable and can be extrapolated to any other territory with similar data.

RIAN - Intelligent Activity and Nutrition Recommender

- Team:

RIAN Open Data Team, composed of Jesús Noguera and Raúl Micharet.

- What does it consist of?

RIAN was created to promote healthy habits and combat overweight, obesity, sedentary lifestyles and poor nutrition among children and adolescents. It is an application for mobile devices that uses gamification techniques, as well as augmented reality and artificial intelligence algorithms to make recommendations. Users have to solve personalised challenges, individually or collectively, linked to nutritional aspects and physical activities, such as gymkhanas or games in public green spaces.

- Data

The pilot uses data on green areas, points of interest, greenways, activities and events from the cities of Málaga, Madrid, Zaragoza and Barcelona. These data are combined with nutritional recommendations (food data, nutritional values and branded food products) and with data for food image recognition from TensorFlow or Kaggle, among others.

MentalReview - visualising data for mental health

- Team:

Kairos Digital Analytics and Big Data Solutions S.L.

- What does it consist of?

MentalReview is a mental health monitoring tool to support health and social care management and planning, enabling institutions to improve citizen care services. The tool will analyse information extracted from open databases, calculate indicators and, finally, visualise the results through graphs and an interactive map. This will make it possible to determine the current state of mental health in the Spanish population, identify trends and study its evolution.

- Data

For its development, data from the INE, the Centre for Sociological Research (CIS), the Mental Health Services of the different autonomous regions, the Spanish Agency for Medicines and Health Products and Eurostat, among others, will be used. Some specific examples of the datasets to be used are: anxiety problems in young people, the suicide mortality rate by autonomous community, age, sex and period, and the consumption of anxiolytics.

HelpVoice!

- Team:

Data Express, composed of Sandra García, Antonio Ríos and Alberto Berenguer.

- What does it consist of?

HelpVoice! is a service that helps elderly people through voice recognition techniques based on machine learning. In an emergency, the user only needs to press a device, which can be an emergency button, a mobile phone or a home automation device, and describe their symptoms. The system will send a report with the transcript and predictions to the nearest hospital, speeding up the response of healthcare workers. In parallel, HelpVoice! will also advise the patient on what to do while waiting for the emergency services.

- Data

Among other open data, the map of hospitals in Spain will be used. Data for speech recognition and for sentiment analysis of text will also be used.

Living and liveable cities: creating high-resolution shadow maps to help cities adapt to climate change

- Team:

Living Cities, composed of Francisco Rodríguez-Sánchez and Jesús Sánchez-Dávila.

- What does it consist of?

In the current context of rising temperatures, the Living Cities team proposes to develop open software to help cities adapt to climate change by facilitating the planning of urban shading. Using spatial analysis, remote sensing and modelling techniques, this software will make it possible to determine the level of insolation (or shading) at high spatio-temporal resolution (every hour of the day for every square metre of land) for any municipality in Spain. The team will focus in particular on the shading situation in the city of Seville, publishing its results through a web application for consulting the insolation maps and obtaining shaded routes between different points in the city.

- Data

Living Cities is based on open remote sensing data (LiDAR) from the National Aerial Orthophotography Programme (PNOA), data on Seville's urban trees and spatial data from OpenStreetMap.

Impact of air quality on respiratory health in the city of Madrid

- Team:

So Good Data, composed of Ana Belén Laguna, Manuel López, Vicente Lorenzo, Javier Maestre and Iván Robles.

- What does it consist of?

So Good Data is proposing a study to analyse the impact of air pollution on the number of hospital admissions for respiratory diseases. It will also determine which pollutant particles are likely to be most harmful. With this information, it would be possible to predict the number of admissions a hospital will face depending on air pollution on a given date, in order to take the necessary measures in advance and reduce mortality.

- Data

Among other datasets, hospitalisations due to respiratory diseases, air quality, tobacco sales or atmospheric pollen in the Community of Madrid will be used for the study.

PLES

- Team:

BOLT, composed of Víctor José Montiel, Núria Foguet, Borja Macías, Alejandro Pelegero and José Luis Álvarez.

- What does it consist of?

The BOLT team will create a web application that gives users an estimate of the average waiting time for consultations, tests or interventions in the public health system of Catalonia. The time series prediction models will be developed in Python using statistical and machine learning techniques. Users only need to indicate the hospital and the type of consultation, operation or test they are waiting for. In addition to improving transparency for patients, the website can also help healthcare professionals manage their resources better.

- Data

The project will use data from the public waiting lists in Catalonia published monthly by CatSalut. Specifically, monthly data on waiting lists for surgery, specialised outpatient consultations and diagnostic tests from at least 2019 to the present will be used. In the future, the idea could be adapted to other Autonomous Communities.
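The proposal does not say which models BOLT will use. A common first step with monthly series like these is a simple baseline that more sophisticated statistical or machine learning models must beat. The sketch below, a hypothetical illustration rather than the team's method, forecasts future months as the mean of the last `window` months and measures the baseline's one-step-ahead error on the history; the waiting times are made-up toy values.

```python
from statistics import mean


def moving_average_forecast(history, window=3, horizon=1):
    """Forecast `horizon` future values as the mean of the last `window` values.

    Each forecast is appended to a working copy of the series, so multi-step
    forecasts roll forward (a naive recursive strategy).
    """
    if len(history) < window:
        raise ValueError("history is shorter than the window")
    series = list(history)
    forecasts = []
    for _ in range(horizon):
        next_value = mean(series[-window:])
        forecasts.append(next_value)
        series.append(next_value)
    return forecasts


def backtest_mae(history, window=3):
    """Mean absolute error of one-step-ahead forecasts over the history."""
    errors = [
        abs(history[t] - mean(history[t - window:t]))
        for t in range(window, len(history))
    ]
    return mean(errors)


# Made-up monthly average waiting times (days) for one hospital and test type.
waits = [60, 62, 65, 70, 68, 72]
print(moving_average_forecast(waits, window=3, horizon=2))
print(backtest_mae(waits, window=3))
```

A candidate model is only worth deploying if its backtest error is clearly lower than this baseline's on the same months.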

The Hospital Morbidity Survey: Proposal for the development of a MERN+Python web environment for its analysis and graphical visualisation.

- Team:

Marc Coca Moreno

- What does it consist of?

This is a web environment based on MERN, Python and Pentaho tools for the analysis and interactive visualisation of the Hospital Morbidity Survey microdata. The entire project will be developed with open source and free tools. Both the code and the final product will be openly accessible.

Specifically, it offers three main analyses with the aim of improving health planning:

- Descriptive: hospital discharge counts and time series.

- KPIs: standardised rates and indicators for comparison and benchmarking of provinces and communities.

- Flows: count and analysis of discharges by hospital region and patient origin.

All data will be filterable according to dataset variables (age, sex, diagnoses, circumstance of admission and discharge, etc.).
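The proposal mentions standardised rates for comparing provinces and communities but does not state the method. Direct age standardisation is one widely used option: each region's age-specific rates are weighted by a common standard population, so regions with older or younger populations can be compared fairly. The following is a minimal sketch under that assumption; the function name and the toy figures are hypothetical.

```python
def direct_standardised_rate(events, population, standard_pop, per=100_000):
    """Directly age-standardised rate per `per` inhabitants.

    events[i]       -- hospital discharges in age group i of the region
    population[i]   -- resident population of the region in age group i
    standard_pop[i] -- population of the chosen standard in age group i
    """
    if not (len(events) == len(population) == len(standard_pop)):
        raise ValueError("all inputs need one value per age group")
    # Weight each age-specific rate d/n by the standard population w.
    weighted = sum(w * d / n for d, n, w in zip(events, population, standard_pop))
    return per * weighted / sum(standard_pop)


# Made-up example with two age groups (younger, older).
events = [10, 50]
population = [10_000, 5_000]
standard = [6_000, 4_000]
crude = 100_000 * sum(events) / sum(population)
print(crude, direct_standardised_rate(events, population, standard))
```

In this toy case the crude rate (400 per 100,000) is lower than the standardised rate (460 per 100,000) because the region has relatively fewer people in the older, high-rate age group than the standard population.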

- Data

In addition to the microdata from the INE's Hospital Morbidity Survey, it will also integrate statistics from the Continuous Register (also from the INE), data from the Ministry of Health's catalogues of ICD-10 diagnoses, catalogues and indicators from the Agency for Healthcare Research and Quality (AHRQ), and catalogues and stratification tools from Autonomous Communities such as Catalonia.

TWINPLAN: Decision support system for accessible and healthy routes

- Team:

TWINPLAN, composed of Ivan Araquistain, Josu Ansola and Iñaki Prieto

- What does it consist of?

This is a web app to improve accessibility for people with mobility problems and promote healthy exercise for all citizens. The tool checks whether a route is affected by any incidents in public lifts and, if so, proposes an alternative accessible route, also indicating the level of traffic (noise) in the area, air quality and cardioprotection points. It also provides contact details for nearby means of transport.

This web app can also be used by public administrations to monitor the use of accessible infrastructures and plan new ones.

- Data

The prototype will be developed using data from the Digital Twin of Ermua's public lifts, although the model is scalable to other territories. This data is complemented with other public data from Ermua such as the network of environmental sensors, traffic and LurData, among other sources.

These 10 finalists now have several months to develop their prototypes, which will be presented on 18 October. The three prototypes rated highest by the jury will receive €5,000, €4,000 and €3,000, respectively.

Good luck to all the finalists!


Digital technology has become part of our daily lives, bringing with it new habits of communication and information consumption. Concepts such as augmented reality are playing an active part in this process of change, in which a growing number of companies and organisations are involved.

Differences between augmented and virtual reality

Although the nomenclature of these concepts is somewhat similar, in practice, we are talking about different scenarios:

- Virtual reality: This is a digital experience that allows the user to immerse themselves in an artificial world where they can experience sensory nuances isolated from what is happening outside.

- Augmented reality: This is a data visualisation alternative that enhances the user experience by incorporating digital elements into tangible reality. In other words, it allows visual elements to be added to the environment around us. This makes it especially interesting for data visualisation, as graphic elements can be superimposed on our reality. The most common way to achieve this is with specialised glasses, although augmented reality can also work without external gadgets: using the camera of a mobile phone, some applications combine the view of real elements around us with digitally processed elements that let us interact with tangible reality.

In this article we are going to focus on augmented reality, which is presented as an effective formula for sharing, presenting and disseminating the information contained in datasets.

Challenges and opportunities

The use of augmented reality tools is particularly useful when distributing and disseminating knowledge online. In this way, instead of sharing a set of data through text and graphic representations, augmented reality allows us to explore ways of disseminating information that facilitate understanding from the point of view of the user experience.

These are some of the opportunities associated with its use:

- Through 3D visualisations, augmented reality allows the user to have an immersive experience that facilitates contact with and internalisation of this type of information.

- It allows information to be consulted in real time and to interact with the environment. Augmented reality allows the user to interact with data in remote locations. Data can be adapted, even in spatial terms, to the needs and characteristics of the environment. This is particularly useful in field work, allowing operators repairing breakdowns or farmers in the middle of their crops to access the up-to-date information they need, in a highly visual way, combined with the environment.

- A higher density of data can be displayed at the same time, which facilitates the cognitive processing of information. In this way, augmented reality helps to speed up comprehension processes and thus our ability to conceive new realities.

Example of visualisation of agricultural data on the environment

Despite these advantages, the market is still developing and faces challenges such as implementation costs, lack of common standards and user concerns about security.

Use cases

Beyond its challenges, opportunities and strengths, augmented reality becomes even more relevant when organisations incorporate it into their innovation strategy to improve user experience or process efficiency. The following use cases illustrate the range of applications of augmented reality.

One field where it represents a great opportunity is tourism. One example is Gijón in a click, a free mobile application that offers visitors three routes. Along the routes, plaques installed on the ground allow tourists to launch augmented reality recreations on their own mobile phones.

From the point of view of hardware companies, we can highlight the example, among a long list, of the smart helmet prototype designed by the company Aegis Rider, which allows information to be obtained without taking your eyes off the road. This helmet uses augmented reality to project a series of indicators at eye level to help minimise the risk of an accident.

The projected data includes information from open data sources such as road conditions, road layout and speed limits. In addition, using a system based on object recognition and deep learning, the Aegis Rider helmet also detects objects, animals, pedestrians or other vehicles that could pose an accident risk when they are in the rider's path.

Beyond road safety, AccuVein uses augmented reality to spare chronic patients, such as cancer patients, from failed needlesticks when receiving their medication. To do this, AccuVein has designed a handheld scanner that projects the exact location of the patient's veins onto the skin. According to its developers, this makes needlesticks 3.5 times more accurate than the traditional method.

On the other hand, ordinary citizens can also find augmented reality as supporting material for news and reports. Media such as The New York Times are increasingly offering information that uses augmented reality to visualise datasets and make them easier to understand.

Tools for generating visualisations with augmented reality

As we have seen, augmented reality can also be used to create data visualisations that make sets of information that may seem abstract at first easier to understand. There are different tools for creating these visualisations, such as Flow, which is designed to facilitate the work of programmers and developers. This platform, which displays datasets through the WebXR Device API, allows these professionals to load data, create visualisations and add transition steps between them. Other tools include 3Data and Virtualitics. Companies such as IBM are also entering the sector.

For all these reasons, and in line with the evidence provided by the use cases above, augmented reality is positioned as one of the data visualisation and dissemination technologies set to further expand the limits of the knowledge society in which we live.

Content prepared by the datos.gob.es team.

Spring, in addition to the arrival of good weather, has brought a great deal of news related to the open data ecosystem and data sharing. Over the last three months, European-driven developments have continued, with two initiatives that are currently in the public consultation phase standing out:

- Progress on data spaces. The first regulatory initiative for a data space has been launched. This first proposal focuses on health sector data and seeks to establish a uniform legal framework, facilitate patients' electronic access to their own data and encourage its re-use for other secondary purposes. It is currently under public consultation until 28 July.

- Boosting high-value data. The concept of high-value data, set out in Directive 2019/1024, refers to data whose re-use brings considerable benefits to society, the environment and the economy. Although this directive included a proposal for initial thematic categories to be considered, an initiative is currently underway to create a more concrete list. This list has already been made public and any citizen can comment on it until 24 June. In addition, the European Commission has launched a series of grants to support public administrations at local, regional and national level in boosting the availability, quality and usability of high-value data.

These two initiatives are helping to boost an ecosystem that is growing steadily in Spain, as shown in these examples of news we have collected over the last few months.

Examples of open data re-use

This season we have also learned about different projects that highlight the advantages of using data:

- Thanks to the use of Linked Open Data, a professor from the University of Valladolid has created the web application LOD4Culture. This app allows users to explore the world's cultural heritage through the semantic databases DBpedia and Wikidata.

- The University of Zaragoza has launched sensoriZAR to monitor air quality and reduce energy consumption in its spaces. Built on IoT, open data and open science, the solution is focused on data-driven decision-making.

- Villalba Hospital has created a map of cardiovascular risk in the Sierra de Madrid thanks to Big Data. The map collects clinical and demographic data of patients to inform about the likelihood of developing such a disease in the future.

- The Open Government Lab of Aragon has recently presented "RADAR", an application that provides geo-referenced information on initiatives in rural areas.

Agreements to boost open data

The commitment of public bodies to open data is evident in a number of agreements and plans that have been launched in recent months:

- On 13 April, the mayors of Madrid and Malaga signed two collaboration agreements to jointly boost digital transformation and tourism growth in both cities. Thanks to these agreements, it will be possible to adopt policies on security and data protection, open data and Big Data, among others.

- The Government of the Balearic Islands and Asedie will collaborate to implement open data measures, transparency and reuse of public data. This is set out in an agreement that seeks to promote public-private collaboration and the development of commercial solutions, among others.

- The Generalitat Valenciana has signed an agreement with the Universitat Politècnica de València through which it will allocate €70,000 to carry out activities focused on the openness and reuse of data during the 2022 financial year.

- Madrid City Council has also initiated the process for the elaboration of the III Open Government Action Plan for the city, for which it launched a public consultation.

In addition, open data platforms continue to be enriched with new datasets and tools aimed at facilitating access to and use of information. Some examples are:

- Aragón Open Data has presented its virtual assistant to bring the portal's data closer to users in a simple and user-friendly way. The solution has been developed by Itainnova together with the Government of Aragon.

- Cartociudad, which belongs to the Ministry of Transport, Mobility and Urban Agenda, has a new viewer to locate postal addresses. It has been developed from a search engine based on REST services and has been created with API-CNIG.

- Madrid City Council has also launched a new open data viewer. Interactive dashboards with data on energy, weather, parking, libraries, etc. can be consulted.

- The Department of Ecological Transition of the Government of the Canary Islands has launched a new search engine for energy certificates by cadastral reference, with information from the Canary Islands Open Data portal.

- The Segovia City Council has renewed its website to host all the pages of the municipal areas, including the Open Data Portal, under the umbrella of the Smart Digital Segovia project.

- The University of Navarra has published a new dataset through GBIF, showing 10 years of observations on the natural succession of vascular plants in abandoned crops.

- The Castellón Provincial Council has published on its open data portal a dataset listing the 52 municipalities in which the installation of ATMs to combat depopulation has been promoted.

Boom in events, trainings and reports

Spring is one of the most prolific times for events and presentations. Some of the activities that have taken place during these months are:

- Asedie presented the 10th edition of its Report on the state of the infomediary sector, this time focusing on the data economy. The report highlights that this sector has a turnover of more than 2 billion euros per year and employs almost 23,000 professionals. On the Asedie website you can watch the video of the report's presentation, in which the Data Office took part.

- During this season, the results of the Global Data Barometer were also presented. This report reflects examples of the use and impact of open data, but also highlights the many barriers that prevent access to and effective use of open data, limiting innovation and problem solving.

- The Social Security Data Conference was held on 26 May, where the main strategic lines of the Social Security IT Management (GISS) in this area were presented. It was recorded on video and can be viewed at this link.

- The recording of the conference "Public Strategies for the Development of Data Spaces", organised by AIR4S (Digital Innovation Hub in AI and Robotics for the Sustainable Development Goals) and the Madrid City Council, is also available. During the event, public policies and initiatives based on the use and exploitation of data were presented.

- Another video available is the presentation of Oscar Corcho, Professor at the Polytechnic University of Madrid (UPM), co-director of the Ontological Engineering Group (OEG) and co-founder of LocaliData, talking about the collaborative project Ciudades Abiertas in the webinar "Improving knowledge transfer across organisations by knowledge graphs". You can watch it at this link from minute 15:55 onwards.

- In terms of training, the FEMP's Network of Local Entities for Transparency and Participation approved its Training Plan for this year. It includes topics related to the evaluation of public policies, open data, transparency, public innovation and cybersecurity, among others.

- Alcobendas City Council has launched a podcast section on its website, with the aim of disseminating among citizens issues such as the use of open data in public administrations.

Other news of interest in Europe

We end our review with some of the latest developments in the European data ecosystem:

- The 24 shortlisted teams for the EUDatathon 2022 have been made public. Among them is the Spanish Astur Data Team.

- The European Consortium for Digital and Interconnected Scientific Infrastructures LifeWatch ERIC, based in Seville, has taken on the responsibility of providing technological support to the global open data network on biodiversity.

- The European Commission has recently launched Kohesio. It is a new public online platform that provides data on European cohesion projects. Through the data, it shows the contribution of the policy to the economic, territorial and social cohesion of EU regions, as well as to the ecological and digital transitions.

- The European Open Data Portal has published a new study on how to make its data more reusable. This is the first in a series of reports focusing on the sustainability of open data portal infrastructures.

This is just a selection of news among all the developments in the open data ecosystem over the last three months. If you would like to make a contribution, you can leave us a message in the comments or write to dinamizacion@datos.gob.es.

This report published by the European Data Portal (EDP) aims to help open data users in harnessing the potential of the data generated by the Copernicus program.

The Copernicus programme generates a large volume of high-value Earth observation data from satellites, which is in line with the European Data Portal's objective of increasing the accessibility and value of open data.

The report addresses the following questions: What can I do with Copernicus data? How can I access the data? What tools do I need to use the data? To answer them, it draws on the information available in the European Data Portal and specialised catalogues, and examines practical examples of applications that use Copernicus data.

This report is available at this link: "Copernicus data for the open data community"

Who hasn't ever used an app to plan a romantic getaway, a weekend with friends or a family holiday? More and more digital platforms are emerging to help us calculate the best route, find the cheapest petrol station or make recommendations about hotels and restaurants according to our tastes and needs. Many of them have a common denominator, and that is that their operation is based on the use of data coming, for the most part, from public administrations.

It is becoming increasingly easy to find tourism-related data published openly by various public bodies. Tourism is one of the sectors that generates the most revenue in Spain year after year. It is therefore not surprising that many organisations choose to open up tourism data as a way of attracting more visitors to different areas of the country.

Below, we take a look at some of the datasets on tourism that you can find in the National Catalogue of Open Data in order to reuse them to develop new applications or services that offer improvements in this field.

These are the types of data on tourism that you can find in datos.gob.es

In our portal you can access a wide catalogue of data that is classified by different sectors. The Tourism category currently has 2,600 datasets of different types, including statistics, financial aid, points of interest, accommodation prices, etc.

Of all these datasets, here are some of the most important ones together with the format in which you can consult them:

At the state level

- National Statistics Institute (Ministry of Economic Affairs and Digital Transformation). Average stay, by type of accommodation by Autonomous Communities and Autonomous Cities. CSV, XLSX, XLS, HTML, JSON, PC-Axis.

- State Meteorological Agency (AEMET). Forecast by municipality, 7 days. XML.

- Geological and Mining Institute of Spain (Ministry of Science and Innovation). Spanish Inventory of Places of Geological Interest (IELIG). HTML, JSON, KMZ, XML.

- National Statistics Institute (Ministry of Economic Affairs and Digital Transformation). Rural Tourism Accommodation Price Index (RTAPI): national general index and by tariffs. CSV, HTML, JSON, PC-Axis.

- National Statistics Institute (Ministry of Economic Affairs and Digital Transformation). Holiday Dwellings Price Index (HDPI): national general index and by tariffs. CSV, HTML, JSON, PC-Axis.

- National Statistics Institute (Ministry of Economic Affairs and Digital Transformation). Tourist Campsite Price Index (TCPI): national general index and by tariffs. CSV, HTML, JSON, PC-Axis.

At the level of the Autonomous Regions

- Regional Government of Andalusia. Andalusia Tourism Situation Survey. CSV, HTML

- Autonomous Community of the Basque Country. Tourist destinations in the Basque Country: towns, counties, routes, walks and experiences. RSS, API, XLSX, GeoJSON, XML, JSON, KML.

- Autonomous Community of Navarre. Signposting of the Camino de Santiago. CSV, HTML, JSON, ODS, TSV, XLSX, XML.

- Autonomous Community of the Canary Islands. Active Tourism Activities registered in the General Tourism Register. XLS, CSV.

- Autonomous Community of Navarre. Ornithological tourism. CSV, HTML, JSON, ODS, TSV, XLSX, XML.

- Autonomous Community of Aragon. Footpaths of Aragon. XML, JSON, CSV, XLS.

- Canary Islands Statistics Institute (ISTAC). Directory of Collective Tourist Accommodation (ALOJATUR) of the Canary Islands. JSON, XML, ZIP, CSV.

At the local level

- Valencia City Council. Tourist monuments. CSV, GML, JSON, KML, KMZ, OCTET-STREAM, WFS, WMS.

- Lorca City Council. Itineraries of tourist routes in the city centre. KMZ.

- Almendralejo Town Hall. Restaurants and bars of Almendralejo. XML, TSV, CSV, JSON, XLSX.

- Madrid City Council. Tourist offices of Madrid. HTML, RDF-XML, RSS, XML, CSV, JSON.

- Vigo City Council. Urban Tourism. CSV, JSON, KML, ZIP, XLS.

Some examples of re-use of tourism-related data

As we indicated at the beginning of this article, the opening up of data by public administrations facilitates the creation of applications and platforms that, by reusing this information, offer a quality service to citizens, improving the experience of travellers, for example, by providing updated information of interest. This is the case of Playas de Mallorca, which informs its users about the state of the island's beaches in real time, or Castilla y León Gurú, a tourist assistant for Telegram, with information about restaurants, monuments, tourist offices, etc. We can also find applications that make saving money easier (Geogasolineras) or that help people with disabilities to get around the destination (Ruta Accesible - How to get there in a wheelchair).

Public administrations can also take advantage of this information to get to know tourists better. For example, Madrid en Bici, thanks to the data provided by the city's portal, is able to draw up an X-ray of the real use of bicycles in the capital. This makes it possible to make decisions related to this service.

In our impact section, in addition to applications, you can also find numerous companies related to the tourism sector that use public data to offer and improve their services. This is the case of Smartvel or Bloowatch.

Do you know of any company that uses tourism data or an application based on it? Then don't hesitate to leave us a comment with all the information or send us an email to contacto@datos.gob.es. We will be happy to read it!

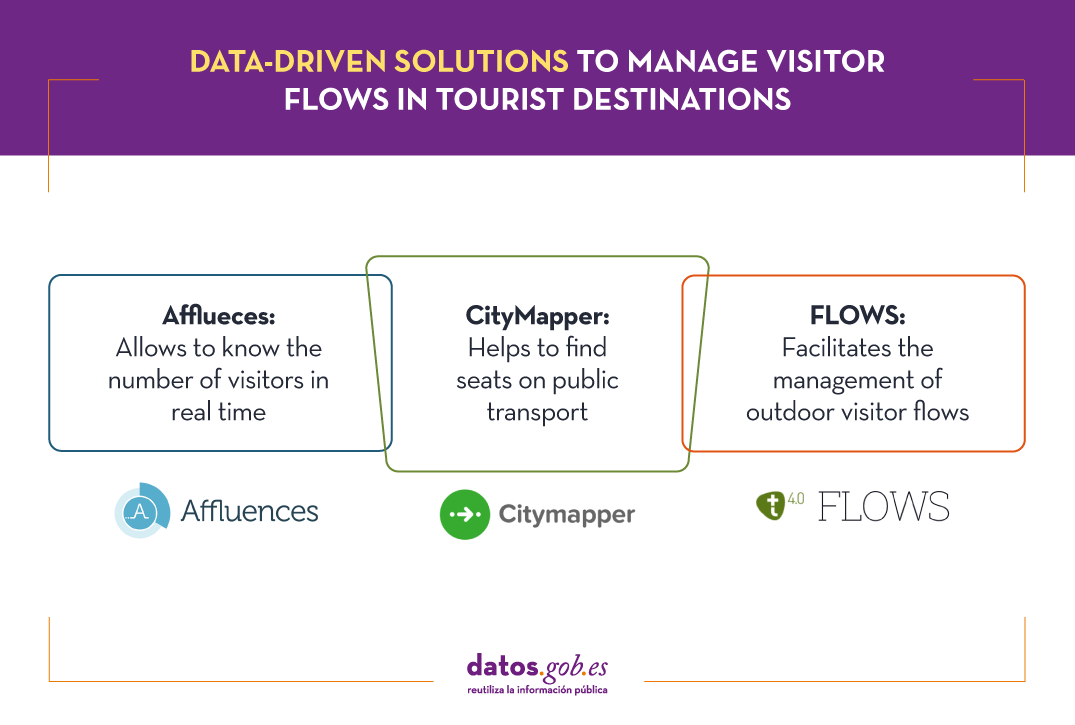

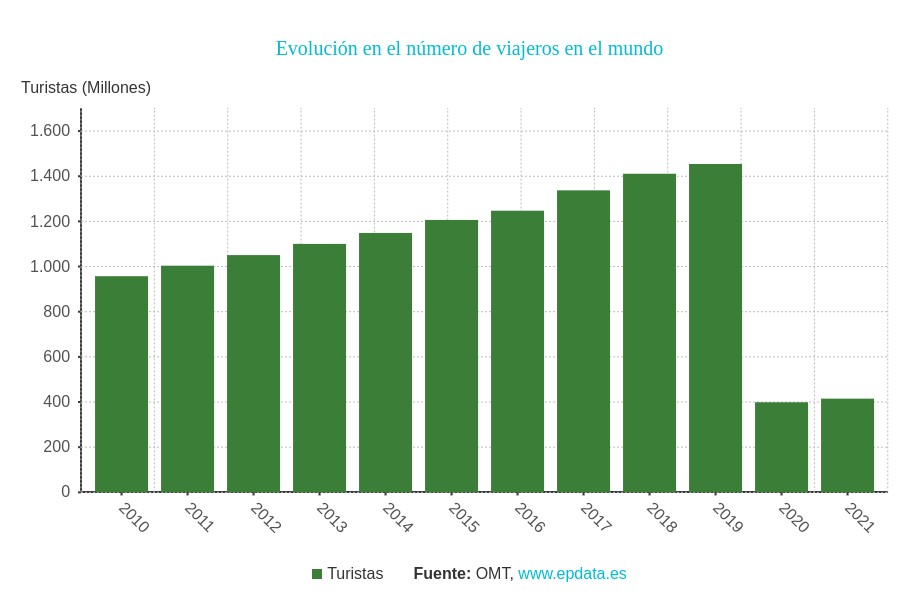

Today's tourism industry faces a major challenge in managing the concentration of people visiting both open and enclosed spaces. The issue was already pressing in 2019, when, according to the World Tourism Organisation, the number of travellers worldwide exceeded 1.4 billion. The aim was to minimise the negative impact of mass tourism on the environment, local communities and the tourist attractions themselves. A second aim was to safeguard the quality of the experience for visitors, who prefer to schedule their visits for times when overall occupancy of the area they plan to visit is lower.

The restrictions associated with the pandemic drastically reduced visitor numbers, which in 2020 and 2021 were less than a third of those recorded in 2019. At the same time, they made managing visitor flows far more important, albeit for public health reasons.

We are now in an intermediate situation: restrictions seem to be in their final phase and visitor numbers are growing steadily. This makes cities more interested than ever in data-driven solutions that promote tourism while keeping visitor flows under control.

Know the number of visitors in real time with Affluences

Among the occupancy management applications that help tourists avoid queues and crowds indoors is Affluences, a French-born solution that allows tourists to monitor the occupancy of museums, libraries, swimming pools and shops in real time.

The solution uses people-counting systems to measure the influx of visitors to enclosed spaces. It then analyses the data and communicates it to users as figures such as waiting time and occupancy rate.
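To make the two figures mentioned above more concrete, here is a generic sketch; it is not Affluences' actual method, which the source does not describe. It derives occupancy from cumulative entry and exit counts and estimates queue waiting time with Little's law (mean wait = people waiting ÷ admission rate). Little's law only holds if the queue is roughly in a steady state. The function names, capacity and counts are hypothetical example values.

```python
# Generic occupancy metrics from people-counting data (illustrative only,
# not Affluences' actual implementation).

def current_occupancy(entries: int, exits: int) -> int:
    """People inside = cumulative entries minus cumulative exits, floored at 0
    to absorb small counting errors."""
    return max(entries - exits, 0)


def occupancy_rate(people_inside: int, capacity: int) -> float:
    """Percentage of the venue's capacity currently in use."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    return 100.0 * people_inside / capacity


def estimated_wait_minutes(queue_length: int, admissions_per_minute: float) -> float:
    """Little's law applied to the queue: mean wait W = L / lambda, where L is the
    number of people waiting and lambda the rate at which they are admitted."""
    if admissions_per_minute <= 0:
        return float("inf")  # nobody is being admitted, so the wait is unbounded
    return queue_length / admissions_per_minute


if __name__ == "__main__":
    inside = current_occupancy(entries=540, exits=390)  # made-up counts: 150 inside
    print(f"Occupancy: {occupancy_rate(inside, capacity=200):.0f}%")  # Occupancy: 75%
    print(f"Estimated wait: {estimated_wait_minutes(24, 4.0):.1f} min")  # Estimated wait: 6.0 min
```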

In some cases, Affluences installs sensors in the institutions or uses existing sensors to measure in real time the number of people present in the institution. In other cases, it uses the real-time occupancy data provided by the facilities as open data, as in the case of the swimming pools of the city of Strasbourg.

The real-time measurements are enriched with other sources of information, such as attendance history and opening calendars, and processed by predictive analytics algorithms every 5 minutes. This approach gives users much more accurate information than they can get from, for example, Google Maps, whose estimates rely on the analysis of location data captured from mobile phones.
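The source does not describe Affluences' predictive algorithms. As a minimal sketch of what "enriching" a live count can mean, assuming a simple linear blend, the function below mixes the latest reading with the historical average for the same weekday and 5-minute slot. It also uses the opening calendar to return zero when the venue is closed. The weight `alpha`, the slot keys and the history layout are illustrative assumptions.

```python
from statistics import mean


def forecast_occupancy(current, history, weekday, slot, is_open, alpha=0.6):
    """Short-term occupancy estimate blending live and historical data.

    current  -- latest real-time people count
    history  -- dict mapping (weekday, "HH:MM") to past counts for that slot
    weekday  -- 0 = Monday ... 6 = Sunday
    slot     -- 5-minute slot label, e.g. "10:05"
    is_open  -- taken from the venue's opening calendar
    alpha    -- hypothetical weight given to the live reading (0..1)
    """
    if not is_open:
        return 0.0  # closed according to the calendar: expect no visitors
    past = history.get((weekday, slot))
    if not past:
        return float(current)  # no history for this slot: trust the live count
    return alpha * current + (1 - alpha) * mean(past)


if __name__ == "__main__":
    history = {(2, "10:05"): [100, 120, 110]}  # made-up past readings
    # Intended to be called once per 5-minute refresh cycle.
    print(forecast_occupancy(130, history, weekday=2, slot="10:05", is_open=True))
```

A fixed weight keeps the example readable. A real system would more likely learn how much to trust live versus historical data for each venue.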

Find a seat on public transport with CityMapper

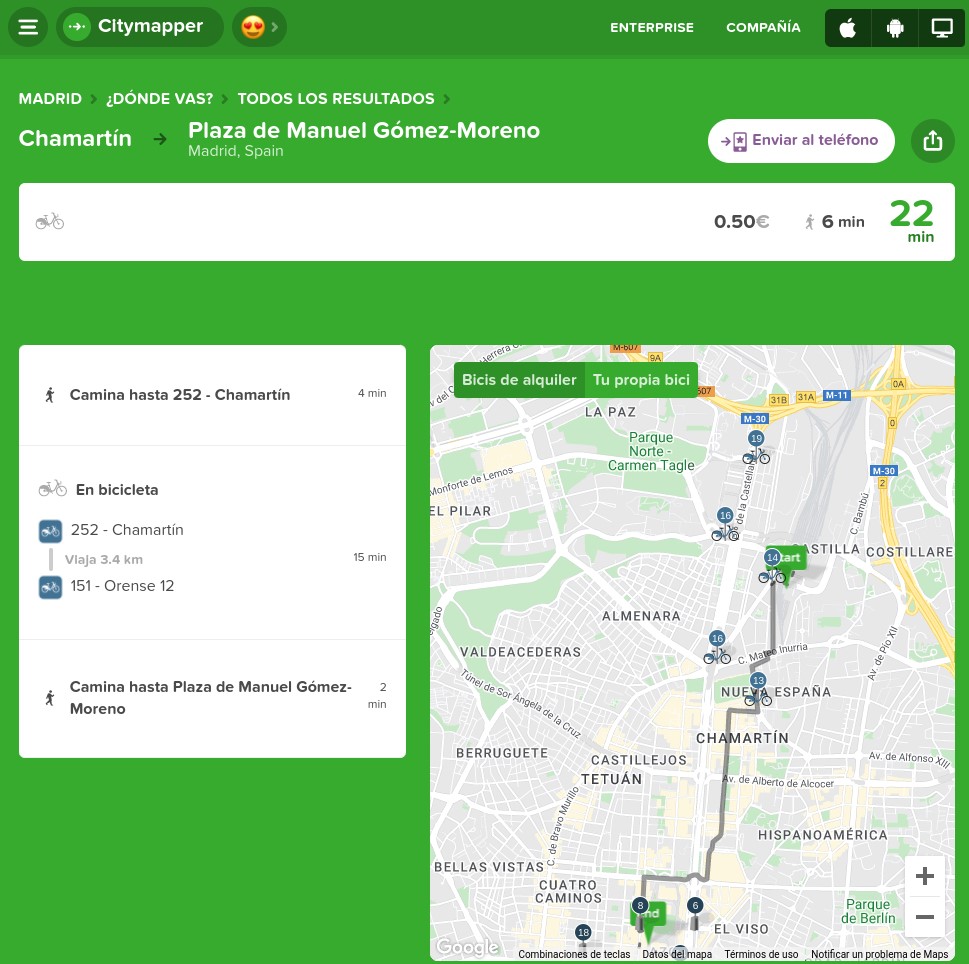

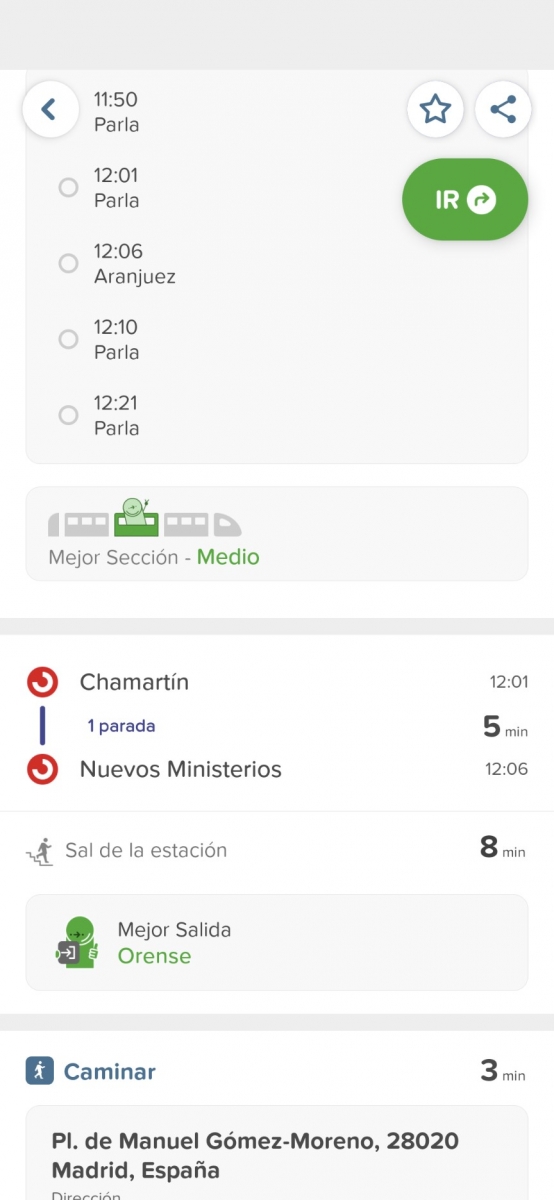

CityMapper is probably the best-known urban mobility app in major European cities and one of the most popular worldwide. It was founded in London, but is already present in 71 European cities in 31 countries and aggregates 368 different modes of transport. These cities include, of course, Madrid and Barcelona, but also other large Spanish cities such as Valencia, Seville, Zaragoza and Malaga.

CityMapper allows you to calculate multimodal routes by combining a large number of modes of transport: metros and buses together with bicycles, scooters and even mopeds where available. If we choose, for example, the bicycle as a means of transport, the application provides the user with granular data such as how many bicycles are available at the pick-up point and how many empty parking spaces are available at the destination.
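Multimodal route planning can be pictured as a shortest-path search over a graph whose edges are labelled with a transport mode. The sketch below runs Dijkstra's algorithm on such a graph, with travel times in minutes. The stops, modes and times are invented for illustration, and this is not a description of how CityMapper's planner actually works.

```python
import heapq

# Hypothetical multimodal network: stop -> [(next_stop, mode, minutes), ...]
network = {
    "Home": [("Bike dock A", "walk", 4), ("Bus stop", "walk", 2)],
    "Bike dock A": [("Bike dock B", "bicycle", 12)],
    "Bus stop": [("Metro station", "bus", 10)],
    "Metro station": [("Office", "metro", 9)],
    "Bike dock B": [("Office", "walk", 3)],
}


def fastest_route(graph, origin, destination):
    """Dijkstra's algorithm over mode-labelled edges.

    Returns (total_minutes, legs) where legs is a list of (from, to, mode),
    or (inf, []) if the destination cannot be reached."""
    best = {origin: 0.0}
    previous = {}  # stop -> (stop we came from, mode used on that leg)
    queue = [(0.0, origin)]
    while queue:
        minutes, stop = heapq.heappop(queue)
        if stop == destination:
            break
        if minutes > best[stop]:
            continue  # outdated queue entry; a faster way here was already found
        for next_stop, mode, cost in graph.get(stop, []):
            candidate = minutes + cost
            if candidate < best.get(next_stop, float("inf")):
                best[next_stop] = candidate
                previous[next_stop] = (stop, mode)
                heapq.heappush(queue, (candidate, next_stop))
    if destination not in best:
        return float("inf"), []
    legs = []
    stop = destination
    while stop != origin:  # walk back from the destination to rebuild the route
        prior, mode = previous[stop]
        legs.append((prior, stop, mode))
        stop = prior
    return best[destination], legs[::-1]


if __name__ == "__main__":
    total, legs = fastest_route(network, "Home", "Office")
    print(total, legs)
```

With these made-up times, walking to a bike dock and cycling (19 minutes) beats bus plus metro (21 minutes). Real-time inputs, such as bike availability at the pick-up point, could be modelled by removing or re-weighting edges before each search.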

But what sets CityMapper apart, and probably what has contributed most to its widespread adoption, is the clever way it combines open and private data with artificial intelligence. This combination gives users highly accurate estimates of waiting times, journey times and even traffic disruptions.

For example, CityMapper can provide information about the occupancy of some of the modes of transport it suggests, so that the user can, for instance, choose the least congested carriage on the train they are waiting for. The application also suggests where the user should stand to optimise the journey, specifying which entrances and exits to use.

Outdoor visitor flows with FLOWS

The management of outdoor visitor flows introduces new elements of difficulty both in measuring occupancy and in establishing stable predictive models that are useful for visitors and for those responsible for planning security measures. This requires new data sources and special attention to the privacy of the users whose data is analysed.

FLOWS is a project that helps cities and tourism establishments prepare for peak tourism periods and redirect visitors to less congested areas. To achieve this ambitious goal, it combines anonymised data from various sources:

- traffic control sensors
- open Wi-Fi networks
- mobile phone operators
- tourist records and itinerary and reservation management systems
- water and energy consumption
- waste collection
- social media posts
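The source does not say which anonymisation technique FLOWS uses. One common approach for device-level data such as Wi-Fi records is to replace each identifier with a keyed hash (HMAC) before any counting. Analysts can then count distinct devices per zone without storing raw identifiers. Strictly speaking this is pseudonymisation, and discarding or rotating the key regularly limits re-identification. The daily key, zone names and sample identifiers are assumptions for illustration.

```python
import hashlib
import hmac
import secrets
from collections import defaultdict


def pseudonymise(device_id: str, key: bytes) -> str:
    """Replace a raw identifier (e.g. a MAC address) with an HMAC-SHA256 digest.
    Without the key, the digest cannot be linked back to the device."""
    normalised = device_id.strip().lower().encode("utf-8")
    return hmac.new(key, normalised, hashlib.sha256).hexdigest()


def unique_devices_per_zone(records, key):
    """records: iterable of (zone, device_id) pairs.
    Returns {zone: number of distinct devices}, keeping only hashed IDs."""
    seen = defaultdict(set)
    for zone, device_id in records:
        seen[zone].add(pseudonymise(device_id, key))
    return {zone: len(ids) for zone, ids in seen.items()}


if __name__ == "__main__":
    daily_key = secrets.token_bytes(32)  # hypothetical: regenerated and discarded daily
    sample = [
        ("Old Town", "AA:BB:CC:00:11:22"),
        ("Old Town", "aa:bb:cc:00:11:22"),  # same device, different case
        ("Old Town", "AA:BB:CC:33:44:55"),
        ("Harbour", "AA:BB:CC:00:11:22"),
    ]
    print(unique_devices_per_zone(sample, daily_key))  # {'Old Town': 2, 'Harbour': 1}
```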

Through a simple user interface, it will allow advanced analysis and forecasting of tourist movements. It will show traffic flows, congestion, seasonal deviations, entries to and exits from the destination, and movement within the destination. Users will be able to display the analyses for a selected time interval and obtain predictions based on historical data that take seasonal factors into account.
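A very simple baseline for "predictions based on historical data considering seasonal factors" is a weekly seasonal-average forecast. The prediction for a day is the mean of the counts observed on the same weekday in previous weeks. This is a generic textbook baseline, not the model used by FLOWS. The weekly season, the four-week window and the sample counts are assumptions.

```python
from datetime import date, timedelta
from statistics import mean


def seasonal_average_forecast(history, target, weeks=4):
    """Weekly seasonal-average baseline.

    history -- dict mapping datetime.date to the visitor count for that day
    target  -- the date to forecast
    weeks   -- how many previous same-weekday observations to average
    """
    same_weekday = [target - timedelta(weeks=k) for k in range(1, weeks + 1)]
    observed = [history[d] for d in same_weekday if d in history]
    if not observed:
        raise ValueError("no history for this weekday")
    return mean(observed)


if __name__ == "__main__":
    history = {  # made-up daily counts
        date(2022, 7, 30): 1100,
        date(2022, 8, 6): 1300,
        date(2022, 8, 13): 1400,
        date(2022, 8, 20): 1200,
        date(2022, 8, 26): 900,  # a different weekday, so it is ignored
    }
    print(seasonal_average_forecast(history, date(2022, 8, 27)))
```

Baselines like this are mainly useful as a yardstick: a more elaborate model that folds in events, weather or bookings should beat them before it is trusted.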

These are just a few of the many initiatives working to address a major challenge facing tourism during the green and digital transition: managing visitor flows in both indoor and outdoor spaces. The coming years will undoubtedly bring breakthroughs that change the way we experience tourism. They should make it more enjoyable while minimising our impact on the environment and local communities.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization.

The contents and points of view reflected in this publication are the sole responsibility of its author.

1. Introduction

Visualizations are graphical representations of data that convey the underlying information simply and effectively. Their potential is very broad, from basic representations such as line, bar or pie charts to visualizations assembled into control panels or interactive dashboards. Visualizations play a fundamental role in drawing conclusions from visual information. They also help detect patterns, trends and outliers and project predictions, among many other functions.

Before building an effective visualization, we need to pre-process the data. Special attention must be paid to how the data are obtained and to validating their content, so that they are in an appropriate, consistent format for processing and contain no errors. This preliminary treatment is essential for any data analysis task and for producing an effective visualization.

In the "Visualizations step-by-step" section, we periodically present practical exercises on visualizing open data available in the datos.gob.es catalog or other similar catalogs. In them, we describe in simple terms the steps needed to obtain the data and perform the relevant transformations and analyses. Finally, we create interactive visualizations and extract insights from them, which we summarize as conclusions.

For this practical exercise, we have written simple, well-documented code using freely available tools. All the material generated is available for reuse in the GitHub Data Lab repository.

Access the Data Lab repository on GitHub.

Run the data pre-processing code on Google Colab.

2. Objectives

The main objective of this post is to show how to build an interactive visualization based on open data. For this practical exercise, we have chosen datasets containing relevant information about students at Spanish universities over the last few years. From these data, we will look at the characteristics of these students and at which studies are most in demand.

3. Resources

3.1. Datasets

For this practical case, we have selected datasets published by the Ministry of Universities. They contain time series with different breakdowns that make it easier to analyze the characteristics of students at Spanish universities. These data are available in the datos.gob.es catalog and in the Ministry of Universities' own data catalog. The specific datasets we will use are:

- Enrolled by type of university modality, area of nationality and field of science, and enrolled by type and modality of university, gender, age group and field of science for PhD students by autonomous community, from the academic year 2015-2016 to 2020-2021.

- Enrolled by type of university modality, area of nationality and field of science, and enrolled by type and modality of university, gender, age group and field of science for master's students by autonomous community, from the academic year 2015-2016 to 2020-2021.

- Enrolled by type of university modality, area of nationality and field of science, and enrolled by type and modality of university, gender, age group and field of study for bachelor's students by autonomous community, from the academic year 2015-2016 to 2020-2021.

- Enrolments in each of the degrees taught by Spanish universities, published in the Statistics section of the Ministry of Universities' official website. This dataset covers the academic years 2015-2016 to 2020-2021, although the data for the latter year are provisional.

3.2. Tools

The data were pre-processed with the R programming language in the Google Colab cloud service, which can run Jupyter Notebooks.

Google Colaboratory, also called Google Colab, is a free cloud service from Google Research. It lets you write, run and share code in Python or R from your browser, without installing or configuring any tools.

For the creation of the interactive visualization the Datawrapper tool has been used.

Datawrapper is an online tool that allows you to make graphs, maps or tables that can be embedded online or exported as PNG, PDF or SVG. This tool is very simple to use and allows multiple customization options.

If you want to learn more about tools that can help you process and visualize data, see the report "Data processing and visualization tools".

4. Data pre-processing

The first step of the process is an exploratory data analysis (EDA). It serves to interpret the initial data properly and to detect anomalies, missing data or errors that could affect the quality of subsequent processes and results. It is also where the necessary variables are transformed and prepared. Pre-processing is essential to ensure that any analyses or visualizations subsequently created from the data are reliable and consistent. If you want to learn more about this process, see the Practical Guide to Introduction to Exploratory Data Analysis.
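The notebook for this exercise performs its checks in R. Purely as an illustration in Python with the standard library, the sketch below profiles a small CSV extract. It counts missing values per column and flags count cells that are non-numeric or negative, which are likely errors. The column names, the semicolon delimiter and the sample rows are hypothetical, not the Ministry's actual files.

```python
import csv
import io

MISSING = {"", "NA", "N/A", "-"}

# Hypothetical extract with made-up figures.
SAMPLE = """academic_year;field_of_science;women;men
2019-2020;Health Sciences;1520;640
2020-2021;Health Sciences;NA;655
2020-2021;Engineering and Architecture;-12;2310
"""


def profile(rows, numeric_columns):
    """Count missing values per column and list invalid numeric cells.

    rows: list of dicts as produced by csv.DictReader.
    Returns (missing_counts, invalid_cells), where invalid_cells holds
    (row_index, column, raw_value) for non-numeric or negative counts."""
    missing_counts = {}
    invalid_cells = []
    for index, row in enumerate(rows):
        for column, value in row.items():
            if value is None or value.strip() in MISSING:
                missing_counts[column] = missing_counts.get(column, 0) + 1
        for column in numeric_columns:
            value = row.get(column)
            if value is None or value.strip() in MISSING:
                continue  # already counted as missing
            try:
                if float(value) < 0:
                    invalid_cells.append((index, column, value))
            except ValueError:
                invalid_cells.append((index, column, value))
    return missing_counts, invalid_cells


if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(SAMPLE), delimiter=";"))
    missing, invalid = profile(rows, ["women", "men"])
    print(missing)  # {'women': 1}
    print(invalid)  # [(2, 'women', '-12')]
```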

The steps followed in this pre-processing phase are as follows:

- Installing and loading the libraries

- Loading the source data files

- Creating working tables

- Renaming some variables

- Grouping several variables into a single variable with different factors

- Transforming variables

- Detecting and handling missing data (NAs)

- Creating new calculated variables

- Summarizing the transformed tables

- Preparing the data for visual representation

- Saving the pre-processed data tables to files
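Several of the steps listed above can be sketched in a few lines: renaming variables, grouping several columns into a single variable with different factors, handling missing data, creating a calculated variable and saving the result. The original notebook does this in R. The Python below is only an illustrative equivalent using the standard library. The Spanish-style source headers, the rename map, the sample figures and the choice to drop missing counts rather than impute them are all hypothetical.

```python
import csv
import io

# Hypothetical raw headers mapped to clearer English names.
RENAME = {"curso": "academic_year", "rama": "field_of_science",
          "mujeres": "women", "hombres": "men"}
MISSING = {"", "NA"}


def to_long(rows):
    """Rename columns and fold the 'women'/'men' columns into a single
    'gender' factor with an 'enrolled' count (wide -> long format).
    Cells with missing counts are dropped; imputing them is an alternative."""
    long_rows = []
    for raw in rows:
        row = {RENAME.get(key, key): value for key, value in raw.items()}
        for gender in ("women", "men"):
            value = (row.get(gender) or "").strip()
            if value in MISSING:
                continue
            long_rows.append({
                "academic_year": row["academic_year"],
                "field_of_science": row["field_of_science"],
                "gender": gender,
                "enrolled": int(value),
            })
    return long_rows


def add_share(long_rows):
    """New calculated variable: share (%) of the academic year's total enrolment."""
    totals = {}
    for r in long_rows:
        totals[r["academic_year"]] = totals.get(r["academic_year"], 0) + r["enrolled"]
    for r in long_rows:
        r["share_pct"] = round(100 * r["enrolled"] / totals[r["academic_year"]], 1)
    return long_rows


def write_csv(long_rows, handle):
    """Store the pre-processed table as CSV in an open text handle."""
    if not long_rows:
        return
    writer = csv.DictWriter(handle, fieldnames=list(long_rows[0]))
    writer.writeheader()
    writer.writerows(long_rows)


# Made-up figures for illustration only.
RAW = """curso;rama;mujeres;hombres
2020-2021;Health Sciences;300;100
2020-2021;Engineering and Architecture;50;NA
"""

if __name__ == "__main__":
    rows = csv.DictReader(io.StringIO(RAW), delimiter=";")
    table = add_share(to_long(rows))
    out = io.StringIO()
    write_csv(table, out)
    print(out.getvalue())
```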

You can reproduce this analysis, since the source code is available in this GitHub repository. The code is provided as a Jupyter Notebook that, once loaded into the development environment, can easily be run or modified. Given the informative nature of this post, the code aims to be easy for non-specialist readers to understand rather than as efficient as possible. You will probably think of many ways to optimize it to achieve a similar result. We encourage you to do so!

You can follow the steps and run the source code on this notebook in Google Colab.

5. Data visualizations

Once the data have been pre-processed, we move on to the visualization. To create this interactive visualization, we use the free version of the Datawrapper tool. It is a very simple tool, particularly well suited to data journalism, and we encourage you to use it. Because it is an online tool, no software needs to be installed to interact with or generate a visualization, but the data table we provide must be properly structured.
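For charts such as line or column charts, Datawrapper generally works with a wide table, which can be pasted in or uploaded as CSV. The first column holds the categories and each further column is one data series. As a sketch only, the function below pivots long-format records into that shape, with one row per academic year and one column per level and gender combination. It assumes records like those produced during pre-processing; the field names and figures are hypothetical.

```python
import csv
import io


def pivot_for_chart(records, row_key, column_keys, value_key):
    """Pivot long records into a wide CSV string: one row per `row_key` value
    and one column per combination of `column_keys` (joined with a space)."""
    table = {}
    columns = []
    for rec in records:
        column = " ".join(str(rec[k]) for k in column_keys)
        if column not in columns:
            columns.append(column)  # keep first-seen order for the series
        table.setdefault(rec[row_key], {})[column] = rec[value_key]
    out = io.StringIO()
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow([row_key] + columns)
    for row_value in sorted(table):
        writer.writerow([row_value] + [table[row_value].get(c, "") for c in columns])
    return out.getvalue()


records = [  # made-up numbers for illustration
    {"academic_year": "2019-2020", "level": "Bachelor", "gender": "Women", "enrolled": 10},
    {"academic_year": "2019-2020", "level": "Bachelor", "gender": "Men", "enrolled": 8},
    {"academic_year": "2020-2021", "level": "Bachelor", "gender": "Women", "enrolled": 11},
    {"academic_year": "2020-2021", "level": "Bachelor", "gender": "Men", "enrolled": 9},
]

if __name__ == "__main__":
    print(pivot_for_chart(records, "academic_year", ["level", "gender"], "enrolled"))
```

Missing combinations are left as empty cells rather than zeros, so a chart shows a gap instead of a misleading value.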

To design the set of visual representations of the data, the first step is to consider the questions we intend to answer. We propose the following:

- How have men and women been distributed among bachelor's, master's and PhD students over the last few years?

Focusing on the most recent academic year, 2020-2021:

- Which fields of science are most in demand at Spanish universities? And which degrees?

- Which universities have the highest number of enrolments, and where are they located?

- What age ranges do bachelor's students fall into?

- What is the nationality of bachelor's students at Spanish universities?

Let's find out by looking at the data!

5.1. Distribution of enrolments in Spanish universities from the 2015-2016 academic year to 2020-2021, disaggregated by gender and academic level