Almost half of European adults lack basic digital skills. According to the latest State of the Digital Decade report, in 2023, only 55.6% of citizens reported having such skills. This percentage rises to 66.2% in the case of Spain, ahead of the European average.

Having basic digital skills is essential in today's society because it enables access to a wider range of information and services, as well as effective communication in online environments, facilitating greater participation in civic and social activities. It is also a great competitive advantage in the world of work.

In Europe, more than 90% of professional roles require a basic level of digital skills. Technological knowledge has long since ceased to be a requirement only for technical professions and is now spreading to all sectors, from business to transport and even agriculture. In this respect, more than 70% of companies said that the lack of staff with the right digital skills is a barrier to investment.

A key objective of the Digital Decade is therefore to ensure that at least 80% of people aged 16-74 have at least basic digital skills by 2030.

Basic technology skills that everyone should have

When we talk about basic technological capabilities, we refer, according to the DigComp framework, to a number of areas, including:

- Information and data literacy: includes locating, retrieving, managing and organising data, judging the relevance of the source and its content.

- Communication and collaboration: involves interacting, communicating and collaborating through digital technologies taking into account cultural and generational diversity. It also includes managing one's own digital presence, identity and reputation.

- Digital content creation: this would be defined as the enhancement and integration of information and content to generate new messages, respecting copyrights and licences. It also involves knowing how to give understandable instructions to a computer system.

- Security: this covers the protection of devices, content, personal data and privacy in digital environments, as well as the protection of physical and mental health.

- Problem solving: this involves identifying and solving needs and problems in digital environments. It also focuses on the use of digital tools to innovate processes and products, keeping up with digital evolution.

Which data-related jobs are most in demand?

Now that the core competences are clear, it is worth noting that, in a world where digitalisation is becoming increasingly important, it is not surprising that the demand for advanced technological and data-related skills is also growing.

According to data from the LinkedIn employment platform, among the 25 fastest growing professions in Spain in 2024 are security analysts (position 1), software development analysts (2), data engineers (11) and artificial intelligence engineers (25). Similar data is offered by Fundación Telefónica's Employment Map, which also highlights four of the most in-demand profiles related to data:

- Data analyst: responsible for the management and exploitation of information, they are dedicated to the collection, analysis and exploitation of data, often through the creation of dashboards and reports.

- Database designer or database administrator: focused on designing, implementing and managing databases, as well as maintaining their security by implementing backup and recovery procedures in case of failures.

- Data engineer: responsible for the design and implementation of data architectures and infrastructures to capture, store, process and access data, optimising its performance and guaranteeing its security.

- Data scientist: focused on data analysis and predictive modelling, optimisation of algorithms and communication of results.

These are all jobs with good salaries and future prospects, but where there is still a large gap between men and women. According to European data, only 1 in 6 ICT specialists and 1 in 3 science, technology, engineering and mathematics (STEM) graduates are women.

To work in data-related professions, you need, among other things, knowledge of popular programming languages such as Python, R or SQL, and of multiple data processing and visualisation tools, such as those detailed in these articles:

- Debugging and data conversion tools

- Data analysis tools

- Data visualisation tools

- Data visualisation libraries and APIs

- Geospatial visualisation tools

- Network analysis tools

The range of training courses on all these skills is growing all the time.

Future prospects

Nearly a quarter of all jobs (23%) will change in the next five years, according to the World Economic Forum's Future of Jobs 2023 Report. Technological advances will create new jobs, transform existing jobs and destroy those that become obsolete. Technical knowledge, related to areas such as artificial intelligence or Big Data, and the development of cognitive skills, such as analytical thinking, will provide great competitive advantages in the labour market of the future. In this context, policy initiatives to boost society's re-skilling, such as the European Digital Education Action Plan (2021-2027), will help to generate common frameworks and certificates in a constantly evolving world.

The technological revolution is here to stay and will continue to change our world. Therefore, those who start acquiring new skills earlier will be better positioned in the future employment landscape.

Citizen science is consolidating itself as one of the most relevant sources of reference in contemporary research. This is recognised by the Centro Superior de Investigaciones Científicas (CSIC), which defines citizen science as a methodology and a means for the promotion of scientific culture in which science and citizen participation strategies converge.

We talked some time ago about the importance of citizen science in society. Today, citizen science projects have not only increased in number, diversity and complexity, but have also driven a significant process of reflection on how citizens can actively contribute to the generation of data and knowledge.

To reach this point, programmes such as Horizon 2020, which explicitly recognised citizen participation in science, have played a key role. More specifically, the chapter "Science with and for society" gave an important boost to this type of initiative in Europe and also in Spain. In fact, as a result of Spanish participation in this programme, as well as in parallel initiatives, Spanish projects have been increasing in size and in connections with international initiatives.

This growing interest in citizen science also translates into concrete policies. An example of this is the current Spanish Strategy for Science, Technology and Innovation (EECTI), for the period 2021-2027, which includes "the social and economic responsibility of R&D&I through the incorporation of citizen science".

In short, as we commented some time ago, citizen science initiatives seek to encourage a more democratic science that responds to the interests of all citizens and generates information that can be reused for the benefit of society. Here are some examples of citizen science projects that help collect data whose reuse can have a positive impact on society:

AtmOOs Academic Project: Education and citizen science on air pollution and mobility.

In this programme, Thigis developed a citizen science pilot on mobility and the environment with pupils from a school in Barcelona's Eixample district. This project, which is already replicable in other schools, consists of collecting data on student mobility patterns in order to analyse issues related to sustainability.

On the AtmOOs Academic website you can view the results of all the editions that have been carried out annually since the 2017-2018 academic year, which show information on the vehicles used by students to go to class or the emissions generated according to school stage.

WildINTEL: Research project on wildlife monitoring in Huelva

The University of Huelva and the State Agency for Scientific Research (CSIC) are collaborating to build a wildlife monitoring system to obtain essential biodiversity variables. To do this, remote data capture photo-trapping cameras and artificial intelligence are used.

The wildINTEL project focuses on the development of a monitoring system that is scalable and replicable, thus facilitating the efficient collection and management of biodiversity data. This system will incorporate innovative technologies to provide accurate and objective demographic estimates of populations and communities.

This project, which started in December 2023 and will continue until December 2026, is expected to provide tools and products to improve the management of biodiversity not only in the province of Huelva but throughout Europe.

IncluScience-Me: Citizen science in the classroom to promote scientific culture and biodiversity conservation.

This citizen science project combining education and biodiversity arises from the need to address scientific research in schools. To do this, students take on the role of a researcher to tackle a real challenge: to track and identify the mammals that live in their immediate environment to help update a distribution map and, therefore, their conservation.

IncluScience-Me was born at the University of Cordoba and, specifically, in the Research Group on Education and Biodiversity Management (Gesbio), and has been made possible thanks to the participation of the University of Castilla-La Mancha and the Research Institute for Hunting Resources of Ciudad Real (IREC), with the collaboration of the Spanish Foundation for Science and Technology - Ministry of Science, Innovation and Universities.

The Memory of the Herd: Documentary corpus of pastoral life.

This citizen science project, which has been active since July 2023, aims to gather knowledge and experiences from shepherds and retired shepherds about herd management and livestock farming.

The entity responsible for the programme is the Institut Català de Paleoecologia Humana i Evolució Social, although the Museu Etnogràfic de Ripoll, Institució Milà i Fontanals-CSIC, Universitat Autònoma de Barcelona and Universitat Rovira i Virgili also collaborate.

The programme helps to interpret the archaeological record and contributes to preserving knowledge of pastoral practice. In addition, it values the experience and knowledge of older people, work that helps to end the negative connotation of "old age" in a society that gives priority to "youth", so that older people are no longer considered passive subjects but active social subjects.

Plastic Pirates Spain: Study of plastic pollution in European rivers.

This citizen science project, which has been carried out over the last year with young people between 12 and 18 years of age in the communities of Castilla y León and Catalonia, aims to contribute to generating scientific evidence and environmental awareness about plastic waste in rivers.

To this end, groups of young people from different educational centres, associations and youth groups have taken part in sampling campaigns to collect data on the presence of waste and rubbish, mainly plastics and microplastics, in riverbanks and water.

In Spain, this project has been coordinated by the BETA Technology Centre of the University of Vic - Central University of Catalonia together with the University of Burgos and the Oxygen Foundation. You can access more information on their website.

These are just some examples of citizen science projects. You can find out more at the Observatory of Citizen Science in Spain, an initiative that brings together a wide range of educational resources, reports and other interesting information on citizen science and its impact in Spain. Do you know of any other projects? Send them to us at dinamizacion@datos.gob.es and we can publicise them through our dissemination channels.

In today's digital age, data sharing and open data have emerged as key pillars for innovation, transparency and economic development. A number of companies and organisations around the world are adopting these approaches to foster open access to information and enhance data-driven decision making. Below, we explore some international and national examples of how these practices are being implemented.

Global success stories

One of the global leaders in data sharing is LinkedIn with its Data for Impact programme. This programme provides governments and organisations with access to aggregated and anonymised economic data, based on LinkedIn's Economic Graph, which represents global professional activity. It is important to clarify that the data may only be used for research and development purposes. Access must be requested via email, attaching a proposal for evaluation, and priority is given to proposals from governments and multilateral organisations. These data have been used by organisations such as the World Bank and the European Central Bank to inform key economic policies and decisions. LinkedIn's focus on privacy and data quality ensures that these collaborations benefit both organisations and citizens, promoting inclusive, green and digitally aligned economic growth.

On the other hand, the Registry of Open Data on AWS (RODA) is an Amazon Web Services (AWS) managed repository that hosts public datasets. The datasets are not provided directly by AWS, but are maintained by government organisations, researchers, companies and individuals. At the time of writing this post, it lists more than 550 datasets published by different organisations, including the Allen Institute for Artificial Intelligence (AI2) and NASA itself. This platform makes it easy for users to leverage AWS cloud computing services for analytics.

In the field of data journalism, FiveThirtyEight, owned by ABC News, has taken a radical transparency approach by publicly sharing the data and code behind its articles and visualisations. These are accessible via GitHub in easily reusable formats such as CSV. This practice not only allows for independent verification of their work, but also encourages the creation of new stories and analysis by other researchers and journalists. FiveThirtyEight has become a role model for how open data can improve the quality and credibility of journalism.

Success stories in Spain

Spain is not lagging behind in terms of data sharing and open data initiatives by private companies. Several Spanish companies are leading initiatives that promote data accessibility and transparency in different sectors. Let us look at some examples.

Idealista, one of the most important real estate portals in the country, has published an open data set that includes detailed information on more than 180,000 homes in Madrid, Barcelona and Valencia. This dataset provides the geographical coordinates and sales prices of each property, together with its internal characteristics and official information from the Spanish cadastre. This dataset is available for access through GitHub as an R package and has become a great tool for real estate market analysis, allowing researchers and practitioners to develop automatic valuation models and conduct detailed studies on market segmentation. It should be noted that Idealista also reuses public data from organisations such as the land registry or the INE to offer data services that support decisions in the real estate market, such as contracting mortgages, market studies, portfolio valuation, etc.

For its part, BBVA, through its Foundation, offers access to an extensive statistical collection with databases that include tables, charts and dynamic graphs. These databases, which are free to download, cover topics such as productivity, competitiveness, human capital and inequality in Spain, among others. They also provide historical series on the Spanish economy, investments, cultural activities and public spending. These tools are designed to complement printed publications and provide an in-depth insight into the country's economic and social developments.

In addition, Esri Spain offers its Open Data Portal, which provides users with a wide variety of content that can be consulted, analysed and downloaded. This portal includes data managed by Esri Spain, together with a collection of other open data portals developed with Esri technology. This significantly expands the possibilities for researchers, developers and practitioners looking to leverage geospatial data in their projects. Datasets can be found in the categories of health, science and technology or economics, among others.

In the area of public companies, Spain also has outstanding examples of commitment to open data. Renfe, the main railway operator, and Red Eléctrica Española (REE), the entity responsible for the operation of the electricity system, have developed open data programmes that facilitate access to relevant information for citizens and for the development of applications and services that improve efficiency and sustainability. In the case of REE, it is worth highlighting the possibility of consuming the available data through REST APIs, which facilitate the integration of applications on data sets that receive continuous updates on the state of the electricity markets.

Conclusion

Data sharing and open data represent a crucial evolution in the way organisations manage and exploit information. From international tech giants such as LinkedIn and AWS to national innovators such as Idealista and BBVA, organisations are providing open access to data in order to drive significant change in decision making, policy development and the creation of new economic opportunities. In Spain, both private and public companies are showing a strong commitment to these practices, positioning the country as a leader in the adoption of open data and data sharing models that benefit society as a whole.

Content prepared by Juan Benavente, senior industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

Artificial intelligence (AI) is revolutionising the way we create and consume content. From automating repetitive tasks to personalising experiences, AI offers tools that are changing the landscape of marketing, communication and creativity.

These artificial intelligences need to be trained with data that are fit for purpose and not copyrighted. Open data is therefore emerging as a very useful tool for the future of AI.

The Govlab has published the report "A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI" to explore this issue in more detail. It analyses the emerging relationship between open data and generative AI, presenting various scenarios and recommendations. Its key points are set out below.

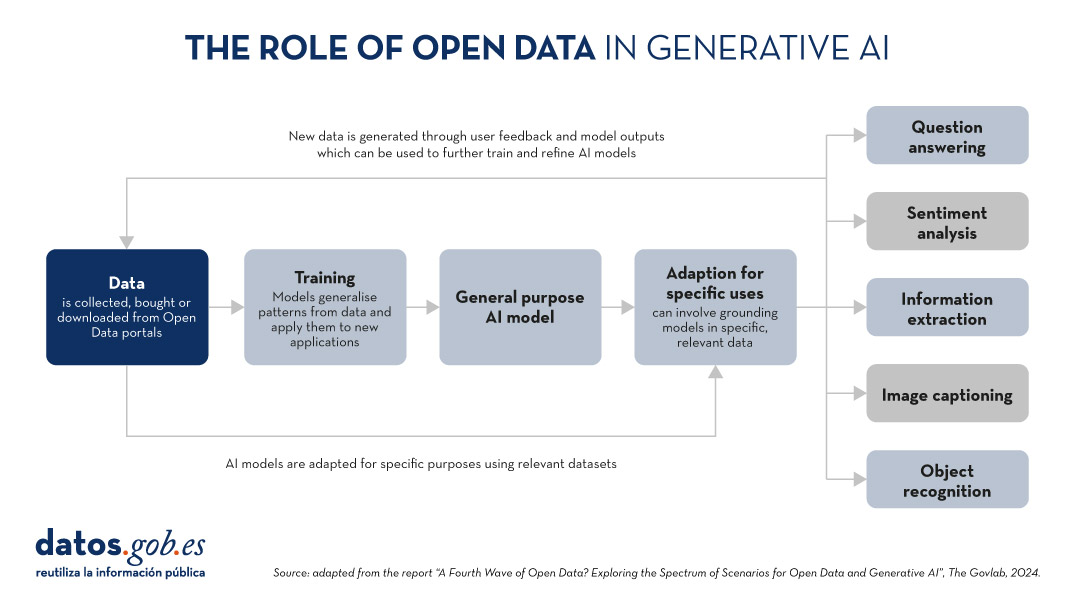

The role of data in generative AI

Data is the fundamental basis for generative artificial intelligence models. Building and training such models requires a large volume of data, the scale and variety of which is conditioned by the objectives and use cases of the model.

The following graphic explains how data functions as a key input and output of a generative AI system. Data is collected from various sources, including open data portals, in order to train a general-purpose AI model. This model will then be adapted to perform specific functions and different types of analysis, which in turn generate new data that can be used to further train models.

Figure 1. The role of open data in generative AI, adapted from the report “A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI”, The Govlab, 2024.

5 scenarios where open data and artificial intelligence converge

In order to help open data providers "prepare" their data for generative AI, The Govlab has defined five scenarios outlining five different ways in which open data and generative AI can intersect. These scenarios are intended as a starting point, to be expanded in the future, based on available use cases.

| Scenario | Function | Quality requirements | Metadata requirements | Example |
|---|---|---|---|---|
| Pre-training | Training the foundational layers of a generative AI model with large amounts of open data. | High volume of data, diverse and representative of the application domain and non-structured usage. | Clear information on the source of the data. | Data from NASA's Harmonized Landsat Sentinel-2 (HLS) project were used to train the geospatial foundational model watsonx.ai. |
| Adaptation | Refinement of a pre-trained model with task-specific open data, using fine-tuning or RAG techniques. | Tabular and/or unstructured data of high accuracy and relevance to the target task, with a balanced distribution. | Metadata focused on the annotation and provenance of data to provide contextual enrichment. | Building on the LLaMA 70B model, the French Government created LLaMandement, a refined large language model for the analysis and drafting of legal project summaries. They used data from SIGNALE, the French government's legislative platform. |
| Inference and Insight Generation | Extracting information and patterns from open data using a trained generative AI model. | High quality, complete and consistent tabular data. | Descriptive metadata on the data collection methods, source information and version control. | Wobby is a generative interface that accepts natural language queries and produces answers in the form of summaries and visualisations, using datasets from different offices such as Eurostat or the World Bank. |
| Data Augmentation | Leveraging open data to generate synthetic data or provide ontologies to extend the amount of training data. | Tabular and/or unstructured data which is a close representation of reality, ensuring compliance with ethical considerations. | Transparency about the generation process and possible biases. | A team of researchers adapted the US Synthea model to include demographic and hospital data from Australia. Using this model, the team was able to generate approximately 117,000 region-specific synthetic medical records. |
| Open-Ended Exploration | Exploring and discovering new knowledge and patterns in open data through generative models. | Tabular data and/or unstructured, diverse and comprehensive. | Clear information on sources and copyright, understanding of possible biases and limitations, identification of entities. | NEPAccess is a pilot to unlock access to data related to the US National Environmental Policy Act (NEPA) through a generative AI model. It will include functions for drafting environmental impact assessments, data analysis, etc. |

Figure 2. Five scenarios where open data and Artificial Intelligence converge, adapted from the report “A Fourth Wave of Open Data? Exploring the Spectrum of Scenarios for Open Data and Generative AI”, The Govlab, 2024.

You can read the details of these scenarios in the report, where more examples are explained. In addition, The Govlab has also launched an observatory where it collects examples of intersections between open data and generative artificial intelligence. It includes the examples in the report along with additional examples. Any user can propose new examples via this form. These examples will be used to further study the field and improve the scenarios currently defined.

Among the cases that can be seen on the web, we find a Spanish company: Tendios. This is a software-as-a-service company that has developed a chatbot to assist in the analysis of public tenders and bids in order to facilitate competition. This tool is trained on public documents from government tenders.

Recommendations for data publishers

To extract the full potential of generative AI, improving its efficiency and effectiveness, the report highlights that open data providers need to address a number of challenges, such as improving data governance and management. In this regard, it contains five recommendations:

- Improve transparency and documentation. The use of standards, data dictionaries, vocabularies, metadata templates, etc. will help to implement documentation practices on lineage, quality, ethical considerations and impact of results.

- Maintain quality and integrity. Training and routine quality assurance processes are needed, including automated or manual validation, as well as tools to update datasets quickly when necessary. In addition, mechanisms for reporting and addressing data-related issues that may arise are needed to foster transparency and facilitate the creation of a community around open datasets.

- Promote interoperability and standards. This involves adopting and promoting international data standards, with a special focus on synthetic data and AI-generated content.

- Improve accessibility and user-friendliness. This involves the enhancement of open data portals through intelligent search algorithms and interactive tools. It is also essential to establish a shared space where data publishers and users can exchange views and express needs in order to match supply and demand.

- Address ethical considerations. Protecting data subjects is a top priority when talking about open data and generative AI. Comprehensive ethics committees and ethical guidelines are needed around the collection, sharing and use of open data, as well as advanced privacy-preserving technologies.

This is an evolving field that needs constant updating by data publishers. These must provide technically and ethically adequate datasets for generative AI systems to reach their full potential.

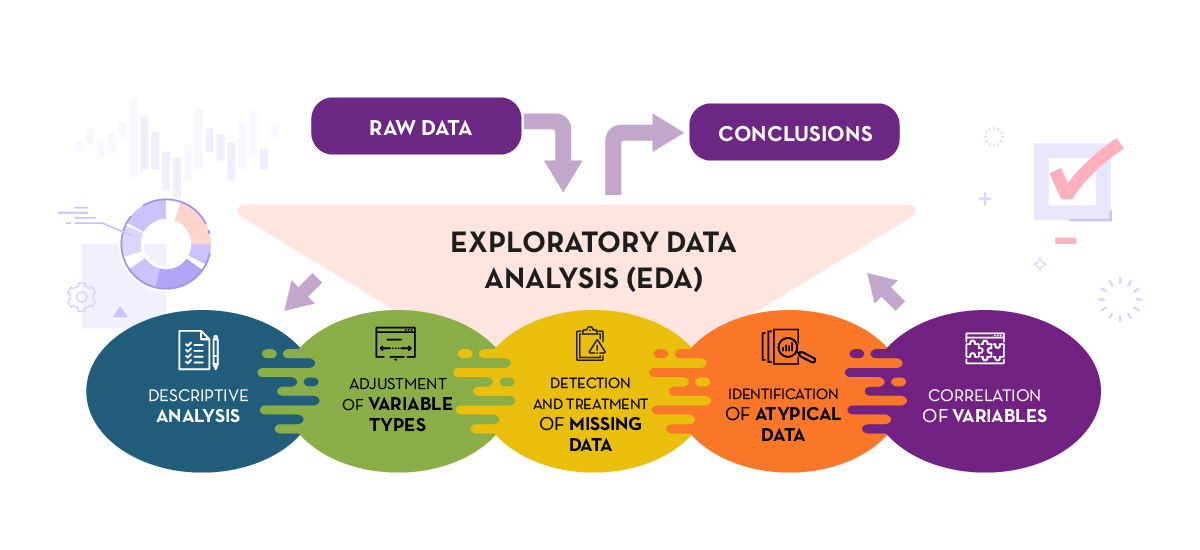

Before performing data analysis, for statistical or predictive purposes, for example through machine learning techniques, it is necessary to understand the raw material with which we are going to work. It is necessary to understand and evaluate the quality of the data in order to, among other aspects, detect and treat atypical or incorrect data, avoiding possible errors that could have an impact on the results of the analysis.

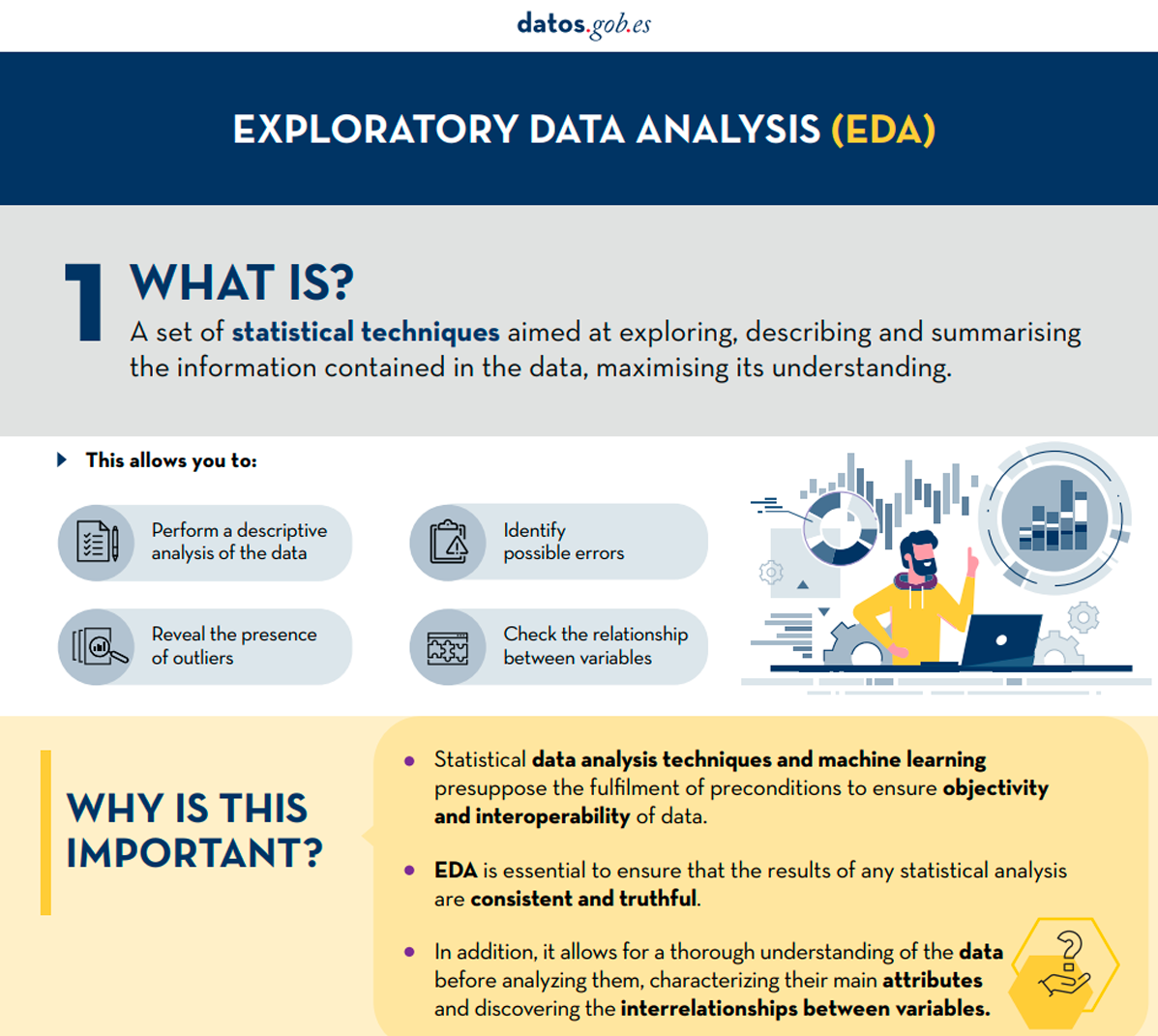

One way to carry out this pre-processing is through exploratory data analysis (EDA).

What is exploratory data analysis?

EDA consists of applying a set of statistical techniques aimed at exploring, describing and summarising the nature of the data, in such a way that we can guarantee its objectivity and interoperability.

This allows us to identify possible errors, reveal the presence of outliers, check the relationship between variables (correlations) and their possible redundancy, and perform a descriptive analysis of the data by means of graphical representations and summaries of the most significant aspects.
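As a rough illustration of these checks, the following sketch computes a descriptive summary, flags outliers with the common 1.5 × IQR rule and measures the correlation between two variables. It is written in Python with the standard library only; the readings, the variable names `no2` and `pm10` and the helper functions are hypothetical and are not taken from any real dataset or from the guide.

```python
import statistics

# Hypothetical air-quality readings, invented for illustration only.
no2 = [12.0, 14.5, 13.2, 15.1, 11.8, 14.0, 95.0, 13.6]
pm10 = [20.0, 23.5, 21.9, 24.8, 19.5, 22.7, 25.0, 22.1]


def iqr_outliers(values):
    """Return the values lying more than 1.5 * IQR beyond the quartiles."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    spread = q3 - q1
    low, high = q1 - 1.5 * spread, q3 + 1.5 * spread
    return [v for v in values if v < low or v > high]


def pearson(xs, ys):
    """Pearson correlation coefficient between two equally long series."""
    mean_x, mean_y = statistics.fmean(xs), statistics.fmean(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


# Descriptive summary of one variable.
summary = {
    "mean": statistics.fmean(no2),
    "median": statistics.median(no2),
    "stdev": statistics.stdev(no2),
}

# Candidate atypical values and the relationship between both variables.
outliers = iqr_outliers(no2)
correlation = pearson(no2, pm10)

print(summary)
print("Outliers:", outliers)
print("Correlation NO2 vs PM10:", round(correlation, 3))
```

The 1.5 × IQR rule is only one of several possible outlier criteria; whether a flagged value is an error or a genuine extreme still has to be decided by looking at the data in context.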

On many occasions, this exploration of the data is neglected and is not carried out correctly. For this reason, at datos.gob.es we have prepared an introductory guide that includes a series of minimum tasks to carry out a correct exploratory data analysis, a prior and necessary step before carrying out any type of statistical or predictive analysis linked to machine learning techniques.

What does the guide include?

The guide explains in a simple way the steps to be taken to ensure consistent and accurate data. It is based on the exploratory data analysis described in the freely available book R for Data Science by Wickham and Grolemund (2017). These steps are:

Figure 1. Phases of exploratory data analysis. Source: own elaboration.

The guide explains each of these steps and why they are necessary. They are also illustrated in a practical way through an example. For this case study, we have used the dataset relating to the air quality register in the Autonomous Community of Castilla y León included in our open data catalogue. The processing has been carried out with Open Source and free technological tools. The guide includes the code so that users can replicate it in a self-taught way following the indicated steps.

The guide ends with a section of additional resources for those who want to further explore the subject.

Who is the target audience?

The target audience of the guide is users who reuse open data. In other words, developers, entrepreneurs or even data journalists who want to extract as much value as possible from the information they work with in order to obtain reliable results.

It is advisable that the user has a basic knowledge of the R programming language, chosen to illustrate the examples. However, the bibliography section includes resources for acquiring greater skills in this field.

Below, in the documentation section, you can download the guide, as well as an infographic-summary that illustrates the main steps of exploratory data analysis. The source code of the practical example is also available in our GitHub.

Click to see the full infographic, in accessible version

Figure 2. Capture of the infographic. Source: own elaboration.

Open data visualization with open source tools (infographic part 2)

1. Introduction

Visualizations are graphical representations of data that allow for the simple and effective communication of information linked to them. The possibilities for visualization are very broad, from basic representations such as line graphs, bar charts or relevant metrics, to visualizations configured on interactive dashboards.

In this section, "Visualizations step by step", we periodically present practical exercises using open data available on datos.gob.es or other similar catalogs. Each exercise describes, in a simple way, the steps needed to obtain the data, perform the relevant transformations and analyses and, finally, draw conclusions that summarize the information.

Each practical exercise uses documented code developments and free-to-use tools. All generated material is available for reuse in the GitHub repository of datos.gob.es.

In this specific exercise, we will explore tourist flows at a national level, creating visualizations of tourists moving between autonomous communities (CCAA) and provinces.

Access the data laboratory repository on Github.

Execute the data pre-processing code on Google Colab.

In this video, the author explains what you will find on both Github and Google Colab.

2. Context

Analyzing national tourist flows allows us to observe certain well-known movements, such as, for example, that the province of Alicante is a very popular summer tourism destination. In addition, this analysis is interesting for observing trends in the economic impact that tourism may have, year after year, in certain CCAA or provinces. The article on experiences for the management of visitor flows in tourist destinations illustrates the impact of data in the sector.

3. Objective

The main objective of the exercise is to create interactive visualizations in Python that allow visualizing complex information in a comprehensive and attractive way. This objective will be met using an open dataset that contains information on national tourist flows, posing several questions about the data and answering them graphically. We will be able to answer questions such as those posed below:

- In which CCAA is there more tourism from the same CA?

- Which CA is the one that leaves its own CA the most?

- What differences are there between tourist flows throughout the year?

- Which Valencian province receives the most tourists?

The understanding of the proposed tools will provide the reader with the ability to modify the code contained in the notebook that accompanies this exercise to continue exploring the data on their own and detect more interesting behaviors from the dataset used.

In order to create interactive visualizations and answer questions about tourist flows, a data cleaning and reformatting process will be necessary, which is described in the notebook that accompanies this exercise.

4. Resources

Dataset

The open dataset used contains information on tourist flows in Spain at the CCAA and provincial level, and also indicates the total values at the national level. The dataset has been published by the National Institute of Statistics in several file formats. For this exercise we only use the .csv file separated by ";". The data cover the period from July 2019 to March 2024 (at the time of writing this exercise) and are updated monthly.

Number of tourists by CCAA and destination province disaggregated by PROVINCE of origin

The dataset is also available for download in this GitHub repository.

Analytical tools

The Python programming language has been used for data cleaning and visualization creation. The code created for this exercise is made available to the reader through a Google Colab notebook.

The Python libraries we will use to carry out the exercise are:

- pandas: a library used for data analysis and manipulation.

- holoviews: a library for creating interactive visualizations that combines the functionalities of other libraries such as Bokeh and Matplotlib.

5. Exercise development

To interactively visualize data on tourist flows, we will create two types of diagrams: chord diagrams and Sankey diagrams.

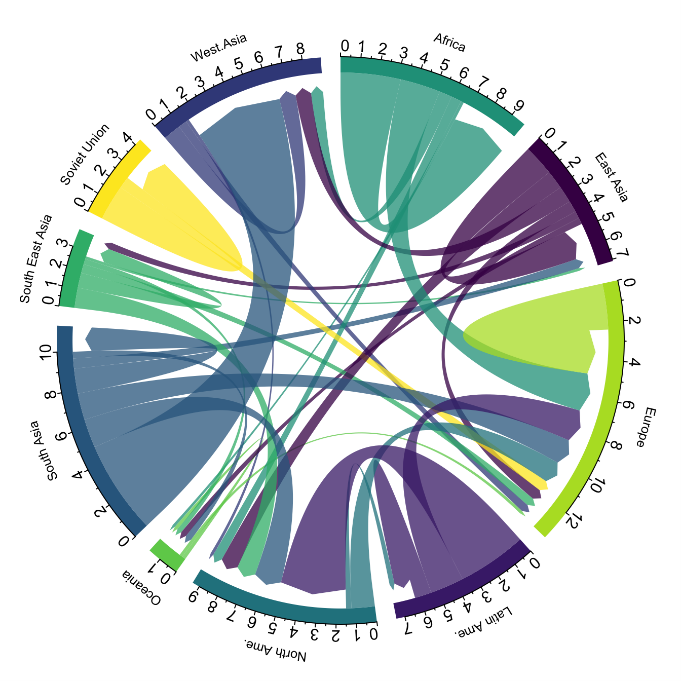

Chord diagrams are composed of nodes and edges (see Figure 1). The nodes are arranged in a circle and the edges represent the relationships between them. These diagrams are typically used to show flows, for example migratory or monetary flows. The thickness of each edge reflects the importance of a flow or a node. Because of its circular shape, the chord diagram is a good option for visualizing the relationships between all the nodes in our analysis (many-to-many relationships).

Figure 1. Chord Diagram (Global Migration). Source.

Sankey diagrams, like chord diagrams, are composed of nodes and edges (see Figure 2). The nodes are placed at the margins of the visualization, with the edges running between them. Because of this linear grouping of nodes, Sankey diagrams are better suited than chord diagrams to analyses in which we want to visualize the relationship between:

- several nodes and other nodes (many-to-many, or many-to-few, or vice versa)

- several nodes and a single node (many-to-one, or vice versa)

Figure 2. Sankey Diagram (UK Internal Migration). Source.

The exercise is divided into five parts, preceded by a part 0 ("initial configuration") that only sets up the programming environment. Below, we describe the five parts and the steps carried out.

5.1. Load data

This section can be found in point 1 of the notebook.

In this part, we load the dataset to process it in the notebook. We check the format of the loaded data and create a pandas.DataFrame that we will use for data processing in the following steps.
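As an illustration of this loading step, the following is a minimal sketch rather than the notebook's actual code. It assumes the file is read with `pandas.read_csv`, and the column names (`origin_total`, `dest_ccaa`, and so on) are hypothetical placeholders, not the real headers of the published file. The two sample rows reproduce the fragment shown in Figure 3, where totals use "." as a thousands separator.

```python
import io

import pandas as pd

# Two illustrative rows laid out like the fragment in Figure 3.
# The column names are hypothetical placeholders, not the real file headers.
SAMPLE_CSV = """origin_total;origin_province;dest_total;dest_ccaa;dest_province;concept;period;total
National Total;;National Total;;;Tourists;2024M03;13.731.096
National Total;Ourense;National Total;Andalucía;Almería;Tourists;2024M03;373
"""


def load_flows(source):
    """Read a ';'-separated file whose totals use '.' as thousands separator."""
    return pd.read_csv(source, sep=";", thousands=".", decimal=",")


df = load_flows(io.StringIO(SAMPLE_CSV))
print(df.dtypes)
print(df.head())
```

To read the real file, `load_flows` would receive the path or URL of the downloaded .csv instead of the in-memory sample.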

5.2. Initial data exploration

This section can be found in point 2 of the notebook.

In this part, we perform an exploratory data analysis to understand the format of the dataset we have loaded and to have a clearer idea of the information it contains. Through this initial exploration, we can define the cleaning steps we need to carry out to create interactive visualizations.
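A first exploration of this kind could be sketched as follows. This is only an assumption about which checks are useful, not the notebook's code: the small DataFrame stands in for the loaded data, its column names are hypothetical, and the value 1200 is invented for the example.

```python
import pandas as pd

# Tiny stand-in for the loaded DataFrame; column names are hypothetical
# and the third row is invented for illustration.
df = pd.DataFrame({
    "origin_province": [None, "Ourense", "Madrid"],
    "dest_ccaa": [None, "Andalucía", "Comunitat Valenciana"],
    "period": ["2024M03", "2024M03", "2023M08"],
    "total": [13731096, 373, 1200],
})


def summarize(frame):
    """Collect per-column type, missing-value count and number of distinct values."""
    return pd.DataFrame({
        "dtype": frame.dtypes.astype(str),
        "missing": frame.isna().sum(),
        "distinct": frame.nunique(),
    })


summary = summarize(df)
print(summary)
# Period labels are strings, so min/max give the covered time range.
print(df["period"].min(), "to", df["period"].max())
```

A summary like this makes empty cells and aggregate rows (such as the national totals) visible before any cleaning is decided.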

If you want to learn more about how to approach this task, you have at your disposal this introductory guide to exploratory data analysis.

5.3. Data format analysis

This section can be found in point 3 of the notebook.

In this part, we summarize the observations made during the initial data exploration. The most important ones are recapitulated here:

| Province of origin | Province of origin | CCAA and destination province | CCAA and destination province | CCAA and destination province | Tourist concept | Period | Total |
|---|---|---|---|---|---|---|---|
| National Total | | National Total | | | Tourists | 2024M03 | 13.731.096 |
| National Total | Ourense | National Total | Andalucía | Almería | Tourists | 2024M03 | 373 |

Figure 3. Fragment of the original dataset.

We can observe in columns one to four that the origins of tourist flows are disaggregated by province, while for destinations, provinces are aggregated by CCAA. We will take advantage of the mapping of CCAA and their provinces that we can extract from the fourth and fifth columns to aggregate the origin provinces by CCAA.

We can also see that the information contained in the first column is sometimes superfluous, so we will combine it with the second column. In addition, we have found that the fifth and sixth columns do not add value to our analysis, so we will remove them. We will rename some columns to have a more comprehensible pandas.DataFrame.

5.4. Data cleaning

This section can be found in point 4 of the notebook.

In this part, we carry out the steps needed to give our data a better format. To do this, we take advantage of several functionalities that pandas offers, for example, to rename the columns. We also define a reusable function that concatenates the values of the first and second columns, so that we do not keep a column that contains only "National Total" in every row of the pandas.DataFrame. In addition, we extract from the destination columns a mapping of CCAA to provinces that we apply to the origin columns.

We want to obtain a more compact version of the dataset, with clearer column names and without information that we are not going to process. The final result of the data cleaning process is the following:

| Origin | Province of origin | Destination | Province of destination | Period | Total |
|---|---|---|---|---|---|
| National Total | | National Total | | 2024M03 | 13731096.0 |
| Galicia | Ourense | Andalucía | Almería | 2024M03 | 373.0 |

Figure 4. Fragment of the clean dataset.
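The cleaning steps described above can be sketched as follows. This is one possible interpretation, not the notebook's implementation: the raw column names are hypothetical, the helper `combine` simply prefers the more specific value over the general "National Total" label, and the third row (with the value 150) is invented so that Ourense also appears as a destination and the province-to-CCAA mapping can be derived.

```python
import pandas as pd

# Stand-in for the raw DataFrame; column names are hypothetical placeholders
# and the third row is invented for illustration.
raw = pd.DataFrame({
    "origin_total": ["National Total"] * 3,
    "origin_province": [None, "Ourense", "Almería"],
    "dest_total": ["National Total"] * 3,
    "dest_ccaa": [None, "Andalucía", "Galicia"],
    "dest_province": [None, "Almería", "Ourense"],
    "concept": ["Tourists"] * 3,
    "period": ["2024M03"] * 3,
    "total": [13731096, 373, 150],
})


def combine(general, specific):
    """Prefer the specific value, falling back to the general one when it is missing."""
    return specific.fillna(general)


def clean(frame):
    # Province -> CCAA lookup taken from the destination columns.
    pairs = frame[["dest_province", "dest_ccaa"]].dropna().drop_duplicates()
    province_to_ccaa = dict(zip(pairs["dest_province"], pairs["dest_ccaa"]))

    out = pd.DataFrame({
        # Aggregate each origin province into its CCAA.
        "Origin": frame["origin_province"].map(province_to_ccaa),
        "Province of origin": frame["origin_province"],
        "Destination": combine(frame["dest_total"], frame["dest_ccaa"]),
        "Province of destination": frame["dest_province"],
        "Period": frame["period"],
        "Total": frame["total"],
    })
    # Rows without an origin province are the national totals.
    out["Origin"] = out["Origin"].fillna(frame["origin_total"])
    return out


clean_df = clean(raw)
print(clean_df)
```

With this sample, the first two rows of `clean_df` match the fragment in Figure 4, apart from the numeric type of the Total column.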

5.5. Create visualizations

This section can be found in point 5 of the notebook.

In this part, we create our interactive visualizations using the Holoviews library. To draw chord or Sankey diagrams that visualize the flow of people between CCAA and/or provinces, we have to structure our data as nodes and edges. In our case, the nodes are the names of the CCAA or provinces, and the edges, that is, the relationships between the nodes, carry the number of tourists. In the notebook we define a function that obtains the nodes and edges and that we can reuse for the different diagrams we want to make, changing the time period according to the season of the year we are interested in analyzing.
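A function of this kind could look like the sketch below. The name `get_nodes_and_edges`, the `source`/`target`/`value` column names and the sample figures are assumptions made for illustration, and the sketch presumes that aggregate rows such as the national totals have already been removed. The plotting step uses `hv.Chord`, which accepts an edge table whose first two columns are the source and target nodes.

```python
import pandas as pd

# Stand-in for the cleaned DataFrame (see Figure 4); the figures are invented.
flows = pd.DataFrame({
    "Origin": ["Galicia", "Galicia", "Andalucía", "Andalucía", "Galicia"],
    "Destination": ["Andalucía", "Andalucía", "Galicia", "Andalucía", "Galicia"],
    "Period": ["2024M03", "2024M03", "2024M03", "2024M03", "2023M08"],
    "Total": [373, 127, 200, 900, 50],
})


def get_nodes_and_edges(frame, period):
    """Aggregate the flows of one period into an edge list plus the node names."""
    selected = frame[frame["Period"] == period]
    edges = (
        selected.groupby(["Origin", "Destination"], as_index=False)["Total"]
        .sum()
        .rename(columns={"Origin": "source", "Destination": "target", "Total": "value"})
    )
    nodes = sorted(set(edges["source"]) | set(edges["target"]))
    return nodes, edges


def draw_chord(edges):
    """Build the chord diagram; holoviews is imported here because only this step needs it."""
    import holoviews as hv

    hv.extension("bokeh")
    return hv.Chord(edges)


nodes, edges = get_nodes_and_edges(flows, "2024M03")
print(nodes)
print(edges)
```

In a notebook with holoviews installed, `draw_chord(edges)` should render the diagram. Changing the `period` argument, for example to "2023M08", is enough to obtain the edges for another month.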

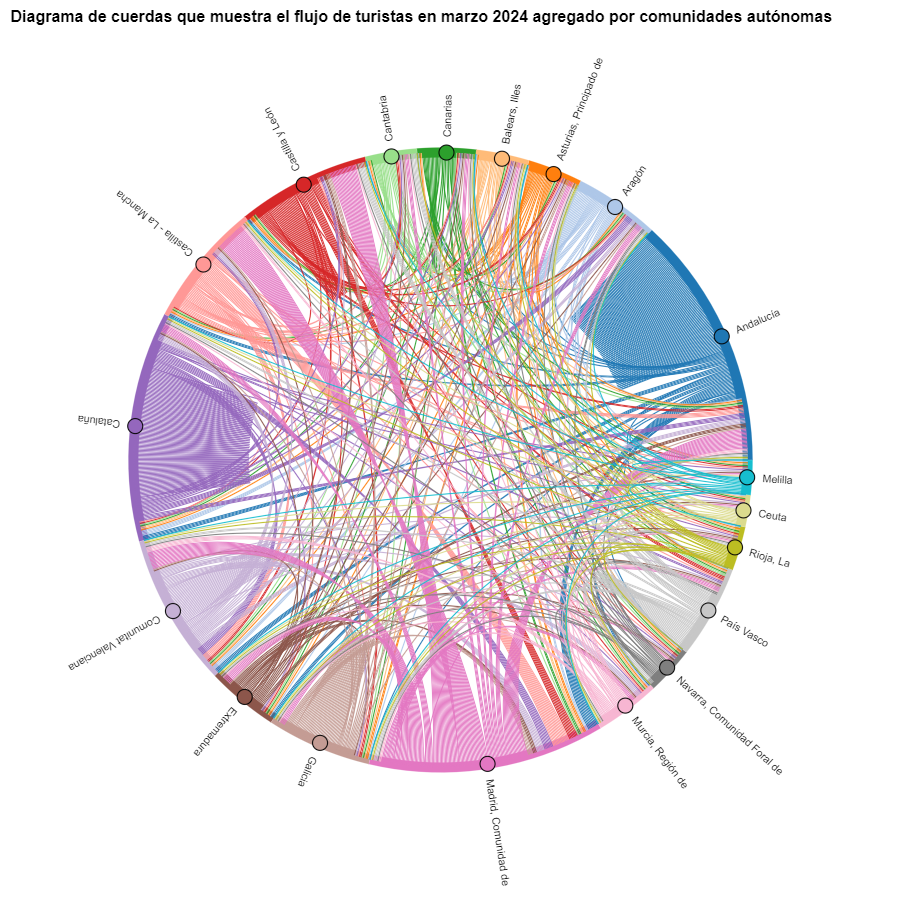

We will first create a chord diagram using exclusively data on tourist flows from March 2024. In the notebook, this chord diagram is dynamic. We encourage you to try its interactivity.

Figure 5. Chord diagram showing the flow of tourists in March 2024 aggregated by autonomous communities.

The chord diagram visualizes the flow of tourists between all CCAA. Each CA has a color, and the movements made by tourists from that CA are drawn in the same color. We can observe that tourists from Andalucía and Catalonia travel a lot within their own CCAA. Tourists from Madrid, on the other hand, often travel outside their own CA.

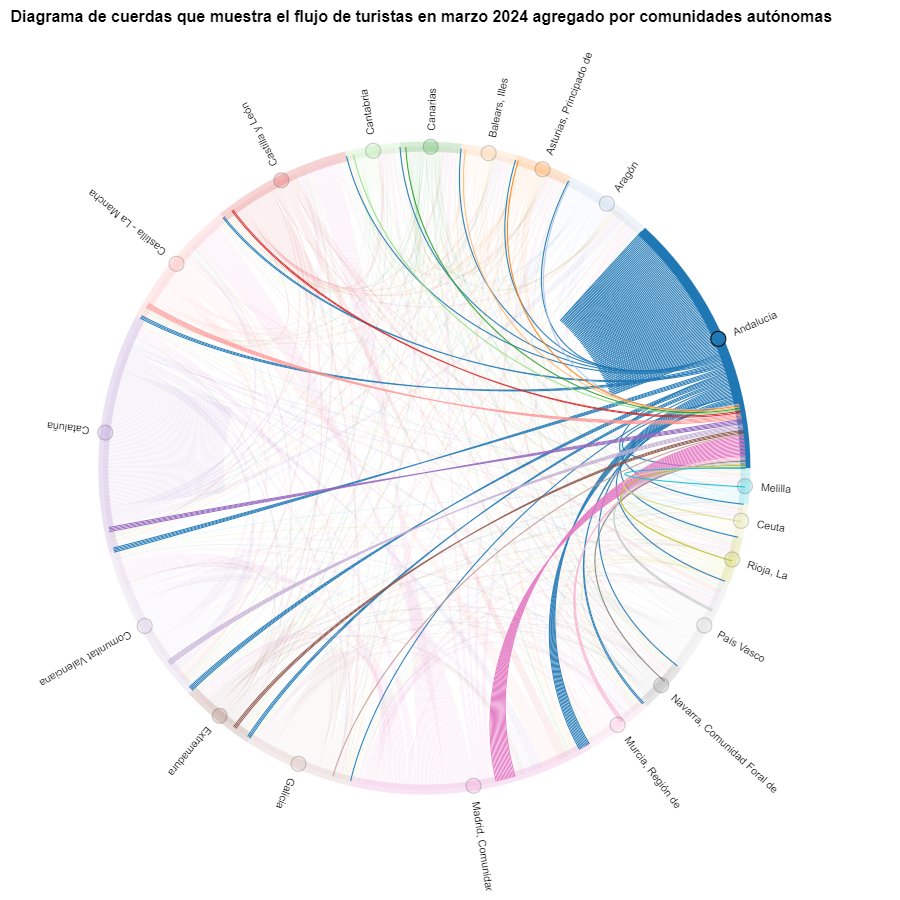

Figure 6. Chord diagram showing the flow of tourists entering and leaving Andalucía in March 2024 aggregated by autonomous communities.

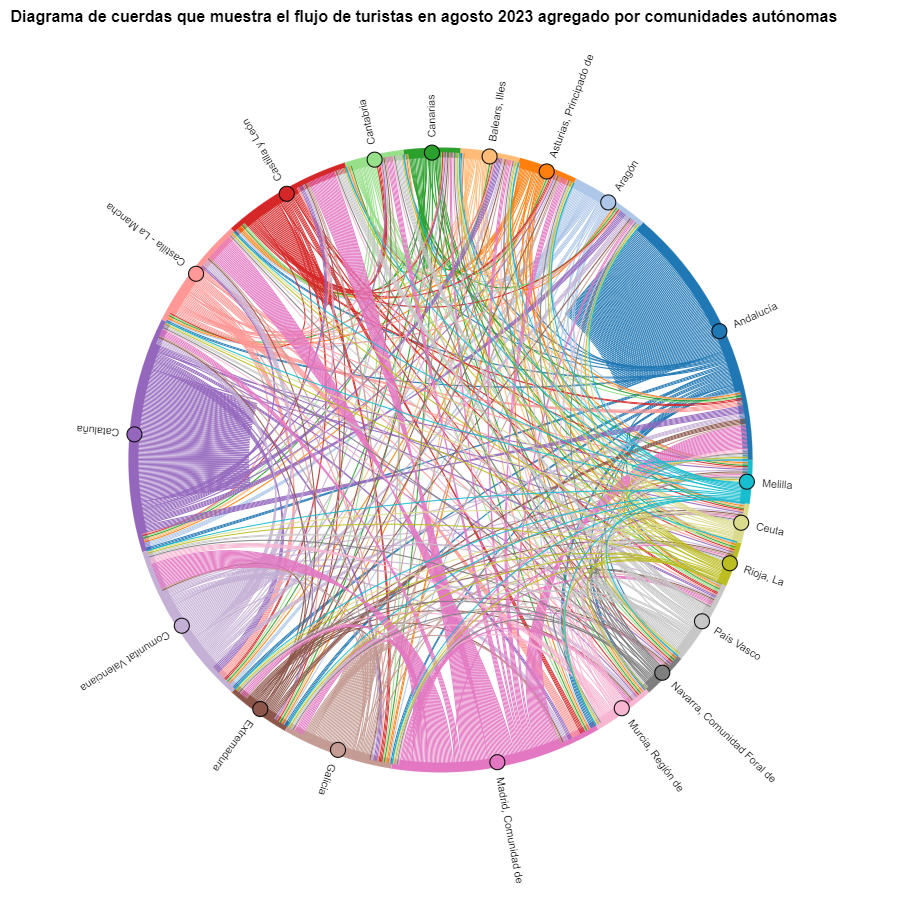

We create another chord diagram using the function we have created and visualize tourist flows in August 2023.

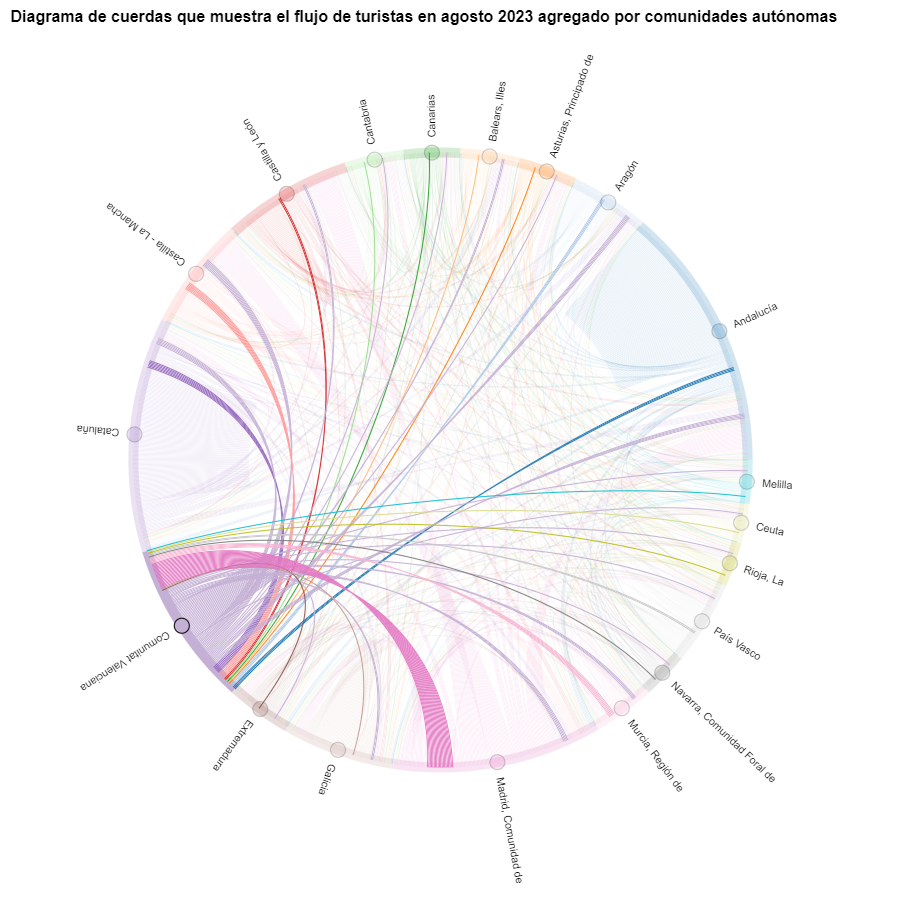

Figure 7. Chord diagram showing the flow of tourists in August 2023 aggregated by autonomous communities.

We can observe that, broadly speaking, the pattern of tourist movements does not change; the movements we already observed for March 2024 simply intensify.

Figure 8. Chord diagram showing the flow of tourists entering and leaving the Valencian Community in August 2023 aggregated by autonomous communities.

The reader can create the same diagram for other time periods, for example, for the summer of 2020, in order to visualize the impact of the pandemic on summer tourism, reusing the function we have created.

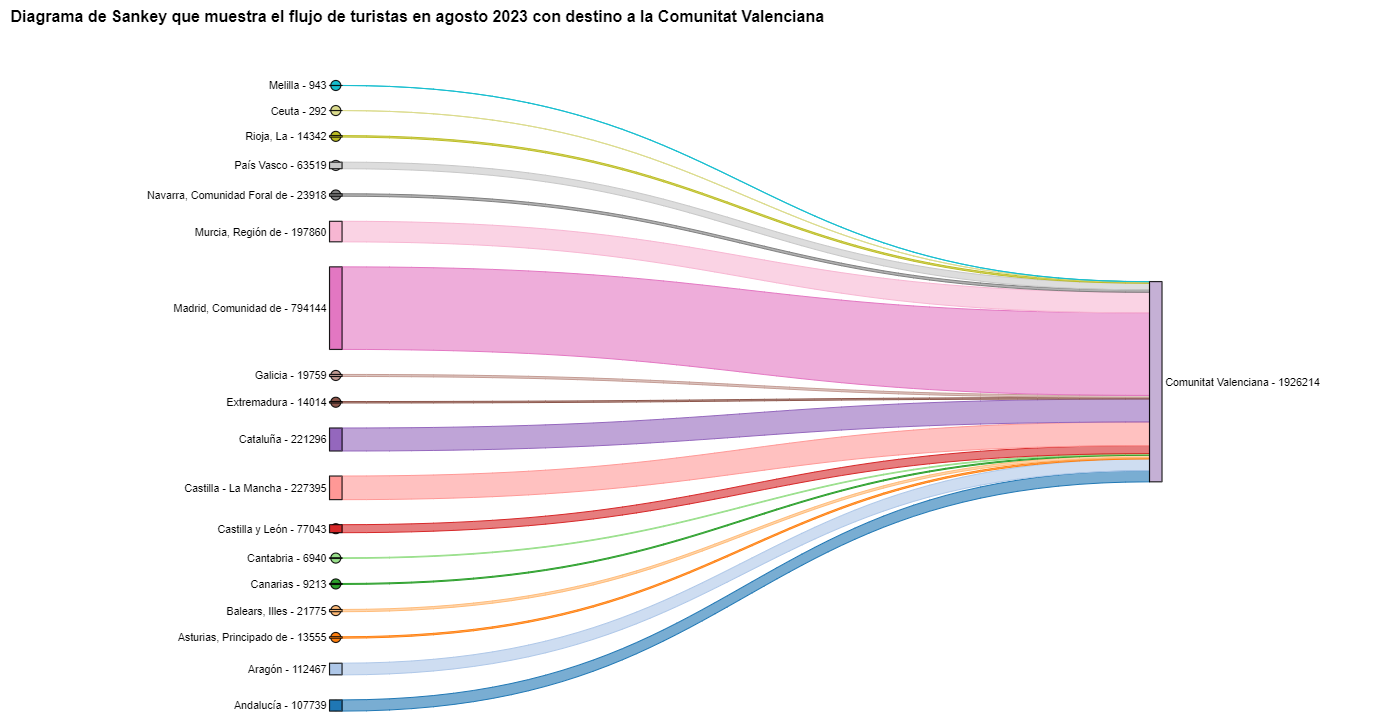

For the Sankey diagrams, we will focus on the Valencian Community, as it is a popular holiday destination. We filter the edges we created for the previous chord diagram so that they only contain flows that end in the Valencian Community. The same procedure could be applied to study any other CA, or could be inverted to analyze where Valencians go on vacation. We visualize the Sankey diagram which, like the chord diagrams, is interactive within the notebook. It looks like this:

Figure 9. Sankey diagram showing the flow of tourists in August 2023 destined for the Valencian Community.
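The filtering step for this Sankey diagram could be sketched as follows. The edge values are invented, the label "Comunitat Valenciana" is an assumption about how the CA is named in the data, and `edges_into` is a hypothetical helper. To my understanding, holoviews can only lay out acyclic Sankey graphs, so the sketch gives the destination node a distinct label to keep internal tourism from forming a self-loop.

```python
import pandas as pd

# Edge list in the shape used for the chord diagram; the figures are invented.
edges = pd.DataFrame({
    "source": ["Madrid", "Murcia", "Comunitat Valenciana", "Madrid"],
    "target": ["Comunitat Valenciana", "Comunitat Valenciana",
               "Comunitat Valenciana", "Andalucía"],
    "value": [500, 120, 300, 250],
})


def edges_into(edge_table, destination):
    """Keep only the flows that end in `destination`, largest first."""
    incoming = edge_table[edge_table["target"] == destination].copy()
    # Relabel the destination so that tourists travelling within their own CA
    # do not create an edge from a node to itself.
    incoming["target"] = destination + " (destination)"
    return incoming.sort_values("value", ascending=False).reset_index(drop=True)


def draw_sankey(edge_table):
    """Build the Sankey diagram; holoviews is imported here because only this step needs it."""
    import holoviews as hv

    hv.extension("bokeh")
    return hv.Sankey(edge_table)


valencia_edges = edges_into(edges, "Comunitat Valenciana")
print(valencia_edges)
```

Filtering on `source` instead of `target` would invert the analysis and show where residents of the chosen CA travel.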

As the chord diagram in Figure 8 already suggested, the largest group of tourists arriving in the Valencian Community comes from Madrid. We also see a high number of tourists visiting the Valencian Community from neighboring CCAA such as Murcia, Andalucía and Catalonia.

To verify that these trends occur in the three provinces of the Valencian Community, we are going to create a Sankey diagram that shows on the left margin all the CCAA and on the right margin the three provinces of the Valencian Community.

To create this Sankey diagram at the provincial level, we have to filter our initial pandas.DataFrame to extract the relevant information from it. The steps in the notebook can be adapted to perform this analysis at the provincial level for any other CA. Although we are not reusing the function we used previously, we can also change the analysis period.

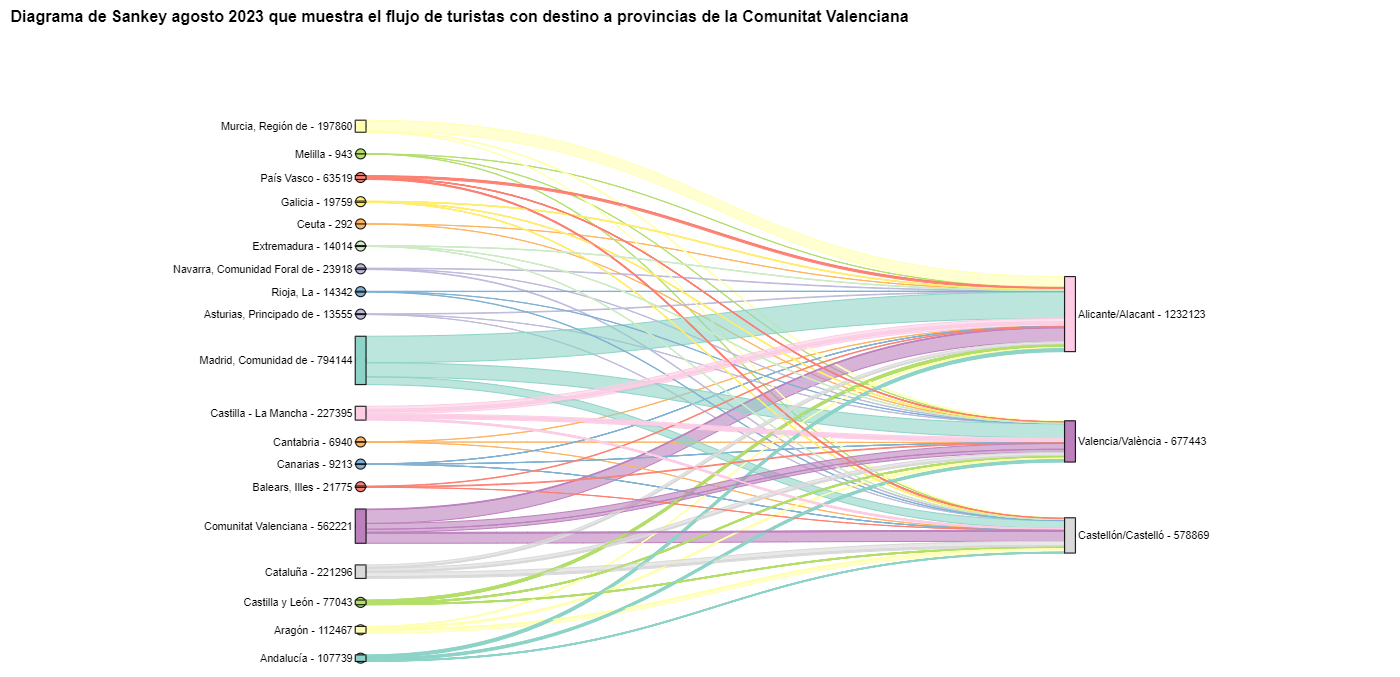

The Sankey diagram that visualizes the tourist flows that arrived in August 2023 to the three Valencian provinces would look like this:

Figure 10. Sankey diagram August 2023 showing the flow of tourists destined for provinces of the Valencian Community.
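The provincial-level filter could be sketched along these lines. It is an illustration under stated assumptions, not the notebook's code: the column names follow Figure 4, the province and CA labels may differ from those in the real dataset, and every figure is invented, so the printed result says nothing about actual tourist flows.

```python
import pandas as pd

# Stand-in for the cleaned DataFrame; all figures are invented.
flows = pd.DataFrame({
    "Origin": ["Madrid", "Madrid", "Comunitat Valenciana", "Madrid", "Murcia"],
    "Destination": ["Comunitat Valenciana"] * 3 + ["Andalucía", "Comunitat Valenciana"],
    "Province of destination": ["Alicante", "Castellón", "Castellón", "Almería", "Alicante"],
    "Period": ["2023M08"] * 5,
    "Total": [400, 80, 150, 90, 60],
})


def province_edges(frame, destination_ccaa, period):
    """Edges from every origin CA to each province of one destination CA."""
    mask = (
        (frame["Destination"] == destination_ccaa)
        & (frame["Period"] == period)
        & frame["Province of destination"].notna()
    )
    return (
        frame[mask]
        .groupby(["Origin", "Province of destination"], as_index=False)["Total"]
        .sum()
        .rename(columns={
            "Origin": "source",
            "Province of destination": "target",
            "Total": "value",
        })
    )


edges = province_edges(flows, "Comunitat Valenciana", "2023M08")
# For each destination province, find the origin CA with the largest flow.
top_origin = edges.loc[edges.groupby("target")["value"].idxmax(), ["target", "source"]]
print(edges)
print(top_origin)
```

Passing another CA or period to `province_edges` adapts the same steps to a different analysis, and the resulting table has the source, target and value columns that a Sankey element expects.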

We can observe that, as we already assumed, the largest number of tourists arriving in the Valencian Community in August comes from the Community of Madrid. However, we can verify that this is not true for the province of Castellón, where in August 2023 the majority of tourists were Valencians who traveled within their own CA.

6. Conclusions of the exercise

Thanks to the visualization techniques used in this exercise, we have been able to observe the tourist flows that move within the national territory, focusing on making comparisons between different times of the year and trying to identify patterns. In both the chord diagrams and the Sankey diagrams that we have created, we have been able to observe the influx of Madrilenian tourists on the Valencian coasts in summer. We have also been able to identify the autonomous communities where tourists leave their own autonomous community the least, such as Catalonia and Andalucía.

7. Do you want to do the exercise?

We invite the reader to execute the code contained in the Google Colab notebook that accompanies this exercise to continue with the analysis of tourist flows. We leave here some ideas of possible questions and how they could be answered:

- The impact of the pandemic: we have already mentioned it briefly above, but an interesting question would be to measure the impact that the coronavirus pandemic has had on tourism. We can compare the data from previous years with 2020 and also analyze the following years to detect stabilization trends. Given that the function we have created allows easily changing the time period under analysis, we suggest you do this analysis on your own.

- Time intervals: it is also possible to modify the function we have been using in such a way that it not only allows selecting a specific time period, but also allows time intervals.

- Provincial level analysis: likewise, a reader with advanced pandas skills can challenge themselves to create a Sankey diagram that visualizes which provinces the inhabitants of a certain province, for example Ourense, travel to. So that too many destination provinces do not make the Sankey diagram illegible, only the 10 most visited could be shown. To obtain the data for this visualization, the reader would have to experiment with the filters applied to the dataset and with the groupby method of pandas, taking inspiration from the code already executed.

We hope that this practical exercise has provided you with sufficient knowledge to develop your own visualizations. If there is a data science topic that you would like us to cover soon, do not hesitate to let us know through our contact channels.
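As a starting point for the last two ideas, the sketch below combines a time interval with a "top N destinations" filter. It is only one possible approach: `edges_for_interval` is a hypothetical helper, the column names follow Figure 4, and the figures are invented. It relies on the period labels being zero-padded (as in "2024M03"), so that comparing them as strings respects chronological order.

```python
import pandas as pd

# Stand-in for the cleaned DataFrame; all figures are invented.
flows = pd.DataFrame({
    "Province of origin": ["Ourense"] * 5,
    "Province of destination": ["Pontevedra", "Pontevedra", "Madrid", "Lugo", "Almería"],
    "Period": ["2023M06", "2023M07", "2023M07", "2023M09", "2024M03"],
    "Total": [100, 150, 80, 40, 373],
})


def edges_for_interval(frame, start, end, origin_province, top_n=10):
    """Sum the flows from one origin province over [start, end] and keep the top destinations."""
    # Zero-padded labels such as '2023M07' sort chronologically as plain strings.
    mask = (
        frame["Period"].between(start, end)
        & (frame["Province of origin"] == origin_province)
    )
    totals = frame[mask].groupby("Province of destination")["Total"].sum()
    top = totals.nlargest(top_n).reset_index()
    top.insert(0, "source", origin_province)
    return top.rename(columns={"Province of destination": "target", "Total": "value"})


summer = edges_for_interval(flows, "2023M06", "2023M08", "Ourense", top_n=2)
print(summer)
```

If the origin province can also appear among its own destinations, its target label would need to be made distinct before drawing a Sankey diagram.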

In addition, remember that you have more exercises available in the section "Data science exercises".

The digital revolution is transforming municipal services, driven by the increasing adoption of artificial intelligence (AI) technologies that also benefit from open data. These developments have the potential to redefine the way municipalities deliver services to their citizens, providing tools to improve efficiency, accessibility and sustainability. This report looks at success stories in the deployment of applications and platforms that seek to improve various aspects of life in municipalities, highlighting their potential to unlock more of the vast untapped potential of open data and associated artificial intelligence technologies.

The applications and platforms described in this report have a high potential for replicability in different municipal contexts, as they address common problems. Replication of these solutions can take place through collaboration between municipalities, companies and developers, as well as through the release and standardisation of open data.

Despite the benefits, the adoption of open data for municipal innovation also presents significant challenges. The quality, updating and standardisation of data published by local authorities, as well as interoperability between different platforms and systems, must be ensured. In addition, the open data culture needs to be reinforced among all actors involved, including citizens, developers, businesses and public administrations themselves.

The use cases analysed are divided into four sections. Each of these sections is described below and some examples of the solutions included in the report are shown.

Transport and Mobility

One of the most significant challenges in urban areas is transport and mobility management. Applications using open data have proven to be effective in improving these services. For example, applications such as Park4Dis make it easy to locate parking spaces for people with reduced mobility, using data from multiple municipalities and contributions from volunteers. CityMapper, which has gone global, offers optimised public transport routes in real time, integrating data from various transport modes to provide the most efficient route. These applications not only improve mobility, but also contribute to sustainability by reducing congestion and carbon emissions.

Environment and Sustainability

Growing awareness of sustainability has spurred the development of applications that promote environmentally friendly practices. CleanSpot, for example, facilitates the location of recycling points and the management of municipal waste. The application encourages citizen participation in cleaning and recycling, contributing to the reduction of the ecological footprint. Liight gamifies sustainable behaviour by rewarding users for actions such as recycling or using public transport. These applications not only improve environmental management, but also educate and motivate citizens to adopt more sustainable habits.

Optimisation of Basic Public Services

Urban service management platforms, such as Gestdropper, use open data to monitor and control urban infrastructure in real time. These tools enable more efficient management of resources such as street lighting, water networks and street furniture, optimising maintenance, incident response and reducing operating costs. Moreover, the deployment of appointment management systems, such as CitaME, helps to reduce waiting times and improve efficiency in customer service.

Citizen Services Aggregators

Applications that centralise public information and services, such as Badajoz Es Más and AppValencia, improve accessibility and communication between administrations and citizens. These platforms provide real-time data on public transport, cultural events, tourism and administrative procedures, making life in the municipality easier for residents and tourists alike. For example, integrating multiple services into a single application improves efficiency and reduces the need for unnecessary travel. These tools also support local economies by promoting cultural events and commercial services.

Conclusions

The use of open data and artificial intelligence technologies is transforming municipal management, improving the efficiency, accessibility and sustainability of public services. The success stories presented in this report describe how these tools can benefit both citizens and public administrations by making cities smarter, more inclusive and sustainable environments, and more responsive to the needs and well-being of their inhabitants and visitors.

Listen to the podcast (only available in Spanish)

It seems like only yesterday that we were finishing eating our grapes and welcoming the new year. However, six months have already passed, during which we have witnessed many new developments in the world, in Spain and also in the open data ecosystem.

Join us as we take a look back at some of the most newsworthy open data events that have taken place in our country so far this year.

New regulations to boost open data and its use

During the first weeks of 2024, some legislative advances were made in Europe, applicable in our country. On 11 January, the Data Act came into force, which aims to democratise access to data, stimulate innovation and ensure fair use across Europe's digital landscape. You can learn more about it in this infographic that reviews the most important aspects.

On the other hand, at the national level, we have seen how open data is gaining prominence and its promotion is increasingly taken into account in sectoral regulations. This is the case of the Sustainable Mobility Bill, which, among other issues, includes the promotion of open data for administrations, infrastructure managers and public and private operators.

This is a trend that we had already seen in the last days of 2023 with the validation of Royal Decree-Law 6/2023, of 19 December, approving urgent measures for the implementation of the Recovery, Transformation and Resilience Plan for the public service of justice, the civil service, local government and patronage. This Royal Decree-Law includes the general principle of data orientation and emphasises the publication of automatically actionable data in open data portals accessible to citizens. The government's Artificial Intelligence Strategy 2024 also includes references to data held by public bodies. Specifically, it establishes that a common governance model will be created for the data and documentary corpus of the General State Administration in such a way as to guarantee the standards of security, quality, interoperability and reuse of all the data available for the training of models.

In relation to governance, we saw another development at the end of 2023 that has been reflected in 2024: the adoption of the Standard Ordinance on Data Governance in the Local Entity, approved by the Spanish Federation of Municipalities and Provinces (FEMP in its Spanish acronym). Over the last few months, various local councils have incorporated and adapted this ordinance to their territory. This is the case of Zaragoza or Fuenlabrada.

News on data platforms

During this period, new platforms and tools have also emerged that make data available to citizens and businesses:

- The Government of Spain has created the National Access Point for Traffic and Mobility which includes data on the facilities with electric vehicle charging points, detailing the type of connector, format, charging mode, etc.

- The National Institute of Statistics (INE in its Spanish acronym) has launched a Panel of Environmental Indicators. With it, it will be possible to quantify compliance with environmental targets, such as the Green Deal. It has also created a specific section for high-value data.

- The Statistical Institute of the Balearic Islands (IBESTAT in its Spanish acronym) has revamped its web portal which has a specific section on open data.

- Open Data Euskadi has published a new API that facilitates the reuse of public procurement data from the Basque administration.

- MUFACE (General mutual society of civil servants of the State) has launched a space with historical and current data on choice of entity, health care, etc.

- Some of the local authorities that have launched new data portals include the Provincial Council of Málaga and the City Council of Lucena (Córdoba).

- The Museo del Prado has set up a virtual tour which allows you to tour the main collections in 360º. It also offers a selection of 89 works digitised in gigapixel.

- Researchers from the University of Seville have collaborated in the launch of the PEPAdb (Prehistoric Europe's Personal Adornment database), an online and accessible tool with data on elements of personal adornment in recent prehistory.

In addition, agreements have been signed to make further progress in opening up data and boosting re-use, demonstrating the commitment to open and interoperable data.

- The INE, the State Tax Administration Agency (AEAT in its Spanish acronym), the Social Security, the Bank of Spain and the State Public Employment Service (SEPE) have signed an agreement to facilitate joint access to databases for research of public interest.

- The councils of Castelldefels, El Prat de Llobregat and Esparreguera have joined the Municipal Territorial Information System (SITMUN) to share geographic information and have access to a transversal information system.

- The Universidad Rey Juan Carlos and ASEDIE, the Multisectorial Association of Information, have joined forces to create the Open Data Reuse Observatory, which aims to catalyse progress and transparency in the infomediary field and to highlight the importance of the data-driven economy.

Boosting the re-use of data

Both the data published previously and those published as a result of this work allow for the development of products and services that bring valuable information to the public. Some recently created examples include:

- The government has launched a tool to track the implementation and impact of the Recovery, Transformation and Resilience Plan investments.

- Data on the network of ports, airports, railway terminals, roads and railway lines can be easily consulted with this visualiser of the Ministry of Transport and Sustainable Mobility.

- The Barcelona Open Data Initiative has presented a new version of the portal DadesXViolènciaXDones, a tool for analysing the impact of policies against male violence.

- Madrid City Council has shown how it measures the use of the Cuña Verde Park through data analysis and its GovTech programme.

- Furthermore, in the field of data journalism, we find many examples, such as this article from elDiario.es where one can visualise, neighbourhood by neighbourhood, the price of rent and access to housing according to income.

Combined with artificial intelligence, these data make it possible to address social challenges, as the following examples show:

- The wildINTEL project, of the University of Huelva, in collaboration with the state agency Consejo Superior de Investigaciones Científicas (CSIC), aims to monitor wildlife in Europe. It combines citizen science and AI for effective biodiversity data collection and management.

- Experts at the International University of La Rioja have developed AymurAI, a project that promotes judicial transparency and gender equality through artificial intelligence, based on methodologies with a gender perspective and open data.

- Two researchers from Cantabria have created a model that makes it possible to predict climatic variables in real time and in high resolution by means of artificial intelligence.

On the other hand, to boost the re-use of open data, public bodies have launched competitions and initiatives that have facilitated the creation of new products and services. Examples from recent months include:

- The 7th Castile and Leon open data competition.

- The University of Zaragoza's Pedro R. Muro-Medrano Awards.

- The València City Council awards for open data and data journalism projects.

New reports and resources linked to open data

Some of the reports on open data and its re-use published in the first half of the year include:

- The "ASEDIE Report: Data Economy in the infomediary field", now in its twelfth edition.

- The ASEDIE report "Geospatial data in the Ministry of Transport and Sustainable Mobility. Impact of the information co-produced by IGN and CNIG".

- The report "The reuse of open data in Spain" by the COTEC Foundation.

- The "Guide of Good Practices for Data Journalists" elaborated by the Observatori de Dades Obertes i Transparència of the Universitat Politècnica de València (UPV).

A large number of events have also been held, some of which can be viewed online and some of which have been chronicled and summarised:

- The "III National Meeting of Open Data (ENDA): Data to boost the tourism sector", organised by the Provincial Council of Castellón, the Provincial Council of Barcelona and the Government of Aragón.

- The round table "Building Tourism Intelligence: Data Sharing for Strategic Decisions in the Tourism Sector" organised by SEGITTUR (State Trading Company for the Management of Innovation and Tourism Technologies) as part of Fitur Know-how & Export.

- The session "Managing Water with Open Data" organised within the framework of the CafèAmbDades initiative of the Generalitat de Catalunya.

- The European Data Portal's workshop "How to use open data for your research", with the participation of the Universidad Politécnica de Madrid.

These are just a few examples that illustrate the great activity that has taken place in the open data environment over the last six months. Do you know of any other examples? Leave us a comment or write to dinamizacion@datos.gob.es!

1. Introduction

In the information age, artificial intelligence has proven to be an invaluable tool for a variety of applications. One of the most striking manifestations of this technology is GPT (Generative Pre-trained Transformer), developed by OpenAI. GPT is a natural language model that can understand and generate text, providing coherent and contextually relevant responses. With the recent introduction of GPT-4, the capabilities of this model have been further expanded, allowing for greater customisation and adaptability to different topics.

In this post, we will show you how to set up and customise an assistant specialised in critical minerals using GPT-4 and open data sources. As we have shown in previous publications, critical minerals are fundamental to numerous industries, including technology, energy and defence, due to their unique properties and strategic importance. However, information on these materials can be complex and scattered, making a specialised assistant particularly useful.

The aim of this post is to guide you step by step from the initial configuration to the implementation of a GPT assistant that can answer your questions and provide valuable information about critical minerals in your day-to-day work. In addition, we will explore how to customise aspects of the assistant, such as the tone and style of responses, to suit your needs. At the end of this journey, you will have a powerful, customised tool that will transform the way you access and use open information on critical minerals.

Access the data lab repository on GitHub.

2. Context

The transition to a sustainable future involves not only changes in energy sources, but also in the material resources we use. The success of sectors such as energy storage batteries, wind turbines, solar panels, electrolysers, drones, robots, data transmission networks, electronic devices or space satellites depends heavily on access to the raw materials critical to their development. We understand that a mineral is critical when the following factors are met:

- Its global reserves are scarce

- No alternative materials can perform its function (its properties are unique or nearly unique)

- It is indispensable for key economic sectors of the future, and/or its supply chain is high risk

You can learn more about critical minerals in the post mentioned above.

3. Objective

This exercise focuses on showing the reader how to customise a specialised GPT model for a specific use case. We will adopt a "learning-by-doing" approach, so that the reader can understand how to set up and adjust the model to solve a real and relevant problem, such as critical mineral expert advice. This hands-on approach not only improves understanding of language model customisation techniques, but also prepares readers to apply this knowledge to real-world problem solving, providing a rich learning experience directly applicable to their own projects.

The GPT assistant specialised in critical minerals will be designed to become an essential tool for professionals, researchers and students. Its main objective will be to facilitate access to accurate and up-to-date information on these materials, to support strategic decision-making and to promote education in this field. The following are the specific objectives we seek to achieve with this assistant:

- Provide accurate and up-to-date information:

  - Provide detailed and accurate information on various critical minerals, including their composition, properties, industrial uses and availability.

  - Keep up to date with the latest research and market trends in the field of critical minerals.

- Assist in decision-making:

  - Provide data and analysis that can support strategic decision-making in industry and critical minerals research.

  - Provide comparisons and evaluations of different minerals in terms of performance, cost and availability.

- Promote education and awareness of the issue:

  - Act as an educational tool for students, researchers and practitioners, helping to improve their knowledge of critical minerals.

  - Raise awareness of the importance of these materials and the challenges related to their supply and sustainability.

4. Resources

To configure and customise our GPT assistant specialising in critical minerals, it is essential to have a number of resources that facilitate implementation and ensure the accuracy and relevance of the model's responses. In this section, we detail the necessary resources, which include both the technological tools and the sources of information that will be integrated into the assistant's knowledge base.

Tools and Technologies

The key tools and technologies to develop this exercise are:

- OpenAI account: required to access the platform and use the GPT-4 model. In this post, we will use ChatGPT's Plus subscription to show you how to create and publish a custom GPT. However, you can develop this exercise in a similar way by using a free OpenAI account and performing the same set of instructions through a standard ChatGPT conversation.

- Microsoft Excel: we have designed this exercise so that anyone without technical knowledge can work through it from start to finish. We will only use office tools such as Microsoft Excel to make some adjustments to the downloaded data.

In addition, we will use another set of tools that let us automate some actions, although their use is not strictly necessary:

- Google Colab: a Python notebook environment that runs in the cloud, allowing users to write and run Python code directly in the browser. Google Colab is particularly useful for machine learning, data analysis and experimentation with language models, offering free access to powerful computational resources and facilitating collaboration and project sharing.

- Markmap: a tool that visualises Markdown as mind maps in real time. Users write ideas in Markdown and the tool renders them as an interactive mind map in the browser. Markmap is useful for project planning, note taking and organising complex information visually. It facilitates understanding and the exchange of ideas in teams and presentations.
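As an illustration of the kind of Markdown that Markmap can render, the following minimal sketch turns a nested outline into headings and indented bullets. The function name `outline_to_markdown` and the outline itself are assumptions made for this example (the outline simply reuses the resources listed in this section); the resulting text would be pasted into Markmap by hand.

```python
def outline_to_markdown(title, outline):
    """Render a nested outline as Markdown: one heading plus indented bullets."""
    lines = [f"# {title}"]

    def walk(node, depth):
        indent = "  " * depth
        if isinstance(node, dict):
            # Dictionary keys are branches; their values are the sub-branches.
            for key, child in node.items():
                lines.append(f"{indent}- {key}")
                walk(child, depth + 1)
        else:
            # Any other iterable is treated as a list of leaf nodes.
            for item in node:
                lines.append(f"{indent}- {item}")

    walk(outline, 0)
    return "\n".join(lines)


# Hypothetical outline built from the resources named in this section.
resources = {
    "Tools and technologies": ["OpenAI account", "Microsoft Excel", "Google Colab", "Markmap"],
    "Sources of information": ["RMIS", "IEA", "BDMIN"],
}
markdown = outline_to_markdown("Critical minerals GPT resources", resources)
print(markdown)
```

Each nesting level becomes one level of branches in the mind map, so the outline can be extended simply by nesting more dictionaries.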

Sources of information

- Raw Materials Information System (RMIS): raw materials information system maintained by the Joint Research Center of the European Union. It provides detailed and up-to-date data on the availability, production and consumption of raw materials in Europe.

- International Energy Agency (IEA) Catalogue of Reports and Data: the International Energy Agency (IEA) offers a comprehensive catalogue of energy-related reports and data, including statistics on production, consumption and reserves of energy and critical minerals.

- Mineral Database of the Spanish Geological and Mining Institute (BDMIN in its acronym in Spanish): contains detailed information on minerals and mineral deposits in Spain, useful to obtain specific data on the production and reserves of critical minerals in the country.

With these resources, you will be well equipped to develop a specialised GPT assistant that can provide accurate and relevant answers on critical minerals, facilitating informed decision-making in the field.

5. Development of the exercise

5.1. Building the knowledge base

For our specialised critical minerals GPT assistant to be truly useful and accurate, it is essential to build a solid and structured knowledge base. This knowledge base will be the set of data and information that the assistant will use to answer queries. The quality and relevance of this information will determine the effectiveness of the assistant in providing accurate and useful answers.

We start with the collection of information sources that will feed our knowledge base. Not all sources of information are equally reliable. It is essential to assess the quality of the sources identified, ensuring that:

- Information is up to date: the relevance of data can change rapidly, especially in dynamic fields such as critical minerals.

- The source is reliable and recognised: it is necessary to use sources from recognised and respected academic and professional institutions.

- Data is complete and accessible: it is crucial that data is detailed and accessible for integration into our assistant.

In our case, we carried out an online search across different platforms and information repositories, trying to select information belonging to different recognised entities:

- Research centres and universities:

  - They publish detailed studies and reports on the research and development of critical minerals.

  - Example: RMIS of the Joint Research Center of the European Union.

- Governmental institutions and international organisations:

  - These entities usually provide comprehensive and up-to-date data on the availability and use of critical minerals.

  - Example: International Energy Agency (IEA).

- Specialised databases:

  - They contain technical and specific data on deposits and production of critical minerals.

  - Example: Minerals Database of the Spanish Geological and Mining Institute (BDMIN).

Selection and preparation of information

We will now focus on the selection and preparation of existing information from these sources to ensure that our GPT assistant can access accurate and useful data.

RMIS of the Joint Research Center of the European Union:

- Selected information:

We selected the report "Supply chain analysis and material demand forecast in strategic technologies and sectors in the EU - A foresight study". This is an analysis of the supply chain and demand for minerals in strategic technologies and sectors in the EU. It presents a detailed study of the supply chains of critical raw materials and forecasts the demand for minerals up to 2050.

- Necessary preparation:

The format of the document, PDF, allows the direct ingestion of the information by our assistant. However, as can be seen in Figure 1, there is a particularly relevant table on pages 238-240 which analyses, for each mineral, its supply risk, typology (strategic, critical or non-critical) and the key technologies that employ it. We therefore decided to extract this table into a structured format (CSV), so that we have two pieces of information that will become part of our knowledge base.

Figure 1: Table of minerals contained in the JRC PDF

To programmatically extract the data contained in this table and transform it into a more easily processable format, such as CSV (comma-separated values), we will use a Python script that we can run on the Google Colab platform (Figure 2).

Figure 2: Python script for extracting data from the JRC PDF, developed on the Google Colab platform.

To summarise, this script:

- Is based on the open-source library PyPDF2, which is capable of interpreting information contained in PDF files.

- First extracts, in text format (string), the content of the pages of the PDF where the mineral table is located, removing all the content that does not correspond to the table itself.

- Then goes through the string line by line, converting the values into columns of a data table. We will know that a mineral is used in a key technology if the corresponding column for that mineral contains a 1 (otherwise it will contain a 0).

- Finally exports the table to a CSV file for further use.
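The steps above can be sketched roughly as follows. This is not the script shown in Figure 2, only a minimal illustration of the same idea. It assumes PyPDF2 version 2.0 or later (which provides `PdfReader`), and it assumes a hypothetical row layout in the extracted text: mineral name, supply risk, typology and then one 0/1 flag per technology. The function names and column names are invented for the example, and the real table will probably need additional clean-up rules.

```python
import csv
import io


def extract_pages_text(pdf_path, first_page, last_page):
    """Return the text of pages first_page..last_page (1-indexed, inclusive)."""
    # Imported here so the parsing helpers below can be used without PyPDF2.
    from PyPDF2 import PdfReader

    reader = PdfReader(pdf_path)
    return "\n".join(
        reader.pages[i].extract_text() or ""
        for i in range(first_page - 1, last_page)
    )


def parse_table_lines(text, technologies):
    """Convert table-like lines into dictionaries, skipping everything else.

    Assumed layout per line: <name ...> <supply risk> <typology> <0/1 flags>.
    `technologies` must contain at least one column name.
    """
    n = len(technologies)
    rows = []
    for line in text.splitlines():
        tokens = line.split()
        if len(tokens) < n + 3:
            continue  # too short to be a table row (page numbers, blanks)
        flags = tokens[-n:]
        if any(flag not in ("0", "1") for flag in flags):
            continue  # headers or body text, not a data row
        row = {
            "mineral": " ".join(tokens[: -n - 2]),
            "supply_risk": tokens[-n - 2],
            "typology": tokens[-n - 1],
        }
        # A 1 means the mineral is used in that technology, a 0 that it is not.
        row.update({tech: int(flag) for tech, flag in zip(technologies, flags)})
        rows.append(row)
    return rows


def rows_to_csv(rows, fieldnames):
    """Serialise the parsed rows as CSV text with a header line."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

With the report saved locally under a placeholder name such as `report.pdf`, the text of pages 238-240 would be obtained with `extract_pages_text("report.pdf", 238, 240)`, passed to `parse_table_lines` together with the list of technology column names, and the result written to disk from the string returned by `rows_to_csv`.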

International Energy Agency (IEA):

- Selected information:

We selected the report "Global Critical Minerals Outlook 2024". It provides an overview of industrial developments in 2023 and early 2024, and offers medium- and long-term prospects for the demand and supply of key minerals for the energy transition. It also assesses risks to the reliability, sustainability and diversity of critical mineral supply chains.

- Necessary preparation:

The format of the document, PDF, allows our virtual assistant to ingest the information directly. In this case, we will not make any adjustments to the selected information.

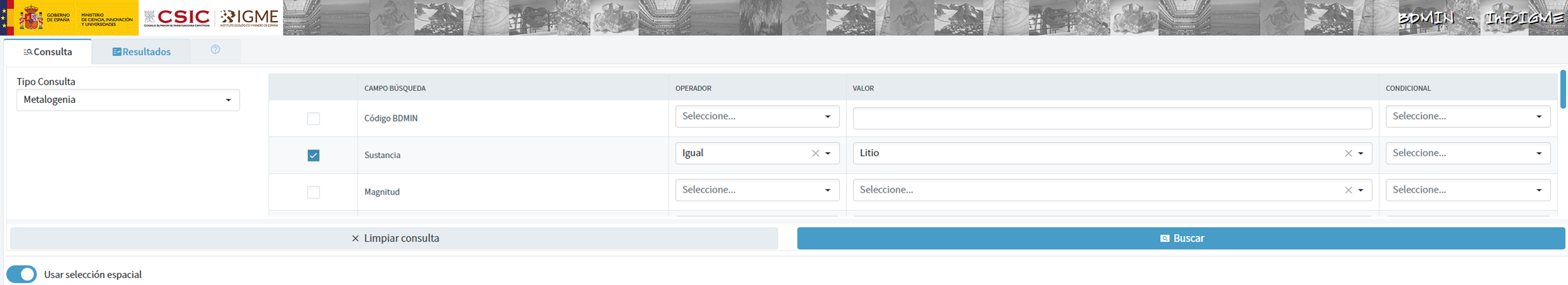

Spanish Geological and Mining Institute's Minerals Database (BDMIN):

- Selected information:

In this case, we use the form to select the existing data in this database for indications and deposits in the field of metallogeny, in particular those with lithium content.

Figure 3: Dataset selection in BDMIN.

- Necessary preparation:

The web tool allows this data to be viewed online and also exported in various formats. We select all the data to be exported and click on the export option to download an Excel file with the desired information.

Figure 4: Visualization and download tool in BDMIN

Figure 5: BDMIN Downloaded Data.

All the files that make up our knowledge base can be found on GitHub, so that the reader can skip the phase of downloading and preparing the information.

5.2. GPT configuration and customisation for critical minerals

When we talk about "creating a GPT," we are actually referring to the configuration and customisation of a GPT (Generative Pre-trained Transformer) based language model to suit a specific use case. In this context, we are not creating the model from scratch, but adjusting how the pre-existing model (such as OpenAI's GPT-4) interacts and responds within a specific domain, in this case, on critical minerals.

First of all, we access the application through our browser and, if we do not have an account, we follow the registration and login process on the ChatGPT platform. As mentioned above, in order to create a GPT step-by-step, you will need to have a Plus account. However, readers who do not have such an account can work with a free account by interacting with ChatGPT through a standard conversation.

Figure 6: ChatGPT login and registration page.

Once logged in, select the "Explore GPT" option, and then click on "Create" to begin the process of creating your GPT.

Figure 7: Creation of new GPT.

A split screen for creating a new GPT will be displayed: on the left, we will be able to talk to the system to indicate the characteristics that our GPT should have, while on the right we will be able to interact with our GPT to validate that its behaviour is adequate as we go through the configuration process.

Figure 8: Screen of creating new GPT.

In the GitHub repository of this project, we can find all the prompts or instructions that we will use to configure and customise our GPT. We will have to enter them sequentially in the "Create" tab, located on the left-hand side of the screen, to complete the steps detailed below.

The steps we will follow for the creation of the GPT are as follows:

1. First, we will outline the purpose and basic considerations for our GPT so that it understands how it is meant to be used.

Figure 9: Basic instructions for new GPT.

2. We will then create a name and an image to represent our GPT and make it easily identifiable. In our case, we will call it MateriaGuru.

Figure 10: Name selection for new GPT.

Figure 11: Image creation for GPT.

3. We will then build the knowledge base from the information previously selected and prepared to feed the knowledge of our GPT.

Figure 12: Uploading of information to the new GPT knowledge base.

4. Now, we can customise conversational aspects such as its tone, the level of technical complexity of its responses or whether we expect brief or elaborate answers.

5. Lastly, from the "Configure" tab, we can indicate the conversation starters desired so that users interacting with our GPT have some ideas to start the conversation in a predefined way.

Figure 13: Configure GPT tab.

In Figure 13 we can also see the final result of our training, where key elements such as its image, name, instructions, conversation starters or the documents that are part of its knowledge base appear.

5.3. Validation and publication of GPT

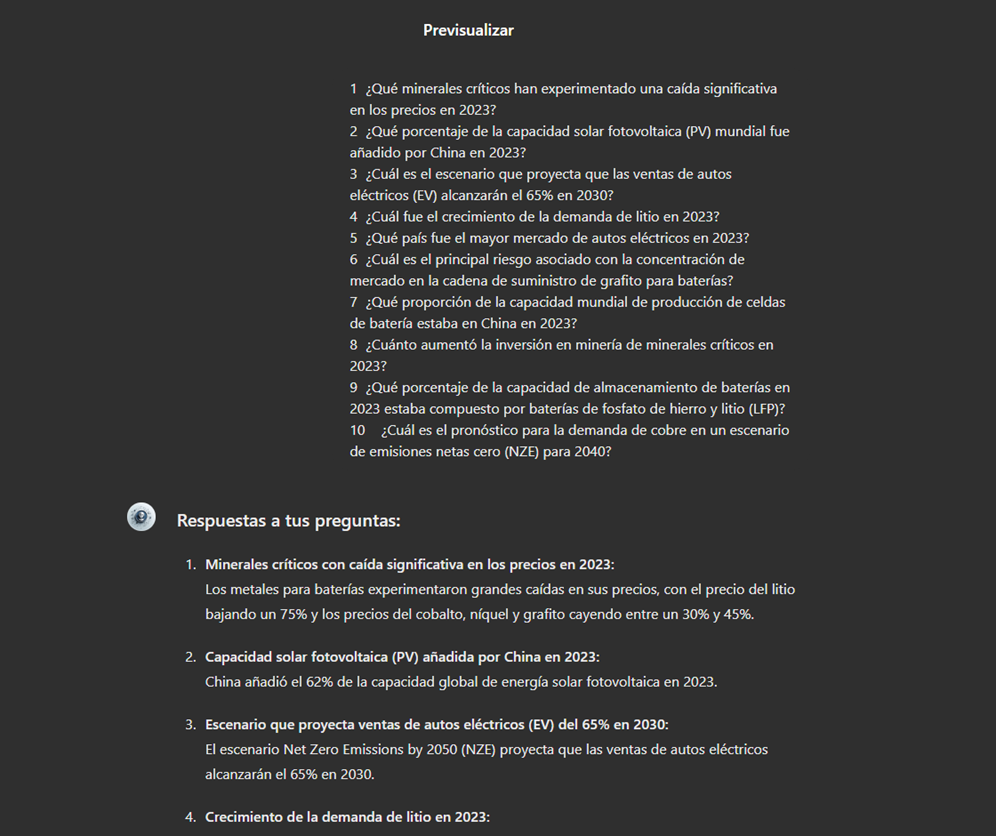

Before we sign off our new GPT-based assistant, we will proceed with a brief validation of its correct configuration and learning with respect to the subject matter around which we have trained it. For this purpose, we prepared a battery of questions that we will ask MateriaGuru to check that it responds appropriately to a real scenario of use.

| # | Question | Answer |
|---|---|---|
| 1 | Which critical minerals have experienced a significant drop in prices in 2023? | Battery mineral prices saw particularly large drops with lithium prices falling by 75% and cobalt, nickel and graphite prices falling by between 30% and 45%. |
| 2 | What percentage of global solar photovoltaic (PV) capacity was added by China in 2023? | China accounted for 62% of the increase in global solar PV capacity in 2023. |
| 3 | What is the scenario that projects electric car (EV) sales to reach 65% by 2030? | The Net Zero Emissions (NZE) scenario for 2050 projects that electric car sales will reach 65% by 2030. |
| 4 | What was the growth in lithium demand in 2023? | Lithium demand increased by 30% in 2023. |
| 5 | Which country was the largest electric car market in 2023? | China was the largest electric car market in 2023 with 8.1 million electric car sales representing 60% of the global total. |
| 6 | What is the main risk associated with market concentration in the battery graphite supply chain? | More than 90% of battery-grade graphite and 77% of refined rare earths in 2030 originate in China, posing a significant risk to market concentration. |
| 7 | What proportion of global battery cell production capacity was in China in 2023? | China owned 85% of battery cell production capacity in 2023. |
| 8 | How much did investment in critical minerals mining increase in 2023? | Investment in critical minerals mining grew by 10% in 2023. |
| 9 | What percentage of battery storage capacity in 2023 was composed of lithium iron phosphate (LFP) batteries? | By 2023, LFP batteries would constitute approximately 80% of the total battery storage market. |
| 10 | What is the forecast for copper demand in a net zero emissions (NZE) scenario for 2040? | In the net zero emissions (NZE) scenario for 2040, copper demand is expected to have the largest increase in terms of production volume. |

Figure 14: Table with battery of questions for the validation of our GPT.

Using the preview section on the right-hand side of our screens, we launch the battery of questions and validate that the answers correspond to those expected.

Figure 15: Validation of GPT responses.
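For readers who prefer to keep a record of this check, the comparison can also be done semi-automatically. The sketch below is an assumption for illustration and is not part of the original exercise: answers are pasted by hand from the preview pane into a dictionary, and a helper reports which key figures from the table in Figure 14 are missing from each one. The function names and the sample responses are invented for the example, and the substring match is deliberately crude (for instance, "30%" would also match "130%").

```python
def missing_figures(answer, expected_figures):
    """Return the expected figures that do not appear in the answer text."""
    # Ignore spacing and case so that "8.1 million" matches "8.1million".
    normalised = answer.replace(" ", "").lower()
    return [
        figure
        for figure in expected_figures
        if figure.replace(" ", "").lower() not in normalised
    ]


def validate(responses, expected):
    """Map each question number to the figures missing from its answer."""
    return {
        number: missing_figures(responses.get(number, ""), figures)
        for number, figures in expected.items()
    }


# Key figures taken from the expected answers to questions 4, 5 and 8.
expected = {4: ["30%"], 5: ["8.1 million", "60%"], 8: ["10%"]}

# Made-up responses standing in for text copied from the GPT preview.
responses = {
    4: "Lithium demand increased by 30% in 2023.",
    5: "China was the largest market, with 60% of global sales.",
    8: "Investment in critical minerals mining grew by 10% in 2023.",
}

report = validate(responses, expected)
print(report)
```

An empty list means every expected figure was found; any figure listed for a question is a prompt to reread that answer manually rather than proof that it is wrong.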

Finally, click on the "Create" button to finalise the process. We will be able to select between different alternatives to restrict its use by other users.

Figure 16: Publication of our GPT.

6. Scenarios of use

In this section we show several scenarios in which we can take advantage of MateriaGuru in our daily life. On the GitHub of the project you can find the prompts used to replicate each of them.

6.1. Consultation of critical minerals information

The most typical scenario for the use of this type of GPT is assistance in answering questions related to the topic in question, in this case, critical minerals. As an example, we have prepared a set of questions that the reader can pose to the GPT created, in order to understand in more detail the relevance and current status of a critical material such as graphite, based on the reports provided to our GPT.

Figure 17: Resolution of critical mineral queries.

We can also ask it specific questions about the tabulated information provided on existing deposits and indications in Spanish territory.