The carbon footprint is a key indicator for understanding the environmental impact of our actions. It measures the amount of greenhouse gas emissions released into the atmosphere as a result of human activities, most notably the burning of fossil fuels such as oil, natural gas and coal. These gases, which include carbon dioxide (CO2), methane (CH4) and nitrous oxide (N2O), contribute to global warming by trapping heat in the earth's atmosphere.

Many actions are being carried out by different organisations to try to reduce the carbon footprint. These include the measures set out in the European Green Deal and the Sustainable Development Goals. But this is an area where every small action counts and, as citizens, we can also contribute to this goal through small changes in our lifestyles.

Moreover, this is an area where open data can have a major impact. In particular, the report "The economic impact of open data: opportunities for value creation in Europe (2020)" highlights how open data has saved the equivalent of 5.8 million tonnes of oil every year in the European Union by promoting greener energy sources. This includes 79.6 billion in cost savings on energy bills.

This article reviews some solutions that help us measure our carbon footprint to raise awareness of the situation, as well as useful open data sources.

Calculators to know your carbon footprint

The European Union has a web application where everyone can analyse the life cycle of products and energy consumed in five specific areas (food, mobility, housing, household appliances and household goods), based on 16 environmental impact indicators. Users enter certain data, such as their energy expenditure or the details of their vehicle, and the solution calculates the level of impact. The website also offers recommendations for improving consumption patterns. It was compiled using data from Ecoinvent and Agri-footprint, as well as different public reports detailed in its methodology.

The UN also launched a similar solution, but with a focus on consumer goods. It allows the creation of product value chains by mapping the materials, processes and transport used in their manufacture and distribution, using a combination of company-specific activity data and secondary data. The emission factors and datasets for materials and processes come from a combination of data sources such as Ecoinvent, the Swedish Environment Institute, DEFRA (UK Department for Environment, Food and Rural Affairs), academic papers, etc. The calculator is also linked to the United Nations platform for carbon footprint offsetting. This allows users of the application to take immediate climate action by contributing to UN green projects.

Looking at Spain, the Ministry for Ecological Transition and the Demographic Challenge has several tools to facilitate the calculation of the carbon footprint, aimed at different audiences: organisations, municipalities and farms. They take into account both direct emissions and indirect emissions from electricity consumption. Among other data sources, they use information from the National Greenhouse Gas Inventory. The Ministry also provides an estimate of the carbon dioxide removals generated by an emission reduction project.
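The general idea behind calculators of this kind can be sketched as activity data multiplied by an emission factor, summed over direct and indirect sources. The Python sketch below is purely illustrative: the function name, the activity categories and, above all, the emission factor values are placeholder assumptions, not figures taken from the Ministry's tools or from the National Greenhouse Gas Inventory.

```python
# Illustrative sketch: footprint = sum(activity amount x emission factor).
# The factors below are PLACEHOLDER values chosen for readability, not official ones.
PLACEHOLDER_FACTORS_KG_CO2E = {
    "diesel_litres": 2.5,     # direct emissions (fuel burned on site), hypothetical
    "natural_gas_kwh": 0.2,   # direct emissions, hypothetical
    "electricity_kwh": 0.25,  # indirect emissions from purchased electricity, hypothetical
}


def carbon_footprint_kg(activity, factors=PLACEHOLDER_FACTORS_KG_CO2E):
    """Return total kg CO2e for a dict of {activity_name: amount}."""
    unknown = set(activity) - set(factors)
    if unknown:
        raise KeyError(f"No emission factor for: {sorted(unknown)}")
    return sum(amount * factors[name] for name, amount in activity.items())


# Hypothetical yearly consumption of a small organisation.
example = {"diesel_litres": 100, "electricity_kwh": 1000}
total = carbon_footprint_kg(example)
print(f"{total:.1f} kg CO2e")
```

A real calculator would replace the placeholder dictionary with factors published by an official source and would keep direct and indirect emissions in separate subtotals.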

Another tool linked to this ministry is ComidaAPrueba, launched by the Fundación Vida Sostenible and aimed at finding out the sustainability of citizens' diets. The mobile application, available for both iOS and Android, allows us to calculate the environmental footprint of our meals to make us aware of the impact of our actions. It also proposes healthy recipes that help us to reduce food waste.

But not all actions of this kind are driven by public bodies or non-profit associations. The fight against the deterioration of our environment is also a niche market offering business opportunities. Private companies also offer solutions for calculating the carbon footprint, such as Climate Hero, which is based on multiple data sources.

Data sources to feed carbon footprint calculators

As we have seen, in order to make these calculations, these solutions need to be based on data that allow them to calculate the relationship between certain consumption habits and the emissions generated. To do this, they draw on a variety of data sources, many of which are open. In Spain, for example, we find:

- National Statistics Institute (INE). The INE provides data on atmospheric emissions by branch of activity, as well as for households. It can be filtered by gas type and its equivalence in thousands of tonnes of CO2. It also provides data on the historical evolution of the achievement of carbon footprint reduction targets, which are based on the National Inventories of Emissions to the Atmosphere, prepared by the Ministry for Ecological Transition and the Demographic Challenge.

- Autonomous Communities. Several regional governments carry out inventories of pollutant emissions into the atmosphere. This is the case of the Basque Country and the Community of Madrid. Some regions also publish open forecast data, such as the Canary Islands, which provides projections of climate change in tourism or drought situations.

Other international data services to consider are:

- EarthData. This service provides full and open access to NASA's collection of Earth science data to understand and protect our planet. The website provides links to commonly used data on greenhouse gases, including carbon dioxide, methane, nitrous oxide, ozone, chlorofluorocarbons and water vapour, as well as information on their environmental impact.

- Eurostat. The Statistical Office of the European Commission regularly publishes estimates of quarterly greenhouse gas emissions in the European Union, broken down by economic activity. The estimates cover all quarters from 2010 to the present.

- Life Cycle Assessment (LCA). This platform is the EU's knowledge base on sustainable production and consumption. It provides a product life cycle inventory for supply chain analysis. Data from business associations and other sources related to energy carriers, transport and waste management are used.

- Our World in Data. One of the most widely used datasets on this portal contains information on CO2 and greenhouse gas emissions through key metrics. It draws on various primary data sources, such as the US Energy Information Administration and the Global Carbon Project. All raw data and scripts are available in their GitHub repository.

These repositories are just a sample, but there are many more sources with valuable data to help us become more aware of the climate situation we live in and the impact our small day-to-day actions have on our planet. Reducing our carbon footprint is crucial to preserving our environment and ensuring a sustainable future. And only together will we be able to achieve our goals.

The unstoppable advance of ICTs in cities and rural territories, and the social, economic and cultural context that sustains it, requires skills and competences that position us advantageously in new scenarios and environments of territorial innovation. In this context, the Provincial Council of Badajoz has been able to adapt and anticipate the circumstances, and in 2018 it launched the initiative "Badajoz Es Más - Smart Provincia".

What is "Badajoz Es Más"?

The project "Badajoz Is More" is an initiative carried out by the Provincial Council of Badajoz with the aim of achieving more efficient services, improving the quality of life of its citizens and promoting entrepreneurship and innovation through technology and data governance in a region made up of 135 municipalities. The aim is to digitally transform the territory, favouring the creation of business opportunities, social improvement and settlement of the population.

Traditionally, "Smart Cities" projects have focused their efforts on cities, renovation of historic centres, etc. However, "Badajoz Es Más" is focused on the transformation of rural areas, smart towns and their citizens, putting the focus on rural challenges such as depopulation of rural municipalities, the digital divide, talent retention or the dispersion of services. The aim is to avoid isolated "silos" and transform these challenges into opportunities by improving information management, through the exploitation of data in a productive and efficient way.

Citizens at the Centre

The "Badajoz es Más" project aims to carry out the digital transformation of the territory by making available to municipalities, companies and citizens the new technologies of IoT, Big Data, Artificial Intelligence, etc. The main lines of the project are set out below.

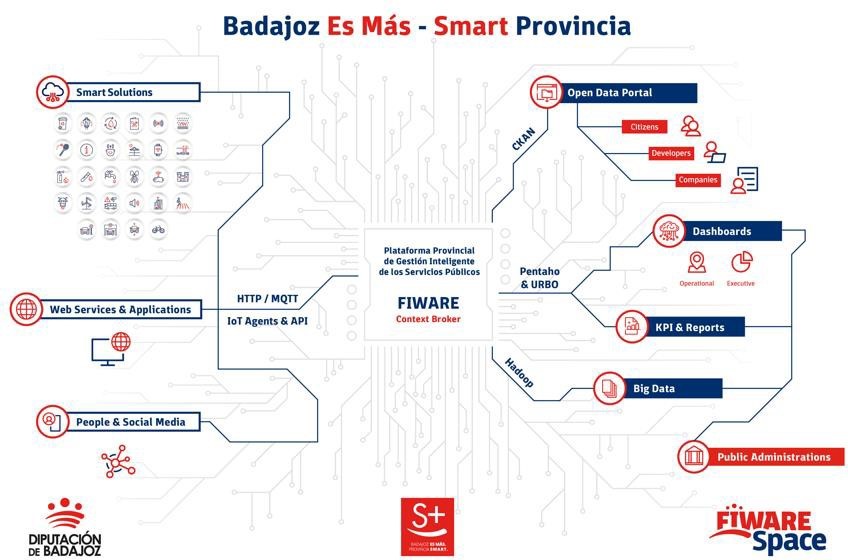

Provincial Platform for the Intelligent Management of Public Services

It is the core component of the initiative, as it allows for the integration of information from any IoT device, information system or data source in a single place for storage, visualisation and analysis. Specifically, data is collected from a variety of sources: the various sensors of smart solutions deployed in the region, web services and applications, citizen feedback and social networks.

All information is collected on a platform based on the open source standard FIWARE, an initiative promoted by the European Commission that provides the capacity to homogenise data (FIWARE Data Model) and favour its interoperability. Built according to the guidelines set by AENOR (UNE 178104), it has a central module, the Orion Context Broker (OCB), which allows the entire information life cycle to be managed. In this way, it offers the ability to centrally monitor and manage a scalable set of public services through internal dashboards.
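To give an idea of what homogenised data looks like when it reaches a context broker, the sketch below builds an entity in the NGSI v2 format, where every attribute carries a value and a type, and prepares (without sending) the request that would register it at the `/v2/entities` endpoint. It assumes the NGSI v2 API is in use, which the article does not state; the host name, the entity identifier, the attribute names and the helper functions are hypothetical and do not describe the actual Badajoz platform.

```python
import json
from urllib import request


def build_ngsi_entity(entity_id, entity_type, attributes):
    """Build an NGSI v2 entity: each attribute carries a value and a type."""
    entity = {"id": entity_id, "type": entity_type}
    for name, (value, attr_type) in attributes.items():
        entity[name] = {"value": value, "type": attr_type}
    return entity


def build_create_request(base_url, entity):
    """Prepare (but do not send) a POST to the NGSI v2 /v2/entities endpoint."""
    return request.Request(
        url=f"{base_url}/v2/entities",
        data=json.dumps(entity).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


entity = build_ngsi_entity(
    "AirQualityObserved:beach-001",  # hypothetical identifier
    "AirQualityObserved",            # illustrative entity type
    {
        "no2": (12.5, "Number"),                             # made-up reading
        "dateObserved": ("2024-03-04T10:00:00Z", "DateTime"),
    },
)
req = build_create_request("http://orion.example.org:1026", entity)  # hypothetical host
print(req.get_method(), req.full_url)
print(json.dumps(entity, indent=2))
```

Passing `req` to `urllib.request.urlopen` would actually send it; that step is left out so the sketch runs without a broker.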

The platform is "multi-entity", i.e. it provides information, knowledge and services to both the Provincial Council itself and its associated Municipalities (also known as "Smart Villages"). The visualisation of the different information exploitation models processed at the different levels of the Platform is carried out on different dashboards, which can provide service to a specific municipality or locality only showing its data and services, or also provide a global view of all the services and data at the level of the Provincial Council of Badajoz.

Some of the information collected on the platform is also made available to third parties through various channels:

- Open data portal. Collected data that can be opened to third parties for reuse is shared through its open data portal. In it we can find information as diverse as real-time data on the blue flag beaches in the region (air quality, water quality, noise pollution, capacity, etc. are monitored) or traffic flow, which makes it possible to predict traffic jams.

- Citizens' portal Digital Province Badajoz. This portal offers information on the solutions currently implemented in the province and their data in real time in a user-friendly way, with a simple user experience that allows non-technical people to access the projects developed.

The following graph shows the cycle of information, from its collection, through the platform and distribution to the different channels. All this under strong data governance.

Efficient public services

In addition to the implementation and start-up of the Provincial Platform for the Intelligent Management of Public Services, this project has already integrated various existing services or "verticals" in order to:

- Start implementing these new services in the province and serve as the example and the "spearhead" of this technological transformation.

- Show the benefits of implementing these technologies in order to disseminate and demonstrate them, with the aim of making enough of an impact that other local councils and organisations will gradually join the initiative.

There are currently more than 40 companies sending data to the Provincial Platform, more than 60 integrated data sources, more than 800 connected devices, more than 500 transactions per minute... It should be noted that work is underway to ensure that the new calls for tender include a clause so that data from the various works financed with public money can also be sent to the platform.

The idea is to be able to standardise management, so that the solution that has been implemented in one municipality can also be used in another. This not only improves efficiency, but also makes it possible to compare results between municipalities. You can see some of the services already implemented in the Province, as well as their dashboards built from the Provincial Platform, in this video.

Innovation Ecosystem

In order for the initiative to reach its target audience, the Provincial Council of Badajoz has developed an innovation ecosystem that serves as a meeting point for:

- Citizens, who demand these services.

- Entrepreneurs and educational entities, which have an interest in these technologies.

- Companies, which have the capacity to implement these solutions.

- Public entities, which can implement this type of project.

The aim is to facilitate and provide the necessary tools, knowledge and advice so that the projects that emerge from this meeting can be carried out.

At the core of this ecosystem is a physical innovation centre called the FIWARE Space. FIWARE Space carries out tasks such as the organisation of events for the dissemination of Smart technologies and concepts among companies and citizens, demonstrative and training workshops, Hackathons with universities and study centres, etc. It also has a Showroom for the exhibition of solutions, organises financially endowed Challenges and is present at national and international congresses.

In addition, they carry out mentoring work for companies and other entities. In total, around 40 companies have been mentored by FIWARE Space, launching their own solutions on several occasions on the FIWARE Market, or proposing the generated data models as standards for the entire global ecosystem. These companies are offered a free service to acquire the necessary knowledge to work in a standardised way, generating uniform data for the rest of the region, and to connect their solutions to the platform, helping and advising them on the challenges that may arise.

One of the keys to FIWARE Space is its open nature, having signed many collaboration agreements with both local and international entities. For example, work on the standardisation of advanced data models for tourism is ongoing with the Future Cities Institute (Argentina). Those who would like more information can follow the centre's activity through its weekly blog.

Next steps: convergence with Data Spaces and Gaia-X

As a result of the collaborative and open nature of the project, the Data Space concept fits perfectly with the philosophy of "Badajoz is More". The Badajoz Provincial Council currently has a multitude of verticals with interesting information for sharing (and future exploitation) of data in a reliable, sovereign and secure way. As a Public Entity, comparing and obtaining other sources of data will greatly enrich the project, providing an external view that is essential for its growth. Gaia-X is the proposal for the creation of a data infrastructure for Europe, and it is the standard towards which the "Badajoz es Más" project is currently converging, as a result of its collaboration with the Gaia-X Spain hub.

The Use Case Observatory is an initiative led by data.europa.eu, the European Open Data Portal. This is a research project on the economic, governmental, social and environmental impact of open data. The project will run for three years, from 2022 to 2025, during which the European Data Portal will monitor 30 cases of open data re-use and publish findings in regular deliverables.

In 2022 it published a first report and now, in April 2024, it has presented volume 2 of the exploratory analysis on the use of open data. In this second instalment, it analyses thirteen of the initial use cases that remain under study, three of them Spanish, and draws the following conclusions:

- The paper first of all underlines the high potential of open data re-use.

- It stresses that many organisations and applications owe their very existence to open data.

- It also points to the need to unlock more broadly the potential impact of open data on the economy, society and the environment.

- To achieve the above point, it points to continued support for the reuse community as crucial to identifying opportunities for financial growth.

The three Spanish cases: UniversiDATA-Lab, Tangible Data and Planttes

To select the use cases, the Use Case Observatory conducted an inventory based on three sources: the examples collected in the European portal's annual maturity studies, the solutions participating in the EU Datathon and the reuse examples available in the data.europa.eu use case repository. Only projects developed in Europe were taken into account, trying to maintain a balance between the different countries.

In addition, projects that had won an award or were aligned with the European Commission's priorities for 2019 to 2024 were highlighted. To finalise the selection, data.europa.eu conducted interviews with representatives of eligible use cases interested in participating in the project.

On this second occasion, the new report reviews one project in the economic impact area, three in the governmental area, six in the social area and four in the environmental area.

In both the first volume and this one, it highlights three Spanish cases: UniversiDATA-Lab and Tangible Data in the social field and Planttes in the environmental category.

UniversiDATA-Lab, the union of six universities around open data

UniversiDATA-Lab is focused on higher education. It is a public portal for the advanced and automatic analysis of datasets published by the six Spanish universities that are part of the UniversiDATA portal: the Autonomous University of Madrid (UAM), the Carlos III University of Madrid, the Complutense University of Madrid (UCM), the University of Huelva, the University of Valladolid (UVa) and the Rey Juan Carlos University.

The aim of UniversiDATA-Lab is to transform the static analyses of the portal section into dynamic results. The Observatory's report notes that this project "encourages the use of shared resources" between the different university centres. Another notable impact is the implementation of dynamic web applications that read the UniversiDATA catalogue in real time, retrieve all available data and perform online data analysis.

Regarding the previous report, it praises its "considerable effort to convert intricate data into user-friendly information", and notes that this project provides detailed documentation to help users understand the nature of the data analysed.

Tangible Data, making spatial data understandable

Tangible Data is a project that transforms data from its digital context into a physical context by creating data sculptures in public space. These data sculptures help people who lack certain digital skills to understand them. It uses data from international agencies (e.g. NASA, World Bank) and other similar platforms as data sources.

This second volume highlights its "significant" evolution, as since last year the project has moved from minimum viable product testing to the delivery of integral projects. This has allowed the team to "explore commercial and educational opportunities, such as exhibitions, workshops, etc.", as extrapolated from the interviews conducted. In addition, the four key processes (design, creation, delivery and measurement) have been standardised and have made the project globally accessible and rapidly deployable.

Planttes, an environmental initiative that is making its way into the Observatory

The third Spanish example, Planttes, is a citizen science app that informs users about which plants are in flower and whether this can affect people allergic to pollen. It uses open data from the Aerobiology Information Point (PIA-UAB), among others, which it complements with data provided by users to create personalised maps.

Of this project, the Observatory notes that, by harnessing community involvement and technology, "the initiative has made significant progress in understanding and mitigating the impact of pollen allergies with a commitment to furthering awareness and education in the years to come".

Regarding the work developed, the report points out that Planttes has evolved from a mobile application to a web application in order to improve accessibility. The aim of this transition is to make it easier for users to use the platform without the limitations of mobile applications.

The Use Case Observatory will deliver its third volume in 2025. Its raison d'être goes beyond analysing and outlining achievements and challenges. As this is an ongoing project over three years, it will allow for the extrapolation of concrete ideas for improving open data impact assessment methodologies.

1. Introduction

Visualisations are graphical representations of data that allow us to communicate, in a simple and effective way, the information linked to the data. The visualisation possibilities are very wide ranging, from basic representations such as line graphs, bar charts or relevant metrics, to interactive dashboards.

In this section of "Step-by-Step Visualisations" we regularly present practical exercises that make use of open data available at datos.gob.es or other similar catalogues. They address and describe in a simple way the steps necessary to obtain the data, carry out the relevant transformations and analyses, and finally draw conclusions, summarising the information.

Documented code developments and free-to-use tools are used in each practical exercise. All the material generated is available for reuse in the GitHub repository of datos.gob.es.

In this particular exercise, we will explore the current state of electric vehicle penetration in Spain and the future prospects for this disruptive technology in transport.

Access the data lab repository on Github.

Run the data pre-processing code on Google Colab.

In this video (available with English subtitles), the author explains what you will find both on Github and Google Colab.

2. Context: why is the electric vehicle important?

The transition towards more sustainable mobility has become a global priority, placing the electric vehicle (EV) at the centre of many discussions on the future of transport. In Spain, this trend towards the electrification of the car fleet not only responds to a growing consumer interest in cleaner and more efficient technologies, but also to a regulatory and incentive framework designed to accelerate the adoption of these vehicles. With a growing range of electric models available on the market, electric vehicles represent a key part of the country's strategy to reduce greenhouse gas emissions, improve urban air quality and foster technological innovation in the automotive sector.

However, the penetration of EVs in the Spanish market faces a number of challenges, from charging infrastructure to consumer perception and knowledge of EVs. Expansion of the charging network, together with supportive policies and fiscal incentives, is key to overcoming existing barriers and stimulating demand. As Spain moves towards its sustainability and energy transition goals, analysing the evolution of the electric vehicle market becomes an essential tool to understand the progress made and the obstacles that still need to be overcome.

3. Objective

This exercise focuses on showing the reader techniques for the processing, visualisation and advanced analysis of open data using Python. We will adopt a "learning-by-doing" approach so that the reader can understand the use of these tools in the context of solving a real and topical challenge such as the study of EV penetration in Spain. This hands-on approach not only enhances understanding of data science tools, but also prepares readers to apply this knowledge to solve real problems, providing a rich learning experience that is directly applicable to their own projects.

The questions we will try to answer through our analysis are:

- Which vehicle brands led the market in 2023?

- Which vehicle models were the best-selling in 2023?

- What market share did electric vehicles take in 2023?

- Which electric vehicle models were the best-selling in 2023?

- How have vehicle registrations evolved over time?

- Are we seeing any trends in electric vehicle registrations?

- How do we expect electric vehicle registrations to develop next year?

- How much CO2 emission reduction can we expect from the registrations achieved over the next year?

4. Resources

To complete the development of this exercise we will require the use of two categories of resources: Analytical Tools and Datasets.

4.1. Dataset

To complete this exercise we will use a dataset provided by the Dirección General de Tráfico (DGT) through its statistical portal, also available from the National Open Data catalogue (datos.gob.es). The DGT statistical portal is an online platform aimed at providing public access to a wide range of data and statistics related to traffic and road safety. This portal includes information on traffic accidents, offences, vehicle registrations, driving licences and other relevant data that can be useful for researchers, industry professionals and the general public.

In our case, we will use their dataset of vehicle registrations in Spain available via:

- Open Data Catalogue of the Spanish Government.

- Statistical portal of the DGT.

Although during the development of the exercise we will show the reader the necessary mechanisms for downloading and processing, we include pre-processed data in the associated GitHub repository, so that the reader can proceed directly to the analysis of the data if desired.

*The data used in this exercise were downloaded on 04 March 2024. The licence applicable to this dataset can be found at https://datos.gob.es/avisolegal.

4.2. Analytical tools

- Programming language: Python - a programming language widely used in data analysis due to its versatility and the wide range of libraries available. These tools allow users to clean, analyse and visualise large datasets efficiently, making Python a popular choice among data scientists and analysts.

- Platform: Jupyter Notebooks - a web application that allows you to create and share documents containing live code, equations, visualisations and narrative text. It is widely used for data science, data analytics, machine learning and interactive programming education.

- Main libraries and modules:

- Data manipulation: Pandas - an open source library that provides high-performance, easy-to-use data structures and data analysis tools.

- Data visualisation:

- Matplotlib: a library for creating static, animated and interactive visualisations in Python.

- Seaborn: a library based on Matplotlib. It provides a high-level interface for drawing attractive and informative statistical graphs.

- Statistics and algorithms:

- Statsmodels: a library that provides classes and functions for estimating many different statistical models, as well as for testing and exploring statistical data.

- Pmdarima: a library specialised in automatic time series modelling, facilitating the identification, fitting and validation of models for complex forecasts.

5. Exercise development

It is advisable to run the Notebook with the code at the same time as reading the post, as the two didactic resources complement each other in the explanations that follow.

The proposed exercise is divided into three main phases.

5.1 Initial configuration

This section can be found in point 1 of the Notebook.

In this short first section, we will configure our Jupyter Notebook and our working environment to be able to work with the selected dataset. We will import the necessary Python libraries and create some directories where we will store the downloaded data.
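As a rough idea of what this configuration step involves, the following sketch creates a working folder structure with Python's standard library. The directory names and the helper function are assumptions made for illustration; the Notebook may organise its folders differently, and the imports of the analysis libraries are left out here.

```python
from pathlib import Path

# Hypothetical folder layout; the Notebook may use different names.
BASE_DIR = Path("data")
RAW_DIR = BASE_DIR / "raw"              # files as downloaded from the portal
PROCESSED_DIR = BASE_DIR / "processed"  # cleaned data ready for analysis


def create_directories(*directories):
    """Create each directory (and its parents) if it does not exist yet."""
    for directory in directories:
        directory.mkdir(parents=True, exist_ok=True)


create_directories(RAW_DIR, PROCESSED_DIR)
print([str(d) for d in (RAW_DIR, PROCESSED_DIR)])
```

Using `exist_ok=True` means the cell can be re-run safely without raising an error if the folders are already there.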

5.2 Data preparation

This section can be found in point 2 of the Notebook.

All data analysis requires a phase of accessing and processing to obtain the appropriate data in the desired format. In this phase, we will download the data from the statistical portal and transform it into Apache Parquet format before proceeding with the analysis.

Users who want to go deeper into this task can read the Practical Introductory Guide to Exploratory Data Analysis.
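The transformation to Parquet can be sketched with Pandas as shown below. This is a minimal sketch under several assumptions: that the raw file is a delimited text file, that the separator is a semicolon, and that the column names look like the ones in the demo. None of this is taken from the DGT files, whose actual layout is handled in the Notebook. Note also that `DataFrame.to_parquet` needs a Parquet engine such as pyarrow to be installed.

```python
import pandas as pd


def normalise_columns(df):
    """Return a copy with lower-case, underscore-separated column names."""
    out = df.copy()
    out.columns = [str(c).strip().lower().replace(" ", "_") for c in out.columns]
    return out


def csv_to_parquet(csv_path, parquet_path, sep=";"):
    """Read a delimited text file and store it as Apache Parquet.

    DataFrame.to_parquet requires a Parquet engine such as pyarrow.
    """
    df = normalise_columns(pd.read_csv(csv_path, sep=sep))
    df.to_parquet(parquet_path, index=False)
    return df


# Demo of the cleaning step on a tiny made-up frame (hypothetical column names).
demo = normalise_columns(
    pd.DataFrame({" Fecha Matricula": ["2023-01-15"], "Marca": ["SEAT"]})
)
print(list(demo.columns))
```

Parquet stores data by column and keeps the column types, which usually makes later reads with `pd.read_parquet` faster than parsing the text file again.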

5.3 Data analysis

This section can be found in point 3 of the Notebook.

5.3.1 Descriptive analysis

In this third phase, we will begin our data analysis. To do so, we will answer the first questions using data visualisation tools to familiarise ourselves with the data. Some examples of the analysis are shown below:

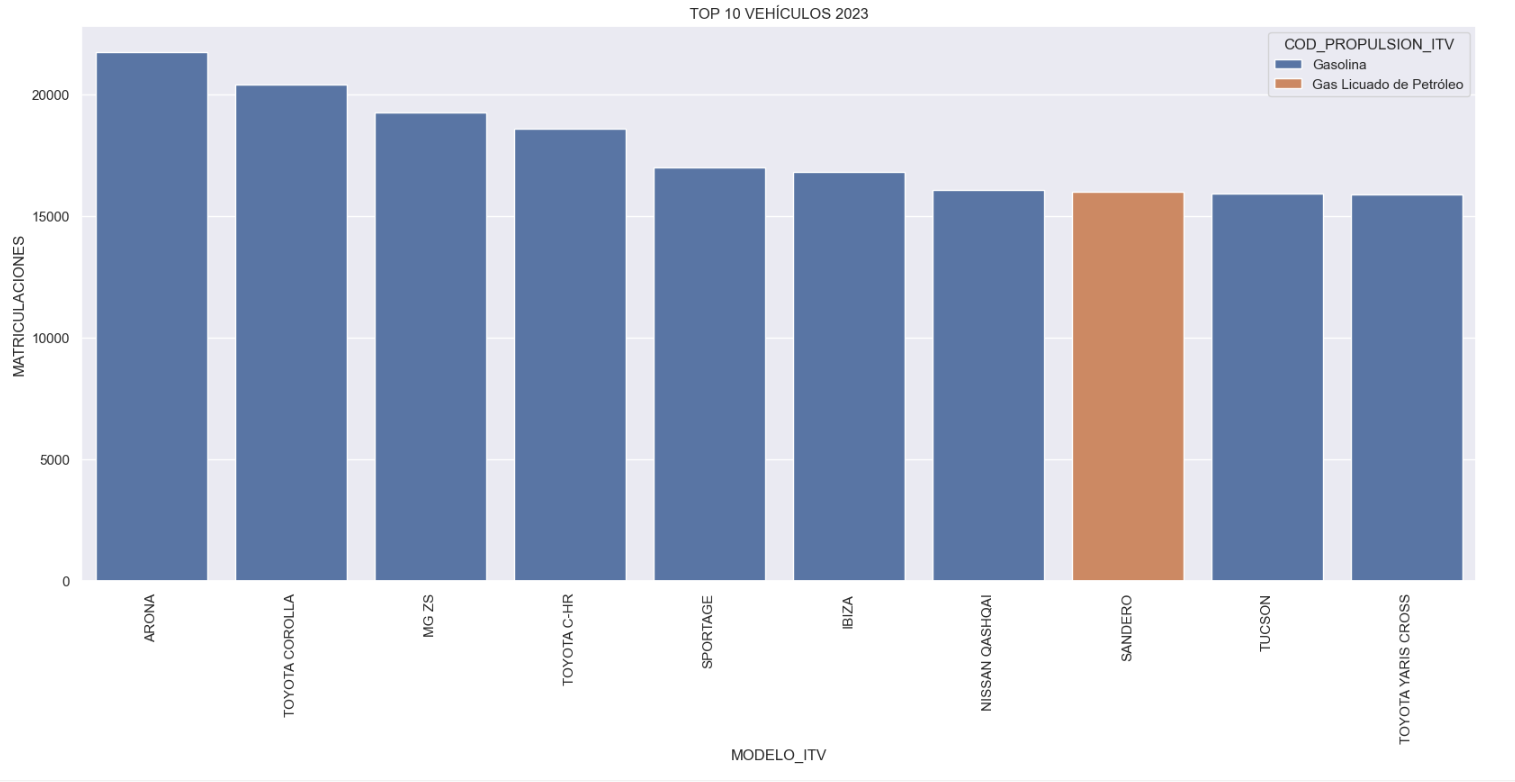

- Top 10 Vehicles registered in 2023: In this visualisation we show the ten vehicle models with the highest number of registrations in 2023, also indicating their combustion type. The main conclusions are:

- The only European-made vehicles in the Top 10 are the Arona and the Ibiza from Spanish brand SEAT. The rest are Asian.

- Nine of the ten vehicles are powered by gasoline.

- The only vehicle in the Top 10 with a different type of propulsion is the DACIA Sandero LPG (Liquefied Petroleum Gas).

Figure 1. Graph "Top 10 vehicles registered in 2023"

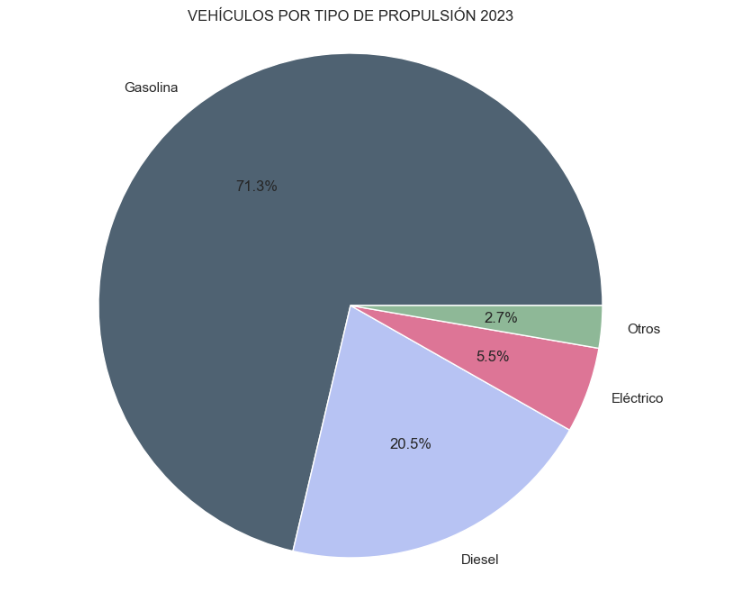

- Market share by propulsion type: In this visualisation we represent the percentage of vehicles registered by each type of propulsion (petrol, diesel, electric or other). We see how the vast majority of the market (>70%) was taken up by petrol vehicles, with diesel being the second choice, and how electric vehicles reached 5.5%.

Figure 2. Graph "Market share by propulsion type".
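A market share figure like this one can be obtained by counting registrations per propulsion type and normalising the counts. The sketch below shows the idea with Pandas on a tiny made-up sample; the column name `propulsion`, the function name and the data are assumptions for illustration and do not reproduce the DGT figures.

```python
import pandas as pd

# Tiny made-up sample; the column name "propulsion" is a hypothetical label.
registrations = pd.DataFrame(
    {"propulsion": ["petrol", "petrol", "petrol", "diesel", "electric"]}
)


def market_share(df, column="propulsion"):
    """Percentage of rows per category, sorted from largest to smallest."""
    return (df[column].value_counts(normalize=True) * 100).round(1)


shares = market_share(registrations)
print(shares)
```

The resulting series can be passed directly to a bar or pie chart in Matplotlib or Seaborn.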

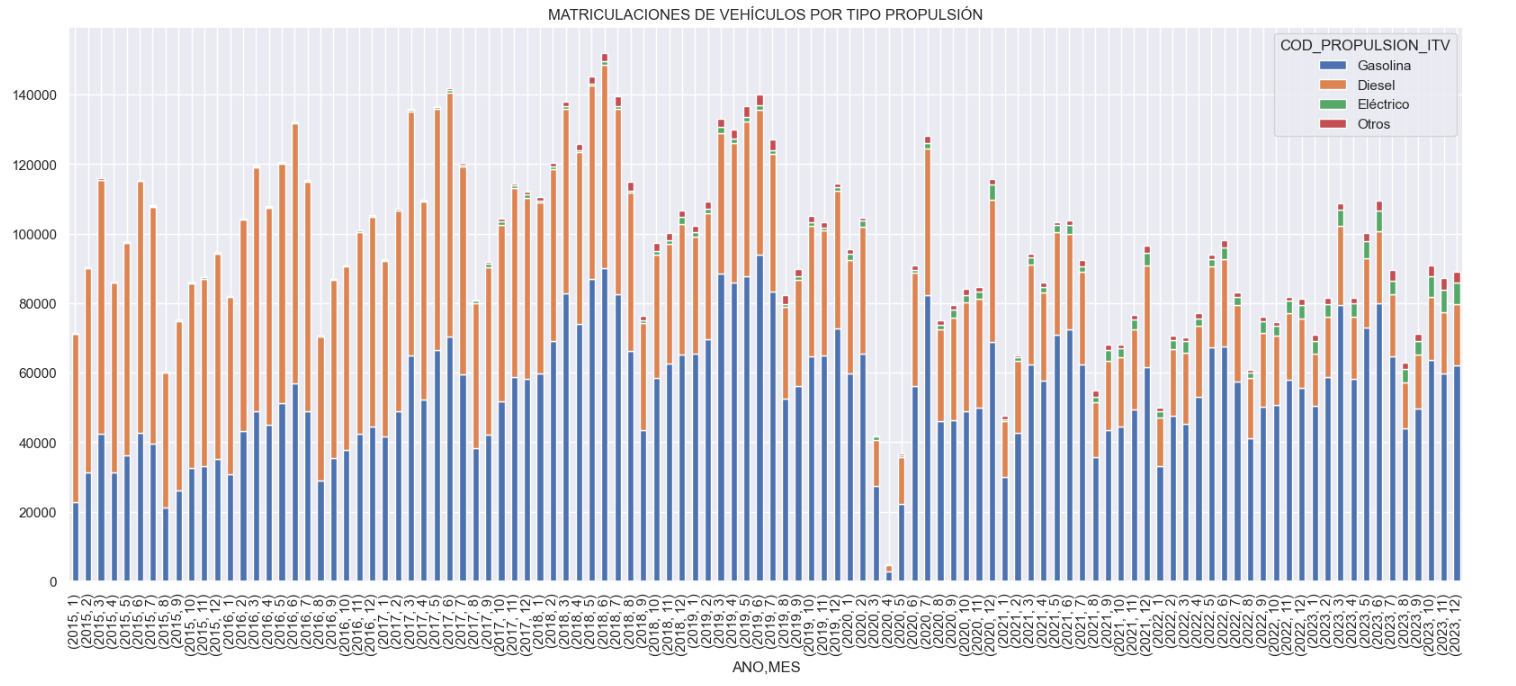

- Historical development of registrations: This visualisation represents the evolution of vehicle registrations over time. It shows the monthly number of registrations between January 2015 and December 2023 distinguishing between the propulsion types of the registered vehicles, and there are several interesting aspects of this graph:

- We observe an annual seasonal behaviour, i.e. patterns or variations that are repeated at regular time intervals. We see recurring high levels of registrations in June/July, while in August/September they decrease drastically. This is very relevant, as the analysis of time series with a seasonal factor has certain particularities.

- The huge drop in registrations during the first months of COVID is also very remarkable.

- We also see that post-COVID registration levels are lower than before.

- Finally, we can see how between 2015 and 2023 the registration of electric vehicles is gradually increasing.

Figure 3. Graph "Vehicle registrations by propulsion type".

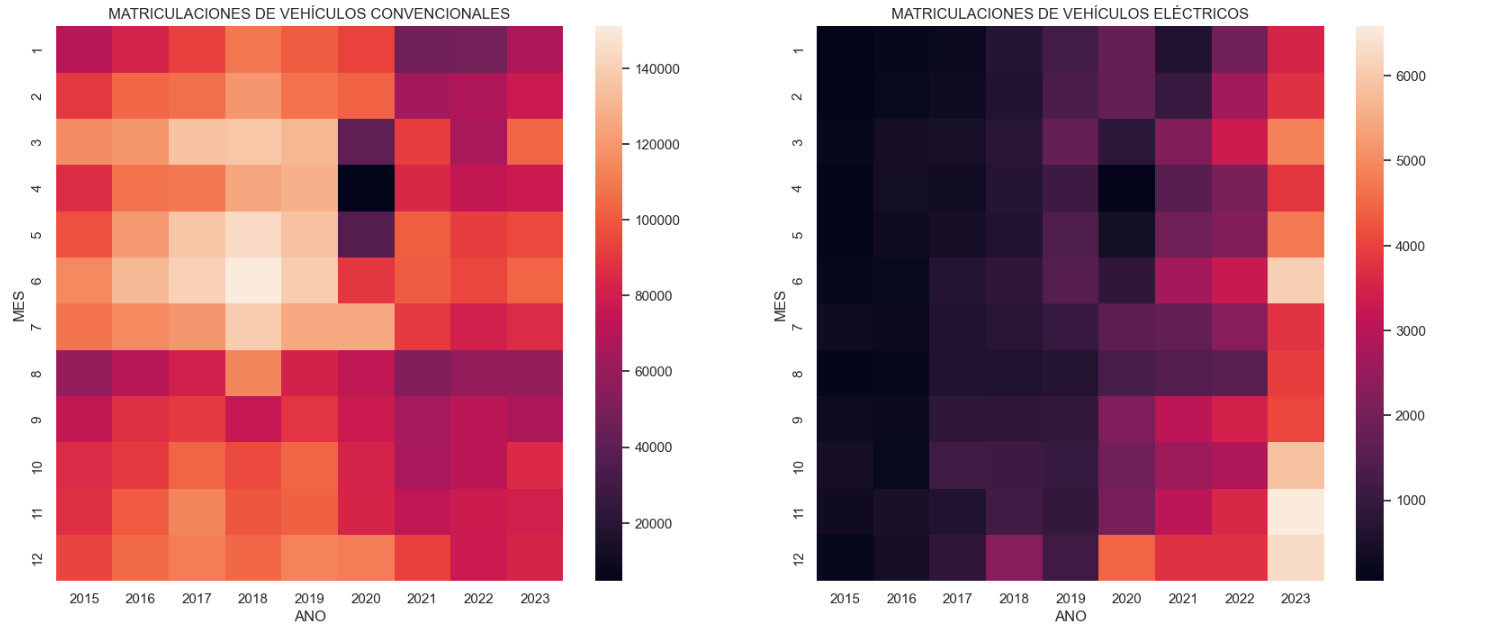

- Trend in the registration of electric vehicles: We now analyse the evolution of electric and non-electric vehicles separately using heat maps as a visual tool. We can observe very different behaviours between the two graphs. The electric vehicle shows a trend of increasing registrations year by year and, although COVID brought vehicle registrations to a halt, subsequent years have maintained the upward trend.

Figure 4. Graph "Trend in registration of conventional vs. electric vehicles".
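Heat maps of this kind are usually built from a table with one row per year and one column per month. The following sketch shows how such a table could be derived with Pandas; the column names, the function name and the figures are made up for illustration and are not the Notebook's own code or data.

```python
import pandas as pd

# Made-up monthly counts; column names are hypothetical.
monthly = pd.DataFrame(
    {
        "date": pd.to_datetime(
            ["2022-01-01", "2022-02-01", "2023-01-01", "2023-02-01"]
        ),
        "registrations": [100, 120, 150, 180],
    }
)


def year_month_matrix(df, date_col="date", value_col="registrations"):
    """Pivot a monthly series into a year x month table for a heat map."""
    tmp = df.assign(year=df[date_col].dt.year, month=df[date_col].dt.month)
    return tmp.pivot_table(
        index="year", columns="month", values=value_col, aggfunc="sum"
    )


matrix = year_month_matrix(monthly)
print(matrix)
# The matrix could then be drawn with, for example, seaborn.heatmap(matrix).
```

Reading the table row by row shows the year-on-year trend, while reading it column by column exposes the seasonal pattern within each year.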

5.3.2. Predictive analytics

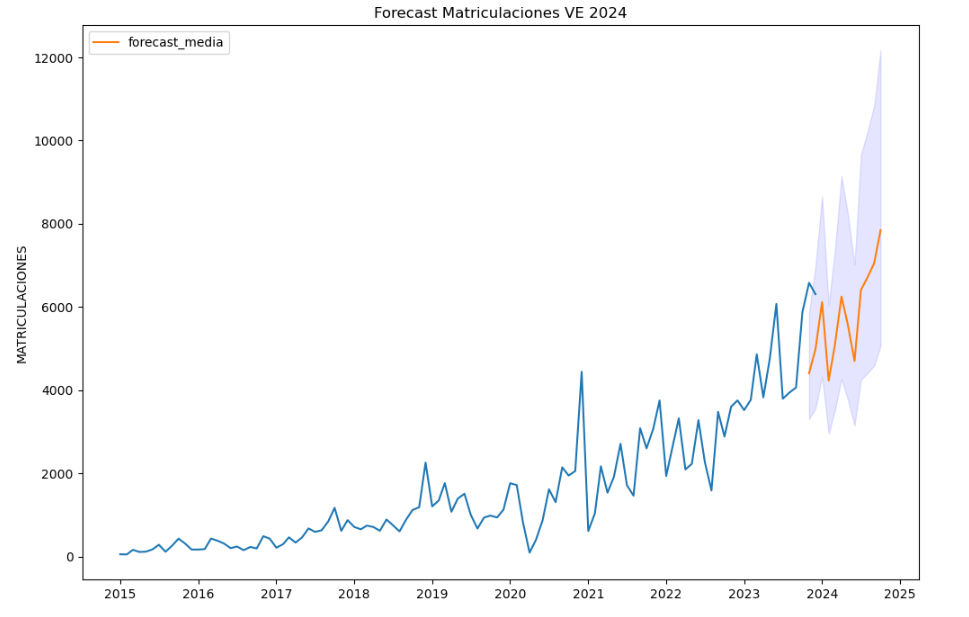

To answer the last question objectively, we will use predictive models that allow us to make estimates regarding the evolution of electric vehicles in Spain. As we can see, the model built projects continued growth in registrations, with around 70,000 expected over the year and values close to 8,000 registrations in December 2024 alone.

Figure 5. Graph "Predicted electric vehicle registrations".
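The text does not state which predictive model was used. As a hedged illustration of how a seasonal monthly series can be projected, the following Python sketch applies a seasonal naive forecast scaled by year-over-year growth: each future month takes the value of the same month in the last observed year, multiplied by the ratio between the last two yearly totals. The function name `seasonal_growth_forecast` and the input figures are hypothetical and purely synthetic; they are not the data or the model from the exercise.

```python
def seasonal_growth_forecast(history, season=12, horizon=12):
    """Forecast `horizon` steps from a monthly series (hypothetical helper).

    Each forecast repeats the value from the same month of the last observed
    year, scaled by the growth ratio between the last two yearly totals.
    """
    if len(history) < 2 * season:
        raise ValueError("need at least two full seasons of history")
    last_year = history[-season:]
    previous_year = history[-2 * season:-season]
    if sum(previous_year) == 0:
        raise ValueError("previous season total is zero; growth is undefined")
    growth = sum(last_year) / sum(previous_year)
    # Compound the growth once per forecast year ahead.
    return [
        last_year[i % season] * growth ** (i // season + 1)
        for i in range(horizon)
    ]


# Synthetic monthly counts (not real registration data): the second year
# is exactly double the first, so the estimated growth ratio is 2.0.
first_year = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12]
second_year = [2 * value for value in first_year]
forecast = seasonal_growth_forecast(first_year + second_year)
print(forecast)
```

A baseline like this keeps the seasonal shape of the series and is a useful yardstick; a dedicated time-series model would normally be compared against it before trusting its estimates.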

5. Conclusions

In conclusion, the analysis techniques used show that the electric vehicle, a segment led for now by the manufacturer Tesla, is penetrating the Spanish vehicle fleet at an increasing speed, although it is still a long way behind other alternatives such as diesel or petrol. We will see in the coming years whether the pace grows at the level needed to meet the sustainability targets set and whether Tesla remains the leader despite the strong entry of Asian competitors.

6. Do you want to do the exercise?

If you want to learn more about electric vehicles and test your analytical skills, go to this code repository, where you can develop this exercise step by step.

Also, remember that more exercises are available in the "Step-by-step visualisations" section.

Content elaborated by Juan Benavente, industrial engineer and expert in technologies linked to the data economy. The contents and points of view reflected in this publication are the sole responsibility of the author.

In the digital age, data has become an invaluable tool in almost all areas of society, and the world of sport is no exception. The availability of data related to this field can have a positive impact on the promotion of health and wellbeing, as well as on the improvement of physical performance of both athletes and citizens in general. Moreover, its benefits are also evident in the economic sphere, as this data can be used to publicise the sports offer or to generate new services, among other things.

Here are three examples of their impact.

Promoting an active and healthy lifestyle

The availability of public information can inspire citizens to participate in physical activity and sport, both by providing examples of its health benefits and by facilitating access to opportunities that suit their individual preferences and needs.

An example of the possibilities of open data in this field can be found in the OpenActive project. It is an initiative launched in 2016 by the Open Data Institute (ODI) together with Sport England, a public body aimed at encouraging physical activity for everyone in England. OpenActive allows sport providers to publish standardised open data, based on a standard developed by the ODI to ensure quality, interoperability and reliability. These data have enabled the development of tools to facilitate the search for and booking of activities, thus helping to combat citizens' physical inactivity. According to an external impact assessment, this project could have helped prevent up to 110 premature deaths, saved up to £3 million in healthcare costs and generated up to £20 million in productivity gains per year. In addition, it has had a major economic impact on the participating operators that share their data, increasing their customers and thus their profits.

Optimisation of physical work

The data provides teams, coaches and athletes with access to a wealth of information about competitions and their performance, allowing them to conduct detailed analysis and find ways to improve. This includes data on game statistics, health, etc.

In this respect, the French National Agency for Sport, together with the National Institute for Sport, Expertise and Performance (INSEP) and the General Directorate for Sport, has developed the Sport Data Hub - FFS. The project was born in 2020 with the idea of boosting the individual and collective performance of French sport in the run-up to the Paris 2024 Olympic Games. It consists of the creation of a collaborative tool for all those involved in the sports movement (federations, athletes, coaches, technical teams, institutions and researchers) to share data that allows for aggregate comparative analysis at national and international level.

Research to understand the impact of data in areas such as health and the economy

Data related to physical activity can also be used in scientific research to analyse the effects of exercise on health and help prevent injury or disease. They can also help us to understand the economic impact of sporting activities.

As an example, in 2021 the European Commission launched the report Mapping of sport statistics and data in the EU, with data on the economic and social impact of sport, both at EU and member state level, between 2012 and 2021. The study identifies available data sources and collects quantitative and qualitative data. These data are used to compile a series of indicators of the impact of sport on the economy and society, including an entire section focusing on health.

This type of study can be used by public bodies to develop policies to promote these activities and to provide citizens with sport-related services and resources adapted to their specific needs. Such measures could help to prevent diseases and thus save on health costs.

Where to find open data related to sport?

In order to carry out these projects, reliable data sources are needed. At datos.gob.es you can find various datasets on this topic. Most of them have been published by local administrations and refer to sports facilities and equipment, as well as events of this nature.

Within the National Catalogue, DEPORTEData stands out. It is a database of the Ministry of Education, Vocational Training and Sport for the storage and dissemination of statistical results in the field of sport. Its website offers indicators structured in two large blocks:

- Cross-sectional estimates on employment and enterprises, expenditure by households and public administration, education, foreign trade and tourism, all linked to sport.

- Sector-specific information, including indicators on federated sport, coach training, doping control, sporting habits, facilities and venues, as well as university and school championships.

At the European level, we can visit the European Open Data Portal (data.europa.eu), with more than 40,000 datasets on sport, or Eurostat. And if we want to take a closer look at citizens' behaviour, we can go to the Eurobarometer on sport and physical activity, whose data can also be found on data.europa.eu. Similarly, at the global level, the World Health Organisation provides datasets on the effects of physical inactivity.

In conclusion, there is a need to promote the openness of quality, up-to-date and reliable data on sport. This information has a great impact not only on society but also on the economy, and can help us improve the way we participate in, compete in and enjoy sport.

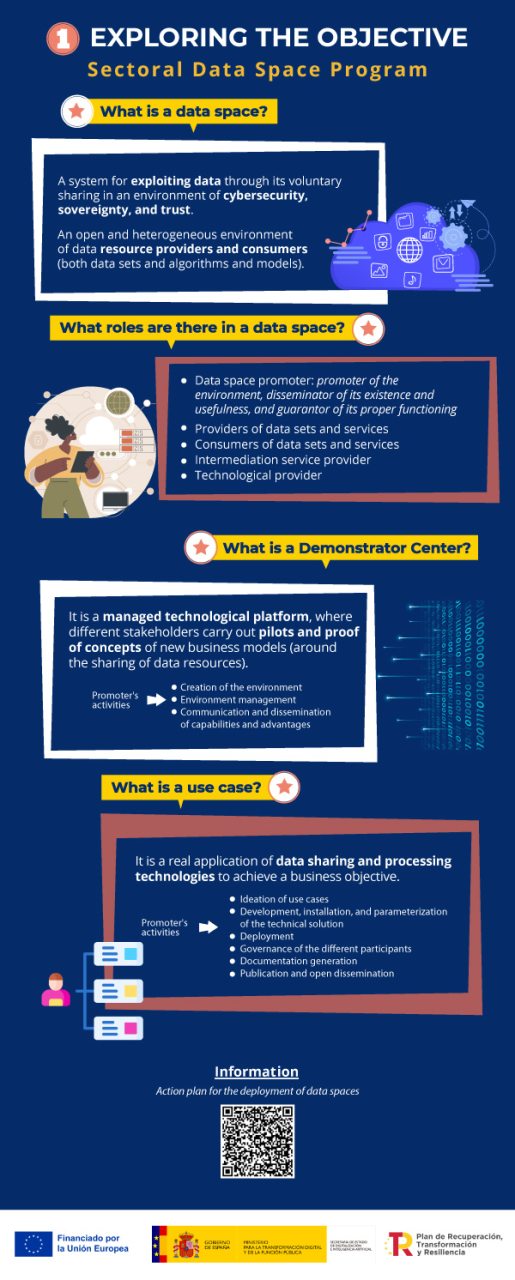

Between 2 April and 16 May, applications for the call for aid for the digital transformation of strategic productive sectors may be submitted at the electronic headquarters of the Ministry for Digital Transformation and Civil Service. Order TDF/1461/2023, of 29 December, modified by Order TDF/294/2024, regulates grants totalling 150 million euros for the creation of demonstrators and use cases, as part of the broader Sectoral Data Spaces Program, promoted by the State Secretariat for Digitalisation and Artificial Intelligence and framed within the Recovery, Transformation and Resilience Plan (PRTR). The objective is to finance the development of data spaces and the promotion of disruptive innovation in strategic sectors of the economy, in line with the strategic lines set out in the Digital Spain Agenda 2026.

Lines, sectors and beneficiaries

The current call includes funding lines for experimental development projects in two complementary areas of action: the creation of demonstration centres (development of technological platforms for data spaces); and the promotion of specific use cases of these spaces. This call is addressed to all sectors except tourism, which has its own call. Beneficiaries may be single entities with their own legal personality, tax domicile in the European Union, and an establishment or branch located in Spain. In the case of the line for demonstration centres, they must also be associative or representative of the value chains of the productive sectors in territorial areas, or with scientific or technological domains.

Infographic-summary

The following infographics show the key information on this call for proposals:

Would you like more information?

- The grant portal for application proposals is available at the following link. On the portal you will find the regulatory bases and the call for applications, a summary of its content, documentation and informative material with presentations and videos, as well as a complete list of questions and answers. You can get help with the call and the application procedure by writing to espaciosdedatos@digital.gob.es. From this portal you can also access the electronic office to submit the application.

- Quick guide to the call for proposals in pdf + downloadable Infographics (on the Sectoral Data Program and Technical Information)

- Link to other documents of interest:

- Additional information on the data space concept

Open data provides relevant information on the state and evolution of different sectors, including employment. Employment data typically includes labour force statistics and information on employees, as well as economic, demographic or benefits-related data, interviews, salaries, vacancies, etc.

This information can provide a clear picture of a country's economic health and the well-being of its citizens, encouraging informed decision-making. It can also serve as a basis for the creation of innovative solutions to assist in a variety of tasks.

In this article we will review some sources of open data on job quality, as well as examples of use to show the potential benefits of re-use.

Where to find employment data?

In datos.gob.es a large number of data sets on employment are available, with the National Statistics Institute (INE) standing out as the national publisher. Thanks to the INE data, we can find out who is employed by sector of activity, type of studies or working day, as well as the reasons for having a part-time job. The data provided by this entity also allows us to know the employment situation of people with disabilities or by sex.

Another source of interest is the State Public Employment Service (sepe.es), which offers statistical data on job seekers, jobs and placements from May 2005 to the present day. To this must be added the regional bodies, many of which have launched their own open employment data portals. This is the case of the Junta de Andalucía.

If we are interested in making a comparison between countries, we can also turn to data from the OECD, Eurostat or the World Bank.

All these data can be of great interest to:

- Policy makers, to better understand and react to labour market dynamics.

- Employers, to optimise their recruitment activities.

- Job seekers, to make better career choices.

- Education and training institutions, to adapt curricula to the needs of the labour market.

Use cases of open data in the employment sector

It is just as important to have sources of open employment data as it is to know how to interpret the information they offer about the sector. This is where reusers come in, taking advantage of this raw material to create data products that can respond to different needs. Let's look at some examples:

- Decision-making and implementation of active policies. Active labour market policies are tools that governments use to intervene directly in the labour market, through training, guidance, hiring incentives, etc. To do so, they need to be aware of market trends and needs. This has led many public bodies to set up observatories, such as those of the SEPE or the Principality of Asturias. There are also specific observatories for each area, such as the one for Equality and Employment. At the European level, Eurostat's proposal stands out: to establish requirements to create a pan-European system for the production of official statistics and specific policy analyses using open data on online job vacancies. This project has been carried out using the BDTI platform. But this field is not limited to the public sector; other actors can also submit proposals. This is the case of Iseak, a non-profit organisation that promotes a research and transfer centre in economics. Iseak seeks to answer questions such as: does an increase in the minimum wage lead to job destruction, or why is there a gender gap in the market?

- Accountability. All this information is useful not only for public bodies, but also for citizens who want to assess whether their government's employment policies are working. For this reason, many governments, such as that of Castilla y León, make this data available to citizens through easy-to-understand visualisations. Data journalism also plays a leading role in this field, with pieces that bring information closer to the general public, such as these examples on salaries or the level of unemployment by area. If you want to know how to make this type of visualisation, we explain it in this step-by-step exercise that characterises the demand for employment and hiring registered in Spain.

- Boosting job opportunities. To bring information of interest to citizens who are actively seeking employment or new job opportunities, there are tools based on open data, such as this app for public employment calls or grants. There are also town councils that create solutions to boost employment and the economy in their locality, such as the Paterna Empléate app. These apps are a much simpler and more user-friendly way of consuming data than traditional job search portals. Barcelona Provincial Council has gone a step further with a tool that uses AI applied to open data to, among other purposes, offer personalised services for individuals, companies and rural sectors, as well as access to job offers. The information it offers comes from notice boards, as well as from the Profile of the contracting party and various municipal websites.

- Development of advanced solutions. Employment data can also be used to power a wide variety of machine learning use cases. One example is this US platform for financial analytics that provides data and information to investors and companies. It uses US unemployment rate data, combined with other data such as postcodes, demographics or weather data.

In short, thanks to this type of data we can not only learn more about the employment situation in our environment, but also feed solutions that help boost the economy or facilitate access to job opportunities. It is therefore a category of data whose publication should be promoted by public bodies at all levels.

The year is coming to an end and it is a good time to review some of the issues that have marked the open data and data sharing ecosystem in Spain, a community that continues to grow and build alliances for the development of innovative technologies. A synergy that lays the foundations to face an interconnected, digital future full of possibilities.

With 2024 just a few days away, we take stock of the news, events and training of interest that have marked the year behind us. In this compilation we review some regulatory developments, new portals and projects promoted by the public sector, as well as various educational resources and reference documentation that 2023 has left us.

Legal regulation for the development of collaborative environments

During this year, in datos.gob.es we have echoed relevant news in the open data and data sharing sector. All of them have contributed to consolidate the appropriate context for interoperability and the promotion of the value of data in our society. The following is a review of the most relevant announcements:

- At the beginning of the year, the European Commission published a first list of high-value datasets, selected because the information they contain is of great value to the economy, the environment and society. For this reason, member states must make them available to the public by summer 2024. This first list of categories includes geospatial, earth observation and environmental, meteorological, statistical, business and mobility data. At the end of 2023, the same body proposed expanding the list of categories of datasets to be considered of high value, adding another seven categories that could be included in the future: climate loss, energy, financial, public administration and government, health, justice and language.

- In the first quarter of the year, Law 37/2007 on the reuse of public sector information was amended in light of the latest European Open Data Directive. Public administrations will now have to comply with, among others, two essential requirements: to focus on the publication of high-value data through APIs and to designate a unit responsible for information to ensure the correct opening of data. These measures are intended to align with the demands of competitiveness and innovation raised by technologies such as AI and with the key role played by data when it comes to configuring data spaces.

- The publication of the UNE data specifications was another milestone in standardization that marked 2023. The volume of data continues to grow and mechanisms are needed to ensure its proper use and exploitation. To this end, there are:

- Another noteworthy advance has been the approval of the consolidated wording of the European Data Regulation (Data Act), which seeks to provide harmonized standards for fair access to and use of data. The legal structure that will drive the data economy in the EU is now a reality. The Data Act and the Data Governance Act also passed in 2023 will contribute to the development of a European Digital Single Market.

- In October 2023 the future Interoperable Europe Act entered the final legislative stage after getting the go-ahead from the member states. The aim of the Interoperable Europe Act is to strengthen interoperability between public sector administrations in the EU and to create digital public services focused on citizens and businesses.

Advances in the open data ecosystem in Spain

In the last year, many public bodies have opted for opening their data in formats suitable for reuse, many of them focused on specific topics, such as meteorology. Some examples are:

- The Diputación de Segovia launched an open data portal with information from city councils.

- The Cabildo de La Palma launched a new open and real-time weather data portal that provides information on current and historical weather and air quality.

- The City Council of Soria also created a georeferenced information viewer that allows users to consult parameters such as air quality, noise level, meteorology or pedestrian traffic, among other variables.

- The Malaga City Council has recently allied with the CSIC to develop a marine observatory that will collect and share open data in real time on coastal activity.

- Progress on new portals will continue during 2024, as there are city councils that have expressed their interest in developing projects of this type. One example is the City Council of Las Torres de Cotillas: it recently launched a municipal website and a citizen participation portal in which it plans to enable an open data space in the near future.

On the other hand, many institutions that already published open data have been expanding their catalog of datasets throughout the year. This is the case of the Canary Islands Statistics Institute (ISTAC), which has implemented various improvements such as the expansion of its semantic open data catalog to achieve better data and metadata sharing.

Along these lines, more agreements have also been signed to promote the opening and sharing of data, as well as the acquisition of related skills. For example, with universities:

- The Navarra Open Data portal incorporated information provided by the Public University of Navarra (UPNA) on its structure, activity, economic data and workforce.

- The University of Valladolid (UVa) has presented a Chair of Transparency and Open Government that will address issues such as data governance, among others.

- The University of Burgos has implemented an open science policy to foster collaboration and knowledge sharing and provide equal access to scientific and research work.

- The Carlos III University of Madrid (UC3M) has partnered with the Community of Madrid to establish the Chair on Territorial Dynamism that will promote research and the development of open data analysis activities, among others.

Disruptive solutions using open data

The winning combination of open data and technology has driven the development of multiple initiatives of interest as a result of the efforts of public administrations, such as, for example:

- The Community of Madrid improved the reliability of its pollen level predictions in the territory by 25% thanks to artificial intelligence and open data. Through the CAM's open data portal, citizens can access an interactive map to find out the level of pollen in the air in their area.

- The Valencia City Council's Chair of Governance at the Polytechnic University (UPV) published a study that uses open data sources to calculate the carbon footprint by neighborhoods in the city of Valencia.

- The Xunta de Galicia presented a digital twin project for territorial management that will have information stored in public and private databases.

- The Consejo Superior de Investigaciones Científicas (CSIC) initiated the TeresIA project for terminology in Spanish that will generate a meta-search engine for access to terminologies of pan-Hispanic scope based on AI and open data.

During 2023, Public Administrations have not only launched technological projects, but have also boosted entrepreneurship around open data with activities such as the Castilla y León Open Data contest. This event awarded prizes to products or services, ideas, data journalism works and didactic resources developed with open data.

Trainings and events to keep up with the trends

Educational materials on open data and related technologies have only grown in 2023. We highlight some free and virtual resources that are available:

- The European Open Data Portal is a reference source in all aspects, also at the training level. Over the last year, it has shared educational resources such as this free course on data visualization, this one on the legal aspects of open data or this one on how to incorporate open data into an application.

- In 2023, the European Interoperability Academy published a free online short course on open source licensing for which no prior knowledge of the subject is required.

- In 2023, we have published more practical exercises from the 'Visualizations step by step' series such as this tutorial to learn how to generate a customized tourist map with MyMaps or this analysis of meteorological data using the "ggplot2" library.

In addition, there are many activities that have been carried out in 2023 to promote the data culture. However, if you missed any of them, you can re-watch the online recordings of the following ones:

- In March, the European Conference on Data and Semantics was broadcast, presenting trends in multilingual data.

- In September, the 2nd National Open Data Meeting was held under the theme "Urgent Call to Action for the Environment". The event continued the tradition started in 2022 in Barcelona, consolidating itself as one of the main meetings in Spain in the field of public sector data reuse and presenting training materials of interest to the community.

- In October, the European benchmark interoperability conference SEMIC 2023, Interoperable Europe in the age of AI, was organized in Madrid.

Reports and other reference documents published in 2023

Having reviewed the news, initiatives, trainings and events, we would like to highlight the in-depth reports published in 2023 on the open data sector and innovative technologies. Some noteworthy ones are:

- The Asociación Multisectorial de la Información (ASEDIE) presented in April 2023 its 11th edition of the Infomediary Sector Report in which it reviews the health of companies working with data, a sector with growth potential. Here you can read the main conclusions.

- From October 2023 Spain co-chaired the Steering Committee of the Open Government Partnership (OGP), a task that has involved driving OGP initiatives and leading open government thematic areas. This organization presented its global Open Government Partnership report in 2023, a document that highlights good practices such as the publication of large volumes of open data by European countries. It also identifies several areas for improvement, such as the publication of more high-value data (HVD) in reusable and interoperable formats.

- The Organisation for Economic Co-operation and Development (OECD) published a report on public administration principles in November 2023 in which it highlighted, among others, digitization as a tool for making data-driven decisions and implementing effective and efficient processes.

- During this year, the European Commission published a report on the integration of data spaces in the European data strategy. Signed by experts in the field, this document lays the groundwork for implementing European dataspaces.

- On the other hand, the open data working group of the Red de Entidades Locales por la Transparencia y la Participación Ciudadana and the Spanish Federation of Municipalities and Provinces presented a list of the 80 datasets to be published to continue completing the guides published in previous years. You can consult it here.

These are just a few examples of what the open data ecosystem has achieved in the last year. If you would like to share any other news with datos.gob.es, leave us a comment or send us an email at dinamizacion@datos.gob.es.

We are currently in the midst of an unprecedented race to master innovations in Artificial Intelligence. Over the past year, the star of the show has been Generative Artificial Intelligence (GenAI), i.e., that which is capable of generating original and creative content such as images, text or music. But advances keep coming, and lately news is beginning to arrive suggesting that the utopia of Artificial General Intelligence (AGI) may not be as far away as we thought. We are talking about machines capable of understanding, learning and performing intellectual tasks with results similar to those of the human brain.

Whether this is true or simply a very optimistic prediction, a consequence of the amazing advances achieved in a very short space of time, what is certain is that Artificial Intelligence already seems capable of revolutionizing practically all facets of our society based on the ever-increasing amount of data used to train it.

And the fact is that if, as Andrew Ng argued back in 2017, artificial intelligence is the new electricity, open data would be the fuel that powers its engine, at least in a good number of applications whose main and most valuable source is public information that is accessible for reuse. In this article we will review a field in which we are likely to see great advances in the coming years thanks to the combination of artificial intelligence and open data: artistic creation.

Generative Creation Based on Open Cultural Data

The ability of artificial intelligence to generate new content could lead us to a new revolution in artistic creation, driven by access to open cultural data and a new generation of artists capable of harnessing these advances to create new forms of painting, music or literature, transcending cultural and temporal barriers.

Music

The world of music, with its diversity of styles and traditions, represents a field full of possibilities for the application of generative artificial intelligence. Open datasets in this field include recordings of folk, classical, modern and experimental music from all over the world and from all eras, digitized scores, and even information on documented music theories. Resources that underpin progress in this area range from the well-known MusicBrainz, the open music encyclopedia, to datasets opened by dominant players in the streaming industry such as Spotify, or projects such as Open Music Europe. From the analysis of all this data, artificial intelligence models can identify unique patterns and styles from different cultures and eras, fusing them to create original musical compositions with tools and models such as OpenAI's MuseNet or Google's MusicLM.

Literature and painting

In the realm of literature, Artificial Intelligence also has the potential not only to make the creation of content on the Internet more productive, but also to produce more elaborate and complex forms of storytelling. Access to digital libraries that house literary works from antiquity to the present day will make it possible to explore and experiment with literary styles, themes and storytelling archetypes from diverse cultures throughout history, in order to create new works in collaboration with human creativity itself. It will even be possible to generate literature tailored to the tastes of smaller groups of readers. Open data such as Project Gutenberg, with more than 70,000 books, or the open digital catalogs of museums and institutions that have published manuscripts, newspapers and other written resources produced by mankind are a valuable resource to feed the learning of artificial intelligence.

The resources of the Digital Public Library of America (DPLA) in the United States or Europeana in the European Union are just a few examples. These catalogs not only include written text, but also vast collections of visual works of art, digitized from the collections of museums and institutions, which in many cases cannot even be admired because the organizations that preserve them do not have enough space to exhibit them to the public. Artificial intelligence algorithms, by analyzing these works, discover patterns and learn about artistic techniques, styles and themes from different cultures and historical periods. This makes it possible for tools such as DALL-E 2 or Midjourney to create visual works from simple text instructions with aesthetics of Renaissance painting, Impressionist painting or a mixture of both.

However, these fascinating possibilities are accompanied by a still unresolved controversy about copyright that is being debated in academic and legal circles and that poses new challenges to the definition of authorship and intellectual property. On the one hand, there is the question of the ownership of rights over creations produced by artificial intelligence. On the other hand, there is the use of datasets containing copyrighted works that have been used in the training of models without the consent of the authors. On both issues there are numerous legal disputes around the world and requests for explicit removal of content from the main training datasets.

In short, we are facing a field where the advance of artificial intelligence seems unstoppable, but we must be very aware not only of the opportunities, but also of the risks involved.

Content prepared by Jose Luis Marín, Senior Consultant in Data, Strategy, Innovation & Digitalization. The contents and points of view reflected in this publication are the sole responsibility of its author.

IATE, which stands for Interactive Terminology for Europe, is a dynamic database designed to support the multilingual drafting of European Union texts. It aims to provide relevant, reliable and easily accessible data with a distinctive added value compared to other sources of lexical information such as electronic archives, translation memories or the Internet.

This tool is of interest to the EU institutions, which have been using it since 2004, and to anyone else who works with EU terminology, such as language professionals, academics, public administrations, companies or the general public. The project, launched in 1999 by the Translation Center, is available to any organization or individual who needs to draft, translate or interpret a text on the EU.

Origin and usability of the platform

IATE was created in 2004 by merging different EU terminology databases. The original Eurodicautom, TIS, Euterpe, Euroterms and CDCTERM databases were imported into IATE. This process resulted in a large number of duplicate entries, with the consequence that many concepts are covered by several entries instead of just one. To solve this problem, a cleaning working group was formed, and since 2015 it has been responsible for organizing analyses and data cleaning initiatives to consolidate duplicate entries into a single entry. This explains why statistics on the number of entries and terms show a downward trend, as more content is deleted and updated than is created.

In addition to performing queries, users can download IATE datasets using the IATExtract extraction tool, which generates filtered exports.

This inter-institutional terminology base was initially designed to manage and standardize the terminology of EU agencies. Subsequently, however, it also began to be used as a support tool in the multilingual drafting of EU texts, and has now become a complex and dynamic terminology management system. Although its main purpose is to facilitate the work of translators working for the EU, it is also of great use to the general public.

IATE has been available to the public since 2007 and brings together the terminology resources of all EU translation services. The Translation Center manages the technical aspects of the project on behalf of the partners involved: European Parliament (EP), Council of the European Union (Consilium), European Commission (COM), Court of Justice (CJEU), European Central Bank (ECB), European Court of Auditors (ECA), European Economic and Social Committee (EESC), European Committee of the Regions (CoR), European Investment Bank (EIB) and the Translation Centre for the Bodies of the European Union (CdT).

The IATE data structure is based on a concept-oriented approach, which means that each entry corresponds to a concept (terms are grouped by their meaning), and each concept should ideally be covered by a single entry. Each IATE entry is divided into three levels:

- Language Independent Level (LIL)
- Language Level (LL)
- Term Level (TL)

For more information, see Section 3 ('Structure Overview') below.
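As a rough illustration of the concept-oriented structure described above, the three levels can be modelled as nested records. This is only a sketch: the class and attribute names (`Entry`, `LanguageBlock`, `Term`, `entry_id` and so on) are assumptions made for the example and do not reflect IATE's actual internal schema.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Term:
    """Term Level (TL): a single term in a single language (hypothetical fields)."""
    text: str
    term_type: str = "term"
    reliability: str = "untested"


@dataclass
class LanguageBlock:
    """Language Level (LL): every term that names the concept in one language."""
    language_code: str
    terms: list[Term] = field(default_factory=list)


@dataclass
class Entry:
    """Language Independent Level (LIL): one concept shared by all languages."""
    entry_id: int
    subject_fields: list[str] = field(default_factory=list)
    languages: dict[str, LanguageBlock] = field(default_factory=dict)

    def add_term(self, language_code: str, term: Term) -> None:
        # Create the language block on first use, then attach the term to it.
        block = self.languages.setdefault(language_code, LanguageBlock(language_code))
        block.terms.append(term)

    def terms_in(self, language_code: str) -> list[str]:
        # Return the term texts for one language, or an empty list if absent.
        block = self.languages.get(language_code)
        return [t.text for t in block.terms] if block else []


# One concept, several terms grouped by meaning rather than by spelling.
entry = Entry(entry_id=1, subject_fields=["ENVIRONMENT"])
entry.add_term("en", Term("greenhouse gas"))
entry.add_term("en", Term("GHG", term_type="abbreviation"))
entry.add_term("es", Term("gas de efecto invernadero"))
```

Because terms hang from the concept rather than the other way round, a synonym or an abbreviation is added to an existing entry instead of creating a new one, which is the behaviour the single-entry-per-concept goal relies on.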

Reference source for professionals and useful for the general public

IATE reflects the needs of translators in the European Union, so it can cover any field that has appeared or may appear in texts published by the EU, its agencies and bodies. The financial crisis, the environment, fisheries and migration are areas that have been worked on intensively in recent years. To achieve the best result, IATE uses the EuroVoc thesaurus as a classification system for thematic fields.

As we have already pointed out, this database can be used by anyone who is looking for the right term about the European Union. IATE allows exploration in fields other than that of the term consulted and filtering of the domains in all EuroVoc fields and descriptors. The technologies used mean that the results obtained are highly accurate and are displayed as an enriched list that also includes a clear distinction between exact and fuzzy matches of the term.
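The distinction between exact and fuzzy matches can be illustrated with a small sketch. The article does not describe how IATE's search is implemented, so the approach below (a similarity ratio from Python's standard `difflib` module, with an arbitrary threshold of 0.7) is purely an assumption chosen for the example, as are the function name `classify_matches` and the sample terms.

```python
from difflib import SequenceMatcher


def classify_matches(query, terms, threshold=0.7):
    """Split terms into exact matches and fuzzy matches for a query.

    Illustrative only: the similarity measure and threshold are assumptions,
    not a description of IATE's search engine.
    """
    exact, fuzzy = [], []
    normalized_query = query.casefold()
    for term in terms:
        normalized_term = term.casefold()
        if normalized_term == normalized_query:
            exact.append(term)
        elif SequenceMatcher(None, normalized_query, normalized_term).ratio() >= threshold:
            fuzzy.append(term)
    return exact, fuzzy


exact, fuzzy = classify_matches(
    "fishing quota",
    ["fishing quota", "fishing quotas", "greenhouse gas"],
)
```

Here `exact` holds the term identical to the query and `fuzzy` holds the close variant, while the unrelated term is discarded.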

The public version of IATE includes the official languages of the European Union, as defined in Regulation No. 1 of 1958. In addition, the database is fed systematically through proactive projects: if it is known that a certain topic is to be covered in EU texts, files relating to this topic are created or improved so that, when the texts arrive, the translators already have the required terminology in IATE.

How to use IATE

To search in IATE, simply type in a keyword or part of a collection name. You can define further filters for your search, such as institution, type or date of creation. Once the search has been performed, select a collection and at least one display language.

To download subsets of IATE data you need to be registered. Registration is completely free and also allows you to store some user preferences. Downloading is a simple process, and the data is available in CSV or TBX format.

The IATE download file, whose information can also be accessed in other ways, contains the following fields:

- Language independent level:
  - Token number: the unique identifier of each concept.
  - Subject field: the concepts are linked to the fields of knowledge in which they are used. The conceptual structure is organized around twenty-one thematic fields with various subfields. Note that a concept can be linked to more than one thematic field.
- Language level:
  - Language code: each language has its own ISO code.
- Term level:
  - Term: the term that designates the concept.
  - Type of term: term, abbreviation, phrase, formula or short form.
  - Reliability code: IATE uses four codes to indicate the reliability of terms: untested, minimal, reliable or very reliable.
  - Evaluation: when several terms are stored in a language, specific evaluations can be assigned as follows: preferable, admissible, discarded, obsolete and proposed.
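The fields listed above lend themselves to simple processing once a CSV export has been downloaded. The following is a minimal sketch using Python's standard `csv` module. The column names (`entry_id`, `subject_field`, `language_code`, `term`, `term_type`, `reliability`, `evaluation`), the comma delimiter and the sample rows are all assumptions made up for the example, so they should be adapted to the header of the actual export file.

```python
import csv
import io
from collections import defaultdict

# Made-up sample rows; a real export may use different headers and delimiters.
SAMPLE_EXPORT = """entry_id,subject_field,language_code,term,term_type,reliability,evaluation
1001,ENVIRONMENT,en,greenhouse gas,term,reliable,preferable
1001,ENVIRONMENT,en,GHG,abbreviation,reliable,admissible
1001,ENVIRONMENT,es,gas de efecto invernadero,term,very reliable,preferable
1002,FISHERIES,en,fishing quota,term,minimal,
"""

# The four reliability codes, ordered from lowest to highest.
RELIABILITY_ORDER = ["untested", "minimal", "reliable", "very reliable"]


def load_terms(csv_text, min_reliability="reliable"):
    """Group terms by entry and language, keeping only sufficiently reliable ones."""
    threshold = RELIABILITY_ORDER.index(min_reliability)
    grouped = defaultdict(lambda: defaultdict(list))
    for row in csv.DictReader(io.StringIO(csv_text)):
        if RELIABILITY_ORDER.index(row["reliability"]) >= threshold:
            grouped[row["entry_id"]][row["language_code"]].append(row["term"])
    # Convert nested defaultdicts to plain dicts for easier inspection.
    return {entry_id: dict(languages) for entry_id, languages in grouped.items()}


glossary = load_terms(SAMPLE_EXPORT)
```

With the sample rows, `glossary` keeps the English and Spanish terms of the first entry and drops the second entry, whose only term falls below the requested reliability level.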

A continuously updated terminology database

The IATE database is in constant growth and open to public participation: anyone can contribute by proposing new terms to be added to existing files, or by proposing new files. You can send your proposal to iate@cdt.europa.eu, or use the 'Comments' link that appears at the bottom right of the file of the term you are looking for. You can provide as much relevant information as you wish to justify the reliability of the proposed term, or suggest a new term for inclusion. A terminologist of the language in question will study each proposal and evaluate its inclusion in IATE.

In August 2023, IATE announced the availability of version 2.30.0 of this data system, which adds new fields to the platform and improves functions such as the export of enriched files to optimize data filtering. As we have seen, this EU inter-institutional terminology database will continue to evolve to meet the needs of EU translators and IATE users in general.

Another important aspect is that this database is used for the development of computer-assisted translation (CAT) tools, which helps to ensure the quality of the translation work of the EU translation services. The results of translators' terminology work are stored in IATE and translators, in turn, use this database for interactive searches and to feed domain- or document-specific terminology databases for use in their CAT tools.

IATE, with more than 7 million terms in over 700,000 entries, is a reference in the field of terminology and is considered the largest multilingual terminology database in the world. More than 55 million queries are made to IATE each year from more than 200 countries, which is a testament to its usefulness.