In the era of Artificial Intelligence (AI), data has ceased to be simple records and has become the essential fuel of innovation. However, for this fuel to really drive new services, more effective public policies or advanced AI models, it is not enough to have large volumes of information: the data must be varied, high-quality and, above all, accessible.

This is where data pooling (or data grouping) comes in: a practice that consists of combining data to generate greater value from its joint use. Far from being an abstract idea, data pooling is emerging as one of the key mechanisms for transforming the data economy in Europe and has just received new impetus from the Digital Omnibus proposal, aimed at simplifying and strengthening the European data-sharing framework.

As we analyzed in our recent post on the Data Union Strategy, the European Union aspires to build a Single Data Market in which information can flow safely and with guarantees. Data pooling is precisely the operational tool that makes this vision tangible, connecting data that is currently dispersed across administrations, companies and sectors.

But what exactly does "data pooling" mean? Why is this concept being talked about more and more in the context of the European data strategy and the new Digital Omnibus? And, above all, what opportunities does it open up for public administrations, companies and data reusers? In this article we try to answer these questions.

What is data pooling, how does it work and what is it for?

To understand what data pooling is, it can be helpful to think about a traditional agricultural cooperative. In it, small producers who, individually, have limited resources decide to pool their production and their means. By doing so, they gain scale, access better tools, and can compete in markets they wouldn't reach separately.

In the digital realm, data pooling works in a very similar way. It consists of combining or grouping datasets from different organizations or sources to analyze or reuse them with a shared goal. Creating this "common repository" of information—physical or logical—enables more complex and valuable analyses that could hardly be performed from a single isolated source.

This "pooling of data" can take different forms, depending on the technical and organizational needs of each initiative:

- Shared repositories, where multiple organizations contribute data to the same platform.

- Joint or federated access, where data remains in its source systems, but can be analyzed in a coordinated way.

- Governance agreements, which set out clear rules about who can access data, for what purpose, and under what conditions.

In all cases, the central idea is the same: each participant contributes their data and, in return, everyone benefits from a greater volume, diversity and richness of information, always under previously agreed rules.
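To make the first two models more concrete, here is a minimal sketch in Python. The organisations, field names and values are hypothetical, invented purely for illustration, and a real initiative would add the governance rules, access controls and privacy safeguards mentioned above. The first function copies every record into a shared repository; the second leaves the records where they are and only exchanges aggregates (a count and a sum), which is one simple way of analysing data in a coordinated way without moving it.

```python
from dataclasses import dataclass


@dataclass
class Participant:
    """A hypothetical organisation holding its own readings."""
    name: str
    readings: list[float]

    def local_summary(self) -> tuple[int, float]:
        # Federated access: only aggregates leave the source system.
        return len(self.readings), sum(self.readings)


def pooled_mean(participants: list[Participant]) -> float:
    """Shared repository: every record is copied into one common pool."""
    pool = [r for p in participants for r in p.readings]
    return sum(pool) / len(pool)


def federated_mean(participants: list[Participant]) -> float:
    """Federated access: combine per-participant counts and sums."""
    summaries = [p.local_summary() for p in participants]
    total_count = sum(count for count, _ in summaries)
    total_sum = sum(subtotal for _, subtotal in summaries)
    return total_sum / total_count


# Illustrative data only (not taken from any real dataset).
participants = [
    Participant("city_transport", [12.0, 15.0, 11.0]),
    Participant("energy_operator", [20.0, 22.0]),
]

print(pooled_mean(participants))
print(federated_mean(participants))
```

Both functions return the same average here; what differs is which data has to leave each organisation, and that is precisely the kind of choice a governance agreement would settle.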

What is the purpose of sharing data?

The growing interest in data pooling is no coincidence. Sharing data in a structured way makes it possible, among other things, to:

- Detect patterns that are not visible with isolated data, especially in complex areas such as mobility, health, energy or the environment.

- Enhance the development of artificial intelligence, which needs diverse, quality data at scale to generate reliable results.

- Avoid duplication, reducing costs and effort in both the public and private sectors.

- Promote innovation, facilitating new services, comparative studies or predictive analysis.

- Strengthen evidence-based decision-making, a particularly relevant aspect in the design of public policies.

In other words, data pooling multiplies the value of existing data without the need to always generate new sets of information.

Different types of data pooling and their value

Not all data pools are created equal. Depending on the context and the objective pursued, different models of data grouping can be identified:

- M2M (Machine-to-Machine) data pooling, very common in the Internet of Things (IoT). For example, when industrial sensor manufacturers pool data from thousands of machines to anticipate failures or improve maintenance.

- Cross-sector data pooling, which combines data from different sectors – such as transport and energy – to optimise services, for example, the management of electric vehicle charging in smart cities.

- Data pooling for research, especially relevant in the field of health, where hospitals or research centers share anonymized data to train algorithms capable of detecting rare diseases or improving diagnoses.

These examples show that data pooling is not a single solution, but a set of adaptable practices, capable of generating economic, social and scientific value when applied with the appropriate guarantees.

From potential to practice: guarantees, clear rules and new opportunities for data pooling

Talking about sharing data does not mean doing it without limits. For data pooling to build trust and sustainable value, it is imperative to address how to share data responsibly. This has been, in fact, one of the great challenges that have conditioned its adoption in recent years.

Among the main concerns are the protection of personal data, which means ensuring compliance with the General Data Protection Regulation (GDPR) and minimizing the risk of re-identification; confidentiality and the protection of trade secrets, especially when companies are involved; and the quality and interoperability of the data, as combining inconsistent information can lead to erroneous conclusions. Added to all this is a cross-cutting element: trust between the parties, without which no sharing mechanism can function.

For this reason, data pooling is not just a technical issue. It requires clear legal frameworks, strong governance models, and trust mechanisms, which provide security to both those who share the data and those who reuse it.

Europe's role: from sharing data to creating ecosystems

Aware of these challenges, the European Union has been working for years to build a Single Data Market, where sharing information is simpler, safer and more beneficial for all actors involved. In this context, key initiatives have emerged, such as the European Data Spaces, organized by strategic sectors (health, mobility, industry, energy, agriculture), the promotion of standards and interoperability, and the appearance of data intermediaries as trusted third parties who facilitate sharing.

Data pooling fits fully into this vision: it is one of the practical mechanisms that allow these data spaces to work and generate real value. By facilitating the aggregation and joint use of data, pooling acts as the "engine" that makes many of these ecosystems operational.

All this is part of the Data Union Strategy, which seeks to connect policies, infrastructures and standards so that data can flow safely and efficiently throughout Europe.

The big brake: regulatory fragmentation

Until recently, this potential ran into a major hurdle: the complexity of the European legal framework on data. An organization that wanted to participate in a cross-border data pool had to navigate multiple rules (GDPR, Data Governance Act, Data Act, Open Data Directive and sectoral or national regulations) with definitions, obligations, and competent authorities that are not always aligned. This fragmentation generated legal uncertainty: doubts about responsibilities, fear of sanctions, or uncertainty about the real protection of trade secrets. In practice, this "regulatory labyrinth" has for years held back the development of many common data spaces and limited the adoption of data pooling, especially among SMEs and mid-sized companies with less legal and technical capacity.

The Digital Omnibus: Simplifying for Data Pooling to Scale

This is where the Digital Omnibus, the European Commission's proposal to simplify and harmonise the digital legal framework, comes into play. Far from adding new regulatory layers, the objective of the Omnibus is to organize, consolidate and reduce administrative burdens, making it easier to share data in practice.

From a data pooling perspective, the message is clear: less fragmentation, more clarity, and greater trust. The Omnibus seeks to concentrate the rules in a more coherent framework, avoid duplication and remove unnecessary barriers that until now discouraged data-driven collaboration, especially in cross-border projects.

In addition, the role of data intermediation services, key actors in organizing pooling in a neutral and reliable way, is reinforced. By clarifying their role and reducing certain burdens, the Omnibus favors the emergence of new models – including technology startups – capable of acting as "arbiters" of data exchange between multiple participants.

Another particularly relevant element is the strengthening of the protection of trade secrets, allowing data holders to limit or deny access when there is a real risk of misuse or transfer to environments without adequate guarantees. This point is key for industrial and strategic sectors, where trust is an essential condition for sharing data.

New opportunities for data pooling: public sector, companies and data reuse

The regulatory simplification and confidence-building introduced by the Digital Omnibus is not an end in itself. Its true value lies in the concrete opportunities that data pooling opens up for different actors in the data ecosystem, especially for the public sector, companies and information reusers.

In the case of public administrations, data pooling offers particularly relevant potential. It allows data from different sources and administrative levels to be combined to improve the design and evaluation of public policies, move towards evidence-based decision-making and offer more effective and personalised services to citizens. At the same time, it facilitates the breaking down of information silos, the reuse of already available data and the reduction of duplications, with the consequent savings in costs and efforts.

In addition, data pooling reinforces collaboration between the public sector, the research field and the private sector, always under secure and transparent frameworks. In this context, it does not compete with open data, but complements it, making it possible to connect datasets that are currently published in a fragmented way and enabling more advanced analyses that expand their social and economic value.

From a business point of view, the Digital Omnibus introduces a significant novelty by expanding the focus beyond traditional SMEs. The so-called small mid-caps, mid-cap companies that also suffer the impact of bureaucracy, are now benefiting from regulatory simplification. This significantly increases the base of organizations capable of participating in data pooling schemes and expands the volume and diversity of data available in strategic sectors such as industry, automotive or chemicals.

The economic impact of this new scenario is also relevant. The European Commission estimates significant savings in administrative and operational costs, both for companies and public administrations. But beyond the numbers, these savings represent freed up capacity to innovate, invest in new digital services, and develop more advanced AI models, fueled by data that can now be shared more securely.

In short, data pooling is becoming established as a key lever for moving from one-off data sharing to the systematic generation of value, laying the foundations for a more collaborative, efficient and competitive data economy in Europe.

Conclusion: Cooperate to compete

The approach to data pooling in the Digital Omnibus proposal marks a turning point in the way we understand the ownership of information. Europe has understood that, in the global data economy, sovereignty is not defended by closing borders, but by creating secure environments where collaboration is the simplest and most profitable option.

Data pooling is at the heart of this transformation. By cutting red tape, simplifying notifications, and protecting trade secrets, the Omnibus is clearing obstacles out of the way so that businesses and citizens can enjoy the benefits of a true Data Union.

In short, it is a question of moving from an economy of isolated silos to one of connected networks. Because, in the world of data, sharing is not losing control, it is gaining scale.

Content created by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and views expressed in this publication are the sole responsibility of the author.

To speak of the public domain is to speak of free access to knowledge, shared culture and open innovation. The concept has become a key piece in understanding how information circulates and how the common heritage of humanity is built.

In this post we will explore what the public domain means and show you examples of repositories where you can discover and enjoy works that already belong to everyone.

What is the public domain?

Surely at some point in your life you have seen the image of Mickey Mouse at the helm of a steamboat: a characteristic Disney image that you can now use freely in your own works. This is because this first version of Mickey (Steamboat Willie, 1928) entered the public domain in January 2024. Be careful, though: only the version from that date is "free"; subsequent adaptations remain protected, as we will explain later.

When we talk about the public domain, we refer to the body of knowledge, information, works and creations (books, music, films, photos, software, etc.) that are not protected by copyright. Because of this, anyone can reproduce, copy, adapt and distribute them without having to ask permission or pay for licenses. However, the moral rights of the author, which are inalienable and do not expire, must always be respected. These rights include recognition of authorship and respect for the integrity of the work*.

The public domain, therefore, shapes the cultural space where works become the common heritage of society, which entails multiple benefits:

- Free access to culture and knowledge: any citizen can read, watch, listen to or download these works without paying for licenses or subscriptions. This favors education, research and universal access to culture.

- Preservation of memory and heritage: the public domain ensures that an important part of our history, science and art remains accessible to present and future generations, without being limited by legal restrictions.

- Encourages creativity and innovation: artists, developers, companies, etc. can reuse and mix works from the public domain to create new products (such as adaptations, new editions, video games, comics, etc.) without fear of infringing rights.

- Technological boost: archives, museums and libraries can freely digitise and disseminate their holdings in the public domain, creating opportunities for digital projects and the development of new tools. For example, these works can be used to train artificial intelligence models and natural language processing tools.

What works and elements belong to the public domain, according to Spanish law?

In the public domain we find both content whose copyright has expired and content that has never been protected. Let's see what Spanish legislation says about it:

Works whose copyright protection has expired.

To know whether a work belongs to the public domain, we must look at the date of its author's death. In Spain there is a turning point: 1987. From that year on, according to the Intellectual Property Law, artistic works enter the public domain 70 years after the death of their author. However, authors who died before that year are subject to the 1879 Law, under which the term was generally 80 years, with exceptions.
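As a rough illustration of this rule, the following Python sketch estimates the year in which an author's works enter the public domain. It is a simplification, not legal advice: it applies only the general terms described above, ignores the exceptions of the 1879 Law, and assumes that the term is counted from 1 January of the year following the author's death. The function names are hypothetical.

```python
# Simplified model of the rule described above (assumptions, not legal advice):
# - authors who died before 1987: 80 years (general term of the 1879 Law)
# - authors who died from 1987 onwards: 70 years
# - the term is assumed to start on 1 January of the year after the death
CUTOFF_YEAR = 1987


def public_domain_year(death_year: int) -> int:
    """Return the year on whose 1 January the works become public domain."""
    term = 80 if death_year < CUTOFF_YEAR else 70
    return death_year + term + 1


def is_public_domain(death_year: int, current_year: int) -> bool:
    """Check whether the works are already public domain in a given year."""
    return current_year >= public_domain_year(death_year)


# Federico García Lorca died in 1936 and Antonio Machado in 1939.
print(public_domain_year(1936))
print(public_domain_year(1939))
print(is_public_domain(1990, 2025))
```

Under these assumptions, the two example authors fall under the 80-year term, while an author who died in 1990 would remain protected for several more decades.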

Only "original literary, artistic or scientific" creations that involve a sufficient level of creativity are protected, regardless of their medium (paper, digital, audiovisual, etc.). This ranges from books, musical compositions, theatrical, audiovisual or pictorial works and sculptures to graphics, maps and designs related to topography, geography and science, or computer programs, among others.

It should be noted that translations and adaptations, revisions, updates and annotations; compendiums, summaries and extracts; musical arrangements, collections of other people's works, such as anthologies or any transformations of a literary, artistic or scientific work, are also subject to intellectual property. Therefore, a recent adaptation of Don Quixote will have its own protection.

Works that are not eligible for copyright protection.

As we saw, not everything that is produced can be covered by copyright. Some examples are:

- Official documents: laws, decrees, judgments and other official texts are not subject to copyright. They are considered too relevant to public life to be restricted, and are therefore in the public domain from the moment of publication.

- Works voluntarily transferred: the rights holders themselves can decide to release their works before the legal term expires. For this there are tools such as the Creative Commons CC0 license, which makes it possible to renounce protection and make the work directly available to everyone.

- Facts and Information: Copyright does not cover facts or data. Information and events are common heritage and can be used freely by anyone.

Europeana and its defence of the public domain

Europeana is Europe's great digital library, a project promoted by the European Union that brings together millions of cultural resources from archives, museums and libraries throughout the territory. Its mission is to facilitate free and open access to European cultural heritage, and in that sense the public domain is at the heart of it. Europeana advocates that works that have lost their copyright protection should remain unrestricted, even when digitized, because they are part of the common heritage of humanity.

As a result of its commitment, it has recently updated its Public Domain Charter, which includes a series of essential principles and guidelines for a robust and vibrant public domain in the digital environment. Among other issues, it mentions how technological advances and regulatory changes have expanded the possibilities of access to cultural heritage, but have also generated risks for the availability and reuse of materials in the public domain. Therefore, it proposes eight measures to protect and strengthen the public domain:

- Advocate against extending the terms or scope of copyright, which limits citizens' access to shared culture.

- Oppose attempts to exert undue control over free materials, avoiding licenses, fees, or contractual restrictions that reconstitute rights.

- Ensure that digital reproductions do not generate new layers of protection, including photos or 3D models, unless they are original creations.

- Avoid contracts that restrict reuse: financing digitalisation should not translate into legal barriers.

- Clearly and accurately label works in the public domain, providing data such as author and date to facilitate identification.

- Balance access with other legitimate interests, respecting laws, cultural values and the protection of vulnerable groups.

- Safeguard the availability of heritage, in the face of threats such as conflicts, climate change or the fragility of digital platforms, promoting sustainable preservation.

- Offer high-quality, reusable reproductions and metadata, in open, machine-readable formats, to enhance their creative and educational use.

Other platforms to access works in the public domain

In addition to Europeana, in Spain we have an ecosystem of projects that make cultural heritage in the public domain available to everyone:

- The National Library of Spain (BNE) plays a key role: every year it publishes the list of Spanish authors who enter the public domain and offers access to their digitized works through BNE Digital, a portal that allows you to consult manuscripts, books, engravings and other historical materials. Thus, we can find works by authors of the stature of Antonio Machado or Federico García Lorca. In addition, the BNE publishes as open data the dataset with information on authors in the public domain.

- The Virtual Library of Bibliographic Heritage (BVPB), promoted by the Ministry of Culture, brings together thousands of digitized ancient works, ensuring that fundamental texts and materials of our literary and scientific history can be preserved and reused without restrictions. It includes digital facsimile reproductions of manuscripts, printed books, historical photographs, cartographic materials, sheet music, maps, etc.

- Hispana acts as a large national aggregator by connecting digital collections from Spanish archives, libraries, and museums, offering unified access to materials that are part of the public domain. To do this, it collects and makes accessible the metadata of digital objects, allowing these objects to be viewed through links that lead to the pages of the owner institutions.

Together, all these initiatives reinforce the idea that the public domain is not an abstract concept, but a living and accessible resource that expands every year and that allows our culture to continue circulating, inspiring and generating new forms of knowledge.

Thanks to Europeana, BNE Digital, the BVPB, Hispana and many other projects of this type, today we have the possibility of accessing an immense cultural heritage that connects us with our past and propels us towards the future. Each work that enters the public domain expands opportunities for learning, innovation and collective enjoyment, reminding us that culture, when shared, multiplies.

*In accordance with the Intellectual Property Law, the integrity of the work refers to preventing any distortion, modification, alteration or attack against it that harms the author's legitimate interests or reputation.

Spain once again stands out in the European open data landscape. The Open Data Maturity 2025 report places our country among the leaders in the opening and reuse of public sector information, consolidating an upward trajectory in digital innovation.

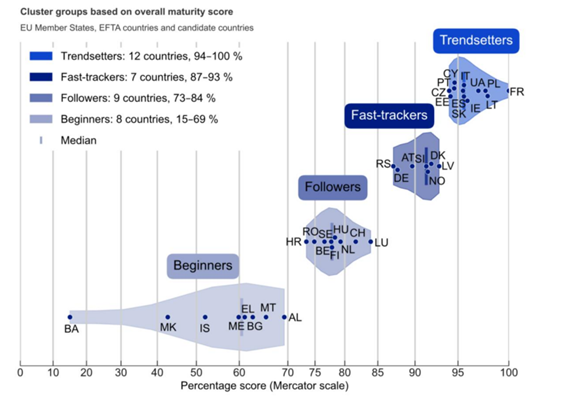

The report, produced annually by the European data portal, data.europa.eu, assesses the degree of maturity of open data in Europe. To do this, it analyzes several indicators, grouped into four dimensions: policy, portal, quality and impact. This year's edition has involved 36 countries, including the 27 Member States of the European Union (EU), three European Free Trade Association countries (Iceland, Norway and Switzerland) and six candidate countries (Albania, Bosnia and Herzegovina, Montenegro, North Macedonia, Serbia and Ukraine).

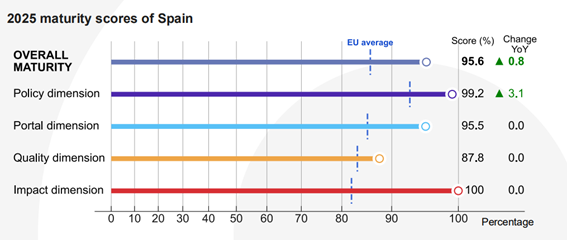

This year, Spain is in fifth position among the countries of the European Union and sixth out of the total number of countries analysed, tied with Italy. Specifically, a total score of 95.6% was obtained, well above the average of the countries analysed (81.1%). With this data, Spain improves its score compared to 2024, when it obtained 94.8%.

Spain, among the European leaders

With this position, Spain is once again among the open data trendsetters, i.e. the countries that set trends and serve as an example of good practices to other States. Spain shares this group with France, Lithuania, Poland, Ukraine, Ireland, the aforementioned Italy, Slovakia, Cyprus, Portugal, Estonia and the Czech Republic.

The countries in this group have advanced open data policies, aligned with the technical and political progress of the European Union, including the publication of high-value datasets. In addition, there is strong coordination of open data initiatives at all levels of government. Their national portals offer comprehensive features and quality metadata, with few limitations on publication or use. This means that published data can be more easily reused for multiple purposes, helping to generate a positive impact in different areas.

Figure 1. Member countries of the different clusters.

The keys to Spain's progress

According to the report, Spain strengthened its leadership in open data through strategic policy development, technical modernization, and reuse-driven innovation. In particular, improvements in the policy dimension are what have boosted Spain's growth:

Figure 2. Spain's score in the different dimensions together with growth over the previous year.

As shown in the image, the policy dimension has reached a score of 99.2% compared to 96% last year, well above the European average of 93.1%. The reason for this growth is progress in the regulatory framework. In this regard, the report highlights the drawing up of the V Open Government Plan, developed through a co-creation process in which all stakeholders participated. This plan has introduced new initiatives related to the governance and reuse of open data. Another noteworthy issue is that Spain promoted the publication of high-value datasets, in line with Implementing Regulation (EU) 2023/138.

The rest of the dimensions remain stable, all of them with scores above the European average: the portal dimension scored 95.5% compared to 85.45% in Europe, while the quality dimension scored 87.8% compared to 83.4% in the rest of the countries analysed. The impact dimension continues to be our great asset, with 100% compared to 82.1% in Europe. In this dimension, we continue to position ourselves as leaders, thanks to a clear definition of reuse, the systematic measurement of data use and the existence of examples of impact in the governmental, social, environmental and economic spheres.
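For readers who want to compare the dimensions at a glance, the short Python sketch below computes the gap, in percentage points, between Spain's score and the European average for each dimension. The figures are the ones quoted in this post; the variable and function names are hypothetical, chosen only for this illustration.

```python
# Scores in % as quoted in this post: (Spain, European average) per dimension.
scores = {
    "policy": (99.2, 93.1),
    "portal": (95.5, 85.45),
    "quality": (87.8, 83.4),
    "impact": (100.0, 82.1),
}


def gap_to_average(spain: float, average: float) -> float:
    """Difference in percentage points, rounded to avoid float noise."""
    return round(spain - average, 2)


gaps = {dim: gap_to_average(*pair) for dim, pair in scores.items()}

# List the dimensions from the widest to the narrowest gap.
for dim, gap in sorted(gaps.items(), key=lambda item: item[1], reverse=True):
    print(f"{dim}: +{gap} points")
```

The widest gap corresponds to the impact dimension and the narrowest to quality, which is consistent with the description above.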

Although there have not been major movements in the score of these dimensions, the report does highlight milestones in Spain in all areas. For example, the datos.gob.es platform underwent a major redesign, including adjustments to the DCAT-AP-ES metadata profile, in order to improve quality and interoperability. In this regard, a specific implementation guide was published and a learning and development community was consolidated through GitHub. In addition, the portal's search engine and monitoring tools were improved, including tracking external reuse through GitHub references and rich analytics through interactive dashboards.

The involvement of the infomediary sector has been key in strengthening Spain's leadership in open data. The report highlights the importance of activities such as the National Open Data Meeting, with challenges that are worked on jointly by a multidisciplinary team with representatives of public, private and academic institutions, edition after edition. In addition, the Spanish Federation of Municipalities and Provinces identified 80 essential data sets on which local governments should focus when advancing in the opening of information, promoting coherence and reuse at the municipal level.

The following image shows the specific score for each of the subdimensions analyzed:

Figure 3. Spain's score in the different dimensions and subcategories.

You can see the details of the report for Spain on the website of the European portal.

Next steps and common challenges

The report concludes with a series of specific recommendations for each group of countries. For the group of trendsetters, in which Spain is located, the recommendations are not so much focused on reaching maturity – already achieved – but on deepening and expanding their role as European benchmarks. Some of the recommendations are:

- Consolidate thematic ecosystems (supplier and reuser communities) and prioritize high-value data in a systematic way.

- Align local action with the national strategy, enabling "data-driven" policies.

- Cooperate with data.europa.eu and other countries to implement and adapt an impact assessment framework with domain-by-domain metrics.

- Develop user profiles and allow their contributions to the national portal.

- Improve data and metadata quality and localization through validation tools, artificial intelligence, and user-centric flows.

- Apply domain-specific standards to harmonize datasets and maximize interoperability, quality, and reusability.

- Offer advanced and certified training in regulations and data literacy.

- Collaborate internationally on reusable solutions, such as shared or open source software.

Spain is already working on many of these points to continue improving its open data offer. The aim is for more and more reusers to be able to easily take advantage of the potential of public information to create services and solutions that have a positive impact on society as a whole.

The position achieved by Spain in this European ranking is the result of the work of all public initiatives, companies, user communities and reusers linked to open data, which promote an ecosystem that does not stop growing. Thank you for the effort!

On 19 November, the European Commission presented the Data Union Strategy, a roadmap that seeks to consolidate a robust, secure and competitive European data ecosystem. This strategy is built around three key pillars: expanding access to quality data for artificial intelligence and innovation, simplifying the existing regulatory framework, and protecting European digital sovereignty. In this post, we will explain each of these pillars in detail, as well as the implementation timeline planned for the next two years.

Pillar 1: Expanding access to quality data for AI and innovation

The first pillar of the strategy focuses on ensuring that companies, researchers and public administrations have access to high-quality data that allows the development of innovative applications, especially in the field of artificial intelligence. To this end, the Commission proposes a number of interconnected initiatives ranging from the creation of infrastructure to the development of standards and technical enablers. A series of actions are established as part of this pillar: the expansion of common European data spaces, the development of data labs, the promotion of the Cloud and AI Development Act, the expansion of strategic data assets and the development of facilitators to implement these measures.

1.1 Expansion of the Common European Data Spaces (CEDS)

Common European Data Spaces are one of the central elements of this strategy:

- Planned investment: 100 million euros for their deployment.

- Priority sectors: health, mobility, energy, (legal) public administration and environment.

- Interoperability: the strategy relies on SIMPL for interoperability between data spaces, with the support of the European Data Spaces Support Center (DSSC).

- Key applications:

  - European Health Data Space (EHDS): special mention for its role as a bridge between health data systems and the development of AI.

  - New Defence Data Space: for the development of state-of-the-art systems, coordinated by the European Defence Agency.

1.2 Data Labs: the new ecosystem for connecting data and AI development

The strategy proposes to use Data Labs as points of connection between the development of artificial intelligence and European data.

These labs employ data pooling, a process of combining and sharing public and restricted data from multiple sources in a centralized repository or shared environment. All this facilitates access and use of information. Specifically, the services offered by Data Labs are:

- Facilitating access to data.

- Technical infrastructure and tools.

- Data pooling.

- Data filtering and labeling.

- Regulatory guidance and training.

- Bridging the gap between data spaces and AI ecosystems.

Implementation plan:

- First phase: the first Data Labs will be established within the framework of AI Factories (AI gigafactories), offering data services to connect AI development with European data spaces.

- Sectoral Data Labs: will be established independently in other areas to cover specific needs, for example, in the energy sector.

- Self-sustaining model: it is envisaged that the Data Labs model can be deployed commercially, making it a self-sustaining ecosystem that connects data and AI.

1.3 Cloud and AI Development Act: boosting the sovereign cloud

To promote cloud technology, the Commission will propose this new regulation in the first quarter of 2026. A public consultation on it is currently open.

1.4 Strategic data assets: public sector, scientific, cultural and linguistic resources

On the one hand, in 2026 the Commission will propose expanding the list of high-value datasets (HVDs) to include legal, judicial and administrative data, among others. On the other hand, it will map existing data resources and finance new digital infrastructure.

1.5 Horizontal enablers: synthetic data, data pooling, and standards

The European Commission will develop guidelines and standards on synthetic data, and advanced R&D on techniques for generating it will be funded through Horizon Europe.

Another issue that the EU wants to promote is data pooling, as we explained above. Sharing data from the early stages of the production cycle can generate collective benefits, but barriers persist due to legal uncertainty and fear of violating competition rules. The aim is to make data pooling a reliable and legally secure option to accelerate progress in critical sectors.

Finally, in terms of standardisation, the European standardisation organisations (CEN/CENELEC) will be asked to develop new technical standards in two key areas: data quality and labelling. These standards will establish common criteria on what data must look like to be considered reliable and how it should be labelled to facilitate its identification and use in different contexts.

Pillar 2: Regulatory simplification

The second pillar addresses one of the challenges most highlighted by companies and organisations: the complexity of the European regulatory framework on data. The strategy proposes a series of measures aimed at simplifying and consolidating existing legislation.

2.1 Repeals and regulatory consolidation: towards a more coherent framework

The aim is to eliminate regulations whose functions are already covered by more recent legislation, thus avoiding duplication and contradictions. Firstly, the Free Flow of Non-Personal Data Regulation (FFoNPD) will be repealed, as its functions are now covered by the Data Act. However, the prohibition of unjustified data localisation, a fundamental principle for the Digital Single Market, will be explicitly preserved.

Similarly, the Data Governance Act (DGA) will be repealed as a stand-alone instrument, with its essential provisions moved into the Data Act. This move simplifies the regulatory framework and also eases the administrative burden: obligations for data intermediaries will become lighter and more voluntary.

As for the public sector, the strategy proposes an important consolidation. The rules on public data sharing, currently dispersed between the DGA and the Open Data Directive, will be merged into a single chapter within the Data Act. This unification will facilitate both the application and the understanding of the legal framework by public administrations.

2.2 Cookie reform: balancing protection and usability

Another relevant detail is the regulation of cookies, which will undergo a significant modernization, being integrated into the framework of the General Data Protection Regulation (GDPR). The reform seeks a balance: on the one hand, low-risk uses that currently generate legal uncertainty will be legalized; on the other, consent banners will be simplified through "one-click" systems. The goal is clear: to reduce the so-called "user fatigue" in the face of the repetitive requests for consent that we all know when browsing the Internet.

2.3 Adjustments to the GDPR to facilitate AI development

The General Data Protection Regulation will also be subject to a targeted reform, specifically designed to unlock data responsibly for the development of artificial intelligence. This surgical intervention addresses three specific aspects:

- It clarifies when legitimate interest may apply to AI model training.

- It defines more precisely the distinction between anonymised and pseudonymised data, especially in relation to the risk of re-identification.

- It harmonises data protection impact assessments, facilitating their consistent application across the Union.

2.4 Implementation and support for the Data Act

The recently approved Data Act will be adjusted to improve its application. On the one hand, the scope of business-to-government (B2G) data sharing is refined, strictly limiting it to emergency situations. On the other hand, the umbrella of protection is widened: the favourable conditions currently enjoyed by small and medium-sized enterprises (SMEs) will also apply to small mid-caps, that is, companies with between 250 and 749 employees.
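As a rough illustration of the size bands mentioned above, the following Python sketch classifies a company by headcount alone. It is a simplification: the 250 to 749 range comes from the text, the threshold of fewer than 250 employees for SMEs is the usual EU headcount ceiling, and the function name `size_class` is hypothetical. Real classifications also take financial criteria into account.

```python
def size_class(employees: int) -> str:
    """Classify a company by headcount only (simplified, illustrative)."""
    if employees < 0:
        raise ValueError("employees must be non-negative")
    if employees < 250:
        return "SME"            # assumed headcount ceiling for SMEs
    if employees <= 749:
        return "small mid-cap"  # 250 to 749 employees, as stated in the text
    return "large"


# Under the proposed adjustment, both of the first two bands would benefit
# from the favourable conditions described above.
benefits = {n: size_class(n) in ("SME", "small mid-cap") for n in (40, 300, 900)}
```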

To facilitate the practical implementation of the regulation, a model contractual clause for data exchange has already been published, providing a template that organizations can use directly. In addition, two further guides will be published during the first quarter of 2026: one on the concept of "reasonable compensation" in data exchanges, and another aimed at clarifying the key definitions of the Data Act that may raise interpretative doubts.

Aware that SMEs may struggle to navigate this new legal framework, a Legal Helpdesk will be set up in the fourth quarter of 2025. This helpdesk will provide direct advice on the implementation of the Data Act, giving priority precisely to small and medium-sized enterprises that lack specialised legal departments.

2.5 Evolving governance: towards a more coordinated ecosystem

The governance architecture of the European data ecosystem is also undergoing significant changes. The European Data Innovation Board (EDIB) evolves from a primarily advisory body into a forum for more technical and strategic discussions, bringing together both Member States and industry representatives. To this end, the provisions governing it will be amended with two objectives: to allow the competent authorities to be included in the debates on the Data Act, and to give the European Commission greater flexibility in the composition and operation of the body.

In addition, two further mechanisms for feedback and anticipation are set up. The Apply AI Alliance will channel sectoral feedback, collecting the specific experiences and needs of each industry. For its part, the AI Observatory will act as a trend radar, identifying emerging developments in the field of artificial intelligence and translating them into public policy recommendations. In this way, a virtuous circle is closed in which policy is continuously informed by what is happening on the ground.

Pillar 3: Protecting European data sovereignty

The third pillar focuses on ensuring that European data is treated fairly and securely, both inside and outside the Union's borders. The intention is that data will only be shared with countries with the same regulatory vision.

3.1 Specific measures to protect European data

- Publication of guides to assess the fair treatment of EU data abroad (Q2 2026).

- Publication of the Unfair Practices Toolbox (Q2 2026):

  - Unjustified localisation.

  - Exclusion.

  - Weak safeguards.

  - Data leakage.

- Measures to protect sensitive non-personal data.

All these measures are scheduled for progressive rollout from the last quarter of 2025 and throughout 2026, allowing gradual and coordinated adoption, as established in the Data Union Strategy.

In short, the Data Union Strategy represents a comprehensive effort to consolidate European leadership in the data economy. To this end, it will promote data pooling and data spaces in the Member States, back Data Labs and AI gigafactories, and encourage regulatory simplification.

The European open data portal has published the third volume of its Use Case Observatory, a report that compiles the evolution of data reuse projects across Europe. This initiative highlights the progress made in four areas: economic, governmental, social and environmental impact.

The closure of a three-year investigation

Between 2022 and 2025, the European Open Data Portal has systematically monitored the evolution of various European projects. The research began with an initial selection of 30 representative initiatives, which were analyzed in depth to identify their potential for impact.

After two years, 13 projects continued in the study, including three Spanish ones: Planttes, Tangible Data and UniversiDATA-Lab. Their development over time was studied to understand how the reuse of open data can generate real and sustainable benefits.

The publication of volume III in October 2025 marks the closure of this series of reports, following volume I (2022) and volume II (2024). This last document offers a longitudinal view, showing how the projects have matured in three years of observation and what concrete impacts they have generated in their respective contexts.

Common conclusions

This third and final report compiles a number of key findings:

Economic impact

Open data drives growth and efficiency across industries. It contributes to job creation, both directly and indirectly, facilitates smarter recruitment processes and stimulates innovation in areas such as urban planning and digital services.

The report shows the example of:

- Naar Jobs (Belgium): an application for job search close to users' homes and focused on the available transport options.

This application demonstrates how open data can become a driver for regional employment and business development.

Government impact

The opening of data strengthens transparency, accountability and citizen participation.

Two use cases analysed belong to this field:

- Waar is mijn stemlokaal? (Netherlands): platform for the search for polling stations.

- Statsregnskapet.no (Norway): website to visualize government revenues and expenditures.

Both examples show how access to public information empowers citizens, enriches the work of the media, and supports evidence-based policymaking. All of this helps to strengthen democratic processes and trust in institutions.

Social impact

Open data promotes inclusion, collaboration, and well-being.

The following initiatives analysed belong to this field:

- UniversiDATA-Lab (Spain): university data repository that facilitates analytical applications.

- VisImE-360 (Italy): a tool to map visual impairment and guide health resources.

- Tangible Data (Spain): a company focused on making physical sculptures that turn data into accessible experiences.

- EU Twinnings (Netherlands): platform that compares European regions to find "twin cities".

- Open Food Facts (France): collaborative database on food products.

- Integreat (Germany): application that centralizes public information to support the integration of migrants.

All of them show how data-driven solutions can amplify the voice of vulnerable groups, improve health outcomes and open up new educational opportunities. Even the smallest effects, such as improvement in a single person's life, can prove significant and long-lasting.

Environmental impact

Open data acts as a powerful enabler of sustainability.

As with social impact, in this area we find a large number of use cases:

- Digital Forest Dryads (Estonia): a project that uses data to monitor forests and promote their conservation.

- Air Quality in Cyprus (Cyprus): platform that reports on air quality and supports environmental policies.

- Planttes (Spain): citizen science app that helps people with pollen allergies by tracking plant phenology.

- Environ-Mate (Ireland): a tool that promotes sustainable habits and ecological awareness.

These initiatives highlight how data reuse contributes to raising awareness, driving behavioural change and enabling targeted interventions to protect ecosystems and strengthen climate resilience.

Volume III also points to common challenges: the need for sustainable financing, the importance of combining institutional data with citizen-generated data, and the desirability of involving end-users throughout the project lifecycle. In addition, it underlines the importance of European collaboration and transnational interoperability to scale impact.

Overall, the report reinforces the relevance of continuing to invest in open data ecosystems as a key tool to address societal challenges and promote inclusive transformation.

The impact of Spanish projects on the reuse of open data

As we have mentioned, three of the use cases analysed in the Use Case Observatory have a Spanish stamp. These initiatives stand out for their ability to combine technological innovation with social and environmental impact, and highlight Spain's relevance within the European open data ecosystem. Their trajectory demonstrates how our country actively contributes to transforming data into solutions that improve people's lives and reinforce sustainability and inclusion. Below, we zoom in on what the report says about them.

Planttes is a citizen science initiative that helps people with pollen allergies through real-time information about allergenic plants in bloom. Since its appearance in Volume I of the Use Case Observatory, it has evolved into a participatory platform in which users contribute photos and phenological data to create a personalized risk map. This participatory model has made it possible to maintain a constant flow of information validated by researchers and to offer increasingly complete maps. With more than 1,000 initial downloads and about 65,000 annual visitors to its website, it is a useful tool for people with allergies, educators and researchers.

The project has strengthened its digital presence, with increasing visibility thanks to the support of institutions such as the Autonomous University of Barcelona and the University of Granada, in addition to the promotion carried out by the company Thigis.

Its challenges include expanding geographical coverage beyond Catalonia and Granada and sustaining data participation and validation. Therefore, looking to the future, it seeks to extend its territorial reach, strengthen collaboration with schools and communities, integrate more data in real time and improve its predictive capabilities.

Throughout this time, Planttes has established itself as an example of how citizen-driven science can improve public health and environmental awareness, demonstrating the value of citizen science in environmental education, allergy management, and climate change monitoring.

Tangible Data transforms datasets into physical sculptures that represent global challenges such as climate change or poverty, integrating QR codes and NFC to contextualize the information. Recognized at the EU Open Data Days 2025, the project has inaugurated its installation Tangible climate at the National Museum of Natural Sciences in Madrid.

Tangible Data has evolved in three years from a prototype project based on 3D sculptures to visualize sustainability data to become an educational and cultural platform that connects open data with society. Volume III of the Use Case Observatory reflects its expansion into schools and museums, the creation of an educational program for 15-year-old students, and the development of interactive experiences with artificial intelligence, consolidating its commitment to accessibility and social impact.

Its challenges include funding and scaling up the education programme, while its future goals include scaling up school activities, displaying large-format sculptures in public spaces, and strengthening collaboration with artists and museums. Overall, it remains true to its mission of making data tangible, inclusive, and actionable.

UniversiDATA-Lab is a dynamic repository of analytical applications based on open data from Spanish universities, created in 2020 as a public-private collaboration and currently made up of six institutions. Its unified infrastructure facilitates the publication and reuse of data in standardized formats, reducing barriers and allowing students, researchers, companies and citizens to access useful information for education, research and decision-making.

Over the past three years, the project has grown from a prototype to a consolidated platform, with active applications such as the budget and retirement viewer, and a hiring viewer in beta. In addition, it organizes a periodic datathon that promotes innovation and projects with social impact.

Its challenges include internal resistance at some universities and the complex anonymization of sensitive data, although it has responded with robust protocols and a focus on transparency. Looking to the future, it seeks to expand its catalogue, add new universities and launch applications on emerging issues such as school dropouts, teacher diversity or sustainability, aspiring to become a European benchmark in the reuse of open data in higher education.

Conclusion

In conclusion, the third volume of the Use Case Observatory confirms that open data has established itself as a key tool to boost innovation, transparency and sustainability in Europe. The projects analysed – and in particular the Spanish initiatives Planttes, Tangible Data and UniversiDATA-Lab – demonstrate that the reuse of public information can translate into concrete benefits for citizens, education, research and the environment.

Did you know that fewer than two in ten European companies use artificial intelligence (AI) in their operations? This figure, corresponding to 2024, reveals the room for improvement in the adoption of this technology. To reverse this situation and take advantage of the transformative potential of AI, the European Union has designed a comprehensive strategic framework that combines investment in computing infrastructure, access to quality data and specific measures for key sectors such as health, mobility or energy.

In this article we explain the main European strategies in this area, with a special focus on the Apply AI Strategy and the AI Continent Action Plan, adopted this year in October and April respectively. In addition, we will tell you how these initiatives complement other European strategies to create a comprehensive innovation ecosystem.

Context: Action plan and strategic sectors

On the one hand, the AI Continent Action Plan establishes five strategic pillars:

- Computing infrastructures: scaling computing capacity through AI Factories, AI Gigafactories and the Cloud and AI Development Act, specifically:

  - AI Factories: infrastructures to train and improve artificial intelligence models will be promoted. This strategic axis has a budget of 10 billion euros and is expected to lead to at least 13 AI Factories by 2026.

  - AI Gigafactories: the infrastructures needed to train and develop complex AI models will also be supported, quadrupling the capacity of AI Factories. In this case, 20 billion euros will be invested in the development of 5 gigafactories.

  - Cloud and AI Development Act: work is under way on a regulatory framework to boost research into highly sustainable infrastructure, encourage investment and triple the capacity of EU data centres over the next five to seven years.

- Access to quality data: facilitating access to robust and well-organized datasets through the so-called Data Labs in AI Factories.

- Talent and skills: strengthening AI skills across the population, specifically:

  - Create international collaboration agreements.

  - Offer scholarships in AI for the best students, researchers and professionals in the sector.

  - Promote skills in these technologies through a specific academy.

  - Pilot a specific degree in generative AI.

  - Support upskilling through the European Digital Innovation Hubs.

- Development and adoption of algorithms: promoting the use of artificial intelligence in strategic sectors.

- Regulatory framework: facilitating compliance with the AI Regulation in a simple and innovative way and providing free and adaptable tools for companies.

On the other hand, the Apply AI Strategy, presented in October 2025, seeks to boost the competitiveness of strategic sectors and strengthen the EU's technological sovereignty, driving AI adoption and innovation across Europe, particularly among small and medium-sized enterprises. How? The strategy promotes an "AI first" policy, which encourages organizations to consider artificial intelligence as a potential solution whenever they make strategic or policy decisions, carefully evaluating both the benefits and risks of the technology. In addition, it encourages a European procurement approach, in which organisations, particularly public administrations, prioritise solutions developed in Europe. Moreover, special importance is given to open source AI solutions, because they offer greater transparency and adaptability, reduce dependence on external providers and are aligned with the European values of openness and shared innovation.

The Apply AI Strategy is structured in three main sections:

Flagship sectoral initiatives

The strategy identifies 11 priority areas where AI can have the greatest impact and where Europe has competitive strengths:

- Healthcare and pharmaceuticals: AI-powered advanced European screening centres will be established to accelerate the introduction of innovative prevention and diagnostic tools, with a particular focus on cardiovascular diseases and cancer.

- Robotics: the adoption of European robotics will be driven by connecting developers with user industries and promoting AI-powered robotics solutions.

- Manufacturing, engineering and construction: the development of cutting-edge AI models adapted to industry will be supported, facilitating the creation of digital twins and optimisation of production processes.

- Defence, security and space: the development of AI-enabled European situational awareness and control capabilities will be accelerated, as well as highly secure computing infrastructure for defence and space AI models.

- Mobility, transport and automotive: the "Autonomous Drive Ambition Cities" initiative will be launched to accelerate the deployment of autonomous vehicles in European cities.

- Electronic communications: a European AI platform for telecommunications will be created that will allow operators, suppliers and user industries to collaborate on the development of open source technological elements.

- Energy: the development of AI models will be supported to improve the forecasting, optimization and balance of the energy system.

- Climate and environment: An open-source AI model of the Earth system and related applications will be deployed to enable better weather forecasting, Earth monitoring, and what-if scenarios.

- Agri-food: the creation of an agri-food AI platform will be promoted to facilitate the adoption of agricultural tools enabled by this technology.

- Cultural and creative sectors, and media: the development of micro-studios specialising in AI-enhanced virtual production and pan-European platforms using multilingual AI technologies will be incentivised.

- Public sector: A dedicated AI toolkit for public administrations will be built with a shared repository of good practices, open source and reusable, and the adoption of scalable generative AI solutions will be accelerated.

Cross-cutting support measures

For the adoption of artificial intelligence to be effective, the strategy addresses challenges common to all sectors, specifically:

- Opportunities for European SMEs: The more than 250 European Digital Innovation Hubs have been transformed into AI Centres of Expertise. These centres act as privileged access points to the European AI innovation ecosystem, connecting companies with AI Factories, data labs and testing facilities.

- AI-ready workforce: Access to practical AI literacy training, tailored to sectors and professional profiles, will be provided through the AI Skills Academy.

- Supporting the development of advanced AI: The Frontier AI Initiative seeks to accelerate progress on cutting-edge AI capabilities in Europe. Through this project, competitions will be created to develop advanced open-source artificial intelligence models, which will be available to public administrations, the scientific community and the European business sector.

- Trust in the European market: outreach will be strengthened to support compliance with the European Union's AI Regulation, providing guidance on the classification of high-risk systems and on the interaction of the Regulation with other sectoral legislation.

New governance system

In this context, it is particularly important to ensure proper coordination of the strategy. Therefore, the following is proposed:

- Apply AI Alliance: The existing AI Alliance becomes the premier coordination forum that brings together AI vendors, industry leaders, academia, and the public sector. Sector-specific groups will allow the implementation of the strategy to be discussed and monitored.

- AI Observatory: An AI Observatory will be established to provide robust indicators of AI's impact on the sectors currently listed and on future ones, and to monitor developments and trends.

Complementary strategies: science and data as the main axes

The Apply AI Strategy does not act in isolation, but is complemented by two other fundamental strategies: the AI in Science Strategy and the Data Union Strategy.

AI in Science Strategy

Presented together with the Apply AI Strategy, this strategy supports and incentivises the development and use of artificial intelligence by the European scientific community. Its central element is RAISE (Resource for AI Science in Europe), which was presented in November at the AI in Science Summit and will bring together strategic resources: funding, computing capacity, data and talent. RAISE will operate on two pillars: Science for AI (basic research to advance fundamental capabilities) and AI in Science (use of artificial intelligence for progress in different scientific disciplines).

Data Union Strategy

This strategy will focus on ensuring the availability of high-quality, large-scale datasets, essential for training AI models. A key element will be the Data Labs associated with the AI Factories, which will bring together and federate data from different sectors, link it with the corresponding Common European Data Spaces and make it available to developers under the appropriate conditions.

In short, through significant investments in infrastructure, access to quality data, talent development and a regulatory framework that promotes responsible innovation, the European Union is creating the necessary conditions for companies, public administrations and citizens to take advantage of the full transformative potential of artificial intelligence. The success of these strategies will depend on collaboration between European institutions, national governments, businesses, researchers and developers.

Last September, the first edition of the European Data Spaces Awards was officially launched, an initiative promoted by the Data Spaces Support Centre (DSSC) in collaboration with the European Commission. These awards were created to promote the best data exchange initiatives, recognizing their achievements and increasing their visibility. The goal is to showcase good practices that can serve as a guide for other actors in the European data ecosystem. The awards are intended to be presented annually, which will help the community grow and improve.

Why are these awards important?

Data is one of Europe's most valuable economic assets, and its strategic harnessing is critical for the development of technologies such as artificial intelligence (AI). Therefore, the European strategy involves establishing a single market for data that allows innovation to be promoted effectively. However, at present, data remains scattered among many actors in the European ecosystem.

The European Data Spaces Awards are especially relevant because they recognise and promote initiatives that help to overcome this problem: data spaces. These are organisational and technical environments where multiple actors – public and private – share data in a secure, sovereign, controlled way and in accordance with common standards that promote their interoperability. This allows data to flow across sectors and borders, driving innovation.

In Spain, the development of data spaces is also being promoted through specific initiatives such as the Plan to Promote Sectoral Data Spaces.

Two award categories

In this context, two categories of awards have been created:

- Excellence in end-user engagement and financial sustainability: Recognizes data spaces with a strong user focus and viable long-term financial models.

- Most innovative emerging data space: rewards new initiatives that bring fresh and innovative ideas with high impact on the European ecosystem.

Who can participate?

The European Data Spaces Awards are open to any data space that meets these criteria:

- Its governance authority is registered in the European Union.

- It operates wholly or partially within European territory.

- It is being actively used for data exchange.

- It includes restricted data, beyond open data.

Spaces in the implementation phase can also apply, as long as they share data in pilot or pre-operational environments. In these cases, the project coordinator can act on behalf of the project.

The assessment of eligibility will be based on the applicant's self-assessment, facilitating broad and representative participation of the European data ecosystem.

The same data space can apply for both categories, although it must submit two separate applications.
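The four eligibility criteria above can be read as a simple checklist. The following Python sketch shows one way an applicant might run through it before filling in the form. It is only an illustration: the class and field names are hypothetical and do not reproduce the wording or structure of the official self-assessment.

```python
from dataclasses import asdict, dataclass


@dataclass
class SelfAssessment:
    # Hypothetical field names, one per criterion listed in the article.
    governance_registered_in_eu: bool
    operates_in_europe: bool          # wholly or partially
    actively_exchanging_data: bool    # pilot or pre-operational sharing counts
    includes_restricted_data: bool    # beyond open data


def unmet_criteria(assessment: SelfAssessment) -> list[str]:
    """Return the names of the criteria that are not satisfied."""
    return [name for name, ok in asdict(assessment).items() if not ok]


def is_eligible(assessment: SelfAssessment) -> bool:
    """A data space is eligible only if all four criteria are met."""
    return not unmet_criteria(assessment)


pilot_space = SelfAssessment(True, True, True, True)
open_data_only = SelfAssessment(True, True, True, False)
```

Here `is_eligible(pilot_space)` is true, while `unmet_criteria(open_data_only)` points to the missing restricted data.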

Schedule: registration open until November 7

The competition is structured in four key phases that set the pace of the participation and evaluation process:

- On 23 September 2025, the launch event was held and the application period was officially opened.

- The application submission phase will run for 7 weeks, until November 7, allowing data spaces to prepare and register their proposals.

- This will be followed by the evaluation phase, which will begin on December 17 and last 6 weeks. During this time, the Data Spaces Support Centre (DSSC) will conduct an internal eligibility review and the jury will select the winners.

- Finally, the awards will be announced and presented during the Data Space Symposium (DSS2026) event, on February 10 and 11, 2026 in Madrid. All nominees will be invited to take the stage during the ceremony, so they will get great visibility and recognition. The winners will not receive any monetary compensation.

How to participate?

To register, participants must access the online form available on the official website of the awards. This page provides all the resources needed to prepare for your application, including reference documents, templates, and updates on the process.

The form includes three required elements:

- Basic questions about the requester and the data space.

- The eligibility self-assessment with four mandatory questions.

- A space to upload the Awards Application Document, a PDF of at most 8 pages whose template is available on the platform. The document, which follows a structure aligned with the Maturity Model v2.0, details the objectives and evaluation criteria by section.

In addition, participants have a space to provide, optionally, links to additional resources that help give context to their proposal.

For any questions that may arise during the process, a support platform has been set up.

The European Data Spaces Awards 2025 not only recognise excellence, but also highlight the impact of projects that are transforming the future of data in Europe. If you are interested in participating, we invite you to read the complete rules of the competition on their website.

Artificial Intelligence (AI) is transforming society, the economy and public services at an unprecedented speed. This revolution brings enormous opportunities, but also challenges related to ethics, security and the protection of fundamental rights. Aware of this, the European Union approved the Artificial Intelligence Act (AI Act), in force since August 1, 2024, which establishes a harmonized and pioneering framework for the development, commercialization and use of AI systems in the single market, fostering innovation while protecting citizens.

A particularly relevant area of this regulation is general-purpose AI models (GPAI), such as large language models (LLMs) or multimodal models, which are trained on huge volumes of data from a wide variety of sources (text, images and video, audio and even user-generated data). This reality poses critical challenges in intellectual property, data protection and transparency on the origin and processing of information.

To address them, the European Commission, through the European AI Office, has published the Template for the Public Summary of Training Content for general-purpose AI models: a standardized format that providers will be required to complete and publish to summarize key information about the data used in training. From 2 August 2025, any general-purpose model placed on the market or distributed in the EU must be accompanied by this summary; models already on the market have until 2 August 2027 to adapt. This measure materializes the AI Act's principle of transparency and aims to shed light on the "black boxes" of AI.

In this article, we explain the keys to this template: from its objectives and structure to deadlines, penalties and next steps.

Objectives and relevance of the template

General-purpose AI models are trained on data from a wide variety of sources and modalities, such as:

- Text: books, scientific articles, press, social networks.

- Images and videos: digital content from the Internet and visual collections.

- Audio: recordings, podcasts, radio programs, or conversations.

- User data: information generated in interaction with the model itself or with other services of the provider.

This process of mass data collection is often opaque, raising concerns among rights holders, users, regulators, and society as a whole. Without transparency, it is difficult to assess whether data has been obtained lawfully, whether it includes unauthorised personal information or whether it adequately represents the cultural and linguistic diversity of the European Union.

Recital 107 of the AI Act states that the main objective of this template is to increase transparency and facilitate the exercise and protection of rights. Among the benefits it provides, the following stand out:

- Intellectual property protection: allows authors, publishers and other rights holders to identify if their works have been used during training, facilitating the defense of their rights and a fair use of their content.

- Privacy safeguard: helps detect whether personal data has been used, providing useful information so that affected individuals can exercise their rights under the General Data Protection Regulation (GDPR) and other regulations in the same field.

- Prevention of bias and discrimination: provides information on the linguistic and cultural diversity of the sources used, key to assessing and mitigating biases that may lead to discrimination.

- Fostering competition and research: reduces "black box" effects and facilitates academic scrutiny, while helping other companies better understand where data comes from, favoring more open and competitive markets.

In short, this template is not only a legal requirement, but a tool to build trust in artificial intelligence, creating an ecosystem in which technological innovation and the protection of rights are mutually reinforcing.

Template structure

The template, officially published on 24 July 2025 after a public consultation with more than 430 participating organisations, has been designed so that the information is presented in a clear, homogeneous and understandable way, both for specialists and for the public.

It consists of three main sections, ranging from basic model identification to legal aspects related to data processing.

1. General information

It provides a global view of the provider, the model, and the general characteristics of the training data:

- Identification of the provider, such as name and contact details.

- Identification of the model and its versions, including dependencies if it is a modification (fine-tuning) of another model.

- Date of placing the model on the market in the EU.

- Data modalities used (text, image, audio, video, or others).

- Approximate size of data by modality, expressed in wide ranges (e.g., less than 1 billion tokens, between 1 billion and 10 trillion, more than 10 trillion).

- Language coverage, with special attention to the official languages of the European Union.

This section provides a level of detail sufficient to understand the extent and nature of the training, without revealing trade secrets.
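As an illustration only, the fields of this first section could be held in a small record, with the token count reduced to one of the broad ranges quoted above. This is a minimal sketch: the class name, field names, example values and the handling of the exact range boundaries are assumptions made for the example, not part of the official template.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

BILLION = 10**9
TRILLION = 10**12


def token_size_range(token_count: int) -> str:
    """Map a token count to one of the broad ranges quoted in the article.

    Boundary handling (which range the exact limits fall into) is an assumption.
    """
    if token_count < BILLION:
        return "less than 1 billion tokens"
    if token_count <= 10 * TRILLION:
        return "1 billion to 10 trillion tokens"
    return "more than 10 trillion tokens"


@dataclass
class GeneralInformation:
    """Hypothetical container for section 1 of the summary."""
    provider_name: str
    provider_contact: str
    model_name: str
    model_versions: List[str]
    eu_market_date: str               # date of placing on the EU market
    modalities: List[str]             # e.g. ["text", "image"]
    size_by_modality: Dict[str, str]  # broad range per modality, not exact figures
    languages: List[str]
    base_model: Optional[str] = None  # set when the model is a fine-tune of another


# Entirely made-up example values.
example = GeneralInformation(
    provider_name="Example Provider",
    provider_contact="contact@example.org",
    model_name="example-model",
    model_versions=["1.0"],
    eu_market_date="2025-09-01",
    modalities=["text"],
    size_by_modality={"text": token_size_range(2 * TRILLION)},
    languages=["es", "en", "fr"],
)
```

Reporting only the range, rather than the exact count, mirrors the intent described above: enough detail to understand the scale of training without exposing trade secrets.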

2. List of data sources

It is the core of the template, where the origin of the training data is detailed. It is organized into six main categories, plus a residual category (other).

- Public datasets:

  - Data that is freely available and downloadable as a whole or in blocks (e.g., open data portals, Common Crawl, scholarly repositories).

  - "Large" sets must be identified, defined as those that represent more than 3% of the total public data used in a specific modality.

- Licensed private sets:

  - Data obtained through commercial agreements with rights holders or their representatives, such as licenses with publishers for the use of digital books.

  - Only a general description is provided.

- Other unlicensed private data:

  - Databases acquired from third parties that do not directly manage copyright.

  - If they are publicly known, they must be listed; otherwise, a general description (data type, nature, languages) is sufficient.

- Data obtained through web crawling/scraping:

  - Information collected by or on behalf of the provider using automated tools.

  - The following must be specified:

    - Name/identifier of the crawlers.

    - Purpose and behavior (respect for robots.txt, captchas, paywalls, etc.).

    - Collection period.

    - Types of websites (media, social networks, blogs, public portals, etc.).

    - List of most relevant domains, covering at least the top 10% by volume. For SMEs, this requirement is adjusted to 5% or a maximum of 1,000 domains, whichever is less.

- User data:

  - Information generated through interaction with the model or with other provider services.

  - It must indicate which services contribute and the modality of the data (text, image, audio, etc.).

- Synthetic data:

  - Data created by or for the provider using other AI models (e.g., model distillation or reinforcement learning from human feedback, RLHF).

  - Where appropriate, the generator model should be identified if it is available on the market.

- Additional category – Other: includes data that does not fit into the above categories, such as offline sources, self-digitization, manual tagging, or human generation.
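The two numeric thresholds in this section (the 3% rule for "large" public datasets and the 10% or 5%/1,000 rule for scraped domains) can be sketched as follows. This is only one reading of the rules as summarized here: the function names, the use of raw size figures per dataset or domain, and the rounding up of percentages are assumptions for illustration.

```python
from typing import Dict, List


def large_public_datasets(size_by_dataset: Dict[str, int]) -> List[str]:
    """Return public datasets that exceed 3% of the total public data
    for one modality (sizes in any consistent unit, e.g. tokens)."""
    total = sum(size_by_dataset.values())
    # Integer comparison avoids floating-point surprises at the threshold.
    return sorted(
        name for name, size in size_by_dataset.items()
        if size * 100 > total * 3
    )


def _ceil_percent(count: int, percent: int) -> int:
    """Ceiling of count * percent / 100 using integer arithmetic."""
    return (count * percent + 99) // 100


def domains_to_list(volume_by_domain: Dict[str, int], is_sme: bool = False) -> List[str]:
    """Return the most relevant scraped domains, ranked by collected volume.

    Assumed reading: top 10% of domains in general; for SMEs, the top 5%
    or 1,000 domains, whichever is less.
    """
    ranked = sorted(volume_by_domain, key=volume_by_domain.get, reverse=True)
    if is_sme:
        limit = min(_ceil_percent(len(ranked), 5), 1000)
    else:
        limit = _ceil_percent(len(ranked), 10)
    return ranked[:limit]


# Made-up figures for illustration.
public_text = {"corpus-a": 50, "corpus-b": 48, "corpus-c": 2}
print(large_public_datasets(public_text))

crawled = {f"site{i}.example": 100 - i for i in range(20)}
print(domains_to_list(crawled))
print(domains_to_list(crawled, is_sme=True))
```

A provider would run the first check once per modality, since the 3% share is measured against the public data used in that modality rather than against the whole training set.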

3. Aspects of data processing

It focuses on how data has been handled before and during training, with a particular focus on legal compliance:

- Respect for text and data mining (TDM) opt-outs: measures taken to honour the opt-out right provided for in Article 4(3) of Directive 2019/790 on copyright, which allows rightholders to prevent the mining of texts and data. This right is exercised through opt-out protocols, such as tags in files or configurations in robots.txt, that indicate that certain content cannot be used to train models. Providers should explain how they have identified and respected these opt-outs in their own datasets and in those purchased from third parties.

- Removal of illegal content: procedures used to prevent or filter out content that is illegal under EU law, such as child sexual abuse material, terrorist content or serious intellectual property infringements. These mechanisms may include blacklisting, automatic classifiers, or human review, but without revealing trade secrets.
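To make the robots.txt opt-out mentioned above more concrete, the following sketch uses Python's standard `urllib.robotparser` module to check whether a crawler is allowed to fetch a page. The crawler name `ExampleTrainingBot` and the robots.txt content are hypothetical, and robots.txt is only one of the possible opt-out protocols, so this is an illustration rather than a complete compliance check.

```python
from urllib.robotparser import RobotFileParser


def may_crawl(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the given robots.txt allows user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt: the site opts out of one training crawler
# while remaining open to every other user agent.
ROBOTS_TXT = """\
User-agent: ExampleTrainingBot
Disallow: /

User-agent: *
Allow: /
"""

page = "https://example.org/articles/1"
print(may_crawl(ROBOTS_TXT, "ExampleTrainingBot", page))  # False: opted out
print(may_crawl(ROBOTS_TXT, "OtherBot", page))            # True: not restricted
```

A provider documenting its crawler behavior in the summary could log the result of a check like this for each domain, which also helps when explaining how opt-outs were respected in data purchased from third parties.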

The following diagram summarizes these three sections:

Balancing transparency and trade secrets

The European Commission has designed the template seeking a delicate balance: offering sufficient information to protect rights and promote transparency, without forcing the disclosure of information that could compromise the competitiveness of providers.

- Public sources: the highest level of detail is required, including names and links to "large" datasets.

- Private sources: a more limited level of detail is allowed, through general descriptions when the information is not public.

- Web scraping: a summary list of domains is required, without the need to detail exact combinations.

- User and synthetic data: the information is limited to confirming its use and describing the modality.

Thanks to this approach, the summary is "generally complete" in scope, but not "technically detailed", protecting both transparency and the intellectual and commercial property of companies.

Compliance, deadlines and penalties

Article 53 of the AI Act details the obligations of general-purpose model providers, most notably the publication of this summary of training data.

This obligation is complemented by other measures, such as:

- Have a public copyright policy.

- Implement risk assessment and mitigation processes, especially for models that may generate systemic risks.

- Establish mechanisms for traceability and supervision of data and training processes.

Non-compliance can lead to significant fines, up to €15 million or 3% of the company's annual global turnover, whichever is higher.
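The "whichever is higher" rule can be written as a one-line calculation. The sketch below only computes the upper limit stated above for a given turnover; the function name is an assumption, and the actual amount of any fine would be decided case by case.

```python
def max_fine_eur(annual_global_turnover_eur: int) -> float:
    """Upper limit of the fine: EUR 15 million or 3% of annual global
    turnover, whichever is higher."""
    return max(15_000_000, annual_global_turnover_eur * 3 / 100)


# Made-up turnover figures for illustration.
print(max_fine_eur(100_000_000))    # 3% is EUR 3 million, so the EUR 15 million limit applies
print(max_fine_eur(1_000_000_000))  # 3% is EUR 30 million, which exceeds EUR 15 million
```

The percentage only becomes the binding limit once annual global turnover exceeds EUR 500 million, the point at which 3% equals EUR 15 million.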

Next steps for providers

To adapt to this new obligation, providers should:

- Review internal data collection and management processes to ensure that necessary information is available and verifiable.

- Establish clear transparency and copyright policies, including protocols to respect the opt-out right in text and data mining (TDM).

- Publish the summary on official channels before the corresponding deadline.

- Update the summary periodically, at least every six months or when there are material changes in training.

The European Commission, through the European AI Office, will monitor compliance and may request corrections or impose sanctions.

A key tool for governing data

In our previous article, "Governing Data to Govern Artificial Intelligence", we highlighted that reliable AI is only possible with solid data governance.

This new template reinforces that principle, offering a standardized mechanism for describing the lifecycle of data, from source to processing, and encouraging interoperability and responsible reuse.

This is a decisive step towards an AI that is more transparent, fair and aligned with European values, where the protection of rights and technological innovation can advance together.

Conclusions

The publication of the Public Summary Template marks a historic milestone in the regulation of AI in Europe. By requiring providers to document and make public the data used in training, the European Union is taking a decisive step towards a more transparent and trustworthy artificial intelligence, based on responsibility and respect for fundamental rights. In a world where data is the engine of innovation, this tool becomes the key to governing data before governing AI, ensuring that technological development is built on trust and ethics.

Content created by Dr. Fernando Gualo, Professor at UCLM and Data Governance and Quality Consultant. The content and views expressed in this publication are the sole responsibility of the author.

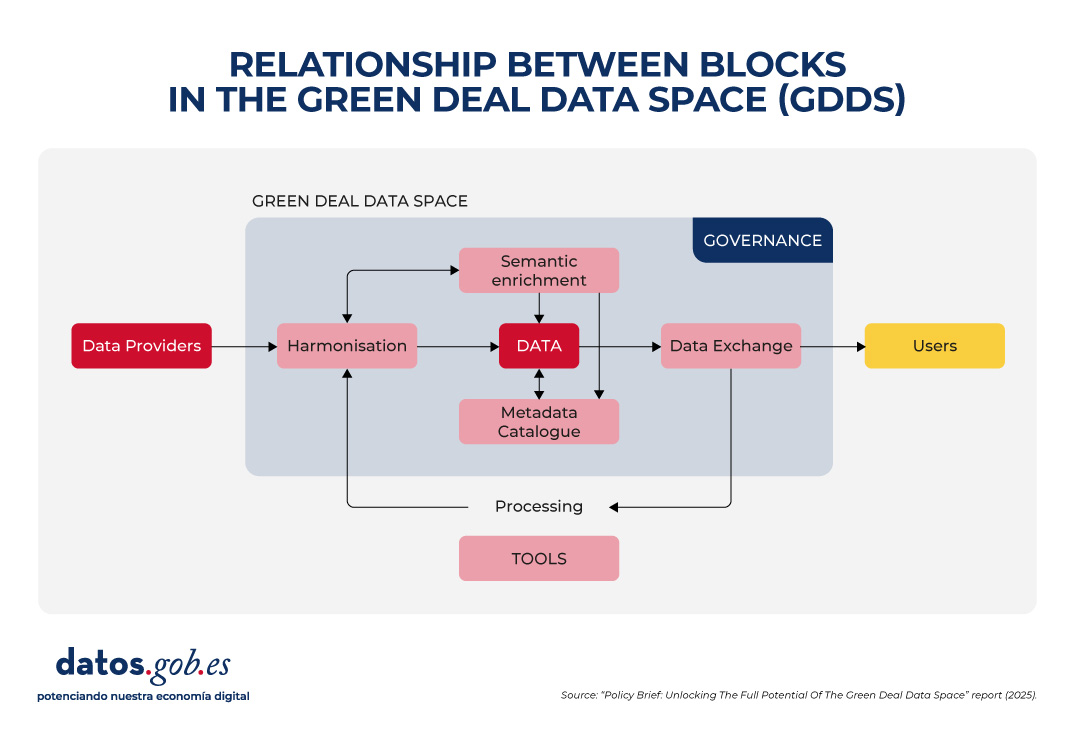

To achieve its environmental sustainability goals, Europe needs accurate, accessible and up-to-date information that enables evidence-based decision-making. The Green Deal Data Space (GDDS) will facilitate this transformation by integrating diverse data sources into a common, interoperable and open digital infrastructure.

In Europe, several projects are working on its development and have produced recommendations and good practices for its implementation. Discover them in this article!

What is the Green Deal Data Space?

The Green Deal Data Space (GDDS) is an initiative of the European Commission to create a digital ecosystem that brings together data from multiple sectors. It aims to support and accelerate the objectives of the Green Deal: the European Union's roadmap for a sustainable, climate-neutral and fair economy. The pillars of the Green Deal include:

- An energy transition that reduces emissions and improves efficiency.

- The promotion of the circular economy, encouraging the recycling, reuse and repair of products to minimise waste.

- The promotion of more sustainable agricultural practices.